- Institut für Qualität und Weiterbildung, Technische Hochschule Deggendorf, Deggendorf, Germany

Kirkpatrick's four-level training evaluation model assumes that a positive correlation exists between satisfaction and learning. Several studies have investigated levels of satisfaction and learning in synchronous online courses, asynchronous online learning management systems, and synchronous face-to-face classroom instruction. The goal of the present meta-analysis was to cumulate these effect sizes and test the predictive validity of Kirkpatrick's assumption. Particular attention was given to a prototypical form of synchronous online courses, so-called “webinars.” The following two research questions were addressed: (a) Compared to asynchronous online and face-to-face instruction, how effective are webinars in promoting student learning and satisfaction? (b) What is the association between satisfaction and learning in webinar, asynchronous online, and face-to-face instruction? The results showed that webinars were descriptively more effective in promoting student knowledge than asynchronous online (Hedges' g = 0.29) and face-to-face instruction (g = 0.06). Satisfaction was negligibly higher in webinars compared to asynchronous online instruction (g = 0.12) but was lower in webinars compared to face-to-face instruction (g = −0.33). Learning and satisfaction were negatively associated in all three conditions, indicating no empirical support for Kirkpatrick's assumption in the context of webinar, asynchronous online, and face-to-face instruction.

Introduction

The middle of the 1990s witnessed the slow advent of Internet-based education and early applications of online distance learning (Alnabelsi et al., 2015). Since then, there has been a significant increase in the number of available e-learning resources and educational technologies (Ruiz et al., 2006; Gegenfurtner et al., 2019b; Testers et al., 2019), which have gained importance in higher education and professional training contexts (Wang and Hsu, 2008; Nelson, 2010; Siewiorek and Gegenfurtner, 2010; Stout et al., 2012; Knogler et al., 2013; McMahon-Howard and Reimers, 2013; Olson and McCracken, 2015; Testers et al., 2015; McKinney, 2017; Goe et al., 2018). To date, various possibilities exist for implementing e-learning in educational contexts, one of which is the use of webinars, a prototypical form of synchronous online courses. The most obvious advantage of webinars is the high degree of flexibility they grant to participants. Whereas traditional face-to-face teaching has locational limitations (i.e., the tutor and students have to be in the same physical space), webinars can be accessed ubiquitously via computer devices at students' homes or in other locations (Alnabelsi et al., 2015; Gegenfurtner et al., 2017; Tseng et al., 2019) without the need for students to travel long distances in order to participate synchronously in lectures or seminars (Gegenfurtner et al., 2018, 2019a,c).

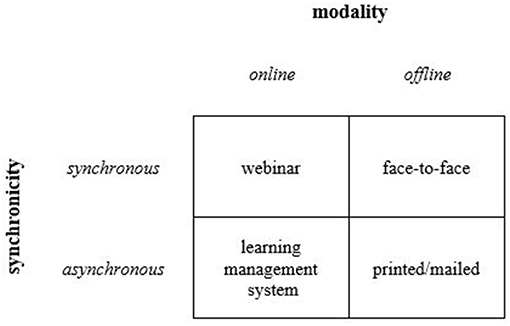

Synchronicity and Modality in Learning Environments

Learning environments can be classified in terms of their synchronicity and modality. First, synchronicity refers to the timing of the interaction between students and their lecturers. Synchronous learning environments enable simultaneous and direct interaction, while asynchronous learning environments afford temporally delayed and indirect interaction. Second, modality refers to the mode of delivery used in learning environments. Online environments afford technology-enhanced learning using the Internet or computer devices, while offline environments afford traditional instruction without the use of digital tools and infrastructure. Learning environments can be clustered into four groups according to their levels of synchronicity and modality. Figure 1 shows prototypical examples of these clusters. Specifically, webinars afford synchronous online learning, learning management systems afford asynchronous online learning, and traditional classroom instruction affords synchronous offline learning.

Compared to traditional face-to-face education, the use of online environments is accompanied by certain advantages and disadvantages. Webinars, for example, use video-conferencing technologies that enable direct interaction to occur between participants and their lecturers without the need for them to be in the same physical location; this geographical flexibility and ubiquity are an advantage of webinars. The synchronous setup makes it possible for participants to communicate directly with their instructors who are able to provide immediate feedback (Gegenfurtner et al., 2017). Any comments or questions that arise can, therefore, be instantly brought to the tutor's attention. Moreover, the modality allows for real-time group collaboration between participants to occur (Wang and Hsu, 2008; Siewiorek and Gegenfurtner, 2010; Johnson and Schumacher, 2016; Gegenfurtner et al., 2019a).

Asynchronous learning management systems use forum and chat functions, document repositories, or videos and recorded footage of webinars that can be watched on demand. These systems provide flexibility with regard to location and time. Using suitable technological devices, participants are able to access course content from anywhere. Furthermore, students are given the opportunity to choose precisely when they want to access the learning environment. However, this enhanced flexibility also has disadvantages. According to Wang and Woo (2007), it is difficult to replace or imitate face-to-face interaction with asynchronous communication; this is primarily due to the lack of immediate feedback (Gao and Lehman, 2003) and the absence of extensive multilevel interaction (Marjanovic, 1999) between students and lecturers (Wang and Hsu, 2008).

The Evaluation of Learning Environments

The increasing use of webinars in educational contexts was followed by studies that have examined the effectiveness of webinars in higher education and professional training under various circumstances (e.g., Nelson, 2010; Stout et al., 2012; Nicklen et al., 2016; Goe et al., 2018; Gegenfurtner et al., 2019c). According to Phipps and Merisotis (1999), research on the effectiveness of distance education typically includes measures of student outcomes (e.g., grades and test scores) and overall satisfaction.

A frequently used conceptual framework for evaluating learning environments is Kirkpatrick's (Kirkpatrick, 1959; Kirkpatrick and Kirkpatrick, 2016) seminal four-level model. This framework specifies the following four levels: reactions, learning, behavior, and results. The first two levels are particularly interesting because they can be easily evaluated in training programs using, for example, questionnaire and test items that assess trainee satisfaction and learning. A basic assumption is that reactions as affect, such as satisfaction, lead to learning. This positive association between learning and satisfaction is a cornerstone of Kirkpatrick's model. However, empirical tests of the predictive validity of this association indicate limited support. For example, in their meta-analysis of face-to-face training programs, Alliger et al. (1997) reported a correlation coefficient of 0.02 between affective reactions and immediate learning at post-test. More recently, Gessler (2009) reported a correlation coefficient of −0.001 between satisfaction and learning success in an evaluation of face-to-face training. Additionally, Alliger and Janak (1989), Holton (1996), as well as Reio et al. (2017), among others, offered critical accounts of the validity of Kirkpatrick's four-level model. However, although Kirkpatrick's model is widely used to evaluate levels of satisfaction and learning in webinar-based and online training, no test of the predictive validity of a positive association has been performed to date.

The Present Study

The present study focused on comparing levels of learning and satisfaction in webinars, online asynchronous learning management systems, and face-to-face classroom instruction. A typical problem related to the examination of webinars and other online environments is small sample size (e.g., Alnabelsi et al., 2015; Olson and McCracken, 2015); consequently, the findings might be biased by artificial variance induced by sampling error. Another problem relates to study design: Quasi-experimental studies often have limited methodological rigor, which can bias research findings and prohibit causal claims. To overcome these challenges, the present study used meta-analytic calculations of randomized controlled trials (RCTs) comparing webinar, online, and face-to-face instruction. The objective of the study was to test the predictive validity of Kirkpatrick's (1959) four-level model, particularly the assumed positive association between satisfaction and learning. The following two research questions were addressed: (a) Compared to online and face-to-face instruction, how effective are webinars in promoting learning and satisfaction? (b) What is the association between satisfaction and learning in webinar, online, and face-to-face instruction?

Methods

Inclusion and Exclusion Criteria

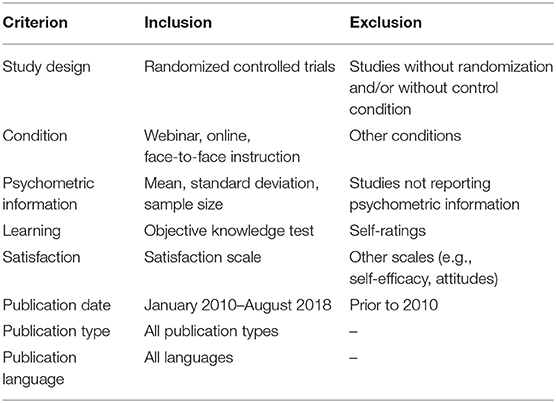

This meta-analysis was performed in adherence to the standards of quality for conducting and reporting meta-analyses detailed in the PRISMA statement (Moher et al., 2009). The analysis identified the effect sizes of RCTs on learning and satisfaction in webinar, online, and face-to-face instruction. The inclusion and exclusion criteria that were applied are reported in Table 1. To be included in the meta-analysis, a study had to report mean, standard deviation, and sample size information for the webinar and control conditions, or other statistics that could be converted to mean and standard deviation estimates, such as the median and interquartile range (Wan et al., 2014). In an effort to minimize publication bias (Schmidt and Hunter, 2015), we included all publication types: peer-reviewed journal articles, book chapters, monographs, conference proceedings, and unpublished dissertations. Studies were omitted if they did not randomly assign participants to the webinar and control conditions (face-to-face and asynchronous online). Studies were included only if learning was measured objectively using knowledge tests; studies using self-report learning data were omitted. The meta-analysis included various satisfaction scales.
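The conversion from median and interquartile range to mean and standard deviation can be illustrated with a short sketch. It uses the quartile-based approximation published by Wan et al. (2014); the function name and the example numbers are illustrative assumptions and are not taken from any of the included studies.

```python
from statistics import NormalDist


def mean_sd_from_median_iqr(q1, median, q3, n):
    """Approximate mean and SD from quartiles and sample size.

    Quartile-based approximation following Wan et al. (2014).
    Requires n >= 2 so that the normal quantile is positive.
    """
    mean = (q1 + median + q3) / 3
    # Expected position of the third quartile under normality for a sample of size n
    z = NormalDist().inv_cdf((0.75 * n - 0.125) / (n + 0.25))
    sd = (q3 - q1) / (2 * z)
    return mean, sd


# Hypothetical knowledge-test summary, for illustration only
mean, sd = mean_sd_from_median_iqr(q1=12.0, median=15.0, q3=18.0, n=30)
print(f"estimated mean = {mean:.2f}, estimated SD = {sd:.2f}")
```

For large samples the denominator approaches 1.35, so the estimated standard deviation is close to the interquartile range divided by 1.35.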

The Literature Search

Based on these inclusion and exclusion criteria, a systematic literature search was conducted in two steps. The first step included an electronic search of four databases: ERIC, PsycINFO, PubMed, and Scopus. We did not exclude any publication type or language but omitted articles that were published before January 2003, as this enabled us to continue and update Bernard et al.'s (2004) meta-analysis on the effectiveness of distance education. The following keywords were used for the search: webinar, webconference, webconferencing, web conference, web conferencing, web seminar, webseminar, adobeconnect, adobe connect, elluminate, and webex. These were combined with training, adult education, further education, continuing education, and higher education and had to appear in the titles or abstracts of the publications.

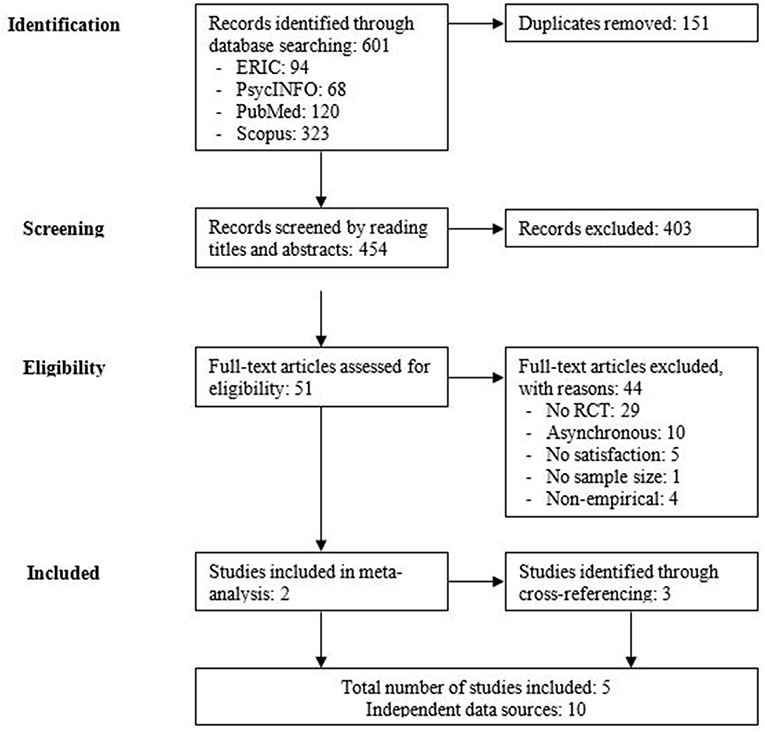

The searches conducted in the stated databases led to 605 hits, of which 94 were from ERIC, 68 from PsycINFO, 120 from PubMed, and 323 from Scopus. Duplicates that appeared in more than one database were identified and removed by two trained raters. Using this process, 151 duplicates were removed, leaving 454 articles. Subsequently, both raters screened a random subset of k = 46 articles, both independently and in duplicate, with the objective of measuring interrater agreement. As interrater agreement was high (Cohen's κ = 0.93; 95% CI = 0.79–1.00), one rater screened the remaining articles for eligibility by reading titles and abstracts. The screening resulted in the exclusion of 403 articles because they reported qualitative research, were review papers or commentaries, or focused on asynchronous learning management systems or modules.

Subsequently, both raters read the full texts of the remaining 51 articles to ensure their eligibility. At this point, 49 articles were omitted for various reasons. Specifically, 29 articles did not contain RCTs and were, thus, removed. Another 10 articles were removed because they did not include a full webinar condition. Five articles were ineligible for the meta-analysis because they did not report any satisfaction scales. Additionally, four articles were non-empirical, and one did not report sample size. When data were missing, the corresponding authors were contacted twice and asked to provide the missing information. Following the first step of the literature search, two articles (Constantine, 2012; Joshi et al., 2013) remained and were included in the meta-analysis.

The second step of the literature search was a cross-referencing process in which the included articles were used to identify other relevant studies. Using a backward-search process, we checked the reference list at the end of each article to find other articles that were not included in the database search but could potentially be eligible for inclusion in the meta-analysis. Pursuing the same goal, we then conducted a forward search, using Google Scholar to identify studies that cited the included articles. We also consulted the reference lists of 12 earlier reviews and meta-analyses of online and distance education (Cook et al., 2008, 2010; Bernard et al., 2009; Means et al., 2009, 2013; Martin et al., 2014; Schmid et al., 2014; Liu et al., 2016; Margulieux et al., 2016; Taveira-Gomes et al., 2016; McKinney, 2017; Richmond et al., 2017). This second step of the literature search resulted in another three publications (Harned et al., 2014; Alnabelsi et al., 2015; Olson and McCracken, 2015) that met all the inclusion criteria.

In summary, the two articles obtained from the electronic database search and the three resulting from the cross-referencing process led to the inclusion of five articles in the meta-analysis. Figure 2 presents a “PRISMA Flow Diagram” about the study selection. In the list of references, asterisks precede the studies that are included in the meta-analysis.
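The selection flow can be tallied as a quick consistency check. The sketch below takes the per-database hit counts and the per-reason exclusion counts reported above as given and derives the totals from them; the variable names are illustrative.

```python
# Hits per database, as reported in the literature search
hits = {"ERIC": 94, "PsycINFO": 68, "PubMed": 120, "Scopus": 323}
identified = sum(hits.values())

# Duplicate removal, then title and abstract screening
after_deduplication = identified - 151
after_screening = after_deduplication - 403

# Full-text exclusions by reason, as reported
full_text_exclusions = {
    "no RCT": 29,
    "no full webinar condition": 10,
    "no satisfaction scale": 5,
    "non-empirical": 4,
    "no sample size": 1,
}
from_database_search = after_screening - sum(full_text_exclusions.values())

# Publications added through backward and forward cross-referencing
from_cross_referencing = 3
included = from_database_search + from_cross_referencing

print(f"identified: {identified}")
print(f"after duplicate removal: {after_deduplication}")
print(f"full texts assessed: {after_screening}")
print(f"included in the meta-analysis: {included}")
```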

Literature Coding

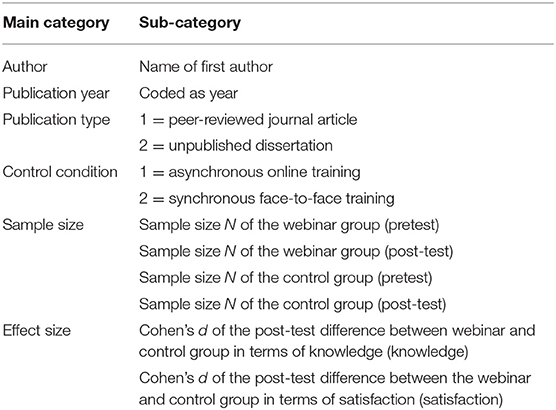

Following the completion of the literature search, two trained raters coded the included articles, both independently and in duplicate, using the coding scheme detailed in Table 2.

With regard to publication characteristics, we coded the name of the first author of the publication, as well as the publication year and type. Regarding publication type, we distinguished between peer-reviewed journal articles and unpublished dissertations. Regarding the control conditions, we distinguished between asynchronous online training and synchronous face-to-face training. Furthermore, we recorded the sample sizes of the webinar and control groups at pre- and post-test. The extracted effect sizes included the standardized mean difference Cohen's d (Cohen, 1988) of the knowledge post-test scores between the webinar and control groups, as well as the standardized mean difference Cohen's d of the post-test satisfaction scores between the webinar and control groups (a detailed explanation of the statistical calculations is provided in section Statistical Calculations).

Interrater Reliability

To ensure the quality of the essential steps taken within the meta-analysis, two trained raters identified, screened, and coded the studies both independently and in duplicate. An important measure of consensus between the raters is represented through interrater reliability (Moher et al., 2009; Schmidt and Hunter, 2015; Tamim et al., 2015; Beretvas, 2019). To statistically measure interrater reliability, Cohen's kappa coefficient (κ) was calculated separately for the single steps of the literature search (identification, screening, and eligibility) and literature coding. Cohen's κ estimates and their standard errors were calculated using SPSS 24, and the standard errors were used to compute the 95% confidence intervals around κ.

According to Landis and Koch (1977), κ values between 0.41 and 0.60 can be interpreted as moderate agreement, values between 0.61 and 0.80 as substantial agreement, and values between 0.81 and 1.00 as almost perfect agreement.
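As an illustration of how κ and its confidence interval can be obtained, the following sketch computes Cohen's κ for two raters and attaches the agreement label from the bands listed above. It is not the SPSS procedure used in the study: the standard error is a common large-sample approximation, the interval is clipped to the admissible range, and the rating data are invented for the example.

```python
import math


def cohens_kappa(rater_a, rater_b, z=1.96):
    """Cohen's kappa with an approximate 95% confidence interval.

    Undefined when chance agreement equals 1 (both raters use a single category).
    """
    n = len(rater_a)
    categories = set(rater_a) | set(rater_b)
    # Observed agreement: share of items rated identically
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal proportions
    p_e = sum((rater_a.count(c) / n) * (rater_b.count(c) / n) for c in categories)
    kappa = (p_o - p_e) / (1 - p_e)
    # Simple large-sample standard error (an approximation, not the SPSS formula)
    se = math.sqrt(p_o * (1 - p_o) / (n * (1 - p_e) ** 2))
    lower = max(-1.0, kappa - z * se)
    upper = min(1.0, kappa + z * se)
    return kappa, (lower, upper)


def agreement_label(kappa):
    """Label kappa using the three Landis and Koch (1977) bands cited in the text."""
    if kappa > 0.80:
        return "almost perfect"
    if kappa > 0.60:
        return "substantial"
    if kappa > 0.40:
        return "moderate"
    return "below moderate"


# Hypothetical include (1) / exclude (0) decisions for ten articles
rater_1 = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]
rater_2 = [1, 1, 0, 0, 1, 0, 1, 0, 0, 0]
kappa, (ci_low, ci_high) = cohens_kappa(rater_1, rater_2)
print(f"kappa = {kappa:.2f}, 95% CI = {ci_low:.2f} to {ci_high:.2f}")
```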

For the literature search, Cohen's κ was estimated separately for the identification, screening, and eligibility checks of the studies, as detailed in the study selection flow diagram in Figure 2. Interrater reliability was κ = 0.99 (95% CI = 0.98–1.00) for study identification, κ = 0.93 (95% CI = 0.79–1.00) for screening, and κ = 1.00 (95% CI = 1.00–1.00) for eligibility. For the literature coding, interrater reliability was κ = 0.91 (95% CI = 0.82–0.99).

In summary, the values for interrater reliability showed an almost perfect agreement (Landis and Koch, 1977) between the two raters. This applied to both the literature search and the literature coding. Any disagreement between the two raters was resolved by consensus.

Statistical Calculations

Two meta-analytic calculations were conducted. The first was a primary meta-analysis with the objective of computing corrected effect size estimates. The second was a meta-analytic moderator analysis used to identify the effect of two a priori defined subgroups on the corrected effect sizes. Correlation and regression analyses were subsequently conducted to examine the relationship between participant satisfaction and knowledge gain.

The primary meta-analysis was carried out following the procedures for the meta-analysis of experimental effects (Schmidt and Hunter, 2015). As this calculation included a comparison between the post-test knowledge scores for the webinar and control conditions (face-to-face and asynchronous online), Cohen's d estimates were calculated based on the formula $d = (M_{\text{Web,post}} - M_{\text{Con,post}}) / SD_{\text{pooled}}$, as detailed in Schmidt and Hunter (2015). In this formula, $M_{\text{Web,post}}$ and $M_{\text{Con,post}}$ represent the mean knowledge scores obtained in the post-test by the webinar and the control group (face-to-face and asynchronous online), and $SD_{\text{pooled}}$ is the pooled standard deviation of the two groups. If mean and standard deviation estimates were not reported in an article, the formulae provided by Wan et al. (2014) were used to estimate these two variables based on sample size, median, and range. The resulting F values were then converted into Cohen's d using the formulae provided by Polanin and Snilstveit (2016). Finally, all Cohen's d values were transformed into Hedges' (1981) g with the objective of controlling for small sample sizes.
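The effect size computation described above can be sketched as follows. The pooled standard deviation uses the usual degrees-of-freedom weighting, and the small-sample correction is the common approximation to Hedges' correction factor; both are assumptions about the exact variant used in the study, and the input values are hypothetical.

```python
import math


def cohens_d(m_web, sd_web, n_web, m_con, sd_con, n_con):
    """Standardized mean difference between webinar and control post-test scores."""
    pooled_var = ((n_web - 1) * sd_web ** 2 + (n_con - 1) * sd_con ** 2) / (n_web + n_con - 2)
    return (m_web - m_con) / math.sqrt(pooled_var)


def hedges_g(d, n_web, n_con):
    """Apply the small-sample correction J = 1 - 3 / (4 * df - 1) to Cohen's d."""
    df = n_web + n_con - 2
    return d * (1 - 3 / (4 * df - 1))


# Hypothetical post-test knowledge scores, for illustration only
d = cohens_d(m_web=10.0, sd_web=2.0, n_web=20, m_con=8.0, sd_con=2.0, n_con=20)
g = hedges_g(d, n_web=20, n_con=20)
print(f"d = {d:.3f}, g = {g:.3f}")
```

A positive value indicates higher scores in the webinar group. The correction shrinks d slightly toward zero, and the shrinkage grows as the groups get smaller.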

The primary meta-analysis was followed by a meta-analytic moderator estimation to examine the influence of two control condition subgroups (face-to-face and asynchronous online) on the results of the corrected effect sizes of the primary meta-analysis. These categorical moderator effects were estimated using theory-driven subgroup analyses.
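A subgroup analysis of this kind can be sketched as a sample-size-weighted mean effect size per control condition. The sketch assumes simple sample-size weights in the spirit of Schmidt and Hunter (2015); the study labels, effect sizes, and sample sizes are hypothetical placeholders, not the values from the included studies.

```python
from collections import defaultdict


def weighted_mean_effect(effects):
    """Sample-size-weighted mean of (effect_size, total_n) pairs."""
    total_n = sum(n for _, n in effects)
    return sum(g * n for g, n in effects) / total_n


# Hypothetical records: (study label, control condition, Hedges' g, total N)
studies = [
    ("Study A", "face-to-face", 0.10, 40),
    ("Study B", "face-to-face", -0.05, 60),
    ("Study C", "asynchronous online", 0.35, 80),
    ("Study D", "asynchronous online", 0.20, 120),
]

# Group effect sizes by the categorical moderator (type of control condition)
by_subgroup = defaultdict(list)
for _, condition, g, n in studies:
    by_subgroup[condition].append((g, n))

for condition, effects in by_subgroup.items():
    print(f"{condition}: weighted mean g = {weighted_mean_effect(effects):.3f}")
```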

Finally, a two-tailed bivariate correlation analysis and a regression analysis were conducted to examine the relationship between the standardized mean differences in satisfaction and learning in webinar and control conditions (face-to-face and asynchronous online). Furthermore, the Pearson correlations of the mean estimates between learning and satisfaction for each subgroup (face-to-face, webinar, and online) were calculated. These computations were conducted to verify Kirkpatrick's (Kirkpatrick, 1959; Gessler, 2009; Kirkpatrick and Kirkpatrick, 2016) postulated causal relationship between satisfaction and learning.
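The correlation step can be sketched with a small function that returns Pearson's r and, when weights are supplied, its sample-size-weighted counterpart. In a regression with a single predictor, the standardized slope equals this correlation, provided that the same weights are used for standardization; that equivalence is the assumption behind using one function for both. The data points are hypothetical.

```python
import math


def weighted_pearson(x, y, weights=None):
    """Pearson correlation of x and y; optional weights (e.g., sample sizes)."""
    if weights is None:
        weights = [1.0] * len(x)
    total = sum(weights)
    mean_x = sum(w * xi for w, xi in zip(weights, x)) / total
    mean_y = sum(w * yi for w, yi in zip(weights, y)) / total
    cov = sum(w * (xi - mean_x) * (yi - mean_y) for w, xi, yi in zip(weights, x, y))
    var_x = sum(w * (xi - mean_x) ** 2 for w, xi in zip(weights, x))
    var_y = sum(w * (yi - mean_y) ** 2 for w, yi in zip(weights, y))
    return cov / math.sqrt(var_x * var_y)


# Hypothetical standardized mean differences per study, for illustration only
satisfaction_g = [0.40, 0.10, -0.20, 0.30, -0.50]
learning_g = [-0.10, 0.20, 0.35, 0.05, 0.40]
sample_sizes = [60, 45, 80, 100, 96]

r = weighted_pearson(satisfaction_g, learning_g)
beta = weighted_pearson(satisfaction_g, learning_g, sample_sizes)
print(f"unweighted r = {r:.2f}, sample-size-weighted standardized slope = {beta:.2f}")
```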

Results

Description of Included Studies

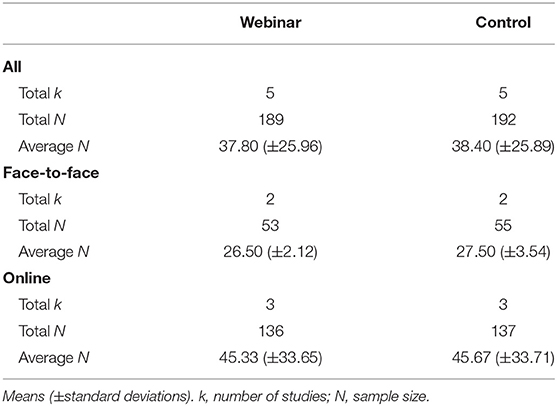

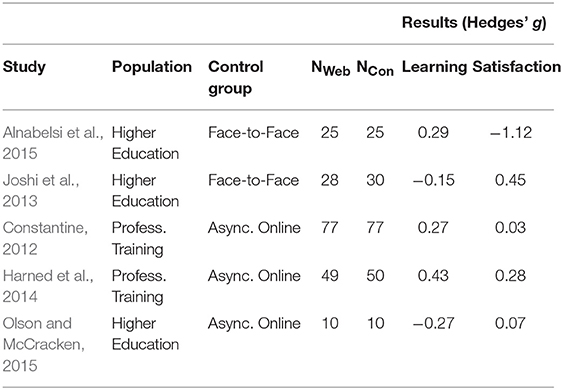

The included k = 5 studies offered a total of 10 effect sizes. The total sample size across conditions and measures was 381 participants. On average, the studies had 37 participants in the webinar condition and 38 in the control condition; in original studies, this small sample size signals the presence of sampling error, which tends to justify the use of meta-analytic synthesis to correct for sampling error. In the face-to-face subgroup, the average sample size was 27 control participants (compared to 26 webinar participants), while in the asynchronous online subgroup, the average sample size was 45 control participants (compared to 45 webinar participants). Table 3 presents information on the number of data sources and participants per condition and subgroup.

The included studies addressed a variety of topics. Alnabelsi et al. (2015) compared traditional face-to-face instruction with webinars. Two groups of medical students attended a lecture on otolaryngological emergencies either via a face-to-face session or by watching the streamed lecture online. The two modalities were then compared in terms of the students' knowledge test scores and overall satisfaction with the course.

Constantine (2012) examined the differences in performance outcomes and learner satisfaction in the context of asynchronous computer-based training and webinars. The sample comprised health-care providers in Alaska who were trained in telehealth imaging.

Harned et al. (2014) evaluated the technology-enhanced training of mental health providers in the area of exposure therapy for anxiety disorders. The participants were randomly assigned to an asynchronous condition or to a condition that included a webinar.

Joshi et al. (2013) examined pre-service sixth-semester nursing students to determine the differential effects of webinars and traditional face-to-face classroom instruction. One group attended audiovisual lectures (webinars) on essential newborn care, while the other group participated in a traditional classroom environment.

Finally, Olson and McCracken (2015) compared the effectiveness of a fully asynchronous course design with that of a mixed asynchronous and synchronous course design. The sample consisted of undergraduate students, and the measures included course grades and satisfaction.

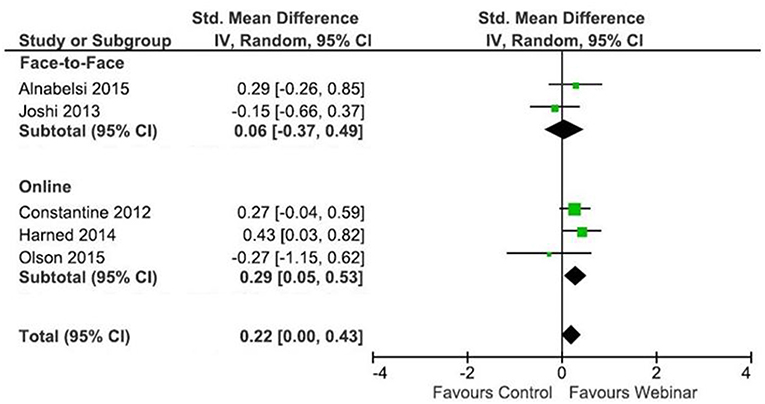

Learning

Figure 3 presents a forest plot of the learning effect sizes. Meta-analytic moderator estimation examined two subgroups: face-to-face and online. For the face-to-face subgroup, Hedges' g was 0.06 (95% CI = −0.37; 0.49), favoring webinar instruction over synchronous face-to-face instruction. For the online subgroup, Hedges' g was 0.29 (95% CI = 0.05; 0.53), favoring webinar instruction over asynchronous online instruction. The magnitude of the Hedges' g estimates indicates that, although the learning outcomes were better in webinars compared to asynchronous learning management systems and face-to-face classrooms, the effects were negligible in size.

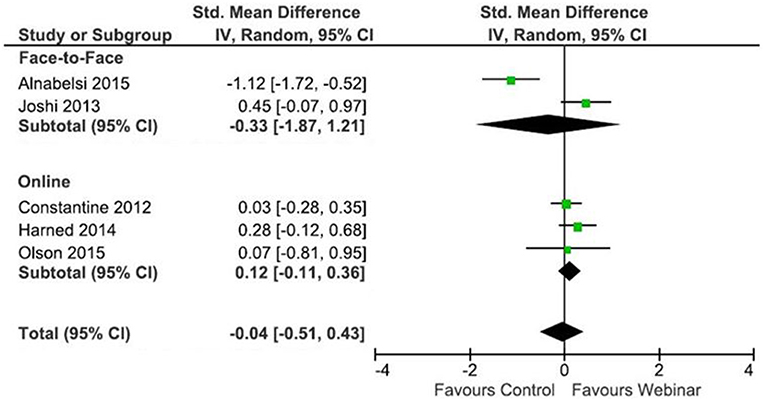

Satisfaction

Figure 4 presents a forest plot of the satisfaction effect sizes. Meta-analytic moderator estimation examined two subgroups: face-to-face and online. For the face-to-face subgroup, Hedges' g was −0.33 (95% CI = −1.87; 1.21), favoring synchronous face-to-face instruction over webinar instruction. For the asynchronous online subgroup, Hedges' g was 0.12 (95% CI = −0.11; 0.36), favoring webinar instruction over asynchronous online instruction. All effects were negligible in size, and the differences were not statistically significant.

Finally, Table 4 gives a summary of the single-study results with regard to learning and satisfaction in webinars compared to the respective control conditions (face-to-face and asynchronous online). Positive Hedges' g values signify higher learning or satisfaction in webinars compared to the control condition. Negative Hedges' g values indicate the opposite effect.

The Association Between Satisfaction and Learning

To determine whether satisfaction and learning are associated, a two-tailed bivariate correlation analysis was performed. The Pearson's correlation coefficient was r = −0.55, p = 0.33. A sample size-weighted regression analysis almost reached statistical significance, with a standardized β = −0.87, p = 0.06. Thus, correlation and regression analyses showed a non-significant negative association. Note that these estimates do not represent a meta-analytic correlation between the mean estimates of both variables, because none of the studies reported correlations between these variables. Instead, they represent a correlation of the standardized mean differences in satisfaction and learning between the webinar and control conditions (face-to-face and asynchronous online).

If we calculate the Pearson's correlations of the mean estimates between learning and satisfaction, then r = −0.55, p = 0.34 in the webinar group, r = −1.00, p < 0.01 in the face-to-face group, and r = −0.58, p = 0.61 in the asynchronous online group. Sample size-weighted regression analyses showed β = −0.87, p = 0.06 for the webinar group, β = −1.00, p < 0.01 for the face-to-face group, and β = −0.49, p = 0.68 for the asynchronous online group. All of these estimates were negative, indicating no support for Kirkpatrick's assumption in the context of webinar-based, online-based, and face-to-face instruction.

Discussion

The goal of the current meta-analysis was to test the predictive validity of Kirkpatrick's (1959) assumption that a positive association between learning and satisfaction exists. This assumption was meta-analytically examined by comparing levels of learning and satisfaction in the contexts of webinars, traditional face-to-face instruction, and asynchronous learning management systems. The following sections summarize (a) the main results of the statistical calculations, (b) the implications for practical use of the different learning modalities, and (c) a discussion of the study limitations and the directions for future research.

The Main Findings

The research questions regarding the effectiveness of webinars in promoting post-test knowledge scores and the satisfaction of participants were answered using meta-analytic calculations that compared the webinars to the control conditions (face-to-face and asynchronous online) based on cumulated Hedges' g values. Meta-analytic moderator estimations identified the extent to which the two subgroups in the control conditions differed in comparison to the webinars. Finally, correlation analyses were conducted to examine the association between student satisfaction and post-test knowledge scores.

With regard to participant learning, webinars were more effective in promoting participant knowledge than traditional face-to-face (Hedges' g = 0.06) and asynchronous online instruction (Hedges' g = 0.29), meaning that the knowledge scores of the synchronous webinar participants were slightly higher at post-test compared to the two subgroups. In summary, the results concerning student learning show that webinars are descriptively more effective than face-to-face teaching and asynchronous online instruction. Nevertheless, as the differences between webinars and the two subgroups were marginal and statistically insignificant, one can assume that the three modalities tend to be equally effective for student learning.

With regard to student satisfaction, meta-analytic calculations showed that Hedges' g for the face-to-face subgroup was −0.33, favoring synchronous face-to-face instruction over webinar instruction. In contrast, Hedges' g for the asynchronous online subgroup was 0.12, favoring webinar instruction over asynchronous online instruction. Descriptively, it seems that student satisfaction in synchronous webinars is inferior to that in traditional face-to-face instruction, whereas synchronous webinars seem to result in slightly higher participant satisfaction when compared to asynchronous online instruction. However, despite descriptive differences, the extracted effects were negligible in size, and thus it can be assumed that satisfaction in webinars is about as high as in face-to-face or asynchronous online instruction.

Correlation analyses were conducted to determine the association between student satisfaction and participant knowledge at post-test, and the results showed negative relationships between the two variables in all learning modalities (webinar, face-to-face, and online). Therefore, Kirkpatrick's predicted positive causal link between satisfaction and learning could not be confirmed. For instance, the high satisfaction scores of the face-to-face subgroup were not associated with stronger post-test knowledge scores, compared to the lower satisfaction scores of the webinar group. This finding coincides with the results of other research that examined Kirkpatrick's stated positive causality between participant reaction (satisfaction) and learning success. A review article by Alliger and Janak (1989) calls Kirkpatrick's assumption “problematic,” and individual studies (e.g., Gessler, 2009; see also Reio et al., 2017) underline this critical view, with results showing no positive correlation between reaction and learning.

Implications for Practical Application

The results of the meta-analysis gave some indication of the useful practical application of e-learning modalities in higher education and professional training.

With regard to learning effects, webinars seemed to be equal to traditional face-to-face learning, whereas the effect sizes implied that asynchronous learning environments were at least descriptively less effective than the other two learning modalities. This could be a result of the previously discussed didactic disadvantages of asynchronous learning environments, namely the lack of immediate feedback (Gao and Lehman, 2003; Wang and Woo, 2007) or the absence of extensive multilevel interaction (Marjanovic, 1999) between the student and the tutor. Nevertheless, marginal effect sizes indicate that the three learning modalities are roughly equal in outcome. In terms of effectiveness with regard to student satisfaction, traditional face-to-face learning seemed to have the strongest impact, followed by webinar instruction. Again, the asynchronous learning environment was inferior, if only marginally, to synchronous webinars. The latter pattern of results coincides with the findings of a recent study by Tratnik et al. (2017), which found that students in a face-to-face higher education course were generally more satisfied with the course than their online counterparts. Nevertheless, as in the analysis of learning effects, differences between subgroups were marginal, and one can assume that the three compared modalities led to comparable student satisfaction.

These findings have implications for the practical implementation of e-learning modalities in educational contexts. As the three compared learning modalities were all roughly equal in their outcome (learning and satisfaction), the use of each one of them may be justified without greater concern for major negative downsides. Nevertheless, extracted effect sizes—even if they were small—from the current meta-analysis could inform about the possible use of specific modalities in certain situations.

Considering both dependent variables of the meta-analysis, traditional face-to-face instruction seems to be slightly superior to online learning environments in general. Therefore, if there is no specific need for a certain degree of flexibility (time or location), face-to-face classroom education seems to be an appropriate learning environment in higher education and professional training contexts.

If, however, there is a need for at least spatial flexibility in educational content delivery, webinars can provide an almost equally effective alternative to face-to-face learning. The only downside is the slightly reduced satisfaction of participants with the use of the webinar tool in comparison to the face-to-face variant. However, this downside is somewhat counteracted by the negative correlation between student satisfaction and knowledge scores. In terms of promoting post-test knowledge, webinars were slightly more effective than their offline counterpart, although the difference was only marginal.

If there is no possibility to convey content to all participants simultaneously, asynchronous learning environments can offer an alternative to face-to-face learning and webinars. For instance, if the participants in an educational course live in different time zones, face-to-face learning is almost impossible, and webinars cause considerable inconvenience. Aside from this extreme example, asynchronous learning environments can be used as a tool that complements other learning modalities.

The implications above concern the use of each learning modality in isolation. In practical application, however, the combined use of these learning modalities can be useful. Depending on the participants' needs, e-learning modalities can be combined with traditional face-to-face learning to create a learning environment that makes the most sense in a given situation. In summary, e-learning modalities in general and webinars in particular are useful tools for extending traditional learning environments and creating a more flexible experience for participants and tutors.

Limitations and Directions for Future Research

Some limitations of the current meta-analysis need to be mentioned. The first limitation is the small number of primary studies included in the meta-analysis. Research examining the effectiveness of webinars in higher education and professional training using RCTs is rare, and even less frequent are studies reporting quantitative statistics on the relation between knowledge scores and student satisfaction. On the one hand, the strict selection of RCT-studies in this meta-analysis was carried out to exclude research with insufficient methodological rigor that may be affected by certain biases. On the other hand, the fact that only five suitable RCT-studies were found could have led to other (unknown) bias in the current work. Nevertheless, although the small number of individual studies and the associated small sample sizes indicate a risk of second-order sampling error (Schmidt and Hunter, 2015; Gegenfurtner and Ebner, in press), the need for a meta-analysis of this topic was apparent, as the results of some existing individual studies pointed in heterogeneous directions.

Second, although the use of Hedges' g as a measure of effect sizes is suitable for small sample sizes, original studies might be affected by additional biases, such as extraneous factors introduced by the study procedure (Schmidt and Hunter, 2015). These factors could not be controlled in this meta-analysis, and this may have affected the results.
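The small-sample correction that motivates the use of Hedges' g can be illustrated with a minimal sketch. It assumes the common approximation J = 1 − 3/(4df − 1) for the correction factor (Hedges, 1981); the function name and all input values are hypothetical and are not taken from any of the primary studies.

```python
import math


def hedges_g(mean_t, mean_c, sd_t, sd_c, n_t, n_c):
    """Standardized mean difference with Hedges' small-sample correction.

    Hypothetical helper for illustration; not the authors' analysis code.
    """
    df = n_t + n_c - 2
    # Pooled standard deviation across treatment and control groups.
    sd_pooled = math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / df)
    # Uncorrected standardized mean difference (Cohen's d).
    d = (mean_t - mean_c) / sd_pooled
    # Approximate correction factor; it approaches 1 as df grows.
    j = 1 - 3 / (4 * df - 1)
    return j * d


# Made-up post-test scores for a webinar group and a control group.
g_small = hedges_g(75.0, 72.0, 10.0, 10.0, n_t=20, n_c=20)
g_large = hedges_g(75.0, 72.0, 10.0, 10.0, n_t=2000, n_c=2000)
print(round(g_small, 3), round(g_large, 3))
```

With equal standard deviations of 10 and a mean difference of 3, the uncorrected difference is 0.30. The correction shrinks it slightly in the small sample and leaves it nearly unchanged in the large one, which is why the correction matters most for studies with few participants.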

Finally, directions for future research should include the expansion of individual studies examining the effects of webinars on participant learning and satisfaction in higher education and professional training. As e-learning technologies advance rapidly, there is an urgent need for researchers to keep pace with the current status of technology in educational contexts to enable them to expand traditional face-to-face learning by introducing e-learning modalities. For instance, specific research could focus on comparing the effectiveness of different webinar technologies (e.g., AdobeConnect or Cisco WebEx). Furthermore, future research is needed to address different instructional framings of webinar-based training, including voluntary vs. mandatory participation (Gegenfurtner et al., 2016), provision of implementation intentions (Quesada-Pallarès and Gegenfurtner, 2015), levels of social support and feedback (Gegenfurtner et al., 2010; Reinhold et al., 2018), as well as different interaction treatments (Bernard et al., 2009).

Conclusion

As e-learning technologies become increasingly common in educational contexts (Ruiz et al., 2006; Testers et al., 2019), there is a need to examine their effectiveness compared to traditional learning modalities. The aim of the current meta-analysis was to investigate the effectiveness of webinars in promoting participant knowledge at post-test and participant satisfaction in higher education and professional training. Additionally, Kirkpatrick's assumption of a positive causal relationship between satisfaction and learning was investigated. To answer the associated research questions, meta-analytic estimations and correlation analyses were conducted based on five individual studies containing 10 independent data sources comparing 189 participants in webinar conditions to 192 participants in the control conditions. Additionally, the influence of two subgroups (face-to-face and asynchronous online) was examined. In summary, webinars provide an appropriate supplement to traditional face-to-face learning, particularly when there is a need for locational flexibility.

Data Availability

All datasets generated for this study are included in the manuscript/supplementary files.

Author Contributions

CE was responsible for the main part of the manuscript. AG supported by checking statistical calculations and rereading the paper.

Funding

This work was funded by the German Bundesministerium für Bildung und Forschung (BMBF; grant number: 16OH22004) as part of the promotion scheme Aufstieg durch Bildung: Offene Hochschulen.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Alliger, G. M., and Janak, E. A. (1989). Kirkpatrick's levels of training criteria: thirty years later. Pers. Psychol. 42, 331–342. doi: 10.1111/j.1744-6570.1989.tb00661.x

Alliger, G. M., Tannenbaum, S. I., Bennett, W., Traver, H., and Shotland, A. (1997). A meta-analysis of the relations among training criteria. Pers. Psychol. 50, 341–358. doi: 10.1111/j.1744-6570.1997.tb00911.x

*Alnabelsi, T., Al-Hussaini, A., and Owens, D. (2015). Comparison of traditional face-to-face teaching with synchronous e-learning in otolaryngology emergencies teaching to medical undergraduates: a randomised controlled trial. Eur. Archiv. Otorhinolaryngol. 272, 759–763. doi: 10.1007/s00405-014-3326-6

Beretvas, S. N. (2019). “Meta-analysis,” in The Reviewer's Guide to Quantitative Methods in the Social Sciences, 2nd Edn., eds G. R. Hancock, L. M. Stapleton, and R. O. Mueller (New York, NY: Routledge), 260–268. doi: 10.4324/9781315755649-19

Bernard, R. M., Abrami, P. C., Borokhovski, E., Wade, C. A., Tamim, R. M., Surkes, M. A., et al. (2009). A meta-analysis of three types of interaction treatments in distance education. Rev. Educ. Res. 79, 1243–1289. doi: 10.3102/0034654309333844

Bernard, R. M., Abrami, P. C., Lou, Y., Borokhovski, E., Wade, A., Wozney, L., et al. (2004). How does distance education compare to classroom instruction? A meta-analysis of the empirical literature. Rev. Educ. Res. 74, 379–439. doi: 10.3102/00346543074003379

Cohen, J. (1988). Statistical Power Analysis for the Behavioral Sciences. 2nd Edn. Hillsdale, NJ: Erlbaum.

*Constantine, M. B. (2012). A study of individual learning styles and e-learning preferences among community health aides/practitioners in rural Alaska (Unpublished doctoral dissertation), Trident University International, Cypress, CA, United States.

Cook, D. A., Garside, S., Levinson, A. J., Dupras, D. M., and Montori, V. M. (2010). What do we mean by web-based learning? A systematic review of the variability of interventions. Med. Educ. 44, 765–774. doi: 10.1111/j.1365-2923.2010.03723.x

Cook, D. A., Levinson, A. J., Garside, S., Dupras, D. M., Erwin, P. J., and Montori, V. M. (2008). Internet-based learning in the health professions: a meta-analysis. J. Am. Med. Assoc. 300, 1181–1196. doi: 10.1001/jama.300.10.1181

Gao, T., and Lehman, J. D. (2003). The effects of different levels of interaction on the achievement and motivational perceptions of college students in a web-based learning environment. J. Interact. Learn. Res. 14, 367–386.

Gegenfurtner, A., and Ebner, C. (in press). Webinars in higher education and professional training: A meta-analysis and systematic review of randomized controlled trials. Educ. Res. Rev.

Gegenfurtner, A., Fisch, K., and Ebner, C. (2019a). Teilnahmemotivation nicht-traditionell Studierender an wissenschaftlicher Weiterbildung: Eine qualitative Inhaltsanalyse im Kontext von Blended Learning. Beiträge zur Hochschulforschung.

Gegenfurtner, A., Fryer, L. K., Järvelä, S., Narciss, S., and Harackiewicz, J. (2019b). Affective learning in digital education. Front. Educ.

Gegenfurtner, A., Könings, K. D., Kosmajac, N., and Gebhardt, M. (2016). Voluntary or mandatory training participation as a moderator in the relationship between goal orientations and transfer of training. Int. J. Train. Dev. 20, 290–301. doi: 10.1111/ijtd.12089

Gegenfurtner, A., Schwab, N., and Ebner, C. (2018). “There's no need to drive from A to B”: exploring the lived experience of students and lecturers with digital learning in higher education. Bavarian J. Appl. Sci. 4, 310–322. doi: 10.25929/bjas.v4i1.50

Gegenfurtner, A., Spagert, L., Weng, G., Bomke, C., Fisch, K., Oswald, A., et al. (2017). LernCenter: Ein Konzept für die Digitalisierung berufsbegleitender Weiterbildungen an Hochschulen. Bavarian J. Appl. Sci. 3, 234–243. doi: 10.25929/z26v-0x88

Gegenfurtner, A., Vauras, M., Gruber, H., and Festner, D. (2010). “Motivation to transfer revisited,” in Learning in the Disciplines: ICLS2010 Proceedings, Vol. 1, eds K. Gomez, L. Lyons, and J. Radinsky (Chicago, IL: International Society of the Learning Sciences), 452–459.

Gegenfurtner, A., Zitt, A., and Ebner, C. (2019c). Evaluating webinar-based training: A mixed methods study on trainee reactions toward digital web conferencing. Int. J. Train. Dev.

Gessler, M. (2009). The correlation of participant satisfaction, learning success and learning transfer: an empirical investigation of correlation assumptions in Kirkpatrick's four-level model. Int. J. Manage. Educ. 3, 346–358. doi: 10.1504/IJMIE.2009.027355

Goe, R., Ipsen, C., and Bliss, S. (2018). Pilot testing a digital career literacy training for vocational rehabilitation professionals. Rehabil. Couns. Bull. 61, 236–243. doi: 10.1177/0034355217724341

*Harned, M. S., Dimeff, L. A., Woodcock, E. A., Kelly, T., Zavertnik, J., Contreras, I., et al. (2014). Exposing clinicians to exposure: a randomized controlled dissemination trial of exposure therapy for anxiety disorders. Behav. Ther. 45, 731–744. doi: 10.1016/j.beth.2014.04.005

Hedges, L. V. (1981). Distribution theory for Glass's estimator of effect size and related estimators. J. Educ. Stat. 6, 107–128. doi: 10.3102/10769986006002107

Holton, E. F. (1996). The flawed four level evaluation model. Hum. Resour. Dev. Q. 7, 5–21. doi: 10.1002/hrdq.3920070103

Johnson, C. L., and Schumacher, J. B. (2016). Does webinar-based financial education affect knowledge and behavior? J. Ext. 54, 1–10.

*Joshi, P., Thukral, A., Joshi, M., Deorari, A. K., and Vatsa, M. (2013). Comparing the effectiveness of webinars and participatory learning on essential newborn care (ENBC) in the class room in terms of acquisition of knowledge and skills of student nurses: a randomized controlled trial. Indian J. Pediatr. 80, 168–170. doi: 10.1007/s12098-012-0742-8

Kirkpatrick, D. L. (1959). Techniques for evaluating training programs. J. Am. Soc. Train. Directors 13, 21–26.

Kirkpatrick, J. D., and Kirkpatrick, W. K. (2016). Kirkpatrick's Four Levels of Training Evaluation. Alexandria, VA: ATD Press.

Knogler, M., Gegenfurtner, A., and Quesada Pallarès, C. (2013). “Social design in digital simulations: effects of single versus multi-player simulations on efficacy beliefs and transfer,” in To See the World and a Grain of Sand: Learning Across Levels of Space, Time, and Scale, Vol. 2, eds N. Rummel, M. Kapur, M. Nathan, and S. Puntambekar (Madison, WI: International Society of the Learning Sciences), 293–294.

Landis, J. R., and Koch, G. G. (1977). The measurement of observer agreement for categorical data. Biometrics 33, 159–174. doi: 10.2307/2529310

Liu, Q., Peng, W., Zhang, F., Hu, R., Li, Y., and Yan, W. (2016). The effectiveness of blended learning in health professions: systematic review and meta-analysis. J. Med. Internet Res. 18:e2. doi: 10.2196/jmir.4807

Margulieux, L. E., McCracken, W. M., and Catrambone, R. (2016). A taxonomy to define courses that mix face-to-face and online learning. Educ. Res. Rev. 19, 104–118. doi: 10.1016/j.edurev.2016.07.001

Marjanovic, O. (1999). Learning and teaching in a synchronous collaborative environment. J. Comput. Assist. Learn. 15, 129–138. doi: 10.1046/j.1365-2729.1999.152085.x

Martin, B. O., Kolomitro, K., and Lam, T. C. M. (2014). Training methods: a review and analysis. Hum. Resour. Dev. Rev. 13, 11–35. doi: 10.1177/1534484313497947

McKinney, W. P. (2017). Assessing the evidence for the educational efficacy of webinars and related internet-based instruction. Pedag. Health Promot. 3, 475–515. doi: 10.1177/2373379917700876

McMahon-Howard, J., and Reimers, B. (2013). An evaluation of a child welfare training program on the commercial sexual exploitation of children (CSEC). Eval. Program Plann. 40, 1–9. doi: 10.1016/j.evalprogplan.2013.04.002

Means, B., Toyama, Y., Murphy, R., and Baki, M. (2013). The effectiveness of online and blended learning: a meta-analysis of the empirical literature. Teach. Coll. Rec. 115, 1–47.

Means, B., Toyama, Y., Murphy, R., Baki, M., and Jones, K. (2009). Evaluation of Evidence-Based Practices in Online Learning: A Meta-Analysis and Review of Online Learning Studies. Washington, DC: US Department of Education.

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G., and The PRISMA Group (2009). Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Br. Med. J. 339:b2535. doi: 10.1136/bmj.b2535

Nelson, L. S. (2010). Learning outcomes of webinar versus classroom instruction among baccalaureate nursing students: a randomized controlled trial (Unpublished doctoral dissertation), Texas Woman's University, Denton, TX, United States.

Nicklen, P., Keating, J. L., Paynter, S., Storr, M., and Maloney, S. (2016). Remote-online case-based learning: a comparison of remote-online and face-to-face, case-based learning – a randomized controlled trial. Educ. Health 29, 195–202. doi: 10.4103/1357-6283.204213

*Olson, J. S., and McCracken, F. E. (2015). Is it worth the effort? The impact of incorporating synchronous lectures into an online course. Online Learn. J. 19, 73–84. doi: 10.24059/olj.v19i2.499

Phipps, R., and Merisotis, J. (1999). What's the Difference? A Review of Contemporary Research on the Effectiveness of Distance Learning in Higher Education. Washington, DC: The Institute for Higher Education Policy.

Polanin, J. R., and Snilstveit, B. (2016). Campbell Methods Policy Note on Converting Between Effect Sizes. Oslo: The Campbell Collaboration.

Quesada-Pallarès, C., and Gegenfurtner, A. (2015). Toward a unified model of motivation for training transfer: a phase perspective. Z. Erziehungswiss. 18, 107–121. doi: 10.1007/s11618-014-0604-4

Reinhold, S., Gegenfurtner, A., and Lewalter, D. (2018). Social support and motivation to transfer as predictors of training transfer: testing full and partial mediation using meta-analytic structural equation modeling. Int. J. Train. Dev. 22, 1–14. doi: 10.1111/ijtd.12115

Reio, T. G., Rocco, T. S., Smith, D. H., and Chang, E. (2017). A critique of Kirkpatrick's evaluation model. New Horiz. Adult Educ. Hum. Resour. Dev. 29, 35–53. doi: 10.1002/nha3.20178

Richmond, H., Copsey, B., Hall, A. M., Davies, D., and Lamb, S. E. (2017). A systematic review and meta-analysis of online versus alternative methods for training licensed health care professionals to deliver clinical interventions. BMC Med. Educ. 17:227. doi: 10.1186/s12909-017-1047-4

Ruiz, J. G., Mintzer, M. J., and Leipzig, R. M. (2006). The impact of e-learning in medical education. Acad. Med. 81, 207–212. doi: 10.1097/00001888-200603000-00002

Schmid, R. F., Bernard, R. M., Borokhovski, E., Tamim, R. M., Abrami, P. C., Surkes, M. A., et al. (2014). The effects of technology use in postsecondary education: a meta-analysis of classroom applications. Comput. Educ. 72, 271–291. doi: 10.1016/j.compedu.2013.11.002

Schmidt, F. L., and Hunter, J. E. (2015). Methods of Meta-Analysis: Correcting Error and Bias in Research Findings. 3rd Edn. Thousand Oaks, CA: Sage.

Siewiorek, A., and Gegenfurtner, A. (2010). “Leading to win: the influence of leadership style on team performance during a computer game training,” in Learning in the Disciplines: ICLS2010 Proceedings, Vol. 1, eds K. Gomez, L. Lyons, and J. Radinsky (Chicago, IL: International Society of the Learning Sciences), 524–531.

Stout, J. W., Smith, K., Zhou, C., Solomon, C., Dozor, A. J., Garrison, M. M., et al. (2012). Learning from a distance: effectiveness of online spirometry training in improving asthma care. Acad. Pediatr. 12, 88–95. doi: 10.1016/j.acap.2011.11.006

Tamim, R. M., Borokhovski, E., Bernard, R. M., Schmid, R. F., and Abrami, P. C. (2015). “A methodological quality tool for meta-analysis: The case of the educational technology literature,” in Paper Presented at the Annual Meeting of the American Educational Research Association (Chicago, IL).

Taveira-Gomes, T., Ferreira, P., Taveira-Gomes, I., Severo, M., and Ferreira, M. A. (2016). What are we looking for in computer-based learning interventions in medical education? A systematic review. J. Med. Internet Res. 18:e204. doi: 10.2196/jmir.5461

Testers, L., Gegenfurtner, A., and Brand-Gruwel, S. (2015). “Motivation to transfer learning to multiple contexts,” in The School Library Rocks: Living It, Learning It, Loving It, eds L. Das, S. Brand-Gruwel, K. Kok, and J. Walhout (Heerlen: IASL), 473–487.

Testers, L., Gegenfurtner, A., Van Geel, R., and Brand-Gruwel, S. (2019). From monocontextual to multicontextual transfer: organizational determinants of the intention to transfer generic information literacy competences to multiple contexts. Frontl. Learn. Res. 7, 23–42. doi: 10.14786/flr.v7i1.359

Tratnik, A., Urh, M., and Jereb, E. (2017). Student satisfaction with an online and a face-to-face Business English course in a higher education context. Innov. Educ. Teach. Int. 56, 1–10. doi: 10.1080/14703297.2017.1374875

Tseng, J.-J., Cheng, Y.-S., and Yeh, H.-N. (2019). How pre-service English teachers enact TPACK in the context of web-conferencing teaching: a design thinking approach. Comput. Educ. 128, 171–182. doi: 10.1016/j.compedu.2018.09.022

Wan, X., Wang, W., Liu, J., and Tong, T. (2014). Estimating the sample mean and standard deviation from the sample size, median, range and/or interquartile range. BMC Med. Res. Methodol. 14:135. doi: 10.1186/1471-2288-14-135

Wang, Q., and Woo, H. L. (2007). Comparing asynchronous online discussions and face-to-face discussions in a classroom setting. Br. J. Educ. Technol. 38, 272–286. doi: 10.1111/j.1467-8535.2006.00621.x

Wang, S.-K., and Hsu, H.-Y. (2008). Use of the webinar tool (Elluminate) to support training: the effects of webinar-learning implementation from student-trainers' perspective. J. Interact. Online Learn. 7, 175–194.

*The studies that are preceded by an asterisk were included in the meta-analysis.

Keywords: adult learning, computer-mediated communication, distance education and telelearning, distributed learning environments, media in education

Citation: Ebner C and Gegenfurtner A (2019) Learning and Satisfaction in Webinar, Online, and Face-to-Face Instruction: A Meta-Analysis. Front. Educ. 4:92. doi: 10.3389/feduc.2019.00092

Received: 07 June 2019; Accepted: 12 August 2019; Published: 03 September 2019.

Edited by:

Fabrizio Consorti, Sapienza University of Rome, Italy

Reviewed by:

Clifford A. Shaffer, Virginia Tech, United States

Judit García-Martín, University of Salamanca, Spain

Tane Moleta, Victoria University of Wellington, New Zealand

Copyright © 2019 Ebner and Gegenfurtner. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Christian Ebner, christian.ebner@th-deg.de
