- ¹Department of Psychology, University of Cincinnati, Cincinnati, OH, United States

- ²Department of Psychology, North Carolina State University, Raleigh, NC, United States

Important strides have been made in the science of learning to read. Yet, many students still struggle to attain reading proficiency. This calls for sustained efforts to bridge theoretical insights with applied considerations about ideal pedagogy. The current study was designed to contribute to this conversation, namely by looking at the efficacy of an online reading program. The chosen reading program, referred to as MindPlay Virtual Reading Coach (MVRC), emphasizes the mastery of basic reading skills to support the development of reading fluency. Its focus on basic skills diverges from the goal of increasing reading motivation. And its focus on reading fluency, vs. broad literacy achievement, offers an alternative to already existing reading enrichment. In order to test the efficacy of MVRC, we recruited three school districts. One district provided data from elementary schools that used the MVRC program in Grades 2 to 6 (N = 2,531 total). The other two districts participated in a quasi-experimental design: Six 2nd-grade classrooms and nine 4th-grade classrooms were randomly assigned to one of three conditions: (1) instruction as usual, (2) instruction with an alternative online reading program, and (3) instruction with MVRC. Complete data sets were available from 142 2nd-graders and 172 4th-graders. Three assessments from the MVRC screener were used: They assessed reading fluency, phonic skills, and listening vocabulary at two time points: before and after the intervention. Results show a clear advantage of MVRC on reading fluency, more so than on phonics or listening vocabulary. At the same time, teachers reported concerns with MVRC, highlighting the challenge with reading programs that emphasize basic-skills mastery over programs that seek to encourage reading.

Highlights

- We assessed the efficacy of the online reading program MVRC on the reading fluency of children in Grades 2–6.

- Correlational analyses reveal a significant effect of MVRC time on improvements in reading fluency in Grades 2, 3, 5, and 6.

- MVRC time affected improvements in reading fluency more than in phonics or in listening vocabulary.

- Two randomized control trials (Grade 2; Grade 4) revealed a strong effect of MVRC on reading fluency, compared to instruction as usual and compared to an alternative online reading program.

- Teachers had some concerns with MVRC. When given a choice, none of the teachers interviewed were interested in adopting MVRC for their classroom.

Introduction

Attaining reading proficiency remains a challenge for many students. For example, the recent National Assessment of Educational Progress report found that only 37% of 4th-graders could read proficiently (National Center for Education Statistics, 2017). Online programs are a possible solution to this problem, variously referred to as computer-based teaching (CBT), computer-assisted instruction (CAI), computer-managed instruction (CMI), computer-assisted learning (CAL), digital game-based learning, or the like (Peterson et al., 1999; Kozma, 2003; Fenty et al., 2015; Jamshidifarsani et al., 2019). In the current paper, we test the efficacy of such a program: the MindPlay Virtual Reading Coach (www.mindplay.com). This program is designed to improve reading fluency via the mastery of basic reading skills.

Reading Fluency and the Importance of Basic Reading Skills

The pace at which an individual can read matters centrally for general literacy achievement (Alvermann et al., 2013; Silverman et al., 2013). A student needs to be able to read a minimum number of words per minute in order to be able to interpret and analyze a text. Yet, it is still unclear how to best raise a student's reading fluency. To illustrate this gap in our understanding, we briefly review the research listed in the What Works Clearinghouse (WWC; U.S. Department of Education¹). This database summarizes research studies that report on the efficacy of a pedagogical tool. While the details of the pedagogical tools differ, all studies in the WWC database use a research design that provides conclusive evidence for effective pedagogy.

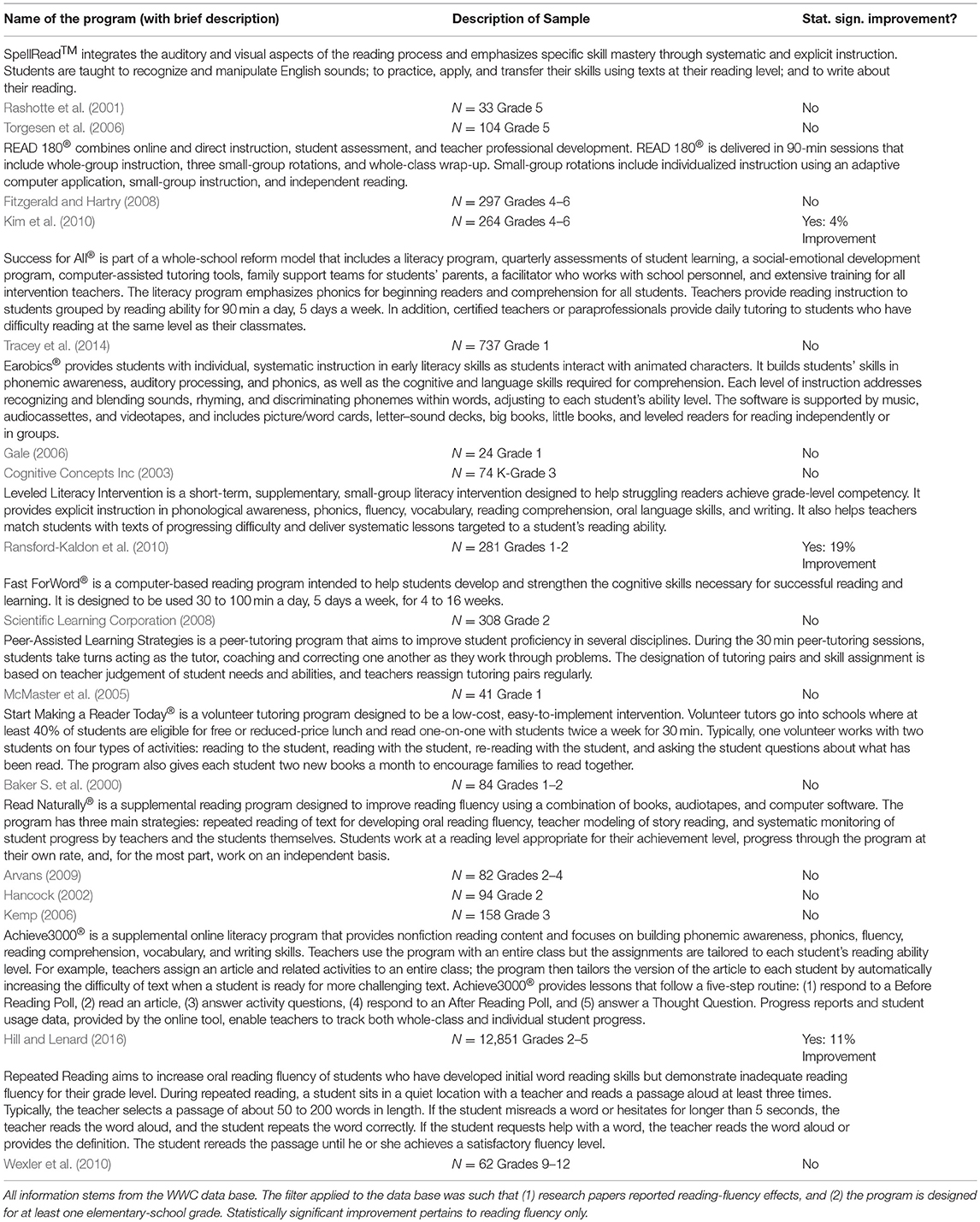

For the current purposes, we looked specifically at WWC research that assessed reading fluency in elementary school. This filter returned 16 research papers, covering the following literacy programs: SpellRead™, READ180, Success for All®, Earobics®, Leveled Literacy Intervention, Fast ForWord®, Peer-Assisted Learning Strategies, Start Making a Reader Today®, Read Naturally®, Achieve3000®, and Repeated Reading. Of these programs, 38% involved an online component, 31% involved small-group pedagogy, and 12% involved a training that went beyond reading (e.g., training in socio-emotional skills). Importantly, despite this diversity, improvement in reading fluency was rare – documented in less than 20% of the studies (see Table 1 for details). Thus, a large majority of existing tools did not lead to improved reading fluency (Macaruso et al., 2006; Ross et al., 2010; Balu et al., 2015).

In order to understand how to improve reading fluency, two extremes can be considered: Students either lack the required skills, or they lack the required motivation (Thomas, 2013). There is evidence for both extremes. For example, research has demonstrated that foundational skills of phonics are central to reading fluency (Ehri et al., 2001), even for adult readers (e.g., Van Orden et al., 1990). In line with these findings, the National Reading Panel recommends that reading lessons should focus on the fundamentals of phonemic awareness, phonics, fluency, vocabulary, grammar, and comprehension strategies (National Reading Panel, 2000). Early reading skill was even found to predict later reading motivation, further highlighting the relevance of fundamental reading skills (e.g., Chapman and Tunmer, 1997; Poskiparta et al., 2003).

On the other hand, researchers have proposed that poor motivation may be a defining factor of reading failure (e.g., Baker L. et al., 2000; Pressley, 2002; Wang and Guthrie, 2004; Lepola et al., 2005; Sideridis et al., 2006; Morgan et al., 2008). The argument is that the development of reading skills requires frequent reading practice, which, in turn, depends on perceiving reading as valuable and fun (Echols et al., 1996; Griffiths and Snowling, 2002; Meece et al., 2006; Froiland and Oros, 2014). In support of this argument, children's early reading motivation was found to predict later reading ability (Chapman et al., 2000; Onatsu-Arvilommi and Nurmi, 2000).

It should be noted that an ideal reading pedagogy is not an either-or proposition (Madden et al., 1993; Mano and Guerin, 2018; Prescott et al., 2018). Basic skills of decoding, phonics, and grammar are not detached from the communicative aspects of an interesting read (e.g., Fountas and Pinnell, 2006; Wallot, 2014). However, recent years have seen an increased emphasis on the communicative aspect of reading, as illustrated by the recommendation from the American Academy of Pediatrics to read with children at home (American Academy of Pediatrics News, 2014). This might have inadvertently pushed the pendulum too far into the camp of increasing reading motivation, at the expense of the development of basic phonics and grammar skills (e.g., Wise et al., 2000; Bosman et al., 2017).

MVRC has the potential to re-adjust the pendulum of pedagogy, away from an over-emphasis on content-rich activities of literacy, and toward a focus on the mechanics of decoding. MVRC is based on the idea that training in foundational skills can improve reading fluency more so than mere reading practice (Bosman and Van Orden, 1997; Mellard et al., 2010; Huo and Wang, 2017; Cordewener et al., 2018). Given this theoretical commitment, MVRC does not consider students' preferences about what to read, and there are no choices about lessons and practice activities. Rather than seeking to entice students to read, MVRC rolls out a learning regime that targets identified gaps in foundational reading skills.

Details About MVRC

MindPlay Virtual Reading Coach is a commercially available educational software geared toward improving reading fluency in an individualized learning environment. According to their webpage, lessons are provided by virtual reading specialists and speech pathologists, followed by practice that includes immediate and specific feedback. Depending on the proficiency of the student, the program covers phonological awareness, phonics skills, vocabulary, grammar, silent reading fluency, and comprehension. A unique and differentiated syllabus is automatically developed and then adapted continuously to fit the needs and emerging skills of individual students.

More specifically, MVRC combines three facets of pedagogy: a comprehensive diagnostic tool, a lesson/practice pairing that is calibrated to fit the proficiency gaps of the student, and a flow-chart structure of activities designed to support mastery of foundational skills. The diagnostic tool, known as the universal screener, is administered when the student first logs on. It consists of several elements, each of which is normed internally (MindPlay Universal Screener™, 2018). For Grades 2 and older, the first part of the screener is designed to determine the student's reading fluency. Subsequent parts pertain to a visual scanning test, a listening vocabulary test, a phonics test, and a letter-discrimination test.

Depending on the screener's outcome profile of a specific student, MVRC determines lesson/practice pairings that precisely fit the skills and gaps of the student (MindPlay Virtual Reading Coach, 2017). The lessons pertain to phonemic awareness, phonics, fluency, vocabulary, grammar for meaning, and comprehension. Lessons are delivered by an online reading coach, requiring minimal involvement of the teacher. Each lesson is followed by pertinent practice activities to reinforce the material covered in a lesson. Importantly, the amount of practice is adjusted continuously, depending on a student's success rate during the practice activities. Thus, the speed at which students move through the lessons is determined by their error rate and types of errors.

An underlying flow-chart structure defines the order in which lessons and practice activities are presented. Central to this structure is the gradual increase in difficulty. For example, in phonics, the initial lessons involve single letters that have relatively straightforward phonics (e.g., letters m, b, t, s, and a), followed by letter groups that have more complex phonics (e.g., th, sh). A minimum of 90% mastery is required before the student moves on to a new lesson set. If this level of mastery is not achieved on the first try, the lesson content is revisited in several different ways so that mastery becomes attainable without excessive repetition. The expected outcome is an improvement in reading fluency.
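The 90% mastery rule described above can be illustrated with a minimal sketch. The function names and the "advance"/"revisit" labels are hypothetical and chosen for illustration only; the source does not specify how MVRC implements this step or how it varies the revisited content.

```python
MASTERY_THRESHOLD = 0.90  # minimum proportion correct stated in the text


def has_mastered(correct: int, attempted: int,
                 threshold: float = MASTERY_THRESHOLD) -> bool:
    """Return True when the proportion of correct responses meets the threshold."""
    if attempted <= 0:
        return False
    return correct / attempted >= threshold


def next_step(lesson_index: int, correct: int, attempted: int) -> tuple[int, str]:
    """Advance to the next lesson set on mastery; otherwise stay on the same set.

    The "revisit" label is a placeholder (assumption) for the alternative
    presentations of the lesson content mentioned in the text.
    """
    if has_mastered(correct, attempted):
        return lesson_index + 1, "advance"
    return lesson_index, "revisit"
```

Under this sketch, a student with 9 of 10 correct responses would move on, whereas a student with 8 of 10 would stay on the same lesson set.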

Preliminary research with MVRC was carried out in several dissertations (Jensen, 2015; Sherrow, 2015; Kealey, 2017; Mann, 2017; Reiser, 2018). There is also published research, for example with college students placed in a remedial reading course (Bauer-Kealey and Mather, 2018), with 2nd-graders in a diverse school district (Schneider et al., 2016), with 2nd-grade second-language learners (Vaughan et al., 2004), and with 8th-graders enrolled in a summer program (Chambers et al., 2013). Results are promising: For example, 2nd-graders who logged in for an average of 44 MVRC hours improved in their reading fluency more than students who did not take part in the intervention (Schneider et al., 2016). The current study was designed to substantiate these findings, looking specifically at the impact of the MVRC intervention on children's reading fluency.

Overview of the Current Study

In order to investigate the effect of MVRC on reading fluency, we focused specifically on elementary-school students. These children are old enough to complete the reading fluency test, and they often have gaps in phonics and basic grammar skills. Thus, these grades are ideal to investigate the link between foundational skills and reading fluency. Three Midwestern public-school districts participated. District 1 provided data from elementary schools that used the MVRC program in Grades 2 to 6. Districts 2 and 3 participated in a quasi-experimental design: Six 2nd-grade classrooms and nine 4th-grade classrooms were randomly assigned to one of three conditions: (1) instruction as usual, (2) instruction with an alternative online reading program, and (3) instruction with MVRC.

For each district, we used three assessments from the MVRC screener, administered to all children before and after the intervention, independently of grade, school, or condition: reading fluency, phonics, and listening vocabulary. Reading fluency was considered the target measure, given MVRC's explicit purpose to improve reading fluency. In contrast, the measures of phonics and listening vocabulary were considered control measures. The decision to treat phonics and listening vocabulary as control measures (vs. as mediating or moderating variables) allowed us to account for idiosyncratic aspects of the assessment and thus provide a strong test of the claim that MVRC affects reading fluency.

District 1: Correlational Design With Grades 2–6

Our first question was whether children's gain in reading fluency is related to the amount of the time they spend actively using the MVRC program.

Method

Description of the School District, Schools, and Students

District 1 serves about 20,000 students, approximately 60% of whom identify as Caucasian. For the year in which our data were collected, over two thirds of the district's schools were rated as “D” or “F” schools by the state's Department of Education. Data were obtained from a total of 29 schools (N = 2,531 students). No information was provided about the gender, race, ethnicity, or economic level of the students from these schools.

Description of the Measures

Three measures were of specific interest, all of which are returned by the MVRC screener. Reading Fluency is the target measure: It is assessed in two steps, each time involving a text and a multiple-choice comprehension test. Texts are chosen randomly from an assortment of stories of a given Lexile (MetaMetrics, Inc.). The first text is presented one page at a time, with words disappearing at a predetermined rate. This forces students to read faster than the rate at which the words disappear. Depending on students' comprehension score obtained for this first text, the second text is presented either in meaningful chunks (if students performed poorly on the first comprehension test), or it is presented in its entirety and then removed after a certain amount of time. Students' comprehension scores are used to determine their effective reading rate, transformed into a grade-equivalence score.

Phonics is our first control measure: The assessment examines the student's knowledge of English spelling rules. Nonsense words are enunciated by a virtual speech pathologist, and the student is asked to type part or all of the word on the keyboard. Listening Vocabulary is the second control measure. The assessment consists of a series of unrelated questions (e.g., “Which of these would you do on a ship?”). For each question, students have to choose among several answer options. For the example question above, answer options include “sail,” “cut,” and “roar.” Both the phonics test and the listening vocabulary test are adaptive: The difficulty level of items is adjusted based on the students' ongoing level of success during the assessment.
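To make the idea of an adaptive assessment concrete, the sketch below shows one simple adaptive scheme, a one-up/one-down staircase. This is an assumption for illustration: the source states only that item difficulty is adjusted based on ongoing success, and the level range, starting level, and function name are hypothetical rather than taken from the MVRC screener.

```python
def run_staircase(responses, start_level=3, min_level=1, max_level=10):
    """Return the difficulty level presented on each trial.

    responses: iterable of booleans (True = item answered correctly).
    A correct answer raises the next item's difficulty by one level and an
    incorrect answer lowers it by one, clamped to [min_level, max_level].
    """
    level = start_level
    presented = []
    for was_correct in responses:
        presented.append(level)
        step = 1 if was_correct else -1
        level = max(min_level, min(max_level, level + step))
    return presented


# Hypothetical response pattern: two correct answers, one error, one correct.
levels = run_staircase([True, True, False, True])
print(levels)
```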

Procedure

Students were given access to MVRC for an entire school year. The pre-test assessment took place during the Fall semester (September or October), and the post-test assessment took place during the Spring semester (April or May, depending on school). All of the students with these two data points were included in the analysis.

Results and Discussion

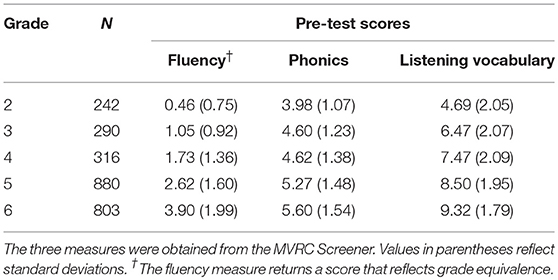

All results were analyzed by grade level to account for grade-relevant discrepancies in assessments (e.g., Lexile band). Table 2 provides the descriptive statistics of pre-test data, including number of students, separated by grade level. Note that there were more 5th- and 6th-graders in our sample than students of lower grades. Note also that the average reading fluency at pre-test was below the students' actual grade level. Specifically, 3rd-graders were approximately one grade level behind, on average, and students in all other grades were more than two grade levels behind, on average. This finding is consistent with the low state ratings of the schools.

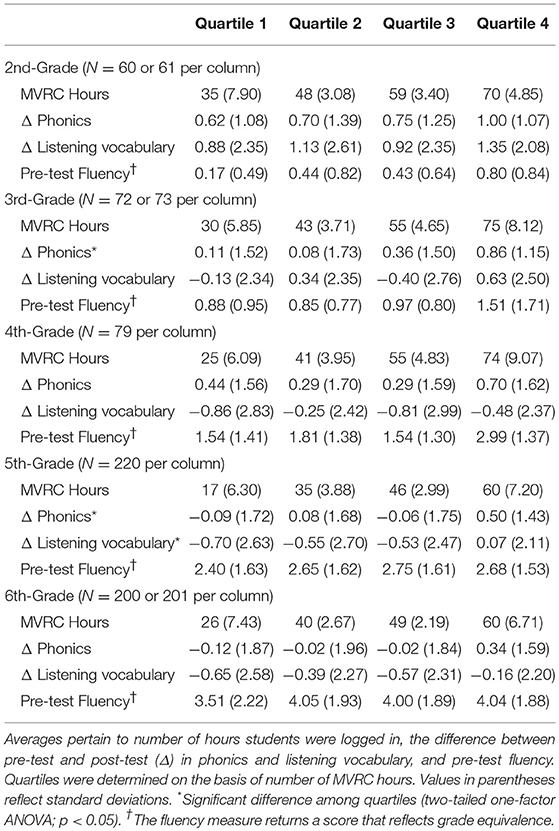

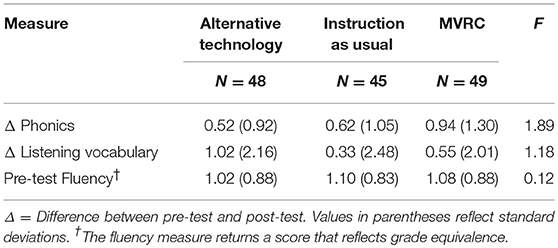

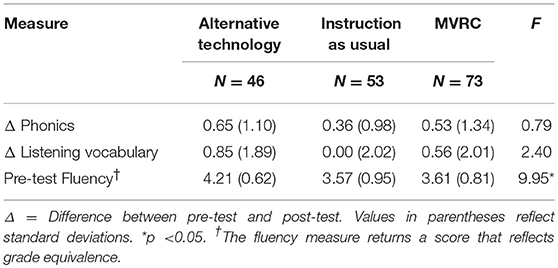

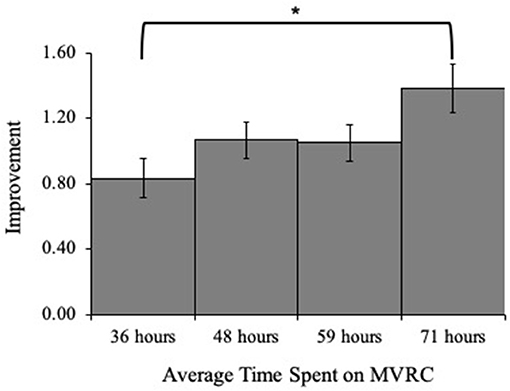

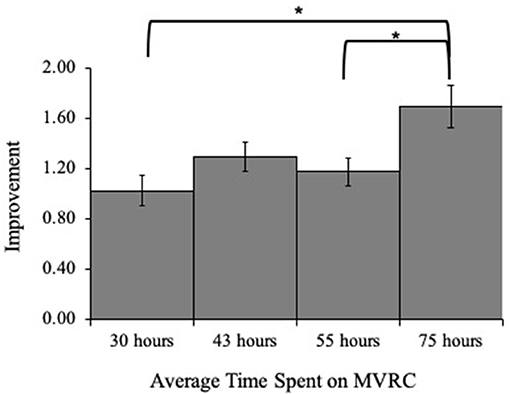

For each grade level, we turned the continuous measure of MVRC time into discrete quartiles: Children in Quartile 1 spent the shortest time on MVRC, and children in Quartile 4 spent the longest time on MVRC. This made it possible to account for distributional inconsistencies in MVRC time and to obtain a more detailed picture of the effect of MVRC time on children's performance (e.g., non-linear patterns). Absolute improvement in fluency, phonics, and listening vocabulary served as dependent variables (post-test performance minus pre-test performance).² Table 3 provides the average number of hours on MVRC for each quartile. It also provides the average improvement in phonics, the average improvement in listening vocabulary, and the average fluency at pre-test (separated by quartile).
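The quartile split and the improvement score described above can be sketched as follows. This is a minimal illustration under stated assumptions: the function names are hypothetical, quartiles are assigned by rank so that group sizes differ by at most one, and ties in MVRC time are broken by input order (the source does not say how ties were handled).

```python
from statistics import mean


def assign_quartiles(hours):
    """Return a rank-based quartile (1 to 4) for each student's MVRC time."""
    n = len(hours)
    order = sorted(range(n), key=lambda i: hours[i])  # stable: ties keep input order
    quartiles = [0] * n
    for rank, idx in enumerate(order):
        quartiles[idx] = rank * 4 // n + 1
    return quartiles


def mean_gain_by_quartile(hours, pre, post):
    """Average improvement (post-test minus pre-test) within each quartile."""
    groups = {q: [] for q in (1, 2, 3, 4)}
    for q, before, after in zip(assign_quartiles(hours), pre, post):
        groups[q].append(after - before)
    return {q: mean(gains) for q, gains in groups.items() if gains}


# Hypothetical data for eight students (not taken from the study).
hours = [10, 40, 20, 30, 50, 60, 70, 80]
pre = [1.0] * 8
post = [1.5, 2.0, 1.5, 2.0, 2.5, 2.5, 3.0, 3.0]
print(mean_gain_by_quartile(hours, pre, post))
```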

Second-Grade Findings

Figure 1 illustrates the 2nd-grade improvements in reading fluency, separated by quartiles of data (N = 60 or 61 per quartile). A one-way ANOVA revealed a significant effect of MVRC time on reading fluency, F(3, 238) = 3.38, p < 0.05, η² = 0.04. Bonferroni's post-hoc pair-wise comparisons revealed a significant difference between the first quartile (MVRC time: M = 36 hours; fluency improvement: M = 0.83) and the last quartile (MVRC time: M = 71 hours; fluency improvement: M = 1.38), p < 0.05. By comparison, improvement in neither of the two control measures (phonics, listening vocabulary) was affected by MVRC time, ps > 0.34.

Figure 1. Average improvement of 2nd-grade reading fluency, as a function of hours practiced (separated into quartiles). Error bars represent standard errors. *Significant difference obtained with Bonferroni pair-wise comparisons (p < 0.05).
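For readers who want to trace how the reported F and η² values relate, the following sketch computes a one-way ANOVA from raw group data and recovers η² from a reported F statistic and its degrees of freedom. The function names are illustrative and the example data are hypothetical; the identity η² = (F × df_between) / (F × df_between + df_within) holds for a one-way design.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within, eta_squared) for a list of samples."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, group_means) for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)
    return f_stat, df_between, df_within, eta_squared


def eta_squared_from_f(f_stat, df_between, df_within):
    """Recover eta squared from a reported one-way F and its degrees of freedom."""
    return (f_stat * df_between) / (f_stat * df_between + df_within)


# Consistency check against the 2nd-grade result reported above.
print(round(eta_squared_from_f(3.38, 3, 238), 2))
```

Applied to F(3, 238) = 3.38, the identity gives a value that rounds to the reported η² of 0.04.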

Third-Grade Findings

Figure 2 illustrates the 3rd-grade improvements in reading fluency, separated by quartiles of data (N = 72 or 73 per quartile). Mirroring our findings with 2nd-graders, a one-way ANOVA revealed a significant effect of MVRC time on improvements in reading fluency, F(3, 286) = 4.68, p < 0.01, η² = 0.05. The effect stems from significant differences between the first (MVRC time: M = 30 hours; fluency improvement: M = 1.03) and last quartile (MVRC time: M = 75 hours; fluency improvement: M = 1.69), as well as between the third (MVRC time: M = 55 hours; fluency improvement: M = 1.18) and last quartile, Bonferroni's ps < 0.05. MVRC time also had a significant effect on phonics improvements, F(3, 286) = 4.21, p < 0.01, η² = 0.04. However, MVRC time did not significantly affect listening vocabulary, p > 0.06, η² = 0.03.

Figure 2. Average improvement of 3rd-grade reading fluency, as a function of hours practiced (separated into quartiles). Error bars represent standard errors. *Significant difference obtained with Bonferroni pair-wise comparisons (p < 0.05).

Fourth-Grade Findings

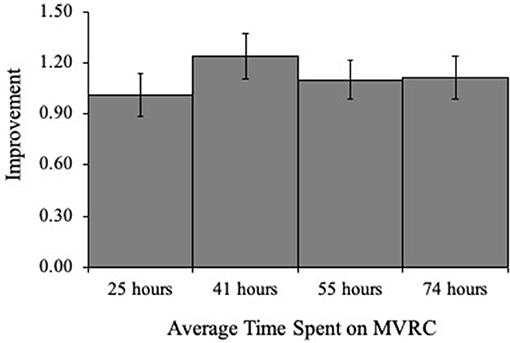

Figure 3 illustrates the 4th-grade improvements in reading fluency, separated by quartiles of data (N = 79 per quartile). Unlike what we found for 2nd- and 3rd-graders, a one-way ANOVA revealed no significant effect of MVRC time on reading fluency, p > 0.65, η² < 0.01. This result did not change when we implemented the statistically more powerful linear contrast. Thus, for 4th-graders, time on MVRC did not have the expected effect. Neither phonics nor listening vocabulary changed as a function of MVRC time, ps > 0.35.

Figure 3. Average improvement of 4th-grade reading fluency, as a function of hours practiced (separated into quartiles). Error bars represent standard errors.

Fifth-Grade Findings

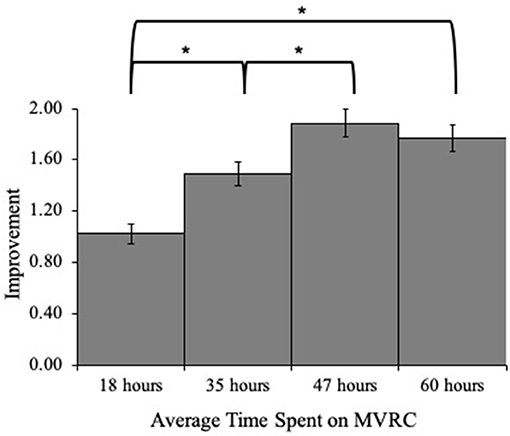

Figure 4 illustrates the 5th-grade improvements in reading fluency, separated by quartiles of data (N = 220 per quartile). Mirroring our findings with 2nd- and 3rd-graders, a one-way ANOVA revealed a significant effect of MVRC time on fluency improvement, F(3, 876) = 15.64, p < 0.01, η² = 0.05. Bonferroni's post-hoc pair-wise comparisons revealed significant differences between the first (MVRC time: M = 18 hours; fluency improvement: M = 1.02) and second quartile (MVRC time: M = 35 hours; fluency improvement: M = 1.49), as well as between the second and third quartile (MVRC time: M = 47 hours; fluency improvement: M = 1.89), ps < 0.05. The first quartile also differed from the last quartile (MVRC time: M = 60 hours; fluency improvement: M = 1.77), p < 0.05.

Figure 4. Average improvement of 5th-grade reading fluency, as a function of hours practiced (separated into quartiles). Error bars represent standard errors. *Significant difference obtained with Bonferroni pair-wise comparisons (p < 0.05).

For 5th-graders, MVRC time also affected changes in the two control measures. Specifically, in phonics, F(3, 876) = 6.07, p < 0.01, η² = 0.02, the first quartile (phonics improvement: M = −0.09) and the second quartile (M = 0.08) differed significantly from the last quartile (M = 0.50), Bonferroni ps < 0.05. And in listening vocabulary, F(3, 876) = 4.12, p < 0.01, η² = 0.01, the first quartile (vocabulary improvement: M = −0.70) differed significantly from the last quartile (M = 0.07), Bonferroni p < 0.05.

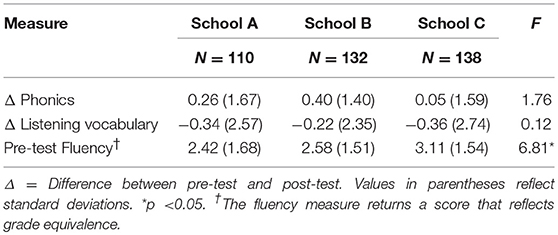

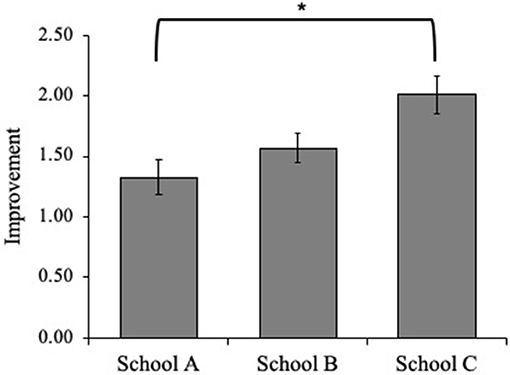

Given the high number of 5th-graders, we were able to analyze improvement in reading fluency as a function of school. Five of the schools had sufficiently high numbers of students (N > 100). Of these five schools, we decided against including two, given knowledge about special circumstances (e.g., one was a summer school). Figure 5 illustrates the improvements in reading fluency for the three remaining schools. Interestingly, improvement in reading fluency differed by school, even though we controlled for the amount of time students spent on the MVRC program, one-way ANCOVA F(3, 376) = 10.94, p < 0.01, η² = 0.08. Thus, even though students were at the same grade level, and presented with the exact same online reading tool for similar durations, the degree to which they benefited from it differed (see Table 4 for additional information).

Figure 5. Average improvement of 5th-grade reading fluency as a function of school. Error bars represent standard errors. *Significant difference obtained with Bonferroni pair-wise comparisons (p < 0.05).

Sixth-Grade Findings

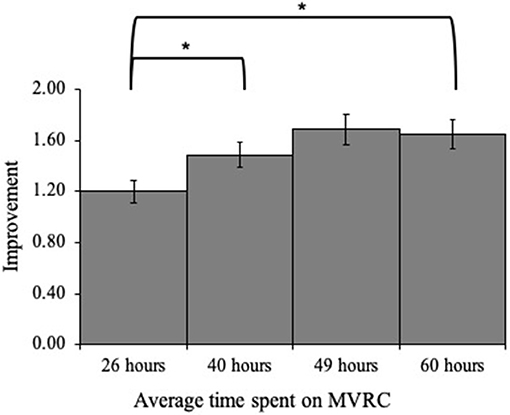

Figure 6 illustrates the improvements in reading fluency found for 6th-graders, separated by quartiles of data (N = 200 or 201 per quartile). Effects of MVRC time on reading fluency were comparable to those obtained for 5th-graders, F(3, 799) = 4.26, p < 0.01, η² = 0.02. Bonferroni's post-hoc pair-wise comparisons revealed significant differences between the first (MVRC time: M = 24 hours; fluency improvement: M = 1.21) and second quartile (MVRC time: M = 40 hours; fluency improvement: M = 1.48), as well as between the first and third quartile (MVRC time: M = 49 hours; fluency improvement: M = 1.69), ps < 0.05. The first quartile also differed from the last quartile (MVRC time: M = 60 hours; fluency improvement: M = 1.65), p < 0.05. MVRC time did not affect improvements in phonics or listening vocabulary, ps > 0.06.

Figure 6. Average improvement of 6th-grade reading fluency, as a function of hours practiced (separated into quartiles). Error bars represent standard errors. *Significant difference obtained with Bonferroni pair-wise comparisons (p < 0.05).

In sum, we found robust effects of MVRC time on improvement in reading fluency, consistently more so than on improvements in phonics or in listening vocabulary. For example, while MVRC time affected reading fluency in Grades 2, 3, 5, and 6, it affected listening vocabulary only in Grade 5. This indicates that MVRC practice operates as designed, primarily affecting students' reading fluency. However, specifics of the school appear to matter. Incidentally, the school that benefited the most from MVRC practice was rated as a “D” school by the state's Department of Education. In contrast, the two schools that benefited least from MVRC were rated as “F” schools.

District 2: Quasi-Experiment With Grade 2 Students

Our next question was whether the positive effect of the MVRC program holds up in a randomized control trial. District 2 made such a research design possible with 2nd-grade classrooms. At this grade level, some children might have made the transition to reading for comprehension, while other children might still be focused on decoding and the mechanics of reading. Thus, we can shed light on the effect of MVRC during this transitional time.

Method

Description of the School District, Schools, and Students

District 2 serves a suburban community of middle- and upper-middle class families. Six classrooms participated from the same public elementary school. All teachers reported having a bachelor's degree, two had received a master's degree, and all others had attended conferences and training to enhance their teaching ability. The number of years teaching ranged from 14 to 23 years. Out of the six classrooms, data from 152 students were available. Pre-test and post-test were completed by 142 students. None of these students had an identified learning disability.

Description of the Measures

In addition to the measures of reading fluency, phonics, and listening vocabulary, we had access to students' scores from a state-wide assessment (MAP reading percentile), their scores from the Test of Silent Word Reading Fluency (TOSWRF-2; Mather et al., 2014), and their scores from the Test of Silent Contextual Reading Fluency (TOSCRF-2; Hammill et al., 2006). The TOSWRF-2 and the TOSCRF-2 are 3-min assessments designed to measure the rate and accuracy at which the students can recognize words. Printed words are strung together without spaces and students are asked to draw lines where the spaces should be between words. Words are either random (TOSWRF-2) or embedded in sentences (TOSCRF-2).

Design and Procedure

Classrooms were randomly assigned to one of three groups: (1) instruction as usual, (2) use of an alternative computer-based reading program, and (3) use of the MVRC program. The alternative computer-based reading program was decided upon by the school. Teachers were asked to allow their students to work on the assigned reading program for 30 min per day for the duration of 9 weeks (either the alternative program or MVRC). At the end of the intervention, teachers were asked to provide feedback. They were also asked to decide whether they would use the technology during the subsequent semester. In order to protect confidentiality, we present the teacher results in the aggregate, across Districts 2 and 3 (see Discussion). Standardized assessments (MAP, TOSWRF-2; TOSCRF-2) were administered prior to the intervention.

Results and Discussion

In a set of preliminary analyses, we used the standardized assessments obtained during pre-testing to validate the reading fluency measure returned by the MVRC screener. As expected, correlations were high: r = 0.68 (MAP), r = 0.54 (TOSWRF-2), r = 0.49 (TOSCRF-2), ps < 0.01. In contrast, fluency grade equivalence scores correlated only moderately with the control measures of phonics and listening vocabulary, rs < 0.34, speaking to the validity of the reading-fluency measure.
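The validation step above rests on Pearson correlations between the screener's fluency score and each outside measure. A minimal sketch of that computation is given below; the function name and the example scores are hypothetical and are not taken from the study's data.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x, mean_y = mean(x), mean(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return sxy / sqrt(sxx * syy)


# Hypothetical pre-test scores for five students.
fluency_grade_equivalent = [1.8, 2.4, 2.1, 3.0, 2.7]
map_reading_percentile = [35, 60, 48, 82, 70]
print(round(pearson_r(fluency_grade_equivalent, map_reading_percentile), 2))
```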

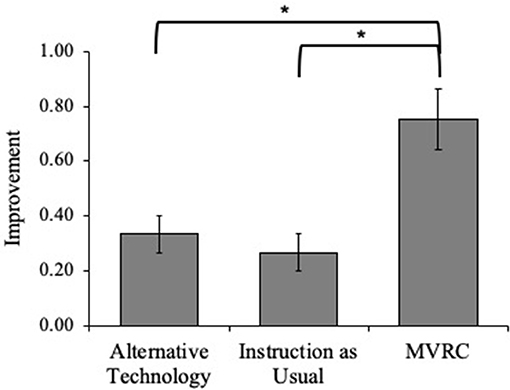

A one-way ANOVA determined that there was no effect of condition on reading fluency at pre-test, F(2, 139) = 0.12, p = 0.89 (see Table 5 for more information). Figure 7 shows the average improvement in reading fluency as a function of condition. A one-way ANOVA revealed a significant effect of condition on fluency improvement, F(2, 139) = 9.62, p < 0.01, η² = 0.12. As predicted, Bonferroni's post-hoc test revealed that the improvement was significantly greater in the MVRC group (M = 0.76, SD = 0.63) than in the alternative technology group (M = 0.33, SD = 0.48) or the instruction as usual group (M = 0.27, SD = 0.45), ps < 0.05. Condition had no effect on the improvement in phonics or listening vocabulary, ps > 0.16.

Figure 7. Average improvement of 2nd-grade reading fluency as a function of condition. Error bars represent standard errors. *Significant difference obtained with Bonferroni pair-wise comparisons (p < 0.01).
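The Bonferroni procedure used for the pair-wise comparisons can be sketched as follows. With three conditions there are three pair-wise comparisons, and each raw p-value is multiplied by that number (capped at 1). The condition labels mirror the text, but the raw p-values below are hypothetical placeholders, since the source reports only the adjusted thresholds.

```python
from itertools import combinations


def pairwise_labels(conditions):
    """List every unordered pair of conditions to be compared."""
    return list(combinations(conditions, 2))


def bonferroni_adjust(p_values):
    """Multiply each raw p-value by the number of comparisons, capped at 1.0."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


conditions = ["instruction as usual", "alternative program", "MVRC"]
pairs = pairwise_labels(conditions)

# Hypothetical raw p-values, one per pair (not reported in the source).
raw_p = [0.50, 0.004, 0.002]
for pair, p_adj in zip(pairs, bonferroni_adjust(raw_p)):
    print(pair, p_adj)
```

The same logic applies to the quartile analyses, where four groups yield six pair-wise comparisons.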

District 3: Quasi-Experiment With Grade 4 Students

District 3 participated in a randomized control trial with 4th-graders. This grade level is particularly important, given the surprising findings with 4th-graders from District 1.

Method

Description of the School District, Schools, and Students

District 3 serves a community of suburban upper-middle class families. Nine classrooms participated from the same public elementary school. All teachers had received a bachelor's degree, two had received a master's degree, and one had participated in graduate classes. All teachers reported engaging in ongoing professional development to enhance their teaching ability. The number of years teaching ranged from <1 year to 27 years. Out of the nine classrooms, data from 183 students were available. Pre-test and post-test were completed by 172 students. None of these students had an identified learning disability.

Measurements, Design, and Procedure

The same three measures from the MVRC screener were used as in the other two districts. They were administered before and after the 9-week intervention. Classrooms were randomly assigned to one of three conditions: (1) instruction as usual, (2) use of an alternative computer-based reading program, and (3) use of MVRC. As was the case for District 2, the alternative computer-based reading program was decided upon by the school. It was not the same as the reading program chosen by District 2, and the researchers had no control over this choice. Teachers received the same instructions as teachers from District 2.

Results and Discussion

A one-way ANOVA determined that there was a significant effect of condition on reading fluency at pre-test, F(2, 169) = 9.95, p < 0.01, η² = 0.10 (see Table 6 for additional information). Bonferroni's post-hoc test revealed that this effect favored the alternative technology group, which had a significantly higher initial reading fluency (M = 4.21, SD = 0.62) than the MVRC group (M = 3.62, SD = 0.81) and the instruction as usual group (M = 3.57, SD = 0.95), ps < 0.05.

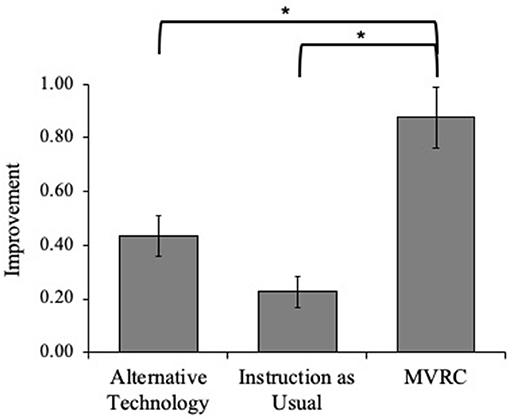

Figure 8 shows the average improvement in reading fluency as a function of condition. There was a significant effect of condition, F(2, 169) = 13.31, p < 0.01, η² = 0.14. Bonferroni's post-hoc test showed that the MVRC group (M = 0.88, SD = 0.97) made larger gains than the alternative technology group (M = 0.43, SD = 0.50) and the instruction as usual group (M = 0.23, SD = 0.42), ps < 0.01. Unlike the effect on fluency improvement, condition had no effect on the improvement on the control measures of phonics or listening vocabulary, ps > 0.09.

Figure 8. Average improvement of 4th-grade reading fluency, as a function of condition. Error bars represent standard errors. *Significant difference obtained with Bonferroni pair-wise comparisons (p < 0.01).

General Discussion

The goal of the current study was to test the effect of the MindPlay Virtual Reading Coach (MVRC) on the reading fluency of elementary-school children. Three school districts were involved, whether by making available existing data sets (Grades 2–6), or by participating in a randomized control trial (Grades 2 and 4). Results show important strengths of MVRC, as well as some challenges. Specifically, while our analyses established a link between improvement of reading fluency and MVRC practice time, this effect was modulated by grade level and school. The two quasi-experimental studies provided conclusive evidence for the positive effect of MVRC on reading fluency. At the same time, teachers expressed concerns with the reading program, preferring other types of pedagogy when given a choice. These findings shed light on the complexity inherent in supporting children's emerging literacy skills.

Strengths of the MVRC

MVRC offers a benchmark assessment of reading fluency that returns a grade equivalent score. We found that this fluency score correlated highly with three outside measures: a state-wide assessment and two standardized measures of orthographic reading skills. In contrast, fluency scores correlated less with the control measures of phonics and listening vocabulary. This speaks to the construct validity of the fluency measure. As such, the MVRC fluency assessment might be a cost-effective alternative to currently available fluency measures (e.g., DIBELS Oral Reading Fluency®). It can be administered to a larger number of students simultaneously, and its two-step feature allows for adaptability that is missing from paper-and-pencil options.

MVRC's effect on reading fluency was most evident in the experimental studies. Specifically, for 2nd-graders (District 2), the MVRC group improved by 0.76 grade levels over the course of 9 weeks. In contrast, the other groups improved by less than half of that amount (0.33 grade levels for the alternative technology group and 0.27 grade levels for the instruction as usual group). The same pattern emerged for 4th-graders (District 3): The MVRC group improved by 0.88 grade levels, more than double the fluency improvement seen in the other two groups (0.43 grade levels for the alternative technology group and 0.23 grade levels for the instruction as usual group). MVRC did not account for improvements in phonics or listening vocabulary, which is consistent with the program's stated goal of addressing reading fluency.
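The size of these differences can be made concrete by dividing the MVRC group's mean gain by each comparison group's mean gain. The following is a minimal sketch that uses only the group means reported above; the dictionary layout, group labels, and function name are illustrative assumptions rather than part of the original analysis.

```python
# Mean fluency gains (grade-equivalent units over the 9-week intervention),
# taken from the results reported for Districts 2 and 3.
gains = {
    "Grade 2 (District 2)": {"MVRC": 0.76, "alternative": 0.33, "usual": 0.27},
    "Grade 4 (District 3)": {"MVRC": 0.88, "alternative": 0.43, "usual": 0.23},
}


def gain_ratios(group_gains):
    """Return the MVRC gain divided by each comparison group's gain."""
    mvrc = group_gains["MVRC"]
    return {
        name: mvrc / gain
        for name, gain in group_gains.items()
        if name != "MVRC"
    }


ratios = {grade: gain_ratios(group) for grade, group in gains.items()}

for grade, by_group in ratios.items():
    for name, ratio in by_group.items():
        print(f"{grade}: MVRC gain is {ratio:.1f}x the {name} group's gain")
```

In both grades, every ratio exceeds 2. Note that these are ratios of group means only; they do not replace the significance tests reported in the Results sections.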

The quartile analyses carried out with data from District 1 provide important nuances to the findings obtained with the randomized control trials. Across grades and schools, we found that the amount of time spent on MVRC predicted reading fluency to a higher degree than it predicted phonics or listening vocabulary. Together, these findings suggest that the time spent on MVRC maps directly onto improvements in the central skill of reading fluency. This builds upon the broader literature on the importance of filling gaps in fundamental reading skills (e.g., Gibson et al., 2011). Importantly, we found that the intervention helped improve reading fluency even for children from middle-class homes who are not specifically at risk for reading failure (Districts 2 and 3).

Weaknesses of the MVRC

Despite promising results of MVRC, we found that the program faces some challenges. Evidence for such challenges comes from teacher feedback (Districts 2 and 3): When given a choice, none of the teachers assigned to the MVRC condition wanted to continue with the program. In contrast, teachers were very satisfied with the alternative technology. This suggests that components of the MVRC are incongruent with the learning environment that teachers seek to create for their students. Teacher concerns were multi-faceted, ranging from perceived student frustration to feeling unable to connect effectively with students during their MVRC learning experience. There was no apparent difference in feedback between 2nd- and 4th-grade teachers, indicating that the expressed concerns are not tied to the curricular requirements of a particular grade level. This finding highlights the importance of integrating basic-skills training with motivational considerations.

Relatedly, we found that time spent on the program was not a reliable predictor of learning for 4th-graders in District 1: The amount of time on MVRC was unrelated to improvement in reading fluency, even though many children were logged in for over 70 h over the course of a year. While these students improved in reading fluency by approximately one grade level, this was equivalent to the improvement of children who logged in for an average of only 25 h. The 4th-graders in the high-use group might have hit a roadblock that hindered them from making progress, despite spending additional time on the program. Feeling stuck with a program might have led to student frustrations, which, in turn, could affect the teachers' view of the program (Messer and Nash, 2018; cf., Fan and Williams, 2018).

It is also possible that some students logged into the program merely to comply with the instructions of their teacher, without being motivated to learn. This is likely to be a general challenge for online programs that operate without face-to-face supervision (Kearsley and Shneiderman, 1998; Kauffman, 2004; Campuzano et al., 2009). MVRC might be particularly vulnerable on this front, given its emphasis on foundational skills. There are obvious limits to the degree to which phonics and grammar activities are fun, whether the focus is on delivering a lesson or providing children with practice. By comparison, a program that focuses more directly on story reading might have the option to give children choices about what reading passage to read, increasing the fun factor of the enrichment. It remains to be seen how online programs focused on foundational skills can sustain children's intrinsic learning motivation.

Limitations of the Current Study

There are several limitations of our study that need to be considered when attempting to generalize these findings. Regarding the experimental data, one limitation is the choice of the alternative reading technology. The districts chose the alternative reading program themselves, without necessarily trying to offer a direct comparison to the specific philosophy of MVRC. The alternative technology merely allowed us to control for factors related to generic online reading activity (e.g., students being on the computer for a certain amount of time). For this reason, we cannot speak to the relative strengths or weaknesses of the specific programs chosen. Future work will need to contrast the effect of programs that differ in key aspects.

Also problematic are some inconsistencies in our findings: For example, while 4th-graders from District 1 did not appear to benefit from MVRC in a linear way, our findings from District 3 showed strong benefits for 4th-graders. Similarly, while MVRC affected improvements in reading fluency overall, schools differed substantially in fluency improvements, even when MVRC time was accounted for (Grade 5 from District 1). There are, of course, many differences among the participating units, which could explain the inconsistencies. However, these explanations would be speculative, leaving open the question of the full strength of MVRC in different circumstances (see also Ertmer et al., 2001).

Finally, our study provided only very limited insights about why teachers had concerns with MVRC. Our focus groups captured the general mood and mimicked a naturalistic scenario of teacher exchange of information. However, they are limited in their capacity to trace the source of attitudes. It is possible, for example, that teachers' concerns stem from students' negative attitudes toward the program. Or they could stem from teachers lacking the resources to support their students effectively. Additional probing would be necessary, for example with teacher surveys, interviews with students, or direct observations, to shed light on the basis for teacher dissatisfaction. Absent data from such methods, speculations about what made for the negative teacher experience would be premature.

Conclusions

Online programs have an important role to play in helping children read proficiently: They can create an individualized lesson plan and provide children with enrichment that fits their skill levels. Here we focused specifically on MVRC and its effect on reading fluency in elementary school (Grades 2–6). For these grade levels, MVRC concentrates primarily on phonics and grammar, the idea being that these basic skills will improve children's reading fluency even if students do not choose their reading. Our results from three school districts provide support for this claim. Specifically, our analyses showed that the total time spent with the program contributed to children's increase in reading fluency, more so than to their improvements in listening vocabulary. And the quasi-experimental design showed that the MVRC group improved in reading fluency more than children in the two control conditions. Future work will need to focus on the effect of idiosyncratic factors inherent in school settings and how those factors moderate the effectiveness of computerized programs that seek to address foundational skills.

Data Availability

The datasets generated for this study are available upon request to the corresponding author.

Ethics Statement

This study was carried out in accordance with a research protocol approved by the Institutional Review Board of the University of Cincinnati (Protocol # 2014-4138). Our subjects were the administrators from Districts 1, 2, and 3, as well as the participating teachers from Districts 2 and 3. All subjects gave written informed consent in accordance with the Declaration of Helsinki. Specifically, administrators agreed to make available de-identified data for research purposes, and teachers agreed to participate in interviews. Written informed parental consent was not required as per the protocol approved by the IRB of the University of Cincinnati and as per national regulations. This is because (1) students did nothing that would be outside of what they are typically asked to do in a school setting, and (2) all data were provided in de-identified format.

Author Contributions

All five authors of this manuscript are accountable for the content of the work. They have contributed to various aspects of the project collectively, including design of the study, recruitment, data collection, data analysis, and dissemination.

Funding

Partial funding was provided by MindPlay, as well as by the University of Cincinnati and North Carolina State University.

Conflict of Interest Statement

The research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. All of the opinions expressed in this paper are only those of the authors, arrived at through in-depth analysis of the available research and data. There have been no incentives of any kind tied to the outcome of this study.

Acknowledgments

We thank the teachers and administrators who agreed to participate in the study. We also thank MindPlay for access to their product. MindPlay staff was available for questions when aspects of their product were unclear.

Footnotes

1. U.S. Department of Education, Institute of Education Sciences, National Center for Education Evaluation and Regional Assistance, What Works Clearinghouse.

2. Patterns of results remained the same when we used relative improvement as the outcome measure (i.e., proportion of improvement as a function of a student's pre-test).

References

Alvermann, D. E., Unrau, N., and Ruddell, R. B. (2013). Theoretical Models and Processes of Reading. Newark: International Reading Association.

American Academy of Pediatrics News (2014). Parents who Read to Their Children Nurture More Than Literary Skills. Washington, DC: AAP News.

Arvans, R. (2009). Improving Reading Fluency and Comprehension in Elementary Students Using Read Naturally. Ann Arbor, MI: Western Michigan University.

Baker, L., Dreher, M. J., and Guthrie, J. T. (2000a). Engaging Young Readers: Promoting Achievement and Motivation. New York, NY: Guilford Press.

Baker, S., Gersten, R., and Keating, T. (2000). When less may be more: A 2-year longitudinal evaluation of a volunteer tutoring program requiring minimal training. Read. Res. Q. 35, 494–519. doi: 10.1598/RRQ.35.4.3

Balu, R., Zhu, P., Doolittle, F., Schiller, E., Jenkins, J., and Gersten, R. (2015). Evaluation of Response to Intervention Practices for Elementary School Reading. Retrieved from: http://ies.ed.gov/ncee/pubs/20164000/pdf/20164000.pdf

Bauer-Kealey, M., and Mather, N. (2018). Use of an online reading intervention to enhance the basic reading skills of community college students. Commu. College J. Res. Pract. 43, 631–647. doi: 10.1080/10668926.2018.1524335

Bosman, A. M., Schraven, J. L., Segers, E., and Broek, P. (2017). “How to teach children reading and spelling,” in Developmental Perspectives in Written Language and Literacy: In Honor of Ludo Verhoeven, eds E. Segers and P. van den Broek, 277–293.

Bosman, A. M., and Van Orden, G. C. (1997). “Why spelling is more difficult than reading,” in Learning to Spell: Research, Theory, and Practice Across Languages, eds C. A. Perfetti, L. Rieben, and M. Fayol (London, UK: Routledge) 173–194.

Campuzano, L., Dynarski, M., Agodini, R., and Rall, K. (2009). Effectiveness of Reading and Mathematics Software Products: Findings From Two Student Cohorts. Washington, DC: National Center for Education Evaluation and Regional Assistance; Institute of Education Sciences.

Chambers, A., Mather, N., and Stoll, K. (2013). MindPlay Virtual Reading Coach: Initial Research Study. Retrieved from https://mindplay.com/wp-content/uploads/2015/06/SummerResearch-20131.pdf

Chapman, J. W., and Tunmer, W. E. (1997). A longitudinal study of beginning reading achievement and reading self-concept. Br. J. Edu. Psychol. 67, 279–291. doi: 10.1111/j.2044-8279.1997.tb01244.x

Chapman, J. W., Tunmer, W. E., and Prochnow, J. E. (2000). Early reading-related skills and performance, reading self-concept, and the development of academic self-concept: a longitudinal study. J. Educ. Psychol. 92:703. doi: 10.1037//0022-0663.92.4.703

Cognitive Concepts Inc (2003). Outcomes Report: Los Angeles Unified School District. California: Cognitive Concepts Inc.

Cordewener, K. A., Hasselman, F., Verhoeven, L., and Bosman, A. M. (2018). The role of instruction for spelling performance and spelling consciousness. J. Exp. Edu. 86, 135–153. doi: 10.1080/00220973.2017.1315711

Echols, L. D., West, R. F., Stanovich, K. E., and Zehr, K. S. (1996). Using children's literacy activities to predict growth in verbal cognitive skills: a longitudinal investigation. J. Educ. Psychol. 88:296. doi: 10.1037//0022-0663.88.2.296

Ehri, L. C., Nunes, S. R., Stahl, S. A., and Willows, D. M. (2001). Systematic phonics instruction helps students learn to read: evidence from the national reading panel's meta-analysis. Rev. Educ. Res. 71, 393–447. doi: 10.3102/00346543071003393

Ertmer, P. A., Gopalakrishnan, S., and Ross, E. M. (2001). Technology-using teachers. J. Res. Comput. Edu. 33, 1–27.

Fan, W., and Williams, C. (2018). The mediating role of student motivation in the linking of perceived school climate and academic achievement in reading and mathematics. Front. Edu. 3:50. doi: 10.3389/feduc.2018.00050

Fenty, N., Mulcahy, C., and Washburn, E. (2015). Effects of computer-assisted and teacher-led fluency instruction on students at risk for reading failure. Learn. Disabil. 13, 141–156.

Fitzgerald, R., and Hartry, A. (2008). What Works in Afterschool Programs: The Impact of a Reading Intervention on Student Achievement in the Brockton Public Schools (phase II). Berkeley, CA: MPR Associates, Inc. and the National Partnership for Quality Afterschool Learning at SEDL.

Fountas, I. C., and Pinnell, G. S. (2006). Teaching for Comprehending and Fluency: Thinking, Talking, and Writing About Reading, K-8. Portsmouth, NH: Heinemann.

Froiland, J. M., and Oros, E. (2014). Intrinsic motivation, perceived competence and classroom engagement as longitudinal predictors of adolescent reading achievement. Edu. Psychol. 34, 119–132. doi: 10.1080/01443410.2013.822964

Gale, D. (2006). The Effect of Computer-Delivered Phonological Awareness Training on the Early Literacy Skills of Students Identified as at-Risk for Reading Failure. Tampa, FL: University of South Florida.

Gibson, L., Cartledge, G., and Keyes, S. E. (2011). A preliminary investigation of supplemental computer-assisted reading instruction on the oral reading fluency and comprehension of first-grade African American urban students. J. Behav. Edu. 20, 260–282. doi: 10.1007/s10864-011-9136-7

Griffiths, Y. M., and Snowling, M. J. (2002). Predictors of exception word and nonword reading in dyslexic children: the severity hypothesis. J. Edu. Psychol. 94:34. doi: 10.1037/0022-0663.94.1.34

Hammill, D. D., Wiederholt, J. L., and Allen, E. A. (2006). TOSCRF: Test of Silent Contextual Reading Fluency. Dallas, TX: Pro-Ed.

Hancock, C. M. (2002). Accelerating reading trajectories: the effects of dynamic research-based instruction. Dissert. Abstr. Int. 63:2139A.

Hill, D. V., and Lenard, M. A. (2016). The Impact of Achieve3000 on Elementary Literacy Outcomes: Randomized Control Trial Evidence, 2013-14 to 2014-15 (DRA Report No. 16.02). Cary, NC: Wake County Public School System, Data and Accountability Department.

Huo, S., and Wang, S. (2017). The effectiveness of phonological-based instruction in english as a foreign language students at primary school level: a research synthesis. Front. Edu. 2:15. doi: 10.1007/978-981-10-4030-6

Jamshidifarsani, H., Garbaya, S., Lim, T., Blazevic, P., and Ritchie, J. M. (2019). Technology-based reading intervention programs for elementary grades: an analytical review. Comput. Edu. 128, 427–451. doi: 10.1016/j.compedu.2018.10.003

Jensen, J. W. (2015). The effect of my reading coach on the change in reading scores (Doctoral dissertation). Baker University, Baldwin City, KS, United States.

Kauffman, D. F. (2004). Self-regulated learning in web-based environments: instructional tools designed to facilitate cognitive strategy use, metacognitive processing, and motivational beliefs. J. Edu. Comput. Res. 30, 139–161. doi: 10.2190/AX2D-Y9VM-V7PX-0TAD

Kealey, M. (2017). Meeting the diverse needs of community college students: using computer assisted instruction to improve reading skills (Doctoral dissertation). Arizona State University, Tempe, AZ, United States.

Kearsley, G., and Shneiderman, B. (1998). Engagement theory: a framework for technology-based teaching and learning. Edu. Technol. 38, 20–23. doi: 10.1007/BF02299671

Kemp, S. C. (2006). Teaching to read naturally: examination of a fluency training program for third grade students. Dissert. Abstr. Int. 67, 95–2447.

Kim, J. S., Samson, J. F., Fitzgerald, R., and Hartry, A. (2010). A randomized experiment of a mixed-methods literacy intervention for struggling readers in grades 4–6: effects on word reading efficiency, reading comprehension and vocabulary, and oral reading fluency. Read. Writ. 23, 1109–1129. doi: 10.1007/s11145-009-9198-2

Kozma, R. B. (2003). Technology and classroom practices: an international study. J. Res. Technol. Edu. 36, 1–14. doi: 10.1080/15391523.2003.10782399

Lepola, J., Poskiparta, E., Laakkonen, E., and Niemi, P. (2005). Development of and relationship between phonological and motivational processes and naming speed in predicting word recognition in grade 1. Sci. Studies Read. 9, 367–399. doi: 10.1207/s1532799xssr0904_3

Macaruso, P., Hook, P. E., and McCabe, R. (2006). The efficacy of computer-based supplementary phonics programs for advancing reading skills in at-risk elementary students. J. Res. Read. 29, 162–172. doi: 10.1111/j.1467-9817.2006.00282.x

Madden, N. A., Slavin, R. E., Karweit, N. L., Dolan, L. J., and Wasik, B. A. (1993). Success for all: longitudinal effects of a restructuring program for inner-city elementary schools. Am. Edu. Res. J. 30, 123–148. doi: 10.3102/00028312030001123

Mann, K. (2017). Effects of the mindplay computer program on student reading achievement: an action research study (Doctoral Dissertation, University of South Carolina) Retrieved from https://scholarcommons.sc.edu/etd/4082

Mano, Q. R., and Guerin, J. (2018). Direct and indirect effects of print exposure on silent reading fluency. Read. Writ. 31, 483–502. doi: 10.1007/s11145-017-9794-5

Mather, N., Hammill, D. D., Allen, E. A., and Roberts, R. (2014). TOSWRF-2: Test of Silent Word Reading Fluency. Dallas, TX: Pro-ed.

McMaster, K. L., Fuchs, D., Fuchs, L. S., and Compton, D. L. (2005). Responding to nonresponders: an experimental field trial of identification and intervention methods. Except. Child. 71, 445–463. doi: 10.1177/001440290507100404

Meece, J. L., Anderman, E. M., and Anderman, L. H. (2006). Classroom goal structure, student motivation, and academic achievement. Ann. Rev. Psychol. 57, 487–503. doi: 10.1146/annurev.psych.56.091103.070258

Mellard, D. F., Fall, E., and Woods, K. L. (2010). A path analysis of reading comprehension for adults with low literacy. J. Learn. Disabil. 43, 154–165. doi: 10.1177/0022219409359345

Messer, D., and Nash, G. (2018). An evaluation of the effectiveness of a computer-assisted reading intervention. J. Res. Read. 41, 140–158. doi: 10.1111/1467-9817.12107

MindPlay Universal Screener™ (2018). Resource Guide, MINDPLAY®. Tucson, AZ: Division of Methods & Solutions, Inc.

MindPlay Virtual Reading Coach (2017). Resource Guide, MINDPLAY®, Tucson, AZ: Division of Methods & Solutions, Inc.

Morgan, P. L., Fuchs, D., Compton, D. L., Cordray, D. S., and Fuchs, L. S. (2008). Does early reading failure decrease children's reading motivation? J. Learn. Disabil. 41, 387–404. doi: 10.1177/0022219408321112

National Center for Education Statistics (2017). U.S. Department of Education. Washington, DC: Institute of Education Sciences.

National Reading Panel (2000). Teaching Children to Read: An Evidence-Based Assessment of the Scientific Research Literature on Reading and its Implications for Reading Instruction: Reports of the Subgroups (National Institute of Health Publication No. 00-4769). Washington, DC: National Institute of Child Health and Human Development.

Onatsu-Arvilommi, T., and Nurmi, J. E. (2000). The role of task-avoidant and task-focused behaviors in the development of reading and mathematical skills during the first school year: a cross-lagged longitudinal study. J. Edu. Psychol. 92:478. doi: 10.1037/0022-0663.92.3.478

Peterson, C. L., Burke, M. K., and Segura, D. (1999). Computer-based practice for developmental reading: Medium and message. J. Develop. Edu. 22:12.

Poskiparta, E., Niemi, P., Lepola, J., Ahtola, A., and Laine, P. (2003). Motivational-emotional vulnerability and difficulties in learning to read and spell. Br. J. Edu. Psychol. 73, 187–206. doi: 10.1348/00070990360626930

Prescott, J. E., Bundschuh, K., Kazakoff, E. R., and Macaruso, P. (2018). Elementary school–wide implementation of a blended learning program for reading intervention. J. Edu. Res. 111, 497–506. doi: 10.1080/00220671.2017.1302914

Pressley, M. (2002). Effective beginning reading instruction. J. Liter. Res. 34, 165–188. doi: 10.1207/s15548430jlr3402_3

Ransford-Kaldon, C. R., Flynt, E. S., Ross, C. L., Franceschini, L., Zoblotsky, T., Huang, Y., et al. (2010). Implementation of Effective Intervention: An Empirical Study to Evaluate the Efficacy of Fountas & Pinnell's Leveled Literacy Intervention System (LLI). Memphis, TN: Center for Research in Educational Policy (CREP).

Rashotte, C. A., MacPhee, K., and Torgesen, J. K. (2001). The effectiveness of a group reading instruction program with poor readers in multiple grades. Learn. Disabil. Quart. 24, 119–134. doi: 10.2307/1511068

Reiser, D. A. (2018). The effects of MindPlay Virtual Reading Coach (MVRC) on the spelling Growth of students in second grade (Doctoral dissertation). Arizona State University, Tempe, AZ, United States.

Ross, S. M., Morrison, G. R., and Lowther, D. L. (2010). Educational technology research past and present: balancing rigor and relevance to impact school learning. Contemp. Edu. Technol. 1, 17–35.

Schneider, D., Chambers, A., Mather, N., Bauschatz, R., Bauer, M., and Doan, L. (2016). The effects of an ICT-based reading intervention on students' achievement in Grade two. Read. Psychol. 37, 793–831. doi: 10.1080/02702711.2015.1111963

Sherrow, B. L. (2015). The effects of MindPlay Virtual Reading Coach (MVRC) on the spelling growth of students in second grade (Doctoral dissertation). Arizona State University, Tempe, AZ, United States.

Sideridis, G. D., Morgan, P. L., Botsas, G., Padeliadu, S., and Fuchs, D. (2006). Predicting LD on the basis of motivation, metacognition, and psychopathology: an ROC analysis. J. Learn. Disabil. 39, 215–229. doi: 10.1177/00222194060390030301

Silverman, R. D., Speece, D. L., Harring, J. R., and Ritchey, K. D. (2013). Fluency has a role in the simple view of reading. Sci. Stud. Read. 17, 108–133. doi: 10.1080/10888438.2011.618153

Torgesen, J., Myers, D., Schirm, A., Stuart, E., Vartivarian, S., Mansfield, W., et al. (2006). National Assessment of Title I: Interim Report. Volume II: Closing the Reading Gap: First Year Findings from a Randomized Trial of Four Reading Interventions for Striving Readers. Washington, DC: National Center for Education Evaluation and Regional Assistance; Institute of Education Sciences.

Tracey, L., Chambers, B., Slavin, R. E., Madden, N. A., Cheung, A., and Hanley, P. (2014). Success for all in england: results from the third year of a national evaluation. SAGE Open. 4, 1–10. doi: 10.1177/2158244014547031

Van Orden, G. C., Pennington, B. F., and Stone, G. O. (1990). Word identification in reading and the promise of subsymbolic psycholinguistics. Psychol. Rev. 97:488. doi: 10.1037/0033-295X.97.4.488

Vaughan, J., Crews, J., Sisk, K., and Garcia, L. (2004). The Effects of My Reading Coach™ on the reading comprehension of second graders Whose Native Language is English and Second Graders Who are Second Language Learners: An Initial Study of Programmatic Effectiveness. Heath, TX: Children's Institute of Literacy Development, Inc., Technical Report.

Wallot, S. (2014). From “cracking the orthographic code” to “playing with language”: toward a usage-based foundation of the reading process. Front. Psychol. 5:891. doi: 10.3389/fpsyg.2014.00891

Wang, J. H. Y., and Guthrie, J. T. (2004). Modeling the effects of intrinsic motivation, extrinsic motivation, amount of reading, and past reading achievement on text comprehension between US and Chinese students. Read. Res. Quart. 39, 162–186. doi: 10.1598/RRQ.39.2.2

Wexler, J., Vaughn, S., Roberts, G., and Denton, C. A. (2010). The efficacy of repeated reading and wide reading practice for high school students with severe reading disabilities. Learn. Disabil. Res. Pract. 25, 2–10. doi: 10.1111/j.1540-5826.2009.00296.x

Keywords: technology-based intervention, reading enrichment, controlled quasi-experimental design, reading fluency, children

Citation: Kloos H, Sliemers S, Cartwright M, Mano Q and Stage S (2019) MindPlay Virtual Reading Coach: Does It Affect Reading Fluency in Elementary School? Front. Educ. 4:67. doi: 10.3389/feduc.2019.00067

Received: 18 February 2019; Accepted: 26 June 2019; Published: 10 July 2019.

Edited by:

Kang Kwong Luke, Nanyang Technological University, Singapore

Reviewed by:

Angela Jocelyn Fawcett, Swansea University, United Kingdom

Angeliki Mouzaki, University of Crete, Greece

Copyright © 2019 Kloos, Sliemers, Cartwright, Mano and Stage. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Heidi Kloos, heidi.kloos@uc.edu
