- ¹Department of Higher Education, University of Surrey, Guildford, United Kingdom

- ²School of Psychology, University of Surrey, Guildford, United Kingdom

- ³School of Life and Health Sciences, Aston University, Birmingham, United Kingdom

Developing the requisite skills for engaging proactively with feedback is crucial for academic success. This paper reports data concerning the perceived usefulness of the Developing Engagement with Feedback Toolkit (DEFT) in supporting the development of students' feedback literacy skills. In Study 1, student participants were surveyed about their use of feedback, and their perceptions of the utility of the DEFT resources. In Study 2, students discussed the resources in focus groups. Study 3 compared students' responses on a measure of feedback literacy before and after they completed a DEFT feedback workshop. Participants perceived the DEFT favorably, and the data indicate that such resources may enhance students' general feedback literacy. However, the data raise questions about when such an intervention would be of greatest value to students, the extent to which students would or should engage voluntarily, and whether it would engage those students with the greatest need for developmental support.

Feedback can strongly benefit students' learning and skill development (e.g., Hattie and Timperley, 2007). Reaping these benefits, though, depends on the quality of students' engagement with the feedback, and their ability to demonstrate proactive recipience, defined as “a state or activity of engaging actively with feedback processes” (Winstone et al., 2017a, p. 17; see also Handley et al., 2011; Price et al., 2011). Numerous studies suggest that students do not always feel sufficiently equipped to implement the feedback they receive (e.g., Weaver, 2006; Burke, 2009), and indeed, the research literature contains many examples of minimal engagement with feedback by students (e.g., Gibbs and Simpson, 2004; Hounsell, 2007; Sinclair and Cleland, 2007; Carless and Boud, 2018). Researchers and educators have responded to this problem by testing out various initiatives for improving students' engagement with feedback, with varying success (Winstone et al., 2017a). In this paper, we report an exploration of the perceived usefulness of the Developing Engagement with Feedback Toolkit (DEFT), a set of freely available and flexible resources, designed for the purpose of supporting educators and students to build students' proactive recipience skills (Winstone and Nash, 2016; the fully editable DEFT resources are available open-access at https://tinyurl.com/FeedbackToolkit).

Many initiatives for developing feedback practice in higher education align with a cognitivist transmission model, insofar as their focus is on making changes to the mechanics of the feedback process (e.g., reducing the turnaround time, or developing new feedback pro formas; see Boud and Molloy, 2013). In contrast, a socio-constructivist approach emphasizes the importance of students' engagement with feedback, and the impact of feedback on their subsequent learning (Price et al., 2007; Ajjawi and Boud, 2017). The latter approach is important as it encourages us to conceptualize feedback as a process in which both staff and students have roles to play. Feedback is unlikely to have optimal impact if emphasis is placed solely on the ways in which educators craft feedback. Instead, it is important that educators create environments in which using feedback is perceived to be both possible and valuable, and in which students perceive a need to commit their time and energy to engaging with and implementing the advice they receive (Nash and Winstone, 2017).

When educators see students failing to make the most of feedback opportunities, some might assume that students lack motivation and commitment (see Higgins et al., 2002 for discussion of this issue). But in many cases it is likely that more lies beneath this apparent disengagement. To consider some of the possible reasons, Jönsson (2013) reviewed the research literature on students' use of feedback, and concluded that students can find it difficult to use feedback when it comes too late to be useful, when it is insufficiently detailed or individualized, or when it is seen as too authoritative. In addition, Jönsson suggests that students can find it difficult to decode the specialist language often used in feedback, and can also be unaware of useful strategies for implementing the feedback. Beyond these possible reasons, we should also recognize that there is an affective dimension to receiving feedback: the “emotional backwash” that people often experience in response to feedback can inhibit their cognitive processing of the developmental information (Pitt, 2017; Pitt and Norton, 2017; Ryan and Henderson, 2018).

Building upon Jönsson's review of the literature, Winstone et al. (2017b) identified four distinct barriers to students' engagement with feedback information, through a thematic analysis of focus groups with undergraduate students. The first barrier was termed awareness, representing students' difficulty decoding the language used within feedback, alongside recognizing the purpose of feedback and from where it is obtained. Like this barrier, the next of Winstone et al.'s barriers resonates with Jönsson's discussion: the term cognisance was used to represent students' difficulty in knowing which strategies they could use to implement feedback. The third barrier, agency, refers to students' difficulties in feeling empowered to act upon feedback; a common issue arising under this theme was the perceived difficulty in transferring feedback across different unrelated assignments in a modularized curriculum (see also Hughes et al., 2015; Jessop, 2017). Finally, the barrier of volition represents unwillingness on students' part to put in the “hard graft” required to realize the impact of feedback (Carless, 2015).

When considering the barriers to productive engagement with assessment feedback, it is important to consider that some students might be less affected than others by these barriers. For example, in an online survey Forsythe and Johnson (2017) recently explored whether students' mindset about their own abilities, either “fixed” (i.e., seeing abilities as unchangeable) or “growth” (i.e., seeing abilities as malleable; Dweck, 2002), was related to their self-reported feedback behavior. Their results demonstrated that students who reported a fixed mindset (those who believed their abilities were relatively difficult to change or improve) were more likely to agree that they exhibited defensive behaviors when receiving feedback. Findings like these suggest that we should be mindful of individual differences when considering students' engagement with assessment feedback. This consideration is also likely to be important when asking what kinds of interventions might be most effective for supporting students' engagement and skills.

So what kinds of interventions are successful in breaking down some of these barriers to using feedback effectively? Aiming to synthesize the research literature on this matter, Winstone et al. (2017a) carried out a systematic review that uncovered a wide range of interventions, from the use of educational technology, to peer- and self-assessment, to structured portfolio tools. The authors described four general categories into which these interventions could be conceptually grouped. Some interventions focused on students internalizing and applying standards, and these sought to better equip students to use feedback by giving them opportunities to take the perspective of an assessor and to understand the standards and criteria used to assess their work (e.g., peer assessment, dialogue, and discussion). Second, sustainable monitoring interventions aimed to put practices in place for enabling students to record and track their improvement and use of feedback, such as the use of portfolios and action-planning. Third, collective provision of training interventions were those that could be delivered to entire cohorts of students at a time, such as feedback workshops and the provision of exemplar assignments. Fourth and finally, interventions focused on the manner of feedback delivery included attempts to change the way in which feedback was presented or delivered to students (e.g., audio feedback, withholding the grade until the descriptive feedback had been read). For each intervention included in the review, Winstone et al. (2017a) attempted to identify the authors' rationale for why it should or could be effective. That is, what particular skill or process were they trying to develop in students? From this analysis, Winstone et al. identified four separate skills that were cited as desired outcomes of the interventions reported, and that were therefore believed to underpin proactive recipience: these were self-appraisal; assessment literacy; goal-setting and self-regulation; and engagement and motivation. In sum, interventions for improving students' effective engagement with feedback need to consider both the barriers that stand in the way of this engagement, and the recipience skills that are required in order to reap the benefits of feedback. Winstone et al. (2017a) suggested that a holistic, rather than piecemeal, approach to developing proactive recipience would therefore be most beneficial, by targeting multiple barriers, and multiple recipience skills simultaneously. It was upon this foundation that we developed the DEFT.

The DEFT is a toolkit of resources for developing students' skills of “proactive recipience,” comprising the building blocks for a feedback glossary, feedback guide, feedback workshop, and feedback portfolio (Winstone and Nash, 2016). The DEFT was developed in the context of an undergraduate Psychology programme; in the spirit of student-staff partnership (Deeley and Bovill, 2017), a small group of undergraduate students played active roles at all stages of the DEFT's development, including consultation, design, implementation, and evaluation. In determining the content of the DEFT tools, specific emphasis was placed upon considering the four types of barriers identified in Winstone et al.'s (2017b) focus groups. We also took into account some initial data gathered during one of the participants' activities in those focus groups, which were published in Supplementary Materials alongside that paper. There, each focus group ranked various feedback recipience interventions by their usefulness and by the likelihood that the group would use them. We found that feedback resources, workshops, and portfolios were all considered to be among the most useful interventions in principle, and considered the most likely to be engaged with in practice.

The first component of the DEFT, the feedback glossary, was designed principally to support students' awareness of what common feedback comments mean. We consulted the transcripts from Winstone et al. (2017b), to find examples of feedback terms that participants spontaneously mentioned finding confusing. We then asked teaching staff to provide short definitions of what they might mean when using each of these terms, and these definitions were synthesized. The resulting glossary was inserted as one part of the second DEFT component, the feedback guide. The guide is a short booklet for students designed principally to support their cognisance and agency, giving them concrete strategies that they might use to support their implementation of feedback. The guide was initially constructed by our student partners, and then refined and improved collaboratively. As part of their consultations with other students, our student partners created a feedback flowchart for inclusion in the guide, which set out a simple step-by-step process that could be followed when receiving feedback.

Like the feedback guide, the feedback workshop was also designed principally to support students' cognisance and agency, through giving them opportunities to reflect on what feedback is, how it can be used, and some of the difficulties that people face in reaping the benefits of feedback. Some of the workshop activities involve students bringing along a recent example of feedback they have received, so that they can discuss and analyse ways to use the advice they received. The workshop resources were designed around the conceptual framework of feedback literacy (Sutton, 2012). In his model, Sutton argues that feedback literacy comprises three domains: knowing, which reflects knowledge of the learning potential of feedback; being, which represents an understanding of the impact of feedback on identity and emotion; and acting, which represents an awareness of the importance of taking concrete action in response to feedback. For each of these three domains, we designed three interactive activities, with accompanying resources. The workshop is designed to be flexible, such that an educator might choose to use one individual activity to facilitate discussions, or build a longer workshop using a combination of activities.

Finally, the feedback portfolio was designed principally to target the barriers of agency and volition, by enabling students to better see how their use of feedback was having an impact over time, and to synthesize and reflect upon their feedback. We created a series of resources that could be used within either an electronic or paper-based portfolio, such as guided reflection sheets, and a tool to support students in making use of non-personalized, cohort-level feedback (see https://tinyurl.com/FEATSportfolio for an example of how the portfolio might be built electronically).

In this paper, we report three studies designed to explore the perceived usefulness, benefits, and limitations of the DEFT. Because our investigations served partly to benefit our own professional practice and pedagogy, in all three of the present studies we worked solely with students from our own subject discipline of Psychology. In the first study, using a survey method, we asked whether students believe the resources would benefit them in principle. In particular, we were interested in whether students believed that their engagement with the DEFT resources could reduce any difference between their self-reported current level of engagement with feedback, and the level at which they perceive they should engage with feedback. In this first study only, we also explored the influence of individual differences on students' perceptions of the DEFT resources. Specifically, based on prior findings indicating systematic variability in different students' engagement with feedback (e.g., Winstone et al., 2016; Forsythe and Johnson, 2017), we wanted to explore individual differences in the perceived utility of the resources and in students' perceptions of their likelihood of using them. Here we drew upon a key construct relevant to learning opportunities: students' approaches to learning (Biggs et al., 2001). A “deep” approach to learning represents an intrinsic motivation to learn, and a desire to understand meaning and interconnecting themes relating to learning material; in contrast, a “surface” approach represents a focus on the minimum requirements of a learning task, deploying strategies such as rote memorization. We predicted that students scoring higher on the deep approach to learning, and those scoring lower on the surface approach, would be most likely to perceive the DEFT resources as useful and most likely to say that they would use them.

In the second study, using a focus group method, we built on the initial quantitative exploration with a more in-depth exploration of students' perceptions of the DEFT resources, exploring potential aids and barriers to their engagement. Here we did not focus on any systematic individual differences between participants. In the third and final study, we sought to conduct an initial evaluation of the impact of the workshop component of the DEFT, by using a simplistic and exploratory self-report measure of feedback literacy to explore changes pre- and post-workshop. Taken together, exploring these issues can facilitate an initial discussion of how toolkits of resources such as the DEFT, in contrast with individual interventions that might focus on tackling only one kind of barrier or skill, might be used to develop students' proactive recipience of feedback information.

Study 1

Method

Participants

A total of 92 undergraduate Psychology students from different levels of study (54% first-years, 32% second-years, 5% professional placement years, and 9% final-years) completed the study in exchange for course credits. The total sample included 79 females and 13 males (mean age = 20.50, SD = 3.65, range = 18–39).

Materials

The study comprised an online survey that included the following sets of questions.

Perceptions of Feedback Usage

All participants responded to questions that required them to rate the extent to which they currently put into action the feedback that they receive on their assessed work (hereafter, “current use”), and the extent to which they believe they should do so (hereafter, “ideal use”). Separately, they rated the extent to which they could put their feedback into action, if they were to receive and engage with the DEFT resources (“potential use”). For each of these three questions they responded using an on-screen sliding scale equivalent to a visual analog scale. The scales were anchored from “Not at all” to “Frequently,” and the final position of each slider was automatically converted to a score between 0 and 100 for the purposes of analysis.

Perceptions of the DEFT Components

Participants rated the utility of the four DEFT components, each on two separate scales. Specifically, for each component they rated the extent to which students would benefit from having access to the resource (hereafter, “usefulness in principle”), and the likelihood that they would actually take advantage of the resource if it were available to them (“likelihood of use”). They made these ratings on scales from 1 (Very useless/unlikely) to 7 (Very useful/likely).

Approaches to Learning

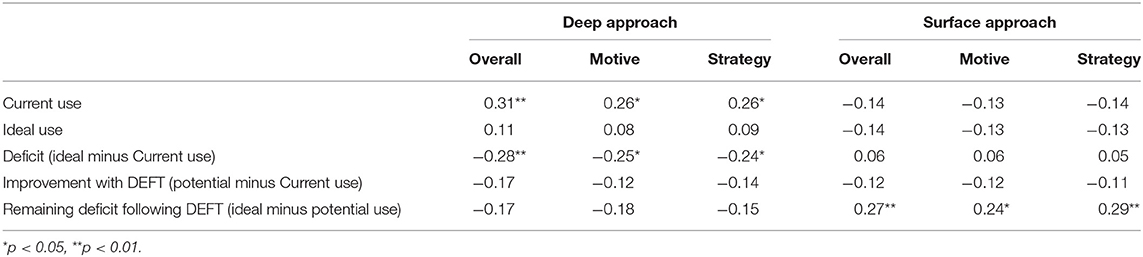

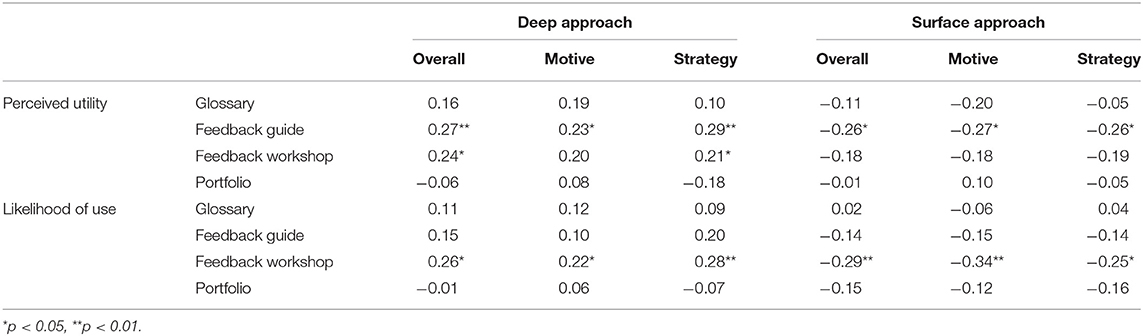

All participants completed the Revised two-factor Study Process Questionnaire (R-SPQ-2F; Biggs et al., 2001). The R-SPQ-2F is a widely used measure of deep and surface approaches to learning, and participants are required to indicate their agreement with 20 statements such as “My aim is to pass my course whilst doing as little work as possible,” using scales from 1 (Never true of me) to 5 (Always true of me). Deep and surface approaches are measured separately (in this sample, the overall correlation between the two was r = −0.36, p < 0.001). The measure possessed good internal consistency in this sample (Deep approach α = 0.80; Surface approach α = 0.80). In the original Biggs et al. (2001) questionnaire, both the deep and surface approach dimensions are sub-divided further into “strategy” and “motive” sub-dimensions. Here our findings were highly comparable between these sub-dimensions; therefore, although we report the more granular findings in Tables 1 and 2 for completeness, we comment only on the overall results for deep and surface approaches.
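The internal consistency values reported above are Cronbach's α coefficients. As a minimal sketch of how such a coefficient is conventionally computed for one subscale, the following assumes that each participant's responses are stored as a list of item ratings. The function name and the toy data are illustrative assumptions and are not taken from the study.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for one subscale.

    `responses` holds one row per participant, each row being that
    participant's ratings on the k items of the subscale.
    """
    k = len(responses[0])
    # Sample variance of each item across participants.
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of participants' total scores.
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings from four participants on two items (1-5 scale).
toy_responses = [[1, 2], [2, 1], [3, 4], [4, 3]]
alpha = cronbach_alpha(toy_responses)
```

With real questionnaire data, the same function would be applied separately to the deep-approach items and to the surface-approach items.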

Table 1. Spearman correlations between participants' reported approaches to learning, and their perceptions of feedback usage with and without use of the DEFT.

Table 2. Spearman correlations between participants' reported approaches to learning, and their perceptions of the utility and likelihood of use of each DEFT component.

Procedure

The procedures for all studies in this paper were approved by an institutional ethics review board, and all participants in these studies gave informed consent to take part.

All participants completed the survey in the same order. After giving consent to take part, and indicating their age, gender, and level of study, they first answered the questions on current and ideal use of feedback. Next, they received a one-sentence description of the purpose and intended use of each of the four components of the DEFT, before rating their perceptions of each component as described above, and then rating their potential use of feedback if they were to use the DEFT. Finally, they completed the R-SPQ-2F, before receiving a written debriefing.

Results

Perceptions of Feedback Usage

We began by examining our participants' perceptions of the extent to which they currently use their feedback, the extent to which they should use it, and the extent to which using the DEFT resources could reduce the gap between these two. Overall, participants believed that in principle they should put their feedback into action very regularly (M = 90.63, SD = 10.45), but that they currently fall rather short of this normative ideal (M = 60.53, SD = 19.38). Participants felt that with the support of the DEFT resources, they would be able to use their feedback more regularly (M = 80.07, SD = 16.48), thus making up much of the deficit between their current and ideal standards.
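To make the size of these gaps concrete, the arithmetic on the three reported group means can be sketched as follows. The function and key names are illustrative assumptions; only the three means come from the text.

```python
def gap_summary(current, ideal, potential):
    """Summarise the distance between current, ideal, and potential use."""
    deficit = ideal - current          # shortfall from the ideal right now
    improvement = potential - current  # gain anticipated with the DEFT
    remaining = ideal - potential      # shortfall still expected afterwards
    return {
        "deficit": round(deficit, 2),
        "improvement": round(improvement, 2),
        "remaining": round(remaining, 2),
        "share_closed": round(improvement / deficit, 2),
    }


# Group means reported in the text (0-100 slider scores).
summary = gap_summary(current=60.53, ideal=90.63, potential=80.07)
```

On these means, the anticipated improvement of roughly 20 points covers about two thirds of the current deficit of roughly 30 points. Note that the share is a ratio of group means, which need not equal the average of each participant's own ratio.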

As described above, one subsequent question was whether these perceptions of feedback use varied systematically according to students' approaches to learning. We explored this question using correlation analyses; however, because participants' responses for the “ideal use” measure were near ceiling, and therefore not normally distributed, we took a non-parametric approach to all of these analyses. As Table 1 shows, participants who expressed more of a deep approach to learning typically rated their current use of feedback as higher, and they perceived a smaller deficit between their current and ideal use of feedback. However, approaches to learning were not systematically related to the extent to which people felt the DEFT resources could help them reduce this deficit. There was a weak and non-significant tendency for those students higher in deep approach to perceive that they would improve less as a result of using the DEFT. However, even if it were treated as meaningful, this negative correlation is particularly difficult to interpret because the equivalent correlation between potential improvement and surface approach is also negative (and weak and non-significant). Overall then, the absence of clear correlations here leads us to conclude that people's perceptions of their potential improvement in feedback use after using the DEFT were not significantly related to the extent of their deep or surface approach. Consequently, we found that participants with a stronger surface approach tended to envisage a larger remaining deficit from their ideal standards even were they to use the DEFT. The opposite effect for deep approach was not statistically significant.
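The non-parametric correlations summarized in Table 1 are Spearman correlations, which depend only on the rank order of scores and so are not distorted by a ceiling effect in the way a Pearson correlation can be. The following is a minimal sketch of the computation, assuming per-participant scores held in plain lists. All variable names and data values are hypothetical and are not the study's data.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1, giving tied values the average of their ranks."""
    ordered = sorted(values)
    return [ordered.index(v) + 1 + (ordered.count(v) - 1) / 2 for v in values]


def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    covariance = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    spread_x = sum((a - mx) ** 2 for a in rx) ** 0.5
    spread_y = sum((b - my) ** 2 for b in ry) ** 0.5
    return covariance / (spread_x * spread_y)


# Hypothetical scores for five participants (not from the study).
deep_approach = [22, 31, 27, 40, 35]
current_use = [40, 65, 55, 90, 60]
ideal_use = [85, 95, 90, 100, 100]

# The deficit is computed per participant before ranking.
deficit = [i - c for i, c in zip(ideal_use, current_use)]
rho_current = spearman_rho(deep_approach, current_use)
rho_deficit = spearman_rho(deep_approach, deficit)
```

In this toy example the deep-approach scores correlate positively with current use and negatively with the deficit, which mirrors the direction of the pattern described above without reproducing its magnitude.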

Perceptions of the DEFT Tools

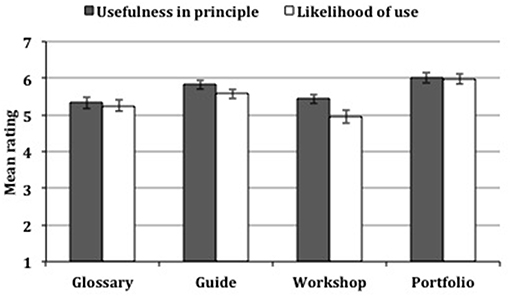

Next, we examined whether participants believed that each of the individual DEFT components (the glossary, the feedback guide as a whole, the feedback workshop, and the portfolio) would be useful in principle, and whether they would actually engage with them in practice. Figure 1 shows that in general they perceived all four components as useful in principle, rating all four above five on the 7-point scale. Participants also generally believed that they would actually engage with these tools, although their ratings of likelihood of use were significantly lower, overall, than their ratings of usefulness in principle, Wilcoxon Z (N = 92) = 3.16, p < 0.01. This difference was most pronounced for the feedback workshop, but the overall size of the difference was small. In terms of both perceived utility in principle, and likelihood of use in practice, participants rated the portfolio tool the most positively. We must, of course, remember that these self-report measures lend themselves easily to demand effects, whereby participants provide the responses that they believe the researchers desire; the anonymous online format of the survey mitigates but does not entirely remove this concern.

Figure 1. Mean perceptions of each DEFT tool's usefulness in principle, and likelihood of being used. Error bars are equal to ±1 standard error of the mean.
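The comparison between usefulness and likelihood ratings is reported as a Wilcoxon Z statistic. The text does not state which software was used or how ties and zero differences were handled, so the following is only a sketch of the standard normal approximation to the signed-rank test, under the assumptions that zero differences are dropped and tied magnitudes share an average rank. The function names and ratings are hypothetical.

```python
from collections import Counter
from math import sqrt


def average_ranks(values):
    """Rank values from 1, giving tied values the average of their ranks."""
    ordered = sorted(values)
    return [ordered.index(v) + 1 + (ordered.count(v) - 1) / 2 for v in values]


def wilcoxon_z(first, second):
    """Normal-approximation Z for the Wilcoxon signed-rank test."""
    # Pairs with no difference carry no sign information and are dropped.
    diffs = [a - b for a, b in zip(first, second) if a != b]
    n = len(diffs)
    magnitudes = [abs(d) for d in diffs]
    ranks = average_ranks(magnitudes)
    # Sum of the ranks belonging to positive differences.
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    expected = n * (n + 1) / 4
    # Variance under the null hypothesis, reduced for tied magnitudes.
    tie_term = sum(t ** 3 - t for t in Counter(magnitudes).values()) / 48
    variance = n * (n + 1) * (2 * n + 1) / 24 - tie_term
    return (w_plus - expected) / sqrt(variance)


# Hypothetical 1-7 ratings from five participants (not from the study).
usefulness = [5, 6, 7, 6, 6]
likelihood = [4, 4, 4, 6, 7]
z = wilcoxon_z(usefulness, likelihood)
```

A positive Z here indicates that usefulness ratings tend to exceed likelihood ratings. With a sample as small as this toy one, an exact test would normally be preferred over the normal approximation, which is better suited to a sample of the size reported above.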

Once again, we took a non-parametric approach to analyse how these perceptions were associated with participants' approaches to learning. A number of systematic patterns emerged across the different components, as summarized in Table 2. As the table shows, it was generally the case that those with stronger deep approaches were somewhat more optimistic, and those with stronger surface approaches were somewhat more pessimistic about these tools, especially the feedback guide. Not all of these relationships were statistically significant, though; in particular we found no evidence of systematic individual differences in students' perceptions of the utility or likelihood of using the feedback portfolio. Put differently, these data offer some evidence that certain DEFT components are more likely to be used by some students than by others, not just because some students are more likely than others to engage, but also because they differ in their perceptions of the potential utility of such tools. However, it is important to emphasize that all of these relationships—even those that were statistically significant—were relatively small in size.

Study 2

Method

Participants

A total of 13 Psychology students from different levels of undergraduate study (nine first-years, three second-years, and one final-year) each took part in one of four focus groups (denoted below as FG1, FG2, FG3, and FG4, which contained two, four, two, and three participants, respectively), in exchange either for course credits or for £5. In total the sample comprised 12 females and one male.

Materials and Procedure

The focus groups were facilitated by a research assistant who was unknown to the participants. Following a rapport-building activity involving introductions and general conversation (Kitzinger, 1994), the research assistant facilitated discussion through a semi-structured topic guide. The facilitator first introduced the project through which the resources were developed, and then explained that the purpose of the focus groups was to gather students' perceptions of the tools. First, participants were introduced to each of the DEFT components. For each component they received a summary sheet that explained what the tool is, its intended purpose, and some information about how the tool was developed. They also received a copy of the feedback guide (containing the glossary), a summary of some workshop exercises and activities, and some screenshots of the portfolio as it might appear in a web-browser. As students explored each resource, the facilitator encouraged discussion structured around the ways in which the resource would be helpful for their learning, what would make it difficult to use, and how it could be further improved. The facilitator provided prompts where necessary, but otherwise his role was minimal. Once participants had explored all components of the DEFT, they were given the opportunity to share any further perspectives about their use of feedback. Finally, students were invited to express the “take home” message that they would like the researchers to take from their discussions. Following the completion of all four focus groups, the recordings were transcribed verbatim, and all participants were assigned an alphabetic identifier to preserve their anonymity. 
The transcripts were analyzed deductively using thematic analysis (Braun and Clarke, 2006). Taking a realist approach, we searched the transcripts for evidence of likely barriers and facilitators to students' engagement with the resources, as identified on a semantic level. We followed Braun and Clarke's recommended stages of analysis, with the processes of generating codes and organizing them into themes being carried out by two researchers through an iterative process of dialogue.

Results

Recall that the aim of this study was to explore students' perceptions of the DEFT resources, including the potential aids and barriers to their engagement. Below we outline some of what we judged to be the most noteworthy or recurrent viewpoints that came up in participants' discussions, for each of the four DEFT components.

Feedback Glossary

When discussing the glossary, participants were in general agreement that it could help them with “demystifying” academic terminology, and could give them clearer insight into the perspective of a marker. Some students saw the glossary as a resource that they might use after receiving feedback, as one might use a dictionary, having it available “on the side like when you're looking at [feedback]” (Participant A, FG1). Other students expressed a belief that they would benefit from digesting the information as more of a preparatory exercise, and would “probably read through it at the beginning of the year and then I probably wouldn't look at it again” (Participant D, FG2). Indeed, several participants perceived that a glossary of feedback terms would be of most use to them in the early years of their course:

D: Probably the first few essays you get back –

E: Yeah

D: – and then you get to know what everything means (FG2).

N: Maybe like you'd use it more in first year cos then there would be more terms that you're unfamiliar with, but then that are used like again in second and final year (FG4).

Nevertheless, there was a clear view that the participants would not wish for such a resource to be a substitute for dialogue with academic staff, and that this would influence the way in which students engage with the resource:

G: I think it's probably really useful, it's probably quite reassuring as a first port of call but I think in most situations, if you were that concerned about what they meant in your feedback, you'd go and talk to the lecturer (FG2).

Feedback Guide

In participants' discussions of the feedback guide, it became clear that they particularly valued the explicit guidance on how to use feedback in general:

D: I guess [the guide] also gives you the steps for things to actually look for, so at the beginning I kind of looked at [my feedback] and thought “okay so there's some stuff that I've done okay, and there is some stuff I can improve, but I don't really know how should I go forward from that, I've read but now what do I do?” So then saying like actually, think about what you can do to improve solidly and then do an action plan, I might possibly engage with [my feedback] more (FG1).

In some cases participants saw this instructive value as being less about providing concrete strategies to follow, and more about providing motivation and encouragement. In their discussions of the resources, students frequently acknowledged the various difficulties associated with using feedback well. In particular, students recognized that their emotional responses to feedback can hamper their engagement, and that the flowchart contained within the feedback guide might help them to engage with feedback in a better way by directing them to think about their emotional readiness: “I think it's actually quite good—I never even considered like assuming you would be emotionally ready, so that's quite good” (Participant B, FG1). The flowchart was also perceived to be beneficial in terms of giving students a sense of agency and motivation to take action on feedback:

O: I like the flow chart. A lot.

K+N+J: Yeah.

K: It's really good.

N: I think it sort of encourages you to actually do something about your feedback?

K: Yeah.

N: Cos like a lot of the time you just sort of get it and then just forget about it and put it aside (FG3).

In all four focus groups, students discussed how the fact that the feedback guide was authored by students enhanced its accessibility: “I think it's good because it's not like really academic it's more like informal like a few tips…” (Participant K, FG4). Participants also discussed how reading about other students' difficulties when using feedback, as well as their suggested strategies for success, is at once both reassuring and motivating:

H: I quite like this because it's quite realist, it includes like erm the opinions of students at the back and then at the front, erm, in how to use this guide, for example the points made as it can be difficult to feel motivated to use feedback. I think that would…that may encourage students to look over their feedback and use it effectively (FG3).

O: I like the “you are not alone” section.

J: Yeah.

O: Cos it's probably like saying exactly what you're thinking so it sort of helps you out a bit (FG4).

Despite clear recognition of the benefits of the feedback guide, some participants did question whether students would fully engage with all of the information in the guide, because “there's so much text they may feel demotivated to look through all of this” (Participant H, FG3). Others questioned whether the feedback guide was a resource that students would actually return to: “if you read it once, would you read it again?” (Participant B, FG1).

There was also a perception amongst some students that whilst resources such as a student-authored feedback guide might be useful in principle, the resource would be most useful for those who have yet to develop the skills to implement feedback. However, students also recognized that those who might benefit the most from the resource may also be less likely to engage with it, perhaps because their emotional response to feedback may inhibit engagement:

G: The chances are, if you're one of those students that wants to act on feedback to do it, you would have already sorted out how to act on feedback (FG2).

E: I think the problem is, if you've just got some bad feedback you're not going to want to read all this. You're going to be in a bad mood and you're just going to kinda want the facts and get straight to the information you want—you might not want all this kind of trying to personalize it and try and make it relate to you (FG2).

Feedback Workshop

When discussing the feedback workshop resources, students perceived benefits of taking part in a facilitated session focused on engagement with feedback, and judged the interaction and discussion with peers to be one particular benefit. One participant for example said “it's very useful to hear comments from your peers and see how they respond to certain bits of criticism” (Participant L, FG4), whilst another remarked that: “it's great that this kind of talks you through the process of going through it and you're discussing with other people so you get more ideas” (Participant B, FG1).

Another perceived benefit of the feedback workshop was that the activities would give students the opportunity to apply the techniques learnt, by using examples of their own feedback as the basis for exercises:

B: I just think it would be really helpful to apply this to your own work and then see if you've kind of done the approach right maybe, and get feedback from your tutor (FG1).

G: I think as well there should be the option to, if you're comfortable with it, to use specific examples from your own feedback to make it more specific to students obviously people might…people might not want to talk about their feedback, but if you're comfortable with talking about it within the group then I think there should be the chance to make it about your own feedback (FG2).

C: it kind of encourages you to actually engage with your feedback and see how you can make it better, because sometimes you look at it and think okay I need to do that next time, but you're not sure how to do. So if you get hands-on experience with your own work of how to use your feedback, I think that would be helpful (FG2).

Here, participants envisaged that although not all students would be comfortable sharing examples of feedback they had received, there was potentially much to gain from engaging in the workshop activities that centered on the use of personal examples. Students also perceived that the workshop affords an opportunity to get “hands-on experience” with using feedback, which could increase their agency to enact these techniques when receiving feedback again in the future.

In all of the focus groups, interesting discussions arose regarding the way in which the workshops might be scheduled. The consensus seemed to be that the value of the workshop comes from creating a dedicated time and space to work on skills for engaging with feedback, time that students might not otherwise devote to feedback. This led to suggestions that “perhaps they should be compulsory” (Participant H, FG3):

B: Erm I guess the fir…the initial thought is it's quite good because it will make you engage with it. I mean it's an hour that you have to dedicate to thinking about how you'd approach feedback, whereas I think realistically would you give an hour of your time to think about how to approach feedback? (FG1).

Feedback Portfolio

Discussion around whether engaging with the DEFT should be optional or compulsory also arose when students explored the feedback portfolio resources. In some students' views, the portfolio would not take significant time or effort to utilize, and would promote their own engagement with feedback: “I also don't think that it would take that much of your time maybe quarter of an hour…erm and it would make you do it.” (Participant B, FG1). Across the focus groups, though, different opinions were expressed about the optionality of engaging with the portfolio. For some, “if it's something that is optional, then the people that really want to make a difference to their work are going to do it but I don't think it is something you can say is compulsory” (Participant D, FG2). Others expressed a belief that use of the portfolio would need to be embedded into scheduled contact time: “I wouldn't want to waste my own time doing this kind of—I wouldn't want to do this in my spare time. But if you incorporated it into academic tutorials then it would be quite good” (Participant E, FG2).

Despite these discussions around how best to facilitate engagement with the portfolio resource, students identified and discussed what they perceived to be key benefits of using such a tool. First, students suggested that it would be useful to bring all of their feedback together in one place, facilitating synthesis of their strengths and areas for development:

B: Yeah no I think that's a really good point it's like synthesizing everything…with how it is at the moment you get a piece of feedback and you probably save it on the folder that piece and module's in, and then it's loads of different places (FG1).

A: Cos it just makes it all like… like I said it's all in one page you don't have to look at like your past like two essays separately to see ‘oh what did I do wrong there?’ to make sure you don't do it again (FG1).

G: I think this is really good, having it all in one place… you can see where you've improved because you're…you can look at how it's jumped forward in different assignments (FG2).

Second, students reflected upon the potential benefit of the portfolio for facilitating dialogue with their lecturer or personal tutor:

K: Yeah that's a good idea. To be like, for the personal tutor to have access to what you've written… and say “oh I've noticed that you've written this in a lot of essays but you still haven't seen improvement” (FG4).

N: I think it would be good for a tutor to like erm look at it sort of once you've completed it? Cos say you…you think you're doing something not so well each time…like they could be “oh no you're actually doing this okay, but now do this”…? (FG4)

Interestingly, students envisaged benefits to this kind of dialogue beyond just engaging with feedback, where the portfolio could become a vehicle for their stronger engagement with personal tutoring: “I'm wondering if actually now getting your tutor to be involved in your academic progress is one way to get people more involved with their personal tutor” (Participant G, FG2).

Third, students expressed a belief that the portfolio would support their reflection on feedback, enabling stronger engagement with the information and a clearer sense of how to enact the advice:

A: …and then the next time round when you get that piece of feedback be like “oh I didn't even realize that I've done this bad twice in a row, now there's obviously something wrong with this” (FG1).

O: I think it's a really good idea. Cos if say you're getting low marks on like essay one and two and then you're sort of getting a bit annoyed with yourself like essay three you might want to really like change something. You can see like the common things that you're doing wrong and like really make an effort to change it (FG4).

As was the case for participants' discussions of the feedback glossary, students also discussed the relevance of the portfolio resource at different stages of their degree programmes, seeing greater benefit to using the portfolio during the early stages: “I think to help in first and second year it's probably good but maybe beyond that, if you're improving as a whole student that's more important” (Participant G, FG2).

Summary

When discussing all four resources within the DEFT, students expressed what they perceived to be key benefits of the tools, such as supporting decoding, identifying actions, and synthesizing and reflecting upon feedback. Students' discussions also identified several considerations to take into account when planning how to support students to use the resources; in particular, the point within the degree programme at which students would engage, and the optionality of engaging with the resources, seem to be important. It appears that students perceive the optimum timepoint for introducing the DEFT resources to be early in their degree programmes, as part of the development of their academic literacy. This finding might, however, be an artifact of the majority of our participants being in their first year of study; our analysis did not explore the possibility of individual differences in this regard. To begin to explore the utility of the DEFT resources in supporting students' feedback literacy from the start of a degree programme, we implemented and evaluated a DEFT feedback workshop in a Level 4 (i.e., first year of university) academic skills tutorial programme.

Study 3

Method

Participants

A total of 103 first-year undergraduate Psychology students voluntarily completed the first part of the study during class. One week later, 77 of these students completed the second part of the study during a tutorial; the remaining 26 participants were excluded from analysis. We did not collect demographic data about the participants, but the sampled population was disproportionately female, and most would have been aged 18–19.

Materials

We developed a simplistic and exploratory measure containing 14 items that related to each of the three different elements of feedback literacy outlined by Sutton (2012), namely knowing, being, and acting. We should be clear that we did not conduct any prior formal validation of this scale, and indeed, that our aim here was not to develop and validate such a measure of feedback literacy. Instead, our aim here was simply to develop a series of items that would provide an exploratory indicator of the effectiveness of our classroom intervention. The item development process therefore involved detailed scrutiny of Sutton's (2012) article, then developing and refining items that reflected each of the knowing, being, and acting elements. For example, the items included “Feedback supports the development of broader academic skills”; “My engagement with feedback does not depend on the mark I receive”; and “In order to be useful, feedback needs to be acted upon.” Participants were required to rate each statement on a scale from 1 (Strongly disagree) to 5 (Strongly agree). One of the items was reverse scored during analysis. For each participant we calculated an overall feedback literacy score at each of Time 1 and Time 2 by averaging all 14 items (α = 0.73 at Time 1, α = 0.75 at Time 2). A full list of the items can be found in Table S1 in the Supplementary Materials.
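As an illustration only, the scoring described above can be sketched in a few lines of Python. The sketch assumes that each participant's ratings are stored as a list of integers from 1 to 5 and that α denotes Cronbach's alpha. The function names and the position of the reverse-scored item are hypothetical; the text does not say which of the 14 items was reverse scored.

```python
from statistics import mean, variance

SCALE_MIN, SCALE_MAX = 1, 5  # 1 = Strongly disagree, 5 = Strongly agree


def reverse_score(rating):
    """Flip a rating on the 1-5 scale (1 <-> 5, 2 <-> 4, 3 stays 3)."""
    return SCALE_MIN + SCALE_MAX - rating


def score_participant(ratings, reverse_items=frozenset()):
    """Average one participant's ratings after reverse scoring.

    reverse_items holds zero-based item positions to flip. Which item
    was reverse scored is an assumption; the source does not specify it.
    """
    adjusted = [
        reverse_score(r) if i in reverse_items else r
        for i, r in enumerate(ratings)
    ]
    return mean(adjusted)


def cronbach_alpha(rows):
    """Cronbach's alpha for a participants-by-items table.

    rows is a list of participants, each a list of k item ratings
    that have already been reverse scored where needed.
    """
    k = len(rows[0])
    item_variances = [variance([row[j] for row in rows]) for j in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)
```

Applying `score_participant` to each participant's Time 1 and Time 2 ratings would yield the two overall scores per participant that the analysis compares.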

Procedure

Time 1

At the beginning of a timetabled teaching session, all participants were asked to take part in the study by completing our 14-item feedback literacy measure, which was then collected from them. The session that followed constituted the DEFT feedback workshop. In this 1-h workshop, participants completed a number of individual and group-based activities, as well as class discussions, centering around several key aspects of feedback literacy. First, participants took part in a group discussion activity structured around the following questions: what is feedback; where and whom does feedback come from; what is the impact of feedback; and what can make feedback difficult to use. Next, participants worked in groups to discuss a series of exemplar feedback comments, how they might interpret these comments, and what might plausibly be learned from them. In the third activity, participants had the opportunity to consider, first individually and then in groups, the emotions raised by feedback and how to manage them. In the final activity, participants discussed in groups what actions they could take on a new series of exemplar feedback comments.

Time 2

At the start of a second scheduled teaching session, 1 week after the first, participants were asked to complete the same 14-item measure that they completed at Time 1. After finishing this measure, all participants were debriefed.

Results

For all 14 of the individual scale items, there was variability in participants' responses at both Time 1 and Time 2. At both time points, we averaged participants' scores across these 14 survey items to produce an overall score. These scores were distributed approximately normally (Time 1: skewness z = 1.32, kurtosis z = −0.02; Time 2: skewness z = 1.00, kurtosis z = 2.06). Nevertheless, because the distributions of responses on some individual items were highly skewed, we chose to analyze the average scores using both a parametric and a non-parametric approach, to ensure that the findings would be robust to alternative analyses. At Time 1, participants provided mean scores of 3.99 out of 5 (SD = 0.32; Median = 3.93; Range = 3.29–4.93) across the 14 survey items. In contrast, at Time 2 they provided mean scores of 4.19 (SD = 0.34; Median = 4.14; Range = 3.64–4.93). A paired t-test confirmed that this represented a statistically significant increase in feedback literacy scores, t(76) = 6.80, p < 0.001, d = 0.62; the same outcome held when we instead analyzed the data using a non-parametric Wilcoxon test, Z(n = 77) = 5.55, p < 0.001, r = 0.45.
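For readers who wish to run a comparable paired analysis on their own data, the following is a minimal sketch using only the Python standard library. It is not the authors' analysis code. The function names are hypothetical, and the conventions chosen for the effect sizes (a pooled-SD Cohen's d, and r = Z/√N) are assumptions, because the text does not state which formulas were used. The Wilcoxon statistic uses the normal approximation without a tie correction to the variance, which is a simplification.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def paired_t(before, after):
    """Paired t statistic and degrees of freedom for matched scores."""
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


def cohens_d_pooled(before, after):
    """Cohen's d using the pooled SD of the two time points (assumed convention)."""
    pooled_sd = sqrt((stdev(before) ** 2 + stdev(after) ** 2) / 2)
    return (mean(after) - mean(before)) / pooled_sd


def wilcoxon_z(before, after):
    """Wilcoxon signed-rank Z (normal approximation) and two-sided p value."""
    # Zero differences are dropped, as in the classical procedure.
    diffs = sorted((a - b for b, a in zip(before, after) if a != b), key=abs)
    n = len(diffs)
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Tied absolute differences share the average of their ranks.
        while j < n and abs(diffs[j]) == abs(diffs[i]):
            j += 1
        average_rank = (i + 1 + j) / 2
        for k in range(i, j):
            ranks[k] = average_rank
        i = j
    w_plus = sum(rank for rank, d in zip(ranks, diffs) if d > 0)
    mu = n * (n + 1) / 4
    sigma = sqrt(n * (n + 1) * (2 * n + 1) / 24)  # no tie correction
    z = (w_plus - mu) / sigma
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p


def r_from_z(z, n_observations):
    """Effect size r = Z / sqrt(N), where N is the number of observations."""
    return z / sqrt(n_observations)
```

As a point of arithmetic rather than a statement of the authors' method, `r_from_z(5.55, 154)` gives approximately 0.45 when N counts all 154 observations (77 participants at two time points), which agrees with the value of r reported above.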

Discussion

Ensuring students' proactive engagement with feedback is crucial if that feedback is ever to be useful. Having created the DEFT as a resource for breaking down barriers to proactive recipience and for developing recipience skills, the three studies reported in this paper served to evaluate the perceived value of this kind of systematic approach.

Our first aim here was to explore students' perceptions of the potential utility of the DEFT resources. Taking the DEFT as a whole, the findings from Study 1 illustrate that participants saw potential for the DEFT to reduce the deficit between their current feedback recipience and what they believe to be the ideal level of feedback recipience. In addition, participants' ratings of the utility of each component in the DEFT, as well as their reported likelihood of engagement with the resources, were relatively high across the board. Nevertheless, participants' ratings of the likelihood of engaging with the resources were statistically significantly lower than their ratings of the perceived utility of the resources. The data from the focus groups in Study 2 provide converging evidence for these conclusions. When exploring the DEFT resources in the focus groups, participants discussed what they perceived to be unique benefits to each component; for example, believing that the glossary would support their decoding of terminology, and that the portfolio would enable them to effectively synthesize feedback from multiple assignments. However, participants suggested that several of the resources would be most beneficial at an early stage of their university programmes. This perception may explain why some participants, perhaps those at a later stage of a programme, perceived the potential utility of certain tools in principle to be greater than their likelihood of using the tools in practice. Similarly, students expressed differing perspectives about whether or not engagement with the resources should be compulsory. Some students believed that they would be unlikely to engage with the resources unless they were compulsory; others believed that engagement with the resources should be voluntary. These differences of opinion serve to demonstrate the importance of seeking to explore individual differences in students' engagement with feedback interventions.

On the matter of individual differences, in Study 1 we found that participants who scored highly on self-reported deep approach to learning tended to claim that they currently use feedback more frequently than did their “low on deep approach” counterparts. Importantly, though, there were no statistically significant differences (according to approaches to learning) in the extent to which participants felt the DEFT would enhance their current use of feedback. What we did find, though, was that deep approach participants rated the utility of a feedback guide and feedback workshop as higher, and believed they would engage more with the workshop in particular; the reverse was true for surface approach participants. In other words, we found counterintuitively that deep and surface learners differed in how useful they perceived specific DEFT resources to be, even though they did not differ significantly in the extent to which they thought the DEFT resources as a whole would increase their use of feedback. These findings, together with students' discussions in the focus groups (where we did not explore systematic individual differences, but we did note differences of opinion), demonstrate that not all students are likely to engage with the resources in the same way. In particular, given findings that approaches to learning are associated with academic attainment (see, for example, Richardson et al., 2012), our findings align with other reports in the literature that those students who are most likely to benefit from feedback interventions may be least likely to use them. For example, in the context of medical education, Harrison et al. (2013) showed that “just-passing” students were less likely to use feedback on summative OSCE exams than were higher performing students. Our findings suggest that studying systematic individual differences could potentially help with understanding how best to engage and support a diverse population of students.

The final aim of this paper was to test for changes in students' self-reported feedback literacy before and after attending a feedback workshop utilizing the DEFT resources. Here we found that after attending the workshop, participants' scores increased significantly on a simplistic and exploratory measure of feedback literacy (Sutton, 2012). This preliminary evidence of the efficacy of the workshop is an encouraging indicator of the potential benefits of such interventions. However, it should be treated with caution given the potential for demand effects in self-report ratings, and in particular given that our feedback literacy measure was not validated. Scale validation is an important step in ensuring that a measure taps appropriately into the construct that it claims to measure. Therefore, future research involving classroom interventions on this topic would benefit substantially from the development of a reliable and well-validated measure of feedback literacy, which could be used by researchers and practitioners for better understanding students' engagement with feedback.

The data reported in this paper represent an initial evaluation of students' perceptions of one approach to developing students' feedback literacy. Each of our individual studies carries limitations beyond those already mentioned above. In Study 1, some of the outcome measures contained relatively little variability between participants; for example, the top anchor for our scale of “ideal” feedback recipience was “frequently,” and a more extreme choice of anchor might have allowed greater variability in our data and in turn influenced the strength of the overall findings. In Study 2, students' discussions were limited to the tools contained within the DEFT; they were not able to discuss any different interventions that they perceived might benefit their feedback literacy, and we did not get a clear picture of any relevant individual differences in participants' viewpoints. In Study 3, we measured the change in responses to scale items relating to feedback literacy after a relatively short period of time, rather than tracking the impact of the workshops over a longer time period. In all three studies, our sample sizes were relatively small and were constrained to a single subject discipline.

Thinking more broadly about the outstanding questions raised by our work, it is important to emphasize that our evaluation of the resources focuses on students' subjective perceptions of the benefit of these tools, as well as a short-term exploration of the impact of a feedback literacy workshop. Future research should seek to measure the longer-term impact of interventions to support students' feedback literacy, and the effects on their subsequent use of feedback. Indeed, there is a real scarcity of research in the literature that explores the impact of feedback interventions on long-term behavioral outcomes that are less susceptible to demand effects than are self-report measures (Winstone et al., 2017a). Yet longer-term outcome measures are crucial if we are to ascertain the real impact of feedback interventions on students' learning and skill development.

These limitations aside, the present studies suggest that when striving to enhance students' feedback literacy, a skills-based intervention focused on tackling barriers to engagement has good potential. This kind of intervention aligns well with a socio-constructivist approach to feedback, in which emphasis is placed on the active engagement of the student in the feedback process, and on seeing evidence of the impact of feedback on students' learning (e.g., Ajjawi and Boud, 2017). Importantly, it also aligns with the notion that truly developing these important skills requires a sharing of responsibility by both educators and students (Nash and Winstone, 2017).

Ethics Statement

This study was carried out in accordance with the recommendations of the British Psychological Society's Code of Ethics and Conduct (2009) and Code of Human Research Ethics (2014). All subjects gave written informed consent in accordance with the Declaration of Helsinki. The protocol was approved by the University of Surrey Ethics Committee.

Author Contributions

NW and RN designed the DEFT with input from GM. NW and RN designed and conducted the studies. NW and RN analyzed the data. All three authors wrote the paper.

Funding

This work was supported by the Higher Education Academy under Grant number GEN1024.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2019.00039/full#supplementary-material

References

Ajjawi, R., and Boud, D. (2017). Researching feedback dialogue: an interactional analysis approach. Assess. Evalu. High. Educ. 42, 252–265. doi: 10.1080/02602938.2015.1102863

Biggs, J., Kember, D., and Leung, D. Y. (2001). The revised two-factor study process questionnaire: R-SPQ-2F. Br. J. Educ. Psychol. 71, 133–149. doi: 10.1348/000709901158433

Boud, D., and Molloy, E. (2013). Rethinking models of feedback for learning: the challenge of design. Assess. Evalu. High. Educ. 38, 698–712. doi: 10.1080/02602938.2012.691462

Braun, V., and Clarke, V. (2006). Using thematic analysis in psychology. Qual. Res. Psychol. 3, 77–101.

Burke, D. (2009). Strategies for using feedback students bring to higher education. Assess. Evalu. High. Educ. 34, 41–50. doi: 10.1080/02602930801895711

Carless, D. (2015). Excellence in University Assessment: Learning from Award-Winning Practice. London: Routledge. doi: 10.4324/9781315740621

Carless, D., and Boud, D. (2018). The development of student feedback literacy: enabling uptake of feedback. Assess. Evalu. High. Educ. 43, 1315–1325. doi: 10.1080/02602938.2018.1463354

Deeley, S. J., and Bovill, C. (2017). Staff student partnership in assessment: enhancing assessment literacy through democratic practices. Assess. Evalu. High. Educ. 42, 463–477. doi: 10.1080/02602938.2015.1126551

Dweck, C. S. (2002). “The development of ability conceptions,” in Development of Achievement Motivation, eds A. Wigfield and J. Eccles (New York, NY: Academic Press), 57–88. doi: 10.1016/B978-012750053-9/50005-X

Forsythe, A., and Johnson, S. (2017). Thanks, but no-thanks for the feedback. Assess. Evalu. High. Educ. 42, 850–859. doi: 10.1080/02602938.2016.1202190

Gibbs, G., and Simpson, C. (2004). Conditions under which assessment supports students' learning. Learn. Teach. High. Educ. 1, 3–31. doi: 10.1007/978-3-8348-9837-1

Handley, K., Price, M., and Millar, J. (2011). Beyond ‘doing time’: investigating the concept of student engagement with feedback. Oxford Rev. Educ. 37, 543–560. doi: 10.1080/03054985.2011.604951

Harrison, C. J., Könings, K. D., Molyneux, A., Schuwirth, L. W., Wass, V., and van der Vleuten, C. P. M. (2013). Web-based feedback after summative assessment: how do students engage? Med. Educ. 47, 734–744. doi: 10.1111/medu.12209

Hattie, J., and Timperley, H. (2007). The power of feedback. Rev. Educ. Res. 77, 81–112. doi: 10.3102/003465430298487

Higgins, R., Hartley, P., and Skelton, A. (2002). The conscientious consumer: reconsidering the role of assessment feedback in student learning. Stud. High. Educ. 27, 53–64. doi: 10.1080/03075070120099368

Hounsell, D. (2007). “Towards more sustainable feedback to students,” in Rethinking Assessment in Higher Education: Learning for the Longer Term, eds D. Boud and N. Falchikov (London: Routledge), 101–113.

Hughes, G., Smith, H., and Creese, B. (2015). Not seeing the wood for the trees: developing a feedback analysis tool to explore feed forward in modularised programmes. Assess. Evalu. High. Educ. 40, 1079–1094. doi: 10.1080/02602938.2014.969193

Jessop, T. (2017). “Inspiring transformation through TESTA's programme approach,” in Scaling up Assessment for Learning in Higher Education, eds D. Carless, S. M. Bridges, C. K. Y. Chan, and R. Glofcheski (Singapore: Springer), 49–64. doi: 10.1007/978-981-10-3045-1_4

Jönsson, A. (2013). Facilitating productive use of feedback in higher education. Active Learn. High. Educ. 14, 63–76. doi: 10.1177/1469787412467125

Kitzinger, J. (1994). The methodology of focus groups: the importance of interaction between research participants. Sociol. Health Illn. 16, 103–121. doi: 10.1111/1467-9566.ep11347023

Nash, R. A., and Winstone, N. E. (2017). Responsibility-sharing in the giving and receiving of assessment feedback. Front. Psychol. 8:1519. doi: 10.3389/fpsyg.2017.01519

Pitt, E. (2017). “Student utilisation of feedback: a cyclical model,” in Scaling up Assessment for Learning in Higher Education, eds D. Carless, S. M. Bridges, C. K. Y. Chan, and R. Glofcheski (Singapore: Springer), 145–158. doi: 10.1007/978-981-10-3045-1_10

Pitt, E., and Norton, L. (2017). ‘Now that's the feedback I want!’ Students' reactions to feedback on graded work and what they do with it. Assess. Evalu. High. Educ. 42, 499–516. doi: 10.1080/02602938.2016.1142500

Price, M., Handley, K., and Millar, J. (2011). Feedback: focusing attention on engagement. Stud. High. Educ. 36, 879–896. doi: 10.1080/03075079.2010.483513

Price, M., O'Donovan, B., and Rust, C. (2007). Putting a social-constructivist assessment process model into practice: building the feedback loop into the assessment process through peer review. Innovations Educ. Teach. Int. 44, 143–152. doi: 10.1080/14703290701241059

Richardson, M., Abraham, C., and Bond, R. (2012). Psychological correlates of university students' academic performance: a systematic review and meta-analysis. Psychol. Bull. 138, 353–387. doi: 10.1037/a0026838

Ryan, T., and Henderson, M. (2018). Feeling feedback: students' emotional responses to educator feedback. Assess. Evalu. High. Educ. 43, 880–892. doi: 10.1080/02602938.2017.1416456

Sinclair, H. K., and Cleland, J. A. (2007). Undergraduate medical students: who seeks formative feedback? Med. Educ. 41, 580–582. doi: 10.1111/j.1365-2923.2007.02768.x

Sutton, P. (2012). Conceptualizing feedback literacy: knowing, being, and acting. Innovations Educ. Teach. Int. 49, 31–40. doi: 10.1080/14703297.2012.647781

Weaver, M. R. (2006). Do students value feedback? Student perceptions of tutors' written responses. Assess. Evalu. High. Educ. 31, 379–394. doi: 10.1080/02602930500353061

Winstone, N. E., and Nash, R. A. (2016). The Developing Engagement with Feedback Toolkit (DEFT). York: Higher Education Academy.

Winstone, N. E., Nash, R. A., Parker, M., and Rowntree, J. (2017a). Supporting learners' agentic engagement with feedback: a systematic review and a taxonomy of recipience processes. Educ. Psychol. 52, 17–37. doi: 10.1080/00461520.2016.1207538

Winstone, N. E., Nash, R. A., Rowntree, J., and Menezes, R. (2016). What do students want most from written feedback information? Distinguishing necessities from luxuries using a budgeting methodology. Assess. Evalu. High. Educ. 41, 1237–1253. doi: 10.1080/02602938.2015.1075956

Keywords: feedback literacy, portfolio, workshop, student, self-regulation

Citation: Winstone NE, Mathlin G and Nash RA (2019) Building Feedback Literacy: Students’ Perceptions of the Developing Engagement With Feedback Toolkit. Front. Educ. 4:39. doi: 10.3389/feduc.2019.00039

Received: 25 January 2019; Accepted: 30 April 2019; Published: 16 May 2019.

Edited by:

Anders Jönsson, Kristianstad University, Sweden

Reviewed by:

Lan Yang, The Education University of Hong Kong, Hong Kong

Alexandra Mary Forsythe, University of Liverpool, United Kingdom

Copyright © 2019 Winstone, Mathlin and Nash. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Naomi E. Winstone, n.winstone@surrey.ac.uk
