ORIGINAL RESEARCH article

Front. Cardiovasc. Med. , 07 February 2023

Sec. Cardiovascular Imaging

Volume 10 - 2023 | https://doi.org/10.3389/fcvm.2023.944135

This article is part of the Research Topic Quantitative Imaging (QI) and Artificial Intelligence (AI) in Cardiovascular Diseases

Chao Cong1,2†, Yoko Kato1†, Henrique Doria De Vasconcellos1, Mohammad R. Ostovaneh1, Joao A. C. Lima1, Bharath Ambale-Venkatesh3*

Background: Automatic coronary angiography (CAG) assessment may help in faster screening and diagnosis of stenosis in patients with atherosclerotic disease. We aimed to provide an end-to-end workflow that separates cases with normal or mild stenoses from those with higher stenosis severities to facilitate safety screening of a large volume of the CAG images.

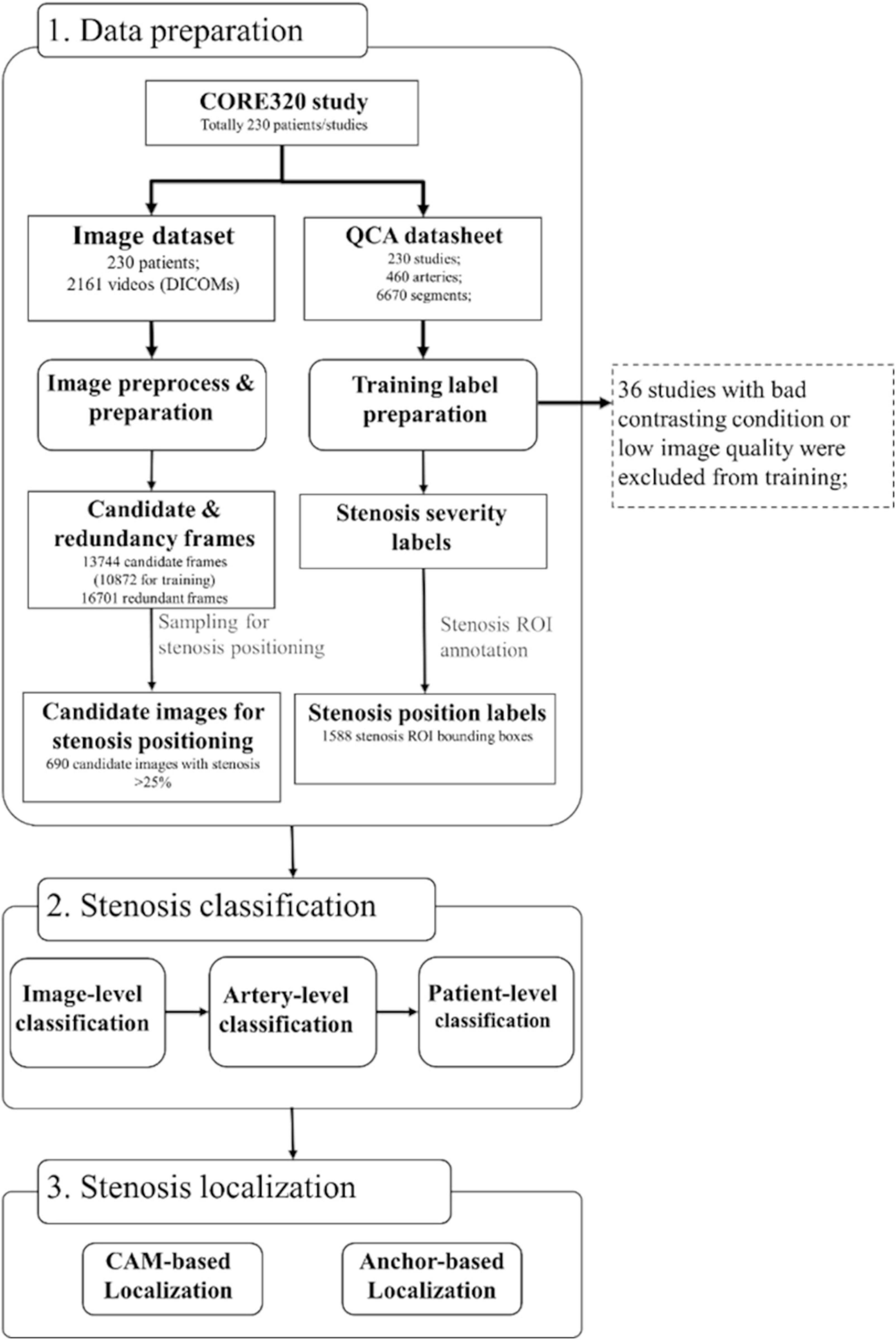

Methods: A deep learning-based end-to-end workflow was employed as follows: (1) Candidate frame selection from CAG videograms with Convolutional Neural Network (CNN) + Long Short Term Memory (LSTM) network, (2) Stenosis classification with Inception-v3 using 2 or 3 categories (<25%, >25%, and/or total occlusion) with and without redundancy training, and (3) Stenosis localization with two methods of class activation map (CAM) and anchor-based feature pyramid network (FPN). Overall 13,744 frames from 230 studies were used for the stenosis classification training and fourfold cross-validation for image-, artery-, and per-patient-level. For the stenosis localization training and fourfold cross-validation, 690 images with > 25% stenosis were used.

Results: Our model achieved an accuracy of 0.85, sensitivity of 0.96, and AUC of 0.86 in per-patient level stenosis classification. Redundancy training was effective to improve classification performance. Stenosis position localization was adequate with better quantitative results in anchor-based FPN model, achieving global-sensitivity for left coronary artery (LCA) and right coronary artery (RCA) of 0.68 and 0.70.

Conclusion: We demonstrated a fully automatic end-to-end deep learning-based workflow that eliminates the vessel extraction and segmentation step in coronary artery stenosis classification and localization on CAG images. This tool may be useful to facilitate safety screening in high-volume centers and in clinical trial settings.

Coronary artery disease (CAD) is the leading cause of morbidity and mortality worldwide (1). X-ray coronary angiography (CAG) is the current gold standard imaging technique for CAD diagnosis. Expert CAG interpretation requires considerable “hands-on” training both visually and cognitively. In clinical practice and also for quality control purposes in research settings, screening CAG studies visually to distinguish cases with normal or mild stenosis from those with higher stenosis severity is a time-consuming process even for experienced readers. Developing an automatic CAG assessment tool to exclude normal or mild stenosis cases would facilitate diagnosis and treatment and enable the screening of large data sets for quality control purposes.

Recent studies confirmed the feasibility of using deep learning methods for CAG stenosis detection. Generally, the method consists of multiple steps. The most widely used vessel-based workflow starts from the visual or automatic selection of candidate frames (2–4) or regions (5, 6) from a CAG video. This is followed by the artery extraction using image segmentation algorithms (7) like center-tracking (8, 9), model-based (10), or Convolutional Neural Network (CNN) (11–15). Finally, individual stenotic lesion localization and classification is performed in two ways: patch-wise (16–18) and image-wise (2, 3, 6).

However, there are limitations in previous CAG stenosis classification and detection methods. One of the main drawbacks is that the vessel shape and characterization (19, 20) were not well exploited from a multi-view CAG study, causing a relatively low accuracy in detecting the stenotic lesions, especially in curved or bifurcation regions in the vascular tree (21, 22). Another limitation is that there are numerous pre-processing stages (manually or automatically) in some methods (15, 18, 23), such as detecting keyframes/region/views from a CAG sequence, or annotating segmentation for vessels, or preparing patches and labels for training procedure. The need for extensive human interaction during image data and training label preparation, in addition to addressing problems of sampling imbalance during supervised-learning, has led to algorithms that are commonly evaluated on small datasets prone to overfitting (7). Clinically speaking, those studies generally aimed to differentiate significant stenosis from non-significant stenosis in CAG images while developing a tool to facilitate safety screening of a large volume of CAG images by separating cases with normal or mild stenoses from those with higher stenosis severities have not been targeted (24).

In this study, we propose a fully automatic, deep learning-based end-to-end CAG stenosis detection method to achieve efficient safety screening and precise localization of stenoses. Our method has the following distinguishing features: (1) it eliminates the vessel extraction and segmentation step for supervised learning; (2) a CNN + LSTM structure automatically detects candidate frames from CAG sequences to improve training efficiency and reduce overfitting; (3) a multi-view analyzing architecture trains CNNs for different angle views and generates classification results at the artery and patient levels; (4) a redundancy training strategy eliminates the negative effect of background and unnecessary features in training; and (5) unsupervised- and supervised-learning methods, including an anchor-based feature pyramid network (FPN), are explored to localize the coronary stenoses in CAG images.

This research was retrospectively performed on 230 participants with available data from a ‘‘Combined Non-invasive Coronary Angiography and Myocardial Perfusion Imaging Using 320 Detector Computed Tomography (CORE320)’’ study (NCT00934037),1 a prospective, multicenter, international study that assessed the performance of combined 320-row CTA and myocardial CT perfusion imaging (CTP) in comparison with the combination of invasive CAG and single-photon emission computed tomography myocardial perfusion imaging (SPECT-MPI) for detecting myocardial perfusion defects and luminal stenosis in patients with suspected CAD (25, 26). For the stenosis classification, 36 studies out of 230 were excluded from the training due to the low image quality or contrasting condition. These images, however, were included for evaluation. The original CORE320 study was approved by central and local institutional review boards, and written informed consent was obtained from all participants (25, 26). Given the retrospective and ancillary nature of the data, the current study is covered by the original CORE320 study IRB.

The entire study workflow is summarized in Figure 1. All the CAG studies were saved in the universal DICOM format with a resolution of 512 × 512, 15 fps, typically 60–200 frames per view. The detailed imaging parameters were summarized in Supplementary Table 1. Coronary type (left and right coronary artery, LCA, and RCA) was classified initially by experts in a small subset (19 patients). This was then leveraged by training an inception-V3 classifier (27) for automated coronary selection (100% classification accuracy was obtained). To identify the angle views of the CAG images, DICOM tags were used. Overall 4 angles for LCA [left anterior oblique (LAO) Cranial, LAO Caudal, right anterior oblique (RAO) Cranial, and RAO Caudal] and 3 angles for RCA (LAO, straight RAO, and shallow LAO/RAO Cranial) were used based on the optimal view map (OVM) (20).
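The angle-view identification from DICOM tags can be illustrated with a minimal sketch. The source does not say which tags or thresholds were used, so everything here is an assumption: the function takes the two C-arm angles (as stored in the DICOM Positioner Primary Angle and Positioner Secondary Angle attributes), assumes the usual sign convention (positive primary = LAO, positive secondary = cranial), and uses a hypothetical `shallow` cut-off to treat small cranio-caudal angulation as a straight view.

```python
def view_label(primary_deg: float, secondary_deg: float, shallow: float = 10.0) -> str:
    """Map C-arm angles (degrees) to a coarse angle-view label.

    Assumed sign convention: primary > 0 is LAO, primary < 0 is RAO;
    secondary > 0 is cranial, secondary < 0 is caudal.
    `shallow` is a hypothetical threshold, not taken from the study.
    """
    side = "LAO" if primary_deg >= 0 else "RAO"
    if abs(secondary_deg) < shallow:
        return side  # little cranio-caudal angulation: treat as a straight view
    tilt = "Cranial" if secondary_deg > 0 else "Caudal"
    return f"{side} {tilt}"


if __name__ == "__main__":
    print(view_label(30.0, 25.0))
    print(view_label(-30.0, -20.0))
    print(view_label(45.0, 0.0))
```

In practice the grouping into the 4 LCA and 3 RCA views would need thresholds tuned to the optimal view map, which this sketch does not attempt.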

Figure 1. Dataset and algorithm workflow. Three steps of data preparation, stenosis classification, and stenosis positioning were presented. The steps of image and training label preparation including coronary artery selection, viewing angle selection, and contrasting frame detection were designed in a fully automatic manner. Stenosis severity classification training was performed on image-level, artery-level, and patient-level. Stenosis positioning was performed in two methods of CAM-based and anchor-based methods. CAM, class activation map; QCA, quantitative coronary angiography.

A CNN + Long Short Term Memory (LSTM) network was implemented for the candidate frame selection, using data from 19 patients (146 videos and 18,688 frames in total). A candidate frame was defined as a video frame with good image quality, full contrast filling, clear vessel borders, and an anatomically significant view of the stenosis (if present). Inception-v3 was employed as a basic classifier to recognize full-contrasting frames and non-contrasting frames as candidate or redundancy frames. Then, the output of the fully connected layer of inception-v3 was fed to a bi-directional LSTM with 32 time-steps (units), and also concatenated with the outputs of the forward and backward LSTM units. The concatenation result was connected to a multi-layer perceptron (MLP, with one hidden layer) and a binary activation layer (sigmoid). The detailed structure of inception-v3 and LSTM is provided in Supplementary Figure 1. The inception model was initialized with ImageNet weights and then pre-trained for 200 epochs with an initial learning rate (LR) of 1e−4 and binary cross-entropy as the loss function. The LSTM was initialized using the Xavier uniform method for kernels and orthogonal matrices for recurrent weights, then trained for 100 epochs with LR = 4e–5 with the loss function of convolutional F1 score. Typically, this strategy selected 5–10 candidate frames per video.
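How per-frame network outputs might be turned into a handful of candidate frames can be sketched as follows. This is not the authors' documented post-processing: it assumes the network emits one sigmoid probability per frame, takes the longest run of frames above a hypothetical threshold as the contrast window, and keeps the `k` highest-scoring frames inside it. The function names are illustrative.

```python
def contrast_window(probs, threshold=0.5):
    """Return (first, last) indices of the longest run with prob >= threshold, or None."""
    best = None
    start = None
    # A trailing 0.0 sentinel closes a run that reaches the last frame.
    for i, p in enumerate(list(probs) + [0.0]):
        if p >= threshold and start is None:
            start = i
        elif p < threshold and start is not None:
            if best is None or (i - start) > (best[1] - best[0] + 1):
                best = (start, i - 1)
            start = None
    return best


def pick_candidates(probs, k=5, threshold=0.5):
    """Pick up to k frames with the highest probability inside the contrast window."""
    window = contrast_window(probs, threshold)
    if window is None:
        return []
    first, last = window
    ranked = sorted(range(first, last + 1), key=lambda i: probs[i], reverse=True)
    return sorted(ranked[:k])


if __name__ == "__main__":
    scores = [0.1, 0.2, 0.9, 0.8, 0.95, 0.3, 0.7, 0.1]
    print(contrast_window(scores), pick_candidates(scores, k=2))
```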

The performance of candidate frame detection was tested with 582 videos from 175 patients using the mean error and standard deviation of the beginning contrasting frame (BCF) and ending contrasting frame (ECF) between ground truth and prediction. The acceptance and error rates were also calculated from the average differences of BCF and ECF using pre-defined ranges (2): a prediction was accepted when the error was ≤3 frames and counted as an error when it was ≥10 frames. Performance was reported using classification accuracy, F1, and Kappa.
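The BCF/ECF evaluation described above can be sketched in a few lines. The sketch assumes absolute frame differences and that the acceptance and error rates are computed on the per-video average of the BCF and ECF differences; the function name and input layout are hypothetical.

```python
from statistics import mean, stdev


def frame_detection_summary(truth, pred, accept_max=3, error_min=10):
    """Summarize BCF/ECF errors.

    truth, pred: lists of (BCF, ECF) frame indices, one pair per video.
    accept_max / error_min follow the <=3 and >=10 frame ranges in the text.
    """
    bcf_err = [abs(t[0] - p[0]) for t, p in zip(truth, pred)]
    ecf_err = [abs(t[1] - p[1]) for t, p in zip(truth, pred)]
    avg_err = [(b + e) / 2 for b, e in zip(bcf_err, ecf_err)]
    n = len(avg_err)
    return {
        "bcf_mean": mean(bcf_err),
        "bcf_sd": stdev(bcf_err),
        "ecf_mean": mean(ecf_err),
        "ecf_sd": stdev(ecf_err),
        "accept_rate": sum(a <= accept_max for a in avg_err) / n,
        "error_rate": sum(a >= error_min for a in avg_err) / n,
    }


if __name__ == "__main__":
    truth = [(10, 50), (20, 60), (5, 40), (12, 48)]
    pred = [(11, 52), (20, 61), (17, 55), (12, 48)]
    print(frame_detection_summary(truth, pred))
```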

For the stenosis classification, the quantitative coronary angiography (QCA) results previously documented at the segmental level in the CORE320 study were utilized as a reference (25, 26, 28). In the current study, to match our study goal (separating cases with normal coronary arteries or mild stenoses from those with higher stenosis severities), coronary stenosis severities were re-categorized per coronary artery, i.e., per LCA or RCA, and grouped into three categories of < 25%, 25–99%, and total occlusion in 3-categories (CAT), or two groups of < 25 and ≥ 25% in 2-CAT. It is known that there is a mismatch between coronary stenosis severity and functional significance. Even an intermediate stenotic lesion can be functionally significant by fractional flow reserve (29, 30). Since we aimed to develop a safety screening tool for a large volume of CAG images, we selected a stenosis threshold with high specificity to correctly separate cases that do and do not need further functional stenosis assessment.
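The re-categorization can be written down as a small mapping. The thresholds follow the text (< 25%, 25–99%, total occlusion for 3-CAT; < 25% and ≥ 25% for 2-CAT). Labeling an artery by its most severe segment is an assumption made for this sketch, since the source does not spell out the aggregation rule, and the function names are hypothetical.

```python
def stenosis_category(percent: float, n_cat: int = 2) -> int:
    """Map a QCA diameter stenosis (percent) to a class index.

    2-CAT: 0 = <25%, 1 = >=25% (includes total occlusion).
    3-CAT: 0 = <25%, 1 = 25-99%, 2 = total occlusion (100%).
    """
    if n_cat not in (2, 3):
        raise ValueError("n_cat must be 2 or 3")
    if percent < 25:
        return 0
    if n_cat == 3 and percent >= 100:
        return 2
    return 1


def artery_category(segment_percents, n_cat: int = 2) -> int:
    """Assumption: an artery takes the category of its most severe segment."""
    return stenosis_category(max(segment_percents), n_cat)


if __name__ == "__main__":
    print(stenosis_category(60, n_cat=3), artery_category([0, 30, 10]))
```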

ResNet-50, ResNet-101, Inception-v3 and InceptionResNet-v2 were evaluated as CNN architectures for image-level stenosis classification training and prediction. Inception-v3 was ultimately adopted for the image-, artery-, and patient-level stenosis prediction, since it offers a good balance of transfer timing, parameter size, and performance. Four LCA models were trained, one per angle view, whereas a single RCA model combined the three angle views, because LCA features are more complicated than those of the RCA (31).

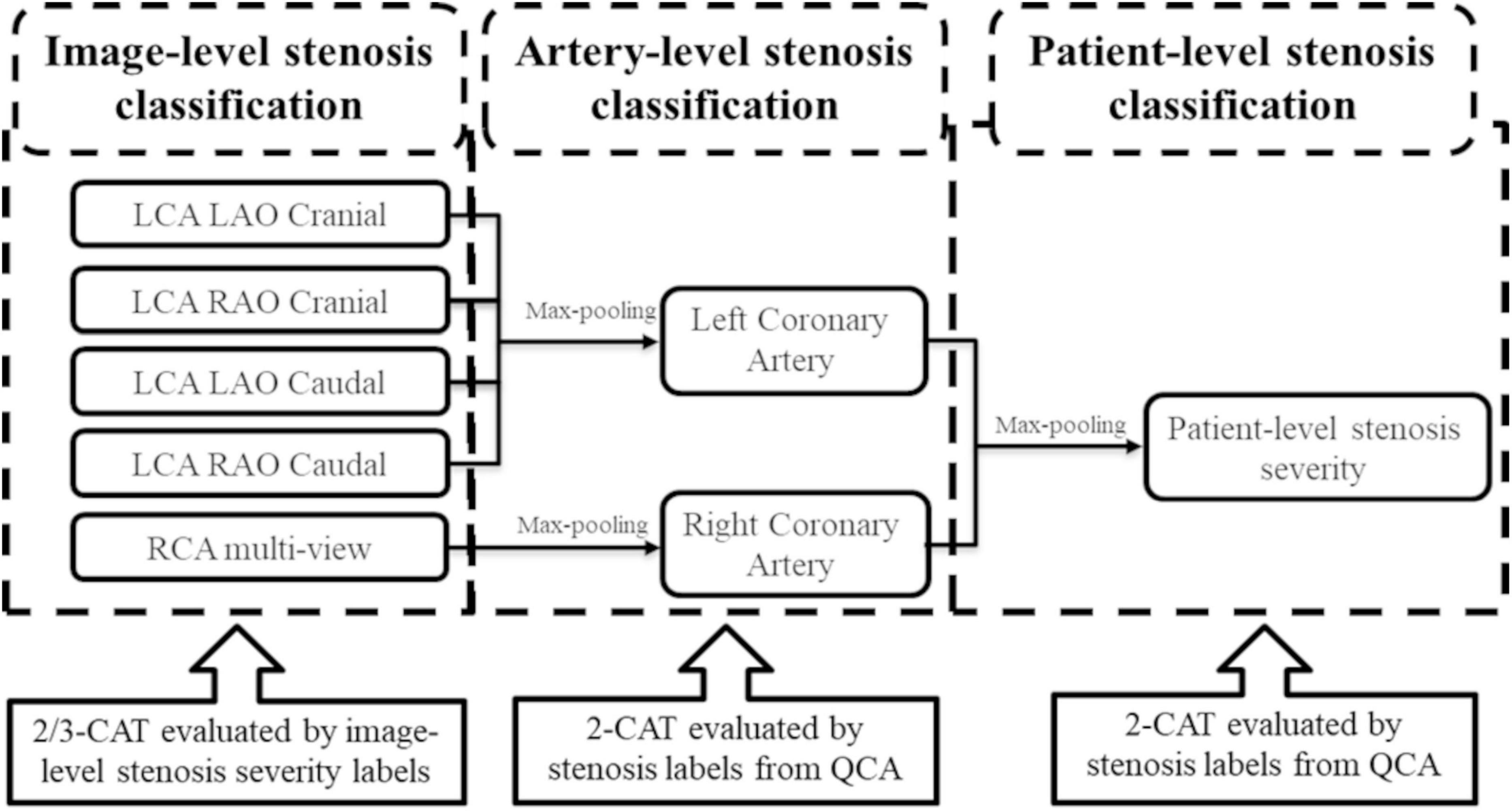

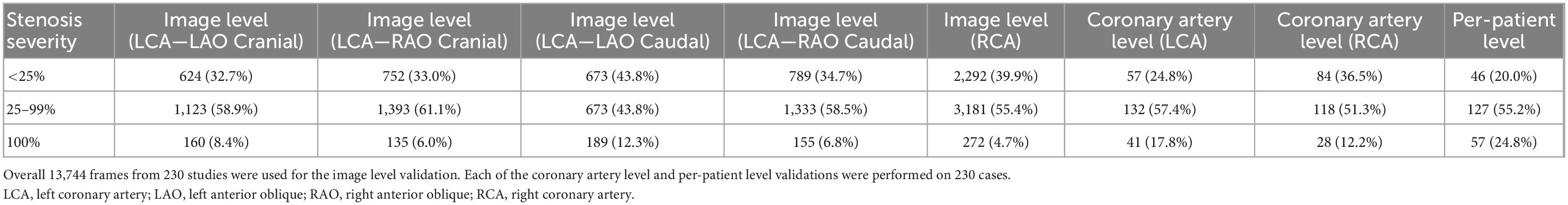

The classification prediction of artery-level and patient-level was implemented by a multi-view analyzing architecture, as described in Figure 2. For artery-level prediction, CNN scores from the 4 (LCA) or 3 (RCA) angle views were combined and fed into a max pooling layer to generate the LCA and RCA classification results, respectively. Similarly, the patient-level prediction scores were generated by feeding LCA and RCA scores into another max pooling layer (Figure 2). For the image-level labeling, 2- or 3-CAT stenosis categories were assigned in each angle view. For the artery-level labeling, 2-CAT stenosis categories were assigned in each coronary artery, i.e., in the LCA and the RCA. Overall 10,872 frames from 194 studies were used for image-level stenosis classification training and 13,744 frames from 230 studies were used for the fourfold cross-validation. The distribution of the cases in the image-, artery-, and patient-levels is summarized in Table 1. Performance of image-level classification on 3-CAT and 2-CAT with and without redundancy training was reported using accuracy, sensitivity, F1, Kappa, and area under the curve (AUC). Performance of artery-level and per-patient level classification was assessed on the 2-CAT with redundancy training image-level results and reported using accuracy, sensitivity, and AUC.

Figure 2. The architecture of the output of the stenosis classification inception model. A max-pooling layer was added to the output of inception to evaluate the artery-level stenosis prediction and the patient-level stenosis prediction. LCA, left coronary artery; LAO, left anterior oblique; RAO, right anterior oblique; RCA, right coronary artery; QCA, quantitative coronary angiography.
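The two max-pooling stages amount to taking a maximum over view scores and then over arteries. The sketch below assumes each view yields a single score for the ≥ 25% class in the 2-CAT setting; the 0.5 decision threshold and the function name are illustrative assumptions.

```python
def patient_prediction(lca_view_scores, rca_view_scores, threshold=0.5):
    """Pool per-view scores into artery-level and patient-level scores.

    lca_view_scores: scores from the 4 LCA angle views.
    rca_view_scores: scores from the 3 RCA angle views.
    threshold: hypothetical cut-off for calling a patient positive.
    """
    lca = max(lca_view_scores)   # artery-level max pooling
    rca = max(rca_view_scores)
    patient = max(lca, rca)      # patient-level max pooling
    return {"lca": lca, "rca": rca, "patient": patient,
            "positive": patient >= threshold}


if __name__ == "__main__":
    print(patient_prediction([0.1, 0.2, 0.7, 0.3], [0.05, 0.1, 0.2]))
```

Because a single high-scoring view is enough to flag the patient, this kind of pooling tends to favor sensitivity over specificity, which suits a screening use case.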

Table 1. Distribution of the cases in each stenosis severity category used for the image view, artery, and patient-levels validation.

In the image-level stenosis classification training, redundancy frames were additionally included in the training dataset but not in the validation set. A redundancy frame was defined as a background frame without any contrast agent in the arteries. The redundancy categories comprised background frames in roughly the same number as the samples of the target categories in the training dataset. In total, 12,351 redundancy frames were combined with 10,872 candidate frames in the 3- and 2-CAT image-level training, referred to as redundancy training, similar to methods used previously (3, 32). The use of redundancy frames is expected to hedge against invalid feature learning and reduce train/test overfitting.
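One way to picture redundancy training is as an extra class that exists only at training time. The sketch below assumes a single background class appended after the stenosis classes, and assumes that at validation time the background probability is dropped and the remaining probabilities are renormalized; neither detail is confirmed by the source, and all names are hypothetical.

```python
import random


def build_redundancy_training_set(candidates, backgrounds, n_cat=2, seed=0):
    """Merge labeled candidate frames with background (redundancy) frames.

    candidates: list of (frame_id, label) with labels in 0..n_cat-1.
    backgrounds: list of frame_ids without contrast agent.
    Returns the shuffled samples and the number of training classes.
    """
    redundancy_label = n_cat  # extra class index used only during training
    samples = list(candidates) + [(f, redundancy_label) for f in backgrounds]
    random.Random(seed).shuffle(samples)
    return samples, n_cat + 1


def drop_redundancy_class(probs, n_cat=2):
    """Assumed validation step: keep stenosis classes and renormalize."""
    kept = list(probs[:n_cat])
    total = sum(kept)
    return [p / total for p in kept]


if __name__ == "__main__":
    train, n_classes = build_redundancy_training_set(
        [("a", 0), ("b", 1)], ["bg1", "bg2"])
    print(n_classes, sorted(train), drop_redundancy_class([0.2, 0.6, 0.2]))
```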

For the stenosis positioning, two methods were investigated: (1) class activation map (CAM) (33) based on the back-propagation from the stenosis classification decision and (2) anchor-based FPN. The anchor-based FPN model is developed from RetinaNet (34) and FPNs (35), using the pre-trained inception-V3 as backbone. The network structure is demonstrated as Supplementary Figure 2. The 1st, 2nd, and 3rd feature map in the pyramid were derived from the output of the concatenate feature before the 1st, 2nd, and 3rd pooling layer, respectively. The 4th and 5th feature maps were down sampled from the previous layers. For FPN inputs, 1,588 positioning boxes with a minimal size of 35 × 35 pixels were annotated by two independent expert cardiologists. The shapes of anchor were preset by K-Means clustering method with seven different groups of height and width. The anchor-based model was trained with Learning Rate = 1e–4 over 500 epochs. Then FPN was built on the feature maps of pre-trained classification models. The same reader-annotated bounding boxes were also used for the evaluation of the CAM-based localization technique. For the positioning training and fourfold evaluation, 690 frames with > 25% stenosis were used (Figure 1).
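The anchor-shape presetting can be illustrated with a plain K-Means over annotated box widths and heights. This is a minimal sketch using Euclidean distance and random initialization; the distance measure, initialization, and iteration count used in the study are not stated, so these are assumptions, and `kmeans_anchor_shapes` is a hypothetical name.

```python
import random


def kmeans_anchor_shapes(boxes_wh, k=7, iters=50, seed=0):
    """Cluster (width, height) pairs into k anchor shapes with Lloyd's algorithm."""
    rng = random.Random(seed)
    centers = rng.sample(boxes_wh, k)  # initialize from k distinct annotations
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for w, h in boxes_wh:
            nearest = min(
                range(k),
                key=lambda c: (w - centers[c][0]) ** 2 + (h - centers[c][1]) ** 2,
            )
            groups[nearest].append((w, h))
        new_centers = []
        for j, g in enumerate(groups):
            if g:
                new_centers.append((sum(w for w, _ in g) / len(g),
                                    sum(h for _, h in g) / len(g)))
            else:
                new_centers.append(centers[j])  # keep an empty cluster's center
        if new_centers == centers:
            break  # assignments no longer change
        centers = new_centers
    return sorted(centers)


if __name__ == "__main__":
    boxes = [(35, 35), (37, 36), (36, 38), (80, 60), (82, 62), (78, 58)]
    print(kmeans_anchor_shapes(boxes, k=2))
```

With the 1,588 annotated boxes, calling the function with `k=7` would yield seven (width, height) pairs to use as anchor templates.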

The performances of the two stenosis localization methods were assessed by the metrics of global-sensitivity, per-stenosis-sensitivity (Sens_s), per-stenosis-specificity (Spec_s), and mean square error (MSE). Global-sensitivity was defined as the recall of localization for the most significant stenosis in the images, which is similar to AR^(max = 1) in the COCO benchmark (21). Sens_s and Spec_s were defined as the recall rate of all stenosis localizations in the images. MSE was assessed in 512 × 512 images for the CAM-based model and the anchor-based models. Because of its lower resolution, metrics for the CAM-based model were calculated with Intersection over Union (IoU) > 0.2, whereas IoU > 0.5 was used for the anchor-based model.
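The IoU criterion and the global-sensitivity metric can be made concrete with a short sketch. It assumes boxes are given as (x1, y1, x2, y2) in pixels and that each image contributes one top prediction and one ground-truth box for its most significant stenosis; this pairing and the function names are assumptions for illustration.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def global_sensitivity(pairs, iou_threshold=0.5):
    """Fraction of images whose top prediction hits the most significant stenosis.

    pairs: list of (predicted_box_or_None, ground_truth_box), one per image.
    iou_threshold: 0.5 for the anchor-based model, 0.2 for the CAM-based model.
    """
    hits = sum(1 for p, t in pairs if p is not None and iou(p, t) > iou_threshold)
    return hits / len(pairs)


if __name__ == "__main__":
    pairs = [((0, 0, 10, 10), (0, 0, 10, 10)),
             ((0, 0, 10, 10), (5, 0, 15, 10)),
             (None, (1, 1, 5, 5))]
    print(global_sensitivity(pairs, 0.5), global_sensitivity(pairs, 0.2))
```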

All the statistical evaluation was performed in Python (version 3.6; Python Software Foundation, Wilmington, Del).2 In this study, the calculation for diagnostic performance was based on a per-patient approach, including image-level severity classification. Accuracy, f1-score, and Cohen’s Kappa were calculated for image-level stenosis classification; receiver operating characteristic (ROC) analysis and areas under the curves (AUC) were used to further evaluate the image-/artery-/patient-level diagnostic performance. Stenosis positioning was evaluated by sensitivity, specificity, and MSE as described above. The CNN, LSTM, CAM, and anchor-based models were performed on TensorFlow (version 2.4.0), Python (version 3.6), and the Ubuntu system (version 20.04). All metrics were computed using Scikit-learn, version 0.19.1. Continuous variables that were normally distributed were summarized and reported as means ± standard deviations.
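For readers who want to see how the reported classification metrics relate to each other, the following sketch computes accuracy, sensitivity, F1, and Cohen's Kappa for a binary (2-CAT) task from first principles. The study itself used Scikit-learn; this dependency-free version is only an illustration, and `binary_metrics` is a hypothetical name.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, sensitivity, F1 and Cohen's Kappa for labels in {0, 1}."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    n = tp + tn + fp + fn
    accuracy = (tp + tn) / n
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    denom = precision + sensitivity
    f1 = 2 * precision * sensitivity / denom if denom else 0.0
    # Agreement expected by chance, from the marginal label frequencies.
    p_e = ((tp + fn) * (tp + fp) + (tn + fp) * (tn + fn)) / (n * n)
    kappa = (accuracy - p_e) / (1 - p_e) if p_e != 1 else 0.0
    return {"accuracy": accuracy, "sensitivity": sensitivity,
            "f1": f1, "kappa": kappa}


if __name__ == "__main__":
    print(binary_metrics([1, 1, 1, 1, 0, 0, 0, 0], [1, 1, 1, 0, 0, 0, 1, 0]))
```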

The study participants’ characteristics are given in Table 2. A total of 230 individuals were included in our analysis. The median age was 62 years (IQR 55, 69), 70% were men, 45% were white, 82% had hypertension, 71% had dyslipidemia, 16% were current smokers, and 27% had a high pretest probability of obstructive CAD.

The automatic model achieved a mean error of 2.05 and 2.27 in BCF and ECF detection, respectively. The acceptance and error rates were 83% and 5.0%. A common feature of misclassified cases was a relatively short contrast duration in the video (typically < 5 frames with adequate vessel-to-background contrast). The network did not adequately handle this type of condition because the training dataset had very few instances of short-duration contrasting frames.

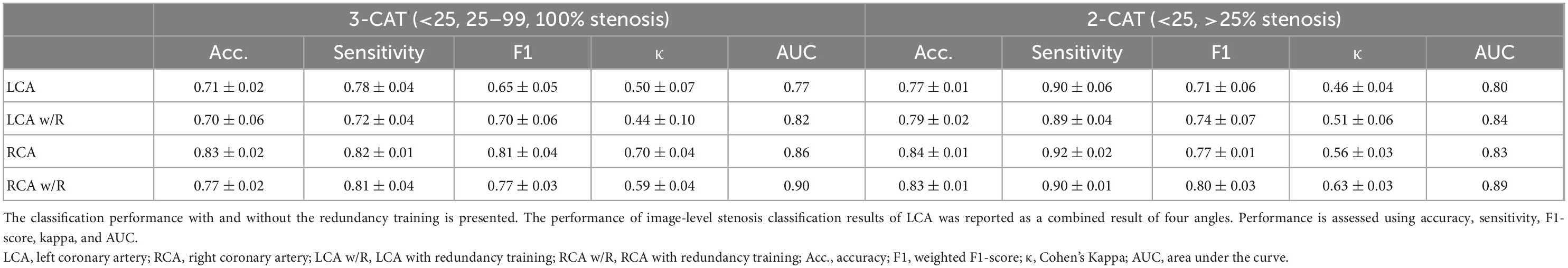

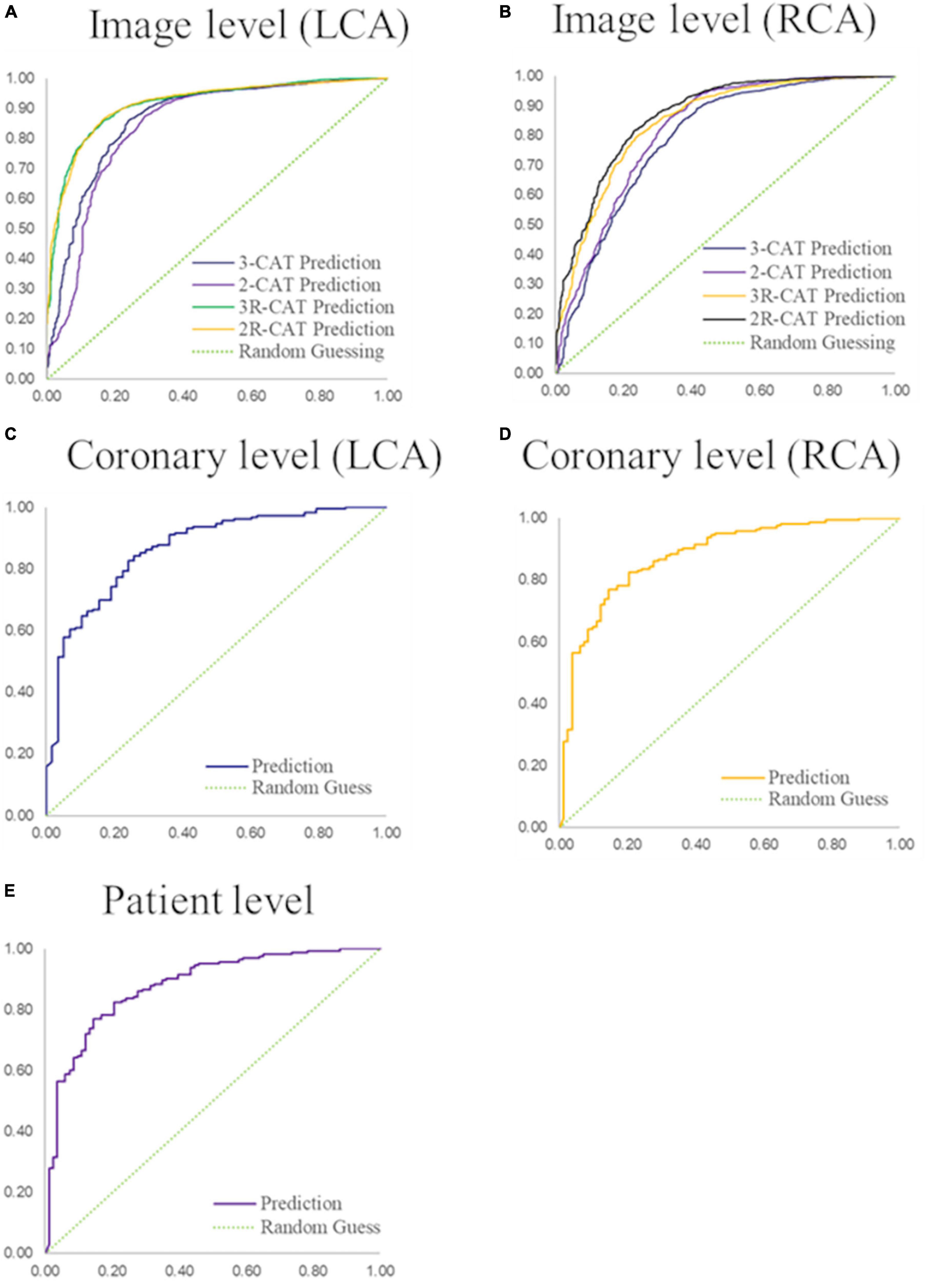

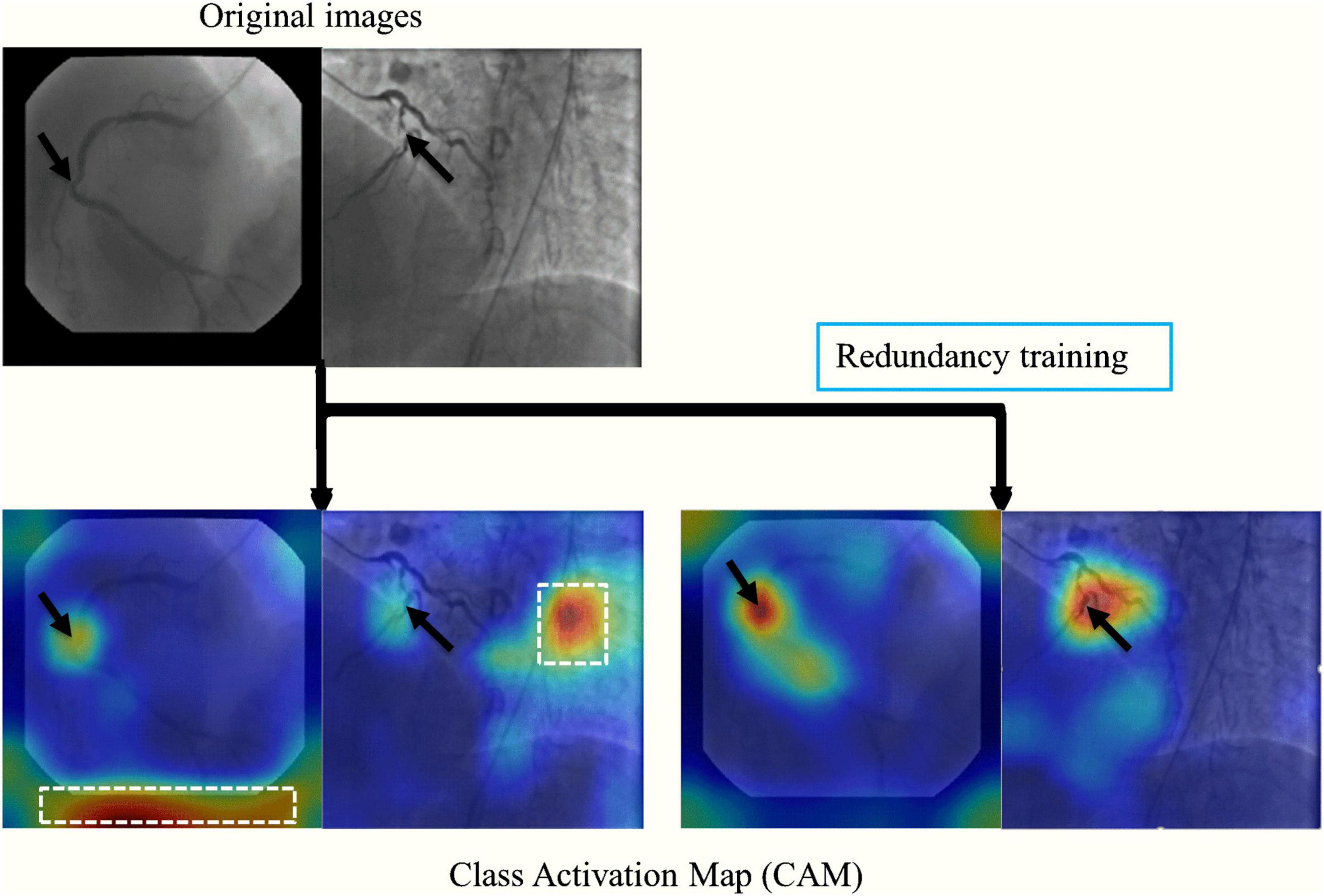

The stenosis classification results in 3-CAT and 2-CAT with and without redundancy training models are summarized in Table 3 and Figure 3. In brief, the image-level classification performance was better in 2-CAT than 3-CAT for the LCA while not significantly different for the RCA. The redundancy training improved the AUC values for both 2-CAT and 3-CAT, as well as the accuracy, F1-score, and kappa score in 2-CAT. Based on the better performance in 2-CAT as well as our aim to separate normal coronary/mild stenoses from higher severity of stenosis, 2-CAT evaluation was performed for artery-level (LCA and RCA) and patient-level classification. At the artery level, the accuracies were 0.83 and 0.81, the sensitivities 0.94 and 0.90, and the AUCs 0.87 and 0.88 for the LCA and RCA, respectively; at the per-patient level, the accuracy was 0.85, the sensitivity 0.96, and the AUC 0.86. A representative image illustrating the effect of the redundancy training is shown in Figure 4 with visualization aided by a heatmap. The overfitting caused by background structures is markedly reduced, likely resulting in the improvement in classification performance.

Table 3. The image-level stenosis classification performance for the 2-category and 3-category severity levels.

Figure 3. Performance of coronary stenosis classifications in image, coronary artery, and patient levels. (A,B) ROC curves of image-level classification on 3-CAT and 2-CAT with and without redundancy training on LCA and RCA. (C,D) ROC curves of coronary artery level classification on LCA and RCA. (E) ROC curve of patient-level classification. The AUC values are summarized in Table 3. RCA, right coronary artery; LCA, left coronary artery; AUC, area under the curve.

Figure 4. A representative image of the effect of the redundancy training demonstrated in a heatmap style. In the original training, the model had mid-to-high level attention on background regions. The redundancy training reduced the overfitting caused by background structures and improved the performance of stenosis classification.

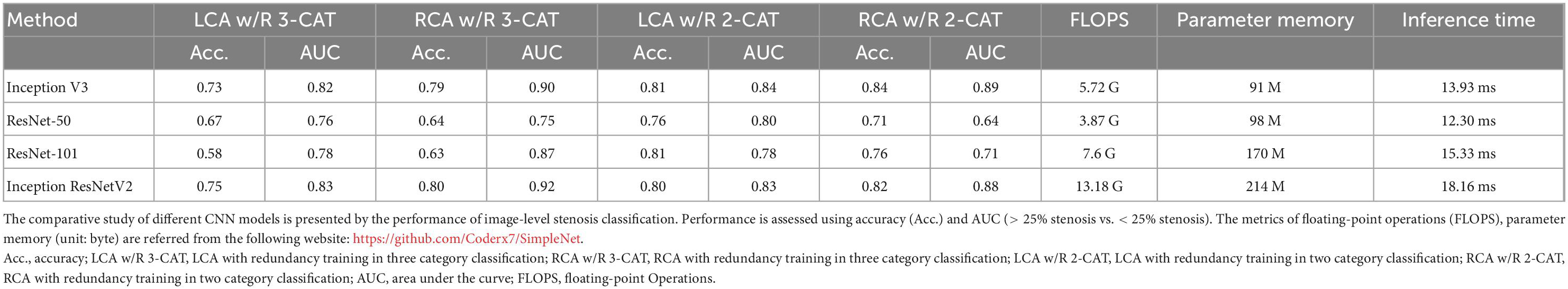

Additionally, the image-level classification performances of different CNN models of ResNet-50, ResNet-101, Inception-v3 and InceptionResNet-v2 were compared in Table 4. The comparative result suggests that Inception-v3 is the most suitable one among all four models, because of its fast inference speed, small size, and high accuracy in many tasks.

Table 4. The comparative study of different CNN models in image-level stenosis classification performance.

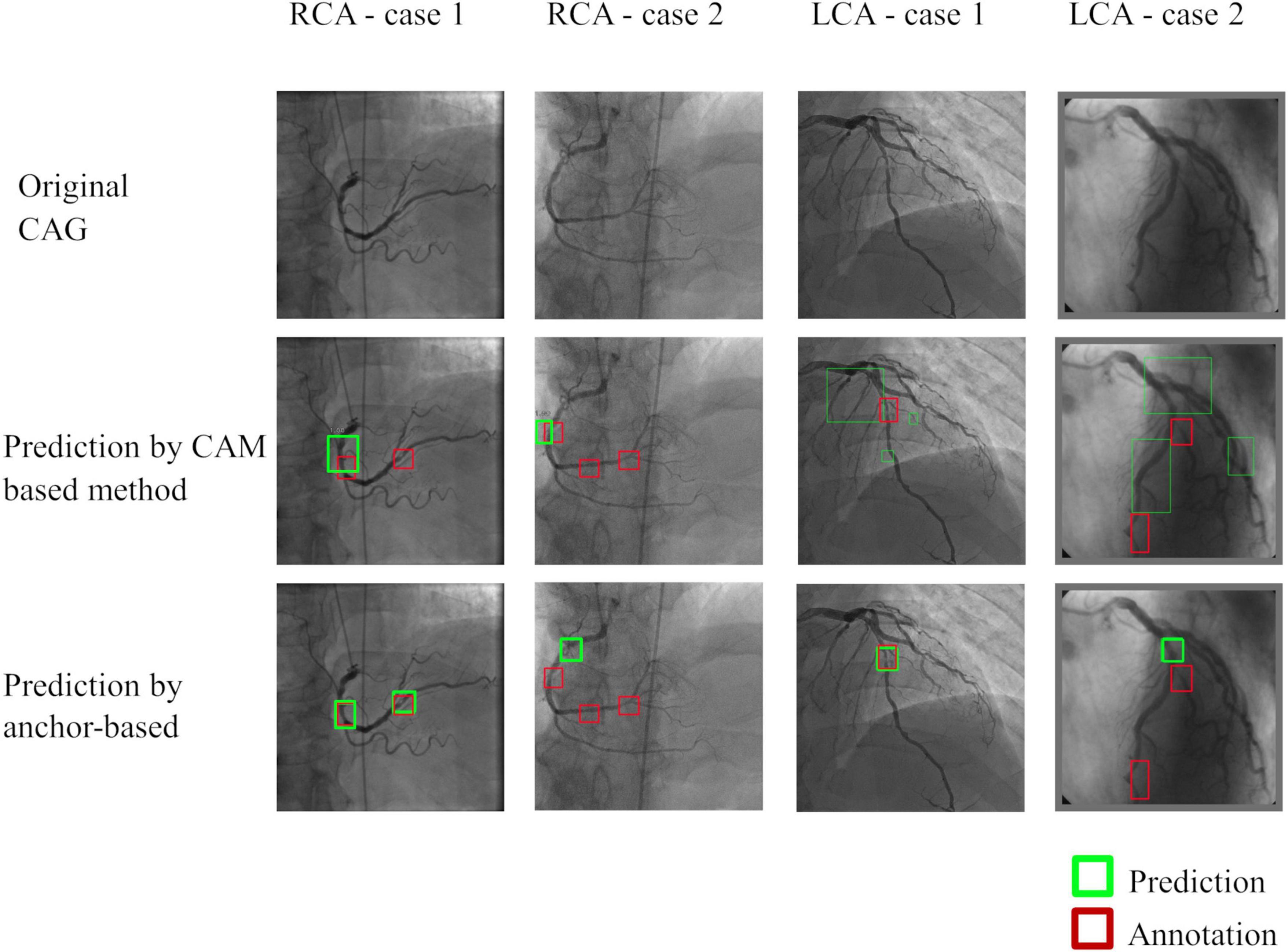

Quantitative results were summarized in Table 5. In brief, the anchor-based FPN method showed better performance than the CAM-based method by all the metrics studied. Both the localization techniques performed better for RCA images than for LCA images. In both methods, Sensitivity was low due to the many annotations that highlighted small lesions that had ambiguous feature patterns in the arteries. Performance was also lower when there were multiple stenoses in distal coronary arteries or branches (see Figure 5 for illustration).

Figure 5. Representative images of stenosis position localization experiments. Predicted boxes from the anchor-based model produced more accurate boxes when compared to the CAM-based model. Multiple stenoses in distal coronary arteries or branches were difficult for correct localization, which was the main reason for the failed cases.

In this study, we developed a CAG stenosis detection and localization tool to facilitate safety screening of a large volume of CAG images. The main findings from the present study are summarized as follows: (1) a fully automatic, end-to-end workflow that eliminates the vessel extraction and segmentation step for supervised learning was developed; (2) the multi-view CAG analyzing architecture for artery- and patient-level stenosis classification achieved an accuracy of 0.85, a sensitivity of 0.96, and an AUC of 0.86 at the per-patient level; (3) redundancy training improved classification performance, hedged against invalid feature learning, and reduced the error between the training and validation sets; (4) stenosis localization was investigated with CAM-based and anchor-based models, with superior quantitative results for the anchor-based model.

An end-to-end workflow is advantageous in reducing human interaction steps. In our proposed workflow, once applied to the CAG videos, the model automatically selects the optimal frames, performs stenosis classification, and localizes stenosis positions, providing robust results at both the artery and patient levels. Our workflow is advantageous in large-volume clinical settings or for quality control purposes, because timely screening of many CAG videos to identify cases with normal or only mild stenoses frees more time for the cases with higher stenosis severities, which can translate into improved productivity and facilitated safety screening. Additionally, by providing stenosis classification and localization, the reader/physician can quickly focus on the lesion and perform quantitative CAG in an efficient manner.

The candidate frame selection performance presented here was better than that in a previous publication by another group (4), likely because the bi-directional CNN + LSTM network effectively extracts high-dimensional features of contrast flow from the images, so that the network can detect the changing trend in temporal sequences and find the contrast frames with higher accuracy than the RNN-only method (4). The stenosis classification results in the current study are encouraging: they are comparable to, and in some cases better than, those of methods reported in previous studies, in which the image-based stenosis classification methods (5, 36) presented patient-level 2-CAT sensitivities of 0.80 and 0.87, and three other vessel- and patch-based studies (6, 9, 17) presented accuracies of 0.94, 0.97, and 0.92, respectively. We attribute our favorable results to addressing different aspects of a typical CAG study such as multiple angle views, background frames, and visually insignificant features of vessel stenoses through redundancy training to reduce overfitting in classification training.

Redundancy training is an effective tool for improving classification accuracy and reducing the error between the training and validation sets. In the original training, CNNs may be activated by invalid features such as image background and artifacts, which can be visualized from CAM heatmap. Comparatively, the redundancy frames were introduced as new categories in redundancy training, therefore the stenosis features were more activated on effective features such as vessel morphology, intensity change and narrow characteristics.

The comparison between 2-CAT and 3-CAT classification offers additional insight into stenosis analysis. In our experiments, the image-level classification performance was better in 2-CAT than 3-CAT for the LCA (accuracy = 0.77 vs. 0.71) while not significantly different for the RCA (accuracy = 0.84 vs. 0.83). One possible reason is that LCA anatomy presents with more variation than the RCA (31), making it harder for the CNN models to detect vascular blockage and occlusion in 3-CAT classification. Another explanation is that category distributions are slightly more imbalanced in 3-CAT than in 2-CAT classification, resulting in reduced accuracy (in LCA) and sensitivities (both in LCA and RCA).

Our study also explores a solution to the stenosis localization problem via an object detection framework. Two different stenosis localization methods, CAM and FPN, were compared. The CAM-based model has the strength of a simple derivation that uses the stenosis classification model as a backbone. However, because the activation map is calculated from feature maps in the deep layers of the CNN, the CAM method is unfavorable for fine-grained and multiple object detection, such as small blood vessel stenoses in the same CAG image. In contrast, the anchor-based model showed better performance for stenosis positioning, since features at different scales can be well exploited by the feature pyramid structure. The trade-off is that additional annotations and a supervised-learning procedure were necessary for training the anchor-based model. Additionally, the comparison of the stenosis localization performances between RCA and LCA also supports our viewpoint that the complexity of morphology and structure of angiographic vessels may be a severely adverse factor for the accuracy of the algorithms (classification and localization). In LCA angle views, the two main arteries (LAD and LCX) may overlap on the 2-dimensional CAG image and twist around each other, raising difficulties in stenosis visualization. In some cases, there are multiple lesions in separate vessels or segments in the LCA (such as the second diagonal, second obtuse marginal, or posterolateral), with vague and insignificant visual characteristics. By comparison, the RCA has clearer vessel shapes and simpler morphologic characteristics, so that its stenosis features are more pronounced. Together, these factors lead to better localization performance with both methods for the RCA than for the LCA.

Future work will aim at the following aspects. (1) We could perform an external validation in different studies as a means to generalize our technique and further improve performance. We believe that the proposed method will achieve good results with new images. Considering that the new dataset may have different imaging parameters (angle views, phase intervals, and FOVs), we may have to adjust the image pre-processing algorithm to accommodate the new images. Furthermore, transfer learning in a small subset could also improve performance. (2) Application in a variety of clinical or investigative scenarios beyond safety screening with different clinical goals such as fine-grained stenosis classification/localization.

Our study had a few important limitations. Training and evaluation were performed in the same cohort. A validation study using an external cohort is needed to accurately assess the performance of our techniques. Stenosis classification was simply categorized into three groups of < 25, 25–99%, and total occlusion for 3-CAT while < 25 and 25–100% stenosis for 2-CAT. Our aim was to develop a tool that identifies normal and mild stenosis cases within a large cohort. In this regard, more granular categories for mild to moderate stenosis may be considered for different clinical or investigational purposes, such as the detection of hemodynamically significant stenosis.

In conclusion, a fully automatic end-to-end deep learning-based workflow for CAG images that eliminates the vessel extraction and segmentation step was accomplished. Our redundancy-based algorithm showed high accuracy for stenosis classification, and accurate localization was achieved by an anchor-based model. This end-to-end approach may facilitate safety screening in high-volume centers and in clinical trial settings.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the Johns Hopkins Institutional Review Board. The patients/participants provided their written informed consent to participate in this study.

CC and YK contributed to conception and design of the study, data analysis, manuscript drafting, and revisions. HV, MO, JL, and BA-V participated in the design and coordination of the study as well as manuscript revisions. All authors have read and approved the final manuscript.

This study was supported by Master Research Agreement 09–115 and Artificial Intelligence Health Information Exchange (AIHEX).

JL reported receipt of grant support from Canon Medical Systems.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcvm.2023.944135/full#supplementary-material

Supplementary Figure 1 | The detailed structure of inception-v3 and LSTM. Inception-v3 was employed as a basic classifier to recognize full-contrasting frames and non-contrasting frames as candidate or redundancy frames. Then, the output of the fully connected layer of inception-v3 was fed to a bi-directional LSTM with 32 time-steps (units), and also concatenated with the outputs of the forward and backward LSTM units. The concatenation result was connected to a multi-layer perceptron (MLP, with one hidden layer) and a binary activation layer (sigmoid).

Supplementary Figure 2 | The architecture of the anchor-based feature pyramid network for stenosis localization. The 1st, 2nd, and 3rd feature maps in the pyramid were derived from the output of the concatenated features before the 1st, 2nd, and 3rd pooling layers, respectively. The 4th and 5th feature maps were downsampled from the previous layers. The anchor shapes were preset by the K-Means clustering method with seven different groups of height and width.

Keywords: stenosis localization, stenosis classification, catheter coronary angiography, end-to-end workflow, deep learning, redundancy training

Citation: Cong C, Kato Y, Vasconcellos HDD, Ostovaneh MR, Lima JAC and Ambale-Venkatesh B (2023) Deep learning-based end-to-end automated stenosis classification and localization on catheter coronary angiography. Front. Cardiovasc. Med. 10:944135. doi: 10.3389/fcvm.2023.944135

Received: 14 May 2022; Accepted: 16 January 2023; Published: 07 February 2023.

Edited by:

Sebastian Kelle, German Heart Center Berlin, Germany

Reviewed by:

Mingxing Xie, Huazhong University of Science and Technology, China

Copyright © 2023 Cong, Kato, Vasconcellos, Ostovaneh, Lima and Ambale-Venkatesh. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bharath Ambale-Venkatesh, bambale1@jhmi.edu

†These authors have contributed equally to this work and share first authorship
