- ¹ Department of Communication and Media Technologies, University of Colombo School of Computing, Colombo, Sri Lanka

- ² C-MEIC and the School of Automation, Nanjing University of Information Science and Technology, Nanjing, China

Assistive technologies play a major role in bridging the accessibility gap in the arts, especially painting. Despite constant advancements in these technologies, visually impaired people often struggle to experience and interpret paintings independently. Our goal was to convey the contents of a painting to visually impaired students using selected multi-sensory stimuli (tactile, auditory, and somatosensory) to compensate for the loss of visual input. A prototype named SEMA (Specially Enhanced Multi-sensory Art) was built around a simple painting to deliver these stimuli. The prototype was developed and refined iteratively with visually impaired students at the University of Colombo, Sri Lanka. We evaluated each subsystem individually using user feedback and analyzed the results with several quantitative and qualitative measures. The final user study with 22 visually impaired participants yielded a strong preference (92.6%) for the prototype and highlighted its potential to enhance art experiences. These findings contribute to further exploration of multi-sensory integration in entertainment and its impact on the visually impaired community.

1 Introduction

Entertainment is a significant part of everyday life. Throughout history, many forms of entertainment have relied heavily on the sense of sight, as Renaissance art illustrates (Panofsky, 2018). In the natural environment, however, people perceive events through multiple senses (Stevenson et al., 2014), and the brain then decides which information to group and which to segregate. Over the years, entertainment has evolved to combine different stimuli and give audiences a richer experience (Kuhns, 2005).

Sensory alignment is a critical concept in sensory stimulation because it aims to find the perfect alignment between different stimuli (Marshall et al., 2019). Since perfect alignment is not always ideal for the overall experience, it is important to understand how the different senses work, as this explains how different sensory cues are processed and integrated by the brain.

However, the practice of engaging more than one sense to enjoy a work of art is rarely adopted in mainstream settings because of the restrictions institutions impose. Museums restrict touching their artifacts for preservation reasons, and painters mostly create 2D works that can hardly be enjoyed through any sense other than sight. This excludes and alienates a large audience: people with visual impairments. An estimated 285 million people worldwide currently live with visual impairments (Masal et al., 2024), and this audience has few good entertainment options that do not rely heavily on sight. Even technologies aimed at these problems, such as virtual reality and haptic technologies, have not yet addressed them in a meaningful and cost-effective way. Audiences without impairments, meanwhile, rarely get the chance to enjoy or interact with multi-sensory art without sensory overload, owing to a limited understanding of how multiple stimulus inputs interact (Obrist et al., 2017).

Several projects address this issue, including the Tate Sensorium (Pursey and Lomas, 2018), the Neurodigital: Touching Masterpieces haptic glove project (Lannan, 2019), the Rain Room (Yuan et al., 2020), and The Form of Eternity (Christidou and Pierroux, 2019), each using a different combination of sensory stimuli to study how multi-sensory integration can enhance an entertainment experience.

Building on this research, we present this study as an attempt to understand how somatosensory (heat and cold), auditory, and tactile stimuli can enhance the art “viewing” experience for visually impaired individuals. The design takes the form of a prototype named SEMA: Specially Enhanced Multi-sensory Art, an artwork that delivers these stimuli. Several user-centric programs and hardware components were developed as assistive technologies in this process. User experience was then assessed through a short questionnaire administered at the end of each session with SEMA and elaborated through semi-structured interviews, which gathered qualitative data for understanding the subjectivity of participants' experiences and identifying patterns.

2 Background

Many forms of entertainment have evolved toward variety (Kuhns, 2005), blending different forms to give audiences a richer experience. There are many instances where one form of experience is coupled with another to provide a fuller experience than either form alone. Research points out that people perceive events through multiple senses (Velasco and Obrist, 2021).

2.1 Introduction: definition of multi-sensory experience in entertainment

Recent research has focused on controlling sensory stimuli to give the user a relevant, to-the-point experience, rather than simply combining different sensory stimuli (Chou et al., 2020). Marshall et al. (2019) propose an ideal approach to sensory stimulation: aiming for perfect alignment between the senses. However, the same work notes that imperfect alignment can sometimes have desirable effects on the audience. Research on art has found that physical mechanisms of awareness encourage consumers to create their own phenomenal worlds (Joy and Sherry, 2003). Hence, audiences appreciate the combination of different sensory stimuli in a meaningful and engaging way, and this can leave a positive impression (Solves et al., 2022).

2.2 Multi-sensory phenomena and brain processing: theories and findings

Various theories and models have been proposed to explain multi-sensory phenomena. One is the proposal that all brain areas may be inherently multi-sensory or may contain some multi-sensory interneurons (Allman and Meredith, 2007). Another finding is that the timing with which sensory inputs are combined can matter for how the brain processes them (Lakatos et al., 2007). Further, many multi-sensory experiences involve interactions between different brain areas rather than a single specific region (Vuilleumier and Driver, 2007).

2.3 The influence of immersive technologies in contemporary entertainment

Within this domain, researchers try to create inclusive art for people with disabilities by inventing novel ways for them to enjoy art (Rieger and Chamorro-Koc, 2022). At Van Gogh: The Immersive Experience, viewers could explore high-definition digital replicas of Van Gogh's paintings in a 360-degree virtual space (Yu, 2022). The “Neurodigital: Touching Masterpieces” project uses ultrasound haptic feedback with virtual reality to create sensations for users interacting with famous museum artworks such as statues, enabling individuals with visual impairments to experience them. Instead of a VR headset, it uses a pair of gloves that apply ultrasound to simulate the texture and 3D shape of the selected statues (Lannan, 2019). The Tate Sensorium, a project initiated by the company “Flying Object,” was a groundbreaking experience for art viewers: it featured several paintings, each enhanced by a mix of sensory stimuli, to investigate the possibilities of multi-sensory experiences (Pursey and Lomas, 2018). The “Eyes-Off Your Fingers” study investigates how tactile engagement and auditory feedback, delivered through gradual variations in surface sensations, can create multi-sensory interactions that let users explore and perceive virtual environments without relying on visual input (Bernard et al., 2022). The Rain Room is an artwork exhibited in 2012 and 2013 at the Barbican in London and the Museum of Modern Art (MoMA), created by Random International in collaboration with Hyundai Art and Technology. Its audio is carefully calibrated to match the flow of water to create an immersive experience (Yuan et al., 2020).

The Museum of Pure Form lets visitors wear a device on their index finger that delivers haptic sensations of the 3D artworks they are looking at (Vaz et al., 2020). Edible Cinema brings together audio, visual, and gustatory stimuli to create a rich cinematic experience that complements the narrative of the film (Velasco et al., 2018). Faustino et al. (2017) created a wearable device called SensArt that stimulates the auditory sense, mechanoreceptors, and thermoreceptors to give a fuller, richer experience. The Cultural Survival Gallery uses moving images and audio-visual media to create an “imaginary sensory environment” (Morgan, 2012). The vibrotactile augmented garment created by Giordano et al. is used in the sensory art installation “Ilinx,” which blends sound, visuals, and whole-body vibrations (Giordano et al., 2015).

Bumble Bumble is a musical system developed by Zhou et al. (2004), who also created the Magic Music Desk (MMD). Both systems offer a unique experience to music enthusiasts; the MMD takes natural, intuitive hand and speech commands as input to produce music. EXTRACT/INSERT is an exhibition curated by the Herbert Museum in Coventry, England, that merges the real and virtual worlds through a series of sensory cues, so that visitors see avatars from a virtual world enter the physical space (Kawashima, 2006). In another project, five paintings held by the Fine Arts Museum, Bilbao, were reproduced using Didu technology, which carves and etches a design into a block. The audience can touch the paintings while an audio track guides them through the interpretation of each work (McMillen, 2015).

2.4 Strengths and limitations of multi-sensory integration

From the literature, we identified several strengths and limitations of multi-sensory integration that we expected to draw on while developing SEMA. Strengths:

• Multi-sensory integration supports effective communication of information and greater inclusion (e.g., of people with learning disabilities) (Matos et al., 2015).

• New technologies can further strengthen the ideas and products of the domain. Technologies such as fMRI and EEG offer a new view into the human brain, allowing researchers to study not only how multi-sensory applications affect a person's arousal but also how effective different multi-sensory cues are (Verma et al., 2020).

• Multi-sensory integration in entertainment increases user experience, involvement, and engagement (Atkinson and Kennedy, 2016), and it can enhance collaboration between users (Frid et al., 2019). User satisfaction with such experiences has been reported as higher than with traditional ones (Gong et al., 2022).

• It creates a broader range of sensations with different stimuli (Lannan, 2019).

• It builds a three-dimensional space around the listener, especially through auditory integration, and improves spatial presence (Cabanillas, 2020; Velasco et al., 2018).

• It adds value to traditional entertainment (Velasco et al., 2018).

• It creates deeper emotional reactions in visitors (Vi et al., 2017).

Limitations:

• Some technologies can cause physical discomfort, such as the simulator sickness associated with the Microsoft HoloLens (Vovk et al., 2018).

• Visual stimuli (specifically video) have limitations such as a limited field of view, low resolution, and user disorientation (Guo et al., 2021).

• When designers lack an understanding of sensory alignment and of how to integrate several stimuli effectively, the audience can become exhausted or overwhelmed by the constant flow of information (Velasco et al., 2018; Vi et al., 2017).

• Users may experience adverse psychological effects such as sensory overload, depression, perceptual effects, and illusions (Frid et al., 2019).

• Some sensory cues are easy to miss if not communicated properly, especially if the audience must follow instructions during the experience (Velasco et al., 2018).

• Users may also misidentify tactile sensations (West et al., 2019).

3 Materials and methods

This research aimed to combine somatosensory, tactile, and auditory stimuli to give visually impaired individuals more information when they experience a painting. We formulated three research questions to be answered with the aid of SEMA:

1. How can we enhance accessibility in visual art forms for visually impaired persons?

2. How can a traditional painting be enhanced to provide a multi-sensory artistic experience for viewers?

3. How can we overcome the challenge of limited engagement in art viewing?

SEMA is the apparatus we designed, through an iterative design and development process, to test different technologies and parameters with our participants.

3.1 Design

This research attempts to create a means for visually impaired individuals to use other senses to experience a painting. The prototype we developed stimulates three main senses: tactile, auditory, and somatosensory. By integrating these stimuli, we aimed to understand how multi-sensory integration helps people understand a painting better when the sense of sight is limited. While popular works like Van Gogh's Starry Night or Vermeer's Girl with a Pearl Earring possess artistic merit, these paintings, even for sighted viewers, require contextual understanding to fully grasp the artist's intent. The focus of this research, however, was not on conveying complex emotions or narratives but on evaluating the effectiveness of multi-sensory augmentation in conveying basic visual information through touch and sound.

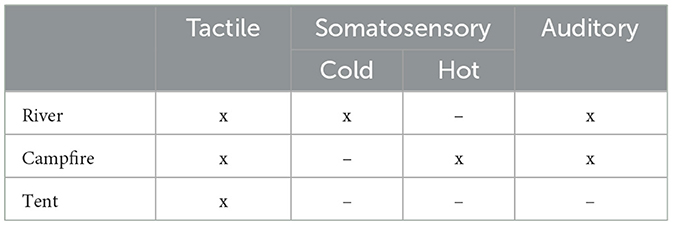

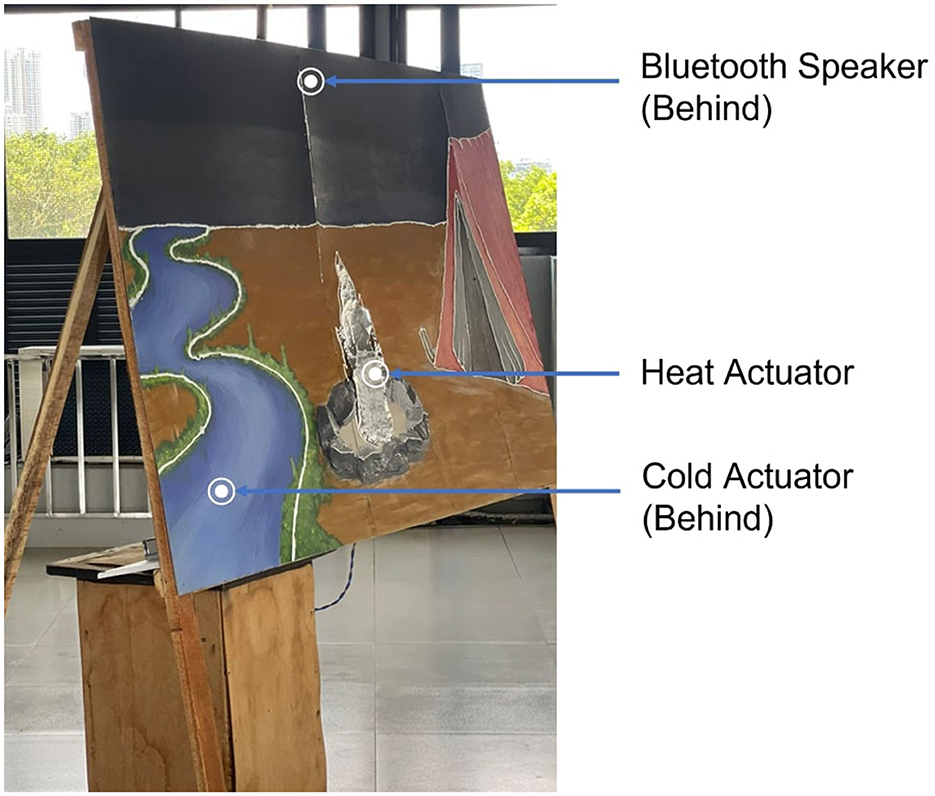

Therefore, we opted for a painting that could be deconstructed into fundamental visual components comprehensible through tactile and auditory means. Simple geometric shapes, while readily understood through touch, would not necessitate a painting in the first place. We designed a painting consisting of three basic, easy-to-understand elements that serve as the backdrop to deliver the necessary stimuli clearly. We chose a river (somatosensory-cold), a bonfire (somatosensory-hot), and a tent. Table 1 shows which stimuli are integrated with each of these elements. Several hardware components were designed and developed to provide additional sensory stimulation for these elements.

When the user approaches the proposed system SEMA, they can start with any of the elements and explore the others to fully experience SEMA. To support this architecture, several hardware components and programs were developed. Each hardware component focuses solely on providing one type of stimulus, facilitating easy modification and integration. For instance, the thermal actuator component provides only heat, without any tactile or auditory stimuli. Separate components were built to provide the other two types of stimuli.
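The single-stimulus architecture described above can be sketched in a few lines. This is a minimal Python illustration, not the authors' code: the class names (`Stimulus`, `Component`, `HeatActuator`, and so on) and the helper `components_for` are hypothetical, and because Table 1 is not reproduced here, the element-to-stimulus mapping is our reading of the text (the tent carries only the tactile outline and serves as the control). Tactile cues are passive plaster relief, so only the powered components are modeled.

```python
from enum import Enum, auto


class Stimulus(Enum):
    TACTILE = auto()
    HEAT = auto()
    COLD = auto()
    AUDITORY = auto()


# Assumed element-to-stimulus mapping, read from the text; the tent is the tactile-only control.
ELEMENT_STIMULI = {
    "river": {Stimulus.TACTILE, Stimulus.COLD, Stimulus.AUDITORY},
    "bonfire": {Stimulus.TACTILE, Stimulus.HEAT, Stimulus.AUDITORY},
    "tent": {Stimulus.TACTILE},
}


class Component:
    """Hypothetical base class: each powered component delivers exactly one stimulus."""

    stimulus: Stimulus

    def __init__(self, name: str) -> None:
        self.name = name
        self.active = False

    def start(self) -> None:
        self.active = True

    def stop(self) -> None:
        self.active = False


class HeatActuator(Component):
    stimulus = Stimulus.HEAT


class ColdActuator(Component):
    stimulus = Stimulus.COLD


class AmbientSpeaker(Component):
    stimulus = Stimulus.AUDITORY


def components_for(element: str, components: list[Component]) -> list[Component]:
    """Return the powered components serving one painting element, in the given order.

    Tactile cues come from passive plaster relief, so no component is listed for them.
    """
    wanted = ELEMENT_STIMULI[element]
    return [c for c in components if c.stimulus in wanted]


rig = [HeatActuator("resistor array"), ColdActuator("Peltier module"), AmbientSpeaker("speaker")]
for element in ELEMENT_STIMULI:
    print(element, [c.name for c in components_for(element, rig)])
```

Keeping one stimulus per class mirrors the stated design goal: a component can be swapped (for example, the Peltier heater for the resistor array) without touching the others.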

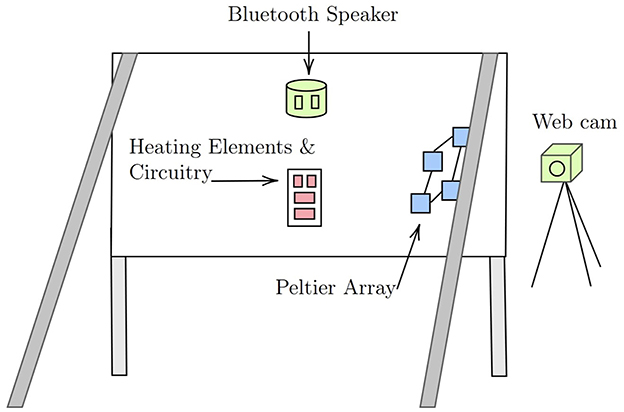

To that end, the initial electrical design of SEMA was planned as shown in Figure 1.

3.2 Development and implementation

The painting was created on a portable wooden frame. It is six feet long and four feet tall, and the board on which it was drawn is 2.5 mm thick. Each hardware component and program that enhanced the painting was developed iteratively; the evolution of each is described in the following subsections.

3.2.1 Tactile stimuli—acrylic relief

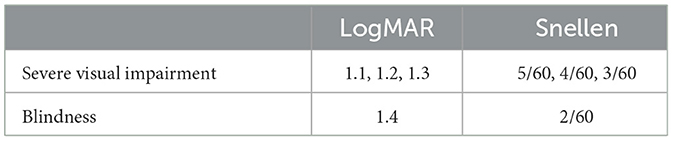

After the painting was drawn on the board, tactile stimuli were embedded on top of it. The basic patterns of the painting were created using a technique called acrylic relief. We conducted a pretest with participants from the Sri Lanka Council for the Blind to identify the optimal thickness of, and spacing between, the plaster of Paris lines for the final painting. The pretest had three levels:

• Level 1: Contour line thickness: 1 mm.

• Level 2: Contour line thickness: 3 mm.

• Level 3: Contour line thickness: 5 mm.

Additionally, within each level, the contour lines were placed at spacings varying from 1 to 30 mm to determine how sensitive a visually impaired person's touch is to closely spaced contour lines (see Figure 2).

Figure 2. Three levels of contour line thickness for the pretest on touch sensitivity of visually impaired individuals.

In the pretest, participants reported how well they could identify the contour lines. According to them, “as long as they have enough space between them, we [visually impaired individuals] can differentiate between contour lines.” We later confirmed with them that the spacing had to be more than 1 mm for two contour lines to be identified separately. They did not express a preference for any one thickness, so we chose the Level 2 option (3 mm thickness) for our design.
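The pretest can be summarized as a small full-factorial grid of line thickness by spacing. In this hypothetical sketch, the 1 mm spacing step is an assumption (the text reports only the 1–30 mm range), and `usable_spacings` simply applies the reported outcome: 3 mm lines with gaps strictly greater than 1 mm.

```python
from itertools import product

THICKNESS_LEVELS_MM = (1, 3, 5)  # Levels 1-3 of the pretest
SPACING_MIN_MM, SPACING_MAX_MM = 1, 30  # spacing range reported in the text
SPACING_STEP_MM = 1  # assumption: the step size is not reported


def pretest_conditions(step_mm: int = SPACING_STEP_MM) -> list[tuple[int, int]]:
    """All (thickness, spacing) pairs in millimetres for a full-factorial test board."""
    spacings = range(SPACING_MIN_MM, SPACING_MAX_MM + 1, step_mm)
    return list(product(THICKNESS_LEVELS_MM, spacings))


def usable_spacings(conditions, thickness_mm: int = 3, min_gap_mm: int = 1) -> list[int]:
    """Spacings for the chosen thickness that exceed the reported 1 mm discrimination limit."""
    return [s for t, s in conditions if t == thickness_mm and s > min_gap_mm]


conditions = pretest_conditions()
print(len(conditions))  # 3 levels x 30 spacings
print(usable_spacings(conditions)[:3])  # smallest usable gaps for 3 mm lines
```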

The outlines of the river, bonfire, and tent were embedded with plaster of Paris to highlight the boundaries and shapes of the elements. The river and the campfire were also coupled with somatosensory stimuli, while the tent received only the plaster of Paris outline and served as a control. The objective was to understand whether touch alone would provide enough information without additional sensory stimuli. After this design was used in the first experiment iteration, participants suggested ways to make the tactile stimuli more perceptible. Therefore, some rocks were molded and embedded into the campfire element to give it a realistic feel.

3.2.2 Somatosensory stimuli—heat actuator

For the first iteration, a Peltier module was used as the heat emitter, driven by a circuit comprising an Arduino UNO (RRID:SCR-017284) microcontroller board, a temperature sensor, a mechanical relay, a transformer, and a 13 × 13 × 3 mm heat sink. An Arduino program developed in the Arduino IDE (RRID:SCR-024884) kept the temperature within a set range. In the pretest, participants preferred a temperature of around 55–60°C for the campfire. However, with this narrow 5°C control band, the relay could not switch the power off and on fast enough, resulting in inconsistent heat output. Because the primary issue was achieving consistent heat distribution, we replaced the Peltier modules with a resistor array.
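The switching problem can be illustrated with an on/off (bang-bang) controller with hysteresis. The Arduino program itself is not shown in the text, so the sketch below is a Python simulation of the assumed control logic; the constant heating and cooling rates are hypothetical and chosen only to show that narrowing the band increases how often the relay must switch.

```python
from dataclasses import dataclass


@dataclass
class HysteresisController:
    """On/off control with a hysteresis band, as a relay would apply it.

    The heater switches on at or below `low_c` and off at or above `high_c`;
    between the two limits the previous relay state is kept.
    """

    low_c: float = 55.0
    high_c: float = 60.0
    heating: bool = False

    def update(self, temperature_c: float) -> bool:
        if temperature_c <= self.low_c:
            self.heating = True
        elif temperature_c >= self.high_c:
            self.heating = False
        return self.heating


def simulate(controller: HysteresisController, start_c: float = 25.0, steps: int = 600,
             heat_rate: float = 0.5, cool_rate: float = 0.2):
    """Toy constant-rate thermal model (hypothetical rates in °C per step).

    Returns the temperature trace and the number of relay switching events.
    """
    temp, switches, previous = start_c, 0, controller.heating
    trace = []
    for _ in range(steps):
        on = controller.update(temp)
        if on != previous:
            switches += 1
            previous = on
        temp += heat_rate if on else -cool_rate
        trace.append(temp)
    return trace, switches


wide_trace, wide_switches = simulate(HysteresisController(55.0, 60.0))
narrow_trace, narrow_switches = simulate(HysteresisController(57.0, 58.0))
print(wide_switches, narrow_switches)  # the narrower band needs more relay switching
```

A mechanical relay has a finite switching speed and lifetime, so a control scheme that demands frequent switching is one plausible reason the narrow band produced uneven heat.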

Using the same transformer, eight fixed cement power resistors (5 W, 5% tolerance; four of 10 kΩ and four of 5 kΩ) were connected in parallel and powered. This circuit was then fixed on top of the campfire element and wrapped in heat-conductive aluminum foil shaped like the fire to distribute the heat uniformly (see Figure 3). Power was supplied to the resistor circuit for 3 min at 2-min intervals to maintain the temperature between 55 and 60°C.
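The arithmetic behind the resistor array and the heating schedule can be checked with a few lines of Python. Two assumptions are labeled here: we read “3 min at 2-min intervals” as 3 min on followed by 2 min off, and since the transformer's output voltage is not reported, `dissipated_power_w` is left to be evaluated at the actual supply voltage.

```python
def parallel_resistance(resistances_ohm) -> float:
    """Equivalent resistance of resistors in parallel: 1 / R_eq = sum(1 / R_i)."""
    return 1.0 / sum(1.0 / r for r in resistances_ohm)


def dissipated_power_w(voltage_v: float, resistance_ohm: float) -> float:
    """Power dissipated by a purely resistive load, P = V^2 / R."""
    return voltage_v ** 2 / resistance_ohm


def heater_on(t_min: float, on_min: float = 3.0, off_min: float = 2.0) -> bool:
    """Assumed schedule: on for `on_min` minutes, then off for `off_min` minutes, repeating."""
    return (t_min % (on_min + off_min)) < on_min


# Four 10 kOhm and four 5 kOhm resistors, as listed in the text.
r_eq = parallel_resistance([10_000] * 4 + [5_000] * 4)
print(f"R_eq = {r_eq:.1f} ohm")  # R_eq = 833.3 ohm
print("duty cycle:", 3.0 / (3.0 + 2.0))  # duty cycle: 0.6
print([heater_on(t) for t in range(10)])
```

Because all parallel branches see the same voltage, each 5 kΩ resistor dissipates twice as much power as each 10 kΩ resistor.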

Figure 3. The resistor array was securely fixed to the board before being covered with foil to ensure proper functionality.

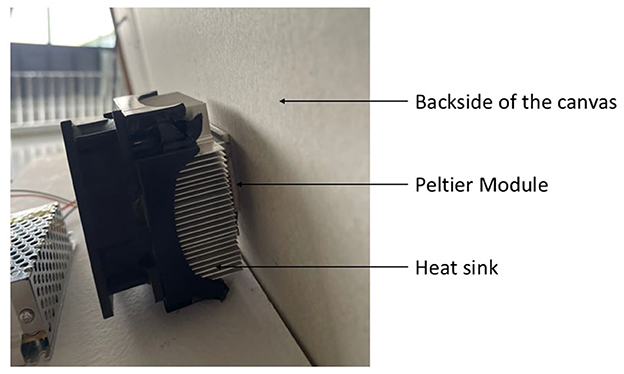

3.2.3 Somatosensory stimuli—cold actuator

To create the cold stimulus, we used a Peltier module with a case fan to remove heat from the module's hot (unused) side. Thermal compound was used to affix the case fan to the module, and both the Peltier module and the fan were powered by a transformer. The target temperature range was 13–16°C. Condensation that formed on the painting because of the module's low temperature served as an additional sensory cue, conveying that the area represents a body of water.
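The condensation cue depends on the cooled surface being below the dew point of the room air. A minimal sketch using the Magnus approximation follows; the room conditions (30°C, 75% relative humidity) are hypothetical, and the painting surface is assumed to sit at the actuator's 13–16°C, whereas the front of the board will in practice be somewhat warmer.

```python
import math

# Magnus-formula coefficients for saturation over liquid water (b: dimensionless, c: °C).
MAGNUS_B = 17.62
MAGNUS_C = 243.12


def dew_point_c(air_temp_c: float, relative_humidity_pct: float) -> float:
    """Approximate dew point (°C) from air temperature and relative humidity."""
    gamma = math.log(relative_humidity_pct / 100.0) + MAGNUS_B * air_temp_c / (MAGNUS_C + air_temp_c)
    return MAGNUS_C * gamma / (MAGNUS_B - gamma)


def condensation_expected(surface_temp_c: float, air_temp_c: float,
                          relative_humidity_pct: float) -> bool:
    """Water condenses on a surface colder than the dew point of the surrounding air."""
    return surface_temp_c < dew_point_c(air_temp_c, relative_humidity_pct)


# Hypothetical room conditions: 30 °C air at 75 % relative humidity.
print(round(dew_point_c(30.0, 75.0), 1))
for surface_c in (13.0, 16.0):
    print(surface_c, condensation_expected(surface_c, 30.0, 75.0))
```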

3.2.4 Auditory stimuli

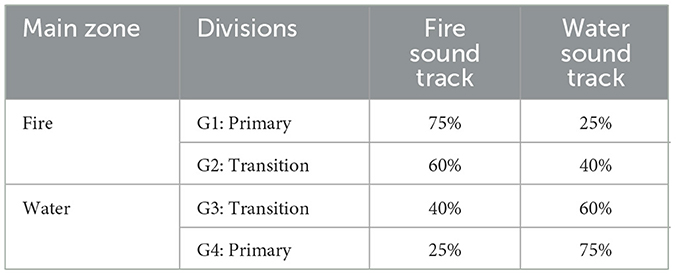

Two speakers were installed on the two sides of the painting. The painting was primarily divided into two main zones: Fire and Water. As the names suggest, the fire zone was assigned a fire ambient soundtrack, and the water zone was assigned a water ambient soundtrack. In the first iteration, both soundtracks were played simultaneously through the two speakers. However, during the experiments, participants provided feedback indicating a significant area for improvement. They mentioned that the sounds seemed “a bit cluttered” due to harsh overlapping.

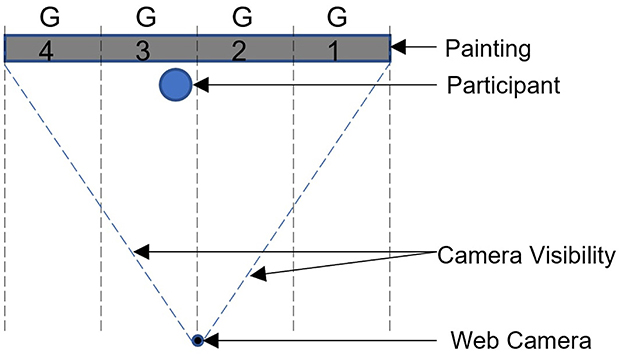

For the second iteration, we used only one speaker to avoid cluttered sound. A Python program was developed to track the participant with a webcam positioned in front of the painting. After several design adjustments, we settled on dividing the painting into four zones, each playing a different mix of the ambient soundtracks, as shown in Table 2.

Participants received this approach positively because it created a “rather smooth transition.” Figure 4 shows the high-level architecture of this setup.
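The four-zone audio scheme can be sketched as a position-to-zone mapping plus a gain mix per zone. Since Table 2 is not reproduced here, the linear fire/water crossfade and the choice of zone 0 as the fire side are hypothetical. The `ZoneDebouncer` class is an optional addition, not described in the text, that holds the current zone until a new one persists for several frames; this is one way to reduce abrupt switching when a visitor stands near a zone boundary.

```python
def zone_index(x_norm: float, n_zones: int = 4) -> int:
    """Map a horizontal position normalised to [0, 1] onto zones 0 .. n_zones - 1."""
    x = min(max(x_norm, 0.0), 1.0)
    return min(int(x * n_zones), n_zones - 1)


def zone_mix(zone: int, n_zones: int = 4) -> tuple[float, float]:
    """Hypothetical linear crossfade returning (fire_gain, water_gain).

    Zone 0 is assumed to be the fire side and the last zone the water side.
    """
    water = zone / (n_zones - 1)
    return 1.0 - water, water


class ZoneDebouncer:
    """Report a zone change only after it has been seen for `hold_frames` consecutive frames."""

    def __init__(self, hold_frames: int = 5) -> None:
        self.hold_frames = hold_frames
        self.current = None
        self._candidate = None
        self._count = 0

    def update(self, zone: int) -> int:
        if self.current is None or zone == self.current:
            self.current = zone
            self._candidate, self._count = None, 0
            return self.current
        if zone == self._candidate:
            self._count += 1
        else:
            self._candidate, self._count = zone, 1
        if self._count >= self.hold_frames:
            self.current = zone
            self._candidate, self._count = None, 0
        return self.current


for zone in range(4):
    print(zone, zone_mix(zone))
```

If the middle zones sound quieter with a linear mix, an equal-power crossfade (gains following cosine and sine curves) is a common alternative.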

3.2.5 Integration

After each component had been individually developed and tested in the pretesting process, the components were integrated. The cold stimulation actuator was installed at the back of the painting, with the Peltier module in direct contact with the board on which the painting was drawn, as shown in Figure 5.

Figure 5. Actuator for Cold Stimulation Using a Peltier Module with a heat sink integrated into the prototype.

The cold passed through the thin board, and moisture from the surrounding air condensed on the surface as planned. We built a wooden frame to hold the equipment in place so that it could be removed and installed elsewhere.

The heat stimulation actuator was mounted on the painting surface and covered with heat-conductive aluminum foil shaped to resemble fire. Plaster of Paris outlines were then applied in the same shapes to confine the heat within the boundaries of the fire. The board chosen for the painting was a poor heat conductor, which limited the spread of heat within the painting. The speaker was positioned at the top center of the painting frame and connected to the computer via Bluetooth. A Python program using the OpenCV library (Bradski and Kaehler, 2000) was developed to play the corresponding soundtrack by tracking the user's full-body position on a grid from a real-time webcam feed (see Figure 6).
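The text does not name the detector used for full-body tracking. The sketch below assumes OpenCV's built-in HOG person detector (`cv2.HOGDescriptor` with `cv2.HOGDescriptor_getDefaultPeopleDetector()`) as a stand-in and maps the largest detection to a 1 × 4 grid matching the four audio zones. The helper names are hypothetical, and audio playback is left out: `track()` only prints the grid cell for each frame.

```python
def box_to_cell(box, frame_w: int, frame_h: int, cols: int = 4, rows: int = 1):
    """Map a detection box (x, y, w, h) to the (row, col) grid cell containing its centre."""
    x, y, w, h = box
    cx, cy = x + w / 2.0, y + h / 2.0
    col = min(max(int(cx / frame_w * cols), 0), cols - 1)
    row = min(max(int(cy / frame_h * rows), 0), rows - 1)
    return row, col


def largest_box(boxes):
    """Pick the largest detection, assuming it is the visitor nearest the painting."""
    return max(boxes, key=lambda b: b[2] * b[3]) if len(boxes) else None


def track(camera_index: int = 0, cols: int = 4) -> None:
    """Print the grid cell of the largest detected person in each webcam frame."""
    import cv2  # imported here so the helpers above work without OpenCV installed

    hog = cv2.HOGDescriptor()
    hog.setSVMDetector(cv2.HOGDescriptor_getDefaultPeopleDetector())
    cap = cv2.VideoCapture(camera_index)
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            boxes, _weights = hog.detectMultiScale(frame, winStride=(8, 8))
            box = largest_box(boxes)
            if box is not None:
                frame_h, frame_w = frame.shape[:2]
                print(box_to_cell(box, frame_w, frame_h, cols=cols))
            cv2.imshow("SEMA tracking sketch", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        cap.release()
        cv2.destroyAllWindows()
```

Call `track()` with a webcam attached and press q in the preview window to stop. Selecting the largest box assumes the visitor at the painting is the person closest to the camera.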

3.3 Study

The completed prototype was then used to evaluate the overall experience as well as how well each stimulus represented the content of the painting. The studies were conducted in accordance with the ethical research guidelines of the Ethics Review Committee (ERC) of the University of Colombo School of Computing, with ERC approval. The primary objective of our study was to evaluate whether interacting with the SEMA prototype would lead to a higher overall experience rating than receiving only a verbal description of the painting.

3.3.1 Participants

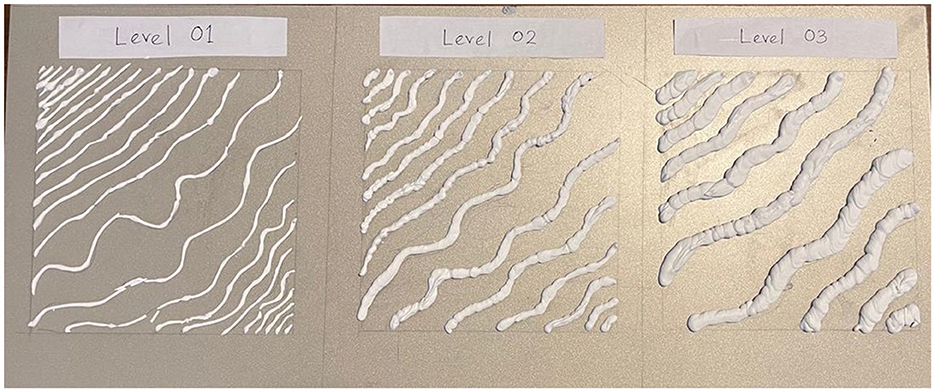

We recruited 20 visually impaired students (nine female, 11 male; mean age 24.85, SD 5.96) studying at the Center for Disability Research, Education, and Practice (CEDREP) at the University of Colombo. Participants were selected with “Severe visual impairment” (n = 12) or “Blindness” (n = 8) according to the WHO distance visual impairment categories (PAHO, 2024). The corresponding measurements on the LogMAR and Snellen scales (Solebo and Rahi, 2013) are shown in Table 3. Participants (n = 20) were randomly assigned to two equal groups, “Prototype” and “Control.” The Prototype group, the main focus of the study, interacted with the SEMA prototype, while the Control group received only a verbal description, simulating the typical way a visually impaired person would experience a painting.

3.3.2 Procedure

After receiving a detailed description of the painting and a briefing on the study, participants in the prototype group were allocated around 5 min to interact with the SEMA prototype (refer to Figure 7). Following the interaction, they completed a brief questionnaire consisting of seven quantitative Likert-scale questions (1 = lowest preference/agreement to 5 = highest preference/agreement) and eight open-ended questions to gather qualitative feedback. Additionally, demographic information such as age, sex, and visual impairment category was recorded.

4 Results

We used both quantitative and qualitative methods to assess participants' reactions to the prototype and to explore the insights obtained from their feedback. From the feedback we collected, we derived the following points about participants' perception of the sensory augmentation of the painting.

4.1 Quantitative findings

We tested the primary hypothesis using a 1-to-5 Likert-scale question that asked participants in both groups, “How do you like the overall experience?” A two-tailed independent samples t-test was conducted to compare responses between the Prototype and Control groups. Participants in the Prototype group (M = 4.24, SD = 0.97) reported significantly higher ratings than participants in the Control group (M = 2.71, SD = 0.99), t(18) = 3.74, p = 0.0015, indicating that the difference in experience ratings between the two groups was statistically significant.

These values suggest that interacting with the SEMA prototype substantially improved participants' overall experience compared with the traditional method of receiving only a verbal description. If there were no true difference between the groups, a difference at least this large would be expected in fewer than 0.15% of samples, which provides strong evidence against the null hypothesis of no difference. To assess the practical significance of the difference, we calculated Cohen's d after the t-test, obtaining a value of 1.67. This exceeds the conventional threshold for a large effect (d = 0.8), suggesting that the difference was practically meaningful as well as statistically significant.
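For reference, the pooled-variance (Student's) independent samples t-test and the pooled-standard-deviation form of Cohen's d are defined below, where x̄, s, and n denote each group's mean, standard deviation, and size (subscript 1 for the Prototype group, 2 for the Control group). The text does not state which variant of d was computed, so this is the common textbook definition rather than a record of the authors' calculation. With n₁ = n₂ = 10 the degrees of freedom are 18, as reported, and the last line shows how t and d are linked.

```latex
s_p = \sqrt{\frac{(n_1 - 1)\,s_1^2 + (n_2 - 1)\,s_2^2}{n_1 + n_2 - 2}}, \qquad
\nu = n_1 + n_2 - 2

t = \frac{\bar{x}_1 - \bar{x}_2}{s_p \sqrt{\frac{1}{n_1} + \frac{1}{n_2}}}, \qquad
d = \frac{\bar{x}_1 - \bar{x}_2}{s_p}

t = d \sqrt{\frac{n_1 n_2}{n_1 + n_2}}
```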

We also analyzed participant feedback on individual stimuli, asking participants to rate the intensity of, and their overall experience with, the tactile, heat, cold, and auditory stimuli. Heat received the highest average score of 4.30 (SD = 0.67), followed closely by sound with an average of 4.20 (SD = 0.79). Texture scored an average of 4.10 (SD = 0.88), and coldness an average of 3.90 (SD = 0.74). However, the differences between stimuli were not statistically significant, so these data do not indicate that participants favored one type of stimulus over another.

The final evaluation was done after the individual stimuli had been refined based on participant feedback. This refinement allowed us to identify optimal temperature levels, volume levels, and tactile line thickness, ultimately leading to a more optimized and positive experience.

4.2 Qualitative findings

Overall, we observed that participants enjoyed experiencing the prototype, and many described it in the questionnaire as engaging and realistic. Participants described their experience of SEMA as “novel” (P2), “interesting” (P15), “unique” (P6), “realistic, I really enjoyed it” (P11), and “exciting and a good experience” (P13). While the feedback was overwhelmingly positive, consistent with the quantitative results, there were also some critical voices, mainly because some sensory stimuli were not strong enough. Some participants liked one sensory stimulus more than another, highlighting that finding the right balance between stimuli is important for a better experience. All participants in the prototype group strongly agreed that stimulating multiple senses added another perspective to experiencing paintings and opened new ways of thinking about and interpreting art. P19 said: “It helped me to be close with the painting because when you touch it and feel the different sensations, you kind of begin to start creating an idea of what's actually going on in the painting or what the story is.” The findings suggest that stimulating multiple senses can be beneficial, but it should be done in a way that prevents one sense from overwhelming the others; otherwise, the excitement of one stimulus (such as the coldness of the river area in the painting) may overshadow the other cues.

The control group was also invited to interact with the prototype, and its members showed great interest in engaging with the contents of the painting. P1 noted, “The description helped to grasp an idea of what to expect, and also to find the objects that they heard.” This highlights the importance of providing a good description before participants experience a multi-sensory painting.

4.3 Summary of key findings

Overall, all participants expressed interest in encountering similar multi-sensory artworks in the future, along with curiosity about how the technology might evolve. The feedback also suggested that the optimal stimulus intensity is subjective, as variations were observed even within this study's small sample. Furthermore, the subjective nature of art calls for sensitivity to individual preferences and for adequate time to explore at each participant's own pace. This is illustrated by one participant's request to touch the artwork again after some time, possibly motivated by the novelty of the experience. When asked why she wanted to return, the participant cited the “addictive experience” of feeling the warmth in her hand. Such feedback underscores the value of studying user experiences over extended periods.

5 Discussion

The SEMA prototype served as a test bed for established theories in multisensory integration, such as sensory alignment (Marshall et al., 2019) and inverse effectiveness (Stein et al., 2009). Sensory alignment, which holds that sensory inputs should be harmonized to enhance perception, was key to our design: through iterative design, we carefully matched tactile and auditory cues to the artwork's features. Inverse effectiveness, the principle that multisensory enhancement is proportionally greatest when the individual stimuli are weak, was particularly evident in this context, as participants showed greater engagement when subtle touch and sound cues were combined.

5.1 Overall multi-sensory experience: immersive vs. distracting

While most participants felt that additional sensory stimuli did not change their initial impression of the artwork, some acknowledged the potential for deeper engagement. P6 remarked, “Visually impaired people are typically not interested in paintings because it's a visual art form; we only hear what's described to us. But SEMA allowed us to physically connect with a painting, which I never thought I'd be able to do.” This highlights how sensory augmentation can enhance the art experience by fostering personal connections. Feedback revealed a duality in the multi-sensory stimuli. While they helped focus attention on specific details, they could also be distracting, as mentioned in previous studies by Pursey and Lomas (2018) and Cavazos Quero et al. (2021). P5 mentioned, “I liked the painting but I was kind of disturbed by the switching of the sound,” while P13 expressed, “It's a funny thing trying to listen to the sound and touching and imagining because I was actively building a painting in my head as I run my fingers along the lines.” Regarding the haptic component, P2 shared, “Having never seen a fire or fireplace, I wanted to feel the warmth it gave me while understanding its shape. I feel like I now have knowledge about its exact shape, so when someone mentions a bonfire or fireplace, I know exactly what to imagine.” This emphasizes the importance of balancing subjective artistic expression with objective realism in multi-sensory art.

5.2 Balance in sensory design: curated vs. explorative

The impact of the sensory stimuli on each individual's experience was not always straightforward and was sometimes bipolar: multisensory augmentation of art can open up opportunities for interpretation, but it can also narrow the visitor's perspective. Before the interaction, participants were asked for consent to have their hands guided along the contours of the painting (refer to Figure 8). Most agreed, but they did not want assistance for the entire experience. Once they understood what to trace, their body language indicated that they could manage on their own, and some even said so explicitly. P9 said, “It felt like it was leading you somewhere because it was already a choice, it was another choice from someone else, so I felt like I was being guided into someone else's perspective.” Another participant stated, “I think it's interesting for us to experience the paintings this way, but I found it to be a little too directed.” This is similar to the findings of Pursey and Lomas (2018), where participants were asked about the flow of the experience. However, this attitude may partly reflect excitement at the novelty of the engagement. It highlights the need for future multi-sensory art installations and museum or art gallery settings to cater to a broader range of visitor expectations by offering varying degrees of guidance or introduction, potentially tailored to the specific artwork in question.

5.3 Integration of touch and sound

Current literature provides a rich foundation of theories and case studies supporting the notion that multi-sensory stimulation provides a more enriching experience than uni-sensory engagement. Consistent with this, participants described the experience as well balanced between touch and sound. P11 said: “I think the constant change in sound with respect to where you are, actually illustrates the picture. It kind of makes sense.” The sound was well integrated with the painting and emphasized its physicality, which in turn favored exploration by touch. A different outcome was observed in the study by Cavazos Quero et al. (2021), where sound was used to provide descriptions and Braille served as the tactile medium for conveying content. In contrast, SEMA employs a universal mode of communication that does not rely on language. Unlike the user feedback in Cavazos Quero et al. (2021), where some participants expressed dissatisfaction with the tactile elements, SEMA participants embraced the tactile drawings as a self-guiding aid that required no instruction set. Looking forward, precise tracking technology such as optical sensing could allow the system to follow the participant's finger directly, improving its accuracy and responsiveness without relying on body or face tracking.
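P11's remark about the sound changing with position suggests one way to think about location-dependent audio. The paper does not specify how SEMA mapped the tracked position to its ambient tracks, so the following is only a hypothetical sketch: the region names, centre coordinates, and the inverse-distance weighting are all assumptions. It takes a tracked point on the canvas (for example, a fingertip position from optical sensing) and returns a gain for each region's ambient track, normalised so the gains sum to 1.

```python
import math
from typing import Dict, Tuple

# Hypothetical region centres in normalised canvas coordinates (0..1).
# These are illustrative only, not SEMA's actual layout.
REGIONS: Dict[str, Tuple[float, float]] = {
    "fire": (0.25, 0.6),
    "river": (0.75, 0.4),
}


def mix_gains(x: float, y: float,
              regions: Dict[str, Tuple[float, float]] = REGIONS,
              power: float = 2.0, eps: float = 1e-6) -> Dict[str, float]:
    """Inverse-distance-weighted gain per ambient track, normalised to sum to 1.

    x, y  -- tracked position on the canvas (normalised coordinates)
    power -- how sharply a track fades as the point moves away from its region
    eps   -- avoids division by zero when the point sits exactly on a centre
    """
    weights = {}
    for name, (cx, cy) in regions.items():
        distance = math.hypot(x - cx, y - cy)
        weights[name] = 1.0 / (distance + eps) ** power
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


if __name__ == "__main__":
    # Moving a finger from the fire towards the river shifts the mix smoothly.
    for px in (0.25, 0.5, 0.75):
        gains = mix_gains(px, 0.5)
        print(px, {k: round(v, 2) for k, v in gains.items()})
```

Inverse-distance weighting gives a continuous crossfade rather than abrupt switching between tracks; an equal-power crossfade would be a reasonable alternative if perceived loudness should stay constant across the canvas.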

5.4 Somatosensory simulation to enhance art

Our findings revealed a significant influence of temperature variation on user-reported arousal levels. The arousal elicited by the gradient pattern of the heat actuators is thought to stem from its more natural feel, which was created using an array of resistors placed underneath. Future research could explore more efficient methods for inducing coldness, such as ThermoSurf (Peters et al., 2023), a thermal display technology capable of creating precise temperature variations that, when combined with tactile feedback, could represent both temperature and texture within the artwork. Furthermore, studies such as Metzger et al. (2018) highlight the need to examine finger movements to determine which object parts are most salient to touch, emphasizing the role of spatial features in tactile accuracy. Such an analysis could also guide the coordination of associated sensations, such as changes in ambient sound. As noted by Zimmer et al. (2020), the absence of a standard 'lexicon of touch' is relevant here too, because the perception of touch varies greatly between non-VI and VI individuals, and even among VI individuals themselves, since touch plays a critical role in their daily lives for exploring the world. However, the Zimmer et al. (2020) study does not account for the absence of vision, so future studies could examine how the perception of materials changes when the visitor has little or no visual experience.
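The gradient heat pattern is attributed to an array of resistors, but the drive scheme is not described. As a hedged sketch, assuming a hypothetical linear array of resistive heaters each driven by PWM, one way to produce a gradient rather than a single hot spot is to let the duty cycle fall off as a Gaussian around the warmest point. For a resistive load switched on and off by PWM, the average Joule heating is P = d · V²/R, where d is the duty cycle, V the supply voltage, and R the resistance. The array size, spread, duty cap, voltage, and resistance below are illustrative assumptions, not SEMA's hardware values.

```python
import math
from typing import List


def gradient_duty_cycles(n: int, hotspot: float, sigma: float,
                         max_duty: float = 0.8) -> List[float]:
    """PWM duty cycle for each resistor, falling off as a Gaussian around `hotspot`.

    n        -- number of resistors in a (hypothetical) linear array
    hotspot  -- position of the warmest point, in resistor-index units
    sigma    -- spread of the gradient, in resistor-index units
    max_duty -- assumed safety cap on the duty cycle of the warmest resistor
    """
    if n <= 0 or sigma <= 0:
        raise ValueError("n and sigma must be positive")
    return [max_duty * math.exp(-((i - hotspot) ** 2) / (2 * sigma ** 2))
            for i in range(n)]


def mean_power_watts(duty: float, supply_v: float, resistance_ohm: float) -> float:
    """Average Joule heating of one resistor under PWM: P = d * V^2 / R."""
    return duty * supply_v ** 2 / resistance_ohm


if __name__ == "__main__":
    # Illustrative values only: 7 heaters, warmest at the centre, 12 V supply, 24 ohm each.
    duties = gradient_duty_cycles(n=7, hotspot=3.0, sigma=1.5)
    for i, d in enumerate(duties):
        print(f"resistor {i}: duty {d:.2f}, ~{mean_power_watts(d, 12.0, 24.0):.2f} W")
```

A Gaussian is only one choice of falloff; the thermal response of the actual substrate and safe skin-contact temperature limits would need to be measured before settling on real values.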

5.5 Design considerations for multisensory art

A crucial consideration in designing multi-sensory art experiences is achieving optimal sensory alignment. The SEMA user study highlighted the importance of ensuring consistency between the artistic message and the chosen sensory stimuli (e.g., warmth for fire, coldness for water). However, the findings also emphasized the need for user agency. While some participants appreciated guided exploration, others expressed a desire for more control over their sensory journey. Future iterations should strive for a balance between offering a guiding framework and allowing for individual exploration.

One of the key strengths of the SEMA prototype lies in its innovative use of somatosensory and tactile elements. Traditionally, art appreciation has relied heavily on visual perception. By incorporating sound, touch, and temperature variations, SEMA offers a unique pathway for visually impaired individuals to engage with art through senses other than sight. This approach not only expands accessibility but may also open the door to a richer and more immersive art experience for all viewers.

6 Conclusion

Since traditional art relies on the sense of sight to convey the information and themes of an artwork to the public, it is hard for a visually impaired audience to grasp the ideas being presented. The user study highlighted that visually impaired people are often unfamiliar with how real-world objects are represented on a two-dimensional canvas, because there is nothing to touch. For this reason, every element in SEMA was outlined with raised plaster of Paris contour lines tracing the shapes in which such objects are usually drawn in a painting. Further, we explored ways to combine heat, cold, and sound to improve accessibility beyond an audio description of the painting, which people with moderate to severe visual impairments often struggle to grasp. The results showed significant improvements for SEMA over the traditional method, indicating that additional stimuli do play a role in increasing entertainment for the visually impaired. Participants correctly associated the heat and the crackling-wood sounds with the fire, and the coldness and humidity together with the running-water sound with the river. This also improved engagement. Users commented positively on the freedom of the self-paced engagement. Overall, the findings of this study suggest that the three sensory stimuli (somatosensory, auditory, and tactile) can work together to create a more comprehensive experience for the user and can partly compensate for the loss of vision when experiencing an artwork.

To address the limited generalizability of the findings to other contexts and populations, future studies could recruit a more diverse sample from multiple locations. To address the controlled environment of the user study, future work could involve field studies in various real-world scenarios to assess the usability and effectiveness of SEMA. Extending the area covered by the stimuli, integrating additional stimuli, and experimenting with different combinations of stimuli are further objectives for future work.

The authors are confident that the proposed solution holds promise for improving the accessibility of entertainment and art gallery experiences for the visually impaired community. Despite its limitations, this research contributes to the field of human-computer interaction and has the potential to benefit individuals with disabilities as well as the wider user population. The proposed approach can be further developed and refined to provide more inclusive and engaging experiences in various domains. It is hoped that the findings of this study will serve as a foundation for future research exploring the full potential of multi-sensory integration in entertainment and its impact on user experiences.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found at: https://github.com/heshanwee/sema-evaluation-app.

Ethics statement

The studies involving humans were approved by University of Colombo School of Computing—Ethics Review Committee. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

RW: Writing – original draft, Writing – review & editing. AM: Writing – original draft, Writing – review & editing. HW: Writing – original draft, Writing – review & editing. KK: Conceptualization, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Supervision, Validation, Writing – review & editing. DS: Conceptualization, Formal analysis, Funding acquisition, Investigation, Project administration, Resources, Supervision, Validation, Writing – review & editing. SA: Formal analysis, Funding acquisition, Investigation, Project administration, Resources, Supervision, Validation, Writing – review & editing. AC: Conceptualization, Funding acquisition, Investigation, Project administration, Resources, Supervision, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was supported by the University of Colombo School of Computing through the Funding Scheme Research Allocation for Research and Development under Grant UCSC/RF/MXR/GN001.

Acknowledgments

The authors would like to thank Mr Dushan Dinushka, an instructor at the University of Colombo, for his support in developing the hardware devices, and Mr Ashoka Weerawardena, instructor in Computer Technology (Special Needs) at the Center for Disability Research, Education and Practice (CEDREP), University of Colombo, for his invaluable support in conducting user studies and evaluations. The authors would also like to thank the students and staff members of CEDREP and the School of Computing at the University of Colombo for actively helping this research in various ways. Sincere appreciation is extended to all those who contributed their expertise and time to the success of this research project. Their diverse insights and substantial commitments have greatly enriched the work.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcomp.2024.1450799/full#supplementary-material

References

Allman, B. L., and Meredith, M. A. (2007). Multisensory processing in “unimodal” neurons: cross-modal subthreshold auditory effects in cat extrastriate visual cortex. J. Neurophysiol. 98, 545–549. doi: 10.1152/jn.00173.2007

Atkinson, S. A., and Kennedy, H. (2016). Inside-the-scenes: the rise of experiential cinema. Participation 13, 139–151.

Bernard, C., Monnoyer, J., Ystad, S., and Wiertlewski, M. (2022). “Eyes-off your fingers: Gradual surface haptic feedback improves eyes-free touchscreen interaction,” in Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems (New York, NY: ACM), 1–10. doi: 10.1145/3491102.3501872

Cabanillas, R. (2020). Mixing Music in Dolby Atmos. Available at: https://digitalcommons.csumb.edu/caps_thes_all/956/

Cavazos Quero, L., Iranzo Bartolomé, J., and Cho, J. (2021). Accessible visual artworks for blind and visually impaired people: comparing a multimodal approach with tactile graphics. Electronics 10:297. doi: 10.3390/electronics10030297

Chou, C.-H., Su, Y.-S., Hsu, C.-J., Lee, K.-C., and Han, P.-H. (2020). Design of desktop audiovisual entertainment system with deep learning and haptic sensations. Symmetry 12:1718. doi: 10.3390/sym12101718

Christidou, D., and Pierroux, P. (2019). Art, touch and meaning making: an analysis of multisensory interpretation in the museum. Mus. Manag. Curatorsh. 34, 96–115. doi: 10.1080/09647775.2018.1516561

Faustino, D. B., Gabriele, S., Ibrahim, R., Theus, A.-L., and Girouard, A. (2017). “Sensart demo: A multisensory prototype for engaging with visual art,” in Proceedings of the 2017 ACM International Conference on Interactive Surfaces and Spaces (New York, NY: ACM), 462–465. doi: 10.1145/3132272.3132290

Frid, E., Lindetorp, H., Hansen, K. F., Elblaus, L., and Bresin, R. (2019). “Sound forest: evaluation of an accessible multisensory music installation,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (New York, NY: ACM), 1–12. doi: 10.1145/3290605.3300907

Giordano, M., Hattwick, I., Franco, I., Egloff, D., Frid, E., Lamontagne, V., et al. (2015). “Design and implementation of a whole-body haptic suit for “ilinx”, a multisensory art installation,” in 12th International Conference on Sound and Music Computing (SMC-15), Ireland, July 30, 31 & August 1, 2015, Volume 1 (Kildare: Maynooth University), 169–175.

Gong, Z., Wang, R., and Xia, G. (2022). Augmented reality (AR) as a tool for engaging museum experience: a case study on Chinese art pieces. Digital 2, 33–45. doi: 10.3390/digital2010002

Guo, K., Fan, A., Lehto, X., and Day, J. (2021). Immersive digital tourism: the role of multisensory cues in digital museum experiences. J. Hosp. Tour. Res. 47:10963480211030319. doi: 10.1177/10963480211030319

Joy, A., and Sherry Jr, J. F. (2003). Speaking of art as embodied imagination: a multisensory approach to understanding aesthetic experience. J. Consum. Res. 30, 259–282. doi: 10.1086/376802

Kawashima, N. (2006). Audience development and social inclusion in Britain: tensions, contradictions and paradoxes in policy and their implications for cultural management. Int. J. Cult. Policy 12, 55–72. doi: 10.1080/10286630600613309

Kuhns, R. (2005). Decameron and the Philosophy of Storytelling: Author as Midwife and Pimp. New York, NY: Columbia University Press.

Lakatos, P., Chen, C.-M., O'Connell, M. N., Mills, A., and Schroeder, C. E. (2007). Neuronal oscillations and multisensory interaction in primary auditory cortex. Neuron 53, 279–292. doi: 10.1016/j.neuron.2006.12.011

Lannan, A. (2019). A virtual assistant on campus for blind and low vision students. J. Spec. Educ. Apprenticeship 8:n2. doi: 10.58729/2167-3454.1088

Marshall, J., Benford, S., Byrne, R., and Tennent, P. (2019). “Sensory alignment in immersive entertainment,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (New York, NY: ACM), 1–13. doi: 10.1145/3290605.3300930

Masal, K. M., Bhatlawande, S., and Shingade, S. D. (2024). Development of a visual to audio and tactile substitution system for mobility and orientation of visually impaired people: a review. Multimed. Tools Appl. 83, 20387–20427. doi: 10.1007/s11042-023-16355-0

Matos, A., Rocha, T., Cabral, L., and Bessa, M. (2015). Multi-sensory storytelling to support learning for people with intellectual disability: an exploratory didactic study. Procedia Comput. Sci. 67, 12–18. doi: 10.1016/j.procs.2015.09.244

McMillen, R. (2015). Museum Disability Access: Social Inclusion Opportunities Through Innovative New Media Practices. Fresno Pacific University. Available at: https://fpuscholarworks.fresno.edu/items/c73a0098-8d5a-426b-8799-29d8b8b264f1

Metzger, A., Toscani, M., Valsecchi, M., and Drewing, K. (2018). “Haptic saliency model for rigid textured surfaces,” in Haptics: Science, Technology, and Applications: 11th International Conference, EuroHaptics 2018, Pisa, Italy, June 13-16, 2018, Proceedings, Part I 11 (Cham: Springer), 389–400. doi: 10.1007/978-3-319-93445-7_34

Obrist, M., Gatti, E., Maggioni, E., Vi, C., and Velasco, T. C. (2017). Multisensory experiences in HCI. IEEE MultiMedia 24, 9–13. doi: 10.1109/MMUL.2017.33

PAHO (2024). Visual Health – paho.org. Available at: https://www.paho.org/en/topics/visual-health (accessed September 26, 2024).

Panofsky, E. (2018). Studies in iconology: humanistic themes in the art of the Renaissance. London: Routledge. doi: 10.4324/9780429497063

Peters, L., Serhat, G., and Vardar, Y. (2023). ThermoSurf: thermal display technology for dynamic and multi-finger interactions. IEEE Access 11, 12004–12014. doi: 10.1109/ACCESS.2023.3242040

Pursey, T., and Lomas, D. (2018). Tate sensorium: an experiment in multisensory immersive design. Senses Soc. 13, 354–366. doi: 10.1080/17458927.2018.1516026

Rieger, J., and Chamorro-Koc, M. (2022). “A multisensorial storytelling design strategy to build empathy and a culture of inclusion,” in Transforming our World through Universal Design for Human Development (Amsterdam: IOS Press), 408–415. doi: 10.3233/SHTI220867

Solebo, A., and Rahi, J. (2013). Epidemiology, aetiology and management of visual impairment in children. Arch. Dis. Child. 99, 375–379. doi: 10.1136/archdischild-2012-303002

Solves, J., Sánchez-Castillo, S., and Siles, B. (2022). Step right up and take a whiff! Does incorporating scents in film projection increase viewer enjoyment? Stud. Eur. Cinema 21, 4–17. doi: 10.1080/17411548.2022.2064155

Stein, B. E., Stanford, T. R., Ramachandran, R., Perrault, T. J., and Rowland, B. A. (2009). Challenges in quantifying multisensory integration: alternative criteria, models, and inverse effectiveness. Exp. Brain Res. 198, 113–126. doi: 10.1007/s00221-009-1880-8

Stevenson, R. A., Ghose, D., Fister, J. K., Sarko, D. K., Altieri, N. A., Nidiffer, A. R., et al. (2014). Identifying and quantifying multisensory integration: a tutorial review. Brain Topogr. 27, 707–730. doi: 10.1007/s10548-014-0365-7

Vaz, R., Freitas, D., and Coelho, A. (2020). Blind and visually impaired visitors' experiences in museums: increasing accessibility through assistive technologies. Int. J. Incl. Mus. 13:57. doi: 10.18848/1835-2014/CGP/v13i02/57-80

Velasco, C., and Obrist, M. (2021). Multisensory experiences: a primer. Front. Comput. Sci. 3:12. doi: 10.3389/fcomp.2021.614524

Velasco, C., Tu, Y., and Obrist, M. (2018). “Towards multisensory storytelling with taste and flavor,” in Proceedings of the 3rd International Workshop on Multisensory Approaches to Human-Food Interaction (New York, NY: ACM), 1–7. doi: 10.1145/3279954.3279956

Verma, P., Basica, C., and Kivelson, P. D. (2020). Translating paintings into music using neural networks. arXiv [Preprint]. arXiv:2008.09960. doi: 10.48550/arXiv.2008.09960

Vi, C. T., Ablart, D., Gatti, E., Velasco, C., and Obrist, M. (2017). Not just seeing, but also feeling art: mid-air haptic experiences integrated in a multisensory art exhibition. Int. J. Hum. Comput. Stud. 108, 1–14. doi: 10.1016/j.ijhcs.2017.06.004

Vovk, A., Wild, F., Guest, W., and Kuula, T. (2018). “Simulator sickness in augmented reality training using the Microsoft HoloLens,” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (New York, NY: ACM), 1–9. doi: 10.1145/3173574.3173783

Vuilleumier, P., and Driver, J. (2007). Modulation of visual processing by attention and emotion: windows on causal interactions between human brain regions. Philos. Trans. R Soc. Lond. B Biol. Sci. 362, 837–855. doi: 10.1098/rstb.2007.2092

West, T. J., Bachmayer, A., Bhagwati, S., Berzowska, J., and Wanderley, M. M. (2019). “The design of the body: suit: score, a full-body vibrotactile musical score,” in Human Interface and the Management of Information. Information in Intelligent Systems: Thematic Area, HIMI 2019, Held as Part of the 21st HCI International Conference, HCII 2019, Orlando, FL, USA, July 26-31, 2019, Proceedings, Part II 21 (Cham: Springer), 70–89. doi: 10.1007/978-3-030-22649-7_7

Yu, S. (2022). The research on the characteristics and forms of immersive experience in art exhibitions–take “Van Gogh–The Immersive Experience” as an example. J. Educ. Humanit. Soc. Sci. 6, 154–159. doi: 10.54097/ehss.v6i.4417

Yuan, Y., Keda, X., Yueqi, N., and Xiangyu, Z. (2020). “Design and production of digital interactive installation for the cultural theme of the Belt and Road Initiative,” in Journal of Physics: Conference Series, Volume 1627 (Bristol: IOP Publishing), 012003. doi: 10.1088/1742-6596/1627/1/012003

Zhou, Z., Cheok, A. D., Liu, W., Chen, X., Farbiz, F., Yang, X., et al. (2004). Multisensory musical entertainment systems. IEEE MultiMedia 11, 88–101. doi: 10.1109/MMUL.2004.13

Keywords: assistive technologies, multi-sensory, visual impairment, entertainment, somatosensory, tactile, auditory

Citation: Welewatta R, Maithripala A, Weerasinghe H, Karunanayake K, Sandaruwan D, Arachchi SM and Cheok AD (2024) SEMA: utilizing multi-sensory cues to enhance the art experience of visually impaired students. Front. Comput. Sci. 6:1450799. doi: 10.3389/fcomp.2024.1450799

Received: 25 June 2024; Accepted: 29 October 2024;

Published: 18 November 2024.

Edited by:

Sebastien Fiorucci, Université Côte d'Azur, France

Reviewed by:

Luis Manuel Mota de Sousa, Universidade Atlântica, Portugal

Corentin Bernard, FRE2006 Perception, Représentations, Image, Son, Musique (PRISM), France

Copyright © 2024 Welewatta, Maithripala, Weerasinghe, Karunanayake, Sandaruwan, Arachchi and Cheok. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Akila Maithripala, a2lsQHVjc2MuY21iLmFjLmxr
