ORIGINAL RESEARCH article

Front. Comput. Sci., 11 June 2024

Sec. Mobile and Ubiquitous Computing

Volume 6 - 2024 | https://doi.org/10.3389/fcomp.2024.1394397

This article is part of the Research Topic Wearable Computing, Volume II

This work described a novel non-contact, wearable, real-time eye blink detection solution based on capacitive sensing technology. A custom-built prototype employing low-cost and low-power consumption capacitive sensors was integrated into standard glasses, with a copper tape electrode affixed to the frame. The blink of an eye induces a variation in capacitance between the electrode and the eyelid, thereby generating a distinctive capacitance-related signal. By analyzing this signal, eye blink activity can be accurately identified. The effectiveness and reliability of the proposed solution were evaluated through five distinct scenarios involving eight participants. Utilizing a user-dependent detection method with a customized predefined threshold value, an average precision of 92% and a recall of 94% were achieved. Furthermore, an efficient user-independent model based on the two-bit precision decision tree was further applied, yielding an average precision of 80% and an average recall of 81%. These results demonstrate the potential of the proposed technology for real-world applications requiring precise and unobtrusive eye blink detection.

Eye blink rate is a versatile indicator that offers valuable insights into various aspects of human cognition and wellbeing. Extensive research has highlighted its significance in gauging fatigue levels, mental workload, and overall cognitive functioning (Martins and Carvalho, 2015; Faure et al., 2016; Haq and Hasan, 2016; Jongkees and Colzato, 2016). For instance, the prevalence of computer vision syndrome underscores the importance of monitoring eye fatigue to mitigate the adverse effects of prolonged screen exposure, as outlined by Divjak and Bischof (2009). With eye blink monitoring, timely alerts can be issued to prevent or alleviate eye strain, thus promoting healthier habits. Moreover, eye blinks hold promise as an input method in human-computer interaction, particularly for individuals with motor impairments, providing them with new opportunities to engage with digital interfaces. This interdisciplinary interest in eye blink detection extends to emerging technologies such as virtual reality and augmented reality, where robust detection mechanisms are essential for enhancing user experiences (Królak and Strumiłło, 2012; Kumar and Sharma, 2016). As such, understanding and harnessing the nuances of eye blink behavior has far-reaching implications across various domains, from healthcare and assistive technology to immersive digital environments.

Many solutions have been proposed for eye blink detection, and they can be grouped into computer vision-based and sensor-based approaches. Computer vision-based techniques are a popular sensing method in the contemporary literature on eye gesture recognition (Al-gawwam and Benaissa, 2018; de Lima Medeiros et al., 2022; Youwei, 2023; Zeng et al., 2023). These techniques capture images of the eyes and extract eye blink features such as markers (Kraft et al., 2022; Youwei, 2023); given high-quality images, deep learning models can often achieve high accuracy. However, for sustained real-world use, vision-based methods are limited by image quality (e.g., lighting conditions, stability), usability (e.g., users must photograph their face), and privacy concerns (especially for automatic photo-taking methods). In addition, the power consumed by image capture and processing hinders long-term use in the wild. Some of these disadvantages can be overcome by sensor-based methods such as acoustic sensing (Liu et al., 2021), EEG (electroencephalogram) (Ko et al., 2020), and radar (Zhang et al., 2023). However, these sensor-based solutions lack either practicality, making long-term, real-world eye blink detection infeasible, or robustness, for example because of limited sensing angles or uncomfortable configurations that require skin contact.

To address these sensing limitations, we propose a non-contact eye blink detection solution based on capacitive sensing, a technique widely used in human activity recognition (Bian et al., 2024). Capacitive sensing measures the variation in capacitance that occurs when the distance between the electrode and an object, or the surface of the object, changes. To detect eye blinks, a copper electrode of the capacitive sensor is placed near the eye; the movement of the eyelid during a blink alters the distance between the electrode and the eyelid, producing a detectable change in capacitance. The solution was demonstrated in a series of experiments covering both static and dynamic body states. With the capacitive sensing unit deployed on a pair of standard glasses, we achieved 92% precision and 94% recall with a user-dependent model using a customized predefined threshold value. In addition, an efficient user-independent model based on a two-bit precision decision tree yielded an average precision of 80% and an average recall of 81%. This user-independent model is a major extension of our previous work (Liu et al., 2022).

Overall, we have the following contributions from this work:

1. A general concept of using capacitive sensing for eye blink detection in a non-contact way is proposed.

2. A wearable, low-power eye blink detection system that showcases both robustness and accuracy in five scenarios was developed.

3. An efficient and low-latency detection method based on a decision tree was implemented to demonstrate the efficiency of the proposed solution for eye blink detection.

Existing eye blink detection approaches fall mainly into computer vision-based and sensor-based solutions, as listed in Table 1. Vision solutions capture facial landmarks and extract eye blink features with a deep convolutional model. Such systems provide high detection accuracy and real-time inference, benefiting from advances in hardware resources and feature-extracting deep neural network models (Soukupova and Cech, 2016; Baccour et al., 2019; Youwei, 2023). However, they are constrained in practical scenarios. Firstly, environmental conditions such as lighting can cause a sharp drop in accuracy. Secondly, in many everyday situations no camera is available when eye blink detection is needed. Thirdly, vision solutions do not preserve privacy, which results in a low acceptance rate among users. These disadvantages can be better addressed by sensor-based solutions. For example, Liu et al. (2021) proposed “BlinkListener” to sense the subtle eye blink motion using acoustic signals in a contact-free manner and demonstrated its robustness on the Bela platform as well as a smartphone, with a median detection accuracy of 95%. Luo et al. (2020) introduced Eslucent, an eyelid interface for detecting eye blinks that exploits the movement of the eyelid during a blink: with an on-skin electrode deployed on the eyelid, the capacitance formed by the electrode and the eyelid varies. Their experiments show that Eslucent achieves an average precision of 82.6% and a recall of 70.4%. Other sensor-based approaches have also been proposed, such as Doppler sensors (Kim, 2014), EEG signals (Ko et al., 2020), and mmWave sensing (Shu et al., 2022).
However, these sensor-based solutions lack either practicality, meaning that long-term, in-the-wild eye blink detection is not possible, or robustness, meaning that dynamic environmental conditions limit the usage scenarios. For example, the angular sensing range of “BlinkListener” is limited, and EEG and “Eslucent” need special head or face preparation before detection. To address these issues, we propose a non-contact eye blink detection solution based on capacitive sensing.

Capacitive-based proximity sensing, a technique reliant on the fluctuations in the electric field surrounding electrodes due to environmental factors, has emerged as a versatile method with applications across numerous domains (Bian et al., 2024). This method has been extensively studied and categorized into three distinct modes based on electrode configuration (single or paired) and signal type (varying or static): transmit mode (Ye et al., 2018), shunt mode (Porins and Apse-Apsitis, 2019), and load mode (Grosse-Puppendahl et al., 2013). These modes provide a framework for perceiving proximity to surrounding objects by precisely measuring changes in capacitance, offering valuable insights into the spatial relationships between objects and their environment.

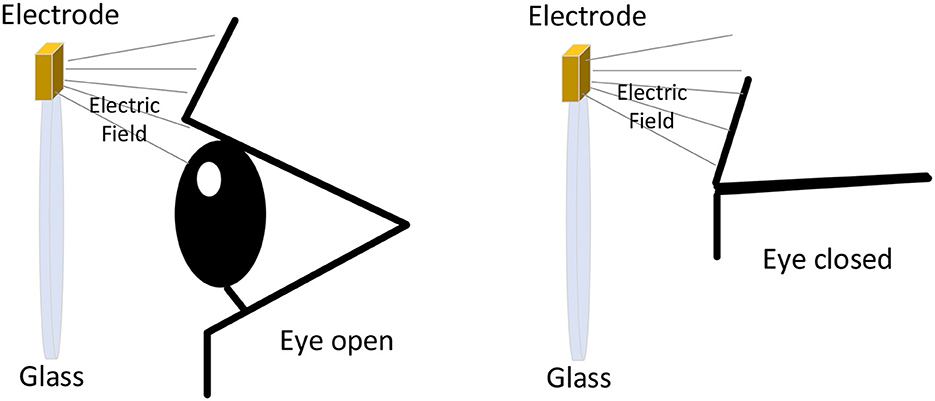

In this work, we present a novel application of capacitive sensing technology aimed at detecting eye blinking. Our capacitive sensing unit comprises a single electrode operating in the load mode, specifically designed for integration into a glass frame. As depicted in Figure 1, the electrode is charged, generating an electric field in its immediate vicinity. The activity of eye blinking, characterized by the movement of the upper eyelid, alters the distance between the electrode and the eyelid, consequently disrupting the distribution of the electric field. Through continuous monitoring of the capacitance variation, our system can effectively detect these subtle changes, providing a reliable means of identifying eye blinks in real-time. The inherent conductivity of the human body presents an intriguing opportunity within capacitive proximity sensing systems. By considering the entire body or specific body parts as conductive intrusions, a wide array of movement types can be accurately sensed. This capability extends beyond traditional inertial sensor units, as highlighted in studies (Grosse-Puppendahl et al., 2013; Ye et al., 2018; Porins and Apse-Apsitis, 2019), ushering in a new branch of wearable motion sensing modalities.

Figure 1. Capacitance between the electrode and the eye in different states (as the eyelid closes, the distance between the eyelid and the electrode increases, leading to a reduction in capacitance and a subsequent rise in resonant frequency, as shown in this figure).

As the direct measurement of body-area capacitance or its variation poses challenges compared to parameters like voltage signals, researchers have delved into indirect measurement solutions. Among these, two primary methods have emerged: the charge-based approach and the frequency-based approach. The former entails monitoring body-area capacitance by detecting variations in charge resulting from voltage signals (Braun et al., 2011; Bian et al., 2019). However, this method typically necessitates preprocessing steps such as signal amplification or high-resolution sampling to accurately capture subtle fluctuations in the raw signal. In contrast, the frequency-based method records body-area capacitance by measuring frequency changes induced by variations in capacitance within an oscillating circuit, facilitated by a frequency counter (Grosse-Puppendahl et al., 2012; Bian and Lukowicz, 2021).

Taking into account factors such as cost-effectiveness and simplicity, we have chosen to adopt the frequency-based solution for eye-blink detection. In our setup, an LC tank oscillator is employed, with frequency measurement and digitization handled by the FDC2214 chip. This chip not only drives the LC tank to oscillate but also offers four channels for monitoring capacitance variations. However, for the scope of this study, we focus on utilizing only one channel. Nevertheless, our ongoing research endeavors will explore the potential of leveraging multiple channels to enhance the detection of eye activities.

Within the LC tank oscillator setup, any alteration in capacitance is reflected as a shift in the resonant frequency. Rather than retroactively computing capacitance variation values, our analysis directly employs frequency data for the abstraction of eye blink activity. This streamlined approach not only simplifies the detection process but also enhances the system's efficiency and accuracy in discerning eye blinks within dynamic environmental contexts.
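The link between capacitance and resonant frequency in an ideal LC tank is f = 1 / (2π√(LC)), so a small capacitance change ΔC shifts the frequency by roughly −f·ΔC / (2C). The following minimal sketch evaluates both the exact and the first-order shift. The inductance, capacitance, and ΔC values are illustrative assumptions and are not reported in this work.

```python
import math


def resonant_frequency(inductance_h: float, capacitance_f: float) -> float:
    """Resonant frequency in Hz of an ideal LC tank: f = 1 / (2*pi*sqrt(L*C))."""
    return 1.0 / (2.0 * math.pi * math.sqrt(inductance_h * capacitance_f))


# Illustrative component values (assumed, not taken from the text).
L_TANK = 18e-6        # tank inductance in henries
C_TANK = 33e-12       # tank capacitance in farads
DELTA_C = -0.05e-12   # hypothetical capacitance drop as the eyelid moves away

f_base = resonant_frequency(L_TANK, C_TANK)
f_shifted = resonant_frequency(L_TANK, C_TANK + DELTA_C)

# First-order approximation of the shift: df ≈ -f * dC / (2 * C)
approx_shift = -f_base * DELTA_C / (2.0 * C_TANK)

print(f"baseline frequency: {f_base / 1e6:.3f} MHz")
print(f"exact shift: {f_shifted - f_base:.1f} Hz")
print(f"first-order shift: {approx_shift:.1f} Hz")
```

A lower capacitance gives a higher frequency, which is why working directly on the frequency stream preserves the sign and timing of the blink-induced change.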

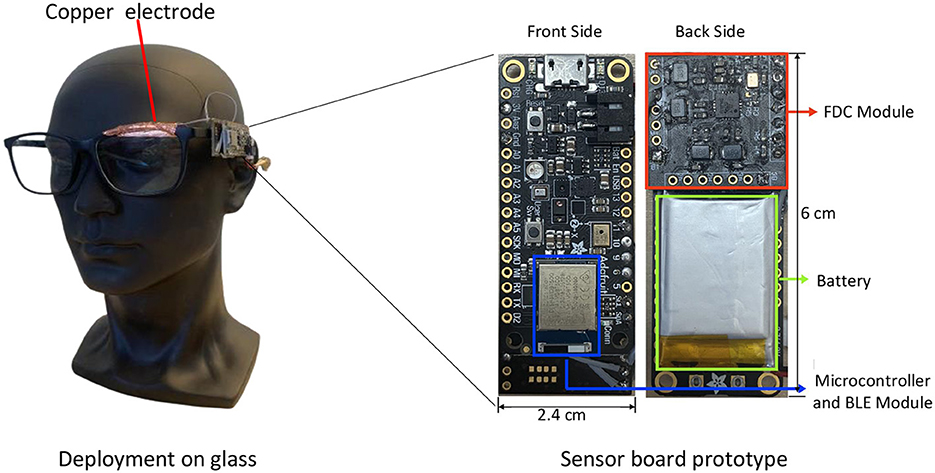

In addition to the sensing unit, the hardware platform incorporates an nRF52840 microcontroller for real-time eye blink extraction, which also provides Bluetooth transmission for offline data presentation. A compact lithium battery with a 300 mAh capacity powers the system, providing approximately 20 hours of continuous in-the-wild eye blink detection. The electrode is simply a piece of copper tape, around 3 cm long and without any special treatment, affixed to the upper frame of a standard pair of glasses. The electrode does not have a regular shape; it follows the upper frame of the glasses. The entire hardware prototype weighs only around 18 grams, so users hardly notice it while wearing the glasses. In practical applications, the battery and the PCB can be placed one on each temple of the glasses, which distributes the weight evenly across the wearer's ears for a more comfortable wearing experience. Figure 2 illustrates the hardware platform and its integration into the glasses.

Figure 2. Hardware prototype and its deployment on the glasses. Copper tape affixed to the glasses frame serves as the sensing electrode. The sensor board includes the microcontroller, Bluetooth interface, FDC module, and battery. The FDC module belongs to a multi-channel family of noise- and EMI-resistant, high-resolution, high-speed capacitance-to-digital converters for implementing capacitive sensing solutions.

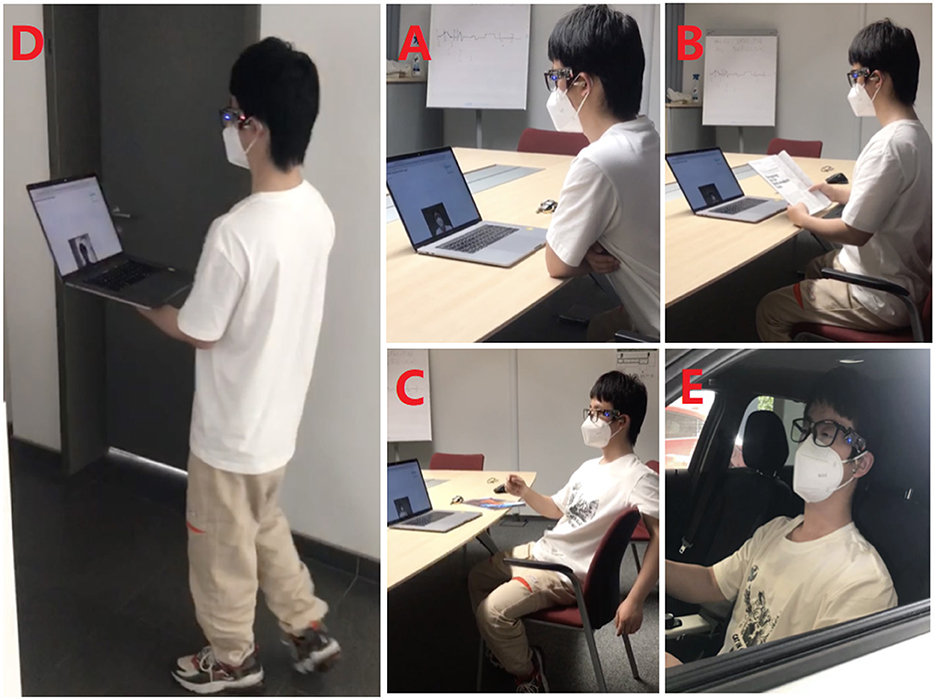

To comprehensively evaluate the proposed eye blink detection approach and hardware prototype, a series of five experiments were designed, each representing different daily activities. These experiments aimed to assess both the practicality and robustness of the system under various conditions. As illustrated in Figure 3, the experiments covered a spectrum of scenarios, including intentional and involuntary blink occurrences.

Figure 3. Five eye blink detection scenarios: (A) intentional blink; (B) involuntary blink while reading a book; (C) involuntary blink while talking (replaced by reading news aloud in some sessions); (D) involuntary blink while walking around; (E) involuntary blink while sitting in the car cockpit simulating the driving activity.

Due to safety issues, the scenario involving the car cockpit was conducted in a controlled environment, namely a parking lot, where participants simulated driving activities. Similarly, to simulate conversation scenarios, volunteers read random news articles aloud in the absence of a predefined conversational topic. These adjustments ensured a diverse range of activities were captured, mirroring real-world situations as closely as possible.

Eight volunteers, five males and three females aged between 24 and 32, were invited to participate in the experiments. Each scenario lasted approximately eight minutes, with data sampled at a rate of 60 Hz. To facilitate data capture and analysis, a custom web application was developed in JavaScript. The application is composed of four primary components: a data receive module, a plot module, a video record module, and a button. The data receive module captures raw sensor data from the microcontroller through the Bluetooth interface and stores it locally on the laptop. The plot module visualizes the raw data in real time. The video record module activates the laptop's camera to record the volunteer's face and eyes throughout the experiment, providing the ground truth for eye blink occurrences. The button is used to provisionally mark instances when an eye blink is observed; these instances are then definitively labeled based on the video data. The application thus enabled real-time visualization, storage, and labeling of the transmitted data. With these experiments and this data collection and analysis approach, we aimed to thoroughly evaluate the performance and reliability of our eye blink detection system across a range of real-world scenarios.

The findings from the study conducted by Kwon et al. (2013) shed light on the temporal dynamics of voluntary blinks, revealing that the closing phase lasts approximately 100 ms, succeeded by an opening phase of about 200 ms until 97 percent recovery of the eye's opening. This delineation of blink phases underscores the significance of timing in blink detection, with capacitance variation primarily occurring during these dynamic phases. The static closed and open phases of the eye exhibit minimal effect on capacitance, as the distance between the electrode and the eyelid remains constant.

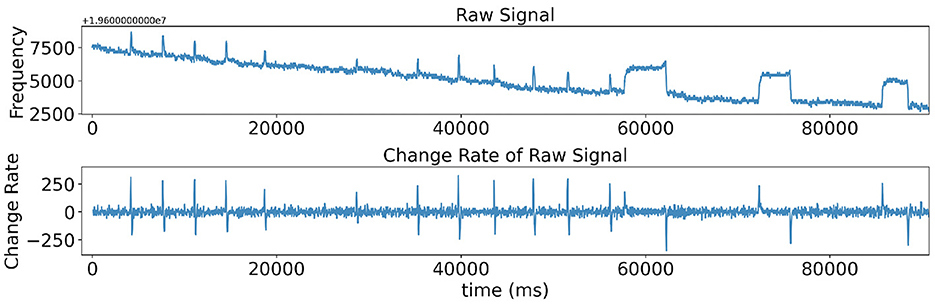

To facilitate real-time blink detection, we employed a one-second sliding window with a half-second overlap, providing a comprehensive temporal context for analysis. Initially, a sliding mean filtering with a window size of 5 was applied to the raw data to mitigate inherent noise interference. Subsequent computation of data variation involved simple subtraction of consecutive raw data points according to the equation in line 8 from Algorithm 1, effectively isolating blink-related features and eliminating environmental electric field drift. Figure 4 provides a visual representation of this process, showing the raw data stream alongside its corresponding variation stream, wherein each peak denotes a blink action, with positive peaks signifying the closing phase and negative peaks denoting the opening phase.
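The preprocessing steps described above can be sketched as follows, using only the Python standard library. The sampling rate, window length, overlap, and filter size come from the text; the function names are hypothetical, and the sketch assumes the difference is taken on the smoothed signal and that the mean filter is a trailing one, neither of which is fully specified.

```python
from typing import Iterator, List

SAMPLE_RATE_HZ = 60            # sampling rate stated in the text
WINDOW = SAMPLE_RATE_HZ        # one-second analysis window
HOP = SAMPLE_RATE_HZ // 2      # half-second overlap, so the window advances 30 samples


def moving_mean(samples: List[float], size: int = 5) -> List[float]:
    """Trailing moving average; the first outputs average the samples seen so far."""
    out = []
    running = 0.0
    for i, x in enumerate(samples):
        running += x
        if i >= size:
            running -= samples[i - size]   # drop the sample that left the window
        out.append(running / min(i + 1, size))
    return out


def first_difference(samples: List[float]) -> List[float]:
    """Variation between consecutive samples; a slow drift becomes a small offset."""
    return [b - a for a, b in zip(samples, samples[1:])]


def sliding_windows(samples: List[float], window: int = WINDOW,
                    hop: int = HOP) -> Iterator[List[float]]:
    """Yield overlapping windows of `window` samples, advancing by `hop`."""
    for start in range(0, len(samples) - window + 1, hop):
        yield samples[start:start + window]


# Synthetic example: a slow linear drift with a step at sample 90.
raw = [0.5 * n + (200.0 if n >= 90 else 0.0) for n in range(150)]
variation = first_difference(moving_mean(raw))
for index, win in enumerate(sliding_windows(variation)):
    print(index, round(max(win), 2))
```

In the synthetic example the drift contributes a constant 0.5 per sample to the variation, while the step produces a short, much larger impulse, which is the property the detection step relies on.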

Figure 4. Raw signal and signal variation between two continuous raw data (the last three square waves in the raw signal stream indicate that the prototype also detected the duration of the eye-closing state, which will be explored in the future).

For blink detection, we established a predefined threshold against which the signal variation was compared, enabling the identification of positive impulses indicative of blink events. The full eye blink detection procedure can be found in Algorithm 1, which is mainly designed to detect the signal change rate caused by an eye blink.
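As a rough illustration of threshold-based detection on the variation stream, the sketch below reports a blink at each upward crossing of the threshold. It is not a reproduction of Algorithm 1: the function name, the rising-edge rule, and the refractory period of 18 samples (about 0.3 s at 60 Hz, chosen to match the blink duration cited above) are assumptions.

```python
from typing import List


def detect_blinks(variation: List[float], threshold: float,
                  refractory: int = 18) -> List[int]:
    """Return sample indices where the variation rises above `threshold`.

    Only positive impulses are considered. A new event is accepted only if
    at least `refractory` samples have passed since the previous event
    (an assumed safeguard against counting one blink twice).
    """
    events: List[int] = []
    previous = float("-inf")
    for i, value in enumerate(variation):
        rising = previous <= threshold < value
        if rising and (not events or i - events[-1] >= refractory):
            events.append(i)
        previous = value
    return events


# Synthetic variation stream: two positive impulses and one negative impulse.
stream = [0.0] * 10 + [5.0, 6.0, 1.0] + [0.0] * 15 + [-8.0] + [0.0] * 14 + [7.0] + [0.0] * 5
print(detect_blinks(stream, threshold=3.0))
```

Because the threshold is compared against the variation and not the raw signal, it has to be scaled to each wearer's impulse amplitude, which is what makes this method user-dependent.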

To establish a baseline for the subsequent involuntary blink experiments, we began with an intentional eye blink experiment. Volunteers were instructed to sit in front of a laptop while wearing the provided glasses and to deliberately blink their eyes for approximately eight minutes. The laptop received and plotted the raw data stream transmitted from the hardware prototype deployed on the glasses, while concurrently recording video to facilitate labeling of ground truth eye blink occurrences. The average blinking frequency observed during the intentional blinking experiment was approximately ten times per minute.

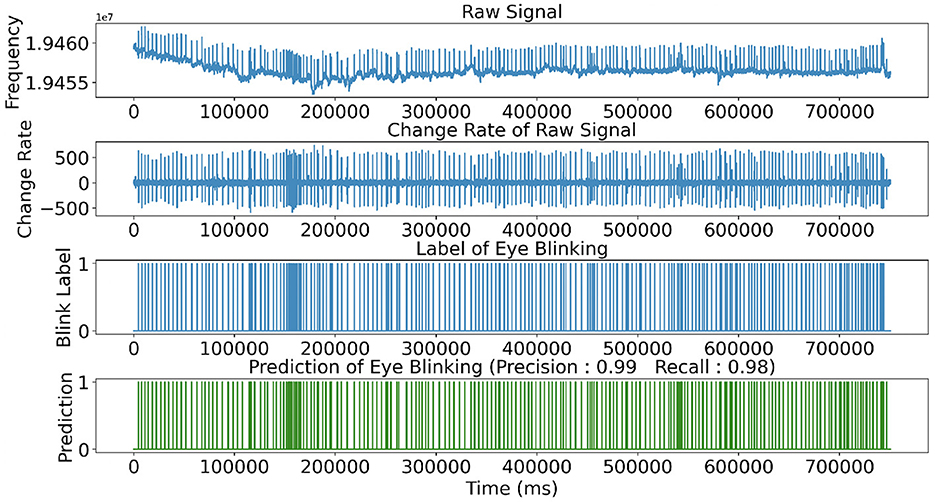

Figure 5 shows the raw signal and its variation during intentional blinking, alongside the ground truth and detected blinks (highlighted in green) from one of the volunteers. The drift observed in the raw signal is attributed to fluctuations in capacitance between the electrode and the environment, such as those caused by head movements. Despite these variations, the distinctive impulses generated by eye blinks remained discernible due to the rapidity of blink events and the significant rate of change in capacitance associated with eye blinks.

Figure 5. Raw signal and signal variation of the intentional blink scenario together with the ground truth and the detected blinks (green).

By comparing the signal variation with a predefined threshold, we achieved a precision of 0.99 and a recall of 0.98 for this volunteer. Averaged over all volunteers, intentional blink detection yielded a precision of 0.93 and a recall of 0.94, indicating that the detection approach is robust and effective in the intentional blinking scenario.
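Precision and recall for event detection depend on how detected blinks are paired with labeled ones. The sketch below shows one common way to do this, a greedy one-to-one match within a time tolerance. The matching rule and the tolerance of 30 samples are assumptions, since the text does not state how detections were aligned with the video labels.

```python
from typing import List, Tuple


def match_events(detected: List[int], truth: List[int],
                 tolerance: int) -> Tuple[int, int, int]:
    """Greedily pair detections with labels that lie within `tolerance` samples.

    Returns (true_positives, false_positives, false_negatives).
    """
    detected = sorted(detected)
    truth = sorted(truth)
    tp = 0
    i = j = 0
    while i < len(detected) and j < len(truth):
        if abs(detected[i] - truth[j]) <= tolerance:
            tp += 1
            i += 1
            j += 1
        elif detected[i] < truth[j]:
            i += 1      # detection with no label nearby: false positive
        else:
            j += 1      # label with no detection nearby: false negative
    return tp, len(detected) - tp, len(truth) - tp


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """precision = TP / (TP + FP), recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical sample indices for illustration only.
detected_blinks = [100, 400, 650, 900]
labeled_blinks = [105, 410, 1200]
counts = match_events(detected_blinks, labeled_blinks, tolerance=30)
print(counts, precision_recall(*counts))
```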

To showcase the practical applicability of the proposed prototype in real-life scenarios, such as reading and talking, all volunteers participated in four everyday activities encompassing both static and dynamic body states. These activities aimed to evaluate the prototype's performance across practical scenarios.

A simulated driving scenario was conducted in a parking lot, recognizing the effectiveness of involuntary eye blink monitoring for indicating fatigue during driving, as demonstrated in previous research (Wang et al., 2006). In the talking scenario, volunteers engaged in conversation with the operator or read aloud from a random news article while wearing the glasses. The reading activity involved participants reading from a paper, book, or smartphone in silence. For the walking scenario, volunteers donned the glasses and walked inside the office building, moving in random directions to assess the prototype's robustness across different environmental conditions.

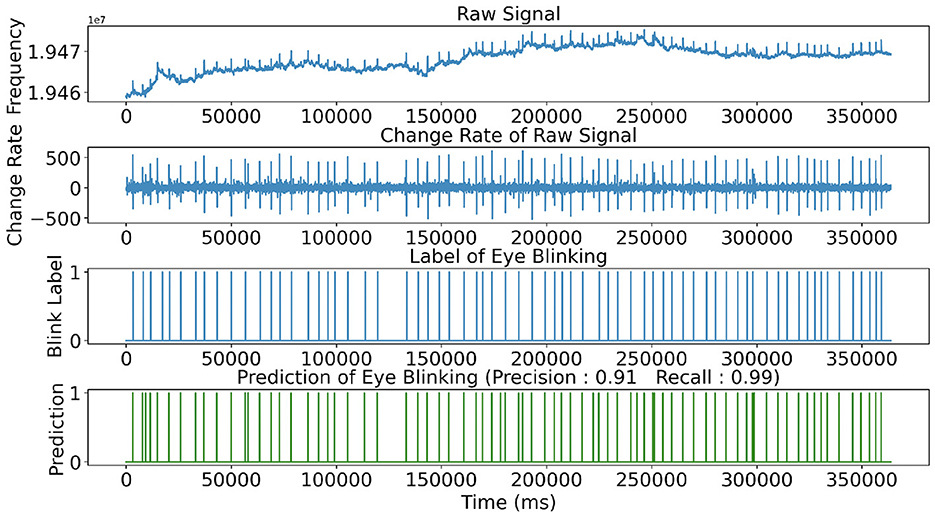

Figure 6 shows the signals, labels, and detected blinks recorded during the walking scenario of one volunteer. Notably, eye blink actions remained clearly discernible in the signal variation stream, with an impressive precision of 0.91 and recall of 0.99 achieved using the proposed positive peak detection approach. Similar levels of performance were observed across the other three explored daily activities, further validating the effectiveness and reliability of our prototype in diverse real-life scenarios.

Figure 6. Raw signal and signal variation of the walking scenario together with the ground truth and the detected blink (green).

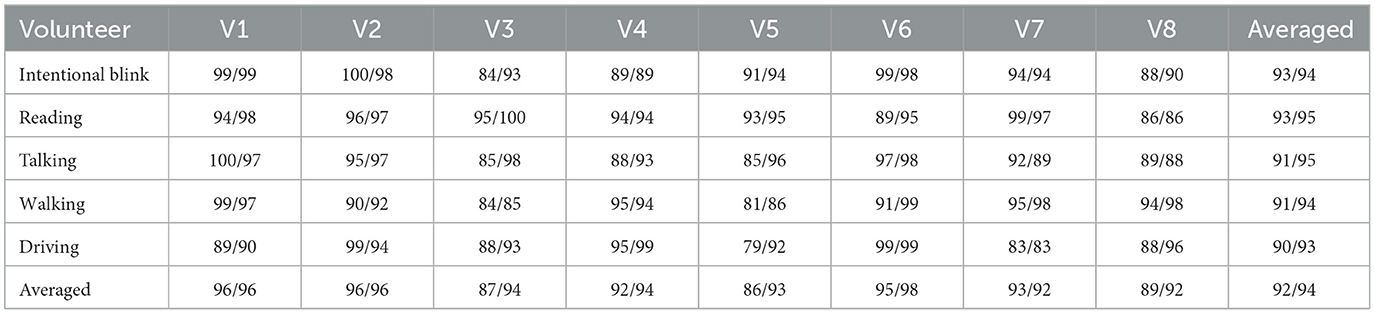

Table 2 presents the results of eye blink detection using the proposed prototype across the volunteers. Volunteers V1, V2, and V6 achieved impressive precision and recall scores, averaging over 0.95. Volunteer V6 notably achieved an average recall of 0.98, indicating that almost all blinks were successfully detected, particularly during walking and driving activities, where the recall reached 0.99. Volunteer V5, however, exhibited a lower average precision of 0.86, indicating the presence of false positives, particularly prominent during involuntary eye blink scenarios (though achieving a precision of 0.91 during intentional blinks). Overall, across all volunteers and activities, the average precision and recall were 0.92 and 0.94, respectively. These results underscore the robustness and effectiveness of our prototype in detecting eye blinks across various real-life scenarios.

Table 2. Eye blink detection performance with the use of customized threshold value (precision/recall, %).

While the eye-blink detection method utilizing a predetermined threshold value demonstrated commendable precision and recall scores, the need for user-dependent threshold configuration poses practical inconveniences across different users. We further implemented an energy-efficient and low-latency user-independent solution for eye blink detection to tackle this issue.

Figure 7 illustrates the workflow of the user-independent solution, which comprises five components: a low-pass filter, peak detection, window selection, quantization, and a decision tree classifier. First, the raw signal is filtered with a second-order low-pass Butterworth filter with a cutoff frequency of 10 Hz. Peak detection then identifies windows likely to contain blinking behavior. Only windows containing peaks are forwarded to the classifier, which reduces the processing power consumption compared to a sliding-window-based approach, where the classifier processes every window. A one-second window size was employed in this study. The Python package scikit-learn was utilized to locate peaks using the find-peak function. The selected windows, comprising the 30 samples preceding and the 30 samples following the detected peak location, are passed to the quantization module to reduce the bit precision.
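The first three stages of this workflow can be sketched with the standard library alone, as below. The cutoff, sampling rate, and 30-sample half-window come from the text; the biquad realization, the simple local-maximum peak rule, and the `min_height` and `min_distance` parameters are assumptions made for illustration. In a typical Python setup, `scipy.signal.butter` and `scipy.signal.find_peaks` would be the usual library routines for the filter and the peak search.

```python
import math
from typing import List

FS = 60.0        # sampling rate in Hz (from the text)
CUTOFF = 10.0    # low-pass cutoff in Hz (from the text)
HALF = 30        # samples kept on each side of a peak (from the text)


def butterworth2_lowpass(samples: List[float], cutoff: float = CUTOFF,
                         fs: float = FS) -> List[float]:
    """Second-order Butterworth low-pass realized as one biquad section."""
    w0 = 2.0 * math.pi * cutoff / fs
    alpha = math.sin(w0) / math.sqrt(2.0)      # sin(w0) / (2Q) with Q = 1/sqrt(2)
    cos_w0 = math.cos(w0)
    a0 = 1.0 + alpha
    b0 = (1.0 - cos_w0) / 2.0 / a0
    b1 = (1.0 - cos_w0) / a0
    b2 = b0
    a1 = -2.0 * cos_w0 / a0
    a2 = (1.0 - alpha) / a0
    # Start from the first sample so a large constant offset causes no transient.
    x1 = x2 = y1 = y2 = samples[0] if samples else 0.0
    out = []
    for x in samples:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out


def find_local_peaks(signal: List[float], min_height: float,
                     min_distance: int) -> List[int]:
    """Local maxima above `min_height`, keeping the taller of two close peaks."""
    peaks: List[int] = []
    for i in range(1, len(signal) - 1):
        is_peak = signal[i - 1] < signal[i] >= signal[i + 1]
        if not is_peak or signal[i] < min_height:
            continue
        if peaks and i - peaks[-1] < min_distance:
            if signal[i] > signal[peaks[-1]]:
                peaks[-1] = i
        else:
            peaks.append(i)
    return peaks


def extract_windows(signal: List[float], peaks: List[int],
                    half: int = HALF) -> List[List[float]]:
    """One-second windows centred on each peak; peaks too near an edge are skipped."""
    return [signal[p - half:p + half] for p in peaks
            if p - half >= 0 and p + half <= len(signal)]


# Synthetic signal with three bumps; the first one is too close to the start.
centres = [10, 100, 220]
raw = [sum(math.exp(-((n - c) / 4.0) ** 2) for c in centres) for n in range(300)]
filtered = butterworth2_lowpass(raw)
peaks = find_local_peaks(filtered, min_height=0.5, min_distance=30)
windows = extract_windows(filtered, peaks)
print(peaks, len(windows))
```

Only the windows returned by `extract_windows` would be handed to the later stages, so the classifier stays idle whenever no candidate peak appears.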

In the quantization module, the selected windows undergo calibration and shifting. Because the raw data, stored with 32-bit precision, varies widely in range and magnitude across users, a calibration step is first applied to reduce the number of precision bits. The calibrated data, denoted $\mathrm{data}_{\mathrm{calibrated}}$, is computed according to Equation (1). The required number of precision bits $N_{\mathrm{bit}}$ can then be determined as shown in Equation (2). In this work, a window-based calibration is used, in which the minimum value $\min(\mathrm{data})$ of each window is subtracted from the data in that window. This offers finer granularity and requires fewer precision bits than a global calibration, which subtracts the global minimum of the whole dataset, because the global minimum is affected by noise and data drift. The required number of precision bits for the selected windows was found to fall between 9 and 20 bits.

Lower-precision representations can improve hardware and computational efficiency, so this study explored reduced-precision representations of the selected windows without discernible performance degradation. The number of precision bits $n_{\mathrm{bit}}$ of the quantized windows is varied from 8 bits down to 1 bit. Quantization is achieved through right-shift operations, as illustrated in Equation (3). The parameter $n_{\mathrm{bit}}$ determines the valid data length after the right shift. For instance, if a window requires 6 precision bits after calibration and $n_{\mathrm{bit}}$ is set to 3, then each data element in the window is right-shifted by 6 − 3 = 3 bits. After the shift, each value in the window lies in the range 0 to 7.
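One plausible reading of the calibration, bit-count, and right-shift steps is sketched below. It assumes integer sensor counts and assumes that the required bit count is the bit length of the largest calibrated value; the function names are hypothetical, and the exact form of Equations (1) to (3) may differ.

```python
from typing import List


def calibrate(window: List[int]) -> List[int]:
    """Window-based calibration: subtract the window minimum from every sample."""
    low = min(window)
    return [x - low for x in window]


def required_bits(calibrated: List[int]) -> int:
    """Bits needed to represent the largest calibrated value (at least 1)."""
    return max(1, max(calibrated).bit_length())


def quantize(window: List[int], n_bit: int) -> List[int]:
    """Right-shift calibrated samples so that at most `n_bit` bits remain."""
    calibrated = calibrate(window)
    shift = max(0, required_bits(calibrated) - n_bit)
    return [x >> shift for x in calibrated]


# Toy window whose calibrated values need 6 bits (maximum 63).
window = [1000, 1005, 1063, 1040]
print(required_bits(calibrate(window)))
print(quantize(window, n_bit=3))
print(quantize(window, n_bit=2))
```

With `n_bit=3` the toy window is shifted by 6 − 3 = 3 bits and its values fall between 0 and 7, matching the worked example in the text. Small fluctuations collapse to zero while the dominant peak survives.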

In the classifier module, prioritizing computational efficiency and latency considerations, a decision tree classifier was chosen over neural networks for implementation. Decision trees offer simplicity in implementation and are computationally less demanding compared to neural networks. Moreover, they can be readily translated into combinational logic circuits, resulting in ultra-low latency. Additionally, decision trees typically exhibit robustness against outliers in the data, with their performance being less affected by outliers compared to neural networks. Given that capacitive sensing can be susceptible to environmental influences, resulting in outliers, the decision tree was deemed suitable for classifying the selected windows. The decision tree employed in this study had a maximum depth of 15 and utilized the “gini” criterion, implemented using functions from the Python package scikit-learn.

To evaluate the classifier model's performance across subjects, the leave-one-subject-out cross-validation method was utilized in this study. Table 3 presents the eye blink detection results obtained using the decision tree model. It is notable that the precision score exceeds 80% when the precision bit is greater than 2 bits, while the recall score experiences a slight increase as the precision bit is reduced. This phenomenon may stem from a reduction in the number of False Negative predictions when a lower precision bit is chosen. With low-precision bit representation, only the prominent peak features in the windows are retained, typically associated with eye blink activity. Furthermore, the standard deviation of both recall and precision scores exceeds 10%, demonstrating the performance difference of eye blink detection between the different users. In comparison to the user-dependent solution, the performance of the user-independent approach exhibits noticeable degradation. This indicates that individual differences significantly influence the capacitive sensing-based solution in this study. To address this issue, collecting data from a larger pool of participants or exploring alternative learning paradigms such as online learning could be beneficial.
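The leave-one-subject-out procedure can be sketched as follows. To keep the example dependency-free, a trivial threshold classifier stands in for the decision tree, and the features, labels, and subject identifiers are toy values; all of these are assumptions for illustration. With scikit-learn, `LeaveOneGroupOut` and `DecisionTreeClassifier(criterion="gini", max_depth=15)` would be the corresponding library components.

```python
from statistics import mean, stdev
from typing import Callable, Dict, Iterator, List, Tuple


def leave_one_subject_out(subject_ids: List[str]) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (held_out_subject, train_indices, test_indices) for every subject."""
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test


def fit_threshold(xs: List[float], ys: List[int]) -> float:
    """Stand-in classifier: midpoint between the mean of each class."""
    positives = [x for x, y in zip(xs, ys) if y == 1]
    negatives = [x for x, y in zip(xs, ys) if y == 0]
    return (mean(positives) + mean(negatives)) / 2.0


def predict_threshold(threshold: float, x: float) -> int:
    return 1 if x > threshold else 0


def evaluate(features: List[float], labels: List[int], subject_ids: List[str],
             fit: Callable, predict: Callable) -> Dict[str, Tuple[float, float]]:
    """Train on all subjects but one, test on the held-out one, for each subject."""
    scores: Dict[str, Tuple[float, float]] = {}
    for subject, train, test in leave_one_subject_out(subject_ids):
        model = fit([features[i] for i in train], [labels[i] for i in train])
        tp = fp = fn = 0
        for i in test:
            guess = predict(model, features[i])
            if guess == 1 and labels[i] == 1:
                tp += 1
            elif guess == 1 and labels[i] == 0:
                fp += 1
            elif guess == 0 and labels[i] == 1:
                fn += 1
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        scores[subject] = (precision, recall)
    return scores


# Toy data: one feature per window (e.g., peak height), label 1 = blink.
features = [3, 4, 1, 0, 3, 5, 1, 2, 2, 4, 0, 1]
labels = [1, 1, 0, 0] * 3
subjects = ["V1"] * 4 + ["V2"] * 4 + ["V3"] * 4

scores = evaluate(features, labels, subjects, fit_threshold, predict_threshold)
precisions = [p for p, _ in scores.values()]
print(scores)
print(f"precision mean={mean(precisions):.2f} std={stdev(precisions):.2f}")
```

Reporting the per-subject spread alongside the mean, as in the last line, is what exposes the between-user variability discussed in this section.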

Overall, the decision tree based user-independent method allows for efficient eye-blink detection in real-time scenarios, making it a valuable approach for low-power and ultra-fast inference in eye-blink detection scenarios.

In this work, we introduced an energy-efficient, low-latency, non-contact eye blink detection solution based on capacitive sensing, which was evaluated in five application scenarios using both user-dependent and user-independent models. Instead of a neural network, a decision tree classifier requiring inexpensive computational resources and a low-precision bit representation was implemented to achieve energy efficiency and low latency. While the prototype demonstrates competitive performance in blink detection, several limitations were observed during the experiments. Firstly, volunteers who do not typically wear glasses were provided with plastic-framed glasses of different sizes. The blink detection performance may degrade if the glasses do not properly fit the volunteer's head shape, which contributed to the large standard deviation of the precision and recall scores under leave-one-subject-out cross-validation. This limitation is expected, since the approach relies on the proximity of the upper eyelid to the capacitive electrode, with better sensitivity at a closer distance. It can be mitigated by using user-customized glasses in real-world applications. Secondly, as the scale of the blink signal variation differs between volunteers, a single fixed threshold cannot be used across volunteers, and the user-dependent solution requires a threshold configured for each user. Although the user-independent model overcomes this issue, its performance is noticeably lower than that of the user-dependent solution, because factors such as eye shape and the distance between the eye and the electrode influence the variability of the peak signal. Therefore, an online learning solution that adapts to the user could be studied to remove this limitation in the future.
Thirdly, during intensive body movements such as running, the glasses may vibrate relative to the head, resulting in a signal that may overshadow the signal caused by blinks. While this study did not explore blink detection in scenarios with such strenuous intensity, it presents a promising avenue for future research. Addressing these limitations would enhance the robustness and applicability of the blink detection approach in various real-life situations. Lastly, the signal quality of eye blink activity is intricately linked to the distribution of the electric field surrounding the eyelid. This electric field is susceptible to influence from electrode factors such as location, shape, and size. However, these electrode characteristics have not been investigated in this study, leaving room for optimization to enhance performance.

In addition to the five potential application scenarios discussed in this paper, our proposed lightweight and non-contact eye blink detection solution could be integrated into VR devices, where eye blink data could enhance the performance of the human-computer interface. Current methods for extracting eye information, such as eye blink detection and eye tracking, offer highly precise inputs. One example is the Apple Vision Pro's system of LEDs and infrared cameras, which projects invisible light patterns onto the eyes to detect blinks and track movement. However, these multifunctional solutions typically require more complex, heavier hardware systems and greater computational resources. In this work, we have focused solely on demonstrating the efficacy of eye blink detection. Future research will explore eye tracking using multiple sensing electrodes to overcome the hardware and computational limitations mentioned above.

In conclusion, this work presents a non-contact, wearable, real-time eye blink detection solution based on capacitive sensing technology. The custom-built prototype integrates low-cost, low-power capacitive sensors into standard glasses, with a copper tape electrode attached to the frame. A blink changes the capacitance between the electrode and the eyelid, producing a distinctive capacitance-related signal from which blinks are detected. Evaluation across five different scenarios involving eight participants confirmed the effectiveness and reliability of the proposed solution. With a user-dependent detection method using a customized predefined threshold value, the prototype achieved an average precision of 92% and a recall of 94%, underscoring its feasibility and robustness. In addition, an efficient and low-latency user-independent model based on a two-bit precision decision tree yielded an average precision of 80% and an average recall of 81%. These findings highlight the potential of the proposed technology for real-world applications requiring precise and unobtrusive eye blink detection.
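
For readers who want to reproduce figures of this kind, precision is the fraction of detected blinks that correspond to real blinks, and recall is the fraction of real blinks that were detected. The sketch below shows one way to compute both from lists of sample indices. The greedy one-to-one matching and the `tolerance` window are assumptions made for illustration, not the matching rule used in this study, and the example indices are invented.

```python
def match_events(detected, truth, tolerance=3):
    """Greedily pair each detection with an unused annotated blink within `tolerance` samples."""
    remaining = sorted(truth)
    true_positives = 0
    for d in sorted(detected):
        hit = next((t for t in remaining if abs(t - d) <= tolerance), None)
        if hit is not None:
            remaining.remove(hit)  # each annotated blink can be matched only once
            true_positives += 1
    false_positives = len(detected) - true_positives
    false_negatives = len(remaining)
    return true_positives, false_positives, false_negatives


def precision_recall(tp, fp, fn):
    """Return (precision, recall); both default to 0.0 when undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Invented example: four annotated blinks, five detections.
truth = [10, 50, 90, 130]
detected = [11, 49, 70, 131, 200]
tp, fp, fn = match_events(detected, truth)
print(tp, fp, fn)                    # 3 2 1
print(precision_recall(tp, fp, fn))  # (0.6, 0.75)
```

Per-participant scores computed this way can then be averaged to obtain summary values comparable in form to those reported above.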

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving humans were approved by the German Research Center for Artificial Intelligence. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

ML: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Software, Validation, Visualization, Writing – original draft, Writing – review & editing. SB: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Software, Validation, Writing – original draft, Writing – review & editing. ZZ: Writing – original draft, Writing – review & editing. BZ: Project administration, Writing – review & editing. PL: Conceptualization, Funding acquisition, Project administration, Resources, Supervision, Writing – review & editing.

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was supported by the European Union's Horizon Europe research and innovation programme (HORIZON-CL4-2021-HUMAN-01) in the project SustainML (101070408).

This work is an extension of the ISWC'22 conference paper (Liu et al., 2022); we thank the authors of that paper for their contributions.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Agarwal, M., and Sivakumar, R. (2019). “Blink: A fully automated unsupervised algorithm for eye-blink detection in EEG signals,” in 2019 57th Annual Allerton Conference on Communication, Control, and Computing (Allerton) (Monticello, IL: IEEE).

Al-gawwam, S., and Benaissa, M. (2018). Robust eye blink detection based on eye landmarks and Savitzky-Golay filtering. Information 9:93. doi: 10.3390/info9040093

Baccour, M. H., Driewer, F., Kasneci, E., and Rosenstiel, W. (2019). “Camera-based eye blink detection algorithm for assessing driver drowsiness,” in 2019 IEEE Intelligent Vehicles Symposium (IV) (Paris: IEEE), 987–993.

Bian, S., and Lukowicz, P. (2021). “A systematic study of the influence of various user specific and environmental factors on wearable human body capacitance sensing,” in EAI International Conference on Body Area Networks (Cham: Springer), 247–274.

Bian, S., Rey, V. F., Hevesi, P., and Lukowicz, P. (2019). “Passive capacitive based approach for full body gym workout recognition and counting,” in 2019 IEEE International Conference on Pervasive Computing and Communications (PerCom) (Kyoto: IEEE), 1–10.

Bian, S., Liu, M., Zhou, B., Lukowicz, P., and Magno, M. (2024). Body-area capacitive or electric field sensing for human activity recognition and human-computer interaction: a comprehensive survey. Proc. ACM Interact. Mobile Wearable Ubiquit. Technol. 8, 1–49. doi: 10.1145/3643555

Braun, A., Heggen, H., and Wichert, R. (2011). “Capfloor-a flexible capacitive indoor localization system,” in International Competition on Evaluating AAL Systems through Competitive Benchmarking (Berlin: Springer), 26–35.

Cardillo, E., Sapienza, G., Li, C., and Caddemi, A. (2021). “Head motion and eyes blinking detection: A mm-wave radar for assisting people with neurodegenerative disorders,” in 2020 50th European Microwave Conference (EuMC) (Utrecht: IEEE), 925–928.

de Lima Medeiros, P. A., da Silva, G. V. S., dos Santos Fernandes, F. R., Sánchez-Gendriz, I., Lins, H. W. C., da Silva Barros, D. M., et al. (2022). Efficient machine learning approach for volunteer eye-blink detection in real-time using webcam. Expert Syst. Appl. 188:116073. doi: 10.1016/j.eswa.2021.116073

Divjak, M., and Bischof, H. (2009). “Eye blink based fatigue detection for prevention of computer vision syndrome,” in MVA (Yokohama: MVA), 350–353.

Faure, V., Lobjois, R., and Benguigui, N. (2016). The effects of driving environment complexity and dual tasking on drivers' mental workload and eye blink behavior. Transp. Res. Part F: Traffic Psychol. Behav. 40, 78–90. doi: 10.1016/j.trf.2016.04.007

Grosse-Puppendahl, T., Berghoefer, Y., Braun, A., Wimmer, R., and Kuijper, A. (2013). “Opencapsense: a rapid prototyping toolkit for pervasive interaction using capacitive sensing,” in 2013 IEEE International Conference on Pervasive Computing and Communications (PerCom) (San Diego, CA: IEEE), 152–159.

Grosse-Puppendahl, T., Berlin, E., and Borazio, M. (2012). “Enhancing accelerometer-based activity recognition with capacitive proximity sensing,” in International Joint Conference on Ambient Intelligence (Berlin: Springer), 17–32.

Haq, Z. A., and Hasan, Z. (2016). “Eye-blink rate detection for fatigue determination,” in 2016 1st India International Conference on Information Processing (IICIP) (Delhi: IEEE), 1–5.

Jongkees, B. J., and Colzato, L. S. (2016). Spontaneous eye blink rate as predictor of dopamine-related cognitive function—a review. Neurosci. Biobehav. Rev. 71:58–82. doi: 10.1016/j.neubiorev.2016.08.020

Jordan, A. A., Pegatoquet, A., Castagnetti, A., Raybaut, J., and Le Coz, P. (2020). Deep learning for eye blink detection implemented at the edge. IEEE Embed. Syst. Lett. 13, 130–133. doi: 10.1109/LES.2020.3029313

Kim, Y. (2014). Detection of eye blinking using Doppler sensor with principal component analysis. IEEE Antennas Wirel. Propag. Lett. 14, 123–126. doi: 10.1109/LAWP.2014.2357340

Ko, L.-W., Komarov, O., Lai, W.-K., Liang, W.-G., and Jung, T.-P. (2020). Eyeblink recognition improves fatigue prediction from single-channel forehead EEG in a realistic sustained attention task. J. Neural Eng. 17, 036015. doi: 10.1088/1741-2552/ab909f

Kraft, D., Hartmann, F., and Bieber, G. (2022). “Camera-based blink detection using 3D-landmarks,” in Proceedings of the 7th International Workshop on Sensor-Based Activity Recognition and Artificial Intelligence (New York City, NY: ACM).

Królak, A., and Strumiłło, P. (2012). Eye-blink detection system for human-computer interaction. Univer. Access Inform. Soc. 11, 409–419. doi: 10.1007/s10209-011-0256-6

Kumar, D., and Sharma, A. (2016). “Electrooculogram-based virtual reality game control using blink detection and gaze calibration,” in 2016 International Conference on Advances in Computing, Communications and Informatics (ICACCI) (Jaipur: IEEE), 2358–2362.

Kwon, K.-A., Shipley, R. J., Edirisinghe, M., Ezra, D. G., Rose, G., Best, S. M., et al. (2013). High-speed camera characterization of voluntary eye blinking kinematics. J. Royal Soc. Interf. 10, 20130227. doi: 10.1098/rsif.2013.0227

Liu, J., Li, D., Wang, L., and Xiong, J. (2021). Blinklistener: “listen” to your eye blink using your smartphone. Proc. ACM Interact. Mobile Wearable Ubiquit. Technol. 5, 1–27. doi: 10.1145/3463521

Liu, M., Bian, S., and Lukowicz, P. (2022). “Non-contact, real-time eye blink detection with capacitive sensing,” in Proceedings of the 2022 ACM International Symposium on Wearable Computers (New York City, NY: ACM), 49–53.

Luo, E., Fu, R., Chu, A., Vega, K., and Kao, H.-L. (2020). “Eslucent: an eyelid interface for detecting eye blinking,” in Proceedings of the 2020 International Symposium on Wearable Computers (New York, NY: ACM), 58–62.

Maleki, A., and Uchida, M. (2018). Non-contact measurement of eyeblink by using Doppler sensor. Artif. Life Robot. 23, 279–285. doi: 10.1007/s10015-017-0414-x

Martins, R., and Carvalho, J. (2015). Eye blinking as an indicator of fatigue and mental load—a systematic review. Occup. Safety Hygi. III 10, 231–235. doi: 10.1201/b18042-48

Porins, R., and Apse-Apsitis, P. (2019). “Capacitive proximity sensing hardware comparison,” in 2019 IEEE 60th International Scientific Conference on Power and Electrical Engineering of Riga Technical University (RTUCON) (Riga: IEEE), 1–5.

Ryan, C., O'Sullivan, B., Elrasad, A., Cahill, A., Lemley, J., Kielty, P., et al. (2021). Real-time face & eye tracking and blink detection using event cameras. Neural Netw. 141, 87–97. doi: 10.1016/j.neunet.2021.03.019

Shu, Y., Wang, Y., Yang, X., and Tian, Z. (2022). An improved denoising method for eye blink detection using automotive millimeter wave radar. EURASIP J. Adv. Signal Proc. 2022, 1–18. doi: 10.1186/s13634-022-00841-y

Soukupova, T., and Cech, J. (2016). “Real-time eye blink detection using facial landmarks,” in 21st Computer Vision Winter Workshop, Rimske Toplice, Slovenia.

Wang, Q., Yang, J., Ren, M., and Zheng, Y. (2006). “Driver fatigue detection: a survey,” in 2006 6th World Congress on Intelligent Control and Automation (Dalian: IEEE), 8587–8591.

Ye, Y., He, C., Liao, B., and Qian, G. (2018). Capacitive proximity sensor array with a simple high sensitivity capacitance measuring circuit for human-computer interaction. IEEE Sens. J. 18, 5906–5914. doi: 10.1109/JSEN.2018.2840093

Youwei, L. (2023). Real-time eye blink detection using general cameras: a facial landmarks approach. Int. Sci. J. Eng. Agricult. 2, 1–8. doi: 10.46299/j.isjea.20230205.0

Zeng, W., Xiao, Y., Wei, S., Gan, J., Zhang, X., Cao, Z., et al. (2023). “Real-time multi-person eyeblink detection in the wild for untrimmed video,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (New York City, NY: IEEE), 13854–13863.

Keywords: eye blink detection, non-contact, low latency, human activity recognition, capacitive sensing, energy efficiency

Citation: Liu M, Bian S, Zhao Z, Zhou B and Lukowicz P (2024) Energy-efficient, low-latency, and non-contact eye blink detection with capacitive sensing. Front. Comput. Sci. 6:1394397. doi: 10.3389/fcomp.2024.1394397

Received: 01 March 2024; Accepted: 16 May 2024; Published: 11 June 2024.

Edited by: Xianta Jiang, Memorial University of Newfoundland, Canada

Reviewed by: Youwei Lu, Oklahoma State University, United States

Copyright © 2024 Liu, Bian, Zhao, Zhou and Lukowicz. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mengxi Liu, mengxi.liu@dfki.de
