- ¹Electrical and Computer Engineering Department, Birzeit University, Birzeit, Palestine

- ²Data Science and Business Analytics Program, Arab American University, Jenin, Palestine

Student modeling is a fundamental aspect of customized learning environments. It enables a unified representation of students' characteristics that supports the creation of personalized learning experiences. This paper aims to build an effective student model by combining learning preferences with skill levels. A student profile is formulated by detecting the user's learning styles and learning preferences, as well as their knowledge level and misconceptions. This information is collected through an interactive online platform, where users complete personal and knowledge assessment quizzes. Moreover, learners can make their profiles open to other learners as a starting point for supporting collaborative learning. The results showed an improvement in the educational achievement of students who used the platform, and the satisfaction level reported by non-neutral users averaged 90%. The evaluation of this platform showed promising results regarding its ability to describe students comprehensively.

1 Introduction

Classical learning systems, whether in-person or online, have usually been designed from a teacher's perspective, with a single learning path meant to fit all students. Such a path ignores the differences between learners, which leaves them demotivated and poorly engaged with the course (Raleiras et al., 2022). One way to overcome this issue is to consider learners' traits and deliver learning materials according to those traits, a technique known as learner modeling. Constructing a learner model is a key step in designing personalized e-learning environments within a learning management system. The main goal of this modeling is to elicit user attributes so that learning processes can be customized to learners' styles, personalities, and needs (Abbasi et al., 2021).

In this study, we aim to build a student model in a cold-start setting, where no previous data on or interaction with the student is available. The characteristics considered are learning styles, learning preferences, knowledge levels, and misconceptions, and we examine the impact of determining each of these measures on learning outcomes. Additionally, the idea of collaborative learning is introduced to open the door for further studies.

2 Background and related studies

This section presents the background of this study as well as related studies found in the literature.

2.1 Intelligent tutoring system

An intelligent tutoring system (ITS) is a computer system that imitates human tutors and aims to provide immediate and customized instruction or feedback to learners (Psotka and Mutter, 1988), usually without requiring intervention from a human teacher (Arnau-González et al., 2023). ITSs share the common goal of enabling meaningful and effective learning through a variety of computing technologies, and they are often designed to give every student access to high-quality education.

There is general consensus among researchers (Freedman, 2000; Nkambou et al., 2010) that ITSs consist of four basic components: the domain model, student model, tutoring model, and user interface model.

Without diminishing the importance of other components within the ITS architecture, the student model is notably regarded as the central element, focusing on the cognitive and affective states of students and their progression throughout the learning journey (Mitrovic, 2010). Utilizing the student model, the ITS dynamically customizes instruction to suit each learner's needs (Brusilovsky, 1994), thus affirming its pivotal role as the principal facilitator of “intelligence” within the ITS architecture.

2.2 Personalized e-learning systems

Traditionally, learning systems were seen as “one-size-fits-all” systems, where they offered the same learning objects in the same structure to all students (Imran et al., 2014). However, students differ in their characteristics in terms of knowledge levels, misconceptions, learning styles, and preferences. Personalization addresses this issue to improve students' overall satisfaction (Fraihat and Shambour, 2015).

Furthermore, learning has become more student-oriented, with personalized learning systems replacing traditional learning tools (Sikka et al., 2012; Abouzeid et al., 2021). It has been shown that learning success can be significantly enhanced if the learning content is individually adapted to each learner's preferences, needs, and learning progress (Sikka et al., 2012). Students in the same class have varying knowledge levels and learn at different paces, and they have a higher chance of academic success when they are supported individually. Thus, personalized education can help improve education quality by developing an individualized style of thinking and allowing learners to adapt more quickly to the learning environment (Zagulova et al., 2019).

Personalization in e-learning has been the focus of several recent studies (Bourkoukou et al., 2016). Recommender systems can support personalization by recommending appropriate learning objects to learners according to their specific needs and characteristics (Fraihat and Shambour, 2015).

2.3 Student modeling

As demonstrated in the preceding sections, the student model serves as the central component of any ITS or personalized e-learning system, which makes developing an advanced student model critical. This can be achieved through two primary methods of collecting data from learners: explicit gathering, which involves acquiring data directly from learners, or implicit monitoring of their activities on a learning platform. A robust student profile can then be tailored to individual learners based on their preferences. In e-learning personalization, learner models play a pivotal role, guiding the recommendation of the most suitable learning resources based on learner characteristics (Ciloglugil and Inceoglu, 2018). Various features are incorporated into recommender systems for personalization, including learners' preferences, goals, prior knowledge, misconceptions, and motivation levels (Jeevamol and Renumol, 2021). The following subsections describe the main components that a student model should incorporate.

2.3.1 Learning styles and preferences

Learning styles play a crucial role in the personalization of online learning; studies have reported a direct relationship between learning styles and the challenges that students face.

As defined by Keefe, “learning style is the composite of characteristics of cognitive, affective and psychological factors that serve as relatively stable indicators of how a learner perceives, interacts with, and responds to the learning environment” (Zagulova et al., 2019). In contrast, Karen and Felicetti (1992) defined learning styles as the educational conditions under which a student learns best. Thus, learning styles concern how students prefer to learn rather than what they actually learn. Many operational and conceptual models of learning styles exist (Kolb, Felder–Silverman, Myers–Briggs, Honey and Mumford, VARK, etc.). These models differ in the conclusions they draw and in the conditions they suggest for improving learning (Zagulova et al., 2019).

For this research, Neil Fleming's VARK model is used to define students' learning styles. The acronym VARK stands for Visual, Aural, Read/write, and Kinesthetic. The model was introduced by Fleming and Mills and is grounded in the experiences of students and teachers. The Visual learning style reflects a preference for information presented as maps, diagrams, charts, graphs, flowcharts, and symbols rather than words. The Aural learning style reflects a preference for information that is heard or spoken; learners with this style learn best from group discussions, lectures, phone calls, radio, and talking about concepts. Learners with a Read/Write learning style prefer information displayed as words; this style emphasizes text-based input and output, such as reading and writing manuals, books, assignments, essays, and reports. Finally, the Kinesthetic learning style reflects a perceptual preference for hands-on experience and practice, whether simulated or real, including demonstrations, case studies, videos, simulations, and movies (Bajaj and Sharma, 2018).

The VARK model was selected for this study because it is an easy-to-use tool for determining how to maximize students' learning by assessing the effectiveness of different means of obtaining knowledge. The model is based on two main ideas: first, teaching students with their preferred methods significantly affects their success in processing information and learning; second, acquiring knowledge through one's individual learning style leads to the highest degree of motivation, interest, and understanding (Hanurawan, 2017). Moreover, the model covers the different perceptual modes; the only senses it does not consider are taste and smell. The VARK inventory provides metrics for the four modes, and an individual may have preferences ranging from one to all four (Hawk and Shah, 2007).

The VARK learning style is determined through the VARK questionnaire, which has demonstrated validity and good reliability and has been adopted by many studies (Katsioloudis and Fantz, 2012; Ringo et al., 2015; Hasibuan and Nugroho, 2017; Aldosari et al., 2018; Abouzeid et al., 2021).

The concept of learning styles and other learning preferences has been employed to optimize recommendations in personalized e-learning platforms, and many researchers have addressed this relationship from different perspectives. Several studies have attempted to build a user model by detecting user interaction with a learning management system; the collected logs and transactions are then used to estimate learning styles and traits (Hassan et al., 2021; Lwande et al., 2021). Other studies have likewise used data on student behavior on the online platform, proposing machine learning models to identify students' learning styles, with promising outcomes and adequate prediction of educational resources (Hassan et al., 2021; Lwande et al., 2021). Heidrich et al. (2018) also used classification techniques, but considered both the Learner Learning Trail and the Learner Learning Style. Additionally, software agents have been used to monitor users' actual activities and capture their learning styles (Rani et al., 2015).

The study by Ariebowo (2021) analyzed students' preferences and objectives in learning during the COVID-19 pandemic.

2.3.2 Knowledge level and misconceptions

Broadly speaking, there are two ways to determine the knowledge level and find misconceptions: a dynamic approach that relies on implicit information automatically gathered by observing users' behavior, and a static approach that depends on explicit questions.

In terms of static determination of characteristics, Agbonifo and Motunrayo (2020) administered a pre-test to measure students' knowledge levels explicitly, in order to categorize them into three segments based on their level. In the study by Krouska et al. (2021), a rule-based approach grounded in repair theory was used to identify misconceptions. A misconception is detected when a student's answers in two consecutive quizzes reflect the same misconception; if the second question is answered correctly, the system assumes that the first incorrect answer resulted from carelessness.
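To make the consecutive-quiz rule concrete, here is a minimal Python sketch of it as summarized above. The function name `diagnose` and the encoding of each answer as a misconception tag (or `None` when correct) are hypothetical illustrations, not Krouska et al.'s implementation; the handling of two different wrong answers is likewise an assumption, since the summary does not cover that case.

```python
from typing import Optional


def diagnose(first_tag: Optional[str], second_tag: Optional[str]) -> str:
    """Two-consecutive-quiz rule (hypothetical encoding).

    Each argument is the misconception tag linked to the answer chosen in
    one of two consecutive quizzes, or None if that answer was correct.
    """
    if first_tag is None:
        # First answer correct: there is no error to confirm or dismiss.
        return "no error"
    if second_tag is None:
        # Second answer correct: the first error is attributed to carelessness.
        return "careless error"
    if second_tag == first_tag:
        # The same misconception appears twice in a row: it is confirmed.
        return f"misconception: {first_tag}"
    # Two different wrong answers: not covered by the rule as summarized here.
    return "undetermined"


print(diagnose("sign error", "sign error"))  # misconception: sign error
print(diagnose("sign error", None))          # careless error
```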

Regarding the automatic detection of knowledge levels and misconceptions, Ghatasheh (2015) applied machine learning to user activity analysis, employing multiple classification algorithms to predict the knowledge level. Additionally, Amer and Aldesoky (2021) used a multi-agent design to track and monitor students' activities in order to model their knowledge and misconceptions.

The effectiveness of using online media to address misconceptions was studied by Halim et al. (2021). The results revealed that the most effective treatments for lowering the percentage of misconceptions were, in order, narrative feedback, e-learning modules, and realistic video.

In our case, we focus on static methods, as we model students under cold-start conditions.

2.4 Collaborative learning

Collaborative learning is defined as the mutual participation and commitment of the engaged parties, working together in a coordinated effort to tackle a problem. In collaborative learning, learning is seen as a shared experience (van Leeuwen and Janssen, 2019).

As online learning becomes more dominant in education, collaborative learning and social factors have been found to enhance students' learning activities; their use in teaching and learning at educational institutions should therefore be encouraged, as it affects students' academic development (Qureshi et al., 2021).

This study endeavors to construct an initial draft of a student model comprising various elements aimed at characterizing the student, including learning styles, preferences, knowledge levels, and misconceptions. The primary objective is to provide students with insight into themselves, particularly regarding misconceptions they may hold, to observe how this awareness can facilitate immediate self-improvement. Additionally, students will have the option to share their models with peers, potentially fostering increased collaboration among them.

3 Materials and methods

To accomplish the intended objective, a web-based platform was developed, comprising a user interface for gathering users' explicit data and an analytical backend component that interprets these data into the intended factors. Two categories of information were collected: the first pertained to learning styles and preferences, while the second focused on knowledge level and misconceptions. To evaluate the system, two methods were employed: an experimental approach to determine the system's impact and a qualitative assessment conducted via a questionnaire.

3.1 User interface

The main objective of the user interface is to offer students an easy-to-use platform through which they can enter their information, with everything in one place and a smooth, sequential journey. The interface starts with a dashboard that summarizes the user's progress and preferences and offers navigation to other parts of the system, where more data can be provided.

From the system, a student can take a preferences quiz, whose results are presented in terms of learning preferences and learning styles. The questions used to determine learning style are based on the VARK questionnaire (https://vark-learn.com/the-vark-questionnaire/), a freely available tool for assessing learning styles. The remaining preferences are drawn from the literature and include the material's length, format, level of detail, content type, and language. For some questions, students can select all the answers that apply to them, as one's preferences do not strictly fall under one category.

In addition, the system contains courses, each consisting of a list of topics. To personalize these topics, a level quiz is available before starting each topic. These quizzes consist of a limited number of multiple-choice questions covering the main concepts of the intended topic. The quizzes are time-bounded, as the time taken to complete a quiz plays a role in determining the student's skill level. The quiz results show the knowledge level, along with any detected misconceptions.

Furthermore, students can make their profiles public, enabling other students to view their data, including contact details and knowledge assessment results. The idea behind this feature is to lay the basis for collaborative filtering, where students who need help in some area can find other students who excel in that area, and students with similar mindsets can find each other and get in touch to study together. The target is to turn this into a network within the platform, with in-platform chat, connections, and messaging. Additionally, connected users will be able to create study groups for knowledge sharing.
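The peer-matching idea behind public profiles can be sketched as a simple filter. The `Profile` structure, its field names, and the rule that a helper must have a "good" level in the requested topic are hypothetical assumptions for illustration; the platform's actual data model is not described here.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Profile:
    name: str
    public: bool
    # Topic name -> knowledge level ("good", "medium", or "low")
    levels: Dict[str, str] = field(default_factory=dict)


def find_helpers(profiles: List[Profile], requester: str, topic: str) -> List[str]:
    """Return names of other public profiles with a good level in `topic`."""
    return [
        p.name
        for p in profiles
        if p.public and p.name != requester and p.levels.get(topic) == "good"
    ]


profiles = [
    Profile("student_a", True, {"Decoders": "good"}),
    Profile("student_b", False, {"Decoders": "good"}),  # private: never suggested
    Profile("student_c", True, {"Decoders": "low"}),
]
print(find_helpers(profiles, "student_c", "Decoders"))  # ['student_a']
```

Filtering on the public flag first keeps private profiles out of every suggestion, which matches the opt-in nature of the feature.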

3.2 Learning style determination

As mentioned earlier, the questions related to learning style were derived from the VARK questionnaire. The questionnaire includes indirect questions about how one would prefer to get or give knowledge in a situation where each option is associated with a specific learning style. If one uses different methods for knowledge exchange, then more than one option can be selected.

The learning style to which most of a user's answers belong is marked as that user's learning style. Since more than one style can be equally dominant among the answers, more than one style may appear in a user's profile.
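The majority rule, including ties, can be expressed in a few lines of Python. This is a minimal sketch assuming that each ticked option is tagged with one VARK letter; the function name is hypothetical, and the simple counting shown here is not the official VARK scoring procedure.

```python
from collections import Counter
from typing import Iterable, List, Set


def dominant_styles(answers: Iterable[Set[str]]) -> List[str]:
    """Return every VARK style that ties for the most selected options.

    Each element of `answers` is the set of styles ("V", "A", "R", "K")
    linked to the options a user ticked for one question.
    """
    counts = Counter(style for selected in answers for style in selected)
    if not counts:
        return []
    top = max(counts.values())
    # Keep VARK order so that ties are reported consistently.
    return [style for style in "VARK" if counts[style] == top]


print(dominant_styles([{"V"}, {"V", "A"}, {"A"}, {"R"}]))  # ['V', 'A']
```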

3.3 Preferences interpretation

Some of the questions regarding learning preferences are straightforward, such as the preferred language. However, others are indirect or require some aggregation, such as those related to the preferred level of detail.
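As an illustration of the aggregation mentioned for level of detail, one possible approach is to map each indirect answer to a numeric score and average the scores. Everything in this sketch is hypothetical: the option labels in `DETAIL_SCORES`, the 1 to 3 scale, and the cut-offs are assumptions, since the exact aggregation is not specified.

```python
from statistics import mean
from typing import List

# Hypothetical mapping from indirect answer options to a detail score.
DETAIL_SCORES = {"summary": 1, "key points with examples": 2, "full explanation": 3}


def preferred_detail(answers: List[str]) -> str:
    """Aggregate several indirect answers into one level-of-detail preference."""
    avg = mean(DETAIL_SCORES[a] for a in answers)
    # Hypothetical cut-offs between the three categories.
    if avg < 1.5:
        return "brief"
    if avg < 2.5:
        return "moderate"
    return "detailed"


print(preferred_detail(["summary", "summary", "full explanation"]))  # moderate
```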

3.4 Knowledge assessment

Knowledge assessment has two parts. The first part assesses the mastery level of the examined topic, which can be good, medium, or low. The level is based solely on the quiz score, except when a misconception is detected, in which case the identified misconception is shown instead of the level.
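The first part of the assessment, including the rule that a detected misconception replaces the level, might look like the sketch below. The 75% and 50% thresholds and the function name are hypothetical, since the cut-offs are not stated; the completion time that also influences the skill level is omitted because its exact role is not specified.

```python
from typing import Optional


def knowledge_label(score: float, max_score: float,
                    misconception: Optional[str] = None) -> str:
    """Map a topic quiz score to good/medium/low, unless a misconception was found."""
    if misconception is not None:
        # A detected misconception is reported instead of the level.
        return f"misconception: {misconception}"
    ratio = score / max_score
    # Hypothetical thresholds; the actual cut-offs are not specified.
    if ratio >= 0.75:
        return "good"
    if ratio >= 0.5:
        return "medium"
    return "low"


print(knowledge_label(3, 5))  # medium
```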

The second part is concerned with identifying potential misconceptions. Assessment quizzes contain options that are linked to misconceptions. If, for the majority of questions, the selected answers reflect a certain misconception, the system concludes that the user holds this misconception and that it needs to be corrected. In addition to identifying the misconception, the system provides guidance on the correct approach.
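A minimal sketch of the majority rule for misconceptions, assuming each answer option carries at most one misconception tag. The function name, the tag strings, and the strict-majority threshold (more than half of all questions in the quiz) are assumptions made for illustration.

```python
from collections import Counter
from typing import List, Optional


def detect_misconception(selected_tags: List[Optional[str]]) -> Optional[str]:
    """Return a misconception held across a majority of questions, if any.

    selected_tags[i] is the misconception linked to the option chosen for
    question i, or None if that option is not linked to any misconception.
    """
    tags = Counter(tag for tag in selected_tags if tag is not None)
    if not tags:
        return None
    tag, count = tags.most_common(1)[0]
    # Require a strict majority of all questions in the quiz.
    return tag if count > len(selected_tags) / 2 else None


print(detect_misconception(["minterm/maxterm swap", "minterm/maxterm swap", None]))
# minterm/maxterm swap
```

When no tag reaches a majority, the function returns `None`, which corresponds to the case where only the knowledge level is reported.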

3.5 System evaluation

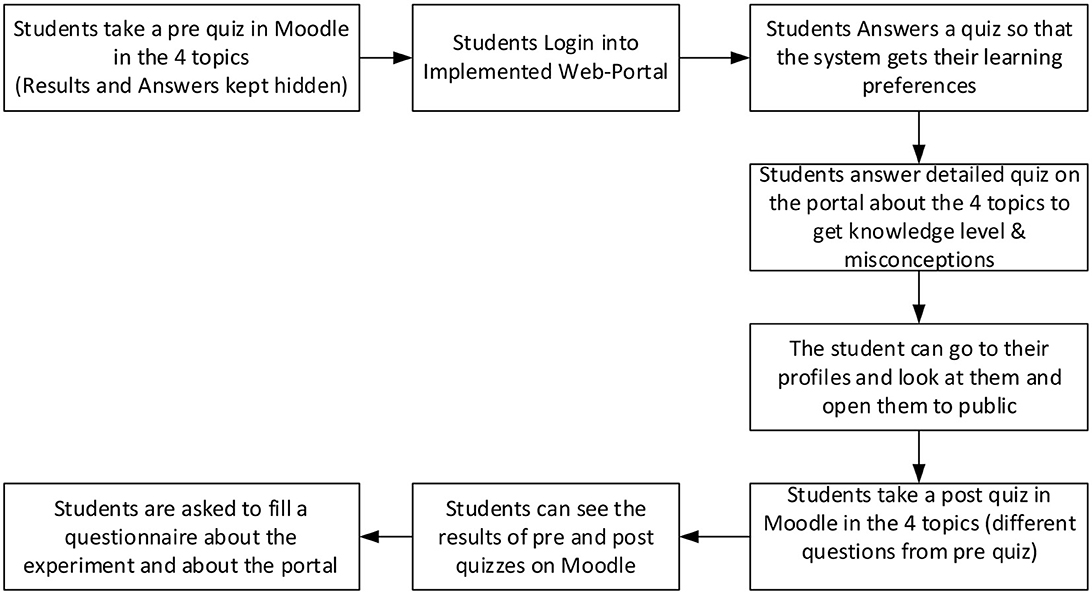

Figure 1 shows the main steps for testing the proposed system and evaluating the user experience. The subject of “Digital Design” was selected, with four subtopics. To evaluate the effectiveness of the system and the usefulness of the aspects considered, two approaches were taken: an empirical one and a questionnaire. A group of 67 students was assessed using a pre-quiz and a post-quiz administered through Moodle. Between these two quizzes, the students registered on the platform and went through the full experience: they took the preferences quiz, navigated to the “Digital Design” course, and completed the level quizzes for the listed topics. Finally, they were able to preview their models on the portal.

For the empirical analysis, the results of the pre-quiz were compared against those of the post-quiz. The comparison used multiple criteria to observe the effect of using the system versus not using it, and how identifying misconceptions affected students' learning. The results of the two quizzes were analyzed question by question against the corresponding results obtained from the platform. For each topic, the analysis included the pre-quiz score, the post-quiz score, whether a quiz for that topic was taken on the platform, the score for that topic's quiz on the platform, and whether a misconception was detected. Breaking the results down per topic yielded 261 records.

Moreover, a questionnaire was administered to the same group of students to explore the extent to which they found the system and the concepts behind it helpful. The questionnaire included 12 statements, and students rated their degree of agreement with each one. Some statements concerned the quality of the website, while the rest assessed the general importance of the aspects considered.

4 Results and discussion

This section presents the main results obtained with the system and the users' evaluation of the system.

4.1 Experimental results

Overall, comparing the test results before and after using the system, the average increased by about 2.7 marks, from 7.9 to 10.63 out of 20. However, not all students took all four quizzes within the system; therefore, a finer-grained, per-question analysis is presented below, in which each question is marked out of 5.

To draw more precise conclusions, the results of the pre-test and post-test were studied question by question with respect to the results of the corresponding topic on the platform. In other words, the result of each pre- and post-quiz question was taken separately and linked, for each student, with the platform quiz covering the same topic. The parameters included in the analysis were the question score in the pre-test, the question score in the post-test, whether a quiz for that topic was taken on the platform, the score for the topic's quiz on the platform, and whether a misconception was detected.
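One way to hold the per-question records described above, and to compare the groups that did or did not take the matching in-platform quiz, is sketched below. The `QuestionRecord` class and `mean_pre_post` function are hypothetical names; the fields mirror the parameters listed in the text, marks are out of 5 as in the per-question analysis, and the sample records are invented for illustration only.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional, Tuple


@dataclass
class QuestionRecord:
    student_id: str
    topic: str
    pre_score: float                 # question mark in the pre-quiz (out of 5)
    post_score: float                # question mark in the post-quiz (out of 5)
    took_platform_quiz: bool         # was the matching in-platform quiz taken?
    platform_score: Optional[float]  # None if the in-platform quiz was skipped
    misconception_detected: bool


def mean_pre_post(records: List[QuestionRecord], took_quiz: bool) -> Tuple[float, float]:
    """Average pre- and post-quiz marks for one group of records."""
    group = [r for r in records if r.took_platform_quiz == took_quiz]
    return mean(r.pre_score for r in group), mean(r.post_score for r in group)


# Invented sample records, not data from the study.
records = [
    QuestionRecord("s1", "Minterms", 0, 5, True, 3, True),
    QuestionRecord("s1", "Maxterms", 5, 5, True, 5, False),
    QuestionRecord("s2", "Decoders", 5, 0, False, None, False),
]
print(mean_pre_post(records, took_quiz=True))  # (2.5, 5)
```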

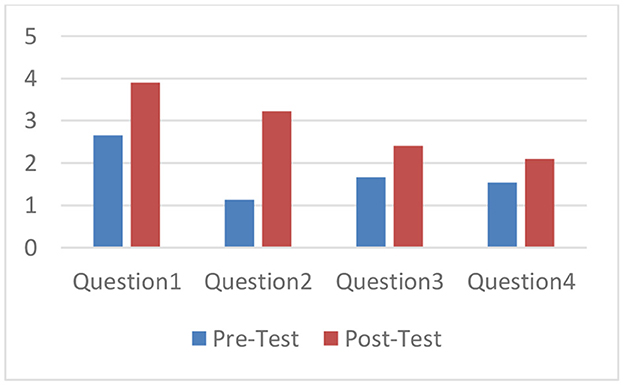

In both the pre-quiz and the post-quiz, the first question (Q1) was about minterms, the second (Q2) about maxterms, the third (Q3) about prime implicants, and the fourth (Q4) about decoders; all four are subtopics of the digital logic design course. As each of these topics has an in-platform quiz, Table 1 illustrates how these parts were grouped in the analysis, where M denotes the presence of a misconception.

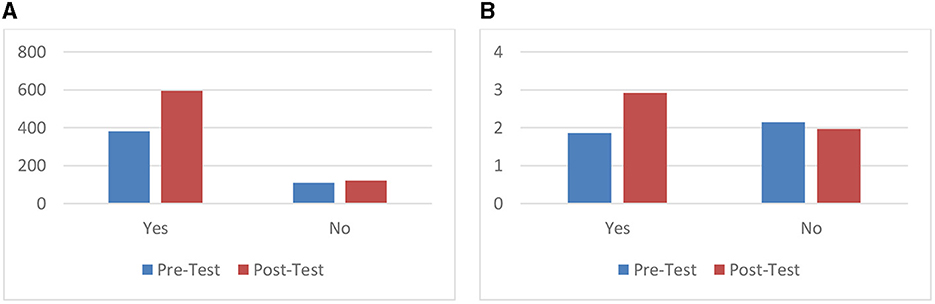

The results included 204 instances in which the in-platform quiz had been taken and 56 instances in which it had not. As Figures 2A, B show, for the cases where the platform was used, the total mark increased from 380 to 595, raising the average from 1.8 to 2.9 out of 5. In cases where questions were answered without taking the corresponding topic's quiz, there was a slight decrease from 2.1 to 1.96 out of 5. This suggests that the system helped students achieve better results.

More interestingly, there were 44 cases in which the pre-test score was 0 and a misconception was subsequently detected on the platform. In all 44 cases, the students improved and achieved the full mark of 5 in the second test. These results suggest that informing students of their misconceptions helps them correct those misconceptions and improve their knowledge. On the other hand, there were 16 cases in which the mark in the second test was lower than in the first. In 14 of these 16 cases, the students performed poorly in the platform quizzes, scoring between 0 and 50%, yet the system did not detect a dominant misconception. In such cases, the system reports only that the knowledge level is poor, because the answers show no consistent pattern.

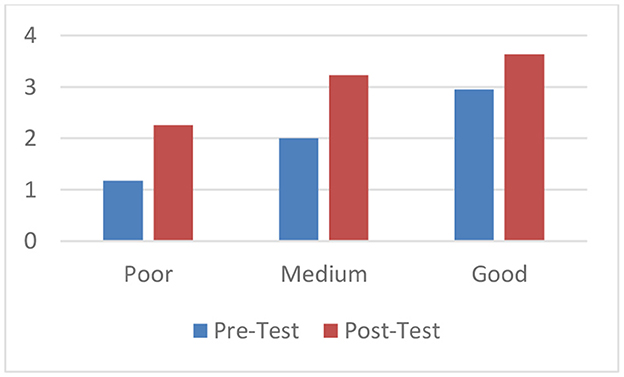

Another aspect of the analysis was the effect of the system on the different knowledge levels. Figure 3 shows the amount of improvement per knowledge group. It can be seen that those with poor and medium levels of knowledge had very similar degrees of improvement, which exceeded the improvement that was seen in students with good knowledge levels.

The impact of taking the knowledge assessment per topic is shown in Figure 4. The greatest amount of improvement was achieved in the second topic followed by the first one. To interpret this finding, we observed the distribution of detected misconceptions and noted that a higher number of misconceptions were found in the first two topics compared to the third and fourth topics.
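Both breakdowns (by knowledge group and by topic) reduce to averaging the post-minus-pre change within each group. A minimal sketch, assuming the records are available as `(group, pre_score, post_score)` tuples; the function name is hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Hashable, Iterable, Tuple


def improvement_by_group(rows: Iterable[Tuple[Hashable, float, float]]) -> Dict[Hashable, float]:
    """Mean post-minus-pre change for each group key (e.g. knowledge level or topic)."""
    changes = defaultdict(list)
    for group, pre, post in rows:
        changes[group].append(post - pre)
    return {group: mean(values) for group, values in changes.items()}


print(improvement_by_group([("low", 0, 5), ("low", 0, 3), ("good", 5, 5)]))
# {'low': 4, 'good': 0}
```

Passing the topic name instead of the knowledge level as the first tuple element gives the per-topic view with the same function.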

4.2 Questionnaire results

In the second part of the evaluation, the students who experimented with the platform gave their views through a questionnaire. The analysis of the results was divided into questions supporting the underlying idea and questions regarding the efficiency of the proposed platform.

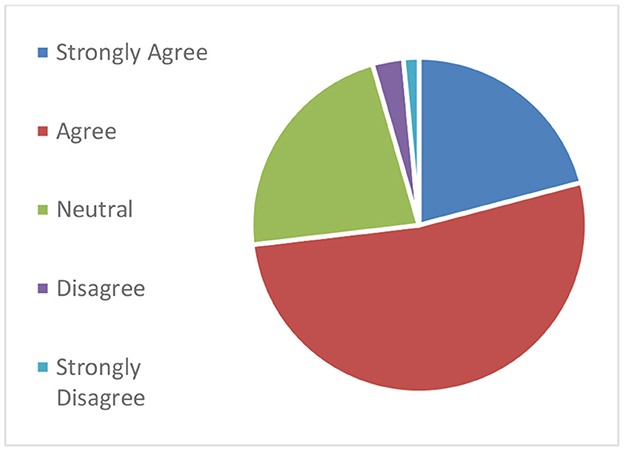

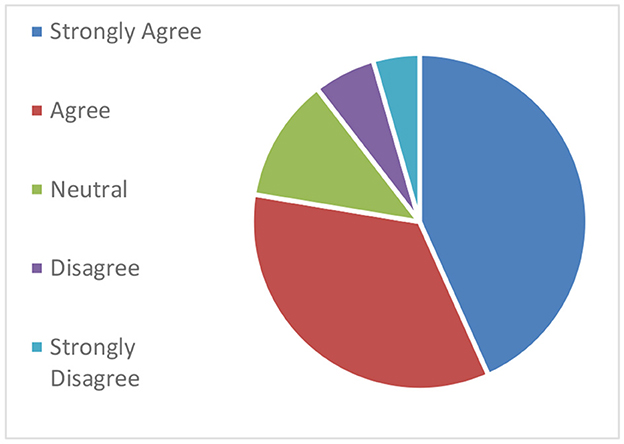

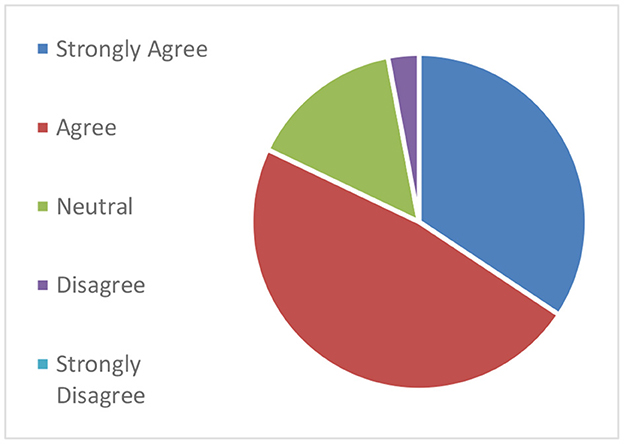

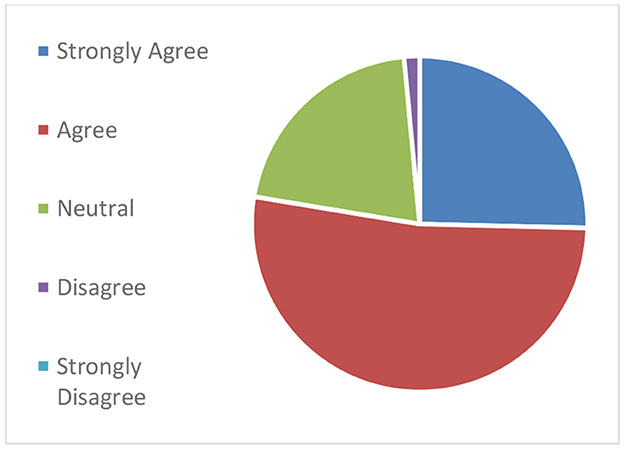

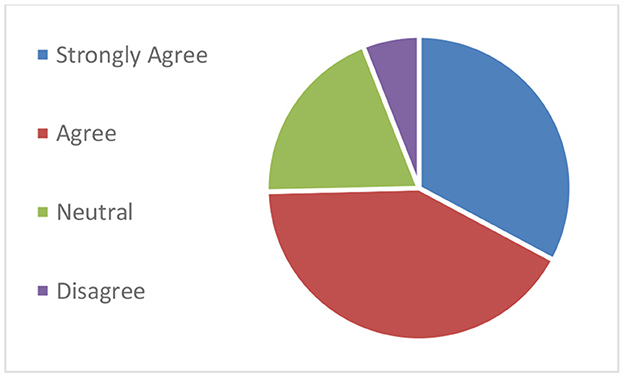

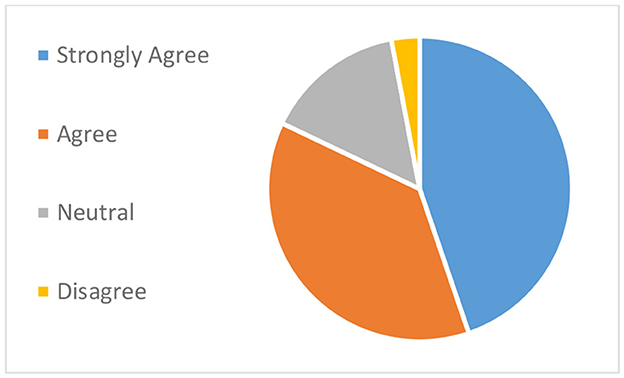

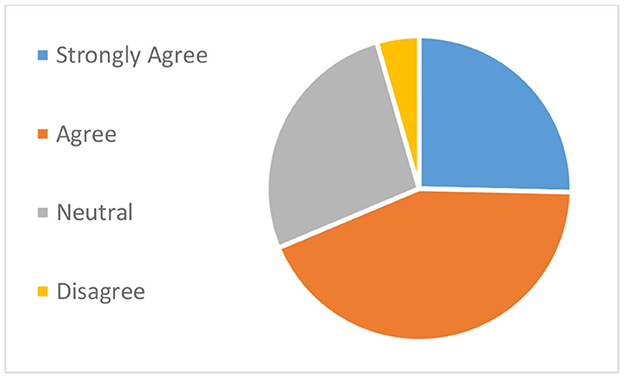

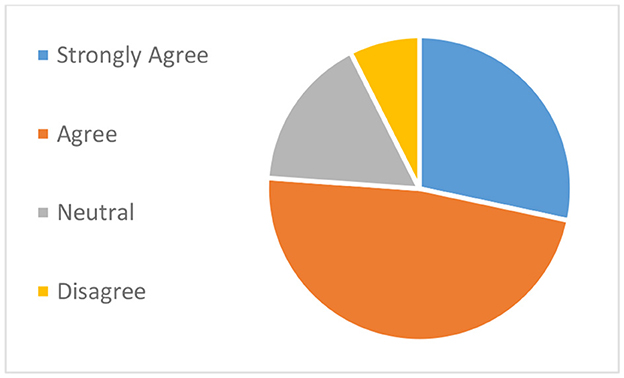

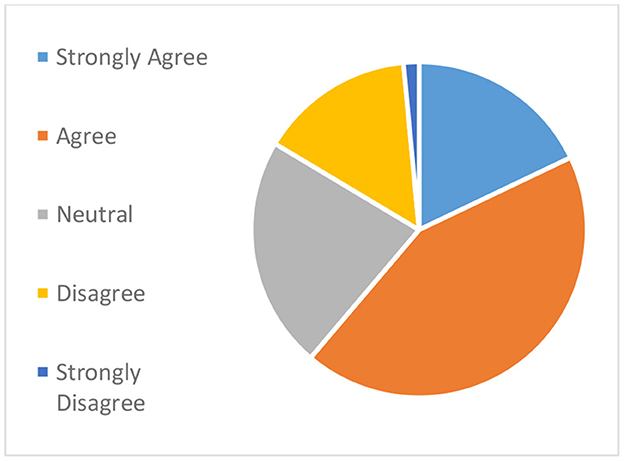

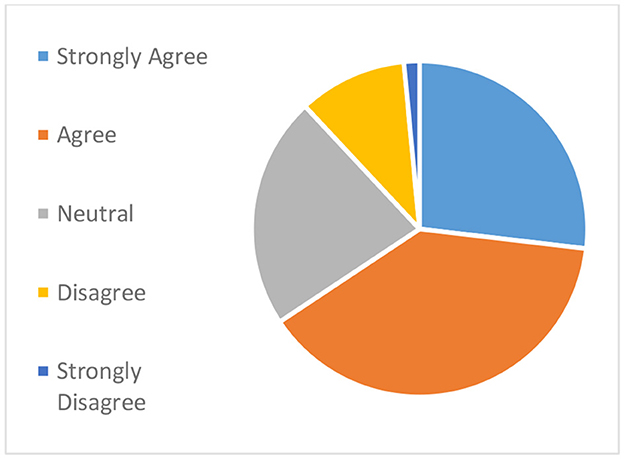

Students' opinions about the concepts proposed in this research were generally positive. Figure 5 shows that very few students believe that knowing their misconceptions will not enhance their knowledge. Figure 6 shows that the majority of students agree that displaying the knowledge level saves learners time. Figure 7 shows that only two students disagreed that learning preferences aid the learning experience. Figure 8 shows that seven respondents (four who disagreed and three who strongly disagreed, about 10% in total) did not consider seeing public profiles alone sufficient for facilitating collaborative learning. This suggests the need for in-platform chatting and connection features to provide more practical support for collaborative learning. Moreover, the results in Figures 6 and 9 indicate potential for adding a feature that recommends learning materials according to the proposed models and lets students rate these materials.

Another, perhaps more crucial, aspect is the system's performance from the perspective of the sample group, and the feedback here was largely positive. Figure 10 shows that none of the students disagreed that the platform could determine their learning preferences. Moreover, Figure 11 suggests that the preferences considered were largely those students would look for. The user experience measured in Figures 12 and 13 is good and could be improved further. However, there is room for improvement in the platform's interactivity (Figure 14); the planned capability of forming study groups is one way to address this. Finally, Figure 15 shows students' satisfaction with the length of the quizzes used to determine their knowledge level and misconceptions.

5 Conclusion and future work

This study proposed a new approach for learning management systems that helps personalize the learning experience for each user. The research addressed the student modeling step of personalization, which is the first and most critical step, as it lays the ground for the later steps. The system analyzed the personality aspect in terms of preferences and styles, as well as the knowledge aspect in terms of misconceptions and knowledge levels.

When tested with 67 students, the system performed well in determining their learning styles and detected their misconceptions in some areas. Detecting misconceptions and presenting them narratively guided the students on how to correct them and improve their knowledge. On the other hand, identifying misconceptions proved less advantageous for students who already had a good level of knowledge, as they had less room for further improvement.

Future work could implement an ITS that uses the constructed profile to suggest material matching each individual's preferences and knowledge. Users could then give feedback on the recommended material, providing an opportunity to adjust how such material is generated. In addition, students' interactions with the system could further enrich the contents of the student model.

Finally, collaborative learning could be put into practice by allowing users to reach each other to interact and share knowledge through the system.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

Ethical review and approval were not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent from the participants was not required to participate in this study in accordance with the national legislation and the institutional requirements.

Author contributions

AA-I: Investigation, Methodology, Supervision, Validation, Writing—review & editing. HB: Conceptualization, Investigation, Methodology, Software, Writing—original draft. IT: Investigation, Validation, Writing— review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abbasi, M. H., Gholam, C., Montazer, A., Ghorbani, F., Alipour, Z., and Montazer, G. A. (2021). Categorizing E-learner attributes in personalized E-learning environments: a systematic literature review. Interdiscipl. J. Virt. Learn. Med. Sci. 12, 1–21. doi: 10.30476/ijvlms.2021.88514.1062

Abouzeid, E., Fouad, S., Wasfy, N. F., Alkhadragy, R., Hefny, M., and Kamal, D. (2021). Influence of personality traits and learning styles on undergraduate medical students' academic achievement. Adv. Med. Educ. Practice 12:769. doi: 10.2147/AMEP.S314644

Agbonifo, O. C., and Motunrayo, A. (2020). Development of an ontology-based personalised E-learning recommender system. Int. J. Comput. 38, 102–112.

Aldosari, M. A., Aljabaa, A. H., Al-Sehaibany, F. S., and Albarakati, S. F. (2018). Learning style preferences of dental students at a single institution in Riyadh, Saudi Arabia, evaluated using the VARK questionnaire. Adv. Med. Educ. Practice 9:179. doi: 10.2147/AMEP.S157686

Amer, M., and Aldesoky, H. (2021). Building an e-learning application using multi-agents and fuzzy rules. Electr. J. E-Learn. 19, 199–208. doi: 10.34190/ejel.19.3.2308

Ariebowo, T. (2021). Autonomous learning during COVID-19 pandemic: students' objectives and preferences. J. For. Lang. Teach. Learn. 6:10079. doi: 10.18196/ftl.v6i1.10079

Arnau-González, P., Arevalillo-Herráez, M., Luise, R. A. D., and Arnau, D. (2023). A methodological approach to enable natural language interaction in an Intelligent Tutoring System. Comput. Speech Lang. 81:101516. doi: 10.1016/j.csl.2023.101516

Bajaj, R., and Sharma, V. (2018). Smart education with artificial intelligence based determination of learning styles. Proc. Comput. Sci. 132, 834–842. doi: 10.1016/j.procs.2018.05.095

Bourkoukou, O., Bachari, E., and Adnani, M. (2016). A personalized E-learning based on recommender system. Int. J. Learn. 2, 99–103. doi: 10.18178/ijlt.2.2.99-103

Brusilovsky, P. (1994). “Student model centered architecture for intelligent learning environments,” in Proc. of Fourth International Conference on User Modeling (Amherst, MA: User Modeling Inc.), 31–36.

Ciloglugil, B., and Inceoglu, M. M. (2018). A learner ontology based on learning style models for adaptive E-learning. Lect. Not. Comput. Sci. 10961, 199–212. doi: 10.1007/978-3-319-95165-2_14

Fraihat, S., and Shambour, Q. (2015). A framework of semantic recommender system for E-learning. J. Softw. 10, 317–330. doi: 10.17706/jsw.10.3.317-330

Freedman, R. (2000). What is an intelligent tutoring system? Intelligence 11, 15–16. doi: 10.1145/350752.350756

Ghatasheh, N. (2015). Knowledge level assessment in e-learning systems using machine learning and user activity analysis. Int. J. Adv. Comput. Sci. Appl. 6, 107–113. doi: 10.14569/IJACSA.2015.060415

Halim, A., Mahzum, E., Yacob, M., Irwandi, I., Halim, L., Stracke, C. M., et al. (2021). The impact of narrative feedback, E-learning modules and realistic video and the reduction of misconception. Educ. Sci. 11:158. doi: 10.3390/educsci11040158

Hanurawan, I. N. (2017). Teaching writing by using Visual, Auditory, Read/Write, and Kinesthetic (VARK) learning style in descriptive text to the seventh grade students of SMPN 2 Jiwan. Engl. Teach. J. 5:4721. doi: 10.25273/etj.v5i1.4721

Hasibuan, M. S., and Nugroho, L. (2017). Detecting learning style using hybrid model. 2016 IEEE Conf. E-Learn. E-Manag. E-Serv. IC3e 2016, 107–111. doi: 10.1109/IC3e.2016.8009049

Hassan, M. A., Habiba, U., Majeed, F., and Shoaib, M. (2021). Adaptive gamification in e-learning based on students' learning styles. Interact. Learn. Environ. 29, 545–565. doi: 10.1080/10494820.2019.1588745

Hawk, T. F., and Shah, A. J. (2007). Using learning style instruments to enhance student learning. Decision Sci. J. Innov. Educ. 5, 1–19. doi: 10.1111/j.1540-4609.2007.00125.x

Heidrich, L., Victória Barbosa, J. L., Cambruzzi, W., Rigo, S. J., Martins, M. G., and dos Santos, R. B. S. (2018). Diagnosis of learner dropout based on learning styles for online distance learning. Telemat. Informat. 35, 1593–1606. doi: 10.1016/j.tele.2018.04.007

Imran, H., Hoang, Q., Chang, T.-W., and Graf, S. (2014). A framework to provide personalization in learning management systems through a recommender system approach. Intell. Inform. Datab. Syst. 8397, 271–280. doi: 10.1007/978-3-319-05476-6_28

Jeevamol, J., and Renumol, V. G. (2021). An ontology-based hybrid e-learning content recommender system for alleviating the cold-start problem. Educ. Inform. Technol. 26, 4993–5022. doi: 10.1007/s10639-021-10508-0

Karen, S., and Felicetti, L. (1992). Learning styles of marketing majors. Educ. Res. Quart. 15, 15–23.

Katsioloudis, P., and Fantz, T. D. (2012). A comparative analysis of preferred learning and teaching styles for engineering, industrial, and technology education students and faculty. J. Technol. Educ. 23:a.4. doi: 10.21061/jte.v23i2.a.4

Krouska, A., Troussas, C., and Sgouropoulou, C. (2021). A cognitive diagnostic module based on the repair theory for a personalized user experience in E-learning software. Computers 10:140. doi: 10.3390/computers10110140

Lwande, C., Muchemi, L., and Oboko, R. (2021). Identifying learning styles and cognitive traits in a learning management system. Heliyon 7:e07701. doi: 10.1016/j.heliyon.2021.e07701

Mitrovic, A. (2010). Fifteen years of Constraint-Based Tutors: what we have achieved and where we are going. User Model. User-Adapt. Interact. 22, 39–72. doi: 10.1007/s11257-011-9105-9

Nkambou, R., Mizoguchi, R., and Bourdeau, J. (2010). Advances in Intelligent Tutoring Systems. Heidelberg: Springer.

Psotka, J., and Mutter, S. A. (1988). Intelligent Tutoring Systems: Lessons Learned. Mahwah, NJ: Lawrence Erlbaum Associates.

Qureshi, M. A., Khaskheli, A., Qureshi, J. A., Raza, S. A., and Yousufi, S. Q. (2021). Factors affecting students' learning performance through collaborative learning and engagement. Interact. Learn. Environ. 2021, 1–21. doi: 10.1080/10494820.2021.1884886

Raleiras, M., Nabizadeh, A. H., and Costa, F. A. (2022). Automatic learning styles prediction: a survey of the State-of-the-Art (2006-2021). J. Comput. Educ. 2022, 1–93. doi: 10.1007/s40692-021-00215-7

Rani, M., Nayak, R., and Vyas, O. P. (2015). An ontology-based adaptive personalized e-learning system, assisted by software agents on cloud storage. Knowl. Bas. Syst. 90, 33–48. doi: 10.1016/j.knosys.2015.10.002

Ringo, S. S., Samsudin, A., Ramlan, T., Fitkov-Norris, E. D., and Yeghiazarian, A. (2015). Validation of VARK learning modalities questionnaire using Rasch analysis. J. Phys. 588:12048. doi: 10.1088/1742-6596/588/1/012048

Sikka, R., Dhankhar, A., and Rana, C. (2012). A survey paper on E-learning recommender system. Int. J. Comput. Appl. 47, 975–888. doi: 10.5120/7218-0024

van Leeuwen, A., and Janssen, J. (2019). A systematic review of teacher guidance during collaborative learning in primary and secondary education. Educ. Res. Rev. 27, 71–89. doi: 10.1016/j.edurev.2019.02.001

Keywords: learning style, misconceptions, personalized learning, student profile, e-learning

Citation: Abu-Issa A, Butmeh H and Tumar I (2024) Modeling students' preferences and knowledge for improving educational achievements. Front. Comput. Sci. 6:1359770. doi: 10.3389/fcomp.2024.1359770

Received: 21 December 2023; Accepted: 12 February 2024;

Published: 07 March 2024.

Edited by:

Shashidhar Venkatesh Murthy, James Cook University, Australia

Reviewed by:

Maha Khemaja, University of Sousse, Tunisia

Radoslava Stankova Kraleva, South-West University “Neofit Rilski”, Bulgaria

Copyright © 2024 Abu-Issa, Butmeh and Tumar. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Abdallatif Abu-Issa, abuissa@birzeit.edu
