ORIGINAL RESEARCH article

Front. Comput. Sci., 10 January 2024

Sec. Human-Media Interaction

Volume 5 - 2023 | https://doi.org/10.3389/fcomp.2023.1162758

Introduction: The intersection of hearing accessibility and music research offers limited representations of Deaf and Hard of Hearing (DHH) individuals, specifically as artists. This article presents inclusive design practices for hearing accessibility through wearable and multimodal haptic interfaces with participants with diverse hearing backgrounds.

Methods: We develop a movement-based sound design practice and audio-tactile compositional vocabulary, co-created with a Deaf co-designer, to offer a more inclusive and embodied listening experience. This listening experience is evaluated with a focus group whose participants have backgrounds in music, dance, design, or accessibility in arts. By involving multiple stakeholders, we survey the participants' qualitative experiences in relation to the Deaf co-designer's experience.

Results: Results show that multimodal haptic feedback enhanced the participants' listening experience while on-skin vibrations provided a more nuanced understanding of the music for Deaf participants. Hearing participants reported interest in understanding the Deaf individuals' musical experience, preferences, and compositions.

Discussion: We conclude by presenting design practices for working with movement-based musical interaction and multimodal haptics. Lastly, we discuss the challenges and limitations of access barriers in hearing accessibility and music.

Popular hearing culture largely approaches listening experiences from auditory perspectives that are often inaccessible to d/Deaf or Hard of Hearing (DHH) individuals. However, listening extends to more bodily experiences through multimodal channels (Sarter, 2006). Previous research in music has investigated substituting the auditory sense primarily with feedback that is visual (Chang and O'Sullivan, 2008; Fourney and Fels, 2009; Grierson, 2011; Petry et al., 2016; Deja et al., 2020), tactile (Karam et al., 2009; Remache-Vinueza et al., 2021), or a combination of the two (Nanayakkara et al., 2013). Although supporting hearing capabilities through another sensory channel increases hearing accessibility, musical experiences extend beyond sensory substitution or using assistive technology to deliver musical information. These experiences include aspects such as multisensory integration (Russo, 2019), musical expressivity (Hayes, 2011; Dickens et al., 2018), hearing wellbeing (Agres et al., 2021), and social connections (Brétéché, 2021). Unfortunately, the research significantly lacks the representation of DHH communities in designing, organizing, and facilitating performances, leaving those members isolated from participating in music as artists (Darrow, 1993). We investigate how a movement-based approach to music-making combined with haptic stimuli can enhance the listening experience and contribute to the long-term goal of increasing DHH performers' participation in music.

We develop movement-based sound design practice and audio-tactile compositional elements that are co-designed with a Deaf dancer. These compositional elements are presented and evaluated with a focus group of hearing participants. Through participants' movement explorations and first-person experiences, we survey this listening experience enhanced with movement interaction and multimodal haptic stimuli. Haptic stimuli include both in-air sound vibrations and on-skin vibrotactile stimulation. In addition to the two haptic modalities, the focus group participants explore possible different locations of the wearable haptic modules on the body.

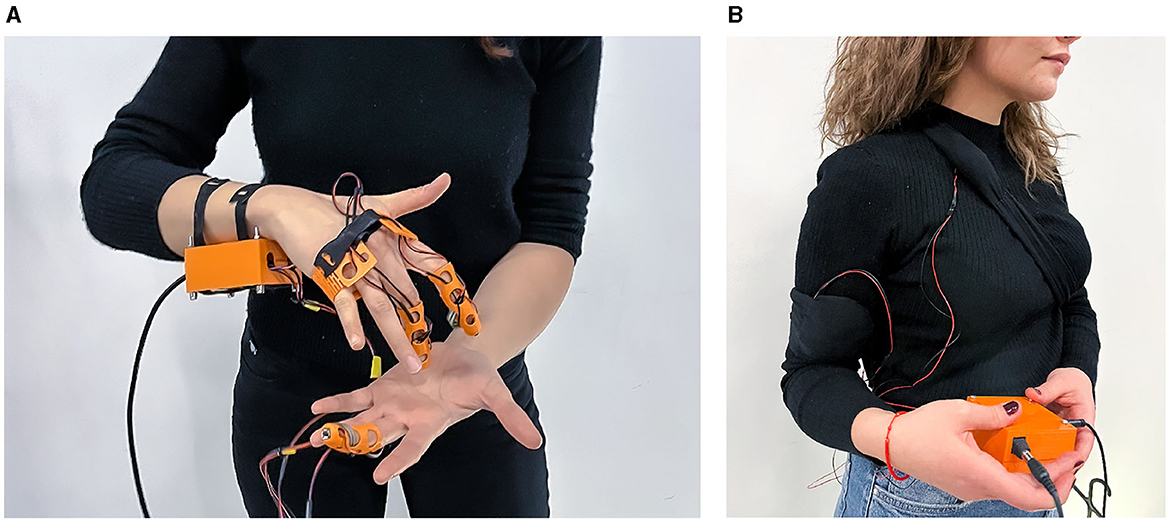

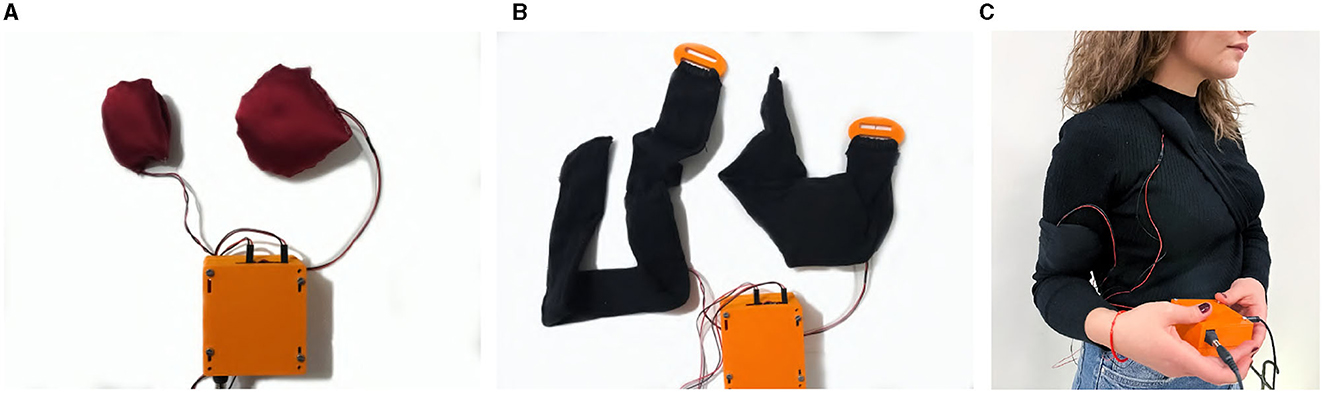

This manuscript extends two previous studies (Cavdir and Wang, 2020; Cavdir, 2022). The first study, Felt Sound, investigates how to design a shared performance experience for Deaf, Hard of Hearing, and hearing individuals (Cavdir and Wang, 2020). It presents the first design of a novel movement-based musical interface. This interface is designed based on sign language gestural interaction, providing kinesthetic feedback from its gestural expression and in-air vibrotactile feedback from a surround subwoofer array. In the second study, Touch, Listen, (Re)Act (TLRA) (Cavdir, 2022), this instrumental interaction is evaluated with a Deaf dancer, and the listening experience is expanded with an additional vibrotactile modality. The second study examines the co-design process with the Deaf participant and develops wearable vibrotactile haptic devices. Figure 1A shows the musical interface and Figure 1B shows the haptic interface. The prior studies contributed to different design stages of this research. The current study uses the musical interface developed in the first study (Cavdir and Wang, 2020) and wearable haptic interfaces developed in the second study (Cavdir, 2022).

Figure 1. The musical interface is used for performance and the design of compositional vocabulary while the haptic interface is used to receive the music information. (A) The musical interface. (B) The haptic interface.

In the current study, we develop audio-tactile compositional elements with the Deaf co-designer and later qualitatively evaluate the listening experience with a focus group. This study aims to survey the listening experience enhanced by body movements and multimodal haptics with participants whose professional backgrounds are in music, dance, design, and accessibility research. The participants evaluated listening simultaneously with two modalities of haptic feedback (through in-air sound vibrations and on-skin tactile vibrations). They also explored different locations on the body where the haptic modules can be worn. Drawing from their professional background and knowledge, they provided use cases where such listening experiences can be utilized.

We investigate the following research questions:

• How can we design a movement-based musical experience that enhances listening using multimodal haptics?

• What are the best practices for audio-tactile composition?

• What are the perspectives of different professionals on inclusive music practices, using haptic assistive devices, for the long-term goal of increasing participation of DHH performers?

The study investigates these research questions from the Design for Social Accessibility (DSA) perspective by evaluating the audio-tactile compositional elements both from the Deaf co-designer's and the hearing participants' perspectives. DSA is a design practice developed by Shinohara and Wobbrock (2016) to incorporate the perspectives of the participants with and without disabilities on accessibility issues. Shinohara et al. (2018) outline three tenets of design for social accessibility and find that involving “multiple stakeholders” increased the designers' awareness and sensitivity to the different dimensions of accessibility design. The authors also show that “expert users' feedback and insight prompted [the designers] to consider complex scenarios.” Similarly, we collected feedback from a focus group whose expertise extends to music, audio engineering, dance, design, and accessibility in arts. Our goal in involving these stakeholders draws from understanding different approaches to audio-tactile design for hearing accessibility in music. We discuss the focus group participants' experience compared to the Deaf co-designer's experiences.

This article primarily focuses on the third exploratory study that presents the sound design and compositional practice for audio-tactile music. The study qualitatively evaluates the participants' embodied listening experiences with the audio-tactile composition and multimodal (in-air and on-skin) haptic feedback. It surveys the perspectives in inclusive music-making of different stakeholders with backgrounds in music, dance, design, and accessibility research. In this work, we present three main contributions. First, we present the sound design and mapping when working with a movement-based musical interface and multimodal haptics. Our compositional approach for audio-tactile integration is detailed. Second, we report the focus group's listening experience enhanced by movement interaction and multimodal haptics. Their experience is compared and contrasted to participants' experiences from the earlier study to understand the effects of the haptic modalities on the listening experience. Third, we provide design implications and use cases of this musical experience based on the perspectives of the focus group participants with different professional backgrounds. Lastly, we discuss the limitations of the current design space.

The rest of the article is organized as follows: Section 2 summarizes the background and related work. Section 2.2 discusses our design considerations. Section 4 details the methods of conducting user studies, implementation of the physical interfaces, and our approach to sound design and composition. Section 5 reports our findings through qualitative and performance-based evaluation across three study iterations. Lastly, the implications and limitations of our study process are discussed in Section 6.

More than fifteen percent of the population experiences some level of hearing loss (Blackwell et al., 2014). However, the communication and collaboration opportunities for deaf, Deaf, and Hard of Hearing (DHH) communities are significantly limited. These individuals experience difficulty in perceiving sound characteristics in music as well as receiving music for hearing well-being. To support their musical experiences, researchers develop examples of assistive technologies and accessible digital musical instruments. Most of these technologies support users' engagement with music either with visual (Chang and O'Sullivan, 2008; Fourney and Fels, 2009; Grierson, 2011; Petry et al., 2016; Deja et al., 2020) or vibrotactile representations (Remache-Vinueza et al., 2021). These representations often provide musical information to substitute the missing senses while some utilize these stimuli to enhance this user group's musical experience. Nanayakkara, Wyse, Ong, and Taylor develop assistive devices with visual and haptic displays (Nanayakkara et al., 2013). Similarly, Fourney and Fels (2009) provide visuals to inform “deaf, deafened, and hard of hearing music consumers” about musical emotions conveyed in the performance, extending their experience of accessing the music of the larger hearing culture. Burn (2016) applies sensory substitution in designing new interfaces using haptic and visual feedback for “deaf musicians who wish to play virtual instruments and expand their range of live performance opportunities.” He highlights the importance of multisensory integration for deaf musicians when playing electronic instruments and integrates additional feedback modalities into virtual instruments. Similarly, Richards et al. (2022) develop a wearable, multimodal harness to study tactile listening experiences with hearing participants. These experiences are delivered via extra-tympanic sound conduction and vibrotactile stimulation of the skin on the upper body. Iijima et al. (2022) include gesture-based interaction to support DHH individuals' musical experiences.

Research on haptic interfaces often provides new technology or interaction to substitute the hearing sense, provide musical information through touch, or extend auditory perception through multisensory integration. However, tools and frameworks for composition and sound design are limited. A few examples of these limitations include lack of awareness of and access to the existing musical creativity tools (Ohshiro and Cartwright, 2022), the assistance of hearing individuals (Ohshiro and Cartwright, 2022), limited integration and representation of sign languages in digital tools (Schmitt, 2017; Efthimiou et al., 2018), and closed-caption systems' inability to convey musical features (Yoo et al., 2023). Pezent et al. (2020) develop an open-source framework to design and control audio-based vibrotactile stimuli. Gunther and O'Modhrain (2003) approach this gap from an aesthetically-driven perspective. They propose a compositional approach for “the sense of touch,” using a wearable device with haptic actuator arrays. They develop a tactile compositional language based on “musically structured spatiotemporal patterns of vibration on the surface of the body.” Remache-Vinueza et al. (2021) present an overview of methods for audio-tactile rendering and strategies for vibrotactile compositions. Their review provides insights and analysis on how specific musical elements are feasible for audio-tactile rendering while it further highlights the remaining challenges in this field due to the cross-modal relationship in audio-tactile music perception.

In addition to technological developments, some researchers address the social aspect of hearing accessibility and study how collaboration across diverse abilities can be built. For example, Søderberg et al. (2016) facilitate collaboration between hearing and deaf musicians in music composition. They study which musical activities, such as creating beats, allow for improved collaboration, and they report that “in order for the target groups to create melodic sequences together [...], more detailed visualization and distributed haptic output is necessary.” Hearing abilities present diverse profiles even within DHH communities. To address this diversity, Petry et al. (2016) customize the design of the visual and vibrotactile feedback by considering interpersonal differences in Deaf individuals' needs and preferences.

Despite a wide range of accessibility research on hearing health and well-being with music (Agres et al., 2021), the current literature still lacks representations of DHH participants. The active participation of these individuals is crucial both in design and performance. Research on existing participatory practices includes perspectives of audience members or designers. Turchet et al. (2021) engage the audience to partake in performances using “musical haptic wearables.” Similarly, Cavdir et al. (2022) construct new haptic tools, including handheld devices and concert furniture, to improve Cochlear Implant (CI) users' concert experiences in a mixed audience. To enable Deaf participants' inclusion as co-creators, Marti and Recupero (2019) conduct workshops to develop augmentations for hearing aids for better representation of their aesthetics, self-expression, and identity.

These participatory approaches help us develop more social design practices. Social accessibility emphasizes the social aspects of assistive technologies over their functional aspects (Shinohara and Wobbrock, 2016). Quintero (2022) discusses the importance of designing artifacts for real-life scenarios to be used by people with disabilities. Design for social accessibility relates to “users' identities and how their abilities are portrayed” in social contexts. In the context of DHH users, social acceptability of assistive technologies relies on the description of abilities, identification with Deaf culture, and the context of use (Alonzo et al., 2022). With this framework, we approach our design process from an aesthetically-driven perspective, considering hearing inclusion through performance-based, embodied practices, and explore the perspectives of participants with diverse hearing abilities.

In our research process, we highlight inclusive design methods supported by embodied practices throughout all the design stages. We emphasize the felt sensations and felt experiences of music and develop gesture-based design and composition approaches, specifically for diverse hearing abilities and backgrounds.

Our main design consideration unpacks the end goal of accessibility in musical experiences by applying inclusive design practices to musical interface design, sound design and composition, and performance. The inclusive design supports our process toward participatory practices with DHH artists (Cavdir and Wang, 2022b). To increase inclusivity, we employ embodied interaction methods in three ways: movement-based design, bodily listening, and participation. We apply movement practices throughout several design stages from exploration with research through embodied design to performing with movement materials. Specifically, when working with individuals with hearing impairments, dance and movement practices offer an additional channel for expression, learning, and understanding of musical materials. For example, we leverage rhythmicality in both practices to bridge between listening and performing. Similarly, storytelling in choreography and composition is coupled to enhance the communication between the musician and the dancer (Cavdir, 2022).

Another motivation behind our embodied approach is to more flexibly adapt to Deaf participants' auditory and sensorimotor diversities, offering new ways for the participants to not only express but also listen through the body. We offer this bodily listening experience to accommodate listeners with hearing impairments, beyond non-aural means of musical expression. Although “decoding which musical features are perceived through hearing or through the body remains unclear” (Gunther and O'Modhrain, 2003), the listening experience is improved by receiving musical information through tactile sensations that are specifically designed and composed for Deaf performers. This composition redirects participants' attention from solely auditory listening to more tactile, visual, and kinesthetic listening.

Tancredi et al. (2021) highlight how embodied perspectives not only inspire new design approaches but also change definitions and designs for accessibility. Increased accessibility necessitates the active participation of the user group in the design process and shared spaces for creativity among designers and performers (Quintero, 2022). Our long-term goal aims for the active participation of DHH individuals as performers and co-designers when designing technologies for hearing accessibility. Such participation is especially crucial in “design for experiencing” because as a “constructive activity,” it requires both explicit and tacit knowledge gained from both the designer and user actively accessing the user's experience (Sanders, 2002; Malinverni et al., 2019). Malinverni et al. (2019) inform their design process through meaning-making in embodied experiences beyond the limits of verbal expression. Similarly, we integrate embodied practices into our participatory process to support the expression and articulation of the bodily knowledge gained through diverse sensory and motor experiences (Moen, 2006; Loke, 2009). These embodied practices help us define new non-aural ways of communicating and interpreting musical content. This exploration is possible by involving different stakeholders beyond the users. For example, we explore how our sign language interpreters use local sign language definitions and metaphors to describe sounds and sound qualities to deaf individuals. Embodied practices and participatory methods support each other in the design process.

Embodied experiences include felt sensations. Regardless of hearing abilities, felt sensations diversify musical experiences, ranging from designing musical interfaces, performing, and composing to listening. We design and compose to increase the felt sensations delivered from music by (1) providing vibrotactile information, (2) delivering this vibrotactile feedback through multisensory experiences, and (3) highlighting the first-person perspective (Loke, 2009) to listening.

We design on-skin haptic interfaces to communicate musical information while these interfaces deliver the same music signal felt through in-air sound vibrations. We explore the sensations that are felt through sound in different body parts, such as the torso, arms, chest, or fingertips. We also combine different modalities of haptic feedback to enrich the listening experience not only for DHH but also for hearing listeners. Our goal is to emphasize an internal listening experience and shift the participants' focus onto their bodies regardless of being observed (Cavdir and Wang, 2022b). Turning the attention to the bodily, felt sensations and movement expression emphasizes first-person perspectives. Even though visual cues are inevitably provided through movement expressions, one's own bodily experience is prioritized over the third-person perspective. For example, the listening explorations encourage the inner motivation of the participants to move while listening. This consideration translates to exploring ways of listening in relation to the sound source, such as touching or moving around the speakers or placing the wearable interfaces on different parts of the body. Similar to how Merleau-Ponty (1962) emphasizes “the instrumentality of the body,” we integrate the felt qualities of the moving body into our design and composition approach. We achieve this instrumentality by turning performers' body movements into a tool to create music while simultaneously responding to the interface rather than an external expression. The continuous detection of performers' motions through wearable interfaces increases performers' awareness of the living interaction between their bodies and the instrument. From the performer's perspective, this experience creates opportunities to share first-person experiences among performers. From the audience's perspective, these experiences are made visible by connecting the vibrations created by the music with the movements of the dancer.

To design a musical experience for a wide range of hearing abilities, composition and sound mapping should include new modalities in addition to auditory perception. We follow a gesture-based approach to highlight the semantic mapping among the vibrotactile, bodily movement, and auditory elements (Cavdir and Wang, 2022a). Brétéché (2021) notes that Deaf listeners are not only sensitive to vibrotactile perception but also attentive to visible movements that are created “in music, for music, or by music.” A gestural composition offers this level of musical understanding that draws connections between body movements and sound events. With this approach, we design the sound mapping “by first defining a set of gestures and movement patterns; in other words, gestures come first and the instrument design is adapted to the gestural vocabulary” (Cavdir and Wang, 2022a).

The gesture-based approach to mapping integrates the performer's body into the physical interface and the body movement into the sound design. More specifically, gestural vocabulary defines the physical affordance of the musical interface, leading to sound mapping. This interdisciplinary perspective combines composition and choreography when developing performance practices. For most listeners with hearing impairments, the musical experience is significantly impacted not only by the visual elements of the body movements (Brétéché, 2021) but also by the simultaneous movements in response to the music (Godøy and Leman, 2010). We apply this interaction in performance by engaging the gestural vocabulary as the basis for two layers of mapping: (1) between motion-sensor data and sound and (2) between music and dance gestures. We explored the shared tools and techniques between choreography and composition while incorporating discipline-specific techniques (e.g., from dance) to accommodate both the technology and the performers' special needs. Choreography techniques, such as mirroring, structured improvisation, gestural construct, poetry, and storytelling, are applied to the musical composition to emphasize the kinesthetic communication between performers whereas commonalities such as rhythm, form, and structure are emphasized to create a shared context. Moreover, the choreography techniques are integrated into the music practice and composition to lower the access barriers for performers who may have less musical experience.

The current study iteratively extends two previous studies. The first study develops a movement-based musical interface and a performance space to deliver physical sensations through in-air sound vibrations (Cavdir and Wang, 2020). In the current study, a new prototype (see Figure 1A) is developed for this musical interface and the interface is used for movement explorations, performance, and gesture-based composition. The second study investigates the co-design process of wearable haptic modules and movement mapping for co-performance with a Deaf dancer (Cavdir, 2022). In the current study, these interfaces are used for stimulating on-skin vibrotactile sensations in the listening experiment. This section details how the previous two studies contribute to the current work and provides the methods used in the current study. The three studies follow the key design considerations, detailed in Section 2.2.

The first study included a performance series with 8 audience members. The performance was created with a movement-based musical interface whose gestural interaction was designed based on American Sign Language gestures. It delivered physically felt sensations on the body through in-air sound vibrations from a subwoofer array. The study qualitatively examined the audience's experience with this performance context (Cavdir and Wang, 2020).

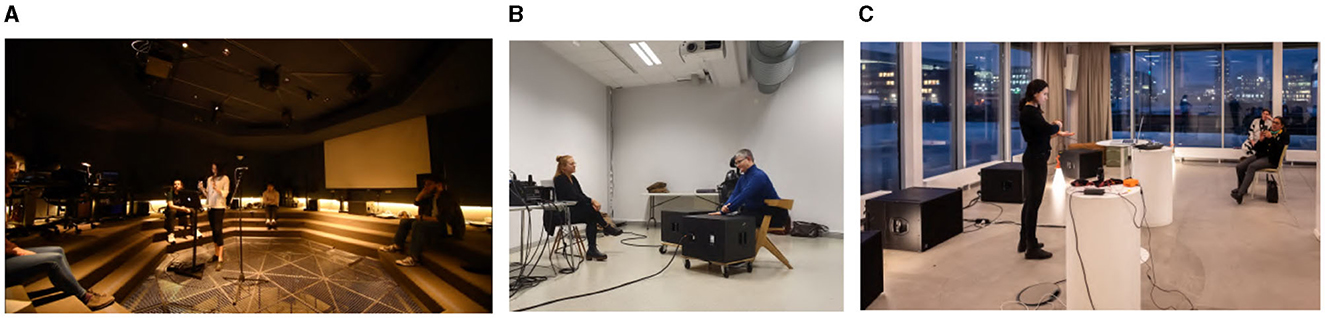

The performance setup provided the audience members with closer proximity to both sound sources and the performer (see Figure 2A). It offered a musical experience that was listened to through the whole body beyond solely auditory processes. The performance highlighted that the composition can create a felt listening experience. The listeners' insights were collected through an open-ended qualitative questionnaire and an exit discussion. The study group lacked Deaf or Hard of Hearing participants in the listening sessions; however, the participants' reflections provided important results for future studies in both delivering physical sensations through composition and developing a “one-to-one” connection between the performer and the audience (Cavdir and Wang, 2020).

Figure 2. Three performance spaces are shown. (A) The first space includes an 8-subwoofer speaker array in surround with participants sitting close to them. (B) The second space is where the exploratory workshops were held. The co-designer could move close to or away from the two subwoofer speakers while mostly listening to vibrations by touching the speakers. (C) The third performance space included four subwoofer speakers facing the audience. This room included several acoustically reflective surfaces such as window walls and metal ceiling structures.

The second study consists of three co-design workshops with the researcher and a Deaf dancer/co-designer (P2.3, see Section 4.2) (Cavdir, 2022). Co-designers developed a wearable vibrotactile interface and a movement mapping between musical gestures and dance gestures. Each workshop explored new iterations of the haptic interfaces and the differences in listening with either on-skin haptics (with the wearable modules) or in-air vibrations (with the subwoofer array). Figure 2B shows the design space from Workshop 2. Cavdir (2022) details the design process throughout the three workshops.

In the workshops, the data is collected through questionnaires completed after each workshop, observations, a final interview with the co-designer, and creative artifacts in the form of haptic interfaces, movement mappings, and sound excerpts.

In the third study, the musical interface is publicly performed. Due to COVID-19 restrictions, the Deaf dancer (P2.3) could not attend the performance, and the recordings of the co-design process and movement mapping are presented to the audience. After the public performance, the focus group (FG) participants listened to the audio-tactile compositions using in-air (through subwoofer speaker array) and on-skin (through wearable vibrotactile devices) haptics. This study asked the FG participants to discover new placements of the wearable interfaces on the body and movement responses to the composition compared to how they were initially co-designed with the Deaf participant. They also had opportunities to co-perform with the musician and express their movement response to the listening (see Figure 11D). In addition to trying haptic interfaces, some FG participants played with the musical interface. The focus group participants shared their reflections on the listening experience with multimodal haptics, the composition, and its perceived qualities. The focus group's feedback and insight are collected through an exit questionnaire and a group session. In this study, we focused on collecting feedback on musical qualities, compositional approach, and tactile listening, in addition to FG participants' first-person experience during listening and movement explorations.

Table 1 shows the methods and materials used in the three studies.

Table 2 presents all participants' demographics across the three studies, including the participants' user code in the related study, their hearing, backgrounds in music, arts, and research, and the primary communication languages. The demographic information is presented based on the participants' self-reports.

The first study (P1-3) was motivated by the goal of creating a performance experience for DHH participants and ASL signers. This performance series included three sessions and eight participants (P1.1–P1.8). Participants were invited via email and provided their oral consent before proceeding with the study. They attended one of three live performances as audience members, evaluating the composition's visual, kinesthetic, tactile, and auditory elements. No participant reported any hearing impairments and only one participant had a background in signed communication (in Korean and American sign languages). The participants had considerable music training and background with an average of more than 18 years of experience. All participants were familiar with performances using new musical interfaces (see Table 2).

The second study (W1-3) was conducted with the support of ShareMusic & Performing Arts Center in Malmö, Sweden. Three participants (P2.1–P2.3) who were contacted and invited via email through the center's network attended the first workshop. At the beginning of each workshop, the participants provided their oral consent to participate and to be audio-video recorded. The first workshop included interviews with the participants: a performing artist (P2.1) who works with audiences with hearing disabilities and has a Deaf daughter, an accessibility researcher (P2.2) who has hard-of-hearing relatives, and a Deaf dancer (P2.3) who primarily communicated with Swedish sign language (SSL). In the interviews, we discussed participants' cultural associations in the context of Deafness in Sweden. While P2.1 associated herself mostly with the hearing communities, P2.2 and P2.3 associated themselves equally with the hearing and DHH communities. The participants also had mixed backgrounds in their communication languages (see Table 2). P2.1 and P2.2 both contributed to the ideation design phase but could not participate as co-designers in the next two workshops. We continued the other two workshops with the Deaf participant, P2.3.

The third study session included 11 participants, none of whom reported hearing impairments; one participant reported sensitivity to vibrations and low frequencies due to a previous head injury. The participants attended a presentation informing them about the study and the design process, presenting performance excerpts with the dancer (P2.3), and a live performance. A focus group (P3.1–P3.5), comprising 5 of the 11 participants, attended a demo session with the interfaces in addition to the public session. These focus group participants had backgrounds ranging from dance, musicology, and sound technologies to accessibility research and professions. They were invited via email and provided oral consent to be audio-video recorded. The focus group participants volunteered their reflections on the vibrotactile interface, the performance, and the listening experience in an exit questionnaire and a group discussion.

Following the co-design of haptic interfaces in Study 2, new audio-tactile compositions are created collaboratively with the Deaf dancer (P2.3) for the third study. Additionally, for the third study, a new prototype of the musical interface is developed. These prototypes are presented in Section 4.5. The performance was conducted in a room with glass windows and metal support materials, which created rattling noises in response to the composition (see Figure 2C).

Study 3 consists of short listening sessions of these composition excerpts with the focus group. The composition excerpts are played live by the researcher using the musical interface in Figure 1A. The FG participants first listened with in-air haptics and were asked to explore interacting with the subwoofer array such as moving closer to or touching the subwoofer speakers. The FG participants later explored listening simultaneously with the in-air and on-skin haptics. Lastly, participants explored listening with only on-skin haptics or by combining two modalities.

After the listening sessions, we asked participants to explore placing the wearable haptic modules on different locations of the body. The on-skin haptic device included two modules as the Deaf dancer (P2.3) co-designed it. However, participants were allowed to wear either one module or both, as they wished. Finally, FG participants were asked to voluntarily attend a short co-performance exercise with the musician as they listened with two haptic modalities.

The participants' informed oral consent was collected before the study. Their written feedback is collected in an exit questionnaire. The group discussion is audio-recorded and later transcribed using otter.ai3 and manually corrected. The movement improvisations and explorations of haptic module locations are observed and visually documented by the researchers. Along with observations, the questionnaire responses and discussion transcripts are analyzed to report participants' experiences. Their experiences with multimodal listening (with in-air and on-skin haptics) and audio-tactile composition are compared with the Deaf dancer's (P2.3) experience.

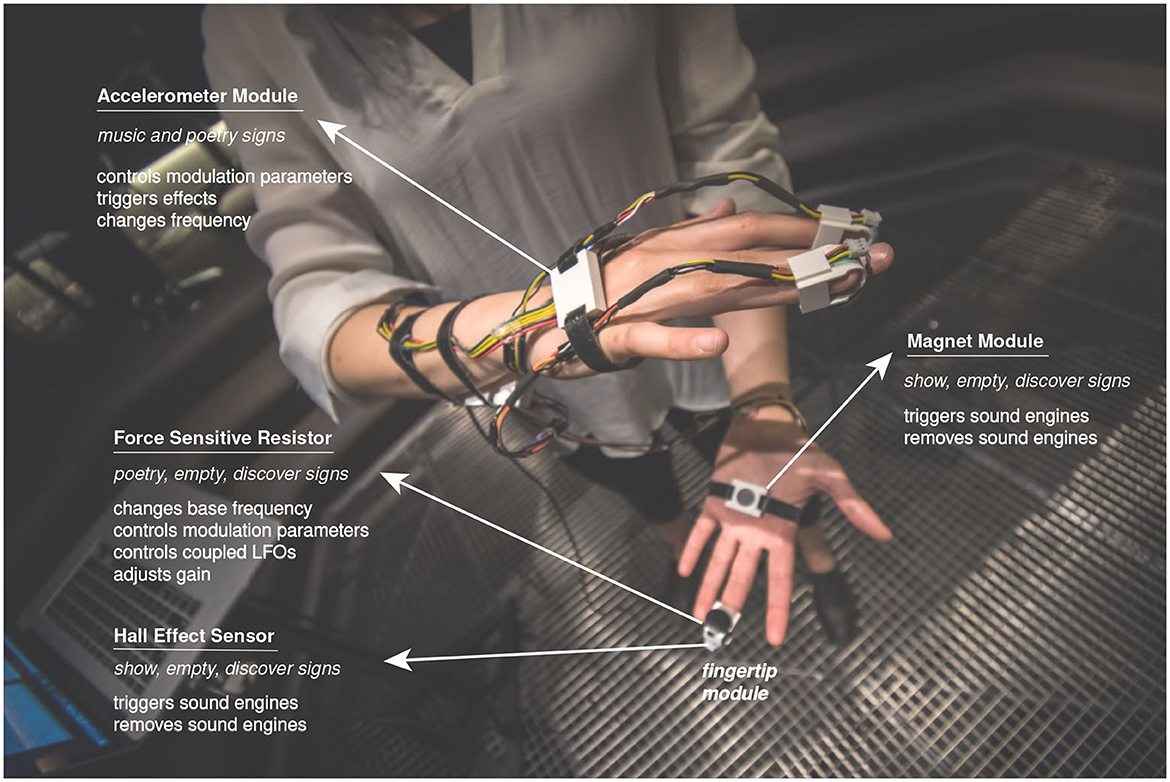

The musical interface consists of modular components that each contribute to detecting different combinations of finger and hand gestures. These modules include passive and active functional elements that are embedded during the 3D printing process (Cavdir, 2020). Cavdir and Wang (2020) detail the implementation of the interface and its gestural interaction design and mapping. Figure 3 presents the musical interface's first prototype.

Figure 3. The first prototype of the musical interface includes four modules, three of which are shown: accelerometer module, fingertip modules [consisting of force sensitive resistors (FSRs) and Hall effect sensors], and magnet module. The accelerometer module contributes to detecting the music and poetry signs while fingertip modules contribute to detecting the poetry, empty, and discover signs along with the magnet modules.

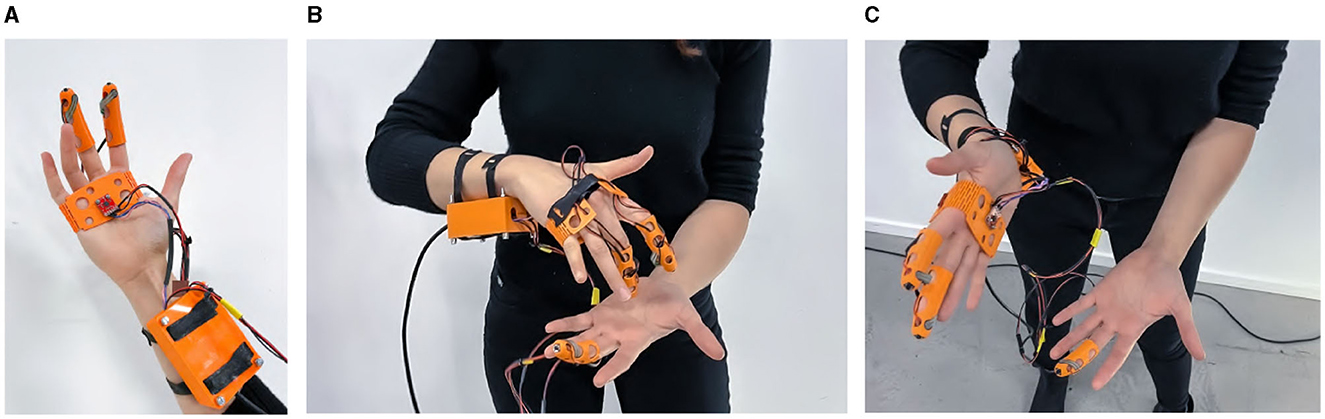

For Study 3, a new prototype is developed using 3D printing with some adjustments to the first prototype. Several parts of the interface were redesigned to maintain its modularity, to more easily connect, and to improve mobility. The fingertip sensors were extended and designed with a grid structure to patch through the cables and sensor extensions (see Figure 4A). Similarly, the accelerometer module was extended to wrap around the palm and provide cables to connect to the main circuit board (see Figure 4B). The accelerometer was fitted inside the palm instead of outside the hand with the same orientation as the first prototype (see Figure 4C). This new design allows the performer to more easily wear the interface while maintaining the same sensor configurations. Compared to the first prototype, only the Hall effect sensors were replaced by the tactile push buttons, eliminating the need for magnet modules.

Figure 4. The second prototype of the musical interface is modified for easier assembly and wearability. (A) A grid structure is designed, the fingertip modules are extended, (B) the Hall effect sensors are replaced with push buttons, (C) and magnet modules are eliminated.

In Study 3, the wearable haptic interface is used to deliver on-skin vibrations. Figure 5 shows the second prototype for the haptic interface. The haptic interface includes two modules that are wearable by the flexible straps around the chest, arms, hands, head, or legs. The interface can be worn while moving with small limitations from the cable connection between the amplifier and the computer. The modules each house an actuator in fabric-foam enclosures. Both haptic modules delivered the same sound signal to the different locations on the body. For the electronic components, we chose the Haptuator brand actuator4 and a generic Bluetooth audio amplifier due to their compact size.

Figure 5. The haptic wearable interface consists of two actuators enclosed in soft fabric modules, an audio amplifier in a 3D printed enclosure (A) and elastic straps to cover the modules (B) for wearability in different locations. The original prototype was designed for one actuator to be worn on the inner side of the upper arm and the other on the chest (C).

Although one module was originally designed to be worn on the chest and the second worn on the arm (Cavdir, 2022), in Study 3, they allow FG participants to simultaneously explore different locations on the body or to wear the modules as designed in Study 2.

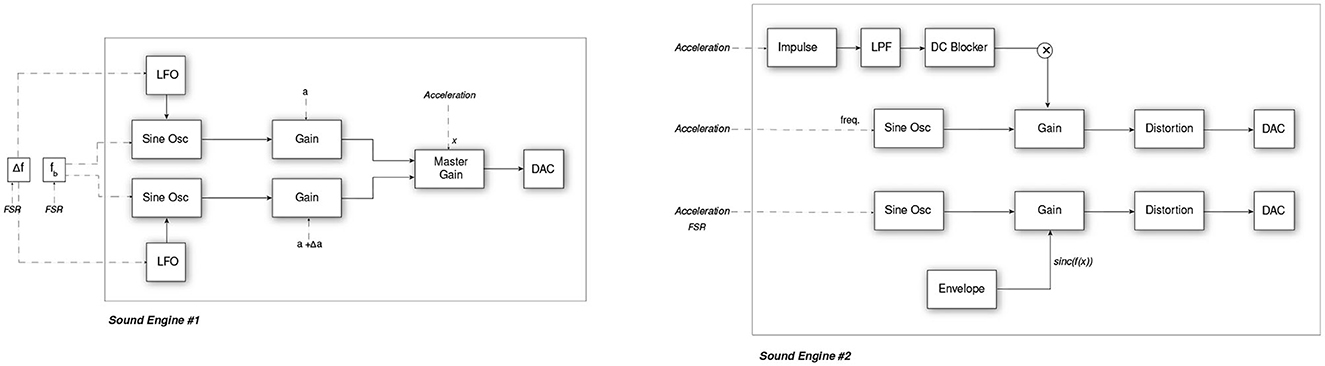

The musical interface's sound design consists of two sound engines that receive the sensor data from the hand interactions and control the audio-tactile feedback to amplify the felt sensations of the music. Each sensor can be started and stopped with the button switches on the fingertip modules.

We designed the first engine with two coupled sine wave oscillators, creating effects such as amplitude modulations (see sound engine #1 in Figure 6). The oscillators are played with a time lag in between. The sine oscillators are connected to a master gain object. Their individual gain is modulated with low-frequency oscillators (LFOs) with a small frequency difference. Similarly, we apply a small gain difference to balance the leading and lagging signals' presence in the composition (Δa). This sound engine creates amplitude and frequency modulations over two closely oscillating sine wave drones. Based on the frequency difference between the two LFOs (Δf), the amplitude modulation changes from a tremolo to a beating effect. With the FSR sensors on the fingertip modules, the base frequency (fb) for both oscillators can be increased and decreased. The base frequency is always kept the same between the two oscillators and only the frequency difference (Δf) between LFOs is modulated. The frequency difference increases with continuous FSR data as the sensor is pressed until the difference reaches a maximum of 10 Hz. The separate gain control with an incremental difference allows for creating different amplitude envelopes. Fast hand movements above a threshold of the accelerometer data trigger a fade-out effect and gradually decrease the master gain. This gesture is performed both to create a decaying envelope to transition to a specific part in the composition and to gradually remove the drones from the soundscape.

Figure 6. Simplified signal flow of the two sound engines shows the sound object, their connection, and some of the data that they receive from the digital sensors.
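The signal flow of the first sound engine can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the sample rate, the linear FSR-to-Δf mapping, the unipolar LFO shape, the default lag and gain values, the linear fade-out ramp, and the function names (`fsr_to_delta_f`, `engine_one`, `fade_out`) are all hypothetical. Only the shared base frequency, the detuned LFOs, the 10 Hz ceiling on Δf, the gain offset Δa, and the fade-out gesture come from the description above.

```python
import math

SAMPLE_RATE = 44100  # assumed sample rate in Hz; not stated in the text


def fsr_to_delta_f(fsr_value, max_delta_f=10.0):
    """Map a normalized FSR reading (0..1) to the LFO frequency difference.

    The linear mapping is an assumption; the text only says the difference
    grows with pressure until it reaches a maximum of 10 Hz.
    """
    clamped = min(max(fsr_value, 0.0), 1.0)
    return clamped * max_delta_f


def engine_one(duration_s, base_freq, lfo_freq, delta_f,
               delta_a=0.1, lag_s=0.05, master_gain=0.5):
    """Render two sine drones whose gains are driven by two detuned LFOs."""
    out = []
    for n in range(int(duration_s * SAMPLE_RATE)):
        t = n / SAMPLE_RATE
        # Unipolar LFOs (0..1) separated by the frequency difference delta_f.
        lfo_lead = 0.5 * (1.0 + math.sin(2 * math.pi * lfo_freq * t))
        lfo_lag = 0.5 * (1.0 + math.sin(2 * math.pi * (lfo_freq + delta_f) * t))
        # Both oscillators share the base frequency; the second starts late.
        lead = math.sin(2 * math.pi * base_freq * t)
        lag = math.sin(2 * math.pi * base_freq * (t - lag_s)) if t >= lag_s else 0.0
        # delta_a slightly lowers the lagging signal's gain.
        mixed = lfo_lead * lead + (1.0 - delta_a) * lfo_lag * lag
        out.append(master_gain * mixed)
    return out


def fade_out(samples, start, fade_len):
    """Linearly ramp the gain to zero over fade_len samples from index start."""
    faded = list(samples)
    for i in range(start, len(faded)):
        faded[i] *= max(0.0, 1.0 - (i - start) / fade_len)
    return faded
```

For example, `engine_one(0.5, base_freq=60.0, lfo_freq=2.0, delta_f=fsr_to_delta_f(0.3))` renders half a second of a 60 Hz drone with a 3 Hz difference between the two LFOs.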

The second sound engine is based on two streams of audio (see sound engine #2 in Figure 6). The oscillators' parameters are controlled with the hand motions in the vertical and horizontal axes detected by the accelerometer. These two streams vary in their modulation structure: one's amplitude modulation and envelope are controlled by a sinc function and the other's oscillations are controlled by the acceleration data. They are both based on low-frequency sine wave oscillations and patched through nonlinear distortion (in Fauck5) before DAC. The distortion effect in this sound engine minimizes the aliasing due to its nonlinearity and adds tactile texture to the sound feedback. While the second stream's frequency is fixed and only its amplitude is modulated, the first stream's frequency is modulated in two ways with the accelerometer data. One modulation is more subtle and based on small hand movements and the other is largely attenuated in the frequency range between 45 and 95 Hz. In addition to skin's sensitivity to low frequencies around 250 Hz (Verrillo, 1992; Gunther and O'Modhrain, 2003), we observed in the first and second case studies that the felt sensations of the in-air sound vibrations are amplified within this range.
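One stream of the second sound engine, a fixed-frequency sine shaped by a sinc envelope and passed through a nonlinear distortion, might be sketched as follows. The text does not specify the distortion function or the envelope rate, so the `tanh` waveshaper, the `drive` and `rate` parameters, the 60 Hz default, and all function names here are assumptions chosen for illustration only.

```python
import math


def sinc(x):
    """Unnormalized sinc, sin(x)/x, with the removable singularity at 0."""
    return 1.0 if x == 0.0 else math.sin(x) / x


def sinc_envelope(t, rate=20.0):
    """Decaying amplitude envelope |sinc(rate * t)|, peaking at t = 0."""
    return abs(sinc(rate * t))


def soft_clip(x, drive=3.0):
    """tanh waveshaper, a stand-in for the unspecified nonlinear distortion."""
    return math.tanh(drive * x) / math.tanh(drive)


def sinc_stream(duration_s, freq=60.0, sample_rate=44100):
    """Fixed-frequency sine shaped by the sinc envelope, then distorted."""
    out = []
    for n in range(int(duration_s * sample_rate)):
        t = n / sample_rate
        dry = sinc_envelope(t) * math.sin(2 * math.pi * freq * t)
        out.append(soft_clip(dry))
    return out
```

The waveshaper adds harmonics above the low-frequency fundamental, which is one plausible way to obtain the rougher tactile texture the paragraph describes.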

For most hearing listeners, tactile sensations can fade into the background of more dominant sensory cues since they might often interact with the world through visual and auditory sensations. On the contrary, for DHH listeners, an understanding of touch might develop more nuanced but their musical experience might be limited to more easily perceivable sound features such as bass frequencies or higher amplitude beats. However, developing analogies between sonic and tactile structures can amplify the felt sensations of haptic compositions to not only translate between modalities but to also expressively communicate musical ideas.

Gunther and O'Modhrain (2003) develop “a compositional language for the sense of touch” based on anatomy, psychophysics, and spatiotemporal patterns of the skin. Their tactile compositional language was built on basic musical elements such as frequency, intensity, duration, waveform (spectral content), and space (location). These building blocks provide us with a fundamental starting point to holistically compose higher levels of tactile compositional patterns and sensations. Gunther and O'Modhrain emphasize that “tactile landscapes” emerge from complex combinations of stimuli.

We employ two approaches to design musical experiences with haptics: (1) composing with two modalities (in-air sound and vibrotactile) of haptic feedback and (2) composing tactile stimuli with higher-level musical structures. By using both in-air and on-skin haptic feedback, we amplify the felt sensations of the vibrations, including the room's response and the body's resonant frequencies. For hearing participants, this combination also delivers more nuanced spectral information supported by either aural or tactile sensations. Additionally, we connect audio-tactile compositional ideas to gesture-based choreographic practices in performance. This approach visually communicates the underlying auditory-tactile and gestural mapping to the audience. With this approach, we utilize multisensory stimulation in the composition based on audio, tactile, and kinesthetic elements and their cross-modal relationship for aesthetic outcomes.

In order to start developing higher-level compositional structures, we identify the key elements that underpin musical structures for tactile sensations. The compositional vocabulary offers a foundation for the design of sound engines that can later be used for composition or improvisation. As a starting point, we identify four elements: frequency and amplitude, envelope, time, and space.

Previous research indicates both that frequency discrimination through tactile stimuli is limited compared to the ear's ability and that the perceived vibratory pitch depends on both frequency and intensity (Geldard, 1960; Geldard and Sherrick, 1972; Gunther and O'Modhrain, 2003; Eitan and Rothschild, 2011). Building upon previous findings, we combine frequency and amplitude to convey the composition's qualitative dimensions. Because we want to deliver in-air vibrations through the subwoofer speakers, we focus on the low-frequency range of 20–220 Hz at high amplitudes. We also deliver low-frequency content for on-skin vibratory stimuli using haptic actuators. Both subwoofer and actuator frequency ranges are limited to low frequencies, with the actuator's resonant frequency at approximately 65 Hz.

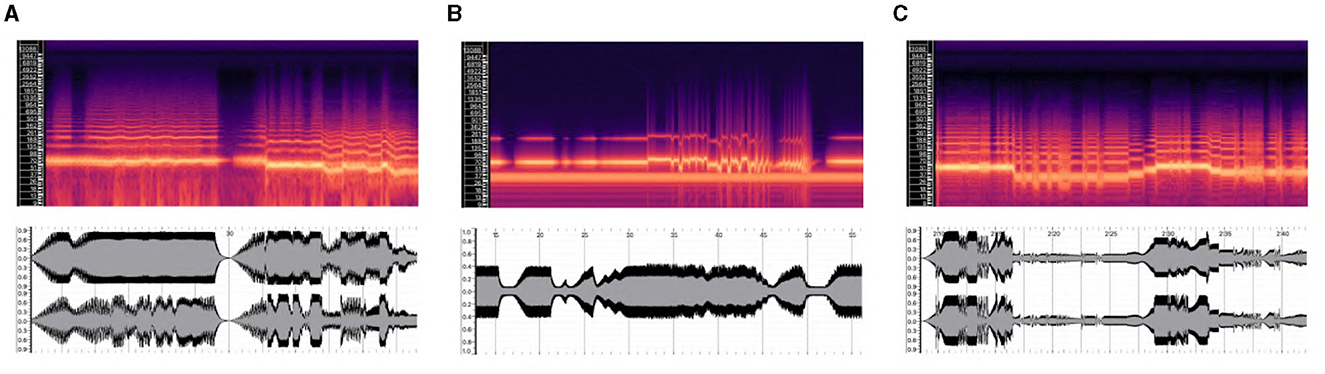

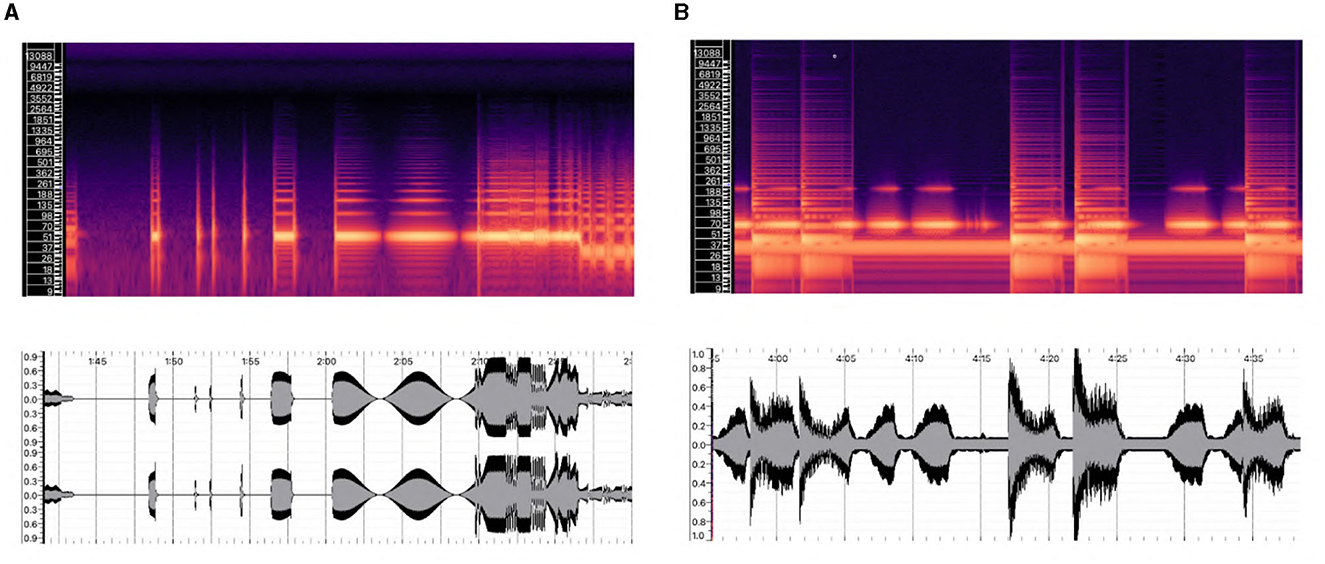

The composition's highest frequencies only reach 280–300 Hz to emphasize the physically felt sensations. Because of the limited range, the frequency content is coupled with amplitude adjustments and modulations. As Gunther and O'Modhrain (2003) discuss, we composed using a continuum of intensities to create dynamic variations and to amplify frequency modulations. By creating beating effects with a maximum of 10 Hz frequency difference between oscillators, the sensitivity to low frequencies of 5–10 Hz increases. Figure 7 shows spectrograms of the frequency and amplitude modulations: frequency modulation from acceleration data (Figure 7A), frequency fluctuations from FSR sensor data (Figure 7B), and discrete frequency change and amplitude modulations from both sensor data (Figure 7C).

Figure 7. The spectrograms of audio excerpts show different types of frequency and amplitude variations: (A) frequency modulation from acceleration data, (B) frequency fluctuations from FSR sensor data, and (C) discrete frequency change and amplitude modulations from both sensor data.
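The relation between a frequency difference and the rate of the resulting beating can be made explicit with the standard sum-to-product identity. As an illustration only, assume two equal-amplitude sinusoids at $f$ and $f + \Delta f$; the symbols $f$ and $t$ are introduced here for this sketch and are not part of the original notation.

$$
\sin(2\pi f t) + \sin\bigl(2\pi (f + \Delta f)\, t\bigr)
= 2 \cos(\pi\, \Delta f\, t)\, \sin\!\Bigl(2\pi \bigl(f + \tfrac{\Delta f}{2}\bigr) t\Bigr)
$$

The slowly varying factor $|2\cos(\pi\,\Delta f\,t)|$ acts as the amplitude envelope and repeats $\Delta f$ times per second, so under this assumption a difference of $\Delta f = 10$ Hz yields ten amplitude peaks per second.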

We utilized the high-amplitude, low-frequency vibrations to influence the felt aspect of the tactile sensations inside the body. To address resonant frequencies of the body, the composition ranges from specific frequency content to broader spectral content. It includes qualitative differences in sound and touch, extending from low-frequency oscillations that carry smoother qualities to more complex spectral content that carries rougher qualities. By changing the distortion and noise parameters, we created different textures. We also achieved similar qualitative differences by introducing silences and phrasing. Moments that include silences or less spectral complexity conveyed lightness qualities whereas compositional phrases with a frequency-rich spectrum communicated heaviness. When composing for auditory-tactile stimuli, these textural qualities are achieved by combining spectral content, modulations in frequency and amplitude, and envelope.

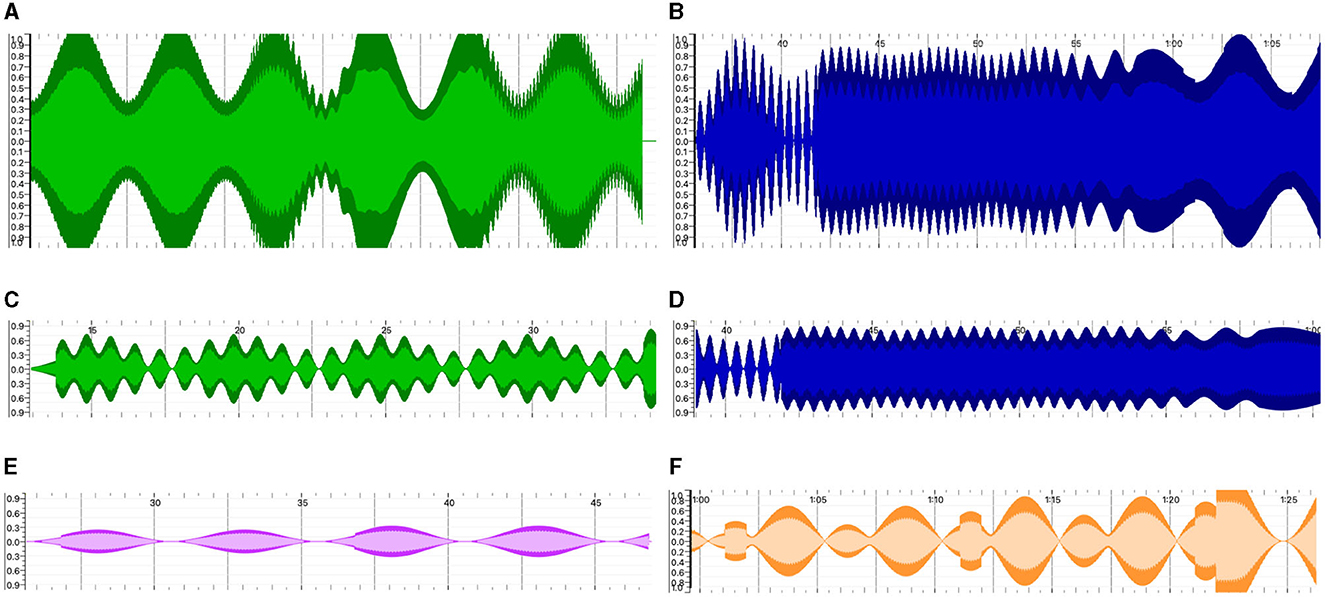

Along with frequency and amplitude, we created a range of felt sensations by applying different envelopes to the auditory-tactile stimuli. We design perceptual effects using different attack, sustain, and decay parameters of the ADSR envelope and different types of modulations. These modulations range from beating and tremolo effects (see Figure 8) to decaying sinc oscillation (see Figure 9B). Figure 9B shows the attack with an impulse and a decay with the sinc function, e.g., two peaks between the time interval of 4:15–4:25 s.

Figure 8. Six examples of envelope designs and their resulting amplitude modulations with coupled LFOs designed for the first sound engine. (A) Frequency and amplitude modulation (beating effect with changing tremolo frequency), (B) frequency modulation with decreasing carrier frequency, (C) envelope for a beating effect, (D) dynamic envelope with an increasing depth, decreasing frequency, (E) envelope with a low frequency modulation, (F) envelope with an incrementally increasing depth.

Figure 9. Although both sound engines play continuous, drone-like sound outputs, rhythmic elements were created by momentarily switching the first sound engine on/off with the Hall-effect sensors for varying durations. This effect, shown in (A), created short notes and staccato notes. Controlling the second sound engine's gain envelope with an impulse input allowed strong attacks and short decay times, shown in (B). Similarly, we created rhythmic variations in the composition with this effect.
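The envelope shapes discussed here can be illustrated with a piecewise-linear ADSR function. This is a generic textbook sketch rather than the envelope code used in the composition: the linear segments, the parameter names, and the requirement that `note_len` be at least `attack + decay` (with all durations positive) are assumptions.

```python
def adsr(t, attack, decay, sustain_level, release, note_len):
    """Piecewise-linear ADSR gain at time t (s) for a note held note_len s.

    Assumes attack, decay, release > 0 and note_len >= attack + decay.
    """
    if t < 0.0:
        return 0.0
    if t < attack:                      # rise from 0 to full gain
        return t / attack
    if t < attack + decay:              # fall from full gain to sustain level
        return 1.0 - (1.0 - sustain_level) * (t - attack) / decay
    if t < note_len:                    # hold while the note is on
        return sustain_level
    if t < note_len + release:          # fall from sustain level to silence
        return sustain_level * (1.0 - (t - note_len) / release)
    return 0.0
```

A short attack with a short `note_len` approximates the staccato notes produced by switching an engine on and off, while a long attack gives the gradually rising sensation described later in this section.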

Two of the sound engines we designed for this composition are based on impulse and sustained stimuli. While the first sound engine creates more sustained stimuli with amplitude and frequency modulations, the second sound engine uses both impulse and sustained signals, allowing us to manipulate textural qualities (see Figure 9A, showing a transition from the first to the second sound engine). For example, the sustained input signals create smoother and more continuous effects while the impulse signal more drastically increases the amplitude.

The composition also includes varying temporal envelopes with slow and fast fluctuations to design different textural effects. As shown in Figure 9B, the duration of these fluctuations allows us to create several ways of grouping sound streams. With the first sound engine, we create modulations and beating effects using two coupled LFOs to control the gain of sine oscillators. By controlling time and frequency differences between the two oscillators, we extend the duration and sustain of the envelope. With the second sound engine, the acceleration data capture the hand movements and modify the amplitude modulations. More subtle hand-waving movements create smoother and slowly oscillating modulation, whereas a fast acceleration change with a hand drop excites a wide spectral range at high amplitudes, creating strong and heavy sensations that are felt not only in the body but also in the performance space.
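Distinguishing a subtle hand wave from a fast acceleration change such as a hand drop could be done with a simple threshold on successive accelerometer readings. The sketch below is only one possible approach: the magnitude-difference test, the threshold value, and the function names are hypothetical and are not taken from the interface's actual mapping.

```python
import math


def magnitude(sample):
    """Euclidean norm of one (x, y, z) accelerometer reading."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)


def detect_fast_changes(samples, threshold=1.5):
    """Return indices where the magnitude jumps by more than threshold.

    The threshold (same units as the readings) is an assumed value.
    """
    hits = []
    for i in range(1, len(samples)):
        if abs(magnitude(samples[i]) - magnitude(samples[i - 1])) > threshold:
            hits.append(i)
    return hits
```

Readings below the threshold would continue to drive the smoother modulation, while a detected jump could trigger the high-amplitude, wide-spectrum response.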

Lastly, we observe that adding variations to envelopes can be effective to deliver movement qualities. For example, a short attack creates a more active and evoking sensation whereas more gradual attacks generate a sensation that rises up and disperses on the skin. Similarly, a decaying oscillation decreases the sensory fatigue from tactile listening compared to fast and intense modulations. By introducing silences between envelope peaks or after fast modulations, we provide chances for rest and increase the sensations of lightness.

Remache-Vinueza et al. (2021) found that “creating a compilation of [tactile] sequences or patterns that change in time results in more meaningful [stimulation] for users” than solely presenting discrete stimuli. Based on our explorations with temporal musical elements, we applied temporal changes at three different levels: (1) compositional, (2) phrasing and grouping, and (3) rhythm and dynamics. On a grosser level, the composition includes temporal variety through how sound patterns were combined by creating musical sections throughout the performance. The composition consists of sections for each sound engine to be played solo and combined. Because more complex and high-level sensations emerge from the combined stimuli, this section is played to highlight the composition's qualitative dimensions. To deliver lower-level musical information and qualities, sound engines are played solo, specifically when working with a Deaf performer.

We create musical phrases and groupings by combining envelope and duration. Duration provides opportunities for listeners to differently group the vibrotactile stimuli when two sound engines are simultaneously played. We create distinct sound events and merge the two sound streams, guiding the listeners' attention accordingly. Although the same phrases are simultaneously presented, we lead the listeners to differently group the tactile phrases with two modalities of the stimuli. Felt qualities change when the same sound signal is received directly on the skin rather than through speakers. The skin quickly develops adaptation to the on-skin vibrations if stimulated over an extended time period (Gunther and O'Modhrain, 2003). When dealing with this phenomenon, we leverage musical phrasing to attract listeners' attention to moments when the stimuli provide more specific musical information and to carry the on-skin vibrations to the background in the moments when the skin's adaptation to the vibrations increases. Additionally, inserting rests and silences significantly helps work with this physiological phenomenon.

Although tonal structures of music are challenging to deliver through haptics, rhythmic and dynamic elements more clearly translate to tactile sensations. We created rhythmical variations primarily using envelopes (see Figure 9). Both sustained and iterative sounds carry rhythmic information. For example, the dabbing gesture with the latch sensor creates staccato notes with fast attack and decay times. Similarly, we added rhythmical qualities in the sustained sounds either by shaping the envelope through modulations in the composition or with gestural data in the sound mapping.

In addition to stimulating different locations on the body, two tactile modalities allowed us to explore spatial features. By using a surround subwoofer array, we include the space and its acoustic features in the musical experience. The first performance series was held in a listening room with an 8-surround subwoofer array. In this listening area, the audience members sat close to these subwoofers and were encouraged to touch the speakers, enabling them to receive vibrotactile feedback through touch in addition to sound. In the second performance, we continue delivering in-air vibrations through another subwoofer array; however, the seating setup was modified to accommodate the musician's and dancer's (P2.3) movement. The audience members were encouraged to walk around the space and touch the speakers. Figure 2 shows the listening spaces where the performances happened. Figure 2A presents the audience members' proximity to the speakers according to their listening preferences, Figure 2B presents the workshop room with only two speakers to compare and contrast the listening experiences across two modalities, and Figure 2C displays the final performance area, showing some listeners moving closer to the speakers. This final listening space included more reflective surfaces and was larger compared to the listening room in Figure 2A, which was acoustically insulated.

Combining in-air and on-skin vibrations affects the listeners' perception of source localization and spectral content. The in-air vibrations emphasized the composition's embodiment and immersion (Cavdir, 2022) and the listening space highlighted different spectral content. The on-skin vibrations delivered the musical information in detail. Although on-skin vibrations were initially designed for the Deaf performer, they were offered to all participants. Both haptic modalities delivered the same musical composition.

Gunther and O'Modhrain discuss that the positioning of the tactile stimuli on the body surface is “a first-order dimension for the tactile composition” representing pitch in relation to musical composition. We leveraged this positioning to amplify the vibrotactile sensations by layering the same audio-haptic signal at two different locations (chest and upper inner arm). Since the sensitivity to vibrations changes between the chest and arm, both the complex combination of musical features in the composition and the spatial grouping from perceptual organization create higher-level patterns and sensations. These locations can be increased or coupled to create vibrotactile movement on the skin. However, because the performance between the musician and the dancer includes movement and spatial variations, for simplicity, we restricted the design of the haptic interfaces to two locations on the body and to simultaneously deliver the same signal.

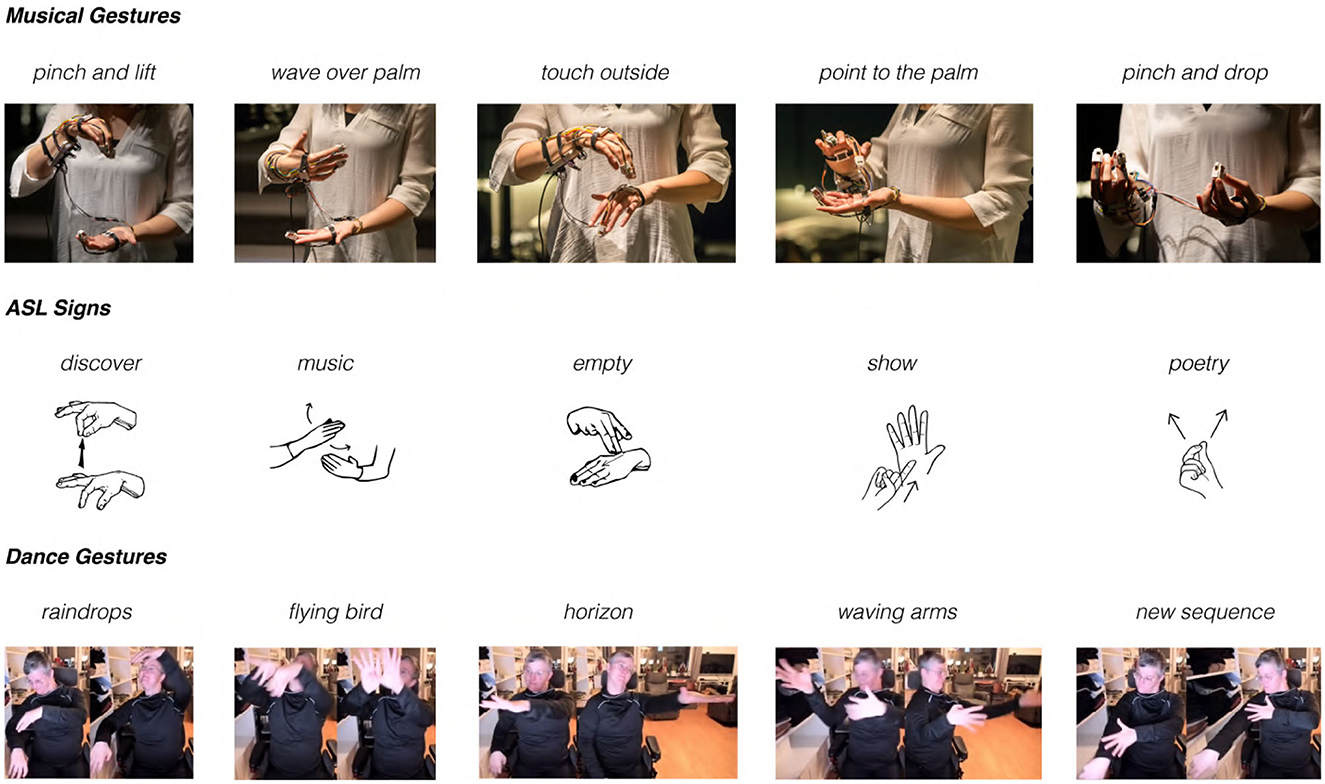

As an overarching research practice across three study iterations, movement-based interactions are maintained either with musical interfaces or in response to music. In the workshop series, we co-created a new composition, movement vocabulary, and choreography with the dancer (P2.3). We designed the mapping between sign language-based musical and dance gestures. Figure 10 presents the musical gestures on the top row, their signs from ASL in the middle row, and the corresponding dance gestures in the bottom row. With the discover sign, the dancer performed raindrop-inspired movements; with the music sign, she imitated a bird's flying; the empty sign cued a scanning-the-horizon gesture for the dancer; with the show sign, the dancer waved her arms with the intensity of the vibrations; the poetry sign initiated a new sequence and the dancer changed location on stage. The dance gestures were suggested and choreographed by the dancer based on her repertoire. She created this choreography by developing a narrative based on the musical composition and her tactile listening experience.

Figure 10. The dancer (P2.3) developed her movement vocabulary from her dance repertoire in relation to the musician's gestures. The gestural vocabulary for the musician was also recomposed. Co-designers created this mapping between the two gestural vocabularies and the vibrotactile stimuli and utilized the mapping as performance cues between co-performers.
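The shared gestural mapping can be summarized as a simple lookup table. The sketch below only restates the sign-to-gesture pairs listed in the text; the dictionary name `GESTURE_MAP`, the helper `dance_cue`, and the fallback string are hypothetical and do not reflect how the performers or the interface actually store the mapping.

```python
# Sign performed by the musician -> dance gesture cued for the dancer,
# restated from the description of the co-designed choreography.
GESTURE_MAP = {
    "discover": "raindrop-inspired movements",
    "music": "imitating a bird's flight",
    "empty": "scanning the horizon",
    "show": "waving arms with the intensity of the vibrations",
    "poetry": "starting a new sequence and changing location on stage",
}


def dance_cue(sign):
    """Look up the dance gesture for a sign (case-insensitive)."""
    return GESTURE_MAP.get(sign.lower(), "no cue defined")
```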

Pitch changes, amplitude modulations, and rhythmic elements are the most common themes when discussing musical features. FG participants (P3.1, P3.3, P3.5) and P2.3 commented that they were able to feel the musical phrasings and amplitude envelope through both haptic modalities. These features are more frequently discussed than pitch changes when listening with the in-air haptics using subwoofers. P2.3 also explored creating different rhythmical patterns using both in-air and on-skin haptics individually.

One FG participant, P3.4, expressed that he was able to feel the pitch change but when the listening was through two modalities (in-air and on-skin), the pitch information was less nuanced. He thought that some of the spectral information was received aurally, but he could not specifically detail which spectral information. He reported his experience:

“It's kind of confusing to have the audio at the same time because it is so much more vibrations from [the haptic modules] but some of the [audio information] must come from [the speakers]. Because I can't feel some of the more spectral things [from the haptic modules], they need to come from the subwoofers.”

During the workshops and compositional practice, the Deaf dancer (P2.3) was able to articulate the pitch changes and the overall register of the voice: “[...] it sounds like a female singing.” When listening to the compositional elements with the on-skin haptics, she simultaneously narrated her experience by expressing when she felt pitch getting lower or higher. She was not able to articulate the pitch changes with the in-air haptics.

Another FG participant (P3.3) highlighted that listening with the on-skin haptics can increase one's sensitivity to “dealing with different pitches in the body” as she imagines what it would be like to listen as a deaf person. She suggested incorporating higher-frequency content in the composition.

Not all participants focused on understanding which modality provided the most information about the musical features. P3.1 expressed that “I wasn't hearing and feeling at the same time, it became one thing.” She expressed that the sound enhanced the vibrotactile sensations. Similarly, P3.5 reflected that “the whole listening is not just oral but also like you feel [...] from the speakers but also from the sensations [haptic actuators].” This participant expressed her experience with amplitude and frequency modulations with her movements by shaking her hands and head in the air when she heard these musical features.

Listening through multiple modalities influences the observer's sense of space, felt experience, and bodily expressions. This study investigated participants' felt bodily experiences during multisensory listening through vibrotactile sensations from subwoofer arrays and wearable haptic interfaces. The combination of sound and haptics enhanced the listening that is experienced in the performance/listening space and the body through both on-skin stimulation and movement interaction.

The combination of the auditory and tactile stimuli transforms the listener's sense of space on and around the body. Both hearing participants (P3.1–P3.5) and the Deaf participant (P2.3) reported that the sound from the in-air vibrations was felt both in the room and throughout the body. They also experienced the vibrations through the resonating objects in the room such as glass windows and metal structures. During the workshop sessions (W1-3), the Deaf participant expressed that the intense vibrations created a sensation as if the whole room was moving. They described this sensation by denoting the room as a large kinesphere with their hands and by oscillating this sphere along with vibrations. Among the FG participants, these vibrations contributed to grosser sensations of the space while simultaneously creating an embodied listening experience throughout the body. One FG participant, P3.5, shared her experience of feeling the music through in-air vibrations “inside of [her] body [...] in the torso and entire body.”

Although receiving in-air vibrations through subwoofers extends the listeners' sense of space, this modality poses challenges to decoding musical information. P2.3 reported that the subwoofer speakers provided sensations felt on the body without the nuances of the sound. She needed stronger vibrations to follow the articulations in music and to develop an understanding of the mapping between vibrations and sound. We concluded that utilizing space and in-air vibrations as compositional techniques increases the embodied experience of listening, raising sensations throughout the body and the space around them. However, these techniques blur which sound feature is received from which haptic channel. As P1.3 previously expressed: “I felt like the sounds are not perceived through pinpointed sources, but rather through the entire body.” Similarly, P3.5 detailed this experience and commented that she did not “hear it like a sound but feel it [...] close in the body.”

The on-skin haptic modules offered more sensitive and articulate listening experiences compared to in-air haptics, especially when simultaneously worn at more than one location on the body. P2.3 explained her experiences wearing the haptic modules: “I understood what the sound was about, I could feel the difference. I could feel the diversity and the flow in music.”

FG participants received the sound from both channels. For P3.5 and P3.1, both modalities contributed to one combined listening experience. P3.5 reported that “[she] had to listen in a different way, not only aurally but also in her whole body,” describing it as an internalized listening experience. According to P3.5, the vibrations created a sensation that resembled “a dialogue between skin and heart.” Similarly, she said that “[she] did not consciously separate two different modalities” (sound vibrations from the subwoofer speakers and on-body vibrations from haptic modules), instead “[the listening] became one experience.” P3.1 reported that it was effective to feel the music through vibrations first, “neutralizing the listening experience beyond localized sensations on the body.”

In this study, body movement contributed to articulating bodily felt sensations and musical features through gestural expressions and movement improvisations. For example, FG participant P3.5 chose to listen with closed eyes and improvise movements (see Figure 11D). She expressed that “the skin felt the vibrations that went into the body and then movement grew from there,” pointing to one of the haptic modules worn on the chest. Her movement improvisation can be seen in this performance excerpt.6 P3.2 expressed similar reflections on the sign-based gestural composition, reporting that both the dance and music performance using body movements and the sign language gestures expressed a connection, exuding dance-like qualities.

Figure 11. The hearing participants in the qualitative evaluation session tried the musical interface at different sites on their body: (A) wearing on the chest, (B) wearing and pressing on the wrist, (C) holding in the palm, (D) wearing two of them on the chest and the arm, (E) on their forearm, (F) on the neck, (G) between the palm and fingers, and (H) moving close to the subwoofers. Photo credits belong to Gianluca La Bruna.

Similarly, co-design practice with the Deaf dancer (P2.3) in the workshops (W1-3) included many movement explorations and exercises. She performed using the shared gestural mapping with the musician and expressed that “different vibration patterns served as movement cues” and “co-creating the choreography supported [her] learning of the mapping” between sound and vibration.

In the third study, FG participants were encouraged to move around the space and sense the in-air vibrations from three subwoofers (see Figure 11). In the next activity, the participants tried the haptic modules, exploring new locations on the body.

During the workshops (W1-3), the co-designer (P2.3) selected two locations to wear the haptic modules: the inner arm and chest (Figure 5). The inner upper arm was chosen because the skin there is more sensitive to vibrations, and the chest because its air cavity provides more resonance. After we introduced this design configuration in the third study, the FG participants were provided with the flexibility to choose how they wanted to listen to the music. Two participants wore the modules as designed, with one on the arm and the other on the chest (Figure 11D). One of these participants, P3.5, preferred listening with closed eyes and improvising with her body movements to the music (see Figure 11D). Most of the other participants preferred holding the modules in their hands or pressing them against their inner forearms or their palms (Figures 11A–C, E, G). One of those participants listened by holding a module against her neck (Figure 11F). We observed that embodied experiences were reported more often when listening with the module worn on the chest. Increasing the number of locations on the body offered a more nuanced understanding of the music for listeners and more compositional possibilities for designers.

The participant who listened while holding a haptic module in his hands commented on the relationship between different haptic modalities and the composition content. P3.4 expressed that “some of the spectral information [of the composition] must come from the subwoofers” to be able to amplify some compositional qualities such as pitch change. He expressed that he could not feel the pitch change solely from the wearable interfaces. In contrast, these qualities were more nuanced for the Deaf participant (P2.3) through the on-body vibrations than through the in-air vibrations. She commented on recognizing the relative pitch range of the singer during the second workshop through the on-skin haptics. Evaluation across different hearing abilities poses one of the major challenges, since the reflections of P2.3 and P3.4 relate to varying levels of pitch perception. Combining multimodal feedback might supplement the missing information from configurations with only one channel of haptic feedback.

We also asked participants how they imagined using this listening experience. P3.1 and P3.5 imagined the listening experience as a meditation tool. P3.5 said “[...] for an hour, just with closed eyes, I would just listen to it and feel it in the body.” P3.1 reported that the experience reminded her of sound meditation instruments such as Tibetan bowls and gongs. She also expressed that the movement-based performance with the musical interface can be used to create poetry and to express “music and emotions.” P3.5 also suggested that it could be used as a dance tool for movement improvisation. P3.3 and P3.4 reported that it could be used for musical creativity. P3.3 tried the musical interface in addition to the haptic interface and expressed that it could be used to create music while feeling it on the body through both vibrations and movement interactions. P3.4 imagined how and what kind of music Deaf people can create with the interfaces.

Findings from the evaluation of our study iterations include the following design implications:

• Multimodal tactile feedback holistically enhances the listening experience: Regardless of whether delivered through multimodal or unimodal channels, vibrotactile feedback conveys musical information; however, multimodal feedback supports several aspects of a musical experience, including performing, listening, and creating.

• Listening experience is enhanced by audio-tactile compositions: Sound design and composition to amplify the sensations that are directly felt on the body enhance the listening experiences. Different modalities can be used to create distinct sensations or to contribute to the listening experience as a whole.

• Including different perspectives increases awareness: Focus group participants come from backgrounds in dance, music, design, and accessibility work in the arts. Their perspectives amplified the conversation about hearing accessibility and access issues in music. In these group discussions, the participants started to understand how Deaf participants can use movement-based and haptic interfaces to create music and imagined the kind of music composed by Deaf musicians through this listening experience. Their professional backgrounds and perspectives contributed to increasing awareness and visibility.

• Different backgrounds reveal new use cases and applications of embodied listening experiences: FG participants' interaction with the interfaces supported ideation of different use cases. When designing for accessibility, it is important to involve different stakeholders even if they are not experts in accessibility studies (Shinohara et al., 2020).

• Design space of musical haptics benefits from being informed by the perspectives of different stakeholders as well as their hearing abilities: Including both the target user group in the study and many stakeholders with diverse perspectives significantly benefits the research in developing knowledge and identifying the capabilities of the design methods.

• Creating shared context, language, and space contributes to inclusion through participation: Co-designers more naturally gather context information to identify motivations and goals as well as to articulate experiences. Such shared elements foster participation based on empathy, aesthetic values, and social interaction.

Designing with participants from diverse backgrounds led us to search for new composition and performance approaches that can equalize musical experiences for all. By introducing multiple haptic and kinesthetic feedback channels, we emphasized the elements of embodiment in musical and listening practices. While the practices offer more bodily experiences, the musical articulations might diminish in such pluralism. This compromise occurs because the design space remains unexplored and because we lack an understanding of how movement and tactile qualities translate into musical contexts, as well as how musical qualities are communicated through vibrotactile sensations.

We addressed this challenge by incorporating body and movement materials into the compositional practice and by increasing the participation of individuals with diverse hearing abilities. This approach supported the design process to define and co-create new interfaces for specific accessibility needs. Although such specific needs relate to a small group of users, their potential contribution can be extended to a wider community by evaluating these systems with different stakeholders. Our study investigated only a fragment of the hearing accessibility spectrum. However, investigating audio-tactile and embodied listening experiences with participants from different backgrounds and with different skills helped us to contribute to the long-term goal of increasing access to music-making for performers with diverse hearing abilities.

FG participants' perspectives provided unique angles. While these discussions derived from the study evaluations and performances, they also led to collecting participants' experiences from their own accessibility practices. Many FG participants highlighted approaching communities with the awareness of diversity rather than defining target groups as “a homogenous group.” P3.2 with a music and accessibility background emphasized the many differences between what we hear and how we perceive regardless of hearing impairments. P3.3 further articulated the differences between “what [sensations] the body perceives” and “how the body interprets” this information. We found that the movement expressions influenced the musical experience. P3.1 stated the importance of bodily awareness as she articulated that the experience depends on “how [we] are used to using [our] bodies as well as [being used to] communicating whether through the body or not.”

P3.4 expressed his interest in listening to compositions from DHH perspectives: “It gets me very curious what kind of music a deaf person would generate with this interface because [...] this [composition] would be very close to what that person would experience.” He elaborated on his experience with this inclusive creative space: “I don't feel excluded, I feel enriched from being able to get a glimpse of that perspective.”

We discuss the limitations raised in our study iterations as well as how the existing research and artistic structures either enforce such limitations or lack support to decrease barriers for improved hearing accessibility.

This study reports on the experiences of one Deaf co-designer (P2.3) and the focus group participants (P3.1–P3.5), who identified as hearing. Such demographics present a limitation of this study. Although it is valuable to incorporate different “stakeholders with and without disabilities” (Shinohara et al., 2018), we hope to evaluate the listening experience with both hearing and Deaf participants in the same design space. Throughout our recruiting process, we faced the challenge of reaching out to the members of the Deaf community. Access barriers exist in both directions. While researchers deal with the challenges of accessing special user groups, these communities have limited opportunities to receive assistive technologies and tools, attend research studies, and participate as artists and performers.

Participants may also struggle to articulate their experience if they lack context-related language. Similarly, DHH individuals might lack the vocabulary to articulate their music perception. This challenge rarely relates to individuals' capabilities but more to social constructs for accessibility to music education. Working with movement-based musical interfaces provided our participants with an additional channel to articulate their experiences and connect movement qualities with their potential counterparts in music. We also shared certain music and research terms before the workshops with sign language interpreters to improve our communication with the participants.

We also recognize the limited translation between ASL and SSL as a limitation of this study. Although we consulted the SSL interpreters for better integration of the local sign language, some of the gestural elements and their mapping in the musical interface were challenging to redesign within the limited time. This limitation emphasizes the need for more holistic and longitudinal studies.

Our composition included movement-based interaction and vibrotactile feedback, leveraging the multimodal interaction between body movement, audition, and tactile sensations. However, little literature explains how we perceive sound materials through complex cross-modal stimuli. We suspect that some musical information received from auditory channels influences tactile perception, entangling the two modalities. Similarly, we focused on utilizing body movement to increase participation, first-person views, and inclusion in performance spaces. Still, we suspect that active body movement influences the on-skin listening experience in ways that are beyond the scope of our current work.

Our co-design sessions revealed factors about how the haptic modules can be worn; these factors need to be considered during the design process. Our co-designer (P2.3) requested at least three locations on the body. Due to technical limitations, we were only able to design for two locations (arm and chest) and needed to eliminate integrating actuators on the footrest of the wheelchair as she suggested. We observed that providing vibrotactile sensations at multiple locations enhanced the listening. However, different choices for these locations might impact the overall experience. The effects of stimulating different sites on the body might be perceived as a limitation due to the increased complexity of the evaluation. At the same time, we can leverage the affordances of these interfaces and this design space can be applied to developing new tactile compositional practices. We plan to investigate the cross-modal relationships between kinesthetic, spatial, auditory, and tactile perception and integrate this knowledge into our compositional practices for hearing accessibility and inclusion.

Musical experiences can be enhanced through music's visual, vibrotactile, and kinesthetic qualities beyond an auditory experience. In this study, we evaluated multisensory listening experiences, combining audio-tactile compositional elements, movement expression, and multimodal haptics (in-air and on-skin). Including participants with different professional backgrounds reveals different perspectives on accessibility issues in music while increasing awareness of DHH experiences in music-making. This work provides audio-tactile compositional elements, co-created by the Deaf co-designer, and evaluates the listening experience with a focus group of hearing participants. It surveys how the listening experience is enhanced through multisensory experiences that combine auditory, tactile, and kinesthetic perception. The study draws from the Design for Social Accessibility framework to incorporate a Deaf participant's co-design and listening experience along with hearing participants who have experience in music, dance, design, and accessibility. Moving forward, we will investigate the cross-modal relationship among auditory, tactile, and kinesthetic perception of music, together with DHH and hearing participants in the same design space.

In this work, we primarily use the terms that our participants reported preferring or identifying with. For those who reported no hearing impairments, we use “hearing”. Please note that definitions and terminology change based on the individuals' locations, cultures, and local languages. Terms like “normal-hearing” or “hearing-impaired” are used only to describe the degree of hearing loss, independent of any cultural or societal norms. In our literature review, we kept the terminology used by the authors of related research.