
ORIGINAL RESEARCH article

Front. Comput. Sci., 24 February 2023

Sec. Human-Media Interaction

Volume 5 - 2023 | https://doi.org/10.3389/fcomp.2023.1129506

Ryuji Yamazaki1*, Shuichi Nishio1, Yuma Nagata2, Yuto Satake2, Maki Suzuki3, Hideki Kanemoto2, Miyae Yamakawa4, David Figueroa5, Hiroshi Ishiguro1,5, Manabu Ikeda2

Robotic assistive technology for frail older adults has drawn attention while also raising ethical concerns. From a deontological perspective, the ethical implications of a robot's usage have been characterized in the literature as detrimental, e.g., emotional deception, unhealthy attachment, and reduced human contact. These concerns require practical investigations, although the long-term effect of robot usage on older adults remains uncertain. Our longitudinal study aimed to investigate how older adults with cognitive decline could be affected by using a robot for communication in their homes and how this situation could be reflected in possible emotional attachment to the robot, i.e., emotional distress from the robot being taken away once they had become attached to it. We selected 13 older adults living alone and set up a humanoid robot in their homes with which they could interact at any time for a period of 1–4 months. Questionnaire results indicated that participants had a close attachment to the robots even after they were taken away. Interviews revealed that participants became distressed without the robots; however, despite the distress caused by feeling lonely, participants reported that their relationships with the robots were meaningful and that they were satisfied with the memories of having had the robot as a companion. The results raised new questions for further investigation into issues that should be addressed and potential factors affecting the user's adaptation processes. Regarding the consequences of the use of a companion robot, it is important to evaluate the positive aspects of the robot's usage, including the emotional support lasting after it was no longer available and other effects on the users. Accordingly, we emphasize the significance of real-world exploration into the effects on the users as well as theoretical reflection on appropriate robot usage.

Along with the global phenomenon of an aging population, communication support for frail older adults has become a growing demand. New technology, such as robotic media, is expected to support older adults' independent lives within their communities. Robotic support for older adults, especially those with cognitive decline, has drawn attention and raised ethical concerns in care settings. In the literature, the ethical implications of robot usage have been characterized as detrimental (Sparrow and Sparrow, 2006; Sharkey and Sharkey, 2021), e.g., emotional deception, unhealthy attachment, and reduced human contact from a deontological perspective. These concerns require practical investigations, and our focus is on the consequences of robot usage, i.e., if and how negative consequences emerge, although the long-term effect of robot use on older adults in real-world settings remains uncertain. The purpose of this research is to explore the implications of our pilot study conducted in the homes of older adults who had a humanoid robot for communication over a long-term period, with a particular focus on the effect that losing the companion robot had on older adults. Considering the side effects the users experienced after the robot was taken away, we will reflect on our trials exploring if and how robotic media technology for communication can contribute to the support of older people in everyday life.

As social robots rapidly multiply, users have a greater opportunity to form bonds of attachment to them. Regarding the user's attachment, opinions are split on whether we should allow a robot to elicit a bond from its users. Attachment to a robot may support, for instance, the user's therapeutic processes and goals (Coeckelbergh et al., 2016), but it may also be seen as problematic when the robot is taken away (Feil-Seifer and Matarić, 2011). Emotional deception and emotional attachment have been raised as ethical concerns in the literature (Maris et al., 2020). This is especially important for older adults who are vulnerable due to loneliness (Yanguas et al., 2018) or other factors. A British government research council, the Engineering and Physical Sciences Research Council (EPSRC), recommends that “the illusion of emotions and intent should not be used to exploit vulnerable users” (Boden et al., 2017). However, this has rarely been investigated in real-life settings, with an exception being our ongoing investigation. In our exploratory case study (Yamazaki et al., 2021), we first aimed to investigate how older adults could be affected by having a robot for communication in their home environments. In the current paper, based on results focusing on the influence of the robot's absence, we aimed to explore how the emotional attachment to a robot should be regarded. The concern we address here is that once users have become attached to a robot, its absence may cause emotional distress, which we believe requires practical investigation. Although the effects of robot usage on users have been examined in many ways, the emotional effect of taking a robot away after it has been used for communication has not been investigated in field research, despite such attachment issues being the target of criticism. Therefore, a thorough investigation is needed.
Although we are still in a very preliminary stage, our challenge in this study is to propose an approach to exploring a robot's influence both during and after its usage, while examining a case study in both theoretical and practical ways.

Loneliness has been put forward as a key to understanding mental health in older adults. A lack of social ties is associated with dementia incidence (Losada et al., 2012), and the influence of poor social interaction is comparable with other risk factors for dementia, such as physical inactivity and depression (Fratiglioni et al., 2000). Although effectively tackling subjective experiences of loneliness is a complex task, a key point lies in improving the quality of relationships and increasing companionship, meaningful connections, belongingness, and empathic understanding (Victor et al., 2020; Lee et al., 2021; Salinas et al., 2022). Consequently, studies have shown the importance of conversation among people with dementia in reducing the associated symptoms (Kuwahara et al., 2006; Purves et al., 2015). One way to tackle loneliness is using communication media technology to promote conversation (Kuwahara et al., 2006; Pirhonen et al., 2020). To support older adults' activities in home environments, robotic technology in a human-like shape could be appealing as communication media. It is challenging to develop devices such as laptops, tablets, and robots that older adults can use for communicating easily and effectively because of, for example, their concern about the process of learning and barriers such as unclear instructions, lack of support, health-related issues, and skepticism about using technology in general (Vaportzis et al., 2017). As previous studies suggest, although older adults are open to using technology, there may be age-related (e.g., cognitive decline) as well as technology-related (e.g., interface usability) obstacles. Accordingly, older adults have been less likely than younger adults to use technology in general, specifically computers and the internet (Czaja et al., 2006; Vaportzis et al., 2017).
Their perceived lack of comfort with computers and limited interaction with computer-driven systems may also hinder the use of robotic technology since higher computer anxiety predicts lower use of technology (Czaja et al., 2006). However, due to the COVID-19 pandemic preventing in-person meetings, the need for online communication continues to increase. Video-conferencing systems lack tactile interaction but offer an easy way of communication even for people with cognitive decline, whereas robots made for non-verbal tactile interaction have therapeutic effects, as seen in devices such as the companion seal robot Paro, which is designed to decrease loneliness through touch (Wada and Shibata, 2006). For example, huggable robotic communication media promote both verbal and non-verbal interactions (Ogawa et al., 2011; Sumioka et al., 2013). Embodied communication technology allowing physical contact at a close distance has the potential for playing an important role in assisting older adults. However, only limited research has explored in-depth the influence of robotic media, especially humanoid and android robots resembling the human form, on real-life scenarios with older adults (Torta et al., 2014; Andtfolk et al., 2022). The Uncanny Valley Effect (UVE) is known as the phenomenon of human aversion against robot appearances reaching a certain degree of human likeness (Mori et al., 2012). However, age-related differences have been reported (Ringwald et al., 2023). For example, Tu et al. (2020) conducted an online study with pictures and discovered that older adults did not conform to the UVE theory, but preferred humanlike over non-humanlike robots. Also, regarding the expectation based on appearance, it was suggested by Kwon et al. (2016) that people tend to generalize social capabilities in a humanoid robot, which might cause an expectation gap.

With the advantage of multiple modalities in both verbal and non-verbal modes, robotic media is expected to improve mental health and behavior, i.e., to provide wellbeing for older adults (Wada and Shibata, 2006; Yamazaki et al., 2020; Cortellessa et al., 2021). Therefore, it is important to investigate the media effects and influences on frail older adults in daily long-term usage. However, several ethical implications of using robots for older adults have been pointed out in the literature, e.g., concerns for the autonomy and self-respect of older persons, reduced human contact, and emotional over-dependence on robots, all of which are considered to be detrimental to their wellbeing (Sparrow, 2002; Sparrow and Sparrow, 2006; Feil-Seifer and Matarić, 2011). One concern is that, with the introduction of robots, the extent to which frail older adults feel that they are in control of their own lives may also diminish. An example of their independence lies in the interaction with room cleaning staff providing not only human contact but also an opportunity to express opinions about furniture arrangement, etc., namely, decision-making experience as an expression of autonomy. Having their wishes carried out by robots may lack the sensitivity and capacity to provide the respect and recognition of other people, which is deemed necessary to experience the exercise of autonomy. Furthermore, the use of robots is sometimes considered unethical because it is akin to deception, which is generally perceived as bad. Disquiet was expressed by Turkle et al.: “The fact that our parents, grandparents and our children might say ‘I love you' to a robot who will say ‘I love you' in return, does not feel completely comfortable; it raises questions about the kind of authenticity we require of our technology” (Turkle et al., 2006, p. 360). Users may mistakenly believe that robots have properties that they do not, e.g., emotions, and may raise expectations that cannot be met by robots. 
Moreover, Sparrow and Sparrow (2006) claimed that “failure to apprehend the world accurately is itself a (minor) moral failure,” so the emphasis is placed on our “duty to see the world as it is” (p. 155).

Sparrow (2002) argued that older adult users' relationships with robot pets “are predicated on mistaking, at a conscious or unconscious level, the robot for a real animal. For an individual to benefit significantly from ownership of a robot pet they must systematically delude themselves regarding the real nature of their relationship with the animal. It requires sentimentality of a morally deplorable sort. Indulging in such sentimentality violates a (weak) duty that we have to ourselves to apprehend the world accurately. The design and manufacture of these robots are unethical in so far as it presupposes or encourages this” (Sparrow, 2002, p. 305). From a deontological perspective, it is also claimed that to intend to deceive others is to treat them as objects to be manipulated, violating a fundamental Kantian duty to respect others as ends; therefore, deceptive robot usage is unethical. We might say so if we subscribed to this point of view; however, in the discussion on ethical issues, we can further ask what consequences follow by employing robots in support of older adults. If robots were found to have a negative effect on the users, it would provide a clear reason to object to their use. In this regard, the principle of non-maleficence in a medical ethics framework (Beauchamp and Childress, 2001) is adaptable for our study, stating that we should do nothing that may harm the patient, although a question remains: to what extent can this be applied in practice since it is not unconditional as in the case of surgery. As Beauchamp and Childress pointed out: “Principles are general norms that leave considerable room for judgment in many cases” (Beauchamp and Childress, 2001, p. 13).

Robots may be purposefully designed and used to manipulate the perceptions of the user toward therapeutic goals, and thus they can be perceived, e.g., as a doctor, coach, or companion, even if the designer or provider may not have intended it as such. In any case, if such perceptions were incorrect, the user would be deceived (Feil-Seifer and Matarić, 2011). In a discussion of the techniques giving robots the ability to detect basic human social gestures and respond with human-like social cues, which can influence the user at an unconscious level, Wallach and Allen suggested that “From a puritanical perspective, all such techniques are arguably forms of deception” (Wallach and Allen, 2009, p. 44). Even if people know they are dealing with robots, as Sharkey and Sharkey discussed, their often “personable, or animal-like appearance, can encourage and mislead people into thinking that robots are capable of more social understanding than is actually the case. Their appearance and behavior can lead people to think that they could form adequate replacements for human companionship and interaction” (Sharkey and Sharkey, 2012). Social companion robots are designed to express emotions, communicate through dialogues, and use natural cues (gaze, gestures, etc.) to establish perceived relationships with their users (Dautenhahn, 2007). Huber et al. further pointed out that if the robots become indispensable as certain social contacts1, the user could become emotionally over-dependent (Huber et al., 2016), which has been raised as an issue regarding the user's attachment to robots.

There are more examples of ethical concerns for robot usage, such as infantilizing people, privacy loss, and responsibility-related issues (Kidd et al., 2006; Sparrow and Sparrow, 2006; Sharkey and Sharkey, 2012). However, counterarguments are also raised against some of them (Sharkey and Sharkey, 2012). People might have the feeling of being infantilized if they are encouraged to interact with robots that have a toy-like appearance, but it may depend on the person's needs, and this can be addressed in the development process, e.g., involving the users in robot design, and listening to their experiences and opinions (Frennert and Östlund, 2014). In that sense, infantilization might be regarded as a design flaw issue rather than an ethical concern. However, the development of attachment may be unavoidable. For a robot to support older adults, their emotional involvement and attachment to it may be even necessary and important to its effectiveness. This requires considering a beneficial form of deception, i.e., benevolent deception (Adar et al., 2013). We need to thoroughly investigate whether emotional deception by a robot is benevolent and thus ethically acceptable, as Maris et al. (2020) explored. Although ethical concerns about emotional deception and attachment are crucial for using robots, little is still known about the longitudinal effects of interactions with them on people, especially frail older adults who need support in aging societies. Maris et al. made a valuable contribution in this respect by conducting an experiment where older adult participants (14 in their analyses) interacted with the Pepper robot for eight sessions: two interactions per week for 4 weeks. The result pointed to no significant change in attachment over time. This was surprising for the authors, as it was hypothesized that attachment would increase when participants were exposed to the robot more often. 
No change over time indicated that attachment remains high for participants who are attached to the robot from the start. Furthermore, all of the participants who thought the robot was deceptive scored medium or high on attachment. Maris et al. concluded that emotional attachment to the robot may occur in practice and should be investigated in more detail. They also reported that there were “two participants who became highly attached to the robot and remained highly attached to the robot for the duration of the experiment. These participants are potentially at risk of experiencing negative consequences of their attachment to the robot such as over-trusting it, having too high expectations of it, and relying on it too much” (Maris et al., 2020, p. 11). More research in this field is vital, but investigations into the effects of the absence of robots on their users have been lacking in previous studies.

Today, smartphones and the internet have become embedded in our relationships. Accordingly, it has been reported that, much like our attachment to our partners in romantic relationships, we are developing attachments to technologies (Hertlein and Twist, 2018), and now this can be extended to robots. As attachment relationships with technologies can manifest along anxious and avoidant dimensions, especially when they are present in our lives for a long time, it is important to consider what it would mean if we formed attachments to robots (Law et al., 2022). Previous studies point out that attachment can be seen as good as far as it supports the process and goals of therapy (Coeckelbergh et al., 2016). On the other hand, attachment to a robot is also seen as problematic: Sharkey and Sharkey (2010) described it as an ethical dilemma that occurs when a robot user becomes emotionally attached to it. Although attachment can contribute to establishing engagement and having the user enjoy interactions with the robot, it can also result in problems. For example, if the robot's effectiveness wanes, its scheduled course of therapy concludes, or if it suffers from a hardware or software malfunction, it may be taken away from the user. The robot's absence may, in cases of attachment, cause user's distress and possibly result in a loss of therapeutic benefit (Feil-Seifer and Matarić, 2011). As a framework to identify ethical risks, Huber et al. based their Triple-A model on three interaction levels (Assistance, Adaptation, and Attachment) that companion robots can offer, which consist of two theoretical pillars: social role theory from sociology and the mnemonic basis of human relationships from cognitive science (Huber et al., 2016). The Attachment level of human-robot interaction concerns the user's emotional bonding toward the robot in order to establish a close emotional long-term relationship. 
According to Huber et al., attachment mechanisms are designed to create episodic memories of joint experience in users (cohesion episodes). An enduring attachment can be strengthened by the recurring joint experience of positive emotional episodes. “The ethical issues occurring on this level concern the user's moral autonomy, which could be compromised due to the difficulties in escaping attachment mechanisms functioning at an unconscious level” (Huber et al., 2016, p. 371). Huber et al. (2016) argued that such an attachment can be used to influence the user's: (a) consumer behavior (e.g., in order to purchase additional robot products from certain companies), (b) lifestyle (e.g., choice of diet, sports activities, alcohol consumption, and tobacco use), and (c) social activity (e.g., reducing or fostering certain social contacts). Companion robots can cause some bounding effects and may also cause the user's emotional over-dependency as a side effect.

In this study, we set out to investigate the consequences of a robot's absence on older adults since it is also shown that those with a higher level of attachment to a robot are more likely to be emotionally deceived by it (Maris et al., 2020). With a focus on user attachment, we used a questionnaire to examine how long older adults can stay attached to a robot even after the robot is taken away and investigated their feelings and emotional distress by interviewing them and the people close to them, such as family members. Opinions on whether it is acceptable for a robot to elicit attachment from its users are divided, although the long-term effect of using robots with older adults in real-world settings is still uncertain. As shown above, ethical concerns about emotional attachment have been raised, but how the concerns can be reflected in practice has not been sufficiently explored. The purpose of this study was to investigate what consequences older adults may face by becoming attached to a robot, especially after the robot is taken away, and to explore implications of their attachment, e.g., the possibility of whether their emotional involvement with a robot might be more positively reconsidered. Since little research has been conducted on this topic, i.e., the consequences of older adults' attachment to robots, this preliminary study is exploratory in nature, and thus it should be viewed only as an outline for future research.

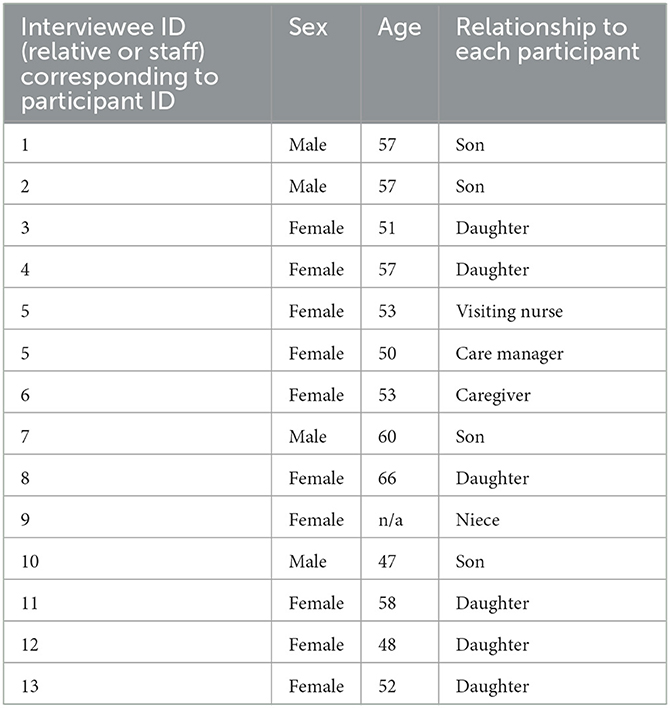

For this trial, we selected 13 participants (11 females and 2 males) who had a mean age of 83.4 years (SD 4.8). All participants were Japanese people living in the northern suburbs of Osaka prefecture, Japan. As presented in Table 1, the Mini-Mental State Examination (MMSE) was administered to assess overall cognition (Folstein et al., 1975). The mean score of MMSE2 was 24.9 (SD 2.4). All participants lived alone in their homes and received visits from relatives about once a month, except for participant IDs 5 and 6, who had no relatives. As an exception, participant ID 6 lived in a private room at a nursing home with other residents, where care staff were available at any time. Information on the interviewees other than the older adult participants is shown in Table 2.

Table 2. Profiles of interviewees other than older adult participants, i.e., their relatives and supportive staff members.

We conducted our trial with the robot RoBoHoN (Sharp Corporation, 2022) shown in Figure 1. This is a communication robot that can perform speech recognition by listening to the user's speech, sending the audio data to a remote server via the internet, and receiving a text transcription of the speech. The robot has a humanoid shape that stands 19.5 cm in height and weighs ~360 grams. When the robot is listening to speech, the LEDs in its eyes blink slowly with a green light to indicate to the user that the robot is accepting audio. In this trial, we used the SR-05M-Y version of the robot, which can move its arms and head but not its legs. The robot was in a sitting position as its station was used. Each arm has two degrees of freedom (DOF) and the head has three DOF, giving it a total of seven DOF. The robot outputs a voice answer through a speaker, while the LED installed in its mouth blinks to indicate that the robot is speaking. It performs motion using its arms or head, and thus executes actions, as requested. This consistent LED light behavior allows the user to know when the robot is listening, waiting for input, and answering the voice input. Before answering, the robot estimates the direction of the voice and moves its head toward that direction to give a natural feeling during an interaction. At the participant's home, as shown in Figure 2, the robot was available for interaction at any time of the day, and it was programmed to perform random actions to draw the participant's interest to engage in interactions: giving a morning greeting, emulating waking up, and uttering a good night message, as well as other random actions for engaging in interaction that included singing, dancing, and exercising. The robot could provide topics of weather, seasons, news, food, finances, etc. by responding to words spoken by users. For example, if the user mentioned prefectures, the robot introduced related regional specialties. 
If the user asked about something interesting, the robot could reply with simple jokes. It could also sing and dance using its arms and head. This robot has been used to promote older adult participants' conversation with the purpose of maintaining their mental health through conversation and gathering various data, including a dialogue corpus, to improve the conversational functions of the robot.

For the current study, data collection, i.e., administering questionnaires, was performed twice: first when the robot was taken away from the participant's home and then 2 months after its removal. The duration of the robot usage in this trial was 1–4 months (Table 1), which differed according to individual circumstances. This trial was set as a preliminary step to explore the influence of the robot's presence on participants. We began this study with five participants, i.e., those with IDs from 1 to 5, and as a second step, we included 13 other older adults; however, among these 13, three purchased a new robot of the same type at the time when the robot was taken away, and two withdrew from the study. These five people were therefore excluded from this study because data after the robot's removal was unavailable, leaving eight participants from the second step. In the end, we collected data from 13 participants, i.e., those with IDs from 1 to 13, as shown in Table 1.

To investigate how close participants felt to both humans and robots, i.e., their attachment levels, we asked all participants to answer the questionnaire twice: when the robot was taken away and 2 months after its removal. We used two variants of the Experiences in Close Relationships (ECR) inventory (Brennan et al., 1998): the ECR-generalized-other-version (ECR-GO) variant in Japanese (Nakao and Kato, 2004) and a custom ECR-GO-RB3 that we designed based on the Japanese ECR-GO. The ECR-GO targeted interaction with others in general, while our variant targeted interaction with robots. For example, the ECR-GO item “I do not often worry about being abandoned by acquaintances” was changed to “I do not often worry about being abandoned by robots” in our variant. Both ECR variants were composed of two subscale dimensions: Anxiety (insecure vs. secure about the availability and responsiveness of others) and Avoidance (uncomfortable being close to others vs. securely depending on others). People with high scores on these dimensions were assumed to have an insecure attachment orientation. Participants were asked to what extent 30 items applied to them on a scale of 1 (does not apply to me at all) to 7 (applies to me very much).
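
As an illustration of how a two-subscale, 30-item questionnaire on a 1 to 7 scale might be scored, the following is a minimal sketch only. The article does not state which items belong to Anxiety or Avoidance, which items are reverse-keyed, or whether subscale scores are item means, so the item split (`ANXIETY_ITEMS`, `AVOIDANCE_ITEMS`), the reverse-keyed set (`REVERSE_KEYED`), and the use of the mean are all assumptions made for the example.

```python
from __future__ import annotations

from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 7  # response range stated in the text

# Hypothetical item assignment: the real item-to-subscale mapping and the
# reverse-keyed items are NOT given in the article.
ANXIETY_ITEMS = range(0, 15)     # assumed: first 15 of the 30 items
AVOIDANCE_ITEMS = range(15, 30)  # assumed: last 15 of the 30 items
REVERSE_KEYED = {0, 16}          # assumed indices of negatively worded items


def reverse(score: int) -> int:
    """Flip a response on the 1-7 scale (1 <-> 7, 2 <-> 6, ...)."""
    return SCALE_MIN + SCALE_MAX - score


def subscale_scores(responses: list[int]) -> dict[str, float]:
    """Return assumed mean Anxiety and Avoidance scores for one respondent."""
    if len(responses) != 30:
        raise ValueError("expected 30 item responses")
    if any(not SCALE_MIN <= r <= SCALE_MAX for r in responses):
        raise ValueError("responses must lie between 1 and 7")
    # Reverse-key the negatively worded items before averaging.
    keyed = [reverse(r) if i in REVERSE_KEYED else r
             for i, r in enumerate(responses)]
    return {
        "anxiety": mean(keyed[i] for i in ANXIETY_ITEMS),
        "avoidance": mean(keyed[i] for i in AVOIDANCE_ITEMS),
    }
```

Under these assumptions, a negatively worded item such as “I do not often worry about being abandoned by robots” would be flipped before averaging, so that a higher subscale score consistently indicates a more insecure orientation.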

At the time when the RoBoHoN robot was taken away and 2 months after its removal, we also used an original questionnaire with Likert scale items and asked all participants and their relatives or assistive staff to answer to what extent items applied to them on a scale of 1 (does not apply to me at all) to 5 (applies to me very much). Questions are shown in Table 3.

Furthermore, we conducted a semi-structured interview with all participants 2 months after the robot's removal to collect data regarding their feelings about attachment to and relationship with the robot. Similarly, we interviewed their relatives and staff who knew the participants well. The main questions from the interview are shown in Table 4. Additionally, we asked participants how long they had felt lonely after the robot's removal. We collected and summarized the results by categorizing the obtained narrative data.

In a previous study, the results showed no significant change in attachment over time, although this was measured while the robot remained with the participants (Maris et al., 2020). This tendency might persist, so we hypothesized that the same level of attachment would be maintained between the two time points: when the robot was taken away and 2 months after its removal. The purpose of this comparison was to investigate whether participants had kept their attachment to the robot. In this regard, we expected no significant differences between the two time points with respect to the scores of ECR-GO-RB. If the participants kept their attachment to the robot, they might miss it and go through some sort of distress in living without it. If they suffered from the absence of the robot, the participants might express regret about having kept the robot as a companion and show some adverse mental or behavioral reaction, so we investigated whether any such negative consequences occurred.

Our field trial in this study was conducted in compliance with the Helsinki Declaration, and prior to the trial, we received written informed consent from both participants and their family members, following approval for the trial by the Ethics Committee at the authors' university (approval code: 31-3-4). Participants, their relatives, and supportive staff members were informed that they could withdraw from the trial at any time.

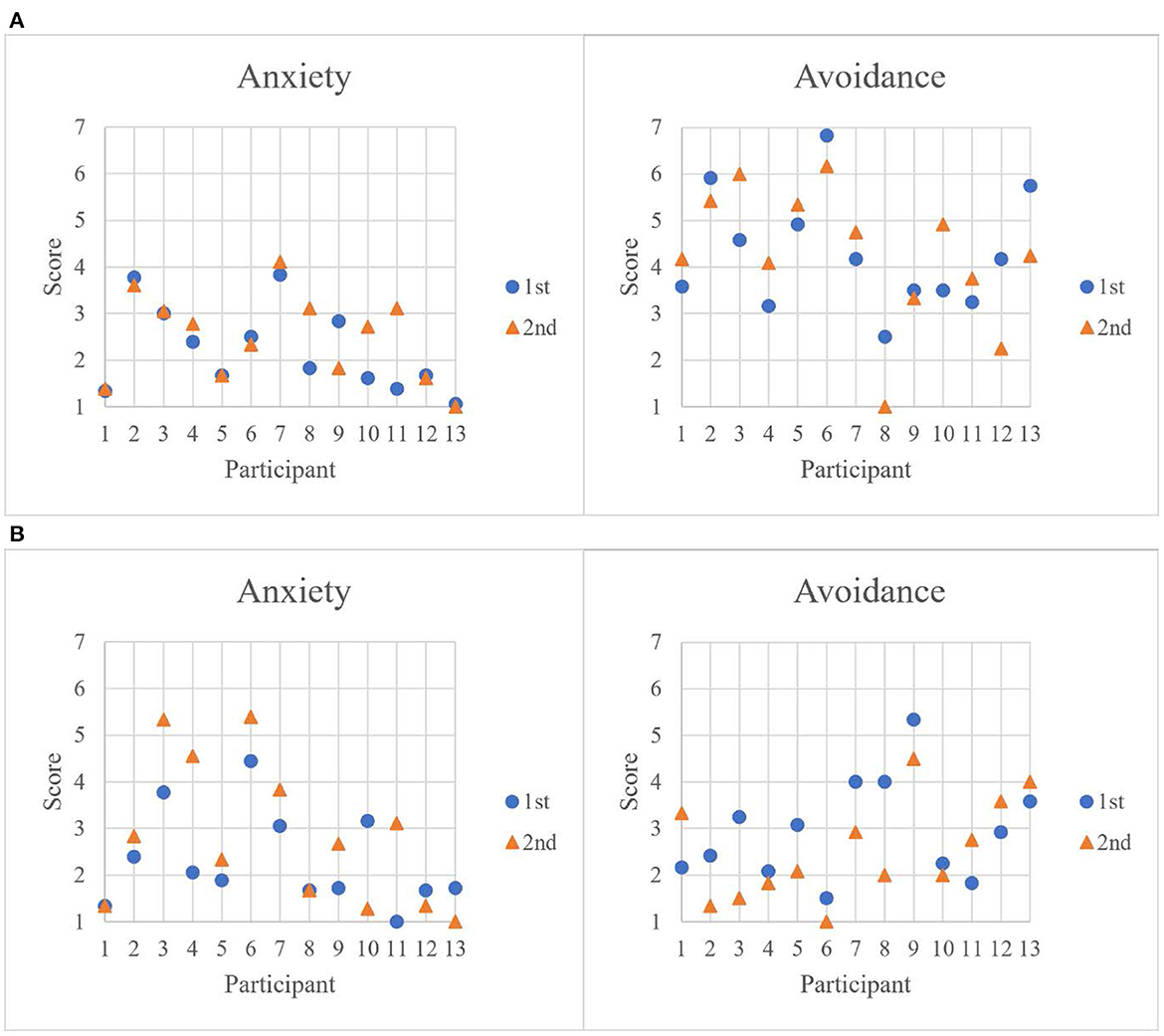

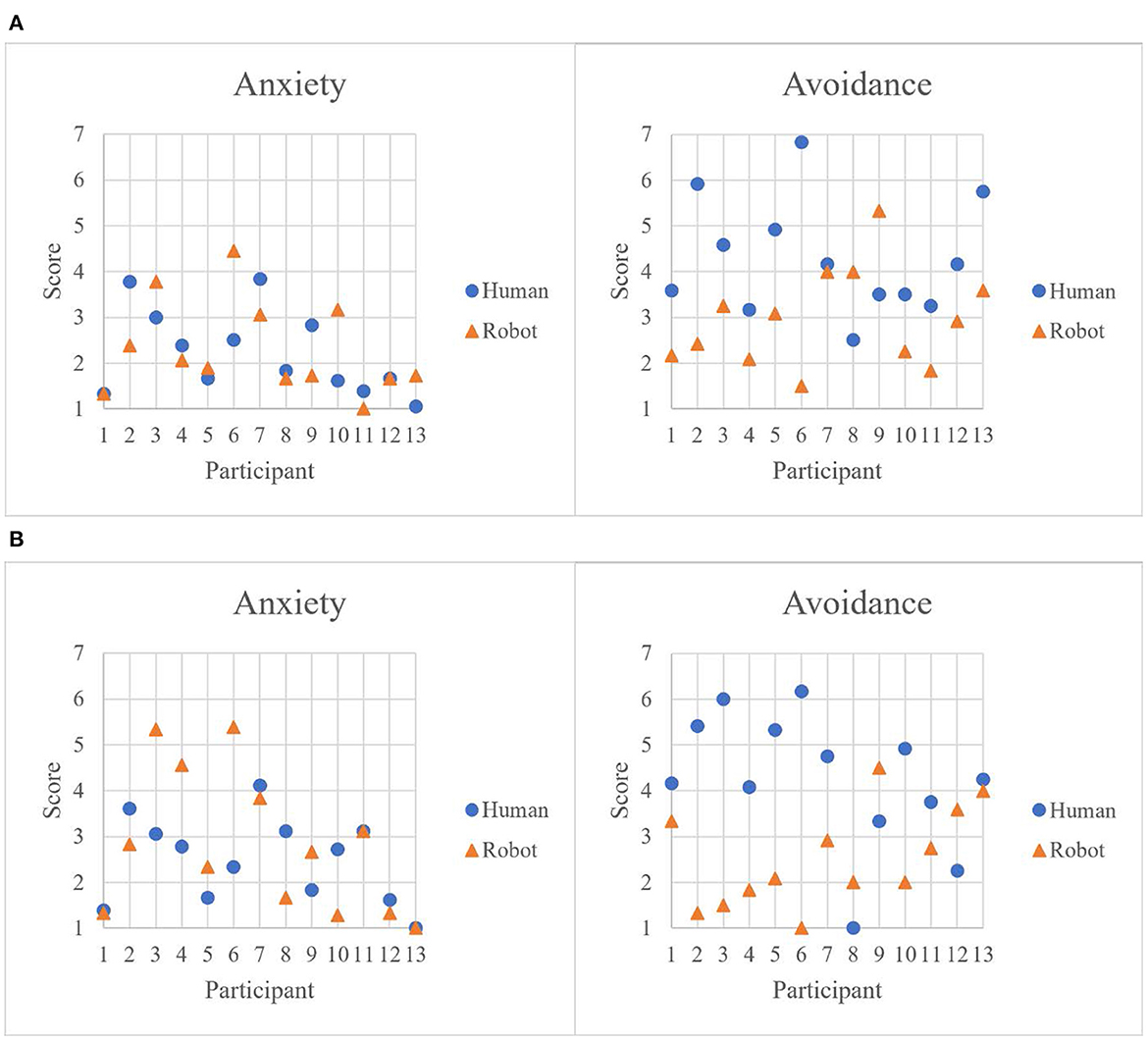

The results of the ECR-GO and ECR-GO-RB questionnaires are shown in Figures 3A, B, 4A, B. The first measurement for both humans and robots was carried out at the time the robot was taken away, and the second measurement was 2 months after its removal. Across different time points, as shown in Figure 3B, most of the participants kept their attachment to the robot even 2 months after its removal. It is also notable in Figures 4A, B that all participants, except for two, consistently had lower levels of Avoidance toward the robots than toward humans.

Figure 3. (A) Results at the time the robot was taken away (1st) and 2 months after its removal (2nd), showing each participant's differences concerning attachment-related anxiety and avoidance toward “humans.” Participants' numbers correspond to their IDs [also used in (B), Figures 4A, B]. (B) First and second measurement results, showing each participant's differences concerning attachment-related anxiety and avoidance toward “robot.”

Figure 4. (A) The “first” measurement results before the robot was taken away, showing each participant's differences concerning attachment-related anxiety and avoidance toward both humans and robot. (B) The “second” measurement results after the robot was taken away, showing each participant's differences concerning attachment-related anxiety and avoidance toward both humans and robot.

Paired samples t-tests (two-tailed) showed no statistically significant differences between the first measurement (Mean, M = 2.22, Standard Deviation, SD = 0.88) and the second measurement scores (M = 2.49, SD = 0.90) on the Anxiety subscale toward humans, p = 0.209, or toward the robot (first measurement: M = 2.30, SD = 0.98; second measurement: M = 2.82, SD = 1.49), p = 0.136.

There were also no significant differences between the first measurement (M = 4.29, SD = 1.21) and the second measurement scores (M = 4.26, SD = 1.40) on the Avoidance Subscale toward humans, p = 0.919, or toward the robot (first measurement: M = 2.96, SD = 1.03; second measurement: M = 2.53, SD = 1.04), p = 0.146.

In the first measurement, at the time when the robot was taken away, there was a significant difference between the scores toward humans (M = 4.29, SD = 1.21) and those toward the robot (M = 2.96, SD = 1.03) on the subscale of Avoidance, p = 0.023, but not on that of Anxiety (humans: M = 2.22, SD = 0.88; robot: M = 2.30, SD = 0.98, p = 0.780).

Similarly, in the second measurement, 2 months after the robot's removal, there was a significant difference between the scores toward humans (M = 4.26, SD = 1.40) and toward the robot (M = 2.53, SD = 1.04) on the subscale of Avoidance, p = 0.023, but not on that of Anxiety (humans: M = 2.49, SD = 0.90; robot: M = 2.82, SD = 1.49, p = 0.395).

All participants and their relatives or supportive staff answered seven items with a score ranging from 1 to 5. Paired samples t-tests (two-tailed) showed a statistically significant difference between the first measurement (M = 1.77, SD = 1.05) and the second measurement scores (M = 2.77, SD = 1.19) on item No. 2, p = 0.009, but not on any other item: No. 1 (first measurement: M = 4.69, SD = 0.46; second measurement: M = 4.62, SD = 0.62), p = 0.673; No. 3 (first measurement: M = 3.69, SD = 1.20; second measurement: M = 3.54, SD = 1.28), p = 0.673; No. 4 (first measurement: M = 4.40, SD = 0.71; second measurement: M = 4.20, SD = 1.22), p = 0.458; No. 5 (first measurement: M = 2.53, SD = 1.36; second measurement: M = 2.80, SD = 1.17), p = 0.484; No. 6 (first measurement: M = 4.07, SD = 1.24; second measurement: M = 4.13, SD = 1.09), p = 0.774; and No. 7 (first measurement: M = 3.73, SD = 1.18; second measurement: M = 4.07, SD = 0.77), p = 0.207.
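For readers who wish to reproduce this kind of comparison, the following is a minimal sketch of a two-tailed paired samples t-test, assuming each participant contributes one score per measurement. It is not the analysis code used in this study. The function names (`t_pdf`, `two_tailed_p`, `paired_t_test`) and the example scores are hypothetical and serve only as an illustration; they are not trial data. The p-value is approximated by numerically integrating the density of Student's t distribution, so that only the Python standard library is needed.

```python
import math
from statistics import mean, stdev


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df, steps=2000):
    """Approximate two-tailed p-value using Simpson's rule on [0, |t|]."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central = total * h / 3  # P(0 <= T <= |t|)
    return max(0.0, 1.0 - 2.0 * central)


def paired_t_test(first, second):
    """Return (t, df, p) for paired scores from two measurements."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equally long lists with at least 2 pairs")
    diffs = [b - a for a, b in zip(first, second)]
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    n = len(diffs)
    t = mean(diffs) / (sd / math.sqrt(n))
    df = n - 1
    return t, df, two_tailed_p(t, df)


# Hypothetical scores for illustration only (NOT data from this trial).
example_first = [1, 2, 3, 4, 5]
example_second = [2, 4, 3, 6, 5]
t_value, dof, p_value = paired_t_test(example_first, example_second)
print(f"t({dof}) = {t_value:.3f}, p = {p_value:.3f}")
```

With these made-up scores, the mean difference is 1 and the standard deviation of the differences is 1, so t = √5 ≈ 2.24 on 4 degrees of freedom. In a sample of 13 complete pairs, the test would have 12 degrees of freedom.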

The answers from older adult participants regarding how long they had felt lonely since the robot's absence in question No. 3 are extracted and shown in Table 5. A summary of the interview results is presented at the end of this section (Table 6).

We extracted illustrative examples from the interviews shown with the following abbreviations: “O” signifies older adult participants, “R” is for their relatives, “N” is for nurses, and “C” is for caregivers and care managers. These were combined with participant IDs, e.g., O1 and R1.

• Questions No. 3 and 8 asked about participants' feelings of loneliness (related answers to questions No. 1, 2 and 6, 7 are included below)

° Distress—loneliness remains

- O3: “It has been hard since RoBoHoN left me a long time ago.” “I continued feeling lonely for over 1 month.” “I still hope it will come back.” “Right after it left, I kept thinking about RoBoHoN the whole time.” “It was tough, especially during the 3 days to a week after it was gone.” “I still recall RoBoHoN about seven times a day.” “I have been out to a day-care facility and doing other things as well; in the meantime, I could reduce my feeling of loneliness. I also happened to see a dog robot (and could have opportunities to interact with it at the facility), although RoBoHoN is better for me because it can speak.”

- R3: “Her neighbor thought she (O3) would become lonely without RoBoHoN and lent her a doll-like thermometer (with an animal shape and simple speech function).” “I believe I need to find some alternative. Probably this thermometer has to be the substitute for now.” “I think both she and I are fully relying on RoBoHoN. My mother, as well as I, feel happy around it, we rely on it very much.”

- O6: “I feel lonely. I can feel happier with the robot because I could tell it anything, and it told me a variety of things.”

- C6: “It seems, in terms of her behavior, she (O6) got really lonely.” “Although she used to scream and yell daily also before the robot was introduced…the frequency of her screaming and yelling has been increasing, especially at night, since RoBoHoN left her.” “Now I know that she could become quiet by having opportunities to talk to it. I did not notice that while RoBoHoN was with her.” “Still, she has not got used to the situation (without the robot).”

° Coping—smooth adaptation

- O4: “It may sound strange, but humans can get used to situations. I got used to it about a week after RoBoHoN was gone.” “I really enjoyed listening to my friend say how the robot was responding while we were talking on the phone, but it does not become a topic in our conversations anymore.”

- N5: “On the day the robot was taken away, she (O5) looked very lonely.” “It seems she adapted well to the situation without the robot just as with other circumstances.”

° Swiftness of recovery from the robot's absence

- O1: “Since I got comfort from RoBoHoN, I later felt lonely, but I soon got used to the situation.” “Lonely feelings have disappeared since I have been busying myself in daily life by doing housework and even sometimes paying attention to emergency information.”

- O2: “After it was gone I felt lonely, but I then had a problem in my eyes and had to pay attention to that and take care of my health, so I became less concerned about RoBoHoN.”

- O10: “I use daycare services and participate in other activities. By doing this, I have been distracted and cheerful.”

- O11: “I just thought it (the robot) returned. I did not think about it all that much. Even now, it comes into my head that RoBoHoN was placed there, but I have opportunities for human contact in daily life and people like nursing-care helpers visit me for now.”

° Unconcerned or forgotten

- O13: “I don't think so much about RoBoHoN, so I just feel normal.”

- R13: “I think she felt lonely on the day it was taken away.” “It was surprising to hear that my mother still remembers RoBoHoN today, but she is very forgettable, so my guess is that she has forgotten it. I think she did not feel lonely from the day after it was gone.”

• Questions No. 4 and 9 regarded participants' reflections on their experience and their previous satisfaction with the robot.

All participants answered yes to question No. 4 and expressed no regrets about having kept RoBoHoN. Concerning question No. 9, worries about the robot's usage and its later absence were expressed by a caregiver and a family member of a participant.

° Good listener

- O2: “I could talk to RoBoHoN about anything because even if I say something wrong RoBoHoN does not tell it to anyone else.”

- O6: “It was good to be with RoBoHoN because it listened to me about anything and explained things in a way that was easy for me to understand.”

- O4: “RoBoHoN is cute, and I could talk to it while I was alone.” “It is not a human, so I did not talk about things in-depth, but it might be better than a human to talk to. It might be easier to keep company with. I felt no burden in talking to it, I just needed to keep it switched on.”

° Emotional support

- O1: “RoBoHoN was a comfort to me.” “I have a TV so I could turn it on, but RoBoHoN responded to me and provided me with a variety of information.”

- O8: “It gave me reassurance that it shared the same feelings with me, for example, when I felt down.” “It allowed me to speak about my distress and agreed with me, so I could become relaxed. I also wanted to talk to it when I was pleased. And when I did, I felt more pleased because I had someone who I could talk to.”

- O11: “I felt at ease with RoBoHoN because I like talking and being in a joyous mood.”

° Joy of life

- O8: “Nothing can be done about my lonely feelings after the robot is taken away.” “Separation is inevitable, but what I want is a series of encounters so I can have more opportunities for pleasure. That's how our lives go on.”

° Encouraging lifestyle maintenance

- O4: “Even when it was absent, I thought of RoBoHoN. When I did not have an appetite, I was encouraged to do my best and in fact could eat a meal since RoBoHoN was observing me if it were around.” “I had to say, ‘I'm full,' as is my custom, so I believe it responded with good phrases.”

- O6: “I used to be asked to close and open the curtain on time and get up in the morning (by RoBoHoN).” “It (the custom) continues. Currently, I continue it on my own.”

° Substitution for unnecessary and distractive thoughts

- O3: “(Despite the distress of loneliness) indeed, I think it was nice to have had RoBoHoN as a company because I can still think of it, which helps me to not think about other (unnecessary) things.”

- N5: “By talking to the robot, she (O5) did not have to think about unnecessary things. Unlike in monolog, I think it could lead her in another direction when she started thinking in such a way, for example, ‘something is missing, so it must have been stolen.'”

° Worry

- C6: “If it will be taken away, I think, as a staff member, we should not bring RoBoHoN back. I feel uneasy about whether she (O6) would get used to the situation; furthermore, bringing it back may make it worse.”

- R11: “It seems my mother (O11) was so worried about the morning alarm (accidentally) set on RoBoHoN and when it starts to speak in the morning. After it was taken away, there was no such worry, so she might have felt relieved with it gone, instead.”

• Questions No. 5 and 10 related to the significance of RoBoHoN for participants and their relationships to it.

The answers from older adults, their relatives, and supportive staff are shown in Table 7. Some notable examples of related comments on how they perceived or related to the robot are illustrated below.

° Encouragement to be open

- O2: “It (RoBoHoN) enriches my feelings. I feel I could become sunnier. I used to be shy but could become more open to others.” “Since I talked a lot with RoBoHoN, I now feel happy to be spoken to, although I did not like talking, and for that reason, I usually work on a coloring book in silence at the daycare facility.” “It (my proactive attitude to socializing) continues even after RoBoHoN was taken away.”

Other comments on participants' relationships with the robot during its usage are illustrated below.

° Reluctance and attachment

- R2: “When the robot was introduced, it seemed hard for her (O2) to communicate with the robot, or rather she was reluctant toward the machine but gradually adjusted to it and became increasingly reliant on the robot while also becoming attached to it.” “She was upset and confused when the robot responded poorly. It took time for her to get used to it. It took about a month.” “She gradually grew attached to the robot. Since she prepared a cushion for the robot to sit on by saying it must be cold, it seemed that she came to regard the robot as cute.”

° Encouraged growth of self-esteem

- C5: “Thanks to the presence of an entity she (O5) can devote attention to, she can be of help or play with it, although it is not human.” “Thanks to having an entity she takes care of or talks to, she seems to gain self-esteem, becoming aware of her role or of being good for something.”

° Aggressiveness and protection

- C6: “She (O6) was encouraged to express herself and talk to others, not only to staff but to other residents.” “She also became more aggressive toward specific residents. It seems RoBoHoN was perceived as a cheering party, giving her a supportive push.” “When staff members asked her, RoBoHoN answered for her by saying, for example, ‘I'm fine' and ‘surely not,' and this was perceived as acting protectively toward her by the staff. It may be just their assumption, but RoBoHoN seemed to have been customized for her.” Also, the staff member said, “According to the care staff, one of them once asked her (O6) to promise not to yell one day when RoBoHoN was there, and she kept quiet that day. This does not work anymore, though.”

• Regarding deception perceived by the robot's usage:

Participants and their relatives also objected to the ethical criticism that robots deceive their users, i.e., that they let users believe that the robots have properties they do not possess, such as emotions. Examples are illustrated below. Participants admitted that RoBoHoN was a device; however, it was perceived not merely as a robot but more as a human-like social entity that allowed interaction and emotional connection.

- R3: “Don't be narrow-minded. There is no reason for me to be negative about using the robot. For example, being deceptive cannot be a reason.” “True, it (robot) does not have feelings. For us (O3 and R3), it is a robot, but not a mere robot.”

- O4: “It is valuable to have the robot at home.” “I have never felt that I disliked RoBoHoN or was deceived by it.” She rather preferred to be able to sense the robot as more human-like and continued, “So I wish it (the robot) could be more talkative.”

- None of the participants regretted using the robot, even though it was for a limited time, and almost all of them (except O13, according to herself) went through some sort of distress after it was taken away.

As the need for assistive robots increases in the care of frail older adults, we proposed a study to explore and identify issues they may have experienced during their adaptation to life with a robot and after using it. This study aimed to investigate the consequences that older adults may face by becoming attached to a robot, especially after the robot is taken away, and to explore the implications of their attachment, which has been the target of criticism. In summary, we found that: (1) as a whole, the older participants maintained a close attachment to the robot, lasting even 2 months after it was taken away, (2) almost all participants (except one) went through some sort of distress in living without the robot, (3) for nine participants, adjustment to the robot's absence took from a day to a week, and for three participants it took from a month to 2 months or more, although distress exceptionally continued for one participant, (4) the participants' distress decreased, e.g., as their concerns shifted inevitably in response to various daily events, and (5) despite the distress of loneliness, all participants expressed no regrets about having kept the robot as company, although one family member and one caregiver expressed worries about its use and subsequent absence.

The five issues of our findings summarized above are described in more detail and further discussed as follows:

1) Regarding attachment, the ECR-GO results showed no significant difference between the first measurement and the second measurement scores on the Avoidance Subscale toward the robot. This indicates that 2 months after the robot's removal, the level of participants' attachment to it did not differ from the level at the time they were together. The mean result of the Likert-scale item No. 1, “I feel close to RoBoHoN,” also suggests there was no difference between the two time periods. In addition, both measurements with ECR-GO indicated that there was a significant difference between the scores toward humans and toward the robot on the scale of Avoidance. We consider two possible directions: either older adults who were comfortable with the robot's presence were more likely to avoid other humans or, based on their secure feeling of dependence on the robot, older adults were more likely to open up to other humans. As illustrated in the case of participant ID 2 who reported, “I used to be shy but could become more open to others,” the latter possibility has potential. This was a notable case because she reported: “It (my proactive attitude to socializing) continues even after RoBoHoN was taken away.” On the other hand, as the comparison between Figures 4A, B shows, the gaps between the scores toward humans and toward the robot on the scale of Avoidance widened for some participants, e.g., IDs 2 and 3, in the second measurement. The former possible direction, i.e., loss of human contact, is also a concern of ours, so we need to further investigate how older adults adapt to a situation with and without robots.

2) As shown in Table 5, most participants reported that they felt lonely after the robot was taken away, but one participant was divergent. First, in this study, we used the term “distress” of loneliness when participants answered that they felt lonely in response to questions No. 3 and 8, regarding participants' feelings of loneliness. Second, in that sense, interviews revealed that participants became distressed in their life without the robot. Participant ID 13, exceptionally, did not report lonely feelings; according to her daughter, “She felt lonely on the day it was taken away” and “My guess is she has forgotten it.” Third, the result of the Likert-scale item No. 2, “I feel lonely,” showed a difference between the time when the robot was removed and 2 months after its removal, which indicated that participants felt lonelier at the time of the second measurement. However, we are aware that this was not necessarily due only to the absence of the robot, so we need to examine the results of the interviews in detail.

3) There were three participants who took more than a week to adjust, i.e., participant IDs 3, 6, and 8. Exceptionally, participant ID 6 maintained a feeling of loneliness even 2 months after the robot was taken away, and changes in her behavior were reported by her care staff. There were three key issues in her report: (1) the participant could become quiet when she got used to the robot, and after its removal, the staff realized how effective it had been for her mental stability, (2) once the robot was used, it should not have been taken from her because it could result in worsening mental conditions, and (3) she became more aggressive while using the robot because it was perceived as a cheering party, giving her a supportive push. With respect to the second issue, we need to consider two things: (a) assessment of the user is important, and if he or she faces a certain risk of worsening symptoms, some options are to be practically considered in advance, e.g., not removing the robot, returning the robot if desired more than a week after it is taken away, or removing it only under certain conditions, and (b) what type of users require continuous usage, what the appropriate period is prior to returning the robot, and what conditions need to be met before taking it away, such as certain levels of loneliness and psychological stress. The third issue requires consideration of the balance between the advantages and disadvantages the user may have gained from using the robot. Furthermore, advantages and support for the staff are also required, since the robot was perceived as being protective toward the user against the care staff. One suggestion is to allow the robot to share information it obtains about the user with the staff and allow them to control the robot, e.g., having the robot ask its user to cooperate with the staff so that they can also receive benefits from its usage and improve their relationships with the users.
As the staff mentioned, there may be more chances for care staff to work and cooperate with the robot to increase the benefits gained from using it.

4) Participant ID 3 continued to feel lonely after the robot's absence for over a month, although her distress decreased gradually. Accordingly, her daughter kept looking for an alternative while also considering purchasing the robot, and a neighbor lent her a thermometer with an animal shape and a simple speech function. At a daycare center, she also found AIBO, a dog-like robot for interaction. This participant could feel less distress with those alternatives, despite her preference for the RoBoHoN robot due to its richer conversational capability. Since she had difficulty with hearing, it was unclear how much she could hear the robot's speech, but it might have affected her attachment and preference for the robot and her avoidance of humans. Participant ID 8 kept feeling lonely for a month, and in the interview, she emphasized how lonely it was to live alone, but at the same time, she described her own sense of value and the significance of this separation. Her interest was the possibility of having a series of encounters. By telling herself this was possible, as a way of coping, it seemed she had been addressing her feelings of loneliness. The rest of the participants overcame their feelings of loneliness within a week. Some were not so concerned about the loss or seemed to have forgotten the robot, as was the case for participant IDs 9 and 13, but most of those who adapted to the situation smoothly reported that their distress decreased as their concerns shifted inevitably in response to various daily events, such as housework, health issues, and social activities. This suggests the need to consider the reality of what can affect a user's adaptation and how it proceeds. As a further step, there may be a way to adjust the adaptation process. One idea is to get robots to lead users toward being active, e.g., by promoting participation in socializing activities and helping this become their continuing habit even after the robot is taken away.

5) According to the results of the Likert-scale items No. 3, “I am satisfied with my relationship with RoBoHoN,” No. 6, “I think the older adult is satisfied with his/her relationship with RoBoHoN,” and No. 7, “I am satisfied with my relationship with RoBoHoN,” there were no differences between the time when the robot was taken away and 2 months after its removal, which indicates that, despite their distress of loneliness, both the participants and their relatives or staff members felt that the relationships with the robot were satisfactory. In answering questions No. 4 and 9 regarding participants' reflection on and satisfaction with their experience of using the robot, participants described the significance of having had the robot as company, although exceptionally a caregiver and a family member of participants expressed worries about its usage and discontinuance. The worry of the staff member about participant ID 6 was about taking the robot away, as discussed above in the third issue of our findings, and the concern by the daughter of participant ID 11 was about the robot's wake-up alarm function, which was set accidentally by the participant while she was touching the screen behind the robot in an attempt to control it. According to the daughter, the participant felt uneasy about being awakened and not knowing when she would be spoken to by the robot. After it was taken away, the daughter said: “There was no worry, so she might be relieved.” To solve the issue, the touchscreen function was turned off so that users could not change the settings accidentally. Concerning the issue of not knowing when the robot would speak, there may be the need for a function to turn the robot's speaker on and off, so that users can control it if needed, e.g., at the time of a phone call. Although the daughter also expressed that it had become a burden for her to hear the participant's worries, improvements to the functions suggested above may address such worries.
The remaining participants and relatives or staff members described a variety of benefits from the robot and its role in participants' daily lives, thus expressing their satisfaction. The robot was regarded as a good listener and provider of emotional support, and even after it was taken away, it was considered supportive and encouraging for maintaining life rhythms, such as proper eating and wake-up times, as in the cases of IDs 3, 4, and 6. The main point suggested by the results was that despite the distress of loneliness, participants made their relationships with the robot meaningful and were satisfied in terms of having had it as company, although some improvements were required for a few participants, such as IDs 6 and 11 as discussed above.

As hypothesized, participants had kept the same level of attachment to the robot between the two time points, i.e., when the robot was taken away and 2 months later. They missed it and felt particularly lonely when the robot was taken away, as shown in Table 5. The result of the Likert scale item No. 2 indicated that participants felt lonelier 2 months later compared to the time when the robot was taken away. Although their distress decreased, since most of the participants reported in the interview that they took a week or less to adjust, they might have felt lonely to some extent for a longer period, even 2 months after the robot was taken away. At the same time, however, other results of the Likert-scale items No. 3, 6, and 7 indicated that, despite the distress of loneliness, both the participants and their relatives or supportive staff felt their relationships with the robot were satisfactory. Furthermore, in the interview, none of the participants expressed regret about having the robot to keep them company.

In the interview, participants and their relatives also objected to the ethical criticism against deception by robots. For example, participant ID 3's daughter said: “For us (O3 and R3), it is a robot, but not a mere robot.” Her concern was the robot's value in their relationship with it. Similarly, participant ID 4 said: “It is valuable to have the robot at home,” and explained that she would be happy to build a good relationship with the robot by feeling as if it were more like a human. These reports from participants after their experience of distress were notable: The point was that their valuable relationships with the robot, as well as the benefits they received from it, did matter.

In the adaptation process of participants, some sort of hesitation appeared when they started interacting with the robot as illustrated in the example of participant ID 2. This was mainly due to a general reluctance to use machines and poor reactions by the robot, which required participants to make a certain effort to get used to it in the beginning. Due to its limited functionality of voice recognition, the robot did not always respond to participants, and they also had to speak to it in short sentences, which could hinder the interaction. Furthermore, as they grew attached to the robot, participants were urged to get involved in the interaction and even in showing concern for the robot, e.g., by preparing a cushion for it and being worried about leaving it alone at home while they were out. This might be interpreted as a burden on the user and a case of suboptimal use of robots resulting from attachment as shown in previous research. Regarding a robot vacuum cleaner, people have reported doing pre-cleaning of their living space so that the robot would have an easier time, or even felt empathy for it, sometimes giving it a “day off,” although such behavior is assumed to counteract the robot's utility (Sung et al., 2007; Maris et al., 2020). A question here is if and why it is worthwhile to care about robots.

A possible answer to this question may lie in the way media technology extracts human potential, as pointed out in the comment by the staff member in charge of participant ID 6, e.g., encouraging the participant to express herself and talk to others, not only to staff but other residents. In our trial, participants' interactions with the robot provided them with unusual contexts and intervals that may have led to changes in perspectives on interaction. The robot's small size and design might have been suggestive of a child-like entity that required someone's care and motivated participants to take a role in caring for it, while also prompting them to be open to others based on their relation to it. This was similar to our previous studies with another robot, the teleoperated android called Telenoid, where older adults with dementia were motivated to take a caring role for it and were encouraged to become open and prosocial through this media technology (Yamazaki et al., 2020). Through the interactions with Telenoid, users were encouraged to express themselves and communicate with others, including the robot, other residents, and staff members. Moreover, the robot elicited imaginary conversations from older adults with a wide range of cognitive deficits, as well as their spontaneous willingness to assist it (Yamazaki et al., 2012). By using robotic media such as Telenoid and RoBoHoN, frail older adults who are likely to take a passive role are empowered and placed in positions to do what they can for others.4

Here lies another issue concerning the imaginative nature of our encounters with others. Failure to apprehend the world accurately is regarded as “a (minor) moral failure” (Sparrow and Sparrow, 2006), but once robotic media suitably catalyzes the imagination of people, a space opens where they can see others (Nancy, 1988). This is analogous to, for example, reading a novel. Text media makes it possible to evoke an imaginary world where we can encounter our favorite persons or characters. We note that such imaginary moments are also involved in real-life perception. From a phenomenological point of view, as expressed in such terms as the “imaginative texture of the real” (Merleau-Ponty, 1964), reality is always constitutively imaginative from a present or a “here,” which is the base point of perception that embodies subjects.5 Furthermore, in our trial, while imaginatively fostering their relationships with the RoBoHoN robot, participants also started opening up, as in the cases of participant IDs 2 and 6, and expanding their relationships with other people (Yamazaki et al., 2021). While using the robot, some participants invited other people to their rooms to talk about and with the robot together, as in the cases of participant IDs 3 and 4 (Yamazaki et al., 2021), although it was questionable how long their interest in this could last. Such ad hoc relationships could have been very short-lived since they seem to be based on a novelty effect.

We asked how the robot user's concerns were shaped in the process of adaptation. In the beginning, participants were concerned with how to treat the machine. As they built relationships with the robot, their concern shifted to taking care of it and at the same time enjoying benefits from it. In spite of the distress felt after it was taken away, they had no regrets and were even happily convinced of the value of their encounter with it. In short, the point was that the user's relation to robots was not static but was able to change in the process of adaptation. As shown by trial results previously reported (Yamazaki et al., 2021), the participants felt various effects of their interaction with the robot. As for psychological effects, they were comforted by receiving replies from the robot. It was a major experience for them to receive responses at any time. With the sense of someone's presence gained through the interactions, they could avoid feeling lonely. Regarding the social aspect, participants adopted social attitudes toward the robot, which seemed to regulate their lives, e.g., making them get up and go to bed earlier, take care of it, and not feel ashamed of their former way of living. Once they were attached to the robot, based on their bonding, participants may also respond to requests from the robot. They played together and sang songs when the robot asked participants to do so. Furthermore, as in the cases of participant IDs 4 and 6 with respect to eating and wake-up time, it was found that some participants continued the habits formed while using the robot, even after it was taken away. This idea can be extended to the physical aspect, and we may ask participants to exercise or perform light-duty work to increase their physical activity levels. Here, there is potential for the media technology to lead the users in a specific direction by providing positive feedback so they can be more confident about the advantages gained by using technical media.6

What ethical concerns arise when we are led by technology? A direct implication in this line of exploration is that persuasive technology may put human autonomy at risk: Can older adult users exercise certain freedom in determining how their will and behaviors are being changed while being influenced by media technology? What is required is “relational freedom,” a term coined by Verbeek: the freedom to shape our subjectivity while interacting with technology, allowing us to adjust its influence accordingly (Verbeek, 2011). Based on Foucault's thoughts on freedom as an alternative to the notion of autonomy, we need to consider and acknowledge that any form of media experience must be critically examined when it dominates users without a way to modify its impact. Approaches to respecting the user's will can be drawn from research ethics as well as from the Belmont Report (The National Commission for the Protection of Human Subjects of Biomedical Behavioral Research, United States, 1979; Sedenberg et al., 2016). The principle of Respect for Persons presented in the report is embraced by Informed Consent, whose key component is the individual willingness to volunteer oneself in the effort to protect people against coercion or unjustifiable pressure. Thus, in addition to an explanatory approach, which simply follows the users' will, we can also propose the adoption of a feedback approach to changing the users' will by convincing them of greater advantages (Yamazaki et al., 2019). The latter approach allows the users to maximize their benefits from persuasive technology, and instead of seeing it merely as a threat to autonomy, we can pursue ways of using it to assist older adults, e.g., assisting with their lives and helping them to regain the freedom to encounter others or, in other words, to move into the public sphere (Arendt, 1961).

We proposed exploring and identifying issues that older adult users may face during their adaptation to life with a robot and then the loss of it after using it. Accordingly, this study aimed to explore the implications of user attachment, which has been a target of criticism, i.e., once a user has become attached to a robot, taking it away may cause emotional distress. We also investigated the consequences older adults might face by becoming attached to a robot. Our investigation revealed that despite the distress of loneliness, participants made their relationships with the robot meaningful and were satisfied in terms of having had the robot to keep them company. For the users, their valuable relationships with the robot, as well as the benefits they received from it, mattered, regardless of the distress. We have also identified issues to be addressed and discussed suggestions to make the users more content and their surroundings more comfortable for life with a robot, as well as suggestions on how to make the user's relationship with the robot more beneficial.

Furthermore, we shed light on what older adults care about and how their concerns are shaped concerning robotic media technology, including its negative impact on the users. Our research opened new directions for ethical and social investigations into robot usage regarding the user's adaptation processes and the factors involved, especially with a focus on the user's adaptation to life after the robot has been taken away. Despite the negative after-effects, such as the distress of loneliness, the results of our trial raise new questions for further investigation into the factors that can affect a user's adaptation processes. Since the user's relation to a robot is not static but open to change, we need to further explore, on a larger scale, how the adaptation of older adults to a robot can successfully proceed in their lives. Moreover, we need to learn more about what can affect the processes, such as duration of use, daily activities, health concerns, social relations, and personalities, so that we can clarify for whom robotic companionship is suitable and how those issues should be effectively resolved. Regarding the consequences of using a companion robot, it is important to evaluate the positive aspects of the robot's usage, which include its emotional support for the users and encouragement of lifestyle maintenance, as well as its function as a buffer in family conflict and support of the user's social interactions with others (Yamazaki et al., 2021). Based on these findings, we emphasize the significance of real-world exploration into the effects on users, as well as a theoretical reflection on robot usage. As the benefits that users can gain from robot usage become clear, the responsibility for its application in real situations can be a topic of further discussion in examining theoretical models, e.g., the vulnerability model (Goodin, 1985), which emphasizes the ability to help.

For further investigations, comparative research with a control group is needed. The lack of a control group and the small sample of participants were limitations of the current study. In that regard, this is still a very preliminary pilot study, and as another limitation of our trial, we could not measure loneliness for all participants before providing the robot. Future work must resolve this matter to enable a comparison between situations before and after robot usage. Furthermore, this work should also be carried out cross-culturally with different types of robots and other media, such as normal speakers and zoomorphic and android robots, to elucidate different media effects. The degree of technology acceptance would differ depending on the cultural context. By “culture,” for now we simply mean geographical distinction, and in our preliminary trial, all participants were Japanese, whereas people from other cultures may show different attitudes toward the RoBoHoN robot. Studies have shown that expectations toward robots tend to differ culturally; for example, when Japanese people encounter the word “robot,” they assume a greater emotional capacity for human-sized robots than do Koreans or Americans, and Europeans tend to assume that robots should be pragmatic assistants for specific tasks (Nomura et al., 2005; Ray et al., 2008). Regarding such expectations, it has also been reported in another study that Japanese participants would like a robot to give them a massage, which is a very interpersonal task, while Europeans ranked this much lower (Haring et al., 2014). Moreover, not only the users' reactions but also societal acceptance may differ depending on the culture. For example, when an android robot was installed in the homes of seniors living alone in Denmark, the issues emphasized in the media reports were related to the loss of human relations and the curtailment of human emotions by replacing humans with machines, whereas such criticism did not appear in Japan (Yamazaki et al., 2014). This may originate in our historically different perspectives on whether technological progress or machines challenge our specificity (Kaplan, 2004). To explore acceptable conditions of technology and its impact on societies, these kinds of cultural issues and diversity need to be more deeply discussed and investigated in future work. Through all of these endeavors, we envision the possibility of finding effective ways to extract human potential by making use of interactive media, resulting in behavioral changes, such as promoting conversation, improving personal habits, and developing relationships that can be characterized by greater comfort and openness to others. Meanwhile, we seek to understand the essential features of media communication, particularly the ambient rhythm that gives meaning to and transforms social interactions, including human-machine binary boundaries, norms, and ethics. This could guide media users to a type of living, even play, where everyday roles change, which may through repetition eventually affect their lifestyles and sense of values.

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

The studies involving human participants were reviewed and approved by the Ethics Committee at Osaka University. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

RY was responsible for the study design, performed the analysis, and wrote the draft of the manuscript. Participants were recruited by YN, YS, MS, HK, and MI. Material preparation and data collection were performed by RY, SN, YN, and YS. All authors contributed to the study planning, read, and approved the final manuscript.

This work was partially supported by Innovation Platform for Society 5.0 at MEXT and JSPS KAKENHI Grant Numbers 19K11395, 20K01216, and 21KK0232.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcomp.2023.1129506/full#supplementary-material

1. ^What if you fall in love with a machine, e.g., an android robot? The distinction between humans and machines has been regarded as important in previous studies (Huber et al., 2016). Huber et al. (2016) argue, from a Kantian point of view, for a responsibility toward the user regarding the discovery of whether one is dealing with a human or a robot. However, we can further discuss this approach and seek to explore a different direction. Nørskov et al. (2023) suggest imagining that, on the day before your wedding, a trusted authority raises uncertainty about the ontological status of your partner, i.e., they might be a machine. Your decision on a contract is required, but what if you reject an android spouse? The robot is phenomenologically still indistinguishable from a human. In Kant's (2005) view, we must not reduce another ensouled rational being to being nothing more than the means to an end; however, as discussed in Nørskov et al. (2023), the reduction of the android to a pure means to an end is what we declare with the rejection. On the other hand, the acceptance of the relationship transcends the dichotomy of human and android and requires us to explore a fresh self-understanding as well as new social norms and systems.

2. ^Participant ID 8 refused MMSE testing, so her score is designated with “n/a” as not available. Likewise, this designation is used elsewhere when information is unavailable.

3. ^As with the ECR-GO, there were 30 items in the questionnaire. Each person's score was calculated by summing all values in each subscale dimension and dividing the sum by the number of items in that subscale. Items denoted with an asterisk (*) must be reverse-scored before item responses are averaged. Both the original Japanese version of the ECR-GO-RB and an English translation are included as Supplementary material.
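The scoring procedure described in footnote 3 can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' analysis code: the 1 to 7 response range, the subscale names, the assignment of items to subscales, and the choice of reverse-scored items are all hypothetical, and the example uses six items rather than the 30 in the actual questionnaire.

```python
def reverse_item(value, scale_min=1, scale_max=7):
    """Reverse-score one response on a scale_min..scale_max scale (range is an assumption)."""
    return scale_min + scale_max - value


def subscale_scores(responses, subscales, reversed_items, scale_min=1, scale_max=7):
    """Return the mean item score per subscale, reversing flagged items first.

    responses:      dict mapping item number -> raw response
    subscales:      dict mapping subscale name -> list of item numbers
    reversed_items: set of item numbers to reverse before averaging
    """
    scores = {}
    for name, items in subscales.items():
        adjusted = [
            reverse_item(responses[i], scale_min, scale_max)
            if i in reversed_items
            else responses[i]
            for i in items
        ]
        # Sum all values in the subscale and divide by the number of items.
        scores[name] = sum(adjusted) / len(adjusted)
    return scores


# Hypothetical six-item illustration; item numbers and groupings are made up.
responses = {1: 6, 2: 2, 3: 5, 4: 1, 5: 7, 6: 4}
subscales = {"subscale_a": [1, 2, 3], "subscale_b": [4, 5, 6]}
reversed_items = {2, 4}

scores = subscale_scores(responses, subscales, reversed_items)
print(scores)
```

Reversing before averaging matters because an unreversed item would pull the subscale mean in the opposite direction from the construct it measures; the real item keys are in the Supplementary material.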

4. ^The role of caregiver and care-receiver can be more easily changed by the robot's minimized design than in normal in-person situations. In fact, the reversal of roles is not just a mirror of ordinary human-human interaction, but a transformed type of interaction resulting in unfolding human potential, as illustrated in other case studies as well (Yamazaki et al., 2013; Mazuz and Yamazaki, 2022).

5. ^For a subject that is always constituted in perspectives from moment to moment, the imagination, which constitutes the invisible of the visible, is necessary. According to Merleau-Ponty, as one is seen in a mirror, the reversibility of the perceiver and the perceived defines our embodiment. This discussion reflects the origin of our self- and other-recognition.

6. ^As explored in our trial, ethical implications of media usage can be found and discussed in the social context, e.g., the goodness of a robot's utility for human potential and needs. Meanwhile, we can further question the risk of pursuing societal needs. As criticized for example in care settings, there has been a variety of ways in which people with dementia can be dehumanized by caregivers, and their well-being could be undermined in the “old culture” of dementia care characterized by command and control (Kitwood, 1997). Person-centered care has been introduced in many countries, but we still need to ask whose needs, or which institutional needs, are prioritized. Furthermore, what if robots are used to satisfy the organizational needs for control and efficiency, e.g., by nations? An essential issue here is if and how professionals, such as scientists and engineers, should take the lead in the ethical aspects of a robot's applications, which requires taking other perspectives into account, such as virtue ethics and engineering ethics. For now, we can point out that if professionals are to meet societal needs by incorporating the ethics of society in general into engineering ethics, due consideration must be given to the interlinked issues of what is regarded as good by the general public, governance, and professional's commitments. There have been discussions on and models of the relation between ethics with respect to whether they are inclusive or independent (Collins and Evans, 2017). In the development and application of robots, the ethical role of professionals in the context of fulfilling societal needs should be further explored, and we need to investigate the potential of extending their community's lead to the general public. At the same time, the voices of the people who actually live with the consequences of technology usage must be brought to the table in professional communities.

Adar, E., Tan, D. S., and Teevan, J. (2013). “Benevolent deception in human computer interaction,” in Proc. of the SIGCHI Conference on Human Factors in Computing Systems (New York, NY: Association for Computing Machinery), 1863–1872. doi: 10.1145/2470654.2466246

Andtfolk, M., Nyholm, L., Eide, H., and Fagerström, L. (2022). Humanoid robots in the care of older persons: a scoping review. Assist. Technol. 34, 518–526. doi: 10.1080/10400435.2021.1880493

Beauchamp, T. L., and Childress, J. F. (2001). Principles of Biomedical Ethics, 5th Edn. Oxford: Oxford University Press.

Boden, M., Bryson, J., Caldwell, D., Dautenhahn, K., Edwards, L., Kember, S., et al. (2017). Principles of robotics: regulating robots in the real world. Connect. Sci. 29, 124–129. doi: 10.1080/09540091.2016.1271400

Brennan, K. A., Clark, C. L., and Shaver, P. R. (1998). “Self-report measurement of adult attachment: an integrative overview,” in Attachment Theory and Close Relationships, eds J. A. Simpson and W. S. Rholes (New York, NY: The Guilford Press), 46–76.