- Research and Development, Innovation Studio, Arts University Bournemouth, Bournemouth, United Kingdom

Within the field of digital musical instruments, there has been a growing number of technological developments aimed at improving access to music-making for disabled people. This study summarizes the development of one such technological system: the Modular Accessible Musical Instrument Technology Toolkit (MAMI Tech Toolkit). The four tools in the toolkit and their accompanying software were developed over 5 years using an action research methodology, with a range of stakeholders across four research sites involved in the development. This study outlines the methodological process, the stakeholder involvement, and how the data were used to inform the design of the toolkit. The accessibility of the toolkit is also discussed alongside findings that emerged from the process. This study adds to the established canon of research on accessible digital musical instruments by documenting the creation of an accessible toolkit grounded in both the theory and the practical application of third-wave human–computer interaction methods. It contributes to the discourse around the use of participatory and iterative methods to explore issues with, and barriers to, active music-making with music technology. The development of each novel tool in the toolkit is outlined, along with the functionality it offers and the accessibility issues it addresses. The study advances knowledge around active music-making using music technology and around working with diverse users to create these new types of systems.

1. Introduction

The use of technology to facilitate access to music-making has attracted growing interest over the last two decades (Frid, 2018, 2019). The rise of new interfaces for musical expression (NIMEs), including digital musical instruments (DMIs) and accessible digital musical instruments (ADMIs), has coincided with greater access to enabling technologies. Cheaper microcontroller and single-board computer platforms, such as Arduino (2015), Raspberry Pi (Raspberrypi.org, 2020), Bela Boards (Bela, 2023), and Teensy Boards (Stoffregen, 2014), alongside the increased availability of sensors and prototyping technologies such as 3D printing (Dabin et al., 2016), have heralded the development of many new music-making tools (Graham-Knight and Tzanetakis, 2015; Kirwan et al., 2015). Authors have covered a range of topics related to ADMIs, including design and development (Förster et al., 2020; Frid and Ilsar, 2021), targeting specific populations (Davanzo and Avanzini, 2020a), evaluation of NIMEs (Lucas et al., 2019; Davanzo and Avanzini, 2020b), evaluating the use of electronic music technologies (EMTs) (Krout, 2015), integration into specific settings (Förster, 2023), and the customization, augmentation, and creation of tools for specific users (Larsen et al., 2016). The literature provides insights into the challenges and opportunities of creating ADMIs and offers recommendations for future research and development. These challenges have historically included fear of, dislike of, or indifference toward technology (Farrimond et al., 2011); lack of confidence when putting technology into practice (Streeter, 2007); musical output that can be perceived as uninspiring, artificial, and lacking expression (Misje, 2013); and tools perceived as impersonal and lacking sophistication (Streeter, 2007). Technology can also be seen as a barrier when coupled with a lack of formal training and exposure (Magee, 2006) and the perceived need for insider knowledge to use it (Streeter, 2007).
More recent studies of electronic musicians' perceptions of these types of tools indicate that many of these issues remain current. Research by Sullivan and Wanderley (2019) suggests that durability, portability, and ease of use are of the greatest importance to performers who use DMIs. Frid and Ilsar (2021) identified several frustrations in their survey of 118 electronic musicians (40.68% professional musicians and 10.17% identifying as living with a disability or access requirement), including software and hardware limitations, time-consuming processes, the need for more or new interfaces, and the physical space required for equipment.

Presented here is the development of a technological system, the Modular Accessible Musical Instrument Technology Toolkit (MAMI Tech Toolkit), that aims to address some of these issues and to leverage the unique power of technology to provide an accessible way for users to participate actively in music-making. The research examined barriers in current technology for music-making and focused on creating easy-to-use, small-form-factor, wireless technology. This study has a particular focus on moving away from screens toward tangible, physical, flexible, and tailorable tools for active music-making. The contributions of this study center on the description of the action research methodology used to develop the toolkit. Throughout, there is an emphasis on using participatory and iterative methods to elicit current issues and to explore barriers with music technology. These issues and barriers then form the basis for developing novel tools to address gaps in provision. These contributions advance knowledge about the role of music technology in facilitating access to active music-making for diverse user groups.

2. Related work

2.1. New tools for music-making

New tools for music-making can be classified in a variety of ways. Wanderley (2001) proposed classifying NIMEs into three tiers: instrument-like controllers, whose input device design tends to reproduce features of existing (acoustic) instruments in detail, as seen in developments such as Strummi (Harrison et al., 2019) and John Kelly's “Kellycaster” (Drake Music, 2016); augmented instruments, in which an instrument is augmented with sensors to extend its functionality, such as the Electrumpet (Leeuw, 2021); and alternate controllers, whose design does not follow that of an established instrument, for example, the Hands (Waiswisz, 1985). These new tools can be configured in different ways: as controllers whose processing is handled by a separate computer, or as instruments with onboard processing and sound production, the latter exemplified by the Theremini (Moog Music Inc, 2023). These systems range from off-the-shelf components used as controllers (Ilsar and Kenning, 2020) to entirely bespoke assemblages of hardware and software that may or may not integrate with existing music technologies. They may be purely research artifacts or commercial products (Ward et al., 2019). Frid (2018) conducted an extensive review of ADMIs presented at the NIME, SMC, and ICMC conferences and identified seven control interface types, including tangible controllers. Using these classification systems, the tools in the MAMI Tech Toolkit can be classified as tangible (Frid, 2018), touch-based, and alternate (Wanderley, 2001) controllers.

2.2. Commercially available technology

There are commercially available technologies designed for accessibility, such as the Skoog (Skoogmusic, 2023), Soundbeam (Soundbeam, 2023), AlphaSphere (AlphaSphere, 2023), and Musii (Musii Ltd, 2023). What these instruments have in common is that they offer modalities of interaction that move away from the traditional acoustic instrument paradigm: there are no strings to pluck, skins to strike, reeds to blow, pipes to excite, or materials to resonate, and there are also no screens to interact with to play them. Reduced to its essentials, what remains is interaction via touch or empty-handed gestures. One example of a touch-based controller is the Skoog, a cube-shaped controller with a “squishy”, velocity-sensitive “skin” that, when depressed, controls sounds as defined in its software (which requires a computer or tablet connection); the software allows both the sound produced and constraints on it (e.g., scale and key) to be set. The Soundbeam is an empty-handed gestural interface that uses ultrasonic beams as “an invisible keyboard in space” (CENMAC, 2021) to trigger notes or samples when a beam is broken. It is a long-standing ADMI, in use since the late 1980s, featuring integrated synthesis and processing and connecting to speakers or monitors for sound production. The Soundbeam has been a transformative tool in over 5,000 special education settings and adult day centers (Swingler, 1998). Another example is the AlphaSphere, a spherical MIDI controller 26 cm in diameter with 48 velocity-sensitive pads over its surface, used with proprietary software. The Musii is a 78 cm × 92 cm multisensory interactive inflatable featuring three “prongs” that change color and modulate sound when pushed in or squeezed; its sounds and lights can be changed remotely, and it includes an integrated vibrating speaker.

2.3. Music-making apps

Apps have been used to facilitate access to music-making through touch screens and integrated sensors. Tablets (in particular iPads), which are common in school settings, have been used to facilitate music therapy (Knight, 2013; Krout, 2014). The ThumbJam app (Sonosaurus, 2009) contains 40 sampled instruments, hundreds of scales with playing styles, arpeggiation, and a customizable graphical user interface (GUI) (Matthews, 2018). It also offers looping, effects, manipulation of sonic content, instrument creation, and the recording, import, and export of data. Another generic music app is Orphion (Trump, 2016), which offers sound-making possibilities through interaction with the screen and/or motion of the device. There are also tablet versions of software such as GarageBand that offer direct touch-based access to sound creation and manipulation via the GUI, providing a different mode of access from a computer mouse and keyboard.

2.4. Organizations

Several organizations and charities provide much-needed help in navigating the use and integration of these tools. Charities such as Drake Music (Drake Music, 2023), OpenUp Music (OpenUp Music, 2023), and Heart n Soul (Heart n Soul, 2023) facilitate music-making through technology and inclusive practices for disabled musicians, often supporting the development and implementation of technology. One development that sprang from OpenUp Music is the Clarion (OpenOrchestras, 2023), an accessible music technology that can be used with eye-gaze systems (which detect users' eye movements) or on an iPad, PC, or Mac. Clarion allows a GUI to be created to suit the user and offers customizable sounds that can be mapped in different ways to allow expression. This type of customizable and scalable mapping is a leap forward in moving from an instrument-first paradigm to a human-first approach, in which instruments are built around the user rather than the other way around.

2.5. Developing for and with disabled users

The toolkit was developed in line with the underlying principles of the social model of disability. Oliver (1996) states that “the individual (or medical) model locates the ‘problem’ of disability with the individual” (ibid, p. 32), whereas the social model of disability “does not deny the problem of disability but locates it squarely with society” (ibid, p. 32). These principles highlight the importance of asking people what they need and using this to develop design directions (Skuse and Knotts, 2020). It is paramount to center the perspectives of disabled people and of those around them who know them very well (with the caveat that taking the opinions of others as a proxy for those of the users themselves will always raise issues), and to conduct “works that propose a position of pride against prejudice” (Ymous et al., 2020, p. 10). At the forefront of this research was making the research accessible to participants, both in the methods chosen for gathering data and in feeding that data back to participants in ways that suited them and matched their needs. This aligns with the work of Mack et al. (2022) on accessibility in human-centered research methods.

2.6. Constraints and expression

When developing new technological tools for music-making, particularly alternate controllers, the control interface becomes decoupled from the sound-generation mechanism. In traditional instruments these are bound together, because the acoustic properties of the instrument are directly related to its physical construction. The decoupled relationship therefore needs careful reconstruction to ensure that users can interact with the tool, that the output is engaging, and that it is suitable for and understood by the user. As Bott (2010) identifies, distinguishing between access needs and learning needs is key to determining the musical possibilities for an individual. These needs are often interrelated, but making the distinction can start to cut through what might otherwise seem impenetrable complexities (ibid) when designing tools to suit individual users. Instruments and tools can be constrained by design to suit the needs of the user. The unique qualities of technology provide opportunities to configure systems to users, and constraints can be put in place to scaffold this. Sounds can be designed to be motivating to the user, and mechanisms for playing them can be constructed to suit the user's particular interaction paradigm; for example, small gestures can be translated into greatly amplified sound, or large gestures can be mapped to nuanced control of sound.
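The last point, fitting the mapping to the size of a user's gestures, can be illustrated with a simple calibrated mapping. The following Python sketch is not taken from the MAMI Tech Toolkit: the class name `GestureMapping`, its fields, and the 0-127 output range (the range of a MIDI control value) are assumptions chosen for illustration. Calibrating the input range to what a user can comfortably produce lets a small movement span the full output, while a response curve gives a wide movement finer control at the low end.

```python
from dataclasses import dataclass


@dataclass
class GestureMapping:
    """Hypothetical per-user mapping from a raw sensor reading to a 0-127 value."""

    in_min: float  # smallest reading the user comfortably produces
    in_max: float  # largest reading the user comfortably produces
    curve: float = 1.0  # < 1 boosts small movements; > 1 gives finer control at the low end

    def to_control(self, raw: float) -> int:
        span = self.in_max - self.in_min
        if span <= 0:
            raise ValueError("in_max must be greater than in_min")
        # Normalize the reading to 0..1, clamping anything outside the calibrated range.
        x = min(max((raw - self.in_min) / span, 0.0), 1.0)
        # Shape the response, then scale to the 0-127 range used by MIDI controllers.
        return round((x ** self.curve) * 127)


# A user with a small range of movement: 40 sensor units span the full output range.
small = GestureMapping(in_min=500, in_max=540)
# A user with large gestures: a wide input range and a curve that spreads out the low end.
large = GestureMapping(in_min=0, in_max=1000, curve=2.0)

print(small.to_control(520), large.to_control(500))  # prints: 64 32
```

Here a 20-unit movement moves the first user to the middle of the output range, while the second user must move halfway across a much wider range to reach only a quarter of it.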

The issue of expression and constraints in the creation of DMIs has been explored in broader discourse, for example, by Jordà (2004), who discusses balancing relationships of “challenge, frustration, and boredom” (ibid, p. 60) with stimulation and placation. Balances must be struck between the learning curve, how users can interact, and what the user can ask the instrument to do. Technology use can range from a single button that triggers one set of notes to instruments with a one-to-one mapping of gesture to sonic output (such as the theremin). Constrained interactions can offer benefits, limitations, and creative playing opportunities (Gurevich et al., 2010). This must be balanced against the time and learning curves involved, as less constrained instruments can require hours of practice to attain virtuosity (Glinsky, 2005). This can be a barrier that alienates some users; however, heavily constrained instruments may quickly lead to mastery and then boredom. The tools in this toolkit explore the range of interaction that Jack et al. (2020), quoting Wessel and Wright (2002), describe as a “low entry fee to no ceiling on virtuosity” (Jack et al., 2020, p. 184). Considering these elements can help to point to “what might be considered essential needs for different types of musicians” (Jordà, 2004, p. 60). While constraining musical output may inspire creativity and remove frustrations by initially opening up accessibility, as seen in the study by Gurevich et al. (2010), it may demotivate players in the long run if the constraints hamper their ability to play the way they wish.
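The spectrum of constraint described here can be sketched as three mapping functions of increasing freedom. This is a hypothetical Python illustration, not code from the MAMI Tech Toolkit: the function names, the C major chord, the major pentatonic scale, and the 110-880 Hz pitch range are all assumptions. Each function takes a control value (a button state or a normalized 0..1 sensor value) and returns what would be played.

```python
# Semitone offsets of a major pentatonic scale within one octave (an assumed choice).
MAJOR_PENTATONIC = (0, 2, 4, 7, 9)


def single_trigger(pressed: bool) -> list[int]:
    """Most constrained: any press plays the same pre-chosen chord (C major, as MIDI notes)."""
    return [60, 64, 67] if pressed else []


def scale_quantized(x: float, root: int = 60, octaves: int = 2,
                    scale: tuple[int, ...] = MAJOR_PENTATONIC) -> int:
    """Middle ground: a 0..1 control value picks a MIDI note, but only from the chosen scale."""
    steps = len(scale) * octaves
    # Clamp to 0..steps-1 so out-of-range values (including x == 1.0) stay playable.
    index = min(int(max(x, 0.0) * steps), steps - 1)
    octave, degree = divmod(index, len(scale))
    return root + 12 * octave + scale[degree]


def continuous_pitch(x: float, low_hz: float = 110.0, high_hz: float = 880.0) -> float:
    """Least constrained: theremin-like, every control value maps to its own frequency."""
    # Exponential interpolation, so equal movements give equal musical intervals.
    return low_hz * (high_hz / low_hz) ** x


print(single_trigger(True), scale_quantized(0.5), continuous_pitch(1.0))
# prints: [60, 64, 67] 72 880.0
```

Moving from `single_trigger` to `continuous_pitch` lowers the constraint but raises what the player must control precisely to stay in tune, which is the trade-off between entry fee and ceiling discussed above.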

2.7. Third-wave human–computer interaction

The toolkit is situated in third-wave human–computer interaction (HCI), in which the earlier focus on task-based efficiency of information transfer and on technology-centric operation of computer systems by humans is exchanged for a more socially situated and embodied view of the user in a “turn to practice” (Tzankova and Filimowicz, 2018). This practice paradigm looks at HCI through a lens of real-life unfolding processes situated in time and space, with technology as one aspect of the situation (Kuutti and Bannon, 2014). Third-wave HCI engages users in “in the wild” contexts (Bødker, 2006), with an emphasis on human meaning-making, situated knowledge, and grappling with the full complexity of the system (Harrison et al., 2007). This third wave “considers the ‘messy’ context of socially situated and embodied action which introduces humanistic and social science considerations into design research” (Tzankova and Filimowicz, 2018, p. 3). The practice paradigm features in-situ, extended activities involving people and artifacts within their daily practices and organizational routines, with more developmental and phenomenological orientations being used (Kuutti and Bannon, 2014). In the research described in this study, both the development and the practical use of the tools were embedded within their context of use, designing for and with central users and their facilitators through an action research methodology. At the forefront were activities that held meaning for the participants; these meaning-making activities informed the development of the toolkit, incorporating participants' feedback from real-world usage scenarios.

2.8. Research through design

In carrying out this research from a user-centric position, with the aim of creating new designs with accessibility in mind, it is relevant to acknowledge related fields in which similar questions have been asked. Research through Design (RtD) is one such field; it aims to understand users' perspectives, behaviors, and feedback and uses this understanding to inform the design process and ensure that the final design solution meets users' needs, preferences, and expectations. The toolkit shares the RtD sentiment of being “an attempt to make the right thing: a product that transforms the world from its current state to a preferred state” (Zimmerman et al., 2007). This also aligns with the tenets of action research (finding improved solutions in current situations). The prototypes used design as a means of inquiry, as is common in RtD, and design practice was applied to situations of significant theoretical and practical value to the users.

2.9. Technology prototypes as probes

In this project, prototypes were used as “technology probes” (Hutchinson et al., 2003). Technology probes are simple, flexible, and adaptable technologies with three main goals:

• A social science goal of “collecting information about the use and the users of the technology in a real-world setting” (Hutchinson et al., 2003, p. 18).

• An engineering goal of field testing the technology (ibid).

• A design goal of inspiring users and designers to think of new kinds of technology (ibid).

Technology probes can be presented at various fidelities, from low-fi physical hardware prototypes to rudimentary examples of the system in use, or as fully functioning prototypes. They become more refined as the research progresses and are informed by interactions with stakeholders. In RtD, these types of technology probes have also been called “products” (Odom et al., 2016), and they can be used to support investigations into distinct kinds of experiences, encounters, and relationships between humans and interactive technology (ibid). Other researchers have designed ADMIs as products (Jack et al., 2020) and outlined four key features of products: they should be inquiry-driven, designed to “provoke users in their environment to engage them in an enquiry” (ibid, p. 2); have a complete finish, with the focus not on what the design could become but on what the artifact is; fit convincingly into the everyday scenario they are designed to be used in; and be usable independently of the researcher (ibid). Probes and products are useful lenses for the MAMI Tech Toolkit: the three technology probe goals describe what the toolkit aimed to elicit throughout the research journey, whereas the four key features of products describe how its final iteration was designed.

3. Methods

3.1. Research sites and stakeholder involvement

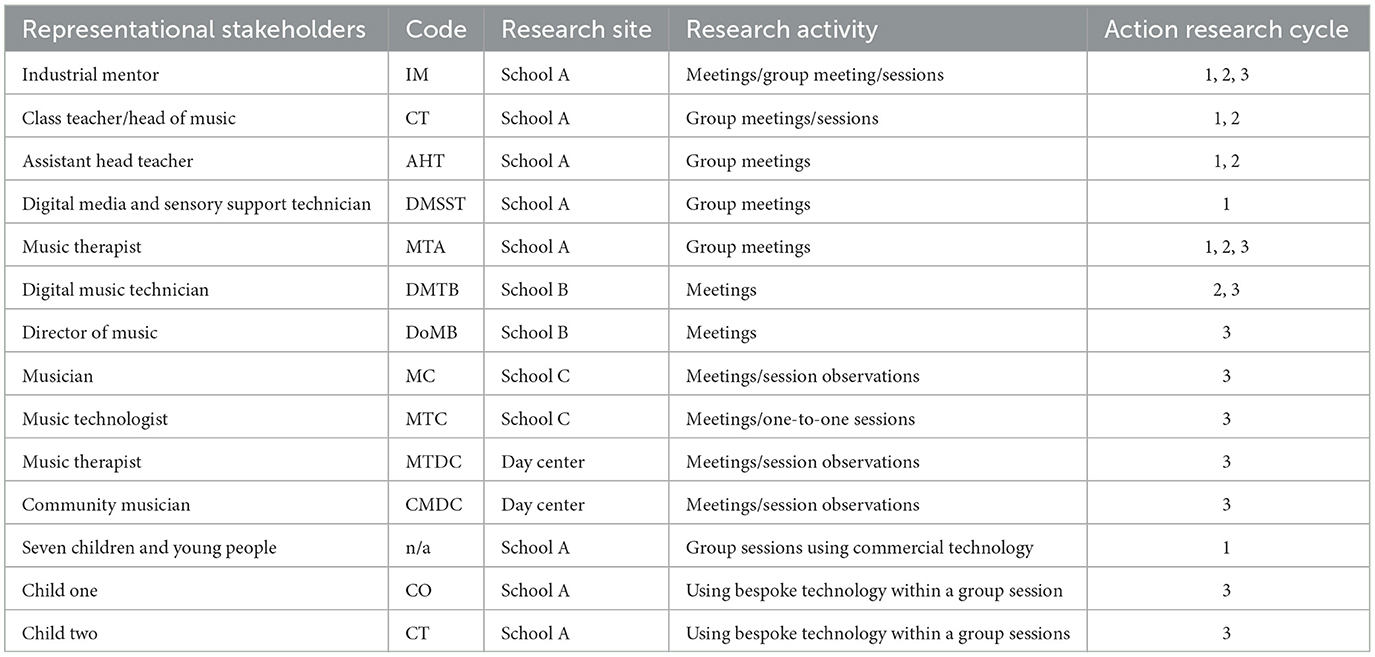

The toolkit was developed over 5 years with four research sites (three special educational needs schools and one day center for adults with disabilities) as part of an engineering doctorate undertaken by the author. As part of this doctorate, the main school (school A in Table 1) was the industrial sponsor of the research, and an industrial mentor (the creative technologist at school A) was assigned to mentor the researcher in developing the project. A team of stakeholders (Table 1) whose practice is directly related to the research also assisted with the research. Table 1 outlines the roles of the stakeholders, the research sites at which they worked, and the activities they were involved in as part of the research.

Eleven practitioners (industrial mentor, class teacher/head of music, assistant head teacher, digital media and sensory support technician, music therapists, digital music technician, director of music, musician, music technologist, and community musician) and nine children and young people (CYP) participated in the research as stakeholders. A limitation of this research is that the specific needs and ages of the CYP involved were not all gathered, owing to a combination of ethical, logistical, and time constraints.

The fieldwork involved consulting, observing, and engaging with practitioners and CYP in their naturalistic environments. Some practitioners already used music technology within their practice, which allowed case studies to be conducted to inform the creation of technical specifications. Other practitioners did not use technology; in these cases, insight was gained into the role technology might play in enabling active music-making. These cases were also helpful for considering how to integrate technology with more traditional approaches and how traditional acoustic and digital musical instruments could be combined. Direct requests from stakeholders were gathered, including specific desired features and functionality, alongside explorations of form, function, and context of use. From this, a set of design considerations was developed (Ward et al., 2017). Prototypes of the tools were presented to stakeholders throughout, most regularly to the industrial mentor (a person appointed by the industrial sponsor school as lead stakeholder). These prototype tools were used as probes that iteratively provided physical manifestations of the stakeholders' requirements; they were used both to gather feedback and to engage stakeholders in thinking about the next design steps, whether refining designs or adding features or functionality.

3.2. Action research

Action research (AR) was the methodology used to conduct the research. AR seeks to bring together action and reflection, and theory and practice, in a participatory process concerned with developing practical solutions to pressing concerns for individuals and communities (Reason and Bradbury, 2008). The focus of AR is to create a democratic atmosphere in which people work together to address key problems in their community and develop knowledge embedded in practical activities for human flourishing (ibid). In this research, the aim was to provide access to music-making by mobilizing the expertise of the stakeholders. This informed the final design outcome and situated the tools within their context of use. AR also allowed practitioners to join the research as stakeholders when their expertise was needed, and allowed the design process to follow organically the needs of those at the center of the research.

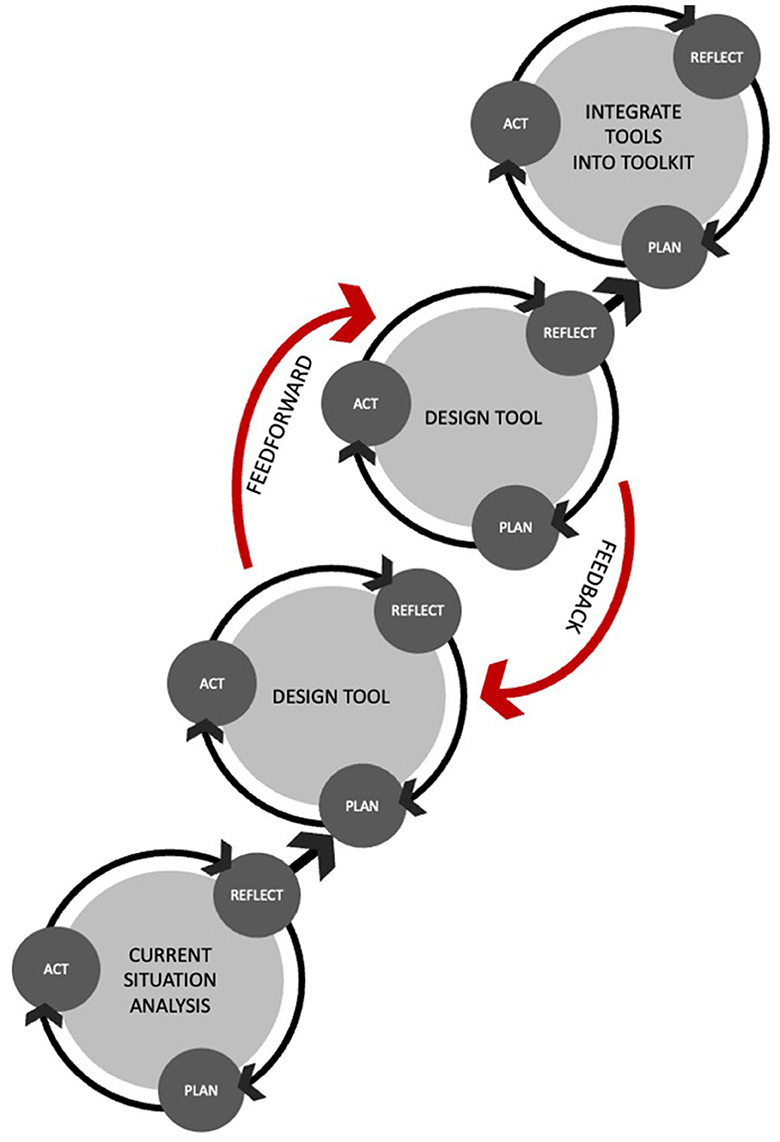

This “orientation to inquiry” (Reason and Bradbury, 2008, p.1) involved working closely with stakeholders through phases of planning, action, and reflection. These three phases make up each cycle of AR, with the outcome of each cycle informing the activities of the next. In the planning phase, the stakeholders work with the researcher to put together a plan of action. In the action phase, the research activity is conducted, stakeholders are interviewed, and the data are summarized and analyzed. In the reflection phase, “researchers and practitioners reflect on, and articulate lessons learned and identify opportunities for improvement for subsequent research cycles” (Deluca et al., 2008, p. 54). Reflecting on the techniques and methods used allows contributions to knowledge to begin to take shape. Each cycle then feeds into the planning phase of the next, and the same basic steps apply to every subsequent cycle; in this research, the first cycle aimed to conduct a situation analysis. Although the research is separated into distinct cycles, there was substantial overlap between them, with feedback and feedforward informing the iterative development of the tools, as shown by the red arrows in Figure 1.

Figure 1. Four completed cycles of action research (adapted from Piggot-Irvine, 2006).

3.3. Data collection

The research approach used throughout data collection is an example of “starting where you are” (Lofland and Lofland, 1994; Robson, 2002, p. 49), in that it began through personal interest and with several connections to stakeholders already in place. The research endeavored to meet people where they were, following stakeholders' leads where possible. This connects with the underpinning methodology of action research, which focuses on creating solutions to problems pertinent to the lives of stakeholders within the research, using cycles of action and reflection to develop practical knowing (Reason and Bradbury, 2008; see Section 3.2 for more details). In practice, this meant physically going to stakeholders' sites of practice and eliciting information through naturalistic methods such as observation of practice and relaxed, open discussion about that practice. The aims and research agenda also remained open so as to follow the needs of the stakeholders and focus on what they considered important.

The data collection methods used for this research combined Creswell's (2014) and Yin's (2018) categories of evidence sources. The sources from Yin (2018, p. 114) used within this research were documentation (emails, agendas, synthesized key points, and observational notes from stakeholder interactions), interviews (face-to-face one-to-one, telephone conversations, emails, video calls, and focus groups), direct observations (of sessions using technology, of practitioners using music therapy in their sessions, and of CYP in music therapy), and physical artifacts (technology probes; Hutchinson et al., 2003). Creswell's (2014) table of qualitative data collection types identifies further sources used, including self-reflective documentation (self-memos on technical developments and on research and analysis, and records of events), technical documentation (a technical development journal kept in an e-notebook and on GitHub), and audio-visual material (videos of sessions, photographs, and audio recordings).

In total, six sessions in which CYP used off-the-shelf music technology were conducted throughout the four cycles of action research. These were group sessions with seven CYP in two separate age groups: primary (aged 5–11 years) and secondary (aged 11–16 years). Also conducted were three focus group meetings with a consistent group of practitioner stakeholders; 29 meetings with the industrial mentor; around eight separate meetings with individual practitioners; three observations of practitioners using music therapy in a live session; and 19 music therapy sessions incorporating elements of the toolkit with the same two CYP.

All sessions were planned with the relevant stakeholders. Observational field notes were taken by the author and then analyzed. The resulting key points were fed back to stakeholders, covering issues and successes, and were used to develop a plan for the next iteration. When practitioners' own sessions were observed, field notes were likewise taken by the author and analyzed to provide data on how to develop the technology, how technology was being used, or how it could be integrated. Interviews and meetings were conducted face-to-face on a one-to-one basis, over the telephone, via email, and in focus groups. Focus groups were used initially to open communicative spaces (Bevan, 2013) for the interdisciplinary stakeholders and to facilitate open discussion about what had been done previously in the setting, what was being done, and what could be done next. The meetings were recorded and transcribed before analysis.

A literature review was also conducted, and updated throughout the research, to identify design considerations and requirements for the toolkit. Google Scholar, Google, and the Bournemouth University Library Catalog were searched using the following keywords: music technology for music therapy, new interfaces for musical expression, music technology and special education needs, music technology SEN, and music technology complex needs. The Nordoff Robbins Evidence Bank (2014) (specifically account no. 16) and Research and Resources for Music Therapy 2016 (Cripps et al., 2016) were also consulted. The selection of articles expanded as the literature was reviewed. Articles were screened for relevance, that is, whether they concerned the use of technology (novel or off-the-shelf) with users with complex needs for active music-making or sonic exploration, featured details of such technologies in use, or explored issues around and/or reviewed music technology in use. Some gray literature was also consulted (O'Malley and Stanton Fraser, 2004; Department for Education, 2011; Farrimond et al., 2011; Ofsted, 2012), as this provided a different perspective on technology use in practice.

3.4. Data analysis

Thematic analysis was used to analyze the data gathered throughout the research, following the process described by Saunders et al. (2015, p. 580). The analysis was carried out solely by the author. Data were coded manually on an activity-by-activity basis, and codes were grouped into themes. This was done after each activity to inform the technical development of the toolkit; the themes were then analyzed in more depth for the doctoral thesis. Coding took a data-driven approach while drawing on both inductive and deductive methods. The author assigned codes describing each unit of data and then formed themes by describing the overall subject of the grouped codes, so that the data could be presented to stakeholders as a report of key findings.
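The coding was done by hand, but the structure it produces (units of data labeled with codes, codes grouped under themes, themes summarized for stakeholders) can be sketched as a small data structure. The Python below is a hypothetical illustration only: the function names, the example codes and themes, and the choice to order the report by how many coded units each theme contains are assumptions, not data or procedures from this study.

```python
from collections import Counter, defaultdict


def group_codes_into_themes(coded_units, code_to_theme):
    """Group coded units of data into themes, counting each code within its theme.

    coded_units: iterable of (activity, code) pairs, one per coded unit of data.
    code_to_theme: dict mapping each code to the theme describing its overall subject.
    """
    themes = defaultdict(Counter)
    for _activity, code in coded_units:
        themes[code_to_theme[code]][code] += 1
    return dict(themes)


def key_findings(themes):
    """List themes by how many coded units they contain (ties broken alphabetically)."""
    totals = {theme: sum(codes.values()) for theme, codes in themes.items()}
    return sorted(totals.items(), key=lambda item: (-item[1], item[0]))


# Invented example codes for illustration only; not data from this study.
units = [
    ("session 1", "cables get in the way"),
    ("session 1", "hard to see the screen"),
    ("session 2", "cables get in the way"),
    ("focus group 1", "wants louder output"),
]
mapping = {
    "cables get in the way": "physical setup",
    "hard to see the screen": "screen dependence",
    "wants louder output": "sound output",
}
print(key_findings(group_codes_into_themes(units, mapping)))
# prints: [('physical setup', 2), ('screen dependence', 1), ('sound output', 1)]
```

Because the grouping is rerun after each activity, new codes can be added to `mapping` as they emerge while earlier themes remain comparable across activities.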

3.5. Action research cycles

Four cycles of action research were conducted within the project span (see Figure 1).

3.5.1. Cycle one

The first cycle aimed to understand the current situation by working with stakeholders to explore the current use of technology and the issues surrounding it. Existing technology for active music-making was explored in the six sessions with CYP using the Orphion app (Trump, 2016) on an iPad connected to an amplifier. The findings from these activities informed the developments in cycle two.

3.5.2. Cycle two

The second cycle aimed to develop the first two tools in the toolkit: the filterBox and the squishyDrum. These were iteratively prototyped with weekly input from the industrial mentor (nine meetings), and prototypes were presented to some of the stakeholders at two separate focus groups held 2 months apart. During this cycle, stakeholders from an additional school (school B) joined the research.

3.5.3. Cycle three

Cycle three aimed to develop another bespoke tool, the Noodler, in close collaboration with a music therapist at school A. In this cycle, the research moved away from creating tools that purely suited individual users toward creating technology that also worked for practitioners, since practitioners facilitate the use of technology within the settings. This allowed a “zooming out” to see the tool as embedded within a bigger context, recognizing that music-making in these settings, with the users involved in this research, constituted the messy real-world scenario described in the third wave of HCI (Bødker, 2006). This necessitated input from more stakeholders, close observation of their practice, and integration of the tools into practice to inform the final designs. Practitioners from a third site (school C) and a fourth research site (the day center) were also consulted. Meetings with stakeholders from school B took place, and sessions run by each of the music therapists, community musicians, and musicians from all of the research sites were observed. These activities were conducted to determine what a typical session might entail and where technology might be deployed or, where it was already being used, how it was being integrated. Weekly sessions (during term time) using the Noodler were held with two children at school A over around 6 months. These sessions aided the development of the software and mappings for that specific tool, which could be considered the most evolved design because of this extended, contextualized use. Meetings were also held with the industrial mentor to discuss the developing tools.

3.5.4. Cycle four

Cycle four aimed to finalize the prototypes of the tools in the toolkit alongside the software elements needed to create a stand-alone kit. This cycle featured analysis and integration of emergent concepts from preceding AR cycles into the finalized toolkit. A fourth bespoke tool, the touchBox, was developed during this cycle as a stand-alone tool that did not require a computer connection and had onboard sound production. The tools were also developed into a cohesive toolkit: software to accompany the filterBox, squishyDrum, and Noodler was finalized, alongside an overall software application from which to launch the individual pieces of software. Resources for use, such as a quick start guide and user manual, were also developed, and iPad connectivity was integrated.

4. Results

4.1. Technical design

Three of the tools (the filterBox, squishyDrum, and Noodler) have both hardware and software elements: they connect wirelessly to a computer, which handles processing and sound production, using nRF24L01 radio modules connected to Arduino microprocessor boards as transmitters and receivers. The fourth tool (the touchBox) is stand-alone, with an integrated Teensy 3.2 microcontroller with an audio adaptor, a screen, and a speaker. Max/MSP (Cycling'74, 2023) was used to develop the software for the system, which was then built into an application that could run on a Mac or PC. The application was tested at the technical specification levels found on the school computers and could run directly from a USB stick so that installation would not be a barrier. The audio consisted of synthesized sounds produced in Max/MSP via embedded virtual studio technology (VST) plug-ins or Max/MSP's own synthesis engine, sample triggering (short clips of sounds or music), or musical note triggering via MIDI. The final toolkit software was built on an underpinning software element developed by the industrial mentor, which was considerably augmented and extended to work specifically with the tools in the toolkit. A video demonstration of the toolkit in action can be found here: https://youtu.be/HIce1nJX4Us.
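The wireless link described above can be illustrated with a minimal sketch of how one sensor frame might be packed into, and unpacked from, a single radio payload. The field layout, the `SensorFrame` name, and the 10-bit sensor ranges are hypothetical assumptions rather than the toolkit's actual protocol (which lives in the Arduino code on GitHub); the only fixed constraint used is that a single nRF24L01 packet carries at most 32 bytes. Python is used here for readability; on the boards themselves this logic would be written in Arduino C++.

```python
import struct
from typing import NamedTuple

# Hypothetical packet layout (NOT the MAMI Tech Toolkit's actual format):
# 1 byte tool ID, 1 byte button bitmask, three unsigned 16-bit sensor values,
# little-endian ("<") so no padding bytes are inserted.
PACKET_FORMAT = "<BBHHH"
PACKET_SIZE = struct.calcsize(PACKET_FORMAT)  # 8 bytes
NRF24_MAX_PAYLOAD = 32  # maximum payload of a single nRF24L01 packet

assert PACKET_SIZE <= NRF24_MAX_PAYLOAD


class SensorFrame(NamedTuple):
    tool_id: int   # which tool sent the frame
    buttons: int   # bit 0 = button 1, bit 1 = button 2, ...
    fsr: int       # raw 10-bit ADC reading (0-1023), assumed
    ldr: int       # raw 10-bit ADC reading (0-1023), assumed
    aux: int       # spare channel


def encode(frame: SensorFrame) -> bytes:
    """Transmitter side: pack one frame into the fixed-size radio payload."""
    return struct.pack(PACKET_FORMAT, *frame)


def decode(payload: bytes) -> SensorFrame:
    """Receiver side: unpack a payload, rejecting anything of the wrong size."""
    if len(payload) != PACKET_SIZE:
        raise ValueError(f"expected {PACKET_SIZE} bytes, got {len(payload)}")
    return SensorFrame(*struct.unpack(PACKET_FORMAT, payload))
```

A fixed-size, fixed-order layout keeps the receiver simple: a payload of the wrong length can be rejected outright rather than misread as sensor data.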

4.2. Mapping strategies

Mapping strategies took the user's gestures as input, then processed and coupled them to a sonic output. Two main mapping strategies were used to design the gesture-to-sound couplings within the tools in the kit. Simple mapping strategies are one-to-one (a single gesture produces a single sound, such as pressing a button to trigger a sample or note), whereas complex mapping strategies are many-to-one. Complex many-to-one mappings can be understood by analogy with the violin: to bow a note, the player must control both the pressure and the movement of the bow on the string, as well as place a finger on the fingerboard to change the note as needed, so many inputs are needed to achieve one output. The different tools in the toolkit used different mapping strategies to cover the continuum from instant access with strong cause and effect to the potential for virtuosic and heavily nuanced control.
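The contrast between the two strategies can be sketched as two functions, each returning a single note as a (MIDI note, amplitude) pair. The function names, the normalized 0.0-1.0 inputs, and the multiplicative combination in `many_to_one` are illustrative assumptions loosely following the violin analogy, not the mappings implemented in the toolkit's Max/MSP patches.

```python
from typing import Optional, Tuple

Note = Tuple[int, float]  # (MIDI note number, amplitude 0.0-1.0)


def one_to_one(button_pressed: bool, note: int = 60) -> Optional[Note]:
    """Simple mapping: one gesture produces one sound at a fixed level."""
    return (note, 1.0) if button_pressed else None


def many_to_one(bow_pressure: float, bow_speed: float, finger_step: int,
                open_string: int = 55) -> Optional[Note]:
    """Complex mapping: several gestures combine into one bowed-style note.

    bow_pressure and bow_speed are normalized to 0.0-1.0. The note sounds only
    when both are non-zero, and its amplitude depends on both together.
    finger_step (semitones above the open string) selects the pitch;
    55 is G3, the lowest open string of a violin.
    """
    pressure = max(0.0, min(1.0, bow_pressure))
    speed = max(0.0, min(1.0, bow_speed))
    amplitude = pressure * speed
    if amplitude == 0.0:
        return None
    return (open_string + finger_step, amplitude)
```

With the one-to-one function the result is predictable from a single action, whereas the many-to-one function rewards coordinating several actions, which is where the potential for nuanced control comes from.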

4.3. Features and accessibility of the toolkit

This section outlines the functionality and accessibility of each element of the toolkit, as well as the software developed. Some design decisions aimed to make the devices more user-friendly; for example, all tools in the toolkit use commonly found batteries (9 V and AA) housed in easy-to-open compartments. All the tools feature mounting fixtures and can be affixed to clamps or stands, so that users can control them without having to hold them and the best positioning can be achieved for each user. The designs produced were considered to reflect a combination of the author's and the stakeholders' decisions about effective ways to address the opportunities and challenges presented by these situations. By reflecting on the outcomes of this process, various topical, procedural, pragmatic, and conceptual insights could be identified and articulated (Gaver, 2012). The products of this process were the tools, developed as artifacts through various stages of prototyping.

4.4. filterBox

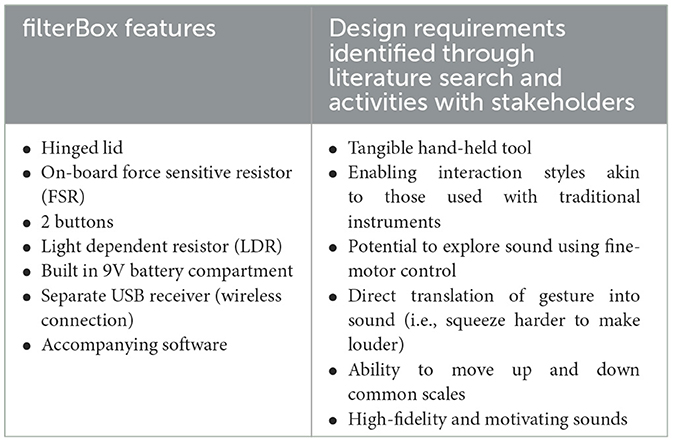

The filterBox (Figure 2 top left) is made of laminated softwood with a high-gloss acrylic lacquer finish, acrylic fascia plates, and integrated high-quality sensors. Table 2 shows the features and design requirements of the filterBox. These materials and this finish suggest quality and robustness, invoking a familiarity analogous to that of acoustic instruments. The aim was for the final tool to have the look, smell, feel, and overall sense of a traditional acoustic instrument.

Figure 2. Tools in the MAMI tech toolkit. (Top left) filterBox, (Top right) squishyDrum, (Bottom left) Noodler, (Bottom right) touchBox.

4.4.1. Features and accessibility

The filterBox was designed to be held in either hand. The idea was to offer the chance to practice fine motor control, manual dexterity, and coordination through complex mappings. Two buttons on the hardware control the notes: one moves up through the selected scale and the other moves down. A force-sensitive resistor (FSR) situated around the middle of the tool detects pressure and controls the volume of the notes (which are silent when no pressure is applied), and a light-dependent resistor (LDR) detects light and controls a filter on the notes. The buttons are positioned to be easy to press while mitigating some accidental presses, because they are activated along only one axis: a user must push down on the center and cannot activate a sound by pushing into the side. This lets the user move around the surface in a guided way to find the exact spot for activation, and the space between the two buttons makes it less likely that one is pressed accidentally while the other is being activated. Their proximity also enables a single finger or other body part to move between the two. The lid can be opened either by using a body part to lift it or by tipping the device upside down. A user who could grip the device and move it in the air could therefore open the lid to trigger the LDR, while opening and closing the lid also offered a way to practice fine motor skills. The filterBox could be clamped around the center so that the user only needs to press the buttons to trigger notes and move the lid to modulate the sound. The LDR could also be activated by covering it with the body or other materials, enabling users to control the sound with their own bodies or with objects they already interact with.
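The button and FSR behavior described above can be sketched as a small state object. The 10-bit (0-1023) sensor range, the noise floor, the linear volume curve, and the class and method names are assumptions for illustration, not the filterBox's actual code; the LDR-to-filter mapping is left out of this sketch.

```python
from dataclasses import dataclass, field
from typing import List

MAJOR = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets of a major scale


@dataclass
class FilterBoxMapper:
    tonic: int = 60      # MIDI note of the tonic (hypothetical default: middle C)
    scale: List[int] = field(default_factory=lambda: list(MAJOR))
    degree: int = 0      # position within the scale; may run across octaves
    fsr_floor: int = 20  # hypothetical noise floor for a 10-bit FSR reading

    def press_up(self) -> None:
        """Up button: move one step higher in the selected scale."""
        self.degree += 1

    def press_down(self) -> None:
        """Down button: move one step lower in the selected scale."""
        self.degree -= 1

    def note(self) -> int:
        """Current MIDI note; divmod wraps the scale degree into octaves."""
        octave, index = divmod(self.degree, len(self.scale))
        return self.tonic + 12 * octave + self.scale[index]

    def volume(self, fsr_raw: int) -> float:
        """Silent with no pressure, rising linearly to 1.0 at the top of the ADC range."""
        if fsr_raw <= self.fsr_floor:
            return 0.0
        return min(1.0, (self.fsr_raw_clip(fsr_raw) - self.fsr_floor) / (1023 - self.fsr_floor))

    @staticmethod
    def fsr_raw_clip(fsr_raw: int) -> int:
        """Clamp a reading to the 10-bit range before scaling."""
        return max(0, min(1023, fsr_raw))
```

Because the buttons only ever move through the selected scale, any sequence of presses stays in tune, while the FSR keeps the note silent until the user chooses to apply pressure.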

Because the complex mapping combines control data from the FSR, the LDR, and the buttons working simultaneously, different elements can be controlled by different users, making the filterBox a potential multiplayer instrument. The smooth-action hinge, in the style of those used on a piano key cover, can be opened and closed to increase or decrease the light reaching the LDR, altering a filter on the sound. Building on a familiar interaction (opening a hinged lid), the lid mechanism aimed to provide resistance for the user to work against while exploring the sonic properties of the filterBox. The changing filter cutoff provided a familiar connection between the energy put in through movement and the sonic output. Expectation also informed some mappings: the filter was designed around what a sound shut inside a box might sound like as the lid closes. The hinged lid offered interaction opportunities analogous to those found both in traditional instrumental practice (such as playing a trumpet with a mute) and in more contemporary music practice (such as scratching like a DJ). The position of the lid shows, both by sight and by feel, how much light is being let in. There was also an element of harnessing interactions that participants would encounter in everyday life, such as opening a box and peeking in.
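One way to realize the lid-to-filter mapping described above is an exponential light-to-cutoff curve feeding a one-pole low-pass filter, so that a closed lid gives a muffled, “boxed-in” sound and an open lid a bright one. The frequency range, the curve, the sample rate, and the choice of a one-pole filter are all assumptions; the toolkit's actual filter design (set up in Max/MSP) is not described in this section.

```python
import math


def light_to_cutoff(ldr_norm: float, f_min: float = 200.0, f_max: float = 8000.0) -> float:
    """Map normalized light (0.0 = lid shut, 1.0 = lid fully open) to a cutoff in Hz.

    An exponential curve is used so that equal lid movements give roughly
    equal perceived changes in brightness (assumed range 200 Hz to 8 kHz).
    """
    x = max(0.0, min(1.0, ldr_norm))
    return f_min * (f_max / f_min) ** x


class OnePoleLowPass:
    """y[n] = y[n-1] + a * (x[n] - y[n-1]), with a derived from the cutoff."""

    def __init__(self, sample_rate: float = 44100.0) -> None:
        self.sample_rate = sample_rate
        self.y = 0.0
        self.a = 1.0  # a = 1.0 passes the input straight through

    def set_cutoff(self, cutoff_hz: float) -> None:
        # Standard one-pole coefficient: a = 1 - exp(-2*pi*fc/fs)
        self.a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / self.sample_rate)

    def process(self, x: float) -> float:
        """Filter one input sample and return the output sample."""
        self.y += self.a * (x - self.y)
        return self.y
```

In use, each new LDR reading would call `set_cutoff(light_to_cutoff(reading))` while audio samples continue to pass through `process`, so opening the lid gradually lets the higher frequencies back in.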

Many-to-one complex mappings were employed as a strategy for accessing the tool. Instant access and initial ease of use were balanced with the chance to achieve nuanced, sophisticated control of sound and more technical exploration over time. The sounds were constrained to selectable scales to facilitate in-tune playing with other tools in the kit or with other players. Complex physical manipulation could be navigated as the user explored the potential of the tool: for example, pressing a button to trigger an ascending note while varying the force on the FSR and simultaneously opening and closing the lid produced different results from rapidly pressing the buttons while holding pressure on the FSR. The mobility of the tool allowed it to be used within the user's existing ecosystem, such as on their lap in a wheelchair or on a table in front of them, and to be passed around easily without wires getting in the way or becoming a hazard or a point of distraction.

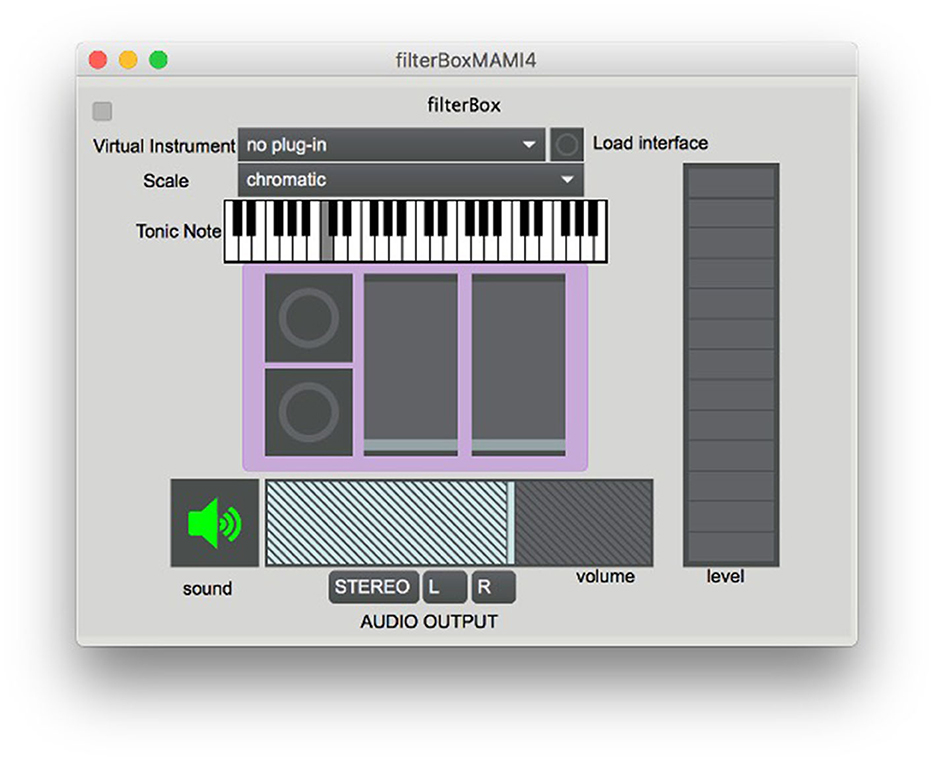

4.4.2. Software

Some software functions feature in the GUI of all three computer-connected tools. These functions are as follows:

• Sound off/on button.

• Volume control.

• Ability to switch output from stereo to left or right speakers (to enable two tools to be connected to one computer and both speakers to be used as independent sound sources).

• Access to the computer's audio system (to be able to select the audio output/input device).

The software GUI (Figure 3) can be used to change the VST instrument to find a compelling sound for the user, and the scale (and tonic note) can be selected to constrain the notes. The filterBox software can also be controlled via the Mira app on an iPad, which fosters accessibility by allowing settings to be adjusted to suit the user and by giving the option to change settings on the iPad screen and/or with the computer mouse, depending on the needs of the users. The set-up of the software forms a series of constraints that the user navigates to explore the available sound gamut (Magnusson, 2010; Wright and Dooley, 2019), with opportunities to select from a range of VST instruments and access each one's GUI, opening the way for endless augmentation of the sound to suit the user. This mechanism allows the user to delve into shaping the sound while also providing easily selectable presets. Several VST instruments were selectable (with their settings accessible) to give the user a choice of high-fidelity sounds that were motivating to use and highly customizable. By revealing hierarchical levels of control as needed or wanted by the user, the design attempted to enable and support users without overwhelming them. Visual elements on the GUI show the real-time interaction with the buttons, the FSR strip, and the light sensor, as well as the sound levels and the master volume level. The GUI can also be used with an interactive whiteboard, allowing users to touch the screen to adjust levels.
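Constraining notes by scale and tonic can be sketched as building the set of permitted MIDI notes and snapping any other note to the nearest one. The scale list, dictionary keys, function names, and the default note range are assumptions; how the filterBox software actually stores its scales is not described here.

```python
from typing import List

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Semitone offsets from the tonic (an illustrative subset of common scales).
SCALES = {
    "major": [0, 2, 4, 5, 7, 9, 11],
    "natural_minor": [0, 2, 3, 5, 7, 8, 10],
    "major_pentatonic": [0, 2, 4, 7, 9],
    "minor_pentatonic": [0, 3, 5, 7, 10],
}


def scale_notes(tonic: str, scale: str, low: int = 48, high: int = 84) -> List[int]:
    """All MIDI notes between low and high (inclusive) that belong to the scale."""
    tonic_pc = NOTE_NAMES.index(tonic)  # pitch class of the tonic, 0-11
    steps = set(SCALES[scale])
    return [n for n in range(low, high + 1) if (n - tonic_pc) % 12 in steps]


def quantize(note: int, allowed: List[int]) -> int:
    """Snap any note to the nearest allowed one, preferring the lower note on a tie."""
    return min(allowed, key=lambda n: (abs(n - note), n))
```

For example, `scale_notes("C", "major_pentatonic", 60, 72)` gives `[60, 62, 64, 67, 69, 72]`. If every tool in a group draws from the same permitted set, no player can produce an out-of-scale note, which is the purpose of the constraint.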

4.5. squishyDrum

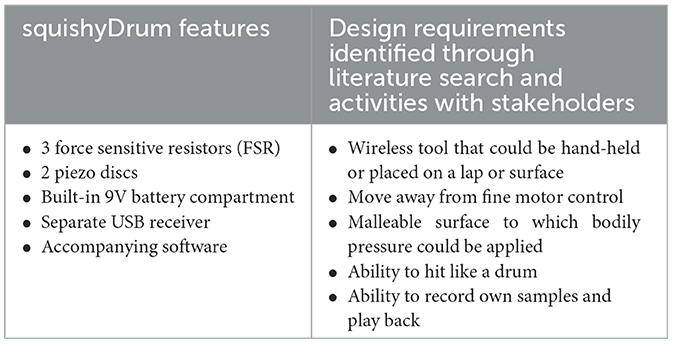

The squishyDrum (Figure 2 top right) is a round tool, 150 mm in diameter, which can be held in the hands or placed on the lap or a surface, and it uses the same materials as the filterBox. Table 3 shows the features and design requirements of the squishyDrum. On the top is a 3-mm-thick silicone skin. The materials used evoke a sense of robustness and similarity to an acoustic drum.

4.5.1. Features and accessibility

The squishyDrum has a wireless connection, so it can be moved around easily and placed in a position suitable for the user. Its main interaction mode is applying pressure to the silicone surface, under which there are three FSRs. These sensors provide accessibility: very small amounts of pressure are effectively magnified into amplified sonic outputs. Two piezo discs send out a signal when tapped, allowing the user to tap on the drum or hit it with a stick. The squishyDrum could also be leaned on, or pushed against any body part or object, to trigger sound. After many requests from stakeholders, a rudimentary microphone recording capability was added to this tool: recording is done through the squishyDrum software GUI with a microphone connected to the computer, and playback is triggered via the FSRs on the tool. The ability to record and add folders of sounds gives users (and those who know them best) ownership of the output and the ability to tailor it. Stickers were added to mark where the FSRs were located so that users would know where to apply pressure.
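Tap detection on piezo discs is commonly handled with a threshold plus a short lockout period, so that the ringing of one hit is not read as several taps. The sketch below assumes a stream of raw analog readings and uses hypothetical threshold and lockout values; the squishyDrum's actual firmware is not described in this section.

```python
from typing import Iterable, List, Tuple


def detect_taps(samples: Iterable[int], threshold: int = 100,
                refractory: int = 30) -> List[Tuple[int, int]]:
    """Return (sample_index, peak_value) for each tap in a stream of piezo readings.

    A tap starts when a reading reaches `threshold`. For the next `refractory`
    samples further crossings are ignored, so one hit's ringing counts once;
    the highest reading in that window is kept as the tap's strength (velocity).
    """
    taps: List[Tuple[int, int]] = []
    lockout_until = -1
    for i, value in enumerate(samples):
        if i < lockout_until:
            # Still inside the current tap's window: only track its peak.
            if taps and value > taps[-1][1]:
                taps[-1] = (taps[-1][0], value)
            continue
        if value >= threshold:
            taps.append((i, value))
            lockout_until = i + refractory
    return taps
```

The trade-off lies in the lockout length: too short and a single hit retriggers, too long and fast repeated taps are missed, so a value like this would need tuning with the users who play the drum.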

4.5.2. Software

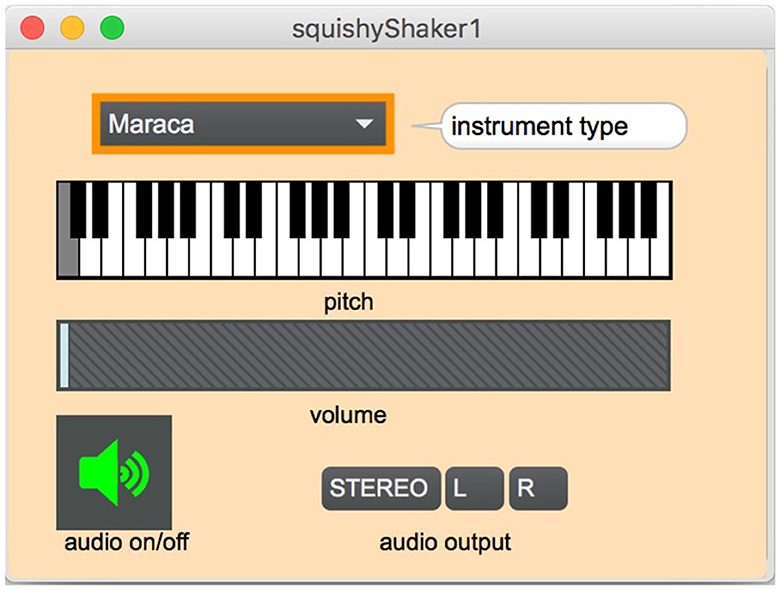

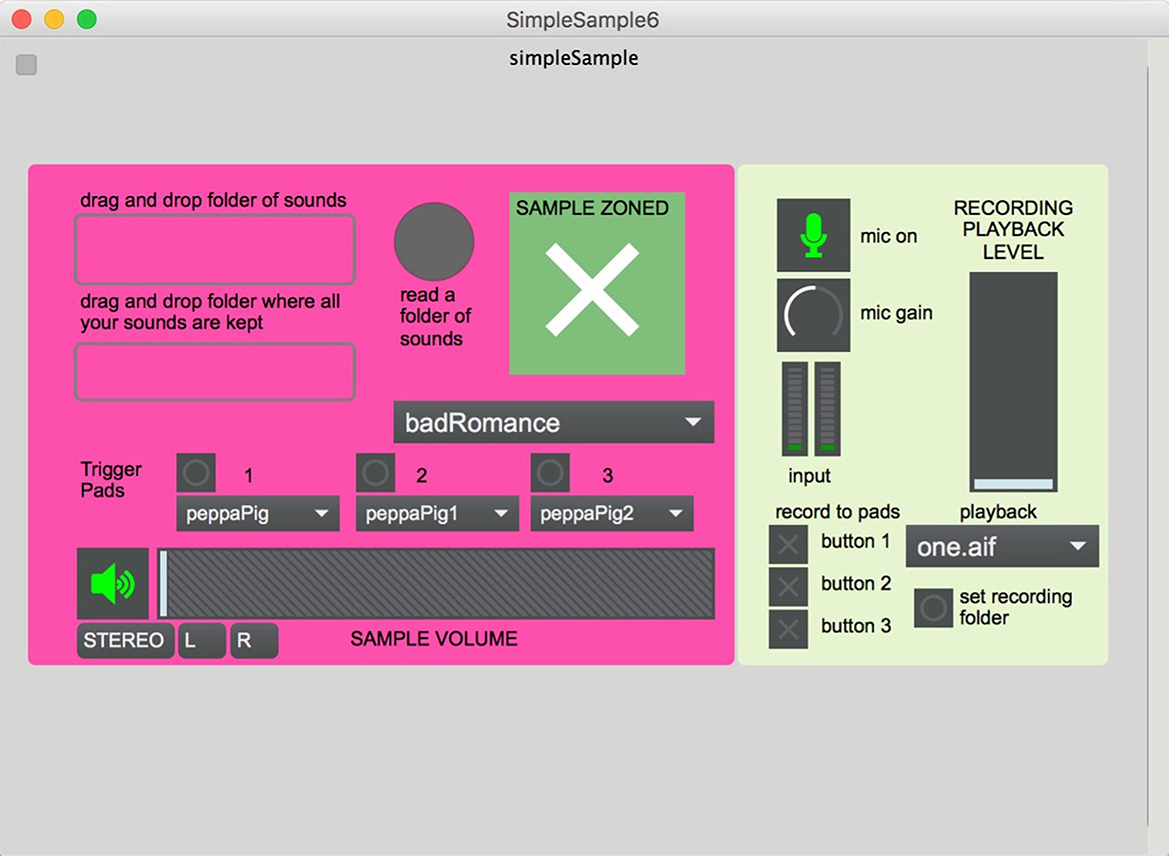

There are two software elements for the squishyDrum. The first, squishyShaker (Figure 4), allows physical modeling synthesis to be triggered and modulated. Pressure on the FSRs determines the amplitude of the sound and the number of “objects” (coins, screws, and shells) in the digital shaker. The effort exerted on the surface correlates directly with the intensity of the sound, with the added requirement that two of the FSRs must be pressed in tandem to trigger the sound. This was designed to encourage exploration of the surface to discover and coax out the sound. The user can hold the sound for as long as the surface is depressed, something almost exclusive to digital musical instruments (drone instruments aside). This self-sustain has the potential to enable the user to engage on a deep level by providing time to process the sound and to make the decision to stop. The pitch of the sound is also selectable. The second software element, Simple Sample (Figure 5), allows three samples to be triggered by pressing each of the three FSRs or by pressing the on-screen buttons via the computer or the iPad (which can be used to control the software remotely). Triggering via both the iPad and the FSRs gives two users the opportunity to play together: one user can mirror the actions of the other and vice versa in a call-and-response style interaction. The ability to record from a microphone was added in response to stakeholder requests to magnify the users' voices, enabling users to hear themselves and giving them some ownership, involvement, and autonomy in creating the triggered content. The gain of the recording microphone can be changed, as can the playback volume of the recorded and in-built samples. The user can trigger in-built samples and stored recordings at the same time and mix their levels independently.
Sample folders can be dragged and dropped (either a single folder or a folder of folders, with the folder hierarchy maintained). Folders can also be loaded by clicking to open a pop-up dialog box on the computer. A setting in the software GUI allows switching between a triggered sample playing to the end and the sample retriggering when the pressure pad is pressed again, allowing sonic layering to be achieved.
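The two squishyDrum behaviors described above can be sketched together: the squishyShaker gate (sound only while two pads are pressed together, with effort driving both loudness and the number of virtual objects) and the play-to-end versus retrigger setting. The normalized readings, gate value, averaging of the two strongest pads, and maximum object count are assumptions, and the physical modeling synthesis itself is not shown. For the sampler, one possible reading of the text is used: in play-to-end mode a new press is ignored while the sample is still sounding, whereas in retrigger mode each press starts an additional overlapping playback, so repeated presses layer. The `PadSampler` class and this interpretation are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Sequence, Tuple


def shaker_params(fsr: Sequence[float], gate: float = 0.05,
                  max_objects: int = 20) -> Optional[Tuple[float, int]]:
    """Map three normalized FSR readings (0.0-1.0) to (amplitude, object_count).

    Returns None (silence) unless at least two pads are pressed beyond `gate`.
    Called repeatedly while the surface is held, it keeps returning parameters,
    so the sound sustains for as long as the pressure does.
    """
    pressed = sorted((p for p in fsr if p > gate), reverse=True)
    if len(pressed) < 2:
        return None
    effort = min(1.0, (pressed[0] + pressed[1]) / 2.0)  # mean of the two strongest pads
    objects = max(1, round(effort * max_objects))
    return effort, objects


@dataclass
class PadSampler:
    """Playback of one sample assigned to one pressure pad (hypothetical)."""
    length: int                # sample length in frames
    retrigger: bool = False    # hypothetical flag mirroring the GUI setting
    voices: List[int] = field(default_factory=list)  # playback positions in frames

    def press(self) -> None:
        # Play-to-end: ignore presses while the sample is still sounding.
        # Retrigger: every press starts another overlapping playback (layering).
        if self.retrigger or not self.voices:
            self.voices.append(0)

    def advance(self, frames: int) -> None:
        """Move every playback forward and drop those that reach the end."""
        self.voices = [p + frames for p in self.voices if p + frames < self.length]
```

Requiring two pads in `shaker_params` is what pushes users to explore the surface: a single press anywhere stays silent until a second pad joins it.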

4.6. The Noodler

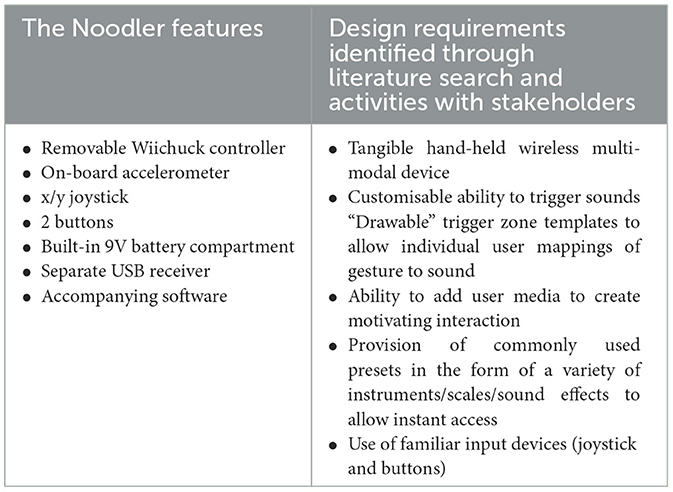

The Noodler (Figure 2 bottom left) is a wireless tool based around the Nintendo Wii Nunchuk controller (referred to here as the Wii chuck), which has a built-in accelerometer to detect movement along the x, y, and z axes, an x/y joystick, and two buttons. The Wii chuck has an ergonomically designed form factor (albeit aimed at users with typically developed hands), with smooth rounded edges and high production quality. Table 4 shows the features and design requirements of the Noodler.

4.6.1. Features and accessibility

For this research project, the Wii chuck was made detachable from the transmitter unit, allowing users to keep their own Wii chuck or replace it should it stop functioning. The compact form factor of the Noodler made it lightweight to move around and accessible to people with muscle weakness or reduced stamina, with the heavier part of the tool (the transmitter containing the batteries) on the end of a 50-cm cable so that it could be stowed on a belt or on a chair nearby. The Noodler is recognizable both as a joystick and as a controller for the popular Wii games console, and so builds on commonly used interactions (controlling things with a joystick or pushing a button). The Noodler provides access to triggering notes and samples through its joystick movement and buttons. The joystick and buttons can be accessed with the thumb and fingers, but also by holding the Noodler and pushing either input against a surface to trigger sounds, which enabled it to be used against different body parts or against tables to activate the sonic output. Users were able to physically manipulate the Noodler and move it into comfortable positions to access its modes of interaction (one participant used their lip to control the joystick). The ability to change both the sonic content and the triggering gestures meant the tool could be tailored to the individual. By selecting from the samples provided or adding their own, users could appropriate the system to suit their tastes, motivating engaged use.

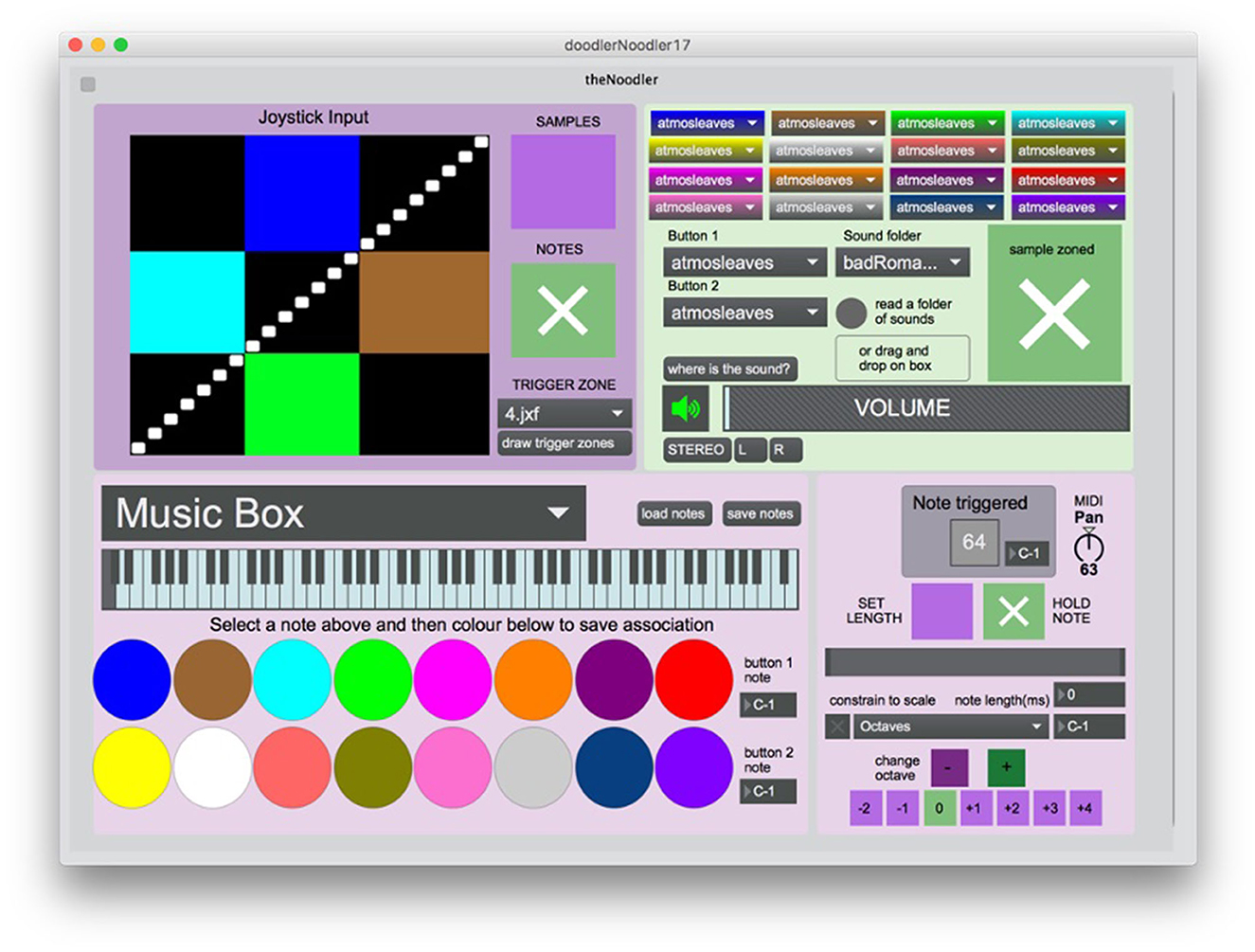

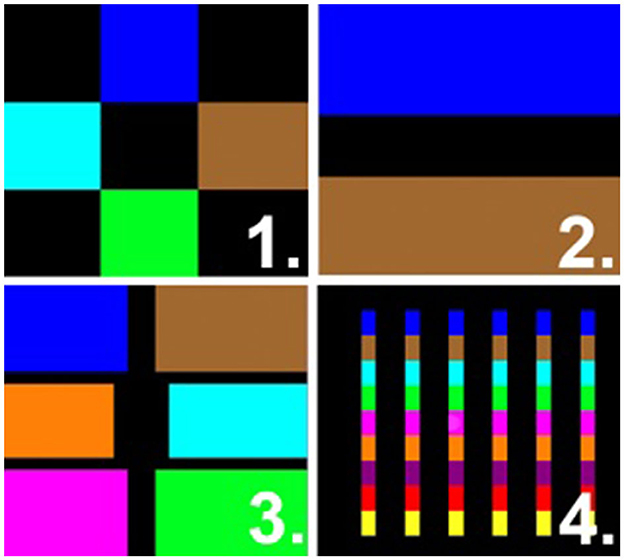

4.6.2. Software

The Noodler software (Figure 6) offers two modes: MIDI note triggering or sample triggering. When MIDI note triggering is selected, the user can choose a MIDI instrument (from 128 choices) and its octave (−2 to +4 octaves), or MIDI drums. When sample triggering is selected, a range of preset samples featuring commonly requested sound effects or songs can be triggered, or users can upload their own samples. User folders can be loaded via a drag-and-drop mechanism or a pop-up dialog box. The samples triggered by the buttons can also be selected. One of the key accessibility features of the Noodler is the ability to draw triggerable zones on the GUI that are activated by the motion of the Noodler joystick. The familiar mechanism of drawing with the mouse is used to create these zones. The triggerable area (shown below the title “joystick input” in Figure 6) is virtually mapped to the gestural dimension space within which the joystick can move. Pens of different colors (up to 16) can be used to color areas of the triggerable zone, and the resulting patterns can be saved and reloaded. Each color can then be mapped to trigger a MIDI note or sample, with areas left black acting as rest zones. This effectively makes the joystick's sensitivity scalable, facilitating use for both fine and gross motor control depending on the trigger pattern drawn (Figure 7 shows four presets that come pre-loaded). These customizable trigger zone templates allow individual gesture-to-sound mappings: the user moves the joystick in a way that is comfortable to them, and the trigger zones can then be drawn to suit. A dot moving around the square in the GUI provides an on-screen visual representation of the position of the Noodler joystick within the trigger zone, giving users visual feedback on the effect of their actions and helping establish cause and effect through an explicit visual mapping between sites of interaction and sound generation.
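The drawn trigger zones amount to a lookup from joystick position to a grid of painted cells. The sketch assumes 8-bit joystick readings (0-255), a grid of color indices with 0 for black, and a user-defined table from color to MIDI note or sample name; these names and ranges are illustrative, not the Noodler software's internals.

```python
from typing import Dict, List, Optional, Union

Grid = List[List[int]]    # rows of color indices drawn in the GUI; 0 = black (rest)
Target = Union[int, str]  # a MIDI note number or a sample file name
MAX_COLORS = 16           # the GUI offers up to 16 pen colors


def zone_at(grid: Grid, x: int, y: int, axis_max: int = 255) -> int:
    """Return the color index under the joystick position.

    x and y are raw joystick readings from 0 to axis_max (8-bit assumed);
    y increases upward, so row 0 of the grid is the top edge of the square.
    """
    rows, cols = len(grid), len(grid[0])
    col = min(cols - 1, x * cols // (axis_max + 1))
    row = min(rows - 1, (axis_max - y) * rows // (axis_max + 1))
    return grid[row][col]


def trigger_for(grid: Grid, x: int, y: int,
                color_map: Dict[int, Target]) -> Optional[Target]:
    """Return what the current zone should trigger, or None in a rest zone."""
    color = zone_at(grid, x, y)
    if color == 0:
        return None
    if not 1 <= color <= MAX_COLORS:
        raise ValueError(f"color index {color} outside 1..{MAX_COLORS}")
    return color_map.get(color)
```

Drawing a few large blocks of color turns the whole joystick range into a coarse control suited to gross motor movement, whereas many small zones demand fine movement. A 2 × 2 grid such as `[[1, 2], [0, 3]]`, for instance, gives three quadrant-sized trigger zones with a rest zone at the bottom left.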

Several presets were included within the software, featuring varying numbers of colors and zones. All the software loads with mappings enabled so that sound can be interacted with straight away, responding to a specific request from stakeholders for presets and set-ups that produce sound immediately upon loading the software. When MIDI note triggering is selected, the user can choose whether a note stays on while the target dot remains within a trigger zone or whether the triggered note has a set duration, which can also be modified. A pan dial allows the MIDI output to be panned to the left or right.
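The two note-length options and the pan dial can be sketched at the level of raw MIDI messages. The status bytes follow MIDI 1.0 conventions (note on 0x9n, note off 0x8n, Control Change 0xBn with controller 10 for pan); the function names, the 250 ms default duration, the velocity, and the assumption that the Noodler's pan dial sends CC 10 are all illustrative.

```python
from typing import List, Optional, Tuple

Event = Tuple[int, bytes]  # (time in ms, raw MIDI message)


def note_on(channel: int, note: int, velocity: int) -> bytes:
    return bytes([0x90 | channel, note, velocity])


def note_off(channel: int, note: int) -> bytes:
    return bytes([0x80 | channel, note, 0])


def pan(channel: int, value: int) -> bytes:
    """Control Change 10 (pan): 0 = hard left, 64 = center, 127 = hard right."""
    return bytes([0xB0 | channel, 10, value])


def schedule(frames: List[Tuple[int, Optional[int]]], hold: bool,
             duration_ms: int = 250, channel: int = 0,
             velocity: int = 100) -> List[Event]:
    """Turn (time_ms, note-or-None) joystick samples into timed MIDI events.

    hold=True: a note lasts while the dot stays in its zone.
    hold=False: every note lasts a fixed duration_ms regardless of movement.
    """
    events: List[Event] = []
    current: Optional[int] = None
    for t, note in frames:
        if note == current:
            continue  # still in the same zone (or still resting)
        if hold and current is not None:
            events.append((t, note_off(channel, current)))
        if note is not None:
            events.append((t, note_on(channel, note, velocity)))
            if not hold:
                events.append((t + duration_ms, note_off(channel, note)))
        current = note
    return sorted(events, key=lambda e: e[0])
```

In hold mode the player decides when each note stops; in fixed-duration mode even a brief flick into a zone still produces a complete note.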

The Noodler sacrificed complex mappings (although it has the data streams needed to design them in the future) to become a sample- and note-triggering tool, following a request that came up through working with stakeholders. These simple mappings can nonetheless be layered to create a more complex system, such as the Simple Sample app, in which the triggered sample can be cut off when moving out of the trigger zone or can continue to play out, depending on what the user selects within the software.

Within the software, the state of the system can be seen from a variety of GUI components with musically analogous elements (e.g., the keyboard slider and faders in Figure 6), intended to provide a system that made sense to the user. More time to iterate on the look of the software would have helped in creating an interface that better matched users' needs. The current interface may alienate some users by being difficult to interpret (describing the functionality simply and without jargon while keeping the description accurate is a challenge) and by having inaccessible usability qualities (icons that are too small, and a lack of text-to-speech or of icons to assist visually impaired users or those who cannot read), both of which could form barriers for some users. The addition of iPad integration via the Mira app was used to help alleviate this in some areas.

4.7. touchBox

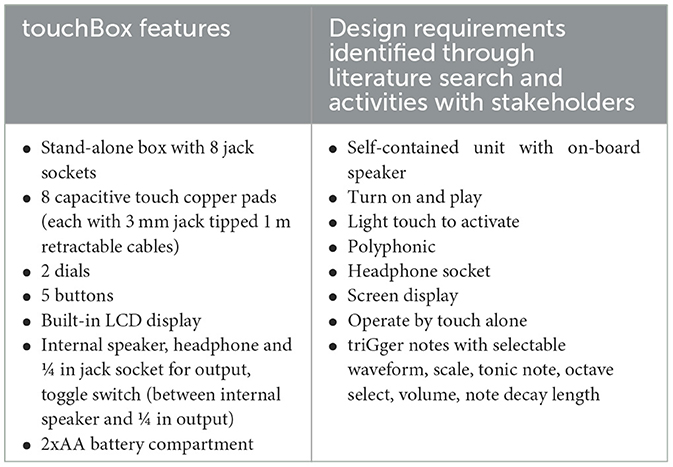

The touchBox (Figure 2 bottom right) is a stand-alone box with eight jack sockets for detachable 50 mm × 50 mm capacitive-touch copper pads (each pad on a 1-m retractable cable tipped with a 3 mm jack), two dials, five buttons, a built-in LCD, an internal speaker, a headphone and inch jack socket for output, and a toggle switch for switching between the internal speaker and the jack output. Table 5 shows the features and design requirements of the touchBox.

4.7.1. Features and accessibility

This self-contained unit was designed to be switched on and played without an external computer, and to make its controls accessible to users through tangible controls, which provide something to grasp against. Materially, it matches the design aesthetic of the filterBox, squishyDrum, and Noodler. The pads are hand-sized and can be held, placed on a surface, or mounted, so they can be positioned to suit the user. Up to eight pads can be used at one time. The pads require only a light touch on the copper conductive plate to trigger, and they stay activated until the touch is removed. This provides accessibility for those who can apply only very small amounts of pressure (or can only rest a body part on the plate) and gives control beyond triggering sound, extending to choosing when the sound stops. This gives users a chance to rest and take in the sound, providing the sometimes vital processing time needed to truly realize cause and effect. The movability of the pads gives users some autonomy in setting up their own instrument, in the way that appropriation is common with other musical instruments, where each player has their own unique set-up. The touchBox is polyphonic, meaning that more than one note can be played concurrently.
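Touch-and-hold pads of this kind are often implemented by comparing each pad's capacitance reading against an untouched baseline, with separate on and off thresholds (hysteresis) so that a light, wavering touch does not make a note flicker. The sketch assumes that raw readings rise when a pad is touched and uses hypothetical threshold values and names; the touchBox's Teensy firmware is not reproduced here.

```python
from typing import Dict, List, Set


class TouchPads:
    """Touch detection with hysteresis for up to eight capacitive pads.

    A pad switches on when its reading rises more than on_delta above its
    untouched baseline, and only switches off again once it falls back below
    off_delta, so readings wavering between the two leave the state unchanged.
    """

    def __init__(self, baselines: List[int], on_delta: int = 300,
                 off_delta: int = 150) -> None:
        self.baselines = baselines   # hypothetical per-pad untouched readings
        self.on_delta = on_delta
        self.off_delta = off_delta
        self.active: Set[int] = set()

    def update(self, readings: List[int]) -> Set[int]:
        """Process one scan of raw readings and return the pads currently held."""
        for pad, (raw, base) in enumerate(zip(readings, self.baselines)):
            delta = raw - base
            if pad in self.active:
                if delta < self.off_delta:
                    self.active.discard(pad)
            elif delta > self.on_delta:
                self.active.add(pad)
        return set(self.active)


def sounding_notes(active: Set[int], pad_notes: Dict[int, int]) -> List[int]:
    """Polyphony: every held pad contributes its note at the same time."""
    return sorted(pad_notes[p] for p in active if p in pad_notes)
```

Because a pad stays active until the touch is removed, the note's end is chosen by the user, which matches the touch-to-start, release-to-stop behavior described above.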

The main unit has buttons to control the timbre of the sound, the octave of the notes (one button moves up and one moves down), the scale, and the tonic note of the scale (cycling through the options with each press). Two dials on the main unit control the volume and the note decay (allowing short staccato notes or long legato notes). Each button and knob on the main unit has a differently styled casing according to the control it offers, and each is a different color. These design decisions allow users to develop a relationship with the tool by touch alone and allow visually impaired users to distinguish between controls. The retractable cords make the tool easy to put away, and whilst this may seem an innocuous feature, it can be argued that such features add to the overall experience of using the tool. The way the instrument is stored, retrieved, connected, and set up contributes to this practical use, which begins with the decision to use the tool and ends when it is returned to storage; one of the biggest stakeholder issues was getting the technology set up and having all the accouterments needed for successful use.
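The decay dial can be sketched as a mapping from dial position to an exponential amplitude envelope. The 50 ms to 4 s range, the exponential dial curve, and the use of the time to fall by 60 dB as the definition of decay time are assumptions for illustration; the touchBox's actual envelope settings are not given in this section.

```python
def dial_to_decay(dial: float, shortest: float = 0.05, longest: float = 4.0) -> float:
    """Map a dial position (0.0-1.0) to a decay time in seconds.

    An exponential curve spreads the dial evenly between short staccato
    settings and long legato settings.
    """
    x = max(0.0, min(1.0, dial))
    return shortest * (longest / shortest) ** x


def envelope(t: float, decay: float) -> float:
    """Amplitude t seconds after a note starts, reaching 0.001 (-60 dB) at t = decay."""
    if t < 0.0:
        return 0.0
    return 10.0 ** (-3.0 * t / decay)
```

With the dial at its minimum, a note has fallen to 1/1000 of its level after 50 ms (staccato); at its maximum, the same fall takes 4 s (legato).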

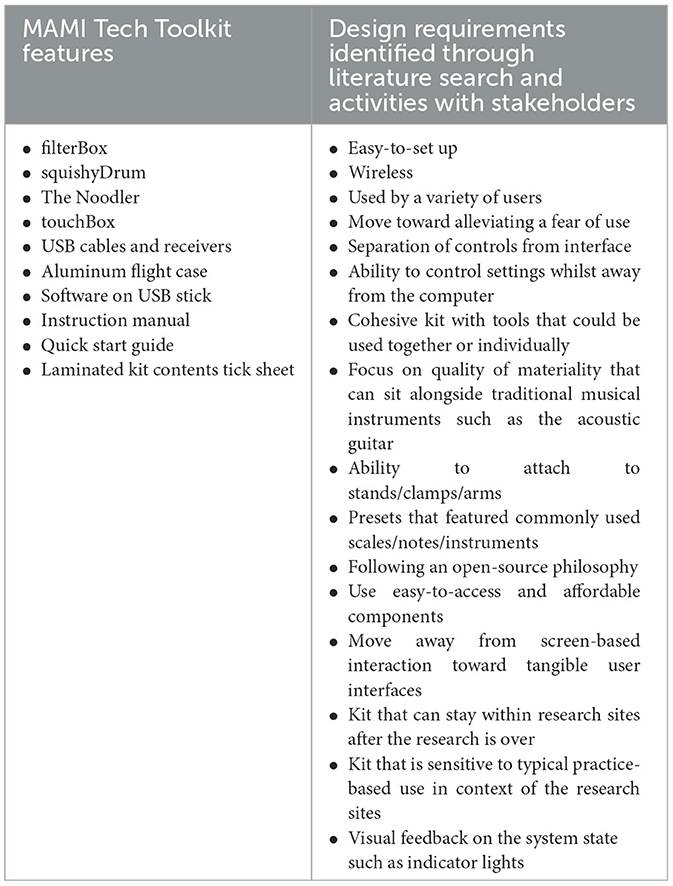

4.8. The MAMI tech toolkit features and accessibility

Table 6 shows the features and design requirements of the whole kit. The tangibility of the tools “takes advantage of embracing the richness of human senses developed through a lifetime of interaction with the physical world” (Ishii and Ullmer, 1997, p. 7) to provide rich multisensory experiences and an interface to grasp against. The construction of the final tools implies quality (see Figure 8). The tangibility of the tools also provides a mechanism for users to experience their bodies. An analogous concept is the weighted blanket, used to provide the sensation of being embraced in order to alleviate anxiety or stress. A weighted blanket can be seen to provide an edge and a stopping point against which a person can delineate their own edges proprioceptively. In the same way, the tools in the toolkit provide a means for users to experience their gestures as co-constituted with the tools, providing an opportunity to explore their own bodies. By extension, the sound can also provide an “edge” against which to interact and explore the sound/body relationship.

Because each tool has its own receiver, the toolkit can be split up, so individual tools can be taken home by users and practiced with, giving them the chance to develop a relationship with the tool. The tools can be used alongside each other with a similar range of presets. The presets featured within the toolkits are commonly used scales, notes, and instruments, to ease integration with acoustic instruments and to allow use with the existing canon of repertoire commonly used by the stakeholders. These can be selected to be cohesive with each other or to fill different ranges of frequencies, timbres, feelings, and movements, much as an orchestra or ensemble is constructed to work together in filling the sonic space.

The three tools that connect to the computer can be controlled either via the computer or via the iPad. The Mira app mirrors the GUI of the Max/MSP software on the computer to the iPad, which in turn means the iPad can be used as a controller for the settings. The Mira app (£9.99 at the time of writing) manages the connection between the iPad and the Max/MSP software, allowing settings to be controlled away from the computer and removing the need to touch the computer during the interaction. This can be useful for users who might find the computer a distraction, and it removes the need for users to sit physically at a computer, which could become a barrier to interaction. An iPad can also be a much more familiar and enticing control unit, offering direct access for quick changes to the set-up or modification of the controls; some elements of the sound can also be triggered from the iPad, giving the iPad user and the tool user the potential to interact with each other.

The state of the system can be understood via visual elements that represent its states; for example, LEDs on the receivers indicate that the units are active. The toolkits are contained in a metal flight case with all the components needed (except the computer). The choice of a sturdy metal box is analogous to transporting important artifacts and also provides a practical storage solution for robust tools. The aim was to consider how the toolkit would fit into practice and to give it an overall sense of cohesion, as well as making it feel “ownable” through its portability. The hard flight case also supports the ritual of use, which runs from deciding to use the toolkit, through using it, to placing it back in storage. Moreover, in a school setting, any help in preventing parts from going missing is usually welcome (hence the addition of a laminated contents list with pictures to help users locate and replace items).

The toolkit software was also provided on a USB stick (as well as being downloadable via GitHub) to ease distribution and use in practice. Whilst these details may not involve the direct use of the tools in active music-making, they mediate the use of the tool. By providing tools that holistically consider their whole context of use, tools may integrate more easily into the context within which they are used. They have an authenticity that is considerate of the practice that they are part of. In this way, the tools enmesh with the practice within which they sit. The toolkit was created with an open-source philosophy and as such the internal diagrams of components, bill of materials, construction/code, and Max/MSP patches (the code created in Max/MSP) were made freely available on the GitHub link (see the Data availability statement section below) with a focus on using easy-to-access and affordable components throughout.

5. Discussion

5.1. Material qualities and cause and effect

Both the construction of the tools, in terms of aesthetic look and feel, and the type of sonic content used (high-end synthesis or high-quality samples) received positive feedback from the stakeholders. The fidelity of the sounds offered by synthesis or VST instruments, and the expressive potential built into their programming, engaged the stakeholders more successfully than when the tools triggered standard MIDI-based instruments and sounds. Settings and options were presented in a particular order to scaffold the practitioners in setting up and using the tools. This could have been explored further through hierarchical systems of access to settings, which could have been tailored more specifically to users depending on their confidence in using technology. Delivering the ability to change settings in a phased way could help minimize overwhelm.

Technology can be used to leverage interactions already associated with acoustic instruments while providing the ability to design interactions from the ground up to suit users. Breaking down and reconstructing instruments in this way risks confusing the user if cause and effect are not made meaningful to them, and it can trigger “learned helplessness” (Koegel and Mentis, 1985), in which the user loses motivation and no longer believes that they can achieve the outcome or have any control, if an interaction does not match their expectation or is not fully accessible to them. The problems of this decoupling can be mitigated by clearly signposting how a system works, including demonstrating its features and functionality so that users become comfortable; by obeying commonly used interaction mechanisms (pressing something harder makes it louder); and by creating robust systems with few technical issues, or at least with mechanisms in place to rapidly find solutions or workarounds that help users get things working again. Successfully reconstituting the relationship between sound and gesture is an issue that has been discussed within the general realm of creating new interfaces for musical expression (Calegario, 2018). Questions arose within the research project about giving appropriate feedback, so that users knew what they were doing and how it changed the sound, and about ensuring that cause and effect were meaningfully achieved. A successful method was to allow users to get to know their sound before joining group sessions, with the sound-producing device (speaker or amplifier) positioned as close as possible to the user. Where possible, the amplifier could even be placed in contact with the user's chair to provide haptic as well as auditory feedback.

In the school setting, other people present such as carers and teachers/teaching assistants were useful in supporting individuals. They could facilitate the use of the tool, forge connections between the tool and the sound, and reduce ambiguity between the gesture and the sound. This ambiguity can be further widened by actions such as constantly changing how the tool works, changing the scale or instrument, or changing sounds. Sticking with a particular set-up could be beneficial to gaining mastery over an instrument but equally some users want to hear all the options they have before settling on a particular favorite. Again, a balance must be struck.

5.2. Mappings and design constraints

The mappings used throughout this research constrained notes to particular scales as a tool for inclusivity, giving users the chance to play without worrying that they would play something wrong (the exception was the Noodler, on which users could select any note they wanted to play). These mechanisms provide suitable, appropriate, and, even more importantly, acceptable scaffolding for user interactions that strikes a balance between difficulty and simplicity. The two ends of this continuum must be balanced on a case-by-case basis, with one end leading to overwhelm and the other to boredom. The appropriate use of technology should aim to support the access or cognitive needs of the user and fit their desires. This is an advantage of ADMIs: they can be scaled and tailored to individual users, enabling (by the turn of a dial) a range of notes to be triggered by movements of millimeters or of meters.
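The scale constraint and the "dial" described above can be illustrated with a short Python sketch. It assumes a distance sensor reporting millimeters, a major pentatonic scale, and MIDI note numbers; none of these details, nor the names used, are taken from the toolkit itself. The physical range (`near_mm` to `far_mm`) plays the role of the dial: the same set of notes can be spread over 20 mm or over a meter, and every output note is guaranteed to fall within the chosen scale.

```python
from __future__ import annotations

MAJOR_PENTATONIC = (0, 2, 4, 7, 9)  # semitone offsets within one octave


def distance_to_note(distance_mm: float, near_mm: float, far_mm: float,
                     num_notes: int, root: int = 60,
                     scale: tuple[int, ...] = MAJOR_PENTATONIC) -> int:
    """Map a distance reading to a MIDI note number restricted to `scale`.

    The reading is normalized over [near_mm, far_mm] and divided into
    `num_notes` equal-width bands, one note per band, climbing the scale
    upward from `root`.
    """
    if far_mm <= near_mm or num_notes < 1:
        raise ValueError("need far_mm > near_mm and at least one note")
    position = (distance_mm - near_mm) / (far_mm - near_mm)
    position = max(0.0, min(position, 1.0))  # clamp readings outside the range
    index = min(int(position * num_notes), num_notes - 1)
    octave, degree = divmod(index, len(scale))
    return root + 12 * octave + scale[degree]


# The same eight notes spread over 2 cm or over 1 m of movement.
small = [distance_to_note(d, 0, 20, 8) for d in range(0, 21, 5)]
large = [distance_to_note(d, 0, 1000, 8) for d in range(0, 1001, 250)]
print(small == large)  # True
```

For example, `distance_to_note(10, 0, 20, 8)` and `distance_to_note(500, 0, 1000, 8)` both return 69, because each reading sits halfway through its range. Removing the scale constraint (as on the Noodler) would amount to passing every semitone as the scale.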

There are many goals that the tools in the toolkit could support, from wellbeing to social inclusion to occupational therapy or, more obviously, musical expression. Virtuosity may be seen as the epitome of musical goals, but what is suggested here is that we consider virtuosity as a relationship between what is typical and what is extraordinary for a particular individual. Pressing a switch to trigger a sound might demonstrate a level of virtuosity for some. If the goal is to play something in time (whether to a particular tempo in beats per minute, to a desired rhythm, at a point chosen by the user, or to create a desired effect such as layering sounds), then being able to press a button in time is a successful movement toward this goal. If the goal is to decide when to respond and to move toward an intentional response, then the individual pressing the switch whenever they choose would be a successful movement toward this goal. If the aim is to allow fine control over the pitch of a note, however, then pressing a button to trigger a preset sound does not facilitate that type of outcome. This should be considered when setting users up with technology to support them.

The toolkit can facilitate both the triggering of single events and the continuous control of sound, thereby providing a level of expression that matches the users' needs. Within the toolkit, a balance had to be struck between providing a flexible, understandable, easy-to-use, and customisable system and keeping it from becoming overwhelming. Initially, the toolkit was intended to be completely modular, in that different sensors could be selected and attached to different musical outputs in a plug-and-play manner. However, it quickly became evident that this kind of development would be beyond the scope of the research resources. There was a dichotomy between bespoke tailoring to one user and modular flexibility that might be "good enough" for many users. As such, the final application was designed to be easy to use, with functionality for a range of typical scenarios and use cases that stakeholders requested or that were observed during practice. The stakeholders wanted the tools to be compatible both with one another and with current technologies, and to work alongside traditional instruments. This remains a future goal; even the ability to use generic assistive-technology switches with the toolkit would be beneficial.
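The distinction between triggering single events and continuous control can be sketched in Python as two interpretations of the same normalized sensor stream. This is a hypothetical illustration: the class and function names, the thresholds, and the 0.0 to 1.0 input range are assumptions rather than details of the toolkit. Separate on and off thresholds (hysteresis) are one common way to stop a hovering movement from retriggering a note.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class TriggerMapper:
    """Turn a continuous 0.0-1.0 sensor stream into discrete note events.

    Separate on/off thresholds (hysteresis) stop a hand hovering near a
    single threshold from retriggering the same note over and over.
    """
    on_threshold: float = 0.6
    off_threshold: float = 0.4
    active: bool = False

    def update(self, value: float) -> str | None:
        """Return "note_on", "note_off", or None for each new reading."""
        if not self.active and value >= self.on_threshold:
            self.active = True
            return "note_on"
        if self.active and value <= self.off_threshold:
            self.active = False
            return "note_off"
        return None


def continuous_cc(value: float) -> int:
    """Map the same 0.0-1.0 stream onto a 7-bit controller value (0-127)."""
    return round(max(0.0, min(value, 1.0)) * 127)


stream = [0.1, 0.5, 0.65, 0.55, 0.45, 0.3, 0.7]
mapper = TriggerMapper()
print([mapper.update(v) for v in stream])
# [None, None, 'note_on', None, None, 'note_off', 'note_on']
print([continuous_cc(v) for v in (0.0, 0.5, 1.0)])  # [0, 64, 127]
```

The same gesture can therefore serve a user whose goal is to decide when to respond (the trigger path) or one who wants graded, expressive control (the continuous path).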

5.3. The tools in use

The Noodler was the only tool that underwent extensive testing, and it was used successfully in several group sessions alongside traditional instruments, with settings that matched the repertoire covered within these "in the wild" sessions. The songs were always discussed with the music therapist in advance of sessions, but the Noodler settings could be changed during a session to match the song being played at the time. The Noodler was attached to its own amplifier and positioned near the user, who became more accustomed to its use over time. A particularly successful example was when a well-known song (one of the participants' favorites) was cut into short samples (3–5 s) that could be triggered by the Noodler. The user and the music therapist engaged in call-and-response interplay, with the music therapist playing the same section of the song on an acoustic guitar and the participant using the Noodler buttons to respond. Those present felt that witnessing this interplay made the value of these forms of technology concrete. The participant was clearly empowered by using the technology, and the playing field between practitioner and recipient seemed to be leveled. Those present commented that it was the most engaged and laughter-filled time they had ever witnessed from the user. The music therapist also analyzed a video of a different participant using the Noodler in a performance in front of an audience and described how they thought the tool opened the user to their surroundings and encouraged real-time engagement with the music and the space.
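Cutting a song into fixed-length phrases for button playback can be sketched as follows. The article does not say how the samples were prepared (this may well have been done by hand in an audio editor), so this Python sketch simply computes sample-index boundaries for equal-length slices within the 3 to 5 s range mentioned and assigns them to buttons in order; the function names, the 44.1 kHz sample rate, and the choice to drop an incomplete final slice are assumptions.

```python
from __future__ import annotations


def slice_song(duration_s: float, slice_s: float = 4.0,
               sample_rate: int = 44_100) -> list[tuple[int, int]]:
    """Return (start, end) sample indices of consecutive equal-length slices.

    Only complete slices are kept, so every button plays a full phrase.
    """
    if not 3.0 <= slice_s <= 5.0:
        raise ValueError("slice length should be between 3 and 5 seconds")
    total = int(duration_s * sample_rate)
    step = int(slice_s * sample_rate)
    return [(start, start + step) for start in range(0, total - step + 1, step)]


def map_to_buttons(slices: list[tuple[int, int]],
                   num_buttons: int) -> dict[int, tuple[int, int]]:
    """Assign slices to buttons 0..num_buttons-1 in song order."""
    return {button: slices[button]
            for button in range(min(num_buttons, len(slices)))}


slices = slice_song(duration_s=10.0, slice_s=4.0)
print(map_to_buttons(slices, num_buttons=4))
# {0: (0, 176400), 1: (176400, 352800)}
```

Keeping the slices in song order means a practitioner playing a given section on another instrument can point to, or call for, the matching button.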

5.4. Barriers and next steps

This research suggests that the barriers to technology use can be placed into four categories: barriers to finding appropriate technology, barriers to setting technology up, barriers to integrating technology into practice, and barriers to using technology within the session. These categories also interlink, depending on the goals and needs of the practitioners and the individuals using the technology. Each barrier could be considered to require its own skill set and training to overcome, and each points to potential gaps in provision. Each also suggests ways to break down these barriers: providing technology that addresses them, supportive documentation to help people understand the technology, or examples of technology integrated into practice. It is hoped that future work with the toolkit will add to this discourse, as its exploration was limited in this research. In the author's opinion, the next level of technological advancement requires easier-to-use tools that account for heterogeneous users; tools that focus on the context of use; development in the field of interaction with tools for users with the most profound needs (especially in assessing their needs with regard to provision for music-making, and in assessing their interaction with tools for music-making); and more resources and examples of best practice for all of the above that combine synergistically with as many existing resources as possible, for example, the Sounds of Intent framework (Vogiatzoglou et al., 2011).

5.5. Limitations

There were many limitations to the toolkit, and much further work could be conducted around it. The main limitations were that the sound world to which the elements of the toolkit connected could have been explored further (the mappings could have been richer) and that the GUI of the software could have been developed further to be more accessible. In general, the whole toolkit would benefit from extensive testing in context. Organically following the needs of the stakeholders meant that the data were messy to pick apart, as there were no driving research questions, only tentative aims, with stakeholders guiding the direction of the research. A piece of data might inform discussion around contextual issues of using music technology (for example, ensuring individual needs were met by considering logistical matters such as sound levels), highlight a technical issue (something that needed fixing), or inform future design (a feature to add). Data could be all three at once, triangulating to inform all three perspectives. For example, the observation that "one child found the sound level too loud" meant that the design requirements needed to consider how to make the child comfortable, to provide a method of controlling the sound level, and to make that control quickly accessible. Integrated sound (and haptic feedback) would have been valuable additional improvements and fall into the category of "future work." Adding LEDs to show the state of the system (for example, when the device was on, connected to the receiver, or sending data, and utility lights such as a low-battery indicator) could also have been developed further.

A limitation in the project's ethical consent process meant that video of interactions featuring CYP playing with the MAMI Tech Toolkit could not be shared, because explicit consent for video footage had not been obtained. However, the author would argue that it is of utmost importance to include the voices of participants alongside those of proxy representatives and practitioners, especially in the context of underrepresented voices.

6. Conclusion

In this study, the development of the MAMI Tech Toolkit was described. This covered the cycles of action research used as part of the methodology to work with stakeholders and draw out tacit knowledge. The knowledge gathered and who contributed it were outlined, and how this was used to shape the direction and goals of the research and the design of the tools was discussed. Third-wave HCI methods were used to link the embedded exploration of people using technology "in the wild" to the technology developed throughout the action research methodology. The "technology prototype as probe" was outlined as the mechanism used to engage stakeholders with the design process, both as a chance to see what was possible and as a point of departure for further ideas and design iterations. The hardware and software of the toolkit were described in terms of their engineering elements, features, and functionality. Each element of the toolkit (and the toolkit as a whole) was explored to analyze the accessibility and logistical design decisions that were made, and links to the stakeholder data that contributed to these decisions were highlighted. A discussion reflected on the integration of such tools into practice alongside some of the themes that emerged from the embedded iterative development of the system. Finally, some key themes in the discourse surrounding these types of tools, such as material qualities, cause and effect, mapping, design constraints, barriers, and next steps, were discussed.

In summary, this study described the use of an action research methodology to develop an accessible music technology toolkit. There was an emphasis on using participatory and iterative methods to both elicit current issues and barriers with music technology and to develop novel tools to address these gaps in provision. The contributions of the study advance knowledge around active music-making using music technology, as well as in working with diverse users to create these new types of systems.

Data availability statement

The datasets analyzed for this study can be found in BORDaR at Bournemouth University: http://bordar.bournemouth.ac.uk/196/. The GitHub repository for the code created can be found at: https://github.com/asha-blue/MAMI-Tech-Toolkit-Final-Edition.

Ethics statement

The studies involving humans were approved by Bournemouth University Ethics Panel. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants' legal guardians/next of kin.

Author contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Funding

The funding was granted by the EPSRC (code: EP/L016540/1).

Acknowledgments

This research was carried out as part of the author's engineering doctorate candidacy within the Centre for Digital Entertainment at Bournemouth University under the supervision of Dr. Tom Davis and Dr. Ann Bevan. The author thanks Luke Woodbury for industrial mentorship throughout and the Three Ways School for industrial sponsorship.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

AlphaSphere (2023). AlphaSphere. Available online at: https://alphasphere.com/ (accessed April 6, 2023).

Bela (2023). Bela Mini kit. Available online at: https://shop.bela.io/ (accessed December 22, 2022).

Bevan, A. (2013). Creating communicative spaces in an action research study. Nurs. Res. 21, 14–17. doi: 10.7748/nr2013.11.21.2.14.e347

Bødker, S. (2006). “When second wave HCI meets third wave challenges,” in Proceedings of the 4th Nordic Conference on Human-computer Interaction: Changing Roles (New York, NY: ACM), 1–8. doi: 10.1145/1182475.1182476

Bott, D. (2010). Towards a More Inclusive Music Curriculum - The Drake Music Curriculum Development Initiative, Classroom Music Magazine.

Calegario, F. (2018). Designing Digital Musical Instruments Using Probatio: A Physical Prototyping Toolkit. Berlin: Springer. doi: 10.1007/978-3-030-02892-3