- ¹Artificial Life and Minds Lab, School of Computer Science, University of Auckland, Auckland, New Zealand

- ²Te Ao Mārama–Centre for Fundamental Inquiry, The University of Auckland, Auckland, New Zealand

Living systems process sensory data to facilitate adaptive behavior. A given sensor can be stimulated as the result of internally driven activity, or by purely external (environmental) sources. It is clear that these inputs are processed differently—have you ever tried tickling yourself? Self-caused stimuli have been shown to be attenuated compared to externally caused stimuli. A classical explanation of this effect is that when the brain sends a signal that would result in motor activity, it uses a copy of that signal to predict the sensory consequences of the resulting motor activity. The predicted sensory input is then subtracted from the actual sensory input, resulting in attenuation of the stimuli. To critically evaluate the utility of this predictive approach for coping with self-caused stimuli, and investigate when non-predictive solutions may be viable, we implement a computational model of a simple embodied system with self-caused sensorimotor dynamics, and use a genetic algorithm to explore the solutions possible in this model. We find that in this simple system the solutions that emerge modify their behavior to shape or avoid self-caused sensory inputs, rather than predicting these self-caused inputs and filtering them out. In some cases, solutions take advantage of the presence of these self-caused inputs. The existence of these non-predictive solutions demonstrates that embodiment provides possibilities for coping with self-caused sensory interference without the need for an internal, predictive model.

1. Introduction

The remarkable adaptive behavior displayed by living organisms would not be possible without the capacity to respond to sensory stimuli appropriately. The same sensors can be stimulated due to external (environmental) causes, as well as by internally driven activity. Intuitively, it seems like responding appropriately must require distinguishing the two. We can hear sounds in the world around us, but we can also hear our own voice when talking, and our own footsteps when walking. We can see our environment, but we can also see our own bodies. Not only do we perceive both the world and the results of our own actions, but the exact same sensory stimulus can be caused by an external event, or by our own activity. For example the sight of a hand being waved before your eyes could be your own hand or a friend snapping you out of a daydream. However, we typically have no trouble telling the difference. Indeed, the phenomenology of a self-caused stimulus can be very different from that of an externally caused one. A great example of this is the sensation of touch, which can reduce you to helpless laughter when externally applied—but trying to tickle yourself just isn't the same! (Blakemore et al., 2000). Understanding exactly how these inputs are processed differently can facilitate building artificial systems as capable and flexible as living ones.

One concrete way this has been studied is in research on the sensory attenuation of self-caused stimuli, where researchers have investigated how these stimuli are perceived as diminished in comparison to externally caused stimuli (Hughes and Waszak, 2011). This is clearly demonstrated in the force-matching paradigm. Here an external force is applied to a subject's finger, after which they must use their other hand to recreate that force as precisely as possible. This takes place under two conditions. In the direct condition, the subject applies force to their finger in a manner as close as possible to pressing on their own finger (given the constraints of the experimental apparatus). In the indirect condition, they apply the force via a mechanism located elsewhere, such as a lever to one side. Healthy subjects consistently apply too much force when pressing directly on their finger, indicating that the perceived force is attenuated compared to the other conditions (Pareés et al., 2014). The classical explanation of this effect is that when the brain issues a motor command, it uses a copy of that command to predict the sensory consequences of the resulting motor activity. The predicted sensory input is then subtracted from the actual sensory input, resulting in the attenuation of the stimulus (Klaffehn et al., 2019). This is a representationalist explanation in that it explicitly posits that the brain contains an internal model used to simulate the motor system (Wolpert et al., 1995).

While there is indeed evidence to support the presence of neural correlates of motor activity subsequently influencing sensory perception in different species, specifically via corollary discharge circuits (Crapse and Sommer, 2008), the aim of this paper is to interrogate the necessity and utility of internal representations in general and internal predictive models in particular for maintaining adaptive behavior in the presence of self-caused sensory interference. We examine the predict-and-subtract explanation of the sensory attenuation phenomenon by using a genetic algorithm (GA) to explore the viable solutions in a dynamical model of a simple embodied system with non-trivial self-caused sensorimotor dynamics. In this model, the task the controller must solve relies on engaging with an environmental stimulus, while its own motor activity also directly stimulates its environmental sensors. Here we focus on the classical, predict-and-subtract approach, which would in theory perfectly solve the interference problem that we have designed. Our GA nevertheless finds alternative, non-predictive solutions that leverage the system's embodiment.

In general, expected stimuli produce a reduced neural response (de Lange et al., 2018). This has been explained in terms of an internal predictive model (e.g., Blakemore et al., 1998, 2000; Wolpert and Flanagan, 2001; Bays et al., 2005; Kilteni and Ehrsson, 2017, 2022; Kilteni et al., 2020; Lalouni et al., 2021). This type of explanation has been described as “cancellation theory,” where expected sensations are suppressed (Press et al., 2020). In the interest of completeness, we should mention that there are other predictive accounts of perception, such as Bayesian predictive processing, where attention also plays a major role (Friston, 2009; Clark, 2013; de Lange et al., 2018). The roles of prediction in Bayesian and cancellation theories have been considered contradictory, and “opposing process theory” is one attempt to reconcile them (Press et al., 2020). These alternative approaches are somewhat orthogonal to this project, as they address different potential roles for prediction, whereas we aim to engage with the classical account by investigating the role of embodiment in coping with self-caused sensory interference in a context where prediction and subtraction of that interference is a perfect solution. Likewise, while externally-caused stimuli can also be attenuated, for instance when expected (de Lange et al., 2018), or during movement (Kilteni and Ehrsson, 2022), this paper focuses specifically on coping with self-caused stimuli by modeling a task which requires responsiveness to environmental sensor stimulation despite the presence of self-caused sensory interference.

The problem of ego-noise in robotics hints at why subtracting out self-produced stimuli seems like a natural thing for the brain to do. Ego-noise refers to self-caused noise, including that of motors. This noise can interfere with the data-collecting sensors of a robot, and the straightforward engineering solution is to cancel out the noise. The explicitly representational and predictive explanation of the sensory attenuation effect meshes well with this engineering perspective, and has informed a predictive approach to dealing with ego-noise (Schillaci et al., 2016). We cite Schillaci et al. here as an illustration that this exact approach has indeed been used in recent work in robotics, and thus our results should have relevance to the field. Of course, this is not the only approach to dealing with the general problem of making the self-other distinction in robotics; see, for instance, Chatila et al. (2018) and Kahl et al. (2022).

In our model, the embodiment is a simple, simulated, two-wheeled system with a pair of light sensors. It is coupled to a controller—a continuous-time, recurrent neural network (CTRNN)—which determines its motor activity. The sensory input to this robot is a linear combination of environmental factors (a function of its position relative to a light) and a self-caused component—a function of the robot's motor activity.

This model is designed to allow both representationalist and non-representationalist solutions to emerge. For the representationalist predict-and-subtract solution to be viable in this model, two criteria need to be met. Firstly, the controller must be able to model the interference. As the controller is a CTRNN, which is a universal approximator of smooth dynamics (Beer, 2006), it can indeed model the interfering dynamics, which are produced by simple, smooth functions. Secondly, the interference must be able to be removed from the input, given a prediction of the interference. Since the interference is summed with the actual sensor data, it can be removed by subtracting a prediction of the interference from the sensory inputs. This explicitly representational solution would fit with the classical explanation of sensory attenuation. Non-representationalist solutions that take advantage of the system's embodiment are also possible in this model, since the interfering dynamics are a function of the system's motor activity, and are coupled to the controller in a tight sensorimotor loop, embracing the situated, embodied and dynamical (SED) approach. In the classical account, the environmental stimulation of the sensor can be treated as independent of the system's activity, and the self-caused stimulation of the sensor is similarly compartmentalized—the decision to take a particular action is made independently of its incidental sensory consequences, and compensation for these consequences is left to downstream predictive and subtractive processes. In contrast with this approach, modeling how embodied systems are coupled to their environment, in particular how both the system's environmentally and self-caused sensory inputs are influenced by the system's own motor activity, enables additional ways of coping with self-caused stimuli, as will be seen in our results.

Following the evolutionary robotics methodology, we explore the space of possible solutions using a genetic algorithm (GA) (Harvey et al., 2005). We then analyze the behavioral strategies of controllers tuned to successfully accomplish a task (phototaxis) in the presence of several different forms of motor-driven sensory interference. This permits us to examine a range of ways embodied systems may cope with different self-caused sensory stimuli, and reveals that a number of alternatives to the classical predict-and-subtract approach are viable in our model.

Clearly the simulated robot and neural network controller that we are investigating are very different from humans and their brains. This limits the ability to make direct predictions about humans based on the results found in our model—we don't expect to find people using exactly the same strategies used by the two-wheeled robot. Nevertheless, this type of model can highlight how the solutions found by evolution are not always the same as the solutions that might be identified by a human engineer. As argued by Thompson et al. (1999), humans need to understand what they engineer, to divide and subdivide the problem and solution into smaller units until those units are simple enough to address directly. For example, dividing the problem of coping with self-caused stimuli from the general problems of perception and action, and further dividing it into the prediction and subtraction of self-caused stimuli. Natural or artificial evolution, on the other hand, is under no such constraint. The solutions it finds are the result of iterative improvement with no need for understanding, simplification or compartmentalization. Accordingly, it can find solutions that are “messy” and difficult, perhaps in some cases even impossible, for us to understand. Our evolutionary robotics model, like others before it (Beer, 2003; Phattanasri et al., 2007; Beer and Williams, 2015), allows us to see that there are alternatives to how an engineer might approach solving this particular problem. Furthermore, it allows us to generate concrete examples of alternative strategies for solving the problem at hand, and due to the simplicity of the model these examples are easier to analyze and come to understand than the incredibly complex behavior found in living systems.

In Section 2 we explain the model we developed and the GA we use to optimize its parameters. Then in Section 3 we present the results of our investigation, describing each form of interference used, and explaining the behavior of the most successful system evolved to perform phototaxis in the presence of each form of interference. Finally in Section 4 we summarize the different behaviors evolved to cope with these forms of interference, and discuss how these findings can inform our understanding of the role embodiment plays in coping with self-caused sensory stimuli. We draw attention to how the problem of disentangling self-caused and environmental stimulation of the sensors is made easier for embodied systems by the influence embodied systems have over both self-caused and environmental stimulation of their sensors, and we argue that, for embodied systems, this problem need not require the use of an internal model.

2. Model and methods

In this section we first describe our model of an embodied system with self-caused, motor-driven sensory interference, which must perform a task where clear perception of the environment is beneficial. We then describe the genetic algorithm (GA) that we use to investigate how embodied systems can cope with self-caused sensory input.

2.1. Model

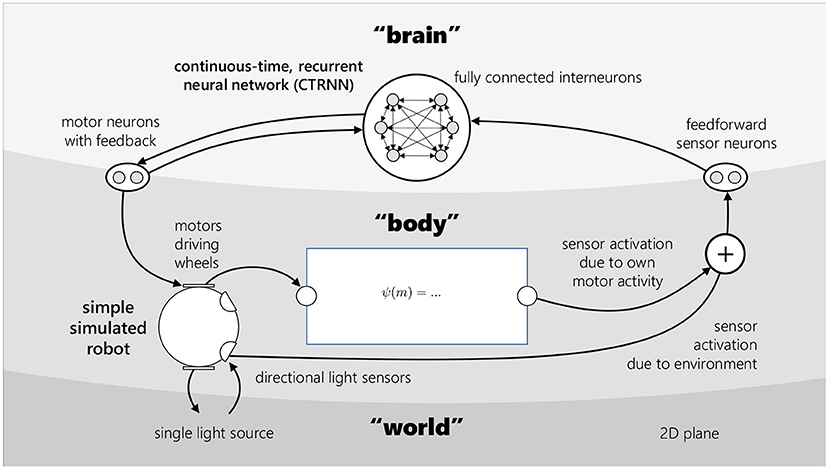

We model a simple light-sensing robot, controlled by a neural network, where the robot's light sensors can also be directly stimulated by the robot's own motor activity. The two-wheeled robot moves about an infinite, flat plane. It has a pair of directional light sensors, and the environment contains a single light source. Over the course of a single simulation, this light source's position remains fixed. The robot is controlled by a continuous-time, recurrent neural network (CTRNN). Motor-driven interference is ipsilateral and non-saturating, and is determined by one of three different functions, which are detailed in the Experiments section. Figure 1 provides a visual overview of the model architecture. As the model is fully deterministic, the course of each simulation is fully determined by the robot's initial distance from and orientation toward the light. In each simulation, the robot begins at the origin (0, 0), facing toward positive y, and initial conditions are varied by positioning the light at a different (x, y) coordinate.

Figure 1. An embodied model with motor-driven sensory interference. This model is used throughout the paper. It consists of three parts—the “brain,” the “body” and the “world.” The brain is a continuous-time, recurrent neural network (CTRNN), with 6 fully connected interneurons, 2 sensor neurons which project to all interneurons, and 2 motor neurons which project to and receive projections from all interneurons. The motor neurons determine the activation of the body's 2 motors. The body's position and orientation relative to the single light source in the environment determine the activation of its 2 light sensors. The value received at a given point in time by the right sensor neuron is a linear combination of the right light sensor activation, and a function ψ of the right motor's activation, representing self-caused sensory stimulation—and likewise for the left sensor, sensor neuron, and motor.

2.1.1. Embodiment

The robot is circular, with two idealized wheels situated on its perimeter π radians apart, at −π/2 and π/2 relative to its facing. The wheels can be independently driven forwards or backwards. Its two light sensors are located on its perimeter at −π/3 and π/3 relative to its facing. The environment it inhabits is defined entirely by the spatial coordinates of the single light source. The robot's movement in its environment is described by the following set of equations:

Where x and y are the robot's spatial coordinates, and α is the robot's facing in radians. mL and mR are the robot's left and right motor activation, respectively, and are always in the range [−1, 1]. The values of mL and mR are specified by the controller, which is described later. r = 0.25 is the robot's radius. We simulate this system using Euler integration with Δt = 0.01.

Physically this describes positive motor activation turning its respective wheel forwards, and conversely for negative motor activation. If the sum of the two motors' activation is positive, the robot as a whole moves forwards with respect to its facing, while if it is negative, the robot moves backwards. The amount that the robot turns is also determined by the relationship between the two wheels.
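The qualitative behavior described above can be illustrated with a short Euler-integration sketch. The kinematics used here are a standard differential-drive form (forward speed from the mean of the wheel activations, turn rate from their difference over the axle length 2r) and are an assumption, as are the names `euler_step`, `R` and `DT`; only r = 0.25 and Δt = 0.01 are taken from the text, and the model's exact scaling may differ.

```python
import math

R = 0.25   # robot radius, from the text
DT = 0.01  # Euler integration step, from the text


def euler_step(x, y, alpha, m_left, m_right, r=R, dt=DT):
    """Advance the robot by one Euler step of an ASSUMED differential-drive model."""
    # Assumption: forward speed is the mean of the two wheel activations,
    # so a positive sum moves the robot forwards along its facing.
    speed = (m_left + m_right) / 2.0
    # Assumption: turn rate is the wheel difference over the axle length 2r,
    # so a faster right wheel turns the robot to the left (alpha increases).
    turn_rate = (m_right - m_left) / (2.0 * r)
    x += dt * speed * math.cos(alpha)
    y += dt * speed * math.sin(alpha)
    alpha += dt * turn_rate
    return x, y, alpha
```

With equal motor activations the heading is unchanged and the robot moves along its facing; with opposite activations it turns on the spot.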

The robot's two light sensors are located at the coordinates (x + cos(α + θ)r, y + sin(α + θ)r), where θ is the sensor's angular offset. For the left sensor, θ = π/3 and for the right sensor, θ = −π/3. The environmental stimulation of the sensors is given by:

Where b = [cos(α + θ), sin(α + θ)] is the unit vector pointing in the direction the sensor is facing, and c is the vector from the sensor to the light, with ĉ denoting that the vector is normalized to have a unit length. That is ĉ = c/|c|, where |c| is the magnitude of c. The symbol · denotes the dot product of the two vectors, and the superscript + indicates that any negative values are replaced with 0. D is the Euclidean distance from the sensor to the light, and ϵ = 5 is a fixed environmental intensity factor. sL denotes the activation of the left sensor, with θ = π/3, while sR denotes the activation of the right sensor, θ = −π/3.

The numerator is maximized at 1 when the sensor is directly facing the light, and minimized at 0 when the sensor is facing π/2 radians (90°) or more away from the light. The denominator is minimized at 1 when the distance from the sensor to the light is 0. This means that the activation of a sensor grows both as the sensor faces more toward the light, and as the sensor approaches the light (so long as it is facing less than π/2 radians away from the light).
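The two geometric quantities that the sensor activation depends on can be computed as in the following sketch. It returns the rectified facing term (b · ĉ)⁺ and the distance D for one sensor; how D and ϵ enter the denominator is deliberately not reproduced here. The function name `sensor_geometry` and the convention used when the sensor sits exactly on the light are assumptions made for illustration.

```python
import math

R = 0.25  # robot radius, from the text


def sensor_geometry(x, y, alpha, theta, light, r=R):
    """Return ((b . c_hat)^+, D) for the sensor at angular offset theta."""
    # Sensor position on the robot's perimeter, as given in the text.
    sx = x + math.cos(alpha + theta) * r
    sy = y + math.sin(alpha + theta) * r
    # b: unit vector pointing in the direction the sensor is facing.
    bx, by = math.cos(alpha + theta), math.sin(alpha + theta)
    # c: vector from the sensor to the light; D is its Euclidean length.
    cx, cy = light[0] - sx, light[1] - sy
    dist = math.hypot(cx, cy)
    if dist == 0.0:
        return 1.0, 0.0  # assumed convention: sensor exactly on the light
    # Rectified dot product: negative values are replaced with 0.
    facing = max(0.0, (bx * cx + by * cy) / dist)
    return facing, dist
```

For the left sensor, `theta = math.pi / 3`; for the right sensor, `theta = -math.pi / 3`.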

2.1.2. Controller

The controller is a continuous-time recurrent neural network (CTRNN) defined by the state equation below, following Beer (1996):
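In the notation defined in the following paragraph, the standard CTRNN state equation from Beer's formulation can be written as shown here. This is the textbook form, given as a sketch under the assumption that the model uses it unchanged:

```latex
\tau_i \,\dot{y}_i = -y_i + \sum_{j=1}^{N} \omega_{ji}\,\sigma\!\left(y_j + \beta_j\right) + I_i ,
\qquad i = 1, \dots, N
```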

Here N = 10 denotes the number of neurons in the network. yi indicates the activation of the ith neuron. The parameter τi is the time constant of that neuron, where 0 < τi < 3, while the parameter βi is its bias, where −5 < βi < 5. Ii is any external input to the neuron. σ(x) = 1/(1 + e^(−x)) is the standard logistic activation function for neural networks, and is a sigmoid function in the range [0, 1]. ωji is a weight determining the influence of the jth neuron on the ith neuron, where −5 < ωji < 5.

Two neurons are designated as input neurons, and all their incoming interneuron weights ωji are set to 0, including the recurrent weight ωii. With the robot described above, neurons 1 and 2 are designated as input neurons, and I1 = wIsL, while I2 = wIsR, where wI = 5 is a fixed input scaling weight. These are the only neurons which receive an external input, so I3..N = 0 always.

Two neurons are designated as output neurons (neurons 9 and 10), and their activation values y are treated as the output of the network. In our case, yN−1 and yN provide the values mL and mR, respectively. Output is scaled to be in the range [−1, 1] by the function:

Where ωmax = 5 denotes the maximum weight value ω permitted for a node in this CTRNN. The two output neurons do not receive stimulus from the input neurons. That is if j ∈ {1, 2} and i ∈ {9, 10} then ωji = 0. The remaining six neurons are interneurons, each of which receives inputs from all other neurons in the network. This neural network architecture is illustrated in Figure 1.
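A single Euler step of such a controller might look like the sketch below. It assumes the standard CTRNN form from Beer's formulation, with `w[j][i]` holding the weight from neuron j to neuron i. The names `sigmoid` and `ctrnn_step` are hypothetical, and neither the zeroed connections described above nor the output scaling are enforced here; the caller would supply a weight matrix with those entries already set to 0.

```python
import math


def sigmoid(x):
    """Standard logistic function 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def ctrnn_step(y, tau, beta, w, inputs, dt=0.01):
    """One Euler step of an ASSUMED standard CTRNN.

    y, tau, beta, inputs are length-N lists; w[j][i] is the weight
    from neuron j to neuron i.
    """
    n = len(y)
    # Firing rate of every neuron, computed from the current state.
    out = [sigmoid(y[j] + beta[j]) for j in range(n)]
    new_y = []
    for i in range(n):
        synaptic = sum(w[j][i] * out[j] for j in range(n))
        dy = (-y[i] + synaptic + inputs[i]) / tau[i]
        new_y.append(y[i] + dt * dy)
    return new_y
```

All neurons are updated from the same snapshot of the state, so the update order does not affect the result.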

2.1.3. Motor-driven interference

Perception necessarily involves both the system and its environment. Nevertheless, we can consider the degree to which the activity of the system or environment contributes to a given stimulus. Let us take three very different points in this space. (1) If our robot passively sat still, while a light in the environment turned on and off, the change in the light sensors' activations would primarily be due to external causes—the robot's own activity would not play a role. (2) On the other hand, in the model described above, all changes in the light sensors' activations are the result of a change in the relationship between the light's position and the robot's position and facing. Because the light is static, the change is induced by the robot's activity, but determined by the robot's spatial relationship with its environment. (3) At the other end of the scale from (1), consider the case where the robot inhabits a lightless environment in which its sensors are directly and exclusively stimulated by its own motor activity. In this case, neither external causes, nor the relationship between the system and the environment play a role—the change in the sensors' activation is due solely to the robot's own activity.

For living systems in the real world, none of these three points are typically possible—for (1) perception is rarely (if ever) purely passive, for (2) movement will likely involve self-produced sensations even if the environment is passive, and for (3) self-produced sensations will depend on environmental conditions. Nevertheless, our own experiences may lie closer to one of these points than to another. Consider the visual experience of (1) sitting watching a movie (a passive experience, yet one whose visual sensations will still depend on activities like movement or blinking), (2) turning to look around the otherwise still room briefly (where the visual stimulation is largely determined by the spatial relationship between the eyes and the room, but still influenced by changes in the environment like the ongoing movie, and self-produced sensations like the peripheral vision of bodily movement), then (3) scratching your nose (where a change in visual stimulation is caused by your own hand entering the visual field, but depends also on static and dynamic environmental factors like the general lighting of the room and the flickering light of the movie screen).

In the model described so far, there is no possibility for directly self-caused stimuli like (3). This is precisely the kind of self-caused sensory input we are concerned with here, so we extend the model with an interference function ψ(m). The various interference functions we study are described in Section 3. The interference function is used in a new sensory input equation:
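Consistent with the worked example in the summary at the end of this section, where λ = 0.05 yields 0.95 s + 0.05ψ(m), the combined input takes the form:

```latex
s' = (1 - \lambda)\, s + \lambda\, \psi(m)
```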

Where s is the original light sensor activation, m is the ipsilateral motor's output, and λ is a scaling term controlling how much of the sensory input is due to the environment, and how much is due to the system's motor activity. Substituting for the original input neuron equations, this gives:
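Using the weighting (1 − λ)s + λψ(m) implied by the λ = 0.05 example in the summary below, together with I1 = wIsL and I2 = wIsR from Section 2.1.2, the substituted input equations would read:

```latex
I_1 = w_I \left[ (1 - \lambda)\, s_L + \lambda\, \psi(m_L) \right], \qquad
I_2 = w_I \left[ (1 - \lambda)\, s_R + \lambda\, \psi(m_R) \right]
```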

This combination of motor-driven interference with sensor activity is additive and non-saturating. That is, the interference ψ(m) can never be so high that change in the environmental stimulation s of the sensor does not result in a change in s′. This means that if ψ(m) can be predicted by the network, then this value can simply be subtracted from the input neuron's output to other nodes. This mapping also uses the ipsilateral motor to generate interference for each sensor. This was chosen for two reasons. Firstly, it is physically intuitive. Secondly, because the motor neurons have recurrent connections to the interneurons, this means that the neural activity determining mL and mR [and thus ψ(mL) and ψ(mR)] contributes to the interneurons' synaptic inputs, making prediction easier.

To summarize, we start with a model of a two-wheeled robot with two light sensors, controlled by a CTRNN. In this model, changes in a light sensor's activation are purely the result of the robot's position and orientation changing relative to the light. We extend this model by adding a function which, given a motor activation value, produces an interfering output. Instead of the input neurons of the controller directly receiving the current activation of the light sensor, the light sensor's activation is first combined with this interference. The parameter λ controls the weighting given to the sensor activation vs. the interference in this combined term. For example, with λ = 0.05, instead of the light sensor's true reading s, the controller receives 0.95 s + 0.05ψ(m). The interference functions ψ(m) are described in Section 3.
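The weighting in this summary, and the reason a perfect prediction of ψ(m) would suffice to undo it, can be made concrete with a small sketch. The function names are hypothetical, and the squared interference used in the usage note is only a stand-in for the functions introduced in Section 3.

```python
W_IN = 5.0  # fixed input scaling weight w_I, from the text


def combined_sensor(s, m, psi, lam):
    """Mix the light reading s with interference psi(m): (1 - lam) * s + lam * psi(m)."""
    return (1.0 - lam) * s + lam * psi(m)


def input_current(s, m, psi, lam, w_in=W_IN):
    """External input delivered to the ipsilateral input neuron."""
    return w_in * combined_sensor(s, m, psi, lam)


def recover_environment(s_prime, predicted_psi, lam):
    """Predict-and-subtract: recover s from s' given a prediction of psi(m).

    Requires lam < 1, i.e. the environmental term is never fully masked.
    """
    return (s_prime - lam * predicted_psi) / (1.0 - lam)
```

For instance, with `psi = lambda m: m * m`, `combined_sensor(1.0, 0.5, psi, 0.05)` evaluates 0.95 · 1.0 + 0.05 · 0.25, and passing the exact value ψ(0.5) = 0.25 to `recover_environment` returns the original reading of 1.0 up to floating-point error.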

2.2. Methods

Parameters for the CTRNN controller were evolved using a tournament-based genetic algorithm (GA) derived from the microbial GA (Harvey, 2011). The GA operates on a population, which consists of a number of solutions specifying the parameters for the CTRNN. In a tournament, two randomly chosen solutions from the population are evaluated independently. Their fitness is compared, and then in the reproduction step the lower scoring solution is removed from the population and replaced by a mutated copy of the higher scoring solution. Our microbial GA differs from the classic presentation in that it ensures that each member of the population participates in exactly one tournament before the reproduction step is performed for the entire population. This allows generations of the population to be counted easily.
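One generation of this scheme could be sketched as follows. The sketch assumes an even population size (50 in this study), pairs members by shuffling, and breaks ties in favor of the first member of a pair; the tie-breaking rule, the function name `microbial_generation`, and the `fitness` and `mutate` callables are all assumptions made for illustration.

```python
import random


def microbial_generation(population, fitness, mutate, rng=random):
    """Run one generation in which every member enters exactly one tournament."""
    order = list(range(len(population)))
    rng.shuffle(order)  # random pairing: consecutive indices form a tournament
    scores = [fitness(genome) for genome in population]
    next_pop = list(population)
    # Reproduction is applied for the whole population after all scores are known.
    for a, b in zip(order[0::2], order[1::2]):
        winner, loser = (a, b) if scores[a] >= scores[b] else (b, a)
        # The loser is replaced by a mutated copy of the winner.
        next_pop[loser] = mutate(population[winner])
    return next_pop
```

Because each member takes part in exactly one tournament, a single call corresponds to one countable generation.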

The following parameters were evolved for each node i in the CTRNN: the time factor τi, the bias βi, and a weight vector specifying the incoming interneural weights for node i, where ωji refers to the weight applied to the connection from j to i.

Each evolvable parameter of the network is encoded in the genome as a single 32-bit floating point number in the range [0, 1]. The weights and biases are translated from gene g to phenotype ω or β via the linear scaling function (ωmax − ωmin)g + ωmin, where ωmin and ωmax are the minimum and maximum neural weights, −5 and 5 respectively, so that g = 0 maps to −5 and g = 1 maps to 5. For τ we use the exponential mapping e^(3g)/10.
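The genotype-to-phenotype mapping can be sketched as below. The linear map sends [0, 1] onto [ωmin, ωmax]. The time-constant map reads the exponential mapping as e^(3g)/10, which is an assumption about where the exponent ends. The function names are hypothetical.

```python
import math

W_MIN, W_MAX = -5.0, 5.0  # weight and bias bounds, from the text


def gene_to_weight(g, lo=W_MIN, hi=W_MAX):
    """Linearly map a gene in [0, 1] onto [lo, hi]; also used for biases."""
    return (hi - lo) * g + lo


def gene_to_tau(g):
    """ASSUMED reading of the exponential mapping: exp(3 * g) / 10."""
    return math.exp(3.0 * g) / 10.0
```

Under this reading, genes near 0 give fast neurons (τ = 0.1) and the exponential spacing devotes more of the gene range to small time constants.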

The reproduction procedure used, based on the result of a tournament, is to remove the loser from the population, and add in its place a copy of the winning genome. Each gene in this copy is then mutated by the function

Where the noise term is a random variable drawn from a normal distribution with a mean of 0 and a standard deviation of 1, μ = 0.2 is the mutation factor, and the result is wrapped by adding 1 and taking the modulo with 1 to ensure that it lies in the range [0, 1].
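A minimal sketch of this mutation step is given here, assuming the noise is scaled by μ and added to the gene before wrapping; that composition, and the names `mutate_gene` and `mutate_genome`, are assumptions based on the description above.

```python
import random

MU = 0.2  # mutation factor, from the text


def mutate_gene(g, mu=MU, rng=random):
    """Perturb one gene with scaled Gaussian noise, then wrap it into [0, 1]."""
    noise = rng.gauss(0.0, 1.0)  # mean 0, standard deviation 1
    # Add 1 and take the result modulo 1, as described in the text.
    return (g + mu * noise + 1.0) % 1.0


def mutate_genome(genome, mu=MU, rng=random):
    """Apply the per-gene mutation to every gene of a copied genome."""
    return [mutate_gene(g, mu, rng) for g in genome]
```

Wrapping rather than clipping means that a gene pushed past one bound re-enters from the other, so mutated genes do not accumulate at the edges of the range.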

In all cases the system was evolved to perform phototaxis using the following fitness function:

Where t is the time at the current integration step, T is the trial duration, and d(x, y) is the Euclidean distance from the point (x, y) to the light. The squared distance is used rather than the actual distance here solely for computational efficiency. Multiplying the distance by the current time means that minimizing distance later in the trial is more important to the fitness score than doing so earlier. The final distance is the most important, while the original distance from the light at t = 0 is completely disregarded. However, improvement at any time is always relevant: t = 99 is almost as important as t = 100.
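The effect of the time weighting can be illustrated with the following sketch, which accumulates t · d(x, y)² over the integration steps of a trajectory. It is written as a cost (lower is better); the sign, any normalization by T, and the way this quantity is turned into a fitness score are assumptions, as is the function name.

```python
def time_weighted_cost(trajectory, light, dt=0.01):
    """Sum of t * d(x, y)^2 over integration steps (ASSUMED form, lower is better)."""
    lx, ly = light
    total = 0.0
    for step, (x, y) in enumerate(trajectory):
        t = step * dt
        # Squared Euclidean distance avoids a square root per step.
        total += t * ((x - lx) ** 2 + (y - ly) ** 2)
    return total
```

The first step has t = 0 and therefore contributes nothing, matching the statement that the initial distance is disregarded, while being far from the light late in the trial is penalized most heavily.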

In each trial, the robot begins at the origin. Each generation, four light coordinates are stochastically generated. The first coordinate is chosen uniformly at random to lie on a circle of radius 3 centered on the origin. The other three coordinates lie on the same circle and form a square with the first. Each solution in the population has its fitness score calculated for each of the four light coordinates. These scores are combined before comparison in the tournament. This means that a given solution's score may go up or down from generation to generation, as it may perform better or worse on that generation's set of light coordinates. This helps prevent the GA from becoming stuck in a local optimum.
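Generating one generation's light positions could be sketched as below: a uniformly random starting angle on the circle, followed by three further points at quarter-turn increments so that the four form a square. The function name `light_coordinates` is hypothetical, and how the four per-light scores are combined is not shown.

```python
import math
import random


def light_coordinates(radius=3.0, rng=random):
    """Return four light positions forming a square on a circle about the origin."""
    start = rng.uniform(0.0, 2.0 * math.pi)  # random angle of the first light
    coords = []
    for k in range(4):
        angle = start + k * math.pi / 2.0  # quarter-turn steps give a square
        coords.append((radius * math.cos(angle), radius * math.sin(angle)))
    return coords
```

Because the square is re-drawn every generation, a solution is tested on lights in all four quadrants relative to its fixed initial heading, at a different rotation each time.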

A population of 50 individuals was used. The trial duration was chosen to allow enough time for robust phototaxis to be selected for, either 10 or 20 time units depending on the interference function. The GA was allowed to run for a sufficient number of generations for fitness gains to plateau and for the population of solutions to converge.

3. Experiments

To investigate how embodied systems cope with motor-driven interference, we began by using the GA to find parameters that would allow a CTRNN controller to perform phototaxis in the basic model with λ = 0 (i.e., with no motor-driven sensor interference). The population of controllers that were the product of this GA run are taken as the ancestral population for the subsequently evolved populations in Experiments 2–4. That is, parameters for these populations were evolved starting from this ancestral population, rather than starting from a new, random population. We chose to use an ancestral population, rather than evolving subsequent populations from scratch, in order to allow for direct comparison between the behavior of the systems optimized with and without the presence of motor-driven interference. The results of Experiment 1 are presented in Section 3.1.

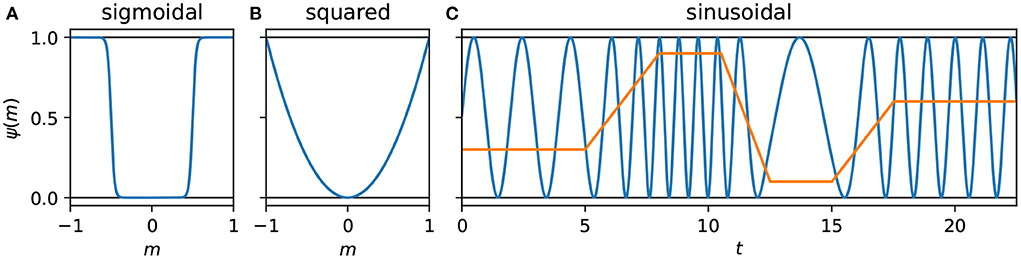

In addition to Experiment 1 with the basic version of the model where λ = 0 (and therefore s′ = s), we consider three further versions of the model in Experiments 2–4, each corresponding to a different interference function ψ(m). We use λ = 0.5 with each of these three functions. In turn we consider: (i) a threshold-like sigmoidal function, whose interference can be completely avoided by appropriately modified behavior; (ii) a form of unavoidable interference, taking the square of the motor activity; and (iii) a time-dependent interference function, a sine wave whose frequency depends on the motor activity, which eliminates a degree of control that was present with the squared interference. The three interference functions used for these experiments can be seen in Figure 2, and are introduced and explained in more depth in Sections 3.2–3.4, where the corresponding results are also presented.

Figure 2. Plots of the interference functions used in Experiments 2–4. (A,B) Plot pure functions of m corresponding to Equations (12) and (13) (Experiments 2 and 3, respectively). (C) Plots a function of time that depends on the cumulative history of m, Equation (14) (Experiment 4). The blue line is the interference, while the orange line is the motor activity.

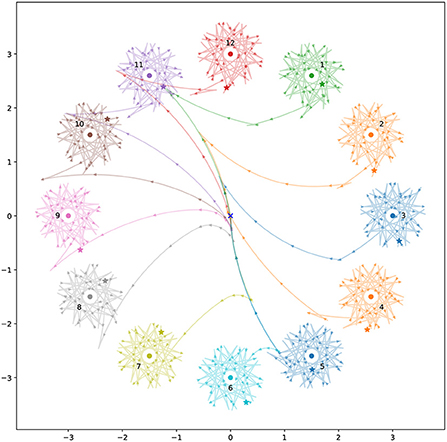

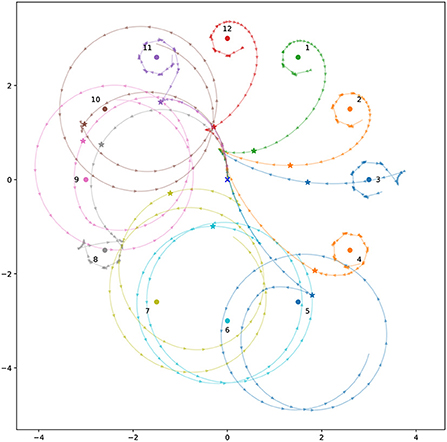

3.1. Experiment 1: Phototaxis without interference

A highly fit population of controllers was evolved to perform phototaxis in the basic model, with no motor-driven interference. Evolution of this population began from a population of solutions with uniformly random interneuron weight and time constant values, and with center-crossing biases (Mathayomchan and Beer, 2002). A trial duration of 10 time units was used. After evolution, genomes for this population are highly convergent, indicating that the population has become dominated by a single solution. Examining the fittest member of this population, we found that the controller reliably brought the robot close to the light across a collection of light coordinates representative of those used during evolution (Figure 3). The robot's behavior results in it remaining close to the light even over time periods orders of magnitude longer than the trial duration used during evolution. This indicates that the solution produces a long term, stable relationship with the environmental stimulus.

Figure 3. Spatial trajectories for the best individual from the ancestral population for 12 different light coordinates. The robot always begins at the origin, facing toward positive y (upwards). Stars mark the final position reached during the trial duration used during evolution. The colored circles show the light position for the correspondingly colored trajectory. The triangles along the trajectories point in the direction the robot is facing. They are plotted at uniform time intervals, so more spaced out triangles indicate faster movement.

The ancestral solution's behavior is well preserved in the descendent populations evolved to handle the various interference functions studied. Understanding how this solution works is helpful for understanding how the descendent solutions handle motor-driven sensory interference.

The ancestral solution's behavior can be divided into 2 phases:

(A) The approach phase, where the robot makes its way close to the light. This phase has to account for the light starting at an unknown point relative to the robot.

(B) The orbit phase, where the robot's long-term periodic activity maintains a close position to the light.

Note that this two-phase description does not imply switching between two different sets of internal rules. These phases are driven by the ongoing relationship between the robot and its environment, and are better thought of in dynamical systems terms as a transient and a periodic attractor.

The orbit phase (Phase B) is simpler to explain, so we will begin with it. Here we can approximate the robot's behavior with a simple program:

1. Approach the light while driving backwards, such that you will pass the light with the light on your right hand side.

2. When the light abruptly enters your field of vision, it causes a spike in your right sensor: quickly respond by switching to driving forwards instead, turning gently to the left.

3. After driving forward has brought the light behind you and out of the sensor's field, go to 1.
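The three steps above can be written as a purely reactive rule. The following is a minimal sketch rather than the evolved controller, which is a continuous-time network with no discrete modes; the function name `orbit_rule`, the threshold value, and the toy sensor trace are all hypothetical and chosen only for illustration.

```python
def orbit_rule(right_sensor: float, spike_threshold: float = 0.5) -> str:
    """Map the current right-sensor reading to a coarse action label.

    spike_threshold is a hypothetical value, not taken from the model.
    """
    if right_sensor > spike_threshold:
        # Step 2: the light has entered the right sensor's field.
        return "forward_gentle_left"
    # Steps 1 and 3: the light is out of view, so reverse toward it.
    return "reverse"


# Toy right-sensor trace: silence, a sharp spike, then decay back to silence.
toy_trace = [0.0, 0.0, 0.9, 0.6, 0.1, 0.0]
actions = [orbit_rule(s) for s in toy_trace]
print(actions)
```

In this sketch the alternation between reversing and driving forwards is produced entirely by the sensor reading, which mirrors the point that the phases arise from the robot's relationship with its environment rather than from an internal switch.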

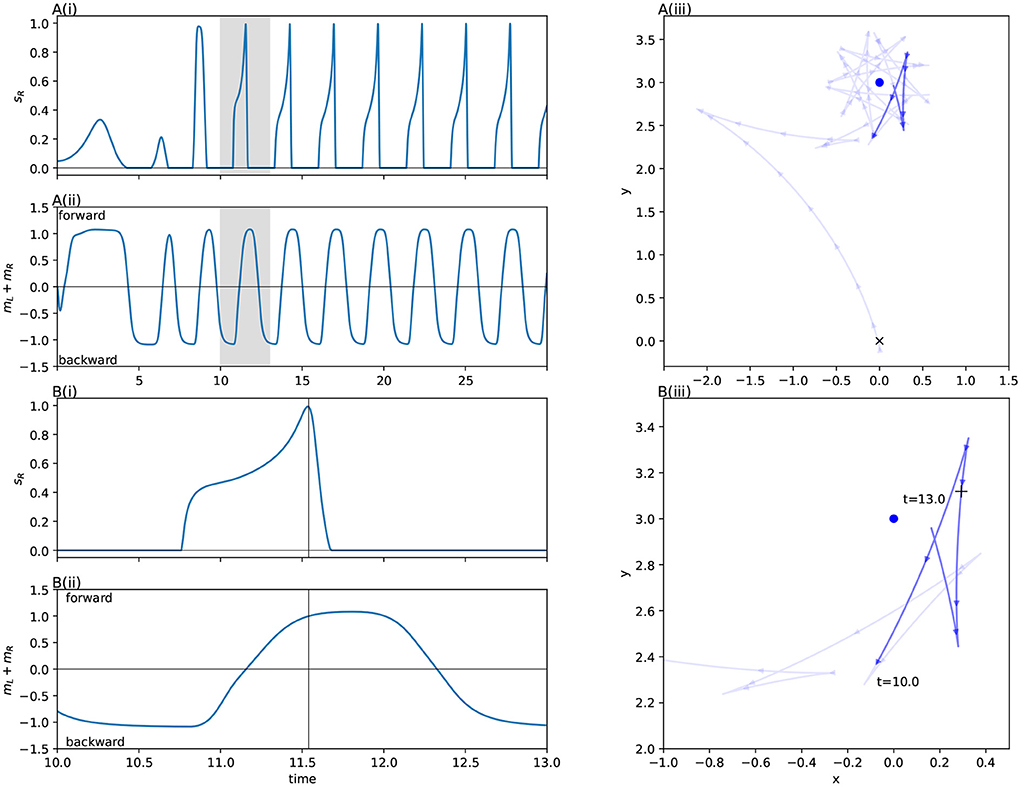

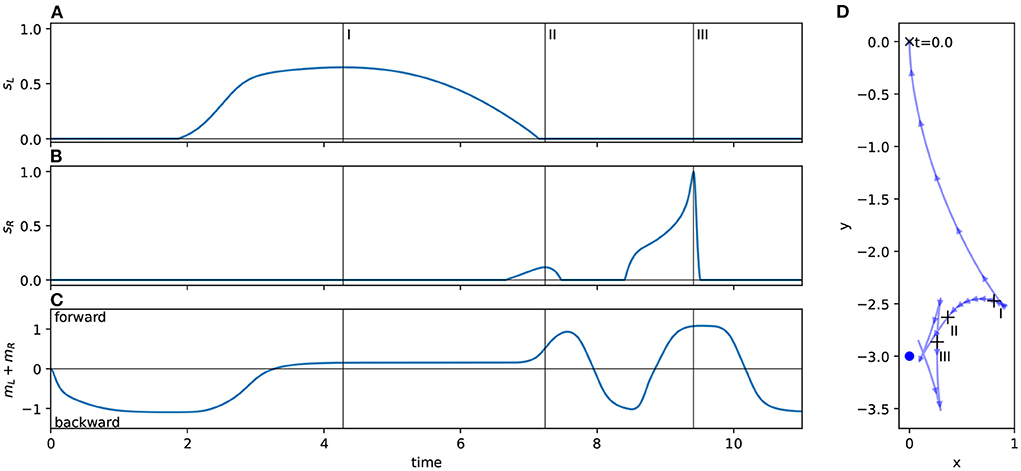

We observed this behavior across all the light coordinates we examined. Figure 4 and its caption explain how this behavior applies to the trajectory for a specific light coordinate, showing how the simple program described above matches its behavior. The left sensor is completely uninvolved in this process. In fact, for some initial light positions, namely when the robot begins with the light on its right, the left sensor is also completely uninvolved in the approach phase. That is, if the left sensor is deactivated throughout these trials, the trajectory is identical to the one produced when it is active.

Figure 4. Detail of the orbit phase (Phase B) for the ancestral solution. The plots marked (A) show the ancestral solution when the light is at coordinates (0, 3), position 12 in Figure 3. The highlighted sections of these plots mark the time period 10–13, which is shown in more detail in (B). The vertical line in B(i,ii) marks the peak of right sensor activation, which corresponds to the + in B(iii). The activity shown in (B) corresponds to the Phase B program (see main text). Before t = 11, the robot drives backwards, passing the light on its right side. As the right sensor is stimulated, the robot changes direction, driving forwards. After the right sensor stimulation peaks and dies down, the robot changes direction again, reversing toward the light. The plots in (A) show how the process repeats.

The approach phase (Phase A) often consists of simply driving forwards, and then continuing to drive forwards until the right sensor is not stimulated. Thereafter the procedure for Phase B is followed, with the approach differing from the orbit primarily in that the amount of time spent on each step of the ‘program’ while approaching the light varies more than it does when the robot is stably orbiting the light. This is what we see in the trajectory shown in Figure 4, and in all conditions when the left sensor is not stimulated. However, in conditions when the left sensor is stimulated during the approach phase, the left sensor is involved in guiding the robot into a state where Phase B takes over. This can be seen in Figure 5.

Figure 5. An example approach phase (Phase A) for the ancestral solution which is guided by the left sensor. (A–C) Show the sensorimotor activity of the ancestral solution when the light is at coordinates (0, –3), position 6 in Figure 3. (D) Plots the spatial trajectory of the robot. The vertical lines in plots (A–C) show the peaks in sensor activity. These correspond to the + markers in (D). Initially the robot drives backwards. The left sensor stimulation between t = 2 and t = 8 is associated with the robot driving forwards while turning strongly to the left. Once this turn has oriented the robot such that the right sensor is being stimulated and the left sensor is no longer being stimulated, the robot drives forward until the right sensor is no longer stimulated. From here, this is just the same Phase B behavior presented in Figure 4.

This solution is an instance of a more general robust strategy for performing phototaxis in this model, which can be summarized even more simply as:

• If you don't see the light, drive backwards (it must be behind you).

• If you do see the light, drive forwards until you can't see it any longer.

The reason this does not result in just driving backwards and forwards along the same arc is that the robot turns a different amount when driving forwards vs. when driving backwards. The turn amount is determined by mR − mL, while the direction of travel is determined by whether mR + mL is negative or positive. When adjusting motor activity to change directions, it is trivial to also change the amount of turn. Of course, this general strategy is not a complete description of the robot's behavior: the effect of sensor stimulation can be time dependent and can differ for the left and right sensors. Particularly during Phase A, the approach to the light, the exact trajectories taken by the robot depend on continually regulating the speed and direction of the two independent motors to perform both gradual turns and sharp changes in direction via three-point turns, with sufficient precision to reliably enter Phase B and maintain it. However, we see this general strategy well preserved in populations descendent from this ancestral population, as well as evolved independently in non-descendent populations.
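To make the role of mR + mL and mR − mL concrete, the following sketch computes both quantities for two motor settings. The motor values are hypothetical, chosen only to lie within the motor range of [−1, 1]; the function name `describe_motion` is likewise an illustration and not part of the model's implementation.

```python
def describe_motion(m_left: float, m_right: float) -> tuple[str, float]:
    """Return the direction of travel and the turn amount for a motor pair."""
    drive = m_right + m_left   # sign gives the direction of travel
    turn = m_right - m_left    # magnitude gives the amount of turn
    if drive > 0:
        direction = "forwards"
    elif drive < 0:
        direction = "backwards"
    else:
        direction = "stationary"
    return direction, turn


# Hypothetical motor settings for the two legs of an orbit.
reverse_leg = describe_motion(m_left=-0.8, m_right=0.2)
forward_leg = describe_motion(m_left=0.1, m_right=0.8)
print(reverse_leg, forward_leg)
```

Because the two legs differ in turn amount as well as in direction of travel, the forward leg does not retrace the arc of the reverse leg.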

To summarize, the ancestral solution takes advantage of the particular nature of its sensors, driving backwards so that the sensors are stimulated sharply. It adjusts its motor activity in response to this sharp stimulation in such a way that the stimulation is extinguished. This environmentally mediated negative feedback loop plays a critical role in enabling the system to remain stably in close proximity to the light source. Capturing this type of natural feedback loop is a strength of modeling work following the SED approach. In the subsequent sections, we will see the role this pattern of behavior plays in coping with additional self-caused interference, and how this behavior is modified when this population of solutions is taken as the ancestral population for subsequent optimisation via the GA with the addition of motor-driven interference.

3.2. Experiment 2: Avoidable interference

Having evolved a system to perform phototaxis in the absence of directly self-caused sensory stimuli, we take this population of solutions as the ancestral population for subsequent evolution in the presence of motor-driven interference functions to begin investigating how embodied systems can cope with this type of interference. In this section we describe the first form of self-caused sensory interference modeled, and how the ancestral solution is modified to accommodate it.

The simplest possible interference would be adding a constant value to all the sensor inputs. However this would not depend on the system's motor activity. Therefore the first ψ(m) that we model is a threshold-like interference function, where interference is maximized when motor activation is above a threshold value, and ≈ 0 elsewhere. To achieve this effect with a smooth function, we use a relatively steep sigmoidal function, with the equation:

$$\psi(m) = \frac{1}{1 + \exp\left(-k\,(|m| - p)\right)} \tag{12}$$

where exp(x) = e^x, |m| is the absolute value of m, k = 50 is the term controlling the steepness of the sigmoid's transition from 0 to 1, and p = 0.5 determines the midpoint of the transition. So when m < −0.5 or m > 0.5, ψ(m) ≈ 1, and when −0.5 < m < 0.5, ψ(m) ≈ 0. This function is unique among the three in that, were the system to constrain its motor activity to the appropriate range, it would avoid the interference altogether. We will refer to the interference generated by this function as avoidable or sigmoidal interference.
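As a quick numerical check of the threshold-like behavior, the sketch below evaluates a steep sigmoid of |m| with k = 50 and p = 0.5. It assumes the standard logistic form 1 / (1 + exp(−k(|m| − p))), which matches the description in the text; the function name and the sample motor values are illustrative.

```python
import math


def sigmoid_interference(m: float, k: float = 50.0, p: float = 0.5) -> float:
    """Threshold-like interference, assuming a standard logistic form in |m|."""
    return 1.0 / (1.0 + math.exp(-k * (abs(m) - p)))


# Sample motor activations on both sides of the threshold (illustrative values).
for m in (-0.9, -0.4, 0.0, 0.4, 0.5, 0.9):
    print(f"m = {m:+.1f}  psi = {sigmoid_interference(m):.4f}")
```

Under this assumed form, motor activity of magnitude 0.4 produces interference below 0.01, while magnitude 0.9 produces interference indistinguishable from 1, so keeping |m| a little below 0.5 is enough to avoid the interference almost entirely.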

With motor activity capped at 50%, motor-driven interference can be avoided, and phototaxis can still be performed, just more slowly. Moving more slowly comes at a cost to fitness though, since the fitness function (Equation 11) rewards reaching the light quickly. Therefore, a predict-and-subtract solution to the interference which preserves the speed of the high-performance ancestral solution should outperform a solution which simply avoids the interference. However, we instead found that the fittest solution from the 5 populations evolved to perform phototaxis with the sigmoidal interference function modifies the motor activity of the ancestral solution significantly.

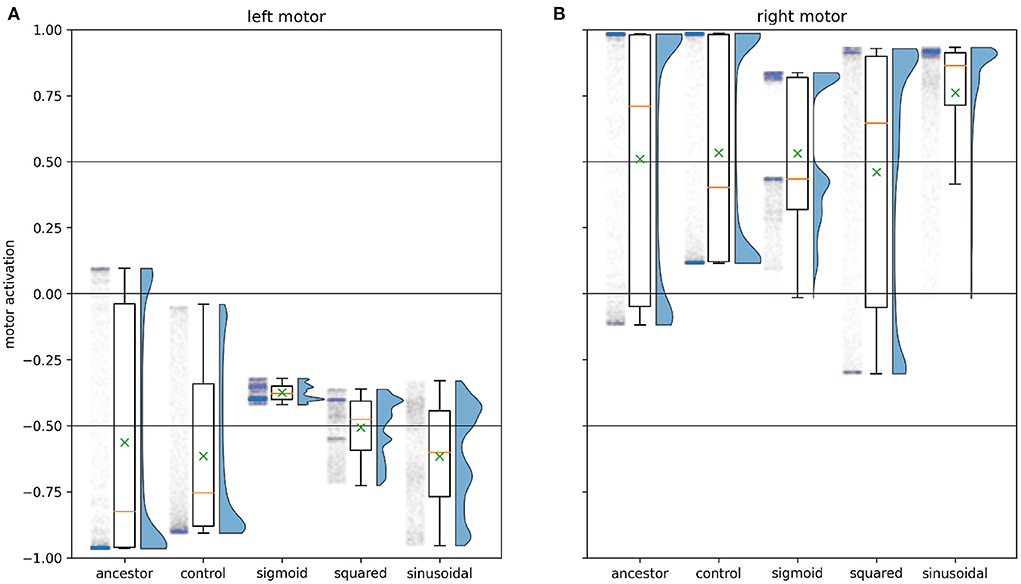

Figure 6 illustrates how the characteristic motor activity of the solution evolved with sigmoidal interference differs from that of the ancestral solution. Keeping in mind that the ancestral solution often involved minimal environmental stimulation of the left sensor, we observe that the left motor in this evolved solution never produces interference. This comes at the cost of greatly decreased absolute motor activity relative to the ancestral solution. The ancestral solution's left motor activity ranges widely, from –0.96 to 0.10 with a median of –0.82, close to the maximum possible absolute value of 1. See Figure 4A(ii) for ancestral motor activity as a time series. In contrast, the left motor activity of this solution ranges only between –0.42 and –0.32 with a median value of –0.38. Time series of this motor activity can be seen in Figures 8A(iv),B(iv). This drastic decrease in motor activity lowers the speeds attainable by the robot, but prevents motor-driven interference with the left sensor. While the activity of the left motor is kept below the threshold for producing interference at all times, keeping the left sensor free of interference, the right motor does produce interference. The distribution of right motor activity is bimodal, with peaks just below the interference threshold of 0.5, and close to its maximum value of 0.84. This bimodal distribution is the result of this solution producing two distinctly different orbit types.

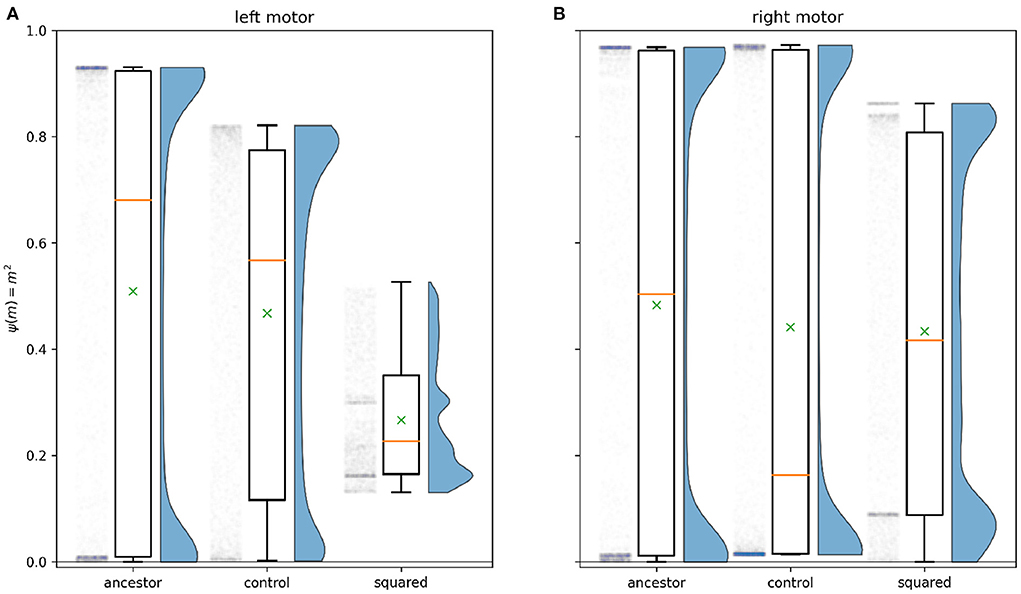

Figure 6. Motor activity for the 12 light positions shown in Figure 3 for time 20 to time 50 (integration steps 2,000–5,000), for the evolved solution to each experiment. This is one way of visualizing aspects of the ancestral behavior that have (and have not) been modified by further evolution in the presence of an interference function. The boxes extend from the first to the third quartile of the motor activity, and contain a yellow line showing the median, and a green × showing the mean. The whiskers extend to 1.5 times the inter-quartile range. The half-violin plot to the right of each box plot estimates the distribution of the motor activity, while to the left is a scatter plot of each simulated moment of motor activity with randomized horizontal placement. The column labeled control plots exactly the same information for the fittest solution evolved with λ = 0.5 and the null interference function ψ(m) = 0, showing the scope of change seen simply due to the presence of λ and to genetic drift. (A) plots the motor activity for the left motor of each system, while (B) plots this information for the right motor. Of particular relevance to the solutions cataloged in this paper are the depressed (absolute) left motor activation with sigmoidal interference and the corresponding bimodal distribution of right motor activity; the reduced range of left motor activity with squared interference, and the fact that the right motor activation with squared interference continues to cover a wide range; and the reduction in low (absolute) values of motor activity with the sinusoidal interference function.

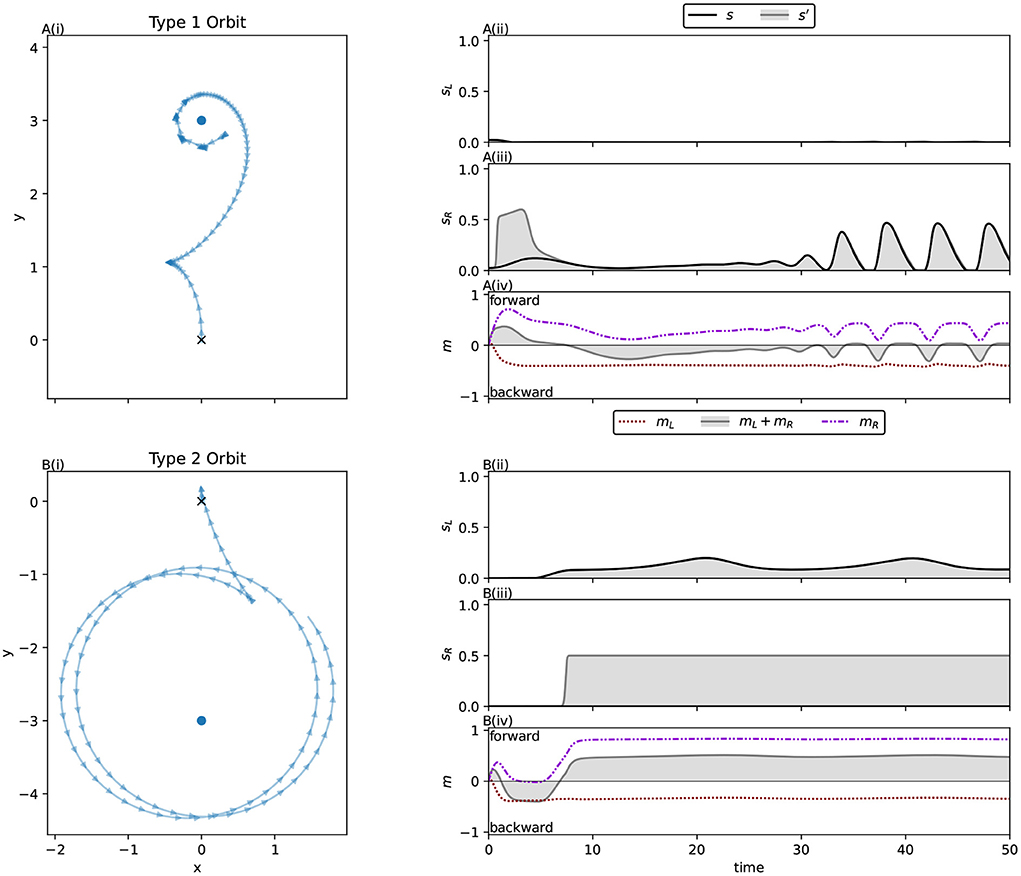

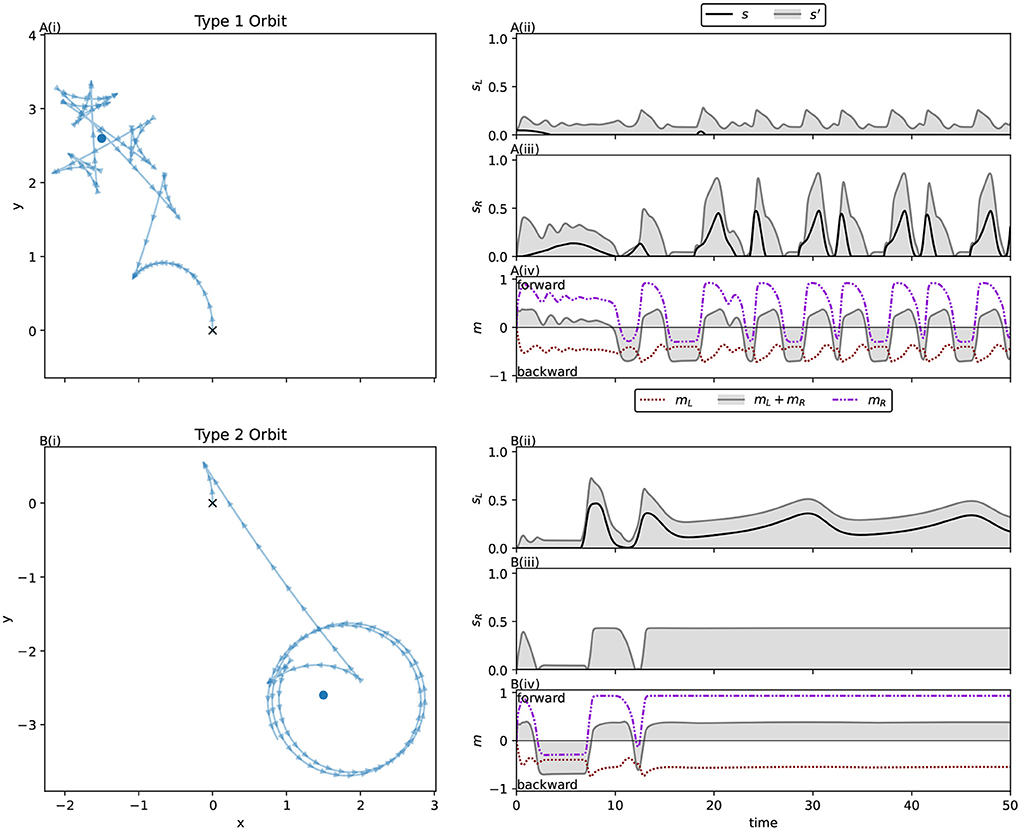

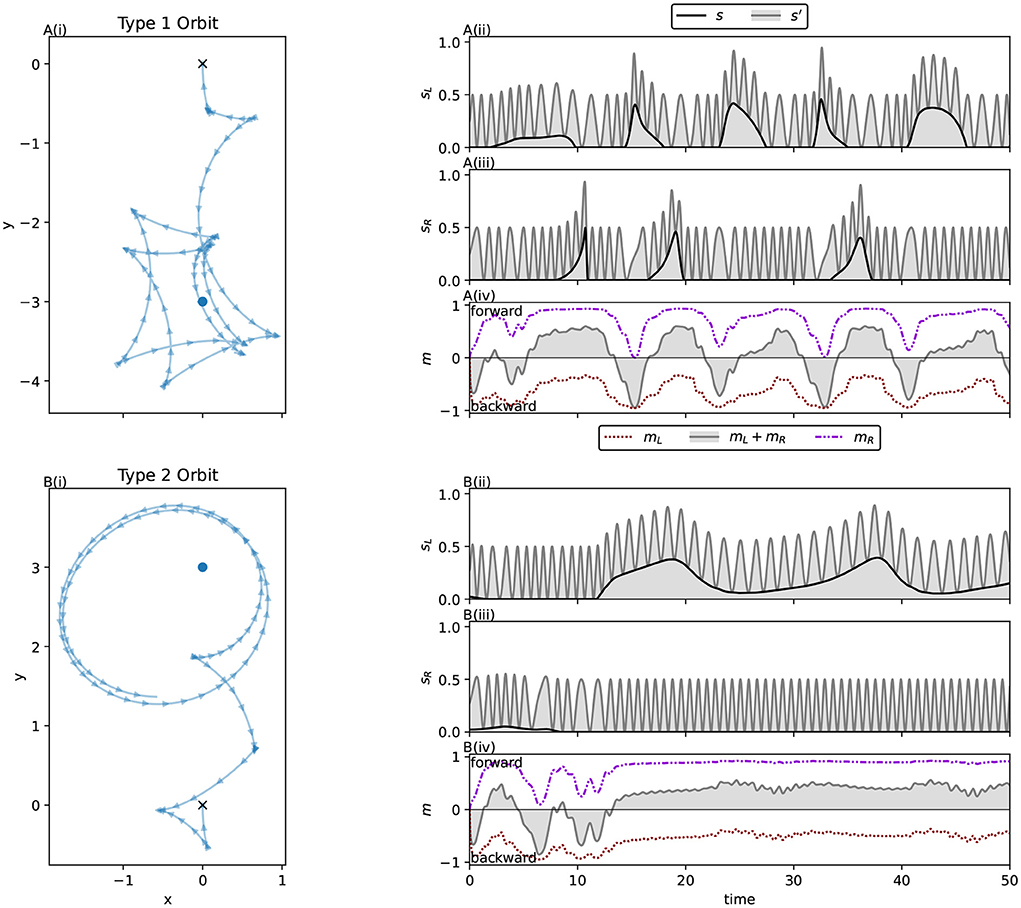

The orbiting behaviors of this system are of interest because they demonstrate ways in which a long term, stable relationship with an environmental source of sensor stimulation can be maintained in a model with motor-driven sensor interference. As with the ancestral population, a trial duration of 10 time units was used for this population. Due to the decreased overall motor activation relative to the ancestor, and the consequently decreased speed, the robot does not get as close to the light in that time as the ancestor did. This means that what has been selected for by the genetic algorithm here is modification of the approach phase to maintain accuracy in the presence of this novel interference. However, due to a sufficiently accurate approach and the evolved regulation of the motor-driven interference, stable orbits are still achieved across all light positions in the very long term. Unlike the ancestor, we see two distinctly different orbit behaviors. Across all interference functions we refer to those orbits reminiscent of the ancestral solution, involving forward and backward motion around the light, as Type 1 orbits, and to orbits which loosely circle the light while driving forwards as Type 2 orbits. These are easily distinguished visually (see Figure 7). As with the ancestor, approaches can broadly be divided into those guided by the left sensor, and those that are not. In the majority of cases for this solution, the approach phase preceding Type 1 orbits is guided exclusively by the right sensor, while Type 2 orbits tend to follow a left sensor guided approach phase.

Figure 7. Two distinct types of orbits are visible in the spatial trajectories for the best individual from populations evolved with sigmoidal interference (Equation 12). Type 1 orbits, reminiscent of the ancestral solution, are seen for Lights 11, 12, 1, 2, 3, and 4. Type 2 orbits, which feature a forward moving, counter-clockwise orbit of the light are seen for Lights 5, 6, 7, 9, and 10. For Light 8, an approach typical of a Type 2 orbit instead puts the robot in position for a Type 1 orbit.

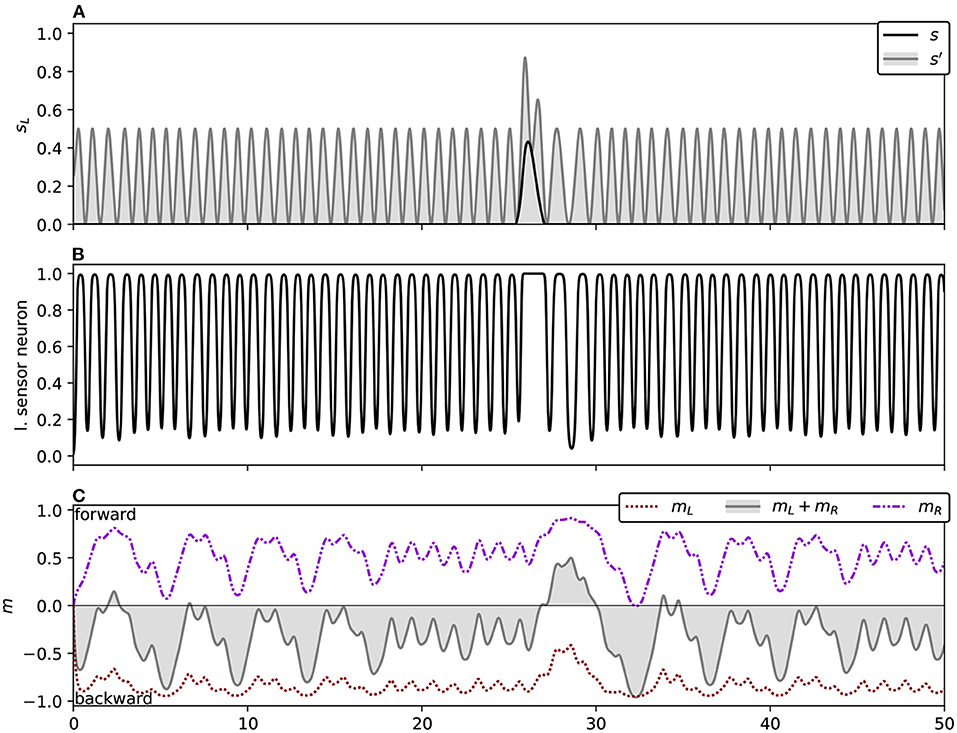

Type 1 orbits come much closer to the light. They display similar sensorimotor behavior to the ancestor's orbit behavior (Phase B), maintaining a stable relationship to the light by repeatedly driving backwards and forwards, albeit with greatly reduced motor activity compared to the ancestor. Figure 8A shows a typical example of sensorimotor activity for Type 1 orbits. Right motor-sensor interference is almost entirely avoided. A very low amount (not visible in the figure) coincides with the robot driving forwards slowly. This interference is necessary because the left motor's activity is negative, and is maintained very closely to the threshold for interference, so the right motor's positive activity cannot be raised sufficiently highly to drive forwards without producing at least a small amount of interference. We summarize this orbit strategy as performing the known good ancestral strategy while constraining motor activity to avoid sensor interference.

Figure 8. Two distinct orbit types produce the bimodal right motor activity distribution seen for the solution evolved with sigmoidal interference in Figure 6B. (A) The Type 1 orbit, which alternates between driving forwards and backwards to stay close to the light. (B) The Type 2 orbit, where the robot exclusively drives forwards during the orbit phase. (i) The spatial trajectory of the robot, (ii,iii) the robot's left and right sensor activities respectively, and (iv) the robot's left and right motor activations. The black line in (ii,iii) shows the environmental stimulation of the sensor, while the grey line and corresponding shaded region show the total activation of the sensor when both the environmental and motor-driven stimulation are combined. Note the minimization of interference during the Type 1 orbit, in contrast with the high level of right sensor interference during the Type 2 orbit.

Type 2 orbits loosely circle the light, and are very different from the ancestral orbit behavior. Figure 8B shows an example of typical sensorimotor activity for this type of orbit. These orbits do not involve environmental stimulation of the right sensor; instead, the left sensor is stimulated throughout the orbit phase. Unlike Type 1 orbits, where the relationship to the light is maintained by repeatedly driving forwards and backwards, the robot exclusively drives forwards. It does so very quickly, producing high right motor-sensor interference. We characterize this orbit strategy as keeping “one eye on the prize,” where the left sensor, facing the light, is kept free of interference. Meanwhile the right sensor, facing away, is continually stimulated by the right motor's activity. This orbit strategy is uniquely enabled by the ipsilateral nature of the motor-driven sensory interference.

In the presence of this threshold-based interference, the best solution found by our GA when modifying the ancestral population constrains the ancestral solution's motor activity to avoid interference while performing the same function of phototaxis, using (in some situations) the same basic strategy. This approach contrasts with the predict-and-subtract approach of modifying the controller to subtract the anticipated interference from the sensor neurons' outputs, which would allow the behavior of the ancestral solution to be performed without modification. This suggests that in our model such solutions are far closer in evolutionary space to the ancestral solution than a predict-and-subtract solution would be. The relevance of this to the evolutionary history of biological control systems is unclear; however, it may suggest that adjusting neural activity to accommodate a novel form of motor-driven sensory interference would involve regulation of the behavior producing that interference in addition to or instead of the neural subtraction of internally predicted interference. This demonstrates that behavior modification does indeed work as a solution to motor-driven sensory interference, and that the precise way in which behavior is modified can depend heavily on the particularities of the sensorimotor contingency in question. Specifically, we have seen how two ways of compensating for motor-driven sensory interference emerged in our model. Firstly, motor activity may be constrained to ranges that minimize or avoid interference with the sensors. Secondly, interference can be avoided for only one sensor, which is kept trained on relevant environmental stimuli. This permits unconstrained use of motor activity which interferes with the other sensor.
While this robot is clearly much simpler than a human, this demonstration of how pre-existing behavior can be modified to avoid the effects of novel, self-produced sensory interference may suggest a role for such solutions in other contexts, such as less complex organisms (including perhaps our deep evolutionary past) and simple robots.

3.3. Experiment 3: Unavoidable interference

Sigmoidal interference certainly does not exhaust the possibilities for modeling interference, nor does it capture the fact that many self-caused stimuli cannot be avoided when taking action. Therefore, we also model non-avoidable interference, where the interference increases with the absolute magnitude of the motor activation. To minimize discontinuities in the system, and to ensure the interference can be approximated by the CTRNN controller, we use a smooth function—the square of the motor activity:

$$\psi(m) = m^2 \tag{13}$$

We will refer to the interference generated by Equation (13) as unavoidable or squared interference. Like the avoidable, sigmoidal interference function modeled previously, the magnitude of the interference correlates with the magnitude of the motor activity. However, unlike with the avoidable interference function, now all changes in motor activity produce a corresponding change in the sensory interference.
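The difference between the two functions can be illustrated by comparing how strongly each responds to a small change in motor activity well below the sigmoid's threshold. This is a sketch under stated assumptions: the sigmoid is taken to have the standard logistic form in |m| with k = 50 and p = 0.5, and the helper `sensitivity`, the step size, and the probe value m = 0.2 are hypothetical choices for illustration.

```python
import math


def sigmoid_interference(m: float, k: float = 50.0, p: float = 0.5) -> float:
    """Avoidable interference, assuming a standard logistic form in |m|."""
    return 1.0 / (1.0 + math.exp(-k * (abs(m) - p)))


def squared_interference(m: float) -> float:
    """Unavoidable interference: the square of the motor activity."""
    return m * m


def sensitivity(psi, m: float, dm: float = 1e-3) -> float:
    """Central-difference estimate of |d psi / d m| at motor activity m."""
    return abs(psi(m + dm) - psi(m - dm)) / (2.0 * dm)


low_motor = 0.2  # hypothetical motor activity, well below the 0.5 threshold
sq_sens = sensitivity(squared_interference, low_motor)
sig_sens = sensitivity(sigmoid_interference, low_motor)
print(f"squared: {sq_sens:.4f}  sigmoidal: {sig_sens:.2e}")
```

With these assumptions the squared function responds with a slope of about 0.4 at m = 0.2, whereas the sigmoid is essentially flat there, which is why constraining motor activity eliminates sigmoidal interference but can only reduce squared interference.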

Examining the fittest solution produced by the GA's modification of the ancestral solution, we again find the ancestral solution well preserved. A trial duration of 20 time units was used during evolution to compensate for any decreased speed compared to the ancestor. The general strategy of approaching the light while driving backwards is maintained; however, motor activity has changed to accommodate the addition of the squared interference function. The left motor's activity is now constrained to a much smaller range (see Figure 6A), which lowers interference dramatically compared to the interference that would be produced by the ancestral solution's motor activity (see Figure 9A). The right motor generates significant interference, but we find that rather than destructively interfering with the sensor in such a way that the environmental stimulus is masked, this motor-driven sensor stimulation is actually constructive in that it synchronizes with and amplifies the environmental stimulus's effect on the sensor. Figure 6B makes it clear that the right motor's activity has not been lowered or even constrained to a tighter range the way the left motor's has, though we still see a slight reduction in interference compared to what the ancestral solution would produce (see Figure 9B). How the system performs so accurately in the presence of this interference becomes clear when we consider the relationship between the right motor activity and the right sensor. As with the ancestor, the robot approaches the light while driving backwards, in such a way that the light enters the right sensor's field from its blind spot at very close proximity to the sensor. Figure 10A shows an example of this approach. When the light enters the right sensor's field, its activation immediately spikes. In response, the right motor's activity also spikes, causing the robot to drive forwards, and also causing a spike of interference in the same sensor.
This is a version of the ancestral Phase B orbit behavior, executed with reduced baseline motor activity, and high right motor activity coordinated with right sensor stimulation. By keeping motor activity at a low baseline and interacting with the environment in such a way that environmental stimuli are sharp and intense, this solution facilitates distinguishing environmental stimuli from low levels of self-caused background noise. By then coordinating motor activity with elevated environmental stimulation of the ipsilateral sensor, motor-driven interference can be raised to high levels without interfering with the system's function, “hiding” in the shadow of the environmental stimulus. Not only does this activity not interfere with perception of the environment, the stimulation caused by the right motor's activity actually reinforces and amplifies the environmental stimulus's effect on the sensor above the maximum level it would be able to achieve on its own.

Figure 9. Motor-driven interference is reduced in Experiment 3 relative to the ancestral population. The figure shows ψ(m) = m² for the 12 light coordinates shown in Figure 3, for 20 < t < 50. (A) Note primarily the lowered mean, median and maximum interference with the left motor. Despite the right motor's activity being spread across a wider range than either ancestor or control (see Figure 6), this spread is to low motor activity values, decreasing maximum right motor-sensor interference. (B) However, the right motor activity has definitely not been suppressed the way the left has, and the system's successful performance in the presence of this interference ultimately depends on the coordination of right motor-sensor interference with environmental stimulation of the right sensor (see main text).

Figure 10. Spatial trajectories and sensorimotor activity showing a Type 1 and Type 2 orbit for the solution evolved with squared interference. Subfigures are labeled as in Figure 8. (A) Shows a Type 1 orbit reminiscent of the ancestral solution, where motor activity is coordinated with sharp spikes of environmental stimulation of the right sensor. A(iii) Shows how elevated right motor interference coincides with environmental right sensor stimulation, amplifying it. The spiking activity is characteristic of negative feedback in this solution, where action resulting from sensor stimulation leads to the stimulus diminishing. (B) Shows a Type 2 orbit, where the robot orbits while driving forwards. B(iv) Shows how the motor activity plateaus during the orbit, with high right motor interference seen in B(iii). This is associated with positive feedback in this solution, where sensor stimulation leads to activity prolonging that stimulation.

Since right sensor stimulation leads to right motor activity, which in turn leads to more right sensor stimulation, we should address the possibility of a self-sustaining positive feedback loop. This possibility is limited by two forms of negative feedback. The system's relationship to the light source is structured in such a way that elevated right motor activity in response to the environmental stimulus moves the right sensor away from the light, eliminating that stimulus. This is environmentally mediated negative feedback. It is complemented by internal negative feedback. Figure 11A shows how a spike in right sensor stimulation causes an initial strong response in motor activity. However, despite continued stimulation at an elevated level, sufficient to saturate the output of the sensor neuron, motor activity quickly falls from the initial peak. Thus, both internal and environmentally mediated negative feedback play a role in preventing this orbit behavior from being disrupted by motor-driven positive feedback.

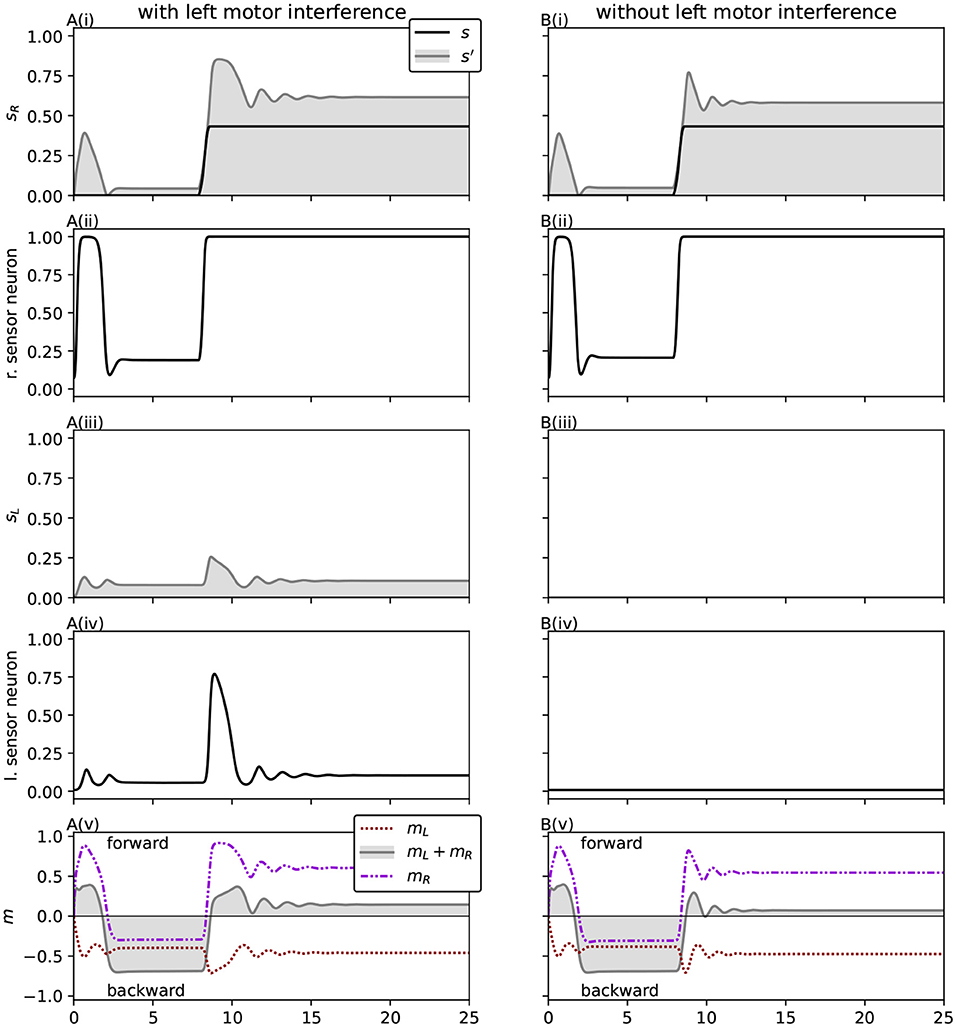

Figure 11. The magnitude and duration of the initial motor response to sensor stimuli are strengthened by the presence of left motor interference. Sensorimotor activity and sensory neuron output time series are shown for the solution evolved with squared interference (Equation 13), when the right sensor is presented with an artificial environmental stimulus, which spikes and plateaus around t = 8. (A) Shows the response under the condition of evolutionary adaptation for the robot, with motor interference present. (B) Shows the response when the left motor-sensor interference is removed. The duration and intensity of the motor response to the stimulus is diminished without the interference, indicating that the interference plays a functional role in the evolved behavior. Additionally, it can be seen that the response to sudden right sensor stimulation is accompanied by internal negative feedback—even when the stimulation persists, motor activity quickly falls from the initial peak.

As we also saw with sigmoidal interference, this solution realizes a second orbit pattern of Type 2. Positive rather than negative feedback plays a dominant role in this orbit, which comes into effect when the robot is close to the light, but the light is on its left (see Figure 10B). The system's response to left sensor stimulation does not feature the internal negative feedback that right sensor stimulation does, and it produces a response in both right and left motor activity. This in turn produces interference in both sensors. The ultimate effect is that the robot drives forwards in a counter-clockwise orbit around the light. This keeps the left sensor continually stimulated by the light, while the right sensor is continually stimulated by the right motor's activity. In this case we have an environmentally mediated, positive feedback loop, where left sensor stimulation causes the robot to turn toward that stimulus, and the resulting motor-sensor interference produces the same effect.

The way this system has been parametrized by the GA relies on the presence of motor-driven stimulation to perform phototaxis. Recall that the ancestor evolved to have zero left sensor activation in many situations, with a left sensor guided approach phase (Phase A) for a number of initial light positions. This trait remains in a way, where the left sensor is often completely free of environmental stimulation, and the left motor activity is constrained to produce lower levels of interference. Nevertheless, this interference plays an important role. Figure 12 illustrates how removing the motor-driven sensor stimulation from just the left sensor causes the approach phase to fail in the majority of cases, succeeding only when its trajectory inadvertently brings it close to the light. This is not unexpected, given that the system was optimized for the presence of motor-driven interference. However, it means that accurate control of the system's motor activity has been optimized in such a way that it now depends on perceiving the direct sensory effects of its own activity. Like the right motor, the left motor responds to sensor stimuli, though in a smaller range and with elevated negative rather than positive activation. This plays an interesting role in the system's response to right sensor stimulation (as in the Type 1 orbit shown in Figure 10A). Note how the coordinated peaks of right environmental and motor-driven sensor stimulation coincide with elevated left motor activity and corresponding motor-driven left sensor stimulation. Figure 11 shows how the presence of left motor-sensor interference amplifies and extends the initial motor activity response to right sensor stimulation. This demonstrates not only a specific way in which the system has been optimized for the presence of interference, but also how self-caused stimuli can play a directly functional role in behavior.

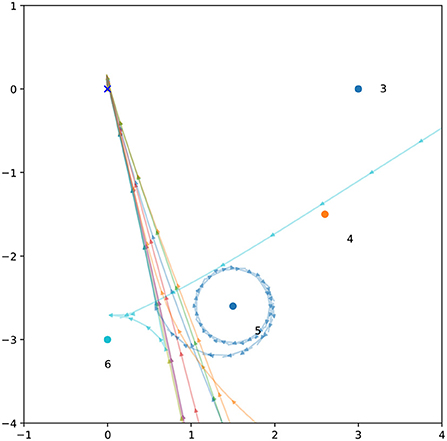

Figure 12. When motor-driven interference is removed, the behavior evolved with squared interference fails. Spatial trajectories for 12 light coordinates (Figure 3) are plotted with all motor-sensor interference removed. The approach phase now only succeeds in two out of 12 cases, where the blind approach brings the robot close to the light. The orbit phase only succeeds in one of these two cases.

To summarize, we see the ancestral strategy is well preserved in this evolved solution. This solution can be characterized as minimizing interference to an extent, as we also saw in the case of sigmoidal interference. We also see a condition where motor-driven sensor interference does not need to be minimized, namely when it can be made to coincide temporally with environmental stimulation of the same sensor. Here the onset of the environmental stimulus prompts the interfering motor activity, and a combination of internal and environmentally mediated negative feedback extinguishes both interfering activity and stimulus. In this case the motor-driven stimulation does not interfere with perception of the environmental stimulus, instead reinforcing and amplifying it. This obviates the need to distinguish or subtract the self-caused stimulus from the environmental. Separately, we also see that a stable, periodic orbit phase can be facilitated by positive feedback. Finally, we found that while left motor-sensor interference is confined to a narrow range, the system has been optimized to rely on its presence and even incorporate it functionally.

3.4. Experiment 4: Time dependent interference

With both of the preceding interference functions, if the motor activity is held constant, then the interference also takes on a constant value. Since the interference is additive and non-saturating, subtracting a constant term can remove the interference and leave only the environmental signal, with no prediction required. In general a CTRNN with a sufficiently high bias β for the input neurons can do this, though in our case the maximum value we permit the GA to assign to β is too low to fully compensate for maximal interference. Nevertheless, solutions to the previous two interference functions have shown both the utility of avoiding or minimizing motor-sensor interference and the role that holding motor activity and its corresponding interference constant can have in constructing long-term stable relationships with environmental sources of sensor stimulation. With the following function it is not possible for the interference to plateau at a constant value. It describes a sine wave with a maximum of 1 and a minimum of 0, whose frequency is determined by the motor activation (Equation 14):

Here c gives the phase of the sinusoid, capturing the previous values of m. b = 0.1 determines the base frequency of the sinusoid in the absence of any motor activity, while r = 8 is the frequency range term determining the maximum frequency the sinusoid can reach. The effect of adding 1 and dividing by 2 is simply to shift the wave from the range [−1, 1] to the range [0, 1]. This equation essentially advances through a standard sine wave at a rate determined by the motor activity. As with the previous interference functions, the interference for a given sensor is calculated from the ipsilateral motor, such that when computing the interference for the left sensor we have m = mL, and for the right sensor m = mR.

The previous interference functions were purely functions of the motor activity: if you know m at time t, you know ψ at time t. This function is instead a function of time, depending on the prior history of the system, specifically on all the previous motor activity up to the current time. More importantly for our purposes, if the input is held constant, the output continues to vary over time. We will refer to the interference generated by Equation (14) as time dependent or sinusoidal interference. A trial duration of 20 time units was used during evolution for this interference function.
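The history dependence described above can be illustrated with a minimal sketch. The exact form of Equation (14) is not reproduced here, so the update rule below is an assumption consistent with the text: a phase c advances at a rate set by the base frequency b and the range term r scaled by motor activity, and the interference is the sine of that phase shifted into [0, 1]. The use of |m|, the Euler step size `dt`, and the function name `simulate_interference` are hypothetical choices made for illustration only.

```python
import math


def simulate_interference(motor, dt=0.01, b=0.1, r=8.0):
    """Return interference values for a sequence of motor activations.

    Assumed form (NOT the paper's Equation 14 verbatim):
        dc/dt = b + r * |m|
        psi   = (sin(c) + 1) / 2
    """
    c = 0.0  # phase accumulator: depends on all earlier motor activity
    psi = []
    for m in motor:
        c += (b + r * abs(m)) * dt  # Euler step of the assumed phase rate
        psi.append((math.sin(c) + 1.0) / 2.0)  # shift [-1, 1] to [0, 1]
    return psi


steps = 2000  # 20 time units at dt = 0.01
fast = simulate_interference([0.8] * steps)  # constant, high motor activity
slow = simulate_interference([0.0] * steps)  # constant, zero motor activity

# Even with constant motor activity the interference keeps varying.
print(min(fast), max(fast))
print(min(slow), max(slow))
```

Under these assumptions, holding m fixed changes only how quickly the wave is traversed. The interference never settles to a constant, which is the property that distinguishes this function from the two earlier ones.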

Using this time dependent interference function we find that while avoiding interference, minimizing it, or holding it constant are all important ways of coping with self-caused stimuli, they are not the only ways. Timescale differences between the frequency of the motor-driven interference and the frequency of environmental stimulation of the sensor can be exploited to distinguish the two, and behavior can shape both interference and environmental stimuli to amplify these differences.

In this system the environmental signal can be detected despite the presence of interference, due to differences in timescale between the motor-driven interference and the frequency of environmental stimulation of the sensors. First let's demonstrate that the system actually can respond to environmental stimuli. Figure 13 illustrates how a spike in environmental stimulation of the left sensor has an excitatory effect on both motors, causing the system to switch from driving backwards to driving forwards. Observing the behavior of the output functions of this system's two sensor neurons, we found elevated neural biases β compared to the ancestral solution: remembering that −5 ≤ β ≤ 5, we observe 4.67 and 3.73 for the left and right sensor neurons, respectively, compared to −0.75 and 0.99 in the ancestral solution. These sensor neuron biases are calibrated such that (A) with no environmental stimulation, the neuron's output function is maximized only with the peaks of the sinusoidal interference, and (B) when combined with sufficient environmental stimulation, the troughs of the sinusoidal interference are high enough that the output function is maximized continually. This can be seen in the neural response to environmental stimulation shown in Figure 13B. This makes the environmental signal detectable despite the continuously varying interference. This solution is made possible by the large difference in timescale between the frequency of the sinusoidal interference and the frequency with which the sensor receives the environmental stimulation. In this system, the frequency of the interference can be an order of magnitude higher than the frequency of environmental stimulation, as can be seen in Figure 14. This difference in timescale means that the minimum value of the sinusoidal interference is bound to coincide multiple times with each period where there is no environmental sensor stimulation. This means that a drop in neuron firing always coincides with the absence of environmental sensor stimulation, so over time the system can reliably respond to environmental stimuli.

Figure 13. Sensorimotor activity and sensory neuron output time series are shown for the solution evolved with sinusoidal interference (Equation 14). (A) The left sensor is presented with a spike in environmental stimulation at around t = 28. (B) The neural response to the environmental stimulus is clearly visible as prolonged saturation of the left sensor neuron's output function (see Equation 5). (C) The spike of environmental sensor stimulation causes the robot to drive forward instead of backwards for a time, demonstrating that the system can respond to environmental stimuli.

Figure 14. Spatial trajectories and sensorimotor activity for the solution evolved with sinusoidal interference. Subfigures are labeled as in Figure 8. The sensor plots show how the relatively slowly changing environmental sensor stimulation raises the minima of the high frequency interference, allowing the system to respond to the environmental stimulus despite the interference. The difference in timescale that makes this possible is clearly visible here. Responsiveness to the environment is most clearly visible in A(iv), where more positive motor activity is associated with environmental stimulation of the left or right sensor. The continual oscillations in motor activity (most clearly visible in the gray net motor activity line) are driven by the high frequency interference. These oscillations produce the elliptical Type 2 orbit seen in B(i).

Although the evolution of our model was constrained in such a way that it could not implement it, there is another solution for filtering out interference whose frequency is sufficiently high, relative to the frequency of environmental sensor stimulation, that peak interference is guaranteed to coincide with all instances of environmental stimulation. The maximum bias of nodes in our model was constrained to the maximum weight of a single incoming connection (5), which is lower than the product of the environmental intensity factor with the input scaling factor applied to inputs to the sensor neurons (5 × 5 = 25). However, a sufficiently high bias (around 12) can indeed induce the sensor neurons' output function to be maximized only when environmental stimulation is high.

These two ways of adjusting the neural biases demonstrate how a large difference in timescale between environmental signal and interference means that over time it is possible to extract the environmental signal from the summation of the two. However, such differences in timescale are not guaranteed, and it is here that the embodied nature of this system comes into play. The robot's motor activity actually amplifies any pre-existing difference in timescale, as typical motor activity is constrained to higher absolute ranges than in the ancestral solution (see Figure 6).
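The two bias calibrations discussed above can be sketched with a toy saturating neuron. This is a minimal illustration, not the paper's sensor neuron (Equation 5): the logistic form, the `gain` of 20, the `threshold` values of 0.5 and 1.5, the interference frequency, and the square-pulse environment are all hypothetical values chosen so that the interference is much faster than the environmental signal.

```python
import math


def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))


def sensor_output(env, psi, threshold, gain=20.0):
    """Hypothetical saturating sensor neuron (not the paper's Equation 5)."""
    return logistic(gain * (env + psi - threshold))


def interference(t, freq=5.0):
    """Fast sinusoidal interference in [0, 1] (assumed fixed frequency)."""
    return (math.sin(2.0 * math.pi * freq * t) + 1.0) / 2.0


def environment(t):
    """Slow environmental stimulation: present only for 1 <= t < 2."""
    return 1.0 if 1.0 <= t < 2.0 else 0.0


def window_extremes(threshold, t_start, t_end, dt=0.001):
    """Minimum and maximum neuron output over a time window."""
    n = int(round((t_end - t_start) / dt))
    outputs = []
    for i in range(n):
        t = t_start + i * dt
        outputs.append(sensor_output(environment(t), interference(t), threshold))
    return min(outputs), max(outputs)


# Calibration 1 (low threshold): troughs in the output occur only when
# the environmental stimulus is absent.
low_off = window_extremes(0.5, 0.0, 1.0)
low_on = window_extremes(0.5, 1.0, 2.0)

# Calibration 2 (high threshold): peaks in the output occur only when
# the environmental stimulus is present.
high_off = window_extremes(1.5, 0.0, 1.0)
high_on = window_extremes(1.5, 1.0, 2.0)

print("low threshold  (min off, min on):", low_off[0], low_on[0])
print("high threshold (max off, max on):", high_off[1], high_on[1])
```

In both cases the distinction only becomes reliable over a window spanning several interference cycles, which is why the separation of timescales matters. A single instantaneous reading is ambiguous.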

Because this time dependent interference periodically saturates the input neurons, the system is not sensitive to spikes of environmental stimulation that are short enough to coincide entirely with a peak of motor interference, since the corresponding input neuron's output function would already be saturated. Note that spikes of this duration do reliably induce a motor response in the other systems we've examined in this paper. This represents a problem for the ancestral solution's strategy of taking advantage of sharp spikes in the right sensor. Significantly, and despite the system's elevated right motor activity, this system's Type 1 orbit is much slower than the ancestor's, with the periods of environmental stimulation of the sensor lasting for longer. This avoids the problem of the environmental stimulus being too short in duration, and further amplifies the differences in timescale. So when it comes to distinguishing environmental and self-caused stimuli, the motor activity of the system not only shapes the self-caused stimuli to facilitate this, it shapes the environmental stimuli too.

As with the unavoidable squared interference, the behavior of this system depends on the presence of its motor-driven interference. For example, with the left motor-sensor interference removed, environmental stimulation of the left sensor inhibits rather than excites the activation of both motors. Significantly, in the absence of environmental stimulation, the motor activity and corresponding interference of this system feature a long transient before settling into lower magnitude oscillations, and this transient is restarted by environmental sensor stimulation. This effect can be seen in Figure 13. These prolonged effects of momentary environmental stimulation are not seen in the systems examined in Experiments 1–3. They mean that the frequency of the motor-driven interference varies significantly both during the approach to the light and during Type 1 orbits. Altogether these qualities demonstrate that the evolved behavior of this system depends on its motor-driven interference, emphasizing that even interference as seemingly unruly as this can be incorporated into successful behavior.

To summarize, this system has the ability to respond to environmental stimuli despite continually varying sinusoidal interference. Rather than subtracting out the motor-driven interference, the behavior of the system is deeply entangled with it, displaying oscillatory motor activity driven by the interference and prolonged transient motor activity following activation of the motors in response to stimuli. Additionally, whether an environmental stimulus is excitatory or inhibitory depends on the presence or absence, respectively, of motor-driven sensor stimulation. This demonstrates that rather than suppressing self-caused stimuli, proper functioning for some systems relies on the presence of self-caused stimuli. In this system we see responsiveness to the environment facilitated by a fixed solution that is implemented at the evolutionary timescale, rather than prediction and subtraction of self-caused stimuli on the timescale of actions. Because of the difference in timescale between the frequency of the sinusoidal interference and the frequency of environmental stimulation, a CTRNN neuron can be parametrized such that the maximization of its output function only coincides with environmental sensor stimulation, or such that the minimization of its output function only coincides with the absence of such stimulation. Most significantly for the role of embodiment in coping with self-caused sensory stimuli, we see that this difference in timescale between motor-driven and environmental sensor stimulation is amplified by the system's behavior, which both elevates the frequency of motor-driven sensory stimulation and lowers the frequency of environmental sensor stimulation.

4. Discussion

One explanation of the sensory attenuation effect is that self-caused sensory stimuli are predicted internally using a copy of the relevant neural outputs, and then subtracted out of the sensory inputs (Wolpert et al., 1995; Miall and Wolpert, 1996; Roussel et al., 2013; Klaffehn et al., 2019). This may well be the case, but even in a model where this predict-and-subtract mechanism would be a perfect solution, our GA instead found other viable alternatives. We have shown that a neural network controller can be successfully adapted to handle several different forms of motor-driven sensory interference, and significantly, the adaptations we have cataloged here do not rely on predicting this interference. We now summarize these adaptations.

Avoidance: When self-caused sensory interference is only triggered by certain motor outputs, and if the task at hand can be accomplished while avoiding those outputs, it may be easiest for a control system to simply modify its behavior to avoid motor-sensor interference. We saw this emerge when our model was evolved with sigmoidal interference. It is not clear whether we should expect this avoidance approach to scale well to a more numerous and complex arrangement of sensors and motors, though it seems that the problem of prediction would also become more complex in such circumstances. In the special case where there are multiple independent sensors and motors, where each motor interferes with only one sensor, an alternative solution is possible. If the task can be accomplished using only one sensor, then only one source of interference needs to be regulated. Doing so permits the other motors to operate freely over a wider range of activity. We describe this strategy as “keeping one eye on the prize”. This is arguably just avoiding the interference, with extra steps. We again saw this strategy used in the case of sigmoidal interference.

Where interference is unavoidable but its magnitude depends on motor activity, motor activity can be constrained to ranges that limit the quantity of interference, reducing its magnitude relative to environmental stimuli. This strategy emerged in the case of the unavoidable squared interference.

Minimization and avoidance could be seen as special cases of causing the interference to plateau at a constant value. If interference is additive and non-saturating, as it is in our model, it can be eliminated by simply subtracting a constant term from the input. In general this is trivial for a CTRNN. However, even without subtracting the interference out directly, constant interference just shifts an environmental stimulus's contribution to the sensor to a higher range, which does not actually change the information available when the interference is non-saturating.
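The point about constant, additive interference can be made concrete with a small sketch. The numbers below are hypothetical, and the clipped sensor is included only as a contrast case. It is not part of the model described in this paper, where interference is non-saturating.

```python
def additive_sensor(env, interference):
    """Non-saturating sensor: environmental stimulus plus interference."""
    return env + interference


def saturating_sensor(env, interference, ceiling=1.0):
    """Contrast case (hypothetical): the summed input is clipped at a ceiling."""
    return min(ceiling, env + interference)


env_signal = [0.0, 0.2, 0.7, 0.9]  # hypothetical environmental stimulation
plateau = 0.35                      # hypothetical constant interference

additive = [additive_sensor(e, plateau) for e in env_signal]
recovered = [reading - plateau for reading in additive]
clipped = [saturating_sensor(e, plateau) for e in env_signal]

# Subtracting the constant recovers the environmental signal.
print(recovered)
# Every distinct stimulus still yields a distinct additive reading...
print(len(set(additive)))
# ...whereas clipping merges the two strongest stimuli into one reading.
print(len(set(clipped)))
```

Because the additive readings are just the environmental values shifted by a fixed offset, a constant bias term is enough to undo the shift. Once the sensor saturates, that is no longer true, since distinct stimuli can collapse onto the same reading.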