REVIEW article

Front. Comput. Sci., 02 November 2021

Sec. Computer Vision

Volume 3 - 2021 | https://doi.org/10.3389/fcomp.2021.747195

This article is part of the Research Topic 2021 Editor's Pick: Computer Science

This paper discusses the question of where the patterns that are recognized in the world arise from. Are they elements of the outside world, or do they originate from the concepts that live in the mind of the observer? It is argued that they are created during observation, due to the knowledge on which the observation ability is based. For an experienced observer this may result in a direct recognition of an object or phenomenon without any reasoning. Afterwards, using conscious effort, he may be able to supply features or arguments that he might have used for his recognition. The discussion is phrased in terms of the philosophical debate between monism, in which the observer is an element of the observed world, and dualism, in which these two are fully separated. Direct recognition can be understood from a monistic point of view. After the definition of features and the formulation of a reasoning, dualism may arise. An artificial pattern recognition system based on these specifications thereby creates a clear dualistic situation. It fully separates the two worlds by physical sensors and mechanical reasoning. This dualistic position can be solved by a responsible integration of artificially intelligent systems in human controlled applications. A set of simple experiments based on the classification of histopathological slides is presented to illustrate the discussion.

Modern science is based on bridging the two worlds defined by Descartes (Russell, 1946): the res extensa, the external world perceived by our senses, and the res cogitans, our internal consciousness that grounds our thoughts, our being and our actions. We observe the world and learn the laws of nature, the regularities of events in the sky vault, the weather, the tides, the species, the habits of people, their language, etcetera. Next we use this knowledge to survive on Earth, to interact with our friends, to grow internally. It gives life stability and perspective.

The field of automatic pattern recognition explicitly studies these processes by simulation and by designing tools to facilitate them. Several closely related disciplines are thereby needed, like sensor technology, video and audio processing, statistics, machine learning, artificial intelligence, robotics and mathematics. Always and everywhere the same issues return: what are the patterns, how do we represent them, how can we use them in applications? Underneath are the philosophical questions to be answered for unifying the duality created by Descartes: How to extract knowledge from observations? How to use knowledge in actions?

In this paper it is argued that, for a better understanding of the difficulties and results of pattern recognition, it is helpful to realize where the patterns that we want to recognize originate. Are they phenomena in the external world, or are they born from the concepts that exist in our mind, and do we only see them outside due to a one-sided view? To phrase it more sharply: are the patterns created by the laws of nature, or by us, in an attempt to model the outside world in our mind? We will argue for the latter and discuss the consequences for automatic pattern recognition and machine learning.

Our discussion will be based on an introspective point of view: What is experienced internally in our attempts to understand the world? What are the patterns observed? How does knowledge grow from the concepts behind these? To answer such questions it is not of any help to include what we know about the human body, the traditional senses, the nerves, the brain and human perception. Such knowledge is itself the result of the study of patterns observed in some traditional way. In addition we will also not discuss higher level applications like the construction of human-like robots. We will entirely focus on the patterns that are used to recognize objects and events in the world and that help us to constitute knowledge of this world. The key question is in the title of the paper: what is the origin of these patterns?

It is remarkable that we are able to recognize an object as a chair even if we have never seen the particular object before, even if it has a design that is entirely different from all chairs that we have come across. This ability is very familiar, but it may also seem to be a miracle. The oak can be recognized from its leaves, the father in the habits of his son, the composer by a symphony, the author by an essay she has written. We thereby consciously make observations on furniture, leaves, habits, pieces of art or writing styles. Occasionally unconsciously such observations are organized in the mind into groups, into classes. When we encounter a new member of a class, we recognize it and can assign the class name while classifying the object or the observation.

This ability is named generalization. The name of the class is not just the name of a set of observed objects. In fact, it refers to something deeper. It may refer to a possibly infinite set of seen or unseen objects. The border of this set is not well defined. It might be tested experimentally, but results will not only depend on the object itself, but also on the context. We might recognize the father in his son during a family reunion, or in his house, but not directly during a meeting in a different city.

It is also difficult, or entirely infeasible, to generate all possible members of a class. That would imply, e.g., imagining all possible leaves that would be recognized as the leaf of an oak. So we are dealing with the concept of a class that is only vaguely defined, but in daily life very useful. For this the word pattern is used. It is used for what a set of objects has exclusively in common for a particular class. In the observations we recognize the pattern.

In daily language many words refer to patterns, specifically the nouns and the verbs. They are used in both ways, pointing to a concrete object, event, activity, but also referring to a general concept. For instance, compare the sentences “Where did you park your car?” and “Nowadays, it is difficult to park a car”. The first deals with a realized activity and an object, the second with their generalization. As described above, during our life we learn from observations and teachers to generalize from the reality outside to the concepts in our mind. This knowledge can be used to understand the world. It may in addition be applied the other way around, by generating examples to illustrate the concepts we are dealing with.

The field of pattern recognition aims to study how to bridge the gap between the world of objects and the world of concepts. As stated above, we do this naturally, but it is initially a miracle. In psychology, physiology and biology various aspects are studied, starting from the human mind and the human body. In automatic pattern recognition the first columns of the metaphoric bridge are erected at the other bank, the world of objects. Here the real world objects and events are first analyzed and described by their structure and features, e.g. by computer vision. This is followed by a search for patterns or an assignment to predefined classes using the tools of machine learning. The exact location of the bridge, the collection of objects where it starts and especially the concepts where it lands, are guided by what already lives in the mind. This is prior knowledge. It is already known before the observations are made.

Artificial recognition systems obviously operate entirely in the outside world. They are instrumented with sensors and generate conclusions on patterns and classes based on computer algorithms. The sensors and the algorithms are man-made, and the conclusions may be reported to someone who is interested in them, e.g. a doctor or a security agent. This raises the following question: Is it possible that the patterns are entirely in the perceived objects and do we learn them from these, or is it needed that they already live in some way in the mind? What is the origin of a pattern?

The issue of the origin of patterns is of significant importance for the design of automatic pattern recognition systems. If it is located in the real world of observations, more objects should be observed to obtain better systems. It cannot even be stated that they should be better observed, as better implies that it is defined what is searched. This will contradict the idea that the pattern is still unknown. On the other hand, if the origin is located in the mind, then all effort should be spent in transforming what is known about this pattern into how and what should be measured and how to learn from these measurements. For instance, it might be known that there are two types of rabbits, e.g. because the meat tastes differently, but what are the features the hunter should rely on? In short: gaining new knowledge from the world is different from adapting existing knowledge to the world. If we want to realize the first, but rely simultaneously on the second, a bias might be caused in the conclusions.

In the following sections it is first discussed, from a philosophical point of view, how human recognition deals with patterns. After that the analysis shifts to mechanical learning. In both cases the connection between observations and knowledge will be sketched. It is already emphasized here that in this shift the notions of observation and knowledge will shift as well. This has its consequence for our target, the question of the origin of patterns.

In this section we deal with knowledge derived from human observations. These are facts derived by senses. There is much to be said about what exactly an observation is and what a fact is. We will postpone this to the end of this section. For the time being the word observation is used. Readers should understand this as a given fact. Examples are “it is raining outside”, “the chair is broken”, “I am hungry”.

Knowledge of the world we live in is significant to survive, to grow, to contribute. It is thereby important to connect this knowledge to the environment. Knowledge should be applied. By doing this it may grow as well. The duality between what lives inside us and what exists outside has intrigued philosophers and scientists for thousands of years. They want to understand from a fundamental point of view how these two poles interact with each other. This may help to develop useful applications.

The fields of pattern recognition and computer vision participate in this process in a technological fashion. The terminologies used in the various fields, philosophy, science and technology, are slightly different and change over the ages as well as between scientists. Various studies are written about the historic development. In the next section a short summary is presented, mainly based on the reviews by Russell (1946) and Steiner (1974). It is focussed on what is needed for our discussion on the recognition of patterns.

Our primary perception of the world outside of us deals with specific objects and events. Pattern recognition aims to find what is general in sets of them. In philosophical terms objects are particulars, e.g., the chair I see standing in front of the table. They are contrasted with the universals. Universals refer to what is behind the particulars, to what is general for a family of them. Patterns are thereby universals. The general concept of a chair belongs to the universals. Such a concept can manifest itself in many aspects of a particular. Chairs can appear anywhere, but we may also see the universal concept of the chair where there is no particular chair, like in a tree stump. Universals are not localized in time or space. They may show up in a plurality of particulars.

Particulars are elements of the external world. They cannot be described fully in words or numbers. Only certain features, measurements or qualities of an object can be explicitly reported. Senses and sensors always grasp just partially its totality. In some way this totality is again a universal, see the following quote from Russell.

“Seeing that nearly all the words to be found in the dictionary stand for universals, it is strange that hardly anybody except students of philosophy ever realizes that there are such entities as universals”.

However, to describe a universal fully is also difficult. When questioned about this, we can report aspects of what has been understood. A complete description is impossible. Children can indicate that they understand the concept of fractions, but their teacher can only check the way this knowledge is used. A woman can be recognized by her face, voice and posture, even if she is dressed differently than usual, if she has started wearing glasses or if she has significantly changed her hairstyle. There is no way to describe fully what is used to recognize someone.

Neither particulars, nor universals can be exactly described or defined. Both worlds, the external world as well as the internal world are richer than what can be expressed by numbers or words.

Whether the universals really exist has been a dispute over the ages. For Plato (428–348 BC) they are more real than the particulars, as explained in his allegory of the cave. The objects we see outside us are in the allegory just shadows on a wall of the cave in which we live. They are generated by the light and the real creatures outside. These exist, but our normal eyes don’t see them. For a clairvoyant like Plato, however, they are real. An argument in favor of their existence is that knowledge or understanding can be shared in the same way as the observation of a remote object like a small chapel in the mountains. We may use a vague description or report some features to a friend who might have seen it as well. From his reaction it may be concluded that we are talking about the same thing. Objects and understanding, particulars and universals are very similar, except that the former are observed by senses and the latter by thoughts.

For Plato the universals are the ideas that create the particulars. For his student Aristotle (384–322 BC), the ideas arise behind the particulars, but their creative powers are not confirmed by him. In the Middle Ages the understanding of the universals became gradually weaker. The nominalistic point of view was generally more accepted. Nominalists like William of Ockham and Francis Bacon just accept the existence of general or abstract terms and predicates. For them only the particulars are real. It is interesting that in the same period in the Indian Hindu philosophy, the Navya-Nyaya, a theory of real perceivable universals was studied. While for Plato universals live in heaven, in the Navya-Nyaya universals may directly be perceived in, for instance, a herd of camels (Chadha, 2014).

The last one who, like Aristotle, still advocated the reality of the universals was Thomas Aquinas (1225–1274). He described the way to reach them from a set of observed objects in terms of abstraction. We follow Bobik and Sayre (1963) in their discussion of three sorts of abstraction:

• Simple abstraction: This is based on a limited set of simple features, neglecting other properties. Printed characters are fully described by color, shape and size. Recognition might be based on just shape features without considering color and size.

• Complex abstraction: This is based on a complex grouping of features of which no one alone would be an adequate basis for recognition. As an example apple recognition is mentioned.

• Accompanying abstraction: This is not based on senses, but on verbally supplied information about object features.

It should be realized, of course, that Aquinas is strictly discussing human observations and reasoning. The idea of sensory based artificial measurements, as well as mechanical, computerized reasoning did not yet exist. Thereby shape is mentioned as a single feature, in fact a quality, but nowadays it is not clear at all how shape can be expressed quantitatively by a few numbers.

Aquinas dealt with this procedure differently (Steiner, 1972). For him two types of truths can exist next to each other. One is given by the dogmas formulated by the church or by teachers. The other is derived from observations. In a debate conflicts might be solved, but it is also perfectly possible that opposite formulations of the truth co-exist. In the first type, the dogmas, we recognize a rudiment of the reality of the ideas observed by Plato. In the debate on the observations the logical rules as originally formulated by Aristotle are used. In more recent times similar debates are sketched by Kuhn (1962) as paradigm shifts: only after some time are conflicts between observations and theory resolved by changing the theory. The student in machine learning may recognize the situation of an erroneously classified object: experts might assign different labels than artificially designed generalization algorithms. To some extent such conflicts are acceptable.

To conclude this section: the growing awareness of the distinction between universals and particulars resulted in a growing distinction between what is known and what is observed.

For the interaction between knowledge and observations two logical procedures can be distinguished, deduction and induction. Deduction operates top-down, induction goes bottom-up. In deduction the knowledge is transformed into rules referring to properties of the observations. This transformation needs to be defined by an expert who is able to make his knowledge explicit. The rules have to be verified for a set of observations (a dog is an animal with four legs, so an animal with two legs cannot be a dog). This may result in two types of modifications: either adaptation of the rules (e.g. when we see a dog with three legs due to some accident), or adaptation of the way the observations are represented (take barking into account as well).
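The dog example can be written out as a minimal sketch. All names here (`Animal`, `rule_four_legs`, `rule_adapted`, `rule_with_barking`) are hypothetical and only serve to illustrate the two types of modification; they are not taken from any system discussed in this paper.

```python
from dataclasses import dataclass


@dataclass
class Animal:
    """A hypothetical observation, reduced to a few reported properties."""
    legs: int
    barks: bool = False  # only meaningful once the representation is extended


def rule_four_legs(animal: Animal) -> bool:
    """Initial rule made explicit by the expert: a dog has four legs."""
    return animal.legs == 4


def rule_adapted(animal: Animal) -> bool:
    """First modification: adapt the rule to tolerate a leg lost in an accident."""
    return animal.legs in (3, 4)


def rule_with_barking(animal: Animal) -> bool:
    """Second modification: extend the representation and use barking as well."""
    return animal.legs in (3, 4) and animal.barks


bird = Animal(legs=2)
injured_dog = Animal(legs=3, barks=True)
cat = Animal(legs=4)

print(rule_four_legs(bird))         # False: two legs, so not a dog
print(rule_four_legs(injured_dog))  # False: the rule conflicts with the observation
print(rule_adapted(injured_dog))    # True: the adapted rule resolves the conflict
print(rule_adapted(cat))            # True: leg count alone cannot exclude a cat
print(rule_with_barking(cat))       # False: the extended representation can
```

In each step the conclusion is only as good as the premises: the sketch never learns what a dog is, it merely applies what the expert has already made explicit.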

Results of a deductive analysis are true, if and only if the premises are valid. This implies that the given knowledge as well as the rules that are derived from it should be true. Moreover, the observations as well as their representation should be reliable and fit into these rules. Knowledge about the particulars (the observations) as well as the universals (the premises and the rules) may grow by deduction. At the end, however, the procedure entirely depends on existing human knowledge (the premises) and reliable human interpretation of the observations. If one refuses to change the former in case of a conflict with the latter the situation of the co-existence of two truths arises.

Deduction is a way to apply existing knowledge. By following the rules we learn something on the specific observation, by updating the rules we obtain general knowledge from the observation. At this point we touch the key question of this paper: how does the process initiate? How does it start if no clairvoyant like Plato is available, or when we have lost trust in the religious dogmas? The theory of the tabula rasa tries to give an answer. We shortly summarize it.

The tabula rasa theory is based on the assumption that we are born without prior knowledge. We have no built-in mental content, and therefore all knowledge comes from experience or perception. Epistemological proponents of tabula rasa disagree with the doctrine of innatism, which holds that the mind is born already in possession of certain knowledge. Proponents of this theory also favor the “nurture” side of the nature-versus-nurture debate when it comes to aspects of one’s personality, social and emotional behavior, knowledge, and sapience. Consequently, what we know is initially based on what we learnt from parents, teachers, and others. When we have knowledge, observations may be used to enlarge it by some deduction procedure. But how did the people before us conquer their knowledge? There is clearly a chicken-and-egg problem.

There are several reasons why deduction is insufficient for explaining the knowledge we have of the world. There are always new, surprising observations that do not fit in what is known. Moreover, deduction expects a type of mature logical system which is often not, or just partially available. Thereby the second procedure, induction, is needed that operates bottom-up, as we are apparently able to extract knowledge from observations alone.

There is, however, also a severe problem with induction. If we rely on it too strongly, it might result in a common description of a set of observations, but one that does not fit anymore in the total system of available knowledge. It results in just a word, an empty, isolated abstraction. This is the problem of the nominalists: at the end there are just words.

How to formulate a description of what is common between observations? A clear answer was given by William of Ockham (1287–1347): take the simplest one, also known as Ockham’s razor (Russell, 1946). It removes all solutions that are overcomplex. This is related to what is known as the principle of the minimum description length or the maximum margin classifier (Theodoridis and Koutroumbas, 2009).

Francis Bacon (1561–1626), sometimes characterized as the father of empiricism, advocated strongly the inductive procedure. The first step is to remove all “idols” (idola, Latin), i.e. all types of prejudices, folk beliefs, falsehoods, etcetera. Collect observations and find what is unique for a possible pattern. It implies that all features should be removed that are not common for all objects, as well as all features that are available in different patterns (features are here qualitative properties). This is a very severe demand and assumes that there is a single property that can be uniquely observed in the pattern under investigation. Patterns are thereby not concepts or generalizations. They can be directly observed.
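Read literally, this elimination procedure amounts to two set operations on qualitative features. The following is a minimal sketch under that reading; the function name `baconian_features` and the example features are hypothetical illustrations, not part of Bacon's text or of the experiments in this paper.

```python
def baconian_features(pattern_objects, other_objects):
    """Keep only features common to all objects of the pattern under
    investigation and absent from every object of any other pattern.

    Each object is given as a set of qualitative features (strings).
    """
    if not pattern_objects:
        return set()
    # Remove features that are not common to all objects of the pattern.
    common = set.intersection(*(set(obj) for obj in pattern_objects))
    # Remove features that are also available in different patterns.
    seen_elsewhere = set().union(*(set(obj) for obj in other_objects))
    return common - seen_elsewhere


oaks = [
    {"lobed leaf", "acorns", "green"},
    {"lobed leaf", "acorns", "tall"},
]
other_trees = [
    {"green", "needles"},
    {"tall", "lobed leaf"},
]

print(baconian_features(oaks, other_trees))  # {'acorns'}
```

The severity of the demand shows up directly: a single atypical object, or a single overlapping feature in another pattern, shrinks the result, and it may easily become empty.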

For some philosophers Bacon went (much) too far by his strict empiricism as the source of knowledge. In contrast, Descartes (1596–1650) emphasized the reasoning. By logical combinations of given concepts the human thinking can possibly arrive at new insights and thus increase knowledge. However, does knowledge really grow if we just reorganize it and not feed it with new observations? Also the opposite question is yet unsolved: can we build knowledge from observations alone? We will later give an answer to the latter one in the context of machine learning.

One of the first who tried to reconcile empiricism and rationalism was Spinoza (1632–1677). In his analysis of the mind-body problem he denies dualism. Mind and body are different attributes, but this difference is not fundamental. Man acts in the world based on his thoughts and his moral insights as well as his observations. Spinoza is thereby a so-called neutral monist.

Later Kant (1724–1804), however, effectively denied that the gap between the two worlds could be bridged. The essences of the nature of things in the world outside are essentially unknowable. We just see the exterior. This is still the main view in the natural sciences. It is the consequence of being objective and acting in a reproducible way. We try to develop better and better procedures and technologies to observe the objects, but their essence will always remain unknown according to Kant. The thing-in-itself (“das Ding an sich”) cannot be reached.

Goethe (1749–1832) was in direct opposition to Kant. Although he is mainly known as a poet, for himself his scientific studies were at least equally important. Goethe opposed Kant, but he opposed Bacon even more strongly. He agreed that scientific developments demanded other approaches than studied by Plato, Aristotle and Aquinas, but judged what Bacon proposed as totally inadequate. He himself followed a fully phenomenological procedure. The observations of the outside world made by the senses are thereby equally important as the inner experiences. At the end they may generalize into “archetypes”, possibly similar to the patterns discussed in this paper.

Following Goethe, the observations are richer than just the outside and include the impressions of the observer. His scientific studies are collected and commented by Steiner (1861–1925) who went a step further by explaining this procedure in a neutral monistic way (Steiner, 2003). The two worlds, one experienced at the outside and one experienced in the inside of the observer, are essentially the same. They are just observations by different senses (senses are interpreted by Steiner much more widely than just physiological organs). So when we take a walk in the fields and we see a tree and the word “tree” or “oak” pops up in the mind before we even think about it, it is an observation of the same object as perceived by the eyes. For Steiner there is no gap to be bridged. We directly observe simultaneously both, the particular as well as the universal. This should be distinguished from a second step in which we reason about the observation, e.g. “that must be the oak in the field of Johnson” (from universal to particular) or “we are close to the mill so it must be an oak” (from particular to universal).

Steiner herewith closed the gap that arose in the two approaches first formulated by Plato and Aristotle. The first described an existing and directly accessible world of ideas, the latter how these can be approached by observation in the natural world around us. These two sides drifted apart in the Middle Ages resulting in dogmatism and nominalism and later in rationalism (Descartes) and empiricism (Bacon). Spinoza and Goethe tried to unify the two and finally Steiner made clear that the human observer has a direct access to both. However, modern scientific research and technological development necessarily still distinguish measurements and reasoning in enlarging knowledge and understanding. The next section will discuss their relation with the philosophical developments sketched above.

We will now make a next step in answering the question of the origin of the patterns we recognize in the world, either as a human observer or through the pattern recognition machines we build or use. These activities are based on observations and knowledge. In the previous section we used these concepts without an extensive description. Now it is the time to add some discussion.

We just used the word observation for what is received through the senses about events, objects, or object properties in the outside world. They are used to gain knowledge by procedures like deduction, induction or other types of logical reasoning. Such reasonings are based on facts. A fact is an objective truth that may be shared by others when general, common knowledge should be obtained. What is a fact that is indisputably true? Do such facts really exist?

Consider a discussion with a friend about a possible walk. I object as it rains. But is this really true? How did I know? Maybe I saw water drops through the window, but they might be caused by the neighbor watering his garden. Maybe I heard ticks on the panes, but perhaps just the wind blew the branches of the ivy on the wall outside against the window. The only thing of which I am absolutely certain is that I think that it is raining. At the end, the one and only unquestionable truth is what my thoughts observe in my mind. What my mind observes in itself can by nothing and nobody be denied. It may not be certain whether it is really raining. If my friend goes outside to check and also reports that he thinks that it rains, then we can only agree that we observe the same fact by assuming that we agree about the meaning of the word “rain”.

Not every fact that we observe has directly a name, a word connected to it that can be used to label the fact. We may find an item in a trash bin, realize what it is, know that it is a part of a tool, without knowing what it is called. We may recognize an old friend in a shop, but his name doesn’t pop up in the mind.

Recognition is a fact. We observe it internally. A next step might be to describe it. In order to make clear to our partner who was the guy we met, we may try to describe him physically, or recall where he worked, lived, or what we did together. At the end we may also find his name. This name is just one of the properties to make clear who it was that we met.

For humans, recognition comes first. Arguments, properties, features are second. Naming is just one of them. That we recognize is not the result of an effort, of a laborious process. It is an internal observation, not just comparable with, but even the essence of what the senses tell us of the outside world. Initially they don’t report shapes, colors, frequencies, etcetera. They directly tell us that we recognize an object or an event. Only if we want to analyze, verify or describe it we go to the details of what we perceived. Gradually we become aware of properties and names. Consciousness demands effort.

Here is a quotation from the philosopher C. S. Peirce (1839–1914), who phrased such instant recognition in the following way: “… it is only when the cognition has become worked up into a proposition, or a judgement of a fact, that I can exercise any direct control over the process; and it is idle to discuss the “legitimacy” of that which cannot be controlled. Observations of facts have, therefore, to be accepted as they occur” (Peirce and Buchler, 2012).

The ability of understanding is very similar to that of recognition. We can understand something directly, e.g. that the angles of a triangle sum to 180° is understood immediately by a single look at a proper picture (an auxiliary line through the top of a triangle parallel to its base). Explanation to somebody else may need some words. A proof based on axioms takes even more care. Like recognition, understanding is becoming aware of a fact: an observation of the mind in itself. Nobody can question this observation. Whether it is correct is a different issue. At the end, understanding and recognition are two sides of the same ability: cognition.

Facts correspond to the objects and events we encounter in the here and now. They are the manifestations of historic patterns in the present. Understanding corresponds to the laws that rule the world under study. They are the patterns that shape the future. Facts and understanding are given instantly. However, both have first to be transformed into observations and knowledge before they can be applied. This process demands consciousness. This transformation process integrates what directly pops up in the mind with what already lives there. This is what we experience when we need to answer questions like: “tell me what you see”, or “explain to me what you understand”. This is sometimes a challenge. We might hesitate and struggle to find the right words, occasionally supported with some gestures or by drawing a picture.

People understand things in very different ways. Teachers reading answers on examination questions like: “explain your answer to the previous question” will be familiar with that. Different students may clarify their understanding in very different ways.

It takes effort to make a perceived fact or a gained understanding explicit. They have to be generalized such that they fit into already available knowledge. This might be characterized as upgrading the prior knowledge by experiences to posterior knowledge. In some contexts it is similar to what also may be called “generalization”.

After integration of the perceived facts the resulting observations are embedded in the existing personal knowledge. It is thereby enriched by these new observations. Effectively this is a learning process. For instance, when a biologist encounters a new species, the first exemplar is just an unexpected exception to his knowledge. Every next observation enriches the set of similar exceptions to what starts to become a new class in the collection of species. During this generalization process he gradually builds a set of specific species properties from what is similar between the new observations and different from other species. This generalization process takes attention, focus. Consciousness is needed to merge what is new with what is already available.

Building of knowledge as described is strengthened through attempts to share it with others, for example through an oral explanation or a written report. The result is thereby sharper and can be applied better. However, relevant aspects might be lost. Finding words to express thoughts, or to clarify a choice with arguments will project still vague personal ideas to concepts that are shared between people. This is based on the assumption that they mean the same for everyone. Sometimes this can be tested, but it is ultimately based on trust.

A similar assumption is needed for mathematical proofs. These are based on axioms whose validity is uncertain in an application. In this case, there must be trust between scientists and the field of application rather than between people themselves.

Finally the obtained knowledge has to be transformed such that it can be used. Thereby it has to connect potentially new observations with possible judgements or actions.

In order to share knowledge or to make it applicable it should be phrased in words. It will thereby be connected with existing concepts. In this process an original idea might be partially lost in an attempt to make the point very clear. We lose and we gain when we bring renewed knowledge down to Earth. This holds for new insights as well as for new observations that we try to integrate in an existing system of concepts. The clear correspondence between newborn ideas and new perceptions is what Steiner used when he formulated his version of neutral monism: there is no essential difference between the Platonic ideas and the Aristotelian perception. They are perceptions of the same world by different senses.

Learning integrates new observations with existing knowledge. There is no difference in how this is done for particulars and for universals. We may learn how mountains look in general by studying a series of pictures collected from entirely different places in the Alps. We may also learn how a specific mountain looks, e.g. the Matterhorn, by studying a series of pictures of the same mountain taken from different positions at different times of the year. These are very similar processes. The distinction between particulars and universals might be of epistemological interest, but for recognition it makes no difference. If we recognize a concrete object, we are dealing with a physical item. If we recognize a common characteristic inside a set of objects, we are dealing with an abstract entity. Different senses might be used, but for the human phenomenon of recognition it makes no difference.

The outlined process of recognition and knowledge integration will now be illustrated by means of a study by Jaarsma et al. (2014), Jaarsma et al. (2016) on diagnosing histopathological slides. In an experiment, they followed the recognition and decision making process of three groups of examiners with different levels of expertise in histopathology: 13 novices (second year medical students with no real experience in histopathology), 12 intermediates (with an average of 3 years training) and 13 clinical pathologists (with 21 years of experience including 5 years training). Two experiments were performed with digitized microscopical images. None of the participants was familiar with such a system prior to the experiments.

In the first experiment (Jaarsma et al., 2014), they were asked to diagnose 10 microscopic images of colon tissue within 2 s. Eye movements and diagnoses (five categories, one normal, two pathological, two distracters) were stored. Some examples of similar images (see footnote 2) are shown in Figures 1–3. Afterwards, oral explanations of the diagnoses were recorded and analyzed using a categorization system developed from the literature. The authors conclude that although the experts and intermediates showed equal levels of diagnostic accuracy (approximately 86%), their visual and cognitive processing differed. The experts took a “recognize/detect-then-search” approach, while the intermediates used a “search-then-detect” procedure. This is based, among other things, on the longer scan path length and the larger fixation dispersion (spread of eye fixations on the image in pixels). The novices scored an average accuracy of 39%. Interestingly, the scores of the experts and the intermediates were almost equal, but the viewing styles of the two are essentially different. Those of the intermediates are closer to the styles of the novices.

In our terms, the novices are entering a new world for which their medical knowledge is not immediately applicable for recognition. They just have a theoretical background and no hands-on experience. Applicable knowledge has to be shaped. The intermediates are on their way to build a new skill. Much reasoning is still needed to reach a conclusion. Their diagnoses are good, but they still have to find the right words to motivate the decisions. This is further analyzed in the second paper by Jaarsma et al. (2016). Finally, the experts reach a level where there is an instant recognition after a first look at a slide. A confirmation is sought in the remaining time (of the only 2 s), because an oral report must then be submitted. It may be conjectured that after many years of expertise, the experts have developed a diagnostic sense. This is confirmed in a private communication with one of the authors, a clinical pathologist, who indicated that for him a first look at the slide can occasionally be sufficient for a correct diagnosis.

This example clearly shows the three ways of building knowledge that can be distinguished: authority, observation and reasoning. Novices used the authority of textbooks and courses. The intermediates have been using this for several years during real observations, internalizing their experiences and creating their own knowledge model. Finally, the experts must substantiate their diagnoses and be able to reason about what they recognize. Now knowledge grows further by sharing it with others.

At the end of this process, experts may develop a new sense: instant recognition of the pathological category. We would like to emphasize that for this phenomenon, which we all experience while recognizing objects and people around us, conscious reasoning does not play a role. Only when observations are questioned do we start looking for arguments.

What is the pattern that the experts recognize? They learned the relevant categories from the textbooks and from their teachers. Later they gradually started to recognize real world examples by using this knowledge during the interpretation of what they perceived by their senses. The patterns they recognized are concepts in the mind. They are able to do that as they developed a sense that points to these concepts when the corresponding fact is presented.

Patterns are just in the eye of the beholder and not in the outside world. They emerge after the observer has studied the objects. Consider a teacher observing the patterns in the class of 30 children under his supervision. There are boys and girls, they have different temperaments, there are different ways they think, some use words, others images. Some are good at arithmetic, some at languages, some at both. Some are shy, others are open. Some are quiet, others are very lively. Some are small, others are large. There are tens of ways the children can be split in clearly distinguishable groups. Thereby thousands of subgroups can be distinguished. At the end, all children are unique. There are no patterns in the children, unless we define them ourselves.

In the previous section we argued that the patterns in the world exist just because we define them. For some readers this might be obvious and they don’t need our arguments. This is certainly not the first time this has been discussed. In 1965 K. M. Sayre already made a similar point when he explained the difference between recognition and classification (Sayre, 1965). The author of this paper read it, understood it and forgot it. This happened because the daily practice of pattern recognition research deals with given problems in a restrictive representation in which predefined classes have a minor to almost no overlap. In this case class patterns exist in the data and the challenge is to find the machine learning algorithm that gives the best performance. It is the purpose of this section and the next to clarify why this state of affairs is deceptive and to discuss the consequences.

We have described how the human ability to recognize a specific object category gradually grows through knowledge found in the literature or explained by teachers while practicing real-life examples. Machine recognition mimics this by using suitable sensors, algorithms to measure the relevant quantities (features) in the data followed by applying statistics on a training set with examples. The latter is necessary because experts often cannot indicate exactly what to measure in the sensor outputs. Thereby it is needed to use statistics, the science of the ignorant. The less is known on what to measure, the more data is needed to compensate for this lack of (explicit) knowledge.

Common scientific knowledge depends on observations made by different people, from different positions, at different times, and reported by words. It is based on the trust that these observations are comparable or just insignificantly different and that the reported words mean the same to everybody. These words might be sharply defined, but at the end definitions are again just words. Luckily there exist words for which we are sure they mean the same for all, these are the numbers. What is meant by a specific number is indisputably the same to all people that are able to count. This is illustrated by the following example.

The word “storm” does not necessarily mean the same for everyone. However, if the wind is measured by an anemometer (four rotating half spheres) with given specifications (all expressed in numbers) then it may be reported that it rotates five turns per second. We are very sure that everybody understands what this means. However, the observations have now shifted from human senses to artificial sensors. Instead of experiencing the wind ourselves and concluding that it storms, which might be disputed by others, we now read the display of the anemometer and everybody will agree about the number.

If we place an artificial sensor between us and the object and report the numbers related to the measurement, they represent a well defined objective observation. If position and time make a difference, additional measurements may be performed from other locations and at other moments. Words to describe the object or phenomenon are not needed in such circumstances. The human observer may not even see it or sense it otherwise. His past, his experience as well as his knowledge are not participating in the observation anymore.

What does it mean that human instant recognition is lost for the philosophical debate we described in Section 3? The unity of the facts observed from the external world and the internal understanding, the neutral monism, is gone now. Somebody reading a display with numbers will not have an instant internal experience related to the object that is observed. First some reasoning is needed to integrate the measurement numbers with his knowledge. He appears to find himself in a dualistic position. There is a physical world outside that is separated from the understanding in the mind. Consequently, the thing-in-itself may remain unknown.

By the use of sensors to observe the world, objectivity is gained, but instant recognition is lost. Measurements have to be fit into an explicit model of the world before the new observations will contribute to this knowledge. Reasoning and numerical optimization procedures might be needed. The consequence is that it becomes very difficult to state anything that is not in the knowledge model about the object that is recognized. Compare this to the common experience that some friend can be recognized instantly, but that after questioning arguments have to be searched. Similarly, pathologists that recognize the tissue type instantly, need time to phrase the proper arguments for their report.

What does it mean that human instant recognition is lost for the field of artificial pattern recognition? It uses sensors and explicit measurement procedures to extract numbers that characterize the objects under observation. These are not given to an expert who aims to enlarge his understanding, but supplied to a well defined machine learning algorithm that in a first step creates or updates a knowledge model and in a final application produces a classification result, the recognized class. In research environments this may be studied by an expert that develops (or assists) the algorithm, but in final applications it may be offered to a non-expert user. Occasionally, he can accept or reject it, but often he will use it as it is. Moreover, sometimes the result is even without human interaction directly used by other systems.

Here we see a clear duality: the observed world is entirely separated from the person who wants to know and understand. In between are sensors and automatic algorithms by which reasoning is modeled. In a trained pattern recognition system the observations and the knowledge collected in the past are crystallized and applied in the future. This is a simulation of, but also in contrast with, the human observer. As mentioned in Section 3, he is according to some monistic philosophers not just a product of the past, but can have, depending on his consciousness, an open mind for the here and now. Originally he is united with the world around and thereby able to recognize new situations and act accordingly. If automatic pattern recognition devices are used monism is lost. They create a dualistic world.

In a monistic setting we fully participate with the world around us, with all our organs for perception and action. A dance event, joined enthusiastically, or a lonely survival on an island in a hostile climate without modern facilities, are examples. We create, observe and experience at the same time. We are one with our environment.

The scientist needs to separate himself to some extent from the world he perceives. However, as argued in Section 3, he still has to experiment and to connect in order to understand. The more he disconnects, the more he just collects numbers, the more he becomes a statistician. Ultimately, there is no understanding, there are only the statistical patterns in the data.

In a completely dualistic situation we are not involved, we are just observers. In extremis it is even impossible to interpret the perceptions correctly, because we do not even understand how the sensors work. They are part of the outside world, that we want to study. We can only observe what these sensors tell us: numbers.

Scientists and analysts will find themselves in this situation when they use pattern recognition systems developed by someone else. Sensors designed by unknown engineers send information to magical algorithms written by machine learning experts that ultimately report a conclusion. The relationship with the external world may be lost. In order to check this, either the phenomena of interest should be inspected directly, or the physics of the applied sensors should be understood, as well as the procedures for object representation, training and classification. In addition the real-life examples used for training should be studied. In order to use a pattern recognition system responsibly, one needs to be familiar with the physics and artificial intelligence on which it is based.

In a dualistic position, we rely on the eyes and mind of somebody else, of the designer of the system between us and the phenomena of interest. In constructing a pattern recognition system ourselves, we should try to be aware of all issues that could lead to lack of understanding for the future user. A significant one is the answer to the question: where is the origin of the patterns that are recognized?

The following two alternatives will be considered:

• The world under observation. Under this conjecture there are clear, distinct categories in the world, e.g. clouds, water drops, insects, birds; the species of plants and animals, or healthy and sick people with distinguishable diseases. It is the task of the observer, or of the pattern recognition system, to determine the characteristic properties by which they can be distinguished. Suitable physical sensors have to be found that can reveal the patterns.

• The observer or the observing system. In this case the objects and events in the world constitute a continuum. No clear distinctions can be found. All categories and classes are just there because senses, reasonings, knowledge and words make them distinguishable. Artificial pattern recognition systems are designed accordingly. In this case the emphasis should be laid on the algorithms that reflect the knowledge and reasoning that defined them.

Does the first group of patterns really exist? There is always the restriction to the set of sensors that can be imagined. Different sensors or the use of higher sensor resolutions may reveal other patterns. If all restrictions are removed then at any point in the physical space an infinite set of numbers might be measured. The world under observation is necessarily restricted to what is actually sensed or measured. This defines the patterns.

The second group of patterns is naturally limited to the interest and knowledge of the observer or to the algorithms in a trained system. In order to train, examples are needed. Usually they are restricted to a limited set of possible objects. If they are labeled, knowledge of an expert is included. Experts may suggest possible preprocessing for the sensor data as well. Consequently, the patterns of interest do not exist as such in the world defined by the sensors, but their origin is in the mind of the expert.

There is a group of patterns that is slightly different from the above two. These are the artificial objects that are designed to be recognized, e.g. characters, traffic signs and speech. Their patterns exist in the world, but they originate from the human mind. Effectively they belong to both groups. Thereby it is not needed here to discuss them separately.

An expert that recognizes objects to be labeled for a training set is united with the problem. His expertise is trusted and used as a base for the design of a pattern recognition system. The pattern classes he distinguishes originate from his own knowledge. Users of the pattern recognition system have to rely on its classification. For them the patterns are in the representation of the problem created by the sensors and the pattern recognition system. A machine learning algorithm is trained on the sensor output of the training set and is thereby entirely separated from the problem. In between is the analyst that designed the system and optimized its algorithms. He is often also separated from the problem, especially when the sensors, their physics and their outputs, are just given to him. He is in a dualistic position as he is not united with the external problem.

Does this also hold for the final user? Think about a pilot or an astronaut being in charge of controlling a flying device, or an operator in a power station or in a chemical factory in front of a wall of measurement displays. Are they united with the world they need to control, or are they just optimizing the numbers delivered by intelligent machines between them and a remote reality? For them the patterns are outside, in another world. The same may hold for the starting driver of a new, modern car. There is a dashboard with unknown lights and controls. Occasionally there are alarming beeps as well. He has to control a world from which he is separated.

However, an experienced driver of the same car, may very well be aware of what is going on. Like a child after a few years, he also became united with the world he has to control. He grows from a dualistic position to a monistic one. The patterns are now inside him as concepts and consequently he can become responsible himself and does not need anymore to follow the guides and rules given by a teacher.

The colon tissue problem discussed in Section 3 in relation to human recognition will now be used to illustrate the need for human knowledge in machine recognition. For each of three classes we will use 25 slides, scanned at 1024 × 1024 pixels (see footnote 3). The classes are normal, adenomatous and cancer. For simplicity the color slides are reduced to gray values, keeping in mind a remark made by one of the pathologists that color hardly contributes to his recognition. Each slide will initially be represented by a set of up to 100 neighborhood regions, each characterized by 29 features. They will be called instances, following the concept of multiple instance learning (Li et al., 2021). The instance features cover about 40 × 40 pixels. Exact information is given in the Appendix. Four analysis steps are performed, based on increasing human supplied information:

1) A cluster analysis of the combined set of all 3 × 25 × 100 = 7,500 instances. Initially the instances are positioned in a 10 × 10 regular grid placed independently of the actual content. It will be shown that the clusters are not related to the three classes of slides. Here no other information is used than the instance definition.

2) An instance classification analysis using the pathologist labeling of the slides from which they originate. The information that sets of instances are from the same slide is not used.

3) A slide classification analysis by a multi-instance slide classification. In this step slides are classified combining the classification results of their instances.

4) The above three steps are repeated for an advanced procedure in which the instances are positioned in a more informed way.
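The grid placement used in step 1 can be sketched as follows. This is only an illustration: the function name `grid_centers` and the choice to put each instance at the center of its grid cell are assumptions, as the exact positioning is specified in the Appendix and not in this section.

```python
def grid_centers(image_size=1024, grid=10):
    """Return (row, col) centers of a regular grid x grid layout.

    Assumption: one instance per grid cell, located at the cell center.
    """
    step = image_size / grid                      # 102.4 pixels per cell
    coords = [int((i + 0.5) * step) for i in range(grid)]
    return [(r, c) for r in coords for c in coords]


centers = grid_centers()

# 3 classes x 25 slides x 100 grid positions per slide
n_instances = 3 * 25 * len(centers)
```

With a cell width of about 102 pixels and instances covering about 40 × 40 pixels, the grid instances do not overlap and leave most of the slide unsampled, independent of what the slide shows.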

The first analysis aims to stay as close as possible to the original data, the scanned slides. A k-means multi-clustering (Theodoridis and Koutroumbas, 2009) is performed on the total set of 7,500 instances to inspect whether there is any structure related to the class labels of the corresponding slides.

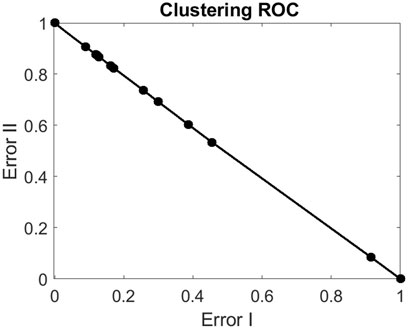

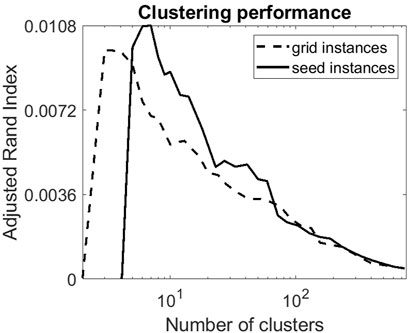

An ROC procedure was used to compare the fractions of instance pairs with the same or different class labels that are in different, respectively the same, cluster (Aidos et al., 2013). The resulting two errors are plotted as a function of the number of clusters used in the k-means procedure. If there is a relation between the instance representation and the class labels, it is to be expected that for some of the clusterings the two errors sum to a value that is considerably smaller than one. Figure 4 shows that this is not the case. For all clusterings the instances are close to randomly distributed over the classes. See also Section 4.3.4 and Figure 5, in which the clusterings are compared with the class labels using the Adjusted Rand Index. A value of one corresponds to a full correspondence, zero means no correspondence (Gates and Ahn, 2017).

FIGURE 4. Receiver Operating Characteristic (ROC) curve for different cluster sizes. Error I is the probability that two instances with the same slide class are in different clusters. Error II is the probability that two instances from different slide classes are in the same cluster. In the top-left are clusterings having a few large clusters. On the bottom right are the clusterings consisting of many small clusters.
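The two pairwise errors defined in the caption of Figure 4 can be computed as in the following minimal sketch. The function name `pairwise_errors` is hypothetical, and the plain loop over all pairs is chosen for readability rather than for speed on 7,500 instances.

```python
from itertools import combinations


def pairwise_errors(class_labels, cluster_ids):
    """Return (error_1, error_2) over all pairs of instances.

    error_1: fraction of same-class pairs that fall in different clusters.
    error_2: fraction of different-class pairs that fall in the same cluster.
    """
    same_class = same_class_split = 0
    diff_class = diff_class_merged = 0
    for i, j in combinations(range(len(class_labels)), 2):
        same_cluster = cluster_ids[i] == cluster_ids[j]
        if class_labels[i] == class_labels[j]:
            same_class += 1
            same_class_split += not same_cluster
        else:
            diff_class += 1
            diff_class_merged += same_cluster
    error_1 = same_class_split / same_class if same_class else 0.0
    error_2 = diff_class_merged / diff_class if diff_class else 0.0
    return error_1, error_2


labels = [0, 0, 1, 1]
one_big_cluster = pairwise_errors(labels, [0, 0, 0, 0])
all_singletons = pairwise_errors(labels, [0, 1, 2, 3])
perfect = pairwise_errors(labels, [5, 5, 7, 7])
```

The two trivial clusterings (one cluster for everything, or one cluster per instance) both give errors that sum to one, which is why a sum considerably below one is needed to indicate a relation with the labels.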

FIGURE 5. The two cluster analyses evaluated by the Adjusted Rand Index. The interval (0, 1) runs from no relation with the labeling to complete consistency. The seed instances are slightly better related with the slide labels, but still almost random. See Gates and Ahn (2017) for a study on evaluating clustering performances.
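For reference, the Adjusted Rand Index can be computed from pair counts as sketched below, using the standard chance-corrected formula. The function name is an assumption and this is not claimed to be the implementation used for Figure 5.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_a, labels_b):
    """Adjusted Rand Index between two labelings of the same items."""
    n = len(labels_a)
    if n < 2:
        return 1.0  # no pairs to compare; treated as full agreement here
    # Number of pairs that are together in both, in a, and in b.
    pairs_joint = sum(comb(c, 2) for c in Counter(zip(labels_a, labels_b)).values())
    pairs_a = sum(comb(c, 2) for c in Counter(labels_a).values())
    pairs_b = sum(comb(c, 2) for c in Counter(labels_b).values())
    expected = pairs_a * pairs_b / comb(n, 2)   # value expected by chance
    max_index = 0.5 * (pairs_a + pairs_b)
    if max_index == expected:
        return 1.0  # degenerate case, e.g. both labelings are one single group
    return (pairs_joint - expected) / (max_index - expected)


ari_same = adjusted_rand_index([0, 0, 1, 1], [1, 1, 0, 0])
ari_crossed = adjusted_rand_index([0, 0, 1, 1], [0, 1, 0, 1])
```

The index does not depend on how the clusters are numbered. It is close to zero for random agreement and can become slightly negative when the agreement is worse than expected by chance.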

In Table 1 the confusion is shown between one of the clusterings (here for the case of 13 clusters) and the slide labels. This illustrates that no regions are found in the feature space where one of the classes dominates. Image neighborhoods as represented by the instances are not dominantly different for the three types of slides. Still, pathologists are able to distinguish the slides consistently. In the next subsection it is analyzed whether it is helpful to use the information related to their recognition ability.

Instead of the unsupervised cluster analysis the three slide classes are used to label the instances of the corresponding slides. As already noted above, many instances do not reflect the proper classes. In fact, also in the two abnormal classes many tissue regions may look normal. Nevertheless, we gave it a try as some human knowledge is added by using the slide labels.

Slides can be very different. In a cross-validation procedure it should be avoided that instances of the same slide are in the training set as well as in the test set. Therefore a cross-validation was applied over the slides. Table 2 shows the results of a leave-one-slide-out experiment in which 74 slides are used for training and the instances of the removed slide were tested. This was rotated over all 75 slides.
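The slide-wise rotation described above can be sketched as a split generator. The name `leave_one_slide_out` and the representation of the data as one slide identifier per instance are assumptions made for this illustration; the classifier itself is left out.

```python
def leave_one_slide_out(slide_ids):
    """Yield (held_out_slide, train_indices, test_indices) per slide.

    slide_ids[i] is the slide from which instance i originates, so all
    instances of the held-out slide end up in the test set together.
    """
    for held_out in sorted(set(slide_ids)):
        train = [i for i, s in enumerate(slide_ids) if s != held_out]
        test = [i for i, s in enumerate(slide_ids) if s == held_out]
        yield held_out, train, test


# Toy example: five instances coming from three slides.
toy_slide_ids = [0, 0, 1, 1, 2]
folds = list(leave_one_slide_out(toy_slide_ids))
```

Splitting on slides instead of on instances is what prevents instances of the same slide from appearing on both sides of the split.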

The resulting overall classification error is 0.620, using LDA (i.e. assuming normal distributions with identical covariance matrices), and using equal priors for the three classes. This might seem bad, but it is still significantly different (p ≈ 1) from the classification error of random assignment, which is 0.667.

In this third experiment the entire slides are classified instead of their instances. This is done on top of the instance classification. The fractions of the instance assignments to each of the three classes are used to represent a slide. This results in a three-dimensional feature space in which again an LDA classifier is computed. The entire classification procedure has been repeated 75 times in a leave-one-slide-out cross-validation experiment. The result is shown in Table 3.
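The slide representation used in this step can be sketched as follows. The function name, the class ordering and the use of strings as class names are assumptions for illustration; the second-stage LDA classifier is not shown.

```python
from collections import Counter

CLASSES = ("normal", "adenomatous", "cancer")


def slide_representation(instance_predictions, classes=CLASSES):
    """Map the predicted classes of one slide's instances to class fractions."""
    if not instance_predictions:
        raise ValueError("a slide needs at least one instance")
    counts = Counter(instance_predictions)
    total = len(instance_predictions)
    return [counts[c] / total for c in classes]


# Toy slide with 100 instance-level decisions.
toy_predictions = ["normal"] * 50 + ["cancer"] * 30 + ["adenomatous"] * 20
toy_features = slide_representation(toy_predictions)
```

Note that the three fractions always sum to one, so the slides effectively lie in a two-dimensional plane of this three-dimensional feature space.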

The slide classification error is 0.60. This is better than the above instance classification result. It is in this case, however, not significant (p = 0.55) as there are just 75 slides. Especially the fact that just 6 of the 25 cancer slides are correctly classified is disappointing.

In the above experiments the instances are located in a grid, neglecting the image content. The slides, however, contain various types of structures, visible to the human eye. In order to make the instances sensitive to the image content a procedure has been developed to position them on specific locations, named here seeds. These are the centers of blobs, as far as they could be detected by an automatic procedure. See Appendix A for more details and Figure 7 for an example.

We found 4,014 seed instances in the 75 slides. The same three experiments as with the grid instances have been performed, finally resulting in better results.

• The cluster analysis still does not show any relation between clusters and slide classes, see Figure 5.

• The instance classification error decreased from 0.620 to 0.583, Table 4.

• The slide classification error decreased from 0.60 to 0.51, see Table 5. Especially the results of the cancer slides show significantly better numbers.

The purpose of the above experiments is to illustrate that without any prior knowledge there are no patterns to be observed in the raw data. This has already been formulated generally in 1969 by S. Watanabe’s “ugly duckling theorem” (Watanabe, 1969), and was intensively discussed in the 1990s as the “no free lunch theorem” (Wolpert and Macready, 1997). Phrased colloquially: without any additional assumptions all objects in the observed data are equally different. However, already simple and often natural assumptions like a finite resolution of continuous sensory data and some type of data compactness will start to invalidate these theorems (Duin and Group, 1999). Adding more human insights gradually yields machine learning based classification performances that approximate the expert recognition rate.

In the experiments we did not even start from zero. From the beginning the raw data was interpreted as a two-dimensional image. Moreover, the slides themselves do not show natural phenomena, but are prepared in such a way that human recognition is enabled. Some knowledge is already included in the data preparation. The experiments show that more is needed to recover the patterns that live in the human mind as well as in the data.

Our experiments are designed just to show an example of our general discussion on pattern recognition. It is in no way the intention to suggest that the procedures that have been followed are a proper way to solve the general issue of automatic colon tissue analysis. Different settings in our experiments yield different results, sometimes better, sometimes worse. As we didn’t want to optimize performances, just our first choice is presented. Much more advanced and dedicated studies are available for data like these, often based on deep learning, see for example Kalkan et al. (2012) and Ehteshami Bejnordi et al. (2017).

In this section it will first be discussed how human knowledge is used to build automatic systems to assist, or occasionally replace, an expert or user in his recognition task. After that the problems and solutions are analyzed of the possibly resulting dualistic position w.r.t. the observed world.

Originally the patterns live in the mind of an expert. He recognizes them guided by his knowledge and interest and is thereby able to collect a set of examples, to label them in appropriate classes, intermediates and the non-members: objects and phenomena that have nothing to do with the patterns under consideration.

In a second step, an engineer selects, in discussion with the expert, a set of sensors and algorithms to represent the pattern characteristics of the objects. This might be difficult for the experienced expert as he may no longer be aware of what aspects of the observations he uses. It is sometimes helpful if the expert is a teacher as well, as use can then be made of the ability to make understanding explicit. Next, a set of examples is used by an analyst experienced in machine learning to design classification algorithms. They should classify but preferably also point to intermediate objects as well as to outliers that have nothing to do with the patterns of interest.

After training, the system fixes what is learned from the expert and the additional examples. Its output is thereby connected to the outside world through the expert and training results. The expert is no longer observing the world himself. In fully automatic applications this dualistic situation results in a mechanical classification of observations. Knowledge and interest of experts are crystallized in a system.

Depending on the application this may be undesirable. The output of the pattern recognition system may in appropriate situations be rejected and returned to the same or another expert, preferably showing the original object as well. Consequently, he has thereby a set of extended senses offered by the system. After some time he might be able to directly recognize the patterns using them all. This may improve the results of the first step: faster, more accurate recognition. The duality is thereby transformed into a hopefully better monistic situation.

This entire process can be compared to an experienced tennis player who meets an advanced trainer. This trainer recognizes that improvements are possible, but first some de-learning is needed. The tennis player becomes again a novice and needs to play consciously where he first could play automatically. In this situation something new can be added, and integrated in his playing tennis. Finally he may have reached a higher level.

In short, the patterns originate from the human mind. When they are perceived by means of a pattern recognition system, the human observer experiences them as in an external world. Direct recognition is affected by this. It can be re-established by experts who observe the output of the system at the same time as the objects in question. The consequences and possible solutions will now be briefly discussed.

It has been argued in this paper that objects can be recognized instantly by an expert who is fully familiar with them. No reasoning is needed as the patterns originate from the mind. Of course, mistakes can be made, especially if object and circumstances are different than expected. The expert is fully responsible for such mistakes. However, if the pattern is defined elsewhere, in the world outside, observed by an artificial sensor, and outputs are modified by an algorithm that is externally designed and trained, a user has to rely entirely on its outcomes. But who should now be responsible for mistakes?

There are two options for redirecting the responsibility back to the user of such a system. Either the system should refuse to make decisions in case of doubt, or the system should be restricted to giving advice and leave all decisions to the user. In both cases the user must be a responsible expert. It should be realized that in handling new objects, fully automatic systems are in a worse position than experts who have to handle objects they were not trained on. The latter may detect this, whereas an automatic system is forced to make decisions anyway.
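The two options can be illustrated with a minimal sketch. It assumes that the classifier produces a posterior probability per class; the function names, the class labels and the threshold of 0.8 are hypothetical and do not come from the experiments in this article.

```python
from typing import Dict, List, Optional, Tuple


def decide_with_reject(posteriors: Dict[str, float],
                       threshold: float = 0.8) -> Optional[str]:
    """Option 1: decide only when confident, otherwise refuse.

    Returns the most probable label, or None when the highest posterior
    is below the (assumed) threshold, so the case goes back to the expert.
    """
    if not posteriors:
        return None
    label, confidence = max(posteriors.items(), key=lambda kv: kv[1])
    return label if confidence >= threshold else None


def advise(posteriors: Dict[str, float]) -> List[Tuple[str, float]]:
    """Option 2: never decide; return all labels ranked by posterior.

    The user sees the ranking next to the original object and decides.
    """
    return sorted(posteriors.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical posteriors for two tissue patches.
clear_case = {"normal": 0.95, "tumor": 0.05}
doubtful_case = {"normal": 0.55, "tumor": 0.45}

print(decide_with_reject(clear_case))     # a label is returned
print(decide_with_reject(doubtful_case))  # None: refer to the expert
print(advise(doubtful_case))              # ranked advice only
```

A threshold on the posterior only covers doubt between known classes. An object unlike anything in the training set may still receive a confident posterior, so detecting such objects would need a separate outlier check.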

Let us consider the example of a parking aid. It warns when the car approaches an obstacle. Without this aid the driver first studies the obstacles, estimates distances, adds some decimeters to be sure, and makes the parking move using his own vision. With the parking aid the driver is automatically warned when the car is approaching the obstacles. Some of them might be bushes that may be ignored; others might be small or low objects that the parking aid erroneously misses. If the driver takes this information into account, he is better off than without the aid, and also better off than if he relied on it fully. This is only so if he has been trained in using it and has become aware of its limitations. He has to become a parking expert by experimentally studying the use of the parking aid.

This illustrates our point: although patterns originate in the human mind, a detour beyond it using artificial aids and reintegration through human consciousness can improve their recognition. We emphasize that if the last step is skipped, the user is forced to rely on a system that may behave unexpectedly, effectively leaving the system designer responsible for errors. In applications such as an autopilot or a self-driving car on public roads, this has led to serious accidents in the past. In situations where conditions may deviate from what could be expected during design, users should be made responsible by giving them the freedom to make their own decisions. They can only bear this responsibility if the system, its sensors, and its algorithms are integrated with them in a similar way as their own senses and behavior. Training and testing procedures are needed for users. For the colon tissue recognition application discussed earlier in this article, this implies, for example, that a tissue classification advisory system will only be useful in the daily routine after the pathologists have been trained on the system that will be used.

A pattern recognition system built in this way enriches the user’s world. His senses are supported with sensors, his decision-making with automatically generated advice. These are based on the observations, knowledge and skills of many experts from all over the world. Once the user has been trained to deal with this and has built the skills to apply this concerted effort in his particular circumstances, he can also act responsibly in new situations while being backed by the many who participated in the development of the system.

We have outlined how, throughout history, observations and knowledge of the world gradually separated. Through consciousness and specialized interests, they were studied from different points of view. In everyday life and in many technological and scientific studies we are still able to combine the two directly by what we called “instant recognition”. It requires the observer to be an element of the world under study. In a dualistic situation where the two are separate, instant recognition must be replaced by reasoning.

Such a duality is created by machines equipped with sensors and algorithms that observe and analyze the outside world. There are no patterns in the observations as such, as follows from the two theorems mentioned in section 4.4. Prior knowledge is necessary to find patterns. Different prior knowledge will reveal different patterns. Such knowledge is integrated by system designers through the choice of sensors and algorithms. The user of the system observes the world and sees the patterns through their eyes.

Also, a duality will arise between the user and the system if he does not participate in the system design. So we have two or three worlds where originally there was only one. The use of pattern recognition and machine learning devices transforms monism into dualism.

This situation can be healed by fully mastering the intermediate system and becoming aware of all its limitations. As a result we reconnect directly with the world, just as we get used to wearing glasses or learning to ride a bicycle. Therefore, “machine learning” should also be understood as “learning to use a machine”.

Human beings are able to step outside what they have learned from parents, teachers and their cultural environment. Admittedly, this is not easy. What we have, and what machines do not have, is a personal interest that enables us to overcome the prejudices and prior assumptions that have arisen in the past. This interest leads us to new insights.

Some publicists have raised the expectation that future machines can do this too, e.g. creative machines (Du Sautoy, 2019), or machines gaining knowledge through intuition (Lovelock, 2019). Such machines will not evolve out of the present ones. They should be given the freedom to generate their own interest. They must be monistic, i.e. be part of the world under observation in order to originate their own patterns. Our current machines are dualistic, as argued above. It is questionable whether devices with physical sensors and mechanical reasoning will ever conquer the freedom to generate their own interest. Something entirely new will be needed.

Finally, this paper is not an attempt to convince. The author just wants to explain a personal view.

The author confirms being the sole contributor of this work and has approved it for publication.

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The author expresses his gratitude to colleagues and friends that participated in commenting on previous versions of the paper: Manuele Bicego, Marco Loog, Marius Nap, Mauricio Orozco-Alzate, Elżbieta Pȩkalska, David Tax, Dieter Hammer and Han de Vries. The author also thanks Marco Loog, David Tax, Marcel Reinders and the members of the Delft Pattern Recognition Laboratory for support and hospitality. A special thanks goes to Arnold Smeulders for pointing the author to the often neglected line in his research that culminated in this article.

1. It is not assumed that experts or scientists are male. Wherever in the paper, in reference to them, he, his or him is written, she, her or hers may also be read.

2. These images are not the ones used in (Jaarsma et al., 2014). These, as well as those used in Section 4.3, have been made available by Dr. Marius Nap.

3. These images have been made available by Dr. Marius Nap.

Aidos, H., Duin, R. P. W., and Fred, A. L. N. (2013). “The Area under the ROC Curve as a Criterion for Clustering Evaluation,” in ICPRAM. Editors M.D. Marsico and A.L.N. Fred (Setubal, Portugal: SciTePress), 276–280.

Bobik, J., and Sayre, K. (1963). Pattern Recognition Mechanisms and St. Thomas’ Theory of Abstraction. Revue philosophique de Louvain 61 (8), 24–43. doi:10.3406/phlou.1963.5193

Chadha, M. (2014). On Knowing Universals: The Nyāya Way. Philos. East West 64 (2), 287–302. doi:10.1353/pew.2014.0036

Du Sautoy, M. (2019). The Creativity Code: How AI Is Learning to Write, Paint and Think. HarperCollins.

Duin, R. P. W., and Group, P. R. (1999). “Compactness and Complexity of Pattern Recognition Problems,” in Proc. Int. Symposium on Pattern Recognition “In Memoriam Pierre Devijver” (Brussels: Royal Military Ac), 124–128.

Ehteshami Bejnordi, B., Veta, M., Johannes van Diest, P., van Ginneken, B., Karssemeijer, N., Litjens, G., et al. (2017). Diagnostic Assessment of Deep Learning Algorithms for Detection of Lymph Node Metastases in Women with Breast Cancer. JAMA 318 (22), 2199–2210. doi:10.1001/jama.2017.14585

Gates, A. J., and Ahn, Y. (2017). The Impact of Random Models on Clustering Similarity. J. Mach. Learn. Res. 18 (87), 1–28.

Jaarsma, T., Boshuizen, H. P. A., Jarodzka, H., Nap, M., Verboon, P., and van Merriënboer, J. J. G. (2016). Tracks to a Medical Diagnosis: Expertise Differences in Visual Problem Solving. Appl. Cognit. Psychol. 30 (3), 314–322. doi:10.1002/acp.3201

Jaarsma, T., Jarodzka, H., Nap, M., van Merrienboer, J. J. G., and Boshuizen, H. P. A. (2014). Expertise under the Microscope: Processing Histopathological Slides. Med. Educ. 48 (3), 292–300. doi:10.1111/medu.12385

Kalkan, H., Nap, M., Duin, R. P., and Loog, M. (2012). “Automated Classification of Local Patches in Colon Histopathology,” in Proceedings of the 21st International Conference on Pattern Recognition (Tsukuba, Japan: ICPR2012), 61–64.

Li, J., Li, W., Sisk, A., Ye, H., Wallace, W. D., Speier, W., et al. (2021). A Multi-Resolution Model for Histopathology Image Classification and Localization with Multiple Instance Learning. Comput. Biol. Med. 131, 104253. doi:10.1016/j.compbiomed.2021.104253

Sayre, K. (1965). Recognition, a Study in the Philosophy of Artificial Intelligence. Notre Dame, IN: University of Notre Dame Press.

Steiner, R. (1972). Die Philosophie des Thomas von Aquino (1920). Dornach, Switzerland: Rudolf Steiner Verlag.

Steiner, R. (2003). Grundlinien einer Erkenntnistheorie der Goetheschen Weltanschauung (1886). Dornach, Switzerland: Rudolf Steiner Verlag.

Watanabe, S. (1969). Knowing and Guessing: A Quantitative Study of Inference and Information. Wiley.

Wolpert, D. H., and Macready, W. G. (1997). No Free Lunch Theorems for Optimization. IEEE Trans. Evol. Computat. 1 (1), 67–82. doi:10.1109/4235.585893

Here some details are given of the two types of slide representations used in the experiments in Section 4.3. They are in no way optimized for classification performance, as they serve only to illustrate the effects of using gradually more human-supplied information.

Slides are represented by a set of up to 100 instances. An instance is a local sampling of the image consisting of 29 pixels: a center pixel and 28 pixels around it. They sample the original image as well as various blurred versions according to the following specifications:

• One pixel of the original image in the center of the instance.

• Four pixels of the original image being the 4-connected neighborhood of the center pixel.

• Eight pixels equidistantly on a circle with radius of 2, sampling an image blurred with a Gaussian filter with standard deviation 2.

• Eight pixels equidistantly on a circle with radius of 6, sampling an image blurred with a Gaussian filter with standard deviation 6.

• Eight pixels equidistantly on a circle with radius of 16, sampling an image blurred with a Gaussian filter with standard deviation 16.
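The sampling layout listed above can be sketched as follows. The counts, radii and standard deviations are taken from the list; the starting angle of each circle, the rounding to whole pixels and the function names are assumptions made for illustration. The blurring itself is not included.

```python
import math
from typing import List, Tuple

# (row offset, column offset, sigma of the image to sample);
# sigma 0.0 stands for the original, unblurred image.
Offset = Tuple[float, float, float]


def instance_offsets() -> List[Offset]:
    """Return the 29 sampling offsets of one instance."""
    # One center pixel of the original image.
    offsets: List[Offset] = [(0.0, 0.0, 0.0)]
    # Four pixels of the original image: the 4-connected neighborhood.
    offsets += [(dy, dx, 0.0)
                for dy, dx in ((-1.0, 0.0), (1.0, 0.0), (0.0, -1.0), (0.0, 1.0))]
    # Eight equidistant points on circles of radius 2, 6 and 16, each
    # sampling an image blurred with a Gaussian of the same sigma.
    for radius in (2, 6, 16):
        for k in range(8):
            angle = 2.0 * math.pi * k / 8  # starting angle 0 is an assumption
            offsets.append((radius * math.sin(angle),
                            radius * math.cos(angle),
                            float(radius)))
    return offsets


def instance_positions(row: int, col: int) -> List[Tuple[int, int, float]]:
    """Pixel positions for an instance centered at (row, col).

    Offsets are rounded to the nearest pixel, which is an assumption.
    """
    return [(round(row + dy), round(col + dx), sigma)
            for dy, dx, sigma in instance_offsets()]


print(len(instance_offsets()))  # 1 + 4 + 3 * 8 samples per instance
```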

In the first set of experiments 100 instances are used for every slide, equidistantly positioned in a 10 × 10 grid. See Figure 6 for a detailed example. In the second set of experiments up to 100 instances are located at seeds in the image. An attempt is made to locate the seeds at the centers of dark structures in the image, in the following way.

1) The image is blurred with a standard deviation σ = 2.

2) All pixels that are local minima are found.

3) Around these seeds watershed contours are determined.

4) Holes are closed, obtaining a set of blobs.

5) Blobs are skeletonized to single pixels, the seeds.

6) If needed, a random selection of 100 seeds is taken.

In Figure 7 the result is shown for the same image detail.
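The two ways of positioning instances can be sketched roughly as follows. This is a simplified illustration, not the procedure used in the experiments: the grid is assumed to be placed at the midpoints of 10 × 10 equal cells, and for the seeds only steps 2 and 6 are shown. The blurring of step 1 and the watershed, hole closing and skeletonization of steps 3 to 5 are omitted, so the local minima are used directly as seed candidates. All function names are hypothetical.

```python
import random
from typing import List, Optional, Sequence, Tuple

Pixel = Tuple[int, int]


def grid_centers(height: int, width: int, n: int = 10) -> List[Pixel]:
    """n x n equidistant centers at the midpoints of equal cells (assumed)."""
    rows = [int((i + 0.5) * height / n) for i in range(n)]
    cols = [int((j + 0.5) * width / n) for j in range(n)]
    return [(r, c) for r in rows for c in cols]


def local_minima(image: Sequence[Sequence[float]]) -> List[Pixel]:
    """Step 2: pixels strictly darker than all 8 neighbors.

    Border pixels are skipped. The image is expected to be blurred
    already (step 1), which this sketch does not do.
    """
    height, width = len(image), len(image[0])
    neighbors = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)
                 if (dr, dc) != (0, 0)]
    minima = []
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            value = image[r][c]
            if all(value < image[r + dr][c + dc] for dr, dc in neighbors):
                minima.append((r, c))
    return minima


def select_seeds(candidates: Sequence[Pixel], max_seeds: int = 100,
                 rng: Optional[random.Random] = None) -> List[Pixel]:
    """Step 6: keep all candidates, or a random selection of max_seeds."""
    if len(candidates) <= max_seeds:
        return list(candidates)
    rng = rng or random.Random(0)  # fixed seed only for reproducibility
    return rng.sample(list(candidates), max_seeds)


# Tiny example: a bright 5 x 5 image with one dark pixel in the middle.
image = [[9.0] * 5 for _ in range(5)]
image[2][2] = 1.0
print(local_minima(image))
print(len(grid_centers(100, 200)))
```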

Keywords: recognition, knowledge, monism, dualism, universals, patterns, concepts, histopathology

Citation: Duin RPW (2021) The Origin of Patterns. Front. Comput. Sci. 3:747195. doi: 10.3389/fcomp.2021.747195

Received: 25 July 2021; Accepted: 18 October 2021;

Published: 02 November 2021.

Edited by:

Marcello Pelillo, Ca’ Foscari University, Italy

Reviewed by:

Yiannis Aloimonos, University of Maryland, College Park, United States

Copyright © 2021 Duin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Robert P. W. Duin
