- 1LTCI, Télécom Paris, Institut Polytechnique de Paris, Paris, France

- 2CNRS-ISIR, Sorbonne University, Paris, France

Adaptation is a key mechanism in human–human interaction. In our work, we aim to endow embodied conversational agents with the ability to adapt their behavior when interacting with a human interlocutor. To better understand the main challenges concerning adaptive agents, we investigated the effects on the user’s experience of three adaptation models for a virtual agent. The adaptation mechanisms performed by the agent take into account the user’s reaction and learn how to adapt on the fly during the interaction. The agent’s adaptation is realized at several levels (i.e., at the behavioral, conversational, and signal levels) and focuses on improving the user’s experience along different dimensions (i.e., the user’s impressions and engagement). In our first two studies, we aim to learn the agent’s multimodal behaviors and conversational strategies to dynamically optimize the user’s engagement and impressions of the agent, by taking them as input during the learning process. In our third study, our model takes both the user’s and the agent’s past behavior as input and predicts the agent’s next behavior. Our adaptation models have been evaluated through experimental studies sharing the same interaction scenario, with the agent playing the role of a virtual museum guide. These studies showed the impact of the adaptation mechanisms on the user’s experience of the interaction and their perception of the agent. Interaction with an adaptive agent tended to be perceived more positively than interaction with a nonadaptive agent. Finally, the effects of people’s a priori attitudes toward virtual agents found in our studies highlight the importance of taking into account the user’s expectations in human–agent interaction.

1 Introduction

During an interaction, we communicate through multiple behaviors. Not only speech but also our facial expressions, gestures, gaze direction, body orientation, etc. participate in the message being communicated (Argyle, 1972). Both interactants are active participants in an interaction and adapt their behaviors to each other. This adaptation arises on several levels: we align ourselves linguistically (vocabulary, syntax, and level of formality), but we also adapt our nonverbal behaviors (e.g., we respond to the smile of our interlocutor, and we imitate their posture and their gestural expressiveness), our conversational strategies (e.g., to be perceived as warmer or more competent), etc. (Burgoon et al., 2007). This multilevel adaptation can have several functions, such as reinforcing engagement in the interaction, emphasizing our relationship with others, showing empathy, and managing the impressions we give to others (Lakin and Chartrand, 2003; Gueguen et al., 2009; Fischer-Lokou et al., 2011). The choice of verbal and nonverbal behaviors and their temporal realization are markers of adaptation.

Embodied conversational agents (ECAs) are virtual entities with a humanlike appearance that are endowed with communicative and emotional capabilities (Cassell et al., 2000). They can display a wide range of multimodal expressions to be active participants in the interaction with their human interlocutors. They have been deployed in various human–machine interactions where they can act as a tutor (Mills et al., 2019), health support (Lisetti et al., 2013; Rizzo et al., 2016; Zhang et al., 2017), a companion (Sidner et al., 2018), a museum guide (Kopp et al., 2005; Swartout et al., 2010), etc. Studies have reported that ECAs are able to take into account their human interlocutors and show empathy (Paiva et al., 2017), display backchannels (Bevacqua et al., 2008), and build rapport (Huang et al., 2011; Zhao et al., 2016). Given its relevance in human–human interaction, adaptation could be exploited to improve natural interactions with ECAs. It thus seems important to investigate whether an agent adapting to the user’s behaviors could provoke similar positive outcomes in the interaction.

The majority of works in this context developed models learnt from existing databases of human–human interaction and did not consider the dynamics of adaptation mechanisms during an interaction. We are interested in developing an ECA that exploits how the interaction is currently going and is able to learn in real time which adaptation mechanism is best for the interaction.

In this article, we report three studies where an ECA adapts its behaviors by taking into account the user’s reaction and by learning how to adapt on the fly during the interaction.

The goal of the different studies is to answer two broad research questions:

“Does adapting an ECA’s behavior enhance the user’s experience during interaction?”

“How does an ECA which adapts its behavior in real-time influence the user’s perception of the agent?”

A user’s experience can involve many factors and can be measured along different dimensions, such as the user’s engagement and the user’s impressions of the ECA (Burgoon et al., 2007). In the three studies reported in this article, we implemented three independent models where the agent’s adaptation is realized at several levels and focuses on improving the user’s experience along different dimensions as follows:

1) the agent’s adaptation at a behavioral level: the ECA adapts its behaviors (e.g., gestures, arm rest poses, and smiles) in order to maximize the user’s impressions about the agent’s warmth or competence, the two fundamental dimensions of social cognition (Fiske et al., 2007). This model is described in Section 7;

2) the agent’s adaptation at a conversational level: the ECA adapts its communicative strategies to elicit different levels of warmth and competence, in order to maximize the user’s engagement. This model is described in Section 8; and

3) the agent’s adaptation at a signal level: the ECA adapts its head and eye rotation and lip corner movement as a function of the user’s signals in order to maximize the user’s engagement. This model is described in Section 9.

Each adaptation mechanism has been implemented in the same architecture that allows an ECA to adapt to the nonverbal behaviors of the user during the interaction. This architecture includes a multimodal analysis of the user’s behavior using the EyesWeb platform (Camurri et al., 2004), the dialogue manager Flipper (van Waterschoot et al., 2018), and the ECA GRETA (Pecune et al., 2014). The architecture has been adapted to each model and evaluated through experimental studies. The ECA played the role of a virtual guide at the Science Museum of Paris. The scenario used in all the evaluation studies is described in Section 6.

Even though these three models have been implemented in the same architecture and tested on the same scenario, they have not been developed in order to do comparative studies. The main goal of this paper is to frame them in the same theoretical framework (see Section 2) and have insights into each of these different adaptation mechanisms to better understand what the main challenges concerning these models are and to suggest further improvements for an adaptation system working on multiple levels.

This article is organized as follows: in Section 2, we review the main theories about adaptation which our work relies on, in particular Burgoon and others’ work; in Section 3, we present an overview of existing models that focus on adapting the ECA’s behavior according to the user’s behavior; in Section 4, we specify the dimensions we focused on in our adaptation models; in Section 5, we present the general architecture we conceived to endow our ECA with the capability of adapting its behavior to the user’s reactions in real time; in Section 6, we describe the scenario we conceived to test the different adaptation models; in Sections 7–9, we report the implementation and evaluation of each of the three models. More details about them can be found in our previous articles (Biancardi et al., 2019b; Biancardi et al., 2019a; Dermouche and Pelachaud, 2019). We finally discuss the results of our work and possible improvements in Sections 10 and 11, respectively.

2 Background

Adaptation is an essential feature of interpersonal relationships (Cappella, 1991). During an effective communication, people adapt their interaction patterns to one another’s (e.g., dancers synchronize their movements and people adapt their conversational style in a conversation). These patterns contribute to defining and maintaining our interpersonal relationships, by facilitating smooth communication, fostering attraction, reinforcing identification with an in-group, and increasing rapport between communicators (Bernieri et al., 1988; Giles et al., 1991; Chartrand and Bargh, 1999; Gallois et al., 2005).

There exist several adaptation patterns, differing according to their behavior type (e.g., the modality, the similarity to the other interlocutor’s behavior, etc.), their level of consciousness, whether they are well decoded by the other interlocutor, and their effect on the interaction (Toma, 2014). Cappella and others (Cappella, 1981) considered an additional characteristic, that is, adaptation can be asymmetrical (unilateral), when only one partner adapts to the other, or symmetrical (mutual), like in the case of interaction synchrony.

In line with these criteria, in some examples of adaptation, people’s behaviors become more similar to one another’s. This type of adaptation is often unconscious and reflects reciprocity or convergence. According to Gouldner (Gouldner, 1960), reciprocity is motivated by the need to maintain harmonious and stable relations. It is contingent (i.e., one person’s behaviors are dependent upon the other’s) and transactional (i.e., it is part of an exchange process between two people).

In other cases, adaptation can include complementarity or divergence; this occurs when the behavior of one person differs from but complements that of the other person.

Several theories focus on one or more specific characteristics of adaptation and highlight different factors that drive people’s behaviors. They can be divided into four main classes according to the perspective they follow to explain adaptation.

The first class of theories includes biologically based models (e.g., (Condon and Ogston, 1971), (Bernieri et al., 1988)). These theories state that individuals exhibit similar patterns to one another. These adaptation patterns have an innate basis, as they are related to satisfaction of basic needs like bonding, safety, and social organization. Their innate bases make them universal and involuntary, but they can be influenced by environmental and social factors as well.

Following a different perspective, arousal-based and affect-based models (e.g., (Argyle and Dean, 1965), (Altman et al., 1981), (Cappella and Greene, 1982)) support the role of internal emotional and arousal states as driving factors of people’s behaviors. These states determine approaching or avoiding behaviors. This group of theories explains the balance between compensation and reciprocity.

Social-norm models (e.g., (Gouldner, 1960), (Dindia, 1988)) do not consider the role of physiological or psychological factors but argue for the importance of social phenomena as guiding forces. These social phenomena are, for example, the in-group or out-group status of the interactants, their motivation to identify with one another, and their level of affiliation or social distance.

The last class of theories includes communication- and cognition-based models (e.g., (Andersen, 1985), (Hale and Burgoon, 1984)), which focus on the communicative purposes of the interactants and on the meaning that the behavioral patterns convey. While adaptation happens mainly unconsciously, the process of interpersonal adaptation may also be strategic and conscious (Giles et al., 1991; Gallois et al., 2005).

The majority of these theories have been studied by Burgoon and others (Burgoon et al., 2007). In particular, they examined fifteen previous models and considered the most important conclusions from the previous empirical research. From this analysis, they came up with a broader theory, the interaction adaptation theory (IAT). This theory states that we alter our behavior in response to the behavior of another person in conversations (Infante et al., 2010). IAT takes into account the complexities of interpersonal interactions by considering people’s needs, expectations, desires, and goals as precursors of their degree and form of adaptation. IAT is a communication theory made of multiple theories, which focuses on the sender’s and the receiver’s process and patterns.

Three main interrelated factors contribute to IAT. Requirements (Rs) refer to the individual beliefs about what is necessary in order to have a successful interaction. Rs are mainly driven by biological factors, such as survival, safety, and affiliation. Expectations (Es) refer to what people expect from the others based on social norms or knowledge coming from previous interactions. Es are mainly influenced by social factors. Finally, desires (Ds) refer to the individual’s goals and preferences about what to get out of the interaction. Ds are mainly influenced by person-specific factors, such as temperament or cultural norms. These three factors are used to predict an individual’s interactional position (IP). This variable derives from the combination of Rs, Es, and Ds and represents the individual’s behavioral predisposition that will influence how an interaction will work. The IP would not necessarily correspond to the partner’s actual behavior performed in the interaction (A). The relation between the IP and A will determine the type of adaptation during the interaction. For example, when the IP and A almost match, IAT predicts behavioral patterns such as reciprocity and convergence. When A is more negatively valenced than the IP, the model predicts compensation and avoiding behaviors.
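The relation between the IP and A described above can be illustrated with a toy numeric sketch. The valence scale in [-1, 1], the unweighted averaging of Rs, Es, and Ds, and the tolerance threshold are all illustrative assumptions; IAT itself does not prescribe a numeric formula, and only the two cases mentioned in the text are encoded.

```python
from dataclasses import dataclass


@dataclass
class InteractionalPosition:
    """Toy container for the three IAT factors, each on an assumed valence scale in [-1, 1]."""
    requirements: float  # Rs
    expectations: float  # Es
    desires: float       # Ds

    def value(self) -> float:
        # Assumption: an unweighted mean; IAT does not prescribe this formula.
        return (self.requirements + self.expectations + self.desires) / 3.0


def predicted_pattern(ip: InteractionalPosition, actual: float, tolerance: float = 0.1) -> str:
    """Map the relation between the IP and the partner's actual behavior A onto a pattern."""
    gap = actual - ip.value()
    if abs(gap) <= tolerance:
        return "reciprocity/convergence"   # the IP and A almost match
    if gap < 0:
        return "compensation/avoidance"    # A is more negatively valenced than the IP
    return "not covered by this sketch"    # A more positive than the IP: not detailed in the text


ip = InteractionalPosition(requirements=0.4, expectations=0.5, desires=0.6)
print(predicted_pattern(ip, actual=0.45))
print(predicted_pattern(ip, actual=-0.2))
```

The single scalar valence is a deliberate simplification that keeps the comparison between the IP and A explicit.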

In the work presented in this article, we rely on Burgoon’s IAT. Indeed, our adapting ECA has an interactional position (IP), resulting from its desires (Ds) and expectations (Es). In particular, the agent’s desire (D) is to maximize the user’s experience, and its expectations (Es) are about the user’s reactions to its behaviors. In our different models of adaptation mechanisms, the agent’s desire (D) refers either to giving the best impression to the user or to maximizing the user’s engagement (see Section 4). Consequently, the expectations (Es) refer to the user’s reaction reflecting their impressions or engagement level in response to the agent’s behavior. The behavior that will be performed by the ECA depends on the relation between the agent’s IP and the user’s reaction (actual behavior A).

In addition, we explore different ways in which the ECA can adapt to the user’s reactions. On the one hand, we focus on theories that consider adaptive behaviors more broadly than a mere matching, that is, adaptation as responding in appropriate ways to a partner. The ECA will choose its behaviors according to the effect they have on the user’s experience (see Section 7). In Study 2 (see Section 8), our adaptive agent follows the same perspective but by adapting its communicative strategies. On the other hand, we try to simulate a more unconscious and automatic process working at a motoric level; the agent adapts at a signal level (see Study 3, Section 9).

3 State of the Art

In this section, we present an overview of existing models that focused on adapting ECAs’ behavior according to the user’s behavior in order to enhance the interaction and the user’s experience along different dimensions such as engagement, rapport, interest, liking, etc. These existing models predicted and generated different forms of adaptation, such as backchannels, mimicry, and voice adaptation, and were applied on virtual agents or robots.

Several works were interested in understanding the impact of adaptation on the user’s engagement and rapport building. Some of them did so through the production of backchannels. Huang et al. (2010) developed an ECA that was able to produce backchannels to reinforce the building of rapport with its human interlocutor. The authors used conditional random fields (CRFs) (Lafferty et al., 2001) to automatically learn when listeners produce visual backchannels. The prediction was based on three features: prosody (e.g., pause and pitch), lexical (spoken words), and gaze. Using this model, the ECA was perceived as more natural; it also created more rapport with its interlocutor during the interaction. Schröder et al. (2015) developed a sensitive artificial listener that was able to produce backchannels. They developed a model that predicted when an ECA should display a backchannel and with which intention. The backchannel could be either a smile, a nod, or a vocalization, or an imitation of a human’s smile and head movement. Participants who interacted with an ECA displaying backchannels were more engaged than they were when no backchannels were shown.

Other works focused on modeling ECAs that were able to mimic their interlocutors’ behaviors. Bailenson and Yee (2005) studied the social influence of mimicry during human–agent interaction (they referred to this as the chameleon effect). The ECA mimicked the user’s head movements with a delay of up to 4 s. An ECA showing mimicry was perceived as more persuasive and more positive than an ECA showing no mimicry at all. Raffard et al. (2018) also studied the influence of ECAs mimicking their interlocutors’ head and body posture with some delay (below 4 s). Participants with schizophrenia and healthy participants interacted with an ECA that either mimicked them or not. Both groups showed higher behavior synchronization and reported an increase in rapport in the mimicry condition. Another study involving mimicry was proposed by Verberne et al. (2013) in order to evaluate whether an ECA mimicking the user’s head movements would be liked and trusted more than a non-mimicking one. This research question was investigated by running two experiments in which participants played a game involving drivers handing over the car control to the ECA. While results differed depending on the game, the authors found that liking and trust were higher for a mimicking ECA than for a non-mimicking one.
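The delayed mimicry described above can be pictured as a simple delay line over the user's head pose. The sketch below is only an illustration: the (yaw, pitch) representation, the frame rate, and the class name `DelayedMimicry` are assumptions, and none of the cited systems is claimed to be implemented this way.

```python
from collections import deque
from typing import Deque, Optional, Tuple

HeadPose = Tuple[float, float]  # (yaw, pitch) in degrees; hypothetical representation


class DelayedMimicry:
    """Replays the user's head pose after a fixed delay (a plain delay line)."""

    def __init__(self, delay_s: float, frame_rate_hz: float) -> None:
        # Number of frames that must elapse before a pose is replayed.
        self.delay_frames = int(round(delay_s * frame_rate_hz))
        self.buffer: Deque[HeadPose] = deque()

    def step(self, user_pose: HeadPose) -> Optional[HeadPose]:
        """Store the current user pose; return the pose seen delay_frames ago, if any."""
        self.buffer.append(user_pose)
        if len(self.buffer) > self.delay_frames:
            return self.buffer.popleft()
        return None  # not enough history yet: the agent keeps its default pose


# Usage with an assumed 1 Hz frame rate and a 4 s delay.
mimic = DelayedMimicry(delay_s=4.0, frame_rate_hz=1.0)
outputs = [mimic.step((float(t), 0.0)) for t in range(6)]
print(outputs)
```

With these settings, the first four calls return `None` and the fifth returns the pose observed at the first frame.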

Reinforcement learning methods for optimizing the agent’s behaviors according to the user’s preference have been used in different works. For example, Liu et al. (2008) endowed a robot with the capacity to detect, in real time, the affective states (liking, anxiety, and engagement) of children with autism spectrum disorder and to adapt its behavior to the children’s preferences of activities. The detection of children’s affective states was done by exploiting their physiological signals. A large database of physiological signals was explored to find their interrelation with the affective states of the children. Then, an SVM-based recognizer was trained to match the children’s affective state to a set of physiological features. Finally, the robot learned the activities that the children preferred to do at a moment based on the predicted liking level of the children using QV-learning (Wiering, 2005). The proposed model led to an increase in the reported liking level of the children toward the robot. Ritschel et al. (2017) studied the influence of the agent’s personality on the user’s engagement. They proposed a reinforcement learning model based on social signals for adapting the personality of a social robot to the user’s engagement level. The user’s engagement was estimated from their multimodal social signals such as gaze direction and posture. The robot adapted its linguistic style by generating utterances with different degrees of extroversion using a natural language generation approach. The robot that adapted its personality through its linguistic style increased the user’s engagement, but the degree of the user’s preference toward the robot depended on the ongoing task. Later on, the authors applied a similar approach to build a robot that adapts to the sense of humor of its human interlocutor (Weber et al., 2018).
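For readers unfamiliar with QV-learning (Wiering, 2005), the following tabular sketch shows its core update: a state-value function V is learned by temporal-difference learning, and the action values Q are updated toward a target built from V. The state and action names, learning rates, and reward value are hypothetical, and this is not the implementation used by Liu et al. (2008).

```python
from collections import defaultdict
from typing import DefaultDict, Hashable, Tuple


class QVLearner:
    """Minimal tabular QV-learning without eligibility traces."""

    def __init__(self, alpha: float = 0.5, beta: float = 0.5, gamma: float = 0.9) -> None:
        self.alpha = alpha  # learning rate for Q
        self.beta = beta    # learning rate for V
        self.gamma = gamma  # discount factor
        self.v: DefaultDict[Hashable, float] = defaultdict(float)
        self.q: DefaultDict[Tuple[Hashable, Hashable], float] = defaultdict(float)

    def update(self, state: Hashable, action: Hashable, reward: float, next_state: Hashable) -> None:
        # Both updates share the same target, computed from V of the next state.
        target = reward + self.gamma * self.v[next_state]
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])
        self.v[state] += self.beta * (target - self.v[state])


# Hypothetical example: a reward of 1.0 stands in for a predicted liking level.
learner = QVLearner()
learner.update(state="bored", action="switch_activity", reward=1.0, next_state="engaged")
print(learner.q[("bored", "switch_activity")], learner.v["bored"])
```

Unlike Q-learning, the Q update here bootstraps from V rather than from the maximum over next-state action values.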

Several works have been conducted in the domain of education where an agent, either physical (a robot) or virtual (an ECA), adapted to the learner’s behavior. These works reported that adaptation is generally linked with an increase in the learner’s engagement and performance. For example, Gordon et al. (2016) developed a robot acting as a tutor for children learning a second language. To favor learning, the robot adapted its behaviors to optimize the level of the children’s engagement, which was computed from their facial expressions. A reinforcement learning algorithm was applied to compute the robot’s verbal and nonverbal behavior. Children showed higher engagement and learned more second-language words with the robot that adapted its behaviors to the children’s facial expression than they did with the nonadaptive robot. Woolf et al. (2009) manually designed rules to adapt the facial expressions of a virtual tutor according to the student’s affective state (e.g., frustrated, bored, or confused). For example, if the student was delighted or sad, the tutor might look pleased or sad, respectively. Results showed that when the virtual tutor adapted its facial expressions in response to the student’s, the student maintained higher levels of interest and showed reduced levels of boredom when interacting with the tutor.

Other works looked at adapting the activities undertaken by an agent during an interaction to enhance knowledge acquisition and reinforce engagement. In the study by Ahmad et al. (2017), a robot playing games with children was able to perform three different types of adaptations, game-based, emotion-based, and memory-based, which relied, respectively, on the following: 1) the game state, 2) emotion detection from the child’s facial expressions, and 3) face recognition mechanisms and remembering the child’s performance. In the first category of adaptation, a decision-making mechanism was used to generate a supporting verbal and nonverbal behavior. For example, if the child performed well, the robot said “Wow, you are playing extra-ordinary” and showed positive gestures such as a thumbs-up. The emotion-based adaptation mapped the child’s emotions to a set of supportive dialogues. For example, when detecting the emotion of joy, the robot said, “You are looking happy, I think you are enjoying the game.” For memory adaptation, the robot adapted its behavior after recognizing the child and retrieving the child’s game history such as their game performance and results. Results highlighted that emotion-based adaptation resulted in the highest level of social engagement compared to memory-based adaptation. Game adaptation did not result in maintaining long-term social engagement. Coninx et al. (2016) proposed an adaptive robot that was able to change activities during an interaction with children suffering from diabetes. The aim of the robot was to reinforce the children’s knowledge with regard to managing their disease and well-being. Three activities were designed to approach the diabetes-learning problem from different perspectives. Depending on the children’s motivation, the robot switched between the three proposed activities. Adapting activities in the course of the interaction led to a high level of children’s engagement toward the robot. Moreover, this approach seemed promising for setting up a long-term child–robot relationship.

In a task-oriented interaction, Hemminghaus and Kopp (2017) presented a model to adapt the social behavior of an assistive robot. The robot could predict when and how to guide the attention of the user, depending on the interaction contexts. The authors developed a model that mapped interactional functions such as motivating the user and guiding them onto low-level behaviors executable by the robot. The high-level functions were selected based on the interaction context and the attentive and emotional states of the user. Reinforcement learning was used to predict the mapping of these functions onto lower-level behaviors. The model was evaluated in a scenario in which a robot assisted the user in solving a memory game by guiding their attention to the target objects. Results showed that users were able to solve the game faster with the adaptive robot.

Other works focused on voice adaptation during social interaction. Voice adaptation is based on acoustic–prosodic entrainment that occurs when two interactants adapt their manner of speaking, such as their speaking rate, tone, or pitch, to each other’s. Levitan (2013) found that voice adaptation improved spoken dialogue systems’ performance and the user’s satisfaction. Lubold et al. (2016) studied the effect of voice adaptation on social variables such as rapport and social presence. They found that social presence was significantly higher with a social voice-adaptive speech interface than with purely social dialogue.

Most previous works measured the influence of the implemented adaptation mechanisms on the user’s engagement through questionnaires, but they did not include engagement as a factor in the adaptation mechanisms themselves. In our first two studies reported in this article, we aimed to learn the agent’s multimodal behaviors and conversational strategies to dynamically optimize the user’s engagement and their impressions of the ECA, by taking them as input during the learning process.

Moreover, in most existing works, the agent’s predicted behavior depended exclusively on the user’s behavior and ignored the interaction loop between the ECA and the user. In our third study, we took into account this interaction loop, that is, our model takes as input both the user’s and the agent’s past behavior and predicts the agent’s next behavior. Another novelty presented in our work is to include the agent’s communicative intentions along with its adaptive behaviors.
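The interaction loop mentioned above can be sketched as follows: at each step, a window of past frames from both interlocutors is concatenated into one input, and the predicted agent frame is appended to the agent's own history so that it influences later predictions. The feature layout, the window size, and the stand-in predictor are assumptions made for illustration only; the actual model is described in Section 9.

```python
from typing import Callable, List, Sequence

Frame = List[float]  # one time step of behavior features (hypothetical layout)


def build_input(user_hist: Sequence[Frame], agent_hist: Sequence[Frame], window: int) -> List[float]:
    """Concatenate the last `window` frames of both interlocutors into one vector."""
    if len(user_hist) < window or len(agent_hist) < window:
        raise ValueError("not enough history for the requested window")
    features: List[float] = []
    for user_frame, agent_frame in zip(user_hist[-window:], agent_hist[-window:]):
        features.extend(user_frame)
        features.extend(agent_frame)
    return features


def interaction_step(
    user_hist: List[Frame],
    agent_hist: List[Frame],
    predictor: Callable[[List[float]], Frame],
    window: int = 2,
) -> Frame:
    """Predict the agent's next frame and feed it back into the agent's history."""
    next_agent_frame = predictor(build_input(user_hist, agent_hist, window))
    agent_hist.append(next_agent_frame)  # closes the loop for the next step
    return next_agent_frame


# Stand-in predictor (NOT a learned model): the mean of all input features.
def mean_predictor(x: List[float]) -> Frame:
    return [sum(x) / len(x)]


user_history = [[0.0], [1.0]]
agent_history = [[0.0], [0.0]]
next_frame = interaction_step(user_history, agent_history, mean_predictor)
print(next_frame, len(agent_history))
```

Replacing `mean_predictor` with a trained sequence model would leave the loop itself unchanged.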

4 Dimensions of Study

In our studies, we focused on adaptation in human–agent interaction by using the user’s reactions as the input for the agent’s adaptation. In particular, we took into account two main dimensions, which are the user’s impressions of the ECA and the user’s engagement during the interaction.

These two dimensions play an important role during human–agent interactions, as they influence the acceptability of the ECA by the user and the willingness to interact with it again (Bergmann et al., 2012; Bickmore et al., 2013; Cafaro et al., 2016). In order to engage the user, it is important that the ECA displays appropriate socio-emotional behaviors (Pelachaud, 2009). In our case, we were interested in whether and how the ECA could affect the user’s engagement by managing the impressions it gave to them. In particular, we considered the user’s impressions of the two main dimensions of social cognition, that is, warmth and competence (Fiske et al., 2007). Warmth includes traits like friendliness, trustworthiness, and sociability, while competence includes traits like intelligence, agency, and efficacy. In human–human interaction, several studies have shown the role of nonverbal behaviors in conveying different impressions of warmth and competence. In particular, communicative gestures, arm rest poses, and smiling behavior have been found to be associated with different degrees of warmth and/or competence (Duchenne, 1990; Cuddy et al., 2008; Maricchiolo et al., 2009; Biancardi et al., 2017a). In the context of human–agent interaction, we can control and adapt the nonverbal behaviors of the ECA during the flow of the interaction.

Following Burgoon’s IAT theoretical model, our adapting ECA thus has the desire D to maintain the user’s engagement (or impressions) during the interaction. Since the ECA aims to be perceived as a social entity by its human interlocutor, the agent’s expectancy E is that adaptation can enhance the interaction experience. In our work, we are interested in whether adapting at a behavioral or conversational level (i.e., the agent’s warmth and competence impressions) and/or at a low level (i.e., the agent’s head and eye rotation and lip corner movement) could affect the user’s engagement. Even though the impact of the agent’s adaptation on the user’s engagement has already been the object of much research (see Section 3), here we use the user’s engagement as a real-time variable given as input for the agent’s adaptation.

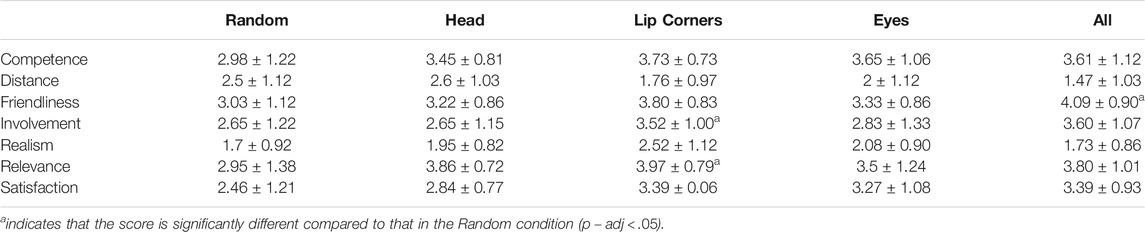

5 Architecture

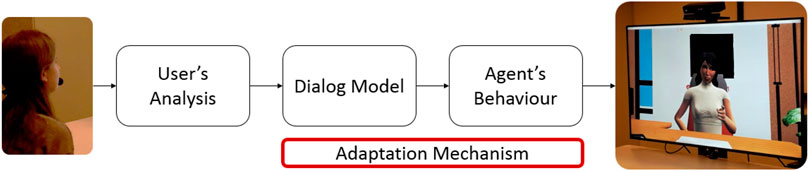

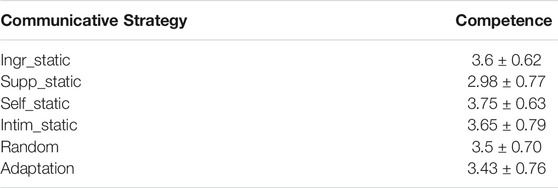

In this section, we present the architecture we conceived to endow the ECA with the capability of adapting its behavior to the user’s reactions in real time. The architecture consists of several modules (see Figure 1). One module extracts information about the user’s behaviors using a Kinect and a microphone. This information is interpreted in terms of speech (what the user has uttered) and the user’s state (e.g., their engagement in the interaction). This interpreted information is sent to a dialogue manager that computes the communicative intentions of the ECA, that is, what it should say and how. Finally, the animation of the ECA is computed on the fly and played in real time. The agent’s adaptation mechanisms are also taken into account when computing its verbal and nonverbal behaviors. The architecture is general enough to allow for the customization of its different modules according to the different adaptation mechanisms and goals of the agent.

FIGURE 1. System architecture: in the User’s Analysis module, the user’s nonverbal and verbal signals are extracted and interpreted and the user’s reaction is sent to the Dialogue Model module, which computes the dialogue act to be communicated by the ECA. The Agent’s Behavior module instantiates the dialogue act into multimodal behaviors to be displayed by the ECA. The Adaptation Mechanism module adapts the agent’s behavior to the user’s behavior. Its placement in the architecture depends on the specific adaptation mechanism that is implemented.

In more detail, the four main parts of the architecture are as follows:

1) User’s Analysis: the EyesWeb platform (Camurri et al., 2004) allows the real-time extraction of the following: (a) the user’s nonverbal signals (e.g., head and trunk rotation), starting from the Kinect depth camera skeleton data; (b) the user’s facial muscular activity (action units, or AUs; Ekman et al., 2002), by running the OpenFace framework (Baltrušaitis et al., 2016); (c) the user’s gaze; and (d) the user’s speech, by executing the Microsoft Speech Platform.

These low-level signals are processed using EyesWeb and other external tools, such as machine learning pretrained models (Dermouche and Pelachaud, 2019; Wang et al., 2019), to extract high-level features about the user, such as their level of engagement.

2) Dialogue Model: in this module, the dialogue manager Flipper (van Waterschoot et al., 2018) selects the dialogue act that the agent will perform and the communicative intention of the agent (i.e., how to perform that dialogue act).

3) Agent’s Behavior: the agent’s behavior generation is performed using GRETA, a software platform supporting the creation of socio-emotional embodied conversational agents (Pecune et al., 2014). The Agent’s Behavior module is made of two main submodules: the Behavior Planner, which receives the communicative intentions of the ECA from the Dialogue Model module as input and instantiates them into multimodal behaviors, and the Behavior Realizer, which transforms the multimodal behaviors into facial and body animations to be displayed on a graphics screen.

4) Adaptation Mechanism: since the ECA can adapt its behaviors at different levels, the Adaptation Mechanism module is implemented in different parts of the architecture, according to the type of adaptation that the ECA performs. That is, the adaptation can affect the communicative intentions of the ECA, or it can occur during the behavior realization at the animation level. In the first two models presented in this article, the Adaptation Mechanism module is connected to the Dialogue Model module, while for the third model, it is connected to the Agent’s Behavior module.
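The data flow between the four parts listed above, and the two possible attachment points of the Adaptation Mechanism, can be summarized in a minimal sketch. All function names, data fields, and rules below are hypothetical stand-ins; they do not reproduce the EyesWeb, Flipper, or GRETA interfaces.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class UserState:
    """Output of the User's Analysis step (hypothetical fields)."""
    speech: str
    engagement: float  # assumed scalar in [0, 1]


@dataclass
class Intention:
    """Output of the Dialogue Model: what to say and how."""
    dialogue_act: str
    style: str


Behavior = Dict[str, float]  # hypothetical animation parameters


def dialogue_model(user: UserState) -> Intention:
    # Toy rule standing in for the dialogue manager.
    act = "answer_question" if user.speech.endswith("?") else "continue_tour"
    return Intention(dialogue_act=act, style="neutral")


def behavior_planner(intention: Intention) -> Behavior:
    # Toy instantiation of an intention into multimodal behavior parameters.
    smile = 0.8 if intention.style == "warm" else 0.2
    return {"smile": smile, "head_yaw": 0.0}


def run_turn(
    user: UserState,
    adapt_intention: Optional[Callable[[Intention, UserState], Intention]] = None,
    adapt_behavior: Optional[Callable[[Behavior, UserState], Behavior]] = None,
) -> Behavior:
    """One turn; adaptation may hook in at the dialogue level or at the behavior level."""
    intention = dialogue_model(user)
    if adapt_intention is not None:   # attachment used by the first two models
        intention = adapt_intention(intention, user)
    behavior = behavior_planner(intention)
    if adapt_behavior is not None:    # attachment used by the third model
        behavior = adapt_behavior(behavior, user)
    return behavior


def warm_if_disengaged(intention: Intention, user: UserState) -> Intention:
    style = "warm" if user.engagement < 0.5 else intention.style
    return Intention(dialogue_act=intention.dialogue_act, style=style)


user = UserState(speech="What is this exhibit?", engagement=0.3)
print(run_turn(user))
print(run_turn(user, adapt_intention=warm_if_disengaged))
```

Keeping the two hooks optional mirrors the idea that the same pipeline is reused while the adaptation module moves between the Dialogue Model and the Agent's Behavior module.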

6 Scenario

Each type of adaptation has been investigated by running human–agent interaction experiments at the Science Museum of Paris. In the scenario conceived for these experiments, the ECA, called Alice, played the role of a virtual guide of the museum.

The experiment room included three areas: a questionnaire space, with a desk, a laptop, and a chair; an interaction space, with a big TV screen displaying the ECA, a Kinect Two placed on the top of the TV screen, and a black tent behind the chair where the participant sat; and a control space, separated from the rest of the room by two screens, with a desk and the computer running the system architecture. The interaction space is shown in Figure 2.

FIGURE 2. Interaction space in the experiment room. The participants were sitting in front of the TV screen displaying the ECA. On the left, two screens separated the interaction space from the control space.

The experiments were completed in three phases as follows:

1) before the interaction began, the participant sat at the questionnaire space, read and signed the consent form, and filled out the first questionnaire (NARS, see below). Then they moved to the interaction space, where the experimenter gave the last instructions [5 min];

2) during the interaction phase, the participant stayed right in front of the TV screen, between it and the black tent. They wore a headset and were free to interact with the ECA as they wanted. During this phase, the experimenter stayed in the control space, behind the screens [3 min]; and

3) after the interaction, the participant came back to the questionnaire space and filled out the last questionnaires about their perception of the ECA and of the interaction. After that, the experimenter proceeded with the debriefing [5 min].

Before the interaction with the ECA, we asked participants to fill out a questionnaire about their a priori about virtual characters (NARS); an adapted version of the NARS scale from the study by Nomura et al. (2006) was used. Items of the questionnaire included, for example, how much participants would feel relaxed talking with a virtual agent, or how much they would like the idea that virtual agents made judgments.

The interaction with the ECA lasted about 3 min. It included 26 steps. A step included one dialogue act played by the ECA and the participant’s potential reaction/answer. The dialogue scenario was built so that the ECA drove the discussion. The virtual guide provided information on an exhibit that was currently happening in the museum. It also asked some questions about participants’ preferences. We purposely limited the possibility for participants to take the lead in the conversation, as we wanted to avoid any error due to automatic speech understanding. More details about the dialogue model can be found in the study by Biancardi et al. (2019a).

7 Study 1: Adaptation of Agent’s Behaviors

At this step, we aim to investigate adaptation at a high level, understood as the convergence of the agent’s behaviors according to the user’s impressions of the ECA.

The goal of this first model is to make the ECA learn the verbal and nonverbal behaviors to be perceived as warm or competent by measuring and using the user’s impressions as a reward.

7.1 Architecture

The general architecture described in Section 5 has been modified in order to contain a module for the detection of the user’s impressions and a specific set of verbal and nonverbal behaviors from which the ECA could choose.

The modified architecture of the system is depicted in Figure 3. In the following section, we give more details about the modified modules.

FIGURE 3. Modified system architecture used in Study 1. In particular, the User’s Analysis module contains the model to detect the user’s impressions from facial signals. The Impressions Management module contains the Q-learning algorithm.

7.1.1 User’s Analysis: User’s Impression Detection

The user’s impressions can be detected from their nonverbal behaviors, in particular, their facial expressions. The User’s Analysis module is integrated with a User’s Impression Detection module that takes as input a stream of the user’s facial action units (AUs) (Ekman et al., 2002) and outputs the potential user’s impressions about the level of warmth (or competence) of the ECA.

A trained multilayer perceptron (MLP) regression model is implemented in this module to detect the impressions formed by users about the ECA. The MLP model was previously trained using a corpus including face video recordings and continuous self-report annotations of warmth and competence given by participants watching the videos of the NoXi database (Cafaro et al., 2017). Since the self-report annotations were considered separately, the MLP model was trained twice, once for warmth and once for competence. More details about this model can be found in the study by Wang et al. (2019).

7.1.2 Adaptation Mechanism: Impression Management

In this model, the adaptation of the ECA concerns the impressions of warmth and competence given to the user. The inputs of the Adaptation Mechanism module are the dialogue act to be realized (coming from the Dialogue Model module) and the user’s impression of the agent’s warmth or competence (coming from the User’s Analysis module). The output is a combination of behaviors to realize the dialogue act, chosen from a set of possible verbal and nonverbal behaviors to perform.

To be able to change the agent’s behavior according to the detected participant’s impressions, a machine learning algorithm is applied. We follow a reinforcement learning approach to learn which actions the ECA should take (here, verbal and nonverbal behaviors) in response to some events (here, the user’s detected impressions). We rely on a Q-learning algorithm for this step. More details about it can be found in the study by Biancardi et al. (2019b).

The set of verbal and nonverbal behaviors, from which the Q-learning algorithm selects a combination to send to the Behavior Planner of the Agent’s Behavior module, includes the following:

• Type of gestures: the ECA could perform ideational (i.e., related to the content of the speech) or beat (i.e., marking speech rhythm, not related to the content of the speech) gestures or no gestures.

• Arm rest poses: in the absence of any kind of gesture, these rest poses could be performed by the ECA: akimbo (i.e., hands on the hips), arms crossed on the chest, arms along its body, or hands crossed on the table.

• Smiling: during the animation, the ECA could decide whether or not to perform smiling behavior, characterized by the activation of AU6 (cheek raiser) and AU12 (lip corner puller).

• Verbal behavior: the ECA could modify the use of you- and we-words, the level of formality of the language, and the length of the sentences. These features have been found to be related to different impressions of warmth and competence (Pennebaker, 2011; Callejas et al., 2014).
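The selection of a behavior combination by Q-learning can be illustrated with a minimal tabular sketch. This is not the implementation used in the study (see Biancardi et al., 2019b for that); the reduced action space, the class name `QLearner`, the use of the dialogue act as the state, and the values of the learning rate, discount factor, and exploration rate are all assumptions made for illustration.

```python
import itertools
import random

# Hypothetical, reduced action space: one option per behavior category.
GESTURES = ["ideational", "beat", "none"]
SMILE = [True, False]
ACTIONS = list(itertools.product(GESTURES, SMILE))


class QLearner:
    """Tabular Q-learning with epsilon-greedy exploration (illustrative)."""

    def __init__(self, actions, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
        self.actions = actions
        self.alpha = alpha      # learning rate (assumed value)
        self.gamma = gamma      # discount factor (assumed value)
        self.epsilon = epsilon  # exploration rate (assumed value)
        self.q = {}             # (state, action) -> estimated value
        self.rng = random.Random(seed)

    def value(self, state, action):
        # Unseen state-action pairs default to a value of zero.
        return self.q.get((state, action), 0.0)

    def choose(self, state):
        # Explore with probability epsilon, otherwise pick the best-known action.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.value(state, a))

    def update(self, state, action, reward, next_state):
        # Standard Q-learning update toward reward + discounted best next value.
        best_next = max(self.value(next_state, a) for a in self.actions)
        target = reward + self.gamma * best_next
        old = self.value(state, action)
        self.q[(state, action)] = old + self.alpha * (target - old)
```

In such a sketch, the reward passed to `update` would be the warmth (or competence) impression detected by the User’s Analysis module after the ECA performed the chosen combination.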

7.2 Experimental Design

The adaptation model described in Subsection 7.1.2 has been evaluated by using the scenario described in Section 6. Here, we describe the experimental variables manipulated and measured during the experiment.

7.2.1 Independent Variable

The independent variable manipulated in this experiment, called Model, concerns the use of the adaptation model and includes three conditions:

• Warmth: when the ECA adapts its behaviors according to the user’s impressions of the agent’s warmth, with the goal to maximize these impressions;

• Competence: when the ECA adapts its behaviors according to the user’s impressions of the agent’s competence, with the goal to maximize these impressions; and

• Random: when the adaptation model is not exploited and the ECA randomly chooses its behavior, without considering the user’s reactions.

7.2.2 Measures

The dependent variables measured after the interaction with the ECA are as follows:

• User’s perception of the agent’s warmth (w) and competence (c): participants were asked to rate their level of agreement about how well each adjective described the ECA (four adjectives concerning warmth and four concerning competence, according to Aragonés et al. (2015)). Even though only one dimension was manipulated at a time, we measured the user’s impressions about both of them in order to check whether the manipulation of one dimension can affect the impressions about the other (as already found in the literature (Rosenberg et al., 1968; Judd et al., 2005; Yzerbyt, 2005)).

• User’s experience of the interaction (exp): participants were asked to rate their level of agreement about a list of items adapted from the study by Bickmore et al. (2011).

7.2.3 Hypotheses

We hypothesized the following scenarios:

H1: when the ECA is in the Warmth condition, that is, when it adapts its behaviors according to the user’s impressions of the agent’s warmth, it will be perceived as warmer than it is in the Random condition;

H2: when the ECA is in the Competence condition, that is, when it adapts its behaviors according to the user’s impressions of the agent’s competence, it will be perceived as more competent than it is in the Random condition;

H3: when the ECA adapts its behaviors, that is, in either the Warmth or Competence conditions, this will improve the user’s experience of the interaction, compared to that in the Random condition.

7.3 Analysis and Results

The visitors (24 women and 47 men) of the Carrefour Numérique of the Cité des sciences et de l’industrie of Paris were invited to take part in our experiment. 28% of them were in the range of 18–25 years old, 18% were in the range of 25–36, 28% were in the range of 36–45, 15% were in the range of 46–55, and 11% were over 55 years old. Participants were randomly assigned to each condition, with 25 participants assigned to the Warmth condition, 27 to the Competence condition, and 19 to the Random one.

We computed Cronbach’s alphas on the scores of the four items about w and the four about c: good reliability was found for both (

Since NARS scores got an acceptable degree of reliability (

7.3.1 Warmth Scores

The w means were normally distributed (the Shapiro test’s

No effects of age or sex were found. A main effect of NARS was found (

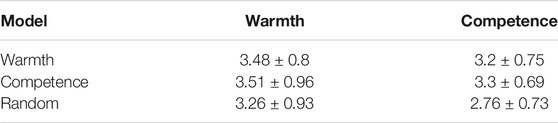

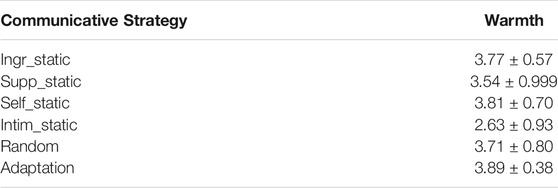

Although we did not find any significant effect, w scores were, on average, higher in the Warmth and Competence conditions than in the Random condition. The mean and standard error of w scores are shown in Table 1.

7.3.2 Competence Scores

The c means were normally distributed (the Shapiro test’s

We did not find any effect of age, sex, or NARS. A significant main effect of Model was found (

7.3.3 User’s Experience Scores

The exp items’ means were not normally distributed, but their variances were homogeneous (the Bartlett tests’ ps for each variable were

Even if we did not find any statistically significant effect, on average, items’ scores tended to be higher in the Warmth and Competence conditions than in the Random condition.

7.3.4 Performance of the Adaptation Model

The Q-learning algorithm ended up selecting (for each participant) one specific combination of verbal and nonverbal behaviors from the

7.4 Discussion

The results show that participants’ ratings tended to be higher in the conditions in which the ECA used the adaptation model than when it selected its behavior randomly. In particular, the results indicate that we successfully manipulated the impression of competence when using our adaptive ECA. Indeed, higher competence was reported in the Competence condition than in the Random one. No a priori effect was found.

On the other hand, we found an a priori effect on warmth but no significant effect of our conditions (just a positive trend for both the Competence and Warmth conditions). People with high a priori about virtual agents gave higher ratings about the agent’s warmth than people with low a priori.

We could hypothesize some explanations for these results. First, we did not get the effects of our experimental conditions on warmth ratings since people were more anchored into their a priori, and it was hard to change them. Indeed, people’s expectancies have already been found to have an effect on the user’s judgments about ECAs (Burgoon et al., 2016; Biancardi et al., 2017b; Weber et al., 2018). The fact that we found this effect only for warmth judgments could be related to the primacy of warmth judgments over competence (Wojciszke and Abele, 2008). Then, it could have been easier to elicit impressions of competence since we found no a priori effect on competence. This could be explained as follows: people might expect that it is easier to implement knowledge in an ECA rather than social behaviors.

The user’s experience of the interaction was not affected by the agent’s adaptation. During the debriefing, many participants expressed their disappointment about the agent’s appearance, the quality of the voice synthesizer and the animation, described as “disturbing” and “creepy,” and the limitations of the conversation (participants could only answer the ECA’s questions). These factors could have reduced any other effect of the independent variables. Indeed, the agent’s appearance and the structure of the dialogue were the same across conditions. If participants mainly focused on these elements, they could have paid less attention to the ECA’s verbal and nonverbal behavior (the variables that were manipulated and that we were interested in), which thus did not manage to affect their overall experience of the interaction.

8 Study 2: Adaptation of Communicative Strategies

At this step, we investigate adaptation at a higher level than the previous one, namely, the communicative strategies of the ECA. In particular, we focus on the agent’s self-presentational strategies, that is, different techniques to convey different levels of warmth and competence toward the user (Jones and Pittman, 1982). Each strategy is realized in terms of the verbal and nonverbal behavior of the ECA, following Pennebaker (2011), Callejas et al. (2014), and Biancardi et al. (2017a).

While in the previous study, we investigated whether and how adaptation could affect the user’s impressions of the agent, we here focus on whether and how adaptation can affect the user’s engagement during the interaction.

The goal of this second model is thus to make the ECA learn the communicative strategies that improve the user’s engagement, by measuring and using the user’s engagement as a reward.

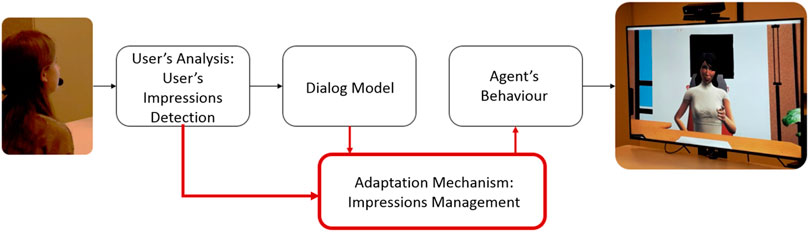

8.1 Architecture

The general architecture described in Section 5 has been modified in order to contain a module for the detection of the user’s engagement and a communicative intention planner for the choice of the agent’s self-presentational strategy.

The modified architecture of the system is depicted in Figure 4. In the following subsection, we give more details about the modified modules.

FIGURE 4. Modified system architecture used in Study 2. In particular, the User’s Analysis module contains the model to detect the user’s engagement from facial and head/trunk signals. The Communicative Intention module uses reinforcement learning to select the agent’s self-presentational strategy.

8.1.1 User’s Analysis: User’s Engagement Detection

The User’s Analysis module is integrated with a User’s Engagement Detection module that continuously computes the overall user’s engagement at the end of every speaking turn. The computational model of the user’s engagement is based on the detection of facial signals and head/trunk signals, which are indicators of engagement. In particular, smiling is usually considered an indicator of engagement, as it may show that the user is enjoying the interaction (Castellano et al., 2009). Eyebrows are equally important: for example, Corrigan et al. (2016) claimed that “frowning may indicate effortful processing suggesting high levels of cognitive engagement.” Head/trunk signals are detected in order to measure the user’s attention level. According to Corrigan et al. (2016), attention is a key aspect of engagement; an engaged user continuously gazes at relevant objects/persons during the interaction. We approximate the user’s gaze using the user’s head and trunk orientation.

8.1.2 Adaptation Mechanism: Communicative Intention Management

During its interaction with the user, the agent has the goal of selecting its self-presentational strategy (e.g., to communicate verbally and nonverbally a given dialogue act with high warmth and low competence). The agent can choose its strategy from a given set of four strategies inspired from Jones and Pittman’s taxonomy (Jones and Pittman, 1982):

• Ingratiation: the ECA has the goal to convey positive interpersonal qualities and elicit impressions of high warmth toward the user, without considering its level of competence;

• Supplication: the ECA has the goal to present its weaknesses and elicit impressions of high warmth and low competence;

• Self-promotion: the ECA has the goal to focus on its capabilities and elicit impressions of high competence, without considering its level of warmth; and

• Intimidation: the ECA has the goal to elicit impressions of high competence by decreasing its level of warmth.

The verbal behavior characterizing the different strategies is inspired by the works of Pennebaker (2011) and Callejas et al. (2014). In particular, we took into account the use of you- and we-words, the level of formality of the language, and the length of the sentences.

The choice of the agent’s nonverbal behavior is based on our previous studies (Biancardi et al., 2017a; Biancardi et al., 2017b). So, for example, if the current agent’s self-presentational strategy is Supplication and the next dialogue act to be spoken is introducing a topic, then the agent would say “I think that while you play there are captors that measure tons of stuffs!” accompanied by smiling and beat gestures. Conversely, if the current agent’s self-presentational strategy is Intimidation and the next dialogue act to be spoken is the same, then the agent would say “While you play at video games, several captors measure your physiological signals,” accompanied by ideational gestures without smiling.

To be able to change the agent’s communicative strategy according to the detected participant’s engagement, we applied a reinforcement learning algorithm to make the ECA learn what strategy to use. Specifically, a multiarmed bandit algorithm (Katehakis and Veinott, 1987) was applied. This algorithm is a simplified reinforcement learning setting that models agents evolving in an environment where they can perform several actions, each action being more or less rewarding for them. The choice of the action does not affect the state (i.e., what happens in the environment). In our case, the actions that the ECA could perform are the verbal and nonverbal behaviors corresponding to the self-presentational strategy that the ECA aims to communicate. The environment is the interaction with the user, while the state space is the set of dialogue acts used at each speaking turn. The choice of the action does not change the state (i.e., the dialogue act used during the current speaking turn); rather, it acts on how this dialogue act is realized by verbal and nonverbal behavior. More details about the multiarmed bandit function used in our model can be found in the study by Biancardi et al. (2019a).
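As a rough illustration of the bandit setting, the sketch below treats each self-presentational strategy as an arm and the engagement measured at the end of a speaking turn as the reward. The epsilon-greedy rule, the class name `EpsilonGreedyBandit`, and the exploration rate are assumptions chosen for brevity; the bandit function actually used is described in Biancardi et al. (2019a) and may differ.

```python
import random

STRATEGIES = ["ingratiation", "supplication", "self_promotion", "intimidation"]


class EpsilonGreedyBandit:
    """Multiarmed bandit with epsilon-greedy arm selection (illustrative)."""

    def __init__(self, arms, epsilon=0.1, seed=0):
        self.arms = list(arms)
        self.epsilon = epsilon                       # exploration rate (assumed)
        self.counts = {arm: 0 for arm in self.arms}  # times each arm was played
        self.means = {arm: 0.0 for arm in self.arms} # running mean reward per arm
        self.rng = random.Random(seed)

    def select(self):
        # Play every arm once before exploiting the estimates.
        untried = [a for a in self.arms if self.counts[a] == 0]
        if untried:
            return untried[0]
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)
        return max(self.arms, key=lambda a: self.means[a])

    def update(self, arm, reward):
        # Incremental update of the mean reward observed for this arm.
        self.counts[arm] += 1
        self.means[arm] += (reward - self.means[arm]) / self.counts[arm]
```

At each speaking turn, `select` would return the strategy used to realize the current dialogue act, and `update` would be called with the engagement value computed for that turn.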

8.2 Experimental Design

The adaptation model described in Section 8.1.2 was evaluated by using the scenario described in Section 6. Here, we describe the experimental variables manipulated and measured during the experiment.

8.2.1 Independent Variable

The design includes one independent variable, called Communicative Strategy, with six levels determining the way in which the ECA chooses the strategy to use:

1) Adaptation: the ECA uses the adaptation model and thus selects one self-presentational strategy at each speaking turn, by using the user’s engagement as a reward;

2) Random: the ECA chooses a random behavior at each speaking turn;

3) Ingr_static: the ECA always adopts the Ingratiation strategy during the whole interaction;

4) Suppl_static: the ECA always adopts the Supplication strategy during the whole interaction;

5) Self_static: the ECA always adopts the Self-promotion strategy during the whole interaction; and

6) Intim_static: the ECA always adopts the Intimidation strategy during the whole interaction.

8.2.2 Measures

The dependent variables measured after the interaction with the ECA are the same as those described in subsection 7.2.2.

In addition to these measures, during the interaction, for people who agreed to the audio recording of the experiment, we collected quantitative information about their verbal engagement, in particular, the polarity of the user’s answer when the ECA asked if they wanted to continue the discussion and the amount of verbal feedback produced by the user during a speaking turn.

8.2.3 Hypotheses

We hypothesized that each self-presentational strategy would elicit the right degree of warmth and competence, in particular, the following:

H1ingr: the ECA in the Ingr_static condition would be perceived as warm by users;

H1supp: the ECA in the Suppl_static condition would be perceived as warm and not competent by users;

H1self: the ECA in the Self_static condition would be perceived as competent by users; and

H1intim: the ECA in the Intim_static condition would be perceived as competent and not warm by users.

Then, we hypothesized the following scenarios:

H2a: an ECA adapting its self-presentational strategies according to the user’s engagement would improve the user’s experience, compared to a non-adapting ECA and

H2b: the ECA in the Adaptation condition would influence how it is perceived in terms of warmth and competence.

8.3 Analysis and Results

Seventy-five participants (30 females) took part in the evaluation, equally distributed among the six conditions. The majority of them were in the 18–25 or 36–45 age range and were native French speakers. In this section, we briefly report the main results of our analyses. A more detailed report can be found in the study by Biancardi et al. (2019a).

8.3.1 Warmth Scores

A 4 × 2 between-subjects ANOVA revealed a main effect of Communicative Strategy (

Table 2 shows the mean and SD of w scores for each level of Communicative Strategy. Multiple-comparison t-tests with Holm’s correction show that the w mean for Intim_static is significantly lower than that for all the others. As a consequence, the other conditions are rated as warmer than Intim_static. H1ingr and H1supp are thus validated, and H1intim and H2b are validated for the warmth component.
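For readers unfamiliar with Holm’s correction, the following sketch shows the standard step-down adjustment of a set of pairwise p-values. The function name `holm_adjust` is hypothetical, and any p-values passed to it in an example are placeholders, not values from this study.

```python
def holm_adjust(p_values):
    """Return Holm-adjusted p-values in the original order.

    The i-th smallest p-value (0-based rank i) is multiplied by (m - i),
    capped at 1, and forced to be non-decreasing along the sorted order.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted
```

A comparison is then declared significant when its adjusted p-value falls below the chosen alpha level.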

TABLE 2. Mean and standard deviation values of warmth scores for each level of Communicative Strategy. The mean score for Intim_static is significantly lower than that for all the other conditions.

8.3.2 Competence Scores

No significant results emerged from the analyses. When looking at the means of c for each condition (see Table 3), Suppl_static is the one with the lowest score, even if its difference with the other scores does not reach statistical significance (all p-values

TABLE 3. Mean and standard deviation values of competence scores for each level of Communicative Strategy. No significant differences among the conditions were found.

8.3.3 User’s Experience of the Interaction

Participants in the Ingr_static condition were more satisfied with the interaction than those in Suppl_static (

The exp scores are also affected by participants’ a priori about virtual agents (measured through the NARS questionnaire). In particular, participants who got high scores in the NARS questionnaire were more satisfied by the interaction (

Another interesting result concerns the effect of age on participants’ satisfaction (

On the whole, these results do not allow us to validate H2a, but the agent’s adaptation was found to have at least an effect on its level of warmth (H2b).

8.3.4 Verbal Cues of Engagement

During each speaking turn, the user was free to reply to the agent’s utterances. We consider as a user’s verbal feedback any type of verbal reply to the ECA, from a simple backchannel (e.g., “ok” and “mm”) to a longer response (e.g., giving an opinion about what the ECA said). In general, participants who did not give much verbal feedback (i.e., fewer than 13 replies to the agent’s utterances over all the speaking turns) answered positively to the ECA when it asked whether they wanted to continue to discuss with it, compared to the participants who gave more verbal feedback (

8.4 Discussion

First of all, regarding H1, the only statistically significant results concern the perception of the agent’s warmth. The ECA was rated as colder when it adopted the Intim_static strategy than in the other conditions. This supports the thesis of the primacy of the warmth dimension (Wojciszke and Abele, 2008), and it is in line with the positive–negative asymmetry effect described by Peeters and Czapinski (1990), who argued that negative information generally has a higher impact on person perception than positive information. In our case, when the ECA displayed cold (i.e., low warmth) behaviors (i.e., in the Intim_static condition), participants gave it significantly lower ratings of warmth. The other conditions (Ingr_static, Suppl_static, Self_static, Adaptation, and Random) elicited warmer impressions in the user, but no single strategy was better than the others in this regard. The fact that Self_static elicited the same level of warmth as the others reflected a halo effect (Rosenberg et al., 1968); the behaviors displayed to appear competent influenced its warmth perception in the same direction.

Regarding H2, the results do not validate our hypothesis (H2a) that the interaction would be improved when the ECA managed its impressions by adapting its strategy according to the user’s engagement. When analyzing scores for exp items, we found that participants were more satisfied by the interaction and liked the ECA more when the ECA wanted to be perceived as warm (i.e., in the Ingr_static condition) than when it wanted to be perceived as cold and competent (i.e., in the Intim_static condition). A hypothesis is that since the ECA was perceived as warmer in the Ingr_static condition, this could have positively influenced the ratings of the other items, like the user’s satisfaction. Concerning H2b, with regard to a possible effect of the agent’s adaptation on the user’s perception of its warmth and competence, it is interesting to see that when the ECA adapted its self-presentational strategy according to the user’s overall engagement, it was perceived as warm. This highlights a link between the agent’s adaptation, the user’s engagement, and a warm impression; the more the ECA adapted its behaviors, the more the user was engaged and the more they perceived the ECA as warm.

9 Study 3: Adaptation at a Signal Level

At this step, we are interested in low-level adaptation at the signal level. We aim to model how the ECA can adapt its signals to the user’s signals. Thus, we make the ECA predict the signals to display at each time step, according to those displayed by both the ECA and the user during a given time window. For the sake of simplicity, we consider a subset of signals, namely, lip corner movement (AU12), gaze direction, and head movement. To reach our aim, we follow a two-step approach. At first, we need to predict which signals that are due to adaptation to the user’s behaviors should be displayed by the ECA at each time step. The prediction of signal adaptation is learned on human–human interaction. The ECA ought to communicate its intentions to adapt to the user’s signals. Then, the second step of our approach consists in blending the predicted signals linked to the adaptation mechanism with the nonverbal behaviors corresponding to the agent’s communicative intentions. We describe our algorithm in further detail in subsection 9.1.2.

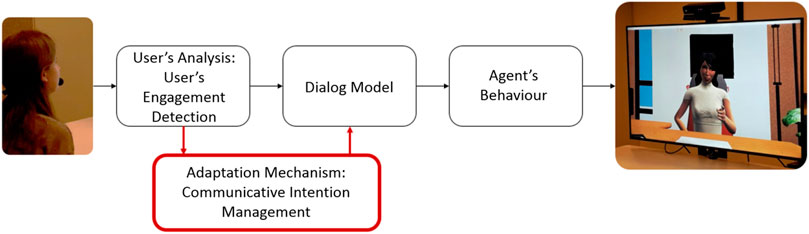

9.1 Architecture

The general architecture described in Section 5 has been modified in order to contain a module for predicting the next social signal to be merged with the agent’s other communicative ones. The modified architecture of the system is depicted in Figure 5. In the following subsection, we explain the modified modules. More details about these modules can be found in the study by Dermouche and Pelachaud (2019).

FIGURE 5. Modified system architecture used in Study 3. In particular, the User's Analysis module detects the user's low-level signals such as head and eye rotations and lip corner activity. The Adaptation Mechanism module exploits the IL-LSTM model for selecting the agent's low-level signals. In the Agent's Behavior module, the Behavior Realizer is customized in order to take into account the agent's communicative behaviors and signals coming from the IL-LSTM module in real time.

9.1.1 User’s Analysis: User’s Low-Level Features

Low-level features of the user are obtained from the User’s Analysis module using EyesWeb of the general architecture. In this model, we consider a subset of these features, namely, the user’s head direction, eye direction, and AU12 (upper lip corner activity). At every frame, the EyesWeb module extracts these features and sends the last 20 analyzed frames to the Adaptation Mechanism module IL-LSTM (see Section 9.1.2). It also sends the user’s conversational state (speaking or not) computed from the detection of the user’s voice activity (done in EyesWeb) and from the agent-turn information provided by the dialogue manager Flipper.
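The "last 20 analyzed frames" behavior can be pictured as a fixed-size rolling buffer. The sketch below is only an illustration of that idea; the class name `FeatureBuffer`, the per-frame dictionary layout, and the choice to return nothing until the buffer is full are assumptions, not a description of the EyesWeb implementation.

```python
from collections import deque

WINDOW = 20  # number of frames sent to the adaptation module (from the text)


class FeatureBuffer:
    """Keeps only the most recent frames of user features (illustrative)."""

    def __init__(self, size=WINDOW):
        # A deque with maxlen silently drops the oldest frame when full.
        self.frames = deque(maxlen=size)

    def push(self, head, eyes, au12, speaking):
        self.frames.append(
            {"head": head, "eyes": eyes, "au12": au12, "speaking": speaking}
        )

    def window(self):
        """Return the buffered frames, oldest first, once the buffer is full."""
        if len(self.frames) < self.frames.maxlen:
            return None
        return list(self.frames)
```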

9.1.2 Adaptation Mechanism: Interaction Loop–LSTM

In this version of the architecture, the adaptation mechanism is based on a predictive model trained on data of human–human interactions. We used the NoXi database (Cafaro et al., 2017) to train a long short-term memory (LSTM) model that takes as input sequences of signals of two interactants over a sliding window of n frames to predict which signal(s) one participant should display at time n+1. We call this model IL-LSTM, which stands for interaction loop–LSTM. LSTM is a kind of recurrent neural network. It is mainly used when “context” is important, that is, when decisions from the past can influence the current ones. It allows us to model both the sequentiality and temporality of nonverbal behaviors.

We apply the IL-LSTM model to the human–agent interaction. Thus, given the signals produced by both the human and the ECA over a time window, the model outputs which signals the ECA should display at the next time step (here, a frame). The predicted signals are sent to the Behavior Realizer of the Agent’s Behavior module, where they are merged with the behaviors of the ECA related to its communicative intents.
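The sliding-window formulation can be made concrete with a small data-preparation sketch: each training example pairs n consecutive frames of both interactants with the frame that one of them displays at the next time step. The function name `make_training_pairs` and the representation of a frame as a flat list of feature values are assumptions; the model itself (the LSTM) is not shown.

```python
def make_training_pairs(signals_a, signals_b, n):
    """Pair each n-frame window of both interactants with A's next frame.

    signals_a and signals_b are equal-length lists of per-frame feature lists.
    """
    if len(signals_a) != len(signals_b):
        raise ValueError("both signal streams must have the same length")
    pairs = []
    for start in range(len(signals_a) - n):
        # Concatenate the features of both interactants frame by frame.
        window = [
            signals_a[t] + signals_b[t]
            for t in range(start, start + n)
        ]
        # The target is what interactant A displays right after the window.
        target = signals_a[start + n]
        pairs.append((window, target))
    return pairs
```

At run time, the same windowing would apply with the ECA in the role of interactant A and the user in the role of interactant B.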

9.1.3 Agent’s Behavior: Behavior Realizer

We have updated the Behavior Realizer so that the ECA not only communicates its intentions but also adapts its behaviors in real time to the user’s behaviors. This module blends the predicted signals linked to the adaptation mechanism with the nonverbal behaviors corresponding to its communicative intentions that have been outputted using the GRETA agent platform (Pecune et al., 2014). More precisely, the dialogue module Flipper sends the set of communicative intentions to the Agent’s Behavior module. This module computes the multimodal behavior of the ECA and sends it to the Behavior Realizer that computes the animation of the ECA’s face and body. Then, before sending each frame to be displayed by the animation player, the animation computed from the communicative intentions is merged with the animation predicted by the Adaptation Mechanism module. This operation is repeated at every frame.
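One possible way to picture the per-frame merge is a weighted blend restricted to the channels that the adaptation model predicts. The text does not specify the merging rule, so the linear blend, the function name `merge_frame`, the channel names, and the default weight below are all assumptions made for illustration.

```python
# Channels the adaptation mechanism is assumed to control (hypothetical names).
ADAPTED_CHANNELS = ("head_rotation", "eye_rotation", "au12")


def merge_frame(intent_frame, predicted_frame, weight=0.5):
    """Blend predicted adaptation signals into an intention-driven frame.

    Channels absent from predicted_frame, and channels outside
    ADAPTED_CHANNELS, are copied unchanged from intent_frame.
    """
    merged = dict(intent_frame)
    for channel in ADAPTED_CHANNELS:
        if channel in predicted_frame:
            base = intent_frame.get(channel, 0.0)
            merged[channel] = (1.0 - weight) * base + weight * predicted_frame[channel]
    return merged
```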

9.2 Experimental Design

The adaptation model described in the previous section was evaluated by using the scenario described in Section 6. Here, we describe the experimental variables manipulated and measured during the experiment.

9.2.1 Independent Variable

We manipulated the type of low-level adaptation of the ECA by considering five conditions:

• Random: when the ECA did not adapt its behavior;

• Head: when the ECA adapted its head rotation according to the user’s behavior;

• Lip Corners: when the ECA adapted its lip corner puller movement (AU12) according to the user’s behavior;

• Eyes: when the ECA adapted its eye rotation according to the user’s behavior; and

• All: when the ECA adapted its head and eye rotation and lip corner movement, according to the user’s behavior.

We tested these five conditions using a between-subjects design.

9.2.2 Measures

The dependent variables measured after the interaction with the ECA were the user’s engagement and the perceived friendliness of the ECA.

The user’s engagement was evaluated using the I-PEFiC framework (van Vugt et al., 2006) that encompasses the user’s engagement and satisfaction during human–agent interaction. This framework considers different dimensions regarding the perception of the ECA (in terms of realism, competence, and relevance) as well as the user’s engagement (involvement and distance) and the user’s satisfaction. We adapted the questionnaire proposed by Van Vugt and others to measure the behavior of the ECA along these dimensions (van Vugt et al., 2006). The perceived friendliness of the ECA was measured using the adjectives kind, warm, agreeable, and sympathetic of the IAS questionnaire (Wiggins, 1979).

As for the other two studies, we also measured the a priori attitude of participants towards virtual agents using the NARS questionnaire.

9.2.3 Hypotheses

Previous studies (Liu et al., 2008; Woolf et al., 2009; Levitan, 2013) have found that users’ satisfaction with their interaction with an ECA is greater when the ECA adapts its behavior to the user’s behavior. From these results, we could expect that the user would be more satisfied with the interaction when the ECA adapted its low-level signals according to their behaviors. We also assumed that the ECA adapting its lip corner puller (which is related to smiling) would be perceived as friendlier. Thus, our hypotheses were as follows:

H1Head: when the ECA adapted its head rotation, the users would be more satisfied with the interaction than the users interacting with the ECA in the Random condition.

H2aLips: when the ECA adapted its lip corner movement (AU12), the users would be more satisfied with the interaction than the users interacting with the ECA in the Random condition.

H2bLips: when the ECA adapted its lip corner movement (AU12), it would be evaluated as friendlier than the ECA in the Random condition.

H3Eyes: when the ECA adapted its eye rotation, the users would be more satisfied with the interaction than the users interacting with the ECA in the Random condition.

H4aAll: when the ECA adapted its head and eye rotations and lip corner movement, the users would be more satisfied with the interaction than the users interacting with the ECA in the Random condition.

H4bAll: when the ECA adapted its head and eye rotations and lip corner movement, it would be evaluated as friendlier than the ECA in the Random condition.

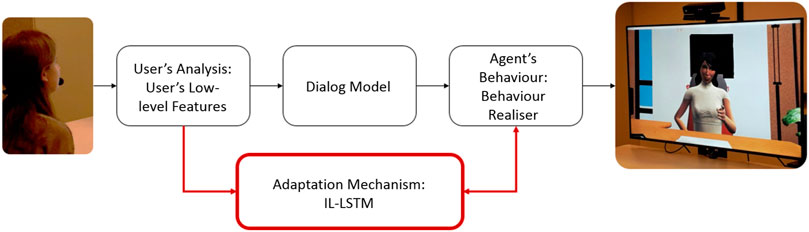

9.3 Analysis and Results

101 participants (55 females), almost equally distributed among the five conditions, took part in our experiment. 95% of participants were native French speakers. 32% of them were in the range of 18–25 years old, 17% were in the range of 25–36, 21% were in the range of 36–45, 18% were in the range of 46–55, and 12% were over 55 years old. For each dimension of the user’s engagement questionnaire, as well as for that about the perceived friendliness of the ECA, Cronbach’s αs were

TABLE 4. Mean

As our data were not normally distributed (the Shapiro test’s

In the Head condition, we could not find differences with the Random condition. We conclude that the hypothesis H1Head is rejected.

In the Lip Corners condition, compared to participants in the Random condition, participants were more involved (

In the Eyes condition, participants were as satisfied with the ECA as participants in the Random condition. Thus, the hypothesis H3Eyes is rejected.

In the All condition, the ECA was evaluated as friendlier (

Results of the NARS questionnaire indicated that 40, 30, and 30% of participants, respectively, had a positive, neutral, and negative attitude toward virtual agents. An ANOVA test was performed to study the influence of participants’ a priori toward virtual agents on their engagement in the interaction. Participants’ prior attitude toward ECAs had a main effect on participants’ distance (

9.4 Discussion

The results of this study showed that participants’ engagement and perception of the ECA’s friendliness were positively impacted when the ECA adapted its low-level signals.

These results were significant only when the ECA adapted its lip corner movement (AU12) to the user’s behavior (mainly their smile), that is, in the Lip Corners and All conditions. In the case of head and eye rotation adaptation, we found a trend on some dimensions but no significant differences compared to the Random condition. These results could be caused by the adopted evaluation setting, where the ECA and the user faced each other. During the interaction, most participants gazed at the ECA without making any postural shift or even changing their gaze and head direction. They were mainly still and staring at the ECA. The adaptive behaviors, that is, the head and eye rotation of the ECA computed from the user’s behaviors, therefore remained constant throughout the interaction: they reflected the behaviors of participants, who were not moving much. Thus, in the Head and Eyes adapting conditions, the ECA showed much less expressiveness and may have appeared much less lively, which may have impacted participants’ engagement in the interaction.

10 General Discussion

In our studies, we applied the interaction adaptation theory (IAT; see Section 2) to the ECA. That is, our adapting ECA had the requirement R that it needed to adapt in order to have a successful interaction. Its desire D was to maximize the user’s experience by eliciting a specific impression in the user or maintaining the user’s engagement. Finally, its expectations (Es) were that the user’s experience would be better when interacting with an adaptive ECA. All these factors rely on the general hypothesis that the user expects to interact with a social entity. According to this hypothesis, the ECA should adapt its behavior like humans do (Appel et al., 2012).

We have looked at different adaptation mechanisms through three studies, each focusing on a specific type of adaptation. In our studies, we found that these mechanisms impacted the user’s experience of the interaction and their perception of the ECA. Moreover, in all three studies, interacting with an adaptive ECA vs. a nonadaptive ECA tended to be more positively perceived. More precisely, manipulating the agent’s behaviors (Study 1) had an impact on the user’s perception of the ECA, while low-level adaptation (Study 3) positively influenced the user’s experience of the interaction. Regarding managing conversational strategies (Study 2), the ECA was perceived as warmer when it selected those that increased the user’s engagement vs. when it did not change them throughout the interaction.

These results suggest that the IAT framework allows for enhancing human–agent interaction. Indeed, the adaptive ECA shows some improvement in the quality of the interaction and the perception of the ECA in terms of social attitudes.

However, not all our hypotheses were verified. This could be related to the fact that we based our framework on the general hypothesis that the user expects to interact with a social entity. The ECA did not take into account the fact that the user also had their specific requirements, desires, and expectations, along with the expectancy to interact with a social agent. Yet, the ECA did not check if the user still considered it a social entity during the interaction. It based its behaviors only on the human’s detected engagement and impressions. Moreover, the modules to detect engagement or impressions work in a given time window, but they do not consider their evolution through time. For example, the engagement module computes that participants are engaged if they look straight at the ECA without reporting any information stating that the participants stare fixedly at the ECA. The fact that participants do not change their gaze direction toward the ECA could be interpreted as participants not viewing the ECA as a social entity with humanlike qualities (Appel et al., 2012).

Expectancy violation theory (Burgoon, 1993) could help to better understand this gap. This theory explains how confirmations and violations of people’s expectancies affect communication outcomes such as attraction, liking, credibility, persuasion, and learning. In particular, positive violations are predicted to produce better outcomes than positive confirmations, and negative violations are predicted to produce worse outcomes than negative confirmations. Expectancy violation theory has already been shown to apply to human–human interaction (Burgoon, 1993) and to situations in which people face an ECA (Burgoon et al., 2016; Biancardi et al., 2017b) or a robot (Weber et al., 2018). In our work, we took into account the role of expectancies as part of IAT. Our results suggest that expectancies could play a more important role than the one we attributed to them and that they should be better modeled when developing human–agent adaptation. Future work in this context should combine expectancy violation theory with IAT. In this way, the ECA should be able to detect the user’s expectancies in terms of beliefs and desires. It should also be able to check if those expectancies about the interaction correspond to the expected ones and then react accordingly. For example, in our studies, we found some effects of people’s a priori about virtual agents: people who got higher scores in the NARS questionnaire generally perceived the ECA as warmer than people who got lower scores in the NARS questionnaire. This effect could have been mitigated if the agent could detect the user’s a priori.

Even with these limits, the results of our studies show that an adaptive model for a virtual agent inspired by IAT partially managed to produce an impact on the user’s experience of the interaction and on their perception of the ECA. This could be useful for personalizing systems for different applications such as education, healthcare, or entertainment, where there is a need for adaptation according to users’ type and behaviors and/or interaction contexts.

The different adaptation models we developed also confirm the potential of automatic behavior analysis for the estimation of different users’ characteristics. These methods can be used to better understand the user’s profile and can also be applied to human–computer interaction in general to inform adaptation models in real time.

Moreover, the use of adaptation mechanisms inspired by IAT could help mitigate the negative effect of some interaction problems that are more difficult to solve, due to, for example, technological limits of the system. Indeed, adaptation acts to enhance how the agent is perceived and the perceived interaction quality. Improving adaptation mechanisms may help to counterbalance technological shortcomings. It may also improve the acceptability of innovative technologies that are likely to be part of our daily lives, in the context of work, health, leisure, etc.

11 Conclusion and Future Work

In this study, we investigated adaptation in human–agent interaction. In particular, we reported our work about three models focusing on different levels of the agent’s adaptation (the behavioral, conversational, and signal levels), by framing them in the same theoretical framework (Burgoon et al., 2007). In all the adaptation mechanisms implemented in the models, the user’s behavior is taken into account by the ECA during the interaction in real time. Evaluation studies showed a tendency toward a positive impact of the adaptive ECA on the user’s experience and perception of the ECA, encouraging us to continue to investigate in this direction.

One limitation of our models is their reliance on the interaction scenario. Indeed, for adaptation models using reinforcement learning algorithms to perform well, a scenario including an adequate number of steps is required. In our case, the agent ended up selecting a specific combination of behaviors only during the later part of the interaction. A longer interaction with more steps would allow an adaptive agent using reinforcement learning algorithms to learn better. Another possibility would be to have participants interact more than once with the virtual agent. The latter would require adding a memory adaptation module (Ahmad et al., 2017). This would also allow for checking whether the same user prefers the same behavior and/or conversational strategies from the agent over several interactions. Similarly, regarding adaptation models reflecting the user’s behavior, the less the user moves during the interaction, the lower the agent’s expressivity level. The interaction scenario should be designed to elicit the user’s participation, including strategies to stimulate users when they become too still and nonreactive. For example, one could use a scenario including a collaborative task where both the agent and the user would interact with different objects. In such a setting, although it would require us to extend our engagement detection module to include joint attention, we expect that the participants would also perform many more head movements that, in turn, could be useful for a better low-level adaptation of the agent.