ORIGINAL RESEARCH article

Front. Comput. Sci., 26 May 2021

Sec. Human-Media Interaction

Volume 3 - 2021 | https://doi.org/10.3389/fcomp.2021.661890

This article is part of the Research Topic: Cognitive Infocommunications

This paper presents the Experiment in a Box (XB) framework to support interactive technology design for building health skills. The XB provides a suite of experiments (time-limited, loosely structured evaluations of health heuristics) for a user-as-experimenter to select from and then test in order to determine that heuristic’s efficacy, and to explore how it might be incorporated into the person’s life, when necessary, to support their health and wellbeing. The approach leverages self-determination theory to support user autonomy and competence to build actionable, personal health knowledge skills and practice (KSP). In the three studies of XB presented, we show that with even the short engagement of an XB experiment, participants develop health practices from the interventions that are still in use long after the intervention is finished. To situate the XB approach relative to other work around health practices in HCI in particular, we contribute two design continua for this design space: insourcing to outsourcing and habits to heuristics. From this analysis, we demonstrate that XB is situated in a largely under-explored area for interactive health interventions: the insourcing and heuristic-oriented area of the design space. Overall, the work offers a new scaffolding, the XB Framework, to instantiate time-limited interactive technology interventions to support building KSP that can thrive in that person, both long after the intervention and independent of that technology.

In this paper, we present the Experiment in a Box framework, or XB for short, for designing interactive tools to support a person building knowledge skills and practice (KSP) to maintain their own health and wellbeing. By knowledge, we mean awareness, understanding, and wisdom related to one’s body as it constantly adapts across time and in context toward enabling a person to move toward desired adaptive states (e.g., being fit, reducing stress, maintaining a healthy weight, etc.). By skills, we are referring to one’s capacity to regulate and “tune” oneself in and to context toward desired adaptive states (schraefel and Hekler, 2020). By practice, we are referring to two senses of the term: repetition and commitment. Practice in the first sense is the number and frequency of the repetitions of a skill necessary to become adept at the knowledge and skills necessary, in this case, to build and maintain health. Practice in the second sense, as a formal practice, frames this repetition as an ongoing part of one’s life: actively, continually and deliberately engaging in developing and refining desired knowledge and skills toward desired states. The XB, as we detail in Related Work below, addresses a gap in the research around how to design interactive technology to help people build these resilient (Feldman, 2020), adaptable health KSP that can be actioned across health-challenging contexts, without requiring that technology to be perpetually necessary. In other words, we want to design technology to support building health KSP so people can get off that technology, and thrive with those KSP.
Drawing on recent work that suggests framing health technology within a continuum from outsourcing to insourcing health skills (schraefel et al., 2020), we show that most of the current research and commercial interactive health technology supports the outsourcing end of health tech design—that is, giving the problem to a third party to manage, including especially around health, to automated interactive technology devices and services, from step counters or follow along workouts and their associated data capture and visualisations, to coordinated product and service delivery.

Further, within the increasingly pervasive discourse of the behavior change/habit-building frame, many of the current technologies not only seek to outsource habit-building but also seek to cultivate a person’s reliance not just on the technology but also on context to drive these habits-as-healthy behaviour-change. For example, it is increasingly common to outsource one’s exercise to a follow-along program, or in some cases to programs that also monitor various biometrics, where tools suggest strategies based on that data for making progress (“TrainingPeaks | New Year. New Focus.” n. d.; ‘The Sufferfest: Complete Training App for Cyclists and Triathletes’ n. d.). While there is great potential for such outsourced, habit-formation-oriented technologies, to date, the hope has not been observed in reality (Riley et al., 2011; West et al., 2012; Lunde et al., 2018; McKay et al., 2018). A key reason for this gap is that an outsourced, habit-formation orientation to behavior change asks for a very heavy lift for both the technology and for context to drive a person into desired adaptive states. This is difficult because of the inherent complexity of human behavior and health, which has been summarized elsewhere in terms of three factors that impact behavior and health: context, timing, and individual differences (Hekler et al., 2019; Chevance et al., 2020; Hekler et al., 2020). These dynamics require what we frame as constant adaptivity in context (schraefel 2020). These complexities establish the need for outsourced, habit-formation-oriented technologies to be highly adaptive to context and changing circumstances, which is somewhat the antithesis of habits as context specific and context dependent. Most current systems are not broad enough or expert enough to be sufficiently adaptive, which, we contend, is the primary reason behind the gap between the hope and reality.
For instance, most tools cannot be responsive and supportive to a simple question like what to do when it’s too late to go for the planned run; what are options when there are no stairs to climb? These current outsourced systems are also high cost in terms of their subscriptions, the associated special gear (Ji et al., 2014), and the need for complex, often data invasive “personalization” algorithms (Hekler et al., 2018; Hekler et al., 2020). Thus, while there is possibility and promise, considerable work is needed before this form of responsive health practices could become a reality.

An alternative to outsourced health practices/health behavior change is emerging under the auspices of personal science (Wolf and De Groot, 2021) or “self-study” (Nebeker et al., 2020). This approach emphasizes what we call insourcing, meaning the cultivation of KSP within the person, thus establishing (and supporting) the person as the driver and lead for achieving desired states, not the technology. The dominant way in which personal science/self-study are being supported via technology, particularly in the human-computer interaction (HCI) literature, is through extensions of randomized control trial logic into N-of-1 cross-over trials, which involve randomizing, within person and across time, the delivery or not of an intervention relative to some meaningful comparator (Karkar et al., 2017). These approaches focus on gathering quantifiable data to refine an outsourced prescription for health activities, ranging from workout plans (Agapie et al., 2016) to sleep strategies (Daskalova et al., 2016). Implicit in many of these approaches still remains an emphasis on either a single action behavior change, such as avoiding certain foods that appear linked with undesired symptoms, or cultivating a prescribed habit and routines, such as specific sleep hygiene techniques.

Our goal with the XB framework is to extend personal science and insourcing by shifting emphasis from behavior change/habit formation to, instead, cultivation of KSP and what we frame as heuristics. By heuristics, we mean a set of general principles, grounded in prior knowledge from both science and lived experience, that can guide effective adaption of the person, across contexts, toward desired adaptive states. The XB framework seeks to cultivate personal health heuristics by blending the broad categories of outsourced health and wellbeing plans and programs (prior knowledge based on science) as initial generic heuristics with the insourcing of personal evaluation found in N-of-1 studies in order for a person to test and customize those heuristics for themselves, to work across contexts.

The focus of the XB framework is, therefore, to help build the necessary health KSP so that a person 1) can answer the question “how do you feel” as a meaningful self-diagnostic and 2) from this self-diagnostic, apply associated health and wellbeing KSP toward selecting appropriate actions in context to feel better. As pointed to above, we frame this ability to select and adapt appropriate KSP to build and maintain health and wellbeing across contexts as tuning (schraefel and Hekler, 2020). The term draws on the analogy of tuning an instrument or machine: aligning the components of a mechanism so that they resonate together, reinforcing each other toward better performance. The strings of a guitar, for example, are tuned to particular pitches, harmonically, relative to each other, enabling them to produce music within the frames of those harmonics; that instrument itself may be tuned to a standard frequency so that it resonates harmonically with other instruments as well. In a tuned engine, all components are likewise synchronized, thus producing more power and more efficiency.

Our goal in presenting the XB framework here is two-fold. First, we seek to expand the design space of health technologies to suggest the possibility for solutions that work across both the spectrums of outsourcing to insourcing, and across the dimensions of cultivating behavior change/habit formation to cultivating personal health heuristics via building and testing associated health KSP. Second, with the XB framework specifically, our goal is to help designers and individuals develop interventions that will support dynamic self-tuning for one’s healthful resilience, across contexts. In the following sections, we present a more detailed discussion of the related work motivating the XB approach. We then present three exploratory studies developed to help triangulate on the set of features that are the fundamentals of the XB framework.

These three studies were conducted explicitly to explore the XB model, framed via the ORBIT model (Czajkowski et al., 2015) notion of “proof-of-concept” studies. In particular, we follow the ORBIT approach of Defining, Refining, and Testing for a meaningful signal. Note that, as discussed in ORBIT, this is an appropriate methodology for the stage of the research process presented here. From the studies reported below, we can say that we appear to be getting a clear signal across these three studies. In accordance with the ORBIT model, this finding can then be used to justify more rigorous evaluation relative to a meaningful comparator. Our studies were explicitly conducted iteratively and consecutively to enable exploration and refinement of the XB approach. The fact that similar conclusions were being drawn even across disparate samples, with the first focused on an ad agency, the second on a university setting, and the third on an open call on social media, points toward some degree of consilience and, thus, increased confidence in the value of continuing this line of work toward more formal and rigorous testing, such as randomized controlled trials.

We conclude by summarizing our findings and identifying opportunities for future work, offering some reflections on open questions about XB phases and on challenges for use in supporting health exploration for group/cultural health practice and infrastructure, beyond the individual. Overall, the XB framework offers a set of novel parameters, such as insourcing health knowledge skills and practice, thus opening new areas in the HCI health design space to be explored.

Supporting health and wellbeing is a burgeoning research area in HCI, from addressing depression, anxiety, or bipolar health issues to new models of digitally enabled healthcare delivery (e.g., Blandford, 2019; Sanches et al., 2019). In the following sections situating our work relative to this context, we further develop both the insourcing to outsourcing design continuum (schraefel et al., 2020; schraefel et al., 2021) and the habits to heuristics continuum outlined above as new vectors for contextualizing the health design space.

Outsourcing (Troacă, 2012) refers to the subcontracting out of expertise or production to reduce costs and improve efficiencies in industry. Tim Ferriss’s book, The Four Hour Workweek (Ferriss 2009), made the concept more personal: one could outsource to a third party tasks from managing one’s calendar to one’s laundry to free up one’s time for more time-valuable processes. Outsourcing also outsources oversight of these processes, which can lead to issues ranging from cost overruns and poorer quality to abuse: from overpriced contracts and substandard services (Brooks, 2020), to suicides at outsourcing factories (Heffernan, 2013) and inmate abuse, motivating the recent shutting down in the United States of outsourced federal prison services (Sabrina and sadie, 2021).

As a complement to outsourcing, we proposed “insourcing” (schraefel et al., 2020) to consider bringing tasks in-house, restoring them to the entity-as-owner. Such insourcing in physical activity would include a person undertaking to learn how to build skills for themselves to achieve and maintain fitness, rather than relying on a trainer to manage programming their fitness experience. Insourcing also has costs: time and money to gain skills, funds for associated equipment, time and resources to practice skills, and the risk that one’s expertise may not be sufficient on its own to support good strategies for any associated goals. On the plus side, insourced knowledge skills and practice can enable one to be more responsive to challenges, for much longer. A recent workshop call (schraefel et al., 2021), focusing on exploring the insourcing end of the insource-outsource continuum, makes clear that there is not a value judgment in insourcing or outsourcing. Insourcing↔Outsourcing is, instead, a continuum: we often flow between these points. For example, most of us outsource production, management and distribution of vegetables from field to home to grocery stores: we outsource that expertise, time and risk and accept its limitations in choice and quality. We may also insource some small part of that process from time to time, as amateur farmers, working on urban community gardens, or growing a tomato in a pot over the summer, sufficient to dress the occasional salad.

This continuum of insourcing to outsourcing is one lens we can use to interpret emphasis in health tech both commercially and in interactive tech for health research. As we show in the following section, while there is a spread across the continuum, the preponderance of work clusters around outsourcing. Through the pandemic, such outsourcing has only escalated: sales of Peloton bikes and subscriptions to associated follow-along anytime-workouts have significantly increased since pre-COVID (Griffith, 2020). Subscription-based guided health services from yoga to meditation have also risen (Lerman, 2020). A trait also strongly associated with these approaches is quantitative data tracking, where sensors capture biometric information while associated apps translate these into scores or performance ratings. Step counters like Fitbit hardware and associated apps are a great example of this approach: the user tacitly agrees to follow the advice of the device, which offers a simple, understandable directive: “get X steps on this counter within a day”. It is easy for a person to determine success or failure. Over the past 5 years in particular, more sensors have been integrated into single devices, from phones to watches, gathering even more types of biometric data, less obtrusively: one watch can capture heart rate, standing/moving time, sleep time, and movement pace, combined of course with where and when each beat and step is taken. These measures offer a happy blending between computer scientist competence and the medical communities’ methodologies. As computer scientists, we are very good at creating ever cheaper, lower power, longer-lasting, finer grained sensors. In the medical and sports science communities, we regularly make our cases on health prescription by connecting quantified measures with outcomes: blood pressure, heart rate, age, and girth correlate to outcomes from disease prevention approaches [e.g. (Barry et al., 2014; Stevens et al., 2016)] to athlete training programs (Allen et al., 2019).

Beyond the off-the-shelf apps and tools like sports watches and health tracking apps that clearly define what can be counted and correlated, the Quantified Self (QS) communities have demonstrated the value of being able to correlate an amazing variety of measures to see more consistent data to both understand and adapt more varied practices to support desired outcomes (Prince, 2014; Barcena et al.), from room temperature to blood glucose, heart rate, weight as part of deliberate self-study. This QS community is heterogeneous. While some QS-oriented people collect more or less all kinds of data (Ajana, 2017), and some have more specific goals, such as monitoring sports performance over years, to help reflect on their practice or tune it (Dulaud et al., 2020; Heyen, 2020), others carry out more deliberate experiments, usually to understand a possible correlation. That is: a formal protocol is developed; it is executed over a specific time; the data is gathered; and then analyzed toward a conclusion (Choe et al., 2015a).

In more formal research in HCI, the outcome of such exploration has often been to support informing an outsourced outcome: a specific health prescription (Consolvo et al., 2008), supporting data-derived insights (Bentley et al., 2013) and/or using self-experimentation [e.g. (Hekler et al., 2013; Agapie et al., 2016; Duan et al., 2013; Daskalova et al., 2016; Pandey et al., 2017; Lee et al., 2017; Kravitz et al., 2020)] to support a decision to adopt a practice. Early days in this research (circa 2008) provided largely prescriptive interventions, with research focused on persuasive technology approaches of how to design the interaction to support translating a person’s actions with the intervention into a clearly prescribed habit, such as “walk 10 k steps”; “stand every hour”; “go to the gym” [e.g. Ubifit (Consolvo et al., 2008)]. Over the past decade, inspired by QS, personal informatics and n-of-1 studies, health practice designs in self-study have moved toward focusing increasingly on using one’s own data for personal sense-making. Bentley et al.’s (Bentley et al., 2013) Health Mashups attempts to find and highlight possible correlations between quantified representations of a person’s activities to help a person see in the data where they may need or want to do things differently for their health. Intriguingly, one is responding to the data picture of one’s self rather than necessarily a perceived or felt experience.

Once one identifies something to do, such as going to the gym when a pattern shows one doesn’t move, Hekler and colleagues’ DIY’ing strategies (Hekler et al., 2013) help develop and assess making the practice work in one’s life, while Agapie et al.’s workout system crowdsources tailored versions of that activity, again telling someone what to do (Agapie et al., 2016), and Daskalova et al.’s work helps assess and tune it (Daskalova et al., 2017). In related, but more disease-oriented health care, Duan and colleagues’ (Duan et al., 2013) work uses formalized experimental N-of-1 designs to find triggers for negative effects in order to eliminate them. Work led by Pandey (Pandey et al., 2017) translates this methodology into a generalized architecture to carry out individually driven, but widely distributed formal N-of-1 studies within any area of human performance to answer “does X do Y?” There is a strong emphasis, on this self-experimentation side, on producing rigorous statistical estimates (Lee et al., 2017; Phatak et al., 2018; Kravitz et al., 2020), but that level of rigor is not always appropriate, particularly for early practices when a person is, in effect, simply trying to determine whether they even want to try out a given health behavior, let alone commit to it for the rest of their lives.

By looking at work in HCI in one exemplar health area, sleep self-study, we see these clusters more sharply. Here, most work has focused on blending what to do in terms of sleep practice via tracking and analysis of associated sleep data [SleepTight (Choe et al., 2015)], building peripheral awareness of a recommended sleep enhancing protocol [ShutEye (Bauer et al., 2012)], and making recommendations of how to refine a sleep protocol based on external expert data analysis/pattern matching [SleepCoacher (Daskalova et al., 2016)]. In other words, the person outsources what to do about their sleep to an expert, data-driven guidance system. The prescription seems personalized based on one’s own data, and the success or failure is measured by adherence and improved performance based on measured data. In what is framed as “autoethnography” around sleep, Lockton and colleagues recently focused on participants designing probes that would let them reflect on aspects of sleep important to them, individually (Lockton et al., 2020). Here, the emphasis of the work is on reflection on current practice rather than changing it to sleep better. But even here, the majority of designs in the concluding “insights” section come back to how to re-present data already generated in sleep trackers, for instance, to look at patterns of sleep in different contexts. These approaches tend to assume these data are valuable, informative, sufficient, and trustworthy. We let the data tell us how we are doing, and for many who adopt these approaches, these assessments are taken very seriously. Research around sleep tracking shows that increased anxiety around sleep performance as a result is not uncommon (Baron et al., 2017). But research on sleep trackers also shows us how inaccurate they are (Ameen et al., 2019; Tuominen et al., 2019; Louzon et al., 2020).
This is a conundrum for design, raised previously in a discussion about the “accuracy” of scales for supporting weight management (Kay et al., 2013): are we achieving what we hope to achieve for health with this data-led approach? Do we need to better qualify what the data presented is actually able to tell users about their experience, and their progress in a health journey?

Research in HCI has also shown that not everyone who might benefit from health support from interactive technology finds these quantification/tracking data-dominant approaches appealing (Epstein et al., 2016). Their efficacy seems to hold mostly for people already committed either to exploring their general health (Clawson et al., 2015) or to addressing a very specific health need (Karkar et al., 2016), and even within that population, abandonment of trackers and self-tracking is high (Sullivan and Brown, 2013; Stawarz et al., 2015). Thus, there are at least two communities where the potential benefit of interactive technological interventions has not been realized: those who find quantification a non-starter, and those who abandon it without necessarily building a sustainable practice with it. There is also a related problem: even among ardent trackers, the way these technologies are designed sets up the very high risk that, if/when the technologies are removed, the desired change will also go away. This general pattern was shown in prior analogous work related to gym membership (Hekler et al., 2013). In particular, a previous physical activity intervention, which was shown to increase physical activity relative to a control condition in a randomized controlled trial (Buman et al., 2011), included the option of gym memberships, though not everyone in the study used the gym membership. While there were people who increased physical activity both using the gym and not using the gym, it was only the people who did not use the gym who maintained their physical activity when the intervention was over and the gym membership was taken away (Hekler, 2013). Returning to fitness programs and trackers, what happens on day 91, after the 90-day fitness follow-along? This question of course reveals a concern dominant in this paper: what do we want our interactive health technologies to enable? To support building device reliance or independence?
Another way of framing this question may be: does outsourcing to health technology make us more cyborg (Haraway, 1991) than human? Again, that continuum—raw to cyborg—is not meant as a value judgment, but as a way to be, perhaps, more deliberate and specific in our design choices.

For those who have abandoned or do not engage in data-dominant health support, we suggest that the tracking/quantification may not itself be the main issue for resistance, but rather the outsourcing of a practice to a quantifiable data-driven approach, a point that is implied by Wolf and De Groot’s framing of personal science [note, Wolf was one of the originators of the notion of Quantified Self and, in this piece, he is signaling a shift away from the emphasis on quantification (Wolf and De Groot, 2021)]. The tools, in the end, can be perceived to be too brittle or narrow at this point in their evolution to be more generally useful. Outsourcing is not just about giving monitoring of biometrics over to devices; it also needs the data-driven system to tell one how well they are doing and what to do. In the authors’ work with athletes, it’s not uncommon to hear of dissonance between an athlete’s watch telling them about their recovery state and how they themselves think they are feeling. With sleep trackers, it’s not uncommon for people who wake feeling ok to be frustrated at not seeing their deep sleep change. We suggest it may be that the perceived quality of the assessed states or recommended practices is not sufficiently responsive for the cost of perpetual tracking. Periodicity, in the sense of the meso-, macro- and microcycles familiar in sports science, is also largely absent. An implicit quality of most health devices is that they have no time limits, the implicit design being that the intervention will last forever. That too has been noted (Churchill and schraefel, 2015) as a frustrating assumption in such outsourced health tech design.

There is a challenge with insourcing-oriented designs: how to support internalizing awareness and knowledge of personal state? When we rely on outsourcing, on data to tell us how we are performing, we do not have to determine how we feel; we do not have to focus on building the pathways between our own embodied sensors and the machines’. Building up these lived connections, to be able to answer “how do you feel how you feel”, is non-trivial on at least two levels. First, some people have not, at least in recent memory, had the experience of, for example, a good night’s rest: they wake every day under-slept, roused by an alarm, instead of waking on their own. If we do not know what it feels like to be well-rested, or we cannot perceive these differences in terms of affect (Zucker et al., 1997; Erbas et al., 2018), then we lack a yardstick both to judge actions against and to make decisions about the perceived cost. Without this internalized yardstick and sense of difference, any information provided on plausible difference (you’ll be well-rested) is a purely intellectual value. Thus, the plausible benefits one would experience are not part of the calculations for choosing different health actions when insourcing is not present (Vandercammen et al., 2014). Second, while humans have incredibly sensitive dynamic sensing systems, some people do not have the connection between that sensory input and their experiences well-formulated. They may be interoceptively (Tsakiris and Critchley, 2016; Murphy et al., 2019) weak. That is, without the lived allostatic experience (Kleckner et al., 2017) that connects, for example, positive movement with good mood, or nutritious food with cognitive effectiveness, the person does not have, literally, a mental map to help navigate toward healthful practices and associated experiences. Those pathways have not been built. This is critical based on new theories around subjective states and our understanding of what we feel and why.
For example, there is increasing evidence suggesting that emotions are not innate to our biology or brain but, instead, appear to be a psychological construction of the interaction between interoception and context and history (Lindquist et al., 2012). The implication of this is that, if one does not develop a robust practice of interoceptive awareness in context, one, quite literally, will not be able to meaningfully feel (Lindquist et al., 2012). As classic work in neuroscience and social psychology illustrates, this is critically important, as there is increased recognition that it is ultimately our experience of emotions that guides us in the actions we take (Spence, 1995; Haidt, 2012). Thus, training on how to feel, meaning how to meaningfully experience and interpret the signals that flag up our state across contexts, is a critically important and, as of yet, under-studied area of work for advancing health. This is the domain that we are exploring in the XB framework.

Just as computer science and medicine synergize around capture and use of quantified data for health interventions, psychology and computer science in HCI meet at Behavior Change. A key embodiment for behavior change in HCI is the habit: identifying (Pierce et al., 2010; Oulasvirta et al., 2012), breaking (Pinder et al., 2018), and especially forming (Consolvo et al., 2008; Stawarz et al., 2015) habits are strong foci of that engagement. The focus on habits is understandable: while the space of sustaining a health practice is multifaceted, including “motives, self-regulation, resources (psychological and physical), habits, and environmental and social influences” (Kwasnicka et al., 2016), habits themselves are highly amenable to what computational devices can support: context triggered cues, just in time reminders and compliance tracking (Rahman et al., 2016; Lee et al., 2017). For context, in psychology, habits are described as behaviors that, once developed, are enacted automatically, that is without much conscious thought, and within specific contexts (Wood and Rünger, 2016). This context-based automation is valuable and important as habits can facilitate appropriate rhythms for achieving meaningful health targets. For instance, “at night while watching the TV, floss teeth”. The TV, the night, the floss container at hand, all contribute to the cues to trigger that practice. Such habits are extremely helpful: who wants to think deeply every day about the precise practice of dental hygiene? But what if the context changes, such as while traveling, or the floss runs out? Habits, by their very specificity, do not support adaptivity, yet many health contexts require a broader range to support the goals of a practice. For instance, if the intention is to maintain fitness, and a device only supports “go for a walk after breakfast”, what happens when one is somewhere that walk is not an option? Where are the alternatives?
To address these challenges, we propose expanding “habit” as a single design point, into a continuum that includes heuristics.

Heuristics, like habits, are also well known in HCI, specifically as a method of usability evaluation where a set of general processes or principles (the heuristics) is provided to be used by an evaluator inspecting an interaction for those processes, like “visibility of system status” and “recognition rather than recall” (Nielsen and Molich, 1990). Heuristics, like habits, can be internalized, but here, rather than specific autonomous actions, they are integrated as functional principles to be instantiated based on personal knowledge and skills that guide a person’s practice in any given moment. As such, they are a useful mechanism for insourcing dynamic, responsive, healthful practices. For example, in strength training, there is a heuristic: when in doubt, choose compound movements. Such movements work most of the body and are therefore “go to” work for general physical preparedness (Verkhoshansky et al., 2009). As part of building an insourced practice, one then builds up experience with a lexicon of movements that will support that heuristic: pull ups, squats, rows, deadlifts and so on. Similarly, survivalist Bear Grylls is famous for his heuristic: “Please Remember What’s First: protection, rescue, water, food” (Kearney, 2018). Success with this life-saving mnemonic depends, however, on knowing what protection is in the desert vs. the jungle. Likewise, one may need practice with the skills to make a fire to melt snow for water in the tundra, ward off other creatures, or be noticed for rescue.

In weighing design choices for health at one side of this continuum, habit formation requires intention (Prochaska and Velicer, 1997; Hashemzadeh et al., 2019), what we might frame as insourcing of motivation, to put the effort into repeating the task sufficiently for it to become automated/habituated. Assuming that motivation exists, the triggers for firing habits as per the references above can be supported by technology (as per Stawarz et al., 2015), and thus their development may be effectively outsourced. However, like the data-prescriptive outsourced approaches discussed above, habits do not deliberately build knowledge, skills or practice for executing options either. Heuristics do focus on building those skills. While heuristics are more adaptive, being more context-agnostic than habits, they also potentially require more resources to insource in order to develop the variety of experiences and expertise needed to be independent of external guides. Thus, the habit-heuristic continuum opens up a way to let designers ask more explicitly both what they are trying to instantiate in a person, and what the costs/trade-offs of either approach may be.

Another difference between habits and heuristics that interactive health tech may leverage is that, while habits are strongly associated with behavior change, heuristics are not. Heuristics are more associated with knowledge building and application: to have a general rule on how to approach a problem is different than a need to change in a very particular manner. While the ends may often be similar, the pathways, and thus design processes open to explore, are different, offering HCI additional options for designing toward successful health engagement.

Based on the above related work, we see considerable open space to explore the design and development of insourcing health heuristics to support building knowledge, skills and practice for personal health resilience. The following work presents XB as just such an exploration of this space. In the next sections we present an overview of the XB approach, several exploratory studies to define and confirm its key features, and then present an XB framework to enable general XB development by others that incorporates insights from these exploratory studies.

The XB approach provides a structure for translating a general health heuristic into a personal, practicable, robust health heuristic. For many, generic health heuristics are known, such as sleep hygiene rules, but they can also be hard for people to apply in their own lives. Self-determination theory postulates that to make the effort (to have the motivation) to engage in a health practice, a person’s autonomy, competency and relatedness must be supported, and when they are, increased feelings of vitality ensue (Stevens et al., 2016). Therefore, with the XB approach we can create simple, short-duration experiences, “experiments” that empower people to test out a health heuristic and to build competency through the testing process. By experiment, we are explicitly using the word in a colloquial sense, which we will unpack more momentarily. Experiments, in a colloquial sense, are what we want to inspire. Through experiments, one can not only learn a potentially new health skill, but also learn the meta skill of how to explore and find strategies for health that can work for them (i.e., experimentation and trial and error on self). Based on this, we see the XB model as a skills-building approach for supporting self-study practice around health heuristics that cultivates both self-regulatory skills in the form of self-study and skills in doing specific actions that produce desired states across contexts. It is in line with the personal science framework (Wolf and De Groot, 2021), but simplified in that it provides initial heuristics to work from, rather than the blank slate implied in the personal science framework. We help participants share their experiences both through talking about their experiences as well as sharing anonymized data of their experiences for open science (Bisol et al., 2014).

To reinforce this exploratory approach, we frame each instantiation of the XB model as an “experiment in a box”, an XB. By experiment, we are explicitly not referring to the current health science use of that word, which connotes the use of randomization to enable a robust causal inference. As said, we use “experiment” in a colloquial sense as the colloquial concept aligns with the experience we offer. This framing as “experiment” has several SDT related advantages: it reinforces the autonomy of the Experimenter as the one in control, not the one being controlled or told what to do. The nature of an experiment is also time-limited, thus enabling a person to “try it out before committing” to a particular health protocol. That approach is in contrast to the dominant paradigm that assumes one will choose and follow a strategy forever. The “box” part of the Experiment in a Box is the guidance the XB provides to test and evaluate the heuristic and to translate it in the process from a general heuristic to personalized health knowledge skills and practice (KSP). The box is where the key distinction from the more general personal science framework occurs.

KSP is important in the XB model. Knowledge is prior information learned by others or oneself relative to the relationship between actions and outcomes, both positive and negative. Skills are strategies, approaches, and actions one actively engages in to achieve desired goals. These skills can range from the concrete development of one’s physical ability to do an action (e.g., shoot a basketball into a hoop) to one’s self-regulatory capacity to define, enact, monitor, and adjust one’s actions in relation to a desired state. Practice has two associations, as pointed to in the introduction. The first is simply the repetitions necessary to be able to use a skill and apply knowledge effectively. The second is the incorporation of these skills as something that is deliberately, actively and consciously part of one’s life.

In the first case, practice involves cultivating personal knowledge, which includes both that which can be expressed and what may be tacit knowledge, that is, knowledge “known” by the person that may not be conveyable via language (e.g., how to kick a football or play a chord on a guitar; building one’s capacity to know how to adapt appropriately in various contexts). For example, one general health heuristic for a healthy sleep practice might encapsulate actionable knowledge as: “ensure that the environment where you sleep is dark when sleeping”. The skill is the ability to execute that knowledge in diverse contexts. It might include something as simple as ensuring lights are off or blocking light leaks from windows. The more diverse the sleep contexts in which one has solved the dark room challenge, the broader the options one can draw upon to execute this heuristic across contexts. By extension, a person’s robust practice across contexts leads to enacting practice agility, a significant component for “resilient” (Feldman, 2020) health practices. As a general health heuristic becomes tested and refined by/for each person across contexts, it becomes an increasingly personal and robust health heuristic, hence a personal health heuristic. For example, one may find that 1) dark rooms improve their sleep and 2) their best path to a dark room at home is a sleeping mask, but on the road, traveling with a clothespin/peg to shutter a hotel curtain is essential. XBs are designed to help people develop this KSP experience/expertise.

Practice in the second sense is also a way of framing an ongoing engagement with knowledge and skill work. One may have a professional practice, a spiritual practice, a physical practice. The concept of practice in this way situates the interaction as deliberate, intentional, and continuous. It also inherits, at least in part, the sense of practice as part of continual skill refinement. This sense of practice further differentiates our focus on heuristics from habits. A habit’s great strength is to be able to “set it and forget it” like “brush your teeth every day on waking.” A heuristic-as-template, on the other hand, helps guide and instantiate knowledge, and apply skills, in diverse contexts. Thus we practice skills like a tennis serve, at first to learn the technique and build the coordination until the skill is automated (Luft and Buitrago, 2005), at which point it actually changes where that pattern sits in our brain. After this, we practice the skill literally to keep those neural pathways cleared and strong (Olszewska et al., 2021). But the practice of the practice is to deliberately work to add nuance to the skill, to operate better, faster, across contexts. Such ongoing refinement is necessary, for example, around how we eat. The diet that supports muscle building in year one needs to be refined in year two, as the body adapts and circumstances change. Thus, one’s physical practice is both regular, one might say habituated, but critically, is also conscious, deliberately open to refinement to support continual tuning. We will see this latter sense of a practice in KSP as part of the insourcing we have been developing the XB framework to help establish and support.

The above section lays out the general thinking that guided how we designed our initial explorations into XB. In this section we present how we iteratively refined and tested our approach over three studies. We had several goals in these studies. A key goal was to see whether health skills tested in an XB are effectively insourced. We define that in terms of a recognition that the specific health skill a person experimented with is used over time. Further, we also sought to see if people insourced the more general skill of self-experimentation. Do people report continuing to try out different generic heuristics they hear of in their lives, as the XB framework suggests they do? In addition to the behaviors we were seeking to observe, we also wanted to gain insight into perceived benefit. As our focus is on insourcing and interoceptive awareness, we asked an intentionally broad question: does a person feel better? Further, do they feel more capable in managing their wellbeing?

Our study approach, which involved three studies over time with increasing levels of rigor, was grounded in the classic scientific logic of assumption articulation and testing (i.e., iteration) coupled with triangulation toward consilience. Specifically, given the stage of this approach, which, as we argue, is still very nascent, we deliberately started with a highly exploratory approach, to enable experience to guide our next steps. As we learned from experience, we refined our testing protocols. While no one study of this sequence provides definitive evidence of the utility of the XB approach, the similar patterns we saw across different populations, ways of measuring, and ways of supporting an XB experience (i.e., consilience) are, we contend, suggestive of the value of further study and exploration into the XB framework.

In addition, our iterative study approach was inspired by the ORBIT model (Czajkowski et al., 2015) for behavioral intervention development. In particular, studies one and two were both variations of a proof-of-concept trial, with study one used to help refine XB (i.e., the end of phase I of the ORBIT model), and then to gather evidence of real-world impact relevant to the outcome of interest in study two, which is the start of phase II of the ORBIT model, focused on justifying the need for a more rigorous clinical trial. Even in our third study, we used more of a proof-of-concept formalism for evaluation, which explicitly does not use statistical analyses between groups. We used iterative proof-of-concept studies both because this is the stage of development we are at (define, refine, test for a meaningful signal), and because this approach is increasingly recognized as a more appropriate method for exploration than the increasingly debunked strategy of running underpowered randomized controlled trials and conducting corresponding “limited efficacy” analyses of between-group differences. Indeed, under-powered pilot efficacy trials are increasingly being shown to produce poor evidence that actually stymies scientific progress (Freedland, 2020a; Freedland, 2020b). The short reason is that any effect size estimates gleaned from such under-powered trials are highly untrustworthy and, thus, largely not interpretable. To avoid these traps, a series of proof-of-concept studies, such as we conducted, fits with emerging best practices for work focused on defining, refining, and testing for a signal of an intervention. Further, when looked at together, they provide increased confidence, via the notion of triangulation and consilience, that the XB approach has been specified with sufficient rigor to enable limited replicability across populations.
As illustrated in ORBIT, this does not mean that any claims of efficacy or effectiveness relative to some meaningful comparator can be made at this time, but, again, that was not our purpose. Our purpose was to define, refine, and test for signal (i.e., phase I and the start of phase II of ORBIT). More details on each proof-of-concept study variation follow below.

Study One presents a low-fi exploratory study with a 12-member participant group to see simply if these short experiments were positively received, experienced as useful, and, in particular, led to lasting use after the study intervention ended. The results were overall positive, and we present a set of design insights from that study that formed the basis of a more formal interrogation in Study Two.

In Study Two, we translated the insights from Study One into a stand-alone application to be used by individuals, independent of a group context. Using a within-subjects design, we sought to assess if such an implementation was sufficiently robust to create a positive effect during the study, and could likewise instill lasting knowledge, skills and practice, as indicative of the creation of a personal health heuristic, beyond the end of the main study.

The outcomes of the study were overall positive. In Study Three, therefore, we sought to test our assertion that the key elements of XB (the framing of an experiment, and the support to regularly evaluate and reflect on its utility) actually enabled XB success beyond simply making good practice guidance readily accessible. To this end, we ran a formative between-subjects randomized study to test our hypothesis that an XB approach would be more effective at promoting engagement with health practices compared to a version that mirrors standard mechanisms of offering health support. For our test we reused the SleepBetter XB from Study Two and created a similar app that offers knowledge (i.e., information about sleep), details sleep health behavior skills (i.e., sleep hygiene), and invites a person to try any one of these practices for a week, but does not provide the self-regulatory skills exploration operationalized by the XB model, nor a structure that guides experimenting with one’s practice. While we imagined that the more supportive approach would be better than the current standard for sharing health practice building, the strength of the difference in responses between the two conditions underpinned the value of the XB.

In Study One, we worked with 12 participants from a national advertising organization over 6 weeks. As creativity is an essential quality for advertisers, the motivation for participants was their interest in improving their health to improve their creativity. As measuring creativity directly is notoriously messy (Thys et al., 2014), we drew on related work that shows both: 1) how cognitive executive functioning areas of the brain map to associated areas for creativity; and 2) that fitness practices enhance those areas of the brain (schraefel, 2014). From that, we proposed the use of validated cognitive executive functioning tasks to demonstrate quantitative benefit, along with participants’ qualitative self-reports of their experience. Participants carried out several of these tests prior to beginning the formal study and after each week. These included Stroop tests, tapping tests, and memory tests (Chan et al., 2008).

In this exploratory study, we focused on offering participants a weekly “taster” of five fundamental health practices for wellbeing we called the “in five” of move, eat, engage, cogitate, and sleep, detailed in (schraefel, 2019). In the six-week engagement, week one was a preparatory week to learn about the study and how it would run each week. In each of the 5 weeks following, the group ran an experiment on one of the in five. At the start of each week, the team manager sent around a document we co-wrote, with descriptions of the experiments and instructions for the protocol, asking people to track their practice/experiences at least once a day. We did not specify how to do this tracking; we were interested in what people would find important to meet that observation requirement. The manager was very good about sending out reminders every other day to make sure people had logged something about their experience for that study. To align these experiments as much as possible with the group’s motivation around creativity and design, we also named these experiments with colors rather than health concepts. For example, the Black Box was the Sleep protocol; the Green Box was for Eat, the Blue Box was for Cogitate, the Red Box was for Engage, and the Yellow Box was for Move. Table 1 lists each of the experiments, and their associated protocols.

In this first study, the experiments for each week were predefined, but we emphasized choice in the variety of ways the heuristic could be implemented. For example, in the Green Box, the heuristic was “up your green and red”, green being green vegetables and red being any protein. The experimental protocol was: “eat any time you wish, as many times in a day as you wish, but any time you eat, for the five-day work week, have some green veg and some kind of protein before you eat anything else, any time you eat”. As a group, prior to the Green Box start, we reviewed what constituted a “green veg” and what a protein was. To reduce any sense of sacrifice around eating in the experiment, the approach was also deliberately additive rather than subtractive: “as long as a green and protein are present, add anything else after that; and also have the green and protein before eating anything else you add”. We also acknowledged that this was not likely how people would continue to eat after the experiment, but that, as an experiment, this was to ensure a dose effect in the given time.

At the end of each week, we asked participants to capture: 1) what did you learn/experience? 2) based on the effect experienced, how would you turn this into a part of your life: how would you create a personal health practice (a personal health heuristic) from this experiment? 3) what are any questions you have right now about implementing that practice? In our Black Box for sleep experiment, the kinds of questions we would get were: “Sleeping till I wake feels great; how do I do this on days when I want to go out with my friends?” This led us to discuss sleep debt, recovery, and also balancing values like social interaction for wellbeing with rest and recovery. In the Yellow Box, we learned that people were reluctant to be seen leaving their desks so frequently lest their colleagues perceive them to be slacking off. This response offered a powerful insight for managers in terms of the interventions needed to support movement as a cultural practice to be encouraged.

At the conclusion of each week’s experiment, we met online via Skype to reflect together on outcomes from that week and to prep for the week coming up. In-between weekly meetings, we used an open-source online bulletin board to share observations, respond to questions, and add reminder requests to record experiences. As noted, participants were also invited to re-run their creativity assessments once a week, post each experiment.

In this study, we did not ask participants for their logs or their creativity assessment data: that, we agreed at the outset, was personal, and we were keen for as many people on the team as possible to feel safe working with us as co-explorers, not subjects. We agreed that material posted on the forum, including responses to the three questions asked each week, above, would be shared among all of us in our weekly meetings, and that our final interviews would be used for our analysis. To this end we asked participants to share as many of their insights as they felt comfortable sharing, to help inform the design process.

Overall, the consensus was that the process was interesting and surprising in positive ways. The group even made a 3-min video to reflect on the experience (Ogilvy Consulting UK, 2014). From the forum posts and our weekly interviews, we gathered the following key points around factors affecting the utility of the XB approach:

1) Small repeatable doable doses; big effects. In developing the experiments, we were very careful to ensure that an effect could be felt within the week of its practice. We drew on our own professional backgrounds in health and sports science, consultations with colleagues who coach health practices professionally, and associated literature reviews to develop these protocols. Despite this preparation, we were surprised by the reported size of the experience given the conservative nature of the protocol. For example, in the Green Box, we were particularly concerned about whether any food intervention would have a noticeable effect within a week, as the usual report in the literature had been 14 days to show measurable results. It seems experiential results may come sooner: each participant reported, even before the week was up, changes in energy, and surprise at how easy it actually was to get a green and a protein in all the time when they had thought it would be hard. It seems that the dose size afforded by an experiment is also valuable: each time a person ate, they focused on the green/protein combination. This meant they were paying attention to this practice and its effect, both in the immediate eating experience and in reflection, and had many data points to draw upon by the end of that week. Thus, short, simple, multiple doses that are readily achievable (with preparation) and that will also create an experienceable effect within the period seem to be key.

2) Beyond knowing: creating space to do the practice. Participants also commented on the importance of direct experience of a practice they “knew” of but had not directly tried. For example, in our Black Box for sleep week, we worked with the Group’s management to request permission to let participants test the heuristic “wake up when you wake up” and thus to sleep as long as they needed, and to come into the office when they were up and ready, without penalty. Intriguingly, it took more effort from the manager to confirm with participants that this was OK, than it was to get that support in the first place. Once they had the space to embrace what became known as “kill the alarm”, they did. Again, they reported value around being asked to actually “test” the concept in the experiment. As one participant reported, “I’d always heard that 8 h of sleep was beneficial, but until I actually tried it, I had no idea how much of a difference it made.”

3) Unanticipated benefits—While some people expected they may have more energy or gain more motivation to stick with a health practice, they were surprised to find how quickly their mood also seemed to improve; and how easy these practices were to make a regular practice. One person’s surprise was in their colleagues, especially after the Red Box on Engagement where they were asked to take 3 min every day to listen to a colleague, without interruption. “I used to hate that person, but after listening to her and others, it was amazing, she became so much nicer; she stopped bothering me.” There may be an opportunity here for design to explore more deliberately unanticipated positive side effects associated with experimental experiences.

4) Overall effects appeared to be cumulative—Each week people reported positive experiences; after week three, most were reporting they were starting to notice positive differences in their mood, sense of wellbeing, energy, creativity and for some, their weight. This progressive experience across experiments may be another side effect that can be explored more deliberately in design: to cue up that there may not only be benefits from the time given to one experiment, but in spending this period—in this case six consecutive weeks—on health practices; there may be additional benefit just in the exploration of health for those several weeks. This experience in itself of having the permission space and support to focus on health KSP—irrespective of experimental outcomes—may foster a foundation for lasting health engagement.

5) Short exposure may lead to lasting practice. In follow-ups 3, 8 and 13 months after the study, participants were still using skills they had learned, translating them into practices that were working for them. For example, one participant shared that they attempt to get a full night’s sleep at least four nights a week so they can have “guilt-free socializing time” with their colleagues on weekends. The participant claimed the XB experience helped them explore and build a sleep-recovery approach to support their wellbeing. This approach was not taught by the Black Box, but the Experiment aspect of the study itself encouraged the person to keep testing practices to work for them.

6) Skills Debugging—Related to ongoing use of both skills and experimental “test the approach”, we found that, without prompting, participants used the skills from an XB to debug and retest their practices. One participant reaffirmed her initial results with one experiment that for her, a diet high in greens with sufficient protein feels good on numerous levels. She told us: “My parents visited for a week, and I just stopped doing Green and Red (a green and a protein with each feeding), and I ate what I’m like when I’m at home. Lots of bread, lots of pasta—no greens, very little red. I felt stressed; I reverted to type. When they left, I felt crappy and bloated and no energy. I decided to go back to red and green; the weight I put on had gone and I feel so much better. Maybe the stress is gone because the visit is over, but I think it’s a lot about the food.”

7) Preparation. This result is inferred from participants’ discussions about their practice: in many cases the way they described carrying out a practice drew from the preparation for it in the week preceding it. For the Blue Box, the experiment was “learn new skills you can practice daily, many times, for a total of at least 20 min”. Here, we heard about how people had put together tools they would need, for orienteering (“I’m directionally impaired”) or sketching, and where tools would need to be when setting up their “lab box” for the experiment. Likewise, for the Green Box, we heard how they would have containers and prepare vegetables for the week to come, again to have their lab ready to go. Preparation also helped illuminate challenges that we had not considered and needed to address on the design side. In the Yellow Box, we asked people to move away from their desk twice an hour and to keep moving for a few minutes each time. Operationalizing this was not a problem; fear about how their colleagues not in the study would feel about them walking away so frequently was a challenge we were able to mitigate by working with management ahead of that box’s week.

8) Diversity/Choice. In this study, the diversity of ways to explore health was also seen as a benefit; it let us convey how health is dynamic and that there are multiple options within multiple paths to approach it. Enabling people to determine how they would choose to implement a heuristic was also well received: “We could eat whatever green we wished, I learned about so many veg I’d never tried, it was great.”; “I wanted to learn how to play guitar, I didn’t know that was good for my brain, too.”; “after moving and sleeping, I just felt so much more creative.”

In sum, our work in this first exploration showed us that guided exploration of health-KSP in even short periods provided a basis for participants to build their own health heuristics. These short, focused activities led to enduring use without any further technical support.

Based on the richness of our results from our first study, our goal in this phase of development was to explore how we could translate that lo-tech, guided XB approach into a stand-alone digital intervention that could potentially reach and benefit more people. There was also growing concern at our University for students’ wellbeing, and the correlation of poor sleep with mental health challenges reported to be on the rise in this group (Milojevich and Lukowski, 2016; Dinis and Bragança, 2018). Therefore, we decided to explore how an XB could be redeployed to focus on one health attribute, in this case Sleep, while still building a sense of exploration and skills development. As well, we saw this XB implementation as an opportunity to complement the predominantly outsourcing-oriented work around sleep in HCI, as overviewed above, with a study of an insourcing approach.

In this single-focus XB, we were also able to shorten the engagement with the XB from 5 weeks across five topics to 15 days on one, where participants carried out three 5-day sleep experiment cycles. We facilitated agency by offering participants a suite of ten experiments to choose from to test sleeping better (Table 2). Further, participants could choose to carry out the same experiment for each cycle or choose a different one from the set of ten.
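The cycle structure described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the SleepBetter implementation (which the paper describes as an Android application): the names `Cycle` and `build_schedule` and the 1 to 10 numbering of the Table 2 experiments are assumptions made for the example; only the counts (three cycles, five days each, ten experiments) come from the text.

```python
from dataclasses import dataclass

CYCLES = 3           # from the text: three experiment cycles
DAYS_PER_CYCLE = 5   # from the text: five days per cycle
N_EXPERIMENTS = 10   # from the text: a suite of ten experiments


@dataclass(frozen=True)
class Cycle:
    index: int          # 1-based cycle number
    experiment_id: int  # hypothetical 1..10 numbering of the Table 2 experiments
    days: range         # study days covered by this cycle


def build_schedule(choices):
    """Map one experiment choice per cycle onto study days 1..15.

    The same experiment may be chosen for every cycle, or a different
    one each time, as the study allowed.
    """
    if len(choices) != CYCLES:
        raise ValueError(f"expected {CYCLES} choices, got {len(choices)}")
    schedule = []
    for i, experiment_id in enumerate(choices):
        if not 1 <= experiment_id <= N_EXPERIMENTS:
            raise ValueError(f"unknown experiment id: {experiment_id}")
        start = i * DAYS_PER_CYCLE + 1
        schedule.append(Cycle(i + 1, experiment_id, range(start, start + DAYS_PER_CYCLE)))
    return schedule


# A participant who repeats experiment 2 and then switches to experiment 7.
for cycle in build_schedule([2, 2, 7]):
    print(cycle.index, cycle.experiment_id, list(cycle.days))
```

Keeping the choice per cycle, rather than per day, mirrors the way the study let participants commit to one heuristic long enough to feel an effect before deciding whether to switch.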

In research, self-perceived sleep quality is considered to be a more important marker of success than duration (quantity) (Choe et al., 2015a) alone (Mary et al., 2013; Mander et al., 2017; Manzar et al., 2018). Thus, our heuristics/experiments focused on practices that were assessed on a qualitative and experiential basis, rather than the currently dominant and possibly inaccurate (Ameen et al., 2019; Tuominen et al., 2019; Louzon et al., 2020) tracking approach of hours slept, number of interruptions, time assumed to be in a particular sleep state and so on. Our focus was: after trying this heuristic, how do you feel?

We developed SleepBetter, an Android OS smartphone XB application (Figures 1–3). The application includes 1) an experiment selection area; 2) self-reflection aids; and 3) an FAQ that provides information about the rationale/science behind each experimental protocol.

The application provides ten experiments for sleep improvement (also known as “sleep hygiene”), listed in Table 2. Each experiment is grounded in sleep research, drawing on guidelines from the National Health Service (NHS), United Kingdom (Sherwin et al., 2016), and the Mayo Clinic, United States (Ogilvy Consulting UK, 2014), as well as related research (Bechara et al., 2003; Espie et al., 2014; Schlarb et al., 2015; Agapie et al., 2016; Daskalova et al., 2016). We also reviewed this set with sleep researchers.

Each day at the same time, participants were asked to respond to a short questionnaire (Figure 1) about their sleep experience the previous night, including: how they felt when they woke up, how they felt before going to sleep, their mood, and their perceived concentration. Each day they were also asked, summatively, “Do you feel better or worse than yesterday?” (Figure 2). Each question was set on a five-point Likert scale. Figures 1, 2 show examples of these representations.

A free-form reflection diary supported text entry for any annotations participants wished to make each day. Drawing on Locke and Latham’s approach to goal setting for motivation (Loke and Schiphorst, 2018), we called this space a Goal Diary so that participants could use it to note a desired outcome from each experiment and reflect on its effect. Participants could see graphs of daily progress based on their questionnaire answers; they also had a calendar (Figure 3) with circles around dates to show completed experiments. Circles were also colour-coded against a selected Mood state: greens (better); reds (worse).

As noted, given our focus on university students’ wellbeing, our inclusion criterion was being a university student. To connect with this group, we used social media to recruit a convenience sample; the 25 final participants had a mean age of 21.8 (SD = 1.28). All participants identified sleep as an interest for themselves and agreed they wished to participate in the study, either to learn more about sleep practices or because they wanted to improve their own sleep. A limitation of the study group is that, in this convenience sample, all participants identified as male.

This is a within-participants study with three phases: A, B, and C. Phase A, which took place over a 6-day period, included initial sign-up, consent gathering, apparatus set-up, and a baseline questionnaire. Phase B was the XB intervention itself, which lasted 15 days and was broken into three 5-day “experiment” cycles. At the end of Phase B, one-on-one tape-recorded interviews took place, subject to participant schedules, within 10 days of the intervention. Phase C was a post-intervention survey covering self-reported wellbeing and sleep practices, as well as reflections on the XB experience, administered 7 months after Phase B, when all use of the application had ceased for 6 months. We also note that, of the 25 participants who completed the study, we contacted for Phase C only the 16 who had explicitly stated in Phase B that they agreed to be contacted for a follow-up at a later date.

On completion of Phase A, Phase B started. Via the app, participants were presented with the list of experiments from Table 2 and were informed that they could choose any experiment from the set but would not be able to change it until the 5-day period for that experiment was complete. To reduce the burden of having to remember to engage with the app, the app sent reminders to complete the day’s questionnaire. The reminder also encouraged exploration of the FAQ for questions about the experiments in general or sleep in particular.
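The Phase B structure described above (15 days, three 5-day cycles, with the chosen experiment locked until its cycle ends) can be illustrated with a minimal sketch. This is not the SleepBetter implementation, which is not shown in the paper; the class name, method names, and error handling are hypothetical, and only the cycle lengths and the experiment codes (e.g., C1, L1) come from the study description.

```python
from dataclasses import dataclass, field

CYCLE_LENGTH_DAYS = 5  # each experiment cycle lasts 5 days (from the study design)
NUM_CYCLES = 3         # Phase B has three cycles, 15 days in total


@dataclass
class PhaseBSchedule:
    """Hypothetical model of experiment selection during Phase B."""
    # Maps cycle index (0, 1, 2) to the experiment code chosen for that cycle.
    choices: dict = field(default_factory=dict)

    @staticmethod
    def cycle_for_day(day: int) -> int:
        """Return the 0-based cycle index for a 1-based study day."""
        if not 1 <= day <= CYCLE_LENGTH_DAYS * NUM_CYCLES:
            raise ValueError("day is outside the 15-day Phase B window")
        return (day - 1) // CYCLE_LENGTH_DAYS

    def choose(self, day: int, experiment_code: str) -> None:
        """Record a choice; it cannot be changed until the cycle is over."""
        cycle = self.cycle_for_day(day)
        if cycle in self.choices:
            raise RuntimeError("experiment is locked until this 5-day cycle ends")
        self.choices[cycle] = experiment_code


schedule = PhaseBSchedule()
schedule.choose(day=1, experiment_code="C1")  # first cycle: days 1-5
schedule.choose(day=6, experiment_code="C1")  # re-running the same experiment is allowed
schedule.choose(day=11, experiment_code="L1")  # or switching at a cycle boundary
```

Keeping the lock at the level of the cycle, rather than the day, mirrors the rule that participants could switch or repeat experiments only at the 5-day boundaries.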

The main measurements during Phase B were the daily questionnaires which had two parts: protocol questions and state questions. The protocol questions were specific to each experiment. For example, for experiment C1 (Table 2) the questions included: “What time was your last coffee?” and “What time did you go to bed?” State questions focus on general sleep performance, such as: ease of falling asleep, sleepiness or alertness during the day, factors in mood, appetite and concentration ratings—all factors affected by sleep. As this study is exploratory rather than confirmatory, we drew on questions where the effect of a sleep intervention has been documented within days. These questions were drawn from established sleep assessments (Espie et al., 2014; Ibáñez et al., 2018) to map to the brevity of our intervention period.

After each of the three 5-day experiments, participants were offered the opportunity to choose a new experiment or re-run their current experiment. They were also given an additional questionnaire on day five. This questionnaire explored:

1) What attracted them to the experiment they just completed;

2) Their experience, prompting them to reflect on its perceived effectiveness in terms of how they felt—and if they were changing or sticking with their current experiment for the next iteration;

3) What factors seemed interesting to them about staying with or changing experiments.

We emphasize that our goal in analyzing the data captured from each of these questions was exploratory rather than confirmatory: to see the ways in which our design approach supported our aspiration of connecting brief practice with a felt sense of benefit. To that end, we present findings and discussion below.

Thirty-five participants were initially recruited, of whom 25 completed the study. Of the ten who dropped out, four did so after signing up, when they could not run the app on their particular phone’s OS version; two reported that, due to changed circumstances, they could not participate; two gave no reason; and two dropped out when they changed phones during week one of the study. In sum, eight people dropped out during the pre-study set-up phase and two within the first 2 days of the study, having effectively null engagement with the intervention. As such, they have not been included in the reported data set.

The overall change or benefit of the XB can be assessed in two ways: 1) as a perceived effect from pre-experiment assessment (what we call here Phase A) to post-experiment assessment (Phase B); and 2) by uptake and ongoing use of protocols over time, post-experiment (Phase C).

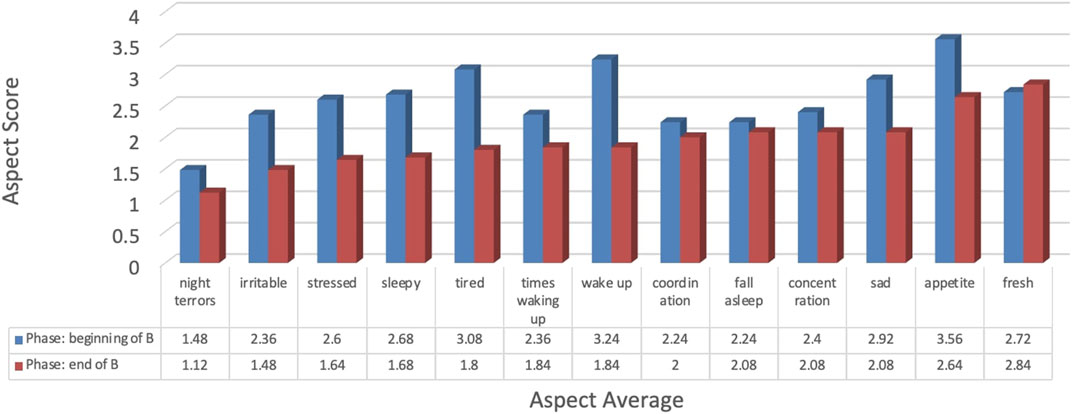

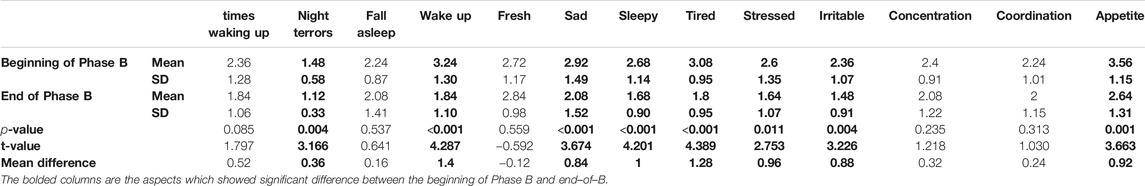

There was a statistically significant difference between Phase A and Phase B. The assessment comprised 13 questions, used in sleep study assessments, about perceived personal state and sleep quality. Each question was scored on a five-point scale, where a lower score was better than a higher score, for a maximum score of four per state (Figure 4, Y axis). The mean Phase A score was 33.4 (SD = 6.52). The mean score on the final questionnaire at the end of the study was 25.44 (SD = 7.04), t = 5.452, p < 0.001. Cohen’s d for the difference between these means is 1.17, indicating a strong effect. Results are presented in Table 3, where questions with a significant effect are highlighted.
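The reported effect size can be checked from the summary statistics alone. The sketch below assumes Cohen’s d was computed using the root mean square of the two standard deviations, a common convention when both measurements have the same number of participants; the paper does not state which variant was used, and the function name is illustrative.

```python
import math


def cohens_d(mean_a: float, sd_a: float, mean_b: float, sd_b: float) -> float:
    """Cohen's d with the pooled SD taken as the root mean square of both SDs.

    Assumption: equal sample sizes for the two measurements, so the pooled
    variance is the simple average of the two variances.
    """
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd


# Summary statistics as reported in the text (lower scores are better).
d = cohens_d(mean_a=33.4, sd_a=6.52, mean_b=25.44, sd_b=7.04)
print(round(d, 2))  # 1.17
```

Under this assumption the result matches the reported value of 1.17. The reported t statistic cannot be reproduced in the same way, because a paired test also needs the standard deviation of the per-participant differences, which is not given in the text.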

FIGURE 4. Score differences across all participants between the beginning of Phase B and end-of-B on perceived state questions.

TABLE 3. Statistical computations between the beginning of Phase B and end-of-B on perceived state questions.

Looking within these measures, we see significant positive changes in sleep quality attributes: nightmares/night terrors were reduced. Mood categories of perceived tiredness, sleepiness, stress and irritability improved, as did appetite (cravings are reduced). The other categories: number of times waking up, ease of falling asleep, concentration and coordination changed positively but not significantly. The only slight negative change, though not significant, was feeling fresh when waking up (Table 3), which may be due to this being one of the first times people were focusing on this factor regularly.

Of the 25 people who completed the study, 16 agreed, 7 months later, to complete a follow-up survey of their experience with the SleepBetter XB and of how this experience may have continued to affect their sleep practices. This questionnaire was entirely self-report, and so suffers from the known issues of overly optimistic assessment of performance. Even given that, we were surprised by the degree of at least reported persistence of practice. Comparing their sleep quality before and after the study, 75% now rated it as “good” and 19% as “very good”, well up from their Phase A baselines.

In one question we relisted Table 2 experiments and their “tips”, like “getting at least 7 h of sleep a night”. All 16 respondents reported remembering all of these tips, with 15 continuing to use them at least some of the time. Of the respondents, 81% reported continuing to use the tested protocols at least three times a week, with 43.8% reporting use of protocols every day. When asked if they had continued to use the app post-study, only four reported yes: one used it for a month “to try other experiments,” two used it for a few days, and one for an additional week. This suggests the main and lasting effect was created during Phase B of the study. There is also evidence, however, that people explored practices from the XB experiments after the study. For example, the three most chosen experiments from Phase B were, from Table 2: L1, increasing bright light exposure during the day (19); S2, sleeping no less than 7 h per night (17); and C1, not drinking caffeine within 6 h of going to sleep (12). Of the Phase C respondents, while 12 reported getting more than 7 h of sleep/night since the study (Figure 5), only seven had followed that protocol (S2) during Phase B. More broadly, 75% of respondents agreed that they were sleeping better. Significantly, all but one of the Phase C respondents attributed these changes to their engagement with the XB SleepBetter study.

Two key questions for us were: 1) Are these small doses of how to use these protocols to feel better in the XB sufficiently strong to have a perceived immediate benefit; and 2) Are they sufficiently strongly felt to maintain these practices over time and without the app? The Phase C data suggest yes, at least among those who responded to our 7-month request (64% of our sample): XBs seem to support experience, effect, and practice over time, and once learned, practices are accessible enough not to be forgotten. Our post-Phase B interviews and Phase C open questions, reported below, were designed to explore which attributes of the XB interaction were experienced as supporting these.

In an effort to be as unbiased as possible in the interviews, we asked about initial expectations of the app and what interested people in participating, and also asked about the experience with each section of the app: aspects that worked; what could be improved; length of an experiment; reasons for switching experiments or not; and what participants felt they learned about themselves during the study. The following uses ID codes for participants.

The inspiration for our work has been to connect the testing of a health protocol with the lived experience of feeling better as a result. After 7 months, 50% of our Phase C respondents said the most important aspect of what XB facilitated was the opportunity to “try a sleep practice, rather than being told it’s something you can do.” When asked what aspects of the app let them learn about sleep that they would not otherwise have learned, they pointed to their well-being results from 1) experiencing how even very small changes can make a difference; 2) how important sleep is to performance; and 3) what doesn’t make a difference. As A9 put it: “I also tried other experiments, but they didn’t make any big impact so I didn’t really stick to them.”

Overall, the opportunity to choose from a set of protocols to test was received positively. As A9 put it: “It was nice that I could try different experiments and see which is working better for me.” In other cases, participants mapped to experiments they thought were most related to their sleep issue. A13: “I chose the experiment based on what I thought my problem was.” A16 said, “I chose the ones that would be related to my everyday needs and activities.” One feature, the opportunity to select experiments rather than be “told what to do,” was seen as particularly valuable. A4: “I think it is a good idea to let people choose instead of forcing something onto them because different things work for different people, letting them discover what works for them and then giving them the chance to stick with it if they found something good, or switch to something else if it didn’t work.” A2 framed this as empowering: “Because I was the one choosing the experiment made me really more motivated so it’s not like someone was telling me what to do.” All ten experiments could have a similar effect/benefit, but enabling participants to assess which might be easier for them to test or adopt was also a plus. A12: “making me choose which (experiment) I was more comfortable with would help me see which one affects my sleep the most, by going through each one at a time.” The length of time for the experiments also seemed appreciated. As A1 put it: “it gave participants enough time to get used to the experiment and feel a difference and be able to decide which one suits them better.” Implicit within these comments is also an appreciation that they are not being told what works but are testing effects for themselves.

Some participants suggested that they had such a benefit from an experiment that they wanted to keep using it even while trying out another experiment in the following round. A12 stated that “after seeing that one experiment worked, I tried to keep it even though I switched to another one.” Indeed, after the first 5 days, 73.1% of the participants switched to another experiment. However, on the second experiment change, 69.6% remained with their current experiment for the last 5 days. Many of these said they would have liked to stack experiments together.

Some participants recommended that the app narrow down the set of experiments offered, based on captured personal information, to make the selection even less of a choice. A13 suggested that we “add a feature that would make the app choose the experiment for the user, based on their answers in the surveys and evolution.” Others, however, wanted an even wider set in order to find experiments even more in keeping with their daily lives. As A2 observed: “one thing to change about the app is the number of experiments available and the number of suggestions that the user has because for me personally half of the experiments were not applicable so I had to choose between only two or three options.”