ORIGINAL RESEARCH article

Front. Comput. Sci., 12 July 2021

Sec. Human-Media Interaction

Volume 3 - 2021 | https://doi.org/10.3389/fcomp.2021.659600

This article is part of the Research Topic Cognitive Infocommunications.

Virtual reality (VR) is a powerful technological framework that can be considered as comprising any kind of device that allows 3D environments to be simulated and interacted with via a digital interface. Depending on the specific technologies used, VR can allow users to experience a virtual world through different senses: most often sight, but also touch, hearing, and smell. In this paper, it is argued that a key impediment to the widespread adoption of VR technology today is the lack of interoperability between users’ existing digital life (including 2D documents, videos, the Web, and even mobile applications) and 3D spaces. Without such interoperability, the 3D spaces offered by current VR platforms seem empty and lacking in functionality. To improve this situation, it is suggested that users could benefit from being able to create dashboard layouts (comprising 2D displays) for themselves in 3D spaces, allowing them to arrange, view and interact with their existing 2D content alongside the 3D objects. Therefore, the objective of this research is to help users organize and arrange 2D content in 3D spaces according to their needs. To this end, following a discussion of why this is a challenging problem, both from a scientific and from a practical perspective, a set of operations is proposed that is meant to be minimal and canonical and that enables the creation of dashboard layouts in 3D. Based on a reference implementation on the MaxWhere VR platform, a set of experiments was carried out to measure how much time users needed to recreate existing layouts inside an empty version of the corresponding 3D spaces, and the precision with which they could do so. Results showed that users were able to carry out this task at an average rate of less than 45 s per 2D display, with acceptably high precision.

The concept of virtual reality (VR) integrates all technologies that enable the simulation of three-dimensional environments, thereby creating an artificial spatial experience for its users (Kim, 2005; Neelakantam and Pant, 2017). Although VR is most often associated with immersive VR headsets, which are gaining popularity in a wide range of sectors including the gaming industry, real estate, and manufacturing (Zhang et al., 2018), even 3D desktop environments can be regarded as VR in their own right (Berki, 2020).

In the past few years, it has often been argued that VR has the potential to become a highly practical tool in daily life, as has been the case with smartphones and other ICT technologies, by extending human capabilities and further contributing to the “merging” of human capabilities with those of increasingly intelligent ICT systems (Baranyi and Csapó, 2012; Baranyi et al., 2015; Csapó et al., 2018). The use of VR can thus be expected to extend far beyond its origins in “electronic gaming” and to become widespread in hospitals, schools, training facilities, meeting rooms and even assisted living environments for the elderly (Lee et al., 2003; Riener and Harders, 2012; Slavova and Mu, 2018; Corregidor-Sánchez et al., 2020), transforming the common experience and effectiveness of humans in a variety of domains. Further, VR can help humans learn about and work in replicated environments that would be hazardous under normal physical circumstances (Zhao and Lucas, 2015). Based on the above, several authors have concluded that VR can be seen as much more than a source of entertainment or a tool of visualization, and can in fact serve as a versatile infocommunication platform (Christiansson, 2001; Vamos et al., 2010; Galambos and Baranyi, 2011; Baranyi et al., 2015; Csapó et al., 2018; Pieskä et al., 2019).

On the other hand, it is necessary to point out that the widespread adoption of VR still faces challenges, which mostly have to do with the practicality of the technology in terms of its ability to integrate seamlessly with users’ existing digital lives. Although VR has the potential to go beyond providing appealing visual experiences by influencing how information is encountered, understood, retained and recalled by users (Csapó et al., 2018; Horvath and Sudar, 2018), this is only possible if a data-driven spatial experience is emphasized first and foremost.

Generally speaking, our investigation in this paper focuses on using desktop-based VR to integrate 2D digital content, since performing tasks using this kind of VR architecture carries benefits in terms of ease of access (widespread availability of laptops) and suitability for long periods of work (Lee and Wong, 2014; Berki, 2020; Bőczén-Rumbach, 2018; Berki, 2019). Specifically, the main problem that we discuss here is the use and reconfiguration of dashboard layouts inside 3D spaces, i.e., how 2D display panels can be created and edited while a user is situated inside a 3D environment. As described by Horváth (2019), the evolution and increased use of 3D technologies bring with them the possibility of using applications that are significantly more powerful than 2D applications; however, 2D content remains a crucial part of 3D environments, enabling the integration of video, text, and web content (Horváth, 2019) and rendering the environments useful for learning, research, and even advertising (e.g., Berki (2018) confirms that 2D advertisements displayed in 3D VR spaces have more effect on users than when they are displayed as banners on traditional webpages). Thus, we conclude that VR spaces provide a more seamless experience if existing 2D content can be integrated into the 3D environment and spatially reconfigured according to users’ needs. As we will see, this is not without challenges; hence, we propose a set of operators and a workflow for the in-situ editing of dashboard layouts inside 3D spaces.

The paper is structured as follows. In Section 2, we provide an overview of existing desktop VR platforms and their use of dashboard layouts for visualizing 2D content. This section also introduces the MaxWhere platform, which served as the environment for the reference implementation of our layout editing tool. In Section 3, we briefly discuss the scientific challenges behind in-situ editing capabilities for 3D spaces. Although we focus primarily on the placement and orientation of 2D displays, this discussion also applies more generally to editing the arrangement of 3D objects. In Section 4, a set of operations and a workflow are proposed for editing dashboards in 3D spaces. The operations are intended to be complete and minimal (i.e., canonical). Finally, in Section 5, we describe the results of an experimental evaluation based on the reconstruction of three different virtual spaces by 10 test subjects, and conclude that the proposed operations and workflow offer a viable approach to editing 2D content in 3D virtual spaces.

As mentioned earlier, desktop VR is gaining increasing traction in education and training environments, due to its capability of supplying real-time visualizations and interactions inside a virtual world that closely resembles its physical counterpart, at much lower cost than using real physical environments (Lee and Wong, 2014; Horvath and Sudar, 2018; Berki, 2020). A number of VR platforms can be used for such purposes. For example, Spatial.io enables people to meet through augmented or virtual reality, and to “drag and drop” files from their devices into the environment around them, with the aim of exchanging ideas and iterating on documents and 3D models via a 2D screen. Users can join Spatial.io meetings even from the web with a single click, and can then start working in a live 3D workspace by uploading 2D and 3D files.

Another example of a desktop VR platform well suited to educational purposes is JanusXR / JanusWeb, a platform that allows users to explore the web in VR by providing collaborative 3D web spaces interconnected through so-called portals. JanusVR allows its users to create and browse spaces via an internet browser, a client app or immersive displays, and to integrate 3D content, 2D displays (images, videos, and links), as well as avatars and chat features into the spaces. Although Janus VR as a company is no longer active, the project lives on as a community-supported service.

A similar philosophy is reflected in the Mozilla Hubs service, which its creator, Mozilla, describes as a service for private social VR. This service is also accessible on a multitude of devices, including immersive headsets and web browsers. Spaces can be shared with others and explored collaboratively. 2D content can be dragged and dropped (or otherwise uploaded) into the spaces, and avatar as well as chat (voice and text) functionality is available.

Generally speaking, a common feature of such platforms when it comes to arranging 2D content is that either the possible locations and poses for the 2D content are pre-defined (i.e., when the user enters the space, placeholders into which the supported content types can be uploaded are already available), or users are allowed to “drag and drop” their local files into the 3D space, where the files then appear “in front of” the camera, or both. In the latter case, the 2D display that is created inside the space is oriented in whatever direction the user is facing. In some cases, a geometric fixture (a “gizmo”) can appear overlaid on the object, which can be used as a transformation tool to move, rotate and resize the object (Figure 1).

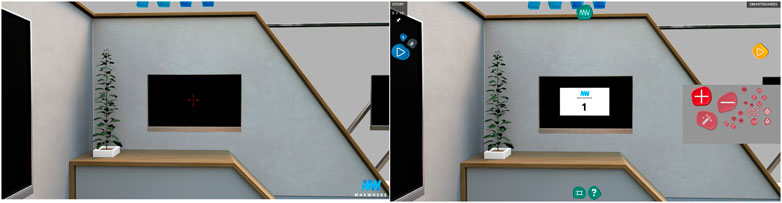

The work proposed in this paper is implemented on the MaxWhere desktop VR platform. MaxWhere was created with the purpose of enabling interaction with a large variety of digital content types (both 2D and 3D) in 3D spaces. MaxWhere can be accessed as a “3D browser” (a standalone client software compatible with Windows and macOS) that can be used to navigate within and between 3D spaces available via a cloud backend. MaxWhere enables both interactive 3D models and 2D content (including videos, images, documents, webpages, and web applications, as well as social media platforms) to be integrated into the 3D spaces (see Figure 2). 2D content in MaxWhere is displayed in so-called “smartboards”, which integrate a Chromium-based rendering process and provide an interface similar to commonly used web browsers.

MaxWhere is already widely used in education owing to its capabilities for information sharing, visualization and simulation (Kuczmann and Budai, 2019; Rácz et al., 2020); it is therefore a strong candidate for replacing, or at least supplementing, traditional educational methods. It has been shown that users of MaxWhere can perform certain very common digital workflows with 30 percent fewer user operations and up to 80 percent fewer “machine operations” (by providing access to workflows at a higher level of abstraction) (Horvath and Sudar, 2018), and in 50 percent less time (Lampert et al., 2018), due to the ability to display different content types at the same time. MaxWhere is also a useful tool for organizing virtual events such as meetings and conferences (more than one recent IEEE conference has been held on the MaxWhere platform, including IEEE Sensors 2020 and IEEE CogInfoCom 2020), and even for providing professional teams with an overview of complex processes in industrial settings (Bőczén-Rumbach, 2018).

The current version of MaxWhere does not include features for changing the arrangement of the 2D smartboards within spaces (although programmatically this is possible)—instead, the locations where users can display content are pre-specified by the designer of each space. Informally, many users the authors have spoken to have indicated that the ability to modify the arrangements would be a welcome enhancement to the platform. Accordingly, our goal is to propose a methodology that is universal and effectively addresses the shortcomings of the ‘gizmo approach’, which are described in Section 3.

Despite the advantages of 3D spaces, both in general and in MaxWhere, a key obstacle in terms of integrating 2D content into 3D spaces is that users cannot change the existing 2D layouts (comfortably or at all), thus their thinking is forced into the constraints of the currently existing layouts, leading to reduced effectiveness and ease of use. However, it is also clear that no single layout is suitable for all tasks. In MaxWhere, it is often the case that users report their desire to add “just one more smartboard” to a space, or to “move this smartboard next to the other one” for clearer context.

In the case of platforms other than MaxWhere, where layout editing is enabled using the so-called “gizmo approach,” a different set of challenges becomes apparent. When editing dashboard layouts in 3D, it is not trivial to decide how the camera (the user’s viewpoint into the space) should interact with the operations used to transform the displays. If a display is being moved towards a wall or some other object while the camera remains stationary, it becomes difficult to judge when the display has reached a particular distance from the wall or object, and, during rotation of the display, whether the angle between the smartboard and the wall or object is as desired. However, if the camera viewpoint is modified automatically in parallel with the smartboard manipulations, users will be unable to re-position themselves with respect to the objects and smartboards of interest as freely as they could if the camera viewpoint were independent of the manipulations. We refer to this key dilemma as the “camera-object independence dilemma.” In the dashboard layout editor proposed in this paper, we have attempted to resolve it by keeping the camera viewpoint independent of the operations, while helping to overcome positioning challenges through features such as snapping smartboards to objects and smartboard duplication.

Another challenge in the design of an in-situ spatial editing tool is how to bridge the gap between the mathematical, geometric and physics-based concepts generally used by professionals in the design of 3D spaces (e.g., axes, angles, orientations expressed as quaternions, friction, gravitation, restitution, etc.) and the terminology that laypeople are more accustomed to (e.g., left/right/up/down/forward/backwards, visually symmetrical or asymmetrical, horizontal/vertical alignment, etc.). The key challenge is to design an interface that is intuitive to everyone, not just engineers, while allowing the same level of precision as would be required in the professional design of 3D spaces.

Currently, the 3D spaces available in MaxWhere each contain a space-specific default layout with smartboards having a pre-determined position, orientation, size, and default content. Therefore, in each space, users are forced to use the existing smartboards, even if their arrangement does not fulfill the users’ needs, which can be unique to any given user and application.

As we have indicated, the objective of this work is to help users organize and arrange dashboard layouts and 2D content in 3D spaces. This can be achieved by designing a set of operations intended to be canonical, that is, minimal (both in the number of distinct operations and in the number of steps it takes to achieve a given result) but at the same time complete (allowing any layout to be created). The requirement of minimality necessitates the adoption of a workflow-oriented perspective, so that each individual 2D display can be placed into its final, intended pose by following the workflow and nothing else. In this way, the length of the arrangement process (per display) can be quantified at a high level. In turn, the requirement of completeness can be evaluated experimentally, by asking a set of test subjects to re-create a variety of existing dashboard layouts.

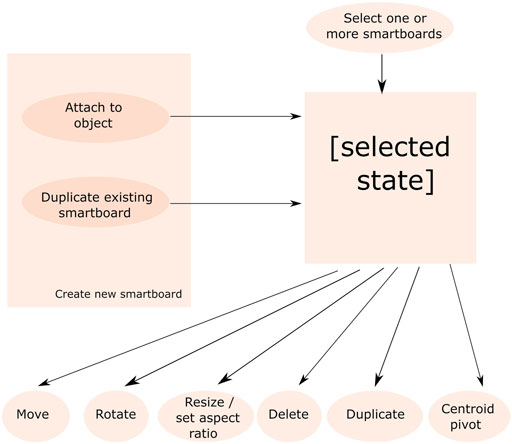

Figure 3 provides a brief overview of the workflow that served as a starting point as we developed the proposed canonical operations.

FIGURE 3. Workflow process behind proposed editing operations. The key idea behind the workflow is that once one or more smartboards are selected, they can be moved, rotated, resized, deleted, duplicated or rotated around their common centroid. Smartboards can be selected directly, or one will be selected if it is created anew (either via duplication or by attachment to an object).

As detailed in the figure, the first step in editing a layout is to either add a new smartboard and then select it for further manipulations, or to select an existing smartboard for further manipulations. It is necessary to select one or more smartboards before it becomes possible to modify them. By “modification,” we mean some combination of scaling, translation and rotation operations. The different operations can be described as follows:

1) The first operation, in the case where a new smartboard is added to the scene, consists of choosing a place and an orientation for the smartboard (Figure 4). Given that orientations are difficult to specify explicitly, the user is required to point the 3D cursor at a point on a surface inside the space (we refer to this point as the “point of incidence”), then switch to the 2D menu by clicking the right mouse button. This “freezes” spatial navigation, and the user can click on an Add icon in the menu to create the new smartboard. The editor component then creates the new smartboard, assigns a unique integer ID to it, and places it at the position and orientation determined by the point of incidence on the surface (via the normal vector of the surface at that point, as shown in Figure 4).

2) The second operation is that of selection, which allows users to select either individual smartboards, or groups of smartboards for further manipulation (Figure 5). Smartboards can be selected by toggling the selection mode (depicted by a “magic wand” icon in the 2D menu) and returning to 3D navigation mode, then hovering the 3D cursor over the given smartboard or smartboards. One can deselect individual or multiple smartboards by hovering the 3D cursor over them once again, or by toggling the selection mode again.

3) Once an individual smartboard, or a group of smartboards are selected, it / they can be further manipulated in terms of their position, orientation, size, and aspect ratio.

• One trivial manipulation is to delete the smartboard.

• Another is to duplicate it (only possible when a single smartboard is selected). In the case of duplication, the newly created smartboard is placed next to the originally selected smartboard.

• When it comes to manipulating position and orientation, the axes along which translation/rotation is performed depend on how many smartboards are selected. If a single smartboard is selected, it can be translated along, or rotated around, its local axes (the left/right, up/down and forward/backwards axes). If more than one smartboard is selected and the smartboards are therefore manipulated at the same time, the axes of manipulation are aligned with the global axes of the space. The reason for this is that several selected smartboards may face in different directions, so there is no single local axis for the left/right, up/down and forward/backwards directions. Further, if each smartboard were transformed along a different axis, the relative arrangement and orientation of the smartboards would change in unexpected ways.

• When it comes to setting the aspect ratio of one or more smartboards, three different aspect ratios can be chosen: 16-by-9, 4-by-3, and A4. In each case, the width of each smartboard is kept, and its height is modified according to the chosen aspect ratio. It is also possible to scale the smartboards to make them larger or smaller using two separate icons on the editor menu.

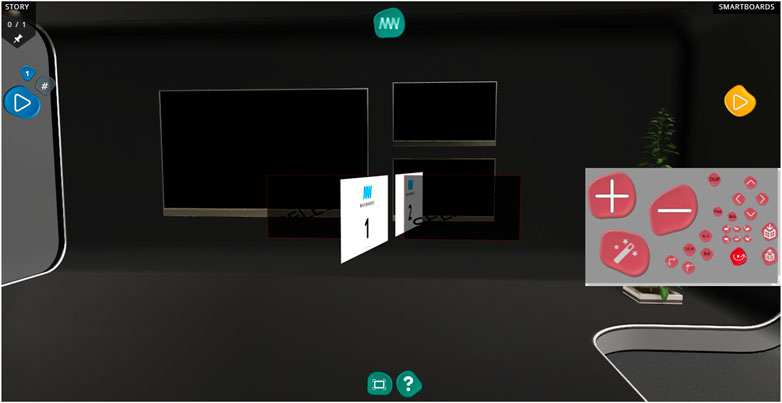

• Finally, an interesting and useful feature in the editor menu is the “centroid pivot” manipulation operation, which allows a group of smartboards to be rotated around their center of geometry. This enables users to mirror complete configurations (groups of smartboards) in different corners of the space without having to move and rotate them individually or recreate the whole arrangement in a different location (Figure 6).

4) One experimental but quite useful feature added to the editor is the “snap-to” feature. As mentioned earlier, when a new smartboard is created, it is added to the space such that its orientation corresponds to the normal vector of the surface at the point where it is added. However, users may later decide to move smartboards to different locations, or the surface may not be completely flat. In such cases, users can temporarily turn on a physics simulation (by pressing Ctrl + S, for “control + snap,” or Cmd + S on macOS), which applies a gravity vector to the given smartboard pointing in its backwards direction. In this way, any smartboard can be made to gravitate towards and snap onto a surface other than the one it was originally placed on.

5) At any point during the editing process, users may want to undo previous operations. This is possible by pressing Ctrl + U (or Cmd + U on macOS). Given the high precision of the manipulations (see also Section 4.2 below), which means that an edit can consist of many small steps, our implementation allows users to undo the last 150 operations.

6) Finally, the export / import icons allow users to save (in .json format) and load the layouts, and to share those layouts with others.
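Operations 5 and 6 both treat the layout as a piece of state that can be copied and stored. The Python sketch below shows one way to support them, assuming that a layout is held as a JSON-compatible dictionary. The schema (`space`, `smartboards`, `id`, `position`, `orientation`, `width`, `height`) and the names `UndoHistory`, `export_layout` and `import_layout` are hypothetical and are not taken from the MaxWhere implementation. The history is bounded at 150 entries to mirror the undo depth described above.

```python
import copy
import json
from collections import deque


class UndoHistory:
    """Bounded, snapshot-based undo: only the last `limit` states are kept."""

    def __init__(self, limit: int = 150) -> None:
        # deque(maxlen=...) silently drops the oldest snapshot when full.
        self._snapshots = deque(maxlen=limit)

    def record(self, layout) -> None:
        """Store a copy of the layout *before* an operation is applied."""
        self._snapshots.append(copy.deepcopy(layout))

    def undo(self, current):
        """Return the previous layout, or `current` if nothing is left to undo."""
        return self._snapshots.pop() if self._snapshots else current

    def __len__(self) -> int:
        return len(self._snapshots)


def export_layout(layout) -> str:
    """Serialize a layout (hypothetical schema) to JSON text for sharing."""
    return json.dumps(layout, indent=2)


def import_layout(text: str):
    """Parse JSON text and check that every smartboard pose is well formed."""
    layout = json.loads(text)
    for board in layout["smartboards"]:
        # Positions are (x, y, z); orientations are quaternions (w, x, y, z).
        if len(board["position"]) != 3 or len(board["orientation"]) != 4:
            raise ValueError(f"malformed smartboard {board.get('id')}")
    return layout
```

Storing whole snapshots is the simplest design to get right; storing each operation together with its inverse (the command pattern) would use less memory, at the cost of writing an inverse for every operation.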

FIGURE 4. Placement and addition of a new smartboard into the scene can be done by clicking the right mouse button to open the 2D “pebbles” menu and by clicking on the red plus sign.
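Operation 1 (placement at the point of incidence) and operation 4 (snap-to) both attach a smartboard to a surface. The Python sketch below illustrates the underlying geometry under stated assumptions: a smartboard is taken to face its local +z axis in its default state, orientations are unit quaternions in (w, x, y, z) order, and surfaces are approximated by planes given as (point, normal) pairs. The orientation of a new board is the shortest-arc rotation from +z onto the surface normal. The snap-to function replaces the physics simulation described in operation 4 with a plain raycast along the board's backward (-z) direction; it is a deterministic stand-in for illustration, not the editor's actual mechanism. All function names and the `gap_cm` and `max_cm` parameters are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # (w, x, y, z), unit length

# Hypothetical convention: in its default state a smartboard faces +z,
# so its local "backwards" direction is -z.
DEFAULT_FRONT: Vec3 = (0.0, 0.0, 1.0)


def _norm(v):
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("zero-length vector")
    return tuple(c / length for c in v)


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q."""
    w, u = q[0], q[1:]
    t = tuple(2.0 * c for c in _cross(u, v))
    ut = _cross(u, t)
    return tuple(v[i] + w * t[i] + ut[i] for i in range(3))


def shortest_arc(a: Vec3, b: Vec3) -> Quat:
    """Unit quaternion rotating direction a onto direction b."""
    a, b = _norm(a), _norm(b)
    d = _dot(a, b)
    if d < -1.0 + 1e-9:  # opposite directions: turn 180 degrees
        axis = _cross(a, (1.0, 0.0, 0.0))
        if _dot(axis, axis) < 1e-12:
            axis = _cross(a, (0.0, 1.0, 0.0))
        return (0.0, *_norm(axis))
    return _norm((1.0 + d, *_cross(a, b)))


def place_new_smartboard(point: Vec3, normal: Vec3, gap_cm: float = 1.0):
    """Pose at the point of incidence, facing along the surface normal.

    gap_cm is a hypothetical offset that keeps the board off the surface.
    """
    n = _norm(normal)
    position = tuple(p + gap_cm * c for p, c in zip(point, n))
    return position, shortest_arc(DEFAULT_FRONT, n)


def snap_backwards(position: Vec3, orientation: Quat, planes, max_cm=500.0):
    """Move a board along its backward axis onto the nearest plane behind it.

    planes is a list of (point_on_plane, normal) pairs; the old position is
    returned if no plane is hit within max_cm.
    """
    back = rotate(orientation, (0.0, 0.0, -1.0))
    best = None
    for p0, n in planes:
        denom = _dot(back, n)
        if abs(denom) < 1e-9:
            continue  # ray is parallel to this plane
        t = _dot(tuple(a - b for a, b in zip(p0, position)), n) / denom
        if 0.0 <= t <= max_cm and (best is None or t < best):
            best = t
    if best is None:
        return position
    return tuple(p + best * c for p, c in zip(position, back))
```

The shortest-arc rotation leaves the roll around the normal implicit. For a horizontal wall normal, the rotation axis works out to be vertical, so a board placed on a wall stays upright.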

FIGURE 5. Smartboard selection is performed by first toggling the selection mode (by clicking the magic wand among the red pebbles) and by hovering the mouse over the given smartboards in 3D navigation mode.
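The hover-based selection of operation 2 amounts to a small state machine. The Python sketch below assumes that the editor emits one hover-enter event each time the 3D cursor moves onto a smartboard (so resting on a board does not toggle it repeatedly), and interprets "toggling the selection mode again" as clearing the selection. The class and method names are hypothetical.

```python
class SelectionState:
    """Hover-to-toggle selection of smartboards in magic-wand mode.

    Assumptions: one hover-enter event per cursor entry, and manipulations
    apply to `selected` while the mode is on.
    """

    def __init__(self) -> None:
        self.mode_on = False
        self.selected: set[int] = set()

    def toggle_mode(self) -> None:
        """Magic-wand icon: switching the mode off deselects everything."""
        if self.mode_on:
            self.selected.clear()
        self.mode_on = not self.mode_on

    def on_hover_enter(self, smartboard_id: int) -> None:
        """Select a board on hover, or deselect it if it is already selected."""
        if not self.mode_on:
            return  # in plain navigation mode, hovering has no effect
        if smartboard_id in self.selected:
            self.selected.remove(smartboard_id)
        else:
            self.selected.add(smartboard_id)
```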

FIGURE 6. Using the centroid pivot, it is possible to rotate a group of smartboards around their center of geometry. In this case, the original location of the two smartboards is indicated by the greyed out rectangles. As can be seen, both smartboards have been rotated towards the left around their center of geometry.
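The manipulation rules of operation 3 can be sketched in Python as follows. The sketch assumes a hypothetical `Smartboard` record (center position in cm, unit-quaternion orientation in (w, x, y, z) order, width and height in cm) and a local-axis convention of x = right, y = up, z = forward. A single selected board moves along its own rotated axes, while a group moves along the global axes; the aspect-ratio operation keeps the width and recomputes the height; and the centroid pivot rotates each board's position about the group's centroid and pre-multiplies its orientation by the same rotation, so the boards keep their relative arrangement. Treating A4 as landscape and pivoting about the vertical axis (as Figure 6 suggests) are assumptions.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # (w, x, y, z), unit length

# Hypothetical local-axis convention: x = right, y = up, z = forward.
AXES = {"right": (1.0, 0.0, 0.0), "up": (0.0, 1.0, 0.0),
        "forward": (0.0, 0.0, 1.0)}
# Width:height ratios; treating A4 as landscape (297 x 210 mm) is an assumption.
ASPECT_RATIOS = {"16:9": 16 / 9, "4:3": 4 / 3, "A4": 297 / 210}


@dataclass
class Smartboard:
    position: Vec3     # center of the board, in cm
    orientation: Quat
    width: float       # cm
    height: float      # cm


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q."""
    w, u = q[0], q[1:]
    t = tuple(2.0 * c for c in _cross(u, v))
    ut = _cross(u, t)
    return tuple(v[i] + w * t[i] + ut[i] for i in range(3))


def quat_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b: rotation b followed by rotation a."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def translate_selection(boards, direction: str, step_cm: float) -> None:
    """A single board moves along its local axis; a group uses global axes."""
    axis = AXES[direction]
    for b in boards:
        d = rotate(b.orientation, axis) if len(boards) == 1 else axis
        b.position = tuple(p + step_cm * c for p, c in zip(b.position, d))


def set_aspect_ratio(boards, name: str) -> None:
    """Keep each board's width and derive its height from the chosen ratio."""
    for b in boards:
        b.height = b.width / ASPECT_RATIOS[name]


def centroid_pivot(boards, angle_deg: float) -> None:
    """Rotate a group about a vertical (y) axis through its centroid."""
    n = len(boards)
    c = tuple(sum(b.position[i] for b in boards) / n for i in range(3))
    half = math.radians(angle_deg) / 2.0
    r = (math.cos(half), 0.0, math.sin(half), 0.0)
    for b in boards:
        rel = rotate(r, tuple(p - ci for p, ci in zip(b.position, c)))
        b.position = tuple(ci + ri for ci, ri in zip(c, rel))
        b.orientation = quat_mul(r, b.orientation)
```

All three functions mutate the selected boards in place, which matches an editor where a selection is edited directly rather than copied.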

As mentioned earlier in Section 3, the question of how the editing operations can be made precise without resorting to professional concepts remains a challenge. In our implementation of the editor tool, we facilitated precision by setting the gradations of manipulation to low values (0.5 cm for translation and scaling, and 0.5° for rotation). To ensure that manipulating the scene does not become overly tedious, we also made the sensitivity of the manipulation buttons rate-based: when a button is clicked at a low rate, the small gradation values apply, whereas at higher, more rapid click rates, each gradation value is increased dynamically (up to 50 cm per click for translation and scaling, and up to 5° for rotation).
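The rate-based gradation can be illustrated with a small Python class. The text gives only the end points (0.5 cm to 50 cm per click for translation and scaling, 0.5° to 5° for rotation). The rule used here, doubling the step on every click that follows the previous one within 0.3 s and resetting it after a slower click, is an assumption made for illustration, as is the class name `RateBasedStep`.

```python
class RateBasedStep:
    """Step size that grows while a button is clicked rapidly (hypothetical rule)."""

    def __init__(self, base: float, maximum: float,
                 fast_interval_s: float = 0.3, growth: float = 2.0) -> None:
        self.base = base                  # gradation for slow clicks
        self.maximum = maximum            # upper bound on the gradation
        self.fast_interval_s = fast_interval_s
        self.growth = growth
        self._step = base
        self._last_click = None

    def click(self, t: float) -> float:
        """Return the gradation to apply for a click at time t (seconds)."""
        fast = (self._last_click is not None
                and t - self._last_click < self.fast_interval_s)
        # Rapid clicks grow the step geometrically; a slow click resets it.
        self._step = min(self._step * self.growth, self.maximum) if fast else self.base
        self._last_click = t
        return self._step
```

For example, `RateBasedStep(0.5, 50.0)` yields steps of 0.5, 1, 2, 4, ... cm, capped at 50 cm, for a rapid sequence of clicks, while `RateBasedStep(0.5, 5.0)` caps rotation at 5° per click.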

As described earlier, the objective of this work was to design a set of operations that are complete and minimal (i.e., canonical) and that enable users to reconfigure dashboard layouts inside 3D spaces. Our goal was also to propose operations that effectively address the camera-object independence dilemma and are interpretable not only by professionals but also by less seasoned users.

To verify the viability and efficacy of our tool, we conducted an experiment with 10 test subjects. All test subjects were students at Széchenyi István University (six male and four female, aged between 20 and 35). They had varied experience with VR technologies and with MaxWhere in particular: some had previously encountered MaxWhere during their studies at the university, while others were completely new to the platform. In the experiment, test subjects were given the task of re-creating the smartboard layouts of three different MaxWhere spaces from scratch, based on images of the original layout in each case (Figure 7). Following each task, the resulting layout was exported from the space, and both the time it took to re-create the layout and the precision with which the task was accomplished were evaluated.

FIGURE 7. The three spaces used in the experiment (from top to bottom: “Glassy Small”, “Let’s Meet” and “Seminar on the Beach”).

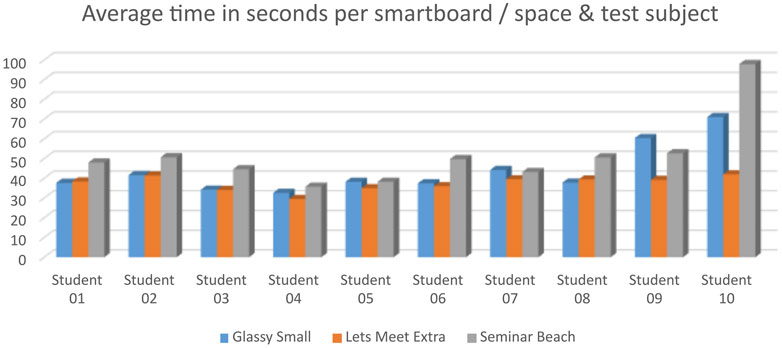

In our experiment, we measured the time it took the same 10 users to recreate the smartboards inside empty versions of three different 3D spaces, based on screenshots of the original layouts of those spaces. The three spaces used were “Glassy Small” (13 smartboards), “Let’s Meet” (10 smartboards) and “Seminar on the Beach” (12 smartboards). For each user and space, we measured the time it took to complete the task, and compared the exported re-created layout with the original one.

As shown in Figure 8, all users were able to finish the layout editing task in less than 20 min, with the average time being closer to 10 min. This means that, on average, users required around 45 s per smartboard when re-creating the layouts. This seems acceptable for users new to the tool; however, in the second part of our analysis, we also evaluated the precision with which they were able to accomplish the task.

FIGURE 8. Average time required to re-create the layouts of existing spaces was found to be less than 45 s / smartboard.

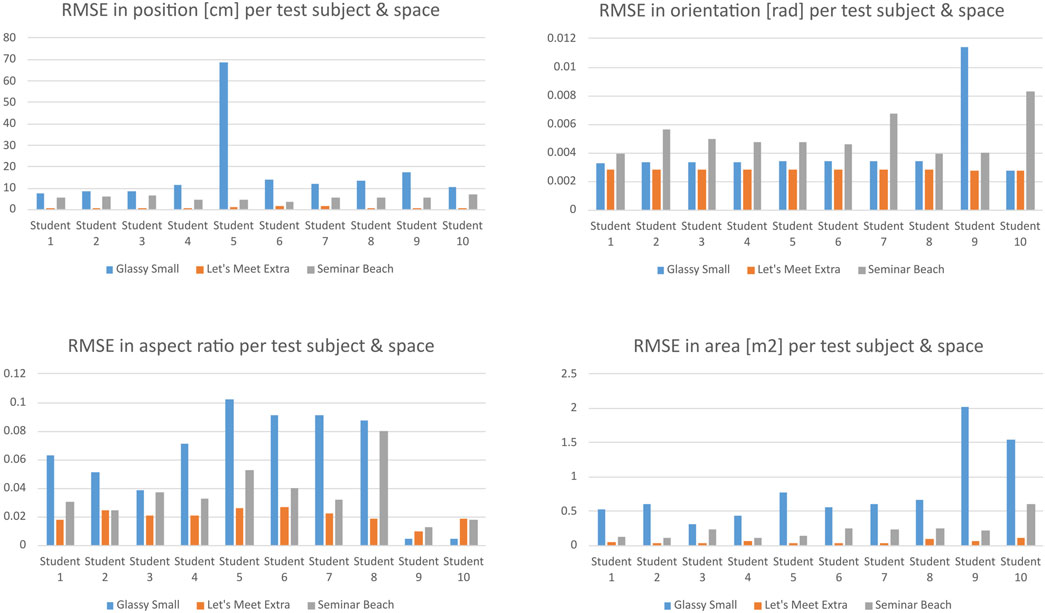

The precision per test subject and per space in terms of position, orientation, aspect ratio and smartboard area is shown in Figure 9. Details on the analyses are as follows:

• Difference in position was measured as the distance between the center of each smartboard and the re-created version of the same smartboard, in centimeters (the basic distance unit in MaxWhere). To aggregate these values, the root mean squared value of the distances was calculated.

• Difference in orientation was measured as the difference in radians between the orientation of each smartboard and its re-created version. To aggregate these values, the root mean squared value of the differences was calculated.

FIGURE 9. Precision of the manipulation operations, measured as root mean squared error in centimeters (for position), radians (for orientation), and meters squared (for surface area).

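Note that orientation in MaxWhere is specified using quaternions, that is, 4-dimensional vectors in which the x, y, z coordinates represent the coordinates of a global axis of rotation (scaled by the sine of half the angle of rotation) and in which the “w” coordinate corresponds to the cosine of half the angle of rotation. Since any given quaternion corresponds to a specific rotation around a specific axis, it can be interpreted as a global orientation that is arrived at if the object is transformed by that rotation from its default state (the default state being the orientation obtained when the axes of the object are aligned with the global x, y, z axes of the space). The ‘distance’ (in radians) between two unit quaternions $q_1$ and $q_2$ was calculated as

$$d(q_1, q_2) = \arccos\left(2\langle q_1, q_2\rangle^2 - 1\right),$$

where $\langle q_1, q_2\rangle = w_1 w_2 + x_1 x_2 + y_1 y_2 + z_1 z_2$ denotes the scalar product of the two quaternions.

This can be shown to be true based on the fact that the scalar product of two unit-length quaternions yields the cosine of half the angle $\theta$ of the rotation between them (up to sign, since $q$ and $-q$ represent the same orientation), and the double-angle formula for cosines as follows:

$$\cos\theta = 2\cos^2\frac{\theta}{2} - 1 = 2\langle q_1, q_2\rangle^2 - 1,$$

and, since the angle between two orientations can always be taken to lie in $[0, \pi]$, where the arccosine inverts the cosine, it follows that $d(q_1, q_2) = \theta$.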

• Difference in aspect ratio was measured as the difference between the (dimensionless) value of

• Finally, differences in the surface areas of the original and re-created smartboards were also calculated on a pairwise basis and aggregated using the root mean squared value.
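The position, orientation and surface-area measures described above can be computed as follows. This Python sketch assumes that original and re-created smartboards have already been paired, that positions and sizes are in centimeters, and that orientations are unit quaternions in (w, x, y, z) order; the dictionary keys and function names are hypothetical. The orientation distance uses the formula d(q1, q2) = arccos(2⟨q1, q2⟩² - 1), with its argument clamped to [-1, 1] to absorb rounding error. The aspect-ratio measure is left out because its exact definition is not recoverable from the text.

```python
import math


def rms(values):
    """Root mean square of a non-empty sequence."""
    values = list(values)
    return math.sqrt(sum(v * v for v in values) / len(values))


def quat_distance(q1, q2) -> float:
    """Angle in radians between two orientations: arccos(2<q1,q2>^2 - 1)."""
    d = sum(a * b for a, b in zip(q1, q2))
    # Clamp to [-1, 1] so that rounding error cannot make acos fail.
    return math.acos(max(-1.0, min(1.0, 2.0 * d * d - 1.0)))


def layout_errors(pairs):
    """RMS errors over (original, recreated) smartboard pairs.

    Each board is a dict with 'position' (cm), 'orientation' (unit quaternion),
    and 'width' and 'height' (cm).
    """
    pos = [math.dist(o["position"], r["position"]) for o, r in pairs]
    ori = [quat_distance(o["orientation"], r["orientation"]) for o, r in pairs]
    # Area differences converted from cm^2 to m^2 (1 m^2 = 10,000 cm^2).
    area = [(o["width"] * o["height"] - r["width"] * r["height"]) / 1e4
            for o, r in pairs]
    return {"position_cm": rms(pos), "orientation_rad": rms(ori),
            "area_m2": rms(area)}
```

Because the scalar product is squared, `quat_distance` returns 0 for q and -q, which represent the same orientation.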

Based on the results shown in Figures 8 and 9, we concluded that the proposed operations were complete (i.e., the layouts of very different spaces could be re-created) and effective (the users were able to complete the task in an acceptable amount of time).

Looking more closely at the precision results, one interesting observation was that errors were considerably higher in the case of the “Glassy Small” space for all users, whereas the layout of the “Let’s Meet” space was reconstructed most easily. This should be no surprise, given that the latter space, and to some extent the “Seminar on the Beach” space as well, featured visual placeholders (TV screen meshes or other frames) that helped users position and size the smartboards. Unsurprisingly, the discrepancy among the three spaces was smallest in the case of orientation, since this was the only measured aspect that was independent of the presence of such placeholders.

Even so, in the case of the “Glassy Small” space, the error in smartboard positioning was generally around 10 cm, which is small considering that each original smartboard in the space had a width of at least 1.40 m. Further, the high precision of reconstruction achieved in the other two spaces suggests that when sufficient visual cues were present, the task was more than manageable.

We therefore expect the proposed operations and workflow to be both efficient and effective also in scenarios where it is up to users to define their own dashboard layouts (without a given example to be re-created).

In this paper, we argued that the widespread adoption of VR technologies in everyday use faces challenges in terms of the current ability of VR to integrate users’ existing digital life (e.g., 2D documents, images, and videos) with 3D objects. To address this challenge, we focused on enabling users to create dashboard layouts of 2D content while they are inside a 3D space. After identifying key challenges associated with current object manipulation methods such as the “gizmo approach” (namely, the camera-object independence dilemma and the interpretability of manipulation operations for end users), we proposed a workflow and an associated set of operations for the in-situ creation and manipulation of dashboard layouts in VR spaces. We validated the proposed methodology in terms of efficiency and completeness by having test subjects reconstruct existing dashboard layouts in empty versions of existing VR spaces on the MaxWhere platform.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Both authors contributed 50% to the paper. TS worked on the conceptualization and implementation of the tool and carried out the experiments. AC also worked on the conceptualization and implementation of the tool, and helped design the experiments. Most of the manuscript was written by TS and proof-read by AC.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Baranyi, P., and Csapó, Á. (2012). Definition and Synergies of Cognitive Infocommunications. Acta Polytech. Hungarica. 9, 67–83.

Berki, B. (2018). 2d Advertising in 3d Virtual Spaces. Acta Polytech. Hungarica. 15, 175–190. doi:10.12700/aph.15.3.2018.3.10

Berki, B. (2019). Does Effective Use of MaxWhere VR Relate to the Individual Spatial Memory and Mental Rotation Skills? Acta Polytech. Hungarica. 16, 41–53. doi:10.12700/aph.16.6.2019.6.4

Berki, B. (2020). “Level of Presence in MaxWhere Virtual Reality,” in 2020 11th IEEE International Conference on Cognitive Infocommunications (CogInfoCom), Mariehamn, Finland, 23-25 Sept. 2020 (IEEE), 000485–000490.

Bőczén-Rumbach, P. (2018). “Industry-oriented Enhancement of Information Management Systems at Audi Hungaria Using MaxWhere’s 3D Digital Environments,” in 2018 9th IEEE International Conference on Cognitive Infocommunications (CogInfoCom), Budapest, Hungary, 22-24 Aug. 2018 (IEEE), 000417–000422.

Christiansson, P. (2001). “Capture of user requirements and structuring of collaborative VR environments,” in Conference on Applied Virtual Reality in Engineering and Construction Applications of Virtual Reality, Gothenburg, October 4–5, 2001, 1–17.

Corregidor-Sánchez, A.-I., Segura-Fragoso, A., Criado-Álvarez, J.-J., Rodríguez-Hernández, M., Mohedano-Moriano, A., and Polonio-López, B. (2020). Effectiveness of Virtual Reality Systems to Improve the Activities of Daily Life in Older People. Int. J. Environ. Res. Public Health 17, 6283. doi:10.3390/ijerph17176283

Csapó, Á. B., Horvath, I., Galambos, P., and Baranyi, P. (2018). “VR as a Medium of Communication: from Memory Palaces to Comprehensive Memory Management,” in 2018 9th IEEE International Conference on Cognitive Infocommunications (CogInfoCom), Budapest, Hungary, 22-24 Aug. 2018 (IEEE), 000389–000394.

Galambos, P., and Baranyi, P. (2011). “VIRCA as Virtual Intelligent Space for RT-Middleware,” in 2011 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM), Budapest, Hungary, 3-7 July 2011 (IEEE), 140–145.

Horváth, I. (2019). MaxWhere 3D Capabilities Contributing to the Enhanced Efficiency of the Trello 2D Management Software. Acta Polytech. Hungarica. 16, 55–71. doi:10.12700/aph.16.6.2019.6.5

Horvath, I., and Sudar, A. (2018). Factors Contributing to the Enhanced Performance of the MaxWhere 3D VR Platform in the Distribution of Digital Information. Acta Polytech. Hungarica. 15, 149–173. doi:10.12700/aph.15.3.2018.3.9

Kuczmann, M., and Budai, T. (2019). Linear State Space Modeling and Control Teaching in MaxWhere Virtual Laboratory. Acta Polytech. Hungarica. 16, 27–39. doi:10.12700/aph.16.6.2019.6.3

Lampert, B., Pongracz, A., Sipos, J., Vehrer, A., and Horvath, I. (2018). MaxWhere VR-Learning Improves Effectiveness over Clasiccal Tools of E-Learning. Acta Polytech. Hungarica. 15, 125–147. doi:10.12700/aph.15.3.2018.3.8

Lee, E. A.-L., and Wong, K. W. (2014). Learning with Desktop Virtual Reality: Low Spatial Ability Learners Are More Positively Affected. Comput. Educ. 79, 49–58. doi:10.1016/j.compedu.2014.07.010

Lee, J. H., Ku, J., Cho, W., Hahn, W. Y., Kim, I. Y., Lee, S.-M., et al. (2003). A Virtual Reality System for the Assessment and Rehabilitation of the Activities of Daily Living. CyberPsychology Behav. 6, 383–388. doi:10.1089/109493103322278763

Neelakantam, S., and Pant, T. (2017). Learning Web-Based Virtual Reality: Build and Deploy Web-Based Virtual Reality Technology (Apress).

Pieskä, S., Luimula, M., and Suominen, T. (2019). Fast Experimentations with Virtual Technologies Pave the Way for Experience Economy. Acta Polytech. Hungarica. 16, 9–26. doi:10.12700/aph.16.6.2019.6.2

Rácz, A., Gilányi, A., Bólya, A. M., Décsei, J., and Chmielewska, K. (2020). “On a Model of the First National Theater of Hungary in MaxWhere,” in 2020 11th IEEE International Conference on Cognitive Infocommunications (CogInfoCom), Mariehamn, Finland, 23-25 Sept. 2020 (IEEE), 000573–000574.

Riener, R., and Harders, M. (2012). “VR for Medical Training,” in Virtual Reality in Medicine (Springer), 181–210. doi:10.1007/978-1-4471-4011-5_8

Slavova, Y., and Mu, M. (2018). “A Comparative Study of the Learning Outcomes and Experience of Vr in Education,” in 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Tuebingen/Reutlingen, Germany, 18-22 March 2018 (IEEE), 685–686.

Vamos, A., Fülöp, I., Resko, B., and Baranyi, P. (2010). Collaboration in Virtual Reality of Intelligent Agents. Acta Electrotechnica et Informatica 10, 21–27.

Zhang, M., Zhang, Z., Chang, Y., Aziz, E.-S., Esche, S., and Chassapis, C. (2018). Recent Developments in Game-Based Virtual Reality Educational Laboratories Using the Microsoft Kinect. Int. J. Emerg. Technol. Learn. 13, 138–159. doi:10.3991/ijet.v13i01.7773

Keywords: virtual reality, 3D spaces, 3D dashboards, in-situ spatial editing, digital cognitive artifacts

Citation: Setti T and Csapo AB (2021) A Canonical Set of Operations for Editing Dashboard Layouts in Virtual Reality. Front. Comput. Sci. 3:659600. doi: 10.3389/fcomp.2021.659600

Received: 27 January 2021; Accepted: 28 June 2021;

Published: 12 July 2021.

Edited by:

Anna Esposito, University of Campania 'Luigi Vanvitelli', Italy

Copyright © 2021 Setti and Csapo. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Adam B. Csapo, csapo.adam@sze.hu

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
