ORIGINAL RESEARCH article

Front. Comput. Sci., 04 June 2020

Sec. Human-Media Interaction

Volume 2 - 2020 | https://doi.org/10.3389/fcomp.2020.00017

This article is part of the Research Topic Games and Play in HCI

Game balancing is a time consuming and complex requirement in game design, where game mechanics and other aspects of a game are tweaked to provide the right level of challenge and play experience. One way that game designers help make challenging mechanics easier is through the use of External Assistance Techniques—a set of techniques outside of games' main mechanics. While External Assistance Techniques are well-known to game designers (like providing onscreen guides to help players push the right buttons at the right times), there are no guiding principles for how these can be applied to help balance challenge in games. In this work, we present a design framework that can guide designers in identifying and applying External Assistance Techniques from a range of existing assistance techniques. We provide a first characterization of External Assistance Techniques showing how they can be applied by first identifying a game's Core Tasks. In games that require skill mechanics, Core Tasks are the basic motor and perceptual unit tasks required to interact with a game, such as aiming at a target or remembering a detail. In this work we analyze 54 games, identifying and organizing 27 External Assistance Techniques into a descriptive framework that connects them to the ten core tasks that they assist. We then demonstrate how designers can use our framework to assist a previously understudied core task in three games. Through an evaluation, we show that the framework is an effective tool for game balancing, and provide commentary on key ways that External Assistance Techniques can affect player experience. Our work provides new directions for research into improving and maturing game balancing practices.

Challenge is an important part of what makes a game entertaining (Chen, 2007). Striking the right level of challenge is critical for a game design to be successful: if it is too difficult, it can become frustrating; if it is too easy, players may become disengaged and uninterested (Vazquez, 2011). Traditionally, game designers try to find the right level of challenge through the activity of game balancing, where aspects of the rules are tweaked to target the right play experience (Schell, 2019).

Game balancing is extremely challenging, because many of a game's parameters are interconnected (Baron, 2012). For example, imagine a platformer game where in playtesting it is uncovered that players find jumping over large pits too difficult (the split-second timing required is hard for players to master). The designer may consider increasing the character's jumping distance by tweaking aspects of the game's physics. However, this would have many side effects in other parts of the game; e.g., changing running speed may make avoiding enemies too easy and changing gravity will affect the behavior of many other game objects. In such games with interconnected mechanics (such as platformers or first-person shooters), game balancing that operates in the space of the game world is extremely difficult and time consuming because of the interconnected nature of a game's in-world mechanics and properties. Tweaking game mechanics for balance commonly leads to unintended consequences, and can lead to mechanics operating in unsatisfying ways (Baron, 2012). Because of the limited research and reports of practice, game balancing still remains more of an art than science (Schell, 2019).

One way that game designers balance challenge is to use external assistance techniques. We define external assistance techniques (or EA techniques) as a set of approaches that work outside of the main mechanics of the game world, but allow a player to more easily complete challenges that are tightly connected to the game world by allowing them to better perform core tasks. From previous work in game design, we borrow and refine the definition of “core tasks,” the “basic motor and perceptual tasks” that games require in order to interact with game mechanics (Flatla et al., 2011). Our focus on “external” means that the assistance techniques we consider in this work do not need to change the main game mechanics to be effective; rather, they can be added separately or distinctly from a game's core mechanics (e.g., a game's rules, physics, or other character or game object behaviors can remain unchanged). Previous work has proposed the use of “assistance techniques” (Bateman et al., 2011b; Cechanowicz et al., 2014; Vicencio-Moreira et al., 2014, 2015); however, the previously proposed techniques all focused on “internal” approaches for balancing gameplay, by directly making it easier for a player to perform a game's main mechanic. One example of this is making it easier to aim at a target by automatically moving the aiming reticule (Bateman et al., 2011b; Vicencio-Moreira et al., 2014). Our distinction between internal and external techniques places the focus on the idea that with external techniques we are assisting the player in performing a particular challenging task, rather than changing the task itself (changing the task would be internal to the game).

To illustrate the idea of external assistance techniques, consider the hypothetical platform game described above. Using the framework described in this paper, a game designer may identify a core “reaction time” task to jump over a pit in the game. Based on this, they may identify the “advance warning” technique as an appropriate approach and provide an additional visual indicator onscreen as the player approaches a pit. This external cue allows players to learn exactly when they need to press the jump button. Another example is a large open-world game like World of Warcraft, where players must recall the location of places they need to visit for a quest. In this case the core task required of the player is a “spatial memory task,” which can be assisted using the common “map” technique (i.e., providing a map to guide the player from their current location to their intended destination).

Providing external assistance techniques (EA techniques) to balance difficulty has advantages over balancing other in-world mechanics and game object properties. This is because EA techniques can be used more selectively (e.g., an external cue might only be shown for the first encounter with a pit that is difficult to jump across) and are less likely to have unforeseen side-effects in the game world (unlike, say, increasing jumping height). Further, they are particularly attractive because they allow otherwise difficult tasks to remain in place, but assist players to better perform a required skill mechanic, like pressing the right button combination at exactly the right time, or remembering the location of an object in the game world; this allows players to better stay immersed in a game while having a satisfying experience (Weihs, 2013).

Game designers are generally aware of many of the assistance techniques that have been previously used (Burgun, 2011); however, it is not always clear how they can be applied, because every game is unique (Bourtos, 2008), and there is little work to help organize and describe the range of assistance techniques that might be possible. In this paper, we address the challenge confronting game designers of identifying and selecting the best EA technique for their game. To do this, we propose a generalized way of identifying appropriate EA techniques in video games that require core tasks (e.g., precise timing or recalling a specific detail). By identifying the fundamental actions players perform in many games (core tasks such as signal detection, reaction time, or pointing), we can identify a range of EA techniques that can improve play experience independently of the characteristics of a specific game.

In order to characterize the space of core tasks and their relationship to EA techniques, we performed a grounded analysis of 54 games. Our analysis started with an existing characterization of core tasks (Flatla et al., 2011), refining them based on the results of our grounded theory study. This resulted in a design framework of 10 core tasks and a description of 27 external assistance techniques that have been previously used to balance challenge in games. Next, to demonstrate the effectiveness and generalizability of our framework, we built three different games (a puzzle-like game, a third-person adventure game, and a sniper simulation) that share a common core task. We implemented three different assistance techniques that target the shared core task in each of the three games, showing that even though the games are seemingly different, assistance techniques can be adapted to fit all of them. Finally, to demonstrate the effectiveness of the resulting techniques for game balancing, we conducted an experiment on two of the games comparing the games to versions without an assistance technique. The results of our experiment show that the EA techniques increased player performance and that they were effective in reducing challenge in the games, meaning that they are effective tools for balancing challenge.

While approaches to game balancing have previously been studied (Bateman et al., 2011b; Cechanowicz et al., 2014; Vicencio-Moreira et al., 2014, 2015), this work has mostly identified specific assistance techniques for certain activities. There has been very little work that has organized and characterized the range of previously proposed EA techniques. It is important to note that the number of techniques and ways that game designers might choose to balance challenge is large. However, in this work we focus on what we call external assistance techniques, techniques that operate outside a game's main, in-world mechanics. To focus our initial work in this area, we necessarily exclude many other ways that games may be balanced; for example, providing guidance/advice in strategy games, or changing in-game mechanics (e.g., by adjusting physics or character or game object properties). In this paper, we provide a demonstration of how EA techniques can be applied to help designers target a desired play experience. Our work provides game designers with a valuable new resource for understanding game balancing practices, guidance for identifying and applying external assistance techniques, and a means of discovering new assistance techniques that can be applied in their games. Ultimately, our work contributes to the advancement of game design and development.

The fact that overly difficult games cause frustration, whilst easy ones lead to boredom, is considered “common knowledge” amongst game designers (Vazquez, 2011). As such, how to design for the appropriate level of difficulty has become an increasingly popular subject with game designers (Vazquez, 2011), as well as what aspects of a game can be manipulated to control difficulty (e.g., time limits, damage scaling, HUD restrictions, etc.) (Bourtos, 2008).

Commonly in game design, difficulty has been related to the concept of flow, which can be defined as the state in which people are so immersed in an activity that everything else ceases to matter, and their perception and experience of time become distorted (Csikszentmihalyi, 2008). Flow is achieved when a person's skill ideally aligns with the difficulty of the task at hand, which promotes a high level of engagement and focus on the task (Baron, 2012). In general, game designers want players to remain in a state of flow throughout their experience, which would represent a rich and meaningful engagement with the game (Salen and Zimmerman, 2003). To achieve this, Csikszentmihalyi outlines four task “characteristics” that increase the probability of achieving a flow state. One such characteristic is to “… demand actions to achieve goals that fit within the person's capabilities” (Baron, 2012).

Denisova et al. provide a more nuanced account of player experience, arguing that play experience can better be characterized through the “challenges” a game presents. Challenges “… describe a stimulating task or problem,” while “difficulty” simply implies that “… something is hard to do” (Denisova et al., 2017). So, in game design, the balance between player skill and the challenges players face culminates in their experience of a game's difficulty, and balancing should be done in a way that maintains equilibrium between stress/arousal and performance. Each player has a unique stress-performance curve, and thus a gradual increase in overall difficulty (easy, medium, and hard) is not necessarily optimal. EA techniques can be used to influence a player's performance and their perception of challenge, so that it corresponds with an appropriate difficulty to promote cognitive flow (Baron, 2012).

While game balancing is a necessary activity in almost any successful game, details around existing practices are not always widely shared in the game industry (Felder, 2015a). This may be, at least partially, because game balancing practices have not reached the same level of maturity as other design and development practices. However, it is generally understood that game balancing is an iterative process that takes place throughout development, usually following feedback from play testing (Felder, 2015b; Schell, 2019). Because there are few well-established practices, much effort is often put into balancing activities. Further, as discussed, the fact that game elements are often interconnected means that balancing games is complex, and any changes to a game's mechanics need to be tested thoroughly to ensure that other interrelated aspects of the game have not been adversely affected (Felder, 2015b; Schell, 2019).

Game mechanics can concisely be described as the rules of a game and how players interact with the game. Schell describes “mechanics of skill” as one of the six main types of mechanics, since “Every game requires players to exercise certain skills” (2019). Games most frequently require a range of skills, which can be categorized as (Schell, 2019):

• physical skills: skills requiring dexterity, movement, speed, etc.; such as using a game controller.

• mental skills: skills including memory, observation, insight, problem solving, developing and following a strategy, etc.

• social skills: building trust, guessing an opponent's strategy, team communication/coordination, etc.

Similarly, Adams describes the challenges a player must overcome as being either mental or physical (Adams, 2013). These categorizations of skill mechanics open up a huge number of ways that games might be out of balance because of a mismatch between player skill and a design, both in low-level interactions (e.g., the need to click a button quickly) and in higher-order cognitive tasks (e.g., developing a strategy in a game of Chess). In our work, we were initially interested in determining how game balancing practices might be facilitated and improved through further focusing on a particular subset of the physical and mental skills described above.

We were interested in providing a concrete characterization of how a specific set of skills could be assisted. When considering a range of skills fundamental to interacting with games, we found Flatla et al.'s work on “calibration games” to be helpful (Flatla et al., 2011). Calibration games are essentially gamified calibration tasks that are designed to encourage people to perform the calibration steps needed for many input technologies to operate reliably (e.g., calibrating an eye-tracker for a particular user). In that work, the authors use the idea of core tasks: “the core perceptual and motor tasks that … match common game mechanics….” These included a list of 10 core tasks such as reaction time, visual search, and spatial memory. When relating the core tasks to Schell's skill categorization (enumerated above), it can be seen that Flatla et al.'s core tasks relate to lower level physical and mental skills [described by Newell (1994) in his “Time Scale of Human Action” as unit tasks, operations or deliberate acts], but not to higher level tasks that involve rationalization (e.g., making a choice or developing a strategy). This means that supporting these skills is more tractable and success is more easily measured, since there are fewer sources of variation (that can arise from, say, how an individual engages in conscious deliberation or in human-to-human communication), which would be prevalent for social or higher level mental skills (MacKenzie, 2013). For these reasons, our work leverages Flatla et al.'s list as a concrete and tractable subset of game tasks that represent common skills in games.

One way of helping players who are struggling with a game challenge is to assist them with the task preventing their progress, effectively increasing their skill. For example, suppose a player is having a tough time hitting a target with a set number of bullets. Instead of making more bullets available (a typical internal game balancing approach), we could instead assist them with their aiming skill to increase the probability of a successful shot. Bateman et al. (2011b) used the term “target assistance techniques” to describe a set of algorithms that helped players acquire and shoot targets in a multiplayer target shooting game. This work showed that several “target assistance techniques” were effective for helping to balance competition between players of different skill levels. Likely predating this, game designers have used the idea of “aim assists” to describe techniques that help players acquire targets in first-person shooters (Weihs, 2013).

Games have used specific assistance techniques to assist certain core tasks that people find difficult, and these are well-known to designers. As described, first-person shooters often incorporate some form of aim assistance or “auto aiming” [Auto-Aim (Concept), 2019] to help players deal with the difficult task of aiming a reticule at a rapidly moving target, especially when using a thumbstick on a gamepad where control is more difficult than a mouse (Vicencio-Moreira et al., 2014). Common aim assists that can be employed to improve play experience are techniques such as bullet magnetism, reticule magnetism, and auto-locking [Bateman et al., 2011b; Vicencio-Moreira et al., 2014; Auto-Aim (Concept), 2019].

Researchers have explored the concept of EA techniques improving play experience and player performance in several specific types of games including racing games (Bateman et al., 2011a; Cechanowicz et al., 2014), shooting games (Bateman et al., 2011b), and first-person shooters (Vicencio-Moreira et al., 2014, 2015), and have compared the effectiveness of several visual search assistance techniques in an AR game (Lyons, 2016). Also of note is work looking at balancing player skill in traditional multiplayer games (Bateman et al., 2011b; Cechanowicz et al., 2014; Vicencio-Moreira et al., 2014), or between players of different physical abilities (Gerling et al., 2014). Here we keep our review of the existing techniques brief and refer to relevant literature from research and current practice as we introduce the individual EA techniques in our framework.

Previous research provides valuable information comparing different techniques that allow players to better perform a certain core task. However, while this work has proposed (sometimes novel) assistance techniques within a particular context (i.e., using specific input or display devices, a certain type of game, etc.), it is still difficult for game designers to consider the wide range of possibilities for balancing games (Bourtos, 2008; Burgun, 2011; Vazquez, 2011; Baron, 2012; Felder, 2015a; Schell, 2019). Through the characterization of core tasks, our goal is to discuss a range of assistance techniques at a general level that could be applied to any game, irrespective of context. We believe this conceptual organization will provide both game designers and researchers with a starting point to explore and consider a range of EA techniques that can be applied to games to help target a desired level of challenge.

To characterize both core tasks and EA techniques we conducted a grounded theory study, which resulted in a framework describing the EA techniques that have been leveraged to assist certain core tasks. In this section we first describe our methodology, then describe our resulting framework, and, finally, we describe the general steps that can be used to adapt EA techniques to existing games using our framework as a guide.

Our work used a grounded theory study to create a framework of external assistance techniques that can assist players in completing core tasks in games. Grounded theory comprises qualitative practices used to characterize a new domain through the development of codes that are derived from data (Glaser, 1998; Glaser and Strauss, 2017). Grounded theory has been commonly used as a methodology for identifying frameworks from games artifacts (Toups et al., 2014; Alharthi et al., 2018; Wuertz et al., 2018), and our work follows the processes described in this previous work. We adopted a multi-phase process, whereby the research team identified codes from several iterative rounds of data collection and open coding. While Glaser and Strauss describe how this process can be supported and informed by existing theory, we also leaned on multi-grounded theory (Goldkuhl and Cronholm, 2010). Multi-grounded theory follows the standard Glaser-type approach, but in the structuring step describes how the process can be both inductive (to inform and refine existing theory) and deductive (drawing on existing theory to guide the process). Our process involved three general phases established in previous research (Wuertz et al., 2018):

• Phase 1: identifying and selecting game examples that contain core tasks,

• Phase 2: open coding from initial observations, and

• Phase 3: revision of our coding scheme, and development of axial codes.

All phases involved the research team engaging in discussion to explore the similarities and differences between their codes, concepts and our list of core tasks and external assistance techniques. Below we elaborate on each of the phases.

In selecting games for analysis we followed the process from other recent work that led to the creation of a framework to inform game design using grounded theory. Our initial selection process involved selecting games that the authors were familiar with (Wuertz et al., 2018).

Our goal with game selection was to identify games that contained core tasks, but we also believed that different genres may involve different core tasks (perhaps ones that had not previously been identified) and might also use vastly different EA techniques. We used a high-level taxonomy of games to assist in getting a mix of genres (Wikipedia, 2020), and we initially selected games from 16 of the genres and subgenres that we believed represented a good mix of games; the initial list of genres and games is available in Supplementary Materials. This list was only used to help diversify our initial game selection. The non-exhaustive list of genres and sub-genres comes from a list of video game genres on Wikipedia (Wikipedia, 2020), and game examples are drawn from the genre descriptions on this page. Subsequent iterations relied on selecting games that maximized variability based on our identified codes and did not use game genres.

Our inclusion criteria for games in our sample were relatively loose, in that a game only needed to have one core task and one EA technique to be included. To define core tasks, we pre-determined that a core task must be a “basic motor and perceptual task” (Flatla et al., 2011) that takes place within the cognitive band of human action (i.e., excludes detailed deliberation, communications, or social processes) (Newell, 1994; MacKenzie, 2013). EA techniques were considered to be any feature in the game that was not related to game mechanics that operate in the game world.

Researchers frequently returned to Phase 1 after Phase 3 to seek out core tasks and EA techniques that had been hypothesized as potential codes. This also led us to adopt the following stopping criteria:

• Our existing axial codes did not suggest new core tasks or EA techniques that were not already represented in our dataset.

• We no longer found game examples that provided new core tasks or EA techniques.

This process resulted in 54 games that can be found in our Ludography, which is available as Supplementary Material to this paper. The games were analyzed either directly (through gameplay) or indirectly (by watching gameplay videos on YouTube).

Data were collected through experience reports from playing the games or through watching gameplay videos on YouTube. As new data were added, each was first evaluated for the core tasks involved, followed by EA technique identification. For each game we collected the game name, genre, descriptions for each core task, a listing and description of all observed game features that might be considered as EA techniques for each of the game's core tasks, and the data source (e.g., where it can be found in gameplay or a link to the YouTube video). As more games were added, we increasingly saw saturation in the data. The initial coding of core tasks and EA techniques was done without considering any existing theory, allowing us to later consider whether previous characterizations could accurately describe our data (which occurred as part of Phase 3).

Through discussions, we iteratively refined our list of core tasks and the external assistance techniques used to assist them. Recall that we relied on existing theory to help narrow the scope of our interest (as described in Related Work; see section Games Mechanics, Skill and Core Tasks). We initially considered the descriptions of each core task that we collected and determined their relationship to other core tasks. We then considered whether the core tasks could be reconciled with the core tasks described by Flatla et al., who identified a list of 14 core tasks (Flatla et al., 2011). Our process resulted in 10 core tasks, since we found that several of Flatla et al.'s core tasks were conceptually similar and could be supported by the same assistance techniques; thus, we merged them. Our final list of core tasks is a pragmatic reflection of our data collection, rather than having direct correspondence to, say, individual (or atomic) psychomotor control tasks (Schmidt et al., 2018).

We repeated a similar process with assistance techniques: we iteratively grouped and labeled our assistance technique descriptions, using the existing literature (described in Related Work) to consider our identified assistance techniques. This resulted in the 27 external assistance techniques, where each technique was aligned with one or more core tasks.

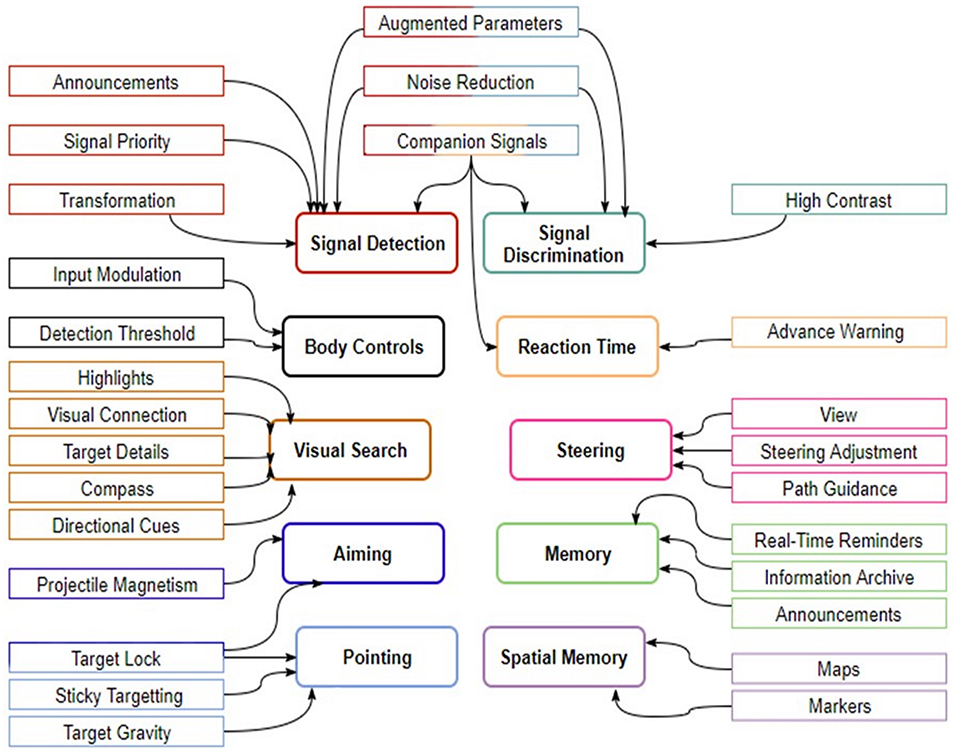

Below we present the descriptive framework that resulted from our analysis. Figure 1 displays the results of our analysis, relating the 10 core tasks with the 27 identified assistance techniques. In the subsections below, we first define the core tasks, followed by our description of EA techniques. For each EA technique, we provide an example of the technique from an existing commercial or research game. In our description of the framework below, we reference examples from our Ludography (available in Supplementary Material) using the notation [L#].
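As an illustration of how a designer might consult the framework, the relation between core tasks and EA techniques can be sketched as a simple lookup table. The sketch below is only a partial encoding, covering the five core tasks (CT1 to CT5) detailed in this section; the names `FRAMEWORK`, `techniques_for`, and `core_tasks_using` are hypothetical and are not part of the framework itself.

```python
# Partial, illustrative encoding of the framework: core task -> EA techniques.
# Only CT1-CT5 as listed in this section are included; the remaining core
# tasks and techniques are intentionally left out.
FRAMEWORK = {
    "Signal Detection": [
        "Companion Signals", "Augmented Parameters", "Announcements/Emphasis",
        "Signal Priority", "Transformation/Replacement", "Noise Reduction",
    ],
    "Signal Discrimination": [
        "Additional Cues", "Augmented Parameters", "High Contrast",
        "Noise Reduction",
    ],
    "Body Controls": ["Input Modulation", "Detection Threshold"],
    "Reaction Time": ["Companion Signals", "Advance Warning"],
    "Visual Search": [
        "Highlights", "Visual Connection", "Target Details", "Compass",
        "Directional Cues",
    ],
}


def techniques_for(core_task):
    """Return candidate EA techniques for a core task (empty list if unknown)."""
    return list(FRAMEWORK.get(core_task, []))


def core_tasks_using(technique):
    """Return the core tasks (in table order) that a technique name appears under."""
    return [task for task, techs in FRAMEWORK.items() if technique in techs]
```

For the platformer example from the introduction, `techniques_for("Reaction Time")` would surface "Advance Warning" as one candidate; the designer still has to judge whether it suits the game.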

CT1 Signal Detection

Definition: The conscious perception of a stimulus, such as sound, light, or vibration.

External Assistance Techniques for Signal Detection

1.1 Companion Signals: Accompanying the original signal with an additional signal, often of a different medium (Johanson and Mandryk, 2016). E.g., the 3 stages of the charged attack in Monster Hunter: World [L13] use a visual signal (pulsing energy around the character), an audio signal (a sound effect of varying pitch per tick), and a haptic signal (controller vibration with every tick).

1.2 Augmented Parameters: Modifying parameters of a signal to make it more or less noticeable. E.g., In Overwatch [L9], Lucio uses a Sonic Amplifier ability that fires a burst of soundwaves as projectiles. Each shot of Lucio's weapon is accompanied by a sound effect. The pitch of this sound effect increases with every shot made, making it stand out.

1.3 Announcements/Emphasis: Feedback to point out a signal and/or for directing the player's attention to it. E.g., When players mount a monster in Monster Hunter: World [L13], a message telling the player what to do is displayed in the corner of the screen; this message glows making it more visually salient.

1.4 Signal Priority: Giving the signal more access to its media, such as more space on a visual display. E.g., When a player is injured in Call of Duty [L27] an overlay creates a blood spatter effect on the screen, which increases as the player's health goes down.

1.5 Transformation/Replacement: Altering a signal to provide one that is more effective or more informative. E.g., In Call of Duty [L27] players are rewarded for kill streaks by having access to a power-up that changes the representation of enemies on the mini-map from a blip to a triangle; this additional information makes it easier for players to identify enemy location and movement.

1.6 Noise Reduction: Modifying other elements in the game scene to make the signal more discernible. E.g., Whenever someone speaks using the in-game voice chat in Counter-Strike: Global Offensive [L25], the volume of everything else is reduced slightly so that the voice is loud enough to be heard. Note that signal priority (1.4) strengthens the signal being communicated by a particular source, while noise reduction reduces other signals in order to highlight another information source.
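To make the noise reduction idea concrete, the following is a minimal sketch of volume "ducking", in which every channel other than a priority channel is attenuated while the priority signal is active. The function name, the channel names, and the `duck_factor` value are assumptions for illustration and do not describe how any particular game implements this.

```python
def duck_volumes(channel_volumes, priority_channel, priority_active, duck_factor=0.7):
    """Return a new volume mix with non-priority channels attenuated.

    channel_volumes: dict mapping channel name -> volume in [0, 1].
    priority_channel: the channel carrying the signal to protect (e.g., voice).
    priority_active: whether the priority signal is currently playing.
    duck_factor: hypothetical multiplier applied to all other channels.
    """
    if not priority_active:
        # Nothing to protect, so the mix is returned unchanged (as a copy).
        return dict(channel_volumes)
    return {
        name: volume if name == priority_channel else volume * duck_factor
        for name, volume in channel_volumes.items()
    }
```

The same structure could serve signal priority (1.4) by boosting the priority channel instead of attenuating the others, which mirrors the distinction drawn above.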

CT2 Signal Discrimination

Definition: Determining that there is a difference between two stimuli (e.g., determining that two colors or two sounds are different).

External Assistance Techniques for Signal Discrimination

2.7 Additional Cues: Presenting additional signals to convey complementary information. E.g., First-person shooters, such as Overwatch [L9], display a red outline around enemies when targeted. This helps distinguish them from friendlies, as players on both teams can look nearly identical.

2.8 Augmented Parameters: Modifying parameters of a signal to make it more noticeable. E.g., In Overwatch [L9], enemies' footsteps are louder than friendlies' footsteps to help ensure players can discriminate between the two.

2.9 High Contrast: Transforming/replacing a target signal to vary the contrast between it and other signals. E.g., Ultimate abilities in Overwatch [L9] are accompanied by a unique battle cry, which differs depending on whether the character is an ally or an enemy.

2.10 Noise Reduction: Reducing noise to better notice certain details about the target signal. E.g., When aiming through the scope in Sniper Elite [L45], the sounds of the surrounding battle are nearly muted to help players concentrate and line up a perfect shot.

CT3 Body Controls

Definition: Using muscle activation such as flexing or movement of a body part.

External Assistance Techniques for Body Controls

3.11 Input Modulation: Amplifying or diminishing the input from sensors to reach a desired result. E.g., In “The Falling of Momo,” a prosthesis training game (Tabor et al., 2017) there is an auto-calibration feature that automatically sets the gains on myoelectric sensors, so that the character can be controlled consistently between players with different muscle strengths.

3.12 Detection Threshold: Varying the margin of error while posing or activating muscles. E.g., In Kinect Star Wars [L51], even minimal movements allow the players' input to be correctly interpreted as a goal movement.

CT4 Reaction Time

Definition: Reacting to a perceptual stimulus as quickly as possible.

External Assistance Techniques for Reaction Time

4.13 Companion Signals: Additional signals to alert the player that they are required to react. E.g., In NBA 2K19 [L54], on-screen indicators assist in timing the release of the button when shooting.

4.14 Advance Warning: Notifying players that a time-sensitive action is imminent. E.g., In Dead by Daylight [L5], skill checks (which require quick reaction) are announced by a gong before they appear.

CT5 Visual Search

Definition: Finding a visual target in a field of distractors; includes pattern recognition (determining the presence of a pattern amongst a field of distractors).

External Assistance Techniques for Visual Search

5.15 Highlights: Highlighting the target to make it stand out. E.g., Many quests in World of Warcraft [L7] require finding and collecting items. Target quest items are highlighted with a sparkle effect.

5.16 Visual Connection: A guide to the target via a visual connection (similar to Renner and Pfeiffer, 2017). E.g., Before the start of an Overwatch [L9] match, a line is shown on the ground to guide defending players from their starting location to the first defense point.

5.17 Target Details: Providing additional information about the target. E.g., In one mission of Grand Theft Auto V [L47], the player is provided with clues to identify an enemy; as the mission proceeds, more clues are provided to facilitate identifying the enemy.

5.18 Compass: Pointer guiding the player in the target's direction. E.g., In Rocket League [L43], an arrow is always available pointing in the direction of the ball when it is off-screen.

5.19 Directional Cues: An auditory/visual effect to guide the players' attention. E.g., In Dead by Daylight [L5], when an escape hatch (which is difficult to find) is opened a sound of rushing air can be heard as the player nears it, guiding them to it.

CT6 Pointing

Definition: Accurately pointing at a target with feedback about current pointing position.

External Assistance Techniques for Pointing

6.20 Sticky Targeting: Slowing down the pointer when passing over a target (see Balakrishnan, 2004; Bateman et al., 2011b). E.g., Halo 5 [L1] uses a combination of aim assist techniques, one of which slows down the reticle as it passes over a target.

6.21 Target Gravity: A force pulling the pointer toward the target based on distance (see Bateman et al., 2011b; Vicencio-Moreira et al., 2014). E.g., Fans of Battlefield 4 [L18] have analyzed the game and discovered that it includes a form of assistance to help players aim better. Two techniques were uncovered; one matches the Sticky Targeting technique, discussed earlier, and the other matches Target Gravity. In commercial games this is often referred to as reticule magnetism [Auto-Aim (Concept), 2019].

6.22 Target Lock: Instantly snapping the pointer to the target location [Auto-Aim (Concept), 2019]. E.g., In Monster Hunter: World [L5] (using slinger shot mode), players hold down a button that automatically targets a potential enemy; hitting another button will cycle through other targets.
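To make the difference between the first two Pointing techniques concrete, the following is a minimal sketch, not taken from any of the cited games. The function names, the inverse-distance falloff, and all parameter values (`slowdown`, `strength`, `max_pull`) are assumptions chosen for illustration; commercial implementations are not documented here and likely differ.

```python
import math

def sticky_speed_scale(pointer, target, radius, slowdown=0.4):
    """Sticky Targeting: scale pointer speed down while it is over the target."""
    dist = math.dist(pointer, target)
    return slowdown if dist <= radius else 1.0

def gravity_pull(pointer, target, strength=50.0, max_pull=5.0):
    """Target Gravity: a displacement toward the target that weakens with distance."""
    dx, dy = target[0] - pointer[0], target[1] - pointer[1]
    dist = math.hypot(dx, dy)
    if dist == 0:
        return (0.0, 0.0)
    pull = min(max_pull, strength / dist)  # assumed inverse-distance falloff, capped
    pull = min(pull, dist)                 # never overshoot the target
    return (dx / dist * pull, dy / dist * pull)

def assisted_step(pointer, mouse_delta, target, radius):
    """Apply both techniques to one frame of raw mouse movement."""
    scale = sticky_speed_scale(pointer, target, radius)
    gx, gy = gravity_pull(pointer, target)
    return (pointer[0] + mouse_delta[0] * scale + gx,
            pointer[1] + mouse_delta[1] * scale + gy)
```

In this sketch, Sticky Targeting only changes how fast the pointer moves under the player's own input, whereas Target Gravity moves the pointer even when the player provides no input; calibrating `slowdown` and `strength` would determine how strong the assistance feels.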

CT7 Aiming

Definition: Accurately pointing at a target (possibly using a device) and/or predicting the collision between two objects, without feedback.

External Assistance Techniques for Aiming

7.23 Target Lock: Readjusting player position toward the target. E.g., In Naruto: Ultimate Ninja Storm [L16], target locking is provided. Players are free to roam around and even break the lock, but most actions players take reorient them toward their opponent.

7.24 Projectile Magnetism: Changing the projectile trajectory toward the target [Auto-Aim (Concept), 2019]. E.g., In Halo 5 [L1], bullets are pulled toward the target even if the shot was made slightly off-target. It should be noted that while projectile magnetism operates on a game object inside the game world, we consider it as an “external” technique because it does not change the game's main mechanics (i.e., firing a bullet with a particular trajectory), and can be done with little-to-no effect on other aspects of the game.
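As an illustration of why Projectile Magnetism can be treated as external to the firing mechanic, the sketch below post-processes a shot direction without changing how the shot is fired. It is a 2D simplification under stated assumptions: the function name `magnetize`, the assistance cone `cone_deg`, and the blend factor `strength` are hypothetical and do not describe how Halo 5 or any other game implements the technique.

```python
import math

def magnetize(direction, origin, target, cone_deg=5.0, strength=0.5):
    """Return a unit direction nudged toward the target if the shot is nearly on target."""
    aim = math.atan2(direction[1], direction[0])
    to_target = math.atan2(target[1] - origin[1], target[0] - origin[0])
    # Signed smallest angle from the aim direction to the target direction.
    error = math.atan2(math.sin(to_target - aim), math.cos(to_target - aim))
    if abs(error) > math.radians(cone_deg):
        return (math.cos(aim), math.sin(aim))  # too far off target: no assistance
    corrected = aim + strength * error         # rotate part of the way to the target
    return (math.cos(corrected), math.sin(corrected))
```

With `strength=1.0` a shot inside the cone always hits, while smaller values only reduce the angular error, which gives one possible knob for calibrating the amount of assistance.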

CT8 Steering

Definition: Moving or guiding an object along a path.

External Assistance Techniques for Steering

8.25 View: Giving the player a view into the game world that provides a better awareness of the environment. E.g., Dead by Daylight [L5] uses asymmetric view to create additional challenge. Survivors play the game in a third-person view, while the killer is given a first-person view. This makes it easier for the survivors to steer around obstacles and plan their routes as they can see more of their surroundings.

8.26 Steering Adjustment: Adjusting player velocity toward the optimal path (e.g., Cechanowicz et al., 2014). E.g., In Harry Potter: Quidditch World Cup [L33], to end a match, players chasing the snitch (a flying golden ball) are assisted to stay on a path that follows it.

8.27 Path Guidance: Guiding the player toward the optimal path. E.g., In TrackMania Turbo [L35], players race against a phantom car of the same color, representing the optimal path.

CT9 Memory

Definition: Memorizing and/or retrieving sets of items, sequences, and/or mappings.

External Assistance Techniques for Memory

9.28 Real-Time Reminders: Actively reminding the player of information to be recalled. E.g., In Pokémon Leaf Green [L21], players are often reminded of details they need to complete actions in the game.

9.29 Information Archive: A store of relevant information that may need to be recalled. E.g., The Witcher 3 [L14] has a Bestiary Guide that keeps track of all the monsters and creatures the player has encountered in the game so far. It includes information such as monster descriptions, and weaknesses.

9.30 Announcements: Highlighting relevant information, reinforcing the fact that it may need to be recalled in the future. E.g., In Professor Layton and the Curious Village [L32], important game events are automatically documented in a journal, which can be reviewed later to inform about future challenges.

CT10 Spatial Memory

Definition: Remembering the location of items in a space without persistent visual cues.

External Assistance Techniques for Spatial Memory

10.31 Maps: A visual representation of the game environment. E.g., Many open world RPGs (like The Witcher 3 [L14] or World of Warcraft [L7]) provide both a detailed world map and a mini-map.

10.32 Markers: Markers in the game environment to inform the player of a location. E.g., When playing a healer in Overwatch [L9], the locations of friendlies are visible in the environment (even through obstacles), so that the healer can easily find them.

Figure 1. The resulting descriptive framework of Core Tasks (rounded corners) and External Assistance Techniques (square corners). Arrows connect assistance techniques to the core tasks they facilitate.

In this work, we propose the use of external assistance techniques in games to aid in balancing challenge. The idea is that by understanding the core tasks that exist in a game, using our design framework, a logical set of starting points can be identified for consideration as assistance. Most, if not all, of the techniques we examined should be well-known to game designers, and many of them would be expected as a part of the functionality of any good modern game. For example, it would be hard to imagine a large open-world RPG without a map feature. However, the insight is that further assistance can be offered in a game by providing additional assistance techniques, or stronger versions of techniques that are already used. Below we enumerate a basic process for applying assistance techniques to a game using our newly developed framework.

The first step is to identify the core tasks within the game. To do this we can examine each of the high-level actions in detail and try to describe them directly by associating them with one or more core tasks. For example, “Shooting a gun” requires the player to point and click; “Collecting items” may include the player moving (Steering) to the item location (Spatial Memory) and then finding the item within an environment with many distractor objects (Visual Search).
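This identification step can be thought of as a lookup from actions to core tasks, and from core tasks to candidate techniques. The sketch below encodes a subset of the framework listing and the two example actions from the paragraph above; the dictionary layout and the function name `candidate_techniques` are illustrative assumptions, not part of the framework itself.

```python
# Techniques per core task, transcribed from the framework listing (subset).
FRAMEWORK = {
    "Pointing": ["Sticky Targeting", "Target Gravity", "Target Lock"],
    "Steering": ["View", "Steering Adjustment", "Path Guidance"],
    "Spatial Memory": ["Maps", "Markers"],
    "Visual Search": ["Highlights", "Visual Connection", "Target Details",
                      "Compass", "Directional Cues"],
}

# Decomposition of high-level actions into core tasks (the two examples above).
ACTIONS = {
    "Shooting a gun": ["Pointing"],
    "Collecting items": ["Steering", "Spatial Memory", "Visual Search"],
}

def candidate_techniques(action):
    """Return the candidate techniques for each core task of an action."""
    return {task: FRAMEWORK[task] for task in ACTIONS[action]}

for task, techniques in candidate_techniques("Collecting items").items():
    print(f"{task}: {', '.join(techniques)}")
```

The output is only a set of starting points; which candidate is appropriate still depends on the assistance goal, theme, and presentation.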

To choose an appropriate technique (or to develop a new one), we first need to identify the goal of the assistance. For example, answers are needed to questions such as “Does this part of the game need assisting?”, “How much easier should it be?” and “What aspect of this action needs assisting?” For the last question, we are referring to player actions that consist of several core tasks like shooting a ranged weapon that may require aiming and reaction time. A further example could be, if a game requires collecting items as the main challenge, like in Animal Crossing [L41], then a technique that provides a weaker amount of assistance for tasks like visual search or reaction time might be chosen. However, if the item collection is a part of a looting system after a difficult boss encounter, such as the monsters from Monster Hunter: World [L5], then a stronger implementation would make sense for visual search since the players have beaten the challenge and picking up the reward should not be difficult at all.

When selecting a technique, two important considerations exist that help focus which technique will best match the core task and the assistance goals: Theme and Presentation. Theme refers to whether and how the assistance technique can fit into the theme of the game. Some techniques may be harder to implement than others, based on the type of game, as there can be a fundamental mismatch between a game theme and a technique. For instance, a basketball game could implement some of the aiming techniques to help players score. However, using Projectile Magnetism may not fit, since moving the ball in mid-air would be strange.

Presentation refers to how it will be made available in the game. Will it be available by default, will it be optionally activated by players, or will it be dynamic (i.e., only made available when the system determines that it is needed)?

Many EA techniques need to be calibrated, so that they provide the right level of help. For many of the techniques, the need for calibration is self-explanatory (such as how strong a Target Gravity effect should be). Calibration is important because simply implementing a technique may not be enough to reach the desired goal, or perhaps it could be too much if a degree of challenge and difficulty is still desired. To fine tune the implementation of a technique, playtesting can be done to see how it affects player performance. Further, as we will see in the evaluation of the example assistance techniques below, we found through testing that one technique did not perform as well as expected. Assistance techniques, like other elements of a game, need to be extensively play tested.

To demonstrate how our framework can be applied to balancing challenge in a variety of games, we developed three games that all shared a common core task: Visual Search. We pre-selected three different techniques and implemented them in each of the three games: Highlights, Target Details, and Compass. The goal of this demonstration was to provide a concrete illustration of how identifying core tasks is an effective strategy to help guide the selection of EA techniques, and that different assistance techniques can effectively assist a single core task. We chose to demonstrate three separate EA techniques to highlight their diversity and their application in a range of games. This allowed us to demonstrate how different EA techniques can vary in their appropriateness for different game designs. Visual search was selected as the core task for the demonstration because it has not been closely examined in previous research in assistance techniques or as a target of game balancing activities.

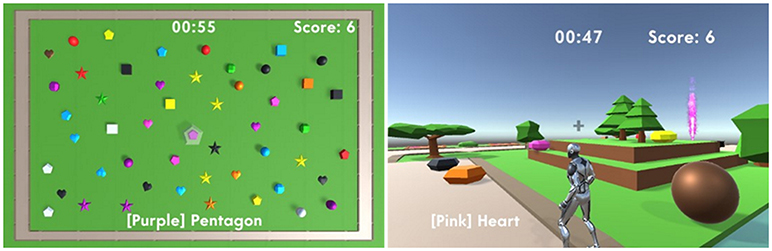

The first game seen in Figure 2 (Left) is a simple hidden-object game with a top-down view and is similar to aspects of play in many puzzle or point-and-click games. The second game is a simple sniper simulation shown in Figure 2 (middle). A “sniper” mode is a common element of many action games. The third game, found in Figure 2 (Right), is a third-person view game with target objects that must be found throughout the environment, which is similar to finding and collecting items in many RPGs.

Figure 2. Three games focused on the same core task (Visual Search) developed to demonstrate the application of assistance techniques. (Left) A hidden-object game with a top-down view. (Middle) A sniper game with a first-person view. (Right) A simple adventure game with a third-person view.

The games are not only differentiated by style and view, but also by the other core tasks they require. The top-down, object search game also requires pointing. The third-person game (Figure 2, right) has elements of steering for moving the character and spatial memory for remembering where a player has already looked. The sniper game (Figure 2, middle) also has an element of signal discrimination, as objects can look similar from the first-person view from the gun.

In each game, the target the player needs to select is given in text—centered near the bottom of the screen. Their score is in the top right corner. A correct click grants the player two points, while clicking on any other shape removes one point. The targets used in all three games are simple shapes such as cubes, spheres, stars, and hearts. However, these could take the form of anything that may be relevant to the game's theme. For example, in Figure 2, the targets could be some in-game loot such as weapons or gold like those from Diablo 3 [L8]. Snipers are usually used to hit moving targets—enemies—such as in Sniper Elite [L45].

We conducted an evaluation with 16 players. Our evaluation had two main purposes. First, we aimed to provide a demonstration that our framework can lead to techniques that are actually effective at adjusting the difficulty of a game (i.e., our framework can guide the selection of techniques that actually make a game easier). Second, and more importantly, it allowed us to collect players' views on the specific EA techniques implemented in the games. Thus, our evaluation allows us to evidence the main concerns and details that designers might confront when applying EA techniques in practice.

To limit our study length to ~1 h, our study used two of the three games that we implemented: Top-down view (Figure 2, left), and third-person (Figure 2, right). These two games were chosen as they are most distinct from one another, allowing players to comment on how the EA techniques vary across different game designs.

Our experiment was a 4 × 2 within-subject design with assistance technique (highlights, target details, compass, no assistance) and game type (top-down and third-person) as independent variables. Our design allowed us to investigate how different techniques perform in different game designs, both from a balancing point of view (through objective in-game performance data) and an experience point of view (through subjective responses to questionnaires).

Below we describe how we developed each of the three external assistance techniques for the Visual Search core task in each of the three games.

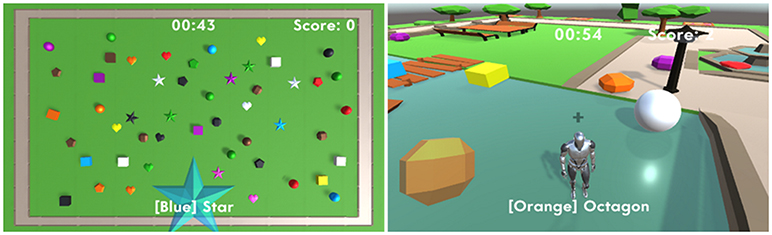

Highlights: For the top-down game, highlights were implemented as a “glow” effect around the target, as seen in Figure 3 (left). For the third-person version, Highlights were redesigned with the players' limited view in mind, since the glow technique would not be particularly helpful when a target was far away from the player. The third-person implementation is a beam of colored particles shooting upwards from the target (Figure 3, right). These are like the colored markers found in Fortnite [L20].

Figure 3. The Highlights assistance technique. A highlighted pink pentagon in the top-down game (left), and in the third-person game (rising pink smoke in the distance; right).

Target Details: Target Details gives the player more information about their target. Normally, only the name and color are given to the player in the form of text. Our implementation gives players an image of their target, which helps them avoid the need to visualize the target and enables quicker comparisons with what they see on screen. The implementation for both games is nearly identical, except for the location of the hint image; see Figure 4.

Figure 4. The target details technique. A large preview hint of the target shape to find in the top-down game (left), and in the third-person game (right).

Compass: A 3D pointer was implemented that is rotated to point toward the next target. The rotation was updated in real-time and moved once a new target was generated, or the player moved (in the third-person view). The compass was placed at the bottom-center of the screen in the top-down version of the game (Figure 5, left), and under the playable character in the third-person view (Figure 5, right).

Figure 5. The Compass technique. An arrow appears above the target shape to find, directing the player toward the target.
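The core of a Compass is the rotation applied to the pointer each time the target or the player moves. The games were built in Unity with a 3D pointer; the sketch below is a simplified 2D version in Python, where the function name `compass_heading`, the coordinate convention (angles measured counter-clockwise from the x-axis), and the use of a player facing angle are all assumptions made for illustration.

```python
import math

def compass_heading(player_pos, player_facing_deg, target_pos):
    """Angle in degrees, in [-180, 180), to rotate the arrow relative to the player's facing."""
    dx = target_pos[0] - player_pos[0]
    dy = target_pos[1] - player_pos[1]
    bearing = math.degrees(math.atan2(dy, dx))  # world-space direction to the target
    # Wrap the difference so the arrow always turns the short way round.
    return (bearing - player_facing_deg + 180.0) % 360.0 - 180.0
```

For a fixed top-down camera, `player_facing_deg` could simply be held at 0 so that the arrow points in screen space; in a third-person view it would be recomputed whenever the player moves or turns.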

The games were built using the Unity 3D game engine. Game sessions were played on a 64-bit Windows 10 machine, Intel Core i7 CPU and a 19-inch, 1440 × 900 monitor.

We recruited 16 participants to play the games. Participants ranged in age from 18 to 43 years old (M = 25.375, SD = 6.889), 4 identified as female and 12 as male; 12 were university students. Participants had a wide range of gaming experience, but all had played games using a mouse and keyboard. Most participants preferred the WASD (62.5%) input scheme over arrow keys (12.5%), and the remainder had no preference. Participants that were not familiar with WASD input spent more time in Practice Mode for the third-person view game to get comfortable with the controls.

The evaluation required ~60 min to complete. The game consisted of completing the experimental task 8 times (see below), once for every combination of the independent variables: 4 “technique” levels (no assistance, compass, highlight, and hint, i.e., Target Details) and 2 “game type” levels (top-down and third-person). The presentation of techniques was balanced between participants using an 8 × 8 Latin square.
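One simple way to construct such a counterbalancing scheme is a cyclic Latin square over the eight technique and game combinations. The sketch below is an assumption about how an 8 × 8 square could be generated and assigned; the specific square and the ordering of conditions used in the study are not reported here, and the function names are hypothetical.

```python
from itertools import product

TECHNIQUES = ["no assistance", "compass", "highlight", "hint"]
GAMES = ["top-down", "third-person"]
CONDITIONS = list(product(TECHNIQUES, GAMES))  # 8 technique/game combinations

def latin_square(n):
    """Cyclic n x n Latin square: row r is the sequence 0..n-1 rotated by r."""
    return [[(r + c) % n for c in range(n)] for r in range(n)]

def order_for(participant):
    """Condition order for a participant index (rows repeat after 8 participants)."""
    row = latin_square(len(CONDITIONS))[participant % len(CONDITIONS)]
    return [CONDITIONS[i] for i in row]
```

With 16 participants, each row of the square would be used twice, so every condition appears in every serial position equally often. A cyclic square does not balance first-order carry-over effects; a balanced (Williams) design would be needed for that.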

Before beginning a play-through, participants were given a brief introduction to each new technique and new game, and provided an opportunity to get accustomed to the combination by playing the game in a practice mode. Participants were informed that they could take as much time as necessary to get comfortable with a specific technique in a specific game. Overall, training required ~10 min per participant.

Participants were asked to complete a demographics questionnaire and subjective questionnaires as follows. The demographics questionnaire was completed at the start of the experiment and collected basic information (age, occupation, etc.) as well as experience with video games and relevant game controls schemes (e.g., the WASD controls that were used in our third-person game). Subjective measures were collected via a brief questionnaire presented after each technique, and a final questionnaire asking participants to reflect on their experiences was completed after gameplay. All questionnaires used Likert-style scales. Post-technique questionnaires collected ratings on their experience and the NASA TLX (to capture cognitive effort/task loading); the individual questionnaire items are presented with the results (section Subjective Measures). The final questionnaire asked participants to compare techniques as to their appropriateness for use in games.

Our experiment was approved by the Research Ethics Board of the University of New Brunswick.

For each run of the game, participants were given 2 min to locate and click on as many targets as possible amongst a field of distractors. Participants were instructed to score as many points as possible in the 2 min. The amount of time remaining in the game was displayed in the upper left corner during the experiment. We selected 2 min as the time to play each version of the games through piloting with members of our research group not involved in the research. We found that 2 min provided more than enough time to experience the game without fatiguing participants, and allowed the full experiment to be completed comfortably within 1 h.

Recall that players score two points for successful selections and lose one point for incorrect selections. After a successful click, all the shapes in the game scene are randomly regenerated (to ensure that the game required visual search and not spatial memory), and a new target was presented.
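The scoring and regeneration rule can be summarized in a few lines. This is a sketch of the rule as described, not the Unity code used in the study; the state layout, the item pool, the scene size of eight items, and the function names are assumptions.

```python
import random

SHAPES = ["cube", "sphere", "star", "heart"]
COLORS = ["pink", "orange", "blue"]

def new_round(rng, n_items=8):
    """Regenerate the scene with distinct (color, shape) items and pick one as the target."""
    pool = [(c, s) for c in COLORS for s in SHAPES]
    scene = rng.sample(pool, n_items)
    return scene, rng.choice(scene)

def click(state, clicked, rng):
    """Apply one click: +2 and a fresh scene on a hit, -1 on a miss."""
    if clicked == state["target"]:
        state["score"] += 2
        # Regenerating everything keeps the task visual search, not spatial memory.
        state["scene"], state["target"] = new_round(rng)
    else:
        state["score"] -= 1
    return state
```

Because every item is repositioned after a hit, remembering where shapes were on the previous round gives no advantage.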

Given the amount of practice that participants had and the relatively simple gameplay, 2 min provided more than enough time per game, and ensured that players stayed engaged throughout the experiment. We note that participants provided a consistent level of effort throughout the experiment. We often observed participants racing against the clock to hit one more target, and a few were visibly upset if they were unable to get their final target just as their time ran out.

To assess the effectiveness of the techniques we considered the score participants achieved in each of the games, since maximizing score was their main goal in the experiment.

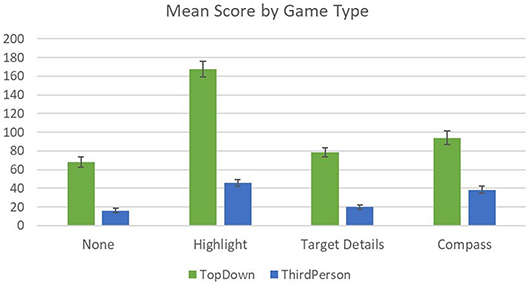

The grand mean score for all games was 66.0 points (SD = 50.5). The Top-Down game had the higher mean score of 102.1 (SD = 46.7), while Third-Person had the lower mean score at 30.0 points (SD = 17.7). We conducted a two-way ANOVA with within-subject factors (technique and game type) to analyze the data. The main effect of Game Type on player score was statistically significant [F(1, 15) = 235.4, p < 0.01], see Figure 6. This difference is unsurprising, since the third-person game requires players to spend time navigating to the next target.

Figure 6. Mean player score (in points) achieved after two minutes of gameplay for each game and technique combination.

Examining the assistance techniques (independent of game type) on player score, we found that the highest mean score was achieved with Highlights, at 106.6 points, followed by Compass with 68.0 points, and finally Target Details and No Assistance came near the bottom with 49.2 and 42.1 points, respectively. The main effect of assistance techniques was also significant [F(3, 45) = 107.2, p < 0.01], see Figure 6.

There was a significant Game Type × Assistance Technique interaction effect [F(3, 45) = 50.7, p < 0.01], which was due mainly to the significant differences between Highlight and the other techniques for the Top-Down game and nearly all the pairs for Third-Person (except for None/Hint and Highlight/Compass, which were not significant; see Figure 6), as determined by a Scheffé post-hoc analysis.

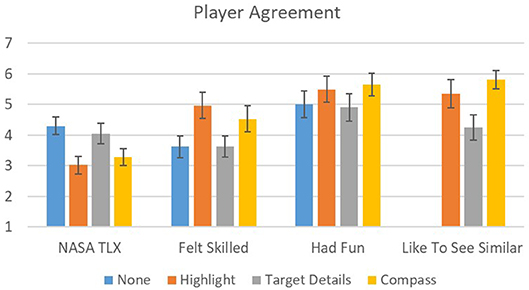

To assess players' views on the use of assistance techniques in the games, and in general, participants filled out several questionnaires. We analyzed the results of both games together, since the techniques were rated similarly in both game conditions. The chart in Figure 7 shows the mean agreement with Likert-style ratings for a number of statements (see below). All ratings were on a 7-point scale; 1 = strongly disagree, 4 = neutral, 7 = strongly agree.

Figure 7. Agreement ratings by Assistance Technique. For NASA TLX scores, lower is better; for the other ratings, higher is better.

First, participants' ratings on NASA Task Load Index (TLX) items were aggregated into one value representing “Task Loading” (lower is better) (Hart, 2006). The other three ratings are mean values of agreement with the following statements: “I felt skilled at this game,” “I had fun playing this game,” and “I would like to see similar assistance techniques implemented in games I play.” See Figure 7.

We used a Friedman's test to detect differences in rating between each of the four Technique levels. Overall, the same trend can be seen across each item. There was a significant main effect of Assistance Technique on Task Loading—TLX (χ2 = 25.253, p < 0.0001, df = 3), Feeling Skilled (χ2 = 22.829, p < 0.0001, df = 3), Having Fun (χ2 = 10.602, p < 0.05, df = 3), and Liking to See a Similar Technique in Games Played (χ2 = 25.196, p < 0.0001, df = 3). Post-hoc tests show there were significant differences (p < 0.05) for the same pairs in all tests. All pairs were significantly different with the exception of Highlight & Compass, and Target Details & None.
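For readers unfamiliar with the Friedman test, the statistic is computed by ranking each participant's ratings across the four techniques and comparing the rank sums. The sketch below implements the basic statistic in plain Python; it omits the tie correction that is commonly applied to Likert-style data, so it is illustrative only and is not the procedure used to obtain the values reported above. The function names are hypothetical.

```python
def average_ranks(values):
    """Rank values from 1..k, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for m in range(i, j + 1):
            ranks[order[m]] = mean_rank
        i = j + 1
    return ranks

def friedman_chi2(rows):
    """Friedman statistic for n participants (rows) by k conditions (columns), no tie correction."""
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    for row in rows:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    return 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)
```

The statistic is compared against a chi-squared distribution with k − 1 degrees of freedom, which for four techniques gives the df = 3 reported above.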

Best Technique for Game: After playing each game, players responded to the question: “Which technique did you feel best fit with this type of game?” For the Top-Down game, of the 16 participants, 7 (44%) felt the Compass technique was most appropriate, 6 (38%) chose Highlights, and 3 (19%) felt that Target Details was best.

When asked about the most suitable assistance technique for the Third-Person game, the 16 participants were evenly split: eight felt that Highlights was the most appropriate and eight felt that Compass was the most appropriate; none felt that Target Details was best for the Third-Person game. When asked to justify their choice for the best technique for the game, some participants described selecting Highlights because it helped them perform the best. However, others pointed out that Highlights made the game too easy, which is why they chose Compass over Highlights.

Overall, participants found the preview available in the Target Details technique not as helpful. From informal discussions after the experiments, participants attributed this to the fact that the visual representation (a larger, semi-transparent shape) was not always similar to the target they were looking for. In the top-down game, the preview was a closer representation of what they were looking for (e.g., consider the blue star Highlight and the target shape that can be seen in Figure 4, left). However, participants found Target Details less helpful in the Third-Person game. This is due to the target often being differently orientated than in the game (note the different appearance of the “Orange Octagon” using Highlights in Figure 4, right, and the corresponding target in Figure 2, right).

Free-form Questions: We also asked participants several free-form questions at the end of the experiment to solicit their opinions after playing all of the games with the different techniques and to gauge their feeling on the use of EA techniques in games.

All 16 participants felt that EA techniques like the ones they used are well-suited for inclusion in other games. Some players provided further detail as to why, such as P1, who said, “… some games have very good potential but discourages player by not offering help to proceed when the player is stuck.” Other participants agreed but provided some conditions for how Assistance Techniques should be provided, for example saying that “… a toggle menu allowing players to turn on or off the techniques would be beneficial” (P15). When asked, all participants felt they would like to have control of Assistance Techniques and to be able to turn them off if they wanted to.

When considering whether they would like to know about how different techniques are being used to assist them in games they played, players were a little more split. Most of the participants (75%) felt that they would like to know. However, others felt they would prefer not to know. P15 highlighted this sentiment by pointing out that “Knowing that [an Assistance Technique] was there and performing poorly would not be beneficial as you would feel as though even with assistance, you could not perform well in game.”

Our study provides important findings that can help in designing and applying EA techniques.

1. For the previously under-studied core task of Visual Search in games, all techniques helped players perform better and have a better play experience (except for Highlights in the Third-Person game).

2. In general, as an Assistance Technique provided more of a boost to performance, players found the task easier (lower TLX scores), felt more skilled, had more fun, and were more in favor of seeing the technique in a game.

3. Participants rated techniques differently depending on the game: Highlights was rated best for the Top-Down game, and Highlights and Compass were rated best for the Third-Person game.

4. Participants want control of Assistance Techniques, through the ability to toggle them on or off.

5. Revealing the use of Assistance Techniques to provide success should be done carefully, since players might interpret not reaching goals as a personal failure.

The focus of our work was not the evaluation of previously understudied EA techniques, but rather the presentation of our framework of EA techniques. Our games and user evaluation were performed to exemplify the use of EA techniques in practice, including how techniques were adapted to different game types and genres and how different techniques can lead to different experiences. Below we elaborate on how the findings from our study inform the application of EA techniques and propose future work to refine and extend our framework.

Performance in the two games was different. While both games were based on a Visual Search core task, and involved clicking on shapes, they played like very different games. The games, while simple, were representative of many common real-world games, and the fact that players scored differently between the games, with or without assistance, suggests that our goal of making different games was achieved. We note that all players rated the games as fun to play, regardless of Assistance Technique, and that the techniques improved play experience.

As we expected, participants enjoyed the games more the better they performed. As they found the game easier, they felt more skilled, had more fun, and rated their desire to have a particular technique in their game higher as their performance increased. However, we also found strong evidence that when a game was too easy, it started to become less fun for many players. We can identify exactly when this happened for players in our study, due to the interaction effect detected between Game Type and Assistance Technique, which we can attribute largely to the disproportionate performance of Highlights in the top-down game (see Figure 6). In this case, Highlight provided such strong assistance that it removed most of the challenge of the game, meaning that players found the game less fun. This speaks to the challenge of balancing games, with any approach; while our Assistance Techniques were added after the development of the basic game, they still need to be balanced themselves. The hope, however, for external assistance techniques is that since they operate outside of the main in-game mechanics, this balancing activity is greatly simplified since it can work independently of those other mechanics.

We believe that adding any type of assistance to a game, like all other types of game mechanics, must be done carefully. The very idea of an “Assistance Technique” might suggest to some that there is an expectation of success once assistance has been given. As we saw from one participant's comments, they felt that knowing that assistance was being given and still failing might make them feel bad about their abilities. At the same time, in many game designs, failure is an important part of the play experience; we play to be challenged, to accomplish our goals in a safe space, and with this in mind some degree of failure is needed to make the experience meaningful (Juul, 2013). For players who are highly skilled, providing assistance when it removes challenge or reduces the chance of failure might be akin to attaching training wheels to the bicycle of a skilled rider, and might even harm their perceived competence (Wiemeyer et al., 2016). Further work needs to be done to understand the intricacies of providing assistance and how it affects play experience.

Our analysis did not examine in depth the reasoning behind players' preferences for certain techniques. For example, it is possible that players preferred techniques that they had more familiarity with, rather than the ones that universally made the “best” game. While we do not believe this to be the case, future work should consider such potential factors. As in previous research on assistance techniques, we have found further evidence that players want control over the use of assistance, yet do not necessarily want to be reminded that it is being provided (Bateman et al., 2011b). Revealing the use of assistance techniques for balancing challenge (or competition between players; Vicencio-Moreira et al., 2015) should be done carefully.

Our implementations and evaluation demonstrated the utility of the framework for adapting EA techniques to games to provide significant changes in game performance, which we consider. We provided a step-by-step process for adapting EA techniques to existing games, where the first step, “Core Task Identification,” is a critical step in determining how a game can be assisted. In our evaluation, the games consisted of just a few core tasks: Visual Search and Pointing for both games, and, additionally, Steering in the Third-Person game.

Once the core tasks are identified, the appropriate assistance can be chosen to adjust player performance. If the desired effect or difficulty is not achieved, designers may then consider providing assistance to the other tasks in the game. For example, in our evaluation, players could have benefitted from a Pointing assistance technique to improve their scores even further.
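The first two steps of this process can be sketched as a simple lookup. This is a minimal illustration rather than the implementation used in the evaluation: the dictionary layout and the function names `candidate_techniques` and `unassisted_tasks` are assumptions made for the example, and only the three Visual Search techniques evaluated in our study are filled in. Techniques for the other core tasks would be added from the framework.

```python
# Core tasks identified for the two evaluation games (step 1 of the process).
GAME_CORE_TASKS = {
    "top-down": ["Visual Search", "Pointing"],
    "third-person": ["Visual Search", "Pointing", "Steering"],
}

# Assumption: only the Visual Search techniques evaluated in the study are
# listed here; entries for the other core tasks are intentionally left out.
TECHNIQUES_BY_TASK = {
    "Visual Search": ["Highlights", "Target Details", "Compass"],
}


def unassisted_tasks(game, already_assisted=()):
    """Core tasks of a game that have not yet received assistance."""
    return [task for task in GAME_CORE_TASKS[game] if task not in already_assisted]


def candidate_techniques(game, already_assisted=()):
    """List (core task, technique) pairs a designer could try next (step 2)."""
    return [
        (task, technique)
        for task in unassisted_tasks(game, already_assisted)
        for technique in TECHNIQUES_BY_TASK.get(task, [])
    ]


if __name__ == "__main__":
    # Candidates for the top-down game before any assistance is added.
    print(candidate_techniques("top-down"))
    # If assisting Visual Search does not reach the desired difficulty,
    # these are the remaining core tasks a designer could assist next.
    print(unassisted_tasks("third-person", already_assisted=["Visual Search"]))
```

Keeping the mapping keyed by core task, rather than by game or genre, mirrors the organizing idea of the framework: a technique listed once for a core task is available to every game that shares that task.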

Game designers need to playtest their techniques carefully. Sometimes our evaluation results followed conventional wisdom, but sometimes we found unexpected results. For example, Compass performed nearly as well as Highlights, especially in Third-Person, which we did not expect.

Unsurprisingly, but importantly, player preference is not always about performance. EA techniques can make certain tasks too easy, as many participants felt about Highlights. Even though it was effective and increased the players' scores considerably, players often preferred the Compass, which did not have as strong an effect as Highlights. Designers should keep this in mind when deciding when and where to implement certain techniques and consider the level of challenge that is desired.
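One way to act on this observation is to choose a technique by how close it brings players to a desired level of performance, rather than by how much it raises scores. The sketch below is only an illustration under stated assumptions: the function name `pick_technique` is hypothetical, and the scores are made-up placeholder values, not results from our study.

```python
def pick_technique(mean_scores, target_score):
    """Return the technique whose mean playtest score is closest to the target.

    mean_scores maps a technique name to the mean score observed in playtests.
    """
    return min(mean_scores, key=lambda name: abs(mean_scores[name] - target_score))


if __name__ == "__main__":
    # Hypothetical playtest means (NOT data from the study).
    playtest_means = {
        "Target Details": 14.0,
        "Compass": 18.0,
        "Highlights": 25.0,
    }
    # A designer aiming for a moderate level of challenge picks the technique
    # nearest the target instead of the one with the highest score.
    print(pick_technique(playtest_means, target_score=17.0))
```

A score-based rule like this captures only the performance side of the decision; reported fun and preference from playtests would still need to be weighed alongside it.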

Importantly, however, our work is fundamentally limited because it did not directly involve a broad set of game designers. While our first author has experience as a game designer, and we leveraged relevant experience reports from designers (in the cited articles from Gamasutra), we still have little evidence of the utility of our framework in actual practice with larger and more complex game designs. In our future work, we would very much like to discuss our framework in an interview study with practicing game designers to understand its utility to them, and how it might actually fit into their design practices.

This work provides several new directions for research. Assistance techniques for games have been investigated for a number of years in the HCI community. However, previous work has often focused on input assistance (working at the level of input for steering, pointing, and aiming). In this work we identify a number of understudied ways in which assistance can be provided in games (by beginning with the game's Core Tasks). Future work should confirm the effectiveness of our process for adapting EA techniques to games, both when applying existing techniques and in developing completely new techniques.

More basic research is needed, looking at EA techniques and how they can impact play, in a wider range of game types. By relating new work back to the concept of core tasks, we will get a consistent organizational concept for identifying new directions and understanding performance at a fundamental level, and how techniques impact other aspects of play such as skill development (Gutwin et al., 2016).

Our work focuses on the idea of core tasks from the work of Flatla et al. (2011) to help focus and narrow the mechanics that we looked at. Core tasks are the basic motor and perceptual tasks that are needed to interact with common game mechanics; however, the skills corresponding to core tasks only make up a small subset of the larger sets of skills players might need in games. We believe that this narrower focus was a necessary step to make our initial research in this area tractable. Future work should consider Schell's broader characterization of skills in games (Schell, 2019), which includes social (e.g., building trust and relationships) and mental skills (e.g., establishing plans and strategy), as a starting point to identify very different but important skills that EA techniques can target to improve balance.

There is also a wider range of research that can likely be drawn upon to further exemplify EA techniques. In our work, we looked at a wide range of techniques that could be considered as assistance. However, different examples of EA techniques might emerge, and depending on the focus of any process creating a framework, different granularities of concepts and organizing principles will be developed. For example, the work of Alves and Roque (2010) provides a comprehensive list of “sound design patterns” that can help support game designers in developing sound to support their games. Two of the authors have informally discussed all 78 patterns and believe that roughly a third of these could be considered as EA techniques. For example, the “Imminent Death” sound pattern would be an example of a “Companion Signal” in our framework. Of the patterns that might be considered as EA techniques, we believe they represent specific examples of the “Signal Detection,” “Signal Discrimination,” and “Path Guidance” techniques.

So, while other specific examples of EA techniques might exist, it seems that they fit well into the categories of EA techniques that we identified. The informal exercise described above helps reassure us that our framework provided good generalizability, but that there are likely many examples of the techniques that could help designers identify specific adaptations of a technique for their games. To this end we hope to follow the lead of Alves and Roque, and develop materials that help make concrete examples of EA techniques in games more accessible, similar to Alves and Roque's sound design cards (Alves and Roque, 2011) and companion website (www.soundingames.com). While we believe our list of core tasks has good utility, in the future it is likely that new technological developments may lead to the need for changes and refinements to our initial list. For example, Body Controls is currently a comprehensive category that includes Muscle Activation, Ambidexterity and Movement. These subcategories may become more distinct as games begin to take advantage of body input, especially with the advancements made in Virtual and Augmented Reality technologies (Foxlin et al., 1998).

In this paper, we analyzed 54 games using a grounded theory approach, allowing us to identify a framework of 10 different core tasks commonly needed in games, and 27 possible external assistance techniques that can make them easier to complete. Several of those techniques have been previously studied, while others are still to be explored and evaluated.

By organizing video game assistance at a fundamental level, through the lens of core tasks, we assist in the portability and understanding of these techniques across games, regardless of genre or platform. One of the main goals of this work was to create a comprehensive starting point for designers and game developers considering assistance for their games. We have successfully collected and presented a wide range of assistance techniques, exemplifying them and providing clear new language for discussing them.

We also conducted a study on the effectiveness of several techniques pertaining to a previously under-studied core task in games, Visual Search. We evaluated the effectiveness of three techniques (Highlights, Target Details, and Compass) in two different games that share Visual Search as a core task. Our findings show that the techniques improve performance and are suitable for balancing challenge.

In this paper, we provide the first generalization of how the range of core tasks can be assisted in games. Our work gives designers a new language for discussing external assistance techniques and a starting point for making important and common design decisions in order to target an appropriate level of challenge in their games. Further, we provide a general methodology that can be used in future research that studies and characterizes techniques that designers can employ in targeting a desired play experience.

The datasets generated for this study are available on request to the corresponding author.

The studies involving human participants were reviewed and approved by Research Ethics Committee University of New Brunswick. The patients/participants provided their written informed consent to participate in this study.

The research was conceived of mutually by JR, SB, and MF. JR led data collection and analysis with the assistance of SB and MF. JR and SB contributed equally to the writing with assistance from MF.

This research was supported by NSERC and the New Brunswick Innovation Foundation.

At the time of preparing this manuscript JR was employed by Kabam Games. However, all research described was conducted while JR was a graduate student at the University of New Brunswick, and all work was done independently of Kabam Games.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcomp.2020.00017/full#supplementary-material

Alharthi, S. A., Alsaedi, O., Toups, Z. O., Tanenbaum, J., and Hammer, J. (2018). “Playing to wait: a taxonomy of idle games,” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems CHI'18 (Montreal, QC: Association for Computing Machinery), 1–15.

Alves, V., and Roque, L. (2010). “A pattern language for sound design in games,” in Proceedings of the 5th Audio Mostly Conference: A Conference on Interaction With Sound AM'10 (Piteå: Association for Computing Machinery), 1–8.

Alves, V., and Roque, L. (2011). “A deck for sound design in games: enhancements based on a design exercise,” in Proceedings of the 8th International Conference on Advances in Computer Entertainment Technology ACE'11 (Lisbon: Association for Computing Machinery), 1–8.

Auto-Aim (Concept). (2019). Giant Bomb. Available online at: https://www.giantbomb.com/auto-aim/3015-145/ (accessed February 1, 2020).

Balakrishnan, R. (2004). “Beating” Fitts' law: virtual enhancements for pointing facilitation. Int. J. Hum. Comput. Stud. 61, 857–874. doi: 10.1016/j.ijhcs.2004.09.002

Baron, S. (2012). Cognitive Flow: The Psychology of Great Game Design. Gamasutra. Available online at: https://www.gamasutra.com/view/feature/166972/cognitive_flow_the_psychology_of_php (accessed January 21, 2020).

Bateman, S., Doucette, A., Xiao, R., Gutwin, C., Mandryk, R. L., and Cockburn, A. (2011a). “Effects of view, input device, and track width on video game driving,” in Proceedings of Graphics Interface 2011 GI'11 (St. John's, NL: Canadian Human-Computer Communications Society), 207–214.

Bateman, S., Mandryk, R. L., Stach, T., and Gutwin, C. (2011b). “Target assistance for subtly balancing competitive play,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems CHI'11 (New York, NY: ACM), 2355–2364.

Bourtos, D. (2008). Difficulty is Difficult: Designing for Hard Modes in Games. Gamasutra. Available online at: https://www.gamasutra.com/view/feature/132181/difficulty_is_difficult_designing_.php (accessed January 21, 2020).

Burgun, K. (2011). Understanding Balance in Video Games. Gamasutra. Available online at: https://www.gamasutra.com/view/feature/134768/understanding_balance_in_video_.php (accessed April 7, 2020).

Cechanowicz, J. E., Gutwin, C., Bateman, S., Mandryk, R., and Stavness, I. (2014). “Improving player balancing in racing games,” in Proceedings of the First ACM SIGCHI Annual Symposium on Computer-Human Interaction in Play CHI PLAY'14 (Toronto, ON: Association for Computing Machinery), 47–56.

Chen, J. (2007). Flow in games (and everything else). Commun. ACM 50, 31–34. doi: 10.1145/1232743.1232769

Csikszentmihalyi, M. (2008). Flow: The Psychology of Optimal Experience. New York, NY: Harper Perennial Modern Classics.

Denisova, A., Guckelsberger, C., and Zendle, D. (2017). “Challenge in digital games: towards developing a measurement tool,” in Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems CHI EA'17 (Denver, CO: Association for Computing Machinery), 2511–2519.

Felder, D. (2015a). Design 101: Balancing Games. Available online at: https://www.gamasutra.com/blogs/DanFelder/20151012/251443/Design_101_Balancing_Games.php (accessed February 1, 2020).

Felder, D. (2015b). Design 101: Playtesting. Available online at: https://www.gamasutra.com/blogs/DanFelder/20151105/258034/Design_101_Playtesting.php (accessed February 1, 2020).

Flatla, D. R., Gutwin, C., Nacke, L. E., Bateman, S., and Mandryk, R. L. (2011). “Calibration games: making calibration tasks enjoyable by adding motivating game elements,” in Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology UIST'11 (New York, NY: ACM), 403–412.

Foxlin, E., Harrington, M., and Pfeifer, G. (1998). “Constellation: a wide-range wireless motion-tracking system for augmented reality and virtual set applications,” in Proceedings of the 25th Annual Conference on Computer Graphics and Interactive Techniques SIGGRAPH'98 (New York, NY: Association for Computing Machinery), 371–378.

Gerling, K. M., Miller, M., Mandryk, R. L., Birk, M. V., and Smeddinck, J. D. (2014). “Effects of balancing for physical abilities on player performance, experience and self-esteem in exergames,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems CHI'14 (New York, NY: ACM), 2201–2210.

Glaser, B. G. (1998). Doing Grounded Theory: Issues and Discussions. Mill Valley, CA: Sociology Press.