- 1Department of Civil Engineering, Marshall University, Huntington, WV, United States

- 2School of Civil & Environmental Engineering and Construction Management, The University of Texas at San Antonio, San Antonio, TX, United States

- 3Department of Civil Engineering, The University of Jordan, Amman, Jordan

- 4Department of Civil Engineering and Construction, Bradley University, Peoria, IL, United States

Building roof inspections must be performed regularly to ensure that repairs and replacements are done promptly. On sloped roofs, these inspections are often overlooked because manual inspection is inefficient and the roofs are difficult to access. Walking a roof to inspect each tile is time-consuming, and the time needed grows as the roof slope increases. Moreover, there is an intrinsic safety risk: falls from roofs tend to cause severe and expensive injuries. The emergence of new sensing technologies and artificial intelligence (AI), such as high-resolution imagery and deep learning, has made it possible to move beyond manual labor in damage assessments. These technologies bring significant advantages for safety management and can substitute for the traditional assessment of roofs. This study uses unmanned aerial vehicles (UAVs) and deep learning to automatically collect and classify roof imagery and identify missing shingles, thus eliminating the safety risk involved in traditional manual inspections of sloped roofs. The proposed research can help real estate agents, insurance companies, and others make better-informed decisions about roof conditions. Future research could refine the model to handle other types of defects in addition to missing shingles.

Introduction

Rooftops can deteriorate because of the loss of shingles, or they can be completely damaged by storms and hail. Every year, homeowners file millions of dollars in property insurance claims resulting from such damage. The claims process involves an inherent delay, which can be stressful for customers. To mitigate this problem, insurance companies prefer periodic assessments of rooftops, which can cut costs significantly. However, two factors hinder periodic assessment. The first is the risk of falling from the roof, and the second is the extra cost due to inaccuracy and human error. Falling from heights is one of the most significant causes of accidents in the construction industry. Construction workers in general, and roofers in particular, are exposed to risk whenever they get on a roof, and physical limitations such as weight or age increase that risk. According to a study by the Center for Construction Research and Training (CPWR), fatal falls to a lower level accounted for more than one in three (36.4%) construction deaths in 2020. The study further states that roofs were the primary source of those fatal injuries (Brown et al., 2021).

The combination of artificial intelligence (AI) and drones has made it possible to perform high-level operations without human intervention, including the efficient inspection of infrastructure. The overall drone inspection and monitoring market is expected to grow from USD 9.1 billion in 2021 to USD 33.6 billion by 2030, at a compound annual growth rate of 15.7% (Intelligence, 2022). Deep learning, a subfield of AI, has shown promising results in computer vision applications. In particular, convolutional neural networks (CNNs) can process drone imagery and perform classification and object detection with different architectures (e.g., YOLO, ResNet-50, VGG-f, and AlexNet) (Mseddi et al., 2021). Deep learning is also a modern approach to data classification that has shown recent advances in unsupervised image classification, in some cases producing results with higher accuracy than humans (Wan et al., 2014).

The deployment of these technologies has not yet been explored for the inspection of sloped roofs, where they could reduce or eliminate the safety risks associated with the conventional inspection approach. To bridge this gap, this study investigates the use of UAVs and deep learning for the inspection of sloped roofs through the automatic collection and classification of roof imagery to identify missing shingles. A residential roof condition assessment method using deep learning techniques is presented. The proposed method operates on residential roofs and divides the task into two stages: 1) using drones to collect high-resolution roof images; and 2) developing and training a deep learning model, a convolutional neural network (CNN), to classify roofs into good and bad condition based on missing shingles.

Literature review

Many research studies have reported the application of deep learning to image processing, mainly for concrete structures and road condition assessment. This section reviews these applications, starting with road condition assessment, followed by concrete structure condition assessment, and ending with the sparse work on roof assessment that motivates this study. The first subsection below summarizes the work that has been done on road assessment using artificial intelligence methods.

Road condition assessment

Many researchers have worked on optimizing road condition assessment, an important research area given the high cost of road maintenance and the fact that road infrastructure around the world is often not in its best shape. Ukhwah et al. (2019) proposed a novel approach for asphalt pavement road detection using the YOLO neural network. Different YOLO versions (YOLO v3, YOLO v3 Tiny, and YOLO v3 SPP) were evaluated based on accuracy and mean average precision (mAP). The results show that the mAP of YOLO v3, YOLO v3 Tiny, and YOLO v3 SPP was 83.43%, 79.33%, and 88.93%, with accuracies of 64.45%, 53.26%, and 72.10%, respectively. Detection takes only 0.04 s per image, which indicates the efficiency of the proposed models. In a recent study, Mubashshira et al. (2020) proposed a new model for crack detection on roads using unsupervised learning. Color histograms of roads were used to analyze the road pavements. Afterward, K-means clustering and Otsu thresholding were employed for segmentation in order to detect the cracks. Results showed that the model performed well in detecting and localizing the damage in the image. In another study, Sizyakin et al. (2020) proposed a new model for crack detection on roads using deep learning. They used the publicly available dataset CRACK500 and trained the U-Net segmentation network on it. Moreover, morphological fitting was used to enhance the binary map so that the model could detect cracks with higher accuracy. Aravindkumar et al. (2021) proposed a model for automatic crack detection on roads using deep learning. A dataset of 3,533 images of roads in Tamil Nadu, India, was collected. They applied transfer learning using a ResNet-152 classifier combined with a faster region-based convolutional neural network (Faster R-CNN). The model was trained on images of a variety of damages and cracks, and the relevant authority was then notified of the locations of these damages. From the results, it was concluded that their model worked effectively.

Bhat et al. (2020) proposed a model for crack detection on roads using deep learning. In their work, they examined various models, from traditional CNNs and image processing to segmentation. A variety of classifiers were also analyzed; however, based on the results, the CNN outperformed all other models due to its high accuracy. Elghaish et al. (2021) proposed a model for the classification and detection of highway cracks using deep learning. A dataset of 4,663 images was collected and classified into “horizontal cracks”, “horizontal and vertical cracks”, and “diagonal cracks”. State-of-the-art object detection algorithms were used, and a CNN model was developed in order to increase the accuracy of the proposed model. From the results, it was concluded that, among the pre-trained models, GoogLeNet had the highest accuracy at 89.08%. However, the newly created CNN model exceeded the accuracy of all other algorithms, reaching 97.62% with the Adam optimizer.

Bhavya et al. (2021) proposed a model for pothole detection using deep learning classification techniques. The images were divided into roads with potholes and roads without potholes. They used the state-of-the-art pre-trained model ResNet-50 as a classifier. From the results, it was concluded that the model can accurately classify different kinds of roads and can replace manual labor in assessing road conditions. Ping et al. (2020) proposed an efficient deep learning model for detecting potholes using state-of-the-art object detection models such as YOLO, SSD, HOG with SVM, and Faster R-CNN. The images were pre-processed and labeled accordingly. Their results showed that YOLO performed better than the other object detectors owing to its higher accuracy and faster computation. Pan et al. (2018) proposed a new model for detecting potholes on roads using machine learning and UAVs. In their study, multispectral images were taken using UAVs and processed with machine learning algorithms such as support vector machine (SVM) and random forest. The data was classified into normal pavements and pavements with potholes and cracks. They evaluated different models and concluded that remote sensing via UAVs can offer an alternative to the traditional assessment of roads.

Arman et al. (2020) presented a model for the classification and detection of road damage using deep learning. Images were taken with a smartphone camera in the city of Dhaka. Damages such as pothole, crack, and revealing were taken into account. The images were fed into Faster R-CNN and R-CNN for object detection, and a support vector machine was used for classification. The results demonstrated 98.88% damage detection and classification accuracy. Wang et al. (2018) proposed a deep learning model for damage detection and classification in road networks. They used SSD and Faster R-CNN for detection and classification. The results were demonstrated in the IEEE BigData Road Damage Detection Challenge, where, according to the authors, their model performed better. Alfarrarjeh et al. (2018) suggested a deep learning model for road damage detection using smartphone images. They trained their object detection algorithm on images of the various damage types defined by the Japan Road Association. Results show that their model performed well despite the low image resolution and achieved an F1 score of up to 0.62. Seydi et al. (2020) proposed a model for assessing road network damage caused by an earthquake, using a LiDAR point cloud. The proposed model has three steps. In the first step, features of the LiDAR data were extracted using a CNN. In the second step, another neural network, a multilayer perceptron (MLP), was used to detect debris in the data. In the third step, the output of the MLP was fed into another neural network to classify the road segments as blocked or unblocked. Their model performed well and achieved an accuracy of 97%.

Liu et al. (2020) proposed a novel approach for detecting damage in roads using a deep learning model. They first used a segmentation technique to detect road areas; the data was then fed into the object detection models You Only Look Once (YOLOv4) and Faster Region-based Convolutional Neural Network (Faster R-CNN) to detect the damage. The results show that the proposed model achieved good object detection capability in the IEEE Global Road Damage Detection Challenge 2020. Ale et al. (2018) proposed a faster and more accurate deep learning model for detecting road damage. In their model, classification and bounding box regression are done in a single stage, which is much faster than two-stage approaches that perform classification in one step and bounding box regression in a second. They trained several one-stage models on the images and found that RetinaNet can detect road damage with high accuracy. Bojarczak et al. (2021) proposed a model for damage diagnosis of railways using deep learning and UAVs. They used a fully convolutional network (i.e., semantic segmentation) to locate defects in the railhead. Their model was built in a TensorFlow environment with the OpenCV Python library. The results showed that their model could accurately locate defects in the railway with an efficiency rate of 81%.

To summarize, this section shows that deep learning can be used successfully to diagnose and identify damage (including potholes and cracks) on asphalt roads and highways. The next subsection will discuss the assessment of concrete structures for automated crack detection using deep learning and other techniques.

Concrete structures condition assessment

Concrete structure condition assessment has been of interest to the Federal Department of Transportation for many years, especially the assessment of bridge conditions. Otero et al. (2018) proposed a model for remote sensing in concrete bridge inspection using deep learning. In their work, an unsupervised learning approach was used to extract the features (i.e., cracks) from the images. The dataset consisted of different kinds of damage along with a noise factor. After testing the model, they found that the algorithm can successfully detect cracks in different kinds of images. Chen et al. (2020) proposed a model for damage detection in concrete bridges using deep learning. Their model is based on transfer learning: an existing state-of-the-art object detection algorithm, YOLOv3, is used for object localization, and deformable convolution is used to extract small features. The tests showed that their model is more effective and requires less computation time.

Kim et al. (2020) proposed a deep learning model for the detection of multiple types of damage in concrete. Their model used Mask R-CNN, which was trained on images of cracks, efflorescence, rebar exposure, and spalling. According to the results, their model achieved a precision of 90.4%, which shows the applicability of Mask R-CNN to damage detection. Rajadurai et al. (2021) proposed a deep learning model for automated crack detection in concrete. Transfer learning was used, and images (with and without cracks) were fed into the AlexNet model. Stochastic gradient descent was used as the optimizer to prevent high loss. Their model showed an accuracy of 99.99% on the test images. Cha et al. (2017) came up with a novel approach to detecting cracks in concrete automatically using a convolutional neural network. The model was trained on 40,000 images with a resolution of 256 × 256 pixels. Their model outperformed all previous work on image processing and achieved 98% accuracy. Furthermore, they validated their result on test data of 50 images with a resolution of 5,888 × 3,584 pixels. The result showed that their model could work in real-time situations.

Desilva et al. (2018) proposed a model for the automatic detection of concrete cracks via deep learning and UAVs. A dataset of 3,500 images was taken under different conditions such as daylight intensity, surface finish, and humidity. The dataset was divided into training and testing sets with a ratio of 80/20. They trained VGG-16 on their dataset, and the model performed well. The overall accuracy of this model was 92.27%, which shows the potential of deep learning for the detection of concrete cracks. Kumar et al. (2021) suggested a model for real-time monitoring of cracks in high-rise concrete buildings. A total of 800 RGB images (480 × 480 pixels), collected using UAVs, were fed into the You Only Look Once (YOLOv3) algorithm as input. Images were annotated manually using open-source software. Their model outperformed all previous work, achieving an accuracy of 94.24%, and can process an image in 0.033 s.

All in all, the automatic detection of cracks in concrete has been investigated by many researchers, and deep learning has been used successfully for this purpose. The next subsection will discuss the automation of roof condition assessment using deep learning and other artificial intelligence techniques.

Roof condition assessment

Up to the date of this research, only two papers have been found on the application of deep learning to automatically assess the roof condition of a residential house. Hezaveh et al. (2017) proposed a model for the automatic assessment of hail-damaged roofs. The dataset consisted of images of roofs that were damaged by hail. The researchers used an unmanned aerial vehicle (UAV) to capture high-resolution RGB imagery. Different types of convolutional networks were trained on the images. They found that their model can accurately identify hail damage on residential roofs. Wang et al. (2019) presented a residential roof condition assessment method using deep learning techniques. The proposed method operates on individual roofs and divides the task into two stages: 1) roof segmentation, followed by 2) condition classification of the segmented roof regions. The proposed algorithm yielded promising results and outperformed traditional machine learning methods.

The use of deep learning for roof condition assessment is therefore still lacking and needs more investigation. This paper focuses on missing roof shingles and uses the YOLO v5 algorithm to detect damaged areas on roofs. The following section discusses the research methodology in detail.

Methodology

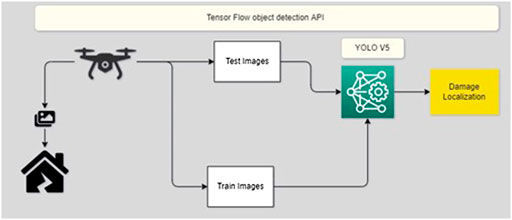

The aim of the study is to develop a deep learning model that can automatically locate and identify missing shingles on a roof. This study explores new possibilities for evaluating and localizing damaged areas on roofs using computer vision. It uses transfer learning, in which a pre-trained network is taken and fine-tuned until the loss function is minimized (Brown and Miller, 2018). This work uses the YOLO v5 algorithm to detect missing shingles. YOLO v5 is a state-of-the-art object detection algorithm that offers high accuracy and fast computation. It divides input images into grids, and each grid cell is responsible for detecting objects. It can effectively extract high- and low-level features of the input images, depending on the quality of the dataset. The model uses supervised learning, in which the input data is provided together with the labeled output. Here, a pool of images is collected and annotated using a labeling tool, and the annotated images are fed into the object detection algorithm (Aguera-Vega et al., 2017).
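The grid idea can be illustrated with a minimal sketch. It follows the original YOLO formulation, in which the cell containing an object's center is responsible for predicting it; YOLO v5 itself adds anchor boxes and several grid scales, which are omitted here. The function name `responsible_cell`, the 13 × 13 grid, and the example coordinates are illustrative assumptions, not values from this study.

```python
def responsible_cell(cx, cy, img_w, img_h, grid=13):
    """Return (row, col) of the grid cell containing the box center (cx, cy), in pixels."""
    if not (0 <= cx < img_w and 0 <= cy < img_h):
        raise ValueError("box center lies outside the image")
    # Scale the center into grid units and truncate to the cell index.
    col = int(cx / img_w * grid)
    row = int(cy / img_h * grid)
    return row, col


# Hypothetical example: a missing-shingle box centered at (320, 96) in a 640 x 640 image.
print(responsible_cell(320, 96, 640, 640))
```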

As shown in Figure 1, high-resolution RGB images of damaged roofs are captured using a UAV and annotated using a labeling toolkit. The dataset is then split into training and test sets, and the model is trained with YOLO v5 using the Python library “TensorFlow Object Detection API”. TensorFlow is an open-source platform developed by Google, with special emphasis on deep learning models. Once the model is trained, its accuracy is checked against the test images for model evaluation (Adams et al., 2014).

The whole process runs on a virtual machine in Google Colab for faster computation. The study is divided into three stages.

Stage no. 1: Data acquisition

Gathering high-quality data is one of the most important factors in training a deep learning model (Alzarrad et al., 2021). The researchers suggest using a drone to capture high-resolution images. The acquired dataset is annotated using an open-source tool (labelImg). The ground truth obtained from the labeled dataset is then fed to the algorithm for training.
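labelImg can save annotations in more than one format, and the paper does not state which was used. Assuming the YOLO text format, each box is stored as a class index followed by the box center and size, all normalized by the image dimensions. The sketch below shows that conversion; the helper name `to_yolo_line`, the class index 0 for a missing shingle, and the box coordinates are hypothetical.

```python
def to_yolo_line(class_id, xmin, ymin, xmax, ymax, img_w, img_h):
    """Convert a pixel-coordinate corner box into one YOLO-format label line."""
    if not (0 <= xmin < xmax <= img_w and 0 <= ymin < ymax <= img_h):
        raise ValueError("box must lie inside the image and have positive size")
    # YOLO labels use the box center and size, normalized to the range [0, 1].
    x_center = (xmin + xmax) / 2 / img_w
    y_center = (ymin + ymax) / 2 / img_h
    width = (xmax - xmin) / img_w
    height = (ymax - ymin) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Hypothetical annotation: class 0 (missing shingle) in a 1000 x 800 image.
print(to_yolo_line(0, 100, 200, 300, 400, 1000, 800))
```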

Stage no. 2: Training the model

In this step, the transfer learning technique is applied, and the dataset is split into training and test sets. A pre-trained YOLO v5 model is used for this purpose. The batch size and number of epochs are then chosen depending on the size of the dataset. The “TensorFlow Object Detection API” is then used for training and validating the model.
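A reproducible split of the image files can be sketched as follows. The split ratio is not given in this section, so the 80/20 ratio, the seed, the file names, and the function name `split_dataset` are all assumptions made for illustration.

```python
import random


def split_dataset(image_names, test_fraction=0.2, seed=42):
    """Shuffle the names reproducibly and return (train, test) lists."""
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    names = sorted(image_names)  # sort first so the result does not depend on input order
    random.Random(seed).shuffle(names)
    n_test = round(len(names) * test_fraction)
    return names[n_test:], names[:n_test]


# Hypothetical file names for a set of 350 images.
images = [f"roof_{i:03d}.jpg" for i in range(350)]
train, test = split_dataset(images)
print(len(train), len(test))
```

Fixing the seed keeps the split stable between runs, so a change in accuracy can be attributed to the model rather than to a different partition of the images.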

Stage no. 3: Model evaluation

In this stage, the model is evaluated based on the accuracy of its predictions. Randomly selected test set images are fed to the model to check whether it accurately localizes the region of interest in each image.
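One common way to check whether a predicted box has localized the region of interest is the intersection over union (IoU) with the annotated box. The paper does not state which criterion was applied, so the 0.5 threshold below is a conventional assumption, and the box coordinates are invented for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Overlap width and height are clamped at zero for disjoint boxes.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


ground_truth = (100, 100, 200, 200)  # hypothetical annotated box
prediction = (150, 100, 250, 200)    # hypothetical predicted box
score = iou(prediction, ground_truth)
print(f"IoU = {score:.3f}, match at 0.5 threshold = {score >= 0.5}")
```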

Case study

To illustrate an implementation of the presented model, a case study is used to verify and validate the model’s robustness. The researchers used a DJI Matrice 300 RTK drone equipped with a Zenmuse H20T camera, which can zoom up to 20x (Figure 2), to collect roof images of two different houses in Hurricane, West Virginia.

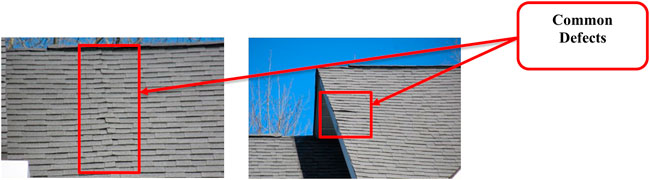

The drone covered each roof by means of manually composing and capturing mainly oblique aerial images. The individual roof inspection images were carefully composed by means of “first person view” (FPV); a facility which presents the drone operator with a real-time view of the scene as it is being captured by the on-board camera. To ensure completeness, a methodical sequence was followed in a flight that generally went around the roof in a counterclockwise fashion. Given the complex design and considerable height of the roof profiles (the highest point of the roof being some 28 ft above ground level) the inspection by air certainly saved considerable costs in time and money, and generally reduced the risk of injury and the potential for disturbance to the occupants. Figure 3 below shows the most common defects, namely cracked or slipped roof tiles, identifiable on the manually composed aerial images.

A total of three hundred and fifty (350) photos were collected from the first house and used to train the model. Two hundred (200) photos were collected from the second house and used to validate the model accuracy. All photos were annotated using an open-source tool (labelImg). To find and remove duplicate and near-duplicate images in the dataset, the researchers used FiftyOne, an open-source ML developer tool. FiftyOne provides a method to compute the uniqueness of every image in a dataset, which results in a score for every image indicating how unique its contents are with respect to all other images. Images with a low uniqueness value are potential duplicates, which the researchers reviewed and removed if needed.
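The idea behind a uniqueness score can be sketched without any external library. The snippet below is a simplified stand-in and not FiftyOne's actual algorithm: it assumes each image has already been reduced to a feature vector (the vectors and file names here are made up) and scores each image by the distance to its nearest neighbor, scaled to the range [0, 1]. Low scores flag likely near-duplicates.

```python
import math


def uniqueness_scores(features):
    """Score each vector by its distance to the nearest other vector, scaled to [0, 1]."""
    if len(features) < 2:
        raise ValueError("need at least two feature vectors")
    nearest = []
    for i, f in enumerate(features):
        dists = [math.dist(f, g) for j, g in enumerate(features) if j != i]
        nearest.append(min(dists))
    top = max(nearest)
    return [d / top if top > 0 else 0.0 for d in nearest]


# Hypothetical two-dimensional features; real embeddings would be much longer.
features = {
    "roof_001.jpg": (0.10, 0.90),
    "roof_002.jpg": (0.11, 0.90),  # nearly identical to roof_001
    "roof_003.jpg": (0.80, 0.20),
}
scores = dict(zip(features, uniqueness_scores(list(features.values()))))
for name, s in sorted(scores.items(), key=lambda kv: kv[1]):
    print(f"{name}: {s:.2f}")
```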

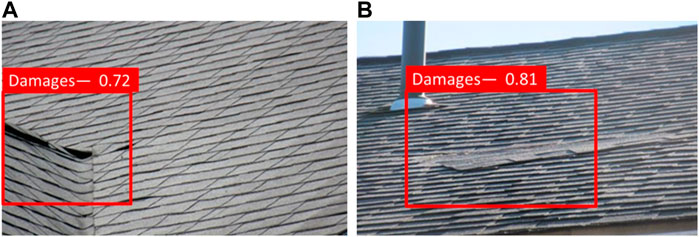

Figure 4A shows a sample of the photos that were used to train the model. Figure 4B shows a sample of the photos that were used to validate the model. The accuracy of the model on the training data was 72%, while the accuracy on the validation photos from the second house was 81%. The model did not pick up 19% of the defects, mainly because of poor photo quality or because the defects were too small. The model did not report any defect where none existed (no false positive detections).

FIGURE 4. (A) (left) shows the accuracy of the model for the training photos, which is 72%. (B) (right) shows the accuracy of the model for validation photos which is 81%.

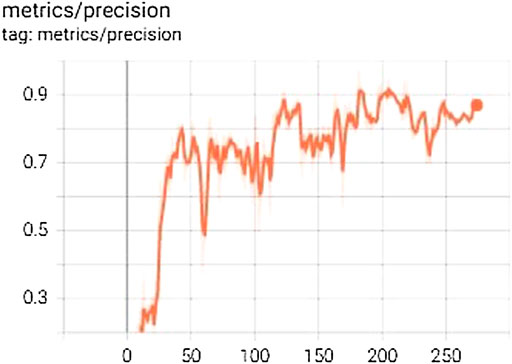

The model detects missing shingles only; no other defects have been investigated at this stage of the research. The researchers tested the model’s accuracy by comparing the model’s predictions with a human’s. A sample of the images used to validate the model was sent to a graduate student, who went through 100 randomly selected photos and categorized them as damaged or undamaged. The researchers then compared the student’s results with the model’s results. The model accuracy was 0.81 (81%), indicating that 81 correct predictions were made out of 100 examples. Further, to evaluate the effectiveness of the model more fully, the researchers examined the model’s precision. Precision is the fraction of positive class predictions that actually belong to the positive class (i.e., of the photos the model assigned to a class, how many truly belong to that class). The model precision was 0.86, as shown in Figure 5.
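For reference, accuracy and precision can be computed from paired labels as in the sketch below. The ten labels are invented purely to exercise the function and are not the study's data; treating “damaged” as the positive class and the function name `accuracy_and_precision` are also assumptions.

```python
def accuracy_and_precision(reference, predicted, positive="damaged"):
    """Compare predicted labels with reference labels for a binary task."""
    if len(reference) != len(predicted) or not reference:
        raise ValueError("label lists must be non-empty and of equal length")
    pairs = list(zip(reference, predicted))
    correct = sum(r == p for r, p in pairs)
    true_pos = sum(r == positive and p == positive for r, p in pairs)
    false_pos = sum(r != positive and p == positive for r, p in pairs)
    accuracy = correct / len(pairs)
    predicted_pos = true_pos + false_pos
    precision = true_pos / predicted_pos if predicted_pos else 0.0
    return accuracy, precision


# Hypothetical labels: a human reviewer versus the model on ten photos.
human = ["damaged"] * 4 + ["undamaged"] * 6
model = ["damaged"] * 3 + ["undamaged", "damaged"] + ["undamaged"] * 5
print(accuracy_and_precision(human, model))
```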

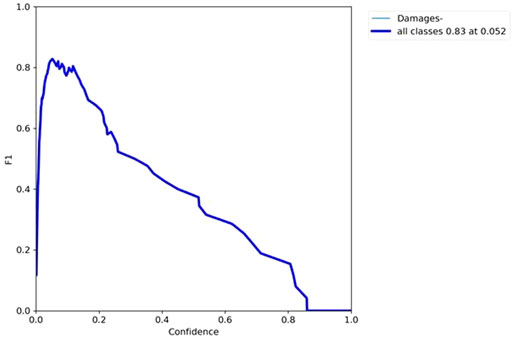

Further, the researchers examined the model’s F1 score. The F1 score is the harmonic mean of precision and recall, so it balances how many of the model’s positive predictions are correct against how many of the actual positives the model finds. An F1 score of 1 is perfect, while a score of 0 indicates a total failure. The F1 score for this model was 0.832, as shown in Figure 6.
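Because the F1 score is the harmonic mean of precision and recall, the recall implied by the reported precision (0.86) and F1 score (0.832) can be backed out. The sketch below does this; the function names are illustrative, and the resulting recall is a derived value rather than a figure reported by the authors.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def recall_from_f1(precision, f1):
    """Solve F1 = 2PR / (P + R) for the recall R."""
    denom = 2 * precision - f1
    if denom <= 0:
        raise ValueError("no valid recall exists for these inputs")
    return f1 * precision / denom


recall = recall_from_f1(0.86, 0.832)
print(f"implied recall = {recall:.3f}")
print(f"check: F1 = {f1_score(0.86, recall):.3f}")
```

The implied recall is roughly 0.81, which is in line with the earlier statement that the model did not pick up about 19% of the defects.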

Conclusion

As important as it is to maintain the integrity of roofs in residential buildings, the routine inspection needed to assess their condition and recommend repairs or replacements is usually a daunting task, particularly for sloped roofs. The inefficiencies and worker injuries experienced in current roof inspection practice expose the weaknesses of traditional inspection methods. The results of this research show that deep learning techniques for processing imagery data have the potential to help mitigate or eliminate these challenges. In this study, the researchers used a transfer learning methodology, implementing YOLOv5 to automatically identify damaged areas, specifically missing shingles, on residential roofs. The model, which was manually tested with a real case study, shows a high level of performance, indicating the potential of this technique for the automatic assessment of roofs. After training and validation, the model was found to have an accuracy of approximately 81% and a precision of 86% despite the limited size of the dataset. Although the relatively small amount of data used in the modeling is a limitation of this study, the accuracy and precision achieved support the methodology adopted and the model developed for roof assessment. The use of this model can significantly benefit roofers and insurance companies in making timely decisions. This study is a stepping stone toward high-end roof damage assessment; with a larger dataset, the model accuracy could be improved. Lastly, for future research, the model can be converted to TensorFlow Lite to allow its use on mobile and embedded devices such as Android phones, iPhones, and the Raspberry Pi.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

Written informed consent was obtained from the individual for the publication of any potentially identifiable images or data included in this article.

Author contributions

All authors listed have made a substantial, direct and intellectual contribution to the work, and approved it for publication.

Funding

The research leading to the publication of this article was partially supported by the Department of Civil Engineering at Marshall University in Huntington, West Virginia, United States of America.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Adams, S., Levitan, M., and Friedland, C. (2014). High resolution imagery collection for postdisaster studies utilizing unmanned aircraft systems (UAS). Photogramm. Eng. remote Sens. 80 (12), 1161–1168. doi:10.14358/pers.80.12.1161

Aguera-Vega, F., Carvajal-Ramirez, F., and Martinez-Carricondo, P. (2017). Accuracy of digital surface models and orthophotos derived from unmanned aerial vehicle photogrammetry. J. Surv. Eng. 143 (2), 04016025. doi:10.1061/(asce)su.1943-5428.0000206

Ale, L., Zhang, N., and Li, L. (2018). “Road damage detection using RetinaNet,” in Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), WA, USA, December 2018.

Alfarrarjeh, A., Trivedi, D., Kim, S. H., and Shahabi, C. (2018). “A deep learning approach for road damage detection from smartphone images,” in Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), WA, USA, December 2018.

Alzarrad, A., Emanuels, C., Imtiaz, M., and Akbar, H. (2021). Automatic assessment of buildings location fitness for solar panels installation using drones and neural network. CivilEng 2 (4), 1052–1064. doi:10.3390/civileng2040056

Aravindkumar, S., Varalakshmi, P., and Alagappan, C. (2021). Automatic road surface crack detection using Deep Learning Techniques. Artif. Intell. Technol. 806, 37–44. doi:10.1007/978-981-16-6448-9_4

Arman, M. S., Hasan, M. M., Sadia, F., Shakir, A. K., Sarker, K., and Himu, F. A. (2020). Detection and classification of road damage using R-CNN and faster R-CNN: A deep learning approach. Cyber Secur. Comput. Sci. 325, 730–741. doi:10.1007/978-3-030-52856-0_58

Bhat, S., Naik, S., Gaonkar, M., Sawant, P., Aswale, S., and Shetgaonkar, P. (2020). “A survey on road crack detection techniques,” in Proceedings of the 2020 International Conference on Emerging Trends in Information Technology and Engineering (ic-ETITE), Vellore, India, February 2020. doi:10.1109/ic-etite47903.2020.67

Bhavya, P., Sharmila, C., Sai Sadhvi, Y., Prasanna, C. M., and Ganesan, V. (2021). “Pothole detection using deep learning,” in Smart technologies in data science and communication (Singapore: Springer), 233–243. doi:10.1007/978-981-16-1773-7_19

Bojarczak, P., and Lesiak, P. (2021). UAVs in rail damage image diagnostics supported by deep-learning networks. Open Eng. 11 (1), 339–348. doi:10.1515/eng-2021-0033

Brown, M., and Miller, K. (2018). The use of unmanned aerial vehicles for sloped roof inspections – considerations and constraints. J. Facil. Manag. Educ. Res. 2 (1), 12–18. doi:10.22361/jfmer/93832

Brown, S., Harris, W., Brooks, D., and Sue Dong, X. (2021). Data bulletin. Fatal injury trends in the construction industry. Available at: https://www.cpwr.com/wp-content/uploads/DataBulletin-February-2021.pdf.

Cha, Y.-J., and Kang, D. (2018). Damage detection with an autonomous UAV using Deep Learning. Sensors Smart Struct. Technol. Civ. Mech. Aerosp. Syst. 10598, 1059804. doi:10.1117/12.2295961

Chen, X., Ye, Y., Zhang, X., and Yu, C. (2020). Bridge damage detection and recognition based on Deep Learning. J. Phys. Conf. Ser. 1626 (1), 012151. doi:10.1088/1742-6596/1626/1/012151

Desilva, W. R., and Lucena, D. S. (2018). Concrete cracks detection based on deep learning image classification. Proceedings 2 (8), 489. doi:10.3390/icem18-05387

Elghaish, F., Talebi, S., Abdellatef, E., Matarneh, S. T., Hosseini, M. R., Wu, S., et al. (2021). Developing a new deep learning CNN model to detect and classify highway cracks. J. Eng. Des. Technol. 20, 993–1014. doi:10.1108/jedt-04-2021-0192

Hezaveh, M. M., Kanan, C., and Salvaggio, C. (2017). “Roof damage assessment using deep learning,” in Proceedings of the 2017 IEEE Applied Imagery Pattern Recognition Workshop (AIPR), DC, USA, October 2017. doi:10.1109/aipr.2017.8457946

Intelligence, I. (2022). Drone market outlook in 2022: Industry growth trends, market stats and forecast. Business Insider. Available at: https://www.businessinsider.com/drone-industry-analysis-market-trends-growth-forecasts.

Kim, B., and Cho, S. (2020). Automated multiple concrete damage detection using instance segmentation deep learning model. Appl. Sci. 10 (22), 8008. doi:10.3390/app10228008

Kumar, P., Batchu, S., Swamy, S. N., and Kota, S. R. (2021). Real-time concrete damage detection using deep learning for high rise structures. IEEE Access 9, 112312–112331. doi:10.1109/access.2021.3102647

Liu, Y., Zhang, X., Zhang, B., and Chen, Z. (2020). “Deep Network for road damage detection,” in Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), GA, USA, December 2020.

Mseddi, W., Sedrine, M. A., and Attia, R. (2021). “Yolov5 based visual localization for Autonomous Vehicles,” in Proceedings of the 2021 29th European Signal Processing Conference (EUSIPCO), Dublin, Ireland, August 2021.

Mubashshira, S., Azam, M. M., and Masudul Ahsan, S. M. (2020). “An unsupervised approach for road surface crack detection,” in Proceedings of the 2020 IEEE Region 10 Symposium (TENSYMP), Dhaka, Bangladesh, June 2020. doi:10.1109/tensymp50017.2020.9231023

Otero, L., Moyou, M., Peter, A., and Otero, C. E. (2018). “Towards a remote sensing system for railroad bridge inspections: A concrete crack detection component,” in Proceedings of the SoutheastCon 2018, FL, USA, April 2018. doi:10.1109/secon.2018.8478856

Pan, Y., Zhang, X., Cervone, G., and Yang, L. (2018). Detection of asphalt pavement potholes and cracks based on the unmanned aerial vehicle multispectral imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 11 (10), 3701–3712. doi:10.1109/jstars.2018.2865528

Ping, P., Yang, X., and Gao, Z. (2020). “A deep learning approach for street pothole detection,” in Proceedings of the 2020 IEEE Sixth International Conference on Big Data Computing Service and Applications (BigDataService), Oxford, UK, August 2020. doi:10.1109/bigdataservice49289.2020.00039

Rajadurai, R.-S., and Kang, S.-T. (2021). Automated vision-based crack detection on concrete surfaces using deep learning. Appl. Sci. 11 (11), 5229. doi:10.3390/app11115229

Seydi, S. T., and Rastiveis, H. (2019). A deep learning framework for roads network damage assessment using post-earthquake lidar data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. XLII-4/W18, 955–961. doi:10.5194/isprs-archives-xlii-4-w18-955-2019

Sizyakin, R., Voronin, V. V., Gapon, N., and Pižurica, A. (2020). A deep learning approach to crack detection on road surfaces. Artif. Intell. Mach. Learn. Def. Appl. II 11543, 1–7. doi:10.1117/12.2574131

Ukhwah, E. N., Yuniarno, E. M., and Suprapto, Y. K. (2019). “Asphalt pavement pothole detection using Deep Learning method based on Yolo Neural Network,” in Proceedings of the 2019 International Seminar on Intelligent Technology and Its Applications (ISITIA), Surabaya, Indonesia, August 2019. doi:10.1109/isitia.2019.8937176

Wan, J., Wang, D., Hoi, S. C., Wu, P., Zhu, J., Zhang, Y., et al. (2014). “Deep learning for content-based image retrieval,” in Proceedings of the 22nd ACM International Conference on Multimedia, Boca Raton, FL, January 2014. doi:10.1145/2647868.2654948

Wang, N., Zhao, X., Zou, Z., Zhao, P., and Qi, F. (2019). Autonomous damage segmentation and measurement of glazed tiles in historic buildings via Deep Learning. Computer-Aided Civ. Infrastructure Eng. 35 (3), 277–291. doi:10.1111/mice.12488

Keywords: roof inspections, unmanned aerial vehicles, artificial intelligence, convolutional neural network, deep learning

Citation: Alzarrad A, Awolusi I, Hatamleh MT and Terreno S (2022) Automatic assessment of roofs conditions using artificial intelligence (AI) and unmanned aerial vehicles (UAVs). Front. Built Environ. 8:1026225. doi: 10.3389/fbuil.2022.1026225

Received: 23 August 2022; Accepted: 03 October 2022; Published: 14 October 2022.

Edited by:

Amir Mahdiyar, University of Science Malaysia, Malaysia

Reviewed by:

Salman Riazi Mehdi Riazi, Universiti Sains Malaysia (USM), Malaysia

Paul Netscher, Independent researcher, Perth, WA, Australia

Copyright © 2022 Alzarrad, Awolusi, Hatamleh and Terreno. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ammar Alzarrad, alzarrad@marshall.edu
