- Intergroup Human-Robot Interaction (iHRI) Lab, Department of Psychology, New Mexico State University, Las Cruces, NM, United States

Past research indicates that people favor, and behave more morally toward, human ingroup than outgroup members. People showed a similar pattern for responses toward robots. However, participants favored ingroup humans more than ingroup robots. In this study, I examine if robot anthropomorphism can decrease differences between humans and robots on ingroup favoritism. This paper presents a 2 × 2 × 2 mixed-design experimental study with participants (N = 81) competing on teams of humans and robots. I examined how people morally behaved toward and perceived players depending on players’ Group Membership (ingroup, outgroup), Agent Type (human, robot), and Robot Anthropomorphism (anthropomorphic, mechanomorphic). Results replicated prior findings that participants favored the ingroup over the outgroup and humans over robots—to the extent that they favored ingroup robots over outgroup humans. This paper also includes novel results indicating that patterns of responses toward humans were more closely mirrored by anthropomorphic than mechanomorphic robots.

Introduction

Robots are becoming increasingly prevalent, not only behind the scenes but also as members of human teams. For example, military teams work with bomb-defusing robots (Carpenter, 2016), factory workers with “social” industrial robots (Sauppé and Mutlu, 2015), and eldercare facilities with companion robots (Wada et al., 2005; Wada and Shibata, 2007; Chang and Šabanović, 2015). Such teaming is critical for advancing our society, because humans and robots have different skillsets, which can complement each other’s expertise to enhance team outcomes (Kahneman and Klein, 2009; Chen and Barnes, 2014; Bradshaw et al., 2017). To best implement human–robot teaming, scholars need guidelines for how human–robot interaction (HRI) typically plays out, so they can plan for typical HRI paradigms.

One strong effect in HRI is that participants favor their ingroup (i.e., teammates) over the outgroup (i.e., opponents), regardless of whether the agents are humans or robots. In several previous HRI studies, participants even assigned “painful” noise blasts to outgroup humans to spare ingroup robots (Fraune et al., 2017b). This was replicated in the United States (Fraune et al., 2020). However, across studies, participants still favored ingroup humans over ingroup robots, though they did not differentiate outgroup humans and outgroup robots. Perhaps most surprisingly, these findings occurred despite the fact that the robots had only a “minimally social” appearance (i.e., a pair of eyes, a head; Figure 1; Matsumoto et al., 2005, 2006), and participants did not view them as particularly anthropomorphic (i.e., having humanlike traits; Fraune et al., 2020).

Figure 1. Mugbot robots (left); mugbot with headphones (right). Reproduced with permission from Fraune et al. (2017b).

In this paper, I examine whether increased robot anthropomorphism leads people to show ingroup favoritism toward robots that more closely resembles their ingroup favoritism toward humans. I also seek to answer the specific questions: Must robots have some anthropomorphic appearance for people to favor robot teammates over human opponents, or would they do the same with mechanomorphic robots (i.e., robots with machine-like traits)? Further, if the robots had more anthropomorphic characteristics, would people no longer favor ingroup humans over ingroup robots?

To answer these questions, participants entered the lab four at a time and were placed into teams of two humans and two robots versus two humans and two robots. The robots varied in anthropomorphism (anthropomorphic, mechanomorphic). Groups played a price-guessing game, with winners assigning noise blasts to all players. Then, participants completed surveys about their perceptions of the players. The results indicate how robot anthropomorphism moderates effects of group membership on survey and behavioral favoritism of ingroup and outgroup humans and robots. These results have moral implications: If participants are willing to give painful noise blasts to humans in order to spare their robot teammates, what else might they be willing to do?

Related Work

People’s moral behavior and perceptions of others are affected by many factors. In this paper, I particularly focus on group membership, agent type, and robot anthropomorphism as factors relevant to human–robot interaction (HRI), and I describe the motivation for this focus below.

Group Membership Affects Anthropomorphism and Positive Responses

Group membership is critical to effective group interaction because people typically view the ingroup (i.e., their own group) more positively than the outgroup (i.e., other groups). People are more likely to cooperate with ingroup members (Tajfel et al., 1971; Turner et al., 1987), favor them morally (Leidner and Castano, 2012), and anthropomorphize them (i.e., humanize them; Haslam et al., 2008). This is a type of intergroup behavior.

Group interaction with robots often takes the form of intergroup behavior similar to that described in social psychology (Fraune et al., 2015a, b, 2017a; Leite et al., 2015). Participants categorize robots as ingroup or outgroup members based on perceived robot gender (Eyssel et al., 2012), nationality (Kuchenbrandt et al., 2013; Correia et al., 2016), helpfulness (Bartneck et al., 2007), and robot use of group-based emotions (e.g., pride in the group; Correia et al., 2018).

The more strongly participants categorize robots as ingroup members, the more likely they are to perceive them as anthropomorphic (Kuchenbrandt et al., 2013) and give them more moral rights (Haslam et al., 2008; Kahn et al., 2011; Haslam and Loughnan, 2014). For example, when military squads socially bonded with bomb-defusing robots, they hesitated to send the robots into dangerous territories (Carpenter, 2016). Thus, robots’ group membership can affect moral decisions about them.

However, group effects in HRI do not directly reflect group effects in social psychology (Chang et al., 2012; Fraune and Šabanović, 2014; Fraune et al., 2019). Below, I examine a divergence in group-related responses toward humans and robots and some possible explanations.

People Differentiate More Within the Ingroup Than the Outgroup

In previous studies, participants differentiated between humans and robots to the extent that they showed different patterns in responses toward group members depending on agent type. Participants differentiated ingroup humans and robots more than outgroup humans and robots. Viewed another way, participants favored ingroup humans over outgroup humans more than they favored ingroup robots over outgroup robots (Fraune et al., 2020). Thus, the effect of group membership was stronger for humans than for robots. These findings can be viewed from the perspective of the outgroup homogeneity effect or from social identity theory, described below.

Outgroup homogeneity effect

In the outgroup homogeneity effect, outgroup members are typically seen as more similar to each other, and ingroup members are typically seen as more diverse (Jones et al., 1981; Judd and Park, 1988; Ackerman et al., 2006). The outgroup homogeneity effect has been shown to occur in competitive contexts, even when there was no difference in the amount of information about exemplars of the ingroup and outgroup (Judd and Park, 1988). Thus, in previous studies, participants perceived more differences in ingroup members than outgroup members (Fraune et al., 2020), accounting for the differentiation between ingroup (but not outgroup) humans and robots.

Social identity theory

According to social identity theory (Turner and Tajfel, 1986), more prototypical group members have more influence over their group (Hogg, 2001) and experience more results of their group membership (Mastro and Kopacz, 2006). In prior studies, participants may have attended to differences between ingroup humans and robots and treated the ingroup robots as less prototypical of the ingroup (Van Knippenberg et al., 1994; Van Knippenberg, 2011). Ingroup robots being perceived as less prototypical ingroup members would account for findings that group effects from psychology extend to interaction with robots, but to a lesser degree than to interaction with humans.

In the prior study, the robots with which participants interacted were far from human. They had only minimally anthropomorphic features (e.g., head, eyes), were less than a foot tall, and had the shape of an upside-down cup. This raises the question: Can manipulating how prototypical a robot is of a human group modify the extent to which robots experience the results of their group membership, that is, ingroup favoritism?

Agent Anthropomorphism Affects Prototypicality and Anthropomorphism

To modify how prototypical a robot group member is in relation to a human group, I selected anthropomorphism. The more anthropomorphic a robot is, the more readily it should fit into human groups. Anthropomorphism also confers other benefits: When people perceive agents as anthropomorphic, they typically behave morally toward them (Epley et al., 2007; Haslam et al., 2008; Waytz et al., 2010). For example, people usually consider it more important to behave morally toward humans than toward bugs. In other cases, when people dehumanize other humans, they treat those humans like they are animals (Haslam and Loughnan, 2014).

Considering others as similar or different from humans and treating them accordingly is typically divided into two factors: (1) Agents high in the ability to Experience emotions (e.g., warmth, fear, joy, suffering) are perceived as deserving more moral rights. People typically consider robots to have low experience (Gray et al., 2007; Wullenkord et al., 2016), leading them to indicate that robots deserve fewer moral rights than humans (Kahn et al., 2011; Lee and Lau, 2011). (2) Agents high in Agency (e.g., civility, rationality) are perceived as having high moral responsibility (Haslam et al., 2008). More complex robots are viewed as more agentic than simple robots (Kahn et al., 2011), but less agentic than adult humans (Gray et al., 2007). Thus, some robots could be perceived to have higher moral responsibility than others (Kahn et al., 2012).

In prior studies, participants treated robots as having less ability to experience than humans, both in their ratings and by assigning them louder “painful” noise blasts (Fraune et al., 2017b, 2020). In this study, I specifically manipulated robot anthropomorphism. To do so, I used anthropomorphic and mechanomorphic robots that varied on appearance dimensions (Phillips et al., 2018), specifically: Body Manipulators (anthropomorphic robots had arms and a torso; mechanomorphic robots had only a circular body), Facial Features (anthropomorphic robots had a head, eyes, and a mouth; mechanomorphic did not), and Mechanical Locomotion (anthropomorphic robots had legs; mechanomorphic robots had wheels). I also manipulated robot behavior: anthropomorphic robots spoke English, and mechanomorphic robots only beeped (Figure 2). In this study, I purposely conflated robot appearance and behavior such that the anthropomorphic robots both looked and behaved in an anthropomorphic manner, and the mechanomorphic robots both looked and behaved in a mechanomorphic manner, as in former studies (Fraune et al., 2015b; de Visser et al., 2016). Researchers use this technique because mismatching form and behavior causes dissonance and reduces acceptance of robots (Goetz et al., 2003).

Ingroup Agents Are More Useful

Another difference between the ingroup and outgroup is that the ingroup is typically cooperative and useful to the team (Wildschut and Insko, 2007). Usefulness relates to more positive emotions and behavior toward the agent (Nass et al., 1996; Reeves and Nass, 1997; Bartneck et al., 2007; Saguy et al., 2015). Therefore, in this study, I measured perceived usefulness as a reason participants may treat ingroup robots favorably, even if they were mechanomorphic (Venkatesh et al., 2003).

Present Study Overview

Overall, people treat robots somewhat, but not entirely, like humans in terms of ingroup favoritism. The more anthropomorphic the robot is, the more likely it should be that people will treat it as a prototypical group member and deserving of ingroup favoritism and moral status—but this has not yet been examined.

Previous studies measured moral behavior toward humans and robots by the volume of painful noise blasts participants assigned to others (Fraune et al., 2017b, 2020). Social psychological researchers have used noise blasts as a measure of aggression (e.g., Twenge et al., 2001). I specifically chose this measure because it violates the moral principle of harm (Leidner and Castano, 2012).

In this paper, I seek to replicate and extend the findings from previous studies. Below, I hypothesize about how people will treat robots. I define “treated better” on numerous measures, including being (a) treated more as part of participants’ group (e.g., rated as more cooperative and less competitive), (b) given softer noise blasts, (c) rated more positively on attitude valences and emotions, (d) anthropomorphized more, and (e) perceived as more useful.

First, I examine four hypotheses, replicating prior studies (Fraune et al., 2017b, 2020):

H1. Ingroup members will be treated better than outgroup members.

H2. Humans will be treated better than robots.

H3. The ingroup–outgroup difference will be larger than the human–robot difference, such that ingroup robots will be treated better than outgroup humans.

H4. Differences in ratings of ingroup humans and robots will be larger than differences in ratings of outgroup humans and robots.

Next, I test the main novel hypothesis of this study:

H5. Anthropomorphic robots will be differentiated less from humans than mechanomorphic robots from humans, across group memberships.

I examine if this relates to a consistent difference across robot anthropomorphism:

H6. Anthropomorphic robots will be treated better than Mechanomorphic robots.

I examine whether prior findings that ingroup robots are treated better than outgroup humans (H3) depend on robot anthropomorphism:

H7. The ingroup–outgroup difference will be larger for anthropomorphic than mechanomorphic robots, such that the difference between ingroup anthropomorphic robots and outgroup humans will be greater than that between ingroup mechanomorphic robots and outgroup humans.

I examine whether prior findings that the difference between ingroup humans and robots is greater than the difference between outgroup humans and robots (H4) depend on robot anthropomorphism:

H8: Differences in ratings of ingroup humans and mechanomorphic robots will be larger than differences in ratings of ingroup humans and anthropomorphic robots, which will be larger than differences in ratings of outgroup humans and robots.

Finally, I examine whether group cohesion, anthropomorphism, and usefulness of agents relate to the volume of noise blasts participants give them.

H9: More perceived group cohesion, anthropomorphism, and usefulness will relate to lower noise blast volume.

H9a. Group cohesion will have the strongest effect for ingroup members.

H9b. Anthropomorphism will have the strongest effect for anthropomorphic robots.

H9c. Usefulness will have the strongest effect for mechanomorphic robots.

Method

Design

In this study, I use a 2 × 2 × 2 mixed design with Group Membership (ingroup, outgroup) and Agent Type (human, robot) manipulated within subjects and Robot Anthropomorphism (anthropomorphic, mechanomorphic) manipulated between subjects. The study was approved by the New Mexico State University Institutional Review Board (IRB).

Participants

Participants were recruited through the psychology participant pool at New Mexico State University. The study contained 81 participants, divided per condition as Anthropomorphic: 45 (61.7% female) and Mechanomorphic: 36 (69.4% female). Participants were on average 19.15 years old, and the majority of participants indicated their race as White (66.3%). The other racial groups were Native American (3.6%), Asian (4.8%), Black (1.2%), or mixed race (12.0%). The majority also identified as Hispanic/Latino (68.7%).

Procedure

Participants took part in the experiment in the Intergroup HRI lab (iHRI Lab) at New Mexico State University. The purpose was described as examining cognitive performance on a price-guessing game. Participants who objected to hearing loud noise blasts would be excused from the session; however, this never occurred.

When participants arrived at the study, they sat together at the table and, at the experimenter’s instruction, introduced themselves to each other by name. The experimenter randomly assigned participants to teams of two humans and two robots. Teammates received colored armbands corresponding to their team (red or blue) and saw who was on their team. The experimenter told teams that they would work together on the task against the other team. The experimenter described the task to participant team members (see Task section) and then brought teams one at a time into the next room to meet their robot teammates (who wore the appropriately colored armbands on their bodies).

After meeting the robots, participants completed the task in separate rooms, and then the computer prompted participants to complete surveys. Finally, they were debriefed, given one credit for their psychology class, and dismissed.

Robots

The robots differed depending on between-subject conditions (Figure 2). In the Anthropomorphic condition, two humanoid Nao robots greeted participants with human speech (e.g., “Hello, I’m Sam. I look forward to working with you”). These robots sat on a table near a computer so they were not far below human eye level. In the Mechanomorphic condition, iRobot Creates (with their “Clean” button covered) beeped at participants, and the experimenter told participants that they would be working with these robots. These robots sat on the ground, where they would typically drive during normal operation.

The experimenter asked human teammates to introduce themselves by name to the robots. Participants were told that, because these robots’ purpose included interaction with the real world, they hear in a similar way to humans, and that the noise blasts are comparably aversive to humans and robots. Then, the experimenter led participants to separate rooms for the task, so they had no more communication with other players.

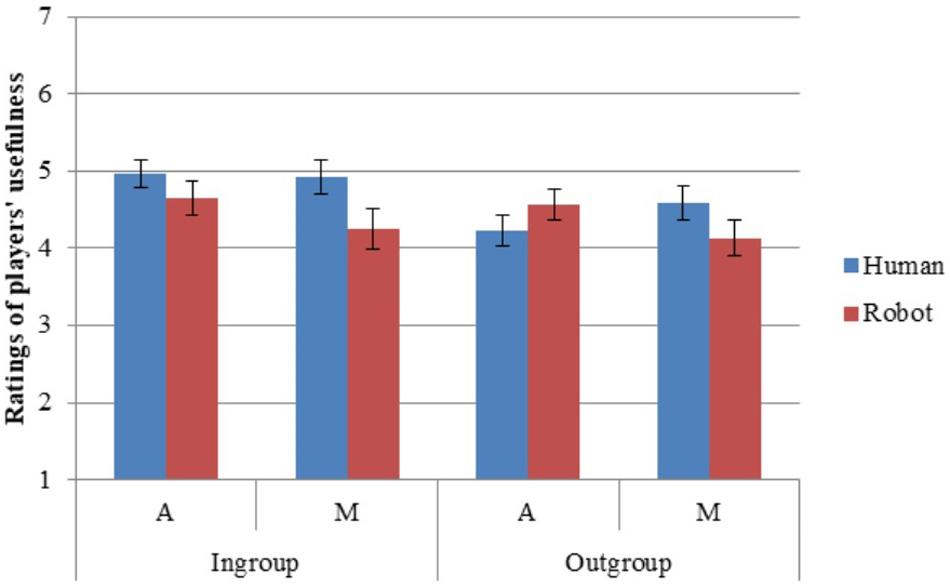

Task

Participants played a price-guessing game programmed using Java in Eclipse. A computer screen displayed an item (e.g., couch, watch), and participants guessed its price. They were told that teammates’ answers were averaged into a final team answer. [This made team members interdependent, because prior research has indicated that interdependence is an important part of teams (e.g., Insko et al., 2013).] The team that came closest to the correct price on a given round won that round, and one member of the winning team was “randomly selected” to assign noise blasts of different levels to all eight players (including themselves) before the next round. The game included 20 rounds of the main game and one final round. For each round, participants saw the average guess for each team, the actual price, which team won, and whether they were the player who would select the volume of noise blasts for that round.
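The round logic that participants were told governed the game can be sketched as follows. This is only an illustration of the cover story (the actual game was rigged, as described next), written in Python rather than the original Java; the function names, the red/blue labels, and the tie rule are assumptions for illustration.

```python
from statistics import mean


def team_answer(guesses):
    """Average teammates' guesses into one team answer, as participants were told."""
    return mean(guesses)


def round_winner(red_guesses, blue_guesses, actual_price):
    """Return 'red' or 'blue' for the team whose averaged guess is closer.

    Tie handling is an assumption (the text does not describe it): a tie returns None.
    """
    red_error = abs(team_answer(red_guesses) - actual_price)
    blue_error = abs(team_answer(blue_guesses) - actual_price)
    if red_error < blue_error:
        return "red"
    if blue_error < red_error:
        return "blue"
    return None


# Hypothetical round: four guesses per team (two humans, two robots) for a $500 item.
print(round_winner([450, 520, 480, 600], [300, 700, 900, 350], 500))  # red
```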

In reality, the game was rigged such that participants actually played on their own, with other players’ responses simulated. Participants’ teams won 50% of the time and each participant was “randomly assigned” to give noise blasts four times.
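Only the totals of the rigged schedule are reported (20 main rounds, a 50% win rate, and four turns assigning noise blasts). The sketch below is a hypothetical reconstruction that satisfies those totals. Random ordering of rounds is an assumption, as is the constant names. It relies on the stated rule that only a member of the winning team assigns noise blasts.

```python
import random

N_ROUNDS = 20            # main rounds described in the text
N_WINS = N_ROUNDS // 2   # participants' team won 50% of rounds
N_ASSIGNS = 4            # times the participant was "randomly selected"


def rigged_schedule(rng):
    """Return a list of (team_won, participant_assigns) flags, one per round.

    Hypothetical: the text gives only totals, so rounds are shuffled at random here.
    """
    rounds = list(range(N_ROUNDS))
    win_rounds = set(rng.sample(rounds, N_WINS))
    # Only a member of the winning team assigns noise, so pick from won rounds.
    assign_rounds = set(rng.sample(sorted(win_rounds), N_ASSIGNS))
    return [(r in win_rounds, r in assign_rounds) for r in rounds]


schedule = rigged_schedule(random.Random(1))
print(sum(w for w, _ in schedule), sum(a for _, a in schedule))  # 10 4
```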

In this study, teammate responses on the task were not attributed to an individual so participants could not learn which teammates behaved differently than they did and could not treat them differently based on behavior.

Noise Blast Measure of Moral Behavior

After each round, one player assigned noise blasts to all eight players. The experimenter described the noise blast as just another part of the game. This was to avoid influencing participant use of the noise blast. In reality, the noise blast was used as a measure of moral behavior (i.e., violating the ethical principle of harm; Leidner and Castano, 2012), as in other studies (e.g., Fraune et al., 2017b, 2020; Twenge et al., 2001).

The possible noise blasts were described as ranging in volume from 80 to 135 dB during all main rounds and from 110 to 165 dB during the final round, with 5-dB intervals. Each level of noise could be assigned to only one player to prevent participants from assigning everyone the same volume (e.g., to be fair; Figure 3). Each player (including the participant) was assigned one noise level per round. Participants viewed a chart relating different noise levels to known sounds (e.g., 80 dB = normal piano practice, 100 dB = piano fortissimo, 120 dB = threshold of pain, 135 dB = live rock band). In reality, participants never received noise blasts above 100 dB in order to protect their hearing. Also, the final round never arrived. Instead, participants were interrupted to complete surveys while they thought they were still playing the game. In this way, participants completed surveys while they were still part of a team with the robots and other humans.
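The allocation rule above can be written as a small validity check. From 80 to 135 dB in 5-dB steps there are 12 levels for 8 players, so every allocation leaves four levels unused and cannot give everyone the same volume. The function name and the "Name 1–8" labels are placeholders, loosely following Figure 3.

```python
MAIN_LEVELS = list(range(80, 136, 5))    # 80, 85, ..., 135 dB: 12 levels
FINAL_LEVELS = list(range(110, 166, 5))  # 110, 115, ..., 165 dB: final round
N_PLAYERS = 8


def is_valid_assignment(assignment, levels=MAIN_LEVELS):
    """Check one round: all 8 players get one allowed level, and no level repeats."""
    volumes = list(assignment.values())
    return (
        len(assignment) == N_PLAYERS
        and all(v in levels for v in volumes)
        and len(set(volumes)) == len(volumes)
    )


players = [f"Name {i}" for i in range(1, N_PLAYERS + 1)]  # placeholder labels
print(is_valid_assignment(dict.fromkeys(players, 100)))       # False: same level for all
print(is_valid_assignment(dict(zip(players, MAIN_LEVELS))))  # True: 80-115 dB, all distinct
```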

Figure 3. Screen for assigning noise blasts to Names 1–8 (actual participant and robot names were used during the experiment). Reproduced with permission from Fraune et al. (2017b).

During the noise blast phase, only the participant assigning noise volume could see what volumes were assigned. Other participants could not see who was assigning noise volume or what volume players received, only whether they won or lost that round. In this way, participants could not be tempted to conform to other players’ behavior. In reality, the volumes assigned by everyone other than the participant were randomized by the computer program, but noise blasts from ingroup members were softer on average (0.85 times the outgroup noise blast) than noise blasts from outgroup members, to simulate how teams often favor the ingroup. [In our previous study, participants delivered similarly softer (approximately 0.80 times) noise blasts to ingroup than outgroup members before they heard any noise blast from any other player (Fraune et al., 2017b).] In a prior study, receiving softer noise blasts from ingroup than from outgroup members did not change how participants allocated noise blasts to ingroup versus outgroup members, comparing allocations made before and after participants heard noise blasts assigned by others (Fraune et al., 2017b).
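The text does not specify how the program produced randomized volumes that still averaged about 0.85 times softer for the ingroup. One possible approach is rejection sampling. Each simulated assigner draws eight distinct levels, and the draw is kept only if the assigner's own team averages near 0.85 times the other team. This enforces the ratio per round, which is stricter than "on average". The approach, the tolerance, the function name, and the player labels are all assumptions.

```python
import random
from statistics import mean

LEVELS = list(range(80, 136, 5))  # 80-135 dB in 5-dB steps
TARGET_RATIO = 0.85               # ingroup volume / outgroup volume, from the text


def simulate_allocation(assigner_team, other_team, rng, tol=0.03, max_tries=10_000):
    """Hypothetical simulated player's allocation via rejection sampling.

    Draws distinct levels for all eight players and keeps the draw only if the
    assigner's own team (its ingroup) averages within `tol` of 0.85x the other team.
    """
    players = assigner_team + other_team
    for _ in range(max_tries):
        volumes = rng.sample(LEVELS, len(players))  # distinct levels, one per player
        allocation = dict(zip(players, volumes))
        ratio = (mean(allocation[p] for p in assigner_team)
                 / mean(allocation[p] for p in other_team))
        if abs(ratio - TARGET_RATIO) <= tol:
            return allocation
    raise RuntimeError("no allocation found within tolerance")


red = ["red human 1", "red human 2", "red robot 1", "red robot 2"]
blue = ["blue human 1", "blue human 2", "blue robot 1", "blue robot 2"]
allocation = simulate_allocation(red, blue, random.Random(0))
print(allocation)
```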

Measures

Noise blasts: I used noise blast volume to measure moral behavior. I averaged noise blast volume to create one measure for each target (ingroup humans, ingroup robots, outgroup humans, outgroup robots). In a prior study, noise blast volume given to self and to the other ingroup human did not significantly differ (Fraune et al., 2017b).
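The averaging step can be sketched as follows. Each participant's allocations are pooled into one mean volume per target category. Counting the participant's own volume as "ingroup human" is an assumption, loosely motivated by the prior finding that self and other-ingroup-human volumes did not differ. The data structure and player labels are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def mean_volume_by_target(rounds, target_of):
    """Average the volumes one participant assigned, per target category.

    rounds: list of dicts mapping player -> dB assigned in that round.
    target_of: maps each player to 'igH', 'igR', 'ogH', or 'ogR'.
    """
    by_target = defaultdict(list)
    for allocation in rounds:
        for player, volume in allocation.items():
            by_target[target_of[player]].append(volume)
    return {target: mean(vols) for target, vols in by_target.items()}


target_of = {
    "you": "igH", "human teammate": "igH",           # self counted as igH (assumption)
    "robot teammate 1": "igR", "robot teammate 2": "igR",
    "human opponent 1": "ogH", "human opponent 2": "ogH",
    "robot opponent 1": "ogR", "robot opponent 2": "ogR",
}
players = list(target_of)
rounds = [
    dict(zip(players, [80, 85, 90, 95, 100, 105, 110, 115])),
    dict(zip(players, [85, 80, 100, 90, 95, 120, 135, 125])),
]
print(mean_volume_by_target(rounds, target_of))
```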

Surveys: Unless otherwise stated, participants rated survey items on a Likert scale from 1 (Strongly Disagree) to 7 (Strongly Agree).

Agents’ noise perceptions: Participants responded to two questions (analyzed separately), indicating if they believed that the players “experienced pain from the noise blasts” and “did not like the noise” (Fraune et al., 2020).

Group cohesion: Participants responded to three questions (analyzed separately), indicating how much they felt cooperative with, competitive with, and part of a group with each player (Fraune et al., 2017b).

Attitude valence and emotions: Participants responded to questions (analyzed together) about their attitude valence toward each player on a bipolar scale from 1 (Dislike) to 7 (Like). They also rated how they felt toward the players on 12 emotions (e.g., happiness, fear; Cottrell and Neuberg, 2005).

Anthropomorphism: To measure anthropomorphism, I examined agency (five items: can engage in a great deal of thought, has goals, is capable of doing things on purpose, is capable of planned action, is highly conscious) and experience (four items: can experience pain, can experience pleasure, has complex feelings, is capable of emotion; Kozak et al., 2006). Participants rated these on a scale from 1 (Not at all) to 4 (Average human) to 7 (Very much). I anchored ratings at “average human” to prevent participants from using shifting standards (Biernat and Manis, 1994) when rating humans versus robots, as recommended in prior research (Fraune et al., 2017b, 2020).

Usefulness: Participants rated six items about how useful it was to work with each player (e.g., “Working with this player in tasks like this would enable me to accomplish tasks more quickly”). These items were modified from a prior scale (Venkatesh et al., 2003) to apply specifically to players in the game.

Demographics: Participants reported gender identity, age, major, and prior experience with robots and computers.

Results

Data were analyzed in SPSS 25. Values of p < 0.050 were considered statistically significant, and all significant findings are reported below.

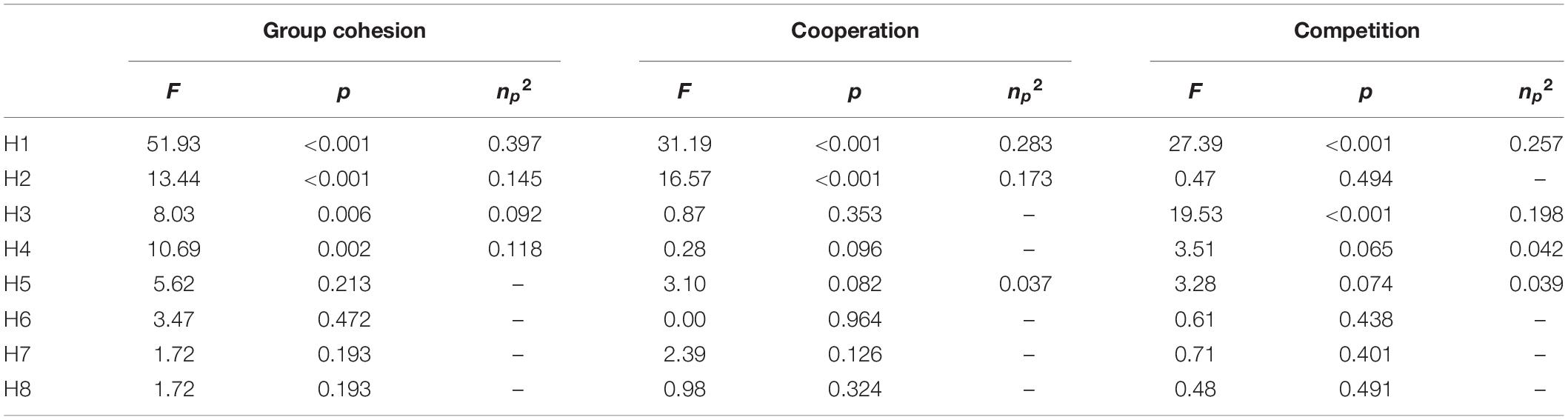

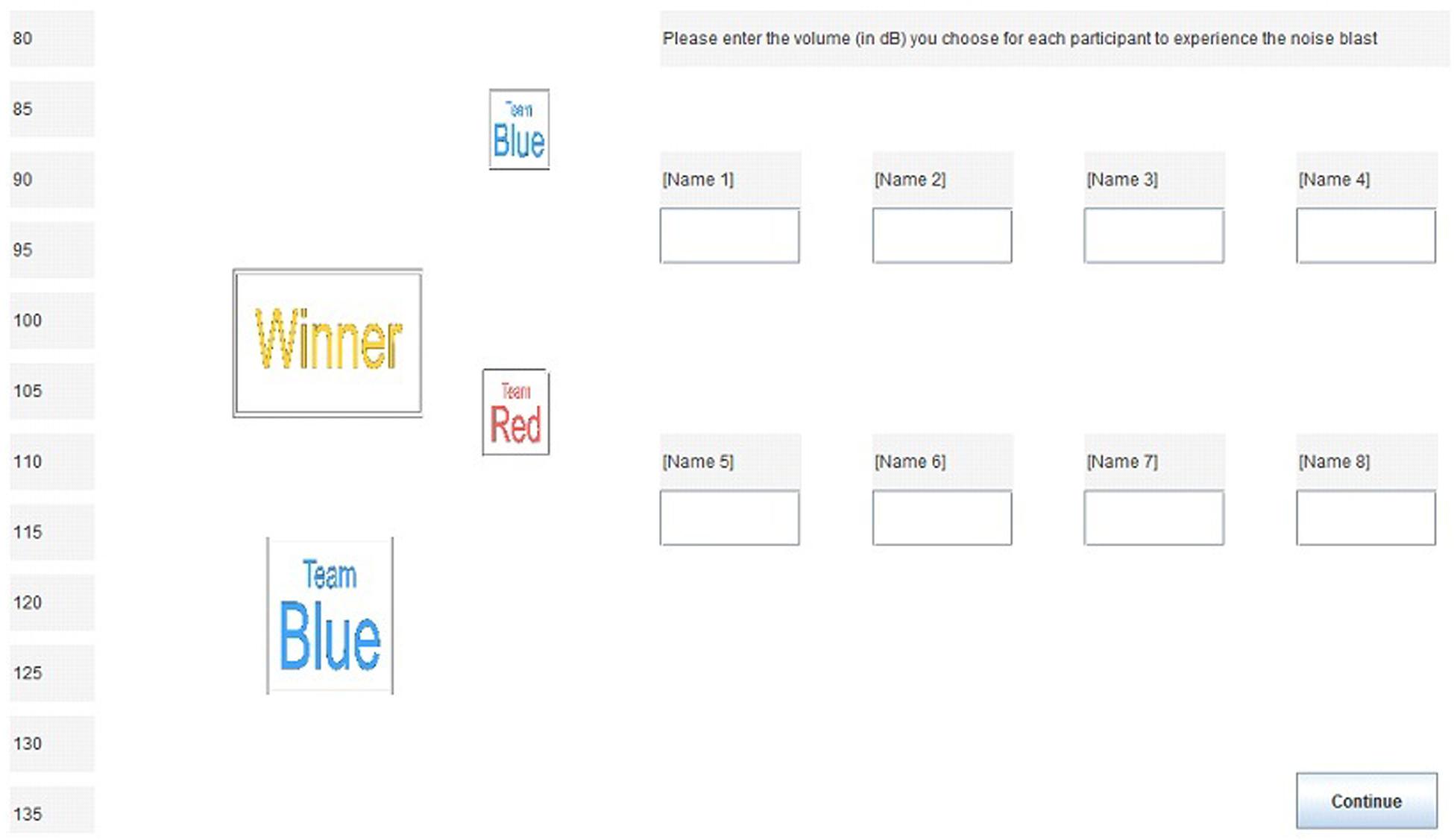

I ran a series of 2 (Group Membership: ingroup/outgroup) × 2 (Agent Type: human/robot) × 2 (Robot Anthropomorphism: anthropomorphic/mechanomorphic) mixed ANOVAs, with the first two variables being within-participants and the last being between-participants. With these tests, I examined if:

H1. Ingroup members were treated better than outgroup members (main effect of group membership).

H2. Humans were treated better than robots (main effect of agent type).

H6. Anthropomorphic robots were treated better than mechanomorphic robots (main effect of Anthropomorphism).

Some two-way interactions occurred. To examine these according to the hypotheses, I used 2 (Player: ingroup robot [igR]/outgroup human [ogH]) × 2 (Robot Anthropomorphism: anthropomorphic/mechanomorphic) ANOVAs to examine if:

H3. Ingroup robots were treated better than outgroup humans (main effect of player).

H7. The difference between ingroup anthropomorphic robots and outgroup humans was greater than ingroup mechanomorphic robots and outgroup humans (interaction effect).
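Because Player has only two levels, each 2 × 2 mixed ANOVA above can be expressed through per-participant difference scores (igR − ogH). The interaction F (H7) equals the squared pooled-variance t from comparing those differences between the two anthropomorphism conditions. Assuming Type III sums of squares (the SPSS GLM default), the Player main effect (H3) tests whether the unweighted mean of the two condition means of the differences is zero. The sketch below computes both F statistics in plain Python. It is an illustration, not the SPSS procedure used in the paper. The function names and example numbers are hypothetical. p-values would come from the F(1, N − 2) distribution, which is not computed here.

```python
from math import sqrt
from statistics import mean, variance


def pooled_variance(diffs_a, diffs_b):
    """Pooled within-condition variance of the difference scores."""
    n_a, n_b = len(diffs_a), len(diffs_b)
    return ((n_a - 1) * variance(diffs_a) + (n_b - 1) * variance(diffs_b)) / (n_a + n_b - 2)


def interaction_f(diffs_a, diffs_b):
    """Player x Anthropomorphism interaction: F(1, N - 2) = t^2 of a pooled t-test."""
    n_a, n_b = len(diffs_a), len(diffs_b)
    se = sqrt(pooled_variance(diffs_a, diffs_b) * (1 / n_a + 1 / n_b))
    t = (mean(diffs_a) - mean(diffs_b)) / se
    return t * t, (1, n_a + n_b - 2)


def player_main_effect_f(diffs_a, diffs_b):
    """Main effect of Player using unweighted condition means (Type III style)."""
    n_a, n_b = len(diffs_a), len(diffs_b)
    se = sqrt(pooled_variance(diffs_a, diffs_b) * (1 / n_a + 1 / n_b)) / 2
    t = (mean(diffs_a) + mean(diffs_b)) / 2 / se
    return t * t, (1, n_a + n_b - 2)


# Hypothetical igR - ogH difference scores in each condition.
anthropomorphic = [1, 2, 3]
mechanomorphic = [4, 5, 6]
print(interaction_f(anthropomorphic, mechanomorphic))
print(player_main_effect_f(anthropomorphic, mechanomorphic))
```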

I calculated ingroup Group Differentiation as the difference between ratings of ingroup humans and ingroup robots (igH − igR) and outgroup Group Differentiation as the difference between ratings of outgroup humans and outgroup robots (ogH − ogR).
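For a single participant and a single measure, the two Group Differentiation scores are simple subtractions. The dictionary keys follow the abbreviations above, and the ratings are hypothetical. Under H4, the first value would tend to exceed the second.

```python
def group_differentiation(ratings):
    """Return (ingroup, outgroup) Group Differentiation for one participant.

    ratings: one measure's scores keyed 'igH', 'igR', 'ogH', 'ogR'.
    """
    ingroup = ratings["igH"] - ratings["igR"]
    outgroup = ratings["ogH"] - ratings["ogR"]
    return ingroup, outgroup


# Hypothetical 7-point ratings from one participant.
print(group_differentiation({"igH": 6.0, "igR": 4.5, "ogH": 3.0, "ogR": 2.5}))  # (1.5, 0.5)
```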

There has been contention over the use of difference scores such as those calculated above (Peter et al., 1993; Edwards, 2001; Edwards and Schmitt, 2002). The main concerns are as follows: (1) for the construct examined, one of the variables may need to be weighted more than the other, which the method of difference scores cannot account for; (2) the findings may not be replicable, partly because (3) the reliability of a difference score is typically lower than the reliability of each component score (Peter et al., 1993). To address the first concern, I operationally define Group Differentiation as the linear difference between how people respond to humans versus robots within each group (ingroup, outgroup). To address the second concern, prior research has already shown the replicability of findings with this definition of Group Differentiation (Fraune et al., 2017b, 2020). To address the third concern, I report Cronbach’s alpha for the difference scores (denoted as αdiff), all of which are high (above 0.8), indicating that reliability is not a problem for the difference scores in this study. With these main concerns addressed, difference scores are appropriate in this context.
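Cronbach's alpha is α = k/(k − 1) × (1 − Σ item variances / variance of the summed scale). The text does not spell out how αdiff was computed. The second function assumes it is alpha applied to item-level difference scores (human item minus robot item). That assumption, along with the function names, is illustrative only.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha; rows are participants, columns are items."""
    k = len(item_scores[0])
    item_vars = [variance(col) for col in zip(*item_scores)]
    total_var = variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def alpha_of_differences(human_items, robot_items):
    """Alpha on item-level difference scores (human - robot).

    Assumption: the paper does not define alpha_diff at this level of detail.
    """
    diffs = [[h - r for h, r in zip(h_row, r_row)]
             for h_row, r_row in zip(human_items, robot_items)]
    return cronbach_alpha(diffs)


print(round(cronbach_alpha([[1, 2], [2, 1], [3, 3]]), 3))  # 0.667
```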

I used 2 (Group Differentiation: ingroup differentiation/outgroup differentiation) × 2 (Robot Anthropomorphism: anthropomorphic/mechanomorphic) ANOVAs to examine if:

H4. Differences in ratings of ingroup humans and ingroup robots were larger than differences in ratings of outgroup humans and outgroup robots (main effect of Group Differentiation).

H5. Mechanomorphic robots were differentiated more from humans than anthropomorphic robots from humans (main effect of Anthropomorphism).

H8: Differences in ratings of ingroup humans and mechanomorphic robots were larger than differences of ingroup humans and anthropomorphic robots, which were larger than differences in ratings of outgroup humans and robots (interaction effect).

I used post hoc t-tests to distinguish differences during interaction effects. Descriptive and inferential statistics are reported in tables and figures, and post hoc t-test results are reported in the text.
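Post hoc comparisons between two targets rated by the same participants (for example, ingroup robots vs. outgroup humans within one condition) can use a paired-samples t-test. The sketch below computes the t statistic and degrees of freedom. Comparisons across the two anthropomorphism conditions would instead need an independent-samples test. The text does not say which correction, if any, was applied. The function name and data are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-samples t statistic and df for two ratings from the same participants."""
    d = [a - b for a, b in zip(x, y)]
    n = len(d)
    return mean(d) / (stdev(d) / sqrt(n)), n - 1


# Hypothetical ratings of two targets by the same four participants.
t, df = paired_t([5, 6, 7, 8], [4, 4, 6, 5])
print(t, df)
```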

Finally, I used linear regression to examine the effects of group cohesion, anthropomorphism, and usefulness on the volume of noise blasts participants gave players. I examined this separately for ingroup and outgroup members, humans and robots, and anthropomorphic and mechanomorphic robots to determine if:

H9: More perceived group cohesion, anthropomorphism, and usefulness related to lower noise blast volume.

H9a. Group cohesion had the strongest effect for ingroup members.

H9b. Anthropomorphism had the strongest effect for anthropomorphic robots.

H9c. Usefulness had the strongest effect for mechanomorphic robots.
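A minimal ordinary least squares sketch for one subset of players is shown below. It regresses noise-blast volume on cohesion, anthropomorphism, and usefulness. Negative slopes would be consistent with H9. It solves the normal equations with Gaussian elimination in plain Python. It is an illustration, not the SPSS procedure used in the paper, and it omits standard errors and p-values. The predictor values are invented so that the true coefficients are known.

```python
def solve(a, b):
    """Solve a small square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b_i] for row, b_i in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def ols(X, y):
    """OLS coefficients [intercept, b1, ...] via the normal equations (X'X) b = X'y."""
    rows = [[1.0] + list(r) for r in X]  # prepend an intercept column
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    return solve(xtx, xty)


# Hypothetical predictors per rating: (cohesion, anthropomorphism, usefulness).
X = [[1, 2, 3], [2, 1, 5], [3, 4, 2], [4, 3, 6], [5, 5, 1], [6, 2, 4]]
y = [120 - 3 * c - 2 * a - 1 * u for c, a, u in X]  # invented exact relationship
print([round(b, 6) for b in ols(X, y)])  # [120.0, -3.0, -2.0, -1.0]
```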

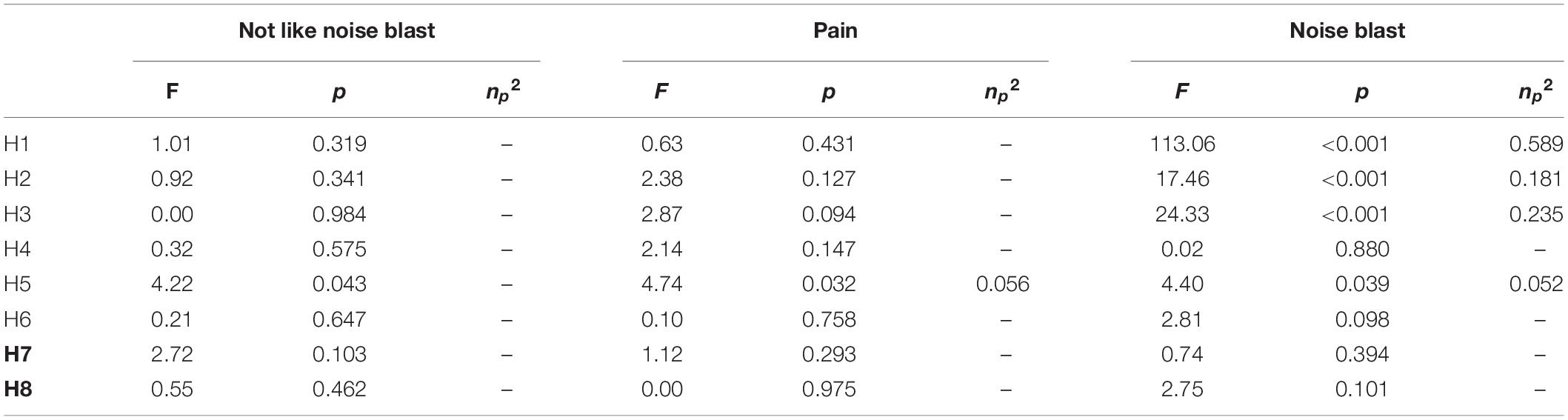

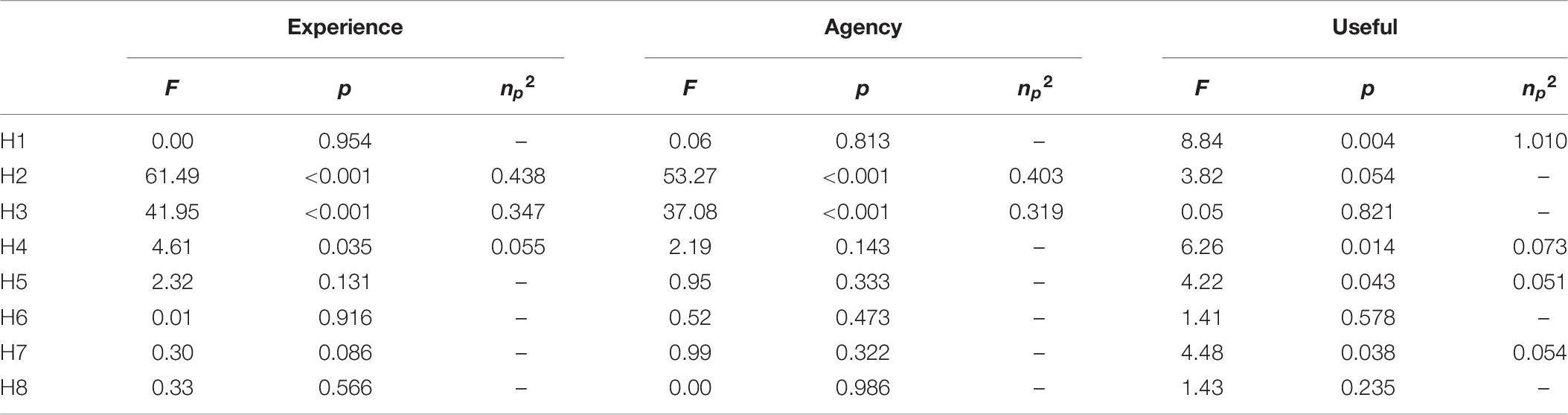

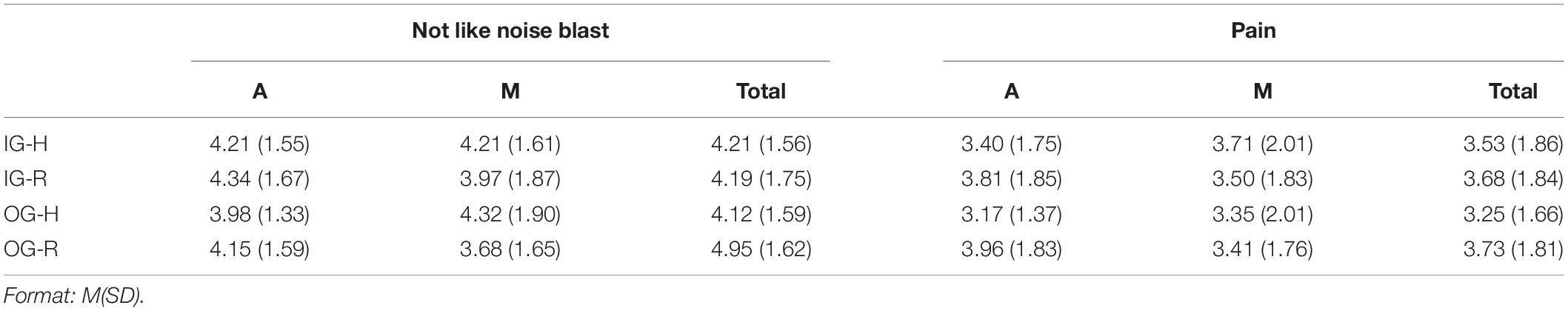

Pain Check

Participants did not rate agents differently overall on disliking the noise blasts or experiencing pain from them (Tables 1, 2). However, participants differentiated anthropomorphic robots from humans less than mechanomorphic robots from humans on disliking the noise blasts and experiencing pain from them (H5). In fact, they rated mechanomorphic robots as experiencing less pain from, and disliking the noise blasts less than, humans, but rated anthropomorphic robots as experiencing more pain from, and disliking the noise blasts more than, humans.

Table 2. Ratings of ingroup (IG) and outgroup (OG) humans (H), and robots (R) that are anthropomorphic (A) and mechanomorphic (M) on pain.

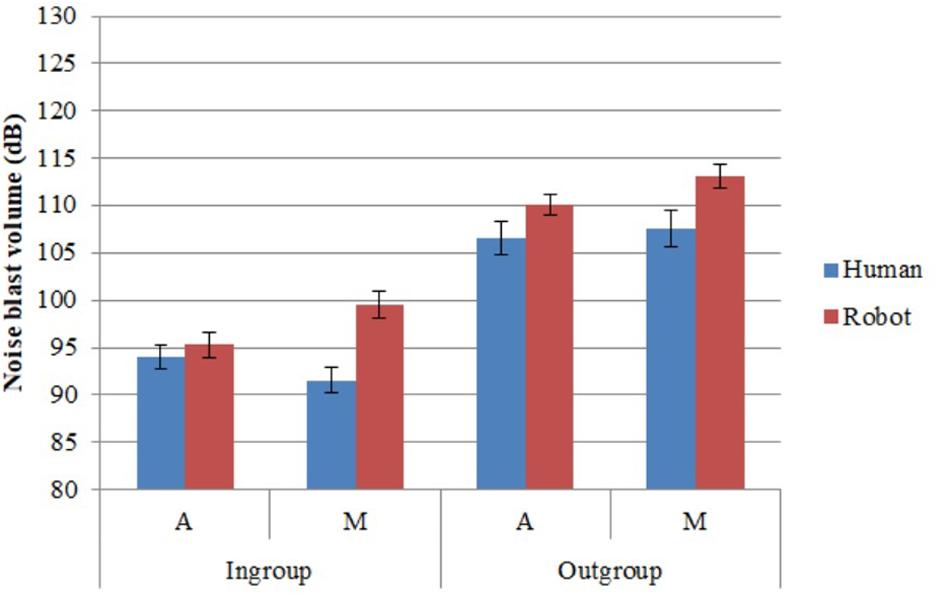

Noise Blast Volume

Participants assigned softer noise blasts to ingroup than outgroup members (H1) and humans than robots (H2; Table 1). They assigned softer noise blasts to ingroup robots than outgroup humans (H3). They differentiated anthropomorphic robots less than mechanomorphic robots from humans on noise blasts (H5; Figure 4).

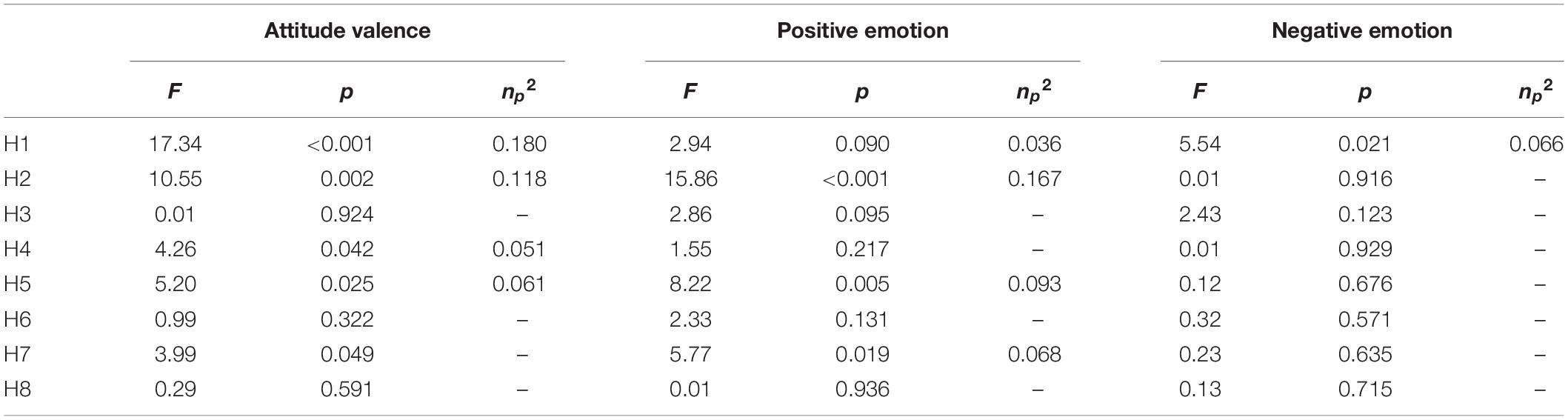

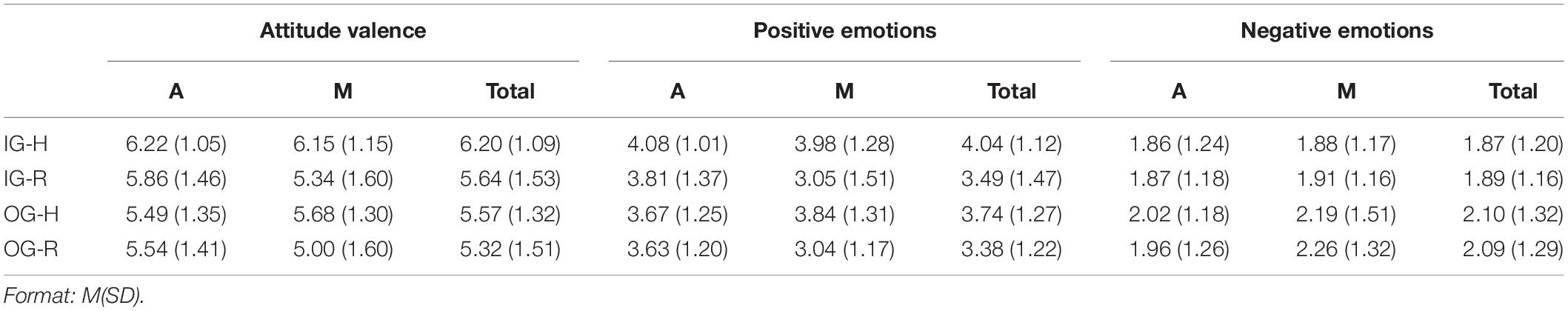

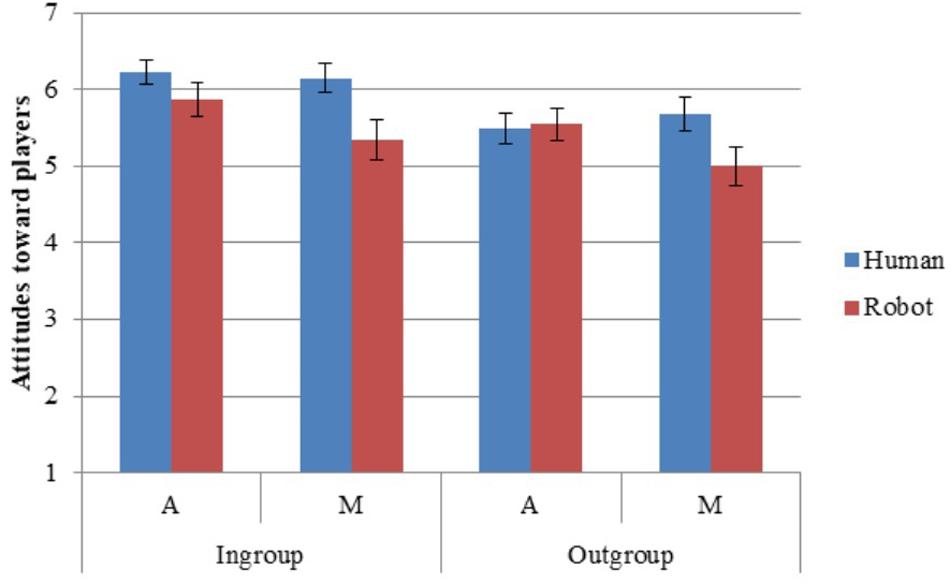

Attitude Valence and Emotions

Cronbach’s alpha was high for attitude valence (α = 0.984; αdiff = 0.983). Factor analysis indicated that, for each group (ingroup humans, ingroup robots, outgroup humans, outgroup robots), emotions were divided into two separate scales: Positive (respect, happiness, security, sympathy, excitement; α = 0.816; αdiff = 0.845) and Negative (disgust, fear, pity, anger, anxiety, sadness, unease; α = 0.953; αdiff = 0.895).

Participants had more positive attitude valence and fewer negative emotions toward the ingroup than the outgroup (H1; Tables 3, 4). They had more positive attitudes and emotions toward humans than robots (H2). They showed more ingroup than outgroup differentiation in attitude valence; that is, the tendency to rate humans more positively than robots was more pronounced for the ingroup than for the outgroup (H4). They differentiated anthropomorphic robots from humans less than mechanomorphic robots from humans on attitude valence and positive emotions (H5). Although there was no main effect of Player (i.e., participants did not favor ingroup robots over outgroup humans overall), there was an interaction effect between Player and Anthropomorphism on positive emotions (partly supporting H7; Table 3; Figure 5): participants rated ingroup mechanomorphic robots less positively than outgroup humans (mechanomorphic condition p = 0.044; anthropomorphic condition p = 0.047) and than ingroup anthropomorphic robots (p = 0.022).

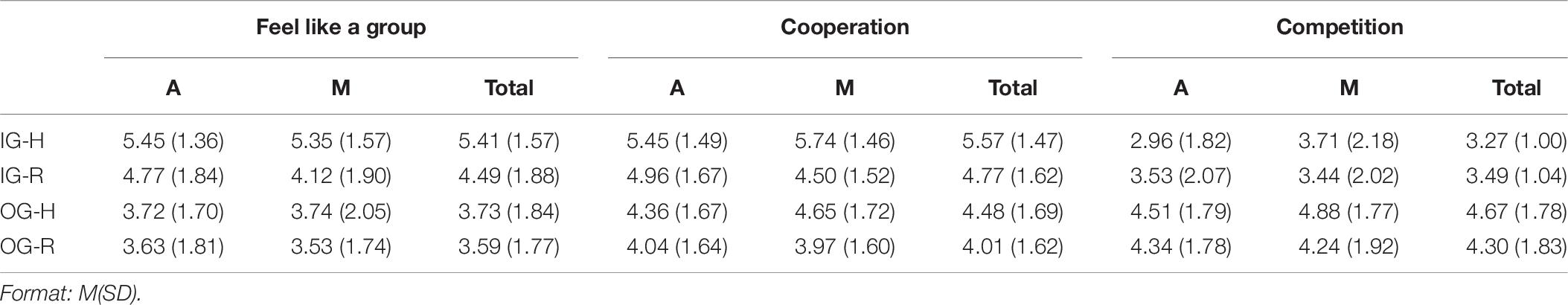

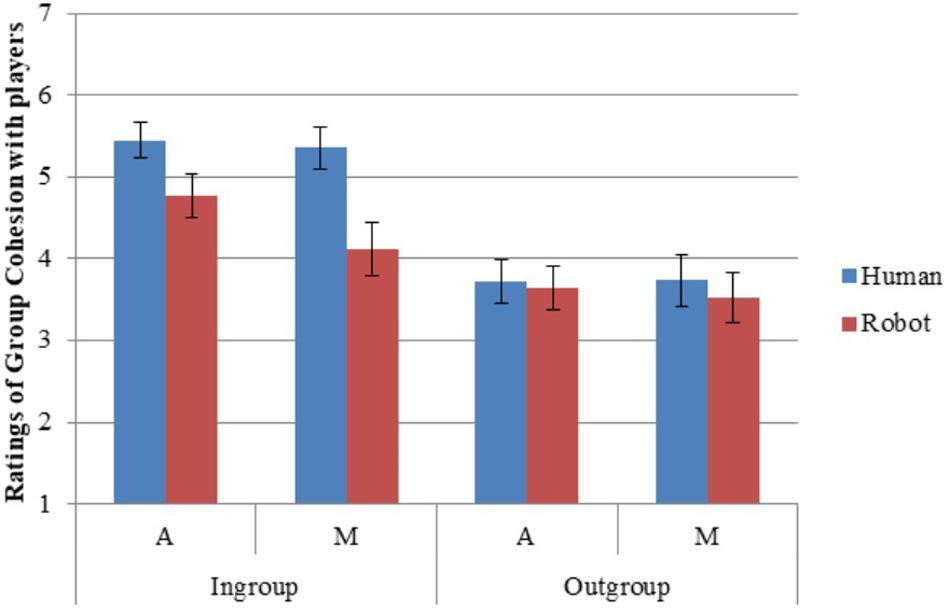

Group Cohesion

Participants indicated more feelings of group cohesion and cooperation, and less competition, with the ingroup than with the outgroup (H1; Tables 5, 6). They indicated more group cohesion and cooperation with humans than with robots (H2). They rated feeling more like part of the group and less competitive with ingroup robots than with outgroup humans (H3). They showed more ingroup than outgroup differentiation for group cohesion—that is, they indicated feeling more similar in cohesion between humans and robots in the ingroup than between humans and robots in the outgroup (H4; Figure 6).
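The ingroup-versus-outgroup differentiation comparison (H4) can be expressed as a within-participant interaction contrast: the human-robot gap for the ingroup minus the human-robot gap for the outgroup. The Python sketch below is a hedged illustration, not the reported analysis; the ratings and function names are hypothetical, and it ignores the anthropomorphism factor. For a fully within-subjects 2 × 2 design, testing the mean contrast against zero with a one-sample t is equivalent to the Group Membership × Agent Type interaction test (F = t²).

```python
from math import sqrt
from statistics import mean, stdev


def interaction_contrast(ig_human, ig_robot, og_human, og_robot):
    """Hypothetical helper: human-robot gap within the ingroup minus the
    same gap within the outgroup, for one participant."""
    return (ig_human - ig_robot) - (og_human - og_robot)


def one_sample_t(values):
    """t for H0: mean contrast = 0, with len(values) - 1 degrees of freedom."""
    return mean(values) / (stdev(values) / sqrt(len(values)))


# Hypothetical cohesion ratings per participant: (IG human, IG robot, OG human, OG robot).
ratings = [(6, 4, 3, 3), (7, 5, 4, 3), (6, 3, 3, 2)]
contrasts = [interaction_contrast(*row) for row in ratings]
print(contrasts, one_sample_t(contrasts))
```

A positive mean contrast means humans were rated above robots by a wider margin in the ingroup than in the outgroup; a negative one means the reverse.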

Anthropomorphism

The experience subscale of anthropomorphism consisted of four items (α = 0.952; αdiff = 0.939), and the agency subscale included five items (α = 0.949; αdiff = 0.923).

Participants viewed humans as more experiential and agentic than robots (H2; Tables 7, 8). They also viewed ingroup robots as more experiential and agentic than outgroup humans (H3). There was more ingroup differentiation than outgroup differentiation for experience—that is, participants rated experience as more similar between humans and robots in the ingroup than between humans and robots in the outgroup (H4).

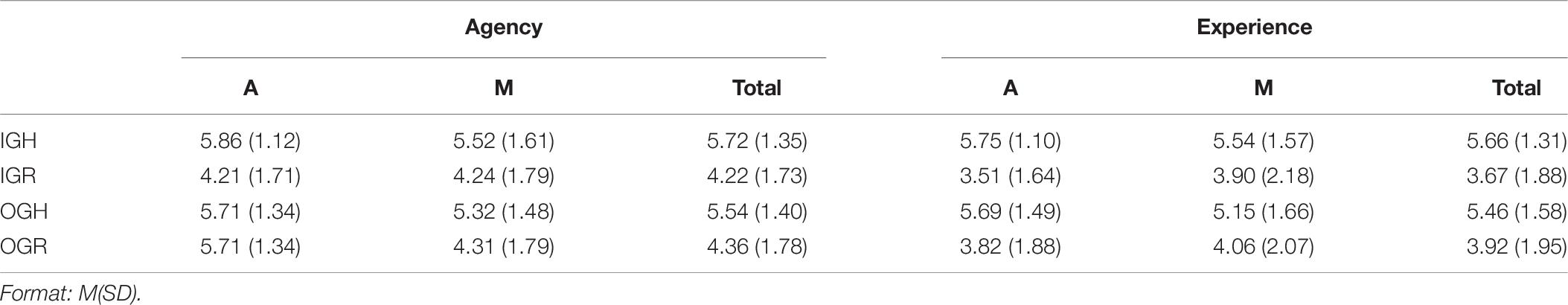

Usefulness

Cronbach’s alpha was high for the six usefulness items (α = 0.978; αdiff = 0.969). Participants rated ingroup members as more useful than outgroup members (H1; Tables 7, 8). They differentiated the ingroup more than the outgroup; that is, participants rated usefulness as more similar between humans and robots in the ingroup than between humans and robots in the outgroup (H4). They differentiated anthropomorphic robots from humans less than they differentiated mechanomorphic robots from humans on usefulness (H5). They rated ingroup anthropomorphic robots as more useful than outgroup humans, but ingroup mechanomorphic robots as less useful than outgroup humans (partially supporting H7; Figure 7).

Relationship Among Variables

To determine which variables (perceived group cohesion, anthropomorphism, usefulness) most strongly related to noise blast volume, I used Pearson correlations.
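For reference, Pearson’s r for paired observations is the covariance of x and y divided by the product of their standard deviations, and H0: ρ = 0 is tested with t = r√(n − 2)/√(1 − r²) on n − 2 degrees of freedom. The standard-library Python sketch below illustrates both; the function names and example data are hypothetical. In practice, `scipy.stats.pearsonr(x, y)` returns the coefficient together with a two-sided p-value.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two sequences of equal length >= 3")
    mx, my = mean(x), mean(y)
    # Sums of cross-products and squared deviations around the means.
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def t_statistic(r, n):
    """t for H0: rho = 0 (n - 2 df); undefined when |r| == 1."""
    return r * sqrt(n - 2) / sqrt(1 - r ** 2)


# Hypothetical data: cohesion ratings and blast volumes for the same five targets.
cohesion = [2, 3, 4, 5, 6]
volume = [8, 7, 7, 5, 4]
r = pearson_r(cohesion, volume)
print(round(r, 3), round(t_statistic(r, len(cohesion)), 3))
```

A negative r here corresponds to the pattern reported below, where higher perceived cohesion or usefulness went with softer noise blasts.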

Results indicated that for ingroup humans and robots, greater perceived group cohesion (humans: r = −0.403, p < 0.001; robots: r = −0.260, p = 0.020; H9a) and usefulness (humans: r = −0.222, p = 0.048; robots: r = −0.330, p = 0.003; partially supporting H9c) were associated with softer noise blasts. For ingroup robots, greater perceived agency (r = −0.252, p = 0.025; H9b) was also associated with softer noise blasts. When robots were split by Anthropomorphism condition, only anthropomorphic robots showed a significant correlation between usefulness and noise blasts (r = −0.325, p = 0.030).

For outgroup humans and robots, no significant correlations emerged.

Discussion

In this study, participants played a game with ingroup and outgroup humans and robots. The robots were either anthropomorphic (NAO) or mechanomorphic (iRobot Creates). I measured how group membership, agent type, and robot anthropomorphism affected participants’ responses toward the other players. The results confirmed prior findings (H1–H4) and contributed novel findings (H5–H8). The results confirmed Hypotheses 1 and 2, with participants favoring the ingroup over the outgroup and humans over robots. Hypothesis 3 was partly supported, with participants typically favoring ingroup robots over outgroup humans. Hypothesis 4 was partly supported, with participants typically showing greater ingroup differentiation between humans and robots than outgroup differentiation between them. A novel finding is that these effects were robust to robot anthropomorphism (H7 and H8 rejected). Also new, Hypothesis 5 was supported, with group effects of humans more closely mirrored by group effects of anthropomorphic robots than of mechanomorphic robots. This finding did not simply reflect more positive responses toward one robot type than the other (H6 rejected). Finally, if participants felt that other players were a cohesive part of the group or useful to the group, participants behaved more morally toward them, but only if those players were ingroup members (H9 partly supported). These findings are described in more detail below.

Findings of participants favoring the ingroup (H1) and humans (H2) replicate the findings from previous studies (Fraune et al., 2017b, 2020). This is a robust finding. Favoring the ingroup occurred on the behavioral measure of noise blasts and on survey measures of attitude valence, emotion, group cohesion, and usefulness. Favoring humans occurred on behavioral measures of noise blasts and survey measures of attitude valence, emotion, group cohesion, and anthropomorphism.

This paper contributes the novel finding that group dynamics in human–human interaction are more closely mirrored by human interaction with anthropomorphic than mechanomorphic robots (H5). This occurred on behavioral measures of moral favoring and on survey measures of group cohesion, attitude valence, and usefulness. The findings did not merely reflect more positive responses toward anthropomorphic than mechanomorphic robots (H6 rejected). This implies that humans more readily apply group effects to robots that look and act more anthropomorphic—at least in brief interactions.

However, robot anthropomorphism was not strong enough in this study to mitigate favoring the ingroup over the outgroup (H7 rejected) or the outgroup homogeneity effect (H8 rejected). That is, even with mechanomorphic robots, participants treated ingroup robots better than outgroup humans (H3). Moreover, even with anthropomorphic robots, participants showed more ingroup than outgroup differentiation between humans and robots (H4). This indicates that these findings of ingroup favoring, and of ingroup differentiation between humans and robots, are robust across various robot types. However, ingroup differentiation might have decreased if the robots had been less distinguishable from humans in appearance (e.g., Minato et al., 2004; Nishio et al., 2007) or if participants had engaged in longer, more social interactions with them before the task (Kahn et al., 2012).

Another novel finding from the study is that perceptions of group members, whether they were humans or robots, related to moral behavior (H9): The more participants perceived ingroup (but not outgroup) members as cohesive and useful, the softer the noise blasts participants assigned them. Further, the more participants perceived ingroup robots as agentic (the agency dimension of anthropomorphism), the softer the noise blasts participants assigned them. This occurred regardless of robot anthropomorphism. These results align with findings from social psychology that people favor the ingroup and discriminate against the outgroup not out of dislike for the outgroup, but because they feel close to the ingroup (Greenwald and Pettigrew, 2014).

Although this study showed some effects of robot anthropomorphism, there were fewer than hypothesized (H6, H7, and H8 rejected). This may seem surprising, considering that prior work suggests that people favor anthropomorphic robots over mechanomorphic robots (Gray et al., 2007). However, prior work also shows that favoring of anthropomorphic robots depends on the number of robots (Fraune et al., 2015b) and on the context of interaction (Kuchenbrandt et al., 2011; Sauppé and Mutlu, 2015; Yogeeswaran et al., 2016). In this study, participants competed in a game, and that competitive context was critical to the interaction. This is most strongly illustrated by the behavioral noise blast measure and the survey measure of group cohesion, which showed medium to large effect sizes for group membership (ingroup/outgroup) and only small effect sizes for agent type (human/robot). Given that participant behavior was only minimally affected by whether the target was a human or a robot, and that people find it much more important to behave positively toward humans than toward robots (Epley et al., 2007; Haslam et al., 2008; Waytz et al., 2010), it follows that anthropomorphism had little effect. For other measures, such as attitude, which showed small effects of both group membership and agent type, it similarly follows that effects of robot type would be even smaller.

Another possible reason for finding few effects of robot anthropomorphism is that participants may have responded to the study’s mechanomorphic robots differently than usual because they were iRobot Creates. These robots may be familiar to participants because their bodies are the same as those of Roombas (typically used for vacuuming). Research indicates that familiarity increases positive responses (Rindfleisch and Inman, 1998), even with robots (MacDorman, 2006). It is also possible that the robots’ typical purpose of cleaning affected participant responses negatively because of the mismatch between their typical task and the current one. However, because the mechanomorphic robots were not viewed more negatively than the anthropomorphic robots, this is likely not the case.

This study does have some limitations. First, the findings apply best to short-term interactions with robots. In the long term, responses toward mechanomorphic robots may show stronger group effects. Second, although the sample size was large enough to find the main hypothesized effects, a larger sample size may have revealed more detailed three-way interaction effects and may have shown support for Hypotheses 7 and 8. However, with 81 viable participants in the study, if the effect had been at least moderate in size, it would likely have been revealed.

This study also acts as a foundation for future research. Prior work indicated that small differences in group composition of the teams (varying between one and three robots and humans in a team of four) did not affect findings in this situation (Fraune et al., 2020); however, recent research has indicated that larger changes in group composition affect some social phenomena such as conformity (Hertz et al., 2019). Future research should examine how larger differences in group composition affect moral behavior toward humans and robots.

Further routes for future examination include biological mechanisms for treating ingroup robots nearly as well as ingroup humans. For example, prior work indicates that oxytocin accounts for greater trust in and compliance with automated agents (De Visser et al., 2017). Further, oxytocin has been shown to motivate greater favoritism toward (De Dreu et al., 2011) and protection of (De Dreu et al., 2012) the ingroup. It remains to be seen whether oxytocin-related group favoritism can account for treating ingroup robots more positively.

Conclusion

In this study, participants played a game with ingroup and outgroup humans and robots—with robots being anthropomorphic or mechanomorphic. Participants favored the ingroup over the outgroup and humans over robots. The study provides the novel contribution that human group dynamics were more closely reflected by group dynamics with anthropomorphic than mechanomorphic robots. Further, the findings indicate that if participants felt like other players were a cohesive part of the group or useful to the group, participants behaved more morally toward them—but only if they were ingroup members. These results can inform future human–robot teaming about how people will likely treat robots in their teams depending on robot anthropomorphism.

Data Availability Statement

The datasets generated for this study are available through the Open Science Framework (10.17605/OSF.IO/HCDNU).

Ethics Statement

The studies involving human participants were reviewed and approved by the New Mexico State University (NMSU) Institutional Review Board (IRB). The patients/participants provided their written informed consent to participate in this study.

Author Contributions

MF contributed to the conceptualization, funding acquisition, methodology, project administration, supervision, formal analysis, and writing.

Funding

This work was funded by NSF grant # 1849591.

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Thanks are due to the NSF for funding this project and to Amer Al-Radaideh, Tyler Chatterton, Roccio Guzman, Danielle Langlois, Kevin Liaw, and Tammy Tsai for their assistance.

References

Ackerman, J. M., Shapiro, J. R., Neuberg, S. L., Kenrick, D. T., Becker, D. V., Griskevicius, V., et al. (2006). They all look the same to me (unless they’re angry) from out-group homogeneity to out-group heterogeneity. Psychol. Sci. 17, 836–840. doi: 10.1111/j.1467-9280.2006.01790.x

Bartneck, C., Van Der Hoek, M., Mubin, O., and Al Mahmud, A. (2007). “Daisy, daisy, give me your answer do!: switching off a robot,” in Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction, (New York, NY: ACM).

Biernat, M., and Manis, M. (1994). Shifting standards and stereotype-based judgments. J. Personal. Soc. Psychol. 66, 5–20. doi: 10.1037//0022-3514.66.1.5

Bradshaw, J. M., Feltovich, P. J., and Johnson, M. (2017). “Human–agent interaction,” in The Handbook of Human-Machine Interaction, ed. G. A. Boy (Boca Raton, FL: CRC Press), 283–300.

Carpenter, J. (2016). Culture and Human-Robot Interaction in Militarized Spaces: A War Story. Abingdon: Routledge.

Chang, W.-L., and Šabanović, S. (2015). “Interaction expands function: social shaping of the therapeutic robot PARO in a nursing home,” in Proceedings of the 10th Annual ACM/IEEE International Conference on Human-Robot Interaction, (New York, NY: ACM).

Chang, W.-L., White, J. P., Park, J., Holm, A., and Sabanovic, S. (2012). “The effect of group size on people’s attitudes and cooperative behaviors toward robots in interactive gameplay,” in Proceeding of the 2012 IEEE RO-MAN: The 21st IEEE International Symposium on Robot and Human Interactive Communication, (Piscataway, NJ: IEEE).

Chen, J. Y., and Barnes, M. J. (2014). Human–agent teaming for multirobot control: a review of human factors issues. IEEE Trans. Hum. Mach. Sys. 44, 13–29. doi: 10.1109/thms.2013.2293535

Correia, F., Alves-Oliveira, P., Maia, N., Ribeiro, T., Petisca, S., Melo, F. S., et al. (2016). “Just follow the suit! Trust in human-robot interactions during card game playing,” in Proceeding of the 2016 25th IEEE International Symposium on Robot and Human Interactive Communication, (Piscataway, NJ: IEEE).

Correia, F., Mascarenhas, S., Prada, R., Melo, F. S., and Paiva, A. (2018). “Group-based emotions in teams of humans and robots,” in Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction, (New York, NY: ACM).

Cottrell, C. A., and Neuberg, S. L. (2005). Different emotional reactions to different groups: a sociofunctional threat-based approach to “prejudice”. J. Personal. Soc. Psychol. 88, 770–789. doi: 10.1037/0022-3514.88.5.770

De Dreu, C. K., Greer, L. L., Van Kleef, G. A., Shalvi, S., and Handgraaf, M. J. (2011). Oxytocin promotes human ethnocentrism. Proc. Natl. Acad. Sci. U.S.A. 108, 1262–1266. doi: 10.1073/pnas.1015316108

De Dreu, C. K., Shalvi, S., Greer, L. L., Van Kleef, G. A., and Handgraaf, M. J. (2012). Oxytocin motivates non-cooperation in intergroup conflict to protect vulnerable in-group members. PLoS One 7:e46751. doi: 10.1371/journal.pone.0046751

De Visser, E. J., Monfort, S. S., Goodyear, K., Lu, L., O’Hara, M., Lee, M. R., et al. (2017). A little anthropomorphism goes a long way: effects of oxytocin on trust, compliance, and team performance with automated agents. Hum. Factors 59, 116–133. doi: 10.1177/0018720816687205

de Visser, E. J., Monfort, S. S., McKendrick, R., Smith, M. A., McKnight, P. E., Krueger, F., et al. (2016). Almost human: anthropomorphism increases trust resilience in cognitive agents. J. Exp. Psychol. Appl. 22, 331–349. doi: 10.1037/xap0000092

Edwards, J. R. (2001). Ten difference score myths. Organ. Res. Methods 4, 265–287. doi: 10.1177/109442810143005

Edwards, J. R., and Schmitt, N. (2002). “Alternatives to difference scores: Polynomial regression and response surface methodology,” in The Jossey-Bass Business and Management Series. Measuring and Analyzing Behavior in Organizations: Advances in Measurement and Data Analysis, ed. F. Drasgow (San Francisco, CA: Jossey-Bass), 350–400.

Epley, N., Waytz, A., and Cacioppo, J. T. (2007). On seeing human: a three-factor theory of anthropomorphism. Psychol. Rev. 114, 864–886. doi: 10.1037/0033-295x.114.4.864

Eyssel, F., Kuchenbrandt, D., Bobinger, S., de Ruiter, L., and Hegel, F. (2012). “‘If you sound like me, you must be more human’: On the interplay of robot and user features on human-robot acceptance and anthropomorphism,” in Proceedings of the 7th Annual ACM/IEEE international conference on Human-Robot Interaction, (New York, NY: ACM).

Fraune, M. R., Kawakami, S., Sabanovic, S., De Silva, R., and Okada, M. (2015a). Three’s company, or a crowd?: The effects of robot number and behavior on HRI in Japan and the USA. Robotics: Science and Systems.

Fraune, M. R., Nishiwaki, Y., Sabanovic, S., Smith, E. R., and Okada, M. (2017a). “Threatening flocks and mindful snowflakes: How group entitativity affects perceptions of robots,” in Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, (Piscataway, NJ: IEEE).

Fraune, M. R., Sherrin, S., Šabanović, S., and Smith, E. R. (2019). “Is human-robot interaction more competitive between groups than between individuals?,” in Proceedings of the 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), (Piscataway, NJ: IEEE).

Fraune, M. R., and Šabanović, S. (2014). “Robot gossip: effects of mode of robot communication on human perceptions of robots,” in Proceedings of the 2014 ACM/IEEE International Conference on Human-Robot Interaction, (New York, NY: ACM).

Fraune, M. R., Sabanovic, S., and Smith, E. R. (2017b). “Teammates first: favoring ingroup robots over outgroup humans,” in Proceeding of the 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN 2017), (Piscataway, NJ: IEEE).

Fraune, M. R., Sabanovic, S., and Smith, E. R. (2020). Some are more equal than others: ingroup robots gain some but not all benefits of team membership. J. Interac. Stud. (in press).

Fraune, M. R., Sherrin, S., Sabanovic, S., and Smith, E. R. (2015b). “Rabble of robots effects: number and type of robots modulates attitudes, emotions, and stereotypes,” in Proceedings for the 2015 ACM/IEEE International Conference on Human-Robot Interaction, (Piscataway, NJ: IEEE).

Goetz, J., Kiesler, S., and Powers, A. (2003). “Matching robot appearance and behavior to tasks to improve human-robot cooperation,” in Proceeding of the 12th IEEE International Workshop on Robot and Human Interactive Communication (RO-MAN 2003), (Piscataway, NJ: IEEE).

Gray, H. M., Gray, K., and Wegner, D. M. (2007). Dimensions of mind perception. Science 315, 619–619. doi: 10.1126/science.1134475

Greenwald, A. G., and Pettigrew, T. F. (2014). With malice toward none and charity for some: ingroup favoritism enables discrimination. Am. Psychol. 69, 669–684. doi: 10.1037/a0036056

Haslam, N., and Loughnan, S. (2014). Dehumanization and infrahumanization. Annu. Rev. Psychol. 65, 399–423. doi: 10.1146/annurev-psych-010213-115045

Haslam, N., Loughnan, S., Kashima, Y., and Bain, P. (2008). Attributing and denying humanness to others. Eur. Rev. Soc. Psychol. 19, 55–85. doi: 10.1080/10463280801981645

Hertz, N., Shaw, T., de Visser, E. J., and Wiese, E. (2019). Mixing it up: how mixed groups of humans and machines modulate conformity. J. Cogn. Eng. Decis. Mak. 13, 242–257. doi: 10.1177/1555343419869465

Hogg, M. A. (2001). “Social identification, group prototypicality, and emergent leadership,” in Social Identity Processes in Organizational Contexts (Philadelphia, PA: Psychology Press), 197–212.

Insko, C. A., Wildschut, T., and Cohen, T. R. (2013). Interindividual–intergroup discontinuity in the prisoner’s dilemma game: how common fate, proximity, and similarity affect intergroup competition. Organ. Behav. Hum. Decis. Process. 120, 168–180. doi: 10.1016/j.obhdp.2012.07.004

Jones, E. E., Wood, G. C., and Quattrone, G. A. (1981). Perceived variability of personal characteristics in in-groups and out-groups: the role of knowledge and evaluation. Personal. Soc. Psychol. Bull. 7, 523–528. doi: 10.1177/014616728173024

Judd, C. M., and Park, B. (1988). Out-group homogeneity: judgments of variability at the individual and group levels. J. Personal. Soc. Psychol. 54, 778–788. doi: 10.1037/0022-3514.54.5.778

Kahn, P. H. Jr., Kanda, T., Ishiguro, H., Gill, B. T., Ruckert, J. H., Shen, S., et al. (2012). “Do people hold a humanoid robot morally accountable for the harm it causes?,” in Proceedings of the 7th Annual ACM/IEEE International Conference on Human-Robot Interaction, (New York, NY: ACM).

Kahn, P. H. Jr., Reichert, A. L., Gary, H. E., Kanda, T., Ishiguro, H., Shen, S., et al. (2011). “The new ontological category hypothesis in human-robot interaction,” in Proceedings of the 6th international conference on Human-robot interaction, (New York, NY: ACM).

Kahneman, D., and Klein, G. (2009). Conditions for intuitive expertise: a failure to disagree. Am. Psychol. 64, 515–526. doi: 10.1037/a0016755

Kozak, M. N., Marsh, A. A., and Wegner, D. M. (2006). What do I think you’re doing? Action identification and mind attribution. J. Personal. Soc. Psychol. 90, 543–555. doi: 10.1037/0022-3514.90.4.543

Kuchenbrandt, D., Eyssel, F., Bobinger, S., and Neufeld, M. (2011). “Minimal group-maximal effect? Evaluation and anthropomorphization of the humanoid robot NAO,” in Proceedings of the 3rd International Conference on Social Robotics, (Berlin: Springer), 104–113. doi: 10.1007/978-3-642-25504-5_11

Kuchenbrandt, D., Eyssel, F., Bobinger, S., and Neufeld, M. (2013). When a robot’s group membership matters. Int. J. Soc. Robot. 5, 409–417.

Lee, S.-L., and Lau, I. Y.-M. (2011). “Hitting a robot vs. hitting a human: is it the same?,” in Proceedings of the 6th International Conference on Human-Robot Interaction, (New York, NY: ACM).

Leidner, B., and Castano, E. (2012). Morality shifting in the context of intergroup violence. Eur. J. Soc. Psychol. 42, 82–91. doi: 10.1002/ejsp.846

Leite, I., McCoy, M., Lohani, M., Ullman, D., Salomons, N., Stokes, C. K., et al. (2015). “Emotional storytelling in the classroom: individual versus group interaction between children and robots,” in Proceedings of the 10th Annual ACM/IEEE International Conference on Human-Robot Interaction, (Piscataway, NJ: IEEE).

MacDorman, K. F. (2006). “Subjective ratings of robot video clips for human likeness, familiarity, and eeriness: an exploration of the uncanny valley,” in Proceeding of the ICCS/CogSci-2006 Long Symposium: Toward Social Mechanisms of Android Science, (Vancouver: ICCS).

Mastro, D. E., and Kopacz, M. A. (2006). Media representations of race, prototypicality, and policy reasoning: an application of self-categorization theory. J. Broadcasting Electron. Media 50, 305–322. doi: 10.1207/s15506878jobem5002_8

Matsumoto, N., Fujii, H., Goan, M., and Okada, M. (2005). “Minimal design strategy for embodied communication agents,” in Proceeding of the ROMAN 2005. IEEE International Workshop on Robot and Human Interactive Communication, (Piscataway, NJ: IEEE).

Matsumoto, N., Fujii, H., and Okada, M. (2006). Minimal design for human–agent communication. Artificial Life and Robotics 10, 49–54. doi: 10.1007/s10015-005-0377-1

Minato, T., Shimada, M., Ishiguro, H., and Itakura, S. (2004). “Development of an android robot for studying human-robot interaction,” in Proceeding of the International conference on Industrial, Engineering and Other Applications of Applied Intelligent Systems, (Berlin: Springer).

Nass, C., Fogg, B., and Moon, Y. (1996). Can computers be teammates? Int. J. Hum. Comput. Stud. 45, 669–678. doi: 10.1006/ijhc.1996.0073

Nishio, S., Ishiguro, H., and Hagita, N. (2007). “Geminoid: teleoperated android of an existing person,” in Humanoid Robots: New Developments, ed. A. C. de Pina Filho (Vienna: I-Tech Education and Publishing), 343–352.

Peter, J. P., Churchill, G. A. Jr., and Brown, T. J. (1993). Caution in the use of difference scores in consumer research. J. Consum. Res. 19, 655–662.

Phillips, E., Zhao, X., Ullman, D., and Malle, B. F. (2018). “What is human-like? Decomposing robots’ humanlike appearance using the Anthropomorphic roBOT (ABOT) Database,” in Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction, (Piscataway, NJ: IEEE).

Reeves, B., and Nass, C. (1997). The Media Equation: How People Treat Computers, Television, and New Media. Cambridge: Cambridge University Press.

Rindfleisch, A., and Inman, J. (1998). Explaining the familiarity-liking relationship: mere exposure, information availability, or social desirability? Mark. Lett. 9, 5–19.

Saguy, T., Szekeres, H., Nouri, R., Goldenberg, A., Doron, G., Dovidio, J. F., et al. (2015). Awareness of intergroup help can rehumanize the out-group. Soc. Psychol. Personal. Sci. 6, 551–558. doi: 10.1177/1948550615574748

Sauppé, A., and Mutlu, B. (2015). “The social impact of a robot co-worker in industrial settings,” in Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, (New York, NY: ACM), 3613–3622.

Tajfel, H., Billig, M. G., Bundy, R. P., and Flament, C. (1971). Social categorization and intergroup behaviour. Eur. J. Soc. Psychol. 1, 149–178. doi: 10.1002/ejsp.2420010202

Turner, J. C., Hogg, M. A., Oakes, P. J., Reicher, S. D., and Wetherell, M. S. (1987). Rediscovering the social group: A self-Categorization Theory. Oxford: Basil Blackwell.

Turner, J. C., and Tajfel, H. (1986). The social identity theory of intergroup behavior. Psychol. Intergroup Relat. 5, 7–24.

Twenge, J. M., Baumeister, R. F., Tice, D. M., and Stucke, T. S. (2001). If you can’t join them, beat them: effects of social exclusion on aggressive behavior. J. Personal. Soc. Psychol. 81, 1058–1069. doi: 10.1037/0022-3514.81.6.1058

Van Knippenberg, D. (2011). Embodying who we are: leader group prototypicality and leadership effectiveness. Leadersh. Q. 22, 1078–1091. doi: 10.1016/j.leaqua.2011.09.004

Van Knippenberg, D., Lossie, N., and Wilke, H. (1994). In-group prototypicality and persuasion: determinants of heuristic and systematic message processing. Br. J. Soc. Psychol. 33, 289–300. doi: 10.1111/j.2044-8309.1994.tb01026.x

Venkatesh, V., Morris, M. G., Davis, G. B., and Davis, F. D. (2003). User acceptance of information technology: toward a unified view. MIS Q. 27, 425–478.

Wada, K., and Shibata, T. (2007). Living with seal robots—its sociopsychological and physiological influences on the elderly at a care house. IEEE Trans. Robot. 23, 972–980. doi: 10.1109/tro.2007.906261

Wada, K., Shibata, T., Saito, T., Sakamoto, K., and Tanie, K. (2005). “Psychological and social effects of one year robot assisted activity on elderly people at a health service facility for the aged,” in Proceedings of the 2005 IEEE International Conference on Robotics and Automation, (Piscataway, NJ: IEEE).

Waytz, A., Gray, K., Epley, N., and Wegner, D. M. (2010). Causes and consequences of mind perception. Trends Cogn. Sci. 14, 383–388. doi: 10.1016/j.tics.2010.05.006

Wildschut, T., and Insko, C. A. (2007). Explanations of interindividual–intergroup discontinuity: a review of the evidence. Eur. Rev. Soc. Psychol. 18, 175–211. doi: 10.1080/10463280701676543

Wullenkord, R., Fraune, M. R., Eyssel, F., and Sabanovic, S. (2016). “Getting in touch: how imagined, actual, and physical contact affect evaluations of robots,” in Proceeding of the 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), (Piscataway, NJ: IEEE).

Keywords: social robotics, group effects, anthropomorphism, morality, human-robot interaction

Citation: Fraune MR (2020) Our Robots, Our Team: Robot Anthropomorphism Moderates Group Effects in Human–Robot Teams. Front. Psychol. 11:1275. doi: 10.3389/fpsyg.2020.01275

Received: 03 March 2020; Accepted: 15 May 2020;

Published: 14 July 2020.

Edited by:

Joseph B. Lyons, Air Force Research Laboratory, United States

Reviewed by:

Ewart Jan De Visser, George Mason University, United States
Steven Young, Baruch College (CUNY), United States

Copyright © 2020 Fraune. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Marlena R. Fraune, mfraune@nmsu.edu
