ORIGINAL RESEARCH article

Front. Psychol., 21 January 2020

Sec. Educational Psychology

Volume 10 - 2019 | https://doi.org/10.3389/fpsyg.2019.03053

This article is part of the Research Topic: What Big Data Can Tell Us About the Psychology of Learning and Teaching

One of the many things teachers do is to give feedback on their students’ work. Feedback pointing out mistakes may be a key to learning, but it may also backfire. We hypothesized that feedback based on students’ mistakes may have more positive effects in cultures where teachers have greater authority over students, which we assume to be cultures that are high on power distance and religiosity. To test this hypothesis we analyzed data from 49 countries taking part in the 2015 wave of the TIMSS assessment, in which students in the 4th and 8th grades were asked whether their teachers in mathematics and science told them how to do better when they had made a mistake. For each country we could then estimate the association between the reported use of mistake-based feedback and student achievement. Consistent with our hypothesis, the estimated effect of mistake-based feedback was positive only in certain countries, and these countries tended to be high on power distance and religiosity. These results highlight the importance of cultural values in educational practice.

Some scholars argue that pedagogical methods and concepts are culturally embedded and that transplanting them from one culture to another is not always feasible (e.g., Hatano and Inagaki, 1998; Chen and Wong, 2015). In the present paper we focus on cultural differences in the effects of a specific teacher practice: giving feedback on students’ mistakes. Students’ mistakes have been argued to play a key role in learning (Mangels et al., 2006; Boaler, 2016). Reform initiatives in mathematics education (National Council of Teachers of Mathematics, 2000), research within the field of mathematics education (e.g., Kazemi and Stipek, 2001; Bray, 2011), and studies in psychology and neuroscience (see Boaler, 2016) all emphasize that capitalizing on students’ mistakes may be a particularly productive teaching practice. On the other hand, going from mistakes to learning is not straightforward. Feedback on mistakes, also known as corrective feedback, may even be counter-productive, for example if students perceive they cannot understand the feedback, if it makes them focus on right and wrong answers instead of the solving process, or if it makes them dwell on their mistakes (Gagné et al., 1987; Fyfe and Rittle-Johnson, 2017). The timing and character of corrective feedback may therefore influence its efficacy. In general, feedback is thought to work through cognitive, motivational and meta-cognitive mechanisms that are affected by the relationship between the learning situation and the learner, and by the level of expertise and experience of the learner and the teacher (Hattie and Timperley, 2007; Harks et al., 2014). This means that social and cultural factors that influence the learning context may affect the efficacy of feedback. The purpose of the present paper is to examine the cultural dependence of the efficacy of mistake-based feedback.

In classroom practice, teachers must choose how to react to students’ mistakes. The reaction could be anything from simply ignoring mistakes to making them starting points for whole-class discussions (Bray, 2011). Teachers’ feedback on how to do better when students have made a mistake is a particularly interesting teaching practice to study. Such corrective feedback can take many forms. For example, the teacher can criticize the student for making a mistake or praise the thought and emphasize the learning potential (Tulis, 2013), or the teacher can give feedback in the form of statements (e.g., giving the correct answer or providing an explanation) as well as questions (e.g., redirecting the question or asking for student explanation; Schleppenbach et al., 2007). How students perceive teachers’ feedback on errors may also differ. For example, students can feel embarrassed when their teacher points out errors, or they can view errors as opportunities to improve (Tulis, 2013).

The ways in which teachers handle mistakes and how mistakes are perceived by the students may be nationally embedded and have been shown to differ between countries. For example, in analyzing teacher–student interactions surrounding students’ mistakes in 60 videotaped 8th grade mathematics lessons, Santagata (2004) found differences between Italian and United States teachers. While United States teachers tended to mitigate student mistakes and students rarely blamed themselves for making them, Italian teachers more often aggravated student mistakes (e.g., by openly showing their disappointment) and students took responsibility for making them. Another example is Schleppenbach et al. (2007), who analyzed videotaped elementary mathematics lessons and found differences in how mistakes were treated in the United States and China. Their results indicate that United States and Chinese students made errors at similar frequencies, but that the teachers in the two countries responded to them differently. The United States teachers made more statements about errors than the Chinese teachers, who instead asked more follow-up questions about errors. Moreover, when questions were used, the United States teachers tended to question students by asking them to evaluate the answer, while the Chinese teachers questioned students by asking them to correct or explain the error. Cultural differences in how students’ mistakes are viewed have also been discussed by Stevenson and Stigler (1992) and Wang and Murphy (2004), who argue that, in the American context, mistakes are often seen as failures and something that makes students appear silly, while in China and Japan they are viewed as signs of what needs to be learned. Video analysis combined with student questionnaires confirms the existence of culturally dependent feedback effects in comparisons of Switzerland and Germany, where the Swiss students rate their opportunities to learn from errors higher (Dalehefte et al., 2012).

Although all of these studies lay important groundwork for using a cross-cultural approach when looking at feedback on students’ mistakes, we know of no previous cross-cultural work in education focusing on the effects of teachers’ mistake-based feedback on student achievement at a larger scale.

In an influential review of how culture may influence students’ approaches to learning, Littlewood (1999) focused on aspects in which East Asian culture tends to differ from Western culture: high power distance (normalcy of inequality in power and authority) and high collectivism (emphasis on interdependence instead of individuality), including a belief in the adaptability of individuals through effort. These aspects may both have bearing on the effect of negative feedback. Psychological research has suggested that Japanese are more willing than North Americans to accept negative feedback and try to improve from it (Heine et al., 2001a, b). The theoretical rationale for this difference, according to Heine et al. (2001a, p. 435), is that Japan is a culture that emphasizes “hierarchy and role mastery” and that in this context, “the discovery of negative features about the self serves to highlight where one needs to make efforts so as to move toward the consensually shared standards.” In other words, the authors simultaneously appealed to power distance (hierarchy) and collectivism (consensually shared standards to which individuals can adapt through effort). On the basis of this prior research we may hypothesize that mistake-based feedback from teachers leads to better student achievement specifically in cultures that are high on power distance and high on collectivism. We shall now elaborate on the rationale for these hypotheses.

According to Hofstede (2001), societies vary in the extent to which inequality in power is accepted and regarded as normal. When applied to the teacher-student relationship, high power distance implies that teachers have great authority. Students respect the teacher, they appreciate that the teacher tells them what to do, they speak up only when invited, and they do not contradict the teacher. Students in societies with low power distance have less respect for teachers and are more likely to challenge teachers’ authority and rely on their own experience instead (Hofstede, 1986; Woodrow and Sham, 2001; Joy and Kolb, 2009; Holtbrügge and Mohr, 2010). It would fit with this general picture of teacher authority that students would be more accepting of negative feedback from the teacher.

Although we will use power distance as a proxy for cultural differences in teacher authority, we acknowledge power and authority are not exactly synonymous. An important aspect of authority is being a “reliable guide as to how things are” (Raz, 1990, p. 2), thus connecting authority with religion. It stands to reason that more religious societies are more accepting of religious authority and, plausibly, of teacher authority in general. This notion does not seem to be well researched, but scholars have argued for a strong parallel between religious authority and teachers’ authority (Smith, 2013). Another supporting piece of evidence is that country-level religiosity and power distance are strongly, but not perfectly, correlated (Parboteeah et al., 2008). For these reasons we shall use country-level religiosity as an alternative proxy for teacher authority to complement power distance.

The cultural dimension of collectivism vs. individualism concerns the degree to which individuals are first and foremost regarded as parts of a collective and perceive themselves as interdependent (Markus and Kitayama, 1991; Hofstede, 2001). Compared to people in individualist countries, people in collectivist countries seem to be more likely to view ability and wisdom as adaptable through effort rather than fixed in an individual (Heine et al., 2001b). This may further be connected to the concept of fixed (believing intelligence is a fixed entity) versus growth (believing intelligence is malleable) mindset (Dweck, 2006), which has also been indicated to be associated with trait use and could help account for cultural differences (Dweck et al., 1995). In a recent meta-analytic review, for example, Costa and Faria (2018) found that educational studies conducted in Asia and Oceania reported a significant association between growth mindset and student achievement while, in Europe, a fixed mindset was modestly and positively associated with student achievement. The authors suggest that this may reflect cultural differences where more collectivist societies (such as many Asian countries) might encourage students to value the learning process over individual academic achievement, while in Europe there is a tendency toward a more academically competitive society where students may prioritize individual results over knowledge.

Moreover, it has been suggested that collectivism may facilitate the acceptance of negative feedback because it enables individuals to identify their weaknesses in order to improve and blend in (Heine et al., 2001b; Gelfand et al., 2002). As Heine et al. (2001a, b) argue, a sense that ability is not innate but improves with effort may make negative feedback less threatening and thus presumably more effective in promoting learning. In fact, studies in neuroscience (Mangels et al., 2006; Moser et al., 2011) have shown that students react differently to negative feedback depending on differences in their mindsets. Compared to students endorsing a more fixed mindset, more growth-minded individuals showed superior knowledge gains in that they demonstrated greater remediation of errors and were more likely to reflect awareness of and allocation of attention to mistakes.

The aim of the present study is to examine the relation between the above-mentioned cultural dimensions and the effectiveness of teachers giving mistake-based feedback to students. Every country then provides just a single data point. It is therefore imperative to obtain data from as many countries as possible. We use data on student achievement and teaching practices in 49 countries obtained from TIMSS, the Trends in International Mathematics and Science Study. TIMSS is conducted every four years by IEA (International Association for the Evaluation of Educational Achievement). Entire classes in grades 4 and 8 are sampled and participating students are linked to the teacher/classroom level. Students are given achievement tests in mathematics and science as well as a background questionnaire including some items on teachers’ use of various instructional practices. Such data can be related to student outcomes to estimate the association between instructional practices and achievement (e.g., Blömeke et al., 2016; Eriksson et al., 2019). Specifically, the 2015 wave of TIMSS included the item “My teacher tells me how to do better when I make a mistake.” This allows us to estimate the association between the use of mistake-based feedback and student achievement within each country that participated in TIMSS 2015.

We shall assume that these associations reflect the effects of mistake-based feedback on achievement (other possibilities are addressed in the discussion). Under this assumption our hypotheses can be tested by examination of how effects of mistake-based feedback correlate with available country-measures of collectivism, power distance, and religiosity.

In brief, the method of our study consists of two steps. The first step is to use TIMSS data to obtain estimates per country of the effect of mistake-based feedback on achievement. The second step is to examine if these estimates are related to country measures of power distance, religiosity, and collectivism.

Out of 55 countries that participated in TIMSS 2015 (4th grade, 8th grade, or both), we study 49 countries for which country measures of religiosity, power distance, and collectivism were available. The 49 countries are listed in Table 1, which also reports the size of the TIMSS student sample and the number of classes sampled in each country.

From the 2009 global Gallup poll we obtained country measures of religiosity in terms of the percentage of the sampled population who responded “yes” to the question: “Is religion important in your daily life?” (Crabtree, 2010). In our sample of countries, the percentage who judged religion as important ranged from 17 to 99 (M = 58, SD = 27).

Estimates of power distance and individualism for each country in our sample, on a scale from 0 to 100, were taken from Hofstede’s website and are reported in Table 1. To obtain a collectivism measure we reverse coded the individualism measure (i.e., collectivism = 100 - individualism). In our sample of countries, power distance ranged from 13 to 100 (M = 59, SD = 22) and collectivism ranged from 9 to 86 (M = 51, SD = 24).

Country scores of gross national income (GNI) per capita in 2015, measured in international dollars, were downloaded from the Human Development Report Office of the United Nations. For Taiwan we used the measure for 2015 from their National Statistics agency. In our sample of countries, GNI per capita ranged from 7,000 in Morocco to 130,000 in Qatar (M = 35,000, SD = 22,000).

To estimate the effectiveness of mistake-based feedback we used data from TIMSS on student achievement and teachers’ use of mistake-based feedback, as well as some control variables.

TIMSS uses an elaborate method to measure student achievement in mathematics and science (Martin et al., 2016). In brief, each student responds to only a subset of test questions and five “plausible values” for the total score of each student are generated through an imputation method. Plausible values are given on a scale that was calibrated so that the 1995 TIMSS results had a mean of 500 and a standard deviation of 100. We used the set of five plausible values of student achievement in math and science as measured in TIMSS 2015, standardized within each country to unit standard deviation.

The grade 4 and grade 8 student questionnaires of TIMSS 2015 included one part about mathematics and one part about science. Both parts included a set of ten items about the teacher. For each item, students gave their response on a four-point scale: Disagree a lot (coded 1); Disagree a little (coded 2); Agree a little (coded 3); Agree a lot (coded 4). Our focus is on the item “My teacher tells me how to do better when I make a mistake,” which we shall refer to as MBF (mistake-based feedback). On the MBF item, almost all responses were either Agree a lot or Agree a little (93% in grade 4, 85% in grade 8). This means that MBF was nearly a binary variable. (Indeed, if we recode it as binary by lumping the two Disagree options together with Agree a little, all the main results presented in this paper would remain virtually identical).

Following prior research we average responses from all students in a class to obtain a measure of the teacher’s teaching style (Eriksson et al., 2019). (In the binary recoding, the class average would simply reflect how frequently students in a class responded by Agree a lot to the MBF item about a given teacher.) This is taken as a measure of how much the teacher uses mistake-based feedback. The class-average response to the MBF item for each of the two teachers yielded two class-level measures, which we refer to as MBF:Math and MBF:Science. For descriptive statistics of MBF:Math and MBF:Science in each grade in each country, see Table 2. There were eight countries in which science grade 8 was not taught by a single teacher but by several teachers specializing in different science disciplines. For these countries no MBF:Science measures in grade 8 were calculated (as they would be ambiguous).
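The class-level aggregation described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' SPSS procedure: the function name `class_mbf`, the `(class_id, response)` data layout, and the toy responses are all hypothetical. The `binary` flag mirrors the binary recoding mentioned in the text, in which only Agree a lot counts as 1.

```python
from collections import defaultdict


def class_mbf(responses, binary=False):
    """Average student MBF responses (coded 1-4) within each class.

    responses: iterable of (class_id, response) pairs (hypothetical layout).
    binary=True recodes "Agree a lot" (4) as 1 and all other responses as 0,
    so the class average becomes the share of students answering "Agree a lot".
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for class_id, response in responses:
        if binary:
            value = 1.0 if response == 4 else 0.0
        else:
            value = float(response)
        totals[class_id] += value
        counts[class_id] += 1
    return {class_id: totals[class_id] / counts[class_id] for class_id in totals}


# Toy data (invented for illustration): two classes, five students.
toy = [("A", 4), ("A", 3), ("A", 4), ("B", 2), ("B", 4)]
mbf_by_class = class_mbf(toy)
share_agree_a_lot = class_mbf(toy, binary=True)
```

Applying the same function once to the mathematics items and once to the science items would give the two class-level measures, MBF:Math and MBF:Science.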

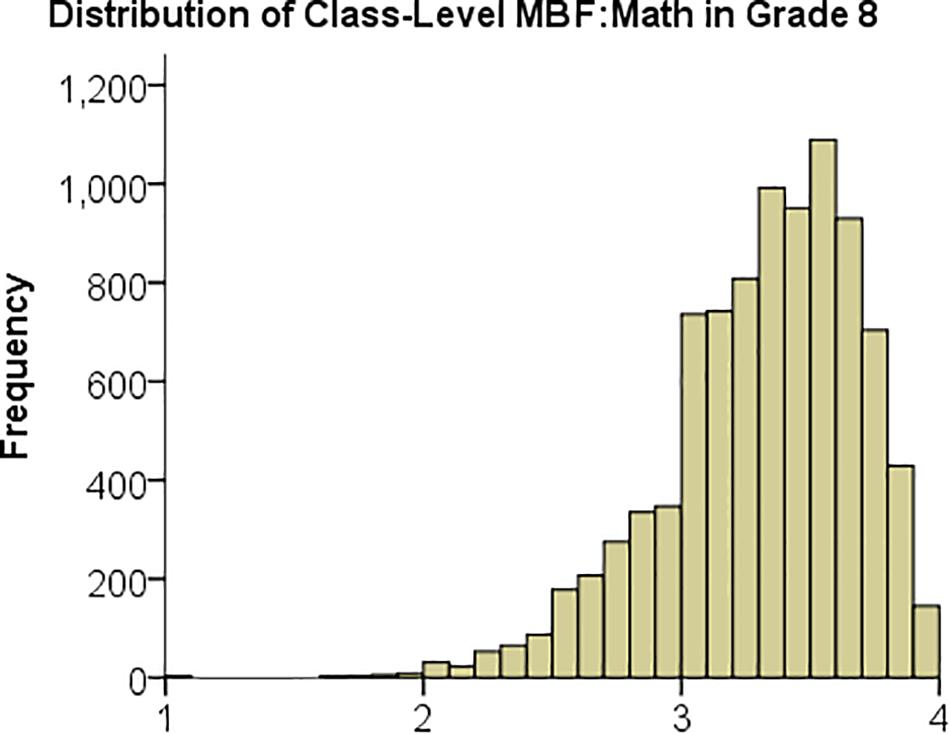

In Table 2, note that the country-means of MBF:Math and MBF:Science are consistently between 3 and 4, reflecting that these were the dominant individual responses. However, there were specific classes where the MBF measures were much lower than 3, as illustrated in Figure 1 showing the distribution of the MBF measure for mathematics across all participating classes in 8th grade. The corresponding distributions for 4th grade mathematics and for science look similar.

Figure 1. Histogram over MBF:Math, the class-level measure of mistake-based feedback in mathematics, of all classes in 8th grade.

As described in detail below, we estimate the effect of mistake-based feedback both with and without including control variables. Ideally, results are robust to the model specification. The following control variables are used.

First, when estimating the effect of the MBF of the mathematics teacher, we control for the MBF of the science teacher, and vice versa.

In addition to the MBF item, the student questionnaire included nine other items (using the same response scale) about the teacher: “I know what my teacher expects me to do,” “My teacher is easy to understand,” “I am interested in what my teacher says,” “My teacher gives me interesting things to do,” “My teacher has clear answers to my questions,” “My teacher is good at explaining mathematics,” “My teacher lets me show what I have learned,” “My teacher does a variety of things to help us learn,” “My teacher listens to what I have to say.” Note that all of these items are positive statements about the teacher. For each teacher subject (math and science) we calculated the student’s mean response to these items (Cronbach’s α > 0.86 for each academic subject in each grade), and then averaged this measure over all students of the class. We refer to these class-level measures as Pos:Math and Pos:Science. These measures were typically between 3 and 4, meaning that students tended to agree at least a little with the nine positive statements about the teacher. We use the Pos measures as control variables to ascertain that estimated effects of MBF do not simply reflect effects of a generally positive view of the teacher.
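For readers unfamiliar with the reliability coefficient reported above, the following sketch computes Cronbach's α from a students-by-items table using the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of the sum score). The function name and the toy data are hypothetical; the authors' own computation was presumably done in SPSS.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of per-student response lists.

    rows[s][i] is the response of student s to item i. Sample variances
    (n - 1 denominator) are used for both the items and the sum score.
    """
    k = len(rows[0])  # number of items
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Toy data (invented): three students, two perfectly consistent items.
alpha_perfect = cronbach_alpha([[1, 1], [2, 2], [3, 3]])
```

With perfectly consistent items, as in the toy data, α reaches its maximum of 1. The value α > 0.86 reported above indicates that the nine items can reasonably be averaged into a single Pos score.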

When studying antecedents of student achievement it is common to control for socio-economic status and gender. Following some previous research on TIMSS data (Blömeke et al., 2016; Eriksson et al., 2019), we used the response to the item “About how many books are there in your home?” as a proxy for socio-economic status, henceforth referred to as SES. This item has a five-point response scale from None or very few (0–10 books) (coded 1) to Enough to fill three or more bookcases (more than 200) (coded 5). Student gender was coded 1 for girl and 2 for boy.

TIMSS also includes four teacher background variables that we used as controls: experience (years of teaching), age, gender, and level of formal education.

There were at most a few percent missing data on the items we use. Missing data were handled using the multiple imputation functionality of SPSS v. 24, generating five sets of imputed data, to each of which one of the five pairs of plausible values on mathematics and science achievement was assigned.

To account for the multiple levels of data in each country (class and student) we include a random effect of class (O’Connell and McCoach, 2008). We estimate the effect of MBF in a given country for a given subject in a given grade using two different models: without control variables,

and with control variables,

Here Yij denotes the achievement in the given subject for student i in class j; γ00 is the class-level intercept; MBFj, Posj, Exj, Agj, Gej, and Edj are the MBF and Pos measures and the experience, age, gender and level of education for the teacher in the given subject in class j; OMBFj denotes the MBF measure of the teacher in the other subject in class j; SESij and Genij denote the socio-economic status and gender of student i in class j; uj is a random error term representing a unique effect associated with class j and rij is a random error term at the individual level. Error terms are assumed to have a normal distribution with a mean of zero and constant variance.
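Written out from the symbol definitions above, the two models plausibly take the following form. Only γ00 and γ01 are named in the text; the remaining coefficient labels, and the order of the terms, are assumptions made here for illustration.

```latex
% Model without control variables
Y_{ij} = \gamma_{00} + \gamma_{01}\,\mathrm{MBF}_{j} + u_{j} + r_{ij}

% Model with control variables (coefficient labels other than
% \gamma_{00} and \gamma_{01} are assumed)
Y_{ij} = \gamma_{00} + \gamma_{01}\,\mathrm{MBF}_{j} + \gamma_{02}\,\mathrm{OMBF}_{j}
       + \gamma_{03}\,\mathrm{Pos}_{j} + \gamma_{04}\,\mathrm{Ex}_{j}
       + \gamma_{05}\,\mathrm{Ag}_{j} + \gamma_{06}\,\mathrm{Ge}_{j}
       + \gamma_{07}\,\mathrm{Ed}_{j}
       + \gamma_{10}\,\mathrm{SES}_{ij} + \gamma_{20}\,\mathrm{Gen}_{ij}
       + u_{j} + r_{ij}
```

In both forms, γ01 is the coefficient of interest: the estimated effect of class-level MBF on student achievement.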

Analyses were conducted using restricted maximum likelihood (REML) estimation in the linear mixed model function of SPSS v. 24. Using the SPSS functionality for analysis of multiply imputed data, analyses were performed on each set of imputed data and then pooled to yield unbiased estimates of effects and standard errors.
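Pooling across imputed data sets is conventionally done with Rubin's rules; the sketch below assumes that this is what the SPSS pooling step amounts to, which the text does not state explicitly. The function name and the input numbers are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def pool_rubin(estimates, std_errors):
    """Pool one coefficient across m imputed data sets using Rubin's rules.

    Returns (pooled_estimate, pooled_standard_error).
    """
    m = len(estimates)
    pooled = mean(estimates)
    # Within-imputation variance: average of the squared standard errors.
    within = mean([se ** 2 for se in std_errors])
    # Between-imputation variance: sample variance of the m estimates.
    between = variance(estimates)
    total = within + (1 + 1 / m) * between
    return pooled, sqrt(total)


# Invented numbers: one MBF coefficient estimated on five imputed data sets.
est, se = pool_rubin([0.1, 0.2, 0.3, 0.2, 0.2], [0.05] * 5)
```

The pooled standard error is larger than any single-imputation standard error whenever the estimates differ across imputations. This is how the uncertainty carried by the five plausible values enters the final result.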

By the above procedure the effect of MBF on achievement (i.e., the coefficient γ01) was estimated up to eight times per country: two models (with controls and without controls) for each of two subjects (math and science) in each of two grades (4th and 8th). Estimates and standard errors are reported in Tables 3 and 4. To obtain approximate 95% confidence intervals, take the estimate plus/minus two standard errors.

Our main approach has two steps. In the first step we estimate the effect of class-level MBF on student achievement separately in each country, using a two-level analysis (student and class). In the second step we examine how these estimates per country relate to country-level measures of power distance, religiosity, and collectivism.

An alternative approach is to include all countries from the beginning in a three-level analysis (student, class, and country) of student achievement to examine the interaction of class-level MBF and country-level measures. Without controls, the model for a given subject in a given grade would then be

where Yijk denotes the achievement in the given subject for student i in class j in country k; γ000 is the country-level intercept; CLMk is a country-level measure (say, power distance) in country k; MBFjk is the MBF measure for the teacher in the given subject in class j in country k; vk and ujk are random error terms representing unique effects associated with country k and class j, respectively, and rijk is a random error term at the student level. When adding control variables to this model, we also include their interactions with the country-level measure (e.g., we would include a Posjk term as well as the interaction term CLMk∗Posjk).
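From the definitions above, the three-level model without controls plausibly has the following form. Only γ000 is named in the text; the other coefficient labels are assumptions, and whether the original specification also included a random slope for MBF cannot be determined from the description.

```latex
% Three-level model without controls (coefficient labels other than
% \gamma_{000} are assumed)
Y_{ijk} = \gamma_{000} + \gamma_{001}\,\mathrm{CLM}_{k}
        + \gamma_{010}\,\mathrm{MBF}_{jk}
        + \gamma_{011}\,\mathrm{CLM}_{k} \ast \mathrm{MBF}_{jk}
        + v_{k} + u_{jk} + r_{ijk}
```

Under this labeling, γ011 is the cross-level interaction: a positive value means that the MBF effect is larger in countries scoring higher on the country-level measure.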

The advantage of our main approach is that we explicitly obtain country estimates of the MBF effect, thereby allowing easy examination of their consistency across grades, subjects, and model specifications, as well as easy illustration of their relation to a country measure using a scatter plot. The advantage of the alternative approach is that it yields a more accurate estimate of the statistical significance of the latter relation, which in the above model is captured by the interaction term CLMk∗MBFjk. We use the alternative approach only to verify the statistical significance of the interaction. These analyses were performed in the lme4 package (Bates et al., 2014).

In the estimation of MBF effects, all variables were standardized within each country. Therefore, estimated MBF effects are measured in the unit “within-country standard deviation in achievement per within-country standard deviation in MBF.”
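A minimal sketch of within-country standardization follows, assuming the sample standard deviation is used (the text does not say which denominator was applied). The function name and the toy values are hypothetical.

```python
from statistics import mean, stdev


def standardize_within(values_by_country):
    """Convert each country's values to z-scores using that country's own
    mean and (sample) standard deviation."""
    standardized = {}
    for country, values in values_by_country.items():
        m = mean(values)
        s = stdev(values)
        standardized[country] = [(v - m) / s for v in values]
    return standardized


# Invented values for two hypothetical countries on very different scales.
z = standardize_within({"X": [1, 2, 3], "Y": [400, 500, 600]})
```

After this step both countries have identical z-scores, which is why the estimated MBF effects are comparable across countries despite large differences in raw achievement levels.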

Starting with grade 4, the estimated MBF effects per country in Table 3 can be summarized as follows: The mean MBF effect was close to zero in both subjects, regardless of model specification, but there was substantial variation between countries. To illustrate, consider MBF effects for math estimated with controls in grade 4: the mean effect was −0.01, p = 0.47, with a standard deviation of 0.12 and a range from −0.35 to 0.25. Thus, it seems that there are some countries where the MBF effect is positive and other countries where the MBF effect is negative.

Estimated MBF effects per country in grade 8 showed the same pattern, see Table 4. To illustrate, consider MBF effects for math estimated with controls in grade 8: the mean effect was −0.03, p = 0.56, with a standard deviation of 0.22 and a range from −0.60 to 0.43.

Table 5 reports pairwise correlations, with bootstrapped confidence intervals, of the estimated MBF effects against religiosity, power distance, collectivism, and GNI per capita. The table reveals a consistent pattern. Regardless of the method and data used to estimate the MBF effect, it was always positively correlated with religiosity, power distance, and collectivism. The strength of the correlations varied across different estimates, but overall correlations tended to be stronger for religiosity (average correlation = 0.47) and power distance (average correlation = 0.44) than for collectivism (average correlation = 0.33). In Table 5, a few of the confidence intervals include zero, indicating a non-significant relation. However, when we conducted corresponding analyses using the alternative approach described in section 2.3, the interaction between MBF and these culture variables always came out as significantly positive. Thus, we conclude that there are robust positive associations between MBF effects on the one hand and religiosity, power distance, and collectivism on the other hand.
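The text does not specify which bootstrap variant was used for the confidence intervals in Table 5. The sketch below shows one common choice, the percentile bootstrap, in which countries are resampled with replacement and the Pearson correlation is recomputed each time. The function names, default arguments, and toy data are assumptions.

```python
import random
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def bootstrap_ci(x, y, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the correlation."""
    rng = random.Random(seed)
    n = len(x)
    draws = []
    while len(draws) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        xs = [x[i] for i in idx]
        ys = [y[i] for i in idx]
        # Skip degenerate resamples where the correlation is undefined.
        if len(set(xs)) < 2 or len(set(ys)) < 2:
            continue
        draws.append(pearson(xs, ys))
    draws.sort()
    lower = draws[int((alpha / 2) * n_boot)]
    upper = draws[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# Invented data: 20 "countries" with a noisy positive relation.
culture = list(range(20))
effect = [v + 3 * (-1) ** v for v in culture]
r = pearson(culture, effect)
ci_low, ci_high = bootstrap_ci(culture, effect)
```

An interval that excludes zero corresponds to what the text calls a significant relation. With only about 40 to 49 countries per analysis, such intervals are necessarily wide.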

To increase the set of countries and use both grades and both subjects, we calculated an aggregate estimate of the controlled MBF effect by taking the average of all available controlled estimates for a given country. This yielded an aggregate estimate of the controlled MBF effect for 47 different countries. This aggregate estimate correlated with religiosity at r = 0.54, bootstrapped 95% CI [0.30,0.73], with power distance at r = 0.52 [0.24,0.71], and with collectivism at r = 0.37 [0.08,0.63]. Using religiosity, power distance, and collectivism as simultaneous predictors in a multiple linear regression of the aggregate MBF effect, we found they together explained 37% of the total variance, with statistically significant independent effects of both religiosity, β = 0.38, p = 0.015, and power distance, β = 0.39, p = 0.035, but not of collectivism, β = −0.11, p = 0.52.
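The aggregation step can be illustrated as follows: for each country, average whichever of the up to four controlled estimates (two subjects in two grades) are available. The function name and the numbers are hypothetical, and `None` is used here as an assumed marker for a missing estimate.

```python
def aggregate_effect(estimates):
    """Average the available controlled MBF estimates for one country.

    estimates: list of up to four values (math/science in grades 4/8),
    with None marking an estimate that could not be computed.
    Returns None when no estimate is available.
    """
    available = [e for e in estimates if e is not None]
    if not available:
        return None
    return sum(available) / len(available)


# Invented example: grade 8 science is missing for this hypothetical country.
agg = aggregate_effect([0.10, 0.20, 0.30, None])
```

Averaging over available estimates lets a country that took part in only one grade, or that lacks the grade 8 science measure, still contribute a data point to the country-level analysis.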

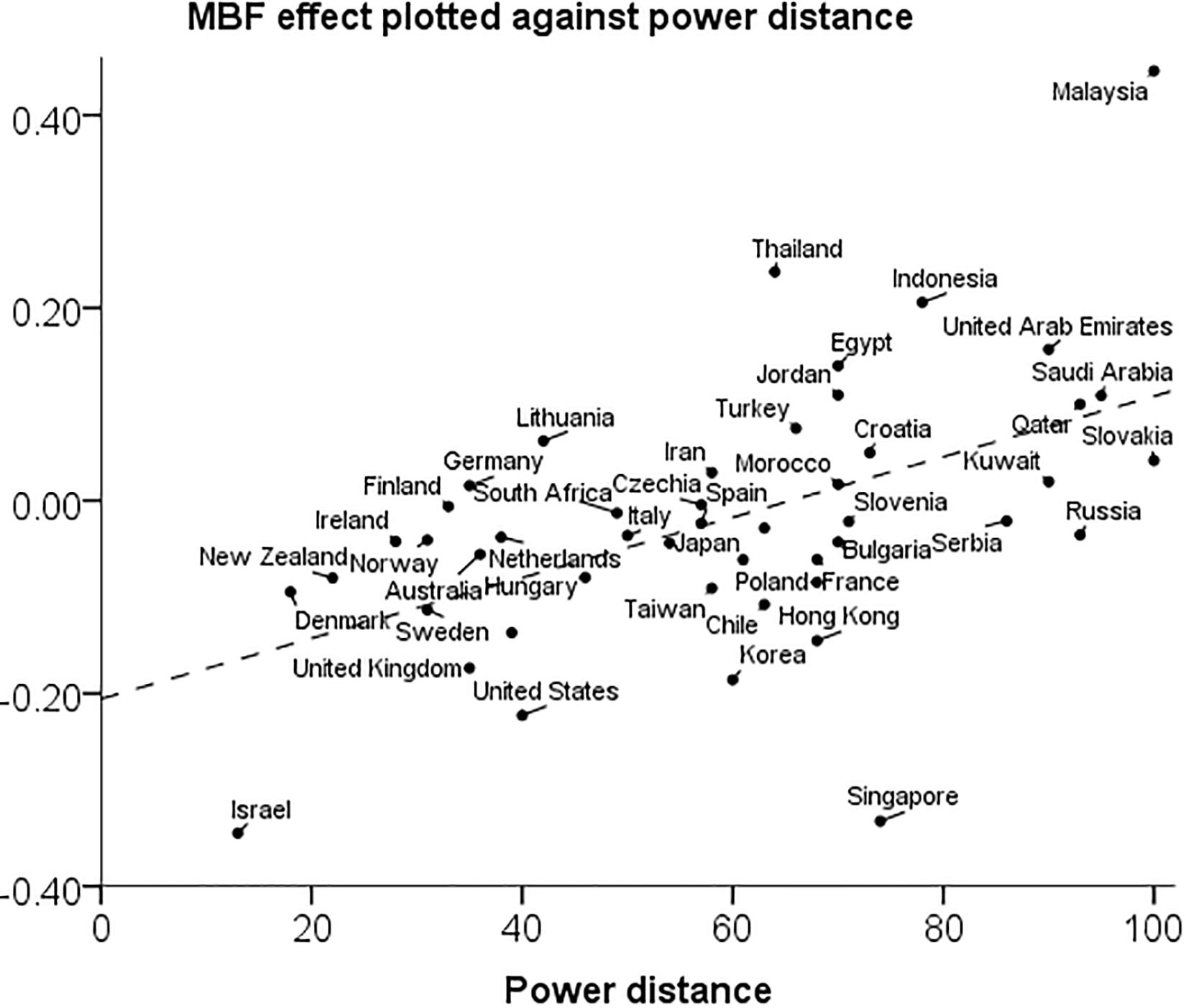

The relation between the aggregate MBF effect and power distance is illustrated by a scatter plot in Figure 2. Note that the regression line fits the data points fairly well, with two exceptions: Singapore and Malaysia are outliers in different directions. If the two outliers are excluded, the correlation between the MBF effect and power distance increases slightly to r = 0.55 [0.38,0.71], and similarly for the correlations with religiosity, r = 0.58 [0.32,0.78], and collectivism, r = 0.46 [0.19,0.72].

Figure 2. Scatter plot of the aggregate controlled MBF effect against power distance in 47 countries.

In this paper we have used data from an international assessment of mathematics and science achievement to examine the effect of teachers giving feedback on students’ mistakes. This is a teaching practice that has both proponents and critics. Our data support both views. In some countries (such as the United Arab Emirates) we found a positive association between teachers’ use of feedback on mistakes and their students’ achievement relative to other students in the same country. In some other countries (such as the United States), the association was negative, at least after controlling for some potential confounders.

Based on prior cross-cultural work on negative feedback in other contexts (e.g., Heine et al., 2001a, b), we hypothesized that culture would moderate the effectiveness of mistake-based feedback. Specifically, it should be more effective in cultures where teachers have more authority. In the absence of a direct measure we examined two other widely available cultural measures, power distance and religiosity, which other scholars have thought to be associated with teachers’ authority (e.g., Hofstede, 1986; Smith, 2013). In line with our hypothesis, we found both measures to be positively correlated with the effectiveness of mistake-based feedback.

We also hypothesized that mistake-based feedback would be more effective in cultures where students are more motivated to adapt to consensually shared standards and are more likely to have a growth mind-set. In the absence of direct measures we examined another widely available cultural measure, collectivism, which other scholars have thought to be associated with these traits (e.g., Littlewood, 1999; Gelfand et al., 2002). Although collectivism was indeed found to be associated with the effect of mistake-based feedback, this association disappeared when we controlled for power distance and religiosity. For this reason we tentatively conclude that teachers’ authority is the main moderator of the effectiveness of mistake-based feedback.

When drawing conclusions from our study, some important limitations must be acknowledged. First, to measure the use of mistake-based feedback we only had access to a single student questionnaire item with a simple four-step scale (on which the vast majority of students used only the third or fourth step). A more complex measure would have been preferable for two reasons. For one thing, mistake-based feedback is a complex phenomenon, the many nuances of which a single item is unable to capture. For another, a single-item measure will typically have poor reliability. A likely consequence of poor reliability of the MBF measure is that the size of MBF effects on achievement will tend to be underestimated. In other words, with a more reliable measure of MBF we should expect to observe larger effects on achievement.

A second limitation is that our results are purely correlational. Within countries, we have assumed that a certain relation between use of feedback on mistakes and student performance is evidence of the effectiveness of the feedback practice. An alternative possibility is that associations reflect teachers adapting their teaching practices to the performance level of the student group. Under this alternative interpretation, our between-countries finding would require that teachers respond to higher student performance levels by increasing the use of feedback on mistakes in high power distance countries, whereas teachers in low power distance countries would respond to high-performers by decreasing their use of such feedback. This interpretation, although equally interesting, seems less plausible to us.

As mentioned above, our simple measure does not allow us to distinguish between different ways of implementing mistake-based feedback. There are many ways of using errors as a springboard for further learning (Borasi, 1994; Boaler, 2016). Thus, it is an open question to what extent the difference in effectiveness between countries lies in teachers implementing mistake-based feedback differently and to what extent it lies in students responding differently to the same feedback. Our findings are consistent with the hypothesis that we gave in the introduction: in countries that are high on power distance and religiosity, young people are more accepting of teachers’ authority and therefore more accepting of negative feedback. However, we acknowledge that in the absence of more direct evidence there may be other explanations of the associations we have found.

Cultural psychologists have long been interested in how negative feedback may work differently in different cultures. Here we have examined how teachers’ feedback on mistakes in math and science class is associated with student achievement in 49 countries. This study differs from classic cross-cultural studies of feedback, both in context and methodology. Still, the finding that feedback on mistakes was associated with better achievement in countries where authority is expected to be more important (namely, countries that are high on power distance and religiosity) is in line with what we expected from prior research. These results highlight the importance of cultural values in educational practice.

The data files used in the analysis can be accessed in the Open Science Framework data repository (https://osf.io/z3h5c/).

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent from the participants’ legal guardian/next of kin was not required to participate in this study in accordance with the national legislation and the institutional requirements.

KE performed the statistical analysis and wrote the manuscript together with JL. All authors contributed to the conception of the study, and read and approved the submitted version. OH and AR provided critical revision inputs.

This research was supported by the Swedish Research Council (Grant Number 2014–2008).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

KE is grateful to Pontus Strimling for helpful discussions.

Bates, D., Mächler, M., Bolker, B., and Walker, S. (2014). Fitting linear mixed-effects models using lme4. J. Stat. Softw. 67, 1–48.

Blömeke, S., Olsen, R. V., and Suhl, U. (2016). “Relation of student achievement to the quality of their teachers and instructional quality,” in Teacher Quality, Instructional Quality and Student Outcomes: Relationships Across Countries, Cohorts and Time, eds T. Nilsen, and J.-E. Gustafsson (Cham: Springer International Publishing), 21–50. doi: 10.1007/978-3-319-41252-8_2

Boaler, J. (2016). Mathematical Mindsets: Unleashing Students’ Potential Through Creative Math, Inspiring Messages and Innovative Teaching. San Francisco, CA: Jossey-Bass.

Borasi, R. (1994). Capitalizing on errors as “springboards for inquiry”: a teaching experiment. J. Res. Math. Educ. 25, 166–208.

Bray, W. S. (2011). A collective case study of the influence of teachers’ beliefs and knowledge on error-handling practices during class discussion of mathematics. J. Res. Math. Educ. 42, 2–38.

Brown, G. T., Kennedy, K. J., Fok, P. K., Chan, J. K. S., and Yu, W. M. (2009). Assessment for student improvement: understanding Hong Kong teachers’ conceptions and practices of assessment. Assess. Educ. Princ. Policy Pract. 16, 347–363. doi: 10.1080/09695940903319737

Carless, D., and Lam, R. (2014). “Developing assessment for productive learning in Confucian-influenced settings,” in Designing Assessment for Quality Learning, eds C. Wyatt-Smith, V. Klenowski, and P. Colbert (Dordrecht: Springer), 167–179. doi: 10.1007/978-94-007-5902-2_11

Chen, W. W., and Wong, Y. L. (2015). Chinese mindset: theories of intelligence, goal orientation and academic achievement in Hong Kong students. Educ. Psychol. 35, 714–725. doi: 10.1080/01443410.2014.893559

Costa, A., and Faria, L. (2018). Implicit theories of intelligence and academic achievement: a meta-analytic review. Front. Psychol. 9:829. doi: 10.3389/fpsyg.2018.00829

Crabtree, S. (2010). Religiosity Highest in World’s Poorest Nations. Available at: http://news.gallup.com/poll/142727/religiosity-highest-world-poorest-nations.aspx (accessed August 31, 2010).

Dalehefte, I. M., Seidel, T., and Prenzel, M. (2012). “Reflecting on learning from errors in school instruction: findings and suggestions from a Swiss-German video study,” in Human Fallibility, eds J. Bauer, and C. Harteis (Dordrecht: Springer), 197–213. doi: 10.1007/978-90-481-3941-5_12

Dweck, C. S., Chiu, C. Y., and Hong, Y. Y. (1995). Implicit theories: elaboration and extension of the model. Psychol. Inq. 6, 322–333. doi: 10.1207/s15327965pli0604_12

Eriksson, K., Helenius, O., and Ryve, A. (2019). Using TIMSS items to evaluate the effectiveness of different instructional practices. Instr. Sci. 47, 1–18. doi: 10.1007/s11251-018-9473-1

Fyfe, E. R., and Rittle-Johnson, B. (2017). Mathematics practice without feedback: a desirable difficulty in a classroom setting. Instr. Sci. 45, 177–194. doi: 10.1007/s11251-016-9401-1

Gagné, E. D., Crutcher, R. J., Anzelc, J., Geisman, C., Hoffman, V. D., Schutz, P., et al. (1987). The role of student processing of feedback in classroom achievement. Cogn. Instr. 4, 167–186. doi: 10.1207/s1532690xci0403_2

Gelfand, M. J., Higgins, M., Nishii, L. H., Raver, J. L., Dominguez, A., Murakami, F., et al. (2002). Culture and egocentric perceptions of fairness in conflict and negotiation. J. Appl. Psychol. 87, 833–845. doi: 10.1037//0021-9010.87.5.833

Harks, B., Rakoczy, K., Hattie, J., Besser, M., and Klieme, E. (2014). The effects of feedback on achievement, interest and self-evaluation: the role of feedback’s perceived usefulness. Educ. Psychol. 34, 269–290. doi: 10.1080/01443410.2013.785384

Hatano, G., and Inagaki, K. (1998). “Cultural contexts of schooling revisited: a review of the learning gap from a cultural psychology perspective,” in Global Prospects for Education, Development, Culture and Schooling, eds S. G. Paris, and H. M. Wellman (Washington, DC: American Psychological Association), 79–104. doi: 10.1037/10294-003

Heine, S. J., Kitayama, S., and Lehman, D. R. (2001a). Cultural differences in self-evaluation: Japanese readily accept negative self-relevant information. J. Cross Cult. Psychol. 32, 434–443. doi: 10.1177/0022022101032004004

Heine, S. J., Kitayama, S., Lehman, D. R., Takata, T., Ide, E., Leung, C., et al. (2001b). Divergent consequences of success and failure in Japan and North America: an investigation of self-improving motivations and malleable selves. J. Pers. Soc. Psychol. 81, 599–615. doi: 10.1037/0022-3514.81.4.599

Hofstede, G. H. (1986). Cultural differences in teaching and learning. Int. J. Intercult. Relat. 10, 301–320.

Hofstede, G. H. (2001). Culture’s Consequences: Comparing Values, Behaviors, Institutions and Organizations Across Nations. Beverly Hills, CA: Sage.

Holtbrügge, D., and Mohr, A. T. (2010). Cultural determinants of learning style preferences. Acad. Manag. Learn. Educ. 9, 622–637. doi: 10.5465/amle.2010.56659880

Joy, S., and Kolb, D. A. (2009). Are there cultural differences in learning style? Int. J. Intercult. Relat. 33, 69–85.

Kazemi, E., and Stipek, D. (2001). Promoting conceptual thinking in four upper-elementary mathematics classrooms. Elem. Sch. J. 102, 59–80. doi: 10.1086/499693

Littlewood, W. (1999). Defining and developing autonomy in East Asian contexts. Appl. Linguist. 20, 71–94. doi: 10.1093/applin/20.1.71

Mangels, J. A., Butterfield, B., Lamb, J., Good, C. D., and Dweck, C. S. (2006). Why do beliefs about intelligence influence learning success? A social cognitive neuroscience model. Soc. Cogn. Affect. Neurosci. 1, 75–86. doi: 10.1093/scan/nsl013

Markus, H. R., and Kitayama, S. (1991). Culture and the self: implications for cognition, emotion, and motivation. Psychol. Rev. 98, 224–253. doi: 10.1037/0033-295x.98.2.224

Martin, M. O., Mullis, I. V. S., and Hooper, M. (eds) (2016). Methods and Procedures in TIMSS 2015. Chestnut Hill, MA: Boston College.

Moser, J. S., Schroder, H. S., Heeter, C., Moran, T. P., and Lee, Y. H. (2011). Mind your errors: evidence for a neural mechanism linking growth mind-set to adaptive posterror adjustments. Psychol. Sci. 22, 1484–1489. doi: 10.1177/0956797611419520

National Council of Teachers of Mathematics (2000). Principles and Standards for School Mathematics. Reston, VA: National Council of Teachers of Mathematics.

O’Connell, A. A., and McCoach, D. B. (eds) (2008). Multilevel Modeling of Educational Data. Greenwich, CT: Information Age.

Parboteeah, K. P., Hoegl, M., and Cullen, J. B. (2008). Managers’ gender role attitudes: a country institutional profile approach. J. Int. Bus. Stud. 39, 795–813. doi: 10.1057/palgrave.jibs.8400384

Santagata, R. (2004). “Are you joking or are you sleeping?” Cultural beliefs and practices in Italian and US teachers’ mistake-handling strategies. Linguist. Educ. 15, 141–164. doi: 10.1016/j.linged.2004.12.002

Schleppenbach, M., Flevares, L. M., Sims, L., and Perry, M. (2007). Teacher responses to student mistakes in Chinese and U.S. mathematics classrooms. Elem. Sch. J. 108, 131–147. doi: 10.1086/525551

Smith, M. U. (2013). The role of authority in science and religion with implications for science teaching and learning. Sci. Educ. 22, 605–634. doi: 10.1007/s11191-012-9469-1

Stevenson, H. W., and Stigler, J. W. (1992). The Learning Gap: Why Our Schools are Failing and What We Can Learn from Japanese and Chinese Education. New York, NY: Touchstone.

Thanh Pham, T. H., and Renshaw, P. (2015). Formative assessment in Confucian heritage culture classrooms: activity theory analysis of tensions, contradictions and hybrid practices. Assess. Eval. High. Educ. 40, 45–59. doi: 10.1080/02602938.2014.886325

Tulis, M. (2013). Error management behavior in classrooms: teachers’ responses to student mistakes. Teach. Teach. Educ. 33, 56–68. doi: 10.1016/j.tate.2013.02.003

Wang, T., and Murphy, J. (2004). “An examination of coherence in a Chinese mathematics classroom,” in How Chinese Learn Mathematics: Perspectives from Insiders, eds L. Fan, N.-Y. Wong, J. Cai, and S. Li (Hackensack, NJ: World Scientific), 107–123. doi: 10.1142/9789812562241_0004

Keywords: negative feedback, power distance, religiosity, cultural values, effective instruction, mistakes

Citation: Eriksson K, Lindvall J, Helenius O and Ryve A (2020) Cultural Variation in the Effectiveness of Feedback on Students’ Mistakes. Front. Psychol. 10:3053. doi: 10.3389/fpsyg.2019.03053

Received: 15 August 2019; Accepted: 24 December 2019;

Published: 21 January 2020.

Edited by:

Ronnel B. King, The Education University of Hong Kong, Hong Kong

Reviewed by:

Yulia Kuzmina, Psychological Institute of Russian Academy of Education, Russia

Copyright © 2020 Eriksson, Lindvall, Helenius and Ryve. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kimmo Eriksson

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
