- ¹ Educational Quality and Evaluation, DIPF - Leibniz Institute for Research and Information in Education, Frankfurt, Germany

- ² Centre for International Student Assessment (ZIB), Frankfurt, Germany

- ³ Centre for Educational Measurement (CEMO), University of Oslo, Oslo, Norway

- ⁴ Cognitive Science & Assessment, University of Luxembourg, Esch-sur-Alzette, Luxembourg

Editorial on the Research Topic

Advancements in Technology-Based Assessment: Emerging Item Formats, Test Designs, and Data Sources

Technology has become an indispensable tool for educational and psychological assessment. Individual researchers and large-scale assessment programs alike increasingly use digital devices (e.g., laptops, tablets, and smartphones) to collect behavioral data beyond the mere correctness of item responses. In this way, technology is driving innovation in item and test design, test delivery, data collection and analysis, and the reporting of test results.

The aim of this Research Topic is to present recent developments in technology-based assessment and the advances in knowledge associated with them. Our focus is on cognitive assessments, including the measurement of abilities, competences, knowledge, and skills, but the Research Topic also covers non-cognitive aspects of assessment (Rausch et al.; Simmering et al.). In the area of (cognitive) assessment, the innovations driven by technology are manifold, and the topics covered in this collection are, accordingly, broad and comprehensive. Digital assessments facilitate the creation of new types of stimuli and response formats that were out of reach for paper-based assessments; for instance, interactive simulations may include multimedia elements as well as virtual or augmented reality (Cipresso et al.; de-Juan-Ripoll et al.). These types of assessments also make it possible to widen the construct coverage of an assessment; for instance, by eliciting and making visible certain problem-solving strategies that represent new forms of problem solving (Han et al.; Kroeze et al.). Moreover, technology allows for the automated generation of items based on specific item models (Shin et al.). Such items can be assembled into tests more flexibly than is possible in paper-and-pencil testing, even on the fly; for instance, by tailoring item difficulty to the individual test-taker's ability (adaptive testing) while ensuring that multiple content constraints are met (Born et al.; Zhang et al.). As a prerequisite for adaptive testing, or to reduce the burden on raters who code item responses manually, computers enable the automatic scoring of constructed responses; for instance, text responses can be coded automatically using natural language processing and text mining (He et al.; Horbach and Zesch).

Technology-based assessments provide not only response data (e.g., correct vs. incorrect responses) but also process data (e.g., frequencies and sequences of test-taking strategies, including navigation behavior) that reflect the course of solving a test item and give information on the path toward the solution (Han et al.). Process data have been used successfully, among other purposes, to evaluate and explain data quality (Lindner et al.), to define process-oriented latent variables (De Boeck and Scalise), to improve measurement precision, and to address substantive research questions (Naumann). Large-scale response and process data also call for new concepts for storing and managing data, as well as for data-driven computational approaches that complement traditional psychometrics (von Davier et al.).

The contributions to this Research Topic address, from various perspectives, how technology can further improve and enhance educational and psychological assessment. Regarding educational testing, the collection includes research on the assessment of learning, that is, the summative assessment of learning outcomes (Molnár and Csapó), as well as a number of conceptual and empirical studies on assessment for learning, that is, formative assessment that provides feedback to support the learning process (Arieli-Attali et al.; Blaauw et al.; Csapó and Molnár; den Ouden et al.; Kroeze et al.).

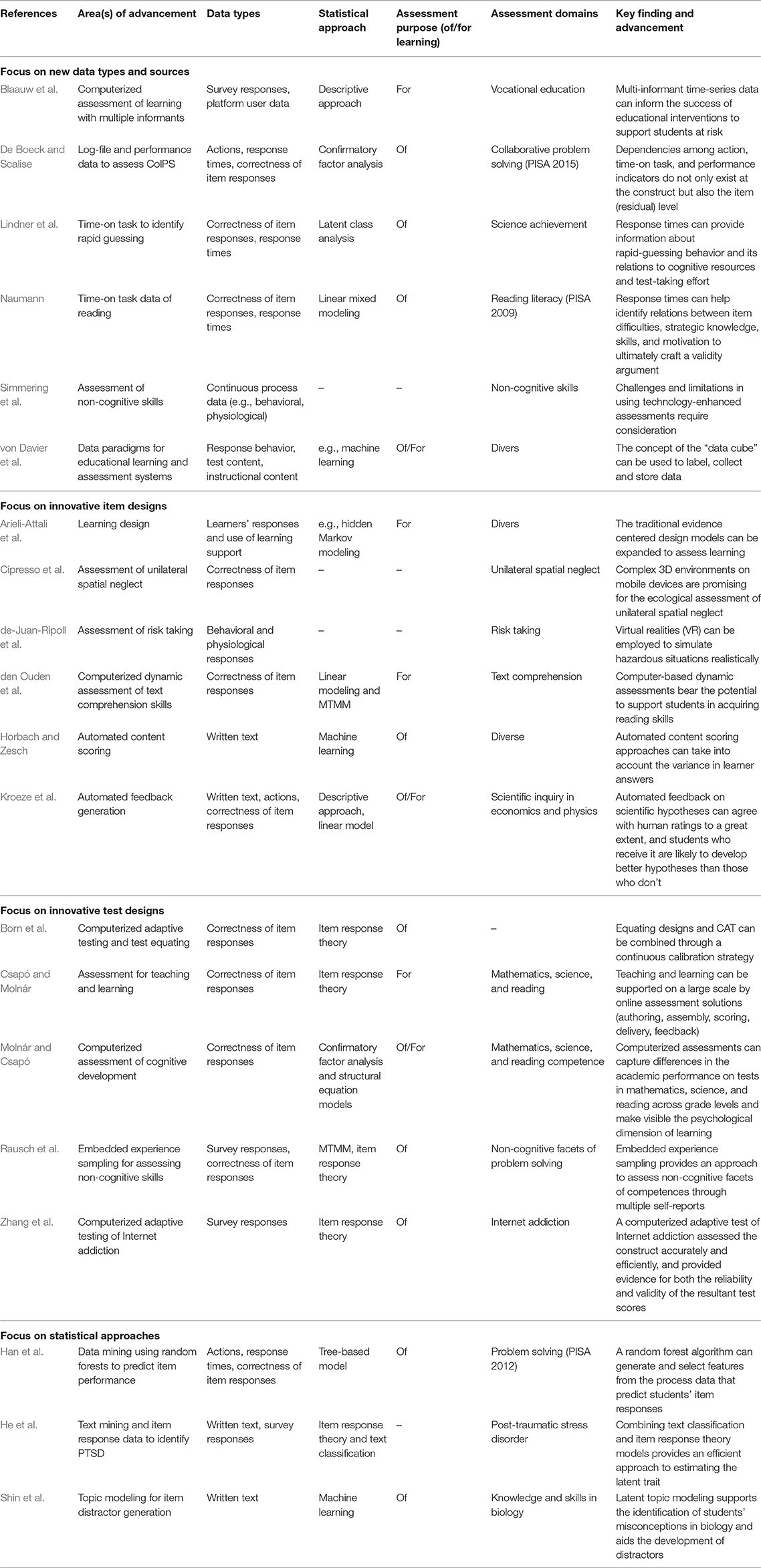

Table 1 gives an overview of all the papers included in this Research Topic and summarizes their key features. Reflecting the scope of the Research Topic, we used four major categories to classify the papers: (1) papers focusing on the use of new data types and sources, (2) innovative item designs, (3) innovative test designs, and (4) statistical approaches. Although some papers could have been assigned to more than one category, we assigned each paper to a single category based on its core contribution. The papers' key findings and advancements represent the current state of the art in technology-based assessment in (standardized) educational testing, and, as topic editors, we were happy to receive such a rich collection of papers with diverse foci.

Regarding the future of technology-based assessment, we expect that inferences about an individual's or learner's knowledge, skills, or other attributes will increasingly be based on empirical (multimodal) data from less standardized or non-standardized settings. Typical examples are stealth assessments in digital games (Shute and Ventura, 2013; Shute, 2015), digital learning environments (Nguyen et al., 2018), or online activities (Kosinski et al., 2013). Such unobtrusive, continuous assessments will further extend the traditional assessment paradigm and broaden our understanding of what an item, a test, and the empirical evidence for inferring attributes can be (Mislevy, 2019). Major challenges lie in identifying and synthesizing evidence from the situations individuals encounter in these non-standardized settings, as well as in validating the interpretation of the derived measures. This Research Topic provides ample input on these questions. We hope that you will enjoy reading the contributions as much as we did.

Author Contributions

All authors listed have made a substantial, direct and intellectual contribution to the work, and approved it for publication.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work was funded by the Centre for International Student Assessment (ZIB) in Germany. We thank all authors who have contributed to this Research Topic and the reviewers for their valuable feedback on the manuscript.

References

Kosinski, M., Stillwell, D., and Graepel, T. (2013). Private traits and attributes are predictable from digital records of human behavior. Proc. Natl. Acad. Sci. U.S.A. 110, 5802–5805. doi: 10.1073/pnas.1218772110

Mislevy, R. (2019). “On integrating psychometrics and learning analytics in complex assessments,” in Data Analytics and Psychometrics, eds H. Jiao, R. W. Lissitz, and A. van Wie (Charlotte, NC: Information Age Publishing), 1–52.

Nguyen, Q., Huptych, M., and Rienties, B. (2018). “Linking students' timing of engagement to learning design and academic performance,” in Proceedings of the 8th International Conference on Learning Analytics and Knowledge (Sydney, NSW).

Shute, V. J. (2015). “Stealth assessment,” in The SAGE Encyclopedia of Educational Technology, ed J. Spector (Thousand Oaks, CA: SAGE Publications, Inc.), 675–676.

Keywords: technology-based assessment, item design, test design, automatic scoring, process data, assessment of/for learning

Citation: Goldhammer F, Scherer R and Greiff S (2020) Editorial: Advancements in Technology-Based Assessment: Emerging Item Formats, Test Designs, and Data Sources. Front. Psychol. 10:3047. doi: 10.3389/fpsyg.2019.03047

Received: 19 December 2019; Accepted: 23 December 2019;

Published: 20 January 2020.

Edited and reviewed by: Yenchun Jim Wu, National Taiwan Normal University, Taiwan

Copyright © 2020 Goldhammer, Scherer and Greiff. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Frank Goldhammer, goldhammer@dipf.de
