- 1Laureate Institute for Brain Research, Tulsa, OK, United States

- 2Wellcome Centre for Human Neuroimaging, Institute of Neurology, University College London, London, United Kingdom

The ability to conceptualize and understand one’s own affective states and responses – or “emotional awareness” (EA) – is reduced in multiple psychiatric populations; it is also positively correlated with a range of adaptive cognitive and emotional traits. While a growing body of work has investigated the neurocognitive basis of EA, the neurocomputational processes underlying this ability have received limited attention. Here, we present a formal Active Inference (AI) model of emotion conceptualization that can simulate the neurocomputational (Bayesian) processes associated with learning about emotion concepts and inferring the emotions one is feeling in a given moment. We validate the model and its inherent constructs by showing (i) that it can successfully acquire a repertoire of emotion concepts in its “childhood,” (ii) that it can acquire new emotion concepts in synthetic “adulthood,” and (iii) that these learning processes depend on early experiences, environmental stability, and habitual patterns of selective attention. These results offer a proof of principle that cognitive-emotional processes can be modeled formally, and highlight the potential for both theoretical and empirical extensions of this line of research on emotion and emotional disorders.

Introduction

The ability to conceptualize and understand one’s affective responses has become the topic of a growing body of empirical work (McRae et al., 2008; Smith et al., 2015, 2017b,c, 2018c,d,e, 2019a,c; Wright et al., 2017). This body of work has also given rise to theoretical models of its underlying cognitive and neural basis (Wilson-Mendenhall et al., 2011; Lane et al., 2015; Smith and Lane, 2015, 2016; Barrett, 2017; Kleckner et al., 2017; Panksepp et al., 2017; Smith et al., 2018b). Attempts to operationalize this cognitive-emotional ability have led to a range of overlapping constructs, including trait emotional awareness (Lane and Schwartz, 1987), emotion differentiation or granularity (Kashdan and Farmer, 2014; Kashdan et al., 2015), and alexithymia (Bagby et al., 1994a,b).

This work is motivated to a large degree by the clinical relevance of emotion conceptualization abilities. In the literature on the construct of emotional awareness, for example, lower levels of conceptualization ability have been associated with several psychiatric disorders as well as poorer physical health (Levine et al., 1997; Berthoz et al., 2000; Bydlowski et al., 2005; Donges et al., 2005; Lackner, 2005; Subic-Wrana et al., 2005, 2007; Frewen et al., 2008; Baslet et al., 2009; Consoli et al., 2010; Moeller et al., 2014); conversely, higher ability levels have been associated with a range of adaptive emotion-related traits and abilities (Lane et al., 1990, 1996, 2000; Ciarrochi et al., 2003; Barchard and Hakstian, 2004; Bréjard et al., 2012). Multiple evidence-based psychotherapeutic modalities also aim to improve emotion understanding as a central part of psychoeducation (Hayes and Smith, 2005; Barlow et al., 2016).

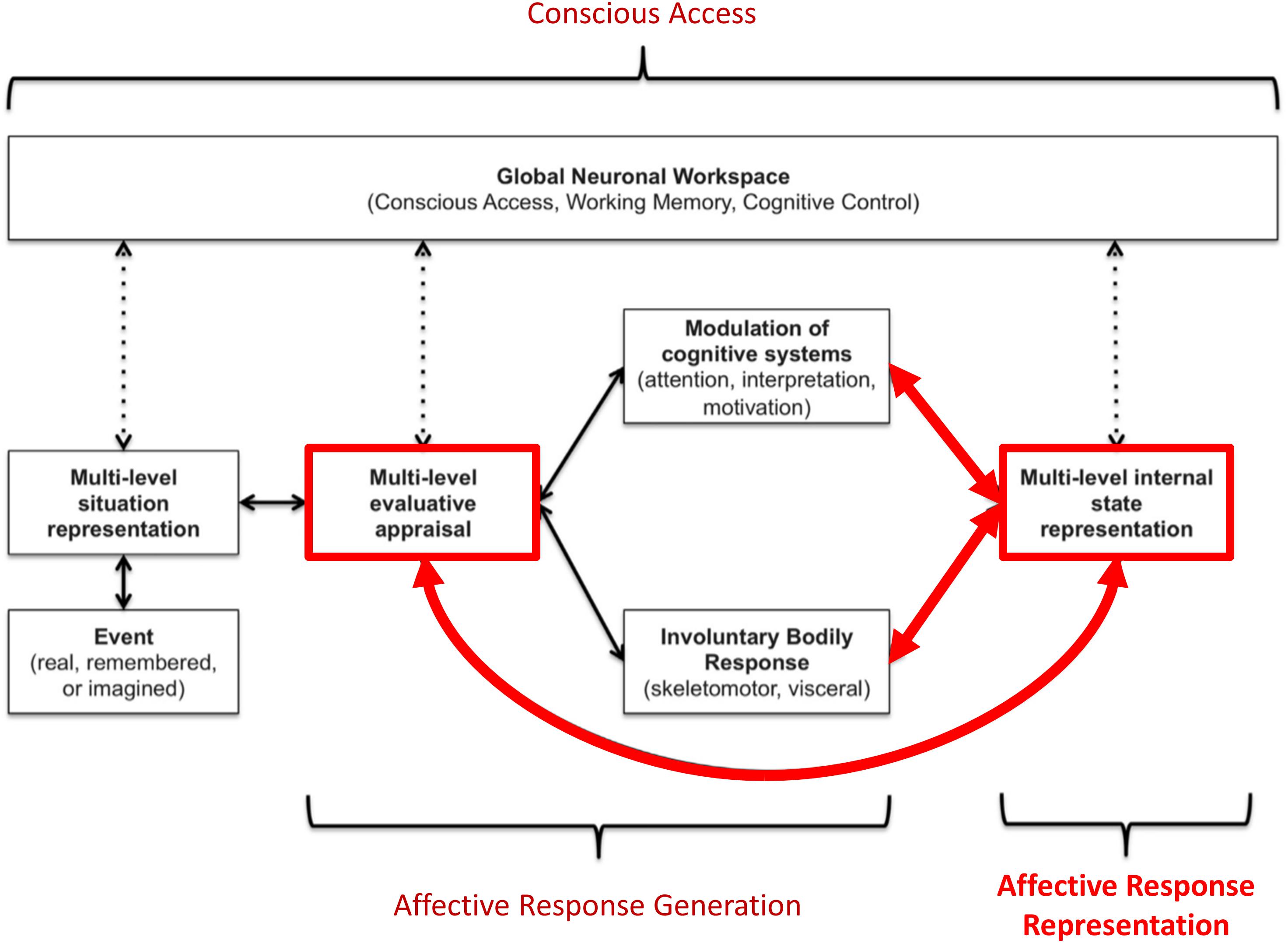

While there are a number of competing views on the nature of emotions, most (if not all) accept that emotion concepts must be acquired through experience. For example, “basic emotions” theories hold that emotion categories like sadness and fear each have distinct neural circuitry, but do not deny that knowledge about these emotions must be learned (Panksepp and Biven, 2012). Constructivist views instead hold that emotion categories do not have a 1-to-1 relationship to distinct neural circuitry, and that emotion concept acquisition is necessary for emotional experience (Barrett, 2017). While these views focus on understanding the nature of emotions themselves, we have recently proposed a neurocognitive model – termed the “three-process model” (TPM; Smith et al., 2018b, 2019a; Smith, 2019) of emotion episodes – with a primary focus on accounting for individual differences in emotional awareness. This model characterizes a range of emotion-related processes that could contribute to trait differences in both the learning and deployment of emotion concepts in order to understand one’s own affective responses (and in the subsequent use of these concepts to guide adaptive decision-making). The TPM distinguishes the following three broadly defined processes (see Figure 1):

Figure 1. Graphical illustration of the three-process model of emotion episodes (Smith et al., 2018b, 2019a,b; Smith, 2019). Here an event (either internal or external, and either real, remembered, or imagined) is represented in the brain at various levels of abstraction. This complex set of representations is evaluated in terms of its significance to the organism at both implicit (e.g., low-level conditioned or unconditioned responses) and explicit (e.g., needs, goals, values) levels, leading to predictions about the cognitive, metabolic, and behavioral demands of the situation. These predictions initiate an “affective response” with both peripheral and central components. This includes quick, involuntary autonomic and skeletomotor responses (e.g., heart rate changes, facial expression changes) as well as involuntary shifts in the direction of cognitive resources (e.g., attention and interpretation biases, action selection biases). This multicomponent affective response is represented centrally at a perceptual and conceptual level (e.g., valenced bodily sensations and interpretations of those responses as corresponding to particular emotions). Representations of events, evaluations, bodily sensations, emotion concepts (etc.) can then be attended to and gated into working memory (“conscious access”). If gated into and held in working memory, these representations can be integrated with explicit goals, reflected upon, and used to guide deliberative, goal-directed decision-making. The thick red arrows highlight the processes we explicitly model in this paper.

1. Affective response generation: a process in which somatovisceral and cognitive states are automatically modulated in response to an affective stimulus (whether real, remembered, or imagined) in a context-dependent manner, based on an (often implicit) appraisal of the significance of that stimulus for the survival and goal-achievement of the individual (i.e., predictions about the cognitive, metabolic, and behavioral demands of the situation).

2. Affective response representation: a process in which the somatovisceral component of an affective response is subsequently perceived via afferent sensory processing, and then conceptualized as a particular emotion (e.g., sadness, anger, etc.) in consideration of all other available sources of information (e.g., stimulus/context information, current thoughts/beliefs about the situation, etc.).

3. Conscious access: a process in which the representations of somatovisceral percepts and emotion concept representations may or may not enter and be held in working memory – constraining the use of this information in goal-directed decision-making (e.g., verbal reporting, selection of voluntary emotion regulation strategies, etc.).

The TPM has also proposed a tentative mapping to the brain in terms of interactions between large-scale neural networks serving domain-general cognitive functions. Some support for this proposal has been found within recent neuroimaging studies (Smith et al., 2017b,c, 2018a,c,d,e). However, the neurocomputational implementation of these processes has not been thoroughly considered. The computational level of description offers the promise of providing more specific and mechanistic insights, which could potentially be exploited to inform and improve pharmacological and psychotherapeutic interventions. While previous theoretical work has applied active inference concepts to emotional phenomena (Joffily and Coricelli, 2013; Seth, 2013; Barrett and Simmons, 2015; Seth and Friston, 2016; Smith et al., 2017d, 2019e; Clark et al., 2018), no formal modeling of emotion concept learning has yet been performed. In this manuscript, we aim to take the first steps in constructing an explicit computational model of the acquisition and deployment of emotion concept knowledge (i.e., affective response representation) as described within the TPM (subsequent work will focus on affective response generation and conscious access processes; see Smith et al., 2019b). Specifically, we present a simple Active Inference model (Friston et al., 2016, 2017a) of emotion conceptualization, formulated as a Markov Decision Process. We then outline some initial insights afforded by simulations using this model.

In what follows, we first provide a brief review of active inference, placing special emphasis on deep generative models that afford the capacity to explain multimodal (i.e., interoceptive, proprioceptive, and exteroceptive) sensations that are characteristic of emotional experience. We then introduce a particular model of emotion inference that is sufficiently nuanced to produce synthetic emotional processes but sufficiently simple to be understood from a “first principles” account. Next, we establish the validity of this model using numerical analyses of emotion concept learning during (synthetic) neurodevelopment. We conclude with a brief discussion of the implications of this work, particularly for future applications.

An Active Inference Model of Emotion Conceptualization

A Primer on Active Inference

Active Inference (AI) starts from the assumption that the brain is an inference machine that approximates optimal probabilistic (Bayesian) belief updating across all biopsychological domains (e.g., perception, decision-making, motor control, etc.). AI postulates that the brain embodies an internal model of the world (including the body) that is “generative” in the sense that it is able to simulate the sensory data that it should receive if its model of the world is correct. This simulated (predicted) sensory data can be compared to actual observations, and deviations between predicted and observed sensations can then be used to update the model. On short timescales (e.g., a single trial in a perceptual decision-making task) this updating corresponds to perception, whereas on longer timescales it corresponds to learning (i.e., updating expectations about what will be observed on subsequent trials). One can see these processes as ensuring that the generative model (embodied by the brain) remains an accurate model of the world (Conant and Ashby, 1970).
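As a minimal sketch of the perception step just described, assuming a toy discrete model with two hidden states and two possible observations (all numbers are hypothetical), the generative model's likelihood can be used both to predict sensory data and to update beliefs once an observation arrives:

```python
import numpy as np

# Hypothetical toy generative model: A[o, s] = p(o | s); each column sums to 1.
A = np.array([[0.9, 0.2],
              [0.1, 0.8]])
prior = np.array([0.5, 0.5])          # p(s): beliefs before the observation

# Prediction: the sensory data the model expects, p(o) = sum_s p(o | s) p(s)
predicted_obs = A @ prior

def perceive(obs_index, prior, A):
    """Short-timescale belief updating (perception) via Bayes' rule."""
    joint = A[obs_index, :] * prior   # p(o, s) for the observed outcome
    evidence = joint.sum()            # p(o): probability of this observation under the model
    return joint / evidence, evidence

posterior, evidence = perceive(0, prior, A)
surprise = -np.log(evidence)          # large when the observation was poorly predicted
```

Learning, on the longer timescale, would instead adjust `A` itself across trials; a count-based version of that is sketched further below.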

Action (be it skeletomotor, visceromotor, or cognitive action) can be cast in similar terms. For example, actions can be chosen to resolve uncertainty about variables within a generative model (i.e., sampling from domains in which the model does not make precise predictions). This can prevent future deviations from predicted outcomes. In addition, the brain must continue to make certain predictions simply in order to survive. For example, if the brain did not in some sense continue to “expect” to observe certain amounts of food, water, shelter, social support, and a range of other quantities, then it would cease to exist (McKay and Dennett, 2009), as it would not pursue the behaviors leading to the realization of these expectations [cf. “the optimism bias” (Sharot, 2011)]. Thus, there is a deep sense in which the brain must continually seek out observations that support – or are internally consistent with – its own continued existence. As a result, decision-making can be cast as a process in which the brain infers the sets of actions (policies) that would lead to observations most consistent with its own survival-related expectations (i.e., its “prior preferences”). Mathematically, this can be described as selecting policies that maximize a quantity called “Bayesian model evidence” – that is, the probability that sensory data would be observed under a given model. In other words, because the brain is itself a model of the world, action can be understood as a process by which the brain seeks out evidence for itself – sometimes known as self-evidencing (Hohwy, 2016).

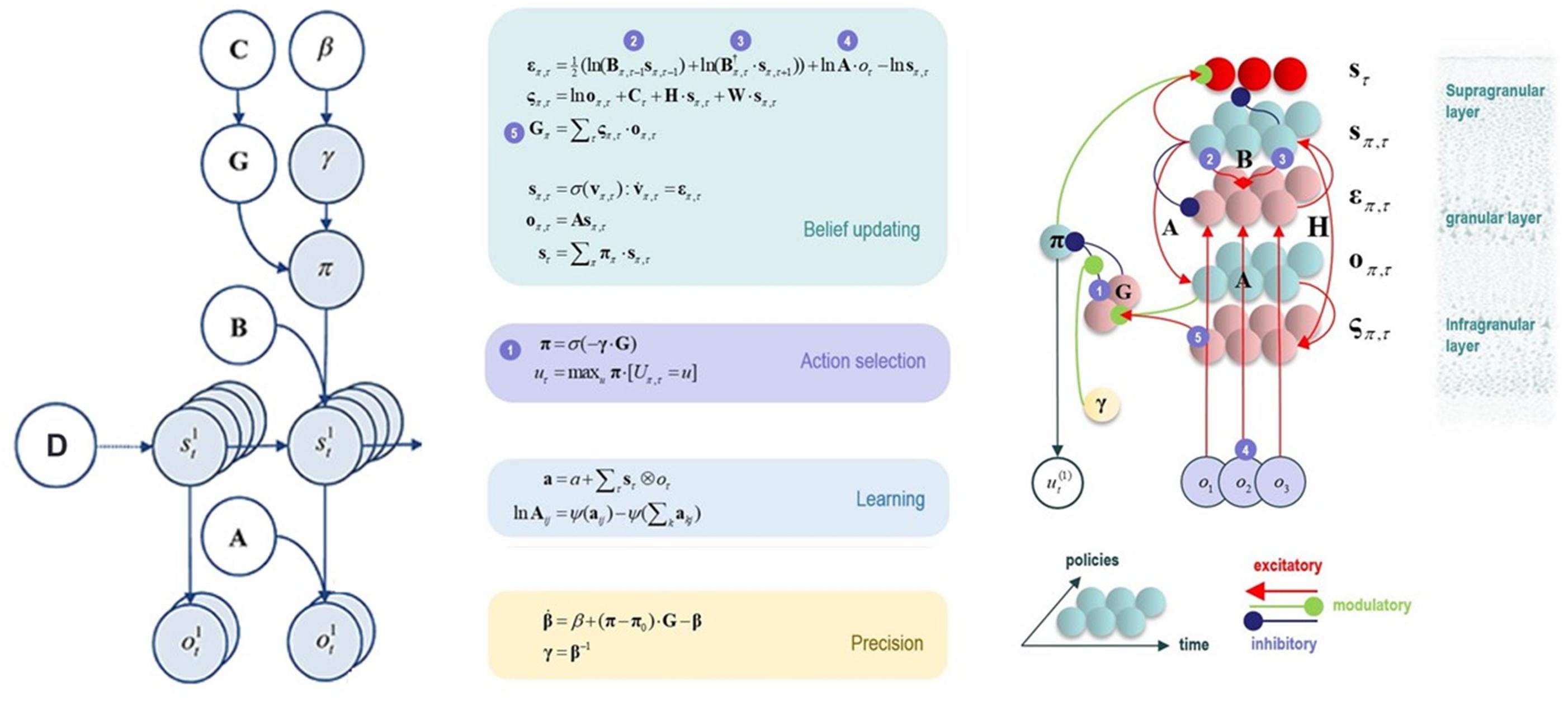

In realistic settings, computing model evidence directly is mathematically intractable, so the brain must use an approximation. AI proposes that the brain instead computes a statistical quantity called (variational) free energy, which, unlike model evidence, is tractable to compute. Crucially, free energy is an upper bound on negative log model evidence (i.e., surprise), such that minimizing free energy approximately maximizes model evidence. By extension, in decision-making an agent can evaluate the expected free energy of the alternative policies she could select – that is, the free energy of future trajectories under each policy (i.e., based on predicted future outcomes, given the future states that would be expected under each policy). Therefore, decision-making will be approximately (Bayes) optimal if it operates by inferring (and enacting) the policy that minimizes expected free energy – and thereby maximizes evidence for the brain’s internal model. Interestingly, expected free energy can be decomposed into terms reflecting uncertainty and prior preferences, respectively. This decomposition explains why agents that minimize expected free energy will first select exploratory policies that minimize uncertainty in a new environment (often called the “epistemic value” component of expected free energy). Once uncertainty is resolved, the agent then selects policies that exploit that environment to maximize her prior preferences (often called the “pragmatic value” component of expected free energy). The formal mathematical basis for AI has been detailed elsewhere (Friston et al., 2017a), and the reader is referred there for a full mathematical treatment (also see Figure 2 for some additional detail).
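The bound can be checked numerically. The sketch below uses a hypothetical two-state, two-outcome model and computes the variational free energy F = E_q[ln q(s) - ln p(o, s)] for two candidate approximate posteriors q. F equals surprise (-ln p(o)) when q is the exact posterior and exceeds it otherwise, so driving F down both approximates Bayesian inference and tightens the bound on model evidence.

```python
import numpy as np

A = np.array([[0.9, 0.2],
              [0.1, 0.8]])      # hypothetical likelihood p(o | s)
prior = np.array([0.5, 0.5])   # hypothetical prior p(s)
obs = 0                        # index of the observed outcome

def free_energy(q, obs, A, prior):
    """F = E_q[ln q(s) - ln p(o, s)] for a single observed outcome index."""
    log_joint = np.log(A[obs, :]) + np.log(prior)
    return float(np.sum(q * (np.log(q) - log_joint)))

evidence = float(A[obs, :] @ prior)      # p(o) = sum_s p(o | s) p(s)
surprise = -np.log(evidence)             # negative log model evidence

exact_q = A[obs, :] * prior / evidence   # exact posterior p(s | o)
rough_q = np.array([0.5, 0.5])           # an arbitrary, poorer approximation

F_exact = free_energy(exact_q, obs, A, prior)   # equals surprise
F_rough = free_energy(rough_q, obs, A, prior)   # strictly larger than surprise
```

The gap `F_rough - surprise` is the KL divergence between `rough_q` and the exact posterior, which is why minimizing F improves the approximation.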

Figure 2. This figure illustrates the mathematical framework of active inference and associated neural process theory used in the simulations described in this paper. (Left) Illustration of the Markov decision process formulation of active inference. The generative model is here depicted graphically, such that arrows indicate dependencies between variables. Here observations (o) depend on hidden states (s), as specified by the A matrix, and those states depend on both previous states (as specified by the B matrix, or the initial states specified by the D matrix) and the policies (π) selected by the agent. The probability of selecting a particular policy in turn depends on the expected free energy (G) of each policy with respect to the prior preferences (C) of the agent. The degree to which expected free energy influences policy selection is also modulated by a prior policy precision parameter (γ), which is in turn dependent on beta (β) – where higher values of beta promote more randomness in policy selection (i.e., less influence of the differences in expected free energy across policies). (Middle/Right) The differential equations in the middle panel approximate Bayesian belief updating within the graphical model depicted on the left via a gradient descent on free energy (F). The right panel also illustrates the proposed neural basis by which neurons making up cortical columns could implement these equations. The equations have been expressed in terms of two types of prediction errors. State prediction errors (ε) signal the difference between the (logarithms of) expected states (s) under each policy and time point and the corresponding predictions based upon outcomes/observations (A matrix) and the (preceding and subsequent) hidden states (B matrix, and, although not written, the D matrix for the initial hidden states at the first time point). 
These represent likelihood and prior terms, respectively – also marked as messages 2, 3, and 4, which are depicted as being passed between neural populations (colored balls) via particular synaptic connections in the right panel (note: the dot notation indicates transposed matrix multiplication within the update equations for prediction errors). These (prediction error) signals drive depolarization (v) in those neurons encoding hidden states (s), where the probability distribution over hidden states is then obtained via a softmax (normalized exponential) function (σ). Outcome prediction errors (ς) instead signal the difference between the (logarithms of) expected observations (o) and those predicted under prior preferences (C). This term additionally considers the expected ambiguity or conditional entropy (H) between states and outcomes as well as a novelty term (W) reflecting the degree to which beliefs about how states generate outcomes would change upon observing different possible state-outcome mappings (computed from the A matrix). This prediction error is weighted by the expected observations to evaluate the expected free energy (G) for each policy (π), conveyed via message 5. These policy-specific free energies are then integrated to give the policy expectations via a softmax function, conveyed through message 1. Actions at each time point (u) are then chosen out of the possible actions under each policy (U) weighted by the value (negative expected free energy) of each policy. In our simulations, the agent learned associations between hidden states and observations (A) via a process in which counts (a) were accumulated, reflecting the number of times the agent observed a particular outcome when she believed that she occupied each possible hidden state. Although not displayed explicitly, learning prior expectations over initial hidden states (D) is similarly accomplished via accumulation of concentration parameters (d). 
These prior expectations reflect counts of how many times the agent believes she previously occupied each possible initial state. Concentration parameters are converted into expected log probabilities using digamma functions (ψ). As already stated, the right panel illustrates a possible neural implementation of the update equations in the middle panel. In this implementation, probability estimates have been associated with neuronal populations that are arranged to reproduce known intrinsic (within cortical area) connections. Red connections are excitatory, blue connections are inhibitory, and green connections are modulatory (i.e., involve a multiplication or weighting). These connections mediate the message passing associated with the equations in the middle panel. Cyan units correspond to expectations about hidden states and (future) outcomes under each policy, while red units indicate their Bayesian model averages (i.e., a “best guess” based on the average of the probability estimates for the states and outcomes across policies, weighted by the probability estimates for their associated policies). Pink units correspond to (state and outcome) prediction errors that are averaged to evaluate expected free energy and subsequent policy expectations (in the lower part of the network). This (neural) network formulation of belief updating means that connection strengths correspond to the parameters of the generative model described in the text. Learning then corresponds to changes in the synaptic connection strengths. Only exemplar connections are shown to avoid visual clutter. Furthermore, we show only neuronal populations encoding hidden states under two policies over three time points (i.e., two transitions), whereas in the task described in this paper there are a greater number of allowable policies. For more information regarding the mathematics and processes illustrated in this figure, see Friston et al. (2016, 2017a,b,c).
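The Bayesian model averages mentioned in this legend (the red units) amount to weighting each policy-specific belief by the probability of its policy. A minimal sketch, with hypothetical numbers for three hidden states and two policies:

```python
import numpy as np

# Hypothetical beliefs about 3 hidden states under each of 2 policies (one row per policy)
s_given_pi = np.array([[0.7, 0.2, 0.1],
                       [0.1, 0.3, 0.6]])
p_pi = np.array([0.75, 0.25])        # posterior probability of each policy

# Bayesian model average: sum over policies of p(pi) * q(s | pi)
s_bma = p_pi @ s_given_pi
```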

When a generative model is formulated as a partially observable Markov decision process, active inference takes a particular form. Specifically, specifying a generative model in this context requires specifying the allowable policies, hidden states of the world (that the brain cannot directly observe but must infer), and observable outcomes, as well as a number of matrices that define the probabilistic relationships between these quantities (see Figure 2). The “A” matrix specifies which outcomes are generated by each combination of hidden states (i.e., a likelihood mapping indicating the probability that a particular set of outcomes would be observed given a particular hidden state). The “B” matrix encodes state transitions, specifying the probability that one hidden state will evolve into another over time. Some of these transitions are controlled by the agent, according to the policy that has been selected. The “D” matrix encodes prior expectations about the initial hidden state of the world. The “E” matrix encodes prior expectations about which policies will be chosen (e.g., frequently repeated habitual behaviors will have higher prior expectation values). Finally, the “C” matrix encodes prior preferences over outcomes. Outcomes and hidden states are generally factorized into multiple outcome modalities and hidden state factors. This means that the likelihood mapping (the “A” matrix) plays an important role in modeling the interactions among different hidden states at each level of a hierarchical model when generating the outcomes at the level below. One can think of each factor and modality as an independent group of competing states or observations within a given category. For example, one hidden state factor could be “birds,” which includes competing interpretations of sensory input as corresponding to either hawks, parrots, or pigeons, whereas a separate factor could be “location,” with competing representations of where a bird is in the sky. 
Similarly, one outcome modality could be size (e.g., is the bird big or small?), whereas another could be color (is the bird black, white, green, etc.?).
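To make these matrix conventions concrete, the sketch below encodes the bird example as NumPy arrays. The array layout (outcomes × factor 1 × factor 2 for A; next state × current state × action for B), the assumption that size depends only on bird identity, the location actions, and all probabilities are illustrative choices, not part of the original model.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hidden-state factors and one outcome modality (hypothetical, after the birds example)
birds = ["hawk", "parrot", "pigeon"]
locations = ["left", "centre", "right"]
sizes = ["big", "small"]

# A: p(size | bird, location), shape (n_sizes, n_birds, n_locations).
# Assumption: size depends only on the bird factor, so it is repeated over locations.
size_given_bird = np.array([[0.9, 0.3, 0.2],   # p(big | bird)
                            [0.1, 0.7, 0.8]])  # p(small | bird)
A_size = np.repeat(size_given_bird[:, :, None], len(locations), axis=2)

# B: p(next state | current state, action), shape (n_states, n_states, n_actions)
B_bird = np.eye(len(birds))[:, :, None]        # bird identity is stable; no actions
B_loc = np.stack([np.roll(np.eye(len(locations)), k, axis=0)  # action k shifts location by k
                  for k in range(len(locations))], axis=2)

D_bird = np.ones(len(birds)) / len(birds)      # flat prior over the initial bird
D_loc = np.array([0.0, 1.0, 0.0])              # bird starts in the centre
C_size = np.array([0.0, 0.0])                  # log-preferences over sizes (indifferent)
E = np.ones(len(locations)) / len(locations)   # flat prior over one-step policies

def sample_outcome(bird_idx, loc_idx):
    """Generative process: draw a size observation for a given pair of hidden states."""
    p = A_size[:, bird_idx, loc_idx]
    return sizes[rng.choice(len(sizes), p=p)]

# Every column of every likelihood/transition array must be a probability distribution
assert np.allclose(A_size.sum(axis=0), 1.0)
assert np.allclose(B_loc.sum(axis=0), 1.0)
```

For example, `B_loc[:, :, 1] @ D_loc` moves a bird believed to be in the centre to the right.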

As shown in the middle and right panels of Figure 2, active inference is also equipped with a neural process theory – a proposal for how neuronal circuits and their dynamics can invert generative models via a set of linked update equations that minimize prediction errors. In this neuronal implementation, the probability of neuronal firing in specific populations is associated with the expected probability of a state, whereas postsynaptic membrane potentials are associated with the logarithm of this probability. A softmax function acts as an activation function – transforming membrane potentials into firing rates. With this setup, postsynaptic depolarizations (driven by ascending signals) can be understood as prediction errors (free energy gradients) about hidden states – arising from linear mixtures of the firing rates of other neural populations. These prediction errors (postsynaptic currents) in turn drive membrane potential changes (and resulting firing rates). When prediction errors are minimized, postsynaptic influences no longer drive changes in activity (depolarizations and firing rates), corresponding to minimum free energy.
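A minimal sketch of this scheme for a single hidden-state factor at a single time point, assuming a hypothetical two-state model and an arbitrary step size: the membrane potential `v` plays the role of log expectations, the state prediction error is the gap between `v` and the log prior plus log likelihood, and a softmax converts potentials into firing rates. The full scheme also passes messages from past and future states through the B matrices, which this sketch omits.

```python
import numpy as np

def softmax(v):
    e = np.exp(v - v.max())
    return e / e.sum()

A = np.array([[0.9, 0.2],
              [0.1, 0.8]])  # hypothetical likelihood p(o | s)
D = np.array([0.5, 0.5])    # hypothetical prior over initial states
obs = 0                     # index of the observed outcome
kappa = 0.25                # hypothetical step size (rate of depolarization)

v = np.log(D)               # membrane potential, initialized at the log prior
for _ in range(64):
    eps = np.log(A[obs, :]) + np.log(D) - v   # state prediction error (free-energy gradient)
    v = v + kappa * eps                       # prediction error drives depolarization
s = softmax(v)                                # firing rate = expected state probability

exact = A[obs, :] * D / (A[obs, :] @ D)       # exact Bayesian posterior, for comparison
```

As the prediction error `eps` decays toward zero, activity stops changing and `s` settles on the exact posterior, which is the minimum-free-energy solution for this simple case.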

Via similar dynamics, prediction errors about outcomes (i.e., the deviation between preferred outcomes and those predicted under each policy) can also be computed and integrated (i.e., averaged) to evaluate the expected free energy (value) of each policy (i.e., underwriting selection of the policy that best minimizes these prediction errors). Dopamine dynamics also modulate policy selection by encoding estimates of the expected uncertainty over policies – where greater expected uncertainty promotes less deterministic policy selection. Phasic dopamine responses correspond to updates in expected uncertainty over policies – which occur when there is a prediction error about expected free energy; that is, when there is a difference between the expected free energy of policies before and after a new observation.
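The sketch below illustrates, under simplifying assumptions, how expected free energy and policy precision interact. It scores two hypothetical one-step policies that differ only in how precise their likelihood mapping is, computes G as risk plus ambiguity (which equals negative pragmatic value minus epistemic value), and converts G into policy probabilities with a softmax weighted by γ. Setting γ = 1/β is a simplification: in the full scheme γ is itself inferred under a gamma prior governed by β.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def entropy(p):
    """Shannon entropy along axis 0 (per column for a matrix)."""
    return -np.sum(p * np.log(p + 1e-16), axis=0)

def expected_free_energy(qs, A, log_C):
    """G for one policy at one future step: risk + ambiguity."""
    qo = A @ qs                                         # predicted outcomes under the policy
    risk = np.sum(qo * (np.log(qo + 1e-16) - log_C))    # KL[q(o) || preferred outcomes]
    ambiguity = entropy(A) @ qs                         # expected entropy of p(o | s)
    return risk + ambiguity

qs = np.array([0.5, 0.5])                  # current uncertainty about the hidden state
log_C = np.log(np.array([0.5, 0.5]))       # no outcome is preferred over another
A_look = np.array([[0.95, 0.05],           # hypothetical informative policy
                   [0.05, 0.95]])
A_ignore = np.full((2, 2), 0.5)            # hypothetical uninformative policy

G = np.array([expected_free_energy(qs, A_look, log_C),
              expected_free_energy(qs, A_ignore, log_C)])

# The same quantity written as -(pragmatic value) - (epistemic value)
qo = A_look @ qs
pragmatic = qo @ log_C
epistemic = entropy(qo) - entropy(A_look) @ qs   # expected information gain
assert np.isclose(G[0], -pragmatic - epistemic)

E = np.ones(2) / 2                              # flat habitual prior over policies
# Policy probabilities for two values of beta, using gamma = 1 / beta
probs = {beta: softmax(np.log(E) - (1.0 / beta) * G) for beta in (1.0, 10.0)}
```

With these numbers the informative policy has lower G and is favoured, and raising β flattens the choice toward chance, mirroring the less deterministic policy selection described above.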

Finally, and most centrally for the simulations we report below, learning in this theory corresponds to a form of synaptic plasticity remarkably similar to Hebbian coincidence-based learning mechanisms associated with empirically observed synaptic long-term potentiation and depression (LTP and LTD) processes (Brown et al., 2009). Here one can think of the strength of each synaptic connection as a parameter in one of the matrices described above. For example, the strength of one synapse could encode the amount of evidence a given observation provides for a given hidden state (i.e., an entry in the “A” matrix), whereas another synapse could encode the probability of a state at a later time given a state at an earlier time (i.e., an entry in the “B” matrix). Mathematically, the synaptic strengths correspond to Dirichlet parameters that increase in value in response to new observations. One can think of this process as adding counts to each matrix entry based on coincidences in pre- and post-synaptic activity. For example, if beliefs favor one hidden state, and this co-occurs with a specific observation, then the value of the “A”-matrix entry encoding the relationship between that state and that observation will increase. Counts also increase in a similar fashion in the “D” matrix encoding prior beliefs about initial states (whenever a given hidden state is inferred at the start of a trial) as well as in the “B” matrix encoding beliefs about transition probabilities (whenever one specific state is followed by another). For a more detailed discussion, please see the legend for Figure 2 and associated references.
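A minimal sketch of this count-based learning for the A matrix, assuming a hypothetical two-state, two-outcome model that starts from flat Dirichlet counts: each trial adds the outer product of the observed outcome and the inferred state distribution, and, as noted in the legend of Figure 2, counts are turned into expected log probabilities with digamma functions. The learning-rate argument and the trial sequence are illustrative assumptions.

```python
import numpy as np
from scipy.special import digamma

n_obs, n_states = 2, 2
a = np.ones((n_obs, n_states))   # Dirichlet counts for A (flat, uninformed start)

def learn_A(a, obs_index, qs, lr=1.0):
    """Coincidence-based update: a[o, s] += lr * o[o] * q(s)."""
    o = np.zeros(a.shape[0])
    o[obs_index] = 1.0                 # observed outcome as a one-hot vector
    return a + lr * np.outer(o, qs)    # outer product of outcome and state beliefs

def expected_log_A(a):
    """E[ln A] under Dirichlet(a), column by column: psi(a) - psi(column sum)."""
    return digamma(a) - digamma(a.sum(axis=0, keepdims=True))

# Hypothetical trials: the agent believes it is probably in state 0 and sees outcome 0
qs = np.array([0.9, 0.1])
for _ in range(10):
    a = learn_A(a, obs_index=0, qs=qs)

A_mean = a / a.sum(axis=0, keepdims=True)   # expected likelihood mapping

# Initial-state priors (D) follow the same rule: d += q(s) inferred at the first time step
d = np.ones(n_states) + qs
```

Because counts only accumulate, early trials move `A_mean` a lot while later trials move it progressively less, which is one way experience-dependent learning can become entrenched.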

In what follows, we describe how this type of generative model was specified to perform emotional state inference and emotion concept learning. We also present simulated neural responses based on the neural process theory described above.

A Model of Emotion Inference and Concept Learning

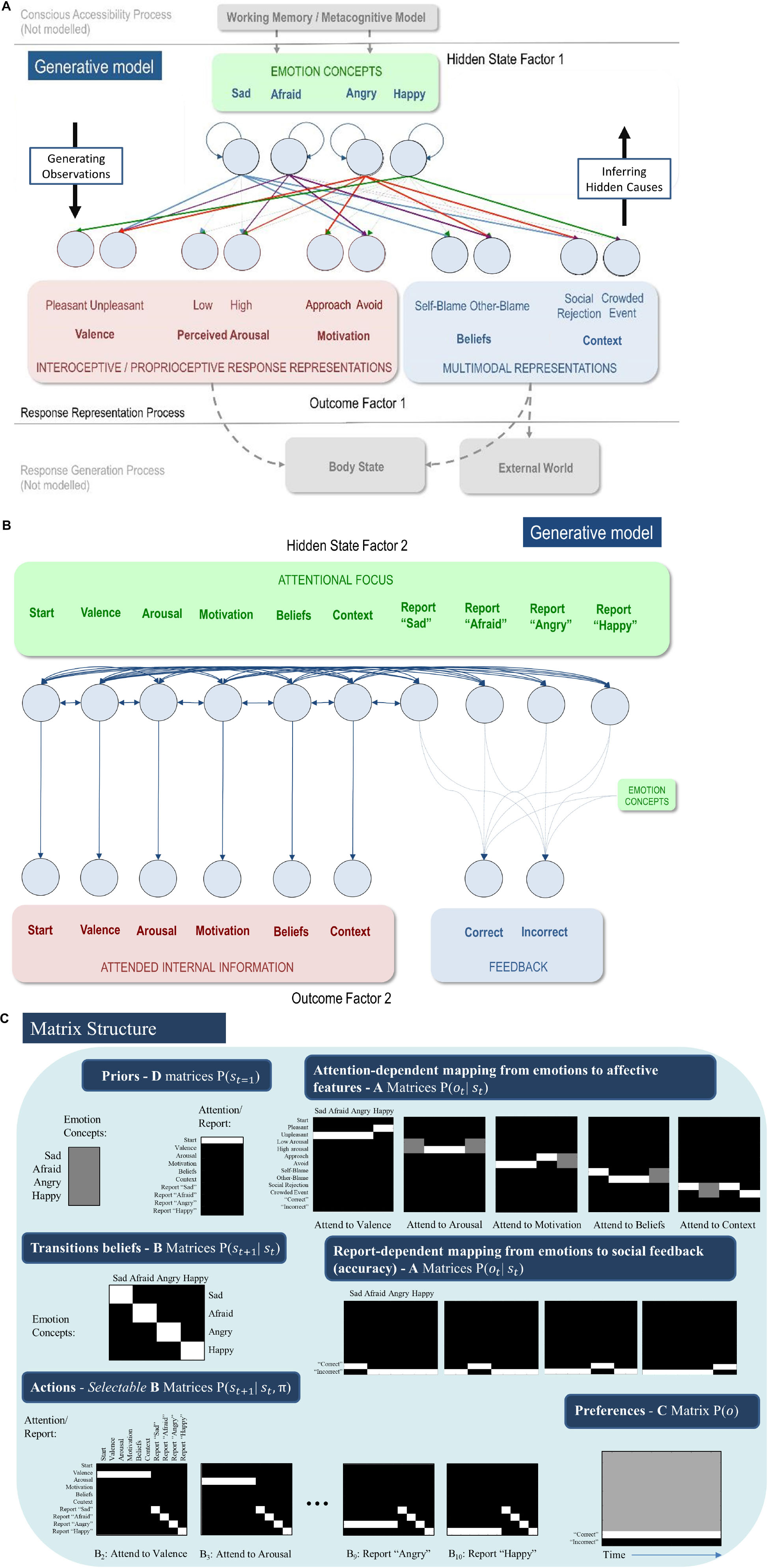

In this paper we focus on the second process in the TPM – affective response representation – in which a multifaceted affective response is generated and the ensuing (exteroceptive, proprioceptive, and interoceptive) outcomes are used to infer or represent the current emotional state. The basic idea is to equip the generative model with a space of emotion concepts (i.e., latent or hidden states) that generate the interoceptive, exteroceptive and proprioceptive consequences (at various levels of abstraction) of being in a particular emotional state. Inference under this model then corresponds to inferring that one of several possible emotion concepts is the best explanation for the data at hand (e.g., “my unpleasant feeling of increased heart rate and urge to run away must indicate that I am afraid to give this speech”). Crucially, to endow emotion concept inference with a form of mental action (Metzinger, 2017; Limanowski and Friston, 2018), we also included a state factor corresponding to selective attention. Transitions between attentional states were under the control of the agent (i.e., “B” matrices were specified for all possible transitions between these states). The “A” matrix mapping emotion concepts to lower-level observations differed in each attentional state, such that precise information about each type of lower-level information was only available in one attentional state (e.g., the agent needed to transition into the “attention to valence” state to gain precise information regarding whether she was feeling pleasant or unpleasant, and so forth; see Figure 3C).
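To illustrate how attention gates the precision of the emotion-to-outcome mapping, the sketch below uses three hypothetical emotion concepts and two hypothetical outcome modalities (valence and arousal). Apart from HAPPY generating high and low arousal equally (as in Figure 3A), the mappings are invented for illustration, and the attentional state is treated as known rather than inferred and selected as in the full model.

```python
import numpy as np

emotions = ["HAPPY", "SAD", "AFRAID"]          # hypothetical subset of emotion concepts
attention = ["start", "valence", "arousal"]    # hypothetical attentional states

# p(outcome | emotion) when the relevant modality is attended (columns = emotions)
valence_map = np.array([[0.95, 0.05, 0.05],    # pleasant
                        [0.05, 0.95, 0.95]])   # unpleasant
arousal_map = np.array([[0.50, 0.05, 0.95],    # high arousal (HAPPY: 50/50, as in the text)
                        [0.50, 0.95, 0.05]])   # low arousal

def gated_A(precise_map, attended_index):
    """A[o, emotion, attention]: precise only in the attended state, flat elsewhere."""
    n_o, n_e = precise_map.shape
    A = np.full((n_o, n_e, len(attention)), 1.0 / n_o)
    A[:, :, attended_index] = precise_map
    return A

A_valence = gated_A(valence_map, attention.index("valence"))
A_arousal = gated_A(arousal_map, attention.index("arousal"))

def update(q, A, obs_index, att_index):
    """Bayesian update of emotion beliefs given one outcome and the current attentional state."""
    q = q * A[obs_index, :, att_index]
    return q / q.sum()

q0 = np.ones(3) / 3
q_start = update(q0, A_valence, 1, attention.index("start"))   # uninformative: beliefs unchanged
q1 = update(q0, A_valence, 1, attention.index("valence"))      # observe "unpleasant"
q2 = update(q1, A_arousal, 0, attention.index("arousal"))      # then observe "high arousal"
```

Attending to valence narrows the candidates to SAD and AFRAID, and attending to arousal then singles out AFRAID, whereas the same observation made in the “start” state leaves beliefs untouched.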

Figure 3. (A) Displays the levels of hidden state factor 1 (emotion concepts) and their mapping to different lower-level representational contents (here modeled as outcomes). Each emotion concept generated different outcome patterns (see text for details), although some were more specific than others (e.g., HAPPY generated high and low arousal equally; denoted by thin dotted connections). The A-matrix encoding these mappings is shown in (C). The B-matrix, also shown in (C), was an identity mapping between emotion states, such that emotions were stable within trials. The precision of this matrix (i.e., implicit beliefs about emotional stability) could be adjusted via passing this matrix through a softmax function with different temperature parameter values. This model simulates the affective response representation process within the three-process model of emotion episodes (Smith et al., 2018b, 2019a; Smith, 2019). Black arrows on the right and left indicate the direction by which hidden causes generate observations (the generative process) and the direction of inference in which observations are used to infer their hidden causes (emotion concepts) using the agent’s generative model. The gray arrows/boxes at the bottom and top of the figure denote other processes within the three-process model (i.e., affective response generation and conscious accessibility) that are not explicitly modeled in the current work (for simulations of the affective response generation process, see Hesp et al., 2019, and for simulations of the conscious accessibility process, see Smith et al., 2019b). (B) Displays the levels of hidden state factor 2 (focus of attention) and its mapping to representational outcomes. Each focus of attention mapped deterministically (the A-matrix was a fully precise identity matrix) to a “location” (i.e., an internal source of information) at which different representational outcomes could be observed. 
Multiple B-matrices, depicted in Figure 3C, provided controllable transitions (i.e., actions) such that the agent could choose to shift her attention from one internal representation to another to facilitate inference. The agent always began a trial in the “start” attentional state, which provided no informative observations. The final attentional shift in the trial was toward a (proprioceptive) motor response reporting an emotion, and this report was either correct or incorrect; the agent made this shift at whatever point in the trial she became sufficiently confident, after which her attentional state could not change until the end of the trial. The agent preferred (expected) to be correct and not to be incorrect. Because policies (i.e., sequences of implicit attentional shifts and subsequent explicit reports) were selected to minimize expected free energy, emotional state inference under this model entails a sampling of salient representational outcomes and subsequent report – under the prior preference that the report would elicit the outcome of “correct” social feedback. In other words, policy selection was initially dominated by the epistemic value part of expected free energy (driving the agent to gather information about her emotional state); then, as certainty increased, the pragmatic value part of expected free energy gradually began to dominate (driving the agent to report her emotional state). (C) Displays select matrices defining the generative model (lighter colors indicate higher probabilities). “D” matrices indicate a flat distribution over initial emotional states and a strong belief that the attentional state will begin in the “start” state. “A” matrices indicate the observations (rows) that would be generated by each emotional state (columns) depending on the current state of attention, as well as the social feedback (accuracy information) that would be generated under each emotional state depending on chosen self-reports. 
“B” matrices indicate that emotional states are stable within a trial (i.e., states transition only to themselves such that the state transition matrix is an identity matrix) and that the agent can choose to shift attention to each modality of lower-level information (i.e., by selecting the transitions encoded by “B” matrices 2–6), or report her emotional state (i.e., by selecting the transitions entailed by “B” matrices 7–10), at which point she could no longer leave that state. Only the first and last two possible attentional shifting actions (“B” matrices) are shown due to space constraints (note: the first “B” matrix for this factor corresponds to remaining in the starting state and is not shown); “B” matrices 4–8 take identical form, but with the row vector (1 1 1 1 1 1) spanning the first six columns progressively shifted downward with each matrix, indicating the ability to shift from each attentional state to the attentional state corresponding to that row. The “C” matrix indicates a preference for “correct” social feedback and an aversion to “incorrect” social feedback.
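The two kinds of B matrix described in this legend can be sketched as follows. The first function passes an identity mapping through a column-wise softmax with a precision (inverse temperature) parameter, so low precision encodes beliefs that emotions may change within a trial. The second builds controllable attention transitions in which each action moves the agent to a target state, with report states absorbing. The state counts and function names are hypothetical and do not reproduce the exact dimensions of the original model.

```python
import numpy as np

def stability_B(n_emotions, precision):
    """Column-wise softmax of precision * I; precision = 1 / temperature."""
    logits = precision * np.eye(n_emotions)
    e = np.exp(logits - logits.max(axis=0, keepdims=True))
    return e / e.sum(axis=0, keepdims=True)

def attention_B(n_explore, n_report):
    """B[next, current, action]: action u moves to state u; report states are absorbing.

    States 0..n_explore-1 are the start and attend-to-modality states;
    the remaining n_report states each correspond to reporting one emotion.
    """
    n = n_explore + n_report
    B = np.zeros((n, n, n))
    for u in range(n):
        for j in range(n):
            if j < n_explore:
                B[u, j, u] = 1.0   # shift attention (or report) from a non-report state
            else:
                B[j, j, u] = 1.0   # once a report is made, the state cannot change
    return B

B_stable = stability_B(3, precision=20.0)   # nearly an identity matrix
B_labile = stability_B(3, precision=0.0)    # uniform: no belief in emotional stability
B_att = attention_B(n_explore=3, n_report=3)
```

Each row u of `B_att[:, :, u]` contains ones across the non-report columns, which matches the shifted row-vector pattern described for the attentional “B” matrices.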

The incorporation of selective attention in emotional state inference and learning within our model was motivated by several factors. First, multiple psychotherapeutic modalities improve clients’ understanding of their own emotions in just this way; that is, by having them selectively attend to and record the contexts, bodily sensations, thoughts, action tendencies, and behaviors during emotion-episodes (e.g., Hayes and Smith, 2005; Barlow et al., 2016). Second, low emotional awareness has been linked to biased attention in some clinical contexts (Lane et al., 2018). Third, related personality factors (e.g., biases toward “externally oriented thinking”) are included in leading self-report measures of the related construct of alexithymia (Parker et al., 2003). Finally, emotion learning in childhood appears to involve parent-child interactions in which parents draw attention to (and label) bodily feelings and behaviors during a child’s affective responses [e.g., see work on attunement, social referencing, and related aspects of emotional development (Mumme et al., 1996; Licata et al., 2016; Smith et al., 2018b)] – and the lack of such interactions hinders emotion learning (and mental state learning more generally; Colvert et al., 2008).

In our model, we used relatively high-level “outcomes” (i.e., themselves standing in for lower-level representations) to summarize the products of belief updating at lower levels of a hierarchical model. These outcomes were domain-specific, covering interoceptive, proprioceptive and exteroceptive modalities. A full hierarchical model would consider lower levels, unpacked in terms of sensory modalities; however, the current model, comprising just two levels, is sufficient for our purposes. The bottom portion of Figure 3A (in gray) acknowledges the broad form that these lower-level outcomes would be expected to take. The full three-process model would also contain a higher level corresponding to conscious accessibility (for an explicit model and simulations of this higher level, see Smith et al., 2019b). This is indicated by the gray arrows at the top of Figure 3A.

Crucially, as mentioned above, attentional focus was treated as a (mental) action that determines the outcome modality or domain to which attention was selectively allocated. Effectively, the agent had to decide which lower-level representations to selectively attend to (i.e., which sequential attention policy to select) in order to figure out what emotional state she was in. Mathematically, this was implemented via interactions in the likelihood mapping – such that being in a particular attentional state selected one and only one precise mapping between the emotional state factor and the outcome information in question (see Figure 3C). Formally, this implementation of mental action or attentional focus is exactly the same as that used to model the exploration of a visual scene using overt eye movements (Mirza et al., 2016). However, on our interpretation, this epistemic foraging was entirely covert, and hence constitutes mental action (cf. the premotor theory of attention; Rizzolatti et al., 1987; Smith and Schenk, 2012; Posner, 2016).

Figure 3 illustrates the resulting model. The first hidden state factor was a space of (exemplar) emotion concepts (SAD, AFRAID, ANGRY, and HAPPY). The second hidden state factor was attentional focus, and the “B” matrix for this second factor allowed state transitions to be controlled by the agent. The agent could choose to attend to three sources of bodily (interoceptive/proprioceptive) information, corresponding to affective valence (pleasant or unpleasant sensations), autonomic arousal (e.g., high or low heart rate), and motivated proprioceptive action tendencies (approach or avoid). The agent could also attend to two sources of exteroceptive information, including the perceived situation (involving social rejection or a crowded event) and subsequent beliefs about responsibility (attributing agency/blame to self or another). These different sources of information are based on a large literature within emotion research, indicating that they are jointly predictive of self-reported emotions and/or are important factors in affective processing (Russell, 2003; Siemer et al., 2007; Lindquist and Barrett, 2008; Scherer, 2009; Harmon-Jones et al., 2010; Barrett et al., 2011; Barrett, 2017).

Our choice of including valence in particular reflects the fact that our model deals with high levels of hierarchical processing (this choice also enables us to connect more fluently with current literature on emotion concept categories). In this paper, we are using labels like “unpleasant” as pre-emotional constructs. In other words, although affective in nature, we take concepts like “unpleasant” as contributing to elaborated emotional constructs during inference. Technically, valenced states provide evidence for emotional state inference at a higher level (e.g., pleasant sensations provide evidence that one is feeling a positive emotion like excitement, joy, or contentment, whereas unpleasant sensations provide evidence that one may be feeling a negative emotion such as sadness, fear, or anger). Based on previous work (Joffily and Coricelli, 2013; Clark et al., 2018; Hesp et al., 2019), we might expect valence to correspond to changes in the precision/confidence associated with lower-level visceromotor and skeletomotor policy selection, or to related internal estimates that can act as indicators of success in uncertainty resolution; see Joffily and Coricelli (2013), de Berker et al. (2016), Peters et al. (2017), Clark et al. (2018). Put another way, feeling good may correspond to high confidence in one’s model of how to act, whereas feeling bad may reflect the opposite. Explicitly modeling these lower-level dynamics in a deep temporal model will be the focus of future work.

In the simulations we report here, there were 6 time points in each epoch or trial of emotion inference. At the first time point, the agent always began in an uninformative initial state of attentional focus (the “start” state). The agent’s task was to choose what to attend to, and in which order, to infer her most likely emotional state. When she became sufficiently confident, she could choose to respond (i.e., reporting that she felt sad, afraid, angry, or happy). In these simulations the agent selected “shallow” one-step policies, such that she could choose what to attend to next – to gain the most information. Given the number of time points, the agent could choose to attend to up to four of the five possible sources of lower-level information before reporting her beliefs about her emotional state. The “A” matrix mapping attentional focus to attended outcomes was an identity matrix, such that the agent always knew which lower-level information she was currently attending to. This may be thought of as analogous to the proprioceptive feedback consequent on a motor action.

The “B” matrix for hidden emotional states was also an identity matrix, reflecting the belief that emotional states are stable within a trial (i.e., if you start out feeling sad, then you will remain sad throughout the trial). This sort of probability transition matrix in the generative model allows evidence to be accumulated for one state or another over time; here, this means accumulating evidence for the emotion concept that best explains the actively attended outcomes. The “A” matrix – mapping emotion concepts to outcomes – was constructed such that certain outcome combinations were more consistent with certain emotional states than others: SAD was probabilistically associated with unpleasant valence, either low or high arousal (e.g., lying in bed lethargically vs. intensely crying), avoidance motivation, social rejection, and self-attribution (i.e., self-blame). AFRAID generated unpleasant valence, high arousal, avoidance, other-blame (cf. fear often being associated with its perceived external cause), and either social rejection or a crowded event (e.g., fear of a life without friends vs. panic in crowded spaces). ANGRY generated unpleasant valence, high arousal, approach, social rejection, and other-blame outcomes. Finally, HAPPY generated pleasant valence, either low or high arousal, either approach or avoidance (e.g., feeling excited to wake up and go to work vs. feeling content in bed and not wanting to go to work), and a crowded event (e.g., having fun at a concert). Because HAPPY does not have strong conceptual links to blame, we defined a flat mapping between HAPPY and blame, such that either type of blame provided no evidence for or against being happy. Although this mapping from emotional states to outcomes has some face validity, it should not be taken too seriously. 
It was chosen primarily to capture the ambiguous and overlapping correlates of emotion concepts, and to highlight why adaptive emotional state inference and emotion concept learning can represent difficult problems.
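The qualitative contingencies listed above can be encoded as a "fully precise" template, one matrix per outcome modality, in which an outcome marked "either" is split evenly between its two levels. This is a minimal sketch under stated assumptions: the exact numerical template is shown only graphically in Figure 3C, and this sketch omits the attention-dependent interaction (in the model, only the currently attended modality carries a precise mapping).

```python
import numpy as np

STATES = ["SAD", "AFRAID", "ANGRY", "HAPPY"]
MODALITIES = {
    "valence": ["pleasant", "unpleasant"],
    "arousal": ["low", "high"],
    "action": ["approach", "avoid"],
    "context": ["social rejection", "crowded event"],
    "beliefs": ["self-blame", "other-blame"],
}
# Outcome levels consistent with each emotion concept, as listed in the text;
# more than one level means "either" (HAPPY is flat with respect to blame).
CONSISTENT = {
    "SAD": {"valence": ["unpleasant"], "arousal": ["low", "high"], "action": ["avoid"],
            "context": ["social rejection"], "beliefs": ["self-blame"]},
    "AFRAID": {"valence": ["unpleasant"], "arousal": ["high"], "action": ["avoid"],
               "context": ["social rejection", "crowded event"], "beliefs": ["other-blame"]},
    "ANGRY": {"valence": ["unpleasant"], "arousal": ["high"], "action": ["approach"],
              "context": ["social rejection"], "beliefs": ["other-blame"]},
    "HAPPY": {"valence": ["pleasant"], "arousal": ["low", "high"],
              "action": ["approach", "avoid"], "context": ["crowded event"],
              "beliefs": ["self-blame", "other-blame"]},
}


def build_template():
    """Return {modality: (n_outcomes x n_states) matrix} whose columns sum to 1."""
    A = {}
    for modality, levels in MODALITIES.items():
        mat = np.zeros((len(levels), len(STATES)))
        for j, state in enumerate(STATES):
            for level in CONSISTENT[state][modality]:
                mat[levels.index(level), j] = 1.0
        A[modality] = mat / mat.sum(axis=0, keepdims=True)  # split "either" evenly
    return A


A_template = build_template()
print(A_template["arousal"])
```

Reading the columns side by side makes the overlap explicit: SAD, AFRAID, and ANGRY share unpleasant valence, and AFRAID and ANGRY also share high arousal and other-blame, so no single modality separates all four concepts.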

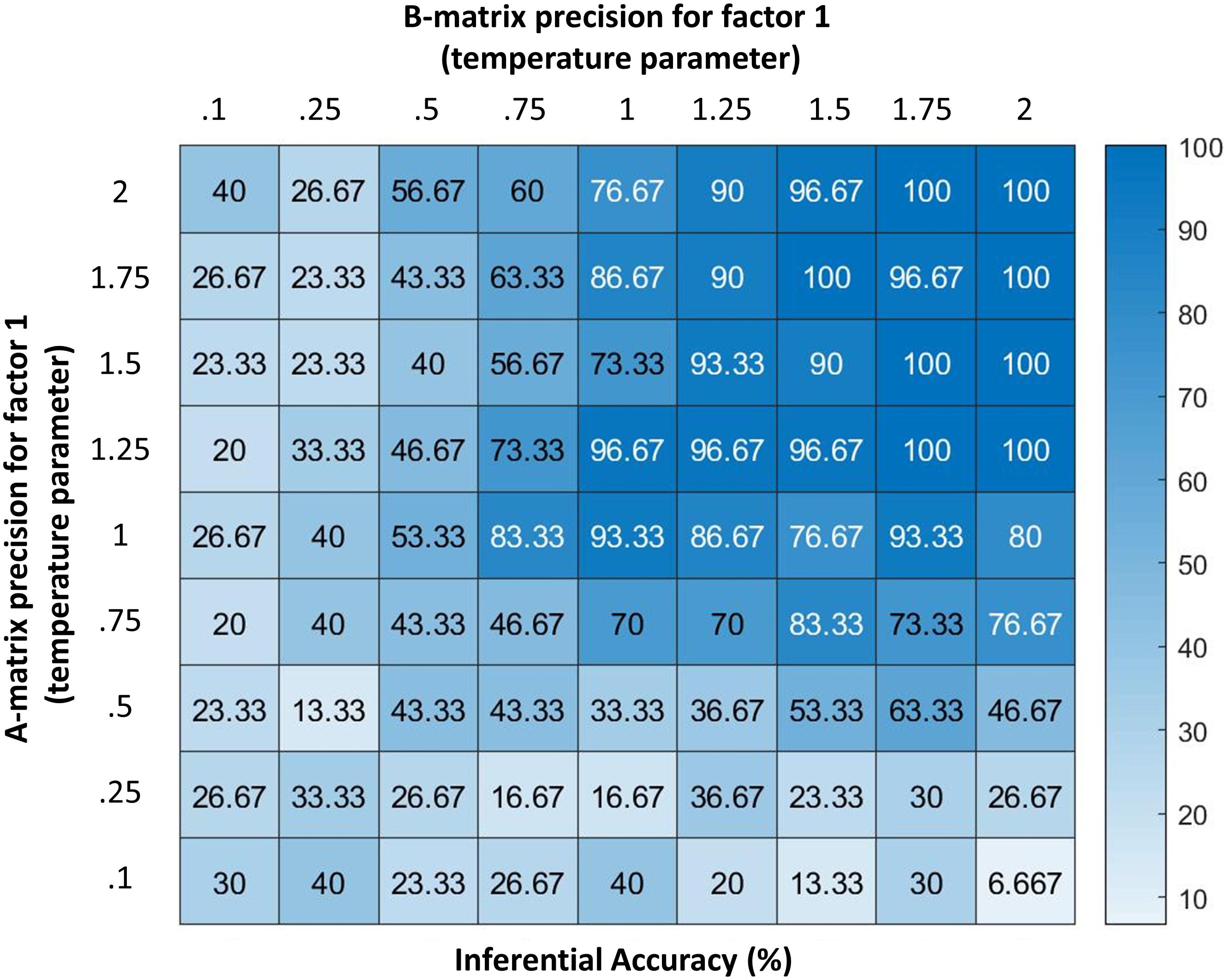

If the “A” matrix encoding state-outcome relationships was completely precise (i.e., if the contingencies above were deterministic as opposed to probabilistic), sufficient information could be gathered through (at most) three attentional shifts; but this becomes more difficult when probabilistic mappings are imprecise (i.e., as they more plausibly are in the real world). Figure 4 illustrates this by showing how the synthetic subject’s confidence about her state decreases as the precision of the mapping between emotional states and outcomes decreases (we measured confidence here in terms of the accuracy of responding in relation to the same setup with infinite precision). Changes in precision were implemented via a temperature parameter of a softmax function applied to a fully precise version of likelihood mappings between emotion concepts and the 5 types of lower-level information that the agent could attend to (where a higher value indicates higher precision). For a more technical account of this type of manipulation, please see Parr and Friston (2017b).
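The precision manipulation can be sketched as a column-wise softmax. Because a higher value of the parameter means higher precision, it multiplies the argument of the softmax (i.e., it behaves as an inverse temperature). As an assumption, the sketch applies the softmax directly to a 0/1 template; the text does not state exactly what the softmax was applied to, so the resulting probabilities need not match those used in the simulations.

```python
import numpy as np


def apply_precision(A0, beta):
    """Column-wise softmax of beta * A0.

    beta plays the role of the text's "temperature parameter": higher values give
    sharper (more precise) columns, and beta = 0 gives a uniform mapping.
    """
    x = beta * np.asarray(A0, dtype=float)
    x = x - x.max(axis=0, keepdims=True)  # subtract column maxima for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=0, keepdims=True)


# Fully precise template for one binary modality
# (rows: pleasant, unpleasant; columns: SAD, AFRAID, ANGRY, HAPPY)
valence_template = np.array([[0.0, 0.0, 0.0, 1.0],
                             [1.0, 1.0, 1.0, 0.0]])

for beta in (0.0, 1.0, 2.0, 4.0):
    A = apply_precision(valence_template, beta)
    print(beta, A[:, 0].round(3))  # P(pleasant | SAD), P(unpleasant | SAD)
```

Setting `beta = 0` reproduces the fully imprecise mapping used as the starting point in the learning simulations below, since every column becomes uniform.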

Figure 4. Displays the accuracy of the model (percentage of correct inferences over 30 trials) under different levels of precision for two parameters (denoted by temperature values for a softmax function controlling the specificity of the A and B matrices for hidden state factor 1; higher values indicate higher precision). As can be seen, the model performs with high accuracy at moderate levels of precision. However, its ability to infer its own emotions becomes very poor if either matrix becomes highly imprecise. Accuracy here is defined in relation to the response obtained from an agent with infinite precision – and can be taken as a behavioral measure of the quality of belief updating about emotional states. These results illustrate how emotion concepts could be successfully inferred despite variability in lower-level observations (e.g., contexts, arousal levels), as would be expected under constructivist theories of emotion (Barrett, 2017); however, they also demonstrate limits in variability, beyond which self-focused emotion recognition would begin to fail.

Figure 4 additionally demonstrates how reporting confidence decreases with decreasing precision of the “B” matrix encoding emotional state transitions, where low precision corresponds to the belief that emotional states are unstable over time. Interestingly, these results suggest that expectations about emotional instability would reduce the ability to understand or infer one’s own emotions. From a Bayesian perspective, this result is very sensible: if we are unable to use past beliefs to contextualize the present, it is much harder to accumulate evidence in favor of one hypothesis about emotional state relative to another. Under moderate levels of precision, our numerical analysis demonstrates that the model can conceptualize the multimodal affective responses it perceives with high accuracy.
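The Bayesian intuition in this paragraph can be checked with a small forward-filtering sketch: beliefs are propagated through the transition matrix and then multiplied by the likelihood of each newly attended outcome. The likelihood values are hypothetical, and building an imprecise "B" as a softmax of a scaled identity matrix is our assumption, chosen to mirror the "A" manipulation.

```python
import numpy as np


def precision_B(n_states, beta):
    """Column-wise softmax of beta * identity: large beta -> stable states, beta = 0 -> uniform."""
    x = beta * np.eye(n_states)
    e = np.exp(x - x.max(axis=0, keepdims=True))
    return e / e.sum(axis=0, keepdims=True)


def accumulate(likelihoods, B, prior):
    """Forward (filtering) belief update over a sequence of likelihood vectors."""
    belief = np.asarray(prior, dtype=float)
    for t, lik in enumerate(likelihoods):
        if t > 0:
            belief = B @ belief        # predict: propagate beliefs through the transitions
        belief = belief * lik          # update: weight by the newly attended evidence
        belief = belief / belief.sum()
    return belief


# Hypothetical likelihoods over (SAD, AFRAID, ANGRY, HAPPY) for three attended outcomes:
# unpleasant valence, other-blame, approach
likelihoods = [np.array([0.9, 0.9, 0.9, 0.1]),
               np.array([0.1, 0.9, 0.9, 0.5]),
               np.array([0.1, 0.1, 0.9, 0.5])]
prior = np.full(4, 0.25)

stable = accumulate(likelihoods, np.eye(4), prior)
unstable = accumulate(likelihoods, precision_B(4, 0.0), prior)
print("stable:  ", stable.round(3))    # belief in ANGRY is about 0.864
print("unstable:", unstable.round(3))  # only the last observation counts; ANGRY = 0.5625
```

With a uniform "B", every prediction step erases the accumulated belief, so only the most recent observation informs the posterior; this is the sense in which expected emotional instability undermines evidence accumulation.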

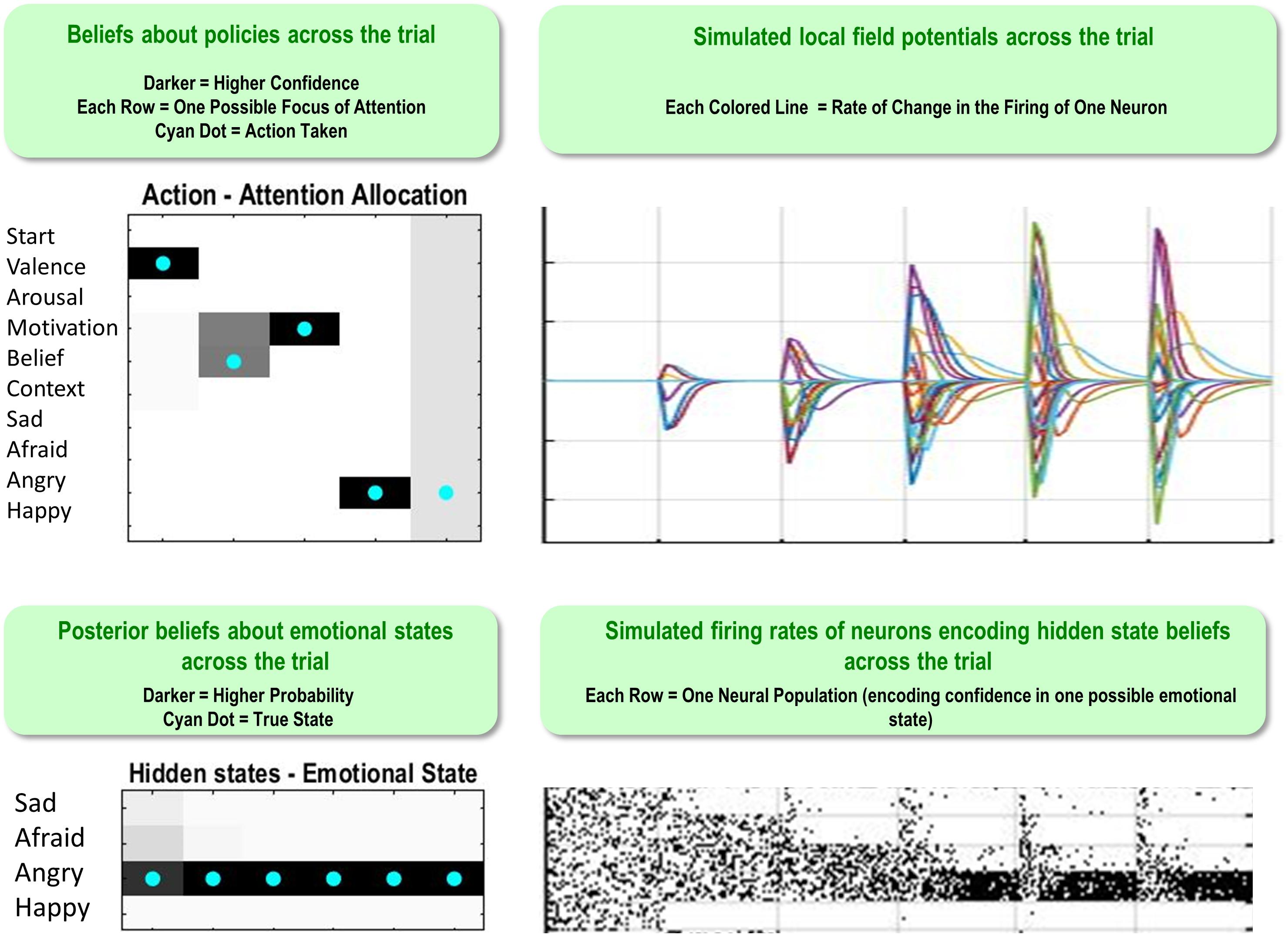

Figure 5 illustrates a range of simulation results from an example trial under moderately high levels of “A” and “B” matrix precision (temperature parameter = 2 for each). The upper left plot shows the sequence of (inferred) attentional shifts (note: darker colors indicate higher probability beliefs of the agent, and cyan dots indicate the true states). In this trial, the agent chose a policy in which she attended to valence (observing “unpleasant”), then beliefs (observing “other-blame”), then action (observing “approach”), at which point she became sufficiently confident and chose to report that she was angry. The lower left plot displays the agent’s posterior beliefs at the end of the trial about her emotional state at each timepoint in the trial, in this case inferring that she had been (and still was) angry. Note that this reflects retrospective inference, and not the agent’s beliefs at each timepoint. The lower right and upper right plots display simulated neural responses (based on the neural process theory that accompanies this form of active inference; Friston et al., 2017a), in terms of single-neuron firing rates (raster plots) and local field potentials, respectively. The simulated firing rates in the lower right plot illustrate that the agent’s confidence that she was angry increased gradually with each new observation.

Figure 5. This figure illustrates a range of simulation results from one representative trial under moderately high levels of A- and B-matrix precision (temperature parameter = 2 for each matrix). (Upper left) Displays the sequence of chosen attentional shifts. The agent here chose a policy in which she attended to valence, then beliefs, then action motivation, and then chose to report that she was angry. Darker colors indicate higher confidence (probability estimates) of the agent about her actions, whereas the cyan dot indicates the true action. (Bottom left) Displays the agent’s posterior beliefs about her emotional state across the trial. These posterior beliefs indicate that, at the end of the trial, the agent retrospectively inferred (correctly) that she was angry throughout the whole trial (i.e., despite not being aware of this until the fourth timestep, as indicated by the firing rates encoding confidence updates over time in the bottom right). (Lower right/Upper right) Plots simulated neural responses in terms of single-neuron firing rates (raster plots) and local field potentials (rates of change in neural firing), respectively. Here each neuron’s activity encodes the probability of occupying a particular hidden state at a particular point in time during the trial (based on the neural process theory depicted in Figure 2; see Friston et al., 2017a).

The simulations presented in Figures 4 and 5 make several key points. First, it is fairly straightforward to simulate emotion processing in terms of emotional state inference. This rests upon a particular sort of generative model that can generate outcomes in multiple modalities. The recognition of an emotional state corresponds to the inversion of such models – and therefore necessarily entails multimodal integration. In other words, successfully disambiguating the most likely emotional state here requires consideration of the specific multimodal patterns of experience (i.e., incorporating interoceptive, exteroceptive, and proprioceptive sensations) that would be expected under each emotional state. We have also seen that this form of belief updating – or evidence accumulation – depends sensitively on what sort of evidence is actively attended. This equips the model of emotion concept representation with a form of mental action, which speaks to a tight link between emotion processing and attention to various sources of evidence from within the body – and beyond. Choices to shift attention vs. to self-report are, respectively, driven by the epistemic and pragmatic value of each allowable policy, such that pragmatic value gradually comes to drive the selection of reporting policies as the expected information gain of further attentional shifts decreases. The physiological plausibility of this emotion inference process has been briefly considered in terms of simulated responses. In the next section, we turn to a more specific construct validation, using empirical phenomenology from neurodevelopmental studies of emotion.
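The claim that the expected information gain of further attentional shifts shrinks as certainty grows can be illustrated by computing the epistemic term of expected free energy for single attentional actions: the mutual information between emotional states and the outcome of the attended modality under current beliefs. This sketch omits the pragmatic term (expected log preference over social feedback), and all likelihood values are hypothetical.

```python
import numpy as np


def entropy(p):
    """Shannon entropy in nats, computed along axis 0."""
    p = np.clip(p, 1e-16, 1.0)
    return -np.sum(p * np.log(p), axis=0)


def epistemic_value(A_m, q_s):
    """Expected information gain about states from attending to one modality.

    A_m: (n_outcomes x n_states) likelihood; q_s: current belief over states.
    """
    predicted_o = A_m @ q_s                      # predicted outcome distribution
    ambiguity = np.sum(q_s * entropy(A_m))       # expected entropy of the likelihood columns
    return entropy(predicted_o) - ambiguity


# Hypothetical likelihoods (columns: SAD, AFRAID, ANGRY, HAPPY)
A = {
    "valence": np.array([[0.1, 0.1, 0.1, 0.9],     # pleasant
                         [0.9, 0.9, 0.9, 0.1]]),   # unpleasant
    "action": np.array([[0.1, 0.1, 0.9, 0.5],      # approach
                        [0.9, 0.9, 0.1, 0.5]]),    # avoid
}
uncertain = np.full(4, 0.25)
confident = np.array([0.01, 0.01, 0.97, 0.01])

for name, A_m in A.items():
    print(name,
          round(float(epistemic_value(A_m, uncertain)), 3),
          round(float(epistemic_value(A_m, confident)), 3))
```

Under a near-certain belief, both modalities offer little remaining information, which is the regime in which the (here omitted) pragmatic value of reporting would come to dominate policy selection.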

Simulating the Influence of Early Experience on Emotional State Inference and Emotion Concept Learning

Having confirmed that our model could successfully infer emotional states – if equipped with emotion concepts – we are now in a position to examine emotion concept learning. Specifically, we investigated the conditions under which emotion concepts could be acquired successfully and the conditions under which this type of emotion learning and inference fails.

Can Emotion Concepts Be Learned in Childhood?

The first question we asked was whether our model could learn about emotions if it started out with no prior beliefs about how emotions structure its experience. To answer this question, we first ran the model’s “A” matrix (mapping emotion concepts to attended outcome information) through a softmax function with a temperature parameter of 0, creating a fully imprecise likelihood mapping. This means that each hidden emotional state predicted all outcomes equally (effectively, none of the hidden states within the emotion factor had any conceptual content). Then we generated 200 sets of observations (i.e., 50 for each emotion concept, evenly interleaved) based on the probabilistic state-outcome mappings encoded in the model described above (i.e., the “generative process”). That is, 50 interleaved learning trials for each emotion were generated by probabilistically sampling from a moderately precise version of the “A” matrix distribution depicted in Figure 3C (i.e., temperature parameter = 2). This resulted in 50 sets of observations consistent with the probabilistic mappings for each emotion (e.g., roughly half of HAPPY trials involved low arousal and half involved high arousal, whereas only roughly 1% of HAPPY trials involved the observation of social rejection). We then examined changes in the model’s reporting accuracy across the 200 learning trials. In other words, the agent, who began with no emotion knowledge (i.e., a fully uninformative “A” matrix), encountered patterns of observations consistent with each emotion (as specified above) on 50 trials spread out across the 200 trials, and needed to learn these associations (i.e., learn the appropriate “A” matrix mapping). This analysis was repeated at several levels of outcome (“A” matrix) and transition (“B” matrix) precision in the generative process – to explore how changes in the predictability or consistency of observed outcome patterns affected the model’s ability to learn. 
In this model, learning was implemented through updating (concentration) parameters for the model’s “A” matrix after each trial. The model could also learn prior expectations for being in different emotional states, based on updating concentration parameters for its “D” matrix after each trial (i.e., the emotional state it started in). For details of these free energy minimizing learning processes, please see Friston et al. (2016).
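The bookkeeping of this "childhood" procedure can be sketched as follows: an evenly interleaved schedule of 200 trials, outcomes sampled from the generative process, and Dirichlet concentration parameters accumulated after each trial (a ← a + o ⊗ q(s) for "A", d ← d + q(s) for "D"). Several assumptions are loudly flagged here: the process probabilities are hypothetical and cover only two modalities; "evenly interleaved" is read as a fixed repeating cycle; the learning rate is 1 and initial counts are 1; and, unlike the actual model, the loop uses the true state in place of the agent's inferred posterior, so it illustrates the update rule only, not inference.

```python
import numpy as np

STATES = ["SAD", "AFRAID", "ANGRY", "HAPPY"]

# Hypothetical generative-process likelihoods for two of the five modalities
# (columns: SAD, AFRAID, ANGRY, HAPPY); other modalities would be handled the same way.
PROCESS_A = {
    "arousal": np.array([[0.5, 0.1, 0.1, 0.5],     # low
                         [0.5, 0.9, 0.9, 0.5]]),   # high
    "context": np.array([[0.9, 0.5, 0.9, 0.1],     # social rejection
                         [0.1, 0.5, 0.1, 0.9]]),   # crowded event
}


def interleaved_schedule(n_states, reps):
    """Evenly interleaved trial sequence: one trial per state per cycle, `reps` cycles."""
    return np.tile(np.arange(n_states), reps)


def sample_outcomes(true_state, process_A, rng):
    """Draw one outcome index per modality from the generative process."""
    return {m: int(rng.choice(A_m.shape[0], p=A_m[:, true_state]))
            for m, A_m in process_A.items()}


def update_concentrations(a, d, observed, q_s, lr=1.0):
    """Dirichlet count update after one trial: a += lr * (o outer q(s)); d += lr * q(s)."""
    a = {m: a_m.copy() for m, a_m in a.items()}
    for m, o in observed.items():
        o_vec = np.zeros(a[m].shape[0])
        o_vec[o] = 1.0
        a[m] += lr * np.outer(o_vec, q_s)
    return a, d + lr * q_s


def expected_A(a_m):
    """Mean of the Dirichlet distribution: column-normalized concentration parameters."""
    return a_m / a_m.sum(axis=0, keepdims=True)


rng = np.random.default_rng(0)
schedule = interleaved_schedule(len(STATES), reps=50)        # 200 trials, 50 per emotion
a = {m: np.ones_like(A_m) for m, A_m in PROCESS_A.items()}   # flat: no conceptual content
d = np.ones(len(STATES))

for s in schedule:
    observed = sample_outcomes(s, PROCESS_A, rng)
    q_s = np.eye(len(STATES))[s]  # simplification: true state stands in for q(s)
    a, d = update_concentrations(a, d, observed, q_s)

print(expected_A(a["context"]).round(2))
```

Because the counts grow with experience, later observations move the expected likelihoods less and less, which is one way to see why a well-established conceptual repertoire resists revision.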

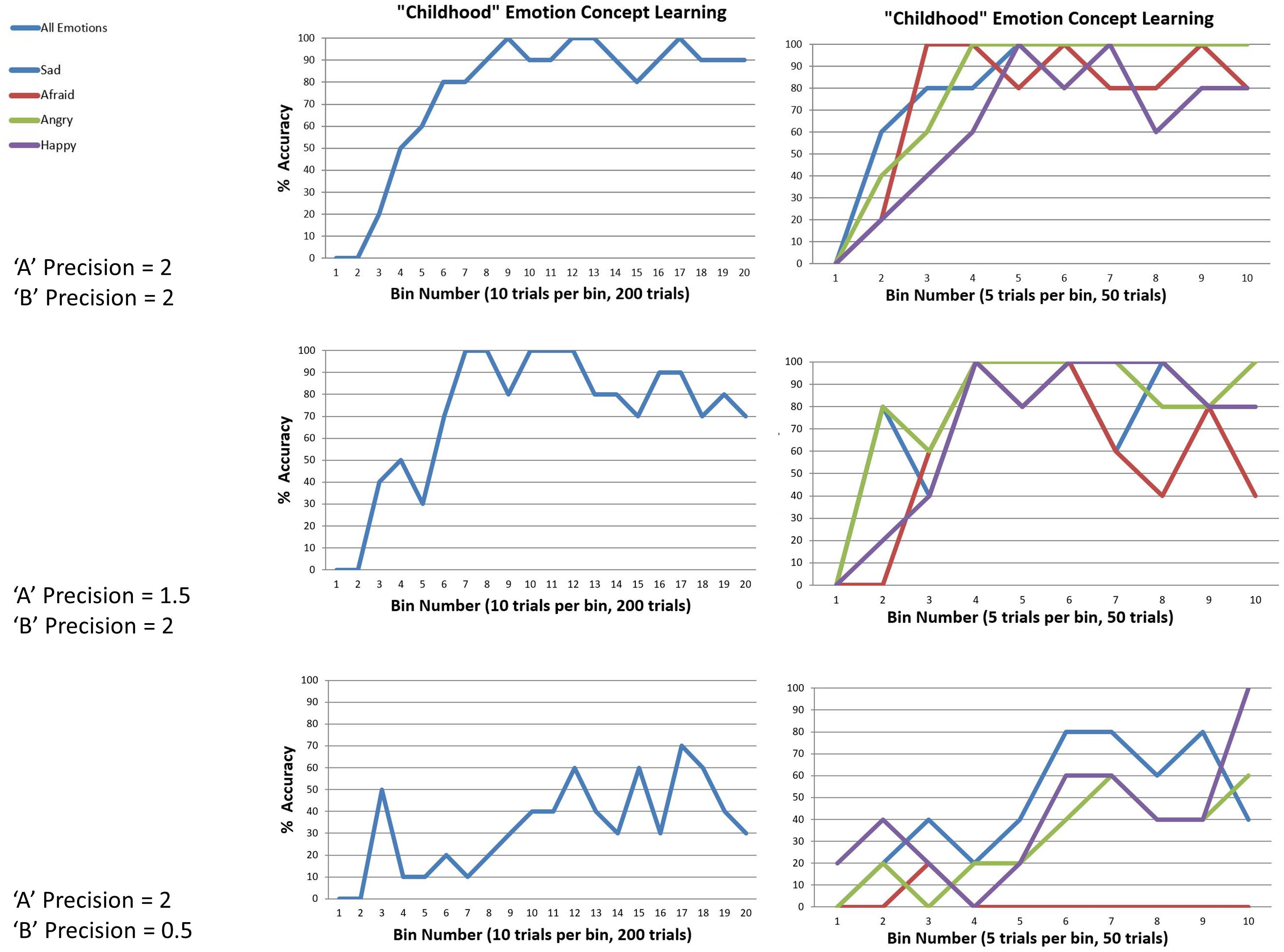

We observed that the model could successfully reach 100% accuracy (with minor fluctuation) when both outcome and transition precisions in the generative process were moderately high (i.e., when the temperature parameters for the “A” and “B” matrix of the generative process were 2). The top panel in Figure 6 illustrates this by plotting the percentage accuracy across all emotions during learning over 200 trials (in bins of 10 trials), and for each emotion (in bins of 5). As can be seen, the model steadily approaches 100% accuracy across trials. The middle and lower panels of Figure 6 illustrate the analogous results when outcome precision and transition precision were lowered, respectively. The precision values chosen for these illustrations (“A” precision = 1.5, “B” precision = 0.5) represent observed “tipping points” at which learning began to fail (i.e., at progressively lower precision values learning performance steadily approached 0% accuracy). As can be seen, lower precision in either the stability of emotions over time or the consistency between observations and emotional states confounded learning. Overall, these findings provide a proof of principle that this sort of model can learn emotion concepts, if provided with a representative and fairly consistent sample of experiences in its “childhood.”
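The binning used in Figure 6 (10-trial bins over all 200 trials, and 5-trial bins over the 50 trials of each emotion) can be written as two small helpers. These function names are hypothetical; `correct` is assumed to be a per-trial record of whether the report matched the true emotion.

```python
import numpy as np


def binned_accuracy(correct, bin_size):
    """Percentage correct in consecutive bins (a trailing partial bin is dropped)."""
    correct = np.asarray(correct, dtype=float)
    n_bins = len(correct) // bin_size
    return 100.0 * correct[: n_bins * bin_size].reshape(n_bins, bin_size).mean(axis=1)


def per_emotion_accuracy(correct, schedule, n_states, bin_size):
    """Binned accuracy computed separately over the trials of each emotion."""
    correct = np.asarray(correct)
    schedule = np.asarray(schedule)
    return {s: binned_accuracy(correct[schedule == s], bin_size) for s in range(n_states)}
```

With an evenly interleaved 200-trial schedule, `binned_accuracy(correct, 10)` yields 20 points for the left panels, and `per_emotion_accuracy(correct, schedule, 4, 5)` yields 10 points per emotion for the right panels.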

Figure 6. This figure illustrates simulated “childhood” emotion concept learning, in which the agent started out with no emotion concept knowledge (a uniform likelihood mapping from emotional states to outcomes) and needed to learn the correct likelihood mapping over 50 interleaved observations of the outcome patterns associated with each emotion concept (200 trials total). Left panels show changes in accuracy over time in 10-trial bins, at different levels of outcome pattern precision (i.e., “A” and “B” matrix precision, reflecting the consistency in the mapping between emotions and outcomes and the stability of emotional states over time, respectively). Right panels show the corresponding results for each emotion separately (5-trial bins). (Top) with moderately high precision (temperature parameter = 2 for both “A” and “B” matrices), learning was successful. (Middle and Bottom) with reduced “A” precision or “B” precision (respectively), learning began to fail.

Can a New Emotion Concept Be Learned in Adulthood?

We then asked whether a new emotion could be learned later, after others had already been acquired (e.g., as in adulthood). To answer this question, we again initialized the model with a fully imprecise “A” matrix (temperature parameter = 0) and set the precision of the “A” and “B” matrices of the generative process to the levels at which “childhood” learning was successful (i.e., temperature parameter = 2 for each). We then exposed the model to 150 observations that only contained the outcome patterns associated with three of the four emotions (50 for each emotion, evenly interleaved). We again allowed the model to accumulate experience in the form of concentration parameters for its “D” matrix – allowing it to learn strong expectations for the emotional states it repeatedly inferred it was in. After these initial 150 trials, we then exposed the model to 200 further trials – using the outcome patterns under all four emotions (50 for each emotion, evenly interleaved). We then asked whether the emotion that was not initially necessary to explain outcomes could be acquired later, when circumstances change.
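The two-phase design can be sketched as two interleaved schedules. As before, "evenly interleaved" is read as a fixed repeating cycle, which is our assumption, and `adulthood_schedule` is a hypothetical helper name.

```python
import numpy as np


def adulthood_schedule(initial_states, all_states, reps_initial=50, reps_later=50):
    """Two-phase trial sequence: a subset of emotions first, then all emotions.

    Both phases are evenly interleaved (a fixed repeating cycle of the listed states).
    """
    phase1 = np.tile(np.asarray(initial_states), reps_initial)
    phase2 = np.tile(np.asarray(all_states), reps_later)
    return phase1, phase2


SAD, AFRAID, ANGRY, HAPPY = range(4)
childhood, adulthood = adulthood_schedule([SAD, AFRAID, ANGRY], [SAD, AFRAID, ANGRY, HAPPY])
print(len(childhood), len(adulthood))   # 150 200
```

The "extended" childhood examined below corresponds to `reps_initial=75`, giving 225 initial trials.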

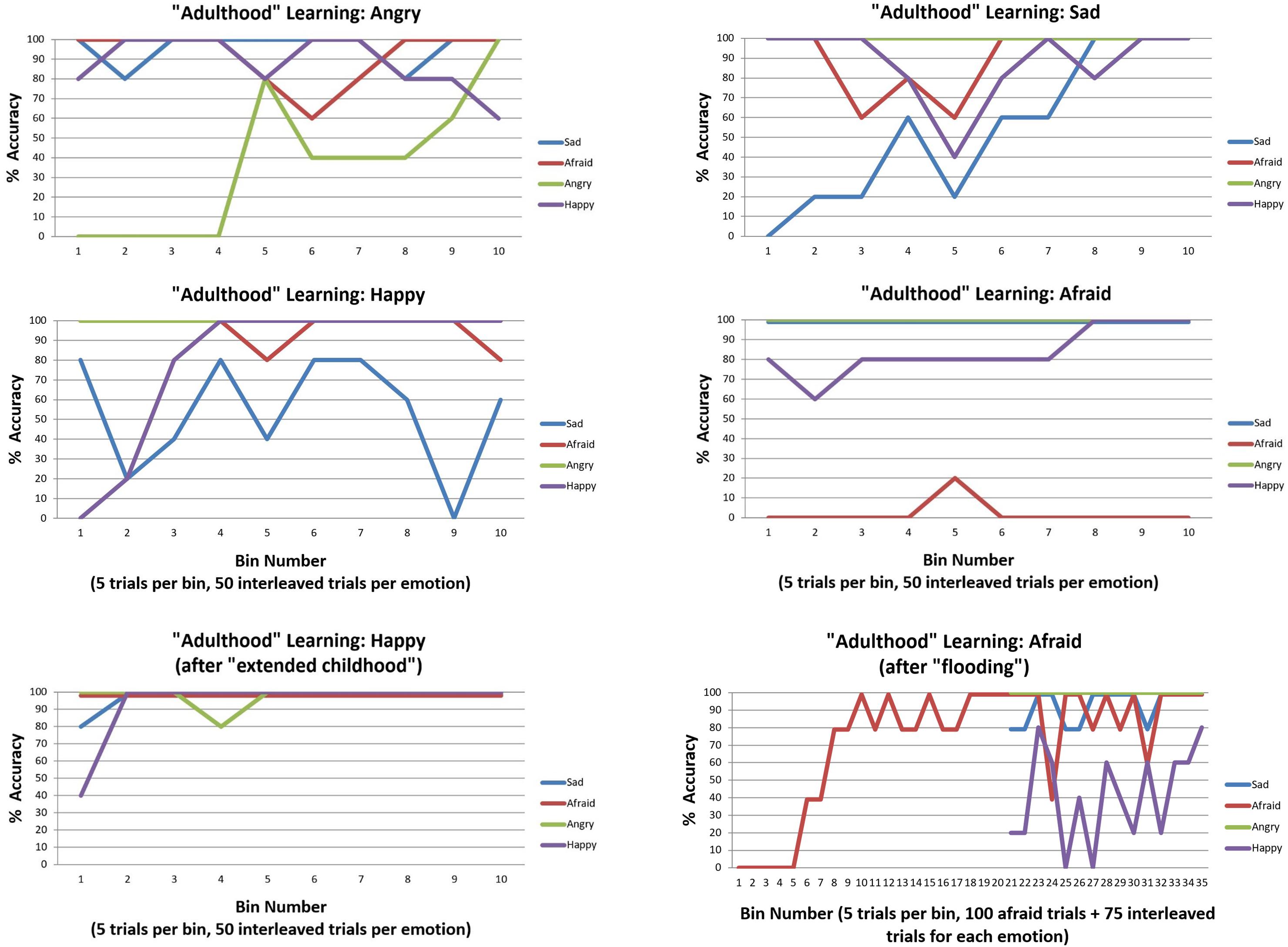

We first observed that, irrespective of which three emotions were initially presented, accuracy was high by the end of the initial 150 trials (i.e., between 80–100% accuracy for each of the three emotion concepts learned). The upper and middle panels of Figure 7 illustrate the accuracy over the subsequent 200 trials as the new emotion was learned. As can be seen in the upper left and right sections of Figure 7, ANGRY and SAD were both successfully learned. Interestingly, performance for the other emotions appeared to temporarily drop and then increase again as the new emotion concept was acquired (a type of temporary retroactive interference).

Figure 7. This figure illustrates emotion concept learning in “adulthood”, where three emotion concepts had already been learned (in 150 previous trials not illustrated) and a fourth was now needed to explain patterns of outcomes (over 200 trials, 50 of which involved the new emotion in an interleaved sequence). The top four plots illustrate learning for each of the four emotion concepts. ANGRY and SAD were successfully acquired. HAPPY was also successfully acquired, but interfered with previous SAD concept learning. As shown in the bottom left, this was ameliorated by providing the model with more “childhood” learning trials before the new emotion was introduced. AFRAID was not successfully acquired. This was (partially) ameliorated by first flooding the model with repeated observations of AFRAID-consistent outcomes before interleaving it with the other emotions, as displayed in the bottom right. All simulations were carried out with moderately high levels of precision (temperature parameter = 2 for both “A” and “B” matrices).

The middle left section of Figure 7 demonstrates that HAPPY could also be successfully learned; however, it appeared to interfere with prior learning for SAD. Upon further inspection, it appeared that SAD may not have been fully acquired in the first 150 trials (only reaching 80% accuracy near the end). We therefore chose to examine whether an “extended” or “emotionally enriched” childhood might prevent this interference, by increasing the initial learning trial number from 150 to 225 (75 interleaved exposures to SAD, ANGRY, and AFRAID outcome patterns). As can be seen in the lower left panel of Figure 7, HAPPY was quickly acquired in the subsequent 200 trials under these conditions, without interfering with previous learning.

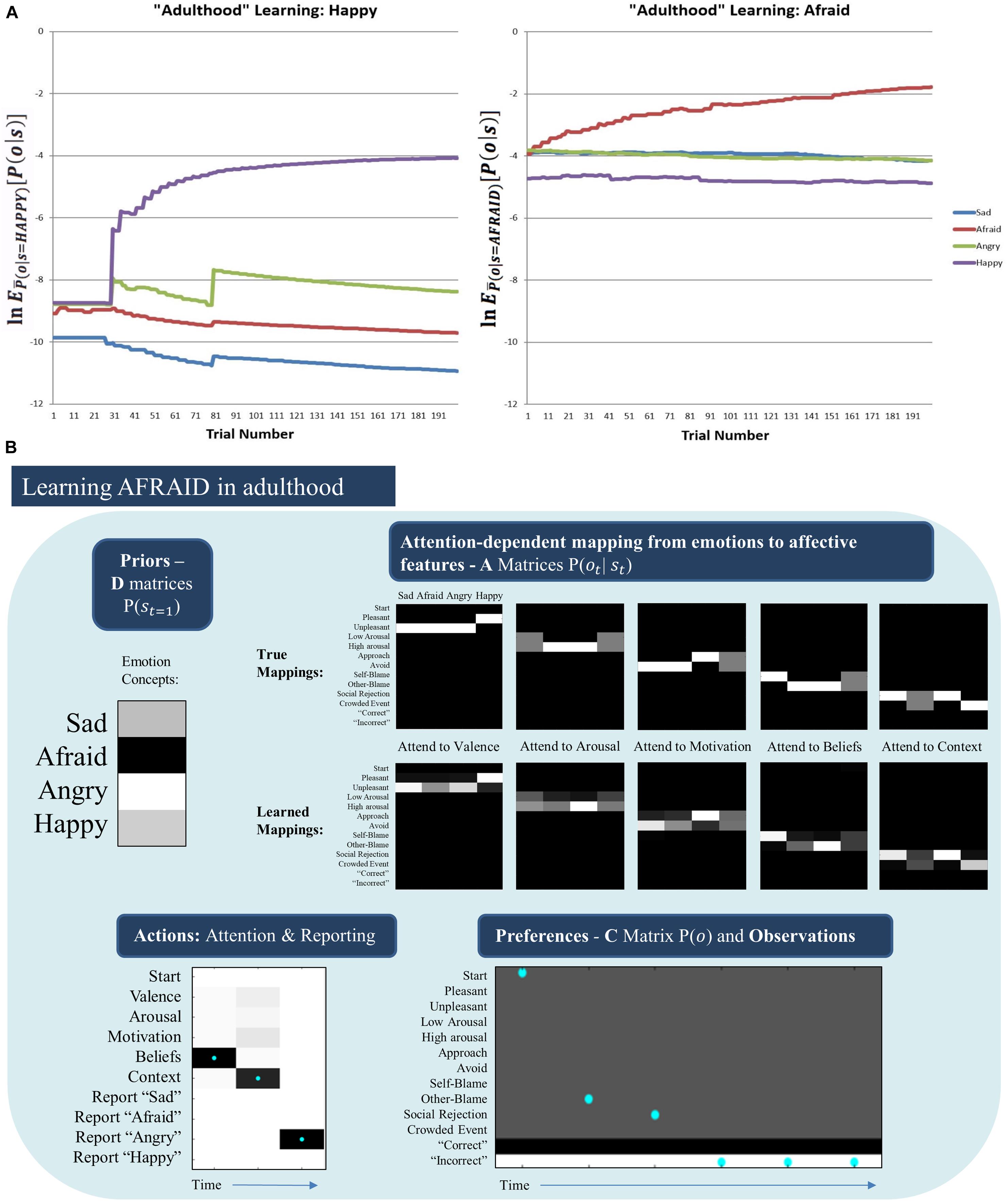

Somewhat surprisingly, the model was unable to acquire the AFRAID concept in its “adulthood” (Figure 7, middle right). To better understand this, for each trial we computed the expected evidence for each state $s$ in the agent’s model under the distribution of outcomes generated by the generative process given the true state $\bar{s}$ (using bar notation to distinguish the process from the model), $\mathbb{E}_{\bar{P}(o \mid \bar{s})}\left[P(o \mid s)\right]$. This was based on the reasoning that, if we treat the different emotional states as alternative models to explain the data, then the likelihood of data given states is equivalent to the evidence for a given state. Figure 8A plots the log transform of this expected evidence for each established emotion concept expected under the distribution of outcomes that would be generated if the “real” emotional state were AFRAID (right panel) and contrasts it with the analogous plot for HAPPY (left panel), which was a more easily acquired concept [we took the logarithm of this expected evidence (or likelihood) to emphasize the lower evidence values; note that higher (less negative) values correspond to greater evidence in these plots].
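The expected-evidence quantity can be sketched for the case in which outcome modalities are conditionally independent given the true state, so that the expectation of the product of likelihoods factorizes into a product over modalities. This factorization, and the two-modality matrices below, are assumptions for illustration; here the model's likelihoods are set equal to the process's.

```python
import numpy as np


def log_expected_evidence(process_A, model_A, true_state):
    """log E_{Pbar(o | s_bar)}[P(o | s)] for every concept s in the model.

    Assumes modalities are conditionally independent given the true state, so the
    expectation of the product of likelihoods is a product of per-modality expectations.
    """
    total = 0.0
    for m in process_A:
        p_bar = process_A[m][:, true_state]          # process: outcome distribution under s_bar
        total = total + np.log(p_bar @ model_A[m])   # sum_o Pbar(o | s_bar) P(o | s), per s
    return total


# Hypothetical matrices (columns: SAD, AFRAID, ANGRY, HAPPY); the model matches the process
A = {
    "valence": np.array([[0.1, 0.1, 0.1, 0.9], [0.9, 0.9, 0.9, 0.1]]),
    "action": np.array([[0.1, 0.1, 0.9, 0.5], [0.9, 0.9, 0.1, 0.5]]),
}
print(log_expected_evidence(A, A, true_state=1).round(3))
# With only these two modalities, SAD and AFRAID receive identical expected evidence
```

The tie between SAD and AFRAID in this toy case is a simple instance of the outcome-pattern overlap discussed in the text: an AFRAID outcome pattern can lend substantial evidence to other, already-established concepts.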

Figure 8. (A) (Left) The log expected probability of outcomes under the HAPPY outcome distribution, given each of the four emotion concepts in the agent’s model (i.e., given the “A” matrix it had learned) at each of 200 learning trials (note: the bar over P indicates the generative process distribution). (Right) The analogous results for the AFRAID outcome pattern. These plots illustrate that HAPPY may have been more easily acquired than AFRAID because the agent was less confident in the explanatory power of its current conceptual repertoire when it began to observe the HAPPY outcome pattern than when it began to observe the AFRAID outcome pattern. This also shows that the AFRAID outcome pattern provided some evidence for SAD and ANGRY in the agent’s model, likely due to outcome pattern overlap between AFRAID and these other two concepts. (B) Illustrates the priors (D) and likelihoods (A) learned after 50 observations of SAD, ANGRY, and HAPPY (150 trials total) followed by 200 trials in which SAD, AFRAID, ANGRY, and HAPPY were each presented 50 times. As can be seen, while the “A” matrices are fairly well learned, the agent acquired a strong prior expectation for ANGRY. As shown in the example trial in the bottom left, this led the agent to “jump to conclusions” and report ANGRY on AFRAID trials after making two observations consistent with both ANGRY and AFRAID (other-blame and social rejection, as shown in the bottom right). In this case, the agent would have needed to attend to her motivated actions (avoid) to correctly infer AFRAID.

As can be seen, in the case of HAPPY the three previously acquired emotion concepts had a relatively low ability to account for all observations, and so HAPPY was a useful construct in providing more accurate explanations of observed outcomes. In contrast, when learning AFRAID the model was already confident in its ability to explain its observations (i.e., the other concepts already had much higher evidence than in the case of HAPPY), and the AFRAID outcome pattern also provided moderate evidence for ANGRY and SAD (i.e., the outcome patterns of AFRAID and these other emotion concepts overlapped considerably). In Figure 8B, we illustrate the “A” and “D” matrix values the agent had learned after all 350 trials (i.e., 150 + 200, as described above), and an exemplar trial in which the agent mistook fear for anger (the most common confusion). As can be seen, while the “A” matrix mappings were learned fairly well, the agent had learned a stronger prior expectation for ANGRY than for AFRAID. In the example trial, the agent first attends to beliefs (observing other-blame) and then to the context (observing social rejection). These observations are consistent with both ANGRY and AFRAID; however, social rejection is more uniquely associated with ANGRY (i.e., AFRAID is also associated with crowded events, while ANGRY is not). Combined with the higher prior expectation for ANGRY, this leads the agent to “jump to conclusions” and become sufficiently confident to report ANGRY (at which point she receives “incorrect” social feedback). Here, correct inference of AFRAID (i.e., disambiguating AFRAID from ANGRY) would have required that the agent also attend to her action tendencies (where she would have observed avoidance motivation) before deciding which emotion to report.
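The effect of a skewed learned prior can be illustrated with exact Bayesian updating over emotional states after the same two observations (other-blame, then social rejection). All probabilities, including the ANGRY-biased prior, are hypothetical; in the model itself, the decision to report arises from expected free energy rather than from any fixed confidence threshold, so this sketch shows only how the prior inflates confidence in ANGRY.

```python
import numpy as np


def posterior(prior, likelihoods):
    """Exact posterior over emotional states after a sequence of observations (stable states)."""
    post = np.asarray(prior, dtype=float).copy()
    for lik in likelihoods:
        post = post * lik
    return post / post.sum()


STATES = ["SAD", "AFRAID", "ANGRY", "HAPPY"]
# Hypothetical likelihoods of each observation under each concept
other_blame = np.array([0.1, 0.9, 0.9, 0.5])
rejection = np.array([0.9, 0.5, 0.9, 0.1])
avoid = np.array([0.9, 0.9, 0.1, 0.5])

flat_prior = np.full(4, 0.25)
angry_prior = np.array([0.2, 0.1, 0.5, 0.2])   # hypothetical learned "D" favoring ANGRY

for name, prior in [("flat", flat_prior), ("ANGRY-biased", angry_prior)]:
    p2 = posterior(prior, [other_blame, rejection])
    print(name, "after 2 observations:", dict(zip(STATES, p2.round(2))))

# A third observation (avoidance) overturns ANGRY under the flat prior
p3 = posterior(flat_prior, [other_blame, rejection, avoid])
print("flat prior after avoidance:", STATES[int(np.argmax(p3))])   # AFRAID
```

With these numbers, belief in ANGRY after two observations rises from about 0.58 under the flat prior to about 0.85 under the biased prior, while attending to action tendencies would have shifted the flat-prior agent toward AFRAID.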

In this context, greater evidence for an unexplained outcome pattern would be required to “convince” the agent that her currently acquired concepts were not sufficient and that further information gathering (i.e., a greater number of attentional shifts) was necessary before becoming sufficiently confident to report her emotions. Based on this insight, we examined ways in which the model could be given stronger evidence that its current conceptual repertoire was insufficient to account for its observations. We first observed that we could improve model performance by “flooding” the model with an extended pattern of only AFRAID-consistent outcomes (i.e., 100 trials in a row), prior to reintroducing the other emotions in an interleaved fashion. As can be seen in Figure 7 (bottom right), this led to successful acquisition of AFRAID. However, it temporarily interfered with previous learning of the HAPPY concept. We also observed that by instead increasing the number of AFRAID learning trials from 200 to 600, the model eventually increased its accuracy to between 40 and 80% across the last 10 bins (last 50 trials) – indicating that learning could occur, but at a much slower rate.

Overall, these results confirmed that a new emotion concept could be learned in synthetic “adulthood,” as may occur, for example, in psycho-educational interventions during psychotherapy. However, these results also demonstrate that this type of learning can be more difficult. These results therefore suggest a kind of “sensitive period” early in life where emotion concepts may be more easily acquired.

Can Maladaptive Early Experiences Bias Emotion Conceptualization?

The final question we asked was whether unfortunate early experiences could hinder our agent’s ability to adaptively infer and/or learn about emotions. Based on the three-process model (Smith et al., 2018b), it has previously been suggested that at least two mechanisms could bring this about:

1. Impoverished early experiences (i.e., not being exposed to the different patterns of observations that would facilitate emotion concept learning).

2. Having early experiences that reinforce maladaptive cognitive habits (e.g., selective attention biases), which can hinder adaptive inference (if the concepts have been acquired) and learning (if the concepts have not yet been acquired).

We chose to examine both of these possibilities below.

Non-representative Early Emotional Experiences

To examine the first mechanism (involving the maladaptive influence of “unrepresentative” early experiences), we used the same learning procedure and parameters described in the previous sections. In this case, however, we exposed the agent to 200 outcomes generated by a generative process in which one emotion was experienced 50 times more often than each of the others. Specifically, we examined the cases of a childhood filled with either chronic fear/threat or chronic sadness, as a potential means of simulating the effects of continual childhood abuse or neglect (the sadness simulations might also be relevant to chronic depression lasting several years). We then examined the model’s ability to learn to infer new emotions over a subsequent 200 trials.
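The skewed childhood can be made concrete with a small sampling sketch. The section states only the 50:1 ratio and the 200 trials; the helper names below (`skewed_state_probs`, `sample_childhood`) are hypothetical, and the sketch assumes the emotion on each trial is drawn independently, which may not match the generative process actually used in the simulations.

```python
import random
from collections import Counter

EMOTIONS = ["HAPPY", "ANGRY", "AFRAID", "SAD"]

def skewed_state_probs(dominant, ratio=50.0):
    """Probability of each emotion when `dominant` is `ratio` times more
    likely than each of the other emotions (hypothetical helper)."""
    weights = {e: (ratio if e == dominant else 1.0) for e in EMOTIONS}
    total = sum(weights.values())
    return {e: w / total for e, w in weights.items()}

def sample_childhood(dominant, n_trials=200, ratio=50.0, seed=0):
    """Draw the true emotion on each of `n_trials` childhood trials,
    assuming independent draws (an illustrative assumption)."""
    rng = random.Random(seed)
    probs = skewed_state_probs(dominant, ratio)
    return rng.choices(EMOTIONS, weights=[probs[e] for e in EMOTIONS], k=n_trials)

probs = skewed_state_probs("AFRAID")
# AFRAID gets 50/53 (about 0.943); every other emotion gets 1/53 (about 0.019).
print({e: round(p, 3) for e, p in probs.items()})
print(Counter(sample_childhood("AFRAID")))
```

With a 50:1 ratio, each of the three non-dominant emotions is expected to occur only about 200/53, or roughly 3.8, times in 200 trials, which gives some intuition for why their concepts were so poorly learned.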

In general, we observed that primarily experiencing fear or sadness during childhood (which could also be thought of as undifferentiated experience, in the sense that these emotions could not be contrasted with others) led the agent to have notable difficulties in learning new emotions later in life. These results varied across repeated simulations and across emotions: for some emotions, verbal reporting continually fluctuated between high and low levels of accuracy; for others, accuracy remained near 0%; and yet others were well acquired. For example, in one representative simulation in which the agent primarily experienced fear during childhood, reporting accuracy continually varied for HAPPY (45% accuracy in the final 20 trials), remained at 0% for ANGRY, remained at 100% for AFRAID, and was stable at 100% for SAD. In contrast, in a representative simulation in which the agent primarily experienced sadness in childhood, accuracy was 0% for HAPPY, continually varied for ANGRY and AFRAID (55% accuracy in the final 20 trials for each), and was stably high for SAD (95% accuracy in the final 20 trials). Similar patterns of (highly variable) results were observed when performing the same simulations with the other two emotions.

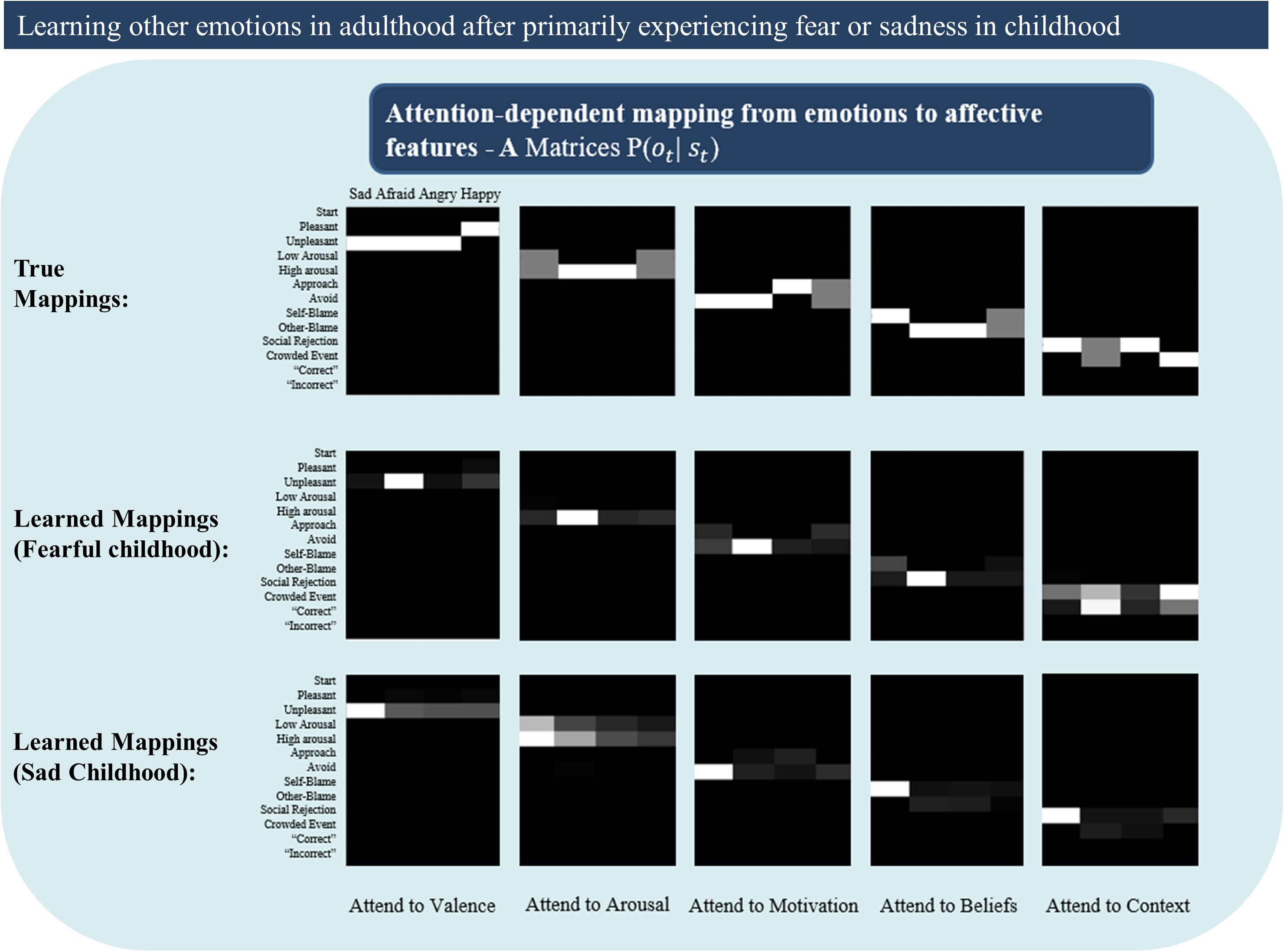

Unlike the results shown in Figure 8B – in which likelihood mappings were fairly well acquired (and precise prior expectations for specific emotions hindered correct inference) – poor performance here was explained primarily by poorly acquired likelihood mappings (i.e., the content of the other emotion concepts was often not learned). Figure 9 illustrates this by presenting the “A” matrices learned by the agent after childhoods dominated by either fear or sadness. As can be seen there, the learned likelihood mappings do not strongly resemble the true mappings within the generative process. In general, these results support the notion that unrepresentative or insufficiently diverse early emotional experiences could hinder later learning.

Figure 9. Illustrates poorly learned emotion concepts (likelihood mappings or “A” matrices) in adulthood due to a childhood primarily characterized by either fear or sadness. This could be thought of as simulating early adversity involving continual abuse or neglect, or perhaps cases of chronic depression. See text for more details.
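One way to see why the non-dominant columns of “A” stay poorly specified is to look at Dirichlet count learning in isolation. The sketch below is a deliberate simplification, not the model used here: it assumes a single hypothetical outcome modality, a made-up 0.7 probability of each emotion’s “typical” outcome, and that the true emotion on each trial is known exactly (in the full model, states are themselves inferred). Counts are accumulated as a ← a + o ⊗ s and then normalized column-wise.

```python
import random

EMOTIONS = ["HAPPY", "ANGRY", "AFRAID", "SAD"]
N_OUTCOMES = 4  # hypothetical: one outcome modality, one "typical" level per emotion

def true_likelihood(state, p_typical=0.7):
    """Toy generative likelihood P(o | s): the state's typical outcome with
    probability p_typical, the remaining mass spread over the other outcomes."""
    other = (1.0 - p_typical) / (N_OUTCOMES - 1)
    return [p_typical if o == state else other for o in range(N_OUTCOMES)]

def learn_A(states, prior_count=1.0, seed=0):
    """Accumulate Dirichlet counts a[s][o] (a <- a + o x s), assuming the
    state on each trial is known exactly, then return the expected A."""
    rng = random.Random(seed)
    a = [[prior_count] * N_OUTCOMES for _ in EMOTIONS]  # flat initial concepts
    for s in states:
        o = rng.choices(range(N_OUTCOMES), weights=true_likelihood(s))[0]
        a[s][o] += 1.0
    A = [[c / sum(col) for c in col] for col in a]  # A[s][o] = E[P(o | s)]
    return A, a

# Childhood dominated by fear: AFRAID (index 2) is 50 times more likely.
rng = random.Random(1)
childhood = rng.choices(range(4), weights=[1, 1, 50, 1], k=200)
A, counts = learn_A(childhood)
for s, name in enumerate(EMOTIONS):
    print(name, "observations:", childhood.count(s),
          "learned:", [round(p, 2) for p in A[s]])
```

Nearly all the evidence lands in the AFRAID column, which ends up close to the toy generative mapping, while the other columns rest on only a handful of observations and so remain largely shaped by the flat prior and by sampling noise.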

Maladaptive Attention Biases

To examine the second mechanism proposed by the three-process model (involving maladaptive patterns of habitual attention allocation), we equipped the model’s “E” matrix with high prior expectations over specific policies, which made the agent 50 times more likely to attend to some sources of information than to others. This included: (i) an “external attention bias,” where the agent had a strong habit of focusing on external stimuli (context) and its beliefs about self- and other-blame; (ii) an “internal attention bias,” where the agent had a strong habit of attending only to valence and arousal; and (iii) a “somatic attention bias,” where the agent had a strong habit of attending only to its arousal level and the approach vs. avoid modality.
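How a habit prior can override information seeking can be sketched numerically. In standard discrete-state active inference formulations, the prior over policies is written as σ(ln E − γG), where G is the expected free energy of each policy (lower is better) and γ is a precision. The policy labels, G values, and γ = 1 below are hypothetical, chosen only to show the effect of a 50-fold entry in E.

```python
import math

def softmax(values):
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def policy_prior(E, G, gamma=1.0):
    """Prior over policies, softmax(ln E - gamma * G): habits (E) and
    expected free energy (G, lower = better) combine additively in log space."""
    return softmax([math.log(e) - gamma * g for e, g in zip(E, G)])

# Hypothetical, simplified policies (which pair of modalities to sample)
POLICIES = ["context+beliefs", "valence+arousal", "arousal+action", "action+beliefs"]
G = [1.0, 1.0, 1.0, 0.5]            # assume the last policy is the most informative
E_flat = [1.0, 1.0, 1.0, 1.0]       # no habit
E_external = [50.0, 1.0, 1.0, 1.0]  # "external attention bias": 50-fold habit

p_flat = policy_prior(E_flat, G)
p_external = policy_prior(E_external, G)
print([round(p, 3) for p in p_flat])      # informative policy is favored
print([round(p, 3) for p in p_external])  # habit dominates
```

Without a habit, the most informative policy is the most probable one; with the 50-fold bias, the habitual policy receives 50/(52 + e^0.5), or about 93%, of the prior probability even though it is less informative.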

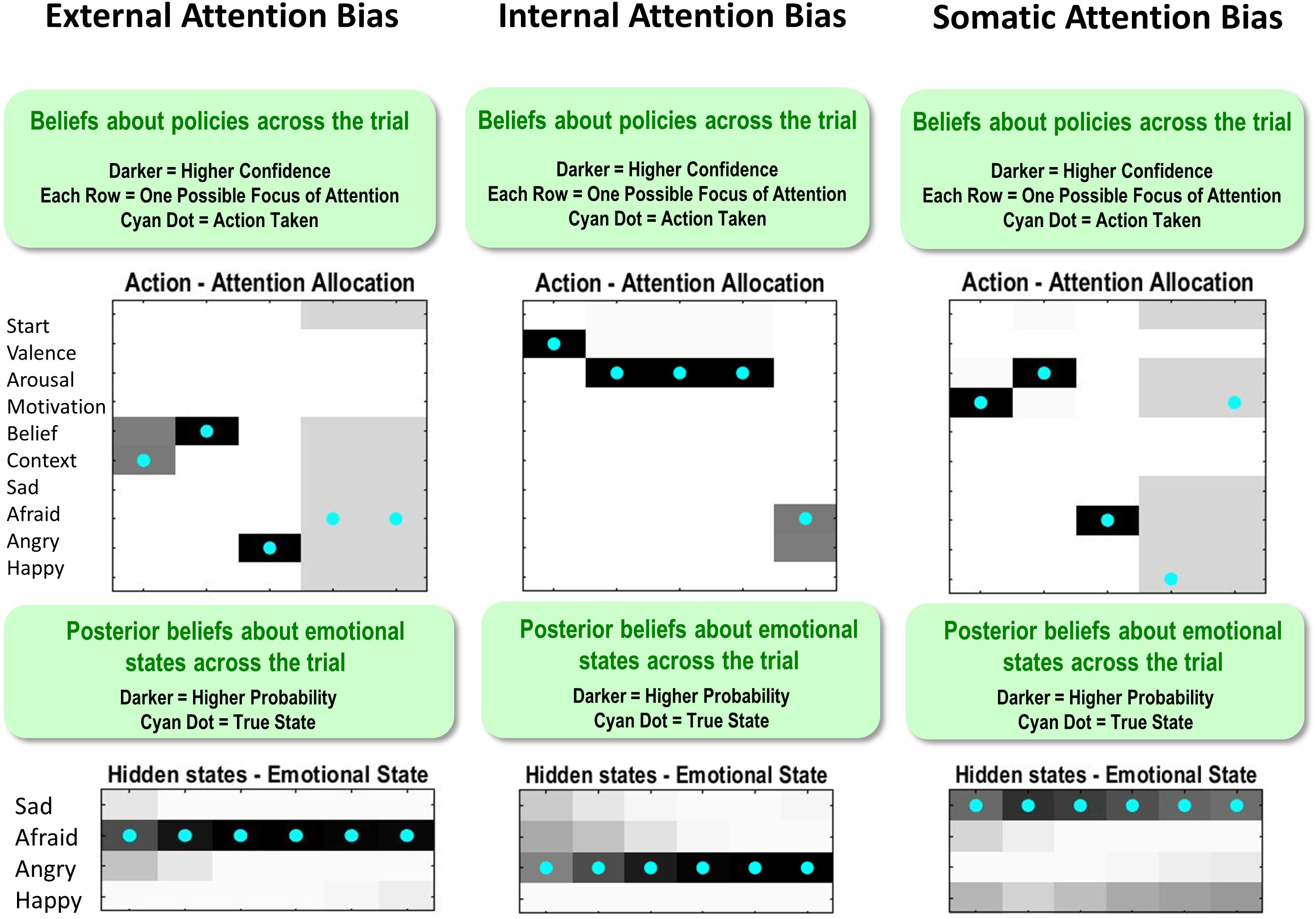

Figure 10 shows how these different attentional biases promote false inference. On the left, the true state is AFRAID, and the externally focused agent first attends to the stimulus/context (social rejection) and then to her beliefs (other-blame); however, without paying attention to her motivated action (avoid), she falsely reports feeling ANGRY instead of AFRAID (note that, following feedback, there is a retrospective inference that AFRAID was more probable; similar retrospective inferences after feedback are also shown in the other two examples in Figure 10). In the middle, the true state is ANGRY, and the internally focused agent first attends to valence (unpleasant) and then to arousal (high); however, without paying attention to her action tendency (approach), she falsely reports feeling AFRAID instead of ANGRY. On the right, the true state is SAD, and the somatically focused agent attends to her motivated action (avoid) and to arousal (high); however, without attending to beliefs (self-blame), she falsely reports feeling AFRAID instead of SAD.

Figure 10. This figure illustrates single-trial effects of different attentional biases, each promoting false inference in a model that has already acquired precise emotion concepts (i.e., temperature parameter = 2 for both “A” and “B” matrices). External attention bias was implemented by giving the agent high prior expectations (“E” matrix values) that she would attend to the context and to her beliefs. Internal attention bias was implemented by giving the agent high prior expectations that she would attend to valence and arousal. Somatic attention bias was implemented by giving the agent high prior expectations that she would attend to arousal and action motivation. See the main text for a detailed description of the false inferences displayed for each bias. Note: the bottom panels indicate posterior beliefs, illustrating the agent’s retrospective beliefs at the end of the trial about her emotional state throughout the trial. Also note that the agent’s actions in the upper left and upper right after reporting should be ignored (i.e., they are simply an artifact of the trial continuing after the agent’s report, when there is no goal and therefore no confidence in what to do).

Importantly, these false reports occur in an agent that has already acquired very precise emotion concepts. Thus, they do not reflect a failure to learn about emotions, but simply the effect of having learned poor habits of mental action.
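The single-trial errors in Figure 10 follow from Bayesian inference over only a subset of modalities. The sketch below uses hypothetical likelihood values (not the model’s actual “A” matrices) and assumes the modalities are conditionally independent given the emotion. It reproduces the logic of the somatic and internal examples: arousal and action alone favor AFRAID over SAD, and valence and arousal alone cannot separate ANGRY from AFRAID.

```python
EMOTIONS = ["HAPPY", "ANGRY", "AFRAID", "SAD"]

# Hypothetical toy likelihoods P(level | emotion) for four outcome modalities.
LIKELIHOOD = {
    "HAPPY":  {"valence": {"pleasant": 0.9, "unpleasant": 0.1},
               "arousal": {"high": 0.6, "low": 0.4},
               "action": {"approach": 0.9, "avoid": 0.1},
               "blame": {"self": 0.5, "other": 0.5}},
    "ANGRY":  {"valence": {"pleasant": 0.1, "unpleasant": 0.9},
               "arousal": {"high": 0.9, "low": 0.1},
               "action": {"approach": 0.9, "avoid": 0.1},
               "blame": {"self": 0.1, "other": 0.9}},
    "AFRAID": {"valence": {"pleasant": 0.1, "unpleasant": 0.9},
               "arousal": {"high": 0.9, "low": 0.1},
               "action": {"approach": 0.1, "avoid": 0.9},
               "blame": {"self": 0.2, "other": 0.8}},
    "SAD":    {"valence": {"pleasant": 0.1, "unpleasant": 0.9},
               "arousal": {"high": 0.3, "low": 0.7},
               "action": {"approach": 0.1, "avoid": 0.9},
               "blame": {"self": 0.9, "other": 0.1}},
}

def posterior(observed):
    """Posterior over emotions (flat prior) given only the attended modalities."""
    unnorm = {}
    for e in EMOTIONS:
        p = 1.0 / len(EMOTIONS)
        for modality, level in observed.items():
            p *= LIKELIHOOD[e][modality][level]
        unnorm[e] = p
    total = sum(unnorm.values())
    return {e: p / total for e, p in unnorm.items()}

def report(observed):
    post = posterior(observed)
    return max(post, key=post.get)

# Somatic bias, true state SAD: only action and arousal are sampled.
print(report({"action": "avoid", "arousal": "high"}))                   # AFRAID
print(report({"action": "avoid", "arousal": "high", "blame": "self"}))  # SAD
# Internal bias, true state ANGRY: valence and arousal leave ANGRY and AFRAID tied.
print(posterior({"valence": "unpleasant", "arousal": "high"}))
print(report({"valence": "unpleasant", "arousal": "high", "action": "approach"}))  # ANGRY
```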

The results of these examples were confirmed in simulations of 40 interleaved emotion trials (10 per emotion) in an agent who had already acquired precise emotion concepts (temperature parameter = 2 for both “A” and “B” matrices; no learning). In these simulations, we observed that the externally focused agent had 100% accuracy for SAD, 10% accuracy for AFRAID, 100% accuracy for ANGRY, and 60% accuracy for HAPPY. The internally focused agent had 100% accuracy for SAD, 50% accuracy for AFRAID, 60% accuracy for ANGRY, and 100% accuracy for HAPPY. The somatically focused agent had 10% accuracy for SAD, 100% accuracy for AFRAID, 100% accuracy for ANGRY, and 0% accuracy for HAPPY. Thus, without adaptive attentional habits, the agent was prone to misrepresent her emotions.
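For a single summary per bias, the per-emotion accuracies above can be pooled. The calculation below only re-expresses the reported figures, assuming exactly 10 trials per emotion as stated; it gives 27/40 correct for the externally focused agent, 31/40 for the internally focused agent, and 21/40 for the somatically focused agent, against a chance level of 25% for four labels.

```python
# Per-emotion reporting accuracy as reported in the text (10 trials per emotion).
ACCURACY = {
    "external": {"SAD": 1.0, "AFRAID": 0.1, "ANGRY": 1.0, "HAPPY": 0.6},
    "internal": {"SAD": 1.0, "AFRAID": 0.5, "ANGRY": 0.6, "HAPPY": 1.0},
    "somatic":  {"SAD": 0.1, "AFRAID": 1.0, "ANGRY": 1.0, "HAPPY": 0.0},
}
TRIALS_PER_EMOTION = 10
CHANCE = 1 / 4  # four emotion labels to choose from

def overall(acc):
    """Convert per-emotion accuracies back to correct-trial counts and pool them."""
    correct = sum(round(a * TRIALS_PER_EMOTION) for a in acc.values())
    return correct, correct / (TRIALS_PER_EMOTION * len(acc))

for bias, acc in ACCURACY.items():
    correct, rate = overall(acc)
    print(f"{bias}: {correct}/40 correct ({rate:.1%}; chance = {CHANCE:.0%})")
```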

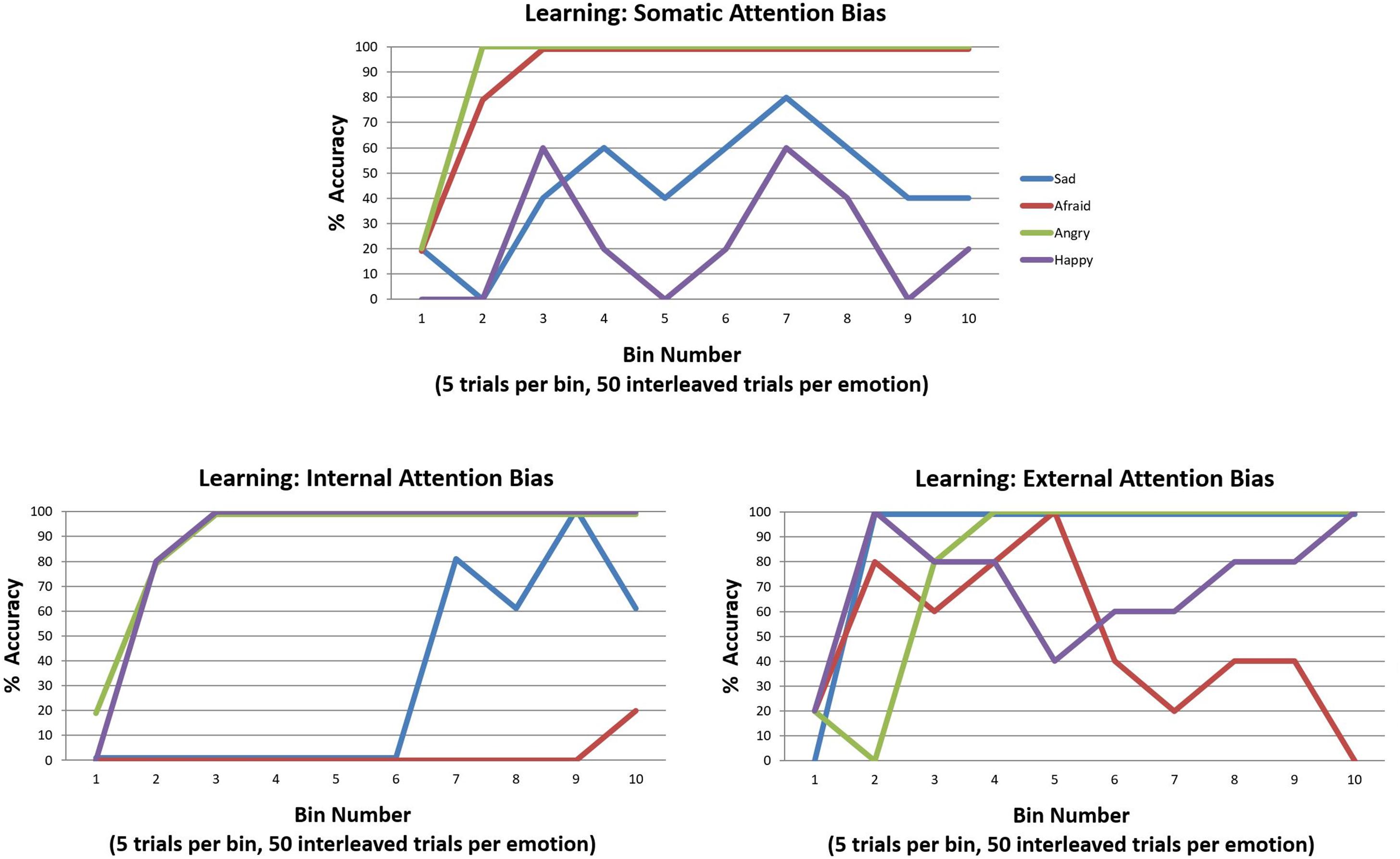

In our final simulations, we examined how learning these kinds of attentional biases in childhood could hinder emotion concept learning. To do so, we used the same learning procedure described in the earlier section “Can Emotion Concepts Be Learned in Childhood?”. In this case, however, we simply equipped the model with the three different attentional biases (“E” matrix prior distributions over policies) and assessed its ability to learn emotion concepts over the 200 trials. The results of these simulations are provided in Figure 11. A somatic attention bias primarily allowed the agent to learn two emotion concepts, which corresponded to ANGRY and AFRAID. However, it is worth highlighting that the low accuracy for the other emotions means that their respective patterns were subsumed under the first two. Thus, it is more accurate to say that the agent learned two affective concepts, which largely predicted only approach vs. avoidance. In contrast, the internally biased agent easily acquired the distinction between pleasant (HAPPY) and unpleasant emotions (all lumped into ANGRY) and began to learn a third concept that distinguished between low vs. high arousal (i.e., SAD vs. ANGRY). However, it did not conceptualize the distinction between approach and avoidance (i.e., ANGRY vs. AFRAID). Lastly, the externally focused agent was somewhat labile in concept acquisition; by the end, she could not predict approach vs. avoidance, but she possessed externally focused concepts with content along the lines of “the state I am in when I’m socially rejected and think it’s my fault vs. someone else’s fault” (SAD vs. ANGRY) and “the state I am in when at a crowded event” (HAPPY). These results provide strong support for the potential role of attentional biases in subverting emotional awareness.

Figure 11. Illustration of the simulated effects of each of the three attention biases (described in the legend for Figure 10) on emotion concept learning over 200 trials (initialized with a model that possessed a completely flat “A” matrix likelihood distribution). Each bias induces aberrant learning in a different way, often leading to the acquisition of two coarser-grained emotion concepts rather than the four fine-grained concepts distinguished in the generative process. See the main text for a detailed description and interpretation.
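How an attention habit constrains what a concept can contain can be illustrated by gating Dirichlet learning on attention: unattended modalities generate no observations, so their counts never move. This is a simplified sketch with a hypothetical toy generative process (binary modalities, made-up probabilities) and the assumption that the true emotion is known on every trial; it therefore omits the misinferences that, in the full model, caused some emotion patterns to be subsumed under others.

```python
import random

MODALITIES = ["valence", "arousal", "action", "blame"]
# Hypothetical toy generative process: P(level 1 | emotion) for each binary
# modality, where level 1 = unpleasant / high / avoid / self-blame.
P_LEVEL1 = {
    "HAPPY":  {"valence": 0.1, "arousal": 0.6, "action": 0.1, "blame": 0.5},
    "ANGRY":  {"valence": 0.9, "arousal": 0.9, "action": 0.1, "blame": 0.1},
    "AFRAID": {"valence": 0.9, "arousal": 0.9, "action": 0.9, "blame": 0.2},
    "SAD":    {"valence": 0.9, "arousal": 0.3, "action": 0.9, "blame": 0.9},
}

def learn_with_attention(attended, n_trials=200, prior=1.0, seed=0):
    """Dirichlet-count learning in which only attended modalities are observed.
    Simplification: the true emotion on each trial is assumed known."""
    rng = random.Random(seed)
    states = list(P_LEVEL1)
    counts = {s: {m: [prior, prior] for m in MODALITIES} for s in states}
    for t in range(n_trials):
        s = states[t % len(states)]   # interleaved emotions
        for m in attended:            # unattended modalities yield no data
            level = 1 if rng.random() < P_LEVEL1[s][m] else 0
            counts[s][m][level] += 1.0
    # Expected P(level 1 | emotion) under each Dirichlet (here Beta) posterior
    return {s: {m: c[1] / sum(c) for m, c in mods.items()}
            for s, mods in counts.items()}

somatic = learn_with_attention(["arousal", "action"])
for emotion, probs in somatic.items():
    print(emotion, {m: round(p, 2) for m, p in probs.items()})
```

With the somatic setting, every learned concept predicts only arousal and approach vs. avoidance, while valence and blame stay at 0.5 for all four emotions, so any contrast carried by valence or blame (e.g., pleasant vs. unpleasant) cannot be represented.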

Discussion

The active inference formulation of emotional processing we have presented represents a first step toward the goal of building quantitative computational models of the ability to learn, recognize, and understand (be “aware” of) one’s own emotions. Although this is clearly a toy model, it does appear to offer some insights, conceptual advances, and possible predictions.

First, in simulating differences in the precision (specificity) of emotion concepts, some intuitive but interesting phenomena emerged. As would be expected, differences in the specificity of the content of emotion concepts – here captured by the precision of the likelihood mapping from states to outcomes (i.e., the precision of what pattern of outcomes each emotion concept predicted) – led to differences in inferential accuracy. This suggests that those with more precise emotion concepts would show greater understanding of their own affective responses. Perhaps less intuitively, beliefs about the stability of emotional states – here captured by the precision of expected state transitions – also influenced inferential accuracy. This predicts that believing emotional states to be more stable (less labile) over time would also facilitate one’s ability to correctly infer what one is feeling. This appears consistent with the low levels of emotional awareness or granularity observed in borderline personality disorder, which is characterized by emotional instability (Levine et al., 1997; Suvak et al., 2011).
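The effect of likelihood precision on inference can be illustrated with a precision-weighting transform. The simulations describe precision through a “temperature parameter” whose exact form is not given in this section; the sketch assumes the common softmax(β ln p) transform applied to each column of a hypothetical base mapping, with larger β meaning a more precise concept, and a flat prior over four emotions.

```python
def sharpen(column, beta):
    """Raise a categorical distribution to the power beta and renormalize,
    i.e. softmax(beta * ln p); beta > 1 makes it more precise."""
    powered = [p ** beta for p in column]
    total = sum(powered)
    return [p / total for p in powered]

def infer(A, obs):
    """Posterior over states after one observation, with a flat prior.
    A[s][o] = P(o | s), so the flat prior cancels in the normalization."""
    joint = [col[obs] for col in A]
    total = sum(joint)
    return [j / total for j in joint]

# Hypothetical base mapping: each emotion makes its "typical" outcome
# twice as likely as any other outcome.
BASE = [[0.4 if o == s else 0.2 for o in range(4)] for s in range(4)]

for beta in (1.0, 2.0, 4.0):
    A = [sharpen(col, beta) for col in BASE]
    print(beta, round(infer(A, obs=0)[0], 3))
```

Under these assumptions, the posterior probability of the matching emotion after a single observation rises from 0.4 at β = 1 to 4/7 (about 0.57) at β = 2 and about 0.84 at β = 4.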

Next, in simulating emotion concept learning, a few interesting insights emerged. Our simulations first confirmed that emotion concepts could successfully be learned, even when their content was cast (as done here) as complex, probabilistic, and highly overlapping response patterns across interoceptive, proprioceptive, exteroceptive, and cognitive domains. This was true when all emotion concepts needed to be learned simultaneously (as in childhood; see Widen and Russell, 2008; Hietanen et al., 2016), and was also true when a single new emotion concept was learned after others had already been acquired (as in adulthood during psycho-educational therapeutic interventions; e.g., see Hayes and Smith, 2005; Barlow et al., 2016; Burger et al., 2016; Lumley et al., 2017).

These results depended on whether the observed outcomes during learning were sufficiently precise and consistent. One finding worth highlighting was that emotion concept learning was hindered when the precision of transitions among emotional states was too low. This result may be relevant to previous empirical results in populations known to show reduced understanding of emotional states, such as those with autism (Silani et al., 2008; Erbas et al., 2013) and those who grow up in socially impoverished or otherwise adverse (unpredictable) environments (Colvert et al., 2008; Lane et al., 2018). In autism, it has been suggested that overly imprecise beliefs about state transitions may hinder mental state learning, because such states require tracking abstract behavioral patterns over long timescales (Lawson et al., 2014, 2017; Haker et al., 2016). Children who grow up in impoverished environments may not have the opportunity to interact with others, to observe stable patterns in others’ affective responses, or to receive feedback about their own (Pears and Fisher, 2005; Lane et al., 2018; Smith et al., 2018b). Our results reproduce these phenomena, which represent important examples of mental state learning that may depend on observing consistent outcome patterns that are relatively stable over time.
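The role of transition precision can be sketched by how a belief is carried forward in time: the prior for the next time step is B q, where q is the current posterior over emotional states. The stay-or-switch form of B and the numbers below are hypothetical. When the stay probability equals 1/n (here 0.25 for four emotions), the predicted prior is completely flat, so nothing inferred on one step constrains the next, and evidence about a stable emotional state cannot accumulate.

```python
import math

def transition_matrix(n_states, p_stay):
    """Hypothetical B matrix, B[i][j] = P(state i at t+1 | state j at t):
    stay in the same emotional state with p_stay, otherwise switch uniformly."""
    p_switch = (1.0 - p_stay) / (n_states - 1)
    return [[p_stay if i == j else p_switch for j in range(n_states)]
            for i in range(n_states)]

def predict(B, q):
    """Prior over next states, B q."""
    return [sum(B[i][j] * q[j] for j in range(len(q))) for i in range(len(B))]

def entropy(p):
    """Shannon entropy in nats; higher means a less informative prior."""
    return -sum(x * math.log(x) for x in p if x > 0)

q_now = [0.85, 0.05, 0.05, 0.05]  # hypothetical confident belief at time t

for p_stay in (0.25, 0.6, 0.95):
    prior_next = predict(transition_matrix(4, p_stay), q_now)
    print(p_stay, [round(x, 3) for x in prior_next], round(entropy(prior_next), 3))
```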

As emotion concepts are known to differ across cultures (Russell, 1991), our model and results may also relate to the learning mechanisms that allow for this type of culture-specific emotion categorization. Specifically, the “correct” and “incorrect” social feedback in our model could be understood as linguistic feedback from others in one’s culture (e.g., a parent labeling emotional reactions for a child using culture-specific categories). If this feedback is sufficiently precise, then emotion concept learning could proceed effectively, even if the probabilistic mapping from emotion categories to other perceptual outcomes is fairly imprecise (as appears to be the case empirically; Barrett, 2006, 2017).