- ¹Dipartimento di Neuroscienze, Università di Parma, Parma, Italy

- ²Consiglio Nazionale delle Ricerche, Istituto di Neuroscienze, Parma, Italy

Spoken language is an innate ability of the human being and represents the most widespread mode of social communication. The ability to share concepts, intentions and feelings, and also to respond to what others are feeling or saying, is crucial during social interactions. A growing body of evidence suggests that language evolved from manual gestures, gradually incorporating motor acts with vocal elements. In this evolutionary context, the human mirror mechanism (MM) would permit the passage from “doing something” to “communicating it to someone else.” In this perspective, the MM would mediate semantic processes, being involved in both the execution and the understanding of messages expressed by words or gestures. Thus, the recognition of action-related words would activate somatosensory regions, reflecting the semantic grounding of these symbols in action information. Here, we address the role of the sensorimotor cortex, and of the human MM in general, in both language perception and understanding, focusing on recent studies on the integration between symbolic gestures and speech. We conclude by documenting evidence that the MM also codes the emotional aspects conveyed by manual, facial and body signals during communication, and how these signals act in concert with language to modulate the comprehension of others’ messages and behavior, in line with an “embodied” and integrated view of social interaction.

Introduction

In recent years, the hypothesis that language is “embodied” in sensory and motor experience has been widely discussed in the field of cognitive neuroscience.

In this review, we will first discuss recent behavioral and neurophysiological studies confirming the essential role of sensorimotor brain areas in language processing, addressing the controversial issues and reviewing recent results that suggest an extended view of embodied theories.

We will then discuss this hypothesis, providing evidence for the gestural origin of language and focusing on studies investigating the functional relation between manual gesture and speech and the neural circuits involved in their processing and production.

Finally, we will report evidence on the functional role of manual and facial gestures as communicative signals that, in concert with language, express emotional messages in the extended context of social interaction.

All these points provide evidence in favor of an integrated body/verbal communication system mediated by the mirror mechanism (MM).

What Is Embodied About Communication? The Involvement of Mirror Mechanism in Language Processing

It is well known that our thoughts are verbally expressed by symbols that have little or no physical relationship with the objects, actions and feelings to which they refer. Understanding how linguistic symbols may have become associated with aspects of the real world is one of the thorniest issues in the study of language and its evolution. In cognitive psychology, a classic debate has concerned how language is stored and retrieved in the human brain.

According to the classical “amodal approach,” concepts are expressed in a symbolic format (Fodor, 1998; Mahon and Caramazza, 2009). The core assumption is that word meanings are like a formal language, composed of arbitrary symbols that represent aspects of the world (Chomsky, 1980; Kintsch, 1998; Fodor, 2000); to understand a sentence, words are traced back to the symbols that represent their meaning. In other terms, there would be an arbitrary relationship between a word and its referent (Fodor, 1975, 2000; Pinker, 1994; Burgess and Lund, 1997; Kintsch, 1998). Neuropsychological studies provide interesting evidence for the amodal nature of concepts. In semantic dementia, for example, damage to the temporal lobe and adjacent areas results in an impairment of conceptual processing (Patterson et al., 2007). A characteristic of this form of dementia is the degeneration of the anterior temporal lobe (ATL), which several imaging studies have shown to play a critical role in amodal conceptual representations (for a meta-analysis, see Visser et al., 2010).

In contrast, embodied approaches to language propose that conceptual knowledge is grounded in bodily experience and in the sensorimotor systems (Gallese and Lakoff, 2005; Barsalou, 2008; Casile, 2012) that are involved in forming and retrieving semantic knowledge (Kiefer and Pulvermüller, 2012). These theories are supported by the discovery of mirror neurons (MNs), identified in the ventral premotor area (F5) of the macaque (Gallese et al., 1996; Rizzolatti et al., 2014). MNs would underlie both action comprehension and language understanding, constituting the neural substrate from which more sophisticated forms of communication evolved (Rizzolatti and Arbib, 1998; Corballis, 2010). The MM is based on motor resonance, the process that mediates action comprehension: when we observe someone performing an action, the visual input of the observed motor act reaches and activates the same fronto-parietal networks recruited during the execution of that action (Nelissen et al., 2011), giving the observer direct access to his or her own motor representation of it. This mechanism was hypothesized to extend to language comprehension, namely when we listen to a word or a sentence related to an action (e.g., “grasping an apple”), allowing automatic access to action/word semantics (Glenberg and Kaschak, 2002; Pulvermüller, 2005; Fischer and Zwaan, 2008; Innocenti et al., 2014; Vukovic et al., 2017; Courson et al., 2018; Dalla Volta et al., 2018). This means that we comprehend words referring to concrete objects or actions by directly accessing their meaning through our sensorimotor experience (Barsalou, 2008).

Sensorimotor activation in response to language processing has been demonstrated by a large number of neurophysiological studies. Functional magnetic resonance imaging (fMRI) studies demonstrated that reading action verbs activated motor and premotor areas similar to those active when participants actually moved the effector associated with these verbs (Buccino et al., 2001; Hauk et al., 2004). This “somatotopy” is one of the major arguments supporting the idea that concrete concepts are grounded in the action–perception systems of the brain (Pulvermüller, 2005; Barsalou, 2008). Transcranial magnetic stimulation (TMS) results confirmed this somatotopy in the human primary motor cortex (M1), demonstrating that stimulation of the arm or leg regions of M1 facilitated the recognition of action verbs involving movement of the respective extremities (Pulvermüller, 2005; Innocenti et al., 2014).

However, one of the major criticisms of embodied theory is that the motor system plays an epiphenomenal role during language processing (Mahon and Caramazza, 2008). In this view, motor system activations are not necessary for language understanding; rather, they result from a cascade of spreading activation triggered by the amodal semantic representation, or are a consequence of explicit perceptual or motor imagery induced by the semantic tasks.

To address this point, further neurophysiological studies using time-resolved techniques such as high-density electroencephalography (EEG) or magnetoencephalography (MEG) indicated that the motor system is involved in an early time window corresponding to lexical-semantic access (Pulvermüller, 2005; Hauk et al., 2008; Dalla Volta et al., 2014; Mollo et al., 2016), supporting a causal relationship between motor cortex activation and action verb comprehension. Interestingly, recent evidence (Dalla Volta et al., 2018; García et al., 2019) has dissociated the contribution of the motor system during early semantic access from the activation of lateral temporal-occipital areas during deeper semantic processing (e.g., categorization tasks) and multimodal reactivation.

Another outstanding question is raised by conflicting data on the processing of non-action language (i.e., “abstract” concepts). According to the Dual Coding Theory (Paivio, 1991), concrete words are represented in both linguistic and sensorimotor-based systems, while abstract words are represented only in the linguistic one. Neuroimaging studies support this idea, showing that the processing of abstract words is associated with greater activation in the left inferior frontal gyrus (IFG) and the superior temporal cortex (Binder et al., 2005, 2009; Wang et al., 2010), areas commonly involved in linguistic processing. The Context Availability Hypothesis instead argues that abstract concepts have greater contextual ambiguity than concrete concepts (Schwanenflugel et al., 1988). While concrete words have direct relations with the objects or actions they refer to, abstract words can have multiple meanings and need more time to be understood (Dalla Volta et al., 2014, 2018; Buccino et al., 2019). It follows that they can be disambiguated when embedded in a “concrete context” that provides elements narrowing their meaning (Glenberg et al., 2008; Boulenger et al., 2009; Scorolli et al., 2011, 2012; Sakreida et al., 2013). Research on action metaphors (e.g., “grasp an idea”), which involve both action and thinking, has found an engagement of sensorimotor systems even when action language is figurative (Boulenger et al., 2009, 2012; Cuccio et al., 2014). Nevertheless, some studies observed motor activation only for literal, but not for idiomatic, sentences (Aziz-Zadeh et al., 2006; Raposo et al., 2009).

In a recent TMS study, De Marco et al. (2018) tested the effect of context in modulating motor cortex excitability during the semantic processing of abstract words. The presentation of a congruent symbolic manual gesture as a prime increased hand M1 excitability in the early phase of semantic processing and sped up word comprehension. These results indicate that semantic access to abstract concepts may be mediated by sensorimotor areas when those concepts are grounded in a familiar motor context.

Gestures: A Bridge Between Language and Action

One of the major contributions in support of embodied cognition theory derives from the hypothesis of the motor origin of spoken language. Comparative neuroanatomical and neurophysiological studies indicate that area F5 in macaques is cytoarchitectonically comparable to Brodmann area 44 in the human inferior frontal gyrus (IFG), which is part of Broca’s area (Petrides et al., 2005, 2012). This area would be active not only during action observation in humans but also during language understanding (Fadiga et al., 1995, 2005; Pulvermüller et al., 2003), transforming heard phonemes into the corresponding motor representations of the same sounds (Fadiga et al., 2002; Gentilucci et al., 2006). In this way, similarly to what happens during action comprehension, the MM would directly link the sender and the receiver of a message (manual or vocal) in a communicative context. For this reason, it has been hypothesized to be the ancestral system that favored the evolution of language (Rizzolatti and Arbib, 1998).

Gentilucci and Corballis (2006) presented numerous empirical findings that support the importance of the motor system in the origin of language. Specifically, the execution or observation of a grasp with the hand would activate a command to grasp with the mouth, and vice versa (Gentilucci et al., 2001, 2004, 2012; Gentilucci, 2003; De Stefani et al., 2013a). On the basis of these results, the authors proposed that language evolved from arm postures that were progressively integrated with mouth articulation postures by means of a double hand–mouth command system (Gentilucci and Corballis, 2006). At some point in evolutionary development, the simple vocalizations and gestures inherited from our primate ancestors gave rise to a sophisticated system of language for interacting with other conspecifics (Rizzolatti and Arbib, 1998; Arbib, 2003, 2005; Gentilucci and Corballis, 2006; Armstrong and Wilcox, 2007; Fogassi and Ferrari, 2007; Corballis, 2010), in which manual postures became associated with sounds.

Nowadays, during a face-to-face conversation, spoken language and communicative motor acts operate together in a synchronized way. Most gestures are produced in association with speech, and the message takes on its specific meaning from their combination. Nevertheless, a particular type of gesture, the symbolic gesture (e.g., OK or STOP), can be delivered in utter silence because it replaces the formalized, linguistic component of the expression present in speech (Kendon, 1982, 1988, 2004). A process of conventionalization (Burling, 1999) is responsible for transforming the meaningless hand movements that accompany verbal communication (i.e., gesticulation; McNeill, 1992) into symbolic gestures, just as a string of letters may be transformed into a meaningful word. Symbolic gestures therefore represent the meeting point between manual actions and spoken language (Andric and Small, 2012; Andric et al., 2013). This has led to great interest in the interaction between symbolic gestures and speech, with the aim of shedding light on the complex question of the role of the sensorimotor system in language comprehension.

A large body of research has claimed that, during language production and comprehension, gesture and spoken language are tightly connected (Gunter and Bach, 2004; Bernardis and Gentilucci, 2006; Gentilucci et al., 2006; Gentilucci and Dalla Volta, 2008; Campione et al., 2014; De Marco et al., 2015, 2018), suggesting that the neural systems for language understanding and action production closely interact (Andric et al., 2013).

In line with the embodied view of language, the theory of integrated communication systems (McNeill, 1992, 2000; Kita, 2000) centers on the idea that the comprehension and production of gestures and spoken language are managed by a single control system. Thus, gestures and spoken language are both represented in the motor domain, and they necessarily interact with each other during their processing and production.

In contrast, the theory of independent communication systems (Krauss and Hadar, 1999; Barrett et al., 2005) claims that gestures and speech can work separately and are not necessarily integrated with each other. Gestural communication is described as an auxiliary system, evolved in parallel to language, that can be used when the primary system (language) is difficult to use or impaired. In this view, gesture-speech interplay is regarded as a semantic integration of amodal representations, taking place only after the verbal and gestural messages have been processed separately. This hypothesis is primarily supported by neuropsychological cases showing that impairments of skilled, learned, purposive movements (limb apraxia) and language disorders (aphasia) are anatomically and functionally dissociable (Kertesz et al., 1984; Papagno et al., 1993; Heilman and Rothi, 2003). However, limb apraxia often co-occurs with Broca’s aphasia (Albert et al., 2013), and difficulties in gesture-speech semantic integration have been reported in aphasic patients (Cocks et al., 2009, 2018). Alongside these clinical data, disrupting activity in both the left IFG and the middle temporal gyrus (MTG) has been found to impair gesture-speech integration (Zhao et al., 2018).

Evidence in favor of the integrated system theory comes from a series of behavioral and neurophysiological studies that investigated the functional relationship between gestures and spoken language. The first evidence of the reciprocal influence of gestures and words during their production came from Bernardis and Gentilucci (2006), who showed that the voice spectrum measured during the pronunciation of a word (e.g., “hello”) was modified by the simultaneous production of the gesture corresponding in meaning (and, vice versa, that gesture kinematics were inhibited). This interaction was found to depend on the semantic relationship between the two stimuli (Barbieri et al., 2009), and was replicated even when gestures and words were simply observed or presented in succession (Vainiger et al., 2014; De Marco et al., 2015).

Neurophysiological studies have provided conflicting evidence about the core brain areas involved in gesture-word integration, which include different neural substrates such as M1 (De Marco et al., 2015, 2018), IFG, MTG and the superior temporal gyrus/sulcus (STG/S) (Willems and Hagoort, 2007; Straube et al., 2012; Dick et al., 2014; Özyürek, 2014; Fabbri-Destro et al., 2015). However, a virtual lesion of the IFG was shown to disrupt the gesture-speech integration effect (Gentilucci et al., 2006), in accordance with the idea of the human Broca’s area (and thus the mirror circuit) as the core neural substrate of action, gesture and language processing and interplay (Arbib, 2005). Partly in contrast, an investigation of the temporal dynamics of integration using combined EEG/fMRI confirmed the activation of a left fronto-posterior-temporal network but revealed a primary involvement of temporal areas (He et al., 2018).

Finally, further results in favor of the motor origin of language came from genetic research: it has been suggested that the FOXP2 gene is involved both in verbal language production and in upper limb movement coordination (Teramitsu et al., 2004), raising the question of a possible molecular substrate linking speech with gesture (see Vicario, 2013).

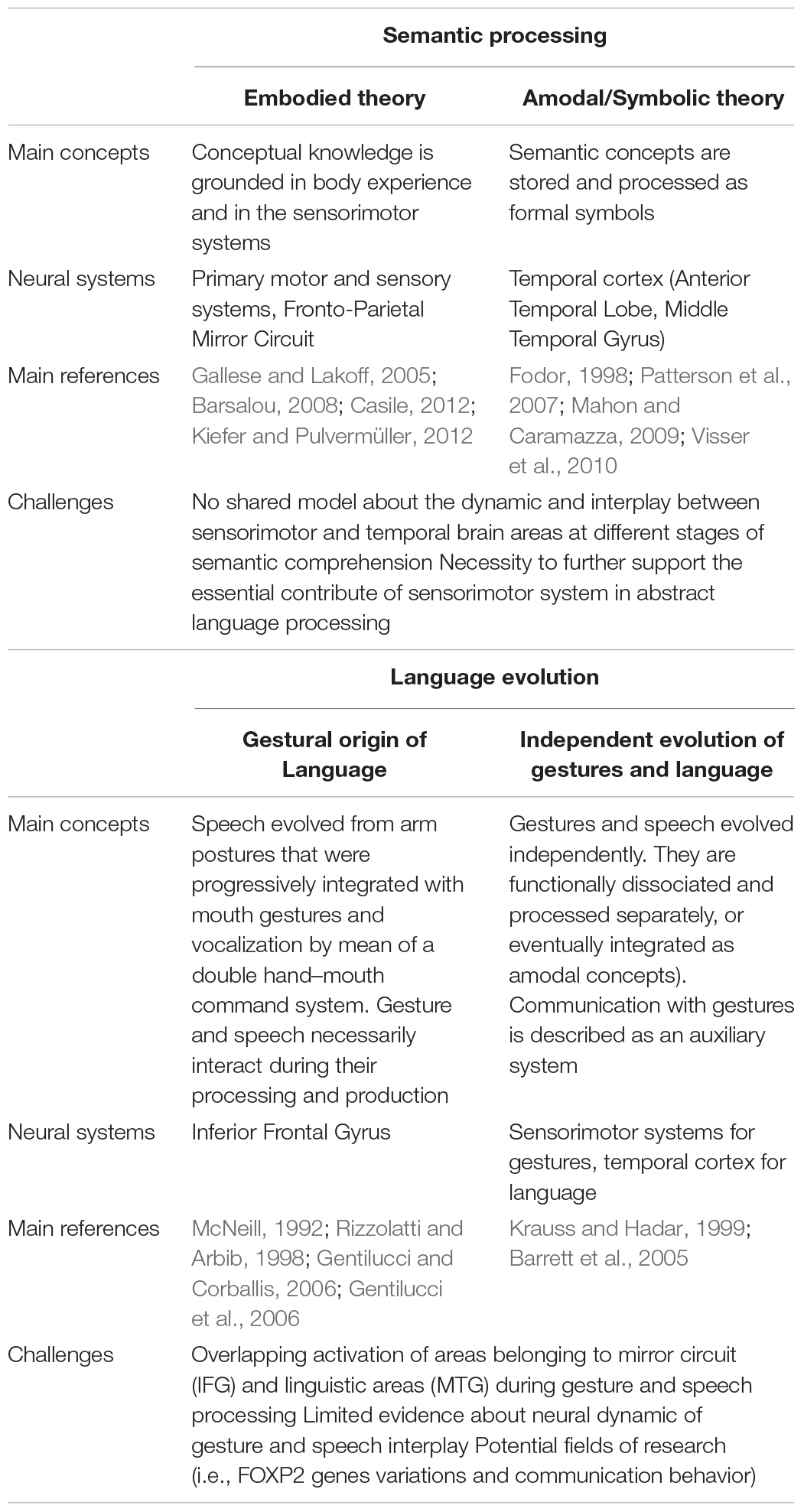

In conclusion, a substantial body of results has shown a reciprocal influence between gesture and speech during their comprehension and production, with overlapping activation of the MM neural systems (IFG) involved in action, gesture and language processing and interplay (see Table 1). Further studies should consider integrating neuroscience research with promising fields that investigate the issue at the molecular level.

Table 1. Summary of main concepts, neural evidence, and future challenges about the theories explaining language semantic processing and evolution.

Motor Signs in Emotional Communication

Most studies investigating the neural mechanisms of hand gesture processing focused on the overlapping activations for words and gestures during their semantic comprehension and integration. However, gestural stimuli have been shown to convey more than semantic information, since they can also express emotional messages. An early example came from the study of Shaver et al. (1987), which attempted to identify behavioral prototypes of emotions (e.g., fist clenching is part of the anger prototype). More recently, Givens (2008) showed that uplifted-palm postures suggest a vulnerable or non-aggressive pose toward a conspecific.

Beyond hand gestures, emerging research on the role of the motor system in emotion perception has examined the mechanisms underlying the perception of body postures and facial gestures (De Gelder, 2006; Niedenthal, 2007; Halberstadt et al., 2009; Calbi et al., 2017). Of note, specific connections with the limbic circuit were found for mouth MNs (Ferrari et al., 2017), evidencing a distinct pathway linking mouth/face motor control with a communication/emotion encoding system. These neural findings support a role of the MM in the evolution and processing of emotional communication through mouth and facial postures. Just as actions, gestures and language become messages that are understood by an observer without any cognitive mediation, a facial expression (such as disgust) would be immediately understood because observing it evokes, in the observer’s insula, the same representation that is active when the emotion is experienced (Wicker et al., 2003).

We propose that the MM guides everyday interactions, allowing us to recognize emotional states in others by decoding bodily and non-verbal signals together with language, and integrating them into the communicative content of a complex social interaction.

Indeed, exposure to congruent facial expressions was found to affect the recognition of hand gestures (Vicario and Newman, 2013), just as the observation of a facial gesture interferes with the production of a mouth posture involving the same muscles (Tramacere et al., 2018).

Moreover, emotional speech (prosody), facial expressions and hand postures were found to directly influence motor behavior during social interactions (Innocenti et al., 2012; De Stefani et al., 2013b, 2016; Di Cesare et al., 2017).

Conclusion and Future Directions

Numerous behavioral and neurophysiological findings support a crucial role of the MM in the origin of language, as well as in decoding the semantic and emotional aspects of communication.

However, some aspects need further investigation, and conflicting results have been found regarding the neural systems involved in semantic processing (especially for abstract language).

Moreover, a limitation emerges from experimental protocols that studied language in isolation, without considering the complexity of social communication. In other words, language should always be considered in relation to the background of a person’s mood, emotions, actions and the events from which what we say derives its meaning. Future studies should adopt a more ecological approach, implementing research protocols that study language in association with congruent or incongruent non-verbal signals.

This will shed further light on the differential roles that brain areas play, and on their domain specificity, in understanding language and non-verbal signals as multiple channels of communication.

Furthermore, future research should integrate behavioral and combined neurophysiological techniques, extending sampling from typical to psychiatric populations.

Indeed, new results will also have important implications for understanding mental illnesses characterized by communication disorders and MM dysfunction, such as Autism Spectrum Disorder (Oberman et al., 2008; Gizzonio et al., 2015), schizophrenia (Sestito et al., 2013), and mood disorders (Yuan and Hoff, 2008).

Author Contributions

All authors listed have made a substantial, direct and intellectual contribution to the work, and approved it for publication.

Funding

DD was supported by the project “KHARE” funded by INAIL to IN-CNR. ED was supported by Fondazione Cariparma.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to express our deep gratitude to our mentor, Prof. Maurizio Gentilucci, for his scientific guidance.

References

Albert, M. L., Goodglass, H., Helm, N. A., Rubens, A. B., and Alexander, M. P. (2013). Clinical Aspects of Dysphasia. Berlin: Springer Science & Business Media.

Andric, M., and Small, S. L. (2012). Gesture’s neural language. Front. Psychol. 3:99. doi: 10.3389/fpsyg.2012.00099

Andric, M., Solodkin, A., Buccino, G., Goldin-Meadow, S., Rizzolatti, G., and Small, S. L. (2013). Brain function overlaps when people observe emblems, speech, and grasping. Neuropsychologia 51, 1619–1629. doi: 10.1016/j.neuropsychologia.2013.03.022

Arbib, M. A. (2005). From monkey-like action recognition to human language: an evolutionary framework for neurolinguistics. Behav. Brain Sci. 28, 105–124. doi: 10.1017/S0140525X05000038

Armstrong, D. F., and Wilcox, S. (2007). The Gestural Origin of Language. Oxford: Oxford University Press.

Aziz-Zadeh, L., Wilson, S. M., Rizzolatti, G., and Iacoboni, M. (2006). Congruent embodied representations for visually presented actions and linguistic phrases describing actions. Curr. Biol. 16, 1818–1823. doi: 10.1016/j.cub.2006.07.060

Barbieri, F., Buonocore, A., Dalla Volta, R., and Gentilucci, M. (2009). How symbolic gestures and words interact with each other. Brain Lang. 110, 1–11. doi: 10.1016/j.bandl.2009.01.002

Barrett, A. M., Foundas, A. L., and Heilman, K. M. (2005). Speech and gesture are mediated by independent systems. Behav. Brain Sci. 28, 125–126. doi: 10.1017/s0140525x05220034

Barsalou, L. W. (2008). Grounded cognition. Annu. Rev. Psychol. 59, 617–645. doi: 10.1146/annurev.psych.59.103006.093639

Bernardis, P., and Gentilucci, M. (2006). Speech and gesture share the same communication system. Neuropsychologia 44, 178–190. doi: 10.1016/j.neuropsychologia.2005.05.007

Binder, J. R., Desai, R. H., Graves, W. W., and Conant, L. L. (2009). Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cereb. Cortex 19, 2767–2796. doi: 10.1093/cercor/bhp055

Binder, J. R., Westbury, C. F., McKiernan, K. A., Possing, E. T., and Medler, D. A. (2005). Distinct brain systems for processing concrete and abstract concepts. J. Cogn. Neurosci. 17, 905–917. doi: 10.1162/0898929054021102

Boulenger, V., Hauk, O., and Pulvermüller, F. (2009). Grasping ideas with the motor system: semantic somatotopy in idiom comprehension. Cereb. Cortex 19, 1905–1914. doi: 10.1093/cercor/bhn217

Boulenger, V., Shtyrov, Y., and Pulvermüller, F. (2012). When do you grasp the idea? MEG evidence for instantaneous idiom understanding. Neuroimage 59, 3502–3513. doi: 10.1016/j.neuroimage.2011.11.011

Buccino, G., Binkofski, F., Fink, G. R., Fadiga, L., Fogassi, L., Gallese, V., et al. (2001). Action observation activates premotor and parietal areas in a somatotopic manner: an fMRI study. Eur. J. Neurosci. 13, 400–404. doi: 10.1111/j.1460-9568.2001.01385.x

Buccino, G., Colagè, I., Silipo, F., and D’Ambrosio, P. (2019). The concreteness of abstract language: an ancient issue and a new perspective. Brain Struct. Funct. 224, 1385–1401. doi: 10.1007/s00429-019-01851-7

Burgess, C., and Lund, K. (1997). Modelling parsing constraints with high dimensional context space. Lang. Cogn. Process. 12, 177–210. doi: 10.1080/016909697386844

Burling, R. (1999). “Motivation, conventionalization, and arbitrariness in the origin of language,” in The Origins of Language: What Nonhuman Primates Can Tell Us, ed. B. J. King, (Santa Fe, NM: School for American Research Press).

Calbi, M., Angelini, M., Gallese, V., and Umiltà, M. A. (2017). “Embodied body language”: an electrical neuroimaging study with emotional faces and bodies. Sci. Rep. 7:6875. doi: 10.1038/s41598-017-07262-0

Campione, G. C., De Stefani, E., Innocenti, A., De Marco, D., Gough, P. M., Buccino, G., et al. (2014). Does comprehension of symbolic gestures and corresponding-in-meaning words make use of motor simulation? Behav. Brain Res. 259, 297–301. doi: 10.1016/j.bbr.2013.11.025

Casile, A. (2012). Mirror neurons (and beyond) in the macaque brain: an overview of 20 years of research. Neurosci. Lett. 540, 3–14. doi: 10.1016/j.neulet.2012.11.003

Cocks, N., Byrne, S., Pritchard, M., Morgan, G., and Dipper, L. (2018). Integration of speech and gesture in aphasia. Int. J. Lang. Commun. Dis. 53, 584–591. doi: 10.1111/1460-6984.12372

Cocks, N., Sautin, L., Kita, S., Morgan, G., and Zlotowitz, S. (2009). Gesture and speech integration: an exploratory study of a man with aphasia. Int. J. Lang. Commun. Dis. 44, 795–804. doi: 10.1080/13682820802256965

Corballis, M. C. (2010). Mirror neurons and the evolution of language. Brain Lang. 112, 25–35. doi: 10.1016/j.bandl.2009.02.002

Courson, M., Macoir, J., and Tremblay, P. (2018). A facilitating role for the primary motor cortex in action sentence processing. Behav. Brain Res. 15, 244–249. doi: 10.1016/j.bbr.2017.09.019

Cuccio, V., Ambrosecchia, M., Ferri, F., Carapezza, M., Lo Piparo, F., Fogassi, L., et al. (2014). How the context matters. Literal and figurative meaning in the embodied language paradigm. PLoS One 9:e115381. doi: 10.1371/journal.pone.0115381

Dalla Volta, R., Fabbri-Destro, M., Gentilucci, M., and Avanzini, P. (2014). Spatiotemporal dynamics during processing of abstract and concrete verbs: an ERP study. Neuropsychologia 61, 163–174. doi: 10.1016/j.neuropsychologia.2014.06.019

Dalla Volta, R., Avanzini, P., De Marco, D., Gentilucci, M., and Fabbri-Destro, M. (2018). From meaning to categorization: the hierarchical recruitment of brain circuits selective for action verbs. Cortex 100, 95–110. doi: 10.1016/j.cortex.2017.09.012

De Gelder, B. (2006). Towards the neurobiology of emotional body language. Nat. Rev. Neurosci. 7:242. doi: 10.1038/nrn1872

De Marco, D., De Stefani, E., Bernini, D., and Gentilucci, M. (2018). The effect of motor context on semantic processing: a TMS study. Neuropsychologia 114, 243–250. doi: 10.1016/j.neuropsychologia.2018.05.003

De Marco, D., De Stefani, E., and Gentilucci, M. (2015). Gesture and word analysis: the same or different processes? NeuroImage 117, 375–385. doi: 10.1016/j.neuroimage.2015.05.080

De Stefani, E., De Marco, D., and Gentilucci, M. (2016). The effects of meaning and emotional content of a sentence on the kinematics of a successive motor sequence mimiking the feeding of a conspecific. Front. Psychol. 7:672. doi: 10.3389/fpsyg.2016.00672

De Stefani, E., Innocenti, A., De Marco, D., and Gentilucci, M. (2013a). Concatenation of observed grasp phases with observer’s distal movements: a behavioural and TMS study. PLoS One 8:e81197. doi: 10.1371/journal.pone.0081197

De Stefani, E., Innocenti, A., Secchi, C., Papa, V., and Gentilucci, M. (2013b). Type of gesture, valence, and gaze modulate the influence of gestures on observer’s behaviors. Front. Hum. Neurosci. 7:542. doi: 10.3389/fnhum.2013.00542

Di Cesare, G., De Stefani, E., Gentilucci, M., and De Marco, D. (2017). Vitality forms expressed by others modulate our own motor response: a kinematic study. Front. Hum. Neurosci. 11:565. doi: 10.3389/fnhum.2017.00565

Dick, A. S., Mok, E. H., Beharelle, A. R., Goldin-Meadow, S., and Small, S. L. (2014). Frontal and temporal contributions to understanding the iconic co-speech gestures that accompany speech. Hum. Brain Mapp. 35, 900–917. doi: 10.1002/hbm.22222

Fabbri-Destro, M., Avanzini, P., De Stefani, E., Innocenti, A., Campi, C., and Gentilucci, M. (2015). Interaction between words and symbolic gestures as revealed by N400. Brain Topogr. 28, 591–605. doi: 10.1007/s10548-014-0392-4

Fadiga, L., Craighero, L., Buccino, G., and Rizzolatti, G. (2002). Speech listening specifically modulates the excitability of tongue muscles: a TMS study. Eur. J. Neurosci. 15, 399–402. doi: 10.1046/j.0953-816x.2001.01874.x

Fadiga, L., Craighero, L., and Olivier, E. (2005). Human motor cortex excitability during the perception of others’ action. Curr. Opin. Neurobiol. 15, 213–218. doi: 10.1016/j.conb.2005.03.013

Fadiga, L., Fogassi, L., Pavesi, G., and Rizzolatti, G. (1995). Motor facilitation during action observation: a magnetic stimulation study. J. Neurophysiol. 73, 2608–2611. doi: 10.1152/jn.1995.73.6.2608

Ferrari, P. F., Gerbella, M., Coudé, G., and Rozzi, S. (2017). Two different mirror neuron networks: the sensorimotor (hand) and limbic (face) pathways. Neuroscience 358, 300–315. doi: 10.1016/j.neuroscience.2017.06.052

Fischer, M. H., and Zwaan, R. A. (2008). Embodied language: a review of the role of the motor system in language comprehension. Q. J. Exp. Psychol. 61, 825–850. doi: 10.1080/17470210701623605

Fodor, J. A. (2000). The Mind Doesn’t Work that Way: The Scope and Limits of Computational Psychology. Cambridge, MA: MIT Press.

Fogassi, L., and Ferrari, P. F. (2007). Mirror neurons and the evolution of embodied language. Curr. Dir. Psychol. Sci. 16, 136–141. doi: 10.1111/j.1467-8721.2007.00491.x

Gallese, V., Fadiga, L., Fogassi, L., and Rizzolatti, G. (1996). Action recognition in the premotor cortex. Brain 119, 593–609. doi: 10.1093/brain/119.2.593

Gallese, V., and Lakoff, G. (2005). The Brain’s concepts: the role of the sensory-motor system in conceptual knowledge. Cogn. Neuropsychol. 22, 455–479. doi: 10.1080/02643290442000310

García, A. M., Moguilner, S., Torquati, K., García-Marco, E., Herrera, E., Muñoz, E., et al. (2019). How meaning unfolds in neural time: embodied reactivations can precede multimodal semantic effects during language processing. NeuroImage 197, 439–449. doi: 10.1016/j.neuroimage.2019.05.002

Gentilucci, M. (2003). Grasp observation influences speech production. Eur. J. Neurosci. 17, 179–184. doi: 10.1046/j.1460-9568.2003.02438.x

Gentilucci, M., Benuzzi, F., Gangitano, M., and Grimaldi, S. (2001). Grasp with hand and mouth: a kinematic study on healthy subjects. J. Neurophysiol. 86, 1685–1699. doi: 10.1152/jn.2001.86.4.1685

Gentilucci, M., Bernardis, P., Crisi, G., and Dalla Volta, R. (2006). Repetitive transcranial magnetic stimulation of Broca’s area affects verbal responses to gesture observation. J. Cogn. Neurosci. 18, 1059–1074. doi: 10.1162/jocn.2006.18.7.1059

Gentilucci, M., Campione, G. C., De Stefani, E., and Innocenti, A. (2012). Is the coupled control of hand and mouth postures precursor of reciprocal relations between gestures and words? Behav. Brain Res. 233, 130–140. doi: 10.1016/j.bbr.2012.04.036

Gentilucci, M., and Corballis, M. C. (2006). From manual gesture to speech: a gradual transition. Neurosci. Biobehav. Rev. 30, 949–960. doi: 10.1016/j.neubiorev.2006.02.004

Gentilucci, M., and Dalla Volta, R. (2008). Spoken language and arm gestures are controlled by the same motor control system. Q. J. Exp. Psychol. 61, 944–957. doi: 10.1080/17470210701625683

Gentilucci, M., Stefanini, S., Roy, A. C., and Santunione, P. (2004). Action observation and speech production: study on children and adults. Neuropsychologia 42, 1554–1567. doi: 10.1016/j.neuropsychologia.2004.03.002

Givens, D. B. (2008). The Nonverbal Dictionary of Gestures, Signs, and Body Language. Washington: Center for Nonverbal Studies.

Gizzonio, V., Avanzini, P., Campi, C., Orivoli, S., Piccolo, B., Cantalupo, G., et al. (2015). Failure in pantomime action execution correlates with the severity of social behavior deficits in children with autism: a praxis study. J. Autism Dev. Disord. 45, 3085–3097. doi: 10.1007/s10803-015-2461-2

Glenberg, A. M., and Kaschak, M. P. (2002). Grounding language in action. Psychon. Bull. Rev. 9, 558–565. doi: 10.3758/BF03196313

Glenberg, A. M., Sato, M., and Cattaneo, L. (2008). Use-induced motor plasticity affects the processing of abstract and concrete language. Curr. Biol. 18, R290–R291. doi: 10.1016/j.cub.2008.02.036

Gunter, T. C., and Bach, P. (2004). Communicating hands: ERPs elicited by meaningful symbolic hand postures. Neurosci. Lett. 372, 52–56. doi: 10.1016/j.neulet.2004.09.011

Halberstadt, J., Winkielman, P., Niedenthal, P. M., and Dalle, N. (2009). Emotional conception: how embodied emotion concepts guide perception and facial action. Psychol. Sci. 20, 1254–1261. doi: 10.1111/j.1467-9280.2009.02432.x

Hauk, O., Johnsrude, I., and Pulvermüller, F. (2004). Somatotopic representation of action words in human motor and premotor cortex. Neuron 41, 301–307. doi: 10.1016/s0896-6273(03)00838-9

Hauk, O., Shtyrov, Y., and Pulvermüller, F. (2008). The time course of action and action-word comprehension in the human brain as revealed by neurophysiology. J. Physiol. Paris 102, 50–58. doi: 10.1016/j.jphysparis.2008.03.013

He, Y., Steines, M., Sommer, J., Gebhardt, H., Nagels, A., Sammer, G., et al. (2018). Spatial–temporal dynamics of gesture–speech integration: a simultaneous EEG-fMRI study. Brain Struct. Funct. 223, 3073–3089. doi: 10.1007/s00429-018-1674-5

Heilman, K. M., and Rothi, L. J. G. (2003). “Apraxia,” in Clinical Neuropsychology, eds K. M. Heilman, and E. Valenstein, (New York, NY: Oxford University Press).

Innocenti, A., De Stefani, E., Bernardi, N. F., Campione, G. C., and Gentilucci, M. (2012). Gaze direction and request gesture in social interactions. PLoS One 7:e36390. doi: 10.1371/journal.pone.0036390

Innocenti, A., De Stefani, E., Sestito, M., and Gentilucci, M. (2014). Understanding of action-related and abstract verbs in comparison: a behavioral and TMS study. Cogn. Process. 15, 85–92. doi: 10.1007/s10339-013-0583-z

Kendon, A. (1982). “Discussion of papers on basic phenomena of interaction: plenary session, subsection 4, research committee on sociolinguistics,” in Proceedings of the Xth International Congress of Sociology, Mexico.

Kendon, A. (1988). “How gestures can become like words,” in Crosscultural Perspectives in Nonverbal Communication, ed. F. Poyatos (Toronto: Hogrefe Publishers).

Kertesz, A., Ferro, J. M., and Shewan, C. M. (1984). Apraxia and aphasia: the functional-anatomical basis for their dissociation. Neurology 34, 40–47. doi: 10.1212/wnl.34.1.40

Kiefer, M., and Pulvermüller, F. (2012). Conceptual representations in mind and brain: theoretical developments, current evidence and future directions. Cortex 48, 805–825. doi: 10.1016/j.cortex.2011.04.006

Kita, S. (2000). “Representational gestures help speaking,” in Language and Gesture, ed. D. McNeill, (Cambridge: Cambridge University Press).

Krauss, R. M., and Hadar, U. (1999). “The role of speech-related arm/hand gestures in word retrieval,” in Gesture, Speech, and Sign, eds L. S. Messing, and R. Campbell, (Oxford: Oxford University Press).

Mahon, B. Z., and Caramazza, A. (2008). A critical look at the embodied cognition hypothesis and a new proposal for grounding conceptual content. J. Physiol. Paris 102, 59–70. doi: 10.1016/j.jphysparis.2008.03.004

Mahon, B. Z., and Caramazza, A. (2009). Concepts and categories: a cognitive neuropsychological perspective. Annu. Rev. Psychol. 60, 27–51. doi: 10.1146/annurev.psych.60.110707.163532

McNeill, D. (1992). Hand and Mind: What Gestures Reveal about Thought. Chicago, IL: University of Chicago Press.

Mollo, G., Pulvermüller, F., and Hauk, O. (2016). Movement priming of EEG/MEG brain responses for action-words characterizes the link between language and action. Cortex 74, 262–276. doi: 10.1016/j.cortex.2015.10.021

Nelissen, K., Borra, E., Gerbella, M., Rozzi, S., Luppino, G., Vanduffel, W., et al. (2011). Action observation circuits in the macaque monkey cortex. J. Neurosci. 31, 3743–3756. doi: 10.1523/JNEUROSCI.4803-10.2011

Oberman, L. M., Ramachandran, V. S., and Pineda, J. A. (2008). Modulation of mu suppression in children with autism spectrum disorders in response to familiar or unfamiliar stimuli: the mirror neuron hypothesis. Neuropsychologia 46, 1558–1565. doi: 10.1016/j.neuropsychologia.2008.01.010

Özyürek, A. (2014). Hearing and seeing meaning in speech and gesture: insights from brain and behaviour. Philos. Trans. R. Soc. B Biol. Sci. 369:20130296. doi: 10.1098/rstb.2013.0296

Paivio, A. (1991). Dual coding theory: retrospect and current status. Can. J. Psychol. 45:255. doi: 10.1037/h0084295

Papagno, C., Della Sala, S., and Basso, A. (1993). Ideomotor apraxia without aphasia and aphasia without apraxia: the anatomical support for a double dissociation. J. Neurol. Neurosurg. Psychiatr. 56, 286–289. doi: 10.1136/jnnp.56.3.286

Patterson, K., Nestor, P. J., and Rogers, T. T. (2007). Where do you know what you know? The representation of semantic knowledge in the human brain. Nat. Rev. Neurosci. 8:976. doi: 10.1038/nrn2277

Petrides, M., Cadoret, G., and Mackey, S. (2005). Orofacial somatomotor responses in the macaque monkey homologue of Broca’s area. Nature 435:1235. doi: 10.1038/nature03628

Petrides, M., Tomaiuolo, F., Yeterian, E. H., and Pandya, D. N. (2012). The prefrontal cortex: comparative architectonic organization in the human and the macaque monkey brains. Cortex 48, 46–57. doi: 10.1016/j.cortex.2011.07.002

Pulvermüller, F. (2005). Brain mechanisms linking language and action. Nat. Rev. Neurosci. 6, 576–582. doi: 10.1038/nrn1706

Pulvermüller, F., Shtyrov, Y., and Ilmoniemi, R. (2003). Spatiotemporal dynamics of neural language processing: an MEG study using minimum-norm current estimates. NeuroImage 20, 1020–1025. doi: 10.1016/S1053-8119(03)00356-2

Raposo, A., Moss, H. E., Stamatakis, E. A., and Tyler, L. K. (2009). Modulation of motor and premotor cortices by actions, action words and action sentences. Neuropsychologia 47, 388–396. doi: 10.1016/j.neuropsychologia.2008.09.017

Rizzolatti, G., and Arbib, M. A. (1998). Language within our grasp. Trends Neurosci. 21, 188–194. doi: 10.1016/S0166-2236(98)01260-0

Rizzolatti, G., Cattaneo, L., Fabbri-Destro, M., and Rozzi, S. (2014). Cortical mechanisms underlying the organization of goal-directed actions and mirror neuron-based action understanding. Physiol. Rev. 94, 655–706. doi: 10.1152/physrev.00009.2013

Sakreida, K., Scorolli, C., Menz, M. M., Heim, S., Borghi, A. M., and Binkofski, F. (2013). Are abstract action words embodied? An fMRI investigation at the interface between language and motor cognition. Front. Hum. Neurosci. 7:125. doi: 10.3389/fnhum.2013.00125

Schwanenflugel, P. J., Harnishfeger, K. K., and Stowe, R. W. (1988). Context availability and lexical decisions for abstract and concrete words. J. Mem. Lang. 27, 499–520. doi: 10.1016/0749-596X(88)90022-8

Scorolli, C., Binkofski, F., Buccino, G., Nicoletti, R., Riggio, L., and Borghi, A. M. (2011). Abstract and concrete sentences, embodiment, and languages. Front. Psychol. 2:227. doi: 10.3389/fpsyg.2011.00227

Scorolli, C., Jacquet, P. O., Binkofski, F., Nicoletti, R., Tessari, A., and Borghi, A. M. (2012). Abstract and concrete phrases processing differentially modulates cortico-spinal excitability. Brain Res. 1488, 60–71. doi: 10.1016/j.brainres.2012.10.004

Sestito, M., Umiltà, M. A., De Paola, G., Fortunati, R., Raballo, A., Leuci, E., et al. (2013). Facial reactions in response to dynamic emotional stimuli in different modalities in patients suffering from schizophrenia: a behavioral and EMG study. Front. Hum. Neurosci. 7:368. doi: 10.3389/fnhum.2013.00368

Shaver, P., Schwartz, J., Kirson, D., and O’Connor, C. (1987). Emotion knowledge: further exploration of a prototype approach. J. Pers. Soc. Psychol. 52:1061. doi: 10.1037//0022-3514.52.6.1061

Straube, B., Green, A., Weis, S., and Kircher, T. (2012). A supramodal neural network for speech and gesture semantics: an fMRI study. PLoS One 7:e51207. doi: 10.1371/journal.pone.0051207

Teramitsu, I., Kudo, L. C., London, S. E., Geschwind, D. H., and White, S. A. (2004). Parallel FoxP1 and FoxP2 expression in songbird and human brain predicts functional interaction. J. Neurosci. 24, 3152–3163. doi: 10.1523/jneurosci.5589-03.2004

Tramacere, A., Ferrari, P. F., Gentilucci, M., Giuffrida, V., and De Marco, D. (2018). The emotional modulation of facial mimicry: a kinematic study. Front. Psychol. 8:2339. doi: 10.3389/fpsyg.2017.02339

Vainiger, D., Labruna, L., Ivry, R. B., and Lavidor, M. (2014). Beyond words: evidence for automatic language–gesture integration of symbolic gestures but not dynamic landscapes. Psychol. Res. 78, 55–69. doi: 10.1007/s00426-012-0475-3

Vicario, C. M. (2013). FOXP2 gene and language development: the molecular substrate of the gestural-origin theory of speech? Front. Behav. Neurosci. 7:99.

Vicario, C. M., and Newman, A. (2013). Emotions affect the recognition of hand gestures. Front. Hum. Neurosci. 7:906. doi: 10.3389/fnhum.2013.00906

Visser, M., Jefferies, E., and Lambon Ralph, M. A. (2010). Semantic processing in the anterior temporal lobes: a meta-analysis of the functional neuroimaging literature. J. Cogn. Neurosci. 22, 1083–1094. doi: 10.1162/jocn.2009.21309

Vukovic, N., Feurra, M., Shpektor, A., Myachykov, A., and Shtyrov, Y. (2017). Primary motor cortex functionally contributes to language comprehension: an online rTMS study. Neuropsychologia 96, 222–229. doi: 10.1016/j.neuropsychologia.2017.01.025

Wang, J., Conder, J. A., Blitzer, D. N., and Shinkareva, S. V. (2010). Neural representation of abstract and concrete concepts: a meta-analysis of neuroimaging studies. Hum. Brain Mapp. 31, 1459–1468. doi: 10.1002/hbm.20950

Wicker, B., Keysers, C., Plailly, J., Royet, J. P., Gallese, V., and Rizzolatti, G. (2003). Both of us disgusted in my insula: the common neural basis of seeing and feeling disgust. Neuron 40, 655–664. doi: 10.1016/s0896-6273(03)00679-2

Willems, R. M., and Hagoort, P. (2007). Neural evidence for the interplay between language, gesture, and action: a review. Brain Lang. 101, 278–289. doi: 10.1016/j.bandl.2007.03.004

Yuan, T. F., and Hoff, R. (2008). Mirror neuron system based therapy for emotional disorders. Med. Hypotheses 71, 722–726. doi: 10.1016/j.mehy.2008.07.004

Keywords: gesture, language, embodied cognition, mirror neurons, emotional communication, abstract concepts, motor resonance, social interaction

Citation: De Stefani E and De Marco D (2019) Language, Gesture, and Emotional Communication: An Embodied View of Social Interaction. Front. Psychol. 10:2063. doi: 10.3389/fpsyg.2019.02063

Received: 12 April 2019; Accepted: 26 August 2019;

Published: 24 September 2019.

Edited by:

Claudia Gianelli, University Institute of Higher Studies in Pavia, Italy

Reviewed by:

Francesca Ciardo, Istituto Italiano di Tecnologia, Italy

Carmelo Mario Vicario, University of Messina, Italy

Sebastian Walther, University Clinic for Psychiatry and Psychotherapy, University of Bern, Switzerland

Copyright © 2019 De Stefani and De Marco. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Elisa De Stefani, elidestefani@gmail.com; Doriana De Marco, doriana.demarco@gmail.com

†These authors have contributed equally to this work
