- Faculty of Education, University of Macau, Macau, China

The current research investigates the impact of careless responding on factor analytic results and construct validity using real data. Results showed that including careless respondents in data analysis distorts the factor loading pattern and hinders recovery of theoretically existing factors. Careless respondents also blur the distinction between theoretically distinct factors, resulting in higher inter-factor correlations. The hypothesis that careless responding threatens convergent validity received only limited support. Researchers are advised to exclude careless respondents before statistical analysis.

Research on personality relies on the collection of accurate data. If a dataset includes a large number of unmotivated respondents, it becomes questionable whether the conclusions drawn will remain valid. Previous researchers (e.g., Hinkin, 1995) have suggested simple methods to maintain respondents' motivation, such as administering a shorter survey to reduce fatigue or designing questions that are easy to read. Another easy method is to check for careless responding (also called insufficient effort responding; Huang et al., 2012) and exclude the affected data from analysis (Meade and Craig, 2012; Maniaci and Rogge, 2014; Huang et al., 2015; Kam and Meyer, 2015). However, checking for careless respondents has not been as common as it should be, perhaps because researchers are not fully aware of how careless responding can bias their research conclusions. More empirical evidence may be required to convince researchers of the importance of controlling for careless responding. Therefore, in the current research, we investigate the potential effect of careless responding on factor loading pattern, convergent validity, and discriminant validity. We use a popular personality measure in our illustration.

Careless Responding

Careless responding (or insufficient effort responding) happens when participants do not pay enough attention to read survey items, do not fully process the items, do not retrieve relevant information from memory, or do not integrate information from memory with the items before responding (Tourangeau et al., 2000; Weijters et al., 2013). Previous research showed at least two types of careless respondents (Kam and Meyer, 2015). The first type randomly picks an answer for each item. This random response pattern may deflate the correlations among scale items (Kam and Meyer, 2015). The second type gives identical answers to each item. Variable scores may then become more similar to each other, inflating their inter-correlations (Kam and Meyer, 2015). Previous research has shown that the effect of careless responding on variable correlations can be unpredictable (Huang et al., 2015; Kam and Meyer, 2015). Researchers have been strongly advised to exclude careless respondents from their data before conducting statistical analysis (Meade and Craig, 2012; Maniaci and Rogge, 2014; Huang et al., 2015; Kam and Meyer, 2015; McGonagle et al., 2016). As mentioned, however, this has not been a common practice.
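The two response patterns described above can be illustrated with a small simulation. This is a minimal sketch under stated assumptions, not an analysis of the study data: the two orthogonal traits, the loading of 0.8, the sample sizes, the seed, and all function names are hypothetical, and all items are regular-keyed for simplicity.

```python
import random
from math import sqrt

def pearson(x, y):
    """Plain Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def likert(value):
    """Round a continuous score onto a 1-5 Likert scale."""
    return min(5, max(1, round(value)))

def careful_row(rng, k):
    """Careful respondent: k items per scale driven by two uncorrelated traits."""
    a, b = rng.gauss(0, 1), rng.gauss(0, 1)
    items_a = [likert(3 + 0.8 * a + rng.gauss(0, 0.8)) for _ in range(k)]
    items_b = [likert(3 + 0.8 * b + rng.gauss(0, 0.8)) for _ in range(k)]
    return items_a + items_b

def random_row(rng, k):
    """First type of careless respondent: a random option on every item."""
    return [rng.randint(1, 5) for _ in range(2 * k)]

def straightline_row(rng, k):
    """Second type of careless respondent: the same option on every item."""
    choice = rng.randint(1, 5)
    return [choice] * (2 * k)

def summarize(rows, k):
    """Return (within-scale item correlation, between-scale score correlation)."""
    item_r = pearson([r[0] for r in rows], [r[1] for r in rows])
    scale_r = pearson([sum(r[:k]) for r in rows], [sum(r[k:]) for r in rows])
    return item_r, scale_r

K = 4
rng = random.Random(2019)
careful = [careful_row(rng, K) for _ in range(400)]
with_random = careful + [random_row(rng, K) for _ in range(100)]
with_straight = careful + [straightline_row(rng, K) for _ in range(100)]

for label, rows in [("careful only", careful),
                    ("plus random responders", with_random),
                    ("plus straightliners", with_straight)]:
    item_r, scale_r = summarize(rows, K)
    print(f"{label}: item r = {item_r:.2f}, between-scale r = {scale_r:.2f}")
```

Under these assumptions, adding random responders lowers the correlation between two items of the same scale, whereas adding straightliners raises the correlation between two scales that are uncorrelated among careful respondents. With reverse-keyed items or different scale means the pattern could differ, which is consistent with the unpredictability noted above.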

There are several possible reasons why controlling for careless respondents has not been a common research practice. First, researchers may not have included an a priori measure to check for careless responding. According to some research (Kam and Chan, 2018), it is best to include an a priori measure rather than rely on post-hoc methods to check for careless responding, because some post-hoc measures may be confounded with other response styles. One of these post-hoc measures, the inconsistent response check among synonym items, may be confounded with participants' cognitive inability to provide consistent responses. Research has shown that a large group of respondents have problems providing consistent answers to items with similar meaning, even when they have been careful in responding (Kam and Fan, 2018). Another post-hoc measure, agreement with antonyms (e.g., outgoing and shy), may be confounded with attitudinal ambivalence (Jonas et al., 2000) or acquiescence response bias (Kam and Meyer, 2015), both of which have nothing to do with careless responding.

Second, researchers may be reluctant to exclude careless respondents because doing so inevitably reduces sample size. Most statistical analyses have a minimum recommended sample size (Hinkin, 1995), and journal editors and reviewers tend to favor studies with a larger number of participants. However, this preference discourages the exclusion of careless respondents, and as we will show, careless respondents have a strong biasing effect on statistical results. In this paper, we focus on how careless respondents have the potential to distort factor loading pattern, convergent validity, and discriminant validity.

Potential Impact of Careless Responding

We hypothesized that careless responding can distort the factor loading pattern in factor analysis. When participants randomly answer survey items, item correlations may decrease, attenuating the magnitude of factor loadings (Crede, 2010; Kam and Meyer, 2015). When careless participants choose identical responses throughout a survey, inter-correlations among items from different constructs increase, thus blurring the distinction among different constructs (DeSimone et al., 2018). Therefore, when careless responding is present, cross-loadings may become more prevalent.

We also hypothesized that careless responding may attenuate the convergent validity between self-rating and peer-rating. With the existence of careless responding in a dataset, response errors increase. Because response errors from self-rating should not correlate with peer-rating, construct correlations between self-rating and peer-rating may become weaker.
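The direction of this hypothesized effect can be seen from a simple mixture argument. It is offered only as an illustrative sketch, not as the model estimated in this study. Assume that a proportion $p$ of self-raters respond carelessly, that their scores are independent of the peer rating $Y$, and that careless scores have the same mean and variance as the careful self-ratings $X_c$; all three are simplifying assumptions not made in the source. Writing $X$ for the observed self-rating in the mixed sample:

```latex
\begin{aligned}
\operatorname{Cov}(X, Y) &= (1-p)\,\operatorname{Cov}(X_c, Y) + p \cdot 0, \\
\operatorname{Var}(X)    &= \operatorname{Var}(X_c), \\
r_{XY} &= \frac{\operatorname{Cov}(X, Y)}{\sqrt{\operatorname{Var}(X)\,\operatorname{Var}(Y)}}
        = (1-p)\, r_{X_c Y}.
\end{aligned}
```

For example, with hypothetical values $p = 0.10$ and $r_{X_c Y} = 0.50$, the observed self-peer correlation would be $0.45$. When careless scores have a larger variance than careful scores, the attenuation under this sketch would be stronger.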

Finally, we hypothesized that careless responding may decrease discriminant validity (i.e., increase correlations) among theoretically distinct constructs. When respondents give random answers or identical answers to consecutive survey items, the distinction among various constructs decreases. When respondents give random answers (i.e., the first type of careless respondents), the scale means of the various distinct constructs tend to center on the midpoint of a Likert scale (e.g., 3 on a 5-point Likert scale; Huang et al., 2015). Similarly, when respondents give identical answers to consecutive survey items (i.e., the second type of careless respondents), the scale means of the various distinct constructs will be similar (Kam and Meyer, 2015). The resultant effect of both types of respondents is that scores from various constructs can become more similar, even when their correlations in the population should be close to zero. This will cause theoretically orthogonal constructs to correlate more strongly with each other.

The Current Study

The purpose of the current study is to examine the effect of including careless respondents on factor loading pattern, convergent validity, and discriminant validity in a popular personality measure, the HEXACO (Ashton and Lee, 2009). Using this personality scale, we examine the factor loading pattern with exploratory structural equation modeling (ESEM; Asparouhov and Muthen, 2009). ESEM has the advantage of providing model fit indices while allowing cross-loadings, thus allowing us to examine how the factor loading pattern can be distorted by the inclusion of careless respondents. In addition, ESEM allows the modeling of correlations (covariances) among personality factors, and between self-rating and peer-rating. This permits us to examine the convergent validity of personality factors (between self-rating and peer-rating) and the discriminant validity between personality factors, which are supposed to be orthogonal to each other.

Methods

Participants

Two hundred and eighteen pairs of roommates (i.e., 436 students; 289 female, 146 male, and 1 unidentified; Mage = 21.17; SDage = 3.53) from various universities in Shanghai participated in the current study. They completed an online survey in Chinese in exchange for 100 Renminbi (around US$14) per person. The study was approved by the ethics board at the University of Macau.

Instruments

For all items, a 5-point Likert scale (1 = Strongly Disagree; 5 = Strongly Agree) was used.

HEXACO Personality Inventory-Revised (HEXACO PI-R)

Each participant reported their own personality using the 60-item version of the HEXACO PI-R (Ashton and Lee, 2009). The scale measures six dimensions of personality, namely honesty-humility, emotionality, extraversion, agreeableness, conscientiousness, and openness to experience. Each dimension included 10 items. About half of the items were reverse-keyed (six for honesty-humility and conscientiousness; four for emotionality, extraversion, and agreeableness; five for openness to experience). Cronbach's alphas were good for these self-report personality factors in the current study (0.69 for honesty-humility, 0.73 for emotionality, 0.68 for extraversion, 0.72 for agreeableness, 0.69 for conscientiousness, and 0.71 for openness). In addition, participants reported their roommate's personality using the parallel 60-item version of the HEXACO PI-R. Cronbach's alphas were good for these peer-report personality factors in the current study (0.76 for honesty-humility, 0.74 for emotionality, 0.75 for extraversion, 0.83 for agreeableness, 0.80 for conscientiousness, and 0.75 for openness). Participants completed the officially back-translated Chinese version of the scale that is posted on the HEXACO website (http://www.hexaco.org). The order of the self-report and the peer-report versions was counterbalanced.
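For readers who want to reproduce this kind of reliability check, the following is a minimal sketch of reverse-keying on a 5-point scale and of the standard Cronbach's alpha formula. The function names and the toy data are hypothetical; the source does not describe the software steps used to obtain the reported alphas.

```python
from statistics import variance

def reverse_key(score, low=1, high=5):
    """Reverse a Likert score, e.g. 5 -> 1 and 2 -> 4 on a 1-5 scale."""
    return low + high - score

def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))
    Reverse-keyed items should be recoded before calling this function.
    """
    k = len(rows[0])
    item_vars = [variance([row[j] for row in rows]) for j in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# Toy data (hypothetical): three items, the third one reverse-keyed.
raw = [[4, 5, 2], [2, 2, 4], [5, 4, 1], [3, 3, 3], [1, 2, 5]]
recoded = [[a, b, reverse_key(c)] for a, b, c in raw]
print(f"alpha = {cronbach_alpha(recoded):.2f}")
```

Recoding first matters: an alpha computed on items that have not been reverse-keyed mixes positively and negatively keyed content and is not interpretable as scale reliability.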

Instructed Response Items

Before starting the study, participants were informed about items that instructed them to answer in a certain way (e.g., “Please answer the option Disagree for this item”), and a sample item was provided (Kam and Chan, 2018). Five such instructed response items were included in the survey.

Analysis Strategy

In the current study, participants who answered more than half of the instructed response items correctly (i.e., at least three out of five items) were considered careful respondents, and all responses from careless respondents were excluded. When only one member of a pair was excluded (due to careless responding), that member's data were treated as missing and the pair was analyzed using full information maximum likelihood (FIML) estimation. Using this criterion, 204 pairs of roommates (from 377 respondents; 255 female and 122 male) were included in the careful respondent sample. All data were analyzed using Mplus 7.1 (Muthén and Muthén, 1998-2012), with the complex analysis option to account for data non-independence (i.e., individuals nested within each roommate pair). Although all items were measured on an ordinal (5-point Likert) scale, Rhemtulla et al. (2012) showed that ordinal scales can be treated as continuous when the number of categories is five or more.
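The screening rule can be sketched in a few lines. This is an illustration under stated assumptions rather than the author's script: the answer key, the dictionary layout, and the function names are hypothetical, and replacing a careless member's data with `None` stands in for treating it as missing under FIML.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical keyed options for the five instructed response items
# (1 = Strongly Disagree ... 5 = Strongly Agree); the source does not list them.
ANSWER_KEY = (2, 4, 1, 5, 2)
MIN_CORRECT = 3  # "more than half" of the five items

def n_correct(irs_answers: Sequence[int], key: Sequence[int] = ANSWER_KEY) -> int:
    """Count instructed response items answered as instructed."""
    return sum(a == k for a, k in zip(irs_answers, key))

def is_careful(irs_answers: Sequence[int],
               key: Sequence[int] = ANSWER_KEY,
               min_correct: int = MIN_CORRECT) -> bool:
    """Apply the at-least-three-of-five rule."""
    return n_correct(irs_answers, key) >= min_correct

def screen_pair(member_a: dict, member_b: dict) -> Optional[Tuple[Optional[list], Optional[list]]]:
    """Return the pair's item data with careless members set to None (missing).

    A pair is dropped (None) only when both members are careless.
    """
    kept = tuple(m["items"] if is_careful(m["irs"]) else None
                 for m in (member_a, member_b))
    if kept == (None, None):
        return None
    return kept

# Usage with two made-up roommates.
alice = {"irs": (2, 4, 1, 5, 3), "items": [4, 2, 5]}   # 4 of 5 correct
bob = {"irs": (3, 3, 3, 3, 3), "items": [3, 3, 3]}     # 0 of 5 correct
print(screen_pair(alice, bob))  # Alice's data kept, Bob's data set to None
```

Keeping the pair when one member passes preserves the roommate's self-report and their peer-report of the excluded member, which is the information FIML can still use.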

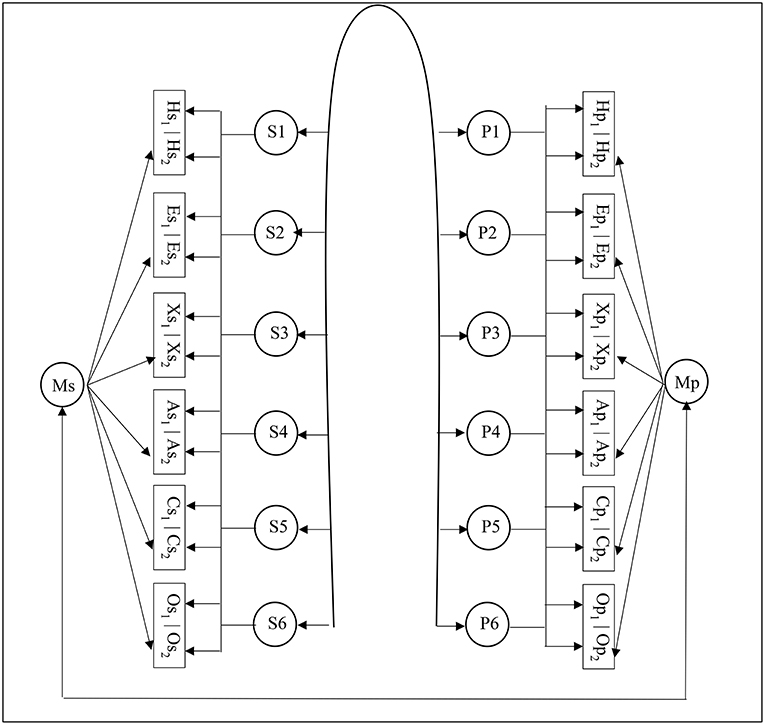

To demonstrate the effect of including careless respondents, we first analyzed the data with the entire sample. We set up an exploratory structural equation modeling (ESEM) model (with geomin rotation) that allows the self-report HEXACO factors and the peer-report HEXACO factors to correlate freely with each other. ESEM has an important advantage over simple confirmatory factor analysis (CFA) because it relaxes the assumption of zero cross-loadings among items, thereby improving model fit without resorting to a parceling strategy. Marsh et al. (2014) strongly advocated the use of ESEM to analyze personality data. In the current study, each self-report HEXACO factor was allowed to have cross-loadings with other self-report HEXACO factors, and, similarly, each peer-report HEXACO factor was allowed to have cross-loadings with other peer-report HEXACO factors. In both self- and peer-ratings, a theoretically driven six-factor HEXACO model was imposed (Figure 1). Previous research has shown the existence of a method effect due to the use of reverse-keyed items in personality measures. We therefore included a method factor on reverse-keyed items for self-report HEXACO factors and another method factor for peer-report HEXACO factors, and compared this model with a model without a method factor. We expected the model with a method factor to fit better than the model without.

Figure 1. Graphic representation of the final model. All self-report personality items loaded on latent factors S1-S6. Most self-report items had major loadings on one factor and cross-loadings on other factors. Similarly, all peer-report personality items loaded on latent factors P1-P6. Most peer-report items had major loadings on one factor and cross-loadings on other factors. S1-S6 and P1-P6 were allowed to correlate with each other. Reverse-keyed self-report items loaded on a method factor (Ms), and reverse-keyed peer-report items loaded on another method factor (Mp). H, honesty-humility items; E, emotionality items; X, extraversion items; A, agreeableness items; C, conscientiousness items; O, openness to experience items; s, self-report; p, peer-report; subscript 1, regular-keyed items; subscript 2, reverse-keyed items. For presentation purposes, only item groups are presented. For example, Hs1 represents all regular-keyed items for the self-report honesty-humility measure. The following information is not shown in this figure due to space limitations. First, the self-report method factor (Ms) was allowed to correlate with peer-report personality factors (P1-P6). Second, the peer-report method factor (Mp) was allowed to correlate with self-report personality factors (S1-S6).

For the purpose of the current study, we are mainly interested in comparing the entire sample with the careful respondent only sample on two aspects: first, the factor loading pattern of HEXACO factors; and second, the construct validity (i.e., convergent and discriminant validity) between self-report and peer-report of each HEXACO latent factor. For convergent validity, self-ratings and peer-ratings of the same personality factors should correlate well with each other. Therefore, we compared the strength of the correlations for the same personality factors between the entire sample and the careful respondent only sample. For discriminant validity, personality factors are theoretically orthogonal (due to the non-redundancy of the factors) and thus should not be strongly correlated. We examined the cross-correlations between personality factors and compared these correlations between the entire sample and the careful respondent only sample.

Results

Factor Analysis Results

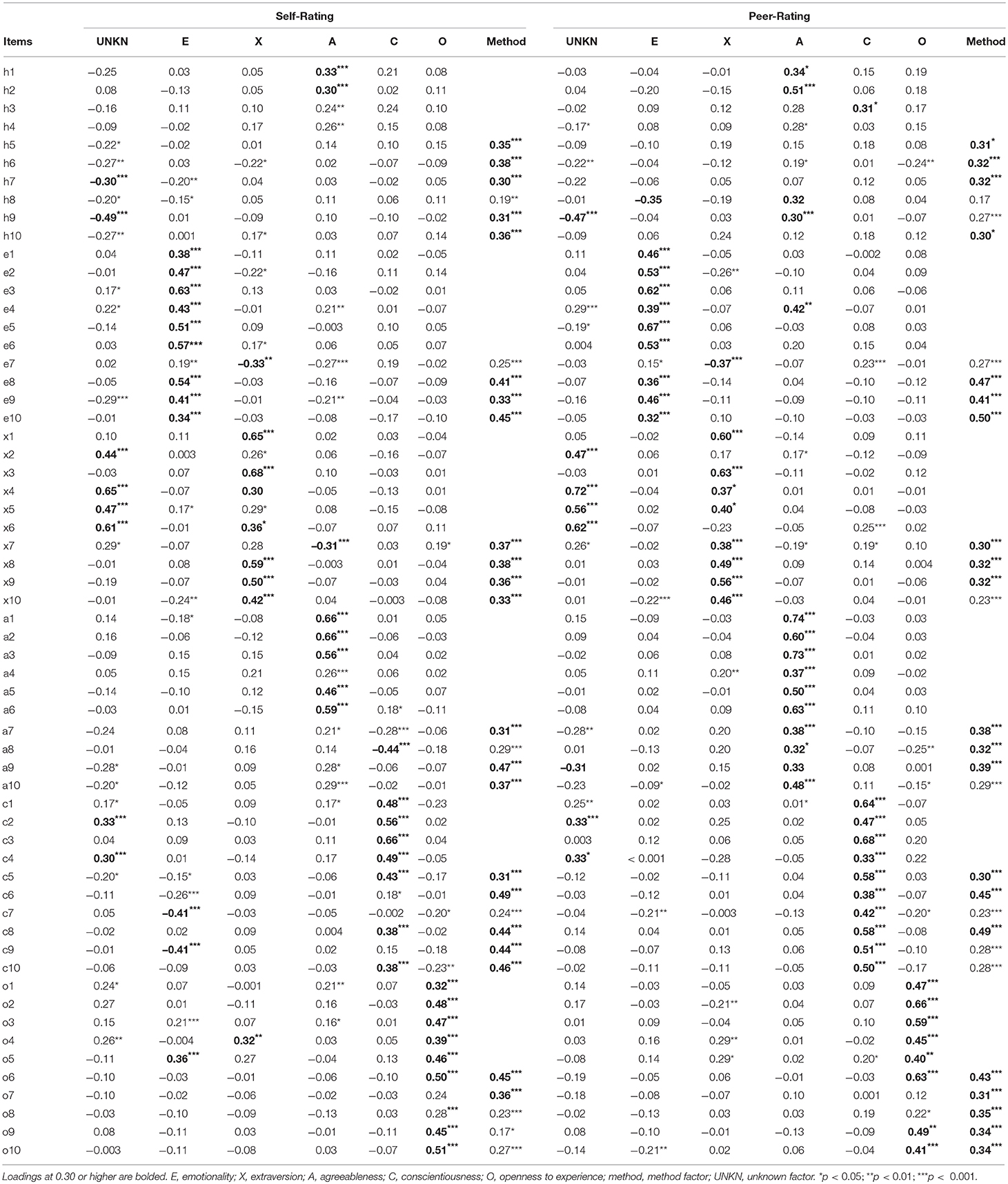

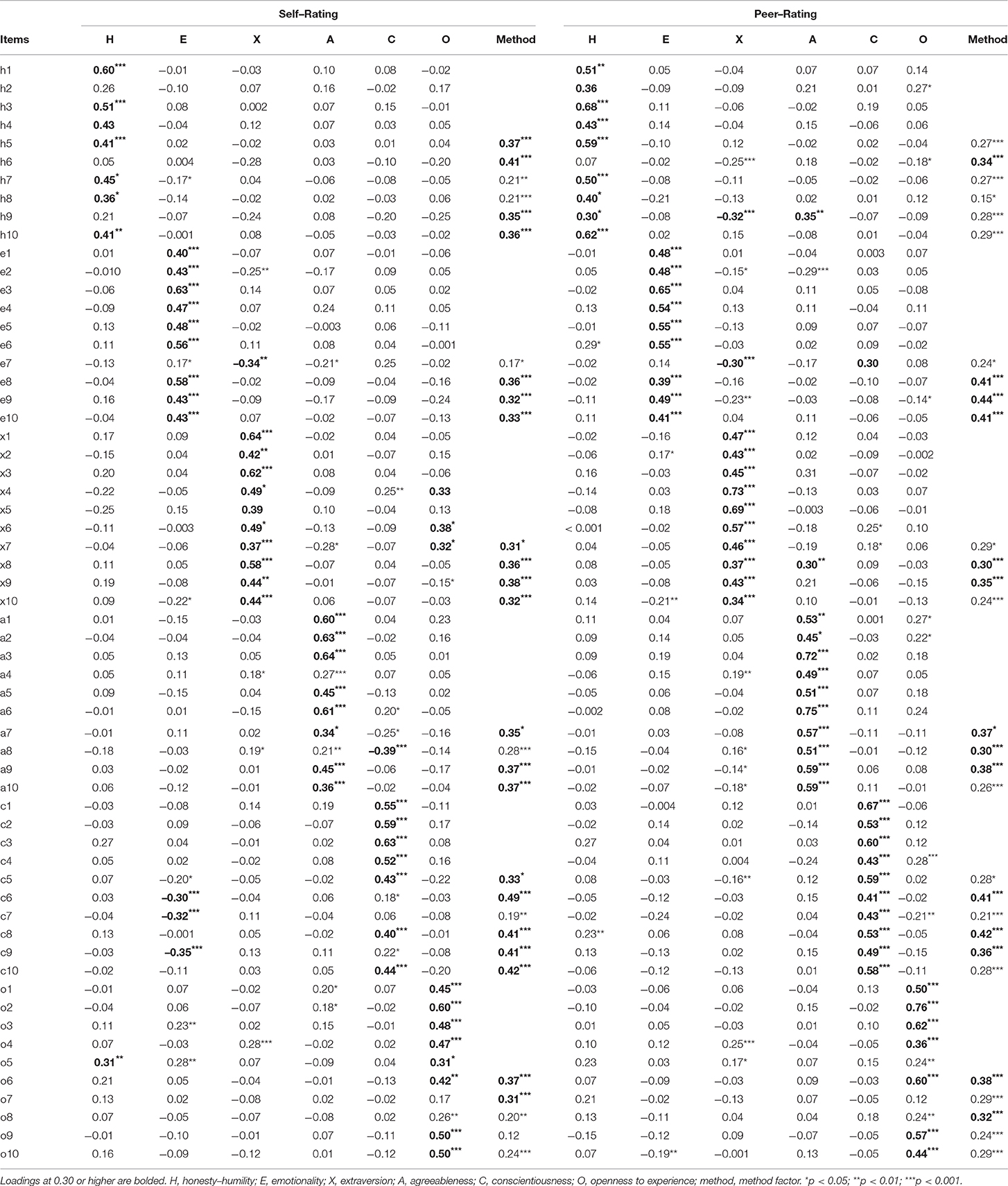

The ESEM model with a method factor fit significantly better than the ESEM model without a method factor, in both the entire sample (Δχ2 = 293.67; Δdf = 71, p < 0.001) and the careful respondent sample (Δχ2 = 304.01; Δdf = 71, p < 0.001). Therefore, the method factor model was used. When the entire sample was analyzed, ESEM failed to show a clear loading pattern for all six HEXACO factors (Table 1). The honesty-humility factor was missing in both self-ratings and peer-ratings, and agreeableness items did not load well in the self-rating data; only half of the items loaded on the agreeableness factor. In addition, there were a substantial number of cross-loadings in extraversion items, in both self-ratings and peer-ratings. In both types of ratings, four out of ten extraversion items cross-loaded on the unknown factor. Given an entirely missing factor (honesty-humility) and a substantial number of cross-loadings, a researcher may conclude that the six-factor HEXACO model was only partially supported by the data.
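The reported difference tests can be checked approximately with the standard library alone. The sketch below uses the Wilson-Hilferty normal approximation to the chi-square distribution, which is a swapped-in approximation and not the procedure used by Mplus; the function name is hypothetical. It also treats the reported Δχ2 as an ordinary chi-square difference. If the values came from a robust estimator, a scaled difference test would be needed instead, and the source does not specify which was used.

```python
import math

def chi2_upper_tail(x: float, df: int) -> float:
    """Approximate P(X >= x) for a chi-square variate (Wilson-Hilferty).

    The cube root of (X / df) is treated as approximately normal with
    mean 1 - 2 / (9 df) and variance 2 / (9 df).
    """
    h = 2.0 / (9.0 * df)
    z = ((x / df) ** (1.0 / 3.0) - (1.0 - h)) / math.sqrt(h)
    return 0.5 * math.erfc(z / math.sqrt(2.0))

# Values reported in the text: method-factor model vs. no-method-factor model.
for label, delta_chi2 in [("entire sample", 293.67), ("careful sample", 304.01)]:
    p = chi2_upper_tail(delta_chi2, df=71)
    print(f"{label}: delta chi2 = {delta_chi2}, df = 71, p < 0.001 is {p < 0.001}")
```

Both differences are far larger than their degrees of freedom, so the conclusion that the method factor improves fit does not depend on the accuracy of the approximation.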

In contrast, a much better loading pattern was found in the careful respondent only dataset (Table 2). First, the honesty-humility factor was recovered, particularly in the peer-rating data. Seven out of 10 items in self-rating and 9 out of 10 items in peer-rating loaded successfully on the honesty-humility factor. Second, for each factor, at least seven out of ten items loaded successfully on the corresponding factor. Although cross-loadings still existed, they were less severe in the careful respondent data than in the entire respondent data. For extraversion, most items in self-rating and all items in peer-rating loaded on the factor, and cross-loadings were less severe in the careful respondent data. This is in stark contrast with the loading pattern for the same factor in the entire respondent data. Interestingly, the factor loading pattern appeared to be better in peer-rating (i.e., fewer cross-loadings) than in self-rating. Overall, the results showed a superior loading pattern in the careful respondent data compared with the entire respondent data.

Based on traditional cutoffs for fit indices (e.g., Fan and Sivo, 2007), the overall fit was somewhat inadequate in both the entire sample (χ2 = 11099.17, df = 6343, p < 0.001, TLI = 0.67, CFI = 0.70, RMSEA = 0.04, SRMR = 0.05) and the careful respondent only sample (χ2 = 10894.05, df = 6343, p < 0.001, TLI = 0.65, CFI = 0.69, RMSEA = 0.04, SRMR = 0.05). TLI and CFI were both suboptimal but RMSEA and SRMR were good. However, a large number of items can cause likelihood ratio statistics to deviate from the assumed chi-square distribution, resulting in inflated Type 1 error (Yuan et al., 2019). Shi et al. (2018) showed that both TLI and CFI are inaccurate assessments of model fit in a large model.
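As a quick consistency check, the reported RMSEA values can be recovered from the reported χ2 and df with the common point-estimate formula. This is a sketch under assumptions: the function name is hypothetical, the sample sizes used (436 and 377 respondents) are taken from the Methods and Analysis Strategy sections and may not be the N that the software used with clustered data, and software variants (N versus N - 1, robust scaling) can differ slightly.

```python
from math import sqrt

def rmsea(chi2: float, df: int, n: int) -> float:
    """Common RMSEA point estimate: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))

# Fit statistics reported in the text; n is an assumption (see lead-in).
entire = rmsea(11099.17, 6343, 436)
careful = rmsea(10894.05, 6343, 377)
print(f"entire sample RMSEA ~ {entire:.3f}, careful sample RMSEA ~ {careful:.3f}")
```

Because RMSEA divides the misfit by the degrees of freedom, a model with several thousand degrees of freedom can show a small RMSEA even when CFI and TLI are low, which fits the pattern of indices reported above.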

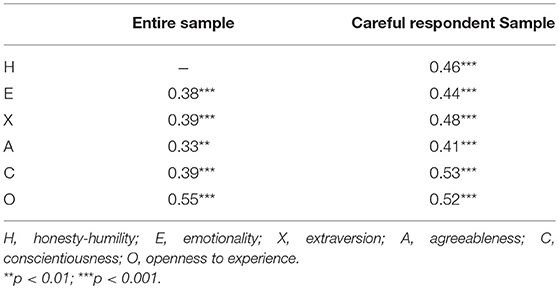

Convergent Validity Evidence

In personality measures, self-ratings and peer-ratings should correlate well with each other. Therefore, we examined the correlation of each personality trait between self-rating and peer-rating in the entire sample and in the careful respondent sample. The results (Table 3) showed that the correlations for the careful respondent sample tended to be stronger than those for the entire sample (except for openness). Due to the non-independence between the two sets of data (i.e., the careful respondent sample is a subset of the entire sample), the correlations could not be statistically compared.

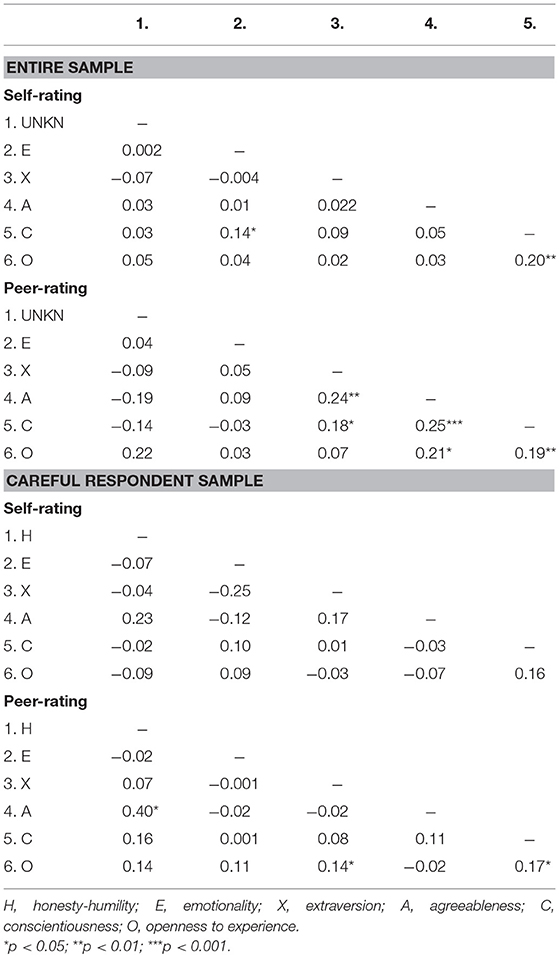

Discriminant Validity Evidence

Personality factors are theoretically orthogonal (i.e., uncorrelated with each other). Therefore, we examined the discriminant validity among the personality factors in the entire sample and in the careful respondent sample. The results (Table 4) showed weak but significant correlations among personality factors in the entire sample. In stark contrast, there were fewer significant correlations among personality factors in the careful respondent sample (3 vs. 7 significant correlations). The only exception is the correlation between honesty-humility and agreeableness (r = 0.40) in peer-rating. This is not surprising given that the two personality factors are often confused with each other, and in this study, the confusion appears in peer-rating but not in self-rating (r = 0.23, ns). Therefore, the careful respondent sample showed stronger discriminant validity evidence than the entire sample.

Discussion

The primary motivation of the current article is to demonstrate that careless respondents can threaten the factor loading pattern and construct validity of personality measures, and the results of the current study supported this hypothesis. First, for factor analytic results, inclusion of careless respondents is likely to hinder the discovery of theoretically existing latent factors and cause serious cross-loading problems. Second, compared to analyzing careful respondents only, inclusion of careless respondents may decrease the correlations of personality factors between self-ratings and peer-ratings. This decrease in correlations was, however, only modest in the current study. Nonetheless, the effect of such correlation attenuation may become substantial in meta-analytic research, when most studies do not exclude careless respondents from data analysis. To our knowledge, some research fields (e.g., industrial-organizational psychology) often employ meta-analysis to uncover the “true” correlation among constructs. Third, compared to analyzing careful respondents only, inclusion of careless respondents is likely to inflate correlations among theoretically distinct constructs. This result is particularly striking in the self-report data in the current study. The number of statistically significant correlations is higher in the entire sample than in the careful respondent only sample. Given these results, researchers who are interested in conducting construct validation studies should no longer ignore the effect of careless respondents in their data.

A critic may question the validity of the HEXACO scale due to the moderate relationship—rather than theoretically predicted orthogonal relationship—between two dimensions (honesty-humility and agreeableness) in peer report. However, observer reports are more likely than self-reports to have difficulty discriminating between these two dimensions (Lee and Ashton, 2006), perhaps because observers have less information to differentiate between nice people and honest people. Both HEXACO dimensions, however, showed discriminant validity with external variables (Hilbig et al., 2013), meaning that they are two distinct constructs. The moderate relationship should therefore not undermine confidence in the validity of the scale.

The current study has several limitations. First, we employed a popular personality scale among educated Chinese university students; future research should extend the generalizability of the results using other measures among less educated participants. Second, we employed ESEM analysis, and the results should generalize to exploratory factor analysis. Future research may look at the impact of careless responding in other statistical analytic techniques. Finally, we limited our investigation of convergent validity to the relationship between self-rating and peer-rating. We also limited our investigation of discriminant validity to the relationship among personality factors. Future research can further broaden the scope of our investigation.

Data Availability

The datasets generated for this study are available on request to the corresponding author.

Ethics Statement

This study was carried out in accordance with the recommendations of Research Services and Knowledge Transfer Office, University of Macau, with written informed consent from all subjects. All subjects gave written informed consent in accordance with the Declaration of Helsinki. The protocol was approved by the Research Services and Knowledge Transfer Office, University of Macau.

Author Contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Funding

The current study was financially supported by Multi-Year Research Grant (MYRG2018-00010-FED) offered by the University of Macau to the author.

Conflict of Interest Statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Ashton, M. C., and Lee, K. (2009). The HEXACO-60: a short measure of the major dimensions of personality. J. Personal. Assess. 91, 340–345. doi: 10.1080/00223890902935878

Asparouhov, T., and Muthen, B. (2009). Exploratory structural equation modeling. Struct. Eq. Model. 16, 397–438. doi: 10.1080/10705510903008204

Crede, M. (2010). Random responding as a threat to the validity of effect size estimates in correlational research. Edu. Psychol. Measure. 70, 596–612. doi: 10.1177/0013164410366686

DeSimone, J. A., DeSimone, A. J., Harms, P. D., and Wood, D. (2018). The differential impacts of two forms of insufficient effort responding. Appl. Psychol. 67, 309–338. doi: 10.1111/apps.12117

Fan, X., and Sivo, S. A. (2007). Sensitivity of fit indices to model misspecification and model types. Multivar. Behav. Res. 42, 509–529. doi: 10.1080/00273170701382864

Hilbig, B. E., Zettler, I., Leist, F., and Heydasch, T. (2013). It takes two: Honesty-humility and agreeableness differentially predict active versus reactive cooperation. Personal. Individ. Diff. 54, 598–603. doi: 10.1016/j.paid.2012.11.008

Hinkin, T. R. (1995). A review of scale development practices in the study of organizations. J. Manag. 21, 967–988. doi: 10.1177/014920639502100509

Huang, J. L., Curran, P. G., Keeney, J., Poposki, E. M., and DeShon, R. P. (2012). Detecting and deterring insufficient effort responding. J. Business Psychol. 27, 99–114. doi: 10.1007/s10869-011-9231-8

Huang, J. L., Liu, M., and Bowling, N. A. (2015). Insufficient effort responding: examining an insidious confound in survey data. J. Appl. Psychol. 100, 828–845. doi: 10.1037/a0038510

Jonas, K., Broemer, P., and Diehl, M. (2000). Attitudinal ambivalence. Eur. Rev. Soc. Psychol. 11, 35–74. doi: 10.1080/14792779943000125

Kam, C. C. S., and Chan, H. H. C. (2018). Examination of the validity of instructed response items in identifying careless respondents. Personal. Individ. Diff. 129, 83–87. doi: 10.1016/j.paid.2018.03.022

Kam, C. C. S., and Fan, X. (2018). Investigating response heterogeneity in the context of positively and negatively worded items using factor mixture modeling. Org. Res. Methods. doi: 10.1177/1094428118790371

Kam, C. C. S., and Meyer, J. P. (2015). How careless responding and acquiescence response bias can influence construct dimensionality: the case of job satisfaction. Org. Res. Methods 18, 512–541. doi: 10.1177/1094428115571894

Lee, K., and Ashton, M. C. (2006). Further assessment of the HEXACO Personality Inventory: two new facet scales and an observer report form. Psychol. Assess. 18, 182–191. doi: 10.1037/1040-3590.18.2.182

Maniaci, M. R., and Rogge, R. D. (2014). Caring about carelessness: participant inattention and its effects on research. J. Res. Personal. 48, 61–83. doi: 10.1016/j.jrp.2013.09.008

Marsh, H. W., Morin, A. J. S., Parker, P. D., and Kaur, G. (2014). Exploratory structural equation modeling: Integration of the best features of exploratory and confirmatory factor analysis. Annu. Rev. Clin. Psychol. 10, 85–110. doi: 10.1146/annurev-clinpsy-032813-153700

McGonagle, A. K., Huang, J. L., and Walsh, B. M. (2016). Insufficient effort survey responding: An under-appreciated problem in work and organisational health psychology research. Appl. Psychol. 65, 287–321. doi: 10.1111/apps.12058

Meade, A. W., and Craig, S. B. (2012). Identifying careless responses in survey data. Psychol. Methods 17, 437–455. doi: 10.1037/a0028085

Muthén, L. K., and Muthén, B. O. (1998-2012). Mplus User's Guide. 7 Ed. Los Angeles, CA: Muthén & Muthén.

Rhemtulla, M., Brosseau-Liard, P. É., and Savalei, V. (2012). When can categorical variables be treated as continuous? a comparison of robust continuous and categorical SEM estimation methods under suboptimal conditions. Psychol. Methods 17, 354–373. doi: 10.1037/a0029315

Shi, D., Lee, T., and Maydeu-Olivares, A. (2018). Understanding the model size effect on SEM fit indices. Edu. Psychol. Measure. 79, 310–334. doi: 10.1177/0013164418783530

Tourangeau, R., Rips, L. J., and Rasinski, K. (2000). The Psychology of Survey Response. Cambridge, UK: Cambridge University Press.

Weijters, B., Baumgartner, H., and Schillewaert, N. (2013). Reversed item bias: an integrative model. Psychol. Methods 18, 320–334. doi: 10.1037/a0032121

Keywords: careless responding, insufficient effort responding, factor analysis, construct validity, convergent validity, discriminant validity

Citation: Kam CCS (2019) Careless Responding Threatens Factorial Analytic Results and Construct Validity of Personality Measure. Front. Psychol. 10:1258. doi: 10.3389/fpsyg.2019.01258

Received: 23 March 2019; Accepted: 13 May 2019;

Published: 14 June 2019.

Edited by:

África Borges, Universidad de La Laguna, Spain

Reviewed by:

Leire Aperribai Unamuno, University of Deusto, Spain

Doris Castellanos-Simons, Universidad Autónoma del Estado de Morelos, Mexico

Copyright © 2019 Kam. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chester Chun Seng Kam, chesterkam@um.edu.mo
