- 1 Department of Clinical Psychology, University of East Anglia, Norwich, United Kingdom

- 2 School of Biological Sciences, University of East Anglia, Norwich, United Kingdom

- 3 Centre for Affective Disorders, King's College, London, United Kingdom

- 4 Department of Psychology, Centre for Brain Science, University of Essex, Colchester, United Kingdom

It has repeatedly been argued that individual differences in personality influence emotion processing, but findings from both the facial and the vocal emotion recognition literatures are contradictory, suggesting a lack of reliability across studies. To explore this relationship further and more systematically, we designed two studies that both used the Big Five Inventory but employed different research paradigms. Study 1 explored the relationship between personality traits and vocal emotion recognition accuracy, while Study 2 examined how personality traits relate to vocal emotion recognition speed. The combined results did not indicate a pairwise linear relationship between self-reported individual differences in personality and vocal emotion processing, suggesting that the frequently proposed influence of personality characteristics on vocal emotion processing may have been overemphasized in previous work.

Introduction

One of the most influential hypotheses about differences in emotion processing, the trait-congruency hypothesis, argues that stable personality traits influence the precision of an individual's emotion processing (see Rusting, 1998, for a review). For example, extraversion and neuroticism have been extensively linked to the processing of positive and negative emotions, respectively (Larsen and Ketelaar, 1989; Gomez et al., 2002; Robinson et al., 2007). However, although the evidence points toward some form of relationship between selective emotion processing and certain personality characteristics, the literature of recent decades is contradictory (Matsumoto et al., 2000).

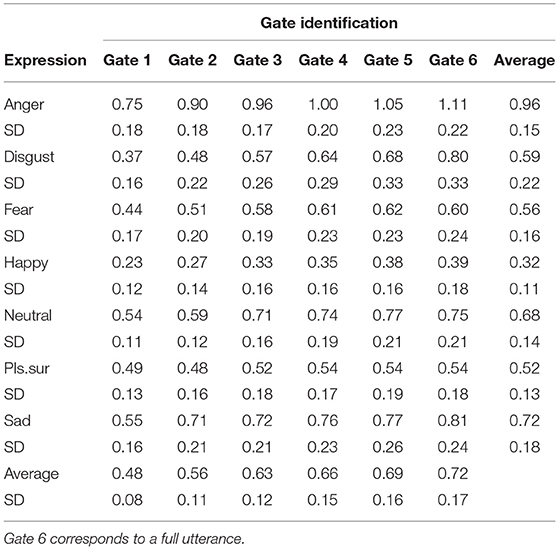

Both the vocal and the facial emotion recognition literatures have explored the relationship between personality traits and emotion recognition accuracy, although far more emphasis has been placed on detecting emotions from faces. In the vocal emotion literature, for instance, extraversion and conscientiousness have been associated with better vocal emotion recognition, but only in males (Burton et al., 2013), whereas Terracciano et al. (2003) found a positive relationship between vocal emotion perception and openness to experience. Similarly, in the facial emotion literature, some studies have linked better emotion recognition to openness to experience and conscientiousness (Matsumoto et al., 2000), while others have emphasized the importance of extraversion and neuroticism. Confusingly, while some researchers have argued that extraverted individuals perform better on facial emotion recognition tasks (Matsumoto et al., 2000; Scherer and Scherer, 2011), other studies have failed to find evidence of this relationship (Cunningham, 1977). Likewise, neuroticism has been linked to both poorer (Matsumoto et al., 2000) and better (Cunningham, 1977) recognition of facial emotions. These contradictory findings are thus not wholly in line with the predictions of the trait-congruency hypothesis in either the facial or the vocal domain (see Table 1 for an overview).

Table 1. An overview of studies exploring the relationship between personality traits and emotion recognition accuracy.

One set of factors that might explain the inconsistent findings relates to methodological differences between studies. For instance, studies have used different personality inventories and varying emotion recognition measures. While some studies correlate personality traits with overall emotion recognition accuracy (Terracciano et al., 2003; Elfenbein et al., 2007; Burton et al., 2013), others have investigated the relationship between personality traits and recognition of specific emotions (e.g., Matsumoto et al., 2000). Further, some studies have focused on individual personality traits, such as extraversion and neuroticism alone (e.g., Cunningham, 1977; Scherer and Scherer, 2011), whereas others have included all Big Five personality dimensions (i.e., agreeableness, conscientiousness, extraversion, neuroticism, and openness to experience; e.g., Matsumoto et al., 2000; Rubin et al., 2005). It is thus clear that our understanding of the potential interplay between personality traits and the processing of emotional information is far from complete and warrants further investigation.

Given the inconsistent literature on personality and vocal emotion recognition accuracy, it is also possible that individual differences in personality traits influence the temporal processing of vocal emotions. For instance, just as the trait-congruency hypothesis predicts that extraversion and neuroticism are linked to better recognition of positive and negative emotions, respectively, it could be argued that these traits are also linked to quicker recognition of those emotions. Recent advances in the vocal emotion literature have made it possible to investigate the temporal processing of vocal emotions, which can provide crucial information on when distinct emotion categories are recognized and how much acoustic information is needed to recognize the emotional state of a speaker (Pell and Kotz, 2011).

The auditory gating paradigm is often employed to examine how much acoustic-phonetic information is required to accurately identify a spoken stimulus, and it can be applied to any linguistic unit of interest (e.g., word, syllable, sentence; Grosjean, 1996). For example, a spoken word can be divided into smaller segments, and listeners are then presented with segments of increasing duration, starting at stimulus onset. The first segment is thus very brief, while the final segment corresponds to the complete stimulus (Grosjean, 1996). After each segment, listeners are asked to identify the target word and rate how confident they are in their response. This technique enables calculation of the isolation point, that is, the size of the segment needed for accurate identification of the target (Grosjean, 1996).
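As an illustration of the isolation point, the sketch below takes one listener's responses to a single stimulus across successive gates and returns the first gate from which the response is correct and never changes again. This is one common operationalization, assumed here for illustration; some gating studies add further criteria (for example, on confidence ratings), which this sketch does not. The function name `isolation_point` and the example responses are hypothetical.

```python
from typing import Optional, Sequence


def isolation_point(responses: Sequence[str], target: str) -> Optional[int]:
    """Return the 1-based gate from which `target` is chosen at every
    remaining gate, or None if the listener never settles on it."""
    point = None
    for gate, response in enumerate(responses, start=1):
        if response == target:
            if point is None:
                point = gate  # candidate isolation point
        else:
            point = None  # listener moved away from the target: reset
    return point


# Hypothetical responses to one "fear" utterance across six gates
responses = ["neutral", "fear", "sad", "fear", "fear", "fear"]
print(isolation_point(responses, "fear"))  # → 4
```

Resetting the candidate whenever the listener abandons the target is what distinguishes an isolation point from the first correct guess: in the example, the correct answer at Gate 2 does not count because it is given up at Gate 3.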

Different emotion categories unfold at different rates (Banse and Scherer, 1996), which can be understood in terms of the biological significance of each emotion category (Pell and Kotz, 2011). For example, fear signals a threatening situation that requires an immediate behavioral response, which suggests that this emotion should be recognized faster than a less threatening emotion, such as happiness. In line with this, Pell and Kotz (2011) found an emotion bias, in which fear was the most quickly recognized emotion category. In contrast, Cornew et al. (2010) have argued for a neutral bias, as they found that neutral utterances were identified more rapidly than angry utterances, which were in turn identified more rapidly than happy utterances. The position of acoustic cues has also been shown to play a crucial role in decoding vocal emotions. Rigoulot et al. (2013) compared a forward gating condition, in which the first gate reflected sentence onset, with a backward condition, in which the first gate corresponded to the last segment before sentence offset and the final gate to the full utterance. Results revealed that the position of acoustic cues is particularly important when happiness and disgust are expressed prosodically.

While the behavioral literature on the time course of vocal emotion processing is still in its infancy, research on how differences in personality traits influence the temporal processing of vocal emotions is absent. To our knowledge, the only study that has examined differences in the temporal processing of vocal emotions, albeit at a group level, is that of Jiang et al. (2015). They examined the time course of vocal emotion recognition across cultures and reported an in-group advantage, i.e., quicker and more accurate recognition, when English and Hindi listeners heard emotionally intoned utterances in their own language compared to foreign-language utterances (English for Hindi listeners, Hindi for English listeners). This is consistent with findings from the vocal emotion accuracy literature, in which other studies (e.g., Paulmann and Uskul, 2014) have also reported an in-group advantage in recognizing emotional displays. However, it remains unexplored how the temporal dynamics of vocal emotion recognition are influenced by personality characteristics.

The present investigation consisted of two independent but related studies with two main aims: to better understand whether and how personality traits predict individual differences in (1) vocal emotion recognition accuracy and (2) vocal emotion recognition speed. Specifically, Study 1 investigates whether recognition accuracy for various vocal emotions (i.e., anger, disgust, fear, happiness, neutral, pleasant surprise, and sadness) is related to individual differences in personality traits, while Study 2 is the first attempt to explore the influence of individual personality traits on the time course of vocal emotion processing. That is, Study 2 asks whether individuals differ in the amount of acoustic information they require to draw valid conclusions about the emotion communicated through tone of voice.

The two studies were also designed to address certain methodological issues identified in the previous literature and to account for other potential confounding variables. Firstly, the Big Five Inventory (BFI) was used consistently across both studies to ensure that potential findings were not confounded by the use of different measurement tools. Recognition rates for individual emotion categories, as well as overall recognition accuracy, were examined in relation to BFI scores to allow a fuller comparison with the previous literature.

Generally, vocal perception studies use professional actors to portray emotions (e.g., Graham et al., 2001; Banziger and Scherer, 2005; Airas and Alku, 2006; Toivanen et al., 2006), based on the assumption that professional actors are better able to portray unambiguous emotions (Williams and Stevens, 1972). However, it has been argued that professional actors may produce exaggerated, stereotypical portrayals (e.g., Scherer, 1995; Juslin and Laukka, 2001; Paulmann et al., 2016), which may lack ecological validity (Scherer, 1989). A recent study by Paulmann et al. (2016) reported that, at an acoustic level, untrained speakers could convey vocal emotions similarly to trained speakers, suggesting that untrained speakers might provide a good alternative. Thus, in this investigation we employed materials from both untrained speakers (Study 1) and a professionally trained speaker (Study 2). This allows us to test whether potential effects of personality traits on vocal emotion recognition generalize across speaker types (professional and non-professional). This approach may also help future studies decide which materials are best suited to explore personality traits in the context of vocal emotions.

In line with the trait-congruency hypothesis, we hypothesized that extraversion would be linked to better and quicker recognition of positive vocal emotions, while neuroticism would be linked to better and quicker recognition of negative vocal emotions. To test this hypothesis in both studies, overall recognition accuracy scores were generated for positive (happiness, pleasant surprise) and negative (anger, disgust, fear, sadness) emotions and examined in relation to levels of extraversion and neuroticism. Given the sparse and contradictory findings in the previous literature, predictions are difficult to make for the other Big Five personality traits, i.e., agreeableness, conscientiousness, and openness to experience. We would argue that if there is a true, systematic relationship between personality traits and the processing of vocal emotions, this relationship should be evident in both studies.

Study 1

The overall aim of Study 1 was to explore the relationship between individual differences in personality traits and vocal emotion recognition accuracy.

Methods

Participants

Ninety-five undergraduate Psychology students at the University of Essex [75 females; mean age: 19.5 years, SD (standard deviation): 3.09] participated and gave their written informed consent. They received module credits for their participation. All participants reported normal or corrected-to-normal hearing and vision. Participants who self-reported experiencing a mental disorder were excluded from the analyses, as several studies have shown impaired emotion recognition in clinical populations such as individuals with depression (e.g., Leppanen et al., 2004), schizophrenia (e.g., Kohler et al., 2003), and borderline personality disorder (e.g., Unoka et al., 2011).

Consequently, 81 participants (65 females) were included in the final statistical analyses. This sample size was considered sufficient, as G*Power 3.1 (Faul et al., 2007) yielded an estimated required sample size of 84 participants (power = 0.80, alpha = 0.05, effect size r = 0.3). We considered a small-to-medium effect size a conventional estimate based on several studies exploring similar variables, often with smaller sample sizes (see Table 1).
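The sample-size estimate can be approximated without G*Power. The sketch below uses the Fisher z-transformation, a standard normal approximation for a two-tailed test of a Pearson correlation; it is not G*Power's exact bivariate-normal routine, and the two-tailed setting is an assumption, since the text does not state the number of tails. The function name `n_for_correlation` is hypothetical.

```python
import math
from statistics import NormalDist


def n_for_correlation(r: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate N needed to detect a population correlation r
    (two-tailed), via the Fisher z approximation."""
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-tailed critical value
    z_beta = z.inv_cdf(power)  # quantile matching the desired power
    c = math.atanh(r)  # Fisher z of the assumed correlation
    return math.ceil(((z_alpha + z_beta) / c) ** 2 + 3)


print(n_for_correlation(0.3))  # → 85 (the text reports 84 from G*Power's exact test)
```

The approximation lands one participant above the exact value, which is typical: the normal approximation is slightly conservative for moderate correlations.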

Stimuli Selection

Stimuli for Study 1 were taken from a previous inventory (Paulmann et al., 2016). Fifteen semantically neutral sentences (e.g., “The fence was painted brown”) were portrayed by nine non-professional female speakers in seven emotional tones (anger, disgust, fear, happiness, neutral, sad, and surprise). Forty sentences were presented for each emotional category, resulting in 280 sentences in total. Emotionality ratings for these materials were obtained in a previous study (Paulmann et al., 2016), in which all materials were recognized much better than chance would predict. Specifically, arcsine-transformed Hu scores for the materials ranged from 0.42 (for happiness) to 0.96 (for anger; see Paulmann et al., 2016, for more details on the stimuli). The 280 sentences were randomly allocated to seven blocks of 40 sentences each. Sentence stimuli are outlined in Appendix A.

The Big Five Inventory (BFI)

The BFI (John et al., 1991, 2008) is a 44-item questionnaire assessing the Big Five personality dimensions (A, C, E, N, O). In contrast to the NEO-PI-R (Costa Jr and McCrae, 1995), the BFI is a shorter instrument, frequently used in research settings, that assesses the prototypical traits of the Big Five. In addition, the BFI shows high reliability and validity when compared with other Big Five measures, e.g., the Trait-Descriptive Adjectives (TDA; Goldberg, 1992) and the NEO-FFI, a shorter 60-item version of the NEO-PI-R (Costa and McCrae, 1989, 1992).

Design

A cross-sectional design was employed. For the correlational analyses, personality traits were used as predictor variables, while the criterion variable was vocal emotion recognition accuracy. For the repeated-measures ANOVA, Emotion was the within-subject variable, with seven levels: anger, disgust, fear, happiness, neutral, pleasant surprise, and sadness.

Procedure

Participants were seated in front of a computer, where they listened to the sentence stimuli. They were informed of the experimental procedure both by the experimenter and through on-screen instructions. Five practice trials were included to ensure that participants fully understood the task. On each trial, a fixation cross appeared in the center of the screen before sentence onset and remained visible while participants listened to the sentence. Participants indicated which emotion the speaker intended to convey in a forced-choice format, in which seven emotion boxes (anger, disgust, fear, happy, neutral, pleasant surprise, sad) appeared on the screen after sentence offset. After a response was given, there was an inter-stimulus interval of 1,500 ms before the next sentence was presented. Between blocks, participants were able to pause until they felt ready to continue the task. The total run-time of the computerized task was approximately 30 min. After finishing the experiment, participants completed the BFI, the Satisfaction with Life Scale, the PANAS-X, and the Affect Intensity Measure (the latter three are not reported here) before being debriefed about the purpose of the study. All measures and procedures applied are reported within this manuscript.

Results

Vocal Emotion Recognition

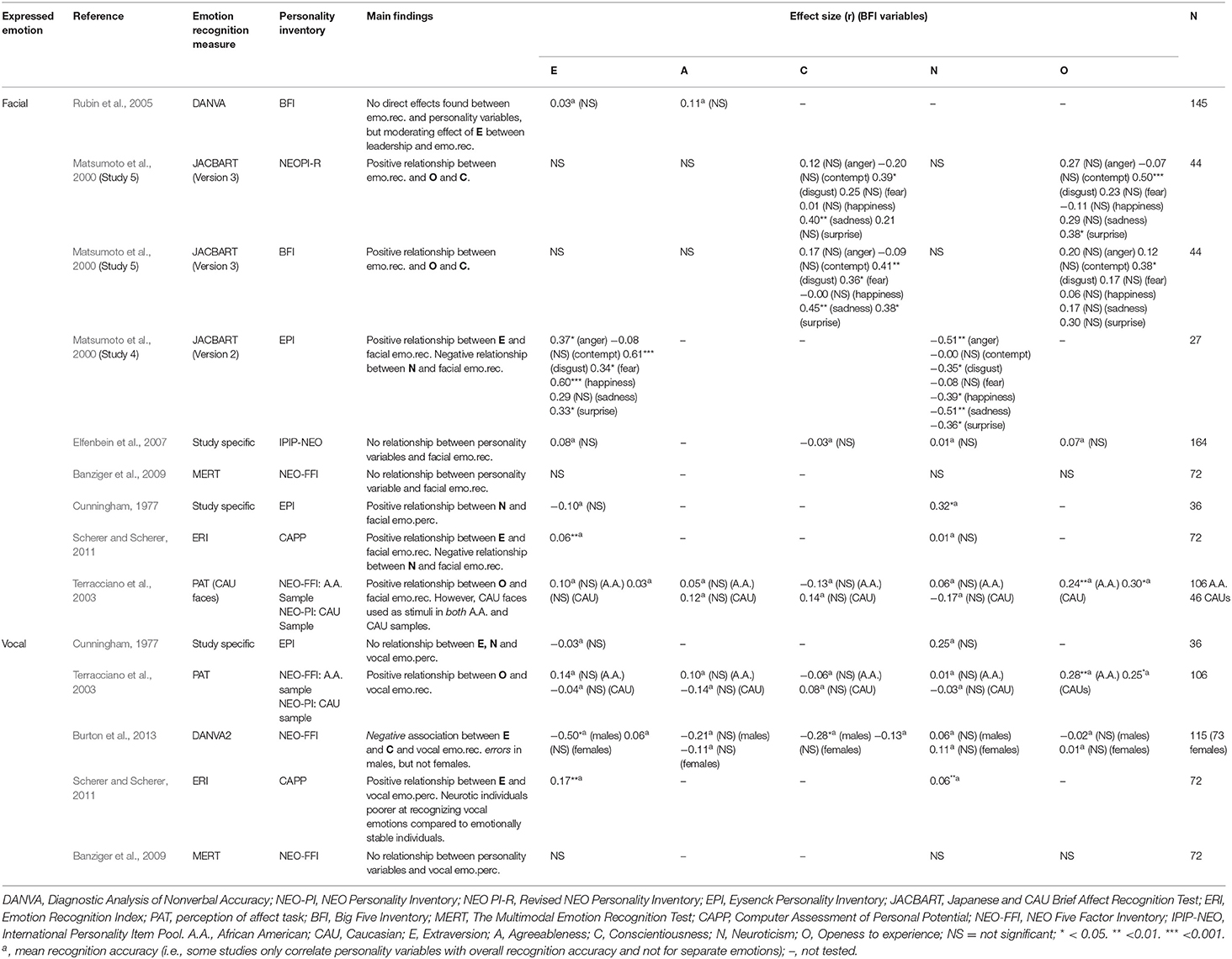

To control for stimulus and response biases, raw hit rates were transformed into unbiased hit rates (Hu scores; Wagner, 1993; see Appendix C for raw hit rates and error patterns). Because Hu scores are proportions, they were arcsine-transformed as recommended for these data (Wagner, 1993). The arcsine-transformed Hu scores are presented in Table 2; a score of zero is equivalent to chance performance, while a score of 1.57 reflects perfect performance.
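To make the transformation concrete, the sketch below computes Wagner's (1993) unbiased hit rate for each category from a single participant's confusion matrix, Hu = hits² / (stimuli presented × times that response was used), and then applies the arcsine-square-root transform, whose maximum is π/2 ≈ 1.57. The matrix layout (rows = intended emotion, columns = response) and the choice to return 0 when a response category was never used are assumptions made for this illustration; the function name `arcsine_hu` is hypothetical.

```python
import math


def arcsine_hu(confusion: list[list[int]]) -> list[float]:
    """Arcsine-transformed unbiased hit rate (Hu) for each category.

    confusion[i][j] counts how often a stimulus of category i
    received response j (rows: stimuli, columns: responses).
    """
    k = len(confusion)
    col_totals = [sum(row[j] for row in confusion) for j in range(k)]
    scores = []
    for i, row in enumerate(confusion):
        hits = row[i]
        presented = sum(row)  # number of stimuli of category i
        used = col_totals[i]  # number of times response i was chosen
        hu = hits ** 2 / (presented * used) if used else 0.0
        scores.append(math.asin(math.sqrt(hu)))  # ranges from 0 to pi/2
    return scores


# Hypothetical 3-category confusion matrix for one participant
matrix = [[10, 0, 0],
          [0, 8, 2],
          [0, 4, 6]]
print([round(s, 3) for s in arcsine_hu(matrix)])
```

Dividing by how often a response was used is what removes response bias: a listener who answers "anger" to everything would score a perfect raw hit rate for anger but a low Hu.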

Table 2. Mean arcsine-transformed Hu scores and SD for each emotion and averaged across all emotions.

To examine whether some emotions are easier to identify than others, a repeated-measures ANOVA was conducted, using a modified Bonferroni procedure to correct for multiple comparisons (Keppel, 1991). In this procedure, the modified alpha value is obtained by multiplying alpha by the degrees of freedom associated with the conditions tested and dividing the result by the number of planned comparisons. The Greenhouse-Geisser correction was applied to all repeated-measures effects with more than one degree of freedom in the numerator.
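The modified Bonferroni rule described above is a one-line calculation. The sketch below applies it to one illustrative case, all pairwise contrasts among six conditions (for example, the six gates used in Study 2), which yields the 0.017 value reported later. The text does not state exactly which comparisons entered each count, so the six-level example is an assumption; the function name `keppel_alpha` is hypothetical.

```python
from itertools import combinations


def keppel_alpha(alpha: float, n_levels: int, n_comparisons: int) -> float:
    """Modified Bonferroni alpha (Keppel, 1991):
    alpha * (degrees of freedom of the effect) / (number of planned comparisons)."""
    df_effect = n_levels - 1
    return alpha * df_effect / n_comparisons


# Example: all pairwise contrasts among six conditions
n_pairs = len(list(combinations(range(6), 2)))  # 15 comparisons
print(n_pairs, round(keppel_alpha(0.05, 6, n_pairs), 3))  # → 15 0.017
```

Compared with a standard Bonferroni correction (0.05 / 15 ≈ 0.0033 here), the modified rule is less strict because it credits the degrees of freedom of the omnibus effect.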

A significant main effect of Emotion was found, F(4.579, 366.301) = 104.179, p < 0.001, suggesting that some emotions are indeed recognized better than others. Post hoc comparisons revealed that all emotion contrasts were significant, with the exception of the contrasts between disgust and fear, disgust and neutral, and fear and neutral. As can be seen in Table 2, anger was the most accurately recognized emotion category, while happiness was the most poorly recognized.

Vocal Emotion Recognition and Personality

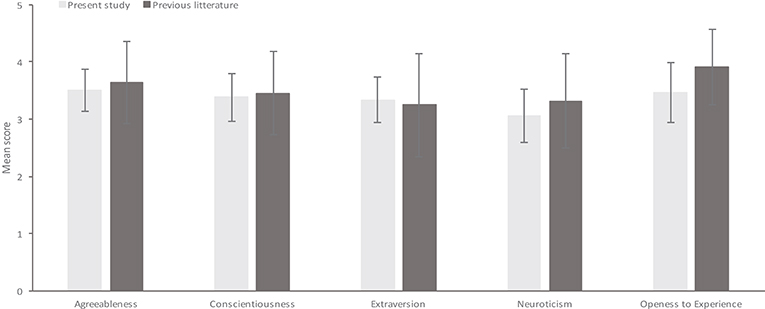

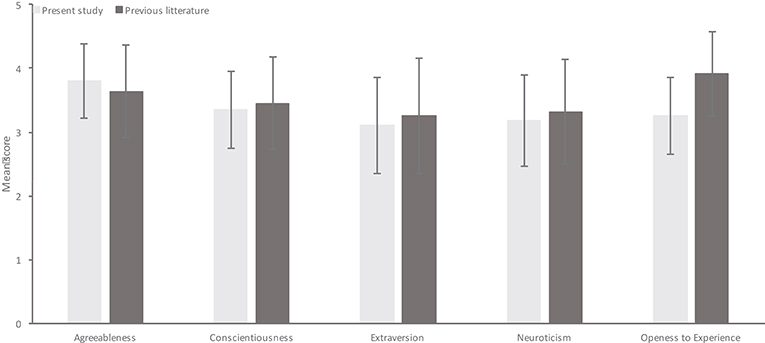

Means and standard deviations (SDs) were calculated for all five personality dimensions and compared with the values from the previous literature compiled by Srivastava et al. (2003). The present scores were considered a valid representation of this measure administered to a population sample (see Figure 1), although our SDs were slightly smaller in some instances.

Figure 1. A comparison of means and SDs from the present study for each BFI variable and means and SDs obtained for the same variables in previous research. Means from the previous literature are based on results reported by Srivastava et al. (2003), where a mean age of 20 years was used for comparison, as the mean age of the present sample was 19.5 years.

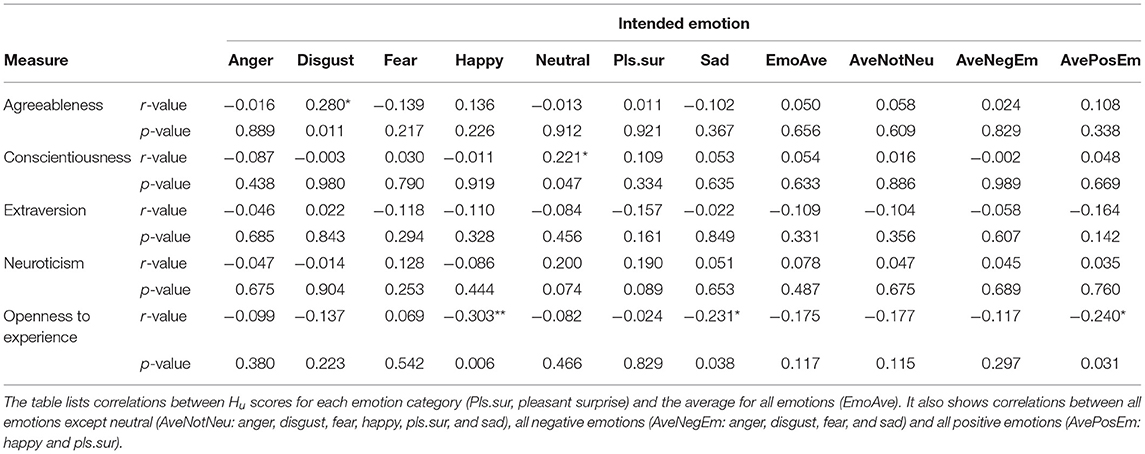

Pearson's correlations were conducted to examine the relationship between arcsine-transformed Hu scores and BFI scores (see Table 3). No significant relationship was found between overall emotion recognition and any of the Big Five personality traits. Similarly, extraversion was not correlated with recognition of positive emotions, nor was neuroticism correlated with recognition of negative emotions. However, a negative relationship was observed between recognition of positive emotions and openness to experience, r = −0.240, p = 0.031, indicating that individuals scoring lower on openness to experience were better at recognizing positive vocal emotions.
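The sketch below illustrates the analysis steps for the positive-emotion composite: average each participant's arcsine Hu scores for happiness and pleasant surprise, correlate the composite with a trait score, and convert r into the t statistic used for its significance test, t = r·√((n − 2)/(1 − r²)). Averaging the two categories into a composite is an assumption about how the composite was formed, and the data and function names are hypothetical. Plugging in the reported r = −0.240 with N = 81 gives t(79) ≈ −2.20, which is in line with the reported p = 0.031.

```python
import math
from statistics import mean


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation of two equal-length samples."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_from_r(r: float, n: int) -> float:
    """t statistic for H0: rho = 0, on n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1 - r ** 2))


# Hypothetical per-participant arcsine Hu scores and BFI openness scores
happy = [0.41, 0.55, 0.38, 0.62, 0.47]
surprise = [0.70, 0.81, 0.66, 0.90, 0.72]
openness = [3.9, 3.1, 4.2, 2.8, 3.6]

positive = [mean(pair) for pair in zip(happy, surprise)]  # composite score
r = pearson_r(positive, openness)
print(round(r, 3), round(t_from_r(r, len(positive)), 3))

# Reported Study 1 value: r = -0.240 with N = 81
print(round(t_from_r(-0.240, 81), 2))  # → -2.2
```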

Table 3. Study 1: Pearson's correlations (R-value) and their significance level (*P < 0.05) between Hu scores and the Big Five Inventory (BFI).

Discussion

Study 1 aimed to explore whether individual differences in personality traits could predict variation in vocal emotion perception. Group-level analyses of emotion perception replicated findings previously reported in the vocal emotion literature (e.g., see Scherer, 1989, for a review). However, no significant relationship was found between overall vocal emotion perception and any of the five personality dimensions, nor between extraversion and recognition of positive emotions or between neuroticism and recognition of negative emotions. The present study thus failed to support the predictions of the trait-congruency hypothesis. It should be noted, however, that previous findings are also only partially in line with trait-congruency predictions. For instance, Scherer and Scherer (2011) and Burton et al. (2013) suggest that extraverted individuals are better at vocal emotion recognition overall, but the latter study only found this effect in males. Moreover, Scherer and Scherer (2011) argued that neuroticism is linked to better overall recognition of vocal emotions, while Burton et al. (2013) and other studies failed to find this relationship (Cunningham, 1977; Terracciano et al., 2003; Banziger et al., 2009). Interestingly, a negative relationship was evident between openness to experience and recognition of positive emotions. Although this relationship was only evident for positive emotions, it is still surprising considering that Terracciano et al. (2003) reported a positive relationship between vocal emotion perception and openness to experience.

Overall, the present study did not confirm a pairwise linear relationship between overall emotion perception and specific personality traits, a finding supported by some previous studies (e.g., Cunningham, 1977; Banziger et al., 2009). However, it is still possible that individual differences in personality traits play a role in vocal emotion recognition; personality characteristics may influence how quickly rather than how accurately individuals process vocal emotions. Thus, Study 2 was designed to explore the temporal processing of vocal emotions and its potential relationship to personality traits.

Study 2

Study 2 is the first attempt to explore whether individual differences in personality traits influence the time course of vocal emotion processing. Specifically, Study 2 extends Study 1 by examining whether personality traits influence how quickly, rather than how accurately, different vocal emotion categories are identified. At the group level, we predicted that less acoustic information would be required to accurately identify anger, fear, sadness, and neutral utterances than utterances intoned in a happy or disgusted voice, in line with previous findings (e.g., Pell and Kotz, 2011). No clear predictions were made for the temporal unfolding of pleasant surprise, as this is, to our knowledge, the first study to examine this emotion category using a gating paradigm. Importantly, the study set out to test the trait-congruency hypothesis: are extraverted and neurotic individuals quicker at recognizing positive and negative emotions, respectively?

Methods

Participants

One hundred and one undergraduate Psychology students at the University of Essex (86 females; mean age: 19.4 years, SD: 2.45) participated as part of a module requirement and received credits in exchange for their participation. All participants gave written informed consent and reported normal or corrected-to-normal hearing and vision. As in Study 1, participants who self-reported experiencing a mental health disorder were excluded from the analysis, resulting in 83 participants (64 females) in the final analyses. Power analysis was conducted as for Study 1, and under the same criteria a sample of 83 was considered sufficient to detect a small-to-medium effect.

Materials

Semantically anomalous pseudo-utterances (e.g., “Klaff the frisp dulked lantary”; see Appendix B for the full list) spoken by a professional actress were selected from a previous inventory (Paulmann and Uskul, 2014). In the original study, average accuracy rates for these stimuli were much better than chance (14.2%), ranging from 55% (for happiness) to 91% (for neutral). From this inventory, 14 utterances were selected from the seven emotional categories (anger, disgust, fear, happy, neutral, pleasant surprise, sad). All utterances were seven syllables long and were edited into six gate intervals of increasing duration on a syllable-by-syllable basis using Praat (Boersma and Weenink, 2009; see Pell and Kotz, 2011, for a similar approach). Average gate duration was 260 ms, and full sentences were on average 2.2 s long. The first gate spanned two syllables, and each subsequent gate added one syllable until the utterance was complete (Gate 6). The same 14 utterances were presented in each of the six blocks, with gate length increasing from block to block, and utterances were presented in random order for each participant.
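The gate structure described above (a two-syllable first gate, then one additional syllable per gate, giving six gates for a seven-syllable utterance) can be expressed as a small calculation over syllable boundaries. The sketch below only computes where each gate would end; the actual cutting was done in Praat, and the syllable offset times and the function name `gate_offsets` are hypothetical.

```python
def gate_offsets(syllable_offsets_ms: list[float], first_gate_syllables: int = 2) -> list[float]:
    """End time (ms from utterance onset) of each gate.

    Gate 1 spans the first `first_gate_syllables` syllables; each later
    gate adds one syllable until the full utterance is reached.
    """
    n = len(syllable_offsets_ms)
    return [syllable_offsets_ms[k - 1] for k in range(first_gate_syllables, n + 1)]


# Hypothetical offsets (ms) of the seven syllables of one pseudo-utterance
offsets = [280, 610, 900, 1210, 1530, 1850, 2200]
print(gate_offsets(offsets))  # → [610, 900, 1210, 1530, 1850, 2200]
```

Because gates follow syllable boundaries rather than a fixed time step, gate durations differ across utterances, which is why identification points are later expressed in milliseconds per stimulus.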

The Big Five Inventory

The BFI was used to characterize individual personality traits, as described for Study 1.

Design

A cross-sectional design was used. For the correlational analyses, the predictor variables were identical to those in Study 1 (i.e., personality traits), while the criterion variables were recognition accuracy (and confidence ratings) at each gate interval and the identification point of the intended emotion (in ms). For the repeated-measures ANOVA, Emotion (seven levels: anger, disgust, fear, happy, neutral, pleasant surprise, and sad) and Gate (six levels: Gates 1 to 6) were treated as within-subject variables.

Procedure

The experimental procedure was identical to that of Study 1, except that participants now listened to the segments of each gate, or to the complete utterance (in the last block), rather than only to complete sentences. In addition, after categorizing each stimulus, participants indicated how confident they were that they had identified the correct emotion, on a scale from 1 (not confident at all) to 7 (very confident). This procedure was identical to that employed by Pell and Kotz (2011).

Results

Vocal emotion recognition

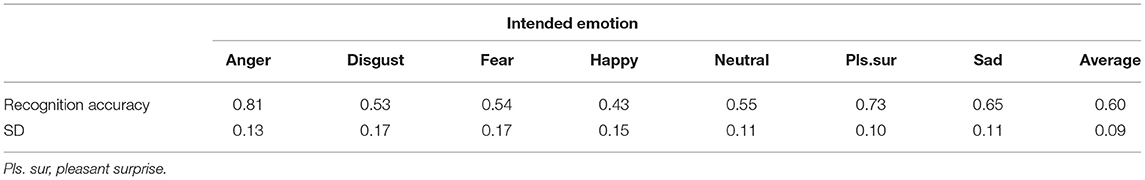

Again, unbiased hit rates were calculated and arcsine-transformed to control for response biases (Wagner, 1993; Appendix D tabulates raw hit rates together with error patterns). Arcsine-transformed Hu scores and SDs for each emotion category at each gate interval are presented in Table 4.

A repeated-measures ANOVA was used to examine how vocal emotion recognition unfolds over time. The significance level was again adjusted using Keppel's rule (adjusted alpha = 0.017; Keppel, 1991), and the Greenhouse-Geisser correction was applied. A significant main effect of Emotion was found, F(4.227, 346.654) = 242.097, p < 0.001, suggesting that emotion categories could be successfully distinguished from each other. Post hoc comparisons showed that all individual contrasts were significant except those between disgust and fear and between fear and pleasant surprise. As shown in Table 4, anger was again the most accurately recognized emotion, while happiness was the most poorly recognized. Additionally, a significant main effect of Gate was found, F(3.451, 282.972) = 112.928, p < 0.001, suggesting that recognition accuracy differed across gates. Post hoc comparisons revealed that recognition accuracy differed significantly between all gate intervals. Table 4 lists the overall mean recognition accuracy at each gate, showing that participants became better at recognizing emotional tone of voice with each successive gate.

A significant Gate × Emotion interaction was also found, F(19.285, 1581.383) = 11.809, p < 0.001, indicating that the change in recognition across gates differed between emotion categories. The interaction was unfolded by emotion, and post hoc comparisons revealed the following patterns. For angry stimuli, recognition rates improved with increasing gate duration (all ps < 0.001), except between Gates 3 and 4 (p = 0.068) and between Gates 5 and 6 (p = 0.025), where no significant improvements were observed. For disgust stimuli, recognition rates improved significantly across gates, except between Gates 4 and 5 (p = 0.109). For stimuli expressing fear, recognition rates did not change significantly after Gate 3 (all ps ≥ 0.060), i.e., participants did not recognize fear better at longer durations. Comparable findings were observed for happy stimuli, for which accuracy rates did not differ significantly between Gates 3 and 4, Gates 4 and 5, or Gates 5 and 6 (all ps ≥ 0.02). Similarly, recognition of neutral stimuli improved with increasing gate duration, except that recognition rates did not differ significantly between Gates 3 and 4, Gates 4 and 5, or Gates 5 and 6 (all ps ≥ 0.035). For pleasant surprise, recognition rates did not improve significantly from one gate to the next, as none of the contrasts between adjacent gates (Gates 1 and 2, 2 and 3, 3 and 4, 4 and 5, and 5 and 6) reached significance; still, recognition was better at Gate 6 than at Gate 1 (see Table 4), indicating that recognition improved with increasing exposure duration. Finally, for sadness, recognition improved with increasing stimulus duration, but comparisons between Gates 2 and 3, 3 and 4, 4 and 5, and 5 and 6 failed to reach significance (all ps ≥ 0.017).

Overall, the results showed that emotion recognition is generally easier when listening to longer vocal samples.

Vocal Emotion Processing and Personality

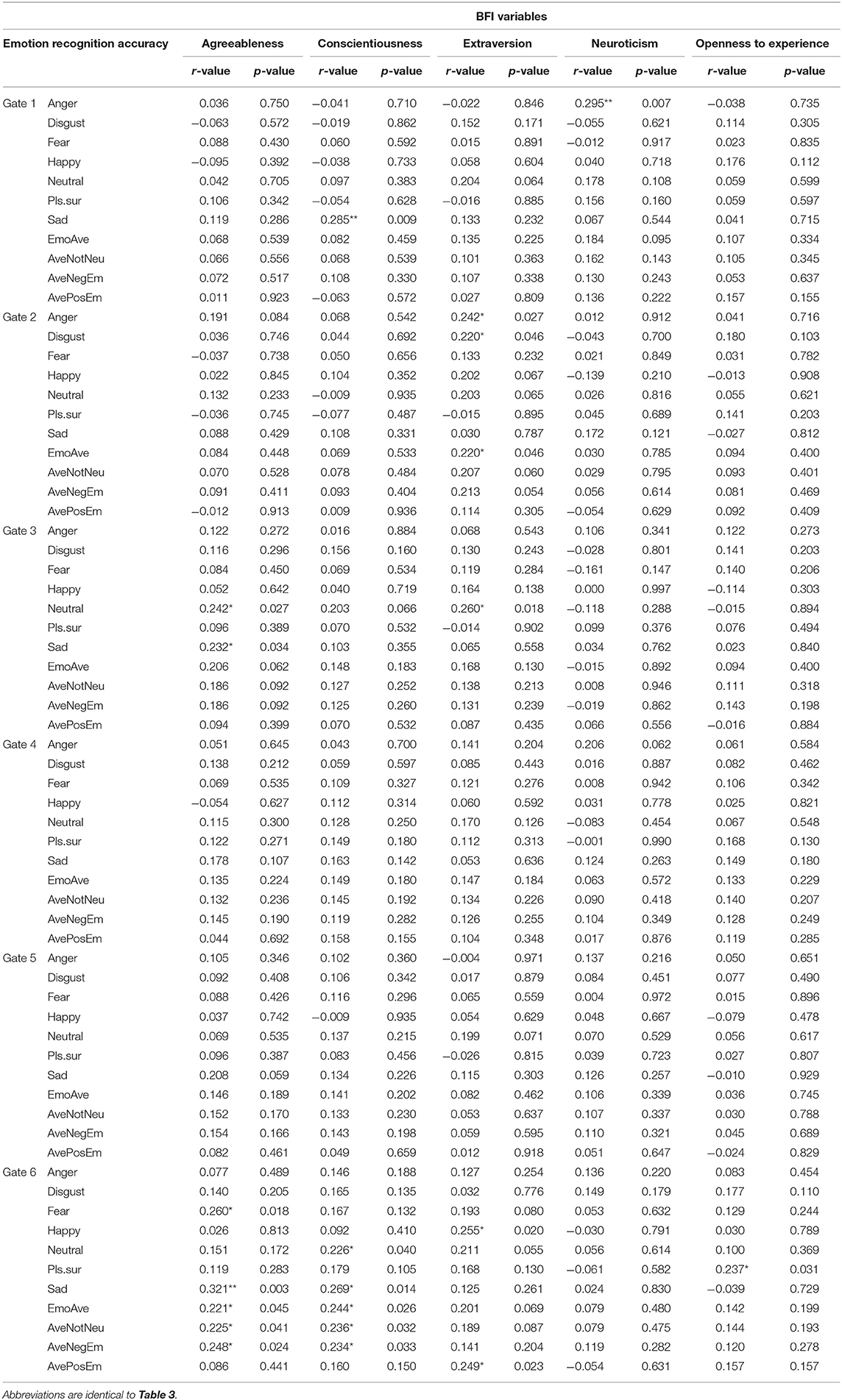

As in Study 1, means and SDs of all BFI variables were comparable to those reported in the previous literature for general population samples (see Figure 2). Pearson's correlations were then conducted to examine the relationship between arcsine-transformed Hu scores and BFI variables at each gate interval (see Table 5). Individuals scoring high on agreeableness and conscientiousness tended to show better overall recognition and better recognition of negative emotions at Gate 6, whereas extraverted individuals tended to show better recognition of positive emotions at this final gate. However, there were no clear and consistent trends between speed of recognition and BFI traits.

Figure 2. Means and SDs from the present study for each BFI variable, together with means and SDs obtained for the same variables in previous research. Means from the previous literature are based on results reported by Srivastava et al. (2003); data for age 20 were used, as in Study 1.

Table 5. Pearson's correlations (r-value) and their significance level (*p < 0.05, **p < 0.01) between Hu scores at individual gates and the Big Five Inventory (BFI).

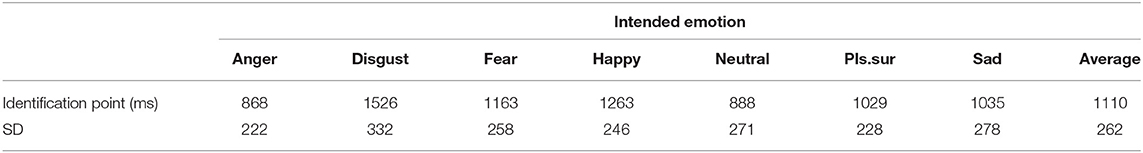

Importantly, the emotion identification point (EIP) was calculated for each emotion category to establish how much acoustic information listeners need to successfully identify the intended emotion. For each participant, the EIP was first calculated for each vocal stimulus and then averaged within each emotion category (see Jiang et al., 2015, for a similar procedure); these per-participant values were then averaged across participants. As seen in Table 6, anger and disgust are the emotion categories recognized most quickly and most slowly, respectively.
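The two-stage averaging described above can be sketched as follows. The input is assumed to be a list of per-stimulus identification points already expressed in milliseconds, one tuple per identified stimulus; how stimuli that were never identified are handled is not specified in the text, so this sketch simply assumes they are left out. The function name `mean_eip_by_emotion` and the trial data are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def mean_eip_by_emotion(trials):
    """trials: iterable of (participant, emotion, eip_ms), one per identified stimulus.

    Stage 1: average each participant's EIPs within each emotion.
    Stage 2: average those participant means across participants.
    """
    per_participant = defaultdict(list)
    for participant, emotion, eip_ms in trials:
        per_participant[(participant, emotion)].append(eip_ms)
    by_emotion = defaultdict(list)
    for (_participant, emotion), values in per_participant.items():
        by_emotion[emotion].append(mean(values))
    return {emotion: mean(values) for emotion, values in by_emotion.items()}


# Hypothetical per-stimulus identification points (ms)
trials = [
    ("p1", "anger", 800), ("p1", "anger", 900), ("p2", "anger", 1000),
    ("p1", "disgust", 1500), ("p2", "disgust", 1600), ("p2", "disgust", 1500),
]
print(mean_eip_by_emotion(trials))  # anger: 925 ms, disgust: 1525 ms
```

Averaging within participants first gives every listener equal weight. Pooling all anger trials directly would instead give 900 ms here, because participant p1 contributes two trials and p2 only one.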

Table 6. Identification points in milliseconds and SD for each emotion category and average identification point across all emotions.

A repeated-measures ANOVA was conducted to examine whether EIPs differed between emotion categories, with the significance level adjusted to 0.017 to correct for multiple comparisons (Keppel, 1991). A significant main effect of Emotion on EIP was found, F(5.318, 436.046) = 79.617, p < 0.001. Post hoc comparisons revealed that EIPs differed significantly between all emotion categories except for the contrasts between anger and neutral and between pleasant surprise and sadness. Anger (868 ms) and neutral (888 ms) were thus the emotion categories recognized most quickly, while disgust (1,526 ms) was recognized most slowly.

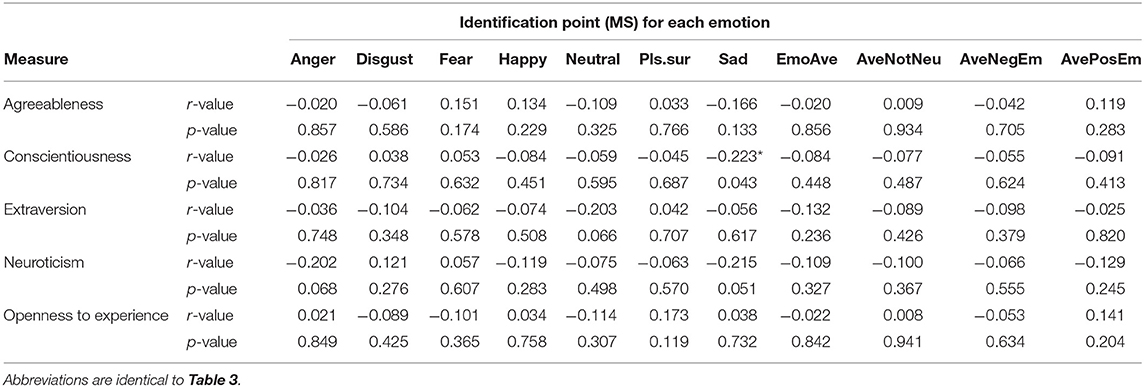

Pearson's correlations were used to examine the relationship between EIPs and BFI variables. Again, no clear trends appeared between overall EIPs and any of the BFI measures (see Table 7). Further, mean confidence ratings (on a 1–7 scale) and their SDs were calculated for each emotion category at each gate interval to assess how participants evaluated their own performance. Generally, confidence ratings increased as recognition accuracy increased, suggesting that listeners' confidence judgments are related to their actual vocal emotion recognition ability. However, confidence ratings were not related to personality traits (see Appendix E).

Table 7. Pearson's correlations (r-value) and their significance level (*p < 0.05) between identification point in ms and the Big Five Inventory (BFI).

Discussion

The overall aim of Study 2 was to explore whether individual differences in personality traits influence the recognition speed of vocal emotions. Firstly, group-level analyses replicated findings in the previous vocal emotion literature. Specifically, as in previous studies, recognition accuracy improved at successive gate intervals, and some emotion categories (e.g., anger and neutral) were recognized much more quickly than others (e.g., disgust and happiness; see Cornew et al., 2010; Pell and Kotz, 2011; Rigoulot et al., 2013). Overall recognition accuracy patterns were also comparable with those obtained in Study 1 (e.g., anger and happiness were recognized with the highest and lowest accuracy, respectively). Findings from both studies are thus well in line with the vocal emotion literature in general.

Secondly, in contrast to Study 1, extraverted individuals tended to be better at recognizing positive emotions at the final gate, while individuals scoring high on agreeableness and conscientiousness tended to be better at recognizing negative emotions and emotions overall at the final gate. It is unclear why these influences emerged in Study 2 but not in Study 1. One crucial difference between the two studies is the stimuli used: in Study 2, we presented materials that were spoken by a professional speaker and contained no semantic information. It could thus be argued that individual differences only appear when emotions are expressed in a highly prototypical way or when semantic information is absent. However, recognition rates were comparable across studies, suggesting that speaker differences did not strongly influence emotion recognition accuracy; it is thus less likely that the individual difference patterns are solely linked to speaker differences across studies. Rather, if personality traits reliably influenced the overall recognition of vocal emotions, this should have been evident in both Studies 1 and 2, which is not the case.

Importantly, the present study also failed to find a relationship between any of the personality traits and vocal emotion recognition speed. If the predictions of the trait-congruency hypothesis held, for instance, extraversion should have been associated with earlier EIPs for positive emotions, and neuroticism with earlier EIPs for negative emotions. In short, no evidence was found here to support the assumption that personality traits heavily influence the temporal processing of vocal emotions.

General Discussion

Based on the assumption that emotion processing is greatly influenced by personality characteristics (e.g., Davitz, 1964; Matsumoto et al., 2000; Hamann and Canli, 2004; Schirmer and Kotz, 2006), we designed two independent but related studies to explore how personality traits influence the accuracy and speed of vocal emotion recognition. We initially analyzed the data at a group level to ensure that the findings in both studies reflected the vocal emotion recognition literature in general.

Vocal Emotion Processing and the Influence of Individual Personality Traits

The overall aim of the present investigation was to explore whether personality traits could explain variation in vocal emotion processing. In both studies, the data collected provided a solid basis for exploring this relationship: the average scores on each personality dimension were representative of general findings in the personality literature, and the data on vocal emotion processing were also considered robust. Study 1 yielded an overall recognition accuracy of 55.3%, and Study 2 an overall recognition accuracy of 61.8% at the final gate. Whereas Study 1 presented materials from several untrained speakers, Study 2 employed materials from a professional female speaker. Thus, less speaker variability and potentially more prototypical portrayals of vocal emotions may have resulted in Study 2's higher average recognition accuracy. In both studies, recognition accuracy differed across emotions, with anger and happiness the most accurately and most poorly recognized emotions, respectively. These results are in line with previous findings (e.g., Scherer, 1989; Paulmann et al., 2016).

In Study 2, analyses of the time course of vocal emotion processing also showed that distinct emotion categories unfolded at different rates, suggesting that the amount of acoustic information required to identify the intended emotion differs between emotion categories. These emotion-specific recognition patterns were consistent with the previous literature (e.g., Pell and Kotz, 2011). Also, recognition accuracy improved at successive gate intervals, in line with previous research (e.g., Cornew et al., 2010; Pell and Kotz, 2011; Rigoulot et al., 2013). One limitation of the gating design used here is that segment duration increases across successive gates (in order to determine the recognition point of individual emotions). This may limit our ability to compare the recognition success of short vs. long speech segments, given that the longer segments were also heard after the shorter ones. To avoid this confound, future studies could present short and long segments of speech in random order to test whether longer gate durations indeed always lead to better recognition.
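The randomized alternative suggested above could be implemented by building every stimulus-by-gate segment once and shuffling the presentation order. A minimal sketch, with hypothetical stimulus IDs and function names, comparing it with the conventional successive order:

```python
import random


def successive_gating(stimuli, n_gates):
    """Conventional gating order: each stimulus from its shortest to its longest gate."""
    return [(stimulus, gate) for stimulus in stimuli for gate in range(1, n_gates + 1)]


def randomized_gating(stimuli, n_gates, seed=None):
    """The same stimulus-gate segments, presented in a fully shuffled order."""
    trials = successive_gating(stimuli, n_gates)
    random.Random(seed).shuffle(trials)
    return trials


sentences = ["s01", "s02", "s03"]  # hypothetical stimulus IDs
standard = successive_gating(sentences, 6)
shuffled = randomized_gating(sentences, 6, seed=1)
```

Full shuffling removes the fixed short-before-long order, but a listener may now hear a long segment of a sentence before a short one, so prior exposure still needs handling; one option would be to present different gates of the same sentence to different listeners.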

With regard to the relationship between vocal emotion processing and personality traits, we based our predictions on the trait-congruency hypothesis, which suggests that extraverted and neurotic individuals should display a bias toward processing positive and negative emotions, respectively (e.g., Gomez et al., 2002; Robinson et al., 2007). It was not possible to formulate specific predictions for the other personality dimensions because of the sparse and contradictory findings in the previous literature. We argued that, for a relationship between personality traits and vocal emotion processing to be considered robust, findings for overall recognition accuracy would have to replicate across the two studies.

Study 1 failed to support the predictions of the trait-congruency hypothesis. Interestingly, the only personality trait that seemed to influence recognition accuracy of positive emotions was openness to experience: individuals scoring lower on openness to experience were better at recognizing positive vocal emotions. However, this result runs opposite to results reported previously (Terracciano et al., 2003), which suggested a positive relationship between openness to experience and recognition of vocal emotions.

Similarly, Study 2 failed to find a significant relationship between personality traits and EIPs. Given the adequate sample sizes, this suggests that individual variation in the accuracy and speed of vocal emotion recognition cannot be clearly predicted by personality traits. A positive relationship was found between extraversion and recognition of positive emotions at the final gate, suggesting that extraverted individuals are better at recognizing positive emotions overall. This finding was surprising, however, as Study 1 failed to find a relationship between extraversion and better recognition of positive emotions. Similarly, at Gate 6, agreeableness and conscientiousness were associated with better overall vocal emotion recognition and better recognition of negative emotions, but again, these findings were not reflected at other gates and are not consistent with the EIP results or with the results of Study 1.

Our findings are in line with previous studies that also failed to find a significant relationship between emotion perception and personality traits (e.g., Elfenbein et al., 2007; Banziger et al., 2009). Although more studies report a significant relationship (e.g., Cunningham, 1977; Scherer and Scherer, 2011; Burton et al., 2013) than report no relationship, the inconsistency still raises the question of why results do not reliably replicate. One possibility, of course, is that samples are not comparable across studies. Here, we tried to address this concern by comparing the average scores for each personality dimension to general findings in the personality literature, and we considered our average scores to be comparable. Additionally, it can be argued that observational study designs capture only a restricted range of scores (i.e., the scores that are most dense in the population, often mid-range scores), whereas significant relationships may only be observed when extremes from either end of the scale are included (which can easily be achieved in experimental designs). While this may be true, data from observational designs would still be valid with regard to their typicality in the population. That is, if a restricted range leads to non-significant findings while data including more extreme scores lead to a significant finding, the relationship between personality traits and vocal emotion recognition would still be overemphasized for the general population.
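The range-restriction argument can be illustrated with a small simulation. It assumes, purely for illustration, a population correlation of 0.3 between a standardized trait score and recognition accuracy, and compares the sample correlation in the full simulated sample with that in a subsample limited to mid-range trait scores (|z| < 0.5). All values and names are hypothetical and are not estimates from either study.

```python
import math
import random


def pearson_r(x, y):
    """Sample Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simulate(rho=0.3, n=20000, band=0.5, seed=7):
    """Correlation in a full sample vs. a sample restricted to mid-range trait scores."""
    rng = random.Random(seed)
    trait, accuracy = [], []
    for _ in range(n):
        t = rng.gauss(0, 1)
        # Accuracy is built to correlate with the trait at population value rho.
        a = rho * t + math.sqrt(1 - rho ** 2) * rng.gauss(0, 1)
        trait.append(t)
        accuracy.append(a)
    middle = [(t, a) for t, a in zip(trait, accuracy) if abs(t) < band]
    r_full = pearson_r(trait, accuracy)
    r_restricted = pearson_r([t for t, _ in middle], [a for _, a in middle])
    return r_full, r_restricted


r_full, r_restricted = simulate()
```

The full-sample estimate lands near 0.3, while the restricted estimate is much smaller even though the underlying relationship is identical; this is the sense in which a sample of mostly mid-range scorers can miss a relationship that an extreme-groups design would detect.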

It is also worth noting that studies finding a significant relationship between personality traits and emotion perception tend to provide an explanation for why this relationship is evident. For example, Cunningham (1977) argues that neuroticism enhances emotion perception because discomfort is a motivating factor to perceive emotions, whereas Scherer and Scherer (2011), who found the opposite pattern, argue that neurotic and anxious individuals might pay less attention to emotional cues from others. Thus, it seems easy to find plausible explanations, irrespective of the direction of the relationship. Future research should first focus on the discrepant results obtained in the personality and vocal emotion literature, and then try to gain a better understanding of the underlying reasons for the potential relationship(s).

If there is no clear and strong relationship between individual differences in personality traits and emotion processing, at least in the vocal domain, this could explain why findings in the previous literature are so contradictory. Because null results are difficult to publish, we would argue that at least some of the previous significant findings may reflect chance findings. This hypothesis is supported by the fact that published studies showing null results often report them alongside other, significant results. For example, Elfenbein et al. (2007) focused mainly on the relationship between facial emotion recognition and negotiation effectiveness, arguing that better facial emotion recognition could indeed influence negotiation performance. Personality variables were also correlated with facial emotion recognition in that study, and the absence of any relationship was reported as a secondary, null finding.
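The chance-finding argument rests on simple arithmetic: when many trait-by-emotion correlations are each tested at α = 0.05 and none reflects a true effect, some "significant" results are still expected. The sketch below assumes independent tests (correlated tests, as trait and emotion scores usually are, change the exact figure) and a hypothetical number of tests; the function names are illustrative.

```python
def familywise_error_rate(n_tests, alpha=0.05):
    """P(at least one p < alpha) across independent tests when every null is true."""
    return 1 - (1 - alpha) ** n_tests


def expected_false_positives(n_tests, alpha=0.05):
    """Expected number of significant results when every null is true."""
    return n_tests * alpha


# Hypothetical: 5 BFI traits correlated with 7 emotion-specific scores.
tests = 5 * 7
fwer = familywise_error_rate(tests)        # about 0.83
false_hits = expected_false_positives(tests)  # about 1.75
```

Under these assumptions a study is more likely than not to report at least one trait-emotion correlation by chance, which fits the pattern of scattered, non-replicating significant effects described above.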

A limitation of the current investigation is the unequal male–female ratio in both Studies 1 and 2. Similar to other studies (e.g., Burton et al., 2013), our opportunity sampling resulted in a higher number of female participants. To address this limitation and to provide food for thought for future studies, we conducted post hoc correlational analyses between personality traits and overall recognition accuracy for female and male participants separately in both studies. Like Burton et al. (2013), we failed to find reliable effects in our female sample. However, Burton et al. (2013) reported a significant relationship between extraversion and conscientiousness and better vocal emotion recognition in male participants. Our current sample was too small to comment reliably on this relationship; yet it may be of interest to some readers that we found a significant relationship between conscientiousness and overall emotion recognition among male participants in Study 1 (r = 0.512, p = 0.021). No other effects were found in Study 1 or 2. Thus, it seems possible that previously reported significant associations between personality traits and emotion recognition (e.g., Terracciano et al., 2003; Scherer and Scherer, 2011) may have been driven predominantly by one gender. Similarly, studies that fail to report significant associations might have overlooked relationships by collapsing across male and female participants. Future studies with larger and more balanced samples should therefore continue to explore how gender differences potentially influence the relationship between personality traits and vocal emotion processing. This would allow effects to be disentangled further, and it is important that future studies examine these points comprehensively and systematically, to ensure that significant findings replicate across different materials and different individuals when the same personality questionnaires and research designs are used.

Concluding Thoughts

These studies used sample sizes that were supported by a power calculation as well as by previous studies that report relationships with even smaller samples (e.g., Scherer and Scherer, 2011; Burton et al., 2013). We also controlled for confounding variables by using the same measurement tool (i.e., the BFI) consistently across both studies, and by exploring the effects of speaker variability and differences in sentence stimuli. Although the data on personality traits and vocal emotion processing were representative of findings in the personality and vocal emotion recognition literature in general, no pairwise linear relationship between personality traits and emotion categories was identified. Taken together, these data suggest that the role of personality in vocal emotion processing has been overemphasized in the past. Crucially, relationships between individual differences and emotional tone of voice seem to be more complex than previously assumed. We thus encourage future studies to explore this complex relationship in more detail.
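The parameters of the power calculation are not given in this section (G*Power; Faul et al., 2007, appears in the reference list). For readers planning a replication, the sketch below estimates the sample size needed to detect a Pearson correlation with a two-tailed test using the Fisher z approximation. The effect size r = 0.3, α = 0.05 and power = 0.80 are assumed values, and exact methods such as G*Power's can differ from this approximation by a participant or two.

```python
import math
from statistics import NormalDist


def n_for_correlation(r, alpha=0.05, power=0.80):
    """Approximate N to detect correlation r (two-tailed) via Fisher's z.

    N = ((z_{1 - alpha/2} + z_{power}) / atanh(r)) ** 2 + 3, rounded up.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    effect = math.atanh(r)
    return math.ceil(((z_alpha + z_beta) / effect) ** 2 + 3)


n_needed = n_for_correlation(0.3)  # 85 with this approximation
```

Because the required N grows with 1 / atanh(r) squared, halving the assumed correlation roughly quadruples the sample needed, which is one reason small true effects are hard to replicate reliably.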

Ethics Statement

This study was carried out in accordance with the recommendations of the University of Essex Science and Health Faculty Ethics Sub-committee. All subjects gave written informed consent in accordance with the Declaration of Helsinki. The protocol was approved by the University of Essex Science and Health Faculty Ethics Sub-committee.

Author Contributions

DF worked on data collection and data analysis, and prepared the draft of the manuscript. HB worked on data analysis and the manuscript draft. RM worked on the manuscript draft. SP designed and programmed the experiments, oversaw the project, and worked on data analysis and the manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2019.00184/full#supplementary-material

References

Airas, M., and Alku, P. (2006). Emotions in vowel segments of continuous speech: analysis of the glottal flow using the normalised amplitude quotient. Phonetica 63, 26–46. doi: 10.1159/000091405

Banse, R., and Scherer, K. R. (1996). Acoustic profiles in vocal emotion expression. J. Pers. Soc. Psychol. 70, 614–636. doi: 10.1037/0022-3514.70.3.614

Banziger, T., Grandjean, D., and Scherer, K. R. (2009). Emotion recognition from expressions in face, voice, and body: the Multimodal Emotion Recognition Test (MERT). Emotion 9, 691–704. doi: 10.1037/a0017088

Banziger, T., and Scherer, K. R. (2005). The role of intonation in emotional expressions. Speech Commun. 46, 252–267. doi: 10.1016/j.specom.2005.02.016

Boersma, P., and Weenink, D. (2009). Praat: doing phonetics by computer [Computer program]. Version 5.1.25. Available online at: http://www.praat.org/

Burton, L., Bensimon, E., Allimant, J. M., Kinsman, R., Levin, A., Kovacs, L., et al. (2013). Relationship of prosody perception to personality and aggression. Curr. Psychol. 32, 275–280. doi: 10.1007/s12144-013-9181-6

Cornew, L., Carver, L., and Love, T. (2010). There's more to emotion than meets the eye: a processing bias for neutral content in the domain of emotional prosody. Cogn. Emot. 24, 1133–1152. doi: 10.1080/02699930903247492

Costa Jr, P. T., and McCrae, R. R. (1995). Domains and facets: hierarchical personality assessment using the revised NEO personality inventory. J. Pers. Assess. 64, 21–50. doi: 10.1207/s15327752jpa6401_2

Costa, P., and McCrae, R. (1989). NEO Five-Factor Inventory (NEO-FFI). Odessa, FL: Psychological Assessment Resources.

Costa, P. T., and McCrae, R. R. (1992). Normal personality assessment in clinical practice: the NEO personality inventory. Psychol. Assess. 4, 5–13. doi: 10.1037/1040-3590.4.1.5

Cunningham, R. M. (1977). Personality and the structure of the nonverbal communication of emotion. J. Person. 45, 564–584. doi: 10.1111/j.1467-6494.1977.tb00172.x

Elfenbein, H. A., Foo, M. D., White, J., Tan, H. H., and Aik, V. C. (2007). Reading your counterpart: the benefit of emotion recognition accuracy for effectiveness in negotiation. J. Nonverbal Behav. 31, 205–223. doi: 10.1007/s10919-007-0033-7

Faul, F., Erdfelder, E., Lang, A. G., and Buchner, A. (2007). G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191. doi: 10.3758/BF03193146

Goldberg, L. R. (1992). The development of markers for the big-five factor structure. Psychol. Assess. 4, 26–42. doi: 10.1037/1040-3590.4.1.26

Gomez, R., Gomez, A., and Cooper, A. (2002). Neuroticism and extraversion as predictors of negative and positive emotional information processing: comparing Eysenck's, Gray's, and Newman's theories. Eur. J. Personal. 16, 333–350. doi: 10.1002/per.459

Graham, C. R., Hamblin, A. W., and Feldstein, S. (2001). Recognition of emotion in English voices by speakers of Japanese, Spanish and English. Int. Rev. Appl. Ling. Lang. Teach. 39, 19–37. doi: 10.1515/iral.39.1.19

Hamann, S., and Canli, T. (2004). Individual differences in emotion processing. Curr. Opin. Neurobiol. 14, 233–238. doi: 10.1016/j.conb.2004.03.010

Jiang, X., Paulmann, S., Robin, J., and Pell, M. D. (2015). More than accuracy: nonverbal dialects modulate the time course of vocal emotion recognition across cultures. J. Exp. Psychol. Hum. Percept. Perform 41, 597–612. doi: 10.1037/xhp0000043

John, O. P., Donahue, E. M., and Kentle, R. L. (1991). The Big Five Inventory–Versions 4a and 54. Berkeley, CA: University of California, Berkeley; Institute of Personality and Social Research.

John, O. P., Neumann, L. P., and Soto, C. J. (2008). “Paradigm shift to the integrative big-five trait taxonomy: history, measurement, and conceptual issues,” in Handbook of Personality: Theory and Research, eds O. P. John, R. W. Robins and L. A. Pervin (New York, NY: Guilford Press), 114–158.

Juslin, P. N., and Laukka, P. (2001). Impact of intended emotion intensity on cue utilization and decoding accuracy in vocal expression of emotion. Emotion 1, 381–412. doi: 10.1037/1528-3542.1.4.381

Kohler, C. G., Turner, T. H., Bilker, W. B., Brensinger, C. M., Siegel, S. J., Kanes, S. J., et al. (2003). Facial emotion recognition in schizophrenia: intensity effects and error pattern. Am. J. Psychiatry 160, 1768–1774. doi: 10.1176/appi.ajp.160.10.1768

Larsen, R. J., and Ketelaar, T. (1989). Extraversion, neuroticism and susceptibility to positive and negative mood induction procedures. Person. Individ. Diff. 10, 1221–1228. doi: 10.1016/0191-8869(89)90233-X

Leppanen, J. M., Milders, M., Bell, J. S., Terriere, E., and Hietanen, J. K. (2004). Depression biases the recognition of emotionally neutral faces. Psychiatry Res. 128, 123–133. doi: 10.1016/j.psychres.2004.05.020

Matsumoto, D., LeRoux, J., Wilson-Cohn, C., Raroque, J., Kooken, K., Ekman, P., et al. (2000). A new test to measure emotion recognition ability: Matsumoto and Ekman's Japanese and Caucasian Brief Affect Recognition Test (JACBART). J. Nonverbal Behav. 24, 179–209. doi: 10.1023/A:1006668120583

Paulmann, S., Furnes, D., Bokenes, A. M., and Cozzolino, P. J. (2016). How psychological stress affects emotional prosody. PLoS ONE 11:e0165022. doi: 10.1371/journal.pone.0165022

Paulmann, S., and Uskul, A. K. (2014). Cross-cultural emotional prosody recognition: evidence from Chinese and British listeners. Cogn. Emot. 28, 230–244. doi: 10.1080/02699931.2013.812033

Pell, M. D., and Kotz, S. A. (2011). On the time course of vocal emotion recognition. PLoS ONE 6:e27256. doi: 10.1371/journal.pone.0027256

Rigoulot, S., Wassiliwizky, E., and Pell, M. D. (2013). Feeling backwards? How temporal order in speech affects the time course of vocal emotion recognition. Front. Psychol. 4:367. doi: 10.3389/fpsyg.2013.00367

Robinson, M. D., Ode, S., Moeller, S. K., and Goetz, P. W. (2007). Neuroticism and affective priming: evidence for a neuroticism-linked negative schema. Pers. Individ. Dif. 42, 1221–1231. doi: 10.1016/j.paid.2006.09.027

Rubin, R. S., Muntz, D. C., and Bommer, W. H. (2005). Leading from within: the effects of emotion recognition and personality on transformational leadership behavior. Acad. Manag. J. 48, 845–858. doi: 10.5465/amj.2005.18803926

Rusting, C. L. (1998). Personality, mood, and cognitive processing of emotional information: three conceptual frameworks. Psychol. Bull. 124, 165–196. doi: 10.1037/0033-2909.124.2.165

Scherer, K. R. (1989). Emotion psychology can contribute to psychiatric work on affect disorders: a review. J. R. Soc. Med. 82, 545–547. doi: 10.1177/014107688908200913

Scherer, K. R. (1995). Expression of emotion in voice and music. J. Voice 9, 235–248. doi: 10.1016/S0892-1997(05)80231-0

Scherer, K. R., and Scherer, U. (2011). Assessing the ability to recognize facial and vocal expressions of emotion: construction and validation of the emotion recognition index. J. Nonverbal Behav. 35, 305–326. doi: 10.1007/s10919-011-0115-4

Schirmer, A., and Kotz, S. A. (2006). Beyond the right hemisphere: brain mechanisms mediating vocal emotional processing. Trends Cogn. Sci. 10, 24–30. doi: 10.1016/j.tics.2005.11.009

Srivastava, S., John, O. P., Gosling, S. D., and Potter, J. (2003). Development of personality in early and middle adulthood: set like plaster or persistent change? J. Personal. Soc. Psychol. 84:1041. doi: 10.1037/0022-3514.84.5.1041

Terracciano, A., Merritt, M., Zonderman, A. B., and Evans, M. K. (2003). Personality traits and sex differences in emotion recognition among African Americans and Caucasians. Ann. N.Y. Acad. Sci. 1000, 309–312. doi: 10.1196/annals.1280.032

Toivanen, J., Waaramaa, T., Alku, P., Laukkanen, A. M., Seppanen, T., Vayrynen, E., et al. (2006). Emotions in [a]: a perceptual and acoustic study. Logoped Phoniatr. Vocol. 31, 43–48. doi: 10.1080/14015430500293926

Unoka, Z., Fogd, D., Fuzy, M., and Csukly, G. (2011). Misreading the facial signs: specific impairments and error patterns in recognition of facial emotions with negative valence in borderline personality disorder. Psychiatry Res. 189, 419–425. doi: 10.1016/j.psychres.2011.02.010

Wagner, H. L. (1993). On measuring performance in category judgment studies of nonverbal behavior. J. Nonverbal Behav. 17, 3–28. doi: 10.1007/BF00987006

Keywords: emotional prosody, personality traits, emotional recognition accuracy, emotional recognition speed, tone of voice, vocal emotion

Citation: Furnes D, Berg H, Mitchell RM and Paulmann S (2019) Exploring the Effects of Personality Traits on the Perception of Emotions From Prosody. Front. Psychol. 10:184. doi: 10.3389/fpsyg.2019.00184

Received: 20 September 2018; Accepted: 18 January 2019;

Published: 12 February 2019.

Edited by:

Maurizio Codispoti, University of Bologna, Italy

Reviewed by:

Ming Lui, Hong Kong Baptist University, Hong Kong

Andrea De Cesarei, University of Bologna, Italy

Copyright © 2019 Furnes, Berg, Mitchell and Paulmann. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Silke Paulmann, paulmann@essex.ac.uk
