- ¹Kokoro Research Center, Kyoto University, Kyoto, Japan

- ²Swiss Center for Affective Sciences, University of Geneva, Geneva, Switzerland

- ³Human Behaviour Analysis Laboratory, Department of Psychology, University of Geneva, Geneva, Switzerland

- ⁴Institut des Systèmes Intelligents et de Robotique (ISIR), Université Pierre et Marie Curie/Centre National de la Recherche Scientifique (CNRS), Paris, France

Researchers have theoretically proposed that humans decode other individuals' emotions or elementary cognitive appraisals from particular sets of facial action units (AUs). However, only a few empirical studies have systematically tested the relationships between the decoding of emotions/appraisals and sets of AUs, and their results are mixed. Furthermore, previous studies relied on actors' facial expressions, and no study has used spontaneous, dynamic facial expressions in naturalistic settings. We investigated this issue using video recordings of facial expressions filmed unobtrusively in a real-life emotional situation, specifically the loss of luggage at an airport. The AUs observed in the videos were annotated using the Facial Action Coding System. Male participants (n = 98) were asked to decode emotions (e.g., anger) and appraisals (e.g., suddenness) from the facial expressions. We explored the relationships between emotion/appraisal decoding and AUs using stepwise multiple regression analyses. The results revealed that all the rated emotions and appraisals were associated with sets of AUs. The profiles of the regression equations included AUs both consistent and inconsistent with those in theoretical proposals. The results suggest that (1) the decoding of emotions and appraisals in facial expressions is implemented by the perception of sets of AUs, and (2) the profiles of such AU sets could differ from those proposed by previous theories.

Introduction

Reading other individuals' emotions from their facial expressions is an important skill in managing our social relationships. Researchers have postulated that emotional categories (e.g., anger) (Ekman and Friesen, 1978, 1982) or elementary components of emotions, such as cognitive appraisals (e.g., suddenness) (Scherer, 1984; Smith and Scott, 1997), can be decoded based on the recognition of specific sets of facial movements (Tables A1, A2 in Supplementary Material). For example, Ekman and Friesen (1978) proposed that specific sets of facial action units (AUs), which can be coded with the Facial Action Coding System (FACS; Ekman et al., 2002), could signal particular emotions, and they specified the AU set required for each emotion. In the case of sadness, for instance, the set includes inner eyebrows raised (AU 1) and drawn together (AU 4), and lip corners pulled down (AU 15) (Ekman and Friesen, 1975). Scherer (1984), on the other hand, proposed that sets of AUs could signal cognitive appraisals. These researchers developed their proposals from earlier theories and findings as well as their own intuitions (Ekman, 2005).

However, only a few empirical studies have systematically tested the theoretical predictions about the relationships between the decoding of emotional categories or cognitive appraisals and AU sets, and these studies did not provide clear supporting evidence (Galati et al., 1997; Kohler et al., 2004; Fiorentini et al., 2012; Mehu et al., 2012). For example, Kohler et al. (2004) investigated how participants categorized four emotions expressed by actors. Based on the results of this decoding study, the authors described the facial AUs necessary for recognizing high-intensity happy, sad, angry, and fearful expressions. The analysis showed that each of the four emotions could be identified from a set of AUs that was characteristic of the target emotion and distinct from those of the other three emotions. However, the AU profiles were only partially consistent with theoretical predictions. For example, the brow lowerer (AU 4) was associated with the decoding of sadness, but the inner brow raiser (AU 1) and the lip corner depressor (AU 15) were not. In short, these studies showed that the decoding of emotions and appraisals in facial expressions was associated with sets of AUs, but the AU profiles were only partially consistent with the theoretical predictions.

Furthermore, none of the aforementioned studies evaluated spontaneous emotional expressions in naturalistic settings. Facial displays encountered in everyday situations are highly variable and include blends of emotions (Scherer and Ellgring, 2007; Calvo and Nummenmaa, 2015), and spontaneous behavior is more ambiguous (e.g., Yik et al., 1998). This issue is particularly important because the behaviors we see in real-life emotional situations are often not the prototypical ones described in the literature: they vary widely in their co-occurring facial movements and are sometimes subtle, with rare and low-intensity facial actions (e.g., see Hess and Kleck, 1994; Russell and Fernández-Dols, 1997).

In this study, we investigated whether and how sets of facial actions are associated with the decoding of emotions and appraisals in spontaneous facial expressions in a naturalistic setting. As stimuli, we used unobtrusive recordings from a hidden camera showing face-to-face interactions of passengers claiming the loss of their luggage at an airport (Scherer and Ceschi, 1997, 2000). All AUs in the passengers' facial expressions were first coded using FACS (Ekman et al., 2002). We then asked participants to rate seven emotions, two positive (joy, relief) and five negative (anger, sadness, contempt, fear, shame), as well as six appraisals: suddenness, goal obstruction, importance and relevance, coping potential, external norm violation, and internal norm violation. Surprise was not included because some previous studies (Kohler et al., 2004; Mehu et al., 2012) showed no agreement regarding its valence (Fontaine et al., 2007; Reisenzein and Meyer, 2009; Reisenzein et al., 2012; Topolinski and Strack, 2015) and described its duration as shorter than that of other emotions, suggesting that surprise may differ in nature from the other emotions studied (Reisenzein et al., 2012). We explored the relationships between emotion/appraisal decoding and facial actions using stepwise multiple regression analyses. We expected sets of facial actions to enable the decoding of emotions and appraisals (Ekman, 1992; Scherer and Ellgring, 2007). We did not formulate predictions about the AUs in each set, given the lack of earlier decoding studies using data from naturalistic settings.

Materials and Methods

Participants

One hundred and twenty-two students from a French technical university took part in the study. A psychologist conducted a short interview with the participants and found that the women, a minority in this technical school, formed a non-homogeneous population (wide age range, reported psychological history, and substance intake). Therefore, only data from male students were included in the analysis (n = 98; age range 17–25 years; mean ± SD = 19.0 ± 1.5). The interview did not reveal any neuropsychiatric or psychological history in any of these male participants. All participants provided written informed consent prior to participation and were debriefed after the study. The study was approved by the University of Geneva ethics committee and conducted in accordance with the approved guidelines.

Stimuli

Our data rely on unobtrusive recordings from a hidden camera showing face-to-face interactions of passengers claiming the loss of their luggage at an airport (Scherer and Ceschi, 1997, 2000). The aim of such a naturalistic corpus was to obtain dynamic, non-acted expressions, including atypical and subtle facial displays.

Videos from this Lost Luggage corpus frame the passenger's head and torso, with a reduced-size video of the hostess's face shown in the right corner (see Figure 1 for a schematic representation of the stimuli).

Figure 1. Schematic illustration of stimuli: frame presenting a passenger claiming the loss of luggage to a hostess. Actual stimuli were real-life dynamic videos.

The original corpus consisted of 1-min video clips (16-bit color) that had not been cut to depict only one mental state per segment. Therefore, the first task was to segment emotional extracts, i.e., to define when each emotional state starts and ends.

We asked laypersons to watch the clips, mark in time all mental states, and point out state changes. The task was explained in written guidelines, which were also read aloud to make sure that the participants considered all the provided examples carefully.

The judges were told that their task was to indicate changes between different mental states of one person and that each mental state could be made up of several affects occurring at the same time, e.g., a mental state composed of 50% joy and 50% guilt. For each state, they had to select a period of time (by indicating a start and an end time) and to define the mental state. To avoid steering the judges toward a particular theoretical framework, the guidelines provided examples of action tendencies, motivational changes, appraisal attributions, and emotional labels. Judges were told orally that the focus was on the “internal states” of passengers who had lost their luggage and that the films came from a hidden camera at an airport. They were also told that a single video clip could show a passenger displaying several mental states and moments of neutrality, and that they had to indicate all of them. They could describe what they saw in sentences, expressions, or labels, either orally (transcribed by the experimenter), in writing on paper, or directly in the ANVIL software, with which they were assisted (ANVIL, Video Annotation Research Tool. http://www.dfki.de/kipp/anvil/).

Seven laypeople, administrative staff from the technical university, were invited to act as judges for the task. The first two judges to participate (an account officer and a junior secretary) found the task extremely difficult. They gave the following reasons:

- it is impossible to say that a state is changing;

- it could be possible to point out that there is an emotion such as anger in a video, but not to point to a specific time;

- defining what the passengers feel or what state they are in, without being guided by specific emotional labels, is difficult.

A third judge reported that the observed passengers were talking and not experiencing any affects or changes in mental state, and that it was therefore impossible to complete the task.

Consequently, we assigned the procedure to individuals whom we expected to complete the task more easily, namely individuals who had developed some acuity in the perception of facial expressions. Three individuals were recruited on the basis of their professional activity (virtual character synthesis, facial graphics, FACS coding) and one for his interest in nonverbal communication and social cognition. All four individuals, whom we call “expert judges,” understood the task immediately after reading the guidelines.

Each clip was annotated by three expert judges.

In case of ambiguity, for example when one of the three experts perceived fewer changes in a clip than the other experts and therefore marked a longer segment, we left out the segment that was not agreed upon. In other words, whenever possible, the clip was recut to eliminate the moments that led to disagreement, and only moments that the judges agreed displayed a single state were kept. If a state that started during a movement or a sentence was preceded by a neutral phase, 1 to 1.5 s could be added to the beginning of the chosen segment to show the development of the movement.

In two cases in which ambiguity did not allow easy and straightforward cutting even with this restrictive procedure, a fourth expert judge was asked to annotate the video clips. In both cases, two judges had annotated long segments and one judge a much shorter segment. For both videos, the fourth judge's segmentation was very similar to the short segmentation. We therefore followed this restrictive segmentation, as it allowed mental states to be defined, extracted, and presented in separate clips.
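The consensus rule described above can be sketched, in simplified form, as an interval intersection: the retained segment is the stretch that every judge assigned to the same state, optionally extended backward by up to 1.5 s when the state follows a neutral phase. The function name `consensus_segment`, the `(start, end)` representation in seconds, and the `lead_in` and `clip_start` parameters are illustrative assumptions, not part of the original procedure or of ANVIL.

```python
from typing import Optional, Sequence, Tuple

Segment = Tuple[float, float]  # (start, end) in seconds


def consensus_segment(
    judge_segments: Sequence[Segment],
    lead_in: float = 0.0,
    clip_start: float = 0.0,
) -> Optional[Segment]:
    """Return the span that every judge assigned to the same mental state.

    Hypothetical helper: the agreed span is the intersection of all judges'
    segments. `lead_in` (e.g., 1.0 to 1.5 s) optionally moves the start earlier
    so that the onset of a movement following a neutral phase stays visible.
    """
    if not judge_segments:
        return None
    start = max(s for s, _ in judge_segments)  # latest start among judges
    end = min(e for _, e in judge_segments)    # earliest end among judges
    if start >= end:
        return None  # no moment on which all judges agree
    # Pad backward, but never before the beginning of the clip.
    return (max(clip_start, start - lead_in), end)


# Two judges mark long segments, the third a much shorter one.
print(consensus_segment([(3.0, 25.0), (2.5, 26.0), (8.0, 20.0)], lead_in=1.5))
# (6.5, 20.0)
```

Taking the intersection mirrors the restrictive choice reported for the two ambiguous clips, where the short segmentation was followed. A real pipeline would also have to check that the judges' descriptions of the state match, which this sketch takes for granted.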

After cutting, 64 clips were obtained. Several of these extracts were excluded from the corpus because the face was largely obstructed or hidden behind glasses that reflected light into the camera's view, or because they showed a situation outside the original scenario (e.g., talking to a third person). In the end, 39 clips were included in the study, each lasting 4–56 s, with most lasting between 20 and 28 s. The clips were encoded with a temporal resolution of up to 1/25 s and showed 19 male and 20 female passengers. The passengers came from a wide variety of cultural backgrounds. Preliminary analyses showed no effects of stimulus gender on any of the AU or emotion/appraisal rating data (t-tests, p > 0.1); accordingly, stimulus gender was omitted from the following analyses.

FACS Coding

Because we wanted to associate short video extracts with the attributions made by laypersons, it was important to code all the facial actions that could affect the observers. The ANVIL software was used for the annotation, with 61 tracks for facial action coding (FACS; Ekman et al., 2002) and 22 for bodily action coding over time. The analysis of the bodily coding is outside the scope of this article. The FACS coding was performed by a certified FACS coder and verified by a second certified FACS coder, who annotated 12% of the videos (randomly assigned). Both coders used the FACS manual as a constant reference.

To assess scoring precision, we computed frame-by-frame agreement for facial action coding using Cohen's kappa (κ; Cohen, 1960). Mean agreement was κ = 0.66 (SD = 0.18), which indicates strong agreement according to Cicchetti and Sparrow (1981). Agreement was satisfactory for each individual AU except AU 20 (lip stretcher), for which κ in the 0.21–0.40 range indicated only weak/fair agreement.
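Frame-by-frame agreement of the kind reported above can be computed as in the following sketch. It assumes that, for a given AU, each coder's annotation has already been converted to one binary label per video frame (1 = present, 0 = absent; 25 frames per second for these clips); that conversion step and the function name are our own assumptions. Averaging the per-AU values would give a summary comparable to the mean κ reported here.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(coder_a: Sequence[int], coder_b: Sequence[int]) -> float:
    """Cohen's (1960) kappa for two coders' frame-aligned labels of one AU."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("codes must be non-empty and frame-aligned")
    n = len(coder_a)
    # Observed agreement: share of frames on which both coders agree.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement from each coder's marginal label frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both coders used the same single label on every frame
    return (p_o - p_e) / (1.0 - p_e)


# Ten frames of a hypothetical AU track: the coders disagree on two frames.
a = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
b = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]
print(round(cohens_kappa(a, b), 3))  # 0.583
```

Here observed agreement is 0.8 but chance agreement is already 0.52, which is why κ is much lower than raw percent agreement; long stretches where an AU is absent for both coders inflate raw agreement but not κ.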

Procedure

Participants arrived in groups of two to ten, and each participant accessed the study individually through a web browser. The guidelines provided on the first web page were sufficient for understanding the tasks. Participants were randomly assigned to rating blocks. Emotional labels were presented in two controlled orders, and the same order was kept for all stimuli judged by the same participant. Participants watched and evaluated between 6 and 39 short video extracts, depending on their self-reported concentration level and their willingness to participate. They answered the same set of questions after each video.

On the first page after each video, participants were asked to evaluate appraisals presented as sentences, such as “Do you have the impression that the person you saw in the video just faced a sudden event?” (suddenness). Appraisals were presented in the chronological order defined by the componential theory: suddenness, goal obstruction, important and incongruent event, coping potential, respect of internal standards, and violation of external standards (e.g., Scherer, 2001). Participants answered the appraisal questions on a 7-point Likert scale ranging from 0 (totally disagree) to 6 (totally agree).

On the second page after the video, participants judged whether the observed passenger was experiencing joy, anger, relief, sadness, contempt, fear, and shame. Each emotion was rated on a separate 7-point Likert scale ranging from 0 (no emotion) to 6 (strong emotion), and the emotions were not mutually exclusive. The order of presentation of the emotional labels was randomized. The mean attribution of each label to each video (across participants) served as the dependent variable. The independent variables were the durations of the facial action cues that the coders annotated as present in the videos watched by participants.
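The dependent and independent variables described above can be assembled per video roughly as follows. Because participants rated different numbers of clips (6 to 39), each video's mean for a label is taken only over the participants who actually rated it. The record layouts (`(participant, video, label, score)` ratings and `(video, AU, onset, offset)` annotation events in seconds) and the function names are hypothetical; they are not the format of the original ANVIL or web-survey files.

```python
from collections import defaultdict
from statistics import fmean
from typing import Dict, Iterable, List, Tuple

# Hypothetical record layouts (not the original study files):
Rating = Tuple[str, str, str, int]       # (participant, video, label, score 0-6)
AUEvent = Tuple[str, int, float, float]  # (video, AU number, onset s, offset s)


def mean_ratings(ratings: Iterable[Rating]) -> Dict[Tuple[str, str], float]:
    """Dependent variable: mean score per (video, label) across raters."""
    cells: Dict[Tuple[str, str], List[int]] = defaultdict(list)
    for _participant, video, label, score in ratings:
        cells[(video, label)].append(score)
    # Each cell is averaged only over the participants who rated that video.
    return {key: fmean(scores) for key, scores in cells.items()}


def au_total_durations(events: Iterable[AUEvent]) -> Dict[Tuple[str, int], float]:
    """Independent variables: total annotated duration (s) of each AU per video.

    Assumes that events of the same AU within a video do not overlap,
    as on a single annotation track.
    """
    totals: Dict[Tuple[str, int], float] = defaultdict(float)
    for video, au, onset, offset in events:
        totals[(video, au)] += offset - onset
    return dict(totals)


ratings = [("p1", "v1", "anger", 4), ("p2", "v1", "anger", 5), ("p1", "v2", "anger", 1)]
events = [("v1", 12, 0.0, 1.2), ("v1", 12, 3.0, 4.0), ("v1", 4, 2.0, 2.4)]
print(mean_ratings(ratings))  # {('v1', 'anger'): 4.5, ('v2', 'anger'): 1.0}
print(au_total_durations(events))
```

An AU never annotated in a video simply has no key here; when building the regression table, such missing entries would be filled with a duration of 0.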

Data Analysis

For each video (n = 39), the FACS annotation was quantified by computing the total duration of each AU in the video. We selected this measure because the length of each video depended on the duration of the AUs that led to the decoding of a mental state; the percentage of time an action was present in a clip was therefore not informative. Stepwise regression analyses with backward selection were performed using SPSS 16.0J (SPSS Japan, Tokyo, Japan). Stepwise regression is a technique for selecting a subset of predictor variables (Ruengvirayudh and Brooks, 2016); with it, we tested whether and how subsets of AUs could predict the decoding of specific emotions/appraisals. A separate regression analysis was conducted with each emotion/appraisal as the dependent variable. All AUs were first entered into the model as independent variables, and AUs that did not significantly predict the dependent variable were removed from the model one by one. The first model in which every remaining AU predicted the dependent emotion/appraisal at least at a marginally significant level (p < 0.10) was selected as the final model. Before the analyses, we conducted a priori power analyses using G*Power 3.1.9.2 (Faul et al., 2007). We used the data of Galati et al. (1997) as prior information, because only this study applied a similar regression approach and reported sufficient information for power analyses. The number of AUs associated with emotion decoding was comparable across previous studies (mean, 6.0, 5.25, and 7.1 in Galati et al., 1997; Kohler et al., 2004; and Fiorentini et al., 2012, respectively). The results showed that our regression analyses could detect the relationships between AU sets and the decoding of emotional categories reported by Galati et al. (1997) (mean R² = 0.49) with high statistical power (α = 0.05; 1–β = 0.99).
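For reference, the effect-size relations behind a fixed-model test of R² deviation from zero, as used in G*Power (as we understand its setup), are shown below for u predictors and N observations. The worked value takes the mean R² = 0.49 from Galati et al. (1997) and assumes, purely for illustration, N = 39 videos; the number of predictors u entered in each power analysis is not reported here, so no power value is derived.

$$
f^2 = \frac{R^2}{1 - R^2}, \qquad \lambda = f^2 N, \qquad F \sim F_{u,\;N-u-1}(\lambda)
$$

$$
R^2 = 0.49 \;\Rightarrow\; f^2 = \frac{0.49}{0.51} \approx 0.96, \qquad \lambda = \frac{0.49}{0.51} \times 39 \approx 37.5
$$

Power is then the probability that this noncentral F exceeds the critical value of the central F with (u, N − u − 1) degrees of freedom at α = 0.05; a large λ relative to u is what makes power close to 1 plausible even with only 39 observations.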
Based on this a priori power analysis, we expected our variable selection approach to detect sets of AUs of a size similar to those reported in previous studies. However, our analyses lacked the power to investigate full or larger sets of AUs (see Discussion). For the final models, we calculated squared multiple correlation coefficients (R²) as effect-size parameters. We also calculated the post hoc statistical power (1–β) for the deviation of R² from zero using G*Power 3.1.9.2 (Faul et al., 2007).
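The backward selection rule described in this section (enter all AUs, then repeatedly drop the AU with the largest p-value until every remaining AU has p < 0.10) can be sketched without any statistics package. This is not SPSS's implementation. It is a minimal, standard-library-only illustration that assumes ordinary least squares with an intercept and two-sided t-tests on the coefficients (for a single predictor, this t-test is equivalent to an F-to-remove test). The p-values use the standard continued-fraction evaluation of the regularized incomplete beta function. All function names and the toy data are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def _betacf(a: float, b: float, x: float) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    tiny, eps = 1e-300, 3e-14
    c, d = 1.0, 1.0 - (a + b) * x / (a + 1.0)
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, 300):
        even = m * (b - m) * x / ((a + 2 * m - 1) * (a + 2 * m))
        odd = -(a + m) * (a + b + m) * x / ((a + 2 * m) * (a + 2 * m + 1))
        for aa in (even, odd):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:  # last factor close to 1: converged
            break
    return h


def t_two_sided_p(t: float, df: int) -> float:
    """P(|T| > |t|) for Student's t, via I_x(df/2, 1/2) with x = df/(df+t^2)."""
    a, b, x = 0.5 * df, 0.5, df / (df + t * t)
    if x <= 0.0 or x >= 1.0:
        return 0.0 if x <= 0.0 else 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log(1.0 - x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def _invert(m: List[List[float]]) -> List[List[float]]:
    """Gauss-Jordan matrix inverse with partial pivoting."""
    n = len(m)
    aug = [row[:] + [float(i == j) for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[piv][col]) < 1e-12:
            raise ValueError("singular X'X: collinear or constant predictors")
        aug[col], aug[piv] = aug[piv], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def ols(X: List[List[float]], y: Sequence[float]) -> Tuple[List[float], List[float]]:
    """OLS coefficients and two-sided p-values; X must include an intercept column."""
    n, k = len(X), len(X[0])
    if n <= k:
        raise ValueError("need more observations than parameters")
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(X, y)) for i in range(k)]
    inv = _invert(xtx)
    beta = [sum(inv[i][j] * xty[j] for j in range(k)) for i in range(k)]
    rss = sum((yi - sum(b * v for b, v in zip(beta, r))) ** 2 for r, yi in zip(X, y))
    sigma2 = rss / (n - k)  # residual variance
    pvals = []
    for i in range(k):
        se = math.sqrt(sigma2 * inv[i][i])
        pvals.append(t_two_sided_p(beta[i] / se if se > 0 else math.inf, n - k))
    return beta, pvals


def backward_stepwise(aus: Dict[str, Sequence[float]], y: Sequence[float],
                      p_remove: float = 0.10) -> Dict[str, Tuple[float, float]]:
    """Drop the least significant AU until all remaining AUs have p < p_remove."""
    kept = list(aus)
    while kept:
        X = [[1.0] + [float(aus[name][i]) for name in kept] for i in range(len(y))]
        beta, pvals = ols(X, y)
        worst = max(range(1, len(beta)), key=lambda j: pvals[j])  # skip intercept
        if pvals[worst] < p_remove:
            return {name: (beta[j + 1], pvals[j + 1]) for j, name in enumerate(kept)}
        kept.pop(worst - 1)  # remove one AU, then refit
    return {}  # no AU survived


# Hypothetical toy data: 8 videos, AU 12 drives the rating, AU 4 is unrelated.
au12 = [0, 1, 2, 3, 4, 5, 6, 7]
au4 = [3, 1, 3, 1, 1, 3, 1, 3]
noise = [0.2 * s for s in (1, -1, -1, 1, 1, -1, -1, 1)]
joy = [1 + 0.5 * d + e for d, e in zip(au12, noise)]
print(backward_stepwise({"AU 4": au4, "AU 12": au12}, joy))  # keeps only AU 12
```

Applied once per emotion/appraisal rating, with the per-video AU durations as columns, this mirrors the selection logic described above. With many AUs and only 39 videos, X'X can become singular; the sketch then raises an error instead of choosing which predictors to discard.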

Results

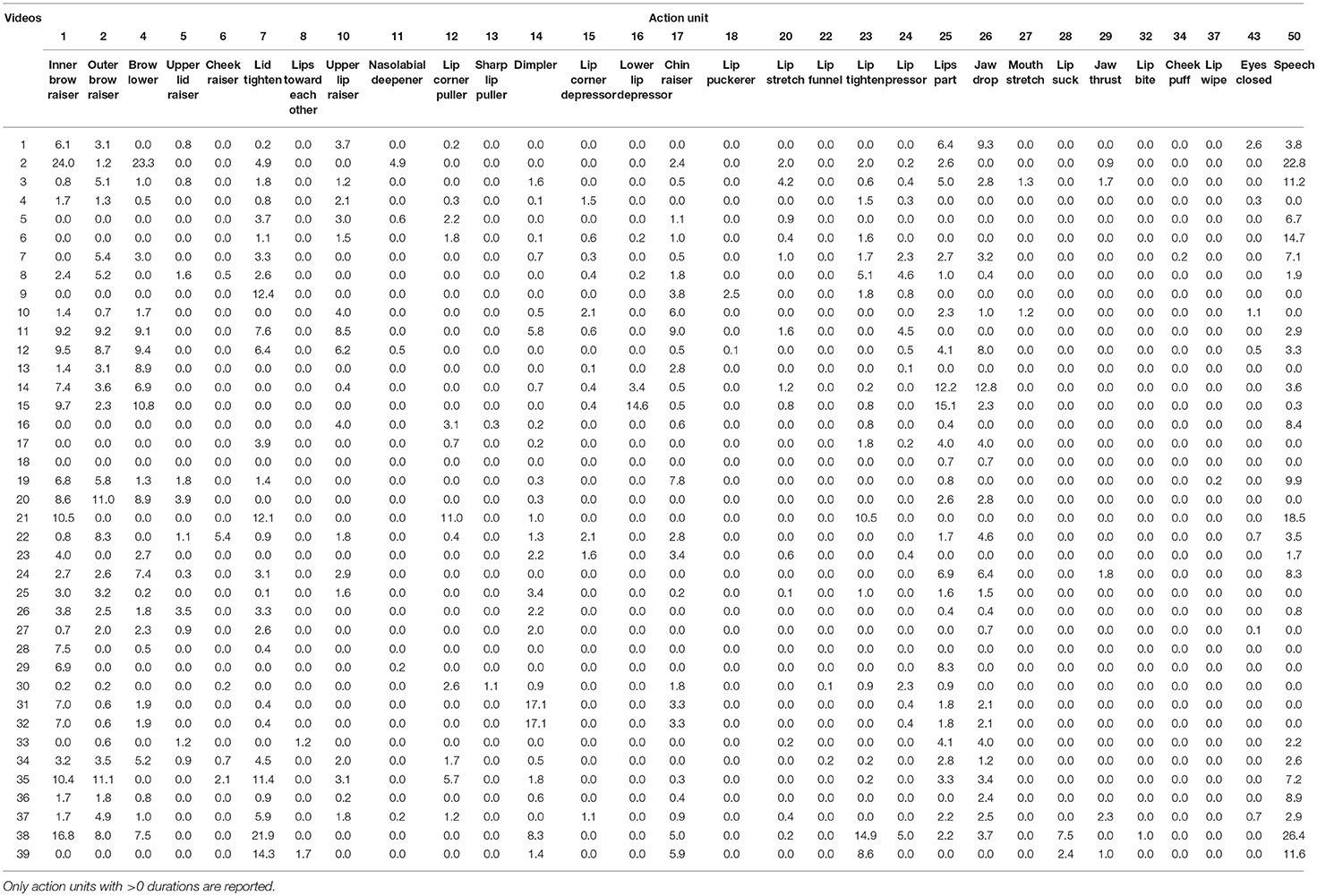

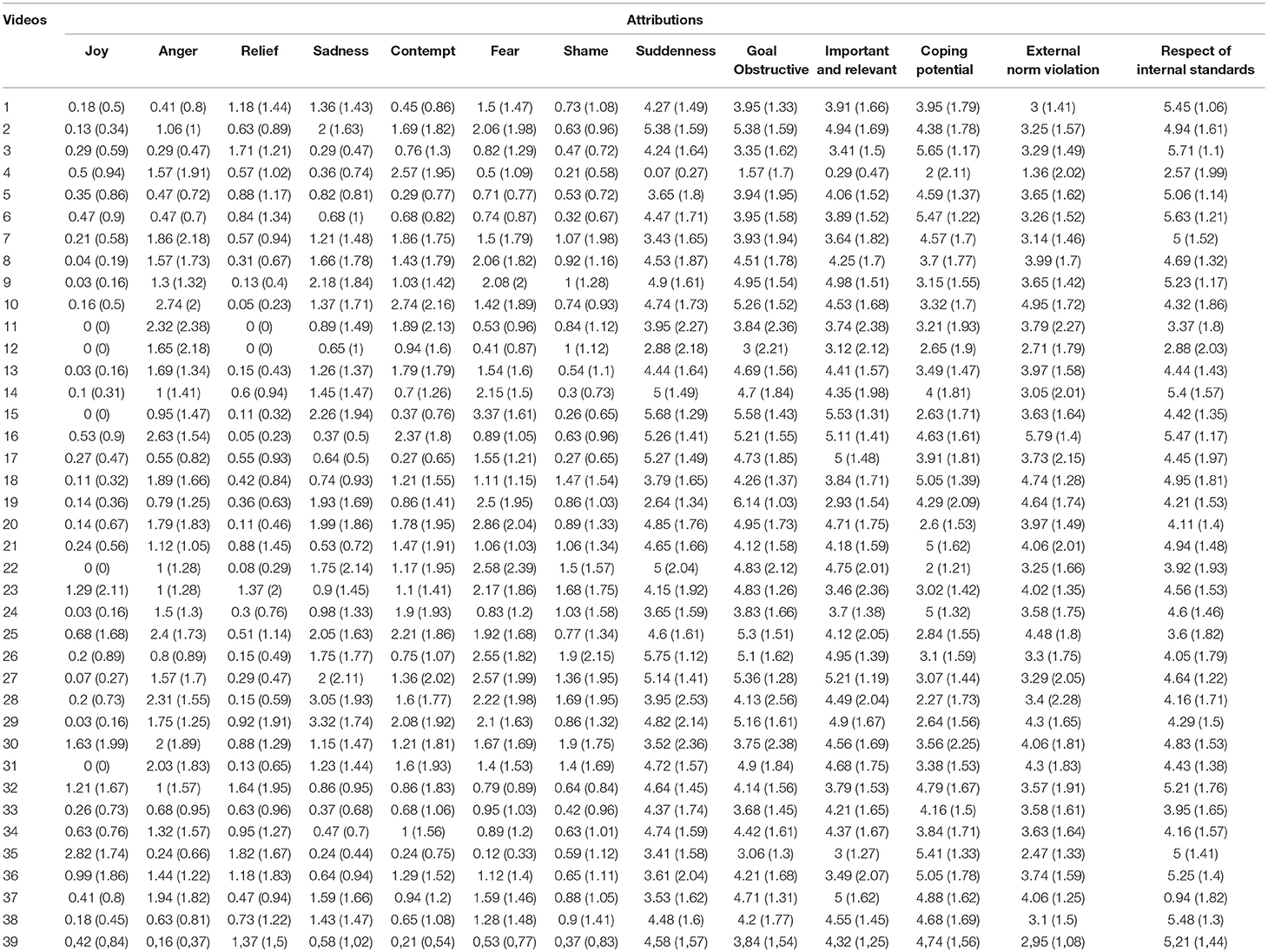

The FACS coding (total duration of AUs) and the means ± SDs of the attribution ratings are shown in Tables 1 and 2, respectively.

Table 2. Means ± standard deviations of participants' ratings for each video in terms of emotion and appraisal label attributions.

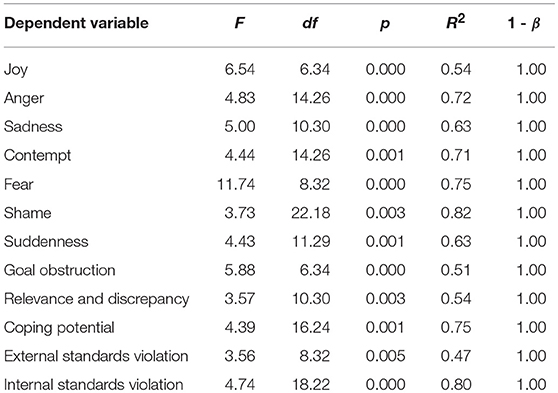

Stepwise regression analyses with backward selection showed that the attributions of all emotional categories and cognitive appraisals were significantly predicted by sets of AUs (Table 3). All the final regression models showed large effect sizes (R² > 0.46) and high statistical power (1–β > 0.99).

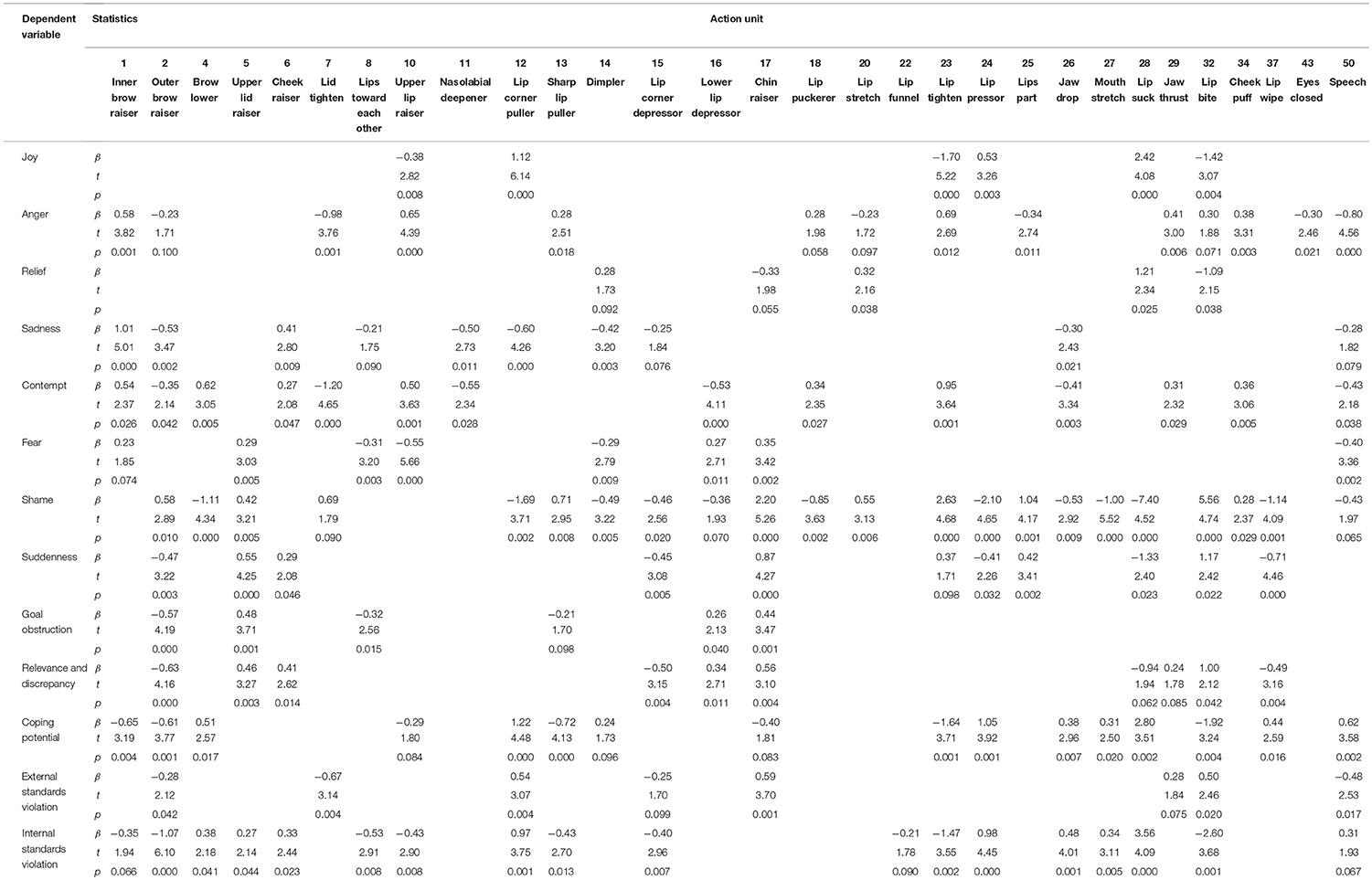

When we evaluated the profiles of AUs predicting each emotion/appraisal (Table 4), we found that several predictions based on prior observations in the literature concerning the relation between facial actions and emotion/appraisal attributions were confirmed. Specifically, positive associations were found between joy and AU 12 (upward lip corner pulling); between anger and AU 1 (inner eyebrow raise) and AU 10 (nasolabial furrow deepening); between sadness and AU 1 (inner brow raise); between fear and AU 5 (opening of the eye/upper lid raise) and, marginally, AU 1 (inner brow raise); and between shame and AU 2 (outer brow raise), AU 5 (opening of the eye/upper lid raise), AU 20 (lip stretch), AU 25 (mouth opening), and, marginally, AU 7 (lower eyelid contraction). In terms of cognitive appraisals, goal obstruction and the perception of an event as relevant but incongruent were positively associated with AU 17 (chin raise). Perception of coping potential was associated with AU 4 (brow lowering) and AU 24 (lip pressing).

Table 4. Results of significant independent variables of final models in stepwise regression analyses.

At the same time, we found several unexpected positive associations between AUs and the recognition of emotions/appraisals. For instance, AU 16 (lower lip depressor) was associated with fear as well as with goal obstruction. There were also unexpected negative associations between facial actions and emotion/appraisal attributions (see Table 4). For example, AU 12 (smile) was negatively associated with the attribution of some negative emotions, such as sadness and shame, but not with any appraisals. Among appraisals, a negative association was observed, for instance, between AU 2 (outer brow raiser) and coping potential.

Discussion

In our study, we examined the decoding of emotions and cognitive appraisals from sets of AUs observed in a naturally occurring negative emotional setting, using stepwise regression analyses. The results supported our predictions, revealing relationships between AUs and the decoding of all emotions and cognitive appraisals. These results are consistent with theories postulating relationships between the decoding of emotional categories or cognitive appraisals and sets of AUs (Ekman, 1992; Scherer and Ellgring, 2007), although other theories have questioned such relationships (see Barrett et al., 2018). The results are also consistent with previous empirical studies of these relationships (e.g., Kohler et al., 2004). However, previous studies did not test spontaneous emotional expressions in naturalistic settings, so whether these relationships generalize to real-life facial expression processing remained unclear. Extending current theoretical and empirical knowledge, our results suggest that emotional categories and cognitive appraisals can be decoded from spontaneous expressions through the recognition of specific facial movements.

The profiles of AUs associated with the decoding of emotional categories and cognitive appraisals were at least partially consistent with those in previous theories (Ekman, 1992; Scherer and Ellgring, 2007). For instance, the durations of AU 1 (inner brow raise) and AU 12 (upward lip corner pulling) were associated with the attribution of sadness and joy, respectively, and the durations of AU 4 (brow lowering) and AU 17 (chin raise) were associated with coping potential and goal obstruction, respectively. These findings are also consistent with previous studies using actors (e.g., Kohler et al., 2004). Our results empirically support the notion that these AUs could be core facial movements for decoding emotional categories and cognitive appraisals in natural, spontaneous facial expressions.

At the same time, our results also showed several patterns inconsistent with theoretical predictions (Ekman, 1992; Scherer and Ellgring, 2007). For example, the outer brow raiser was not associated with suddenness, and the lower lip depressor was associated with fear as well as with goal obstruction. These AUs require further validation in dynamic naturalistic settings, as it might be useful to include them in new theories of the relationships between emotion/appraisal decoding and AUs. Furthermore, our results revealed some negative relationships between the duration of AUs and the decoding of emotions/appraisals. This is consistent with the results of a rating study of photographs of acted emotional expressions (Galati et al., 1997). In our study, for example, smiles were negatively associated with sadness and shame. These findings suggest that not only present but also absent facial movements can be decoded as messages of emotions or appraisals in natural, dynamic, face-to-face communication.

Our findings on the relationships between the decoding of emotions/appraisals and AUs in spontaneous facial expressions could have practical implications. For example, it may be possible to build artificial intelligence systems that read emotions/appraisals from facial expressions in a more human-like way. Although such systems exist, almost all of them appear to be built on theories or data based on actors' deliberate expressions (Paleari et al., 2007; Niewiadomski et al., 2011; Ravikumar et al., 2016; Fourati and Pelachaud, 2018). Additionally, it may be possible to build humanoid virtual agents and robots (Poggi and Pelachaud, 2000; Lim and Okuno, 2015; Niewiadomski and Pelachaud, 2015) for applications in healthcare or long-term care for the elderly, with expressions that could be recognized as natural, human-like emotional expressions. Finally, given the importance of appropriately understanding the inner states displayed in others' faces for healthy social functioning (McGlade et al., 2008), it may be worthwhile to assess the relationships between the decoding of emotions/appraisals and AUs using naturalistic facial expression stimuli in clinical conditions. Indeed, several clinical populations report social cognition impairments in real-life situations while performing satisfactorily on typical emotion recognition or theory of mind tasks, which mostly rely on judging pictures of acted facial expressions or exaggerated social stories (e.g., see Bala et al., 2018). Dynamic and more naturalistic approaches might help characterize the clinical impairments faced, for example, by patients with schizophrenia (Okruszek et al., 2015; Okruszek, 2018), amygdala lesions (Bala et al., 2018), or high-functioning autism (Murray et al., 2017), and eventually lead to improvements in existing social cognition training programs.

Several limitations of the present study should be acknowledged. First, our naturalistic set was limited in the number of stimuli and included only a negatively valenced situation at a single location. To generalize the findings, more positive and negative situations in varied and controlled contexts and cultures would need to be investigated. Furthermore, we lacked data on the emotions experienced by the expressers and did not monitor the internal states of the participants, although it would have been interesting to investigate interactions between AUs, encoded/decoded emotions, and the characteristics of the observers. Given the literature on how observers' emotions, facial mimicry, and moods influence the perception of emotions in others (e.g., Schmid and Schmid Mast, 2010; Wood et al., 2016; Wingenbach et al., 2018), this is a valuable topic for future research on the interpretation of dynamic naturalistic stimuli. Second, we analyzed only male participants. Although consistent gender differences in rating style have not been reported for the decoding of emotional expressions (e.g., Duhaney and McKelvie, 1993; Biele and Grabowska, 2006; for a review, see Forni-Santos and Osório, 2015), numerous studies have reported that the decoder's gender might influence different aspects of face processing. For example, recognition of the gender of faces was enhanced (reaction time reduced) when the faces were presented looking away from an opposite-gender decoder, but not from a same-gender decoder (Vuilleumier et al., 2005). It has also been reported that exposure to angry male as opposed to angry female faces activated the visual cortex and the anterior cingulate gyrus significantly more in men than in women (Fischer et al., 2004). Similarly, although no significant differences were observed in accuracy between male and female participants or in the recognition of male vs. female encoder faces, higher activity was observed in the extrastriate body area in response to threatening male compared with female faces, as well as in the amygdala in response to threatening vs. neutral female faces, in male but not female participants (Kret et al., 2011). For all these reasons, the effect of decoder gender needs to be carefully monitored in further studies. Third, although our final models had high statistical power, our sample size was small. We used stepwise regression analyses to select a subset of predictor variables. Although we expected a certain number of predictor variables based on previous evidence, and our analyses detected that number with high power, they lacked the power to adequately analyze AUs not included in the final models. Future studies with larger samples may reveal the involvement of more AUs in the decoding of emotions/appraisals. Fourth, owing to the lack of power, we coded and analyzed single AUs but not combinations (i.e., simultaneous appearances) of AUs (e.g., AU 6 + 12). Because single vs. combined AUs could transmit different emotional messages (Ekman and Friesen, 1975), the investigation of AU combinations is an important matter for future research. Fifth, we coded AUs in a binary fashion, as in previous studies testing AUs and the decoding of emotions (e.g., Kohler et al., 2004). Coding the 5-level AU intensity, which was newly added to FACS (Ekman et al., 2002), may provide more detailed insights into these relationships. Sixth, to simplify the analyses, we studied only linear additive relationships between AUs and the decoding of emotions and their components. Further work could go beyond linear associations, e.g., to quadratic associations.
Finally, the use of naturalistic behavior in perception paradigms allows only correlational studies, without the possibility of strong causal claims. When constructing paradigms that allow causality testing, one aspect of interest for future investigations is the direct influence of single facial units on attributions; future studies could carefully manipulate the presentation of AUs while preserving as much of the naturalistic setting as possible. One method for manipulating behaviors one by one is to reproduce human behavior with a virtual humanoid or robot. Current technology allows dynamic and functional representations of human behavior, which can be copied from a naturalistic scene in sufficient detail to evoke reactions similar to those observed in videos of humans. Given that presenting behavior without context or one AU at a time lacks naturalness, AUs should be judged within the sets of units in which they originally appear. The manipulation of single AUs could therefore focus on the removal of existing actions (see Hyniewska, 2013).

In conclusion, numerous studies have investigated the decoding of emotional expressions from prototypical displays, and there seems to be consensus on the sets of facial AUs that provide good discriminability. However, to the authors' best knowledge, no study had examined the sets of AUs that lead to emotion and appraisal perception in naturally occurring situations. Our results show that emotional and appraisal labels can be predicted from recorded sets of facial action units. Interestingly, the sets of observed AUs do not fully coincide with those reported in former decoding studies.

Author Contributions

SH, SK, and CP were responsible for the conception and design of the study. SH obtained the data. SH and WS analyzed the data. All authors wrote the manuscript.

Funding

This study was supported by funds from the Research Complex Program from Japan Science and Technology Agency, as well as by the doctoral fund from the Swiss Center for Affective Sciences, University of Geneva.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors thank Magdalena Rychlowska, Tanja Wingenbach, and Tony Manstead for fruitful discussions and Yukari Sato for the schematic illustration.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2018.02678/full#supplementary-material

References

Bala, A., Okruszek, Ł., Piejka, A., Głebicka, A., Szewczyk, E., Bosak, K., et al. (2018). Social perception in mesial temporal lobe epilepsy: interpreting social information from moving shapes and biological motion. J. Neuropsychiatry Clin. Neurosci. 30, 228–235. doi: 10.1176/appi.neuropsych.17080153

Barrett, L. F., Khan, Z., Dy, J., and Brooks, D. (2018). Nature of emotion categories: comment on Cowen and Keltner. Trends Cogn. Sci. 22, 97–99. doi: 10.1016/j.tics.2017.12.004

Biele, C., and Grabowska, A. (2006). Sex differences in perception of emotion intensity in dynamic and static facial expressions. Exp. Brain Res. 171, 1–6. doi: 10.1007/s00221-005-0254-0

Calvo, M. G., and Nummenmaa, L. (2015). Perceptual and affective mechanisms in facial expression recognition: an integrative review. Cogn. Emot. 30, 1081–1106. doi: 10.1080/02699931.2015.1049124

Cicchetti, D. V., and Sparrow, S. A. (1981). Developing criteria for establishing interrater reliability of specific items: applications to assessment of adaptive behavior. Am. J. Ment. Defic. 86, 127–137.

Duhaney, A., and McKelvie, S. J. (1993). Gender differences in accuracy of identification and rated intensity of facial expressions. Percept. Mot. Skills 76, 716–718. doi: 10.2466/pms.1993.76.3.716

Ekman, P. (1992). An argument for basic emotions. Cogn. Emot. 6, 169–200. doi: 10.1080/02699939208411068

Ekman, P. (2005). “Conclusion: what we have learned by measuring facial behaviour,” in What the Face Reveals: Basic and Applied Studies of Spontaneous Expression Using the Facial Action Coding System (FACS), 2nd Edn, eds P. Ekman and E. L. Rosenberg (Oxford: Oxford University Press), 605–626.

Ekman, P., Friesen, W. V., and Hager, J. C. (2002). Facial Action Coding System. Salt Lake City, UT: Research Nexus eBook.

Ekman, P., and Friesen, W. V. (1978). Manual of the Facial Action Coding System (FACS). Palo Alto, CA: Consulting Psychologists Press.

Ekman, P., and Friesen, W. V. (1982). Felt, false, and miserable smiles. J. Nonverb. Behav. 6, 238–252. doi: 10.1007/BF00987191

Faul, F., Erdfelder, E., Lang, A. G., and Buchner, A. (2007). G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191. doi: 10.3758/BF03193146

Fiorentini, C., Schmidt, S., and Viviani, P. (2012). The identification of unfolding facial expressions. Perception 41, 532–555. doi: 10.1068/p7052

Fischer, H., Sandblom, J., Herlitz, A., Fransson, P., Wright, C. I., and Bäckman, L. (2004). Sex-differential brain activation during exposure to female and male faces. Neuroreport 15, 235–238. doi: 10.1097/00001756-200402090-00004

Fontaine, J. R. J., Scherer, K. R., Roesch, E. B., and Ellsworth, P. C. (2007). The world of emotions is not two-dimensional. Psychol. Sci. 18, 1050–1057. doi: 10.1111/j.1467-9280.2007.02024.x

Forni-Santos, L., and Osório, F. L. (2015). Influence of gender in the recognition of basic facial expressions: a critical literature review. World J. Psychiatry 5, 342–351. doi: 10.5498/wjp.v5.i3.342

Fourati, N., and Pelachaud, C. (2018). Perception of emotions and body movement in the Emilya database. IEEE Trans. Affect. Comput. 9, 90–101. doi: 10.1109/TAFFC.2016.2591039

Galati, D., Scherer, K. R., and Ricci-Bitti, P. E. (1997). Voluntary facial expression of emotion: comparing congenitally blind with normally sighted encoders. J. Pers. Soc. Psychol. 73, 1363–1379. doi: 10.1037/0022-3514.73.6.1363

Hess, U., and Kleck, R. E. (1994). The cues decoders use in attempting to differentiate emotion elicited and posed facial expressions. Eur. J. Soc. Psychol. 24, 367–381. doi: 10.1002/ejsp.2420240306

Hyniewska, S. (2013). Non-Verbal Expression Perception and Mental State Attribution by Third Parties. PhD thesis, Télécom-ParisTech/University of Geneva.

Kohler, C. G., Turner, T., Stolar, N., Bilker, W., Brensinger, C. M., Gur, R. E., et al. (2004). Differences in facial expressions of four universal emotions. Psychiatry Res. 128, 235–244. doi: 10.1016/j.psychres.2004.07.003

Kret, M. E., Pichon, S., Grèzes, J., and de Gelder, B. (2011). Similarities and differences in perceiving threat from dynamic faces and bodies. An fMRI study. Neuroimage 54, 1755–1762. doi: 10.1016/j.neuroimage.2010.08.012

Lim, A., and Okuno, H. G. (2015). A recipe for empathy. Int. J. Soc. Robot. 7, 35–49. doi: 10.1007/s12369-014-0262-y

McGlade, N., Behan, C., Hayden, J., O'Donoghue, T., Peel, R., Haq, F., et al. (2008). Mental state decoding v. mental state reasoning as a mediator between cognitive and social function in psychosis. Br. J. Psychiatry 193, 77–78. doi: 10.1192/bjp.bp.107.044198

Mehu, M., Mortillaro, M., Bänziger, T., and Scherer, K. R. (2012). Reliable facial muscle activation enhances recognizability and credibility of emotional expression. Emotion 12, 701–715. doi: 10.1037/a0026717

Murray, K., Johnston, K., Cunnane, H., Kerr, C., Spain, D., Gillan, N., et al. (2017). A new test of advanced theory of mind: the “Strange Stories Film Task” captures social processing differences in adults with autism spectrum disorders. Autism Res. 10, 1120–1132. doi: 10.1002/aur.1744

Niewiadomski, R., Hyniewska, S. J., and Pelachaud, C. (2011). Constraint-based model for synthesis of multimodal sequential expressions of emotions. IEEE Trans. Affect. Comput. 2, 134–146. doi: 10.1109/T-AFFC.2011.5

Niewiadomski, R., and Pelachaud, C. (2015). The effect of wrinkles, presentation mode, and intensity on the perception of facial actions and full-face expressions of laughter. ACM Trans. Appl. Percept. 12, 1–21. doi: 10.1145/2699255

Okruszek, Ł. (2018). It is not just in faces! Processing of emotion and intention from biological motion in psychiatric disorders. Front. Hum. Neurosci. 12:48. doi: 10.3389/fnhum.2018.00048

Okruszek, Ł., Haman, M., Kalinowski, K., Talarowska, M., Becchio, C., and Manera, V. (2015). Impaired recognition of communicative interactions from biological motion in schizophrenia. PLoS ONE 10:e0116793. doi: 10.1371/journal.pone.0116793

Paleari, M., Grizard, A., and Lisetti, C. (2007). “Adapting psychologically grounded facial emotional expressions to different anthropomorphic embodiment platforms,” in Proceedings of the Twentieth International Florida Artificial Intelligence Research Society Conference (Key West, FL).

Poggi, I., and Pelachaud, C. (2000). “Performative facial expressions in animated faces,” in Embodied Conversational Agents, eds J. Cassell, J. Sullivan, S. Prevost, and E. Churchill (Cambridge: MIT Press), 155–188.

Ravikumar, S., Davidson, C., Kit, D., Campbell, N., Benedetti, L., and Cosker, D. (2016). Reading between the dots: combining 3D markers and FACS classification for high-quality blendshape facial animation. Proc. Graphics Interface 2016, 143–151. doi: 10.20380/GI2016.18

Reisenzein, R., and Meyer, W.-U. (2009). “Surprise,” in Oxford Companion to the Affective Sciences, eds D. Sander and K. R. Scherer (Oxford: Oxford University Press), 386–387.

Reisenzein, R., Meyer, W.-U., and Niepel, M. (2012). “Surprise,” in Encyclopedia of Human Behaviour, 2nd Edn, ed V. S. Ramachandran (Amsterdam: Elsevier), 564–570.

Ruengvirayudh, P., and Brooks, G. P. (2016). Comparing stepwise regression models to the best-subsets models, or, the art of stepwise. Gen. Linear Model J. 42, 1–14.

Russell, J. A., and Fernández-Dols, J. M. (1997). The Psychology of Facial Expression. New York, NY: Cambridge University Press.

Scherer, K. R., and Ceschi, G. (2000). Criteria for emotion recognition from verbal and nonverbal expression: studying baggage loss in the airport. Pers. Soc. Psychol. Bull. 26, 327–339. doi: 10.1177/0146167200265006

Scherer, K. R. (1984). “On the nature and function of emotion: a component process approach,” in Approaches to Emotion, eds K. R. Scherer and P. Ekman (Hillsdale, NJ: Erlbaum), 293–318.

Scherer, K. R. (2001). “Appraisal considered as a process of multilevel sequential checking,” in Appraisal Processes in Emotion: Theory, Methods, Research, eds K. R. Scherer, A. Schorr, and T. Johnstone (Oxford: Oxford University Press), 92–120.

Scherer, K. R., and Ceschi, G. (1997). Lost luggage: a field study of emotion-antecedent appraisal. Motiv. Emot. 21, 211–235. doi: 10.1023/A:1024498629430

Scherer, K. R., and Ellgring, H. (2007). Are facial expressions of emotion produced by categorical affect programs or dynamically driven by appraisal? Emotion 7, 113–130. doi: 10.1037/1528-3542.7.1.113

Schmid, P. C., and Schmid Mast, M. (2010). Mood effects on emotion recognition. Motiv. Emot. 34, 288–292. doi: 10.1007/s11031-010-9170-0

Smith, C. A., and Scott, H. S. (1997). “A componential approach to the meaning of facial expressions,” in The Psychology of Facial Expression, eds J. A. Russell and J. M. Fernández-Dols (New York, NY: Cambridge University Press), 229–254.

Topolinski, S., and Strack, F. (2015). Corrugator activity confirms immediate negative affect in surprise. Front. Psychol. 6:134. doi: 10.3389/fpsyg.2015.00134

Vuilleumier, P., George, N., Lister, V., Armony, J., and Driver, J. (2005). Effects of perceived mutual gaze and gender on face processing and recognition memory. Vis. Cogn. 12, 85–101. doi: 10.1080/13506280444000120

Wingenbach, T. S., Brosnan, M., Pfaltz, M. C., Plichta, M. M., and Ashwin, C. (2018). Incongruence between observers' and observed facial muscle activation reduces recognition of emotional facial expressions from video stimuli. Front. Psychol. 9:864. doi: 10.3389/fpsyg.2018.00864

Wood, A., Rychlowska, M., Korb, S., and Niedenthal, P. (2016). Fashioning the face: sensorimotor simulation contributes to facial expression recognition. Trends Cogn. Sci. 20, 227–240. doi: 10.1016/j.tics.2015.12.010

Keywords: emotional facial expression, spontaneous expressions, naturalistic, cognitive appraisal, nonverbal behavior

Citation: Hyniewska S, Sato W, Kaiser S and Pelachaud C (2019) Naturalistic Emotion Decoding From Facial Action Sets. Front. Psychol. 9:2678. doi: 10.3389/fpsyg.2018.02678

Received: 14 May 2018; Accepted: 13 December 2018;

Published: 18 January 2019.

Edited by:

Wenfeng Chen, Renmin University of China, China

Reviewed by:

Lucy J. Troup, University of the West of Scotland, United Kingdom

Qi Wu, Hunan Normal University, China

Copyright © 2019 Hyniewska, Sato, Kaiser and Pelachaud. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sylwia Hyniewska, sylwia.hyniewska@gmail.com
