- Department of Experimental Psychology, University College London, London, United Kingdom

While robots were traditionally built to achieve economic efficiency and financial profits, their roles are likely to change in the future, with the aim of providing social support and companionship. In this research, we examined whether a robot’s proposed function (social vs. economic) affects judgments of its mind and its moral treatment. Studies 1a and 1b demonstrated that robots with a social function were perceived to possess a greater capacity for emotional experience, but not for cognition, than robots with an economic function or robots whose function was not mentioned explicitly. Study 2 replicated this finding and further showed that low economic value reduced ascriptions of cognitive capacity, whereas high social value increased emotion perception. In Study 3, robots with high social value were more likely to be afforded protection from harm, and this effect was related to levels of ascribed emotional experience. Together, the findings demonstrate a dissociation between function type (social vs. economic) and ascribed mind (emotion vs. cognition). In addition, the two types of function exert asymmetric influences on the moral treatment of robots. Theoretical and practical implications for social psychology and human-computer interaction are discussed.

Introduction

With the rapid advancement of technology, robots are becoming increasingly intelligent and autonomous entities with the ability to fulfill a variety of tasks. Traditionally, such tasks have been restricted to industrial settings in which robots were used for factory work, such as car manufacturing, building construction, and food production (Bekey, 2012; Kim and Kim, 2013). Thus, robots were built mainly to achieve economic efficiency and financial profits for the corporate world (i.e., economic value). This conception, driven mainly by instrumental considerations, is likely to change as robots start to penetrate the social sphere (Matarić, 2006; Dautenhahn, 2007; Lin et al., 2011). With new application areas ranging from healthcare to education and domestic assistance (Gates, 2007; Friedman, 2011; Bekey, 2012; Dahl and Boulos, 2013), the places that robots will occupy alongside humans are likely to be social in nature. As such, robots will become integral parts of our daily lives, appearing in public and private domains. This new era raises significant questions about the prospective relation between man and machine. Would the type of value a robot provides influence the extent to which we perceive life-like qualities in it and consider it worthy of care and moral concern? The current research addresses this issue by exploring the impact of the robot’s proposed function (social vs. economic) on mind attribution and moral treatment.

Anthropomorphism

The question of whether robots belong to the category of living or non-living entities has received considerable attention from researchers in different fields (Gaudiello et al., 2015). Anthropomorphism, i.e., the tendency to attribute humanlike characteristics to nonhuman entities, is a widely observed phenomenon in human–robot interaction (Epley et al., 2007; Airenti, 2015). Despite ongoing debates about the underlying functions and mechanisms of anthropomorphism (see Duffy, 2003; Levillain and Zibetti, 2017), attributing life-like qualities to robots whose behavior follows preprogrammed scripts is an intuitive process (Zawieska et al., 2012). This is because mind attribution does not involve a rational analysis of stepping inside others’ heads but is instead shaped by how targets appear to us and how we feel towards them (Coeckelbergh, 2009). People automatically respond to, and often overestimate, the social cues emitted by a robot’s humanlike face, voice, and/or body movements (Reeves and Nass, 1996; Nass and Moon, 2000). We then apply the same source evaluation criteria (e.g., social stereotypes and heuristics) to these robots as we do to human targets (e.g., Nass and Lee, 2001). For example, a smiling robot activates the memory exemplar of a nice person, which in turn creates the impression of a sociable robot (Kiesler and Goetz, 2002; Powers and Kiesler, 2006).

When it comes to how people perceive and treat robots, anthropomorphism is a robust predictor. For example, people are more likely to cooperate with robots (Powers and Kiesler, 2006), to engage with them, and to have enjoyable interactions with them (Walker et al., 1994; Koda and Maes, 1996) when the robots are anthropomorphic in appearance. Humans also tend to show increased empathy toward such robots, trying to save them from possible suffering (Riek et al., 2009). Despite these important findings, research on anthropomorphism has been widely criticized for its lack of conceptual and methodological consensus (Zawieska et al., 2012). In particular, anthropomorphism has usually been treated as a unidimensional concept, even though it comprises multiple components that vary across studies, such as intellect, sociability, mental abilities, cognitions, intentions, and emotions (e.g., Bartneck et al., 2008; Nowak et al., 2009).

Cognition and Emotion

Full humanness, or humanlike character, entails both higher order cognition (e.g., rationality and logic) and emotion (e.g., feelings and experience; Kahneman, 2011; Waytz and Norton, 2014). These two core capacities, i.e., cognition and emotion, also map onto the two dimensions of mind perception (Gray et al., 2007), the conceptualization of humanness (Haslam, 2006; Haslam et al., 2008), and the two primary dimensions of social perception (Fiske et al., 2007). Specifically, higher order cognition corresponds to agency, human uniqueness traits, and competence, whereas emotion corresponds to experience, human nature, and warmth (Waytz and Norton, 2014). Interestingly, it has recently been demonstrated that people automatically evaluate a target’s mind along these two dimensions (e.g., Gray et al., 2007), whether the target is a living entity, such as a human or an animal (Fiske et al., 2007; Gray et al., 2007), or a non-living entity, such as a corporate brand (Aaker et al., 2010) or a robot (Waytz and Norton, 2014). Furthermore, research suggests that emotion is perceived to be more essential to humanness than cognition (e.g., Willis and Todorov, 2006; Ybarra et al., 2008; Gray and Wegner, 2012) and that it plays a significant role in how we treat others (Gray and Wegner, 2009).

Robots have long been perceived as non-human or a subhuman species, designed as tools to serve our needs rather than to deserve the respect and concern we extend to our fellow human beings (Friedman et al., 2003; Dator, 2007). This is mainly because they are attributed only higher order cognition, not feelings and emotions (e.g., Haslam, 2006; Gray et al., 2012; Kim and Kim, 2013). In other words, it is ascribed emotion and experience, not cognition and agency, that make a target deserving of moral concern (Gray and Wegner, 2009). This could be mainly due to the fact that robots were historically designed out of purely pragmatic considerations about the economic functions they support, i.e., efficiency and automation (Young et al., 2009; Chandler and Schwarz, 2010). Although such design might result in attributions of higher order cognition (i.e., artificial intelligence), robots in the traditional sense are not seen as sentient beings (Gray and Wegner, 2012; Kim and Kim, 2013). Hence, people associate robots more with cognition- than emotion-oriented jobs (Loughnan and Haslam, 2007) and express more comfort with outsourcing jobs to robots when those jobs require cognition (Waytz and Norton, 2014).

Over the last 15 years, a number of social robots have been developed with the aim of providing social support and companionship. These include, for example, Nursebot Pearl and Care-o-bot 3 for medical and social care (Pollack et al., 2002; Graf et al., 2009), as well as robots such as Nao or Pepper (Robotics, 2010) for companionship. Unlike traditional robots, social robots are designed to engage humans socially (DiSalvo et al., 2002; Duffy, 2003; Walters et al., 2008) and are specifically built to form an attachment (Darling, 2014). Given that this task emphasizes companionship and affective sharing, traits closely associated with feelings and emotions (e.g., Fiske, 1992; Gray et al., 2007; Cuddy et al., 2008), a robot’s social value may increase perceptions of its capacity for emotional experience. Knowledge about the proposed function of a robot could therefore trigger different attributions of mind.

Theoretical support for this assumption comes from social role theory. People infer the traits of a target from his/her socially defined categories (e.g., woman, mother, and manager), or the perceived social role s/he performs (Hoffman and Hurst, 1990; Wood and Eagly, 2012). For instance, women are perceived as affectionate and warm, while men are judged as agentic and competent given their typically ascribed gender role (i.e., caregiver vs. breadwinner, Spence et al., 1979; Eagly and Wood, 2011). Such influence of predefined categorical roles goes beyond the classic domain of gender and applies not only to human targets but also to non-living entities. Of direct relevance to the current study, previous research has demonstrated that businesspeople are typically seen to have higher capacity for cognition but to lack emotions in comparison to housewives and artists (Fiske et al., 2002; Loughnan and Haslam, 2007). Similarly, non-profit organizations dedicated to furthering social support are ascribed higher levels of emotion, but a lower degree of cognition compared to companies which aim for economic growth (Aaker et al., 2010). In line with this thinking, perceptions of robots’ emotional and cognitive ability could vary with their social and economic function, respectively. Moreover, these different values could have downstream consequences for moral consideration, in the sense that social/emotional skills afford rights as a moral patient, making robots deserve protection from harm (Waytz et al., 2010; Gray et al., 2012).

The Present Research

Because robots are built to add value to humans’ lives (Kaplan, 2005), this value can differ depending on whether it fulfills social or economic needs. In this paper, we define social value/function as the tendency to provide social support and companionship for human society. In contrast, we define economic value/function as the tendency to generate financial profits and benefits for the corporate world. The primary aim of this paper is to examine whether the proposed function of robots significantly influences mind perception. We also tested whether this function shapes subsequent moral treatment.

While previous research has largely focused on the appearance of robots and revealed the benefits of human resemblance (e.g., Hegel et al., 2008; Krach et al., 2008; Powers and Kiesler, 2006; Riek et al., 2009), the very reason for robots’ creation is to assist humans through their built-in functions (e.g., Zhao, 2006). Whereas a social function in the service of affiliation may imply a capacity for feelings and emotion, an economic function could indicate cognitive skills such as thinking and planning. To test this hypothesis, we conducted four studies in which we systematically varied information about the robot’s function using text-based descriptions while controlling other information, such as visual appearance.

Studies 1a and 1b were designed to examine whether the function of robots differentially affects the two dimensions of mind. Given that the ability to experience and express emotions is essential for social connection, we predicted that robots with social value would attract higher ratings of emotion, but not cognition, than those with economic value. By contrast, we expected perceived mind not to differ between robots with an economic function and robots in the control group, given that the stereotypical function of robots is economic in nature (Gates, 2007; Friedman, 2011).

Study 2 aimed to replicate the findings observed in the first studies and further test whether there exists a dissociation in people’s beliefs between the function of a robot and its type of mind. For this, we made robots either lack or gain value by assigning high vs. low economic and social functionality. Given that robots have historically been associated with tasks that require cognitive rather than social skills (Lohse et al., 2007; Kim and Kim, 2013; Darling, 2014), we hypothesized that losing economic value would lead to reduced perceptions of cognition. By contrast, gaining social value should result in increased emotion attribution.

Finally, Study 3 aimed to replicate the findings of Study 2 and to test the moral consequences of value type. Specifically, gaining social value, rather than economic value, should increase the robot’s perceived emotional ability/experience. In addition, people should be most willing to protect robots with (high) social value from harm, given that perceived emotion/experience is tied to moral concern (Gray and Wegner, 2009).

Sensitivity Analysis

Given our primary aim, these calculations were based on the effect of value type (social vs. economic) on mind perception. According to a sensitivity power analysis (G∗Power; Faul et al., 2007), minimal effect sizes of f = .13 (Study 1A, N = 151, value type: between-subjects, mind dimension: within-subjects), f = .15 (Study 1B, N = 50, value type and mind dimension: within-subjects), f = .08 (Study 2, N = 172, value level and mind dimension: within-subjects, value type: between-subjects), and f = .11 (Study 3, N = 110, value level, value type, and mind dimension: within-subjects) could be detected under standard criteria (α = 0.05 two-tailed, power 1 − β = 0.80), respectively.
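G∗Power expresses the minimal detectable effects above as Cohen’s f, whereas the results below report effect sizes on the partial eta squared (ηp²) scale. For a single effect the two are linked by f = √(ηp² / (1 − ηp²)), or equivalently ηp² = f² / (1 + f²). The sketch below applies only this textbook relation; the function names are hypothetical, and G∗Power’s repeated-measures procedures can define f differently depending on the option chosen, so treat the converted values as approximate.

```python
import math


def f_from_partial_eta_sq(eta_p2: float) -> float:
    """Cohen's f from partial eta squared: f = sqrt(eta / (1 - eta))."""
    if not 0.0 <= eta_p2 < 1.0:
        raise ValueError("partial eta squared must lie in [0, 1)")
    return math.sqrt(eta_p2 / (1.0 - eta_p2))


def partial_eta_sq_from_f(f: float) -> float:
    """Inverse relation: eta = f^2 / (1 + f^2)."""
    if f < 0:
        raise ValueError("f must be non-negative")
    return f * f / (1.0 + f * f)


# Minimal detectable effects from the sensitivity analysis, re-expressed as eta_p^2
sensitivity = [("Study 1A", 0.13), ("Study 1B", 0.15), ("Study 2", 0.08), ("Study 3", 0.11)]
for label, f in sensitivity:
    print(f"{label}: f = {f:.2f} -> partial eta^2 = {partial_eta_sq_from_f(f):.3f}")
```

For instance, f = .13 corresponds to ηp² ≈ 0.017, which puts the observed interaction effects reported below on the same scale as the sensitivity thresholds.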

Study 1

In Studies 1a and 1b, we sought to provide initial evidence for the hypothesis that mind attribution varies with the robot’s function. To this end, robots were described as having either social or economic value. In order to test whether people assign economic rather than social value to robots by default, we also included a control condition in which the robot’s function was not mentioned explicitly. We predicted that robots with social value would be ascribed higher levels of emotion, but not cognition, than those in the economic and control conditions. Given that the stereotypical function of robots is to serve economic purposes (e.g., Gates, 2007; Friedman, 2011), we expected that robots with an economic function and those whose function was not mentioned explicitly (i.e., control condition) would be perceived similarly.

Study 1A

Method

Participants

One hundred and fifty-one participants (85 women, Mage = 24.8, SD = 6.98, 86% Caucasian, 5% Asian, and 9% others) were recruited from public areas in central London (i.e., public libraries and cafés) and took part in the study on a voluntary basis. The experimental design included value type (economic vs. social vs. control) as a between-subjects variable. Participants were randomly assigned to one of three conditions, resulting in approximately fifty people in each group. The study was conducted with ethical approval from the Department of Experimental Psychology at University College London, United Kingdom.

Material and Procedure

Short text-based descriptions of four robots were created which served as profile information: e.g., “This robot can quickly learn various movements from demonstrators and also make additional changes either to optimize the behavior or adjust to situations.” In order to assign economic versus social value, these profile descriptions were combined with information about the robot’s corresponding function: (a) economic condition: e.g., “Therefore, this robot can work as a salesperson in stores and supermarkets, guiding customers to different products and answering their inquiries” and (b) social condition: e.g., “Therefore, this robot can work as a social caregiver, keeping those socially isolated/lonely people accompanied, reminding them of their daily activities and having conversations with them.” In the control condition, no further information about the robot’s function was provided besides its basic profile description (please see Appendix A).

Pilot testing with a separate group of participants (N = 56) confirmed that robots with a social function were indeed perceived to be higher in social value¹ (M = 59.5, SD = 14.4 vs. M = 29.3, SD = 21.8) and lower in economic value (M = 67.3, SD = 12.9 vs. M = 78.9, SD = 13.6) than those with an economic function, ps ≤ 0.002.

The study was completed on paper. After providing informed consent, participants were presented with text-based descriptions of four robots (of the same value type within a condition), which they rated on two dimensions of mind: (a) emotion (the capacity to experience emotions, have feelings, and be emotional, αs ≥ 0.93) and (b) cognition (the capacity to exercise self-control, think analytically, and be rational, αs ≥ 0.93).² To distract participants from the aims of the study, they also evaluated each target on several filler items, i.e., how good-looking, durable, and lethargic the robot appears to be. All responses were made on 100-point scales ranging from 0 (not at all) to 100 (very much). The order of presentation of the four robot descriptions was counterbalanced across participants.

Results and Discussion

Mean scores for emotion and cognition were computed by combining the three items of each measure into a single score and averaging across the four robot descriptions within each dimension (emotion: α = 0.94; cognition: α = 0.93). A repeated measures ANOVA was then performed with mind dimension (emotion vs. cognition) as a within-subjects factor and value type (social vs. economic vs. control) as a between-subjects factor.
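The scoring step described above (items into one score per robot, then an average across the four robots) and the internal-consistency check can be sketched as follows. This is a minimal illustration, assuming equal-weight item means and the standard Cronbach’s alpha formula α = k/(k − 1) · (1 − Σ item variances / variance of the summed scale); the helper names and the simulated ratings are hypothetical and are not the study’s data or the authors’ scripts.

```python
import numpy as np


def cronbach_alpha(items: np.ndarray) -> float:
    """Cronbach's alpha for an (n_participants, k_items) matrix of ratings."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = items.var(axis=0, ddof=1)      # sample variance of each item
    total_var = items.sum(axis=1).var(ddof=1)  # sample variance of the summed scale
    return (k / (k - 1)) * (1.0 - item_vars.sum() / total_var)


def composite_scores(ratings: np.ndarray) -> np.ndarray:
    """Average the items of each robot, then average across robots.

    ratings has shape (n_participants, n_robots, n_items), e.g. (151, 4, 3)
    for the three emotion items rated for each of the four robots.
    """
    ratings = np.asarray(ratings, dtype=float)
    per_robot = ratings.mean(axis=2)  # one score per participant and robot
    return per_robot.mean(axis=1)     # one score per participant


# Hypothetical simulated ratings on the 0-100 scale (not the study's data)
rng = np.random.default_rng(0)
true_level = rng.uniform(10, 90, size=(151, 4, 1))
ratings = np.clip(true_level + rng.normal(0, 8, size=(151, 4, 3)), 0, 100)
print("alpha for robot 1:", round(cronbach_alpha(ratings[:, 0, :]), 2))
print("first composite scores:", composite_scores(ratings)[:3].round(1))
```

Whether the reported αs were computed per robot or across all twelve ratings is not stated, so the sketch only shows the per-robot case.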

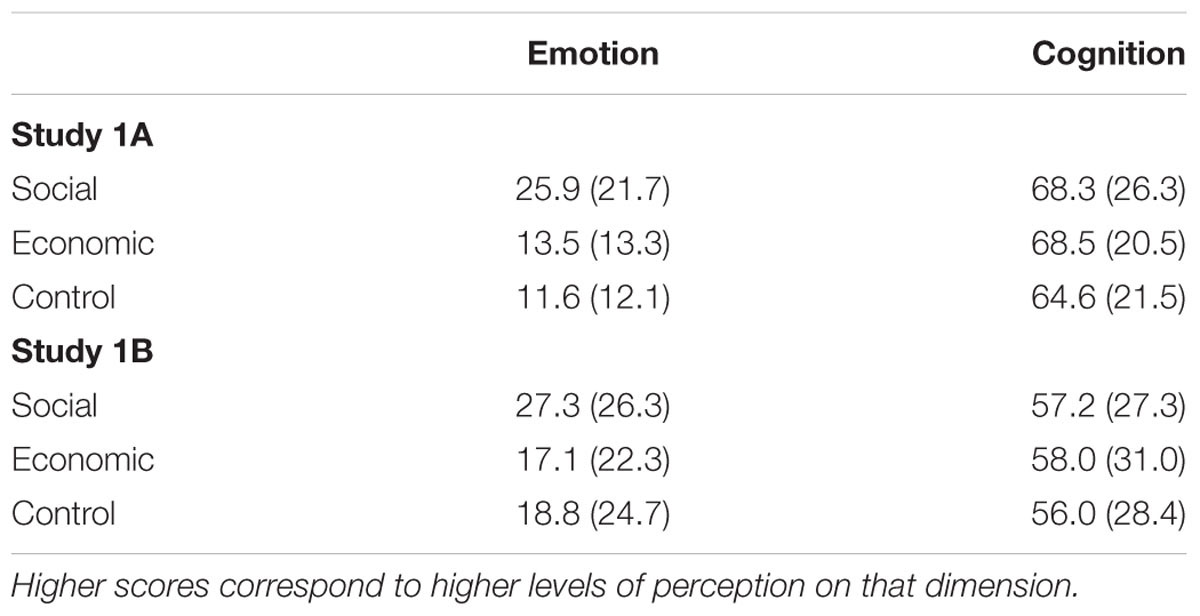

A significant interaction between mind dimension and value type emerged, F(2,148) = 4.44, p = 0.013, ηp² = 0.057. Pairwise comparisons revealed that the ascribed capacity for emotional experience was significantly higher for robots with social value than for those with economic value (Mdifference = 12.4, p = 0.001) and those in the control group (Mdifference = 14.3, p < 0.001), with no significant difference between the latter two conditions, p = 0.918 (see Table 1). In contrast, perceived cognition did not differ as a function of value type, ps ≥ 0.792.
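The effect size reported with each F test (e.g., 0.057 for the interaction above) can be recovered from the F ratio and its degrees of freedom as partial eta squared, ηp² = F·df1 / (F·df1 + df2); here 4.44 × 2 / (4.44 × 2 + 148) ≈ 0.057. The sketch below, with a hypothetical helper name, applies this identity to several tests reported in this paper as a quick consistency check.

```python
def partial_eta_squared(f_value: float, df_effect: float, df_error: float) -> float:
    """Partial eta squared from an F ratio and its degrees of freedom.

    eta_p^2 = SS_effect / (SS_effect + SS_error) = F*df1 / (F*df1 + df2).
    Scaling both dfs by the same factor (as a sphericity correction does)
    leaves the result unchanged.
    """
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# (F, df1, df2) for univariate tests reported in the text
reported_tests = {
    "Study 1A interaction": (4.44, 2, 148),
    "Study 1B interaction": (3.82, 2, 98),
    "Study 2 three-way": (4.81, 2, 340),
    "Study 2 emotion, type x level": (5.19, 1.91, 325),
    "Study 3 harm, value level": (166, 1, 109),
}
for name, (f, df1, df2) in reported_tests.items():
    print(f"{name}: partial eta^2 = {partial_eta_squared(f, df1, df2):.3f}")
```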

As predicted, knowledge about a robot’s proposed function moderated judgments of mind. Specifically, assigning social value to robots increased levels of perceived emotion. By contrast, attributions of cognition did not vary with value type, and robots with an economic function were perceived similarly to those whose function was not mentioned explicitly, suggesting that robots are predominantly considered to serve an economic rather than a social function.

Study 1B

Study 1b aimed to replicate the findings of the first study using a within-subjects design. In addition, we wanted to describe the robots’ social and economic value more directly. That is, instead of letting participants infer a robot’s value from its proposed function, we stated explicitly that the robots were created to provide social support and companionship (i.e., social value) or financial benefits and corporate profits (i.e., economic value).

Method

Participants

Fifty participants (30 women, Mage = 27.4, SD = 6.86, 84% Caucasian, 6% Black, and 10% others) were recruited from public areas in central London (i.e., public libraries and cafés) and took part in the study on a voluntary basis. The experimental design included value type (economic vs. social vs. control) as a within-subjects variable. The study was conducted with ethical approval from the Department of Experimental Psychology at University College London, United Kingdom.

Material and Procedure

The same text-based descriptions as in Study 1a served as profile information about the robots. In order to assign economic versus social value, these profile descriptions were combined with information about the robot’s corresponding function: “According to a recent business (social) analysis, this robot is predicted to be of profound economic (social) value. By economic (social) value, we mean the expected financial benefits and corporate profits (social support and companionship) they are going to bring to the corporate world (human society).” In the control condition, no further information about the robot’s function was provided besides its basic profile description.

The study was completed on paper. After providing informed consent, participants were presented with text-based descriptions of the three types of robots (economic, social, and control) which they had to rate on two dimensions of mind: (a) emotion (the capacity to experience emotions, have feelings, and be emotional, αs ≥ 0.89) and (b) cognition (the capacity to exercise self-control, think analytically, and be rational, αs ≥ 0.82). To distract participants from the aims of the study, they also evaluated each target on several filler items which were identical to those used in the previous study. All responses were made on 100-point scales ranging from 0 (not at all) to 100 (very much). To avoid any carryover effects, the robot in the control condition was always presented first. The order of the two experimental conditions (economic vs. social) was counterbalanced across participants.

Results and Discussion

Mean scores for emotion and cognition were computed by combining the three items of each measure into a single score. A repeated measures ANOVA was performed with value type (economic vs. social vs. control) and mind dimension (emotion vs. cognition) as within-subjects factors. Replicating the finding of Study 1A, a significant interaction between value type and mind dimension was obtained, F(2,98) = 3.82, p = 0.025, ηp² = 0.072. Pairwise comparisons showed that ratings of emotion were significantly higher for robots with social value than for those with economic value (Mdifference = 10.2, p = 0.010) and those in the control group (Mdifference = 8.5, p = 0.014, see Table 1), with no significant difference between the latter two conditions, p = 0.628. In contrast, perceived cognition did not differ significantly among the three value types, ps ≥ 0.456. Thus, as in the first study, social value positively influenced a robot’s perceived capacity for emotional experience, but not for higher order cognition.

Study 2

The aim of Study 2 was to replicate the findings of Study 1 without providing any text-based information about the robots’ basic abilities (e.g., the ability to learn various movements). To this end, we used visual stimuli, kept constant across conditions, as the basic profile information. In addition, we tested for a dissociation in people’s beliefs between the function of a robot and its type of mind. For this, robots were made to either lack or gain value by assigning high vs. low economic and social functionality. If the two types of function convey distinct values (i.e., efficiency and productivity vs. caring and communality), attributions of cognition and emotion should be affected differently. Based on the finding that robots are by default considered to be of economic value (i.e., high economic value) but not of social value (i.e., low social value), we hypothesized that losing economic value (i.e., low vs. control) would reduce ascribed cognition. In contrast, gaining social value (i.e., high vs. control) should increase emotion perception.

Method

Participants

One hundred and seventy-two participants (78 women, Mage = 23.9, SD = 6.26, 79% Caucasian, 6% Asian, and 15% others) were recruited online through a participant testing platform. All participants were fluent English speakers and took part in the study on a voluntary basis. The two-factor experimental design included value type (economic vs. social) as a between-subjects variable and value level (high vs. low vs. control) as a within-subjects variable. Participants were randomly assigned to one of the two value type conditions, resulting in eighty-six people in each group. The study was conducted with ethical approval from the Department of Experimental Psychology at University College London, United Kingdom.

Material and Procedure

Color images of three robots, displaying neutral expressions with direct gaze, were chosen from publicly accessible Internet sources. Images were edited and cropped using Adobe Photoshop so that only the head and shoulders of the robots remained visible. In addition, we centered the head and created a uniform white background. Images measured 300 × 300 pixels.

To assign economic versus social value, we created text-based descriptions of the robot’s corresponding function that also systematically differed in value level. In the economic condition, it was stated that "According to a recent business report, this robot is predicted to be of high (low) economic value. By economic value, we mean the expected financial benefits and corporate profits they are going to bring to the corporate world." In the social condition, it was stated that "According to a recent social report, this robot is predicted to be of high (low) social value. By social value, we mean the expected social support and companionship they are going to bring to the human society." In the control condition, no textual description of the robot was provided.

The study was conducted online using Qualtrics software (Provo, UT, United States). After providing informed consent, participants were presented with images together with text-based descriptions of three robots that differed in their value level (high, low, and control) within the same value type. The matching between visuals and text was counterbalanced, so that each robot image was equally likely to appear with each value level description across participants. For each stimulus target, participants provided explicit evaluations on two dimensions of mind: (a) emotion (the capacity to experience emotions, have feelings, and be emotional, αs ≥ 0.89) and (b) cognition (the capacity to exercise self-control, think analytically, and be rational, αs ≥ 0.85). As in the previous studies, filler items were included to distract participants from the aims of the study. All responses were made on 100-point scales ranging from 0 (not at all) to 100 (very much). To avoid any carryover effects, the robot in the control condition was always presented first. The order of the two experimental conditions (high value, low value) was randomized.

Results and Discussion

Mean values for emotion and cognition were computed by combining the three items of each measure into a single score. A repeated measures ANOVA with value level (high vs. low vs. control) and mind dimension (emotion vs. cognition) as within-subjects factors, and value type (economic vs. social) as a between-subjects factor was conducted.

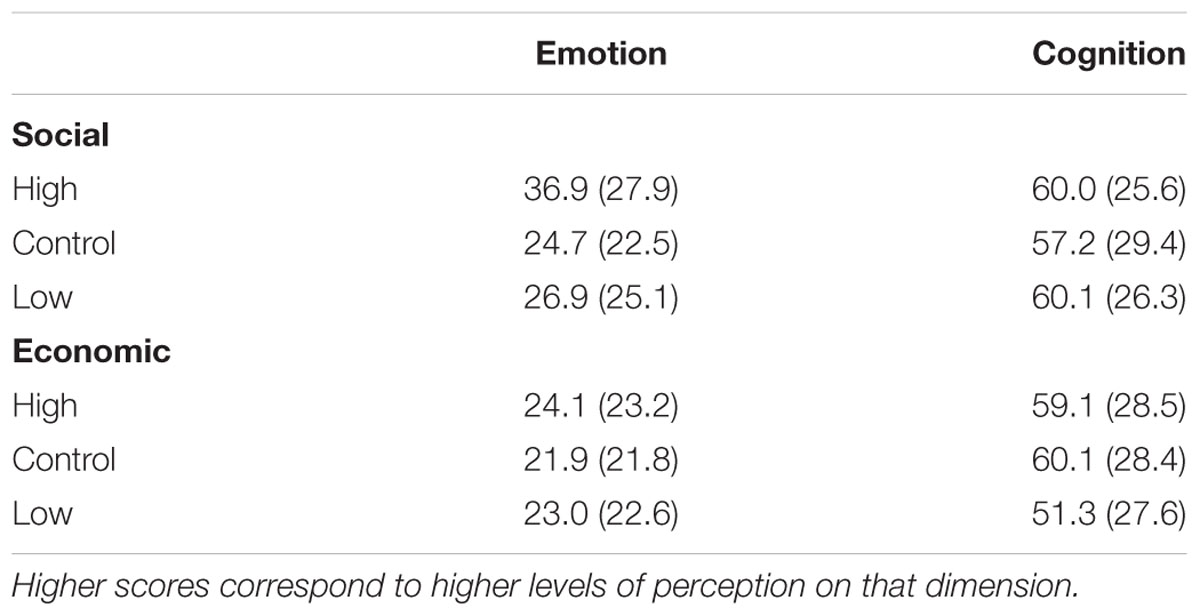

Importantly, a significant three-way interaction between value level, value type, and mind dimension emerged, F(2,340) = 4.81, p = 0.009, ηp² = 0.028. Specifically, for ratings of emotion, the interaction between value type and value level was significant, F(1.91,325) = 5.19, p = 0.007, ηp² = 0.030. Further pairwise comparisons showed that the ascribed capacity for emotional experience was greater for robots with high social value than for those with low social value (Mdifference = 10.0, p < 0.001, see Table 2) and those in the control group (Mdifference = 12.2, p < 0.001), with no significant difference between the latter two conditions, p = 0.313. Perceptions of emotion were unaffected by the level of a robot’s economic value, ps ≥ 0.342.

For ratings of cognition, the interaction between value type and value level was also significant, F(1.97,335) = 4.13, p = 0.017, ηp² = 0.024, but the pattern differed from that for emotion. Specifically, attributions of cognitive ability were lower for robots with low economic value than for those with high economic value (Mdifference = -7.8, p = 0.005, see Table 2) and those in the control group (Mdifference = -8.8, p = 0.004), with no significant difference between the latter two conditions, p = 0.740. Perceptions of cognition were unaffected by the level of a robot’s social value, ps ≥ 0.313.
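The fractional degrees of freedom in the two simple interactions (1.91 and 1.97 rather than 2) suggest that a sphericity correction was applied to the three-level value factor; the text does not say which one. Under a Greenhouse-Geisser correction (an assumption here; Huynh-Feldt is a common alternative), both degrees of freedom are multiplied by an estimate ε derived from the covariance matrix of the repeated measures: ε ≈ 1.91 / 2 ≈ 0.955 gives 0.955 × 340 ≈ 325. The sketch below computes ε from a single covariance matrix; in the mixed design of Study 2 the matrix would be pooled within the two value-type groups. Function names and the simulated data are hypothetical.

```python
import numpy as np


def greenhouse_geisser_epsilon(cov: np.ndarray) -> float:
    """Greenhouse-Geisser epsilon from a k x k covariance matrix of repeated measures.

    Uses the double-centred covariance matrix H S H, whose non-zero eigenvalues
    equal those of the covariance of any k-1 orthonormal contrasts.
    """
    cov = np.asarray(cov, dtype=float)
    k = cov.shape[0]
    centre = np.eye(k) - np.ones((k, k)) / k  # centring matrix H
    s = centre @ cov @ centre                 # H S H
    return np.trace(s) ** 2 / ((k - 1) * np.trace(s @ s))


def corrected_df(epsilon: float, df_effect: float, df_error: float) -> tuple[float, float]:
    """Scale both degrees of freedom by epsilon."""
    return epsilon * df_effect, epsilon * df_error


# Hypothetical data: 50 participants x 3 value levels (high, low, control)
rng = np.random.default_rng(1)
scores = rng.normal(50, 15, size=(50, 3))
eps = greenhouse_geisser_epsilon(np.cov(scores, rowvar=False))
print(f"epsilon = {eps:.3f}, corrected dfs = {corrected_df(eps, 2, 340)}")
```

ε ranges from 1/(k − 1) (maximal violation) to 1 (sphericity holds), so with three levels the corrected numerator df lies between 1 and 2.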

Replicating the findings of Studies 1a and 1b, robots with (high) social value were also ascribed greater emotional ability than those with (high) economic value (Mdifference = 12.8, p = 0.001, see Table 2). No such difference between value types was observed for attributions of cognitive ability, p = 0.829.

In sum, the social value of robots significantly increased emotion judgments, consistent with the previous findings. In addition, there was a dissociation between the perceived function of a robot and its type of mind: while losing economic value diminished ratings of cognition, gaining social value increased perceptions of emotional experience.

Study 3

Study 3 aimed to replicate the finding of Study 2 that gaining social value increases perceived emotional experience. In addition, it explored the moral consequences, namely whether intentions to harm differ with the type (economic vs. social) and level (high vs. low) of a robot’s value. If perceptions of experience are tied to the attribution of moral rights, robots with high social value should be more likely to be afforded protection from harm (Gray et al., 2012).

Method

Participants

One hundred and ten participants (35 women, Mage = 23.5, SD = 6.31, 82% Caucasian, 8% Asian, and 10% others) were recruited online through a participant testing platform. All participants were fluent English speakers and took part in the study on a voluntary basis. The two-factor experimental design included value type (economic vs. social) and value level (high vs. low) as within-subjects variables. The study was conducted with ethical approval from the Department of Experimental Psychology at University College London, United Kingdom.

Material and Procedure

The same four text-based descriptions as in Study 2 were used to assign high vs. low economic and social value to robots.

The study was conducted online using Qualtrics software (Provo, UT, United States). After providing informed consent, participants were instructed to imagine a hypothetical scenario in which an electromagnetic wave was going to be generated in the lab. They were told that they could save three out of the four robots from the undesirable electromagnetic shock by placing them into a shielded room. The four robots systematically differed in their value level (high, low) and value type (economic, social) as described in the vignettes.

To measure (1) the intention to harm, participants indicated for each robot how willing they were to sacrifice it, letting it receive the shock in order to spare the other three. To measure (2) the perception of emotion, they further indicated how much pain the robot would feel and how sad it would be if it were to receive a shock (αs ≥ 0.92). All responses were made on seven-point scales ranging from 0 (not at all) to 7 (very much). The presentation order of the four robot descriptions was randomized.

Results and Discussion

A repeated measures MANOVA with value level (high vs. low) and value type (economic vs. social) as within-subjects factors was performed on the measures of emotion and harm. First, the multivariate interaction between value type and value level was highly significant, F(2,108) = 22.3, p < 0.001, ηp² = 0.292.

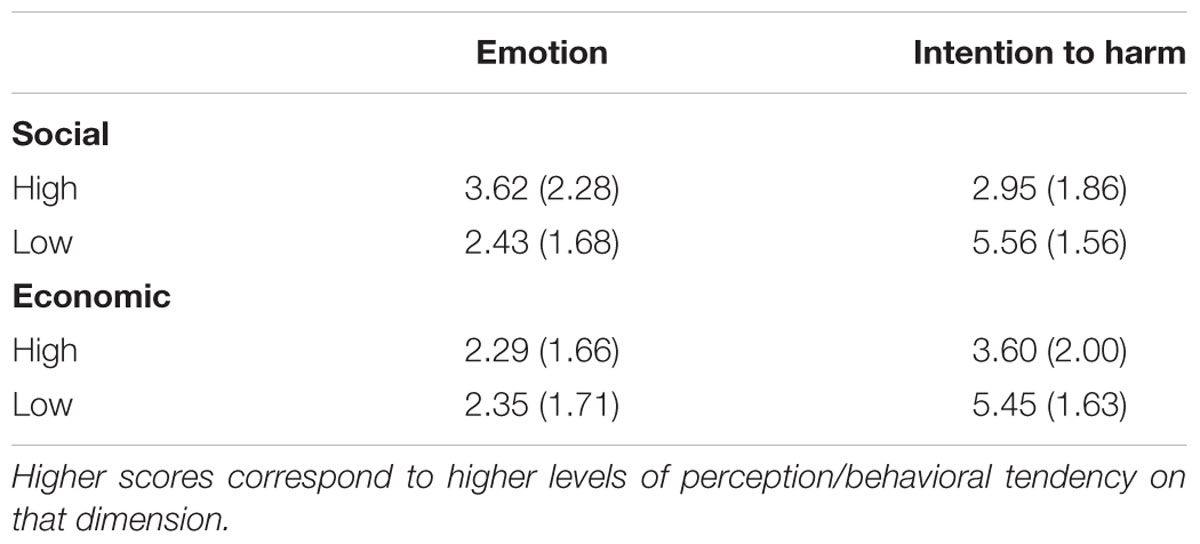

Regarding ratings of emotion, a significant interaction between value type and value level was obtained, F(1,109) = 35.7, p < 0.001, ηp2 = 0.247. Replicating the findings of Studies 1 and 2, perceived capacity for emotional experience was higher for robots with high social value than for each of the other three robot types (Mdifferences = 1.19, 1.33, and 1.27; see Table 3), ts(109) ≥ 7.01, ps ≤ 0.001, ds ≥ 1.34. Ratings for those other three robot types did not differ significantly from each other, |ts(109)| ≤ 1.06, ps ≥ 0.293.
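The follow-up comparisons in this section are paired-sample t-tests: each participant rated every robot type, so the test operates on within-person difference scores, t = mean(D) / (SD(D)/√n) with df = n − 1. A minimal sketch using only the Python standard library follows; the emotion ratings and the function name are hypothetical and serve only to show the computation.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-sample t statistic and degrees of freedom for two equal-length rating lists."""
    if len(x) != len(y):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(x, y)]  # one difference score per participant
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Hypothetical emotion ratings (0-7) from five participants, not study data
high_social = [4, 5, 3, 6, 4]
high_economic = [2, 4, 2, 4, 3]
t, df = paired_t(high_social, high_economic)
print(f"t({df}) = {t:.2f}")  # t(4) = 5.72
```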

Regarding intentions to harm, a main effect of value level, F(1,109) = 166, p < 0.001, ηp2 = 0.604, showed that people were less willing to sacrifice robots to the shock when they were of high value (M = 3.27) than of low value (M = 5.51). In addition, the interaction between value type and value level was significant, F(1,109) = 6.97, p = 0.010, ηp2 = 0.060. Paired-sample t-tests further revealed that participants' intention to harm was lowest for robots with high social value compared with each of the other three robot types (Mdifferences = 0.65, 2.50, and 2.61; see Table 3), ts(109) ≥ 2.87, ps ≤ 0.005, ds ≥ 0.55.
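The section does not state how d was derived for the paired comparisons. Two conversions from a paired t statistic are common: dz = t/√n, which standardizes by the SD of the difference scores, and d = 2t/√df, the formula usually applied to independent-groups t tests. As an arithmetic check on our part (an inference, not the authors' documented method), the reported lower bounds ds ≥ 1.34 and ds ≥ 0.55 match the second conversion for t(109) = 7.01 and t(109) = 2.87, whereas dz would give roughly 0.67 and 0.27. The function names below are hypothetical.

```python
import math


def d_from_t_two_group(t: float, df: int) -> float:
    """d = 2t / sqrt(df), the conversion usually applied to independent-groups t tests."""
    return 2 * t / math.sqrt(df)


def d_z_from_paired_t(t: float, n: int) -> float:
    """d_z = t / sqrt(n), the standardized mean of paired difference scores."""
    return t / math.sqrt(n)


n = 110  # participants in Study 3
for t in (7.01, 2.87):  # smallest reported t values for the emotion and harm contrasts
    print(f"t({n - 1}) = {t}: 2t/sqrt(df) = {d_from_t_two_group(t, n - 1):.2f}, "
          f"t/sqrt(n) = {d_z_from_paired_t(t, n):.2f}")
```

For the same t, 2t/√df is slightly more than twice dz, so comparisons with effect sizes from other within-subjects studies depend on which index those studies report.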

Correlational analysis confirmed a significant association between emotion ratings and intentions to harm, r(440) = -0.331, p < 0.001: the higher the level of ascribed emotional experience, the lower the inclination to endorse harm, which is consistent with findings by Gray and Wegner (2009). High social value therefore appeared to confer the status of a moral patient: robots with high social value were seen as more capable of emotional experience and as more deserving of protection from harm.
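The significance of a Pearson correlation is commonly assessed with t = r√(n − 2) / √(1 − r²) on n − 2 degrees of freedom. The sketch below applies this to the reported r = −0.331 under an assumption suggested by the reported 440 observations (110 participants × 4 robots): that every participant-by-robot rating was entered as a separate pair. Because the four ratings from one participant are not independent, this is an approximation rather than a reanalysis; the function name is hypothetical.

```python
import math


def correlation_t(r: float, n: int) -> float:
    """t statistic for testing H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)


n_pairs = 110 * 4  # assumption: every participant-by-robot rating treated as one pair
t = correlation_t(-0.331, n_pairs)
print(f"t({n_pairs - 2}) = {t:.2f}")  # t(438) = -7.34
```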

General Discussion

Ever since their creation, robots have been considered a non-human species, developed to fulfill users' goals without being deemed worthy of equal moral concern (e.g., Kim and Kim, 2013). This may stem from the fact that they are ascribed humanlike cognition, but not emotional capacity (e.g., Gray and Wegner, 2009, 2012). The current research aimed to explore whether the proposed function of robots, i.e., economic vs. social, shapes ascriptions of mind and, in particular, of emotional experience. In addition, we examined the downstream consequences of robots' function for their moral treatment.

Studies 1a and 1b demonstrated that robots with social function received higher ratings of emotion, but not cognition, compared to those with economic function and those whose function was not mentioned explicitly. Study 2 replicated the findings of the first two studies by showing that robots with (high) social function were assigned greater levels of emotion than those with (high) economic function. In addition, we demonstrated that low economic value led to lower ascriptions of cognitive capacity, whereas high social value resulted in increased emotion perception. Finally, Study 3 revealed that robots with high social value were more likely to be afforded protection from harm, suggesting that they gain rights as moral patients. Such moral concern was significantly associated with their levels of ascribed emotional experience.

Together, the findings have important theoretical and practical implications. First, consistent with prior findings, the current research demonstrates that mind perception is an intuitive and subjective process (e.g., Epley et al., 2007; Wang and Krumhuber, 2017b), and that it is a two-dimensional phenomenon, consisting of emotion/experience and higher-order cognition/agency (Gray et al., 2007; Złotowski et al., 2014). Crucially, such perception depends on the proposed function of a robot (social vs. economic). While previous studies on human-robot interaction have focused on the importance of humanlike form and behavior (e.g., Powers and Kiesler, 2006; Hegel et al., 2008; Krach et al., 2008; Riek et al., 2009), the present research demonstrates that distinct functions can elicit different inferences of mental ability.

Furthermore, the results reveal a dissociation between the robot's function and the type of mind ascribed to it. While an economic function implies cognitive skills, it is the social function that makes robots capable of experiencing emotions in the eyes of the observer. This evidence is consistent with prior studies on human targets (Fiske et al., 2002; Loughnan and Haslam, 2007; Aaker et al., 2010) showing that levels of ascribed cognition/competence and emotion/warmth can diverge within the same entity. Thus, people seem to apply similar heuristics in response to humans and robots (e.g., Nass and Lee, 2001).

Because of the traditional role of robots in industrial settings, the existing gap in mind perception between humans and robots can largely be explained by the perceived lack of emotions and fundamental experiences (e.g., Haslam, 2006; Gray and Wegner, 2012). For example, Eyssel et al. (2010) showed that anthropomorphic inferences about robots increased with their ability to express emotions. Similarly, Złotowski et al. (2014) found that perceived emotionality, but not intelligence, contributed to the ascription of human traits to robots. Equipping robots with emotional skills, thereby highlighting their social value, could make them more humanlike and evoke empathic responses. Given that moral concern is associated with perceived experience, but not agency (Gray et al., 2012), it is social value that confers moral rights and patiency, as shown in the present research. This evidence is particularly timely in the context of recent discussions about the legal protection of robots (Darling, 2014). Although robots will never be fully sentient as defined by biological principles, the moral standing of those possessing social value will likely differ from that of robots fulfilling economic needs (Coeckelbergh, 2009).

Despite the importance of these findings, some limitations of the current research are worth pointing out, as they could serve as avenues for future studies. Although robots' social value acts as a critical moderator of people's willingness to ascribe emotions, such perceived emotion is still much lower than the level of ascribed cognition. Assigning social value to robots therefore seems to increase their perceived emotional capacity only to a limited extent. This could be due to the fact that we employed only minimal manipulations in the current research, i.e., simple textual descriptions of robots' function type. Future studies in human–computer interaction could let participants interact directly with robots that have been built to fulfill economic and social needs, respectively.

Furthermore, it would be interesting to examine behavioral responses to robots of diverse functionality. Beliefs about a target's mind have been shown to affect various outcomes, including likeability, social attention, joint-task performance, and emotional attachment (e.g., de Melo et al., 2013; Wykowska et al., 2014; Martini et al., 2016). With the rapid rise of social robots in society, future studies could test whether the emotions ascribed to robots based on their social value influence people's tendency to trust and cooperate with them. While initial responses could involve feelings of uneasiness and discomfort (e.g., Gray and Wegner, 2012; Waytz and Norton, 2014; Wang and Krumhuber, 2017a) due to infrequent exposure to robots of this kind (Kätsyri et al., 2015), the social nature of robots may act as the basis for human bonding and attachment at a later stage (Dryer, 1999; Darling, 2014).

Ethics Statement

All subjects gave informed consent in accordance with the Declaration of Helsinki. The protocol was approved by the Department of Experimental Psychology at University College London, United Kingdom.

Author Contributions

XW and EK conceived the presented idea. XW carried out the experiments and wrote the manuscript with support from EK.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2018.01230/full#supplementary-material

Footnotes

- ^ Further principal component analysis (PCA) showed that the four robots with assigned social value loaded on one factor, while the robots with assigned economic value loaded on the other, explaining 65.6% of the variance in combination, KMO = 0.68, Bartlett's test of sphericity, χ2(28) = 196, p < 0.001.

- ^ Further principal component analysis (PCA) showed that the three items measuring emotion loaded on one factor, while the three items measuring cognition loaded on the other, explaining 84.5% of the variance in combination, KMO = 0.76, Bartlett's test of sphericity, χ2(15) = 3507, p < 0.001.
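Bartlett's test of sphericity, reported in both footnotes, asks whether the correlation matrix R of the rated variables differs from an identity matrix. Its usual statistic is χ2 = −(n − 1 − (2p + 5)/6)·ln|R| with p(p − 1)/2 degrees of freedom, where p is the number of variables; p = 8 robots and p = 6 mind items give the 28 and 15 degrees of freedom reported above. The NumPy sketch below is illustrative only: the function name is hypothetical and the simulated ratings are not the study's data.

```python
import numpy as np


def bartlett_sphericity(data: np.ndarray) -> tuple[float, int]:
    """Bartlett's test of sphericity for an (n observations x p variables) array.

    Returns the chi-square statistic and its degrees of freedom.
    """
    n, p = data.shape
    corr = np.corrcoef(data, rowvar=False)  # p x p correlation matrix
    sign, logdet = np.linalg.slogdet(corr)  # log-determinant, numerically stable
    if sign <= 0:
        raise ValueError("correlation matrix must be positive definite")
    chi2 = -(n - 1 - (2 * p + 5) / 6) * logdet
    df = p * (p - 1) // 2
    return chi2, df


# Simulated ratings: two blocks of three correlated items each (not study data)
rng = np.random.default_rng(0)
factor_a = rng.normal(size=(200, 1))
factor_b = rng.normal(size=(200, 1))
items = np.hstack([factor_a + 0.5 * rng.normal(size=(200, 3)),
                   factor_b + 0.5 * rng.normal(size=(200, 3))])
chi2, df = bartlett_sphericity(items)
print(f"chi2({df}) = {chi2:.1f}")
```

A p value can then be taken from the upper tail of the chi-square distribution with df degrees of freedom (for example, with SciPy's `scipy.stats.chi2.sf`); a large statistic indicates that the variables are correlated enough for a component analysis to be meaningful.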

References

Aaker, J., Vohs, K. D., and Mogilner, C. (2010). Nonprofits are seen as warm and for-profits as competent: firm stereotypes matter. J. Consum. Res. 37, 224–237. doi: 10.1086/651566

Airenti, G. (2015). The cognitive bases of anthropomorphism: from relatedness to empathy. Int. J. Soc. Robotics 7, 117–127. doi: 10.1007/s12369-014-0263-x

Bartneck, C., Croft, E., and Kulic, D. (2008). “Measuring the anthropomorphism, animacy, likeability, perceived intelligence and perceived safety of robots,” in Metrics for HRI Workshop, Technical Report, Vol. 471, Amsterdam, 37–44.

Bekey, G. A. (2012). “Current trends in robotics: technology and ethics,” in Robot Ethics: The Ethical and Social Implications of Robotics, eds P. Lin, K. Abney, and G. A. Bekey (Cambridge, MA: MIT Press), 17–34.

Chandler, J., and Schwarz, N. (2010). Use does not wear ragged the fabric of friendship: thinking of objects as alive makes people less willing to replace them. J. Consum. Psychol. 20, 138–145. doi: 10.1016/j.jcps.2009.12.008

Coeckelbergh, M. (2009). Virtual moral agency, virtual moral responsibility: on the moral significance of the appearance, perception, and performance of artificial agents. AI Soc. 24, 181–189. doi: 10.1007/s00146-009-0208-3

Cuddy, A. J., Fiske, S. T., and Glick, P. (2008). Warmth and competence as universal dimensions of social perception: the stereotype content model and the BIAS map. Adv. Exp. Soc. Psychol. 40, 61–149. doi: 10.1016/S0065-2601(07)00002-0

Dahl, T. S., and Boulos, M. N. K. (2013). Robots in health and social care: a complementary technology to home care and telehealthcare? Robotics 3, 1–21. doi: 10.3390/robotics3010001

Darling, K. (2014). “Extending legal protection to social robots: the effects of anthropomorphism, empathy, and violent behavior towards robotic objects,” in Robot Law, eds R. Calo, A. M. Froomkin, and I. Kerr (Cheltenham: Edward Elgar), 212–232.

Dator, J. (2007). “Religion and war in the 21st century,” in Tenri Daigaku Chiiki Bunka Kenyu Center, ed. Senso, Shukyo, Heiwa [War, Religion, Peace], Tenri Daigaku 80 Shunen Kinen [Tenri University 80th anniversary celebration] (Tenri: Tenri Daigaku), 34–51.

Dautenhahn, K. (2007). Socially intelligent robots: dimensions of human–robot interaction. Philos. Trans. R. Soc. Lond. B Biol. Sci. 362, 679–704. doi: 10.1098/rstb.2006.2004

de Melo, C. M., Gratch, J., and Carnevale, P. J. (2013). “The effect of agency on the impact of emotion expressions on people’s decision making,” in Proceedings of the 2013 Humaine Association Conference on Affective Computing and Intelligent Interaction (ACII), (Piscataway, NJ: IEEE), 546–551.

DiSalvo, C. F., Gemperle, F., Forlizzi, J., and Kiesler, S. (2002). “All robots are not created equal: the design and perception of humanoid robot heads,” in Proceedings of the 4th Conference on Designing Interactive Systems: Processes, Practices, Methods, and Techniques (New York, NY: ACM), 321–326.

Dryer, D. C. (1999). Getting personal with computers: how to design personalities for agents. Appl. Artif. Intell. 13, 273–295. doi: 10.1080/088395199117423

Duffy, B. R. (2003). Anthropomorphism and the social robot. Robotics Auton. Syst. 42, 177–190. doi: 10.1016/S0921-8890(02)00374-3

Eagly, A. H., and Wood, W. (2011). Feminism and the evolution of sex differences and similarities. Sex Roles 64, 758–767. doi: 10.1007/s11199-011-9949-9

Epley, N., Waytz, A., and Cacioppo, J. T. (2007). On seeing human: a three-factor theory of anthropomorphism. Psychol. Rev. 114, 864–886. doi: 10.1037/0033-295X.114.4.864

Eyssel, F., Hegel, F., Horstmann, G., and Wagner, C. (2010). “Anthropomorphic inferences from emotional nonverbal cues: a case study,” in Proceedings of the 19th IEEE International Symposium in Robot and Human Interactive Communication (RO-MAN 2010) (Viareggio: IEEE), 646–651.

Faul, F., Erdfelder, E., Lang, A. G., and Buchner, A. (2007). G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191. doi: 10.3758/BF03193146

Fiske, A. P. (1992). The four elementary forms of sociality: framework for a unified theory of social relations. Psychol. Rev. 99, 689–723. doi: 10.1037/0033-295X.99.4.689

Fiske, S. T., Cuddy, A. J., and Glick, P. (2007). Universal dimensions of social cognition: warmth and competence. Trends Cogn. Sci. 11, 77–83. doi: 10.1016/j.tics.2006.11.005

Fiske, S. T., Cuddy, A. J., Glick, P., and Xu, J. (2002). A model of (often mixed) stereotype content: competence and warmth respectively follow from perceived status and competition. J. Pers. Soc. Psychol. 82, 878–902. doi: 10.1037/0022-3514.82.6.878

Friedman, B., Kahn, P. H. Jr., and Hagman, J. (2003). “Hardware companions?: What online AIBO discussion forums reveal about the human-robotic relationship,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, (New York, NY: ACM), 273–280.

Gates, B. (2007). A robot in every home. Sci. Am. 296, 58–65. doi: 10.1038/scientificamerican0107-58

Gaudiello, I., Lefort, S., and Zibetti, E. (2015). The ontological and functional status of robots: How firm our representations are? Comput. Hum. Behav. 50, 259–273. doi: 10.1016/j.chb.2015.03.060

Graf, B., Reiser, U., Hägele, M., Mauz, K., and Klein, P. (2009). “Robotic home assistant Care-O-bot® 3-product vision and innovation platform,” in Proceedings of the 2009 IEEE Workshop on Advanced Robotics and its Social Impacts (ARSO), (Piscataway, NJ: IEEE), 139–144.

Gray, H. M., Gray, K., and Wegner, D. M. (2007). Dimensions of mind perception. Science 315, 619–619. doi: 10.1126/science.1134475

Gray, K., and Wegner, D. M. (2009). Moral typecasting: divergent perceptions of moral agents and moral patients. J. Pers. Soc. Psychol. 96, 505–520. doi: 10.1037/a0013748

Gray, K., and Wegner, D. M. (2012). Feeling robots and human zombies: mind perception and the uncanny valley. Cognition 125, 125–130. doi: 10.1016/j.cognition.2012.06.007

Gray, K., Young, L., and Waytz, A. (2012). Mind perception is the essence of morality. Psychol. Inq. 23, 101–124. doi: 10.1080/1047840X.2012.651387

Haslam, N. (2006). Dehumanization: an integrative review. Pers. Soc. Psychol. Rev. 10, 252–264. doi: 10.1207/s15327957pspr1003_4

Haslam, N., Loughnan, S., Kashima, Y., and Bain, P. (2008). Attributing and denying humanness to others. Eur. Rev. Soc. Psychol. 19, 55–85. doi: 10.1080/10463280801981645

Hegel, F., Krach, S., Kircher, T., Wrede, B., and Sagerer, G. (2008). “Understanding social robots: a user study on anthropomorphism,” in Proceedings of the 17th IEEE International Symposium on Robot and Human Interactive Communication, 2008 (Piscataway, NJ: IEEE), 574–579.

Hoffman, C., and Hurst, N. (1990). Gender stereotypes: Perception or rationalization? J. Pers. Soc. Psychol. 58, 197–208. doi: 10.1037/0022-3514.58.2.197

Kaplan, F. (2005). “Everyday robotics: robots as everyday objects,” in Proceedings of the 2005 Joint Conference on Smart Objects and Ambient Intelligence: Innovative Context-Aware Services: Usages and Technologies, (New York, NY: ACM), 59–64.

Kätsyri, J., Förger, K., Mäkäräinen, M., and Takala, T. (2015). A review of empirical evidence on different uncanny valley hypotheses: support for perceptual mismatch as one road to the valley of eeriness. Front. Psychol. 6:390. doi: 10.3389/fpsyg.2015.00390

Kiesler, S., and Goetz, J. (2002). “Mental models of robotic assistants,” in Proceedings of the CHI’02 Extended Abstracts on Human Factors in Computing Systems, (New York, NY: ACM), 576–577.

Kim, M. S., and Kim, E. J. (2013). Humanoid robots as “The Cultural Other”: are we able to love our creations? AI Soc. 28, 309–318. doi: 10.1007/s00146-012-0397-z

Koda, T., and Maes, P. (1996). “Agents with faces: The effect of personification,” in Proceedings of the 1996, 5th IEEE International Workshop on Robot and Human Communication (Viareggio: IEEE), 189–194.

Krach, S., Hegel, F., Wrede, B., Sagerer, G., Binkofski, F., and Kircher, T. (2008). Can machines think? Interaction and perspective taking with robots investigated via fMRI. PLoS One 3:e2597. doi: 10.1371/journal.pone.0002597

Levillain, F., and Zibetti, E. (2017). Behavioral objects: the rise of the evocative machines. J. Hum. Robot Interact. 6, 4–24. doi: 10.5898/JHRI.6.1.Levillain

Lin, P., Abney, K., and Bekey, G. A. (2011). Robot Ethics: The Ethical and Social Implications of Robotics. Cambridge, MA: MIT Press.

Lohse, M., Hegel, F., Swadzba, A., Rohlfing, K., Wachsmuth, S., and Wrede, B. (2007). “What can I do for you? Appearance and application of robots,” in Proceedings of the AISB, Vol. 7, Newcastle upon Tyne, 121–126.

Loughnan, S., and Haslam, N. (2007). Animals and androids: Implicit associations between social categories and nonhumans. Psychol. Sci. 18, 116–121. doi: 10.1111/j.1467-9280.2007.01858.x

Martini, M. C., Gonzalez, C. A., and Wiese, E. (2016). Seeing minds in others–Can agents with robotic appearance have human-like preferences? PLoS One 11:e0146310. doi: 10.1371/journal.pone.0146310

Nass, C., and Lee, K. M. (2001). Does computer-synthesized speech manifest personality? Experimental tests of recognition, similarity-attraction, and consistency-attraction. J. Exp. Psychol. Appl. 7, 171–181. doi: 10.1037/1076-898X.7.3.171

Nass, C., and Moon, Y. (2000). Machines and mindlessness: social responses to computers. J. Soc. Issues 56, 81–103. doi: 10.1111/0022-4537.00153

Nowak, K. L., Hamilton, M. A., and Hammond, C. C. (2009). The effect of image features on judgments of homophily, credibility, and intention to use as avatars in future interactions. Media Psychol. 12, 50–76. doi: 10.1080/15213260802669433

Pollack, M. E., Brown, L., Colbry, D., Orosz, C., Peintner, B., Ramakrishnan, S., et al. (2002). “Pearl: a mobile robotic assistant for the elderly,” in Proceedings of the AAAI Workshop on Automation as Eldercare (Menlo Park, CA: AAAI), 85–91.

Powers, A., and Kiesler, S. (2006). “The advisor robot: tracing people’s mental model from a robot’s physical attributes,” in Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction, (New York, NY: ACM), 218–225.

Reeves, B., and Nass, C. (1996). How People Treat Computers, Television, and New Media Like Real People and Places. (Vancouver: CSLI Publications), 3–18.

Riek, L. D., Rabinowitch, T. C., Chakrabarti, B., and Robinson, P. (2009). “How anthropomorphism affects empathy toward robots,” in Proceedings of the 4th ACM/IEEE International Conference on Human Robot Interaction, (New York, NY: ACM), 245–246.

Aldebaran Robotics (2010). Nao, the Ideal Partner for Research and Robotics Classrooms. Available at: www.aldebaran-robotics.com/en

Spence, J. T., Helmreich, R. L., and Holahan, C. K. (1979). Negative and positive components of psychological masculinity and femininity and their relationships to self-reports of neurotic and acting out behaviors. J. Pers. Soc. Psychol. 37, 1673–1682. doi: 10.1037/0022-3514.37.10.1673

Walker, J. H., Sproull, L., and Subramani, R. (1994). “Using a human face in an interface,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, (New York, NY: ACM), 85–91.

Walters, M. L., Syrdal, D. S., Dautenhahn, K., Te Boekhorst, R., and Koay, K. L. (2008). Avoiding the uncanny valley: robot appearance, personality and consistency of behavior in an attention-seeking home scenario for a robot companion. Auton. Robots 24, 159–178. doi: 10.1007/s10514-007-9058-3

Wang, X., and Krumhuber, E. G. (2017a). “Described robot functionality impacts emotion experience attributions,” in Proceedings of the Conference on the Study of Artificial Intelligence and Simulation of Behaviour (AISB), Bath, 282–283.

Wang, X., and Krumhuber, E. G. (2017b). The love of money results in objectification. Br. J. Soc. Psychol. 56, 354–372. doi: 10.1111/bjso.12158

Waytz, A., Gray, K., Epley, N., and Wegner, D. M. (2010). Causes and consequences of mind perception. Trends Cogn. Sci. 14, 383–388. doi: 10.1016/j.tics.2010.05.006

Waytz, A., and Norton, M. I. (2014). Botsourcing and outsourcing: robot, British, Chinese, and German workers are for thinking-not feeling-jobs. Emotion 14, 434–444. doi: 10.1037/a0036054

Willis, J., and Todorov, A. (2006). First impressions: making up your mind after a 100-ms exposure to a face. Psychol. Sci. 17, 592–598. doi: 10.1111/j.1467-9280.2006.01750.x

Wood, W., and Eagly, A. H. (2012). “Biosocial construction of sex differences and similarities in behavior,” in Advances in Experimental Social Psychology, Vol. 46, eds J. M. Olson and M. P. Zanna (Burlington, MA: Academic Press), 55–123.

Wykowska, A., Wiese, E., Prosser, A., and Müller, H. J. (2014). Beliefs about the minds of others influence how we process sensory information. PLoS One 9:e94339. doi: 10.1371/journal.pone.0094339

Ybarra, O., Chan, E., Park, H., Burnstein, E., Monin, B., and Stanik, C. (2008). Life’s recurring challenges and the fundamental dimensions: an integration and its implications for cultural differences and similarities. Eur. J. Soc. Psychol. 38, 1083–1092. doi: 10.1002/ejsp.559

Young, J. E., Hawkins, R., Sharlin, E., and Igarashi, T. (2009). Toward acceptable domestic robots: applying insights from social psychology. Int. J. Soc. Robotics 1, 95–108. doi: 10.1007/s12369-008-0006-y

Zawieska, K., Duffy, B. R., and Strońska, A. (2012). Understanding anthropomorphisation in social robotics. Pomiary Automatyka Robotyka 16, 78–82.

Zhao, S. (2006). Humanoid social robots as a medium of communication. New Media Soc. 8, 401–419. doi: 10.1177/1461444806061951

Keywords: robots, economic function, social function, mind perception, emotion, cognition, moral treatment

Citation: Wang X and Krumhuber EG (2018) Mind Perception of Robots Varies With Their Economic Versus Social Function. Front. Psychol. 9:1230. doi: 10.3389/fpsyg.2018.01230

Received: 21 December 2017; Accepted: 27 June 2018;

Published: 18 July 2018.

Edited by:

Mario Weick, University of Kent, United Kingdom

Reviewed by:

Guillermo B. Willis, Universidad de Granada, Spain

Jill Ann Jacobson, Queen’s University, Canada

Copyright © 2018 Wang and Krumhuber. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xijing Wang, xijing.wang.13@ucl.ac.uk
