- ¹Institute of Psychology, Polish Academy of Sciences, Warsaw, Poland

- ²Department of Experimental Psychology, University College London, London, United Kingdom

- ³School of Psychology, Queen’s University Belfast, Belfast, United Kingdom

Smiles are distinct and easily recognizable facial expressions, yet they differ markedly in their meanings. According to a recent theoretical account, smiles can be classified based on three fundamental social functions that they serve: expressing positive affect and rewarding self and others (reward smile), creating and maintaining social bonds (affiliative smile), and negotiating social status (dominance smile) (Niedenthal et al., 2010; Martin et al., 2017). While there is evidence for distinct morphological features of these smiles, their categorization in human faces has only begun to be investigated. Moreover, the factors influencing this process, such as facial mimicry or display mode, remain unknown. In the present study, we examine the recognition of reward, affiliative, and dominance smiles in static and dynamic portrayals, and explore how interfering with facial mimicry affects such classification. Participants (N = 190) were presented with either static or dynamic displays of the three smile types, while their ability to mimic was either free or restricted via a pen-in-mouth procedure. For each stimulus, they rated the extent to which the expression represented a reward, an affiliative, or a dominance smile. Above-chance accuracy rates revealed that participants were generally able to differentiate between the three smile types. In line with our predictions, recognition performance was lower in the static than in the dynamic condition, but this difference was only significant for affiliative smiles. No significant effects of facial muscle restriction were observed, suggesting that the ability to mimic might not be necessary for distinguishing between the three functional smiles. Together, our findings support previous evidence on reward, affiliative, and dominance smiles by documenting their perceptual distinctiveness. They also replicate extant observations on the dynamic advantage in expression perception and suggest that this effect may be especially pronounced for ambiguous facial expressions, such as affiliative smiles.

Introduction

A smile can be simply described as a contraction of the zygomaticus major, a facial muscle that pulls the lip corners up toward the cheekbones (Ekman and Friesen, 1982) and that Duchenne de Boulogne (1862/1990) named “a muscle of joy.” This characteristic movement makes the smile an easily recognizable facial expression. However, smiles can also be ambiguous in the meanings they convey and the functions they serve. Despite the association of smiles with positive feelings and intentions (Ekman et al., 1990), trust (Krumhuber et al., 2007), and readiness to help (Vrugt and Vet, 2009), smiles can also be displayed during unpleasant experiences, e.g., to hide negative feelings (Ekman and Friesen, 1982), and can be perceived as a signal of lower social status (Ruben et al., 2015) or intelligence (Krys et al., 2014). Smiling is therefore used in a wide variety of situations, depending on the context and on social norms learned through socialization and experience. Not only can the use of smiles and their social functions vary considerably (e.g., Szarota et al., 2010; Rychlowska et al., 2015), but the expression of a smile itself comes in many forms. This is because the contraction of the zygomaticus major muscle [defined as Action Unit (AU) 12 in the Facial Action Coding System; Ekman et al., 2002], the core feature of any smile expression, often involves the activation of other facial muscles, creating a range of possible variations. Ekman (2009), for example, identified and described 18 types of smiles, differentiated in terms of their appearance and the situations in which they are likely to occur. Moreover, AU12 can be accompanied by other AUs and thus convey emotions such as disgust or surprise (Du et al., 2014; Calvo et al., 2018).

Despite this variability, the most commonly used smile typology is the distinction between ‘true’/genuine and ‘fake’/false smiles, with the former being sincere displays of joy and amusement, and the latter being produced voluntarily, possibly to increase others’ trust and cooperation (Frank, 1988). True and false smiles can be distinguished on the basis of their morphology, namely the presence of supposedly involuntary eye constriction (AU6, the contraction of the orbicularis oculi muscle), a classic criterion based on early studies by Duchenne de Boulogne (1862/1990). Although the true vs. false smile typology is parsimonious and extensively documented in the literature, it is not without shortcomings. Specifically, contemporary empirical evidence reveals that people are able to deliberately show Duchenne smiles (Krumhuber and Manstead, 2009; Gunnery et al., 2013), thereby limiting the usefulness of this criterion. More importantly, the binary nature of the typology fails to account for the variability of smiles produced in everyday life. People smile in many situations, involving diverse emotions or very little emotion. Some expressions undeniably convey more positive affect than others. However, the assertion that all smiles which fail to reflect joy and amusement must be false and potentially manipulative seems oversimplified. It is at least theoretically possible that an enjoyment smile is just one among many true smiles.

An alternative theoretical account proposes that smiles can be classified according to how they affect people’s behavior in the service of fundamental tasks of social living (Niedenthal et al., 2010; Martin et al., 2017). This typology defines three physically distinct smiles of reward, affiliation, and dominance, which serve the main functions of social communication and interaction (Niedenthal et al., 2013). Reward smiles communicate positive emotions and sensory states such as happiness or amusement, thereby potentially rewarding both the sender and the perceiver. Affiliative smiles communicate positive social motives and are used to create and maintain social bonds. A person displaying an affiliative smile intends to be perceived as friendly and polite. Finally, dominance smiles are used to impose and maintain higher social status. The person displaying this type of smile intends to be perceived as superior. Recent research by Rychlowska et al. (2017, Study 1) explored the physical appearance of reward, affiliative, and dominance smiles and described the facial characteristics of each category, suggesting that the three functional smiles are indeed morphologically different. In a subsequent experiment (Rychlowska et al., 2017, Study 2), computer-generated animations of reward, affiliative, and dominance smiles were categorized by human observers and by a Bayesian classifier. Despite generally high categorization accuracy for all three smile types, both human and Bayesian performance was lowest for affiliative smiles, arguably because of their similarity to reward smiles: both expressions convey positive social signals, and both involve a symmetrical movement of the zygomaticus major muscle.

Given the multiple types of smiles, the diversity of situations in which they appear, and the varying display rules governing their production, the understanding of these facial expressions is a complex process which can rely on multiple mechanisms – such as a perceptual analysis of the expresser’s face, conceptual knowledge about the expresser and the situation, and sensorimotor simulation (Niedenthal et al., 2010; Calvo and Nummenmaa, 2016). This last construct involves the recreation of smile-related feelings and neural processes in the perceiver, and is closely related to facial mimicry, which is defined as a spontaneous rapid imitation of other people’s expressions (Dimberg et al., 2000). As sensorimotor simulation involves a complex sequence of motor, neural, and affective processes (see Wood et al., 2016, for review), it is more costly than other forms of facial expression processing. Hence, it may be preferentially used for the interpretation of expressions that are important for the observer or non-prototypical, and thus hard to classify (Niedenthal et al., 2010).

Existing literature suggests that facial mimicry, often used to index sensorimotor simulation of emotion expressions, is sensitive to social and contextual factors. Its occurrence may depend on the type of expression observed (Hess and Fischer, 2014), but also on social motivation (Fischer and Hess, 2017), attitudes toward the expresser (e.g., Likowski et al., 2008), and group status (e.g., Sachisthal et al., 2016). Mimicry can also be experimentally altered or restricted in laboratory settings, for example with pen-in-mouth procedures, stickers, chewing gum, or sports mouthguards. In such studies, preventing mimicry responses has been shown to impair observers’ ability to accurately recognize happiness and disgust (Oberman et al., 2007; Ponari et al., 2012) and to discriminate between false and genuine smiles (Maringer et al., 2011; Rychlowska et al., 2014).

At the same time, other studies investigating the role of facial mimicry in emotion recognition have yielded inconclusive results (e.g., Blairy et al., 1999; Korb et al., 2014). Several factors could explain such inconsistencies. First, measuring rather than blocking facial mimicry may not necessarily reveal its involvement in expression recognition. Second, facial mimicry could be more implicated in recognition tasks that are especially difficult, e.g., when classifying low-intensity facial expressions or judging subtle variations between different types of a given facial expression (Hess and Fischer, 2014). This makes the interpretation of smiles an especially useful paradigm for studying the role of facial mimicry.

Another potential explanation for disparate research findings concerns the way in which the stimuli are presented. Previous studies using facial electromyography (EMG; e.g., Sato et al., 2008; Rymarczyk et al., 2016) have shown that dynamic video stimuli lead to enhanced mimicry compared with static images. In particular, higher intensities of AU12 and AU6, the core smile movements, have been reported when participants watched dynamic rather than static expressions of happiness. Dynamic materials have higher ecological validity (Krumhuber et al., 2013, 2017), given that in everyday social encounters facial expressions move and change rapidly depending on the situation. As emotion processing is based not only on the perception of static configurations of facial muscles but also on how the facial expression unfolds (Krumhuber and Scherer, 2016), dynamic displays provide additional information that is not present in static images. Furthermore, past research has shown better recognition and higher arousal ratings of emotions when they are shown in dynamic rather than static form (e.g., Hyniewska and Sato, 2015; Calvo et al., 2016). Dynamic displays may therefore provide relevant cues that facilitate the decoding of facial expressions.

The present work focuses on the distinction between the three functional smiles of reward, affiliation, and dominance (Niedenthal et al., 2010). Instead of using computer-generated faces, as Rychlowska et al. (2017) did, we employed static images and dynamic videos of human actors displaying the three types of smiles. Our experiment extends previous research (Rychlowska et al., 2017; Martin et al., 2018) in three ways, by testing (1) how accurately naïve observers can discriminate between the three functional smiles, (2) whether the capacity to classify these smiles is affected by facial muscle restriction that prevents mimicry responses, and (3) whether the type of display (static vs. dynamic) influences smile recognition, thereby moderating the potential effects of muscle restriction. In line with previous findings (Rychlowska et al., 2017), we predict that observers should be able to accurately classify the three functional smiles, with affiliative smiles being more ambiguous than reward and dominance smiles. We also anticipate that, consistent with previous work (Maringer et al., 2011; Rychlowska et al., 2014), facial muscle restriction should disrupt participants’ ability to interpret the three smile types. Finally, we hypothesize that impairments in smile classification in the muscle restriction condition should be especially strong in the static rather than the dynamic condition, given the relatively smaller amount of information provided by static stimuli.

Materials and Methods

Participants and Design

The study used a mixed three-factor design, with stimulus display (dynamic vs. static) and muscle condition (free vs. restricted) as between-subjects variables and smile type (reward, affiliative, dominance) as a within-subjects variable. A total of 190 participants, mostly students at University College London, were recruited and took part voluntarily in exchange for a £2 voucher or course credit. One hundred seventy-eight participants identified themselves as White and 12 as mixed race. Technical failure resulted in the loss of data for two participants, leaving a final sample of 188 participants (137 women) aged between 18 and 45 years (M = 22.2 years, SD = 4.2). A power analysis using G*Power 3.1 (Faul et al., 2007) for a 3 × 2 × 2 interaction, assuming a medium-sized effect (Cohen’s f = 0.25) and a 0.5 correlation between repeated measures, indicated that this sample size would be sufficient to achieve 95% power. All participants had normal or corrected-to-normal vision. Ethical approval for the present study was granted by the UCL Department of Psychology Ethics Committee.

Materials

Stimuli were retrieved from a set developed by Martin et al. (2018) and featured eight White actors (four female) in frontal view, expressing the three smile types: reward smile (eight stimuli), affiliative smile (eight stimuli), and dominance smile (six stimuli). Actors posed each smile type after being coached about its form and accompanying social motivations (see Martin et al., 2017; Rychlowska et al., 2017). In morphological terms (FACS, Ekman et al., 2002), reward smiles consisted of Duchenne smiles characterized by symmetrical activation of the Lip Corner Puller (AU12), the Cheek Raiser (AU6), Lips Part (AU25), and/or Jaw Drop (AU26). Affiliative smiles consisted of non-Duchenne smiles involving the Lip Corner Puller (AU12) and the Chin Raiser (AU17), with or without the Brow Raiser (AU1-2). Dominance smiles consisted of asymmetrical non-Duchenne smiles (AU12L or AU12R) with additional actions, such as Head Up (AU53), Upper Lip Raiser (AU10), and/or Lips Part (AU25) (see Figure 1). We employed both static and dynamic portrayals of each smile expression, yielding 22 static and 22 dynamic stimuli. Dynamic stimuli were short video clips (2.6 s) showing the face changing from a non-expressive state to the peak of the display. Static stimuli consisted of a single frame of the peak expression. All stimuli were displayed in color on white backgrounds (960 × 540 pixels).
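The FACS profiles above can be summarized as a simple lookup over Action Unit codes. The sketch below is purely illustrative: the helper name `describe_smile` and the string codes (e.g., "AU12L" for a left-sided lip corner puller) are hypothetical, and the stimuli in this study were posed by coached actors rather than selected by any such rule.

```python
from typing import Iterable


def describe_smile(action_units: Iterable[str]) -> str:
    """Return the smile type whose FACS description a set of AU codes matches.

    Hypothetical coding assumed here: "AU12" = symmetrical lip corner puller,
    "AU12L"/"AU12R" = unilateral (asymmetrical) lip corner puller,
    "AU6" = cheek raiser, "AU17" = chin raiser.
    """
    aus = set(action_units)
    asymmetric = "AU12L" in aus or "AU12R" in aus

    # Dominance: asymmetrical non-Duchenne smile (no cheek raiser)
    if asymmetric and "AU6" not in aus:
        return "dominance"
    # Reward: symmetrical Duchenne smile (lip corner puller plus cheek raiser)
    if "AU12" in aus and "AU6" in aus:
        return "reward"
    # Affiliative: symmetrical non-Duchenne smile with chin raiser
    if "AU12" in aus and "AU17" in aus:
        return "affiliative"
    return "unclassified"


print(describe_smile({"AU6", "AU12", "AU25"}))  # reward
print(describe_smile({"AU12L", "AU53", "AU10"}))  # dominance
```

A crisp rule table like this cannot express graded or overlapping features, which is one reason a probabilistic approach, such as the Bayesian classifier used by Rychlowska et al. (2017), may be better suited to real expressions.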

FIGURE 1. Example of a reward smile (A), affiliative smile (B), and dominance smile (C) at the peak intensity of the display.

Procedure

Participants were tested individually in the laboratory. After providing informed consent, they were randomly assigned to one of the four experimental conditions, resulting in approximately 47 participants per cell. Using Qualtrics software (Provo, UT, United States), participants were told that they would view a series of smile expressions and that their task was to classify the smiles into three categories. The following brief definitions of each smile type, informed by previous research (Rychlowska et al., 2017), were provided: (a) reward smile: “a smile displayed when someone is happy, content or amused by something,” (b) affiliative smile: “a smile which communicates positive intentions, expresses a positive attitude to another person or is used when someone wants to be polite,” and (c) dominance smile: “a smile displayed when someone feels superior, better and more competent or wants to communicate condescension toward another person.”

In addition to these smile descriptions, participants were given examples of situations in which each type of expression was likely to occur: (a) reward smile: “being offered a dream job or seeing a best friend, not seen for a long time,” (b) affiliative smile: “entering a room for a job interview or greeting a teacher,” and (c) dominance smile: “bragging to a rival about a great job offer, meeting an enemy after winning an important prize.” Situational descriptions were pretested in a pilot study in which participants (N = 33) were asked to choose, amongst the three functional smiles, the expression that best matched a particular situation (from a pool of 13 situational descriptions). For the present study, we selected the situation judged most appropriate for each type of smile expression (selection frequency: reward: 94%, affiliative: 93%, dominance: 75%).

In the muscle restriction condition, participants were told that people are more objective in their judgments of emotions when their facial movements are restrained, a cover story similar to that used by Maringer et al. (2011). To inhibit the relevant facial muscles, participants were asked to hold a pencil sideways, using both lips and teeth, without exerting any pressure (for a similar procedure, see Niedenthal et al., 2001; Maringer et al., 2011). The experimenter demonstrated the correct way of holding the pencil in the mouth, and the experiment started only once the experimenter was satisfied with the participant’s technique. There was no additional instruction in the free muscle condition.

After comprehension checks on the three types of smile expressions, participants were presented with static or dynamic versions of the 22 stimuli, shown in random order at the center of the screen. Dynamic sequences were played in their entirety; static photographs were displayed for the same duration as the videos (2.6 s). For each stimulus, participants rated their confidence (from 0 to 100%) about the extent to which the expression was a reward, an affiliative, or a dominance smile. If they felt that more than one category applied, they could use multiple sliders to set the exact confidence level for each response category. Ratings across the three response categories had to sum to 100%. We defined classification accuracy as the confidence assigned to the predicted target label (reward, affiliation, dominance), which indexes the likelihood of correctly classifying a smile expression. After completing the experiment, participants were debriefed and thanked.
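The response format can be made concrete with a short scoring sketch. It assumes that each trial's slider values are stored as a dictionary keyed by category name; the names `score_trial` and `CHANCE_LEVEL` are hypothetical. Accuracy for a trial is simply the confidence placed on the target label. A rater who spread confidence evenly across the three labels would score 100/3 ≈ 33.3%, which is one natural chance reference point (an assumption; the text does not state how chance was defined).

```python
from typing import Dict

CATEGORIES = ("reward", "affiliative", "dominance")
# Assumed chance reference: confidence spread evenly over the three labels
CHANCE_LEVEL = 100.0 / len(CATEGORIES)


def score_trial(ratings: Dict[str, float], target: str) -> float:
    """Return the confidence (0-100) a participant assigned to the target label.

    `ratings` holds one slider value per smile category; the sliders were
    constrained to sum to 100, so that constraint is checked before scoring.
    """
    if set(ratings) != set(CATEGORIES):
        raise ValueError("ratings must cover exactly the three smile categories")
    if any(not 0.0 <= value <= 100.0 for value in ratings.values()):
        raise ValueError("each rating must lie between 0 and 100")
    if abs(sum(ratings.values()) - 100.0) > 1e-6:
        raise ValueError("ratings must sum to 100")
    if target not in CATEGORIES:
        raise ValueError(f"unknown target label: {target!r}")
    return ratings[target]


# A participant who judged a reward smile as partly affiliative
trial = {"reward": 60.0, "affiliative": 40.0, "dominance": 0.0}
print(score_trial(trial, "reward"))  # 60.0
print(round(CHANCE_LEVEL, 1))        # 33.3
```

Because the three ratings are constrained to sum to 100, any confidence moved to a non-target category lowers the accuracy score by the same amount.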

Results

Smile Classification

To test whether the three functional smiles were correctly classified by naïve observers, we calculated the mean confidence ratings for correct (i.e., function-consistent) answers for each smile type (accuracy rates). A 2 (stimulus display: static, dynamic) × 2 (muscle condition: free, restricted) × 3 (smile type: reward, affiliative, dominance) ANOVA, with smile type as a within-subjects variable and classification accuracy as the dependent measure, yielded significant main effects of smile type, F(2,368) = 17.41, p < 0.001, ηp² = 0.09, and stimulus display, F(1,184) = 13.51, p < 0.001, ηp² = 0.07. The two main effects were qualified by a significant interaction between smile type and display, F(2,368) = 3.99, p = 0.021, ηp² = 0.02. The main effect of muscle condition, F(1,184) = 0.89, p = 0.348, ηp² = 0.01, the smile type by muscle condition interaction, F(2,368) = 2.71, p = 0.070, ηp² = 0.01, the display by muscle condition interaction, F(1,184) = 1.90, p = 0.170, ηp² = 0.01, and the three-way interaction between smile type, display, and muscle condition, F(2,368) = 0.16, p = 0.845, ηp² = 0.001, were not significant.
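The effect sizes in this ANOVA appear to be partial eta squared values: they match what follows from each F ratio and its degrees of freedom via ηp² = (F × df_effect) / (F × df_effect + df_error). The sketch below (the function name is hypothetical) reproduces three of the reported values as a consistency check.

```python
def partial_eta_squared(f_ratio: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared implied by an F ratio: F*df1 / (F*df1 + df2)."""
    numerator = f_ratio * df_effect
    return numerator / (numerator + df_error)


# F ratios and degrees of freedom taken from the accuracy ANOVA
reported_effects = {
    "smile type": (17.41, 2, 368),
    "stimulus display": (13.51, 1, 184),
    "smile type x display": (3.99, 2, 368),
}

for effect, (f_ratio, df1, df2) in reported_effects.items():
    print(f"{effect}: {partial_eta_squared(f_ratio, df1, df2):.2f}")
# smile type: 0.09
# stimulus display: 0.07
# smile type x display: 0.02
```

The same formula applies to between- and within-subjects effects alike, as long as the F ratio is paired with its own error degrees of freedom.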

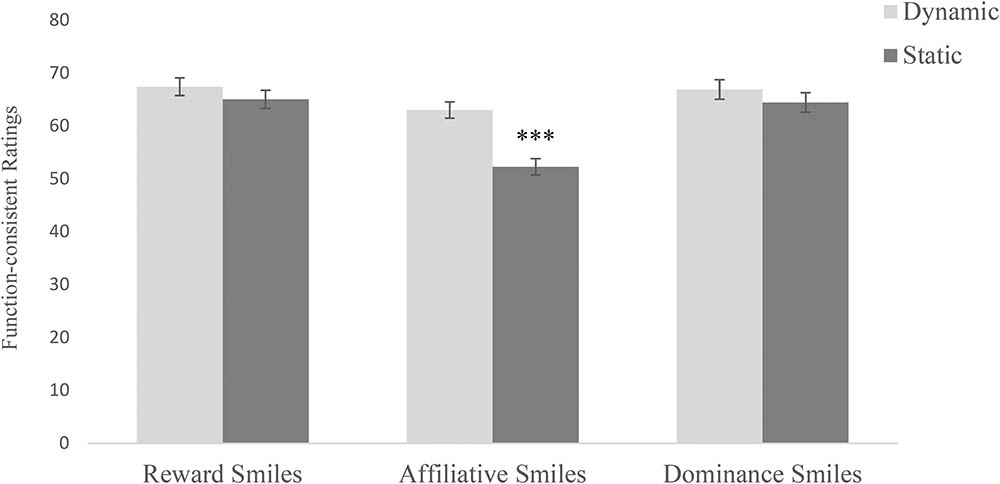

The main effect of smile type revealed that reward smiles (M = 66.25, SD = 16.37) and dominance smiles (M = 64.47, SD = 17.98) were recognized more accurately than affiliative smiles (M = 57.70, SD = 15.75; ps < 0.001, Bonferroni-corrected). The difference in recognition rates between reward and dominance smiles was not significant (p = 0.29, Bonferroni-corrected). The main effect of stimulus display revealed that recognition rates of the three smile types were higher in the dynamic (M = 65.80, SD = 9.92) than in the static condition (M = 59.98, SD = 11.71). However, decomposing the significant interaction between smile type and display with simple effects analyses revealed that only affiliative smiles were recognized more accurately in the dynamic (M = 63.06, SD = 13.04) than in the static condition (M = 52.30, SD = 16.47), F(1,184) = 24.32, p < 0.001, ηp² = 0.12. No significant differences between the dynamic and static conditions emerged for the recognition of reward smiles, F(1,184) = 0.94, p = 0.335, ηp² = 0.01, or dominance smiles, F(1,184) = 2.87, p = 0.092, ηp² = 0.02 (see Figure 2).

FIGURE 2. Function-consistent mean ratings (accuracy rates) of the three smile types in the dynamic and static condition. Error bars represent standard errors of the mean. The asterisks indicate a significant difference in the mean ratings between static and dynamic condition (p < 0.001).

Smile Confusions

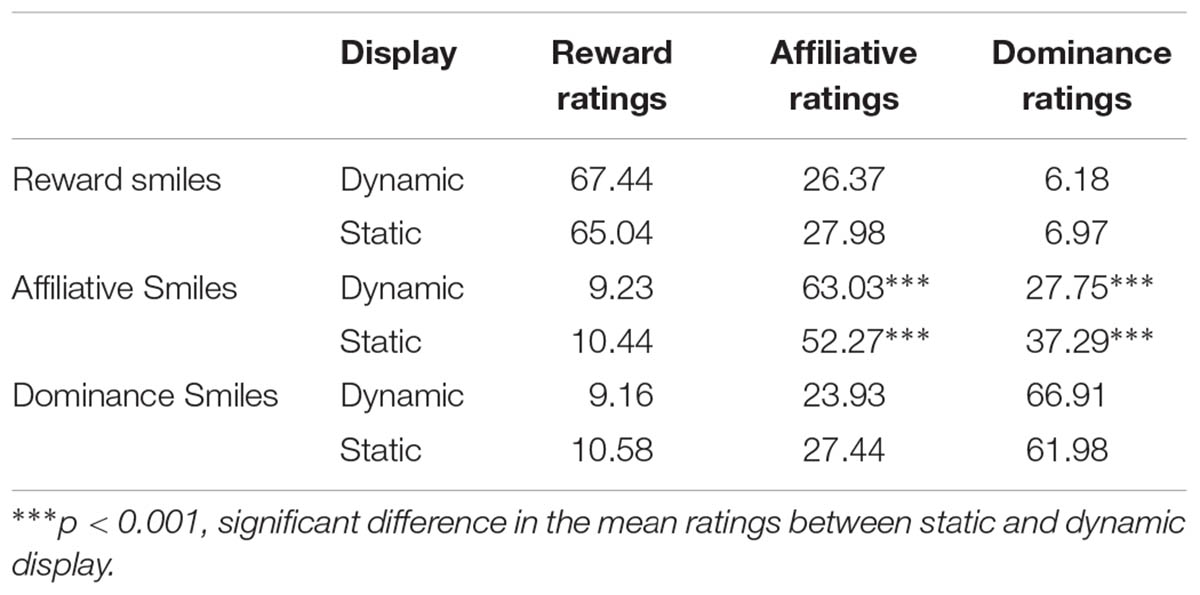

The confusion matrix in Table 1 provides a detailed overview of true (false) positives and true (false) negatives in smile classification. To analyze the types of confusion made for each smile type, we followed established procedures (see Calvo and Lundqvist, 2008) and submitted function-consistent and function-inconsistent ratings of the smile expressions to a 2 (stimulus display: static, dynamic) × 2 (muscle condition: free, restricted) × 3 (smile type: reward, affiliative, dominance) × 3 (response: reward, affiliative, dominance) ANOVA, with smile type and response as within-subjects factors. The results revealed significant main effects of smile type, F(2,368) = 867.54, p < 0.001, ηp² = 0.83, and response, F(2,368) = 36.11, p < 0.001, ηp² = 0.16, as well as a significant interaction between the two factors, F(4,736) = 726.55, p < 0.001, ηp² = 0.80. The interaction between smile type, response, and stimulus display was also significant, F(4,736) = 9.20, p < 0.001, ηp² = 0.05. The response by stimulus display interaction, F(2,368) = 1.76, p = 0.177, ηp² = 0.01, the smile type, response, and muscle condition interaction, F(4,736) = 2.28, p = 0.080, ηp² = 0.01, and the interaction between smile type, response, stimulus display, and muscle condition, F(4,736) = 1.37, p = 0.252, ηp² = 0.01, were not significant.
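A confusion matrix of this kind can be built by averaging, for each presented smile type, the confidence given to each response category. The sketch below assumes that trials are stored as (presented type, ratings) pairs; the helper name `confusion_matrix` is hypothetical. Pooling trials in this way gives the same cell means as averaging participant means whenever every participant rates the same number of stimuli per smile type, as in this design. Because each trial's ratings sum to 100, every row of the matrix also sums to 100, so confidence in an off-diagonal (confusion) cell is confidence missing from the diagonal (accuracy) cell.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

CATEGORIES = ("reward", "affiliative", "dominance")

# (presented smile type, confidence ratings per response category)
Trial = Tuple[str, Dict[str, float]]


def confusion_matrix(trials: List[Trial]) -> Dict[str, Dict[str, float]]:
    """Mean confidence for each (presented smile type, response category) cell.

    Diagonal cells are the function-consistent (accuracy) ratings; off-diagonal
    cells show how much confidence went to the other two categories.
    """
    sums: Dict[str, Dict[str, float]] = defaultdict(lambda: defaultdict(float))
    counts: Dict[str, int] = defaultdict(int)
    for presented, ratings in trials:
        counts[presented] += 1
        for response in CATEGORIES:
            sums[presented][response] += ratings[response]
    # Only include smile types that actually occurred in the trial list
    return {
        presented: {r: sums[presented][r] / counts[presented] for r in CATEGORIES}
        for presented in CATEGORIES
        if counts.get(presented)
    }


toy_trials = [
    ("reward", {"reward": 80.0, "affiliative": 20.0, "dominance": 0.0}),
    ("reward", {"reward": 60.0, "affiliative": 30.0, "dominance": 10.0}),
    ("affiliative", {"reward": 10.0, "affiliative": 50.0, "dominance": 40.0}),
]
print(confusion_matrix(toy_trials)["reward"])
# {'reward': 70.0, 'affiliative': 25.0, 'dominance': 5.0}
```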

To decompose the three-way interaction, we examined the interactive effect of response and display separately for each smile type. The interaction of response (reward, affiliative, dominance) and display (static, dynamic) was not significant for the confusions of reward smiles, F(2,372) = 0.78, p = 0.459, ηp² = 0.004, or dominance smiles, F(2,372) = 2.8, p = 0.062, ηp² = 0.02, suggesting that the classification of these smiles was similar in both display conditions.

However, the interaction of response and display was significant for the confusion of affiliative smiles, F(2,372) = 18.57, p < 0.001, ηp² = 0.09. Overall, these smiles were rated higher on affiliation (M = 57.70, SD = 15.75) than on dominance (M = 32.47, SD = 15.39) and reward (M = 9.83, SD = 9.58; ps < 0.001), but they were also more likely to be confused with dominance than with reward smiles, F(2,372) = 408.03, p < 0.001, ηp² = 0.69. Simple effects analyses revealed that affiliative smiles were equally likely to be classified as reward smiles in both display conditions (static: M = 10.44, SD = 10.88; dynamic: M = 9.23, SD = 8.13; p = 0.386). However, affiliative smiles were less likely to be accurately classified as affiliative in the static (M = 52.27, SD = 16.50) than in the dynamic condition (M = 63.03, SD = 52.27, p < 0.001). This difference resulted from participants rating affiliative smiles as more dominant in the static condition (M = 37.29, SD = 15.70) than in the dynamic condition (M = 27.75, SD = 13.58, p < 0.001) (see Table 1).

Discussion

The purpose of the present work was to test the extent to which the functional smiles of reward, affiliation, and dominance are distinct and recognizable facial expressions. We also aimed to explore the role of facial muscle restriction and presentation mode in moderating smile classification rates. The results reveal that participants were able to accurately categorize reward, affiliative, and dominance smiles. This supports the assumption that the diverse morphological characteristics of smiles are interpreted in terms of their social communicative functions (Niedenthal et al., 2010; Martin et al., 2017). By using naturalistic human face stimuli rather than computer-generated faces, the present study extends existing work (Rychlowska et al., 2017) and achieves greater ecological validity.

Our results reveal that classification accuracy was significantly lower for affiliative smiles than for reward and dominance smiles. This is in line with previous findings by Rychlowska et al. (2017), who showed that human observers and a Bayesian classifier were less accurate in categorizing affiliative smiles than reward and dominance smiles (using a binary yes/no classification approach to indicate whether a given expression was, or was not, an instance of a given smile type). The present research used continuous confidence ratings that were not mutually exclusive, thus replicating their findings with realistic human stimuli and a different response format. Moreover, a closer inspection of participants’ ratings reveals that, whereas affiliative smiles were relatively unlikely to be classified as reward smiles, reward smiles were often judged as affiliative, consistent with the results of Rychlowska et al. (2017) and Martin et al. (2018). While this finding suggests that reward smiles, similarly to the Duchenne smiles previously described in the literature, may constitute a more homogeneous, less variable category than other smiles (e.g., Frank et al., 1993), it also highlights similarities between reward and affiliative smiles, which both convey positive social motivations. It is worth noting that participants in the present study saw smile expressions of White/Caucasian targets without any background information. The only context given in the study was the definition of the three smile types, including examples of situations in which they might occur. Recent work by Martin et al. (2018) suggests that the three types of smiles elicit distinct physiological responses when presented in a social-evaluative context. Adding social context to these displays therefore provides a promising avenue for future research, as the salience of specific interpersonal tasks could facilitate the distinction between affiliative smiles and the other two categories.

As predicted, the current study revealed higher recognition rates for expressions presented in dynamic than in static mode, and this applied in particular to affiliative smiles. This finding corroborates existing research on the dynamic advantage in emotion recognition (Hyniewska and Sato, 2015; Calvo et al., 2016). The fact that presentation mode is particularly important for recognizing affiliative smiles supports the assumption that dynamic features might be especially helpful in identifying more subtle and ambiguous facial expressions, such as non-enjoyment smiles (Krumhuber and Manstead, 2009). As such, differences in the dynamic properties of smiles, such as amplitude, total duration, and the speed of onset, apex, and offset (Cohn and Schmidt, 2004), might inform expression classification (Krumhuber and Kappas, 2005).

Contrary to our predictions and to previous findings (Niedenthal et al., 2010; Maringer et al., 2011; Rychlowska et al., 2014), our results did not support a moderating role of people’s ability to mimic in smile classification. According to Calvo and Nummenmaa (2016), facial expressions consist of morphological changes in the face and their underlying affective content. Given that participants were instructed to rate each smile on three predefined scales (reward, affiliative, dominance smile), it is possible that this procedure induced cognitive, label-driven processing rather than affective processing based on embodied simulation. Alternatively, providing a clear definition of the three functional smiles might have failed to elicit the social motivation necessary for facial mimicry to occur (Hofman et al., 2012; Hess and Fischer, 2014). It is also possible that other factors, e.g., trait empathy (Kosonogov et al., 2015) or endocrine levels (Kraaijenvanger et al., 2017), impact smile recognition rates as well as modulate the occurrence of mimicry. We consider it unlikely that the present results were caused by an improper mimicry-blocking technique, given that the experimenter closely monitored whether participants held the pencils correctly. In addition, we used a reliable facial muscle restriction technique employed in previous studies that revealed the moderating role of mimicry in emotion perception (Niedenthal et al., 2001; Maringer et al., 2011).

One potential limitation of our study is that we did not measure mimicry during the smile classification task. It is thus impossible to determine whether participants in the free mimicry condition were actually mimicking the smiles, or whether mimicry occurred but did not enhance recognition performance relative to the restricted condition. We therefore suggest that future research on mimicry blocking use EMG measurements to assess both the presence of facial mimicry in the free muscle condition and the effectiveness of mimicry blocking in the restricted muscle condition. Finally, the lack of significant effects of the muscle restriction procedure may also reflect the complexity of sensorimotor simulation, a process that does not always involve measurable facial mimicry. Given that generating motor output, more than facial activity per se, may be a critical component of sensorimotor simulation (e.g., Korb et al., 2015; Wood et al., 2016), future studies could investigate the extent to which judgments of functional smiles are impaired by experimental manipulations that involve the production of conflicting facial movements.

In sum, the present research investigated observers’ judgments of reward, affiliative, and dominance smiles. While participants were able to accurately categorize each smile type, recognition accuracy was lower for affiliative than for reward and dominance smiles. Although preventing mimicry responses did not appear to influence participants’ classification, the use of dynamic rather than static stimuli increased the recognition accuracy of affiliative smiles. To our knowledge, this is the first study to test the role of muscle restriction and presentation mode in the recognition of reward, affiliative, and dominance smiles. The results highlight the importance of dynamic information, which was particularly relevant for recognizing affiliative smiles, the most ambiguous of the three smile types. The lack of a significant effect of facial muscle condition on smile classification suggests that the functional smiles can be recognized based on their physical appearance. Our findings contribute to understanding the importance of temporal dynamics in the perception of emotional expressions.

Author Contributions

AO, EK, PS, and MR conceived and designed the experiments. AO performed the experiments. AO and EK performed the statistical analysis. AO wrote the first draft of the manuscript. EK, MR, and PS wrote sections of the manuscript.

Funding

This work was supported in part by the Institute of Psychology of the Polish Academy of Sciences Internal Grants for Young Scientists and Ph.D. Students (2015 and 2017).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors thank Daniel Bialer for his help with data collection.

References

Blairy, S., Herrera, P., and Hess, U. (1999). Mimicry and the judgment of emotional facial expressions. J. Nonverb. Behav. 23, 5–41. doi: 10.1023/A:1021370825283

Calvo, M. G., Avero, P., Fernández-Martín, A., and Recio, G. (2016). Recognition thresholds for static and dynamic emotional faces. Emotion 16, 1186–1200. doi: 10.1037/emo0000192

Calvo, M. G., Gutiérrez-García, A., and Del Líbano, M. (2018). What makes a smiling face look happy? Visual saliency, distinctiveness, and affect. Psychol. Res. 82, 296–309. doi: 10.1007/s00426-016-0829-3

Calvo, M. G., and Lundqvist, D. (2008). Facial expressions of emotion (KDEF): identification under different display-duration conditions. Behav. Res. Methods 40, 109–115. doi: 10.3758/BRM.40.1.109

Calvo, M. G., and Nummenmaa, L. (2016). Perceptual and affective mechanisms in facial expression recognition: an integrative review. Cogn. Emot. 30, 1081–1106. doi: 10.1080/02699931.2015.1049124

Cohn, J. F., and Schmidt, K. (2004). The timing of facial motion in posed and spontaneous smiles. Int. J. Wavelets Multiresolut. Inf. Process. 2, 1–12. doi: 10.1142/S021969130400041X

Dimberg, U., Thunberg, M., and Elmehed, K. (2000). Unconscious facial reactions to emotional facial expressions. Psychol. Sci. 11, 86–89. doi: 10.1111/1467-9280.00221

Du, S., Tao, Y., and Martinez, A. M. (2014). Compound facial expressions of emotion. Proc. Natl. Acad. Sci. U.S.A. 111, E1454–E1462. doi: 10.1073/pnas.1322355111

Duchenne de Boulogne, G.-B. (1862/1990). The Mechanism of Human Facial Expression, ed. and trans. R. A. Cuthbertson. Cambridge: Cambridge University Press. doi: 10.1017/CBO9780511752841

Ekman, P. (2009). Telling Lies: Clues to Deceit in the Marketplace, Politics, and Marriage (revised edition). New York, NY: W. W. Norton & Company.

Ekman, P., Davidson, R. J., and Friesen, W. V. (1990). The Duchenne smile: emotional expression and brain physiology: II. J. Pers. Soc. Psychol. 58, 342–353. doi: 10.1037/0022-3514.58.2.342

Ekman, P., and Friesen, W. V. (1982). Felt, false, and miserable smiles. J. Nonverb. Behav. 6, 238–252. doi: 10.1007/BF00987191

Ekman, P., Friesen, W. V., and Hager, J. C. (2002). Facial Action Coding System: The manual on CD ROM. Salt Lake City, UT: Research Nexus.

Faul, F., Erdfelder, E., Lang, A.-G., and Buchner, A. (2007). G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191. doi: 10.3758/BF03193146

Fischer, A., and Hess, U. (2017). Mimicking emotions. Curr. Opin. Psychol. 17, 151–155. doi: 10.1016/j.copsyc.2017.07.008

Frank, R. H. (1988). Passions Within Reason: The Strategic Role of The Emotions. New York, NY: W. W. Norton & Company.

Frank, M. G., Ekman, P., and Friesen, W. V. (1993). Behavioral markers and recognizability of the smile of enjoyment. J. Pers. Soc. Psychol. 64, 83–93. doi: 10.1037/0022-3514.64.1.83

Gunnery, S. D., Hall, J. A., and Ruben, M. A. (2013). The deliberate Duchenne smile: individual differences in expressive control. J. Nonverb. Behav. 37, 29–41. doi: 10.1007/s10919-012-0139-4

Hess, U., and Fischer, A. (2014). Emotional mimicry: why and when we mimic emotions. Soc. Pers. Psychol. Compass 8, 45–57. doi: 10.1111/spc3.12083

Hofman, D., Bos, P. A., Schutter, D. J., and van Honk, J. (2012). Fairness modulates non-conscious facial mimicry in women. Proc. R. Soc. Lond. B 279, 3535–3539. doi: 10.1098/rspb.2012.0694

Hyniewska, S., and Sato, W. (2015). Facial feedback affects valence judgments of dynamic and static emotional expressions. Front. Psychol. 6:291. doi: 10.3389/fpsyg.2015.00291

Korb, S., Malsert, J., Rochas, V., Rihs, T., Rieger, S., Schwab, S., et al. (2015). Gender differences in the neural network of facial mimicry of smiles: an rTMS study. Cortex 70, 101–114. doi: 10.1016/j.cortex.2015.06.025

Korb, S., Niedenthal, P., Kaiser, S., and Grandjean, D. (2014). The perception and mimicry of facial movements predict judgments of smile authenticity. PLoS One 9:e99194. doi: 10.1371/journal.pone.0099194

Kosonogov, V., Titova, A., and Vorobyeva, E. (2015). Empathy, but not mimicry restriction, influences the recognition of change in emotional facial expressions. Q. J. Exp. Psychol. 68, 2106–2115. doi: 10.1080/17470218.2015.1009476

Kraaijenvanger, E. J., Hofman, D., and Bos, P. A. (2017). A neuroendocrine account of facial mimicry and its dynamic modulation. Neurosci. Biobehav. Rev. 77, 98–106. doi: 10.1016/j.neubiorev.2017.03.006

Krumhuber, E., and Kappas, A. (2005). Moving smiles: the role of dynamic components for the perception of the genuineness of smiles. J. Nonverb. Behav. 29, 3–24. doi: 10.1007/s10919-004-0887-x

Krumhuber, E., Manstead, A. S., Cosker, D., Marshall, D., Rosin, P. L., and Kappas, A. (2007). Facial dynamics as indicators of trustworthiness and cooperative behavior. Emotion 7, 730–735. doi: 10.1037/1528-3542.7.4.730

Krumhuber, E. G., Kappas, A., and Manstead, A. S. (2013). Effects of dynamic aspects of facial expressions: a review. Emot. Rev. 5, 41–46. doi: 10.1177/1754073912451349

Krumhuber, E. G., and Manstead, A. S. (2009). Can Duchenne smiles be feigned? New evidence on felt and false smiles. Emotion 9, 807–820. doi: 10.1037/a0017844

Krumhuber, E. G., and Scherer, K. R. (2016). The look of fear from the eyes varies with the dynamic sequence of facial actions. Swiss J. Psychol. 75, 5–14. doi: 10.1024/1421-0185/a000166

Krumhuber, E. G., Skora, L., Küster, D., and Fou, L. (2017). A review of dynamic datasets for facial expression research. Emot. Rev. 9, 280–292. doi: 10.1177/1754073916670022

Krys, K., Hansen, K., Xing, C., Szarota, P., and Yang, M. (2014). Do only fools smile at strangers? Cultural differences in social perception of intelligence of smiling individuals. J. Cross Cult. Psychol. 45, 314–321. doi: 10.1177/0022022113513922

Likowski, K. U., Mühlberger, A., Seibt, B., Pauli, P., and Weyers, P. (2008). Modulation of facial mimicry by attitudes. J. Exp. Soc. Psychol. 44, 1065–1072. doi: 10.1016/j.jesp.2007.10.007

Maringer, M., Krumhuber, E. G., Fischer, A. H., and Niedenthal, P. M. (2011). Beyond smile dynamics: mimicry and beliefs in judgments of smiles. Emotion 11, 181–187. doi: 10.1037/a0022596

Martin, J., Rychlowska, M., Wood, A., and Niedenthal, P. (2017). Smiles as multipurpose social signals. Trends Cogn. Sci. 21, 864–877. doi: 10.1016/j.tics.2017.08.007

Martin, J. D., Abercrombie, H. C., Gilboa-Schechtman, E., and Niedenthal, P. M. (2018). Functionally distinct smiles elicit different physiological responses in an evaluative context. Sci. Rep. 8:3558. doi: 10.1038/s41598-018-21536-1

Niedenthal, P., Rychlowska, M., and Szarota, P. (2013). “Embodied simulation and the human smile: processing similarities to cultural differences,” in Warsaw Lectures in Personality and Social Psychology, Vol. 3: Meaning Construction, the Social World, and the Embodied Mind, eds D. Cervone, M. Fajkowska, M. W. Eysenck, and T. Maruszewski (Clinton Corners, NY: Eliot Werner Publications), 143–160.

Niedenthal, P. M., Brauer, M., Halberstadt, J. B., and Innes-Ker, A. H. (2001). When did her smile drop? Facial mimicry and the influences of emotional state on the detection of change in emotional expression. Cogn. Emot. 15, 853–864. doi: 10.1080/02699930143000194

Niedenthal, P. M., Mermillod, M., Maringer, M., and Hess, U. (2010). The Simulation of Smiles (SIMS) model: embodied simulation and the meaning of facial expression. Behav. Brain Sci. 33, 417–433. doi: 10.1017/S0140525X10000865

Oberman, L. M., Winkielman, P., and Ramachandran, V. S. (2007). Face to face: blocking facial mimicry can selectively impair recognition of emotional expressions. Soc. Neurosci. 2, 167–178. doi: 10.1080/17470910701391943

Ponari, M., Conson, M., D’Amico, N. P., Grossi, D., and Trojano, L. (2012). Mapping correspondence between facial mimicry and emotion recognition in healthy subjects. Emotion 12, 1398–1403. doi: 10.1037/a0028588

Ruben, M. A., Hall, J. A., and Schmid Mast, M. (2015). Smiling in a job interview: when less is more. J. Soc. Psychol. 155, 107–126. doi: 10.1080/00224545.2014.972312

Rychlowska, M., Cañadas, E., Wood, A., Krumhuber, E. G., Fischer, A., and Niedenthal, P. M. (2014). Blocking mimicry makes true and false smiles look the same. PLoS One 9:e90876. doi: 10.1371/journal.pone.0090876

Rychlowska, M., Jack, R. E., Garrod, O. G., Schyns, P. G., Martin, J. D., and Niedenthal, P. M. (2017). Functional smiles: tools for love, sympathy, and war. Psychol. Sci. 28, 1259–1270. doi: 10.1177/0956797617706082

Rychlowska, M., Miyamoto, Y., Matsumoto, D., Hess, U., Gilboa-Schechtman, E., Kamble, S., et al. (2015). Heterogeneity of long-history migration explains cultural differences in reports of emotional expressivity and the functions of smiles. Proc. Natl. Acad. Sci. U.S.A. 112, E2429–E2436. doi: 10.1073/pnas.1413661112

Rymarczyk, K., Zurawski, L., Jankowiak-Siuda, K., and Szatkowska, I. (2016). Do dynamic compared to static facial expressions of happiness and anger reveal enhanced facial mimicry? PLoS One 11:e0158534. doi: 10.1371/journal.pone.0158534

Sachisthal, M. S., Sauter, D. A., and Fischer, A. H. (2016). Mimicry of ingroup and outgroup emotional expressions. Compr. Results Soc. Psychol. 1, 86–105. doi: 10.1080/23743603.2017.1298355

Sato, W., Fujimura, T., and Suzuki, N. (2008). Enhanced facial EMG activity in response to dynamic facial expressions. Int. J. Psychophysiol. 70, 70–74. doi: 10.1016/j.ijpsycho.2008.06.001

Szarota, P., Bedynska, S., Matsumoto, D., Yoo, S., Friedlmeier, W., Sterkowicz, S., et al. (2010). “Smiling as a masking display strategy: a cross-cultural comparison,” in Closer to Emotions III, eds A. Blachnio and A. Przepiorka, 227–238.

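Vrugt, A., and Vet, C. (2009). Effects of a smile on mood and helping behavior. Soc. Behav. Pers. Int. J. 37, 1251–1258. doi: 10.2224/sbp.2009.37.9.1251

Wood, A., Rychlowska, M., Korb, S., and Niedenthal, P. (2016). Fashioning the face: sensorimotor simulation contributes to facial expression recognition. Trends Cogn. Sci. 20, 227–240. doi: 10.1016/j.tics.2015.12.010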

Keywords: smile, facial expression, emotion, dynamic, mimicry

Citation: Orlowska AB, Krumhuber EG, Rychlowska M and Szarota P (2018) Dynamics Matter: Recognition of Reward, Affiliative, and Dominance Smiles From Dynamic vs. Static Displays. Front. Psychol. 9:938. doi: 10.3389/fpsyg.2018.00938

Received: 03 February 2018; Accepted: 22 May 2018;

Published: 11 June 2018.

Edited by:

Jean Decety, University of Chicago, United States

Reviewed by:

Peter A. Bos, Utrecht University, Netherlands

Mario Del Líbano, University of Burgos, Spain

Copyright © 2018 Orlowska, Krumhuber, Rychlowska and Szarota. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Anna B. Orlowska, anna.orlowska@sd.psych.pan.pl
