- 1School of Psychological Science, University of Bristol, Bristol, United Kingdom

- 2MRC Integrative Epidemiology Unit, Bristol Medical School, University of Bristol, Bristol, United Kingdom

- 3Centre for Exercise, Nutrition and Health Sciences, School for Policy Studies, University of Bristol, Bristol, United Kingdom

Established methods for nutritional assessment suffer from a number of important limitations. Diaries are burdensome to complete, food frequency questionnaires only capture average food intake, and both suffer from difficulties in self-estimation of portion size and biases resulting from misreporting. Online and app versions of these methods have been developed, but issues with misreporting and portion size estimation remain. New methods utilizing passive data capture are required that address reporting bias, extend timescales for data collection, and transform what is possible for measuring habitual intakes. Digital and sensing technologies are enabling the development of innovative and transformative new methods in this area that will provide a better understanding of eating behavior and associations with health. In this article, we describe how wrist-worn wearables, on-body cameras, and body-mounted biosensors can be used to capture data about when, what, and how much people eat and drink. We illustrate how these new techniques can be integrated to provide complete solutions for the passive, objective assessment of a wide range of traditional dietary factors, as well as novel measures of eating architecture, within-person variation in intakes, and food/nutrient combinations within meals. We also discuss some of the challenges these new approaches will bring.

Introduction

Non-communicable diseases now account for almost three quarters of global mortality, with cardiovascular disease (CVD) being the leading cause of death. Diet is responsible for more than half of CVD mortality worldwide (1). The proportion of diet-related deaths has remained relatively stable since 1990 suggesting interventions to improve food intakes have had limited success (1). A major issue in combatting diet-related disease is the way in which food intake and eating behavior are assessed. Accurate measurement of eating is key to monitoring the status quo and responses to individual or systems level interventions.

Recent years have seen a shift in nutritional science away from a focus on single nutrients such as saturated fats, toward a recognition that the complexity in patterns of food intake (e.g., combinations of foods and nutrients throughout the day) is more important in determining health (2–4). In addition to what we eat, we need to extend our understanding of eating architecture, the structure within which food and drinks are consumed. Factors such as the size, timing, and frequency of eating are increasingly recognized as independent determinants of health over and above what food is being eaten (5, 6). For example, skipping breakfast is consistently associated with higher body weight and poorer health outcomes (5, 7). Breakfast tends to be a small meal eaten in the morning and made up of foods higher in fiber and micronutrients, and it is not clear which of these features (meal size, timing, or food type), if any, is causing the benefits to health (8).

Traditional methods of dietary assessment, such as food diaries, 24-h recalls and food frequency questionnaires (FFQs), are self-reported and prone to substantial error and bias (9–11), which may distort diet and health associations (12). Misreporting is one widely recognized limitation of self-reported dietary assessment methods, with systematic under-reporting of energy intake identified in up to 70% of adults in the UK National Diet and Nutrition Surveys (13, 14). Under-reporting occurs for a range of reasons, including difficulties estimating portion sizes for ingredients of complex meals, a desire to present one's diet positively (social desirability), and poor memory (11). People tend to under-report between-meal snacks, possibly because these snacks tend to be less socially desirable or because they are more sporadic, easily forgotten events (15).

Multi-day food diaries or 24-h recalls compare best with “gold standard” dietary biomarkers (16). However, diaries and recalls are labor intensive for researchers to interpret and code, and burdensome for participants, which means data capture is limited to short time periods (typically 3–7 days) and data can take years to become available after collection (17). In addition, accurate memory is essential for 24-h recalls, and even with prospective methods like food diaries reactivity is a problem: participants report accurately but eat less than usual because their eating is being recorded (10).

FFQs, although simpler and quicker to use, only capture average food intakes. Therefore, exposures increasingly acknowledged as important, like the timing of eating (6), the way that foods are combined within a meal (18), and within-person variation throughout the day or from day to day (17), are unmeasured. With analyses of 4-day food diaries revealing that as much as 80% of food intake variation is within-person and only 20% is between people (19), there are many untapped avenues for research into novel mechanisms relating diet to disease and for identifying opportunities for interventions.

Online versions of “traditional” dietary assessment methods have been developed, but errors and biases remain. Validation studies of a range of online 24-h recall and food diary tools have shown the same problems as their paper-based equivalents: misreporting, portion size estimation, difficulty accurately matching foods consumed to foods in composition databases, and high participant burden (16, 20, 21). With the best methods currently available, on paper or online, a maximum of 80% of true intake can be captured and there are systematic differences in the 20% of food intake missing (10, 15).

There is a clear need to enhance dietary assessment methods to reduce error and bias, increase accuracy, and provide more detail on food intake over longer periods so that truly causal associations with health can be identified. A range of reviews and surveys have provided insights into the use of technology to advance dietary assessments (22–24). Recent reviews in particular have highlighted the potential for hybrid approaches that use multiple sensors and wearable devices to improve assessments (25–27). We offer an overview of the state of the art in the use of sensor and wearable technology for dietary assessment that covers both established and emerging methods, and which has a particular focus on passive methods—those that require little or ideally no effort from participants. We illustrate how integrating data from these methods and other sources could transform diet-related health research and behaviors.

What we Eat

The most commonly used methods for objectively identifying food and portion sizes are image-based. The widespread adoption of smartphones (28) by most adults in high-income countries means individuals always have a camera to hand as they go about their daily lives. Many smartphone apps exploring the use of food photography for dietary assessment have been developed and validated. Examples include the mobile food record (mFR) (29) and Remote Food Photography Method (RFPM) (30), where participants capture images of everything they eat over a defined time period by taking a photo before and after each meal. Initial problems with these methods included ensuring that all meals were captured and that photos captured all foods. There were also issues in identifying food items, both automatically and with manual coding systems. These apps were improved by adding customized reminders [drawing on ecological momentary assessment methods (31)], real-time monitoring of photos by researchers to encourage compliance, prompts to improve photo composition, and requests for supplementary information alongside photos. For example, users can confirm or correct tagged foods automatically identified in images (mFR) or add extra text or voice descriptions (RFPM).

The mFR and RFPM systems have been validated in adults using doubly labeled water (DLW) to assess the accuracy of energy intake estimated from several days of food photographs taken in free-living conditions. The mFR underestimated DLW-measured energy expenditure by 19% (579 kcal/day), while the RFPM reported a mean underestimate of 3.7% (152 kcal/day); this level of agreement is similar to, if not slightly better than, that seen with self-reported methods (30). However, food photography currently has considerable researcher and participant burden because of the requirements for training, real-time monitoring, and provision of supplementary information. Crucially, participants still have to actively take photographs of everything they eat, and this may be affected by issues with memory and social desirability (32).

The introduction of wearable camera systems recording point-of-view images addresses some of these issues by making the capture of meal images largely passive. Among the first wearable camera systems were those developed for life logging: recording images of events and activities throughout the day in order to aid recall for a variety of benefits (33, 34). Feasibility testing of one such device, SenseCam, which was worn around the neck and automatically took photographs approximately every 30 s, indicated it was promising in enhancing the accuracy of dietary assessment by identifying 41 food items across a range of food groups that were not recorded by self-report methods (35). However, wearing the device around the neck meant variations in body shape could alter the direction of the lens, so for some individuals the device did not record images of meals.

Another passive wearable camera system, e-Button, reduced the size of the device so that it could be worn attached to the chest (36). Chest mounting improved the ability of the device to capture images of meals. However, the system was a bespoke development, and the use of bespoke solutions produced in limited numbers brings challenges, including potentially high unit costs, limited availability of devices, and issues around ongoing technical support.

Recent studies in other research domains have used mass-market wearable cameras of a similar shape and size to e-Button. For example, studies of infant interactions with environments and parents have used pin-on camera devices that are widely available online as novelty “spy badges” (37, 38). These devices have many characteristics that make them ideal for capturing images of meals: their small form and light weight mean they can be easily worn on the body, and their low cost facilitates use at scale. However, these devices typically capture individual images or video sequences initiated by the user, so they lack the passive operation of devices like e-Button that capture images automatically throughout the day.

If using camera devices that capture images throughout the day, the first major challenge is to identify which images contain food and drink. A camera taking photographs every 10 s and worn for 12 h a day for a week will capture around 30,000 images, of which perhaps only 5–10% contain eating events (39), so identifying food-related photographs is a non-trivial first step. Automatic detection of images containing food using artificial intelligence shows promise for photos taken in ideal conditions (achieving an accuracy of 98.7%) (39). However, photos taken with a wearable camera are uncontrolled and more susceptible to poor lighting and blurring, and the accuracy of identifying images that depict food ranges from 95% for eating a meal to 50% for snacks or drinks (39).
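The scale of the image-screening problem follows directly from the capture schedule. The short sketch below works through the arithmetic for the example given above (one image every 10 s, 12 h of wear per day, 7 days); the function name is illustrative only and is not part of any published tool.

```python
# Illustrative arithmetic only; the schedule values are those quoted in the text.
def images_captured(interval_s: int, hours_per_day: int, days: int) -> int:
    """Number of still images taken at a fixed interval over a wear period."""
    seconds_worn = hours_per_day * 3600 * days
    return seconds_worn // interval_s


total = images_captured(interval_s=10, hours_per_day=12, days=7)

# Expected number of food-related images if 5-10% of frames show eating events.
low = round(total * 0.05)
high = round(total * 0.10)

print(f"{total} images in total, roughly {low}-{high} containing eating events")
```

Under these assumptions a single participant-week yields 30,240 images, of which only about 1,500 to 3,000 would be expected to be relevant to dietary assessment.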

Once meal images have been identified, the next step is to code food content and portion size. Expert analysis of photographs by nutritionists is currently the most common method but requires trained staff, is time-consuming (typically months to return a dataset), and expensive (>$10 per image). Alternatively, automated food identification and portion size assessment, using machine learning (ML) methods, is complex and computationally intensive. The latest approaches using convolutional neural networks appear promising, with accuracy ranging from 0.92 to 0.98 and recall from 0.86 to 0.93 (40) when classifying images from a food image database (41) into 16 food groups. However, identification of individual food items remains limited (42). ML methods require large databases of annotated food photos to train their algorithms, which are time-consuming to create. With more than 50,000 foods in supermarkets (21) and product innovation changing the landscape constantly, considerable challenges remain for ML approaches.

Humans, on the other hand, have life-long experience visually analyzing food, and are excellent at food recognition. Crowdsourcing approaches, in which untrained groups of people perform a short, simple (usually Internet-based) task for a small fee, might therefore offer a rapid low-cost alternative to expensive experts while ML methods develop. Platemate is one dietary assessment app that employs this approach (43). It is an end-to-end system, incorporating all stages from photographic capture of meals through to crowd-based identification of all foods and their portion sizes and nutrient content. The system is complex, however, and by involving crowds of up to 20 people per photo it results in an average processing time of 90 min and cost of $5 per image. To be feasible for use in large-scale longitudinal studies or public health interventions, crowdsourcing of food data from photographs needs to be fast and low cost. We developed and piloted a novel system, FoodFinder (44), and found that small (n = 5) untrained crowds could rapidly classify foods and estimate meal weight in 3 min for £3.35 per photo. Crowds underestimated measured meal weight by 15% compared with 9% overestimation by an expert. A crowd's ability to identify foods correctly was highly specific (98%—foods not present in the photo were rarely reported) but less sensitive (64%—certain foods present were missed by the crowd). With further development crowdsourcing could be an important stepping-stone to the automated coding of meal images as ML methods mature. Crowdsourcing could also play an important role in this development, by creating annotated databases of meal photographs to facilitate training of ML algorithms.
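To make the sensitivity and specificity figures above concrete, the following minimal sketch shows how these two measures could be computed for a single photo when food identification is treated as a set of yes/no decisions over a fixed list of candidate foods. The food names and the candidate list are hypothetical, and this is not the FoodFinder implementation.

```python
def sensitivity_specificity(
    present: set[str], reported: set[str], candidates: set[str]
) -> tuple[float, float]:
    """Sensitivity and specificity of reported foods against the true contents.

    Assumes `candidates` contains every food in `present` and `reported`,
    and that at least one food is present and at least one is absent.
    """
    true_pos = len(present & reported)               # present and reported
    false_neg = len(present - reported)              # present but missed
    false_pos = len(reported - present)              # reported but not present
    true_neg = len(candidates - present - reported)  # absent and not reported
    sensitivity = true_pos / (true_pos + false_neg)
    specificity = true_neg / (true_neg + false_pos)
    return sensitivity, specificity


# Hypothetical example: ten candidate foods, three truly on the plate.
candidates = {"rice", "chicken", "peas", "carrots", "bread",
              "pasta", "beef", "salad", "chips", "fish"}
present = {"rice", "chicken", "peas"}
reported = {"rice", "chicken", "carrots"}

sens, spec = sensitivity_specificity(present, reported, candidates)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

In this toy case the crowd misses one food that was present (lowering sensitivity) and reports one that was absent (lowering specificity), which mirrors the pattern of errors described above.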

In addition to image-based methods for assessing meals, more recent developments in body-worn sensor technology have aimed to passively measure the consumption of specific nutrients. Small, tooth-mounted sensors in which the properties of reflected radio frequency (RF) waves are modulated by the presence of certain chemicals in saliva can detect the consumption of salt and alcohol in real time (45). Similarly, tattoo-like epidermal sensors that attach to, and stretch and flex with, the skin can detect a variety of metabolites in an individual's perspiration that relate directly to their diet (46). For these devices, it is important that metabolites detected are specific to food intake, and not conflated with endogenous metabolites produced by the body as a result of eating.

To date, these new oral and epidermal sensors have largely been tested in laboratory settings and are some way from becoming widely available. There are clearly compelling uses for these, for example accurate measurement of salt intake in patients with high blood pressure and sugar intakes in patients with diabetes, as well as enhancing food photography methods by providing non-visual nutritional composition information (e.g., sugar in tea or salt added in cooking). However, it is worth noting that these methods alone are not able to identify the food that contained these nutrients. For some dietary interests (e.g., changing dietary behaviors), food items need to be assessed rather than the nutrients they contain, and in these cases image-based methods for assessing meals will be required.

When we Eat

To advance our understanding of the effects of diet, we require objective assessments of not just what we eat, but also when we eat. A variety of approaches for the passive detection of eating events have been proposed, including acoustic methods using ear-mounted microphones to detect chewing (47), throat microphones to detect swallowing (48), and detection of jaw movements using different sensor types attached to the head or neck (49–52).

Although these methods are capable of detecting eating events passively, they need the individual to wear bespoke sensing devices attached around the head and neck, and when used on a daily basis this inevitably introduces a considerable level of device burden. To address this, one approach is to use sensors that are embedded in or attached to items that are already part of people's daily lives.

One method explored has been the use of sensors that are part of spectacles. Some approaches to this have used piezoelectric strain sensors on the arms of glasses that are attached to the side of the head to measure movements from the temporalis muscle when chewing (53, 54). High levels of performance have been reported with this approach, with one study reporting an area under the curve for chewing detection (in a combination of laboratory and free-living tests) of 0.97 (55). However, it does require the sensors to be manually attached to the head every time the glasses are worn. Others have used electromyography, in which the electrical activity associated with temporalis muscle contraction is detected using sensors embedded in the arms of 3D printed eyeglass frames (56). This also gives good performance, with recall and precision for chewing bout detection above 77% in free-living conditions. This approach does not need manual attachment of sensors, but it does require individually tailored frames to ensure sufficiently good contact of the built-in sensors with the head. More broadly, not everyone wears glasses, so there is also the issue of how these approaches would work for those who do not.

Another method is to use wrist-worn devices equipped with motion sensors to automatically detect eating events. Data from gyroscope and accelerometer motion sensors can be used to identify the signature hand gestures of certain modes of eating (57, 58). Early adopters of this approach strapped smartphones to the wrist (59). This functionality is now more conveniently available in the form of off-the-shelf activity monitors and smartwatches. These devices can be highly effective in detecting eating events, with recent reports of 90.1% precision and 88.7% recall (60). However, building recognition models that can generalize well in free-living conditions where unstructured eating activities occur alongside confounding activities can be challenging, and can result in reduced precision in detection (61).
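Precision and recall for eating-event detection are usually reported at the level of events rather than individual sensor samples. As a minimal sketch, assuming that a detected event counts as correct when it overlaps in time with a true eating event (one of several possible matching rules, and not necessarily the one used in the studies cited above), the two measures could be computed as follows. All times are hypothetical.

```python
Interval = tuple[float, float]  # (start, end) in minutes since midnight


def overlaps(a: Interval, b: Interval) -> bool:
    """True if two time intervals share any period of time."""
    return a[0] < b[1] and b[0] < a[1]


def precision_recall(
    detected: list[Interval], actual: list[Interval]
) -> tuple[float, float]:
    """Event-level precision and recall under a simple overlap rule."""
    # Detections that coincide with a real eating event.
    correct = sum(any(overlaps(d, a) for a in actual) for d in detected)
    # Real eating events that were picked up by at least one detection.
    found = sum(any(overlaps(a, d) for d in detected) for a in actual)
    precision = correct / len(detected) if detected else 0.0
    recall = found / len(actual) if actual else 0.0
    return precision, recall


# Hypothetical day: breakfast, lunch and dinner, with one missed meal
# and one false alarm (e.g., a confounding hand-to-face gesture).
actual_meals = [(480, 500), (780, 810), (1140, 1180)]
detected_events = [(482, 498), (600, 605), (1150, 1175)]

precision, recall = precision_recall(detected_events, actual_meals)
print(f"precision={precision:.2f}, recall={recall:.2f}")
```

Confounding activities in free-living conditions appear here as detections with no matching meal, which is why they reduce precision without necessarily affecting recall.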

Recent reviews concluded that smartwatches are of particular interest for eating as they represent an unobtrusive solution for both the tracking of eating behavior (62), and the delivery of targeted, context-sensitive recommendations promoting positive health outcomes (63), such as Just-in-Time interventions (64).

The latest ML techniques are enabling researchers to go beyond detection of eating events using wrist-worn wearables, to also measure within-meal eating parameters such as eating speed. In a recent example, convolutional neural networks and long short-term memory ML methods were applied to data from the motion sensors in off-the-shelf smartwatches worn by 12 participants eating a variety of meal types in a restaurant (65). Sequences of bites were first detected, which were then classified into food intake cycles (starting from picking up food from the plate until the wrist moves away from the mouth).

The ability to passively detect meal onset is an essential aspect of other healthcare systems too. One example is closed-loop artificial pancreas systems for the management of blood glucose in patients with type 1 diabetes. Such systems rely on detecting a rise in interstitial fluid glucose concentrations (a proxy for blood glucose) using continuous glucose monitors (CGM). Meal detection can be challenging as interstitial glucose rises well after a meal has begun, limiting the current use of CGM in real-time monitoring systems. However, meal detection models using CGM have developed from being purely computer-based simulations to now showing promise when fitted to real-world data. The mean delay in detecting the start of a meal has reduced from 45 to 25 min (66). CGM could therefore be another method for the passive, objective detection of meal timings in future, although further research, particularly in populations without diabetes, is required. Encouragingly, pilot work in the US indicates that wearing a CGM for up to a week is as acceptable as wearing accelerometer-based sensors for 7 days (67). Furthermore, our own pilot work in the UK ALSPAC-G2 cohort demonstrated that using the latest CGM devices, which no longer require finger-prick tests for calibration, improves uptake of 6 days of monitoring (68). This reflects a growing demand for non-invasive methods for CGM.
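The detection delay discussed above can be illustrated with a deliberately simple rule: flag a meal when glucose has risen by at least a set amount over a short window of consecutive readings. This is only a sketch of the general idea; the sampling interval, window, threshold, and glucose values below are all hypothetical, and the published meal detection models are considerably more sophisticated.

```python
from typing import Optional

SAMPLE_INTERVAL_MIN = 5  # assumed spacing between CGM readings


def detect_meal_onset(
    readings: list[float], window: int = 3, min_rise: float = 1.0
) -> Optional[int]:
    """Index of the first reading at which glucose (mmol/L) has risen by at
    least `min_rise` compared with the reading `window` samples earlier,
    or None if no such rise occurs."""
    for i in range(window, len(readings)):
        if readings[i] - readings[i - window] >= min_rise:
            return i
    return None


# Hypothetical trace in which the meal actually starts at index 2.
glucose = [5.2, 5.1, 5.2, 5.3, 5.6, 6.1, 6.8, 7.4]
meal_start_index = 2

detected_index = detect_meal_onset(glucose)
delay_min = (detected_index - meal_start_index) * SAMPLE_INTERVAL_MIN
print(f"meal flagged at reading {detected_index}, {delay_min} min after onset")
```

Even in this idealized trace the rule cannot fire until glucose has visibly risen, so some lag between meal start and detection is built into any approach of this kind.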

Photoplethysmography (PPG) is a technique that detects changes in levels of reflected light as a result of variation in properties of venous blood, and which is routinely included in off-the-shelf smartwatches and activity monitors for the measurement of heart rate. This same technique can also be used to non-invasively measure glucose levels, and the latest enhancements give measurement performance approaching that of reference blood glucose measurement devices (69). This opens the possibility that non-invasive CGM using commercially available smartwatches and activity monitors may be widely available in the near future, and theoretically devices of this kind could detect glucose patterns associated with meal start and end times. Once again though, the latency between start of meal and detection would need to be determined, and meal detection algorithms evaluated.

Integrating Methods

The methods outlined above individually provide objective measurements of when, what and how much someone is eating. Integrating these methods offers the possibility of objectively capturing more complete and detailed pictures of dietary intake, while minimizing participant burden.

One previous proposal for an integrated system for objective dietary assessment involves combining smartwatch motion sensors with a camera built into the smartwatch (70). The motion sensors detect the start and stop of an eating event, and this triggers the camera to take an image of the meal for subsequent offline analysis. While this is a compact solution minimizing device burden, it does need the individual to direct the watch camera toward the meal to capture an image. More importantly, trends in smartwatch design have changed, and smartwatches typically no longer come equipped with built-in cameras.

A more recent proposal again had a wrist-worn activity monitor to detect eating events, but this time combined with on-body sensors for detecting chewing and swallowing to capture more detailed information on bite count and bite rate within a meal (22). An interesting aspect of this system was the use of the individual's smartphone as the basis of a Wireless Body Area Network (WBAN) (71) to link up the activity monitor and different sensors. This enabled local communication between sensors via the smartphone, without the need to connect the sensors to a static wireless network or a cellular data connection.

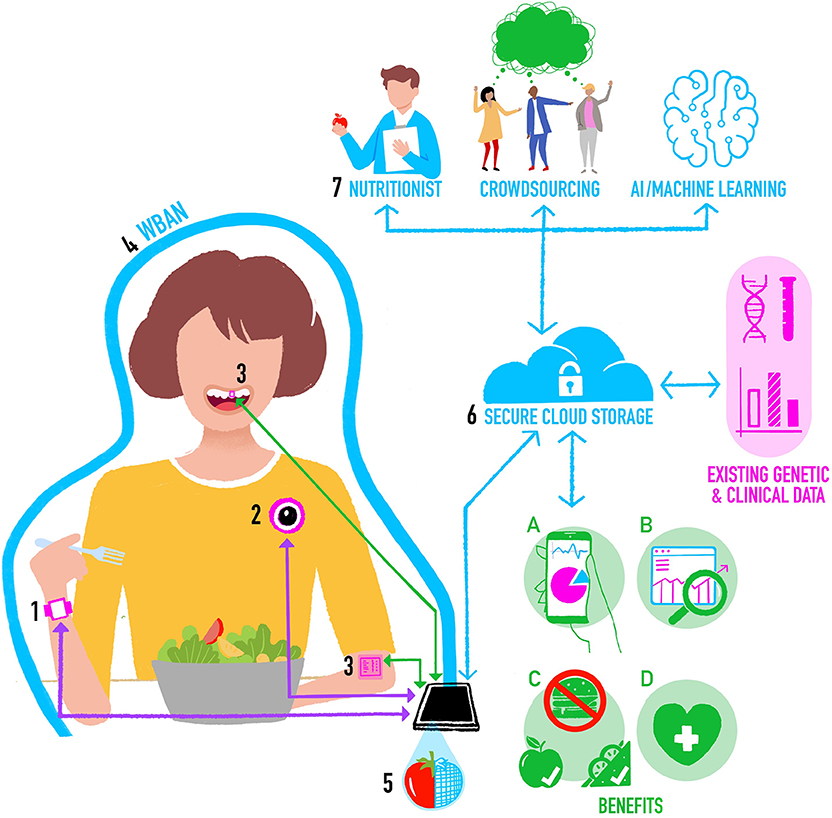

In Figure 1 we propose a new architecture for an integrated system for objective assessment of diet. We draw on some elements of these previous proposals, but also incorporate new and future developments in wearable sensing technology for objective dietary assessment. The operation of the system can be conceptualized as follows:

1. The individual wears a smartwatch containing accelerometer and gyroscope motion sensors. Classification algorithms applied to the motion data in real time on the watch can detect the beginning and end of an eating event, the mode of eating, and provide “within meal” metrics such as speed of eating. In the future the smartwatch may also have PPG-based CGM, which provides additional data on meal timing and size.

2. The individual is also wearing a chest-mounted camera capturing images from their viewpoint. To keep battery consumption and data storage requirements low (minimizing device size and maximizing time between charges), the camera takes still images at short intervals (e.g., every 10 s) and stores them for a brief period (e.g., for 5 min). Images are then deleted unless the smartwatch detects the start of an eating event, in which case images before, during and after eating are stored as a complete visual record of the meal. Saving multiple photos maximizes the chances of capturing high quality images unaffected by temporary issues with lighting, camera angle, blur, etc.

3. On-body sensors, including oral tooth-mounted sensors and epidermal tattoos, could be added to provide more detailed nutritional assessments for monitoring of specific nutrients or to calibrate estimates from other tools.

4. The individual's smartphone forms the basis of a WBAN around their body. Most devices (e.g., activity monitors, cameras) will communicate with the smartphone using a Bluetooth connection. Oral and epidermal sensing devices that do not currently have power supplies or data storage or transfer capabilities could use Near Field Communication (NFC) as a power source and to transfer data from the sensor to the smartphone.

5. Segmentation, food item recognition, and volumetric estimates of portion sizes are initially computed locally on the smartphone using data from sensors and images, and these may be used to support Just-in-Time type eating behavior change interventions.

6. The smartphone also provides a secure connection to a cloud-based central dietary profile for the individual. Data captured by sensors are processed on the smartphone and the processed data are regularly uploaded to the central profile, perhaps when the individual is at home and their smartphone connects to their home wireless network. Processed data can then, at the individual's discretion, be linked to other sources of their own health data, including omics, clinical, and imaging data. To reduce privacy concerns and data transfer requirements, raw data from sensors are not uploaded.

7. Depending on the needs of the particular scenario, and balancing speed, accuracy, and cost, data from the central profile may be sent for further analysis. For example, images of meals may be sent to a crowd-based application (44), or a dietician to refine food item identification and portion sizes (72).

Figure 1. Integrating methods for objective assessment of diet using digital and sensing technologies.
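The image-retention logic in step 2 can be sketched as a rolling buffer: recent images are held for a short period and discarded unless an eating event is signaled, in which case the buffered images plus those taken during and shortly after the meal are kept. The class and method names below are hypothetical, and the choice to keep the same length of time after eating as before it is an assumption made for illustration.

```python
from collections import deque


class RollingImageBuffer:
    """Keeps only recent images unless an eating event is detected."""

    def __init__(self, interval_s: int = 10, retain_s: int = 300) -> None:
        frames = retain_s // interval_s      # e.g., 5 min at 10 s = 30 frames
        self.pre_event = deque(maxlen=frames)  # oldest frames drop off automatically
        self.saved = []                      # visual record of meals
        self.eating = False
        self.post_frames = frames            # assumed: same window after eating
        self.post_remaining = 0

    def on_image(self, image) -> None:
        """Called each time the camera takes a still image."""
        if self.eating:
            self.saved.append(image)
        elif self.post_remaining > 0:
            self.saved.append(image)
            self.post_remaining -= 1
        else:
            self.pre_event.append(image)

    def on_eating_start(self) -> None:
        """Called when the smartwatch signals the start of an eating event."""
        self.eating = True
        self.post_remaining = 0
        self.saved.extend(self.pre_event)    # keep the images from before eating
        self.pre_event.clear()

    def on_eating_end(self) -> None:
        """Called when the smartwatch signals the end of an eating event."""
        self.eating = False
        self.post_remaining = self.post_frames


# Small demonstration with a 30 s retention window (3 frames); integers
# stand in for images.
buffer = RollingImageBuffer(interval_s=10, retain_s=30)
for frame in range(10):
    buffer.on_image(frame)       # no eating: only frames 7, 8, 9 are retained
buffer.on_eating_start()
for frame in range(10, 12):
    buffer.on_image(frame)       # during the meal
buffer.on_eating_end()
for frame in range(12, 16):
    buffer.on_image(frame)       # three post-meal frames kept, then buffering resumes
print(buffer.saved)
```

Using a fixed-length buffer bounds the storage needed between meals, which is the property the proposed design relies on to keep device size and battery use low.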

The resultant cloud-based central dietary profile represents a detailed view of a person's food intake and eating behavior that will provide the following benefits:

a) Summaries of the individual's data for their personal use.

b) Dietary data that is stored and made available for future research on eating [for example prospective cohort studies like Children of the 90s (73)].

c) Information that can be automatically analyzed within computer-based personalized nutrition behavior change interventions involving monitoring progress in achieving changes in diet-related goals [e.g., see (74)].

d) Information that feeds into health professional consultations [e.g., enabling a dietician to get a better picture of an individual's overall intake and eating behavior so they can spend more time on behavior change techniques rather than having to assess diet as part of the appointment—for example see (75)].

Discussion

In this article, we briefly looked at how emerging digital and sensing technologies are enabling new objective assessments of dietary intake. These new methods have the potential to address many of the issues associated with current paper and online dietary assessment tools around bias, errors, misreporting, and high levels of participant or researcher burden. They do so by automating the detection and measurement of eating events, food items and portion sizes, and by providing detailed information on specific nutrients and within-meal eating behaviors.

Image-based methods remain the most popular approach for objective assessment of food items and portion size. The use of on-body cameras to passively capture images of meals for subsequent processing has a number of advantages. As the individual does not have to manually initiate the capture, this helps mitigate issues such as the stigma of photographing their meals. The reliance on an individual's memory or willingness to self-report is also removed, therefore burden and bias are reduced. However, having to wear the camera device does represent a different burden, and there are issues around privacy, for example concerns from others that they may be inadvertently recorded. For nutritional assessment, image capture could be limited to eating occasions, so while concerns remain, they would hopefully be reduced.

In terms of camera devices, future developments should combine the passive operation and ease of use of a system like e-Button (36) with the low weight, size and cost, and broad availability of commercially available products. If such a device were of utility to multiple research domains (following the model of e-Button), and particularly if it had compelling mass-market health or dietary use cases, demand could be sufficient for commercial production. Integration of such a device into other items already accepted for daily use (clothing, jewelry, etc.) could possibly increase acceptability further.

The emergence of sensors that attach directly to teeth or to the skin holds the promise of real-time measurement of specific nutrients. These devices are at the proof-of-concept stage, and there are important considerations to address around durability, and how to power and read data from these devices. However, many of the mobile and wearable devices we currently use have capabilities that could possibly be adapted to work with these new sensors. For example, the near field communication wireless technology now included in most smartphones to make wireless card payments uses high frequency radio signals that could potentially be adapted to power and communicate with oral and dermal sensors (76).

In terms of detecting when people are eating, smart glasses could potentially detect the movement of, or electrical signals from, the muscles used to chew, although there are the issues of sensor attachment and positioning, and how this approach would work for people who do not normally wear glasses. Wrist-worn wearables such as smartwatches have the ability to detect the signature hand movements unique to eating. Consumer demand for these devices continues to grow, with worldwide shipments predicted to exceed 300 million by 2023 (from under 30 million in 2014) (77). Smartwatches are worn by individuals as part of their daily routine, so they do not represent additional device burden. In addition, such devices have the capability to run third-party applications, providing the opportunity for delivering just-in-time behavior change interventions based on the eating behaviors detected. However, battery life continues to be an issue, with smartwatches typically needing to be charged daily. Continuous monitoring of eating behaviors will exacerbate this. Also, the detection of eating behaviors from motion data often uses computationally intensive machine learning algorithms (e.g., convolutional neural networks) that cannot currently be used on wearable devices to detect eating in real time. This may change in the future as the processing power and battery life of smartwatches and other wearables improve and more sophisticated classification algorithms can run on these devices.

In the current review, we have proposed an architecture for an integrated system for the objective assessment of diet. Integrating methods will enable researchers to build a more detailed and complete picture of an individual's diet, and to link this with a wide range of related health data (e.g., omics, clinical, imaging). Storing this information in a central location will give healthcare professionals, researchers, and other collaborators the individual wishes to interact with controlled access to that individual's detailed dietary data. However, this raises a number of important questions. Should cloud-based storage be used, and if so, where would it be hosted? What format should be used for the stored data to maximize utility across applications? What model should be used for making the data available, given the rise of new models in which individuals can monetise their own data (78)?

Another key issue for new methods will be that they need to be financially sustainable over time. For integrated systems, architectures are required that minimize the time and cost of maintaining operation of the system when one component (e.g., a sensor) changes. For example, systems arranged with central hubs to which each sensor/device connects and communicates reduce the impact of a change in one component compared with fully connected architectures in which each sensor/device communicates to many others.
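The difference between the two arrangements can be illustrated with a simple count of connections. The sketch below is a minimal illustration, not part of the proposed system: it assumes that a "fully connected" layout links every pair of devices, that maintenance effort scales with the number of links affected by a change, and the function names are hypothetical.

```python
def hub_links(n_devices: int) -> int:
    """Links in a hub layout: each device has one link, to the hub."""
    return n_devices


def mesh_links(n_devices: int) -> int:
    """Links in a fully connected layout: one link per pair of devices."""
    return n_devices * (n_devices - 1) // 2


def links_affected_by_change(n_devices: int, layout: str) -> int:
    """Links that need re-checking when a single device is replaced.

    Assumption: only links that touch the replaced device are affected.
    """
    if layout == "hub":
        return 1  # only the link between the device and the hub
    if layout == "mesh":
        return n_devices - 1  # a link to every other device
    raise ValueError(f"unknown layout: {layout!r}")


if __name__ == "__main__":
    for n in (3, 5, 10):
        print(
            f"{n} devices: hub has {hub_links(n)} links, "
            f"mesh has {mesh_links(n)} links; replacing one device affects "
            f"{links_affected_by_change(n, 'hub')} (hub) vs "
            f"{links_affected_by_change(n, 'mesh')} (mesh) links"
        )
```

Under these assumptions, the number of links affected by replacing one device stays constant in the hub layout but grows with the number of devices in the fully connected layout.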

For all of these new techniques for passive measurement of dietary intake, it will be important to understand if they introduce unexpected measurement errors and biases. New methods for estimating multiple sources of error in data captured using the latest technologies could help in this respect. These are able, for example, to separate out the effects of factors such as coverage (access to the technology), non-response and measurement error (79).

Finally, whether these methods, individually or integrated, become widely adopted will rest largely with the individuals that use them. Extensive feasibility testing will be required to explore which of these new methods people are happy to use, and which ones they are not.

Author Contributions

AS, ZT, CS, and LJ contributed to conceptualizing and writing the article.

Funding

AS was funded by a UKRI Innovation Fellowship from Health Data Research UK (MR/S003894/1). LJ, AS, and CS were funded by MR/T018984/1. LJ and AS were affiliated with, and CS was a member of, a UK Medical Research Council Unit, supported by the University of Bristol and the Medical Research Council (MC_UU_00011/6). ZT was funded by the Jean Golding Institute at the University of Bristol for research unrelated to this review. Some of the work presented here was funded by a catalyst award from the Elizabeth Blackwell Institute for Health Research, a Wellcome Trust Institutional Strategic Support Fund.

Conflict of Interest

AS and CS are listed as inventors on patent applications 1601342.7 (UK) and PCT/GB2017/050110 (International), Method and Device for Detecting a Smoking Gesture. LJ has received funding from Danone Baby Nutrition, the Alpro foundation and Kellogg Europe for research unrelated to this review.

The remaining author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to acknowledge Ben Kirkpatrick for the illustration.

References

1. GBD 2017 Diet Collaborators. Health effects of dietary risks in 195 countries, 1990-2017: a systematic analysis for the Global Burden of Disease Study 2017. Lancet. (2019) 393:1958–72. doi: 10.1016/S0140-6736(19)30041-8

2. Mozaffarian D, Rosenberg I, Uauy R. History of modern nutrition science—implications for current research, dietary guidelines, and food policy. BMJ. (2018) 361:k2392. doi: 10.1136/bmj.k2392

3. Leech RM, Worsley A, Timperio A, McNaughton SA. Understanding meal patterns: definitions, methodology and impact on nutrient intake and diet quality. Nutr Res Rev. (2015) 28:1–21. doi: 10.1017/S0954422414000262

4. Willett WC. Nature of variation in diet. In: Willett WC, editor. Nutritional Epidemiology. 3rd ed. Oxford: Oxford University Press (2013). p. 34–48.

5. Smith KJ, Gall SL, McNaughton SA, Blizzard L, Dwyer T, Venn AJ. Skipping breakfast: longitudinal associations with cardiometabolic risk factors in the Childhood Determinants of Adult Health Study. Am J Clin Nutr. (2010) 92:1316–25. doi: 10.3945/ajcn.2010.30101

6. Mattson MP, Allison DB, Fontana L, Harvie M, Longo VD, Malaisse WJ, et al. Meal frequency and timing in health and disease. Proc Natl Acad Sci USA. (2014) 111:16647–53. doi: 10.1073/pnas.1413965111

7. Monzani A, Ricotti R, Caputo M, Solito A, Archero F, Bellone S, et al. A systematic review of the association of skipping breakfast with weight and cardiometabolic risk factors in children and adolescents. What should we better investigate in the future? Nutrients. (2019) 11:387. doi: 10.3390/nu11020387

8. Betts JA, Chowdhury EA, Gonzalez JT, Richardson JD, Tsintzas K, Thompson D. Is breakfast the most important meal of the day? Proc Nutr Soc. (2016) 75:464–74. doi: 10.1017/S0029665116000318

9. Dhurandhar NV, Schoeller D, Brown AW, Heymsfield SB, Thomas D, Sørensen TIA, et al. Energy balance measurement: when something is not better than nothing. Int J Obes. (2015) 39:1109–13. doi: 10.1038/ijo.2014.199

10. Stubbs RJ, O'Reilly LM, Whybrow S, Fuller Z, Johnstone AM, Livingstone MBE, et al. Measuring the difference between actual and reported food intakes in the context of energy balance under laboratory conditions. Br J Nutr. (2014) 111:2032–43. doi: 10.1017/S0007114514000154

11. Subar AF, Freedman LS, Tooze JA, Kirkpatrick SI, Boushey C, Neuhouser ML, et al. Addressing current criticism regarding the value of self-report dietary data. J Nutr. (2015) 145:2639–45. doi: 10.3945/jn.115.219634

12. Reedy J, Krebs-Smith SM, Miller PE, Liese AD, Kahle LL, Park Y, et al. Higher diet quality is associated with decreased risk of all-cause, cardiovascular disease, and cancer mortality among older adults. J Nutr. (2014) 144:881–9. doi: 10.3945/jn.113.189407

13. Johnson L, Toumpakari Z, Papadaki A. Social gradients and physical activity trends in an obesogenic dietary pattern: cross-sectional analysis of the UK National Diet and Nutrition Survey 2008–2014. Nutrients. (2018) 10:388. doi: 10.3390/nu10040388

14. Rennie K, Coward A, Jebb S. Estimating under-reporting of energy intake in dietary surveys using an individualised method. Br J Nutr. (2007) 97:1169–76. doi: 10.1017/S0007114507433086

15. Poppitt SD, Swann D, Black AE, Prentice AM. Assessment of selective under-reporting of food intake by both obese and non-obese women in a metabolic facility. Int J Obes Relat Metab Disord. (1998) 22:303–11. doi: 10.1038/sj.ijo.0800584

16. Park Y, Dodd KW, Kipnis V, Thompson FE, Potischman N, Schoeller DA, et al. Comparison of self-reported dietary intakes from the automated self-administered 24-h recall, 4-d food records, and food-frequency questionnaires against recovery biomarkers. Am J Clin Nutr. (2018) 107:80–93. doi: 10.1093/ajcn/nqx002

17. Thompson FE, Subar AF. Dietary assessment methodology. In: Coulston AM, Boushey CJ, Ferruzzi G, editors. Nutrition in the Prevention and Treatment of Disease. 3rd ed. Cambridge, MA: Academic Press; Elsevier (2013). p. 5–48.

18. Woolhead C, Gibney M, Walsh M, Brennan L, Gibney E. A generic coding approach for the examination of meal patterns. Am J Clin Nutr. (2015) 102:316–23. doi: 10.3945/ajcn.114.106112

19. Toumpakari Z, Tilling K, Haase AM, Johnson L. High-risk environments for eating foods surplus to requirements: a multilevel analysis of adolescents' non-core food intake in the National Diet and Nutrition Survey (NDNS). Publ Health Nutr. (2019) 22:74–84. doi: 10.1017/S1368980018002860

20. Greenwood DC, Hardie LJ, Frost GS, Alwan NA, Bradbury KE, Carter M, et al. Validation of the Oxford WebQ online 24-hour dietary questionnaire using biomarkers. Am J Epidemiol. (2019) 188:1858–67. doi: 10.1093/aje/kwz165

21. Wark PA, Hardie LJ, Frost GS, Alwan NA, Carter M, Elliott P, et al. Validity of an online 24-h recall tool (myfood24) for dietary assessment in population studies: comparison with biomarkers and standard interviews. BMC Med. (2018) 16:136. doi: 10.1186/s12916-018-1113-8

22. Schoeller D, Westerterp-Plantenga M. Advances in the Assessment of Dietary Intake. Boca Raton, FL: CRC Press (2017).

23. Selamat N, Ali S. Automatic Food Intake Monitoring Based on Chewing Activity: A Survey. IEEE Access (2020). p. 1.

24. Magrini M, Minto C, Lazzarini F, Martinato M, Gregori D. Wearable devices for caloric intake assessment: state of art and future developments. Open Nurs J. (2017) 11:232–40. doi: 10.2174/1874434601711010232

25. Bell B, Alam R, Alshurafa N, Thomaz E, Mondol A, de la Haye K, et al. Automatic, wearable-based, in-field eating detection approaches for public health research: a scoping review. Dig Med. (2020) 3:38. doi: 10.1038/s41746-020-0246-2

26. Hassannejad H, Matrella G, Ciampolini P, De Munari I, Mordonini M, Cagnoni S. Automatic diet monitoring: a review of computer vision and wearable sensor-based methods. Int J Food Sci Nutr. (2017) 68:1–15. doi: 10.1080/09637486.2017.1283683

27. Vu T, Lin F, Alshurafa N, Xu W. Wearable food intake monitoring technologies: a comprehensive review. Computers. (2017) 6:4. doi: 10.3390/computers6010004

28. Statista. Smartphone Ownership Penetration in the United Kingdom (UK) in 2012-2019, by Age. Available online at: https://www.statista.com/statistics/271851/smartphone-owners-in-the-united-kingdom-uk-by-age/ (accessed January 6, 2020).

29. Boushey C, Spoden M, Delp E, Zhu F, Bosch M, Ahmad Z, et al. Reported energy intake accuracy compared to doubly labeled water and usability of the mobile food record among community dwelling adults. Nutrients. (2017) 9:312. doi: 10.3390/nu9030312

30. Martin C, Correa J, Han H, Allen H, Rood J, Champagne C, et al. Validity of the Remote Food Photography Method (RFPM) for estimating energy and nutrient intake in near real-time. Obesity. (2011) 20:891–9. doi: 10.1038/oby.2011.344

31. Maugeri A, Barchitta M. A systematic review of ecological momentary assessment of diet: implications and perspectives for nutritional epidemiology. Nutrients. (2019) 11:2696. doi: 10.3390/nu11112696

32. McClung H, Ptomey L, Shook R, Aggarwal A, Gorczyca A, Sazonov E, et al. Dietary intake and physical activity assessment: current tools, techniques, and technologies for use in adult populations. Am J Prev Med. (2018) 55:e93–e104. doi: 10.1016/j.amepre.2018.06.011

33. Gemmell J, Williams L, Wood K, Lueder R, Brown G. editors. Passive capture and ensuing issues for a personal lifetime store. In: Proceedings of the 1st ACM Workshop on Continuous Archival and Retrieval of Personal Experiences. New York, NY: Microsoft (2004).

34. Hodges S, Berry E, Wood K. SenseCam: a wearable camera which stimulates and rehabilitates autobiographical memory. Memory. (2011) 19:685–96. doi: 10.1080/09658211.2011.605591

35. Gemming L, Doherty A, Kelly P, Utter J, Mhurchu C. Feasibility of a SenseCam-assisted 24-h recall to reduce under-reporting of energy intake. Eur J Clin Nutr. (2013) 67:1095–9. doi: 10.1038/ejcn.2013.156

36. Sun M, Burke L, Mao Z, Chen Y, Chen H, Bai Y, et al. eButton: a wearable computer for health monitoring and personal assistance. In: Proceedings/Design Automation Conference. San Francisco, CA (2014).

37. Sugden N, Mohamed-Ali M, Moulson M. I spy with my little eye: typical, daily exposure to faces documented from a first-person infant perspective. Dev Psychobiol. (2014) 56:249–61. doi: 10.1002/dev.21183

38. Lee R, Skinner A, Bornstein MH, Radford A, Campbell A, Graham K, et al. Through babies' eyes: Practical and theoretical considerations of using wearable technology to measure parent–infant behaviour from the mothers' and infants' viewpoints. Infant Behav Dev. (2017) 47:62–71. doi: 10.1016/j.infbeh.2017.02.006

39. Jia W, Li Y, Qu R, Baranowski T, Burke L, Zhang H, et al. Automatic food detection in egocentric images using artificial intelligence technology. Public Health Nutr. (2018) 22:1–12. doi: 10.1017/S1368980018000538

40. Caldeira M, Martins P, Costa RL, Furtado P. Image classification benchmark (ICB). Expert Syst Appl. (2020) 142:112998. doi: 10.1016/j.eswa.2019.112998

41. Kawano Y, Yanai K. FoodCam: a real-time food recognition system on a smartphone. Multimedia Tools Appl. (2014) 74:5263–87. doi: 10.1007/s11042-014-2000-8

42. Ahmad Z, Bosch M, Khanna N, Kerr D, Boushey C, Zhu F, et al. A mobile food record for integrated dietary assessment. MADiMa. (2016) 16:53–62. doi: 10.1145/2986035.2986038

43. Noronha J, Hysen E, Zhang H, Gajos K. PlateMate: crowdsourcing nutrition analysis from food photographs. In: UIST'11 - Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology. Santa Barbara, CA (2011). p. 1–12.

44. Johnson L, England C, Laskowski P, Woznowski PR, Birch L, Shield J, et al. FoodFinder: developing a rapid low-cost crowdsourcing approach for obtaining data on meal size from meal photos. Proc Nutr Soc. (2016) 75. doi: 10.1017/S0029665116002342

45. Tseng P, Napier B, Garbarini L, Kaplan D, Omenetto F. Functional, RF-trilayer sensors for tooth-mounted, wireless monitoring of the oral cavity and food consumption. Adv Mater. (2018) 30:1703257. doi: 10.1002/adma.201703257

46. Piro B, Mattana G, Vincent N. Recent advances in skin chemical sensors. Sensors. (2019) 19:4376. doi: 10.3390/s19204376

47. Amft O. A wearable earpad sensor for chewing monitoring. In: Proceedings of IEEE Sensors Conference 2010. Piscataway, NJ: Institute of Electrical and Electronics Engineers. (2010). p. 222–7. doi: 10.1109/ICSENS.2010.5690449

48. Sazonov E, Schuckers S, Lopez-Meyer P, Makeyev O, Sazonova N, Melanson E, et al. Non-invasive monitoring of chewing and swallowing for objective quantification of ingestive behavior. Physiol Measure. (2008) 29:525–41. doi: 10.1088/0967-3334/29/5/001

49. Sazonov E, Fontana J. A sensor system for automatic detection of food intake through non-invasive monitoring of chewing. IEEE Sens. (2012) 12:1340–8. doi: 10.1109/JSEN.2011.2172411

50. Farooq M, Fontana J, Sazonov E. A novel approach for food intake detection using electroglottography. Physiol Measure. (2014) 35:739–51. doi: 10.1088/0967-3334/35/5/739

51. Kohyama K, Nakayama Y, Yamaguchi I, Yamaguchi M, Hayakawa F, Sasaki T. Mastication efforts on block and finely cut foods studied by electromyography. Food Qual Prefer. (2007) 18:313–20. doi: 10.1016/j.foodqual.2006.02.006

52. Kalantarian H, Alshurafa N, Sarrafzadeh M. A wearable nutrition monitoring system. In: Proceedings - 11th International Conference on Wearable and Implantable Body Sensor Networks. Zurich (2014). p. 75–80.

53. Farooq M, Sazonov E. Detection of chewing from piezoelectric film sensor signals using ensemble classifiers. In: Annual International Conference of the IEEE Engineering in Medicine and Biology Society. Orlando, FL (2016). p. 4929–32.

54. Farooq M, Sazonov E. A novel wearable device for food intake and physical activity recognition. Sensors. (2016) 16:1067. doi: 10.3390/s16071067

55. Farooq M, Sazonov E. Segmentation and characterization of chewing bouts by monitoring temporalis muscle using smart glasses with piezoelectric sensor. IEEE J Biomed Health Inform. (2016) 21:1495–503. doi: 10.1109/JBHI.2016.2640142

56. Zhang R, Amft O. Monitoring chewing and eating in free-living using smart eyeglasses. IEEE J Biomed Health Inform. (2017) 22:23–32. doi: 10.1109/JBHI.2017.2698523

57. Dong Y, Hoover A, Scisco J, Muth E. A new method for measuring meal intake in humans via automated wrist motion tracking. Appl Psychophysiol. (2012) 37:205–15. doi: 10.1007/s10484-012-9194-1

58. Thomaz E, Essa I, Abowd G. A practical approach for recognizing eating moments with wrist-mounted inertial sensing. In: ACM International Conference on Ubiquitous Computing. Osaka (2015). p. 1029–40.

59. Dong Y, Scisco J, Wilson M, Muth E, Hoover A. Detecting periods of eating during free-living by tracking wrist motion. IEEE J Biomed Health Inform. (2013) 18:1253–60. doi: 10.1109/JBHI.2013.2282471

60. Kyritsis K, Diou C, Delopoulos A. Food intake detection from inertial sensors using LSTM networks. In: New Trends in Image Analysis and Processing. (2017). p. 411–8.

61. Zhang S, Alharbi R, Nicholson M, Alshurafa N. When generalized eating detection machine learning models fail in the field. In: Proceedings of the 2017 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Wearable Computers. Maui, HI (2017). p. 613–22.

62. Heydarian H, Adam M, Burrows T, Collins C, Rollo M. Assessing eating behaviour using upper limb mounted motion sensors: a systematic review. Nutrients. (2019) 11:1168. doi: 10.3390/nu11051168

63. Noorbergen T, Adam M, Attia J, Cornforth D, Minichiello M. Exploring the design of mHealth systems for health behavior change using mobile biosensors. Commun Assoc Inform Syst. (2019) 44:944–81. doi: 10.17705/1CAIS.04444

64. Schembre S, Liao Y, Robertson M, Dunton G, Kerr J, Haffey M, et al. Just-in-time feedback in diet and physical activity interventions: systematic review and practical design framework. J Med Internet Res. (2018) 20:e106. doi: 10.2196/jmir.8701

65. Kyritsis K, Diou C, Delopoulos A. Modeling wrist micromovements to measure in-meal eating behavior from inertial sensor data. IEEE J Biomed Health Inform. (2019) 23:2325–34. doi: 10.1109/JBHI.2019.2892011

66. Zheng M, Ni B, Kleinberg S. Automated meal detection from continuous glucose monitor data through simulation and explanation. J Am Med Inform Assoc. (2019) 26:1592–9. doi: 10.1093/jamia/ocz159

67. Liao Y, Schembre S. Acceptability of continuous glucose monitoring in free-living healthy individuals: implications for the use of wearable biosensors in diet and physical activity research. JMIR. (2018) 6:e11181. doi: 10.2196/11181

68. Lawlor DA, Lewcock M, Rena-Jones L, Rollings C, Yip V, Smith D, et al. The second generation of the Avon longitudinal study of parents and children (ALSPAC-G2): a cohort profile [version 2; peer review: 2 approved]. Wellcome Open Res. (2019) 4:36. doi: 10.12688/wellcomeopenres.15087.2

69. Rodin D, Kirby M, Sedogin N, Shapiro Y, Pinhasov A, Kreinin A. Comparative accuracy of optical sensor-based wearable system for non-invasive measurement of blood glucose concentration. Clin Biochem. (2019) 65:15–20. doi: 10.1016/j.clinbiochem.2018.12.014

70. Sen S, Subbaraju V, Misra A, Balan RK, Lee Y. The case for smart watch-based diet monitoring. In: Proceedings of the 2015 IEEE International Conference on Pervasive Computing and Communication Workshops (PerCom Workshops). St. Louis, MO (2015). p. 585–90.

71. Movassaghi S, Abolhasan M, Lipman J, Smith D, Jamalipour A. Wireless body area networks: a survey. IEEE Commun. Surveys Tutor. (2014) 16:1658–86. doi: 10.1109/SURV.2013.121313.00064

72. Beltran A, Dadabhoy H, Ryan C, Dholakia R, Jia W, Baranowski J, et al. Dietary assessment with a wearable camera among children: feasibility and intercoder reliability. J Acad Nutr Dietetics. (2018) 118:2144–53. doi: 10.1016/j.jand.2018.05.013

73. Golding J, Pembrey M, Jones R, ALSPAC Study Team. ALSPAC–The Avon longitudinal study of parents and children. Paediatr Perinat Epidemiol. (2001) 15:74–87. doi: 10.1046/j.1365-3016.2001.00325.x

74. Forster H, Walsh M, O'Donovan C, Woolhead C, McGirr C, Daly EJ, et al. A dietary feedback system for the delivery of consistent personalized dietary advice in the web-based multicenter Food4Me study. J Med Internet Res. (2016) 18:e150. doi: 10.2196/jmir.5620

75. Shoneye C, Dhaliwal S, Pollard C, Boushey C, Delp E, Harray A, et al. Image-based dietary assessment and tailored feedback using mobile technology: mediating behavior change in young adults. Nutrients. (2019) 11:435. doi: 10.3390/nu11020435

76. Cao Z, Chen P, Ma Z, Li S, Gao X, Wu R, et al. Near-field communication sensors. Sensors. (2019) 19:3947. doi: 10.3390/s19183947

77. Statista. Total Wearable Unit Shipments Worldwide 2014-2023. Available online at: https://www.statista.com/statistics/437871/wearables-worldwide-shipments/ (accessed January 6, 2020).

78. Batineh A, Mizouni R, El Barachi M, Bentahar J. Monetizing personal data: a two-sided market approach. Procedia Comput Sci. (2016) 83:472–9. doi: 10.1016/j.procs.2016.04.211

Keywords: objective, assessment, eating, wearable, technology

Citation: Skinner A, Toumpakari Z, Stone C and Johnson L (2020) Future Directions for Integrative Objective Assessment of Eating Using Wearable Sensing Technology. Front. Nutr. 7:80. doi: 10.3389/fnut.2020.00080

Received: 02 March 2020; Accepted: 05 May 2020;

Published: 02 July 2020.

Edited by:

Natasha Tasevska, Arizona State University Downtown Phoenix Campus, United States

Reviewed by:

Adam Hoover, Clemson University, United States

José María Huerta, Instituto de Salud Carlos III, Spain

Copyright © 2020 Skinner, Toumpakari, Stone and Johnson. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Andy Skinner, andy.skinner@bristol.ac.uk