- University of Alaska Anchorage, Anchorage, AK, United States

Prior studies on students’ perception of open educational resources (OERs) indicate that students find open resources as good as or better than commercial textbooks (Hilton, 2016). However, studies published to date have not attempted to control for student knowledge of cost as a variable influencing perception of quality. The purpose of this study was to evaluate students’ perception of the quality of brief, de-identified open and commercial textbook samples, then determine whether their preferences changed after learning textbook costs. As part of an in-class activity, students enrolled in an introductory-level psychology course reviewed samples of two commercial and two open textbooks. Participants rated the materials on quality measures (Gurung and Martin, 2011), selected a preferred textbook, and provided a rationale for their choice. Next, participants were informed of the cost of each textbook and asked to re-rate textbook quality and indicate whether their textbook preference had changed. Prior to learning the cost of the textbooks from which each sample was selected, 81.29% of responding participants indicated preference for a specific commercial text, citing quality factors related to quality/clarity of writing, book layout, and quality of figures as primary drivers of preference. Following the cost reveal, only 42.46% of responding participants indicated a preference for a commercial textbook while 57.53% indicated a preference for an open textbook. An exact McNemar’s test determined that this was a statistically significant difference in the proportion of respondents who selected open and commercial texts before and after price data were available, p < 0.01.
Qualitative comments from participants who indicated a preference shift toward the open textbook referenced cost and quality of the materials as components of their decision-making, supporting previous studies that demonstrate cost is an important predictor of students’ textbook preferences (Clinton, 2019). Regression analysis showed that visual appeal, engaging writing, and clarity of writing predicted participants’ desire to use the text in class, but quality of examples was a significant predictor for only one of four texts. Suggestions for future research are discussed.

Introduction

Higher education has become increasingly expensive and the cost of college is of growing concern (Humphreys, 2012). Though tuition is a clear contributor to the cost of a college education, non-tuition college expenses such as textbooks, housing, and other essential needs often exceed the cost of tuition (California Student Aid Commission, 2019). The rising cost of tuition and fees may not be offset by available financial aid (Florida Virtual Campus, 2019), leaving students with difficult decisions about purchasing required course materials, working more, or cutting back on other vital needs (Gurung, 2017; Broton and Goldrick-Rab, 2018).

Various studies report that students regularly forego purchasing required materials due to cost (Chae and Delaney, 2018; Florida Virtual Campus, 2019). Faculty recognize that student costs are a significant barrier to success (Seaman and Seaman, 2019) and open educational resources (OERs) have swiftly gained popularity in higher education. OERs are teaching, learning and research materials in any medium shared under an open license that permits no-cost access, use, adaptation and redistribution by others with no or limited restrictions (United Nations Educational, Scientific and Cultural Organization (UNESCO), 2019). As of 2019, OERs have been adopted in 26% of large introductory undergraduate courses, similar to levels of adoption for some major commercial textbooks (Seaman and Seaman, 2019).

Open educational resources confer a variety of advantages to both student and faculty users. For students, the obvious benefit is cost, as most OERs are free to access. OERs also permit students access to materials without restriction from the first day of courses (Seaman and Seaman, 2019), and students can gain access to materials in a variety of formats and across various devices with little restriction (Watson et al., 2017; Ross et al., 2018). These benefits also extend to faculty, who can adapt materials to best suit their unique courses and learning outcomes due to the shared permissions offered with open licenses (Griggs and Jackson, 2017). Despite these potential benefits, skeptics voice concerns about the quality of OER materials.

To address these concerns, the impact of OERs and commercial textbooks has been extensively studied using the COUP (Cost, Outcome, Usage, and Perceptions) framework (Bliss et al., 2013). Studies on cost may explore student savings, often estimating textbook cost savings for one or many courses before and after transitioning from a commercial text to OERs (e.g., Hilton et al., 2014). Cost studies may also explore ancillary benefits of more affordable textbooks, such as decreased course drop rates, greater persistence, and enrollment intensity (e.g., Fischer et al., 2015; Wiley et al., 2016). The latter studies tend to occur at scale, utilizing institutional data from one or many institutions. OER adoptions are estimated to have saved students over a billion dollars (Scholarly Publishing and Academic Resources Coalition (SPARC), 2019) and are associated with greater student persistence in courses (reducing course withdrawals; Clinton and Khan, 2019). Students using OERs also appear to take more courses, both in semesters when using OER and in subsequent semesters, than students using commercial textbooks (Fischer et al., 2015), generating increased tuition revenue for institutions where OER is used (Wiley et al., 2016).

Outcomes research focuses on the direct changes in student course performance, such as changes in grade achievement, students’ persistence in education, and enrollment patterns. Notable large-scale studies on OER efficacy include Fischer et al. (2015) and Colvard et al. (2018). Fischer et al. (2015) reported no statistically significant differences in course grades in most courses (60%), improved grades in some courses (33%), and decreased performance in only one course (6.7%) when faculty transitioned from commercial to OER textbooks. Colvard et al. (2018) conducted a similar study, evaluating the impact of OER adoption on student performance for students across the University of Georgia system. Colvard et al. (2018) reported statistically significant improvements in final course grades and a reduction in grades of D, F, and W following the transition from commercial to OER textbooks. The disproportionate improvements seen in low-income students, non-white students, and part-time students are the most promising results of the study, as they demonstrate that OERs may effectively level the playing field for students traditionally underrepresented in higher education. While OER adoption does not universally improve student performance, most studies indicate that learning outcomes either do not change or improve following a transition from commercial to OER textbooks. See Hilton (2016, 2019) for thorough meta-analyses on the efficacy of OERs.

Usage research explores how faculty and students engage with OERs, such as evaluating student engagement with OERs and covarying learning outcomes (Gurung, 2017; Clinton, 2018; Cuttler, 2019). Related studies explore pedagogical techniques made possible by OER (Wiley and Hilton, 2018), such as student-generated or remixed OERs (Randall et al., 2013; Azzam et al., 2017; Jhangiani, 2017), and the open sharing of instructional design and pedagogical techniques (Cronin, 2017).

Finally, perception research explores what a variety of stakeholders – including students, faculty, and administrators – think about OERs. Despite advances in technology, the textbook remains a central feature of most courses, and perception research is particularly valuable when understanding the value of textbooks in a course. A substantial number of studies explore faculty perceptions of OERs, student perceptions of OERs, or both. These studies are vital for a number of reasons. For instance, instructors may rely heavily on the textbook to provide course information, expecting students to come prepared for class discussion (in face-to-face classes). The text may function as an instructor substitute for students who are in online classes or who do not attend lecture regularly. Faculty may be inclined to select textbooks based on their students’ preferences (Durwin and Sherman, 2008) or their own perceptions of the quality of the material. Student perceptions of their course materials appear related to students’ decisions to purchase and/or engage with the assigned course textbook, especially if the textbook is perceived to be necessary for completion of coursework (Cuttler, 2019), or if the textbook provides a more credible, reliable source of knowledge than other course resources (Blanchard, 2009).

Studies reporting faculty perceptions of OERs are generally favorable. Survey studies report that most faculty OER adopters consider the quality of OERs to be as good as or better than commercial texts (California Open Educational Resources, 2016; Jung et al., 2017; Abramovich and McBride, 2018) and prefer using OERs to commercial textbooks (Delimont et al., 2016). In self-written faculty reflections on OER adoption (Ozdemir and Hendricks, 2017), faculty indicate their primary motivation for OER adoption is saving students money (80%), with ancillary benefits focusing on increasing satisfaction with content (44%), with permissions enabled by OER (24%), and with improved financial accessibility and format flexibility (20%). However, these faculty-focused studies are limited by their reliance on self-report measures and lack of meaningful control groups.

Likewise, research on student user perceptions of OER tends to favor OER over commercial textbooks. Most students surveyed report that OERs are as good as or better than commercial texts in many studies, including those focused within subject matter areas (e.g., health psychology, Cooney, 2017; physics, Hendricks et al., 2017; biology, Watson et al., 2017), in studies across multiple courses at a single institution (e.g., Ikahihifo et al., 2017), and in studies of OER student users across multiple institutions (e.g., Abramovich and McBride, 2018; Griffiths et al., 2018). Many studies also report student preferences for using OER instead of commercial texts. For instance, Delimont et al. (2016) report that students moderately agreed with a statement that they preferred using OER to a commercial textbook (M = 5.7 on a 7-point Likert type scale, 7 indicating strongly agree). Jhangiani and Jhangiani (2017) came at this another way, asking students who had used OER whether they would have preferred to purchase a commercial text. Only 20% of respondents indicated slight or strong agreement with this statement; the reader is left to infer that the other 80% of students preferred not to purchase a text. Similarly, Ross et al. (2018) found that 83% of sociology students surveyed said they would not have preferred to purchase a $100 (CAD) commercial textbook for their course.

Taken together, these studies all indicate strong satisfaction from OER student users. However, specific limitations must be noted. First, these studies have not controlled for familiarity with the course material as a variable influencing choice. That is, students may simply prefer what they already know over other hypothetical course materials. Second, these studies lack any meaningful comparison data – either for comparison materials or comparison to groups using commercial textbooks. Rating the quality of OER materials without providing access to content-matched comparison materials produces only correlational data supporting student preference for OERs. Third, many of these studies do not explore what specific variables were associated with student satisfaction. Research suggests that there may be an obvious confounding variable in play: ratings of the course material may reflect student perception of the quality of the course or instructor. For instance, while Griffiths et al. (2018) demonstrated student satisfaction with OER materials, these ratings closely matched students’ ratings of the quality of other aspects of the course, suggesting that students’ perceptions of the course materials are tied closely to their perceptions of the quality of the course as a whole. Studies also suggest that there is a relationship between faculty OER use and students’ perception of faculty. Vojtech and Grissett (2017) found that participants recruited from upper division psychology courses rated a hypothetical faculty member using OER as kinder, more encouraging, more creative, and a more preferred instructor than a comparative hypothetical faculty member using a commercial textbook. Put simply, multiple course features (such as instructor quality, course design, cost of the text, etc.) may influence student perceptions of OERs beyond the objective quality features of those materials (Hilton, 2016).

Other OER perception studies attempt to control for these limitations by including a commercial textbook-using comparison group. These studies may also evaluate a variety of other use and outcome variables. For instance, Gurung (2017) recruited 1,099 students taking psychology courses at seven institutions and evaluated student satisfaction with the text assigned by their institution, student perception of their class and learning experiences, student performance on a 15-item quiz, and student prior academic achievement (operationalized using ACT standardized college admission test scores). Commercial textbook users reported higher ACT scores, but less satisfaction with learning and with their course. OER users reported that the examples in their texts were more applicable to their everyday life, but reported using fewer study strategies and study aids, and rated their textbook more poorly on elements of visual appeal than students using commercial textbooks. Finally, students using OER performed lower than students using commercial textbooks, even when controlling for ACT scores. Authors speculate that the format of book (i.e., digital OER vs print commercial textbook) may have contributed to these differences.

Jhangiani et al. (2018) went one step further, conducting a quasi-experimental study exploring student perception, textbook use, and course performance in Canadian college students assigned digital OERs, print OERs, or a print commercial textbook. Students were not randomly assigned, and access to the book was a function of the student’s section enrollment. Results indicate that students assigned the OER text (either digital or print) performed similarly or better on course exams than students using the commercial text. There also appeared to be no statistically significant differences in self-reported textbook use. Students rated the print OER textbook higher on seven of 16 quality dimensions adapted from the Textbook Assessment and Usage Scale (TAUS; Gurung and Martin, 2011); there was no dimension on which the commercial text was rated higher than the OER text, and there were no significant differences between the commercial textbook and the digital OER, suggesting student preference for printed books. Finally, and perhaps most interesting, when students were asked to offer a fair market price for the text they used, students across all textbook types suggested $50 as a fair price for the book.

Cuttler (2019) likewise conducted a quasi-experimental study comparing various student metrics (such as self-reported textbook use, perception of correspondence between assessment and assigned materials, and satisfaction) of students using OERs to students using commercial textbooks, but added the element of comparing students in online and face-to-face sections of four psychology courses. Students were not randomly assigned and access to the book was a function of the students’ section enrollment. Results indicate that students assigned OERs reported using the textbook more (frequency and duration), perceived a greater degree of correspondence between textbook content, class activities, and assessments, and rated the OER textbook as better than the commercial textbook on 11 of 15 TAUS quality dimensions (Gurung and Martin, 2011). These results were generally consistent between students in face-to-face and online sections. Interestingly, 51.68% of students reported that textbooks that they had been required to purchase in the past had not been used enough to justify their cost, with higher reports of underutilization coming from students in face-to-face classes (57%) than online classes (48.2%). Authors speculate that utilization of the textbook may be a function of delivery, with online students relying more on the assigned text due to having fewer opportunities for learning directly from the course instructor. This highlights the valuable role that textbooks play in a course and what instructors likely fear most – that assigned textbooks go unread.

Grissett and Huffman (2019) evaluated the course performance, textbook use, and perceptions of students using OER or commercial textbooks in an introductory psychology course. Unlike Jhangiani et al. (2018) and Cuttler (2019), Grissett and Huffman (2019) were able to hold potential course- and instructor-specific variables constant by comparing sections taught by the same instructor on alternate days. Results indicate no significant difference in student exam performance or textbook use between the two courses. Student satisfaction was evaluated using a 22-question survey adapted from a variety of scales measuring student textbook perception of quality, use, and satisfaction (McGowan et al., 2009; Gurung and Martin, 2011; Gurung and Landrum, 2012; Lindshield and Adhikari, 2013). Unlike previous studies that asked students to evaluate only the textbook they used, Grissett and Huffman (2019) also asked students to rate the potential benefits of a digital textbook versus a traditional printed textbook regardless of the format that the participants used. Results indicate that participants considered the biggest advantages of a digital OER textbook to be reduced cost and the increased convenience of a portable digital format. Participants indicated that the biggest advantages of a traditional printed textbook were ease of reading, ability to mark up the text, ability to quickly find a topic, and ability to keep the book as a future reference. Finally, when asked to choose one format, most students selected the format of textbook that they were currently using as their most preferred text (69% of traditional/commercial printed textbook users and 70% of free, online textbook users, respectively).

Clinton (2018) controlled for instructor variables by evaluating student performance (assessment score and overall course grades) and perceptions of quality prior to and following the instructors’ transition to an OER textbook in an introductory-level psychology course. Results indicate that students performed slightly better in the semester following OER textbook adoption, though this result is complicated by participants in the OER group reporting higher high school GPAs. There were no significant differences in student use of the textbooks, but there were significantly fewer withdrawals when using the OER textbook, replicating previous findings about the value of OER for promoting student persistence in courses. Last, students perceived the quality of the textbooks to be generally comparable except on the dimension of visual appeal (favoring the commercial text) and the quality of writing (favoring the OER text).

Most of these quasi-experimental studies support the benefit of OER for students, including similar or better performance, reduced costs, and similar or better text quality, as rated by students. The exception to this pattern of more favorable perception for OER textbooks is Lawrence and Lester’s (2018) comparison of student satisfaction and performance prior to and following the instructors’ transition to an OER text. Results indicate that there was no statistically significant difference in performance before and after the transition to OERs, yet fewer students reported satisfaction with the OER (57%) than reported satisfaction with the commercial text in a previous semester (74%). Students also were less likely to endorse satisfaction with various quality elements and the OER text only outperformed the commercial text on ratings of affordability. The authors note that at the time of the study, OER textbook offerings for their field were “relatively immature” (p. 562) and quality ratings could improve in the future as more high-quality materials become available.

The previous studies vary in quality and even quasi-experimental preparations contain critical limitations. These studies have not controlled for student knowledge of the cost of the textbook material as a factor influencing preference. That is, students may demonstrate bias in their reported preferences, rating a free textbook more favorably than a commercial counterpart. To date, the only example of a true experimental preparation evaluating differences in perception of OERs vs commercial texts can be found in Clinton et al. (2019). Researchers recruited and randomly assigned 144 students who had previously taken an introductory-level sociology course to read excerpts from either an OER or commercial textbook. The samples were of approximately equal length, covered related content, and students were not informed of the cost of the course materials nor whether the excerpt was taken from an OER or commercial text. Researchers evaluated student performance on a 10-item learning quiz consisting of five items drawn from each textbook’s test bank. Participants were also asked to complete a 14-item survey adapted from the TAUS (Gurung and Martin, 2011) with open-ended responses to assess perceived quality of the course materials.

Results indicated no significant differences in performance between students assigned the OER or commercial textbooks. Student perceptions of quality factors were likewise similar except for relevance of photos, photograph placement, writing engagement, and writing clarity (all favoring the commercial text). Students using the OER excerpt rated the sample as better in recency of research findings and in using research findings to explain material. Researchers also found positive correlations between student assessment scores and their reported perception of textbook quality, particularly on dimensions related to writing clarity, interest, and the helpfulness and relevance of examples. For students using the commercial textbook, there were significant positive correlations between the learning outcomes and student ratings of textbook interest, textbook helpfulness, relevance of examples, writing engagement, and clarity. For the OER textbook, the only significant correlation between student assessment score and quality was for writing engagement.

Grounded theory analysis of students’ open-ended responses about helpful features of the textbooks indicated that layout features like use of colors, headings, bolded words, and definitions, as well as balance of text and pictures were factors associated with greater usability. It appears that there is a balance to the benefit of adding additional features, as some students referenced visual layout as a distraction, particularly when there was inconsistency in font and an overabundance of callouts in the text. Students also referenced the quality of writing, favoring writing that was clear, simple, and included multiple real-life examples to explain concepts over writing that was too wordy, statistical, “too academic,” or lacked examples.

Taken together, the available literature assesses student satisfaction with OER course materials on self-report surveys. Most quasi-experimental studies demonstrate that students perform as well or better with OER and perceive OER as similar to or better in quality than commercial texts (cf. Lawrence and Lester, 2018). However, quasi-experimental studies cannot control for biases such as student self-selection or uncontrolled external variables between groups, such as instructor differences, changes in course policy, or cohort effects (Hilton et al., 2013). It is also difficult to control for student knowledge of the cost of the textbook unless materials are provided to the students free of charge (e.g., Clinton et al., 2019).

While some available studies explore students’ direct evaluation of the quality of OER and commercial texts, there appeared to be no studies directly exploring the specific question of how the knowledge of cost influences student perceptions. Moreover, most studies comparing student perception of materials compared ratings from a single evaluation – no studies known to the authors have conducted repeated observations of student-perceived quality and whether those ratings changed as a function of learning pertinent information about the course materials (e.g., cost). This study aimed to address the following questions:

1) Do students perceive differences in quality and preferences between popular OER and commercial textbooks?

2) Do student perceptions of quality and/or preferences shift upon learning the cost of the materials?

3) Are those preferences reliably associated with any stimulus quality features previously reported in the literature?

Materials and Methods

This study was completed as part of a 75 min in-class activity to demonstrate psychological research methodology. The activity corresponded with a review of basic research concepts (e.g., independent and dependent variables). To permit students an opportunity to refuse participation in the study without penalty to their course grade, all students present during the activity earned credit for rating the class activity regardless of their participation in the study.

Participants

Potential participants (N = 168) were enrolled in an Introduction to Psychology course at a large, open enrollment university in the Pacific Northwest across three semesters: Spring 2018 (n = 73), Fall 2019 (n = 56), and Spring 2020 (n = 39). Students in these courses were assigned the OpenStax Psychology textbook along with free-to-access or instructor-created ancillary resources for their primary course materials and the course was delivered as a flipped-model classroom. The instructor provided a study guide highlighting learning objectives, assigned materials, and key terms prior to course lecture and expected students to review the content prior to lecture. Each class began with a brief, low-stakes activity to promote preparation and engagement. However, participants were not provided a study guide on the day of the study and were informed that there were no assigned preparation materials for the day.

Specific demographic data were not collected as part of this study. However, the course is a popular lower-division general education course for undergraduate students from across the university. The course is predominantly taken by students within their first year of study; 63.9% of students in the sampled courses indicated that they were within their first year of university studies on a separate student intake survey.

Obtaining parent consent for students under the age of 18 was beyond the scope of this study. As a result, data for students under the age of 18 (n = 16) were excluded from analysis. Students were also given an opportunity to withdraw from the study and cease participation at any point. Responses for one student who began completing the study but did not complete all survey materials were excluded from the final analysis. Excluding materials from students under the age of 18 and those who started but did not complete the study yielded responses from a total of 151 participants.
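The sample accounting described above can be checked with a few lines of arithmetic. The sketch below uses only the counts reported in this section; the variable names are illustrative and are not part of the study materials.

```python
# Enrollment per semester, as reported in the Participants section.
enrolled = {"Spring 2018": 73, "Fall 2019": 56, "Spring 2020": 39}

# Exclusions reported in the text.
under_18 = 16    # parent consent was not sought, so these data were excluded
incomplete = 1   # began the study but did not complete all survey materials

potential = sum(enrolled.values())
analyzed = potential - under_18 - incomplete

print(f"Potential participants: {potential}")
print(f"Included in analysis: {analyzed}")
```

The two totals agree with the reported N = 168 potential participants and 151 analyzed participants.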

Materials

All participants received an envelope containing study materials and course materials to facilitate quick distribution of materials during the in-class activity. Study materials included an informed consent document, a packet containing samples from four textbooks, and a pre-price reveal survey assessing students’ perceptions of the different samples and preferences (described below). Class activity materials included a half-page activity feedback form as well as a copy of the informed consent document and take-home study questions about research methodology details covered in the project for later review.

The packet of textbook samples included two-page samples explaining independent variables and dependent variables, duplexed in full color. Two samples were drawn from commercial textbooks – Psychology in Your Life (Grison et al., 2015, pp. 32–33) and The Science of Psychology: An Appreciative View (King, 2013, pp. 24–25), and two from open textbooks – Psychology (OpenStax, 2014, pp. 55–56) and Introduction to Psychology – 1st Canadian Edition (Stangor and Walinga, 2014, pp. 103–104). For parsimony, these samples will hereafter be referred to by primary author name or publisher (i.e., Grison, King, OpenStax, and BCcampus, respectively).

Textbook samples were selected for topical coverage and length (page count). The researchers selected the two pages that presented information on independent variables and dependent variables in research methods. No other attempts were made to control content or visual components between samples, nor to edit the content for the reader (such as to omit partial sentences continuing from previous pages or onto subsequent pages not provided). During the first data collection session (Sp2018), the sample packet excerpts were arranged Grison, OpenStax, BCcampus, then King. To attempt to control for potential order effects, participants in the subsequent two iterations (Fa2019 and Sp2020) received the sample packet excerpts arranged King, OpenStax, Grison, then BCcampus.

All words (e.g., text found in headings, diagrams, and figure captions) and added visual features (e.g., figures, tables, and hints/definitions in margins) were counted. The open textbooks contained fewer words (BCcampus, 907 words; OpenStax, 916 words) than the commercial texts (Grison, 1,098 words; King, 1,580 words). The open textbooks also contained fewer added visual features (BCcampus, 3 features; OpenStax, 2 features) than the commercial texts (Grison, 7 features; King, 10 features).

Measures

Adapted Perception of Textbook Quality Survey

Student survey materials included a pre-price reveal and post-price reveal survey to assess participants’ perception of textbook quality. On the pre-price reveal survey, participants were asked to rate various sample quality features (including visual appeal, quality of examples, engaging writing, understandable writing, and desire to use the sample textbook in class) for each textbook sample on a 5-point Likert type scale ranging from “strongly disagree” to “strongly agree.” These rating questions were adapted from Gurung and Martin’s Textbook Assessment and Usage Scale (TAUS; Gurung and Martin, 2011). Participants were also asked to propose a fair price to pay for each of the textbooks based on the samples. Last, participants were asked to select their most preferred textbook sample and provide a rationale for their choice. No identifying information about the texts or any information about the price of materials was provided. See Supplementary Table 1 for the pre-price reveal survey instrument.

Following a review of price information about the textbooks from which the samples were drawn, participants received a second copy of the perception of textbook quality survey. Participants were first asked to re-evaluate their top choice from the previous survey, specifically to re-select a top choice and describe whether learning the cost of the textbook altered their preference. Next, participants were provided with identifying information about the four textbook samples (including author, title, and cost) and were asked to re-rate the four textbook samples along the quality dimensions and scale (visual appeal, example quality, engaging writing, understandable writing, and desire to use the sample textbook) as on the pre-price reveal survey. Participants were also asked to rate whether they considered the cost of the text fair using the same Likert-type scale. Finally, participants were asked to briefly reflect upon whether knowing the cost of the textbook changed their opinions of the materials. See Supplementary Table 2 for the post-price reveal survey instrument.
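Because each participant selects a top choice on both surveys, the paired selections can be summarized as a 2 × 2 table of textbook type (commercial or open) before and after the price reveal. The following is a minimal sketch of that tabulation, assuming responses are stored as (pre, post) pairs of sample labels. The function name and the example responses are hypothetical and are shown for illustration only; they are not study data.

```python
from collections import Counter

# Textbook type for each sample label used in this paper.
TEXT_TYPE = {
    "Grison": "commercial",
    "King": "commercial",
    "OpenStax": "open",
    "BCcampus": "open",
}

def transition_table(pairs):
    """Count (pre-reveal type, post-reveal type) combinations of top choices."""
    counts = Counter((TEXT_TYPE[pre], TEXT_TYPE[post]) for pre, post in pairs)
    kinds = ("commercial", "open")
    # Fill in zero for any combination that never occurs.
    return {(a, b): counts.get((a, b), 0) for a in kinds for b in kinds}

# Made-up responses for illustration only (NOT study data).
example = [
    ("King", "King"),
    ("King", "OpenStax"),
    ("Grison", "OpenStax"),
    ("OpenStax", "OpenStax"),
    ("BCcampus", "King"),
]
table = transition_table(example)
for cell, n in table.items():
    print(cell, n)
```

The two off-diagonal cells (commercial to open, and open to commercial) are the discordant pairs on which a paired test of changing preference relies.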

Procedure

The experiment was embedded within an in-class activity on research methods. The researcher evaluated student perception of the quality of textbook materials using a one-group pretest-posttest design (Campbell and Stanley, 1959). Student perceptions were evaluated during an initial baseline observation, then information about the author, publisher, and cost of the book was provided. The researcher then re-evaluated student perception of textbook quality, assessing whether textbook preferences had shifted as a function of exposure to information about textbook cost.

Class sizes ranged from 39 to 73 participants per administration. At the start of class, the first author described the class activity, indicating that the class would have an opportunity to experience psychological research directly if they desired. To mitigate the coercive nature of the instructor as researcher, students were informed that their credit for the day would come from providing feedback on the class activity, not from completing the study. The researcher also encouraged any student who preferred not to participate in the research to collect a packet and follow along with the class even if they declined to submit their materials to reduce any coercive feelings of social pressure from peers.

Each student received an envelope containing study materials to facilitate quick distribution, organization, and collection of study materials during the short class activity. Following distribution of the study packet, the researcher reviewed the informed consent form at length. Each critical element of the consent form was read aloud and discussed as part of the in-class activity, including the students’ right to not participate in the study or to stop participating at any point throughout the activity. The researcher offered to answer questions about the study expectations or informed consent statement. After the researcher answered any clarifying questions, participants were instructed to sign their form if they agreed to participate. If students chose not to participate, they were instructed to leave the form unsigned. Because it was beyond the scope of this study to request parent permission for students under the age of 18 to participate, students were instructed to clearly write “NOT 18” on the form if they were under 18 years old, and place the unsigned consent form into the study envelope. Participants were asked to place their consent forms – signed or unsigned – in the envelope to return to the researcher at the end of the class activity. Packets with blank consent forms or with consent forms indicating the participant was under the age of 18 were omitted from analysis and destroyed per IRB protocol. Review of the study protocol and informed consent form was completed in approximately 20 min.

Participants were then instructed to remove the textbook sample packet and the pre-price reveal survey from the packet. The researcher explained the study and the survey instrument. Once any questions were answered, participants were asked to read and rate each of the four samples (20 min), then place their completed survey within the study envelope. Next, the researcher led a brief review of what information could be learned from participant responses to the pre-price reveal survey and discussed concepts like correlation and causality. Following this brief class discussion, the researcher provided information about the titles, authors, and costs of each of the textbook samples included in the study packet. The researcher distributed the post-price reveal survey and asked participants to review and re-rate the samples using the new post-price reveal survey (10 min). Participants placed their survey in the study envelope when completed.

Last, the researcher collected all participants’ study envelopes and debriefed participants on the nature of the study. The class concluded with a brief discussion of the project, methodological decisions and limitations, possible confounding variables, and any last student questions. Students were asked to complete and submit the class activity rating form. This last stage of the class activity was completed in approximately 10 min.

Analyses

To determine initial textbook preferences and the factors that contributed to them, descriptive statistics (means and standard deviations) were calculated for participants’ ratings of the visual appeal, use of good examples, clarity of writing, and engaging writing of each sample, both before and after price information was revealed, and the two sets of ratings were compared. Descriptive statistics, including ranges, were also calculated for the prices students proposed for each book.

To analyze preference changes and cost information, descriptive statistics were calculated and an exact McNemar’s test was used to determine whether there were statistically significant differences in the proportion of participants who selected each type of text (commercial or open) before and after price data were available. This analysis is appropriate to determine whether there are significant differences on a dichotomous variable, in this case open or commercial textbook selection, in two conditions (“pre-price reveal” and “post-price reveal”; Adedokun and Burgess, 2012).

Open coding was conducted on the qualitative data participants provided to explain their textbook choice, to identify key themes driving textbook selection.

To further explore the factors that contribute to participants’ choices of texts, multiple linear regression analyses were conducted using the text’s visual appeal, quality of examples, engaging writing, and clarity of writing as independent variables to predict participants’ desire to use each textbook (the dependent variable). Participants’ reported desire to use each textbook was treated as a continuous measure of preference, using data on a five-point Likert scale (1 = Strongly Disagree they would like to use the text and 5 = Strongly Agree they would like to use the text).

Results

Question 1: Initial Textbook Quality Perception and Preference

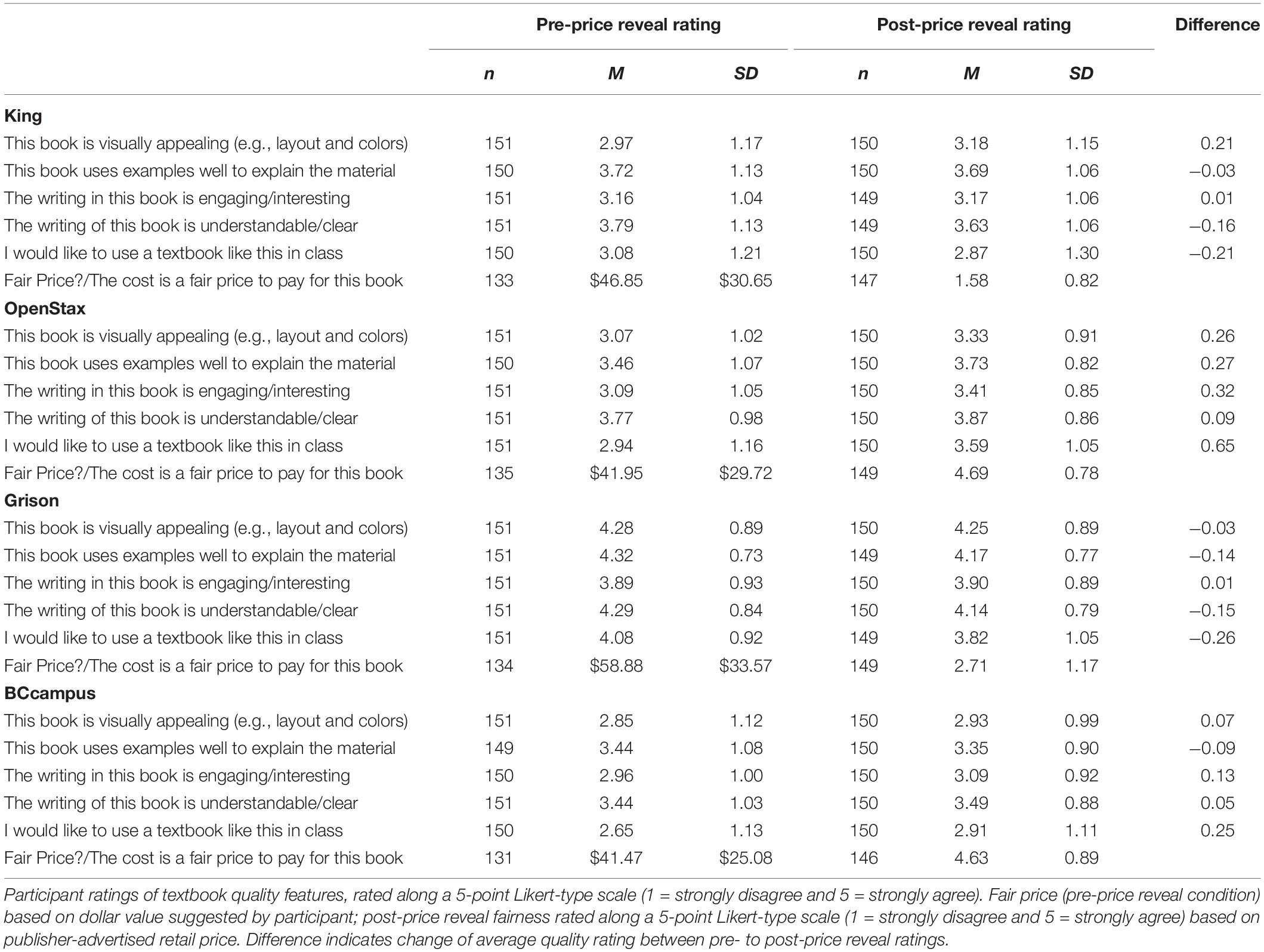

Participants provided the most favorable ratings for the Grison textbook, indicating that the book was visually appealing (M = 4.28, SD = 0.89), used good examples (M = 4.32, SD = 0.73), had engaging (M = 3.89, SD = 0.93) and clear writing (M = 4.29, SD = 0.84), and indicated high agreement that it would be a book they would like to use (M = 4.08, SD = 0.92). Ratings for the OpenStax and King books were roughly matched with one another, and the least favorable overall ratings were provided for the BCcampus text (visually appealing: M = 2.85, SD = 1.12; good examples: M = 3.44, SD = 1.08; engaging writing: M = 2.96, SD = 1.00; clear writing: M = 3.44, SD = 1.03; would like to use: M = 2.65, SD = 1.13). See Table 1 for a summary of all student quality ratings.

Participants also proposed a fair value for each book prior to learning the cost of the material. The highest average suggested value was offered for the Grison text (M = $58.88, SD = $33.57, range $0 to $175), followed by King (M = $46.85, SD = $30.65, range $0 to $192), OpenStax (M = $41.95, SD = $29.72, range $0 to $200), then BCcampus (M = $41.47, SD = $25.08, range $0 to $150). The average price offered by participants was significantly higher for the Grison text than the other three texts (p < 0.01). The average price offered for the King text was significantly higher than that offered for the OpenStax text (p < 0.05). There were no other statistically significant differences in the values participants assigned to the other books.

One hundred thirty-nine participants (92.05%) selected a most preferred book, and the top selection aligned with quantitative ratings. Of the participants who indicated a preferred text, Grison was the most frequently selected preferred book (n = 91, 65.47%), followed by King (n = 22, 15.83%), OpenStax (n = 14, 10.07%), and then BCcampus (n = 12, 8.63%). Before learning cost information about each book, 81.29% of respondents who selected a preferred text (n = 113) selected one of the two commercial textbooks (Grison or King), demonstrating a strong preference for the commercial textbook offerings – specifically the Grison text. Only 18.70% of respondents who selected a preferred text (n = 26) selected one of the two open textbooks (BCcampus or OpenStax) as their top choice.
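
The shares reported above follow directly from the selection counts. A minimal sketch of that arithmetic, using only the counts given in the text (the variable and function names are illustrative, not part of the study materials):

```python
# Pre-price reveal counts of "most preferred" selections, as reported in the text.
counts = {"Grison": 91, "King": 22, "OpenStax": 14, "BCcampus": 12}
commercial_texts = {"Grison", "King"}  # the other two samples are open texts


def percent_shares(selection_counts):
    """Return each option's share of all selections, in percent (2 decimals)."""
    total = sum(selection_counts.values())
    return {name: round(100 * n / total, 2) for name, n in selection_counts.items()}


total = sum(counts.values())
commercial_n = sum(n for name, n in counts.items() if name in commercial_texts)
open_n = total - commercial_n
shares = percent_shares(counts)

print(total, commercial_n, open_n)
print(shares)
print(round(100 * commercial_n / total, 2), round(100 * open_n / total, 2))
```

Differences in the last decimal place relative to published figures can arise depending on whether values are rounded or truncated.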

Participants’ rationales (N = 128) for their top textbook choices were open coded (Strauss and Corbin, 1990) to evaluate emerging themes. Responses appeared to focus around four major areas – (1) features of the quality of writing, such as the ease of following along or engagement with the material, (2) the quality of examples used, (3) layout of the material to facilitate reading, such as the addition of specific elements like color, typesetting, or page design to guide narrative flow, and (4) the use of figures to demonstrate concepts. A single comment may have contained multiple themes; thus, the number of coded comments exceeded the overall number of qualitative responses provided. For instance, “[sample 3] was most [visually] appealing and vocab words were more [visible]. The graphs also gave a good visual reference” contains reference to both the way that the text was formatted (layout) and the figures used in the sample (figures) and was scored as an example of a comment related to layout as well as a comment related to the quality of figures. Of the 232 coded comments, participants made specific reference to the quality of writing (n = 75, 32.33%), followed by figures (n = 67, 28.88%), layout (n = 59, 25.43%), then quality of examples (n = 31, 13.36%) as rationales guiding their most preferred text.

Question 2: Perception and Preference Changes Following Price Reveal

Following the price reveal, participants’ ratings of textbook quality changed only slightly compared to ratings provided in the pre-price reveal condition. For the Grison text, all TAUS subscale ratings except ratings for the quality of writing decreased slightly. All ratings except for use of good examples increased for the BCcampus text compared to pre-price reveal ratings. All ratings for the OpenStax textbook increased. Ratings for the King textbook were mixed; use of good examples, writing clarity, and desire to use the textbook decreased while engagement of writing and visual appeal increased. Overall, these changes suggest that knowledge of the cost of course materials influenced participants’ perceptions of the quality of materials; open textbooks received generally higher TAUS ratings and commercial texts received mixed or generally lower TAUS ratings compared to the pre-price reveal condition. See Table 1 for a summary of all student quality ratings.

After price data were revealed, participants were also asked to rate their agreement that the cost of each textbook was fair on a 5-point Likert-type scale, ranging from 1 (Strongly Disagree) to 5 (Strongly Agree). The two open texts were perceived as being priced fairly (OpenStax M = 4.69, SD = 0.78; BCcampus M = 4.63, SD = 0.89) while the two commercial texts received substantially lower scores on price fairness (Grison text M = 2.71, SD = 1.17; King M = 1.58, SD = 0.82) than the open texts. Paired samples t tests revealed that there was no statistically significant difference in perceived price fairness between the two open texts, t(130) = −0.07, p = 0.95, or between the King and BCcampus text, t(128) = 1.75, p = 0.08. There were significant differences in each of the other pairings comparing commercial texts with each other and commercial texts with open texts [King vs Grison: t(131) = −5.78, p < 0.01; King vs OpenStax: t(132) = 2.05, p < 0.05; Grison vs OpenStax: t(132) = −8.82, p < 0.01; Grison vs BCcampus: t(129) = 6.99, p < 0.01]. These ratings were likely heavily influenced by the cost participants were willing to pay, as participants offered a fair average price of $58.88 (SD = $33.57) for the Grison text and $46.85 (SD = $30.65) for the King text compared to their market prices of $65–$137.50 and $90–$255.67 (based on format), respectively.

Before price data were available, 81.29% of participants who indicated a text preference selected one of the commercial textbooks and 18.70% selected one of the open textbooks as their most preferred text. Once cost data were provided for each text, participants were asked to re-select their most preferred text taking the new cost information into account. Only 73 of the 81 participants who indicated a top choice book in the post-price reveal condition had also selected a top choice textbook in the pre-price reveal condition, permitting a comparison of preference shift.

Of participants selecting a top choice text in both the pre- and post-price reveal conditions, 30 participants (41.10%) maintained their preference for the commercial textbook they had selected in the pre-price reveal condition. One participant (1.37%) indicated a preference shift between commercial texts, initially selecting the King book but switching to the Grison text. Thirty participants (41.10%) who had chosen commercial textbooks in the pre-condition changed their preference to an open textbook – either OpenStax specifically (n = 25, 34.25%) or citing any open textbook as their preference (n = 5, 6.85%). None of the 12 participants (16.44%) who had chosen open textbooks in the pre-condition changed their selection to a commercial (i.e., more expensive) textbook once price data were available, nor did participant preferences shift between the open texts (i.e., from OpenStax to BCcampus, or vice versa).

While pre-post comparisons of textbook preference were unavailable for many participants (n = 78, 51.66%), 30 of the 73 participants who indicated a preference on both the pre- and post-price reveal surveys shifted their preference from commercial to open texts. An exact McNemar’s test determined that this was a statistically significant difference in the proportion of participants who selected open and commercial texts before and after price data were available (p < 0.01).
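
For readers who wish to see how an exact McNemar’s test operates on counts like these, the following is a minimal sketch rather than the authors’ analysis script. It assumes the common two-sided binomial formulation, in which only the discordant pairs (participants who changed category) enter the test. The function name is hypothetical, and the cell counts are those reported in the text.

```python
from math import comb


def exact_mcnemar_p(b, c):
    """Two-sided exact McNemar p-value from the two discordant-pair counts.

    Under the null hypothesis, each discordant pair is equally likely to fall
    in either direction, so min(b, c) follows Binomial(b + c, 0.5).
    """
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs: no evidence of change
    k = min(b, c)
    lower_tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * lower_tail)


# Paired selections (n = 73) as reported in the text.
stayed_commercial = 31   # 30 kept the same text, 1 moved from King to Grison
commercial_to_open = 30  # discordant pairs
open_to_commercial = 0   # discordant pairs
stayed_open = 12

p_value = exact_mcnemar_p(commercial_to_open, open_to_commercial)
print(f"exact McNemar p = {p_value:.2e}")
```

Because no participant moved from an open to a commercial text, the p-value under this formulation depends only on the 30 commercial-to-open switches, and it falls well below the 0.01 threshold.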

Finally, researchers asked participants to reflect on whether and why their top choice preferences had shifted in an open-ended response question. Qualitative student comments (N = 87) were open coded for themes related to the participants’ rationales using the open coding method described above. Rationales focused on issues of (1) quality, including comments referencing quality specifically or alluding to quality features like layout, clarity of writing, etc. (n = 50, 57.47%), (2) issues of cost, such as referencing the value of the material (n = 34, 39.08%), or (3) specific reference to familiarity with the text used (n = 3, 3.45%). Comments for individuals indicating a preference for a commercial textbook (n = 35) were more likely to indicate their top choice was due to the quality of the course material (n = 33, 94.29%), followed by price (n = 2, 5.71%), often specifically referencing the fairness of price for value. Comments for individuals indicating a preference for an open textbook (n = 52) were more likely to reference price (n = 32, 61.54%), followed by quality (n = 17, 32.69%), then familiarity with the book (n = 3, 5.77%). It is worth noting again that one sample text used in this study was the OpenStax textbook assigned in the course from which participants were recruited.

Question 3: Factors Associated With Preference

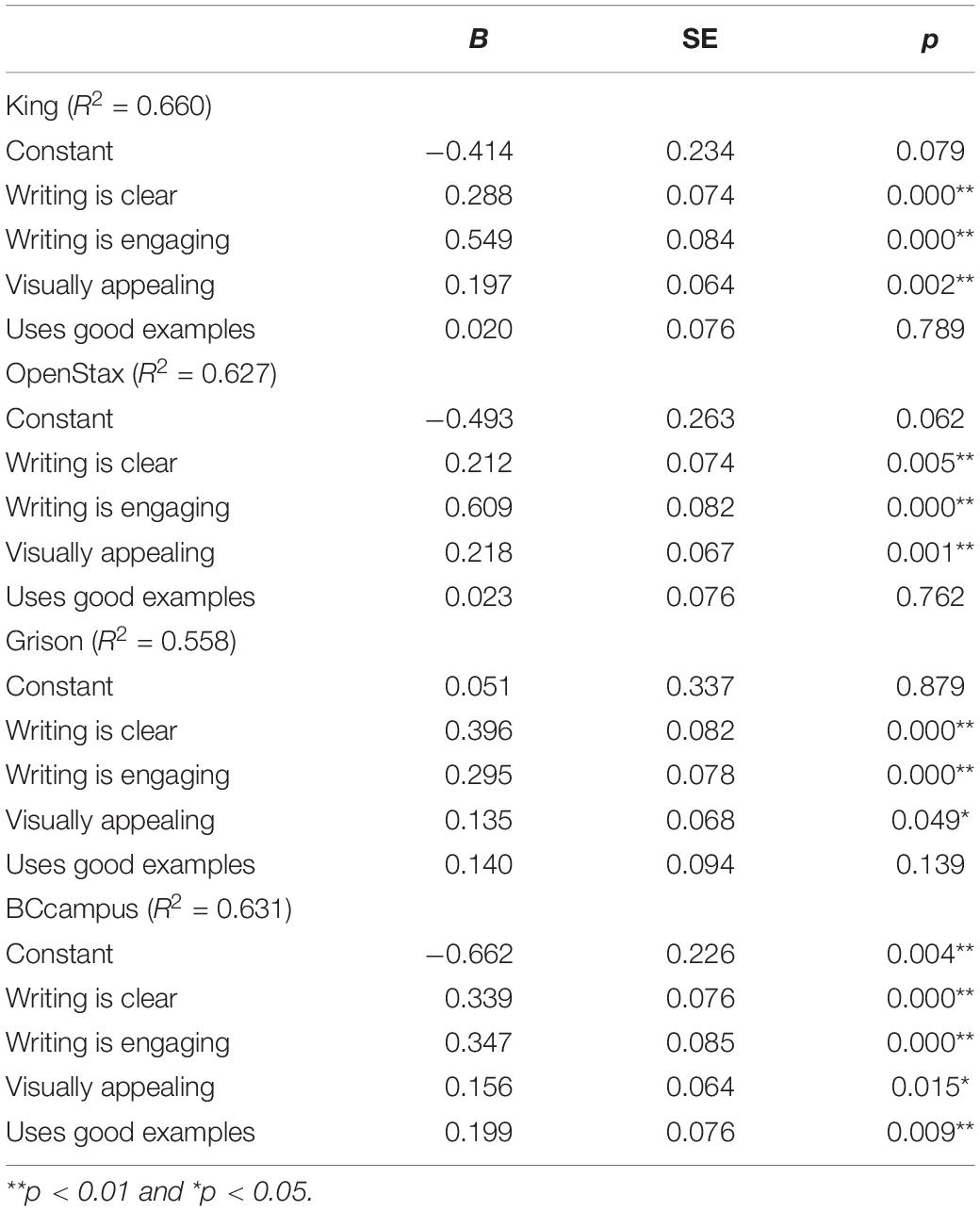

To better understand the factors that contribute to participants’ choice of text, regression analyses were conducted for each text. A multiple linear regression was calculated to predict participants’ desire to use each textbook in class, based on the sample text’s visual appeal, quality of examples, engaging writing, and clarity of writing. The regression models were tested by running collinearity statistics. For the regression models in Table 2, the variance inflation factor varied between 1.32 and 2.25 (average VIF = 1.84) and tolerance statistics varied between 0.45 and 0.76. Therefore collinearity was not an issue.
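
As a brief aside on the collinearity diagnostics, the two statistics reported above are reciprocals of one another. Writing $R_j^2$ for the proportion of variance in predictor $j$ that is explained by the remaining predictors (notation introduced here for illustration only), the standard definitions are:

```latex
\mathrm{VIF}_j = \frac{1}{1 - R_j^2},
\qquad
\mathrm{Tolerance}_j = 1 - R_j^2 = \frac{1}{\mathrm{VIF}_j}
```

Under this relationship, a VIF of 1.32 corresponds to a tolerance of about 0.76 and a VIF of 2.25 to a tolerance of about 0.44, which agrees with the reported ranges up to rounding.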

For the Grison text, a significant regression equation was found [F(4, 146) = 46.07, p < 0.01], with an R2 of 0.56. Participants’ predicted desire to use the sample text in class is equal to 0.05 + 0.14 (visual appeal) + 0.40 (writing is clear) + 0.30 (writing is engaging), as measured using the Likert items described above. Desire to use the Grison text (measured on a five point scale) increased 0.40 for each point increase on the clarity of writing measure, 0.30 for each point increase on the engagingness of writing measure, and 0.14 for each point on the visual appeal measure. “Uses good examples” was not a significant predictor of participants’ desire to use the Grison text.

For the King text, a significant regression equation was found [F(4, 144) = 69.70, p < 0.01], with an R2 of 0.66. Participants’ predicted desire to use the sample text in class is equal to −0.41 + 0.20 (visual appeal) + 0.29 (writing is clear) + 0.55 (writing is engaging), as measured using the Likert items described above. Desire to use the King text (measured on a five point scale) increased 0.29 for each point increase on the clarity of writing measure, 0.55 for each point increase on the engagingness of writing measure, and 0.20 for each point on the visual appeal measure. “Uses good examples” was not a significant predictor of participants’ desire to use the King text.

For the OpenStax text, a significant regression equation was found [F(4, 145) = 60.89, p < 0.01], with an R2 of 0.63. Participants’ predicted desire to use the sample text in class is equal to −0.493 + 0.61 (writing is engaging) + 0.22 (visual appeal) + 0.21 (writing is clear), as measured using the Likert items described above. Desire to use the OpenStax text (measured on a five point scale) increased 0.61 for each point increase on the engagingness of writing measure, 0.22 for each point on the visual appeal measure, and 0.21 for each point increase on the clarity of writing measure. “Uses good examples” was not a significant predictor of participants’ desire to use the OpenStax text.

For the BCcampus text, a significant regression equation was found [F(4, 142) = 60.73, p < 0.01], with an R2 of 0.63. Participants’ predicted desire to use the sample text in class is equal to −0.66 + 0.35 (writing is engaging) + 0.34 (writing is clear) + 0.20 (uses good examples) + 0.16 (visual appeal), as measured using the Likert items described above. Desire to use the BCcampus text (measured on a five point scale) increased 0.35 for each point increase on the engagingness of writing measure, 0.34 for each point increase on the clarity of writing measure, 0.20 for each point increase on the good examples measure, and 0.16 for each point on the visual appeal measure. The BCcampus text was the only one for which “uses good examples” was a significant predictor of participants’ desire to use the text.

Overall, this pattern of findings suggests that participants’ desire to use a text is consistently influenced by a text’s clarity, engaging writing, and visual appeal. This pattern was observed across all textbook samples used.
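
To make the four fitted equations easier to compare, the sketch below evaluates them for a given set of ratings. It is illustrative only: the intercepts and significant coefficients are taken from the text, but the non-significant “uses good examples” coefficients are not reported, so they are set to zero here as an assumption. The dictionary and function names are hypothetical.

```python
# Intercepts and significant coefficients as reported in the text.
# ASSUMPTION: unreported (non-significant) "examples" coefficients are set to 0.
MODELS = {
    "Grison":   {"intercept": 0.05,   "visual": 0.14, "clear": 0.40, "engaging": 0.30, "examples": 0.0},
    "King":     {"intercept": -0.41,  "visual": 0.20, "clear": 0.29, "engaging": 0.55, "examples": 0.0},
    "OpenStax": {"intercept": -0.493, "visual": 0.22, "clear": 0.21, "engaging": 0.61, "examples": 0.0},
    "BCcampus": {"intercept": -0.66,  "visual": 0.16, "clear": 0.34, "engaging": 0.35, "examples": 0.20},
}


def predicted_desire(text, visual, examples, engaging, clear):
    """Predicted 'desire to use' rating for one text, given 1-5 Likert ratings."""
    m = MODELS[text]
    return (
        m["intercept"]
        + m["visual"] * visual
        + m["examples"] * examples
        + m["engaging"] * engaging
        + m["clear"] * clear
    )


# Example: a hypothetical reader who rates every quality item 4 out of 5.
for name in MODELS:
    print(name, round(predicted_desire(name, 4, 4, 4, 4), 2))
```

Because these are linear models fitted to bounded Likert items, predictions for extreme inputs can fall slightly outside the 1 to 5 range. The sketch is best read as a way to compare the relative weight of each predictor across texts rather than as a forecasting tool.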

Discussion

The purpose of this study was to explore students’ perceptions of the quality of textbooks, including whether those preferences changed after learning the cost of course materials. Results indicate that participants initially preferred a specific commercial textbook (Grison), with no significant difference in preferences for the comparison commercial text (King) or two open textbooks used in this study (BCcampus and OpenStax). Participants’ ratings of quality, operationalized as understandable writing, visual appeal, engaging writing, and use of good examples, aligned with this top choice, as did the highest average fair price offered. There were no significant differences in quality ratings or proposed fair costs for the three other texts. These results provide idiosyncratic support for previous studies; on one hand, there was clear preference for a single commercial text, consistent with studies demonstrating a preference for commercial text offerings (e.g., Gurung, 2017; Lawrence and Lester, 2018). On the other hand, there was no clear difference in quality ratings or suggested fair cost between the other three texts, including another commercial sample, consistent with studies demonstrating a preference for OER offerings when students know the cost of course materials (e.g., Jhangiani et al., 2018; Clinton, 2019; Cuttler, 2019). Taken together, these results indicate that the question of what students prefer may not be as simple as asking whether students prefer open or commercial texts, especially if students are unaware of the cost of materials. That is, student preference may be influenced by any number of other factors, including readability, perception of layout (font, text size, and additional formatting features), figures and diagrams, and quality of examples (e.g., Gurung, 2017; Clinton, 2018; Jhangiani et al., 2018; Clinton et al., 2019).

Prior to knowing the cost of the textbooks sampled for this study, participants reported selecting their most preferred texts based on the ease/clarity of writing, the extent to which formatting facilitated their reading, and the quality of figures used in the texts. These results are consistent with previous studies that demonstrate students’ desire for a visually engaging and easy to read textbook (e.g., Gurung, 2017; Clinton, 2018). Along these dimensions, the commercial texts used in this study had an advantage with more figures/diagrams than the open textbook samples and a variety of additional formatting elements like colored text and keyword callouts. However, more visual elements may not necessarily yield more favorable ratings. At least one student in this study indicated that they preferred a textbook with fewer distracting visuals, consistent with previous research where a minority of students have commented on “busy” formatting being a distraction from understanding (e.g., Clinton et al., 2019). This may highlight an underexplored value of open textbooks: with greater copyright permissions, professors and/or students can potentially add or omit design elements to deliver content in a way that is most beneficial for the intended learner.

Ratings of the textbooks changed slightly but noticeably following the price reveal. Participants’ quality ratings for commercial texts decreased slightly and quality ratings for open texts increased slightly (see Table 1). Ratings for the fairness of cost also favored the open texts, with participants providing high agreement with the fairness of price for the open textbooks, slight disagreement with the fairness of price for the Grison text, and disagreement with the fairness of price for the King textbook. These ratings correspond with the texts’ cost relative to one another, with open textbooks being free, the Grison text being $80 or below, and the King text valued at up to $250.

Analyses to detect whether participants’ preferences shifted upon learning the cost of the textbooks yielded mixed results. There was a statistically significant change in participants’ top choice of text: 81.29% of participants selected a commercial text as their top choice prior to knowing the cost of materials, whereas 57.53% selected an open textbook following the cost reveal, demonstrating a shift in preference among those participants who selected a top choice text in both the pre-price reveal and post-price reveal conditions. Of these participants, 30 of 61 who previously selected a commercial textbook switched their preference to an open textbook, citing both cost and quality (i.e., value) as motivating factors. No students switched preference from an open textbook to a commercial textbook. These results support previous studies demonstrating student preference for OERs (Delimont et al., 2016; Jhangiani and Jhangiani, 2017; Ross et al., 2018).

Yet not all participants’ preferences shifted. In fact, 30 participants (41.10%) who selected the Grison textbook as their top choice in the initial selection retained that preference following the price reveal, citing the quality of course material as their primary motivation in selection. This persistence of choice may be consistent with previous studies demonstrating that participants maintain a preference for whatever textbook they have previously used (e.g., Jhangiani et al., 2018; Grissett and Huffman, 2019), or may be evidence of status quo bias (Samuelson and Zeckhauser, 1988).

To better understand factors predicting participant preference, regression analyses were conducted for each text to evaluate how well visual appeal, quality of examples, engaging writing, and clarity of writing predicted participants’ desire to use the sample text in class. Results indicate that visual appeal, engaging writing, and clarity of writing predicted participants’ desire to use the text in class across all four texts. The quality of examples was a predictive variable for desire to use only for the BCcampus text. These results partially support previous studies, which suggest that students are sensitive to the quality and clarity of writing, but contradict previous studies where the quality of examples was predictive of students’ perception of quality (e.g., Gurung and Martin, 2011; Gurung, 2017; Clinton et al., 2019). However, given that preference appears to be a complex multi-variate trait that may be influenced by a myriad of other factors (e.g., font, text size, layout, number of figures, etc.), future parametric and component analyses are warranted to help isolate how each of these variables contributes to students’ perception of quality.

One small yet interesting result is that participants offered a fair market price of between $41 and $58 for the samples used in this study. This price is consistent with previous studies demonstrating that students indicate that approximately $50 per text is a fair price (Chae and Delaney, 2018; Jhangiani et al., 2018). Interestingly, participants in this study did not seem to discriminate between open and commercial texts, citing an equivalent fair cost for three of four texts used in this study. These results may indicate that students are amenable to paying a small fee for OER textbooks, which could contribute to the sustainability of OER initiatives at the university level (Griffiths et al., 2017). However, future studies may wish to explore what costs students consider fair to pay for course material packs consisting primarily of OERs.

Limitations and Future Directions

While many studies demonstrate student preferences for OERs, few explore what impact knowledge of the cost has on student preference and none to date have specifically assessed what impact learning the cost of course materials has on students’ perception of the quality of textbook materials. This study is the first demonstration of a one-group pretest-posttest study designed to specifically evaluate students’ perception of textbook quality as a function of learning the cost of course materials. This study includes several practical and methodological strengths. This study was conducted with students as part of an in-class activity on psychological research methodology, demonstrating that it is possible to include experimentation and data collection as part of course delivery. This benefits both the researcher, in the form of potentially publishable data, and students, who receive a hands-on demonstration of experimental methodology that may model the ease of conducting research, a high impact teaching practice (Kuh, 2008) for undergraduate students. Methodologically, this study also boasts a robust data set of participant ratings using a perception measure with demonstrated validity (Gurung and Martin, 2011) from students representing a variety of majors at a large, open enrollment institution. Put another way, data were collected directly from the students that would stand to benefit most from OER adoption, increasing the external validity of these findings. The data collected also included quantitative and qualitative results, permitting the researchers to explore specific quality variables in greater detail using mixed methods analysis.

However, the study was not without limitations, most notably inconsistent completion of the qualitative/short-answer survey questions, specifically the question asking participants to select a most preferred text sample. While pre- vs post-price reveal preferred textbook choices showed that 30 participants’ preferences seem to have shifted from commercial to open texts, with 42 participants indicating a preference for one of the open textbooks (57.53%) and 31 participants (42.46%) maintaining a preference for a commercial textbook, these results should be interpreted with caution due to the large number of participants who failed to select their most preferred textbook on one or both of the surveys. Of 151 participants in the study, only 139 participants (92.05%) selected a preferred text on the pre-price reveal survey, and only 73 participants (48.34%) selected a most preferred text on both the pre- and post-price reveal surveys to permit a direct evaluation of preference shift. Of the 78 respondents not included in pre- vs post-cost reveal preference analysis, 66 participants selected a preferred text on only the first survey, eight participants selected a preferred text on only the second survey, and four participants never indicated a preferred text.

It is unclear why so many participants failed to select a most preferred text sample on these questions, especially since all of these participants provided consistent ratings of the quality of the samples using the Likert-type scales provided. Participants may have overlooked or misunderstood the question (believing they needed to indicate a top choice on the post-price reveal survey only if their preference had changed), or simply chosen not to answer due to assessment fatigue or lack of time. There appears to be support for the idea of students overlooking or misunderstanding the question, as most participants who failed to indicate a most preferred text answered a subsequent short-answer question about whether learning the cost of the textbook changed their opinion of the material on the post-cost reveal survey. Future studies may address this limitation by providing fewer sample texts or conducting the study over a longer session to permit participants additional time to complete all questions, reorganizing the survey to make the re-selection question more salient, or delivering the survey electronically and requiring participants to select a preferred text and provide a rationale before moving forward.

There may also have been a number of sources of bias in the study. One textbook sample included in this study came from the OpenStax textbook assigned in the course from which participants in this study were recruited. As discussed above, past research suggests that students demonstrate a preference for the textbook they’re already using. One participant indicated that recognizing the assigned text affected their preference for this book in a qualitative comment on the pre-price reveal survey, and two others indicated knowing it was their current assigned text affected their preference for the OpenStax text following the price reveal.

In addition, although the researcher reserved discussion of the merits of OER until the post-study debrief, knowing that one sample came from the textbook selected by the instructor/first author may have introduced an element of social desirability bias. Of those participants whose preference shifted from a commercial textbook to an open textbook, all preferences shifted specifically to OpenStax (n = 25) or generally to an unspecified open textbook (n = 5). While cost, familiarity, or quality may have been the primary driving factor for this shift, it is possible that participants selected the text they believed their professor preferred as evidenced by its selection for the course.

Further, in the interest of providing a wide range of samples, participants in the current study were given only two pages of each textbook under study, and the pages were chosen specifically to align with the content of the in-class activity for that day (independent and dependent variables). This decision was made strategically to control for textbook factors such as size/length, paper quality, and binding (hard vs. soft cover). However, no attempts were made to control for factors such as formatting, number of images or figures provided, word count, or other features, and each of these variables may have subtly influenced participants’ preference for each text (e.g., Clinton et al., 2019). Participants also may not have been given a sufficient sample of the text to accurately assess preference. That said, previous studies have evaluated student preference using sample stimuli of a variety of lengths, ranging from approximately 2,000 words (Clinton et al., 2019) to passages (Gurung and Landrum, 2012) to whole chapters (Sheu and Grissett, 2020). The authors were unable to locate any studies that asked participants to directly compare full-length commercial and open texts to one another in an experimental or quasi-experimental analysis of quality. Future studies may wish to specifically explore whether longer samples permit a more robust evaluation of quality factors (e.g., writing quality, quality of examples, desire to use the text).

Another limitation of the current study is a lack of specific demographic data on participants. These questions were omitted primarily to minimize the length of the in-class activity. However, without demographic information, it is not possible to understand whether there were relationships between variables like age, gender, major, class standing, or financial need and student perceptions/preferences. Financial need may be particularly worth exploring, as students with financial need may be more likely to shift preferences upon learning the price of course materials. In the words of one participant: “I have to consider… monetary issues. If [a commercial textbook] was required, I would struggle to be able to afford it.” Future studies may wish to account for student financial need when evaluating textbook preferences, especially if replicating an experimental study evaluating preference change as a function of learning the cost of course materials.

Conclusion

The results of this study suggest that students are sensitive to differences in quality features between textbooks. Although participants’ quality ratings initially favored a specific commercial textbook, learning the cost of the course materials produced a statistically significant shift in preference toward open textbooks (notably the OpenStax book used in the course from which participants were recruited). Of the participants who selected a most preferred textbook on both pre- and post-cost reveal surveys, nearly half of those who had initially selected a commercial textbook indicated a shift in preference toward an open textbook. No participants’ preferences shifted from an open textbook to a commercial textbook following the cost reveal. Participants who indicated a preference for an open textbook following the cost reveal indicated that quality and cost were factors in their decision. Participants who indicated a preference for a commercial textbook shared in their qualitative comments that quality was their primary motivator in selecting a text. Finally, the quality/clarity of writing and elements of visual appeal were associated with participants’ desire to use the textbook, while the quality of examples was largely unrelated to participants’ desire to use the materials.
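As an illustration of the test behind the preference shift, the following minimal Python sketch computes a two-sided exact McNemar p-value from discordant pair counts. It is not the authors' analysis script. The function name `exact_mcnemar_p` is hypothetical, and the counts are an assumption: they take the 30 commercial-to-open shifts reported in the limitations (n = 25 and n = 5) and the zero open-to-commercial shifts as the two discordant cells, which presumes that all of those participants answered the preference question on both surveys.

```python
from math import comb


def exact_mcnemar_p(b: int, c: int) -> float:
    """Two-sided exact McNemar p-value from the two discordant pair counts."""
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs, so no evidence of a shift
    k = min(b, c)
    # Under the null hypothesis, each discordant pair is equally likely to
    # fall in either cell, so the smaller count follows Binomial(n, 0.5).
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Assumed discordant counts taken from the text (not from the raw data).
commercial_to_open = 25 + 5
open_to_commercial = 0

p_value = exact_mcnemar_p(commercial_to_open, open_to_commercial)
print(f"exact McNemar p = {p_value:.2e}")
```

Under these assumed counts the p-value falls far below 0.01, in line with the reported result. Only the discordant pairs enter the calculation, so participants whose preference did not change have no effect on the test.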

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the University of Alaska Anchorage Institutional Review Board. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

VH designed the project, created materials, collected data, analyzed the qualitative data, and contributed to writing the final manuscript. CW analyzed the quantitative data and contributed to writing the final manuscript. All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We wish to thank the William and Flora Hewlett Foundation and the Open Ed Group for sponsorship of article processing fees.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2020.00139/full#supplementary-material

References

Abramovich, S., and McBride, M. (2018). Open education resources and perceptions of financial value. Intern. High. Educ. 39, 33–38. doi: 10.1016/j.iheduc.2018.06.002

Adedokun, O., and Burgess, W. (2012). Analysis of paired dichotomous data: a gentle introduction to the McNemar test in SPSS. J. MultiDiscipl. Eval. 8, 125–131.

Azzam, A., Bresler, D., Leon, A., Maggio, L., Whitaker, E., Heilman, J., et al. (2017). Why medical schools should embrace Wikipedia: final-year medical student contributions to Wikipedia articles for academic credit at one school. Acad. Med. 92, 194–200. doi: 10.1097/ACM.0000000000001381

Blanchard, K. D. (2009). Accepting our limitations: the textbook as crutch and compromise. Teach. Theol. Relig. 12, 252–253. doi: 10.1111/j.1467-9647.2009.00530.x

Bliss, T. J., Hilton, J. III, Robinson, J. T., and Wiley, D. (2013). An OER COUP: college teacher and student perceptions of open educational resources. J. Interact. Media Educ. 1:4. doi: 10.5334/2013-04

Broton, K. M., and Goldrick-Rab, S. (2018). Going without: an exploration of food and housing insecurity among undergraduates. Educ. Res. 47, 121–133. doi: 10.3102/0013189X17741303

California Open Educational Resources (2016). OER Adoption Study: Using Open Educational Resources in the College Classroom. California OER Council. Available online at: https://docs.google.com/document/d/1sHrLOWEiRs-fgzN1TZUlmjF36BLGnICNMbTZIP69WTA/edit (accessed April 1, 2016).

California Student Aid Commission (2019). 2018-2019 Student Expenses and Resources Survey: Initial Insights. Rancho Cordova, CA: California Student Aid Commission.

Campbell, D. T., and Stanley, J. C. (1959). Experimental and Quasi-Experimental Designs for Research. New York: Houghton Mifflin.

Chae, B., and Delaney, S. (2018). Washington State Student Survey. Available online at: https://www.slideshare.net/UnaDaly/college-textbook-affordability-student-survey-findings

Clinton, V. (2018). Savings without sacrifice: a case report on open-source textbook adoption. Open Learn. 33, 177–189. doi: 10.1080/02680513.2018.1486184

Clinton, V. (2019). Cost, outcomes, use, and perceptions of open educational resources in psychology: a narrative review of the literature. Psychol. Learn. Teach. 18, 4–20. doi: 10.1177/1475725718799511

Clinton, V., and Khan, S. (2019). Efficacy of open textbook adoption on learning performance and course withdrawal rates: a meta-analysis. AERA Open 5, 1–20. doi: 10.1177/2332858419872212

Clinton, V., Legerski, E., and Rhodes, B. (2019). Comparing student learning from and perceptions of open and commercial textbook excerpts: a randomized experiment. Front. Educ. 15:110. doi: 10.3389/feduc.2019.00110

Colvard, N. B., Watson, C. E., and Park, H. (2018). The impact of open educational resources on various student success metrics. Int. J. Teach. Learn. High. Educ. 30, 262–276.

Cooney, C. (2017). What impacts do OER have on students? Students share their experiences with a health psychology OER at New York city college of technology. Int. Rev. Res. Open Distribut. Learn. 18:3111.

Cronin, C. (2017). Openness and praxis: exploring the use of open educational practices in higher education. Int. Rev. Res. Open Distribut. Learn. 18:3096. doi: 10.19173/irrodl.v18i5.3096

Cuttler, C. (2019). Students’ use and perceptions of the relevance and quality of open textbooks compared to traditional textbooks in online and traditional classroom environments. Psychol. Learn. Teach. 18, 65–83. doi: 10.1177/1475725718811300

Delimont, N., Turtle, E. C., Bennett, A., Adhikari, K., and Lindshield, B. L. (2016). University students and faculty have positive perceptions of open/alternative resources and their utilization in a textbook replacement initiative. Res. Learn. Technol. 24. doi: 10.3402/rlt.v24.29920

Durwin, C. C., and Sherman, W. M. (2008). Does choice of college textbooks make a difference in students’ comprehension? College Teach. 56, 28–34. doi: 10.3200/CTCH.56.1.28-34

Fischer, L., Hilton, J., Robinson, T. J., and Wiley, D. A. (2015). A multi-institutional study of the impact of open textbook adoption on the learning outcomes of post-secondary students. J. Comput. High. Educ. 27, 159–172. doi: 10.1007/s12528-015-9105-6

Florida Virtual Campus (2019). 2019 Florida Student Textbook & Course Materials Survey. Tallahassee, FL: Florida Virtual Campus.

Griffiths, R., Gardner, S., Lundh, P., Shear, L., Ball, A., Mislevy, J., et al. (2018). Participant Experiences and Financial Impacts: Findings from Year 2 of Achieving the Dream’s OER Degree Initiative. Menlo Park, CA: SRI International.

Griffiths, R., Mislevy, J., Wang, S., Shear, L., Mitchell, N., Bloom, M., et al. (2017). Launching OER Degree Pathways: An Early Snapshot of Achieving the Dream’s OER Degree Initiative and Emerging Lessons. Menlo Park, CA: SRI International.

Griggs, R. A., and Jackson, S. L. (2017). Studying open versus traditional textbook effects on students’ course performance: confounds abound. Teach. Psychol. 44, 306–312. doi: 10.1177/0098628317727641

Grison, S., Heatherton, T. F., and Gazzaniga, M. S. (2015). Psychology in Your Life, 2nd Edn. New York, NY: W. W. Norton & Company.

Grissett, J. O., and Huffman, C. (2019). An open versus traditional psychology textbook: student performance, perceptions, and use. Psychol. Learn. Teach. 18, 21–35. doi: 10.1177/1475725718810181

Gurung, R. A. (2017). Predicting learning: comparing an open educational resource and standard textbooks. Schol. Teach. Learn. Psychol. 3, 233–248. doi: 10.1037/stl0000092

Gurung, R. A., and Martin, R. C. (2011). Predicting textbook reading: the textbook assessment and usage scale. Teach. Psychol. 38, 22–28. doi: 10.1177/0098628310390913

Gurung, R. A. R., and Landrum, R. E. (2012). Comparing student perceptions of textbooks: does liking influence learning? Int. J. Teach. Learn. High. Educ. 24, 144–150.

Hendricks, C., Reinsberg, S. A., and Rieger, G. W. (2017). The adoption of an open textbook in a large physics course: an analysis of cost, outcomes, use, and perceptions. Int. Rev. Res. Open Distribut. Learn. 18:3006.

Hilton, J. (2016). Open educational resources and college textbook choices: a review of research on efficacy and perceptions. Educ. Technol. Res. Dev. 64, 573–590. doi: 10.1007/s11423-016-9434-9

Hilton, J. (2019). Open educational resources, student efficacy, and user perceptions: a synthesis of research published between 2015 and 2018. Educ. Technol. Res. Dev. 68, 853–876. doi: 10.1007/s11423-019-09700-4

Hilton, J. L. III, Gaudet, D., Clark, P., Robinson, J., and Wiley, D. (2013). The adoption of open educational resources by one community college math department. Int. Rev. Res. Open Distribut. Learn. 14. doi: 10.19173/irrodl.v14i4.1523

Hilton, J. L. III, Robinson, T. J., Wiley, D., and Ackerman, J. D. (2014). Cost-savings achieved in two semesters through the adoption of open educational resources. Int. Rev. Res. Open Distribut. Learn. 15, 67–84.

Humphreys, D. (2012). What’s wrong with the completion agenda: and what we can do about it. Liberal Educ. 98, 8–17.

Ikahihifo, T. K., Spring, K. J., Rosecrans, J., and Watson, J. (2017). Assessing the savings from open educational resources on student academic goals. Int. Rev. Res. Open Distribut. Learn. 18, 126–140.

Jhangiani, R. S. (2017). Why Have Students Answer Questions When They Can Write Them? Available online at: http://thatpsychprof.com/why-have-students-answer-questions-when-they-can-write-them/ (accessed January 12, 2017).

Jhangiani, R. S., Dastur, F. N., Le Grand, R., and Penner, K. (2018). As good or better than commercial textbooks: students’ perceptions and outcomes from using open digital and open print textbooks. Can. J. Schol. Teach. Learn. 9, 1–20. doi: 10.5206/cjsotl-rcacea.2018.1.5

Jhangiani, R. S., and Jhangiani, S. (2017). Investigating the perceptions, use, and impact of open textbooks: a survey of post-secondary students in British Columbia. Int. Rev. Res. Open Distribut. Learn. 18:3012. doi: 10.19173/irrodl.v18i4.3012

Jung, E., Bauer, C., and Heaps, A. (2017). Higher education faculty perceptions of open textbook adoption. Int. Rev. Res. Open Distribut. Learn. 18, 124–141. doi: 10.19173/irrodl.v18i4.3120

King, L. A. (2013). The Science of Psychology: An Appreciative View, 3rd Edn. New York, NY: McGraw Hill.

Kuh, G. D. (2008). High-Impact Educational Practices: What They are, Who has Access to Them, and Why They Matter. Washington, DC: Association of American Colleges and Universities.

Lawrence, C., and Lester, J. (2018). Evaluating the effectiveness of adopting open educational resources in an introductory American Government course. J. Polit. Sci. Educ. 14, 555–566. doi: 10.1080/15512169.2017.1422739

Lindshield, B., and Adhikari, K. (2013). Online and campus college students like using an open educational resource instead of a traditional textbook. J. Online Learn. Teach. 9, 1–7.

McGowan, M. K., Stephens, P. R., and West, C. (2009). Student perceptions of electronic textbooks. Issues Inform. Syst. 10, 459–465.