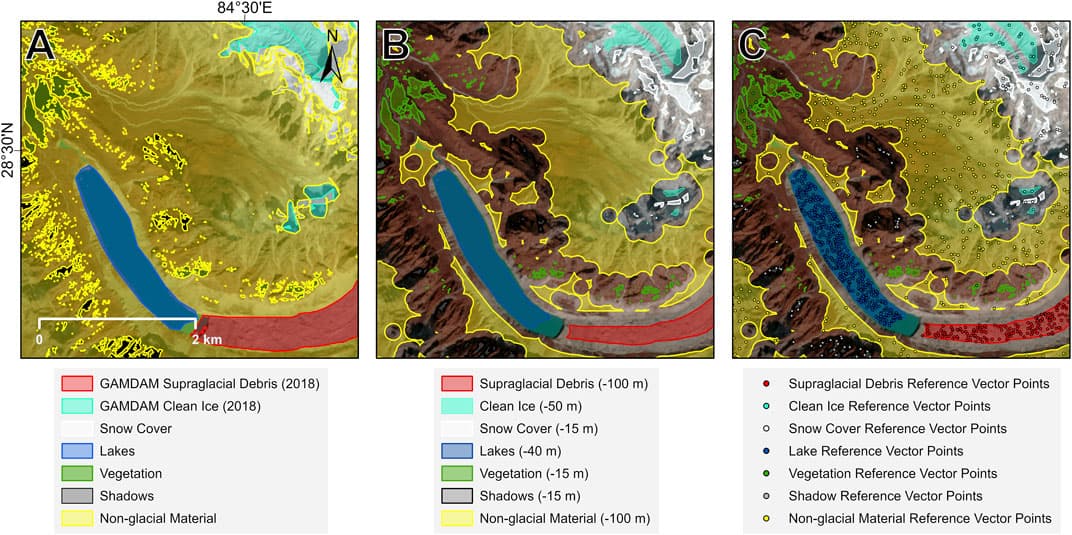

Evaluating glacier change and the associated changes in water storage in high mountains is becoming increasingly necessary, and to do this, models need reliable and consistent glacier data. These data often come from global inventories, usually constructed from multi-temporal satellite imagery. However, these datasets have limitations. While clean ice can be mapped relatively easily using spectral band ratios, mapping debris-covered ice is more difficult because supraglacial debris is spectrally similar to the surrounding terrain. Analysts therefore often resort to manual delineation, a time-consuming and subjective approach, to map debris-covered ice extents. Given the increasing prevalence of supraglacial debris in high mountain regions such as High Mountain Asia, a systematic, objective approach is needed. This study presents an approach for mapping debris-covered glaciers that integrates a convolutional neural network and object-based image analysis into one seamless classification workflow. The approach is applied to freely available and globally applicable Sentinel-2 multispectral, Landsat-8 thermal, Sentinel-1 interferometric coherence, and geomorphometric datasets. It is tested in three domains in the Central Himalaya and the Karakoram ranges of High Mountain Asia that exhibit varying climatic regimes, topographies, and debris-covered glacier characteristics. We evaluate its performance by comparison with a manually delineated glacier inventory, achieving F-score classification accuracies of 89.2%–93.7%. We also tested the approach on declassified panchromatic 1970 Corona KH-4B satellite imagery in the Manaslu region of Nepal, yielding accuracies of up to 88.4%. We find the approach to be robust, transferable to other regions, and accurate over regional (>4,000 km²) scales.
Integrating object-based image analysis with deep learning within a single workflow overcomes shortcomings associated with convolutional neural network classifications and permits a more flexible and robust approach to mapping debris-covered glaciers. The novel automated processing of panchromatic historical imagery, such as Corona KH-4B, opens the possibility of exploiting a wealth of multi-temporal data to understand past glacier changes.
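The abstract contrasts clean-ice mapping with spectral band ratios against the harder debris-covered case, and it reports accuracy as an F-score. A minimal pure-Python sketch of both ideas follows. It is not the CNN/OBIA workflow of the study. It assumes a red/SWIR ratio (one common band-ratio choice) with an illustrative threshold of 2.0, made-up reflectance values, and masks stored as nested lists of booleans.

```python
def band_ratio_mask(red, swir, threshold=2.0):
    """Flag pixels as clean ice where red / SWIR reflectance exceeds a threshold.

    Clean ice is bright in the red band but absorbs strongly in the shortwave
    infrared, so the ratio is high over ice and low over rock and debris.
    The threshold of 2.0 is an illustrative assumption, not a tuned value.
    """
    return [
        [s > 0 and r / s > threshold for r, s in zip(r_row, s_row)]
        for r_row, s_row in zip(red, swir)
    ]


def f_score(predicted, reference):
    """F-score (harmonic mean of precision and recall) for binary masks."""
    tp = fp = fn = 0
    for p_row, r_row in zip(predicted, reference):
        for p, r in zip(p_row, r_row):
            if p and r:
                tp += 1
            elif p:
                fp += 1
            elif r:
                fn += 1
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Toy 2x2 scene with made-up reflectances.
red = [[0.6, 0.5], [0.2, 0.15]]
swir = [[0.1, 0.2], [0.15, 0.2]]
reference = [[True, True], [True, False]]  # lower-left pixel: debris-covered ice

predicted = band_ratio_mask(red, swir)
print(predicted)                                 # [[True, True], [False, False]]
print(round(f_score(predicted, reference), 3))   # 0.8
```

The debris-covered pixel has a ratio close to that of the surrounding terrain, so the ratio test misses it and recall drops to 2/3. This is the failure mode that motivates the study's combined CNN and object-based approach.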

For aerosol, cloud, land, and ocean remote sensing, the development of accurate cloud detection methods, or cloud masks, is extremely important. For airborne passive remote sensing, it is also important to identify when clouds are above the aircraft, since their presence contaminates the measurements of nadir-viewing passive sensors. We describe the development of a camera-based approach to detecting clouds above the aircraft via a convolutional neural network called the cloud detection neural network (CDNN). We quantify the performance of the CDNN using human-labeled validation data, reporting 96% accuracy in detecting clouds in the testing datasets for both the zenith-viewing and forward-viewing models. We present results from the CDNN based on airborne imagery from the NASA Aerosol Cloud meteorology Interactions oVer the western Atlantic Experiment (ACTIVATE) and the Clouds, Aerosol, and Monsoon Processes Philippines Experiment (CAMP2Ex). We quantify the ability of the CDNN to identify the presence of clouds above the aircraft using a forward-looking camera mounted inside the aircraft cockpit, compared with an all-sky upward-looking camera mounted outside the fuselage on top of the aircraft. We assess performance by comparing the flight-averaged cloud fraction of the zenith and forward CDNN retrievals with that derived from the cloud optical depth data of the prototype hyperspectral total-diffuse Sunshine Pyranometer (SPN-S) instrument. A comparison of the CDNN with the SPN-S over time-specific intervals resulted in 93% accuracy for the zenith-viewing CDNN and 84% for the forward-viewing CDNN. On flight-averaged cloud fraction, the forward CDNN agreed with the SPN-S to within 0.15 and the zenith CDNN to within 0.07. For CAMP2Ex, 53% of flight dates had an above-aircraft cloud fraction greater than 50%, while for ACTIVATE, 52% and 54% of flight dates did so in 2020 and 2021, respectively.
The CDNN enables cost-effective detection of clouds above the aircraft using an inexpensive camera installed in the cockpit. This benefits airborne science research flights that lack dedicated upward-looking instruments for cloud detection, whose installation requires time-consuming and expensive aircraft modifications and adds mission cost and the complexity of operating additional instruments.
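The two validation comparisons in the CDNN abstract (time-matched accuracy and flight-averaged cloud fraction) can be sketched as follows. This is an illustration, not the authors' code. It assumes that CDNN output has already been reduced to one binary flag per time step (1 = cloud above the aircraft). It also assumes that reference flags come from SPN-S cloud optical depth via a hypothetical threshold `cod_threshold`, because the abstract does not say how SPN-S data were converted to cloud/no-cloud labels.

```python
def reference_flags(cloud_optical_depth, cod_threshold=1.0):
    """Convert SPN-S cloud optical depth samples to binary cloud flags.

    cod_threshold = 1.0 is a hypothetical value chosen for illustration.
    """
    return [1 if cod > cod_threshold else 0 for cod in cloud_optical_depth]


def matched_accuracy(predicted, reference):
    """Fraction of time-matched samples where the CDNN flag equals the reference flag."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must be time-matched")
    agree = sum(p == r for p, r in zip(predicted, reference))
    return agree / len(reference)


def cloud_fraction(flags):
    """Flight-averaged above-aircraft cloud fraction: share of samples flagged cloudy."""
    return sum(flags) / len(flags)


# Toy flight with ten time-matched samples (made-up numbers).
cdnn = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]
cod = [5.0, 3.2, 0.1, 0.0, 0.4, 0.2, 2.5, 7.1, 0.3, 1.5]
ref = reference_flags(cod)

print(matched_accuracy(cdnn, ref))                       # 0.8
print(abs(cloud_fraction(cdnn) - cloud_fraction(ref)))   # 0.0
```

In this toy flight, the CDNN makes two errors of opposite sign. Time-matched accuracy is therefore 80%, while the flight-averaged cloud fractions agree exactly. This is one reason the two metrics are worth reporting separately.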

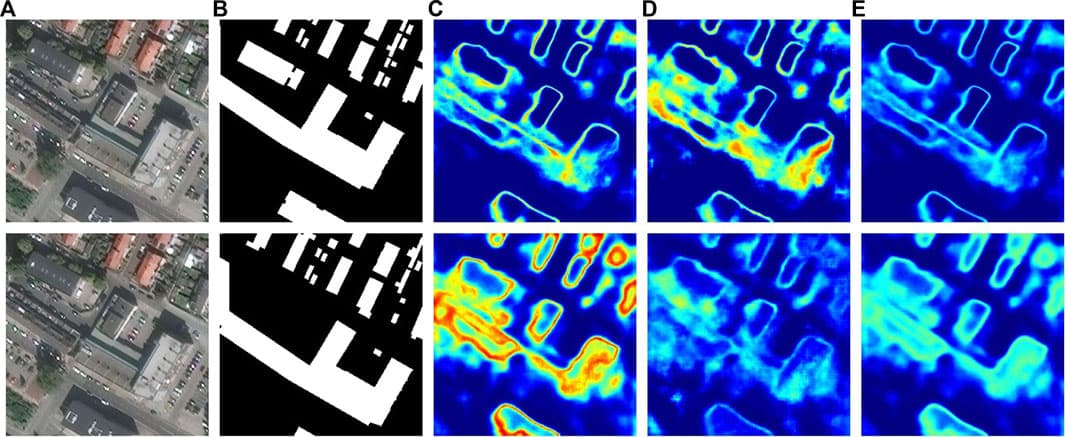

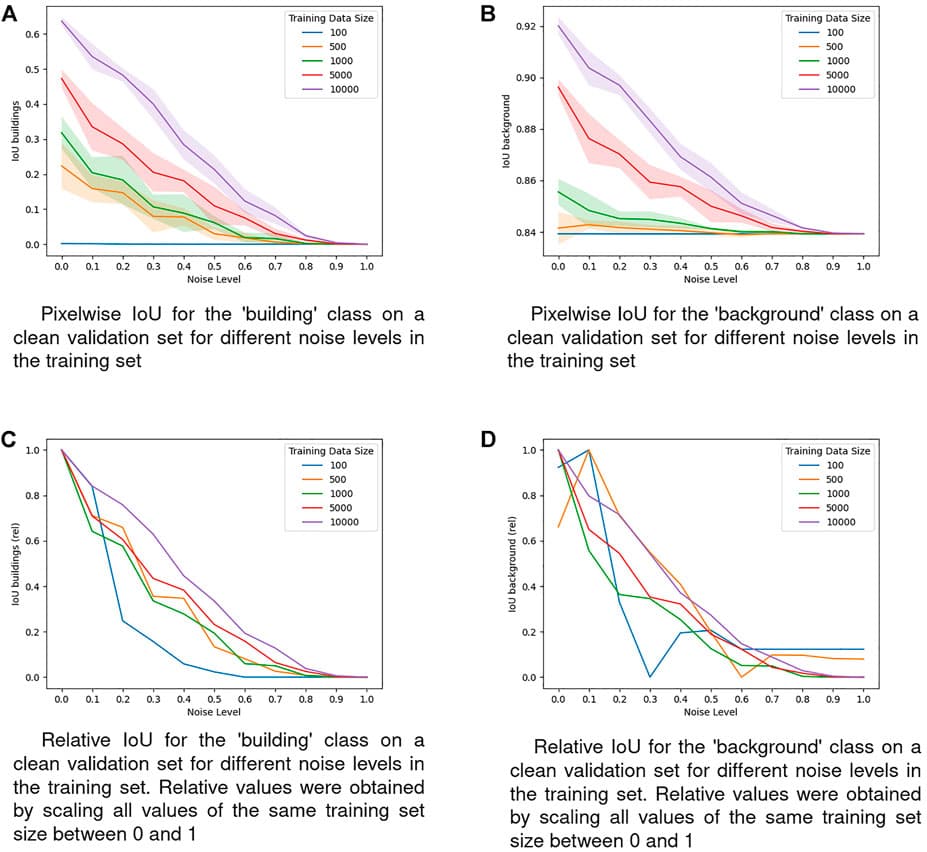

Deep learning usually requires large amounts of labeled training data. In remote sensing, deep learning is often applied to land cover and land use classification, as well as to street network and building segmentation. In the case of the latter, a common way of obtaining training labels is to leverage crowdsourced datasets, which can provide numerous types of spatial information on a global scale. However, labels from crowdsourced datasets are limited in that they can contain high levels of noise. Understanding how such noisy labels impede the predictive performance of Deep Neural Networks (DNNs) is crucial for evaluating whether crowdsourced data can meet the need of DNNs for large training sets. One step towards this understanding is to identify the factors that affect the relationship between label noise and the predictive performance of a model. The size of the training set could be one of these factors, since it is well known to strongly influence a model's predictive performance. In this work, we focus on training set size and study its influence on the robustness of a model against a common type of label noise known as omission noise. To this end, we use a dataset of aerial images for building segmentation and create several versions of the training labels by introducing different amounts of omission noise. We then train a state-of-the-art model on subsets of varying sizes drawn from those versions. Our results show that training set size does affect the robustness of our model against label noise: a larger training set improves the robustness of our model against omission noise.
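The experimental design in the label-noise abstract can be sketched as below: inject omission noise into building labels, then train on subsets of different sizes. This is a hypothetical illustration, not the study's code. It assumes labels are available as instance masks (0 = background, one positive integer per building). It models omission noise as deleting whole buildings chosen uniformly at random. It draws nested subsets so that each smaller training set is contained in the larger ones. The function names `add_omission_noise` and `nested_subsets` are invented for this example.

```python
import random


def add_omission_noise(instance_mask, omission_rate, seed=0):
    """Erase a random fraction of building instances from a label mask.

    instance_mask: 2-D list where 0 is background and each positive integer
    identifies one building. Whole buildings are removed, mimicking buildings
    missing from a crowdsourced map; no new building pixels are ever added.
    """
    if not 0.0 <= omission_rate <= 1.0:
        raise ValueError("omission_rate must be in [0, 1]")
    ids = sorted({v for row in instance_mask for v in row if v > 0})
    rng = random.Random(seed)
    dropped = set(rng.sample(ids, round(omission_rate * len(ids))))
    return [[0 if v in dropped else v for v in row] for row in instance_mask]


def nested_subsets(samples, sizes, seed=0):
    """Return training subsets of the requested sizes, each containing the smaller ones."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    return {n: shuffled[:n] for n in sorted(sizes)}


mask = [
    [1, 1, 0, 2],
    [0, 0, 0, 2],
    [3, 0, 4, 4],
]
noisy = add_omission_noise(mask, omission_rate=0.5, seed=42)
remaining = {v for row in noisy for v in row if v > 0}
print(len(remaining))  # 2 of the 4 buildings survive

subsets = nested_subsets(range(1000), sizes=[100, 500, 1000])
print({n: len(s) for n, s in subsets.items()})  # {100: 100, 500: 500, 1000: 1000}
```

The subsets are nested so that differences between training set sizes are not confounded with which images happened to be drawn. The abstract does not say how the authors sampled their subsets.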

The purpose of this study was to construct artificial intelligence (AI) training datasets based on multi-resolution remote sensing and to analyze them with learning algorithms, in an attempt to apply machine learning efficiently to land cover data that change in (quasi) real time. Multi-resolution land cover datasets at 0.51-m and 10-m resolution were constructed from aerial images and from Sentinel-2 satellite images, respectively. Aerial image data (49,700 datasets) and satellite image data (300 datasets) were combined to yield 50,000 multi-resolution datasets in total. In addition, the raw data were compiled as metadata in JavaScript Object Notation (JSON) format for use as reference material. To minimize data errors and improve the quality of the machine learning datasets, a two-step verification process was performed, consisting of data refinement and data annotation. SegNet, U-Net, and DeepLabV3+ algorithms were applied to the datasets. These achieved accuracies of 71.5%, 77.8%, and 76.3%, respectively, on the aerial image datasets and 88.4%, 91.4%, and 85.8%, respectively, on the satellite image datasets. Of the land cover categories, forest had the highest accuracy. The land cover datasets for AI training constructed in this study provide a helpful reference for land cover classification and change detection using AI. In particular, the datasets are applicable to large-scale land cover studies, including those covering the entirety of Korea.
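The land cover abstract reports one accuracy figure per model and notes that forest was the most accurately classified category, but it does not define the metric. The minimal sketch below assumes overall pixel accuracy and per-class producer's accuracy computed on flattened label maps. The class names and values are made up for illustration.

```python
from collections import Counter


def overall_accuracy(predicted, reference):
    """Share of pixels whose predicted class matches the reference class."""
    correct = sum(p == r for p, r in zip(predicted, reference))
    return correct / len(reference)


def per_class_accuracy(predicted, reference):
    """Producer's accuracy per class: correctly labeled pixels / reference pixels of that class."""
    correct = Counter(r for p, r in zip(predicted, reference) if p == r)
    totals = Counter(reference)
    return {cls: correct[cls] / n for cls, n in totals.items()}


# Toy flattened label maps (made-up classes and values).
reference = ["forest"] * 4 + ["water"] * 2 + ["urban"] * 2
predicted = ["forest"] * 4 + ["water", "urban", "urban", "forest"]

print(overall_accuracy(predicted, reference))  # 0.75
acc = per_class_accuracy(predicted, reference)
print(acc)                    # {'forest': 1.0, 'water': 0.5, 'urban': 0.5}
print(max(acc, key=acc.get))  # forest
```

Reporting per-class values alongside the overall figure shows which categories drive the result. For example, a class that dominates the scene can raise overall accuracy even when rarer classes are poorly mapped.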