Human–computer integration is an emerging area in which the boundary between humans and technology is blurred as users and computers work collaboratively and share agency to execute tasks. The sense of agency (SoA) is an experience that arises from the combination of a voluntary motor action and sensory evidence of whether the corresponding body movements have influenced the course of external events. The SoA is a key part not only of our experiences in daily life but also of our interaction with technology, as it gives us the feeling of “I did that” as opposed to “the system did that,” thus supporting a feeling of being in control. This feeling becomes critical with human–computer integration, wherein emerging technology directly influences people’s bodies, their actions, and the resulting outcomes. In this review, we analyse and classify current integration technologies based on what is currently known about agency in the literature, and propose a distinction between body augmentation, action augmentation, and outcome augmentation. For each category, we describe agency considerations and markers of differentiation that illustrate a relationship between assistance level (low, high), agency delegation (human, technology), and integration type (fusion, symbiosis). We conclude with a reflection on the opportunities and challenges of integrating humans with computers, and close with an expanded definition of human–computer integration that includes the agency aspects we consider particularly relevant. The aim of this review is to provide researchers and practitioners with guidelines to situate their work within the integration research agenda and to consider the implications of their technologies for SoA, and thus for overall user experience, when designing future technology.

Objective: Despite numerous recent advances in the field of rehabilitation robotics, simultaneous and proportional control of hand and/or wrist prostheses is still unsolved. In this work, we concentrate on myocontrol of combined actions, for instance power grasping while rotating the wrist, using only training data gathered from single actions. This is highly desirable, since gathering data for all possible combined actions would be infeasibly long and demanding for the amputee.
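To make the scale argument concrete, the short Python sketch below counts single versus combined actions. It assumes, hypothetically, that each degree of freedom (DoF) is either at rest or driven in one of two directions (e.g. pronation or supination) and that any subset of DoFs can be active at once; the function name `count_actions` is illustrative only. Under these assumptions, d DoFs give 2d single actions and 3^d - 1 - 2d combined ones.

```python
from itertools import product


def count_actions(n_dofs: int) -> tuple[int, int]:
    """Return (single, combined) action counts for n_dofs DoFs.

    Assumption (illustrative only): each DoF is either at rest (0) or
    driven in one of two directions (+1 / -1).
    """
    single = 0
    combined = 0
    for state in product((0, 1, -1), repeat=n_dofs):
        active = sum(1 for s in state if s != 0)
        if active == 1:
            single += 1      # exactly one DoF moving
        elif active >= 2:
            combined += 1    # two or more DoFs moving simultaneously
    return single, combined


for d in (2, 3, 4):
    print(d, count_actions(d))
```

With three DoFs this yields 6 single actions but 20 combined ones, so the recording burden for combinations grows much faster than for single actions.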

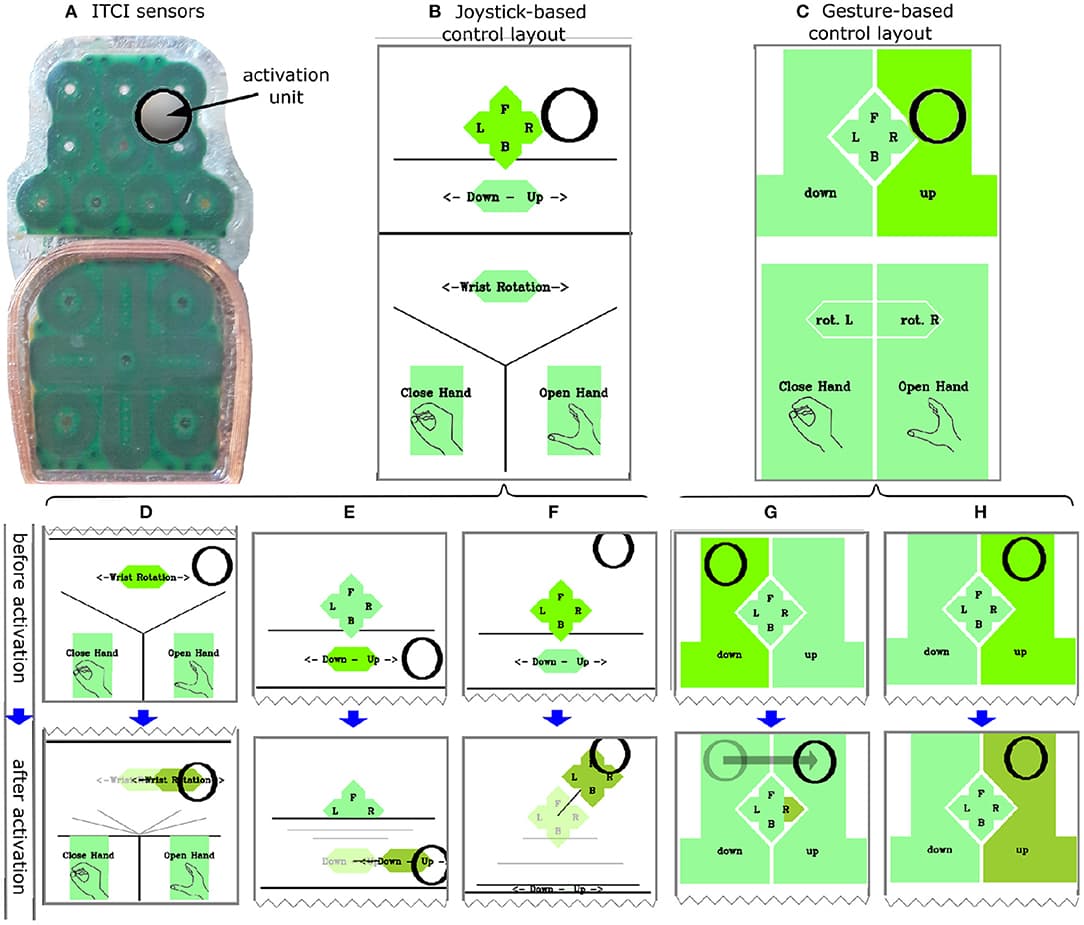

Approach: We first investigated physiologically feasible limits for muscle activation during combined actions. Using these limits, we ran a Target Achievement Control test with 12 intact participants and one amputee, showing that tactile myography, i.e., high-density force myography, solves the problem of combined actions to a remarkable extent using simple linear regression. Since real-time processing of many sensors can be computationally demanding, we compared this approach with one using a reduced feature set, obtained from a fast, spatial first-order approximation of the sensor values.
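The abstract does not spell out the first-order approximation. One plausible reading, sketched below in Python as an assumption rather than the authors' method, is a least-squares plane fit v ≈ a + b·x + c·y over a rectangular patch of sensors, keeping only the three coefficients (mean level and the two spatial gradients) per patch. The grid layout, the patch size, and the names `fit_plane` and `reduced_features` are hypothetical.

```python
def fit_plane(grid):
    """Least-squares fit of v(i, j) ~ a + b*x_j + c*y_i over a rectangular grid.

    x_j and y_i are column/row indices centred on the grid, so the constant,
    x and y regressors are mutually orthogonal and each coefficient has a
    closed form (no matrix inversion needed).
    Returns (a, b, c): mean level, gradient along columns, gradient along rows.
    """
    rows, cols = len(grid), len(grid[0])
    xc = [j - (cols - 1) / 2 for j in range(cols)]
    yc = [i - (rows - 1) / 2 for i in range(rows)]
    a = sum(sum(row) for row in grid) / (rows * cols)
    sxx = rows * sum(x * x for x in xc)
    syy = cols * sum(y * y for y in yc)
    sxv = sum(xc[j] * grid[i][j] for i in range(rows) for j in range(cols))
    syv = sum(yc[i] * grid[i][j] for i in range(rows) for j in range(cols))
    b = sxv / sxx if sxx else 0.0  # a single column has no x-gradient
    c = syv / syy if syy else 0.0  # a single row has no y-gradient
    return a, b, c


def reduced_features(grid, ph, pw):
    """Split the sensor grid into ph x pw patches and keep (a, b, c) per patch."""
    rows, cols = len(grid), len(grid[0])
    if rows % ph or cols % pw:
        raise ValueError("grid must split evenly into patches")
    feats = []
    for r0 in range(0, rows, ph):
        for c0 in range(0, cols, pw):
            patch = [row[c0:c0 + pw] for row in grid[r0:r0 + ph]]
            feats.extend(fit_plane(patch))
    return feats


# Hypothetical 3 x 4 patch whose values form an exact plane.
demo = [[1 + 2 * j + 3 * i for j in range(4)] for i in range(3)]
print(fit_plane(demo))
```

For example, a hypothetical 8 × 8 sensor grid split into four 4 × 4 patches is reduced from 64 raw values to 12 features. Centring the coordinates is the design choice that keeps each fit a handful of sums, which suits real-time use.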

Main results: Using training data from single actions only, i.e., power grasp or wrist movements, subjects achieved an average success rate of 70.0% in the target achievement test using ridge regression. When wrist actions were combined, e.g., pronating and flexing the wrist simultaneously, results were similar, with an average of 68.1%. When a power grasp is added to the pool of actions, combined actions are much more difficult to achieve (36.1%).
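The idea of training on single actions and predicting combined ones can be illustrated with a minimal closed-form ridge regression, W = (XᵀX + λI)⁻¹XᵀY, written in plain Python. The sketch rests on a loudly labelled idealisation: each DoF is assumed to produce a fixed sensor pattern (the vectors `GRASP` and `ROTATION` below are invented), and a combined action is assumed to produce the sum of its single-action patterns. Under that assumption a linear model fitted only to single actions generalises to combinations; real tactile myography data need not satisfy it, as the lower success rate reported when a power grasp is added suggests. Only two DoFs are used here for brevity, and no intercept is fitted.

```python
def solve(A, B):
    """Solve A X = B by Gauss-Jordan elimination with partial pivoting.
    A is n x n, B is n x k (lists of lists)."""
    n = len(A)
    M = [list(A[i]) + list(B[i]) for i in range(n)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]  # normalise pivot row
        for r in range(n):
            if r != col:
                f = M[r][col]
                M[r] = [vr - f * vc for vr, vc in zip(M[r], M[col])]
    return [row[n:] for row in M]


def ridge_fit(X, Y, lam):
    """Closed-form ridge regression W = (X^T X + lam*I)^-1 X^T Y, no intercept."""
    p, k = len(X[0]), len(Y[0])
    XtX = [[sum(x[i] * x[j] for x in X) + (lam if i == j else 0.0)
            for j in range(p)] for i in range(p)]
    XtY = [[sum(x[i] * y[j] for x, y in zip(X, Y)) for j in range(k)]
           for i in range(p)]
    return solve(XtX, XtY)


def predict(W, x):
    """Map one sensor vector x to k DoF activations."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(len(W[0]))]


# Hypothetical per-DoF sensor patterns (4 sensors); not real TMG data.
GRASP = [1.0, 0.8, 0.1, 0.0]
ROTATION = [0.0, 0.2, 0.9, 0.6]

# Training set: single actions only, at several activation levels.
X, Y = [], []
for level in (0.25, 0.5, 0.75, 1.0):
    X.append([level * g for g in GRASP])
    Y.append([level, 0.0])
    X.append([level * r for r in ROTATION])
    Y.append([0.0, level])

W = ridge_fit(X, Y, lam=1e-3)

# Combined action under the superposition assumption.
combined = [0.5 * g + 0.5 * r for g, r in zip(GRASP, ROTATION)]
print([round(v, 3) for v in predict(W, combined)])
```

Because the invented data satisfy superposition exactly, the printed activations should both be close to 0.5; the small λ only makes XᵀX invertible here, since four sensors observe just two underlying patterns.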

Significance: To the best of our knowledge, this is the first time the effectiveness of tactile myography on single and combined actions has been evaluated in a target achievement test. The present study covers control of three degrees of freedom (DoFs) instead of the two generally used in the literature. Additionally, we define a set of physiologically plausible muscle activation limits valid for most experiments of this kind.