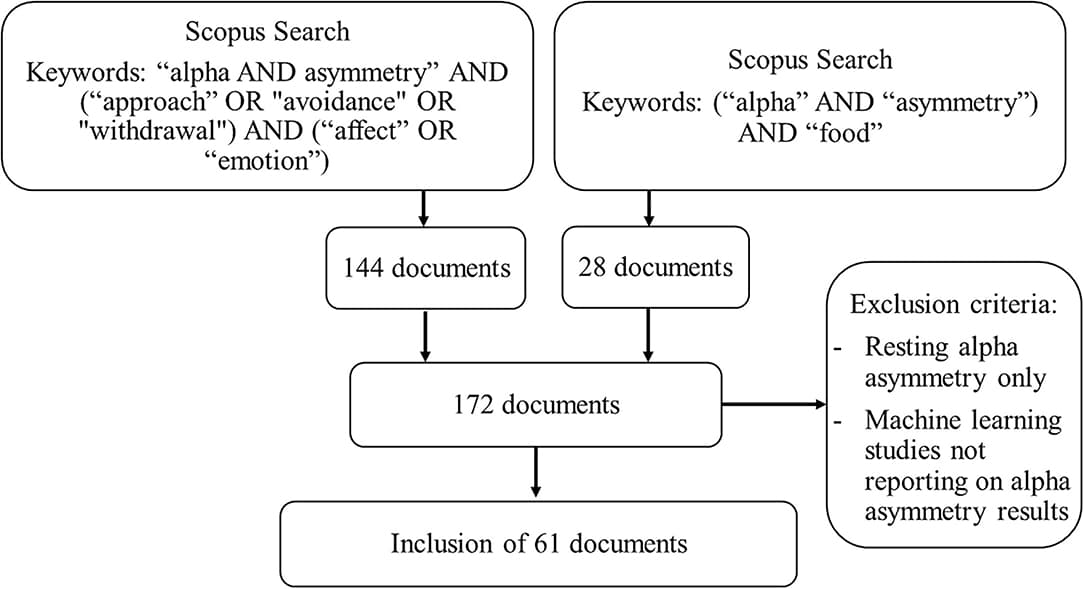

Frontal alpha asymmetry refers to the difference between right and left alpha activity over the frontal brain region. Increased activity in the left hemisphere has been linked to approach motivation, and increased activity in the right hemisphere has been linked to avoidance or withdrawal. However, research on alpha asymmetry is diverse and has shown mixed results. This may partly be explained by how potent the stimuli used were at emotionally and motivationally engaging participants. This review gives an overview of the types of affective stimuli used, with the aim of identifying which stimuli elicit a strong approach-avoidance effect in an affective context. We hope this contributes to a better understanding of what alpha asymmetry reflects and of the circumstances in which it may be an informative marker of emotional state. We systematically searched the literature for studies exploring event-related frontal alpha asymmetry in affective contexts. The search yielded 61 papers, which were sorted into five stimulus categories expected to differ in their potency to engage participants: images & sounds, videos, real cues, games, and other tasks. Studies were evaluated with respect to the potency of the stimuli to evoke significant approach-avoidance effects, both on their own and in interaction with participant characteristics or condition. As expected, passively perceived stimuli that are multimodal or realistic seem more potent at eliciting alpha asymmetry than unimodal stimuli. Games and other stimuli with a strong task-based component were expected to be relatively engaging. However, their approach-avoidance effects did not seem much clearer than those of studies using perception of videos and real cues.
Although multiple factors besides stimulus characteristics determine alpha asymmetry, and we did not identify a type of affective stimulus that induces it highly consistently, our results indicate that strongly engaging, salient, and/or personally relevant stimuli are important for inducing an approach-avoidance effect.
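A common way to quantify frontal alpha asymmetry is the log-difference of alpha-band power at homologous frontal electrodes, e.g. ln(P_F4) − ln(P_F3). The review does not specify an index, so this formula, the 8–13 Hz band, and the helper names below are assumptions for illustration. The sketch also assumes the usual convention that alpha power is inversely related to cortical activity. Under that convention, a positive score is read as relatively greater *left* frontal activity, which is associated with approach. Band power here comes from a plain, unwindowed periodogram computed with a direct DFT. Real EEG pipelines typically use Welch's method with windowing, artifact rejection, and referencing choices that this sketch omits.

```python
import cmath
import math


def band_power(signal, fs, f_lo, f_hi):
    """Sum of periodogram power over DFT bins whose frequency lies in [f_lo, f_hi] Hz.

    Rectangular window, no averaging: a simplified stand-in for Welch's method.
    """
    n = len(signal)
    total = 0.0
    for k in range(n // 2 + 1):           # non-negative frequency bins only
        freq = k * fs / n
        if f_lo <= freq <= f_hi:
            # DFT coefficient at bin k
            coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                        for i, x in enumerate(signal))
            total += abs(coeff) ** 2
    return total


def frontal_alpha_asymmetry(left, right, fs, band=(8.0, 13.0)):
    """Hypothetical helper: ln(right alpha power) - ln(left alpha power), e.g. F4 vs F3.

    Assumes alpha power is inversely related to activity, so a positive value
    is conventionally interpreted as relatively greater LEFT frontal activity.
    """
    p_left = band_power(left, fs, *band)
    p_right = band_power(right, fs, *band)
    return math.log(p_right) - math.log(p_left)


if __name__ == "__main__":
    fs = 256                                    # sampling rate in Hz (assumed)
    t = [i / fs for i in range(2 * fs)]         # 2 s of synthetic data
    f3 = [1.0 * math.sin(2 * math.pi * 10 * ti) for ti in t]  # left, 10 Hz alpha
    f4 = [2.0 * math.sin(2 * math.pi * 10 * ti) for ti in t]  # right, twice the amplitude
    print(round(frontal_alpha_asymmetry(f3, f4, fs), 3))      # 1.386 (= ln 4)
```

Doubling the amplitude quadruples the power, so the score is ln 4. Taking logs makes the index a ratio of powers, which keeps it comparable across participants whose overall alpha power differs.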

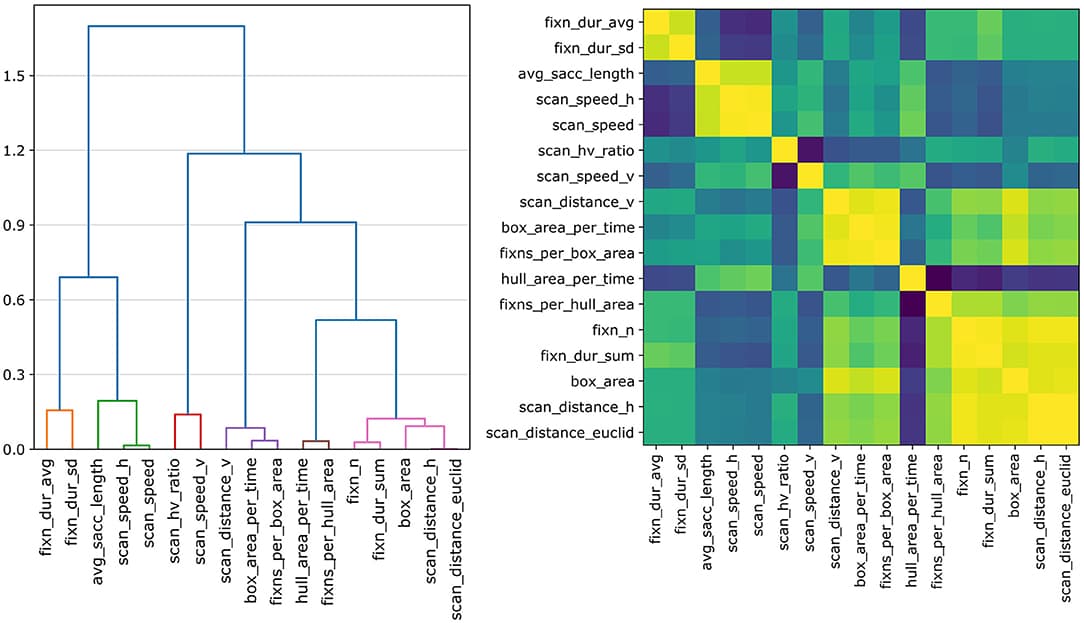

Different modalities often capture distinct aspects of a particular mental state or activity. Machine learning algorithms can reliably predict numerous aspects of human cognition and behavior from a single modality, but they can also benefit from combining multiple modalities. This is why hybrid BCIs are gaining popularity. However, combining features from a multimodal dataset is not always straightforward. Along with the method for generating the features, one must decide at which stage of the classification process the modalities should be combined. In this study, we compare unimodal EEG and eye-tracking classification of internally and externally directed attention with multimodal early, middle, and late fusion approaches. On a binary dataset with a chance level of 0.5, late fusion achieves the highest classification accuracy (95% confidence interval: 0.609–0.675). In general, the results indicate that middle or late fusion approaches are better suited to these modalities than early fusion. Validating the observed trend will require additional datasets, alternative feature-generation mechanisms, decision rules, and neural network designs. We conclude with a set of premises to consider when choosing a multimodal attentional state classification approach.
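The fusion stages compared above differ in *where* the modalities meet. Early (feature-level) fusion concatenates the EEG and eye-tracking feature vectors before a single classifier. Late (decision-level) fusion combines the outputs of separately trained unimodal classifiers. The following is a minimal sketch of both, assuming each unimodal classifier already outputs a probability of "internal" attention for a trial. The function names, the weighted-mean combination, and the 0.5 threshold are hypothetical choices, not the study's implementation. Middle fusion, which joins intermediate representations such as hidden-layer outputs of modality-specific networks, is not shown.

```python
def early_fusion(eeg_features, eye_features):
    """Early (feature-level) fusion: concatenate per-trial feature vectors
    so that a single classifier sees both modalities at once."""
    return list(eeg_features) + list(eye_features)


def late_fusion(p_eeg, p_eye, w_eeg=0.5):
    """Late (decision-level) fusion: weighted mean of the P(internal) values
    produced by two separately trained unimodal classifiers.

    w_eeg is a hypothetical weighting parameter; 0.5 weights both equally.
    """
    return w_eeg * p_eeg + (1.0 - w_eeg) * p_eye


def decide(p_internal, threshold=0.5):
    """Hypothetical binary decision rule applied to the fused probability."""
    return "internal" if p_internal > threshold else "external"


if __name__ == "__main__":
    fused_vec = early_fusion([0.2, 1.3], [0.7])   # one feature vector for one classifier
    p = late_fusion(p_eeg=0.62, p_eye=0.48)        # fused probability, about 0.55
    print(fused_vec, decide(p))                    # [0.2, 1.3, 0.7] internal
```

Late fusion lets each modality keep its own feature scaling and classifier, and a weak modality can be down-weighted through `w_eeg`. Early fusion forces one model to handle heterogeneous features jointly.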

In future conditionally automated driving, drivers may be asked to take over control of the car while it is driving autonomously. Performing a non-driving-related task could degrade their takeover performance, which could be detected by continuously assessing drivers' mental load. To this end, three physiological signals were collected from 80 subjects during 1 h of conditionally automated driving in a simulator. Participants were asked to perform a non-driving cognitive task (N-back) for 90 s, 15 times during the drive. The modality and difficulty of the task were experimentally manipulated. The experiment yielded a dataset of drivers' physiological indicators during the task sequences, which was used to predict drivers' workload. This was done by classifying task difficulty (three classes) and by regressing the subjective workload that participants reported after each task (on a 0–20 scale). Classification of task modality was also studied. For each task, the effects of sensor fusion and of task performance were studied. The implemented pipeline used repeated cross-validation with grid search, applied to three machine learning algorithms. Three levels of mental load could be classified with an F1-score of 0.713 using skin conductance and respiration signals as inputs to a random forest classifier. The best regression model, using all three signals, predicted the subjective level of workload with a mean absolute error of 3.195. Model accuracy increased with participants' task performance. However, classification of task modality (visual or auditory) was not successful. Some physiological indicators, such as estimates of respiratory sinus arrhythmia, respiratory amplitude, and temporal indices of heart rate variability, were found to be relevant measures of mental workload. These indicators should be preferred for ongoing assessment of driver workload in automated driving.
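The abstract names "temporal indices of heart rate variability" without listing them. RMSSD (root mean square of successive RR-interval differences) and SDNN (standard deviation of RR intervals) are two standard time-domain indices. The sketch below computes them, plus mean heart rate, from a list of RR intervals in milliseconds. It assumes the intervals are already artifact-free, for example extracted from one 90 s task window. Which indices and windowing the study actually used is not stated, and respiratory sinus arrhythmia is not covered here.

```python
import math
import statistics


def rmssd(rr_ms):
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def sdnn(rr_ms):
    """Sample standard deviation of the RR (NN) intervals (ms)."""
    return statistics.stdev(rr_ms)


def mean_heart_rate(rr_ms):
    """Mean heart rate in beats per minute from RR intervals in ms."""
    return 60000.0 / statistics.mean(rr_ms)


if __name__ == "__main__":
    rr = [800, 810, 790, 820]   # hypothetical RR intervals in ms
    print(round(rmssd(rr), 2), round(sdnn(rr), 2), round(mean_heart_rate(rr), 1))
    # 21.6 12.91 74.5
```

RMSSD reflects beat-to-beat (short-term) variability, while SDNN reflects overall variability in the window. Computing them per task window yields one feature per index that a classifier or regressor can use.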

![Aggregated disorientation events, accelerometry, and walking speed by age group by condition. Disorientation events (upper row), ankle worn accelerometric signal (middle row), and walking speed mean (lower row) according to age group and condition [control (C) vs. experimental (E)]. Bars show mean and 95% credibility intervals.](https://www.frontiersin.org/_rtmag/_next/image?url=https%3A%2F%2Fwww.frontiersin.org%2Ffiles%2FArticles%2F882446%2Ffpsyg-13-882446-HTML%2Fimage_m%2Ffpsyg-13-882446-g001.jpg&w=3840&q=75)