In this paper, we introduce a deep learning model that classifies children as either healthy or potentially having autism with 94.6% accuracy. Patients with autism struggle with social skills, repetitive behaviors, and both verbal and non-verbal communication. Although the disease is considered to be genetic, the highest rates of accurate diagnosis occur when the child is tested on behavioral characteristics and facial features. Patients share a common pattern of distinct facial deformities, allowing researchers to analyze only an image of the child to determine if the child has the disease. While other techniques and models exist for facial analysis and for autism classification separately, our proposal bridges these two ideas, enabling cheaper and more efficient classification. Our deep learning model uses MobileNet and two dense layers to perform feature extraction and image classification. The model is trained and tested using 3,014 images, evenly split between children with autism and children without it; 90% of the data is used for training and 10% for testing. Based on our accuracy, we propose that autism can be diagnosed effectively using only a picture. Additionally, there may be other diseases that are similarly diagnosable.
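
The 90%/10% split of the 3,014 balanced images can be illustrated with a minimal sketch. The abstract does not say whether the split was stratified by class, how fractional counts were rounded, or which random seed was used, so the per-class stratification, the `round` call, the seed, and the function name `stratified_split` below are all assumptions made for illustration.

```python
import random


def stratified_split(labels, test_fraction=0.1, seed=0):
    """Return (train_idx, test_idx), holding out test_fraction of each class.

    Stratifying by class is an assumption; the abstract only states 90%/10%.
    """
    rng = random.Random(seed)

    # Group sample indices by their class label.
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)

    train_idx, test_idx = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        # Rounding rule is an assumption; other rules shift a few samples.
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return train_idx, test_idx


# 3,014 images, evenly split between the two classes (1,507 each).
labels = ["autism"] * 1507 + ["control"] * 1507
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))
```

Under these assumptions each class contributes the same number of held-out images, so the test set stays balanced like the full dataset.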

Over the last few decades, the electroencephalogram (EEG) has become one of the most vital tools used by physicians to diagnose neurological disorders of the human brain and, in particular, to detect seizures. Because of the peculiar nature of epileptic seizures and their consequent impact on the quality of life of patients, the precise diagnosis of epilepsy is essential. Therefore, this article proposes a novel deep-learning approach for detecting seizures in pediatric patients based on the classification of minimally pre-processed raw multichannel EEG recordings. The new approach takes advantage of the automatic feature learning capabilities of a two-dimensional deep convolution autoencoder (2D-DCAE) linked to a neural network-based classifier to form a unified system that is trained in a supervised way to achieve the best classification accuracy between ictal and interictal brain state signals. To test and evaluate our approach, two models were designed and assessed using three different EEG data segment lengths and a 10-fold cross-validation scheme. Based on five evaluation metrics, the best performing model was a supervised deep convolutional autoencoder (SDCAE) model that uses a bidirectional long short-term memory (Bi-LSTM)-based classifier and an EEG segment length of 4 s. Using the public dataset collected from the Children’s Hospital Boston (CHB) and the Massachusetts Institute of Technology (MIT), this model obtained 98.79 ± 0.53% accuracy, 98.72 ± 0.77% sensitivity, 98.86 ± 0.53% specificity, 98.86 ± 0.53% precision, and an F1-score of 98.79 ± 0.53%. Based on these results, our new approach is one of the most effective seizure detection methods among existing state-of-the-art methods applied to the same dataset.
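
The five evaluation metrics named above follow from a binary confusion matrix, with ictal segments taken as the positive class. The sketch below uses the standard definitions and summarizes per-fold scores as mean ± standard deviation. The function names, the example counts, and the use of the sample standard deviation are illustrative assumptions, not details taken from the article.

```python
import statistics


def seizure_metrics(tp, fp, tn, fn):
    """Standard binary metrics; 'positive' is assumed to mean ictal."""
    sensitivity = tp / (tp + fn)   # recall on ictal segments
    specificity = tn / (tn + fp)   # recall on interictal segments
    precision = tp / (tp + fp)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
    }


def summarize_folds(scores):
    """Mean and sample standard deviation of one metric across folds."""
    return statistics.mean(scores), statistics.stdev(scores)


# Hypothetical confusion-matrix counts for a single fold.
fold = seizure_metrics(tp=90, fp=10, tn=80, fn=20)

# Hypothetical per-fold accuracies (three folds shown for brevity).
mean_acc, std_acc = summarize_folds([0.98, 0.99, 1.00])
```

With a 10-fold scheme, `summarize_folds` would receive ten values per metric, which is one way to arrive at figures reported in the "mean ± deviation" form.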

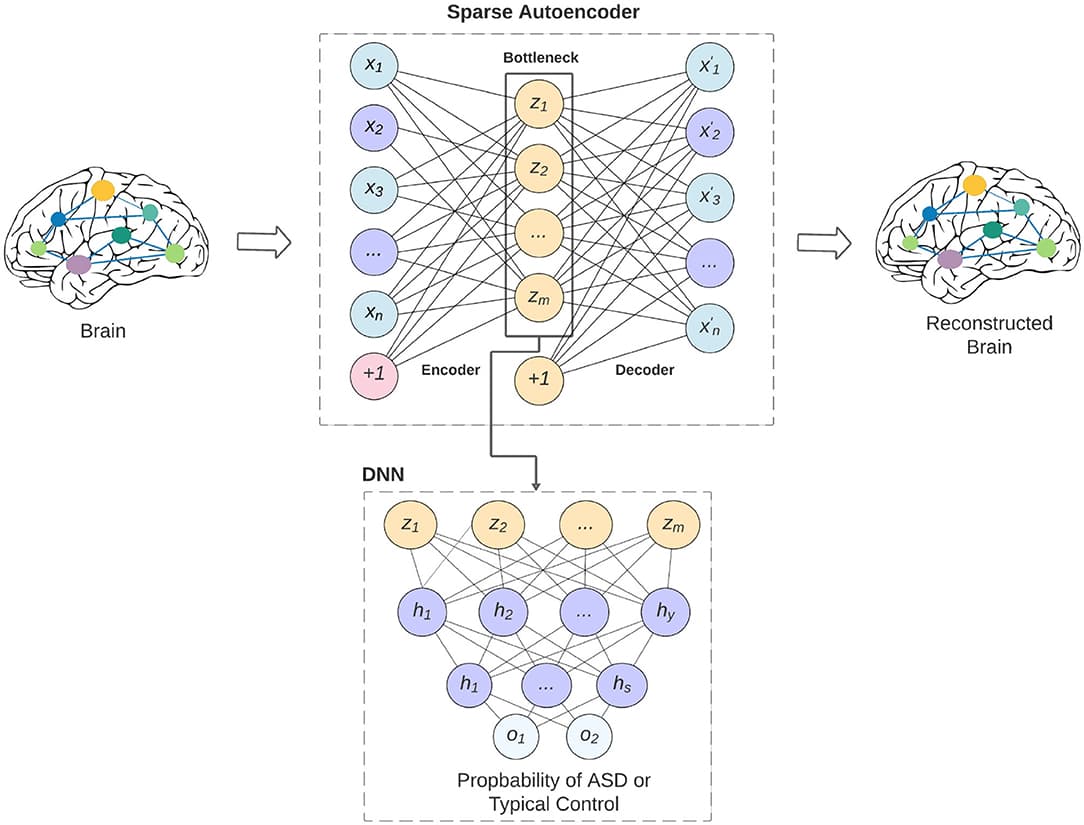

Autism spectrum disorder (ASD) is a heterogeneous neurodevelopmental disorder characterized by impaired communication and limited social interactions. The shortcomings of current clinical approaches, which are based exclusively on behavioral observation of symptomology, and the poor understanding of the neurological mechanisms underlying ASD necessitate the identification of new biomarkers that can aid the study of brain development and functioning and can lead to accurate and early detection of ASD. In this paper, we developed a deep-learning model called ASD-SAENet for classifying patients with ASD from typical control subjects using fMRI data. We designed and implemented a sparse autoencoder (SAE) that yields optimized extraction of features for classification. These features are then fed into a deep neural network (DNN), which results in superior classification of the fMRI brain scans that are more prone to ASD. Our proposed model is trained to optimize the classifier while improving the extracted features based on both the reconstructed data error and the classifier error. We evaluated our proposed deep-learning model using the publicly available Autism Brain Imaging Data Exchange (ABIDE) dataset, which was collected from 17 different research centers and includes more than 1,035 subjects. Our extensive experimentation demonstrates that ASD-SAENet exhibits comparable accuracy (70.8%) and superior specificity (79.1%) for the whole dataset as compared to other methods. Further, our experiments demonstrate superior results as compared to other state-of-the-art methods on 12 of the 17 imaging centers, exhibiting superior generalizability across different data acquisition sites and protocols. The implemented code is available on the GitHub portal of our lab at: https://github.com/pcdslab/ASD-SAENet.
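
Training on both the reconstruction error and the classifier error can be pictured as minimizing one combined objective. The sketch below is a generic illustration and not the ASD-SAENet implementation: the mean-squared reconstruction error, the KL-divergence sparsity penalty, the binary cross-entropy term, the weight `beta`, the target sparsity `rho`, and every function name are assumptions chosen because they are common in sparse-autoencoder formulations.

```python
import math


def mse(x, x_hat):
    """Mean squared reconstruction error between input and reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def kl_sparsity(mean_activations, rho=0.05):
    """Sum of KL(rho || rho_hat) over hidden units.

    Each rho_hat (mean activation of a hidden unit) must lie strictly in (0, 1).
    """
    total = 0.0
    for rho_hat in mean_activations:
        total += rho * math.log(rho / rho_hat)
        total += (1 - rho) * math.log((1 - rho) / (1 - rho_hat))
    return total


def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Classifier error for one subject (1 = ASD, 0 = typical control)."""
    p = min(max(p_pred, eps), 1 - eps)  # clamp to avoid log(0)
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1 - p))


def joint_loss(x, x_hat, mean_activations, y_true, p_pred, beta=1.0, rho=0.05):
    """Reconstruction error + weighted sparsity penalty + classifier error."""
    return (
        mse(x, x_hat)
        + beta * kl_sparsity(mean_activations, rho)
        + binary_cross_entropy(y_true, p_pred)
    )


# Hypothetical values: a two-feature input, two hidden units, one label.
loss = joint_loss(
    x=[0.2, 0.8],
    x_hat=[0.25, 0.7],
    mean_activations=[0.05, 0.10],
    y_true=1,
    p_pred=0.9,
)
```

Because all three terms share the encoder's parameters, gradients from the classifier term would also shape the extracted features, which is the behavior the abstract describes.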