Restricted Boltzmann Machines (RBMs) and Deep Belief Networks have been demonstrated to perform efficiently in a variety of applications, such as dimensionality reduction, feature learning, and classification. Their implementation on neuromorphic hardware platforms emulating large-scale networks of spiking neurons can have significant advantages from the perspectives of scalability, power dissipation, and real-time interfacing with the environment. However, the traditional RBM architecture and the commonly used training algorithm known as Contrastive Divergence (CD) are based on discrete updates and exact arithmetic, which do not map directly onto a dynamical neural substrate. Here, we present an event-driven variation of CD to train an RBM constructed with Integrate & Fire (I&F) neurons, constrained by the limitations of existing and near-future neuromorphic hardware platforms. Our strategy is based on neural sampling, which allows us to synthesize a spiking neural network that samples from a target Boltzmann distribution. The recurrent activity of the network replaces the discrete steps of the CD algorithm, while Spike Time Dependent Plasticity (STDP) carries out the weight updates in an online, asynchronous fashion. We demonstrate our approach by training an RBM composed of leaky I&F neurons with STDP synapses to learn a generative model of the MNIST handwritten digit dataset, and by testing it in recognition, generation, and cue integration tasks. Our results contribute to a machine learning-driven approach for synthesizing networks of spiking neurons capable of carrying out practical, high-level functionality.
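
For contrast with the event-driven scheme, the following is a minimal sketch of the conventional, discrete-step CD update (CD-1) for a small binary RBM. It is not the spiking, STDP-based variant presented here. All function names, the weight layout `W[i][j]`, and the learning rate `lr` are assumptions made for illustration.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def hidden_probs(v, W, b_h):
    # P(h_j = 1 | v); W[i][j] couples visible unit i to hidden unit j (assumed layout).
    return [sigmoid(b_h[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(b_h))]


def visible_probs(h, W, b_v):
    # P(v_i = 1 | h), using the same symmetric weights.
    return [sigmoid(b_v[i] + sum(W[i][j] * h[j] for j in range(len(h))))
            for i in range(len(b_v))]


def sample(probs, rng):
    # Draw one binary state per unit from its Bernoulli probability.
    return [1 if rng.random() < p else 0 for p in probs]


def cd1_update(v0, W, b_v, b_h, lr, rng):
    """Apply one conventional CD-1 step for a single binary data vector v0, in place."""
    ph0 = hidden_probs(v0, W, b_h)               # positive ("data") phase
    h0 = sample(ph0, rng)
    v1 = sample(visible_probs(h0, W, b_v), rng)  # one Gibbs step: reconstruction
    ph1 = hidden_probs(v1, W, b_h)               # negative ("reconstruction") phase
    for i in range(len(v0)):
        for j in range(len(b_h)):
            # Difference of data and reconstruction correlations.
            W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
    for i in range(len(v0)):
        b_v[i] += lr * (v0[i] - v1[i])
    for j in range(len(b_h)):
        b_h[j] += lr * (ph0[j] - ph1[j])
    return v1


if __name__ == "__main__":
    rng = random.Random(0)
    W = [[0.0, 0.0] for _ in range(3)]  # 3 visible units, 2 hidden units
    b_v, b_h = [0.0] * 3, [0.0] * 2
    cd1_update([1, 0, 1], W, b_v, b_h, lr=0.1, rng=rng)
    print(W)
```

Each call performs the two clocked phases (data, then reconstruction) in sequence and applies an exact arithmetic weight change. These are the discrete steps that the recurrent network activity and STDP are meant to replace.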

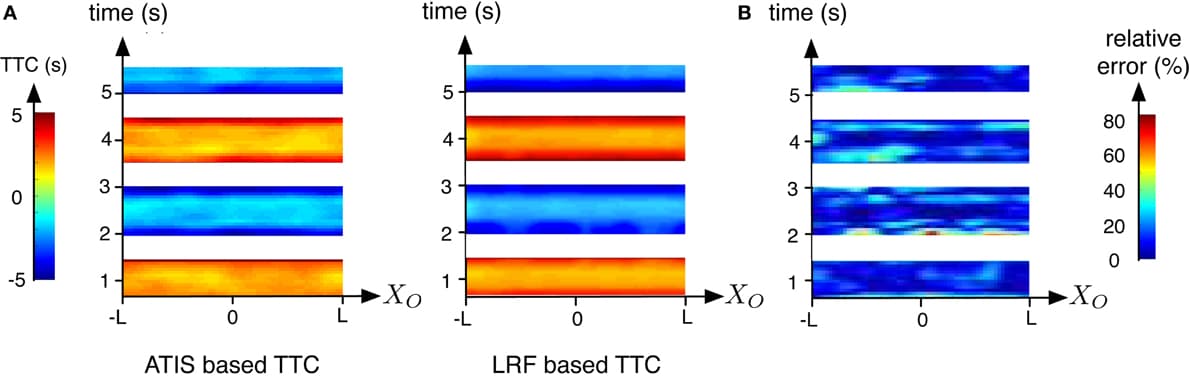

Conventional vision-based robotic systems that must operate quickly require high video frame rates and consequently incur high computational costs. Visual response latencies are bounded below by the frame period, e.g., 20 ms for a 50 Hz frame rate. This paper shows how an asynchronous neuromorphic dynamic vision sensor (DVS) silicon retina is used to build a fast self-calibrating robotic goalie, which offers high update rates and low latency at low CPU load. Independent and asynchronous per-pixel illumination-change events from the DVS signify moving objects and are used in software to track multiple balls. Motor actions to block the most “threatening” ball are based on measured ball positions and velocities. The goalie also sees its single-axis goalie arm and calibrates the motor output map during idle periods so that it can plan open-loop arm movements to desired visual locations. Blocking capability is about 80% for balls shot from 1 m from the goal, even with the fastest shots, and approaches 100% when the ball does not outrun the servo motor's ability to move the arm to the necessary position in time. Running with standard USB buses under a standard preemptive multitasking operating system (Windows), the goalie robot achieves median update rates of 550 Hz, with latencies of 2.2 ± 2 ms from ball movement to motor command at a peak CPU load of less than 4%. Practical observations and measurements of USB device latency are provided.
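
The frame-period bound quoted above is simple arithmetic, sketched here for reference. The helper name `period_ms` is an assumption for illustration. The 550 Hz figure gives only the interval between tracker updates; the end-to-end latency is the separately measured 2.2 ± 2 ms.

```python
def period_ms(rate_hz):
    """Return the interval between successive updates, in milliseconds."""
    return 1000.0 / rate_hz


if __name__ == "__main__":
    # Frame-based camera at 50 Hz: a change can wait up to one full frame period.
    print(period_ms(50.0))   # 20.0
    # Event-driven goalie at a median 550 Hz update rate: roughly 1.8 ms between updates.
    print(round(period_ms(550.0), 2))
```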

Deep Belief Networks (DBNs) have recently shown impressive performance on a broad range of classification problems. Their generative properties allow better understanding of the performance, and provide a simpler solution for sensor fusion tasks. However, because of their inherent need for feedback and parallel update of large numbers of units, DBNs are expensive to implement on serial computers. This paper proposes a method based on the Siegert approximation for Integrate-and-Fire neurons to map an offline-trained DBN onto an efficient event-driven spiking neural network suitable for hardware implementation. The method is demonstrated in simulation and by a real-time implementation of a 3-layer network with 2694 neurons used for visual classification of MNIST handwritten digits with input from a 128 × 128 Dynamic Vision Sensor (DVS) silicon retina, and sensory fusion using additional input from a 64-channel AER-EAR silicon cochlea. The system is implemented using the open-source software of the jAER project and runs in real time on a laptop computer. It is demonstrated that the system can recognize digits in the presence of distractions, noise, scaling, translation, and rotation, and that the degradation of recognition performance caused by using an event-based approach is less than 1%. Recognition is achieved in an average of 5.8 ms after the onset of the presentation of a digit. By cue integration from both silicon retina and cochlea outputs, we show that the system can be biased to select the correct digit from otherwise ambiguous input.
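
To illustrate what "event-driven" means at the level of a single unit, here is a minimal sketch of a leaky Integrate-and-Fire neuron that is updated only when an input spike arrives. It does not implement the Siegert-based mapping or the jAER system. The class name, the parameter values, the zero resting potential, and the absence of a refractory period are all assumptions for illustration.

```python
import math


class EventDrivenLIF:
    """Leaky integrate-and-fire unit updated only on input events (illustrative)."""

    def __init__(self, tau_ms=20.0, threshold=1.0, v_reset=0.0):
        self.tau_ms = tau_ms        # membrane time constant (assumed value)
        self.threshold = threshold  # firing threshold (assumed value)
        self.v_reset = v_reset      # potential after a spike
        self.v = v_reset            # membrane potential; resting level taken as 0
        self.last_t_ms = 0.0        # time of the most recent input event

    def receive(self, t_ms, weight):
        """Process one input spike at time t_ms; return True if the unit fires.

        Events are assumed to arrive in non-decreasing time order.
        """
        dt = t_ms - self.last_t_ms
        self.v *= math.exp(-dt / self.tau_ms)  # exponential leak since the last event
        self.last_t_ms = t_ms
        self.v += weight                       # instantaneous synaptic kick
        if self.v >= self.threshold:
            self.v = self.v_reset
            return True
        return False


if __name__ == "__main__":
    neuron = EventDrivenLIF(tau_ms=10.0, threshold=1.0)
    for t_ms in (0.0, 1.0, 100.0):
        print(t_ms, neuron.receive(t_ms, 0.6))
```

No computation happens between events, so the cost scales with the number of spikes rather than with a fixed simulation time step.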