EEG-based brain-computer interfaces (BCIs) face basic challenges in real-world applications. The technical difficulties in developing truly wearable BCI systems that are capable of making reliable real-time predictions of users' cognitive states in dynamic real-life situations may seem almost insurmountable at times. Fortunately, recent advances in miniature sensors, wireless communication and distributed computing technologies have offered promising ways to bridge these chasms. In this paper, we report an attempt to develop a pervasive on-line EEG-BCI system using state-of-the-art technologies including multi-tier Fog and Cloud Computing, semantic Linked Data search, and adaptive prediction/classification models. To verify our approach, we implemented a pilot system by employing wireless dry-electrode EEG headsets and MEMS motion sensors as the front-end devices, Android mobile phones as the personal user interfaces, compact personal computers as the near-end Fog Servers and the computer clusters hosted by the Taiwan National Center for High-performance Computing (NCHC) as the far-end Cloud Servers. We succeeded in conducting synchronous multi-modal global data streaming in March 2013 and then in running a multi-player on-line EEG-BCI game in September 2013. We are currently working with the ARL Translational Neuroscience Branch to use our system in real-life personal stress monitoring and with the UCSD Movement Disorder Center to conduct in-home Parkinson's disease patient monitoring experiments. We shall proceed to develop the necessary BCI ontology and introduce automatic semantic annotation and progressive model refinement capability to our system.

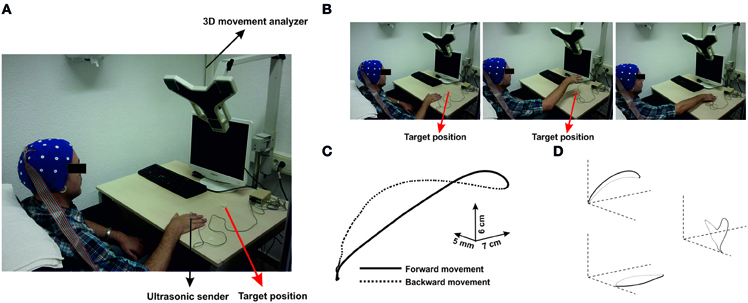

EEG involves the recording, analysis, and interpretation of voltages recorded on the human scalp which originate from brain gray matter. EEG is one of the most popular methods of studying and understanding the processes that underlie behavior. This is because EEG is relatively cheap, easy to wear, lightweight and has high temporal resolution. Behavior here encompasses actions, such as movements, that are performed in response to the environment. However, methodological difficulties such as movement artifacts can occur when recording EEG during movement. Thus, most studies of the human brain have examined activations during static conditions. This article attempts to compile and describe relevant methodological solutions that have emerged for measuring body and brain dynamics during motion. These descriptions cover suggestions on how to avoid and reduce motion artifacts, as well as hardware, software and techniques for synchronously recording EEG, EMG, kinematics, kinetics, and eye movements during motion. Additionally, we present various recording systems, EEG electrodes, caps and methods for determining real/custom electrode positions. We conclude that it is possible to record and analyze synchronized brain and body dynamics related to movement or exercise tasks.

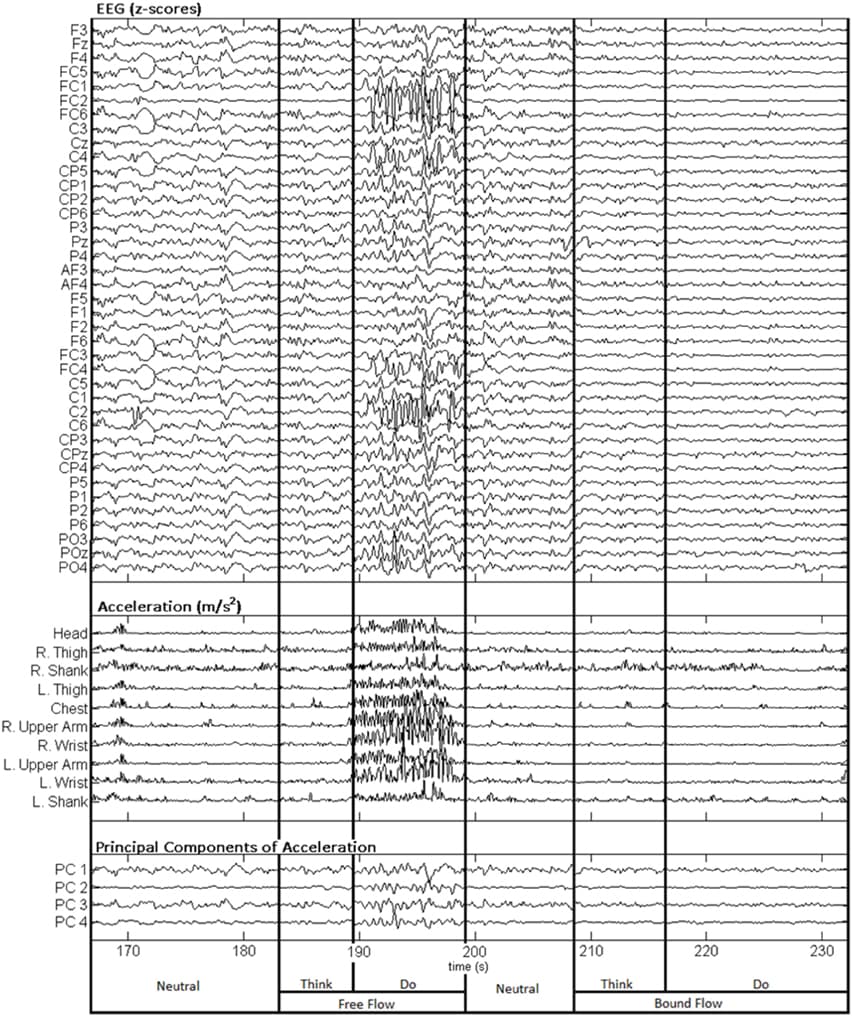

A new paradigm for human brain imaging, mobile brain/body imaging (MoBI), involves synchronous collection of human brain activity (via electroencephalography, EEG) and behavior (via body motion capture, eye tracking, etc.), plus environmental events (scene and event recording), to study the joint brain/body dynamics supporting natural human cognition and the performance of naturally motivated human actions and interactions in 3-D environments (Makeig et al., 2009). Processing complex, concurrent, multi-modal, multi-rate data streams requires a signal-processing environment quite different from one designed to process single-modality time series data. Here we describe MoBILAB (more details available at sccn.ucsd.edu/wiki/MoBILAB), an open-source, cross-platform toolbox running on MATLAB (The Mathworks, Inc.) that supports analysis and visualization of any mixture of synchronously recorded brain, behavioral, and environmental time series plus time-marked event stream data. MoBILAB can serve as a pre-processing environment for adding behavioral and other event markers to EEG data for further processing, and/or as a development platform for expanded analysis of simultaneously recorded data streams.
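One step that multi-rate processing typically needs is bringing streams sampled at different rates onto a common time base. The sketch below is only an illustration of that idea, written in Python with the standard library; it is not MoBILAB code and does not reflect MoBILAB's API. The function name `interp_to`, the sampling rates, and the sample values are all hypothetical. It linearly interpolates a slow motion-capture channel at the timestamps of a faster EEG stream.

```python
from bisect import bisect_left


def interp_to(src_times, src_values, dst_times):
    """Linearly interpolate (src_times, src_values) at dst_times.

    Assumes src_times is strictly increasing. Destination times outside
    the source range are clamped to the nearest edge value.
    """
    out = []
    for t in dst_times:
        if t <= src_times[0]:
            out.append(src_values[0])
            continue
        if t >= src_times[-1]:
            out.append(src_values[-1])
            continue
        j = bisect_left(src_times, t)        # first index with src_times[j] >= t
        t0, t1 = src_times[j - 1], src_times[j]
        v0, v1 = src_values[j - 1], src_values[j]
        w = (t - t0) / (t1 - t0)             # fractional position between samples
        out.append(v0 + w * (v1 - v0))
    return out


# Hypothetical streams: EEG timestamps at 8 Hz, motion capture at 2 Hz.
eeg_times = [i / 8 for i in range(9)]        # 0.0, 0.125, ..., 1.0 s
mocap_times = [0.0, 0.5, 1.0]
mocap_x = [0.0, 1.0, 0.0]                    # one marker coordinate

mocap_on_eeg_clock = interp_to(mocap_times, mocap_x, eeg_times)
print(mocap_on_eeg_clock)
```

Linear interpolation is the simplest choice here; real recordings may also need clock-offset and drift correction before resampling, which this sketch leaves out.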

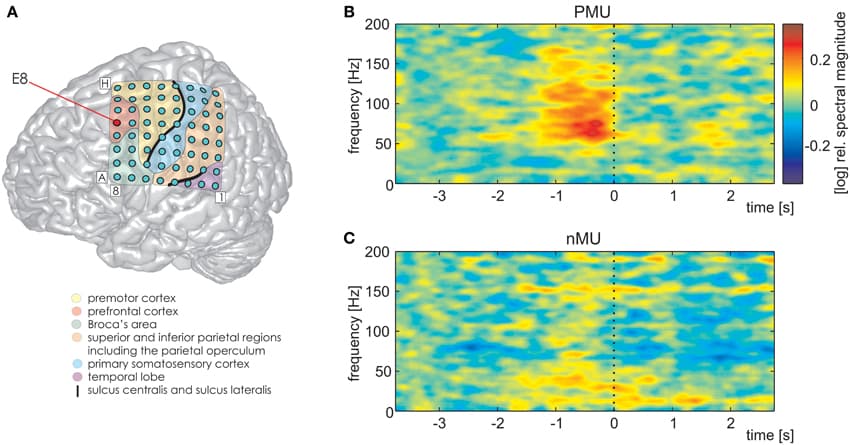

Voluntary drive is crucial for motor learning; therefore, we are interested in the role that motor planning plays in gait movements. In this study we examined the impact of an interactive Virtual Environment (VE) feedback task on EEG patterns during robot-assisted walking. We compared walking in the VE modality to two control conditions: walking with a visual attention paradigm, in which visual stimuli were unrelated to the motor task; and walking with mirror feedback, in which participants observed their own movements. Eleven healthy participants were considered. Application of independent component analysis to the EEG revealed three independent component clusters in premotor and parietal areas showing increased activity during walking with the adaptive VE training paradigm compared to the control conditions. During the interactive VE walking task, spectral power in the frequency ranges 8–12, 15–20, and 23–40 Hz was significantly (p ≤ 0.05) decreased. This power decrease is interpreted as a correlate of an active cortical area. Furthermore, activity in the premotor cortex revealed gait-cycle-related modulations significantly different (p ≤ 0.05) from baseline in the frequency range 23–40 Hz during walking. These modulations were significantly (p ≤ 0.05) reduced, depending on gait cycle phase, in the interactive VE walking task compared to the control conditions. We demonstrate that premotor and parietal areas show increased activity during walking with the adaptive VE training paradigm when compared to walking with mirror feedback and movement-unrelated feedback. Previous research has related a premotor-parietal network to motor planning and motor intention. We argue that movement-related interactive feedback enhances motor planning and motor intention. We hypothesize that this might improve gait recovery during rehabilitation.
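To make the band-power quantity concrete, the following minimal Python sketch integrates a one-sided periodogram over the three frequency ranges named above. It is an illustration under stated assumptions, not the study's analysis pipeline: the function names, the 128 Hz sampling rate, and the synthetic test signal are hypothetical, and a plain DFT periodogram stands in for whatever spectral estimator the authors used.

```python
import cmath
import math


def periodogram(signal, fs):
    """One-sided periodogram (power spectral density) of a real signal."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]        # remove the DC offset
    freqs, psd = [], []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(centered))
        p = abs(coeff) ** 2 / (fs * n)
        if 0 < k < n / 2:
            p *= 2                               # fold in negative frequencies
        freqs.append(k * fs / n)
        psd.append(p)
    return freqs, psd


def band_power(signal, fs, lo, hi):
    """Integrate the PSD over the closed band [lo, hi] in Hz."""
    freqs, psd = periodogram(signal, fs)
    df = fs / len(signal)                        # frequency resolution
    return sum(p for f, p in zip(freqs, psd) if lo <= f <= hi) * df


# Frequency ranges taken from the abstract.
BANDS = {"8-12 Hz": (8, 12), "15-20 Hz": (15, 20), "23-40 Hz": (23, 40)}

# Hypothetical test signal: strong 10 Hz plus weak 30 Hz component.
fs = 128
signal = [math.sin(2 * math.pi * 10 * i / fs)
          + 0.2 * math.sin(2 * math.pi * 30 * i / fs)
          for i in range(256)]

powers = {name: band_power(signal, fs, lo, hi)
          for name, (lo, hi) in BANDS.items()}
for name, value in powers.items():
    print(f"{name}: {value:.4f}")
```

A condition comparison like the one reported would then contrast such band powers between the VE task and the control conditions, per component cluster, with an appropriate statistical test.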

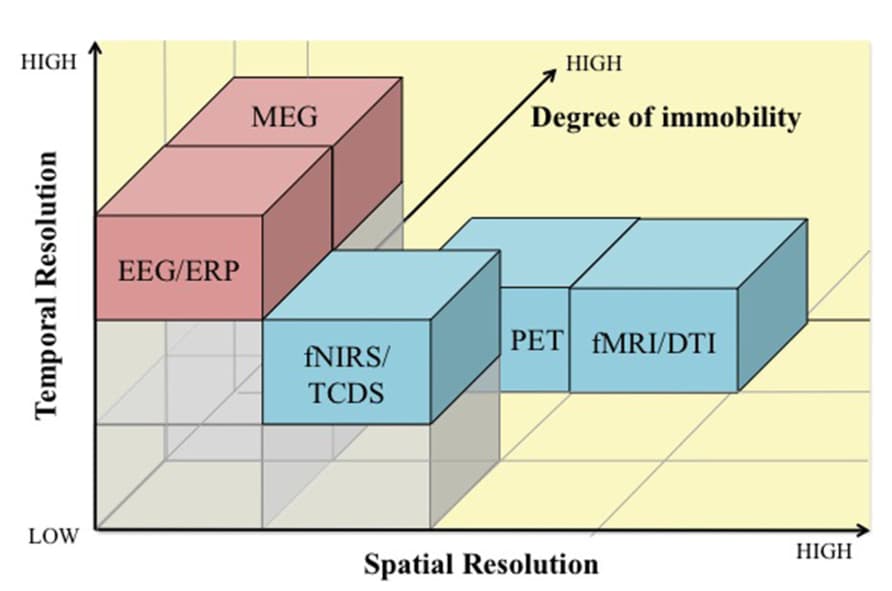

Neuroergonomics is an emerging science that is defined as the study of the human brain in relation to performance at work and in everyday settings. This paper provides a critical review of the neuroergonomic approach to evaluating physical and cognitive work, particularly in mobile settings. Neuroergonomics research employing mobile and immobile brain imaging techniques is discussed in the following areas of physical and cognitive work: (1) physical work parameters; (2) physical fatigue; (3) vigilance and mental fatigue; (4) training and neuroadaptive systems; and (5) assessment of concurrent physical and cognitive work. Finally, the integration of brain and body measurements in investigating workload and fatigue, in the context of mobile brain/body imaging (“MoBI”), is discussed.

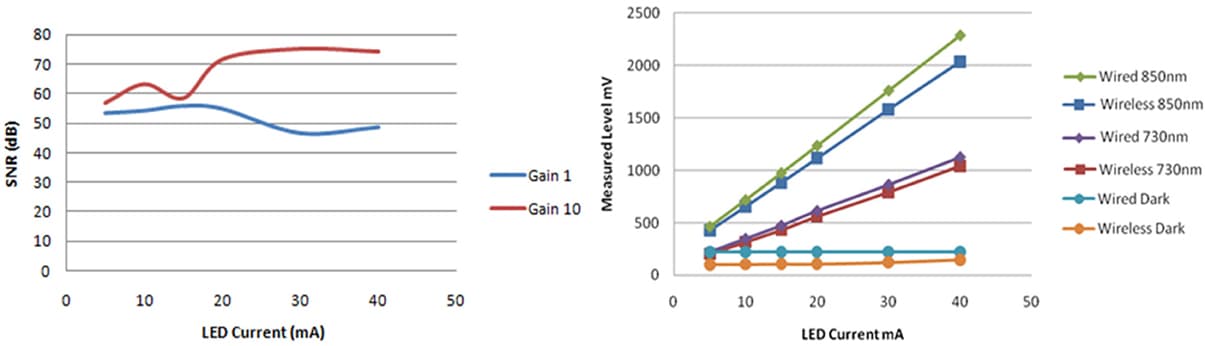

Functional near-infrared spectroscopy (fNIRS) is a non-invasive, safe, and portable optical neuroimaging method that can be used to assess brain dynamics during skill acquisition and performance of complex work and everyday tasks. In this paper we describe neuroergonomic studies that illustrate the use of fNIRS in the examination of training-related brain dynamics and human performance assessment. We describe results of studies investigating cognitive workload in air traffic controllers, acquisition of dual verbal-spatial working memory skill, and development of expertise in piloting unmanned vehicles. These studies used conventional fNIRS devices in which the participants were tethered to the device while seated at a workstation. Consistent with the aims of mobile brain imaging (MoBI), we also describe a compact, battery-operated wireless fNIRS system that performs with accuracy similar to that of other established fNIRS devices. Our results indicate that both wired and wireless fNIRS systems allow for the examination of brain function in naturalistic settings, and thus are suitable for reliable human performance monitoring and training assessment.

![Scalp projection, spatial location and power spectra of independent component clusters (A) Cluster A located in the supplementary motor area (premotor cortex); (B) Cluster B located in the posterior cortex (Brodmann area 7); (C) Cluster C located in the posterior cortex (Brodmann area 40). From left to right in each row: cluster average scalp projections; dipole locations of cluster ICs (blue spheres) and cluster centroids (red spheres) visualized in the MNI brain volume in coronal and sagittal views; PSD for all feedback conditions. For cluster B and C a clear difference in PSD between noFB and Gaze vs. both of the VE conditions in the mu and in the beta range can be observed [Naming: Ss, ICs—number of subjects (Ss) and Independent Components (ICs) in the cluster].](https://www.frontiersin.org/_rtmag/_next/image?url=https%3A%2F%2Fwww.frontiersin.org%2Ffiles%2FArticles%2F76607%2Ffnhum-08-00093-HTML%2Fimage_m%2Ffnhum-08-00093-g003.jpg&w=3840&q=75)