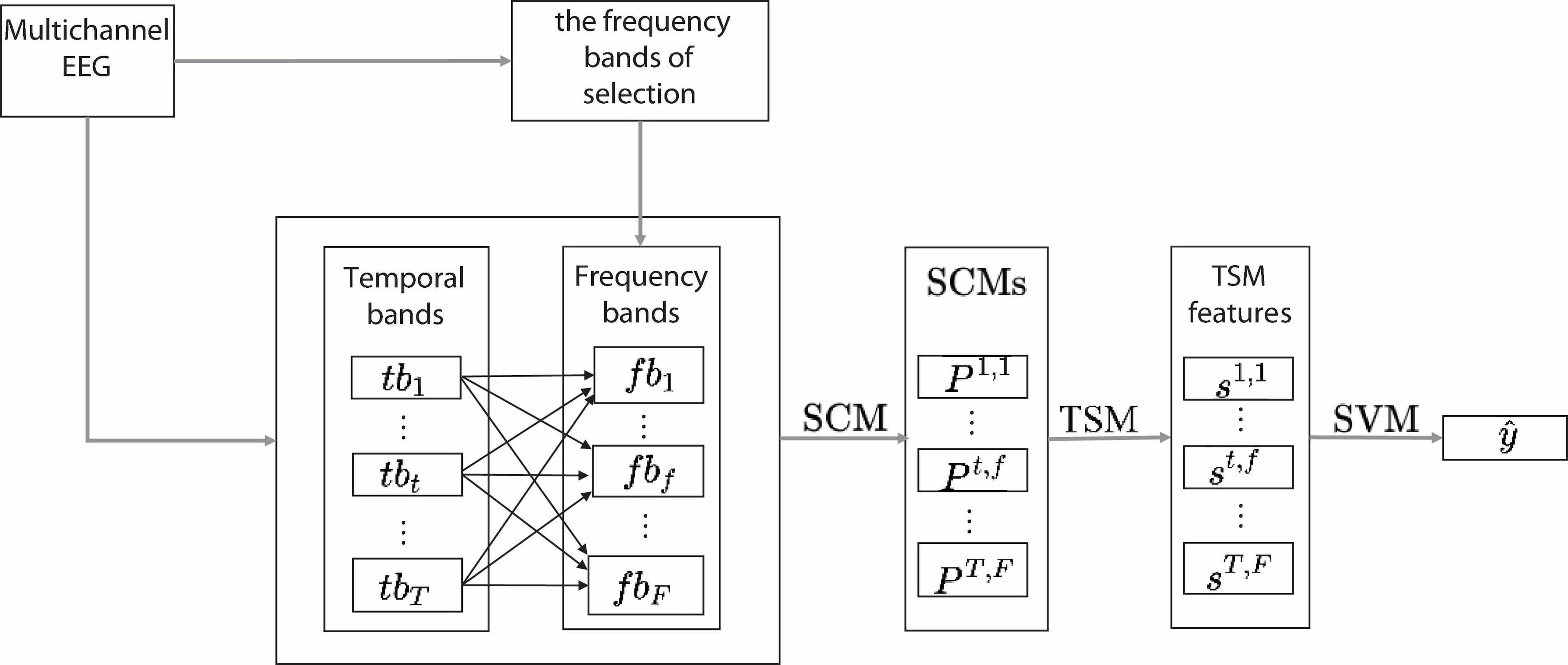

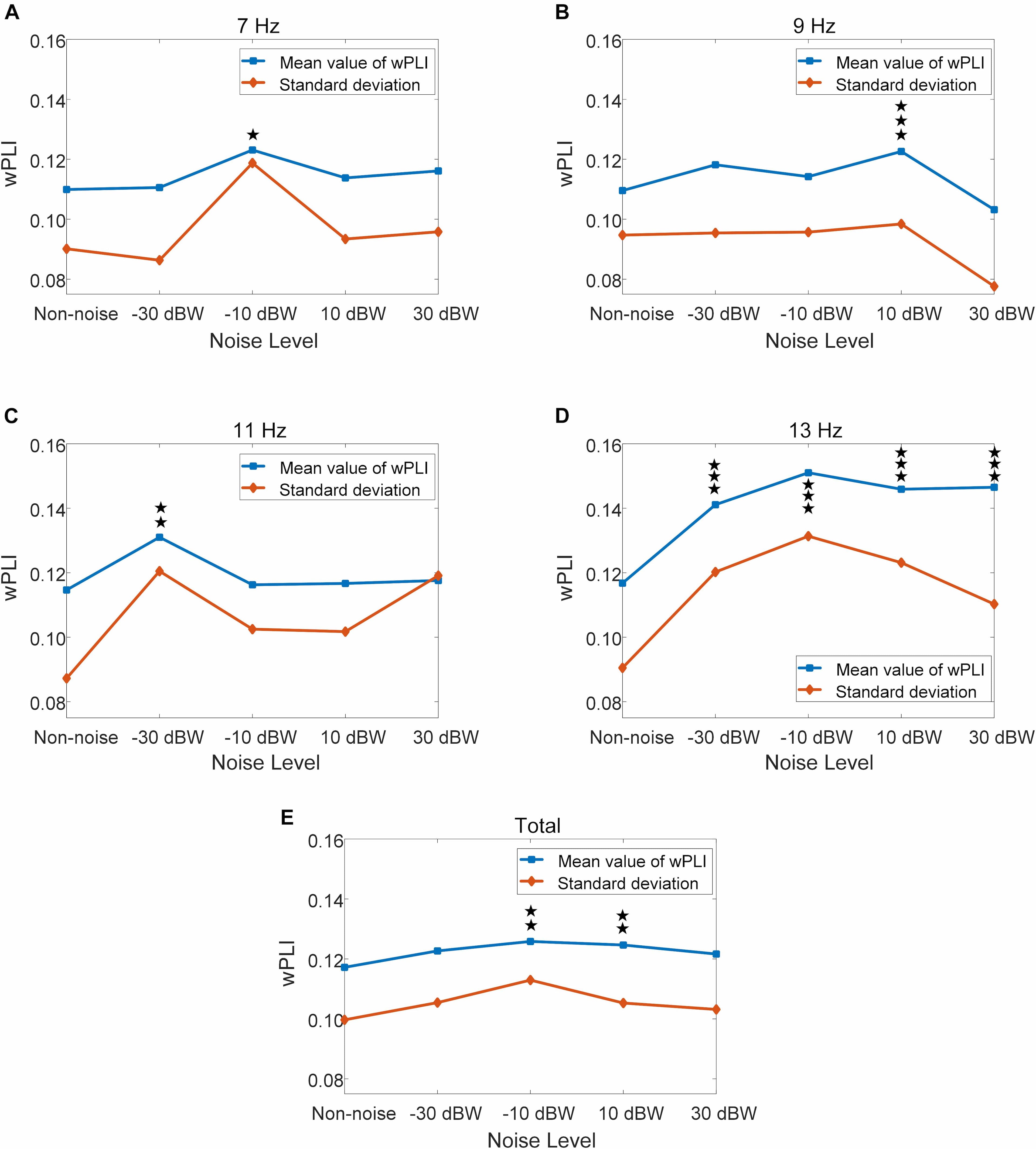

As a physiological process and high-level cognitive behavior, emotion is an important subarea in neuroscience research. Emotion recognition across subjects based on brain signals has attracted much attention. Due to individual differences across subjects and the low signal-to-noise ratio of EEG signals, the performance of conventional emotion recognition methods is relatively poor. In this paper, we propose a self-organized graph neural network (SOGNN) for cross-subject EEG emotion recognition. Unlike previous studies based on a pre-constructed and fixed graph structure, the graph structure of SOGNN is dynamically constructed by a self-organized module for each signal. To evaluate the cross-subject EEG emotion recognition performance of our model, leave-one-subject-out experiments are conducted on two public emotion recognition datasets, SEED and SEED-IV. The SOGNN achieves state-of-the-art emotion recognition performance. Moreover, we investigated how performance varies across models with different graph construction techniques or with features in different frequency bands. Furthermore, we visualized the graph structure learned by the proposed model and found that part of the structure coincided with previous neuroscience research. The experiments demonstrated the effectiveness of the proposed model for cross-subject EEG emotion recognition.
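To make the idea of a per-signal graph concrete, the following is a minimal sketch rather than the authors' implementation. It assumes the adjacency is derived from the similarity of projected channel features, normalized with a row-wise softmax and sparsified by keeping the strongest connections. The function name `self_organized_adjacency`, the `projection` weights, and the `top_k` parameter are all hypothetical, since the abstract does not specify them.

```python
import math

def self_organized_adjacency(features, projection, top_k):
    """Build a sparse adjacency matrix from one sample's channel features.

    features:   n_channels rows of n_features floats (one EEG sample)
    projection: n_features rows of n_hidden floats (hypothetical learnable weights)
    top_k:      number of strongest connections kept per channel (assumption)
    """
    # Project every channel into a hidden space and squash with tanh.
    hidden = [
        [math.tanh(sum(x * w for x, w in zip(row, column)))
         for column in zip(*projection)]
        for row in features
    ]
    n_channels = len(hidden)
    adjacency = []
    for i in range(n_channels):
        # Similarity of channel i to every channel (dot product in hidden space).
        scores = [sum(a * b for a, b in zip(hidden[i], hidden[j]))
                  for j in range(n_channels)]
        # Row-wise softmax, shifted by the maximum for numerical stability.
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Keep only the top_k strongest edges for this channel.
        ranked = sorted(range(n_channels), key=lambda j: weights[j], reverse=True)
        keep = set(ranked[:top_k])
        adjacency.append([weights[j] if j in keep else 0.0
                          for j in range(n_channels)])
    return adjacency

# Toy sample: 4 channels, 3 features per channel (made-up numbers).
features = [[0.2, 1.0, -0.5], [0.1, 0.9, -0.4], [-1.0, 0.3, 0.8], [0.7, -0.6, 0.2]]
projection = [[0.5, -0.3], [0.1, 0.8], [-0.7, 0.4]]
adjacency = self_organized_adjacency(features, projection, top_k=2)
```

Because the adjacency depends on `features`, each input signal yields its own graph, which is the contrast with a fixed, pre-constructed structure drawn in the abstract.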

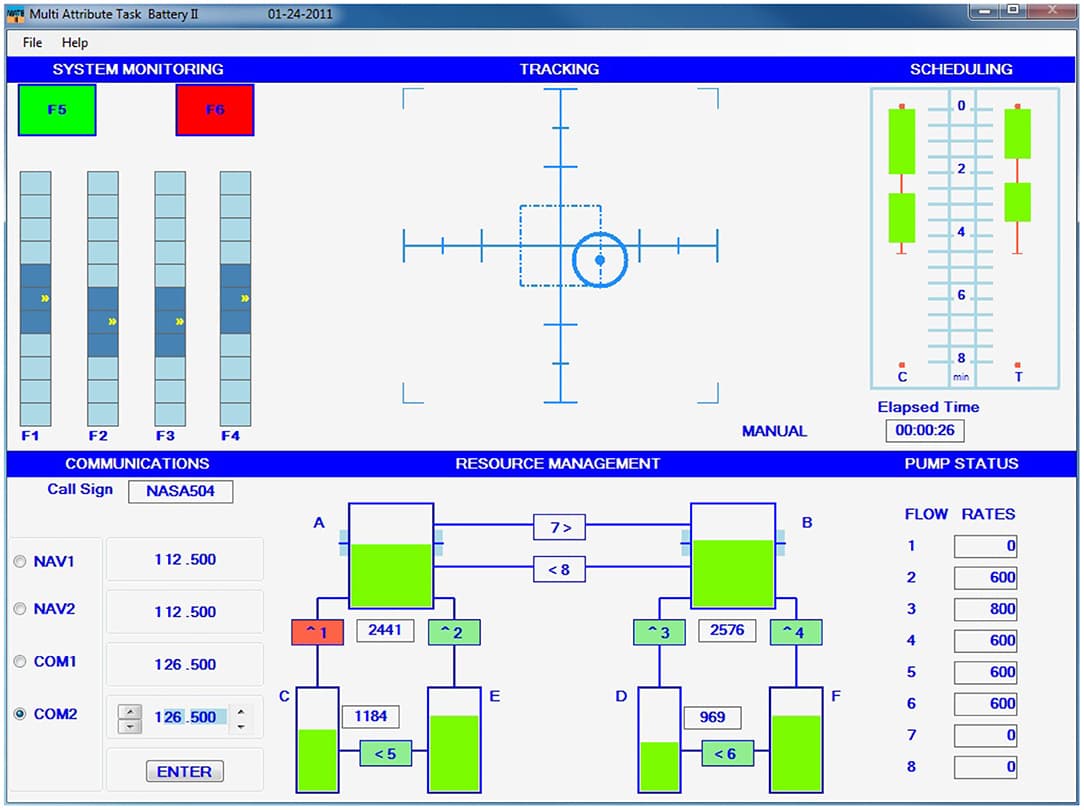

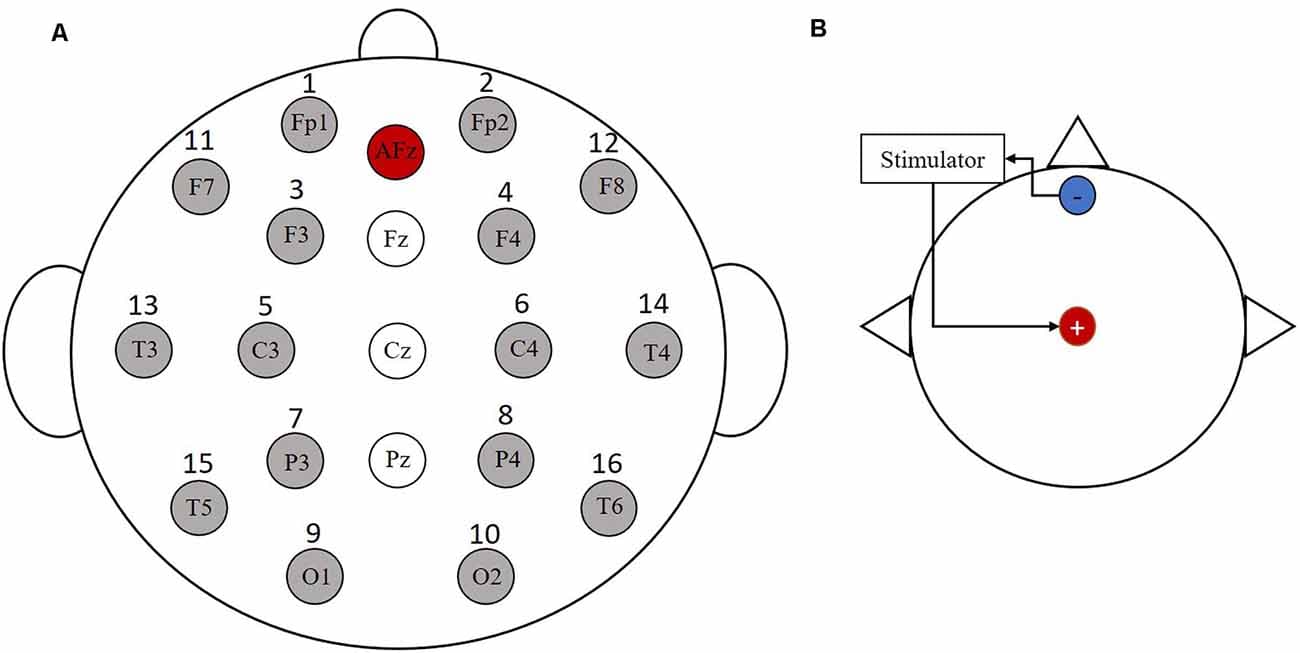

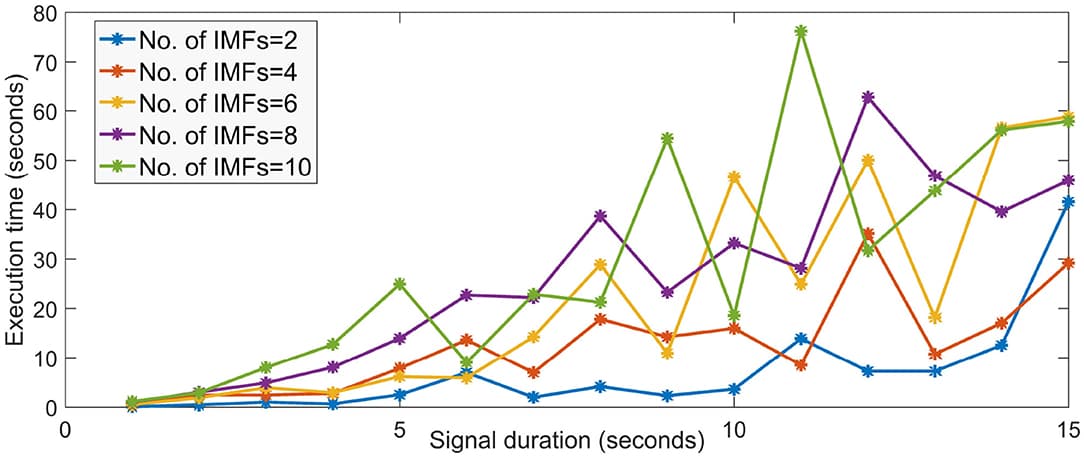

Recently, due to the emergence of mobile electroencephalography (EEG) devices, assessment of mental workload in highly ecological settings has gained popularity. In such settings, however, motion and other common artifacts have been shown to severely hamper signal quality and to degrade mental workload assessment performance. Here, we show that classical EEG enhancement algorithms, conventionally developed to remove ocular and muscle artifacts, are not optimal in settings where participant movement (e.g., walking or running) is expected. As such, an adaptive filter is proposed that relies on an accelerometer-based reference signal. We show that when it is combined with classical algorithms, accurate mental workload assessment is achieved. To test the proposed algorithm, data from 48 participants were collected as they performed the Revised Multi-Attribute Task Battery-II (MATB-II) under low and high workload settings, either while walking/jogging on a treadmill or while using a stationary exercise bicycle. Accuracy as high as 95% could be achieved with a random forest-based mental workload classifier with ambulant users. Moreover, an increase in gamma activity was found in the parietal cortex, suggesting a connection between sensorimotor integration, attention, and workload in ambulant users.
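As an illustration of adaptive filtering with an accelerometer reference, here is a minimal sketch of a least-mean-squares (LMS) noise canceller on synthetic data. The abstract does not state which adaptive algorithm is used, so LMS is an assumption, as are the function name `lms_motion_cancel`, the tap count, the step size, and the toy signals.

```python
import math

def lms_motion_cancel(eeg, reference, n_taps=4, step=0.05):
    """Subtract the part of `eeg` that is linearly predictable from `reference`.

    eeg:       contaminated EEG samples (one channel)
    reference: accelerometer samples recorded at the same rate
    Returns the cleaned signal (the LMS error sequence).
    """
    weights = [0.0] * n_taps
    cleaned = []
    for t in range(len(eeg)):
        # Most recent n_taps reference samples, zero-padded at the start.
        window = [reference[t - k] if t - k >= 0 else 0.0 for k in range(n_taps)]
        artifact_estimate = sum(w * x for w, x in zip(weights, window))
        error = eeg[t] - artifact_estimate  # error doubles as the cleaned sample
        # LMS update: move the weights along the error-weighted reference.
        weights = [w + step * error * x for w, x in zip(weights, window)]
        cleaned.append(error)
    return cleaned

def mean_power(values):
    return sum(v * v for v in values) / len(values)

# Synthetic example (all values are made up for illustration).
fs, n = 100, 2000
neural = [0.1 * math.sin(2 * math.pi * 10 * t / fs) for t in range(n)]  # 10 Hz rhythm
accel = [math.sin(2 * math.pi * 2 * t / fs) for t in range(n)]          # 2 Hz gait
artifact = [0.8 * a for a in accel]
eeg = [s + a for s, a in zip(neural, artifact)]

cleaned = lms_motion_cancel(eeg, accel)
half = n // 2  # compare after the filter has had time to adapt
residual_power = mean_power([c - s for c, s in zip(cleaned[half:], neural[half:])])
artifact_power = mean_power(artifact[half:])
```

In this toy setting the residual error against the clean 10 Hz component ends up far below the artifact power, because the gait artifact is a scaled copy of the reference. Real motion artifacts are less tidy, which is consistent with the abstract's point that the adaptive filter works best in combination with classical enhancement algorithms.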

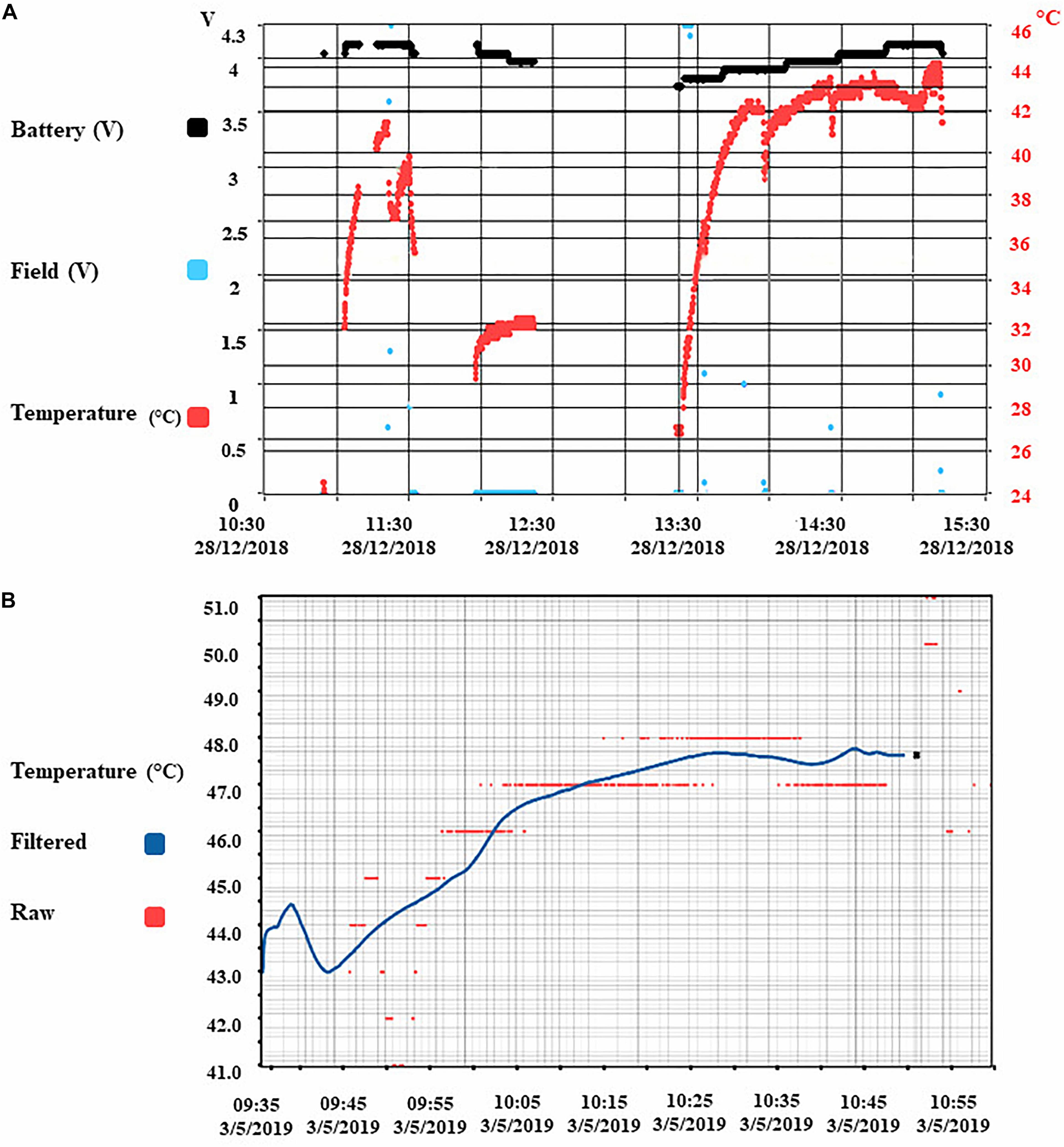

Recording and manipulating neuronal ensemble activity is a key requirement in advanced neuromodulatory and behavior studies. Devices capable of both recording and manipulating neuronal activity, known as brain-computer interfaces (BCIs), should ideally operate un-tethered and allow chronic longitudinal manipulations in the freely moving animal. In this study, we designed a new intracortical BCI capable of telemetric recording and stimulation of local gray and white matter of the visual neural circuit after irradiation exposure. To increase the translational relevance, we put forward a Göttingen minipig model. The animal was stereotactically irradiated at the level of the visual cortex after the target was defined on a fused cerebral MRI and CT scan. A fully implantable neural telemetry system consisting of a 64-channel intracortical multielectrode array, a telemetry capsule, and an inductively rechargeable battery was then implanted into the visual cortex to record and manipulate local field potentials and multi-unit activity. The un-tethered BCI remained functional for 3 months in terms of telemetric radio-communication, inductive battery charging, and device biocompatibility. Finally, we could reliably record the local signature of sub- and suprathreshold neuronal activity in the visual cortex with high bandwidth and without complications. Wireless induction charging, combined with the entirely implantable design, the rather high recording bandwidth, and the ability to record and stimulate simultaneously, yields a wireless BCI capable of long-term un-tethered real-time communication for causal preclinical circuit-based closed-loop interventions.

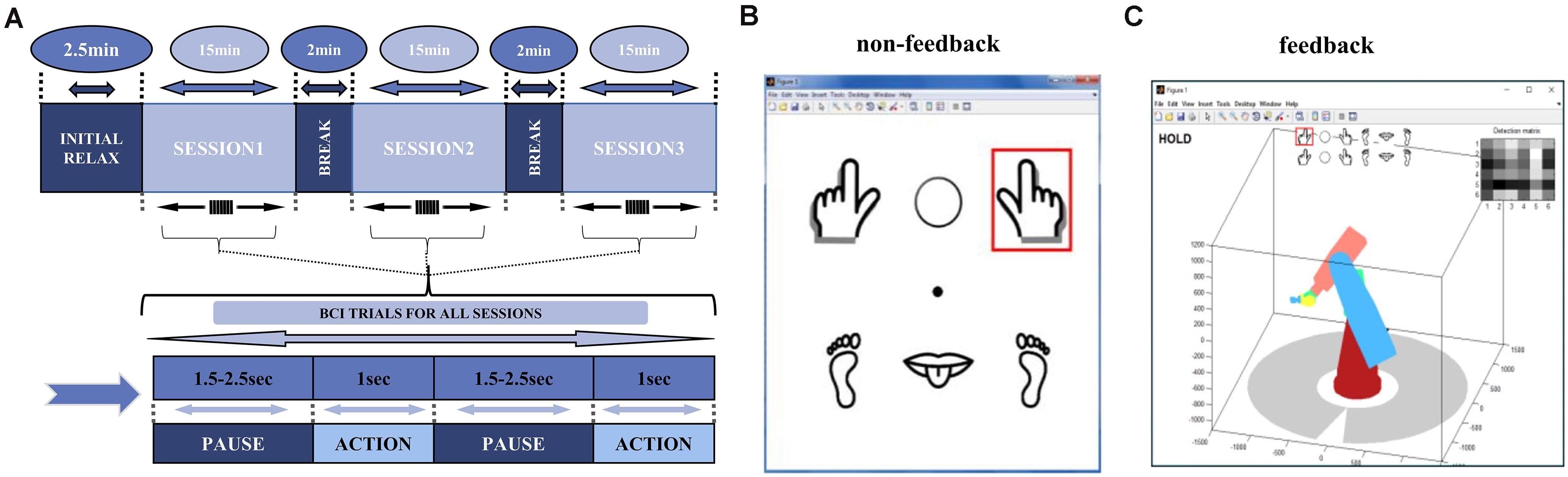

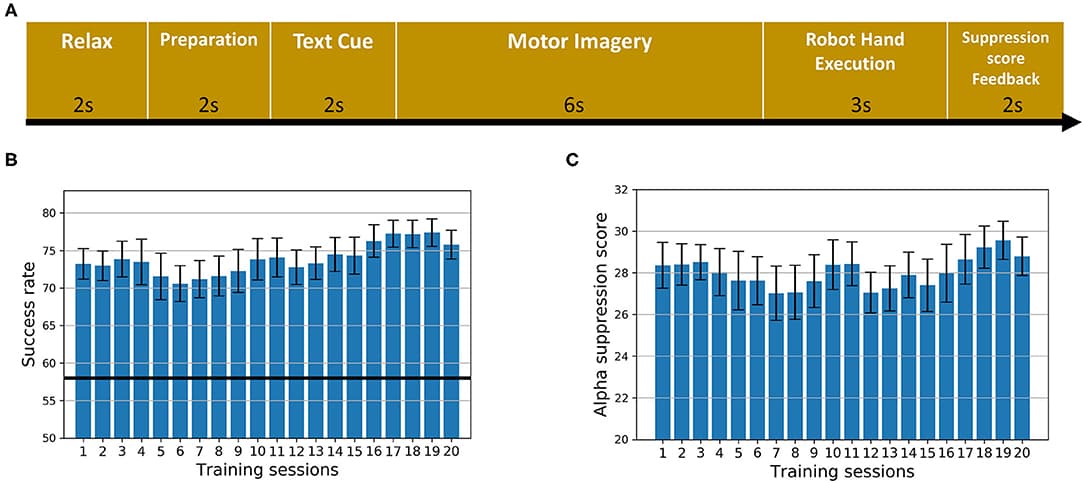

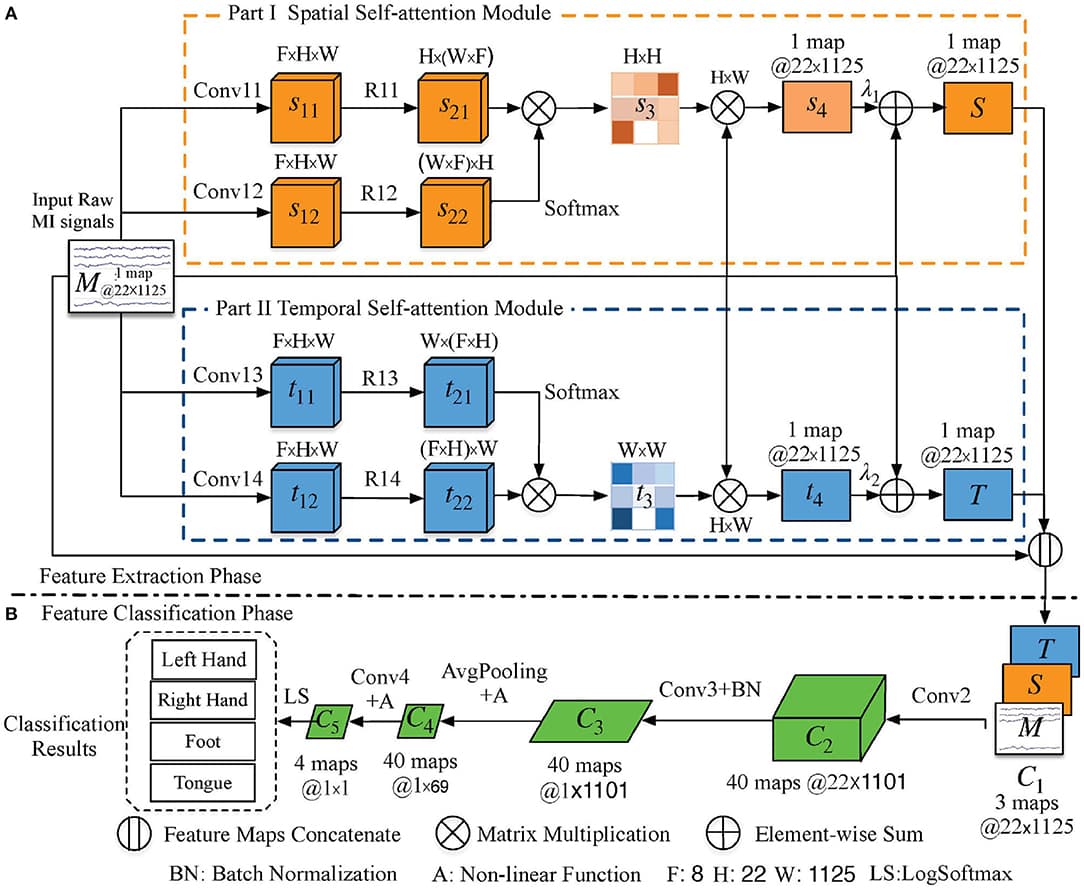

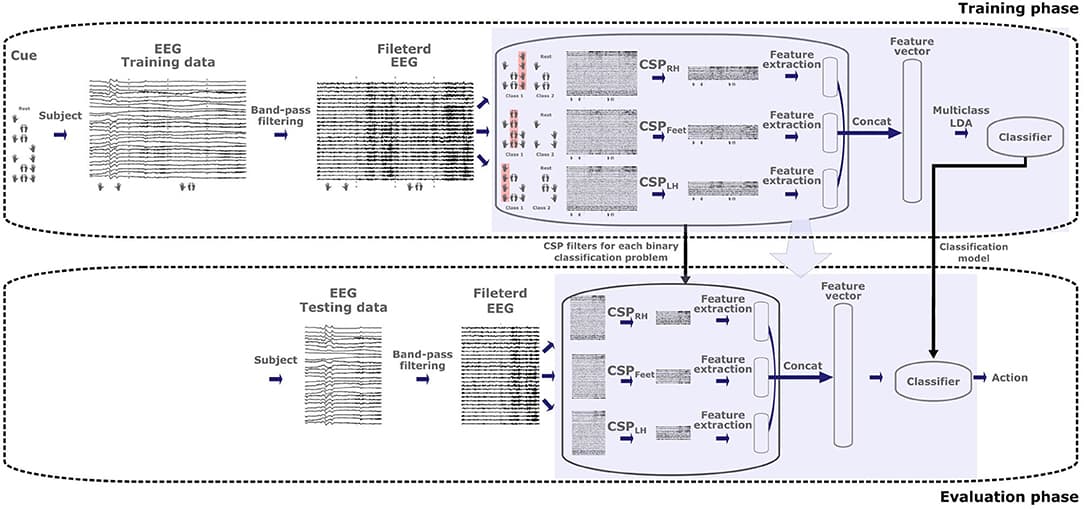

Motor imagery (MI) electroencephalography (EEG) classification is an important part of the brain-computer interface (BCI), allowing people with mobility problems to communicate with the outside world via assistive devices. However, EEG decoding is a challenging task because of its complexity, dynamic nature, and low signal-to-noise ratio. Designing an end-to-end framework that fully extracts the high-level features of EEG signals remains a challenge. In this study, we present a parallel spatial–temporal self-attention-based convolutional neural network for four-class MI EEG signal classification. This study is the first to define a new spatial–temporal representation of raw EEG signals that uses the self-attention mechanism to extract distinguishable spatial–temporal features. Specifically, we use the spatial self-attention module to capture the spatial dependencies between the channels of MI EEG signals. This module updates each channel by aggregating features over all channels with a weighted summation, thus improving the classification accuracy and eliminating the artifacts caused by manual channel selection. Furthermore, the temporal self-attention module encodes the global temporal information into features for each sampling time step, so that the high-level temporal features of the MI EEG signals can be extracted in the time domain. Quantitative analysis shows that our method outperforms state-of-the-art methods for intra-subject and inter-subject classification, demonstrating its robustness and effectiveness. For the qualitative analysis, we perform a visual inspection of the new spatial–temporal representation estimated from the learned architecture. Finally, the proposed method is used to control drones based on EEG signals, verifying its feasibility in real-time applications.
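The channel update performed by the spatial self-attention module ("aggregating features over all channels with a weighted summation") can be sketched as plain scaled dot-product attention across channels. This is a simplified illustration and not the authors' network. It assumes queries, keys, and values are the raw channel signals themselves, with no learned projections, and the function name `spatial_self_attention` is hypothetical.

```python
import math

def spatial_self_attention(signal):
    """Update each EEG channel as an attention-weighted sum over all channels.

    signal: n_channels rows of n_samples floats.
    Returns (updated_signal, attention), where attention[i][j] is the weight
    channel i places on channel j.
    """
    n_samples = len(signal[0])
    scale = math.sqrt(n_samples)  # standard dot-product scaling
    attention, updated = [], []
    for query in signal:
        # Similarity between this channel and every channel.
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in signal]
        # Softmax over channels, shifted by the maximum for stability.
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        attention.append(weights)
        # Weighted summation over all channels at every time step.
        updated.append([
            sum(w * channel[t] for w, channel in zip(weights, signal))
            for t in range(n_samples)
        ])
    return updated, attention

# Toy input: 3 channels, 4 time samples (made-up numbers).
signal = [[0.5, 0.1, -0.2, 0.3], [0.4, 0.2, -0.1, 0.2], [-0.3, 0.6, 0.1, -0.5]]
updated, attention = spatial_self_attention(signal)
```

A temporal variant under the same assumptions would apply the identical computation to the transposed signal, so that each time step attends to all other time steps.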

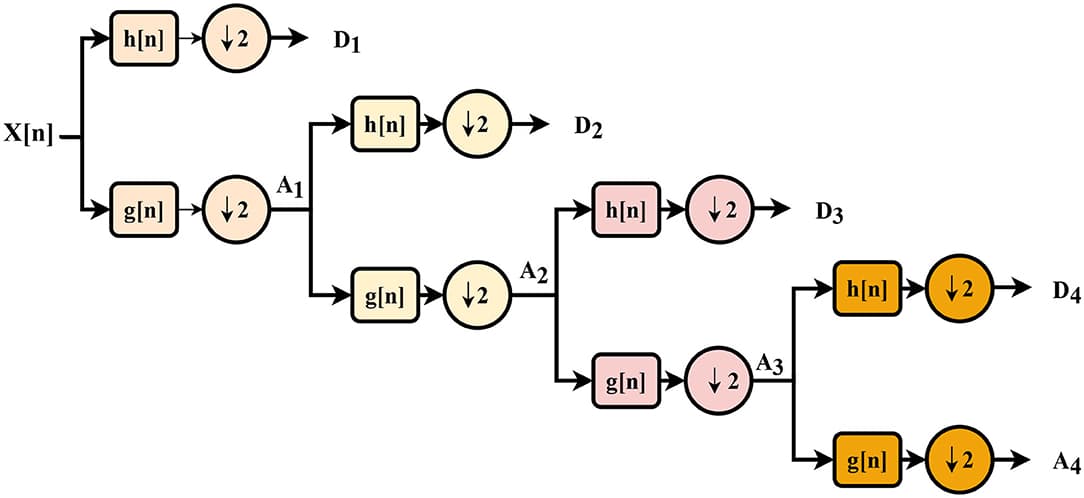

Brain-computer interface (BCI) multi-modal fusion has the potential to generate multiple commands in a highly reliable manner by alleviating the drawbacks associated with a single modality. In the present work, a hybrid EEG-fNIRS BCI system, achieved through a fusion of concurrently recorded electroencephalography (EEG) and functional near-infrared spectroscopy (fNIRS) signals, is used to overcome the limitations of uni-modality and to achieve higher task classification accuracy. Although the hybrid approach enhances the performance of the system, the improvements are still modest due to the lack of computational approaches for fusing the two modalities. To overcome this, a novel approach is proposed using multi-resolution singular value decomposition (MSVD) to achieve system- and feature-based fusion. The two approaches, based on different feature sets, are compared using the KNN and Tree classifiers. The results obtained on multiple datasets show that the proposed approach can effectively fuse both modalities and improve the classification accuracy.
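For readers unfamiliar with MSVD, the sketch below shows one decomposition level for a 1-D signal: the samples are arranged into a 2-row matrix, and the matrix is rotated onto the eigenvectors of its 2x2 scatter matrix, giving an approximation row and a detail row. This follows the commonly described 1-D formulation and is an assumption about the variant used here. The abstract does not say how the EEG and fNIRS coefficients are combined afterwards, so no fusion step is shown. The function name `msvd_level` is hypothetical.

```python
import math

def msvd_level(signal):
    """One level of 1-D multi-resolution SVD.

    Returns (approximation, detail), each half the length of `signal`.
    """
    if len(signal) % 2:
        raise ValueError("signal length must be even")
    top, bottom = signal[0::2], signal[1::2]  # rows of the 2 x (N/2) matrix
    # Entries of the symmetric 2x2 scatter matrix [[a, b], [b, c]].
    a = sum(x * x for x in top)
    c = sum(y * y for y in bottom)
    b = sum(x * y for x, y in zip(top, bottom))
    # Closed-form rotation angle of the principal eigenvector.
    theta = 0.5 * math.atan2(2.0 * b, a - c)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    # Project onto the principal axis (approximation) and its orthogonal (detail).
    approximation = [cos_t * x + sin_t * y for x, y in zip(top, bottom)]
    detail = [-sin_t * x + cos_t * y for x, y in zip(top, bottom)]
    return approximation, detail

def energy(values):
    return sum(v * v for v in values)

# Toy signal: slow oscillation plus a small alternating component (made up).
signal = [math.sin(0.3 * t) + 0.05 * (-1) ** t for t in range(64)]
approximation, detail = msvd_level(signal)
```

The transform is an orthogonal rotation, so the total energy is preserved and the approximation carries at least as much energy as the detail. Applying `msvd_level` again to `approximation` gives the next resolution level.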

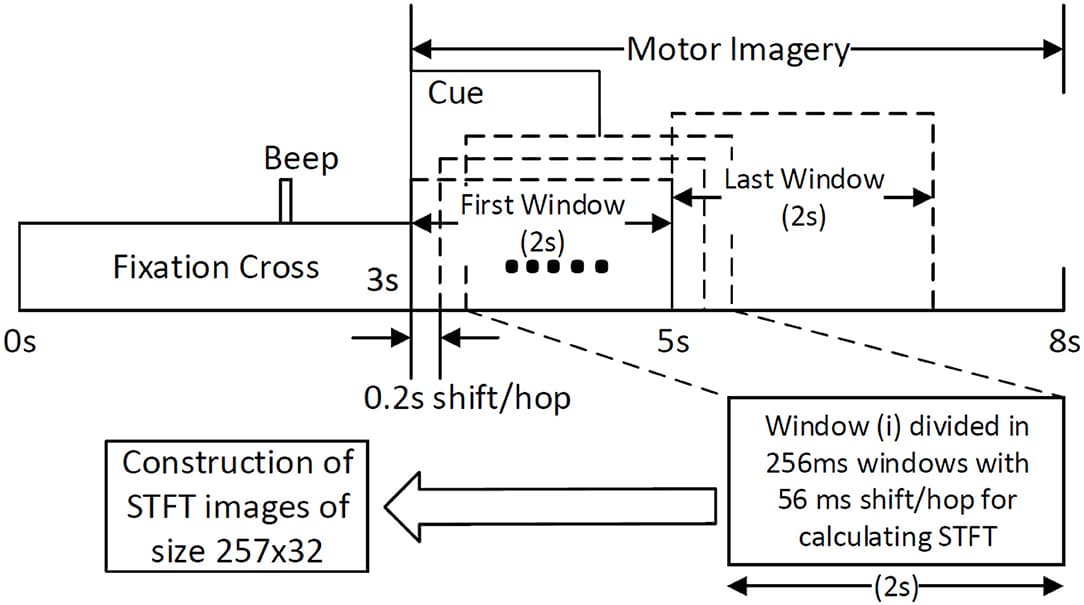

Inter-subject transfer learning is a long-standing problem in brain-computer interfaces (BCIs) and has not yet been fully realized due to high inter-subject variability in the brain signals related to motor imagery (MI). The recent success of deep learning-based algorithms in classifying different brain signals warrants further exploration to determine whether inter-subject continuous decoding of MI signals is feasible for providing contingent neurofeedback, which is important for neurorehabilitative BCI designs. In this paper, we show how a convolutional neural network (CNN) based deep learning framework can be used for inter-subject continuous decoding of MI-related electroencephalographic (EEG) signals using the novel concept of Mega Blocks for adapting the network against inter-subject variabilities. A Mega Block can repeat a specific architectural block, such as one or more convolutional layers, several times within a single Mega Block. The parameters of such Mega Blocks can be optimized using Bayesian hyperparameter optimization. The results, obtained on the publicly available BCI competition IV-2b dataset, yield an average inter-subject continuous decoding accuracy of 71.49% (κ = 0.42) and 70.84% (κ = 0.42) for two different training methods, adaptive moment estimation (Adam) and stochastic gradient descent with momentum (SGDM), respectively, in 7 out of 9 subjects. Our results show for the first time that it is feasible to use CNN-based architectures for inter-subject continuous decoding with a sufficient level of accuracy for developing calibration-free MI-BCIs for practical purposes.
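The κ values reported alongside the accuracies are Cohen's kappa, which corrects the observed agreement for the agreement expected by chance. A minimal sketch of the computation from a confusion matrix follows. The confusion matrix is invented for illustration and is not taken from the paper.

```python
def cohen_kappa(confusion):
    """Cohen's kappa from a square confusion matrix (rows: true, columns: predicted)."""
    total = sum(sum(row) for row in confusion)
    # Observed agreement: fraction of trials on the diagonal.
    observed = sum(confusion[i][i] for i in range(len(confusion))) / total
    # Chance agreement: product of the matching row and column marginals.
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(col) for col in zip(*confusion)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    return (observed - expected) / (1.0 - expected)

# Hypothetical two-class result: 70 of 100 trials correct, balanced classes.
confusion = [[40, 10],
             [20, 30]]
kappa = cohen_kappa(confusion)  # approximately 0.4
```

With two balanced classes the chance agreement is 0.5, so an accuracy of about 70% corresponds to a kappa of about 0.4, which is in line with the magnitudes reported above.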