
ORIGINAL RESEARCH article

Front. Virtual Real., 30 January 2025

Sec. Virtual Reality and Human Behaviour

Volume 6 - 2025 | https://doi.org/10.3389/frvir.2025.1451273

This article is part of the Research Topic Ethics in the Metaverse.

Researchers have identified various ethical issues related to the use of VR, such as consent, privacy, and harm. It is important to address these issues because VR affects many industries, including communications, education, and entertainment. Existing ethical frameworks may be the closest tools we have for curbing some of the ethical challenges the technology presents. Recent work names ethical concerns related to VR, such as privacy and accessibility; however, fewer studies discuss frameworks that can guide responsible VR use. Such work also glosses over what various audiences think about ethical issues, information that could in turn help determine which existing frameworks can guide responsible use. To address this gap, we examine current literature and government documents and analyze 300 Amazon reviews of three top-rated VR products to see whether, and which, ethical concerns various audiences identify. That is, we ask two questions: 1) Are three specific types of audiences naming ethical VR issues, and if so, what are the issues? 2) What frameworks could potentially guide users toward responsible use? We find that users are concerned about ethical issues and that three frameworks could guide us toward more responsible VR use: 1) Institutional Review Board (IRB) frameworks, 2) a care ethics framework, and 3) co-created, living ethical codes. We further draw on these three frameworks to offer a new ethical synthesis framework (ESF) that could guide responsible use.

Even though virtual reality, or VR, has been around for more than 50 years, more consumers are using the technology today than in the past, opening a new mainstream digital frontier that raises ethical questions (Flattery, 2021; Frenkel, 2022; Lanier, 2024). At this point in VR's evolution, identifying ethical concerns can provide an important opening for course correction if ethical considerations are missing. Moreover, given the profitability and growth of VR in consumer markets (VR, 2023), it is important to raise questions about ethical use to protect the rights of end users (Dremliuga et al., 2020; Lemley and Volokh, 2018). Perhaps most importantly, researchers have noted that ethical thinking applied to newer and emerging technologies, like VR, can often serve as an important precursor to the development of laws and policies (Carrillo, 2020).

Our main argument is that there is still no consensus on what constitutes responsible VR use, despite the existence of well-thought-out ethical frameworks that could begin to shape it. One reason may be that comparatively little work has addressed the ethical issues of VR relative to other areas of VR research, for example, its potential to change current industry dynamics (Adams et al., 2019; Flattery, 2021; Hatfield et al., 2022). As a result, less is also known about which frameworks would be appropriate guides for promoting responsible VR use.

Responsible use is also hard to define without considering the viewpoints of involved audiences and stakeholders, a topic that has received less attention than other VR areas (for an exception, see Ahmadpour et al., 2020). Whether, and which, ethical concerns are raised by contemporary stakeholders, or those currently operating in the VR ecosystem, can help orient us toward responsible use guidelines. Consequently, we ask two questions here: 1) Are contemporary stakeholders (researchers, government officials, consumers) concerned about ethical VR issues, and if so, what are the issues they name? 2) What frameworks could potentially guide users toward responsible use?

To answer these two questions, we 1) draw on current, select VR and ethics research and examine what the researchers say, and 2) examine U.S. Congressional hearings to ascertain whether they mention the ethical implications of VR and, if so, what these are. The U.S. case is considered here given its prevalence in shaping VR consumer trends and global market share (Byrne, 2023). We also focus on congressional hearings because they highlight a government perspective and because technological VR development is influenced by the government (Lanier, 2024). We also incorporate a review of 300 Amazon reviews to determine whether, and which, ethical concerns consumers raised in 2024. Unlike other studies, we discuss these three specific types of stakeholder perspectives and recommend three frameworks that can promote the responsible use of VR; we also introduce a new framework, the ethical synthesis framework (ESF), which focuses on recommendations common to all three. Please note that this paper does not exhaustively examine the policies, regulations, or laws that govern the use of VR, although some of this analysis is included here.

VR is a class of computer-generated technologies with highly immersive qualities that create computer-aided artificial realities (Fox et al., 2009). To experience VR, users typically wear a head-mounted display (HMD), a device that resembles half a helmet and is connected to a computer interface (Virtual Reality, 2017). With tracking software built into the VR hardware, alongside haptic or touch control technology, users can navigate a computer-programmed spatial environment that adjusts to their head movements (Bailenson, 2018). The term "VR" itself was coined by Jaron Lanier, who wanted to use it to describe a scenario in which a user is immersed and able to enter another dimension (Virtual Reality, 2017).

VR has been around for more than half a century. Ivan Sutherland first popularized VR in 1965 with the aim of creating a virtual world that looked, sounded, and felt like our reality but transported the user to another environment (Mazuryk and Gervautz, 1999).

Millions of consumers use VR: there are currently an estimated 171 million active VR users worldwide (VR, 2023). VR is also a billion-dollar industry and growing; market estimates predict that revenue generated by international VR users will reach nearly $450 billion by 2030 (Byrne, 2023).

VR offers unique experiences. To characterize what is distinctive about VR experiences, Jeremy Bailenson developed the DICE framework (Bailenson, 2018). According to DICE, VR experiences can be described as dangerous, impossible, counterfactual, and expensive. The framework takes real life as a counterpoint: in VR you can jump into a volcano (dangerous), spend your entire life on a faraway purple planet (impossible), go back to the time when dinosaurs were alive and experience what would have happened if they had not gone extinct (counterfactual), and build an entire civilization out of gold (expensive).

VR can provide detailed information about complex issues (Seymour, 2008) and has the capacity to strengthen emotional ties with individuals who live far away or in different eras (Jerald, 2016). It also makes it possible to depict events like natural disasters and war realistically, which is extremely difficult to do in other media (Haar, 2005; Lele, 2013).

In sum, the current VR ecosystem is shaped by three factors: VR has existed for decades, yet there is still little guidance on what constitutes ethical or responsible use; it has millions of users, a number that is likely growing; and it can create uniquely compelling, realistic experiences for individuals.

VR uses span entertainment, technology, health, medicine, and education (Virtual Reality, 2017). Examples include, but are not limited to, entertainment (Abdelmaged, 2021; Jia and Chen, 2017), training and education (Abdelmaged, 2021; Kuna et al., 2023), and medicine (Chirico et al., 2016; Jiang et al., 2022). The entertainment industry alone is one of the largest consumers of VR headsets, with nearly seven out of 10 headsets purchased by gamers (Byrne, 2023). Within the medical field, VR has been used to treat substance use disorders and post-traumatic stress disorder (PTSD) (Emmelkamp and Meyerbröker, 2021). It has been used to teach astronauts how to handle hazardous materials in orbit and to support their mental health (Holt, 2023; Yu, 2005), to instruct future doctors on complex surgical techniques (Jiang et al., 2022), and to instruct healthcare providers on how to divert burn patients' attention during surgery (Hoffman et al., 2014). It has also been used to create precise images of the inside of the human body. For example, the National Institutes of Health has developed a "virtual bronchoscopy" experience that allows people to see the entire human airway structure (Virtual Reality, 2017). In another example, neurosurgeons used VR to pinpoint where brain tumors are located (Virtual Reality, 2017). VR also has uses in real estate, healthcare, tourism, and architecture (Ashgan et al., 2023; Byrne, 2023).

VR has also been used to test ethical scenarios. For example, researchers have simulated patients demanding antibiotics in VR to study whether doctors would refuse them so as not to promote bacterial resistance (Pan et al., 2016). In another study, researchers noted that conducting suicide research in VR could be a better alternative to conducting it in real life, given that real-life interventions could present cues that activate suicidal thoughts (Huang et al., 2021). However, how to prevent such issues from arising within VR itself remains unclear.

VR is being used in creative and novel ways to serve a wide range of users, including surgeons and astronauts. However, each of these uses raises ethical questions that are frequently left unanswered. For example, in some of the research cited above, the focus is on the potential of the technology and its applications, which may seem impressive at first glance, but there is little or no discussion of the ethical aspects of these applications.

VR has been used by researchers to study positive social behaviors like empathy. In one study, for instance, researchers found that users who had a VR experience about homelessness were more likely to feel empathy for homeless people even 8 weeks after the study ended (Herrera et al., 2018). In another study, students were sent into a VR simulation designed to help them understand the perspective of people who use wheelchairs or are visually impaired; the researchers found that students felt more empathy after the VR experience than before (Cook et al., 2024). In a recent meta-analysis, researchers examined 44 publications on the relationship between virtual reality, disability, and empathy (Trevena et al., 2024). All of the publications identified perspective taking as the mechanism by which empathy for another person emerges, noting that virtual reality in particular enables perspective taking. Of the 44 studies examined, 36 concluded that select VR experiences could lead to prosocial outcomes.

VR has also shown potential to inspire empathy for the environment. In one study, researchers placed new users in a simulated VR ocean environment and later found that they showed empathy for the dying corals (Raja and Carrico, 2021). Another study examined ageism: participants entered a VR environment in which they were assigned either young or old digital avatars, and those assigned older avatars expressed empathy for older people. The researchers concluded that these participants showed compassion in part because their avatars were coping with age-related issues in the VR environment (Yee and Bailenson, 2006). These results provide insight into the connection between prosocial behavior and virtual reality; notably, the studies were also carried out in academic institutions, which are bound by ethical guidelines for research conduct that may serve as a guide for responsible use. Indeed, the Institutional Review Board (IRB) framework is one of the ethical frameworks we discuss in a later section as a possible model for responsible use.

VR development is being led by companies. Meta, the company led by Mark Zuckerberg, has spent more than a billion dollars building its VR presence (Frenkel, 2022). The company, then called Facebook, paid $2 billion to acquire Oculus, a maker of VR headsets, effectively signaling its belief that VR will shape the future (Frenkel, 2022). The term "meta" is of particular importance in VR because one interpretation of VR is what technology companies and creators call the "metaverse," an artificial virtual world in which people occupy a simulated reality and conduct their business (Metz, 2021). Companies, most notably Meta, are therefore racing to establish and dominate this market, much as tech companies sought to capitalize on the consumer adoption of computers and mobile technology (Metz, 2021). Zuckerberg's decision to rename his company from Facebook to Meta further clarifies his vision of what VR is and can become (Metz, 2021).

The government is another VR stakeholder as it is often at the forefront of efforts to adapt the technology to its uses. This concept is what scientists call “smart government,” which alludes to harnessing the potential of relatively newer technology like VR to improve the lives of citizens and make government work more efficiently (Baldauf et al., 2023).

To close this section: while companies drive the development of VR, it is consumers whose demand and buy-in will be critical to sustaining the VR ecosystem (Pizzi et al., 2019). Because companies' concerns are often profit-driven, it may be incumbent on users to demand ethical protections, which is why it is important to consider whether ethical concerns are relevant to today's VR consumers.

To examine the various components of VR ethical concerns, we employed three data collection techniques. This methodical approach, which researchers often refer to as "triangulation," was a crucial component of the research design. Researchers define triangulation as the process of looking at the same issue from multiple angles or methods to improve the validity of findings (Oliver-Hoyo and Allen, 2006). To triangulate the naming of VR ethical issues and offer a more thorough understanding, we used three approaches: a literature review, an exploration of government documents, and an examination of 300 user reviews of VR technology. Together, the distinct insights from each data collection effort provided a more comprehensive and nuanced picture of VR ethics and possible solutions.

Consistent with the logic deployed by other researchers (Adams et al., 2019; Nelson, 2011), we define ethical issues broadly as those that concern moral principles, justice, and questions of right and wrong about how we should treat others and what our obligations are in various situations. Therefore, even though some of the issues raised here, like the physical effects of technology, might not seem ethical at first, they become ethical issues under this definition: if a user names physical harm, for example, it suggests the user perceives the company as having a duty to reduce harm. We also note that our coding of ethical issues is broad and frequently blurs the lines between concerns about the headset itself and concerns about the experiences accessed through it. Although future research can explore these differences, our goal in this article is to provide a comprehensive map of ethical concerns, and we highlight that, from the user's standpoint, these lines frequently blend together.

The VR ethics literature was identified through a search process based on a modified version of the methodology described by Okoli (2015). The process had four main steps: 1) we considered the review's goal; 2) we determined the inclusion criteria; 3) we examined the literature to ensure it met the inclusion criteria; and 4) we examined the data, identified examples to showcase, and decided on a layout for Table 1. To apply the inclusion criteria, keywords were entered in three iterations, 1) "virtual reality" and "ethics*", 2) "vr" and ethics*, and 3) "virtual immersive environments" and ethics*, into three major academic databases (Google Scholar, Ebsco Academic Search Complete, and Web of Science). The asterisk was used as a truncation wildcard to capture variations in spelling. To ensure sufficient emphasis on the ethical component, we included only articles that met these requirements and contained the search terms in the title; for Google Scholar, we used the "allintitle:" operator to restrict matches to titles. In Google Scholar, iterations one, two, and three returned 37, eight, and one result, respectively. In Ebsco Academic Search Complete, they returned nine, eleven, and one result. In Web of Science, they returned 21, zero, and zero results. The search window targeted articles published within the last 15 years.
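The iteration-by-database hit counts reported for this search can be tallied per database with a few lines of Python. This is a sketch that uses only the figures stated in the text; because overlap between iterations and databases is not reported, the grand total is an upper bound on unique articles before screening, not the number of articles shown in Table 1. The names `HITS` and `totals` are illustrative.

```python
# Title-restricted hits reported for the literature search, keyed by database,
# in query-iteration order:
#   1) "virtual reality" AND ethics*
#   2) "vr" AND ethics*
#   3) "virtual immersive environments" AND ethics*
HITS = {
    "Google Scholar": [37, 8, 1],
    "Ebsco Academic Search Complete": [9, 11, 1],
    "Web of Science": [21, 0, 0],
}


def totals(hits):
    """Return per-database totals and the grand total (before de-duplication)."""
    per_db = {db: sum(counts) for db, counts in hits.items()}
    return per_db, sum(per_db.values())


if __name__ == "__main__":
    per_db, grand = totals(HITS)
    for db, n in per_db.items():
        print(f"{db}: {n}")
    print(f"All databases (may include duplicates): {grand}")
```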

Table 1. Overview of select research articles broken down by focus, year, discipline, questions raised, ethical themes, and ethical frameworks.

We ran another search using the same inclusion criteria targeting particular journals that publish at the nexus of technology, ethics and society, such as New Media and Society, Journal of Computer-Mediated Communication, and Ethics and Information Technology. In the final step, publications were read closely by the authors to identify the key ethical issues discussed and then presented in Table 1. For example, if a publication mentioned the words “privacy” and “VR” the ethical issue of privacy was tagged to the publication.
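The tagging rule described here (a publication that mentions both "privacy" and "VR" is tagged with privacy) can be expressed as a keyword co-occurrence check. The sketch below is hypothetical: the theme keyword lists and the function name are assumptions for illustration, and the authors identified issues by close reading rather than by script.

```python
import re

# Hypothetical theme -> keyword lists, for illustration only; the authors
# tagged publications by close reading, not with these lists.
THEME_KEYWORDS = {
    "privacy": ["privacy", "personal data"],
    "consent": ["consent"],
    "harm": ["harm", "motion sickness"],
    "harassment": ["harassment", "bullying"],
    "accessibility": ["accessibility", "bias"],
}

VR_PATTERN = re.compile(r"\b(vr|virtual reality)\b")


def tag_publication(text, theme_keywords=THEME_KEYWORDS):
    """Return the set of themes whose keywords co-occur with a VR mention."""
    lowered = text.lower()
    # Mirror the rule in the text: a theme counts only if VR is also mentioned.
    if not VR_PATTERN.search(lowered):
        return set()
    tags = set()
    for theme, words in theme_keywords.items():
        # Whole-word matching, so "harm" does not match inside "pharmacy".
        if any(re.search(rf"\b{re.escape(w)}\b", lowered) for w in words):
            tags.add(theme)
    return tags


if __name__ == "__main__":
    print(sorted(tag_publication("Privacy and consent in VR headsets")))
```

A keyword pass like this could only pre-sort a corpus; the close reading described in the text is still what decides whether an issue is genuinely discussed rather than merely mentioned.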

To broaden the search, we also included government documents on VR. This analysis focused on American documents, given that the United States continues to set the pace for VR research, with tech giants and Silicon Valley driving the effort (Byrne, 2023); changes and events here will most likely affect other VR markets. Keywords and their combinations were entered into the ProQuest Congressional database to identify US Congressional hearings, reports, and other documents related to VR (ProQuest Congressional, 2024). ProQuest Congressional is an extensive database of all U.S. congressional research reports from 1916 to the present, House and Senate sessions from 1817 to the present, legislative histories from 1969 to the present, and presidential materials from 1789 to the present. To ensure that most of each document, rather than just a portion of it, dealt with VR, we used filters requiring the keywords to appear in the document's title. The keywords "virtual reality" yielded four results; "virtual reality" and "ethics*" yielded zero; "vr" and ethics* yielded zero; and "virtual immersive environments" and ethics* yielded zero. Searches were entered consecutively, and no time filter was applied.

We also wanted to see whether, and which, ethical concerns VR users expressed. To do this, we searched Amazon for the term "virtual reality," filtered the results by highest rating, and identified three products. We then randomly extracted 100 reviews per product and determined whether any of the 300 cumulative reviews raised ethical concerns. We selected the following three products: 1) the Meta Quest 2-Advanced All-In One VR Headset (Meta), priced at $199; 2) the HTC Vive Pro 2 VR System (HTC), priced at $999; and 3) a built-in VR gaming station (VRGS) for iPhone and Android users aimed at kids, priced at $59.99. These products were selected for their widespread appeal, their representation of a variety of price points and applications, and their ability to capture the opinions of a wide spectrum of VR users. Moreover, we opted for highly rated equipment instead of commonly purchased items to ensure that the products had undergone quality control and had garnered a range of feedback. We selected Amazon over other sites because it is a popular place to purchase VR products; for example, Wirecutter, a New York Times product review service, often directs users to Amazon to acquire VR headsets (Brewster, 2024).
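The per-product random draw can be made reproducible by seeding the random number generator, which lets another researcher re-draw the same sample from the same pool. In the sketch below, `reviews_by_product` stands in for review texts collected from each product page, and the seed value is an arbitrary assumption; the text does not say how randomization was performed.

```python
import random


def sample_reviews(reviews_by_product, k=100, seed=2024):
    """Draw k reviews per product without replacement, reproducibly.

    `seed` is a hypothetical, arbitrary value; fixing it makes the draw
    repeatable for the same review pool.
    """
    rng = random.Random(seed)  # private generator, independent of global state
    sample = {}
    for product, reviews in reviews_by_product.items():
        if len(reviews) < k:
            raise ValueError(f"{product}: only {len(reviews)} reviews, need {k}")
        sample[product] = rng.sample(reviews, k)  # no duplicates within a product
    return sample


if __name__ == "__main__":
    # Placeholder pool standing in for scraped reviews (hypothetical).
    pool = {name: [f"{name} review {i}" for i in range(500)] for name in ("Meta", "HTC", "VRGS")}
    drawn = sample_reviews(pool)
    print(sum(len(v) for v in drawn.values()))
```

With three products and `k=100`, this yields the 300-review corpus; restricting each pool to reviews from 2024 would happen before the draw.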

For each product, a sample of 100 user reviews from 2024 was selected. We chose this number based on two recommendations in qualitative work: 1) that excerpts should be collected until a saturation point is reached, the point at which no new meanings emerge (Braun and Clarke, 2013), and 2) that for secondary sources, the target number of extracts at the high end is 100 (Fugard and Potts, 2015). For each review, we conducted a thematic content analysis using both semantic and latent coding (Terry et al., 2017). Thematic analysis is used when a study aims to explore recurring patterns that may evade quantifiable measurement (Hawkins, 2018). Semantic coding examines meanings on the surface of the text, while latent coding looks for deeper levels of meaning. For example, if a user explicitly described something as an ethical issue, we used semantic coding; if a user instead discussed privacy or accessibility problems without naming them as ethical, we used latent coding and coded them as indicating ethical issues.
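The distinction between semantic and latent coding can be illustrated with a toy keyword coder. This is a simplified, hypothetical sketch: the cue lists and the function name are assumptions, and in the study two human coders read each review, so a script like this could at most pre-sort reviews for human checking.

```python
# Hypothetical cue lists, for illustration only.
EXPLICIT_CUES = ("ethical", "ethics")  # "ethical" also matches "unethical"
LATENT_CUES = {
    "privacy": ("privacy", "tracking", "my data"),
    "accessibility": ("accessible", "disability"),
    "physical harm": ("motion sickness", "nausea", "headache"),
}


def code_review(text):
    """Return (coding_level, theme) for one review, or (None, None)."""
    lowered = text.lower()
    # Semantic coding: the reviewer names the issue as ethical on the surface.
    if any(cue in lowered for cue in EXPLICIT_CUES):
        return ("semantic", "ethical issue")
    # Latent coding: the review describes a problem that the coding scheme
    # treats as ethical even though the reviewer does not use that word.
    for theme, cues in LATENT_CUES.items():
        if any(cue in lowered for cue in cues):
            return ("latent", theme)
    return (None, None)


if __name__ == "__main__":
    print(code_review("I worry about privacy with this headset."))
```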

Two coders analyzed the data by first reading through the reviews and then assigning themes to capture meanings. This process was based on Braun and Clarke's (2006) multi-stage thematic analysis, which includes reading through the data, identifying recurring codes, and selecting examples that illustrate these codes. We then calculated inter-coder reliability to ascertain agreement rates, which were as follows: Meta, 91%; HTC, 93%; and VRGS, 100%. In other words, the coders assigned the same code to the Meta reviews 91% of the time. An inter-coder agreement rate greater than 85% indicates a consistent coding scheme (Miles et al., 2014).
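Assuming the reported figures are simple percent agreement (the share of reviews to which both coders assigned the same code, which is how the text glosses the Meta figure), the calculation looks like the sketch below. The function name and the label values are illustrative.

```python
def percent_agreement(coder_a, coder_b):
    """Share (in %) of items to which two coders assigned the same code."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same, non-empty set of items")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)


# Cutoff cited in the text (Miles et al., 2014).
THRESHOLD = 85.0

if __name__ == "__main__":
    # Illustrative labels: 100 reviews, coders disagree on 9 of them.
    a = ["no ethical issue"] * 100
    b = ["no ethical issue"] * 91 + ["privacy"] * 9
    rate = percent_agreement(a, b)
    print(rate, rate > THRESHOLD)  # 91.0 True
```

Percent agreement does not correct for agreement expected by chance; chance-corrected statistics such as Cohen's kappa are a common complement when some codes are much more frequent than others.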

Privacy, bias, accountability, anonymity, respect for people, the carbon footprint of VR equipment, physical harm to users, and what real-world repercussions, if any, should follow morally repugnant acts in VR are a few of the issues raised by VR scholars (Brey, 1999; Craft, 2007; Flattery, 2021; Ford, 2001; Hatfield et al., 2022; Madary, 2014; Powers, 2003; Ramirez et al., 2021; Wolfendale, 2007). Among these, four broad categories of ethical concern recur in the literature: 1) privacy and consent; 2) the physical and mental effects or harms of technology use on the individual user; 3) harassment intended to manipulate, threaten, or abuse others within the immersive environment, including but not limited to virtual users, where the consequences of the harassing action are unclear; and 4) accessibility and bias, particularly in terms of who has access (Adams et al., 2019). We also include a fifth category, identified by scholars, that covers statutory protections. Each is elaborated upon below.

Privacy means the "right to be left alone" (Nelson, 2011). When it comes to VR, how privacy will be safeguarded, and by whom, is an important question (Adams et al., 2019). Guidelines on protecting user rights and on VR companies' obligations to users are sparse. In one study, for example, researchers examined the privacy policies of leading VR system providers HTC and Oculus. They sampled 10% of HTC and Oculus applications and found that only one-third mentioned having a privacy policy in place, and fewer than 20% of these Oculus applications mentioned how user data was handled. The researchers concluded that current privacy policies are incomplete, since they do not always cover how data is handled, and that privacy concerns will persist given the lack of ethical standardization (Adams et al., 2019). Users in this study also pointed out that more permission requests should be integrated into the hardware, since many VR devices, like the Samsung Gear VR, do not include them.

Privacy concerns extend to VR data, which is unique in nature and whose distribution could harm users. Headset records may capture an individual's emotional responses to traumatic events or collect biomedical data, including heart rate, brain activity, and other bio-sensitive information (Adams et al., 2019). It is also unclear to users how companies and developers use this data and whether external parties can purchase it (Adams et al., 2019). Also of concern are advanced headset features, such as cameras that can locate users and built-in microphones that can help track their location, as well as the fact that headsets gather data even when users are not actively using them. Here, one developer explained: "what somebody is doing while in VR is recordable and trackable of a different level" compared with other types of technology (Adams et al., 2019). One developer noted that, given the hyper-personalized nature of this "super personal data," companies could technically "create a biological map or biological key, of who their users are" (Adams et al., 2019, p. 448).

In one study, developers were especially concerned about potential physical harm to users, such as motion sickness. Others were concerned about how users in the virtual world would navigate the real world if, for example, a fire alarm went off and a user heard nothing because they were in the VR world. Other developers mentioned the physical and intimate risks of traversing the VR space, such as what happens when digital avatars are bullied or mistreated, which can feel more “traumatizing” given the eerily realistic nature of VR (Adams et al., 2019).

Questions about possible harm to users from VR technology also raise questions about vulnerability, especially in fields like medicine.

VR has been used to help people recover from strokes, help people overcome anxiety, and help doctors make medical decisions (Ouyang, 2022). While it is undeniable that these are significant applications of VR, less is known about the ethical standards that regulate these uses and the precautions taken to keep users safe. This is also not to say that there are no ethical protections when it comes to VR use, but rather to emphasize that the mainstream user is often unaware of these protections.

Because medical patients require special ethical protection, it is particularly important to make transparent how ethics govern medical uses of VR. Medical patients fall under the category of a "vulnerable population" according to the Belmont Report (1978), a foundational document created to ensure that research involving human subjects is carried out ethically (Belmont, 1979). Advisory bodies such as the National Bioethics Advisory Commission (NBAC) have further defined vulnerability as a "condition, either intrinsic or situational, of some individuals that puts them at greater risk of being used in ethically inappropriate ways in research" (NBAC, 2001, p. 85). To ensure that research is ethical, NBAC recommends that three vulnerability risk parameters be taken into account when assessing risk to patients: situational, characteristic, and disparity risks. A situational risk would be a patient's current illness. A characteristic risk would stem from an attribute such as age, which is why children may be excluded from a study. A disparity risk would indicate that the individual belongs to a social group that has historically been under-resourced.
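One way to keep all three vulnerability parameters in view when screening participants for a VR study is to record them as an explicit checklist. The sketch below is hypothetical: the class and method names are illustrative, and treating any flagged parameter as a trigger for added protection is an assumption made here, not an NBAC rule.

```python
from dataclasses import dataclass, field
from typing import List

PARAMETERS = ("situational", "characteristic", "disparity")


@dataclass
class VulnerabilityScreen:
    """Hypothetical checklist for the three risk parameters named in the text."""
    situational: List[str] = field(default_factory=list)     # e.g. a current illness
    characteristic: List[str] = field(default_factory=list)  # e.g. age (a child)
    disparity: List[str] = field(default_factory=list)       # e.g. historically under-resourced group

    def flagged(self):
        """Names of the parameters for which at least one risk was recorded."""
        return [name for name in PARAMETERS if getattr(self, name)]

    def needs_added_protection(self):
        # Assumption: any flagged parameter warrants added safeguards.
        return bool(self.flagged())


if __name__ == "__main__":
    # Illustrative participant in a VR pain study.
    screen = VulnerabilityScreen(situational=["chronic low back pain"])
    print(screen.flagged())  # ['situational']
```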

Upon applying the three NBAC principles to a recent VR health application, a few ethical issues arise.

By way of background, EaseVRx is a VR application that treats chronic low back pain and was authorized by the US Food and Drug Administration (FDA) in 2021 (Ouyang, 2022). It uses behavioral therapy principles and deep breathing exercises to help users relieve back pain (FDA, 2021). The FDA was responsible for determining whether EaseVRx is physically safe for consumers to use. To do so, the FDA evaluated data from a blinded, randomized trial involving 179 participants with chronic low back pain. Over a period of 8 weeks, patients were assigned to either the EaseVRx program or a control condition without VR. After completing the study, 66% of patients in the EaseVRx condition self-reported an estimated 30% reduction in pain, compared with 41% of patients in the control condition. When researchers checked in again at intervals of one to three months, the 30% pain reduction persisted for those in the EaseVRx condition, whereas those in the non-VR control condition reported that their pain reduction had decreased (FDA, 2021). In the same study, an estimated 21% of participants self-reported feeling "discomfort" with the headset, and 10% reported motion sickness and nausea (FDA, 2021).
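Using only the percentages reported for the EaseVRx trial, the short-term difference between conditions can be worked out as follows. Because the per-arm sample sizes are not given here, this is a difference in proportions, not a count of patients or a test of statistical significance.

$$
\Delta = 66\% - 41\% = 25 \text{ percentage points}, \qquad \frac{66\%}{41\%} \approx 1.61
$$

That is, patients in the EaseVRx condition were roughly 1.6 times as likely as control patients to report a 30% reduction in pain over the 8-week period.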

It is worth noting that the product press release intended for the public omitted any discussion of the methodology used to assess ethical risks, particularly the assessment of vulnerability. The press release mentions that the device was evaluated under the category of "low-to moderate-risk devices of a new type" but does not specify how this risk was evaluated or whether the vulnerability risk categories identified by NBAC were considered.

EaseVRx was also vetted under the "Breakthrough Device designation" (FDA, 2021). This designation applies to technology that has the potential to "treat or diagnose a life-threatening or irreversibly debilitating disease or condition" (FDA, 2021) and for which no comparable device exists. Since this designation could apply to several VR health applications, these ethical processes should be communicated to the public in clear language so that the public continues to trust such applications and the processes that support them (Robles and Mallinson, 2023).

Users are also turning to immersive VR experiences to process both physical and emotional pain (Hirsch, 2018; Meindl et al., 2019; Simon et al., 2005). In the 1990s, Hunter Hoffman and David Patterson employed VR to assist burn victims, one of the first applications of VR for pain relief (Burn Center, 2003; Ouyang, 2022). Hoffman and Patterson developed a VR experience called SnowWorld, where patients could throw snowballs at snowmen and play with penguins, woolly mammoths, and other Arctic creatures before having their bandages changed. Their reasoning was that pain demands attention, so placing patients in a simulated VR environment diverts that attention and leaves the brain less capacity to focus on pain. Both patients' reports and their brain scans confirmed a reduction in pain (Burn Center, 2003).

Studies in this vein reveal that VR has significant potential for pain management; nonetheless, there are ethical concerns, such as when these experiences are used for data collection or when they are distorted by third-party malware intrusions, incidents that could be particularly traumatic. In one study, for example, a participant was placed in a simulated forest to help with chronic pain, and the experience triggered memories of a son who had died (Ouyang, 2022). The participant described the experience: “I feel like I’m there with my son” (Ouyang, 2022). She was shaking as she relayed this and had “tears suddenly…streaming down her face” (Ouyang, 2022). Although this highly emotional VR use was overseen by a healthcare provider, wider dissemination would pose ethical challenges, most notably that such use would most likely take place individually, without a licensed professional guiding the experience. Biometric data are often collected as patients undergo these evocative experiences (Ouyang, 2022), raising important concerns about privacy, about who owns the data resulting from such emotionally distressing experiences, about whether these data could later be used to harm users, and about whether they will be sold to third parties. Moreover, before beginning such an experience, has the user been told about their rights and the steps they can take to redress any violations of those rights? Several ethical standards also establish that a person’s capacity to consent is significantly limited when they are in pain (Belmont, 1979; NBAC, 2001).

VR use also raises the concern that routine use could make people indifferent to what they encounter and potentially desensitize them to harm caused to others (Raja, 2023). Less is understood about how VR use and desensitization interact.

Moreover, existing in a digital realm accessed through VR hardware means that digital avatars are susceptible to crimes similar to those experienced by individuals in the physical world (Brey, 1999; Ford, 2001). Researchers refer to these as “virtual crimes,” in which VR avatars are subjected to physical or sexual assault, harassment, torture, murder, cyberbullying, or virtual theft (Brey, 1999; Ford, 2001; Kade, 2016). In one example, although not specific to VR, a gamer in China committed murder after experiencing virtual theft online (Madary, 2014). This example supports the claim of several scholars that events in the virtual world can have effects in the real world (Craft, 2007; Flattery, 2021; Powers, 2003; Wolfendale, 2007). These scholars ask: How do such incidents affect society? Should children be protected from this material? How should virtual harm be dealt with? What rights should survivors of virtual crimes have? Are there structural problems in how virtual spaces are created that allow some people to act unethically? Ethical-legal theorists further ask when an act in the virtual world becomes a criminal offense (Kerr, 2008) and acknowledge that criminal law almost never applies to cases of virtual murder or intimidation, a discrepancy due in part to the fact that criminal law, as currently understood, addresses physical acts rather than perceptual acts occurring in cyberspace (Kerr, 2008).

VR also raises questions about accessibility, specifically whether participants need to possess certain types of social and physical capital in order to use it. For example, do participants need to speak certain languages to access VR experiences (Ouyang, 2022)? Studies of chronic pain also feature almost exclusively white, educated participants, which raises concerns, shared by medical professionals, that these types of pain management interventions exclude certain patients (Ouyang, 2022). One participant stated that the experience was helpful and noted of their pain: “I’ve tried breathing exercises before…but this is much more relaxing…I do not have pain in my stomach now” (Ouyang, 2022). Since there is evidence that VR relieves pain, the question arises as to whom this service is available. We should also ask whether VR pain-relief technology is being refined in underserved communities before being introduced to historically more affluent sectors of society.

VR architects have pointed out that becoming a developer requires overcoming a number of barriers that unintentionally rule out some candidates and favor others. For instance, one developer in a study noted that there is a “high entry barrier to even get started in VR…[meaning] people with disposable income and who are…tech oriented…it’s not just you know [anyone] typing on a keyboard” (Adams et al., 2019, p. 451).

AI arguably came into the public eye in 2023 and has since had a significant impact that is expected to extend to many industries and technologies, including VR (5 Examples of Biased Artificial Intelligence, 2019; Korteling et al., 2021; Mughal et al., 2022). Despite its advantages, AI has been reported to exhibit bias, including biased healthcare algorithms, biased policing algorithms, and algorithms that discriminated against women in hiring decisions (5 Examples of Biased Artificial Intelligence, 2019). How AI will affect VR, and what ethical implications this will have, remain unresolved questions. Furthermore, numerous techno-ethicists have emphasized the widely accepted claim that, whichever two-letter acronym we talk about, VR or AI, both will reflect the prejudices and inequalities ingrained in our society. We therefore have to anticipate that, as AI becomes integrated into VR, the result will not be impervious to human prejudice and that, as a consequence, we will need to establish more concrete and universal ethical standards.

Several of the articles reviewed mentioned laws with potential VR applications, for example, the Computer Fraud and Abuse Act (CFAA) (Kerr, 2008; Nelson, 2011). The CFAA was passed in 1986, primarily to prevent hacking and unauthorized access to computers (NACDL, 2025). The CFAA can be used to hold someone criminally or civilly liable, and the articles that mention it discuss it in relation to unauthorized behavior, which includes, but is not limited to, assaulting virtual characters or taking someone’s virtual property (Kerr, 2008; Nelson, 2011; Adams et al., 2019).

Legal scholar John William Nelson further argues that sui generis privacy laws can also be applied to virtual situations (Nelson, 2011). Nelson notes that such laws rest on the premise that people have the right to be left alone, a principle that should in theory apply in the virtual world too, although in practice this is often not the case.

Scholars such as Kerr (2008) have also called for new laws, recognizing the limitations of current ones with respect to harms that users experience in virtual spaces. In sum, there appears to be an understanding that some current laws, such as the CFAA, may apply to virtual worlds; however, technology is still advancing faster than the law, and more statutory protections are needed.

Table 1 further summarizes the ethical issues and enumerates the frameworks that have emerged from the work discussed in this section. Each article selected for the table represents a distinct ethical theme found in VR scholarship. Two patterns in particular stand out. (1) Researchers from a variety of fields, including neuroscience and philosophy, are contributing to the interdisciplinary discussion on ethics and VR, with medical researchers in particular raising ethical questions. (2) While many of these researchers discuss the ethical dilemmas that arise with VR use, many do not offer ethical frameworks through which responsible use could be considered.

VR researchers (Adams et al., 2019; Hatfield et al., 2022) have also raised important questions, such as: Will there be a unified code of conduct for VR users and developers, or will cases be handled individually? Who would be the referees? What does ethical VR use look like? In sum, there have been growing calls for more guidance on responsible use (Nash, 2018; Ramirez et al., 2021; Ramirez and LaBarge, 2018).

One additional way to gauge the significance of a subject is to check whether it has been discussed in official government documents (Clark-Stallkamp and Ames, 2023; Switzer et al., 2023). The ProQuest record shows at least four publications on VR. As a corpus, these documents contain hardly any discussion of the technology’s ethical implications. Moreover, representatives from New York and California attempted to introduce VR legislation in Congress with HR 4103, but the bill does not address ethical issues. In summary, these official documents show interest in what VR can do and how it can be applied, but a notable silence on ethical issues (New Developments in Computer Technology: Virtual Reality, 1991; Virtual Reality and Technologies for Combat Simulation, 1994; VR TECHS in Government Act of 2019; Wilson, 2008).

We examined 300 user reviews of three Amazon products (see Methods section). Users raised ethical questions in relation to all three products, albeit only a minority of users did so (Table 2): 20% of users for Meta, 9% for HTC, and 2% for VRGS. Users discussed privacy concerns, access to inappropriate content, harassment in the virtual world, accessibility issues, and the affordability of the technology. Quotes were selected to represent the ethical issues users discussed. For example, parents spoke of how “addictive” the headsets were and were concerned about younger users being placed in inappropriate situations. Privacy concerns emerged as people wrote about having to create an account to access the headset’s features and asked how their data were used. Some users were concerned about the HTC product’s price and quality: at almost $1,000, some felt the experience did not justify the price. Users of various products also mentioned “headaches” and “motion sickness,” and noted that the hardware was not suitable for children or “narrow faces,” or that it favored people with “short hair” and those who did not wear glasses. Overall, although only a small minority of users raised ethical concerns about each product, this indicates that users are experiencing these issues and are aware of them; given that Amazon reviews are public, developers are also likely aware of them.
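To make the scale of these shares concrete, the short Python sketch below converts them into implied review counts and a pooled share. It assumes, hypothetically, that the 300 reviews were split evenly (100 per product); that split is an assumption for illustration, and the helper names are illustrative only.

```python
# Hypothetical sketch: assumes the 300 reviews were split evenly
# (100 per product); the even split is an assumption, not a reported figure.
REVIEWS_PER_PRODUCT = 100

# Share of reviews raising ethical issues, as reported in the text.
reported_share = {"Meta": 0.20, "HTC": 0.09, "VRGS": 0.02}


def implied_counts(shares, n_per_product):
    """Convert per-product shares into implied review counts."""
    return {name: round(share * n_per_product) for name, share in shares.items()}


def pooled_share(counts, n_per_product):
    """Share of all reviews, across products, that raised an ethical issue."""
    total_reviews = n_per_product * len(counts)
    return sum(counts.values()) / total_reviews


counts = implied_counts(reported_share, REVIEWS_PER_PRODUCT)
print(counts)                                              # {'Meta': 20, 'HTC': 9, 'VRGS': 2}
print(f"{pooled_share(counts, REVIEWS_PER_PRODUCT):.1%}")  # 10.3%
```

Under that assumption, roughly 31 of 300 reviews (about 10%) raised an ethical issue; a different split across products would change the pooled figure.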

Guidelines are useful when laws and policies are absent (Carrillo, 2020). They are also aspirational, because they indicate how things should be rather than how they are, and they can provide guidance when things go wrong (Carrillo, 2020). Three ethical frameworks are discussed here. The first originates in the university setting; this well-established framework should be used more widely for VR applications outside research settings. The second draws on the ethics of care. The third involves collaboratively developed community codes of ethics. When it comes to general VR use, it is also important to note that ethical codes, or parts of them, are occasionally applied to VR, but their application is not standardized. For example, while a researcher affiliated with a university will most likely have to meet the requirements of an institutional review board (IRB), a research and development employee at a company may not. For this reason, it has been argued that there should be some ethical standardization in the governance of emerging technologies (Carrillo, 2020). In other words, it is not enough to identify and develop codes; their implementation also matters. Each of these frameworks was chosen because it offers something unique to a user who wants to learn about ethical use; in addition, these frameworks are adaptable and can be used in different situations (Ouyang, 2022); finally, some of them are widely cited and have stood the test of time. The IRB framework, for example, has been around for many years and is used at almost all research universities (Belmont, 1979).

Research on VR conducted at universities must adhere to the principles established by the IRB. An IRB is a panel of experts within an institution, such as a university, that oversees any research involving human subjects; its role is to ensure that research is conducted ethically (Wilkum, 2017). IRBs address issues of consent, privacy, and the risks people face during research, and they were created after significant events in which people’s rights were violated (Wilkum, 2017). IRB principles are based in part on the Nuremberg Code (1947), one of the first recognized codes for ethical research, in which people’s consent to participate is central and the benefits of any research should outweigh its risks to persons (Wilkum, 2017). The IRB guidelines also serve as a reminder of past eras when ethical considerations were disregarded and as a safeguard against repeating them. In the United States, for example, the need for additional codification to protect research participants was heightened by the Tuskegee experiments, in which impoverished Black men were denied treatment for syphilis for 40 years (Wilkum, 2017). Another influence on the IRB was the Declaration of Helsinki (1964), which expanded the protections first listed in the Nuremberg Code to ensure that people were informed about risks and empowered to consent (Slater, 2021). Ten years later, the U.S. Congress passed the National Research Act (1974), which established the commission that produced the Belmont Report. The report became the definitive code guiding all human subjects research in the U.S. and rests on three key principles: “respect for persons,” “beneficence,” and “justice” (Belmont, 1979). Respect for persons means acknowledging people’s autonomy and valuing their sanctity. When a person’s capacity to consent is impaired, for example, when they are in a position of low power (a classic example being research on prisoners), further measures must be taken to protect them from harm (Wilkum, 2017). Beneficence means attending to participants’ well-being, particularly by justifying any risks or harms in relation to the benefits. Justice means that decisions about who is and is not included in a research project should be made equitably, especially with regard to who would or would not benefit from the research (Belmont, 1979).

These three principles serve as the basis for the IRB’s evaluation of research within its purview. We can move toward responsible use by applying the three IRB principles of respect for persons, beneficence, and justice to VR use generally. Respect for persons means refraining from mistreating people in the digital realm (Flattery, 2021; Powers, 2003). Beneficence encourages weighing VR’s potential against the risks it entails and informing users of this balance (Adams et al., 2019). Justice refers to equity: ensuring that VR’s benefits and risks are evenly distributed and that one group does not benefit at the expense of another (Ouyang, 2022). Given that the IRB code is a classic code to which research at American universities is held, and given the history from which it emerged, it is a suitable starting point for guiding responsible VR use.

Care ethics is another ethical code or lens that is helpful to consider for responsible VR use generally. Care’s place in ethics and morality is a topic of debate: some argue that care is simply a principle that appears in other ethical frameworks, while others argue that care is a distinct school of thought that offers a more modern view of conduct (Noddings, 1984; Wilkinson, n.d.; Wilkum, 2017). Scholars such as Joseph Butler (17th century), David Hume (18th century), Adam Smith (18th century), Arthur Schopenhauer (19th century), and Carol Gilligan (20th century) have referred to care ethics in their work (Blum, 2001). The care lens, which has been distinguished from the justice lens (Blum, 2001), is rooted in a moral tradition that emphasizes the emotion of care. The targets of care are typically other humans, though they can also be other beings, such as nonhuman animals. Ethicist Blum (2001) states that care is more than just a feeling or an emotion, describing it as “knowledge or understanding of the other person’s needs, welfare, situation…[beyond] mere feeling of concern” (Blum, 2001). Ethicist Nel Noddings points out that care attends not to what the caregiver wants for the person being cared for, but to what that person wants, since our own perception of their needs can be wrong (Noddings, 1984). In this way, providing care also involves empowering individuals to express their own desires. Another recurring theme is care for the individual, which also appears, in part, as a key guiding principle in moral systems such as utilitarianism and Kantianism (Blum, 2001).

Researchers have called for applying the care lens to VR, suggesting that it may be appropriate because it encourages people to engage in empathy and perspective taking (Raja, 2023) while questioning their preconceived assumptions. Perspective taking, the ability to set aside one’s own personal filters in order to consider the viewpoints and knowledge of another, is also fundamental to empathy (Belman and Flanagan, 2010). Given that VR technology is becoming more widely available and that users face unique risks due to its enhanced sensory experiences, care ethics frameworks may be a useful tool for applying ethical thinking to VR use, since these frameworks prioritize consideration of others, with empathy often playing a central role. One such framework is the VIRAL framework, which reimagines VR as a technology of “care ethics” (Raja, 2023). V stands for viewpoints: ensuring that different perspectives are shown, for example, a VR medical experience featuring the viewpoints of both patients and medical professionals. I stands for interconnection: how the characters in the experience are connected to each other, for example, how the quality of a doctor’s behavior affects a patient’s wellbeing in a medical VR simulation. R stands for respect: the conduct and content of VR experiences should value each person’s autonomy, worth, and dignity, for example, by creating digital avatars that represent others positively. A stands for action: a return to reality in which what was gained in VR is used to carry out a beneficial deed for another. For instance, if medical personnel receive VR training in effective bedside manner, they ought to apply these techniques with their own patients. L stands for liberation and represents an aspirational vision: liberation comes from intentions to elevate others, often those who have been marginalized in our society, and thereby to envision a better society for all. An example would be bringing ethical VR pain management interventions to underserved communities in healthcare settings.

Others have noted that a co-constituted code of ethics often garners greater support (Hardina, 2004; Tunón et al., 2016). According to these researchers, co-constituted codes may be preferable because ethical decision-making is in part context-based and involves varying degrees of urgency. Building an ethical code also typically entails ensuring that it reflects the gravity of the problem, is consistent with participants’ values, and takes into account the particulars of the issue’s context (Hardina, 2004). For instance, the ethical application of VR in educational environments may differ slightly from its use in entertainment settings, and co-created codes are often sensitive to such nuances. Ethical dilemmas also should not be addressed in isolation but always require input from others (Campbell, 2016; Hardina, 2004). In this sense, a jointly created code of ethics represents the contributions of several members of the community, including experts.

Such experts would include VR developers. In one instance, recognizing this urgency, researchers convened an open forum in which they asked VR developers and users to develop a code to promote responsible VR use (Adams et al., 2019). An estimated 1,000 users examined the evolving ethics code, and nearly a quarter of them (n = 245) contributed to it. The code consisted of ten guiding principles: (1) “Do no harm,” (2) “Ensure experience,” (3) “Be transparent in data collection,” (4) “Ask for permission,” (5) “Keep nausea away,” (6) “Diversity of presentation,” (7) “Social spaces,” (8) “Accessibility to all,” (9) “User-centered user design and experience,” and (10) “Proactive innovation.” (1) The Do No Harm principle, modeled on the Hippocratic Oath, aims to avoid creating content that “objectifies, degrades, or violates the rights of people or animals” or experiences that would be considered illegal in “real life” or morally wrong. (2) The Safeguarding the Experiences principle means that outside actors should not be able to alter or distort the original experience. (3) Transparency in data collection means ensuring that users know how their data are stored and protected. (4) Ask for permission refers to obtaining consent, especially for sensitive data. (5) Keep the Nausea Away is about ensuring that VR systems are tested to reduce motion sickness as a by-product of their use. (6) The principle of representation states: “We will work to ensure that a diverse array of avatars is available for use by users and that our representations of groups and characters does not perpetuate stereotypes.” (7) The goal of the social space principle is that “Cyberbullying and sexual harassment is kept to a minimum.” The Code’s qualifying language represents a significant flaw, particularly in principle seven, which aims to minimize rather than prevent cyberbullying and sexual harassment. (8) Accessibility refers to making these spaces widely accessible to users, particularly those who might require fluid design to access them. (9) User-centered design means creating VR experiences with the needs of the user in mind. (10) The goal of the “proactive innovations” principle is to include the end user in the design process from start to finish (Adams et al., 2019). Since this code was developed by developers and users, it reflects their understanding of the values that are important to them. In particular, some of the principles, such as principle 5 about nausea and principle 7 about cyberbullying and sexual harassment, indicate that users are aware that these issues exist. Co-created ethical codes are also consistent with the claim that, given the novelty of VR and the absence of earlier technologies that so seamlessly combine the real and the imagined, the ethical frameworks and tools currently in use may need to be recreated (Zhou et al., 2023). Participatory codes also represent a wider range of opinions and are therefore more likely to be supported by wider audiences.

Some argue that ethics should govern the full VR life cycle (Kenwright, 2018; Zhou et al., 2023) and that designers have a “moral obligation” to users and the public at large when developing technologies (Kenwright, 2018). To this, we would add that it is also crucial to include a living ethical clause in these processes, that is, a commitment to update technologies if ethical oversights are discovered. These life-cycle appeals resemble those made by organizations such as IEEE, which have called for integrating ethical considerations not only into VR but into other technologies as well (Herkert, 2016). This life-cycle perspective is also similar to what technology humanist Joe Herkert describes as “anticipatory ethics” (Herkert, 2016): the reasoning that ethical considerations ought to be built into technology from beginning to end rather than added as an afterthought. Herkert points out that although this may initially seem “impractical,” that is not a good enough excuse to shun ethical decision-making, considering the wider implications of ethical thinking for how we live and wish to live as a society (Herkert, 2016).

Others have proposed specific ethical policies to regulate responsible VR use (Spiegel, 2018). These include laws that strictly protect private information in connection with VR use, a legal minimum age for using VR (modeled, for example, on the voting age), and standardized warnings in lay language that convey the physical risks associated with the technology (Spiegel, 2018).

Additionally, since VR is a created world, focusing on the ethical training of its architects, or developers, could be a way to encourage responsible VR use. Many VR developers, for example, are self-taught and often take online courses (Adams et al., 2019), gaining foundational knowledge from classes in which ethical concepts are omitted entirely or could be taught more thoroughly. Such training is necessary because, as some note, developers make decisions about VR systems that affect end users despite lacking ethical training, a gap that is evident in what they create and needs to be remedied (Kenwright, 2018). Moreover, Reddit in particular appears to be a primary hub for developers and users looking for and connecting with VR resources (Adams et al., 2019). These channels could be an excellent means of quickly providing a generation of developers with the tools they need for effective ethical decision-making. Some of these tools could be built on, refined, or even co-developed from either the IRB principles or the principles of care ethics.

Each of the three frameworks above has unique strengths. The IRB framework, for instance, is highly structured because review boards at research universities across the country enforce it. It is also based on an in-depth study of some of the ethical challenges we have faced in the past and represents a commitment not to repeat that past. The care ethics framework is unique in that it promotes empathy and care for others; if adopted, it could deter several ethical challenges, such as physical and mental harm to users. Participatory ethical codes also represent a strong framework, because users have an incentive to follow something they helped create (the so-called “skin in the game” phenomenon). Overall, each of these frameworks offers some direction for responsible use.

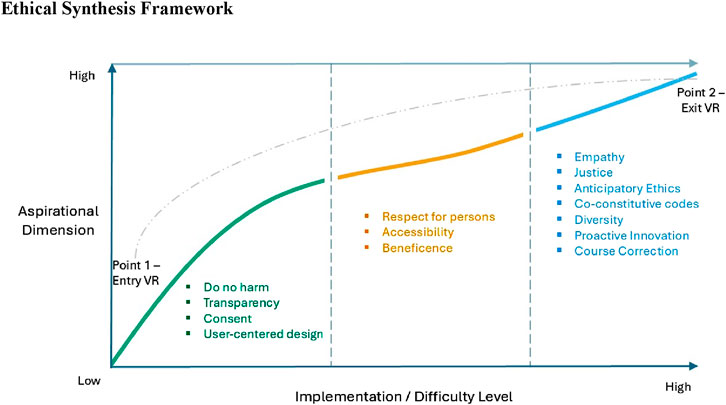

We adopted select recurring principles that appear to be common to all three frameworks and used them to build the Ethical Synthesis Framework (ESF). Whereas previous frameworks present ethical principles as stand-alone ideas, the ESF represents them as a fluid cycle in which some principles are more difficult to implement than others. Within the ESF, difficulty is based on level of abstraction: transparency, for instance, is less abstract than justice and arguably easier to implement. The aspiration level describes how ambitious or ideal an ethical goal is, and aiming for more ambitious ethical goals is ideal. For example, a VR experience that promotes empathy or care is more aspirational than one that merely adheres to the principle of consent.

A crucial part of the ESF is that a VR experience starts at point 1 on the curve; as it moves toward levels that are more aspirational and more challenging to implement, it approaches an exit point, point 2. This transition from point 1 to point 2 represents growth and a greater focus on responsible behavior.

Both architects and users of VR can use the ESF. For example, a user may want to check whether the VR experience they are consuming promotes, at a fundamental level, principles such as “do no harm,” “transparency,” “consent” (including the right to ask questions), and user-centered design. VR technologies or experiences that aim higher would follow more advanced principles, including respect for persons, accessibility, and beneficence. The most ideal experiences would follow the principles of empathy, justice, co-constitutive creation processes, proactive innovation, and course correction, i.e., treating ethical codes as living documents.
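As an illustration only, the three tiers just described could be encoded as a simple checklist that a developer or user fills in. The sketch below is hypothetical: the tier labels and function names are ours, not part of the ESF, and it simplifies the framework by treating the tiers as cumulative steps, whereas the ESF itself is described as a fluid cycle.

```python
# Hypothetical encoding of the ESF tiers described in the text.
# Assumption: tiers are treated as cumulative steps (a simplification of the
# ESF's "fluid cycle"); all names below are illustrative, not the authors'.
ESF_TIERS = [
    ("fundamental", {"do no harm", "transparency", "consent", "user-centered design"}),
    ("advanced", {"respect for persons", "accessibility", "beneficence"}),
    ("most ideal", {"empathy", "justice", "co-constitutive creation",
                    "proactive innovation", "course correction"}),
]


def highest_tier_met(principles_followed):
    """Return the highest tier whose principles, and those of all lower tiers, are followed."""
    followed = set(principles_followed)
    reached = None
    for tier_name, required in ESF_TIERS:
        if not required <= followed:  # some principle in this tier is missing
            break
        reached = tier_name
    return reached


def gaps_for_next_tier(principles_followed):
    """Return the first tier not yet met and the principles still missing from it."""
    followed = set(principles_followed)
    for tier_name, required in ESF_TIERS:
        missing = required - followed
        if missing:
            return tier_name, sorted(missing)
    return None, []  # every tier is already met


# Example: an experience that meets only the fundamental principles.
experience = {"do no harm", "transparency", "consent", "user-centered design"}
print(highest_tier_met(experience))    # fundamental
print(gaps_for_next_tier(experience))  # ('advanced', ['accessibility', 'beneficence', 'respect for persons'])
```

A checklist like this records only whether a reviewer judged a principle to be present, not how well it is followed, so it is at most a starting point for the kind of reflection the ESF is meant to prompt.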

Figure 1 represents a synthesis of all three frameworks we discussed, presenting the principles on two axes. The x-axis places each ethical principle on a scale of difficulty of implementation, from high to low. For example, implementing the consent principle should in theory be easier than making a VR technology or experience equitable or empathetic. The y-axis represents the aspirational dimension, that is, how significant or aspirational each principle is; principles with a medium aspirational level are often more straightforward to put into practice than those higher up. The most important takeaway from Figure 1 is that as a VR technology or experience progresses through the ethical life cycle, it changes from its initial state at point 1. With this in mind, re-emergence at any stage of the cycle depicted in Figure 1 means that the VR technology or experience is in a more advantageous ethical position than it was at the beginning, or point 1. When creating a VR experience or technology, developers may find Figure 1 particularly helpful in imagining how the final product might follow these ethical principles. Users may also find it useful for determining whether the VR experiences they use adhere to these principles.

Figure 1. A synthesis of all three frameworks discussed. The figure was created based on input from several sources (see Belmont, 1979; Raja, 2023; Herkert, 2016; Adams et al., 2019).

We posed two questions, the first being: 1) Are certain audiences concerned about ethical VR issues, and if so, what are the issues? We find that users are concerned about ethical issues, although such users made up a small portion of the sample we examined. Users cited privacy and consent, harassment, physical and mental harm, and accessibility as key themes. These themes were consistent with those found in the literature (Brey, 1999; Craft, 2007; Flattery, 2021; Ford, 2001; Hatfield et al., 2022; Madary, 2014; Powers, 2003; Ramirez et al., 2021; Wolfendale, 2007). The recurrence of these issues in the literature indicates that they persist and remain unresolved. In addition, we find that researchers and developers are calling for more guidelines on responsible VR use. It is also widely recognized in many fields that unbridled technological progress must be delicately balanced against ethical considerations (Benjamin, 2019; Spiegel, 2018). For this reason, we need to analyze technologies like VR more, not less, from an ethical perspective.

Our second question was: 2) What frameworks could potentially guide users toward responsible use? We identified three possible ethical frameworks and illustrated some of the key ethical principles of each in Figure 1. One contribution of the synthesis framework we present is its fluid nature, which envisages ethical use not as an outcome but as a process.

Despite the above analysis, some limitations should be noted. First, the methodology used in this study is exploratory, and its findings may not generalize as those of a statistical study could. Moreover, the user data we selected represent only a fraction of existing user sentiment. Future research could use causal designs to assess larger portions of consumer sentiment toward VR. Future studies may also explore the growing number of AI applications being incorporated into VR technology and the ethical guidelines, or lack thereof, that result from this combination. Despite these limitations, this study offers an important contribution to the literature: an examination of contemporary perceptions of VR consumers and a synthesis framework.

VR use raises important ethical concerns and questions. Our analysis suggests broad agreement on the ethical questions that VR raises, while less is known about which ethical frameworks might be applied to promote responsible VR use. Here we have presented three existing frameworks and a new synthesis framework that could guide responsible use. This work may be especially useful to audiences seeking guidance on how to use VR responsibly.

Publicly available data were analyzed in this study. These data can be found in the following sources. Literature on VR scholarship: Google Scholar, Ebsco Academic Search Complete, and Web of Science. Government documents: ProQuest Congressional Database. Amazon reviews: Meta Quest 2-Advanced All-In One VR Headset (Meta), HTC Vive Pro 2 VR System (HTC), and VR gaming station (VRGS).

Ethical approval was not required for this study. Written informed consent to participate in this study was not required from the participants or the participants’ legal guardians/next of kin in accordance with the national legislation and the institutional requirements.

UR: Conceptualization, Data curation, Formal Analysis, Investigation, Methodology, Project administration, Resources, Software, Validation, Visualization, Writing–original draft, Writing–review and editing. RA-B: Data curation, Formal Analysis, Validation, Writing–review and editing, Visualization.

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

5 Examples of Biased Artificial Intelligence (2019). Logically. Available at: https://www.logically.ai/articles/5-examples-of-biased-ai.

Abdelmaged, M. A. M. (2021). Implementation of virtual reality in healthcare, entertainment, tourism, education, and retail sectors. Empir. Quests Manag. Essences, Munich Personal RePEc Archive 1 (1).

Adams, D., Musabay, N., Bah, A., Pitkin, K., Barwulor, C., and Redmiles, E. M. (2019). “Ethics emerging: the story of privacy and security perceptions in virtual reality,” in Proceedings of the 14th symposium on usable privacy and security, SOUPS 2018, Baltimore, MD, August 12–14, 2018.

Ahmadpour, N., Weatherall, A. D., Menezes, M., Yoo, S., Hong, H., and Wong, G. (2020). Synthesizing multiple stakeholder perspectives on using virtual reality to improve the periprocedural experience in children and adolescents: survey study. J. Med. Internet Res. 22 (7), e19752. doi:10.2196/19752

Ashgan, E., Moubarki, N., Saif, M., and El-Shorbagy, A. M. (2023). Virtual reality in architecture. Civ. Eng. Archit. 11 (1), 498–506. doi:10.13189/cea.2023.110138

Bailenson, J. (2018). Experience on demand: what virtual reality is, how it works, and what it can do. 1st edn. New York, NY: W. W. Norton & Company.

Baldauf, M., Zimmermann, H. D., Baer-Baldauf, P., and Bekiri, V. (2023). Virtual reality for smart government – requirements, opportunities, and challenges. Lect. Notes Comput. Sci. Incl. Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinforma. 14038 (LNCS), 3–13. doi:10.1007/978-3-031-35969-9_1

Belman, J., and Flanagan, M. (2010). Designing games to foster empathy. Cogn. Technol. 14 (2), 5–15. Available at: http://www.tiltfactor.org/wp-content/uploads2/cog-tech-si-g4g-article-1-belman-and-flanagan-designing-games-to-foster-empathy.pdf.

Belmont (1979). The Belmont report: ethical principles and guidelines for the protection of human subjects of research. Available at: https://www.hhs.gov/ohrp/regulations-and-policy/belmont-report/read-the-belmont-report/index.html.

Blum, L. (2001). “Care,” in Encyclopedia of ethics. Editors L. C. Becker, and C. B. Becker (New York: Routledge). Available at: https://search.credoreference.com/articles/Qm9va0FydGljbGU6MzAyODU1MA==?q=care%20ethic.

Braun, V., and Clarke, V. (2013). “Successful qualitative research: a practical guide for beginners,” in Feminism and psychology (London: Sage Publications). doi:10.1177/0959353515614115

Braun, V., and Clarke, V. (2006). Using thematic analysis in psychology. Qual. Res. Psychol. 3 (2), 77–101. doi:10.1191/1478088706qp063oa

Brewster, S. (2024). The best VR headset. Wirecutter. N. Y. Times. Available at: https://www.nytimes.com/wirecutter/reviews/best-standalone-vr-headset/.

Brey, P. (1999). The ethics of representation and action in virtual reality. Ethics Inf. Technol. 1 (1), 5–14. doi:10.1023/A:1010069907461

Burn Center (2003). Virtual reality pain reduction university of Washington Seattle and U.W. Harborview burn center. University of Washington Seattle. Available at: https://www.hitl.washington.edu/projects/vrpain/.

Byrne, C. (2023). “Global virtual reality market shows significant potential growth,” in Digital transformation. Available at: https://digitalcxo.com/article/global-virtual-reality-market-shows-significant-potential-growth/.

Campbell, R. (2016). “It’s the way that you do it”: developing an ethical framework for community psychology research and action. Am. J. Community Psychol. 58 (3–4), 294–302. doi:10.1002/ajcp.12037

Chirico, A., Lucidi, F., De Laurentiis, M., Milanese, C., Napoli, A., and Giordano, A. (2016). Virtual reality in health system: beyond entertainment. A mini-review on the efficacy of VR during cancer treatment. J. Cell. Physiology 231 (2), 275–287. doi:10.1002/jcp.25117

Clark-Stallkamp, R., and Ames, M. (2023). The AECT archives: elusive primary sources, and where to find them. TechTrends 67 (5), 865–875. doi:10.1007/s11528-023-00868-4

Cook, S., Burnett, G., Abdelsalam, A. E., and Sung, K. (2024). “An exploration of immersive virtual reality as an empathic-modelling tool,” in Academic proceedings of the 10th international conference of the immersive learning research network (ILRN2024), 143–152. doi:10.56198/U6C0WZVJX

Craft, A. J. (2007). Sin in cyber-eden: understanding the metaphysics and morals of virtual worlds. Ethics Inf. Technol. 9 (3), 205–217. doi:10.1007/s10676-007-9144-4

Dremliuga, R., Dremliuga, O., and Iakovenko, A. (2020). Virtual reality: general issues of legal regulation. J. Polit. Law 13 (1), 75. doi:10.5539/jpl.v13n1p75

Emmelkamp, P. M. G., and Meyerbröker, K. (2021). Virtual reality therapy in mental health. Annu. Rev. Clin. Psychol. 17, 495–519. doi:10.1146/annurev-clinpsy-081219-115923

FDA (2021). “FDA authorizes marketing of virtual reality system for chronic pain reduction,” in FDA news release (U.S. Food and Drug Administration). Available at: https://www.fda.gov/news-events/press-announcements/fda-authorizes-marketing-virtual-reality-system-chronic-pain-reduction.

Flattery, T. (2021). May Kantians commit virtual killings that affect no other persons? Ethics Inf. Technol. 23 (4), 751–762. doi:10.1007/s10676-021-09612-z

Ford, P. J. (2001). A further analysis of the ethics of representation in virtual reality: multi-user environments. Ethics Inf. Technol. 3 (2), 113–121. doi:10.1023/A:1011846009390

Fox, J., Arena, D., and Bailenson, J. N. (2009). Virtual reality: a survival guide for the social scientist. J. Media Psychol. 21 (3), 95–113. doi:10.1027/1864-1105.21.3.95

Frenkel, S. (2022). Mark Zuckerberg testifies about meta’s virtual reality ambitions. N. Y. Times. Available at: https://www.nytimes.com/2022/12/20/technology/zuckerberg-meta-ftc-virtual-reality.html.

Fugard, A. J. B., and Potts, H. W. W. (2015). Supporting thinking on sample sizes for thematic analyses: a quantitative tool. Int. J. Soc. Res. Methodol. 18 (6), 669–684. doi:10.1080/13645579.2015.1005453

Haar, R. (2005). “Virtual reality in the military: present and future,” in 3rd twente student conference on IT.

Hardina, D. (2004). Guidelines for ethical practice in community organization. Soc. Work 49 (4), 595–604. doi:10.1093/sw/49.4.595

Hatfield, H. R., Ahn, S. J., Klein, M., and Nowak, K. L. (2022). Confronting whiteness through virtual humans: a review of 20 years of research in prejudice and racial bias using virtual environments. J. Computer-Mediated Commun. 27 (6). doi:10.1093/jcmc/zmac016

Hawkins, J. (2018). “Thematic analysis,” in The SAGE encyclopedia of communication research methods (SAGE Publications), 1757–1760. doi:10.4135/9781483381411.n624

Herkert, J. (2016). Bringing ethics to the forefront of technology R&D. IEEE Future Dir. Available at: https://cmte.ieee.org/futuredirections/tech-policy-ethics/september-2016/bringing-ethics-to-the-forefront-of-technology-rd/.

Herrera, F., Bailenson, J., Weisz, E., Ogle, E., and Zaki, J. (2018). Building long-term empathy: a large-scale comparison of traditional and virtual reality perspective-taking. PLOS ONE 13 (10), e0204494. doi:10.1371/journal.pone.0204494

Hirsch, J. A. (2018). Integrating hypnosis with other therapies for treating specific phobias: a case series. Am. J. Clin. Hypn. 60 (4), 367–377. doi:10.1080/00029157.2017.1326372

Hoffman, H. G., Meyer, W. J., Ramirez, M., Roberts, L., Seibel, E. J., Atzori, B., et al. (2014). Feasibility of articulated arm mounted Oculus rift virtual reality goggles for adjunctive pain control during occupational therapy in pediatric burn patients. Cyberpsychology, Behav. Soc. Netw. 17 (6), 397–401. doi:10.1089/cyber.2014.0058

Holt, S. (2023). Virtual reality, augmented reality and mixed reality: for astronaut mental health; and space tourism, education and outreach. Acta Astronaut. 203, 436–446. doi:10.1016/j.actaastro.2022.12.016

Huang, X., Funsch, K. M., Park, E. C., Conway, P., Franklin, J. C., and Ribeiro, J. D. (2021). Longitudinal studies support the safety and ethics of virtual reality suicide as a research method. Sci. Rep. 11 (1), 9653. doi:10.1038/s41598-021-89152-0

Iserson, K. V. (2018). Ethics of virtual reality in medical education and licensure. Camb. Q. Healthc. Ethics 27 (2), 326–332. doi:10.1017/S0963180117000652

Jerald, J. (2016). The VR book: human-centered design for virtual reality. Morgan and Claypool. doi:10.1145/2792790

Jia, J., and Chen, W. (2017). “The ethical dilemmas of virtual reality application in entertainment,” in Proceedings - 2017 IEEE International Conference on Computational Science and Engineering and IEEE/IFIP International Conference on Embedded and Ubiquitous Computing (CSE and EUC), 696–699. doi:10.1109/CSE-EUC.2017.134

Jiang, H., Vimalesvaran, S., Wang, J. K., Lim, K. B., Mogali, S. R., and Car, L. T. (2022). Virtual reality in medical students’ education: scoping review. JMIR Med. Educ. 8 (1), e34860. doi:10.2196/34860

Kade, D. (2016). Ethics of virtual reality applications in computer game production. Philosophies 1 (1), 73–86. doi:10.3390/philosophies1010073

Kenwright, B. (2018). Virtual reality: ethical challenges and dangers [opinion]. IEEE Technol. Soc. Mag. 37 (4), 20–25. doi:10.1109/MTS.2018.2876104

Korteling, J. E. H., van de Boer-Visschedijk, G. C., Blankendaal, R. A. M., Boonekamp, R. C., and Eikelboom, A. R. (2021). Human- versus artificial intelligence. Front. Artif. Intell. 4, 622364. doi:10.3389/frai.2021.622364

Kuna, P., Hašková, A., and Borza, Ľ. (2023). Creation of virtual reality for education purposes. Sustain. Switz. 15 (9), 7153. doi:10.3390/su15097153

Lanier, J. (2024). Where will virtual reality take us? New Yorker. Available at: https://www.newyorker.com/tech/annals-of-technology/where-will-virtual-reality-take-us.

Lele, A. (2013). Virtual reality and its military utility. J. Ambient Intell. Humaniz. Comput. 4 (1), 17–26. doi:10.1007/s12652-011-0052-4

Lemley, M. A., and Volokh, E. (2018). Law, virtual reality, and augmented reality. Univ. Pa. Law Rev. 166 (5), 1051–1138. Available at: https://scholarship.law.upenn.edu/penn_law_review/vol166/iss5/1.

Madary, M. (2014). Intentionality and virtual objects: the case of Qiu Chengwei’s dragon sabre. Ethics Inf. Technol. 16 (3), 219–225. doi:10.1007/s10676-014-9347-4

Meindl, J. N., Saba, S., Gray, M., Stuebing, L., and Jarvis, A. (2019). Reducing blood draw phobia in an adult with autism spectrum disorder using low-cost virtual reality exposure therapy. J. Appl. Res. Intellect. Disabil. 32 (6), 1446–1452. doi:10.1111/jar.12637

Metz, C. (2021). Everybody into the metaverse! Virtual reality beckons big tech. N. Y. Times. Available at: https://www.nytimes.com/2021/12/30/technology/metaverse-virtual-reality-big-tech.html.

Miles, M. B., Huberman, A. M., and Saldana, J. (2014). Qualitative data analysis: a methods sourcebook. 3rd ed.

Mughal, F., Wahid, A., and Khattak, M. A. K. (2022). “Artificial intelligence: evolution, benefits, and challenges,” in Internet of things. doi:10.1007/978-3-030-92054-8_4

Nash, K. (2018). Virtual reality witness: exploring the ethics of mediated presence. Stud. Documentary Film 12 (2), 119–131. doi:10.1080/17503280.2017.1340796

National Association of Criminal Defense Lawyers (2025). NACDL - Computer Fraud and Abuse Act (CFAA). NACDL - National Association of Criminal Defense Lawyers. Available at: https://www.nacdl.org/Landing/ComputerFraudandAbuseAct.

NBAC (2001). Ethical and policy issues in research involving human participants: report and recommendations of the National Bioethics Advisory Commission. National Bioethics Advisory Commission. Available at: https://bioethicsarchive.georgetown.edu/nbac/human/overvol1.pdf.

Nelson, J. W. (2011). “A virtual property solution: how privacy law can protect the citizens of virtual worlds,” in Okla. City U. L. Rev. Available at: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=1688001.