ORIGINAL RESEARCH article

Front. Virtual Real., 17 March 2025

Sec. Virtual Reality in Medicine

Volume 6 - 2025 | https://doi.org/10.3389/frvir.2025.1420404

Purpose: This study aims to develop a system that integrates algorithms with mixed reality technology to accurately position perforating vessels during the harvesting of anterolateral thigh and free fibular flaps. The system’s efficacy is compared to that of color Doppler ultrasonography (CDU) to assess its performance in localizing vessels in commonly used lower extremity flaps.

Methods: Fifty patients requiring anterolateral thigh perforator flaps or free fibular flaps for the reconstruction of maxillofacial tissue defects were randomly divided into two groups: the System Group and the CDU Group, with 25 patients in each group. In the System Group, the flap outline was drawn on the flap donor area of the lower limb, and positioning markers were placed and fixed at the highest points of the outline. After performing lower-limb CTA scanning, the obtained two-dimensional data were reconstructed into a three-dimensional model of all lower-limb tissues and positioning markers using specialized software. This 3D model was then imported into the HoloLens 2. An artificial intelligence algorithm was developed within the HoloLens 2 to automatically align the positioning markers with their 3D models, ultimately achieving registration between the perforator vessels and their 3D models. In the CDU Group, conventional methods were used to locate perforator vessels and mark them on the body surface. For both groups, the perforator flap design was based on the identified vessels. The number of perforator vessels located during surgery and the number actually found were recorded to calculate the accuracy of perforator vessel identification for each technique. The distance between the marked perforator vessel exit points and the actual exit points was measured to determine the margin of error. Additionally, the number of successfully harvested flaps was recorded.

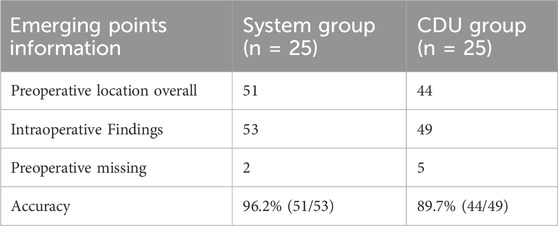

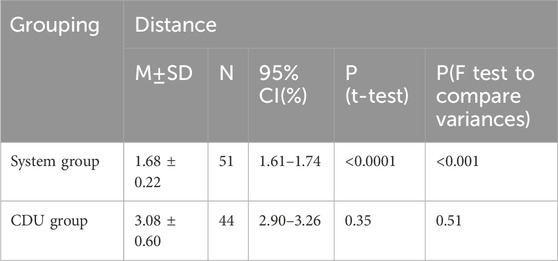

Results: In the system group, 51 perforating vessel penetration sites were identified in 25 cases, with 53 confirmed during surgery, yielding a 96.2% identification accuracy. In the CDU group, 44 sites were identified, with 49 confirmed during surgery, resulting in an 89.7% accuracy. The distance between the identified and actual penetration sites was 1.68 ± 0.22 mm in the system group, compared to 3.08 ± 0.60 mm in the CDU group. All 25 patients in the system group had successful flap harvests as per the preoperative design. In the CDU group, perforating vessels could not be located in the designed area in two patients, requiring repositioning before flap harvesting. One patient in the system group developed marginal tissue ischemia and necrosis on postoperative day 7, which healed after debridement. In the CDU group, one patient experienced ischemic necrosis on postoperative day 6, requiring repair with a pectoralis major flap.

Conclusion: The system developed in this study effectively localizes perforating vessel penetration sites for commonly used lower extremity flaps with high accuracy. This system shows significant potential for application in lower extremity flap harvesting surgeries.

In reconstructive surgeries for head, neck, and maxillofacial tissue defects, the anterolateral thigh flap and the free fibular flap are the most commonly used lower limb perforator flaps. The anterolateral thigh flap is often referred to as the “universal flap” because of its ease of harvesting, rich blood supply, and long vascular pedicle (Kushida-Contreras et al., 2021). The free fibular flap can repair maxillofacial bone defects, and its skin paddle can repair soft tissue defects while facilitating postoperative monitoring (Zhang et al., 2022; Han et al., 2022). However, variability in lower limb perforating vessels and individual differences can affect the final repair outcomes. These differences in vessel location, diameter, origin, and course through subcutaneous tissue and muscle are evident not only between individuals but also between different sides of the same individual (Christopher et al., 2003; Fliss et al., 2020). The survival of a free flap depends on establishing effective circulation through anastomosed arteries and veins. The main cause of failure is the inaccuracy of current methods used to evaluate and localize perforator vessels (Rao et al., 2010). Therefore, precise preoperative localization of perforator vessels and rational design of perforator flaps are crucial clinical issues that need to be addressed.

CTA and CDU are the most widely used methods for localizing perforator vessels (Moore et al., 2021). CTA uses X-rays and computer technology to generate high-resolution vascular images and provide comprehensive anatomical information. Due to its high spatial resolution, CTA can detect perforator vessels with diameters as small as 0.3 mm and clearly present them through 3D reconstruction (Bajus et al., 2023). CDU provides a high-resolution evaluation of vascular quality and hemodynamic information, such as peak flow velocity and resistance index. It can detect perforator vessels with diameters of approximately 0.5 mm (Shen et al., 2022). In contrast, CTA can precisely determine the diameter and length of the vascular pedicle, the muscle direction, and potential perforators during detection. Its superiority over CDU has earned it the reputation as the “gold standard” for vascular localization (Zhan et al., 2020). However, during surgery, CTA images require the surgeon to overlay the reconstructed images onto the actual surgical field based on personal experience, which often consumes significant time and effort in matching the virtual image with reality (Chatterjee et al., 2024). Retaining CTA’s technical advantages while introducing 3D visualization can significantly improve the efficiency of free flap harvesting and contribute to advancements in medical technology.

In recent years, the rapid development of mixed reality has opened up new possibilities for addressing this challenge. This technology, utilizing visual and auditory interaction, has been widely applied across various medical disciplines (Magalhães et al., 2024; Su et al., 2024; Ursin et al., 2024). Previous studies have primarily applied mixed reality in orthopedics or cranio-maxillofacial surgery, where fixed anatomical landmarks like bones, ears, and nasal structures serve as reference points for localization (Mishra et al., 2022). However, the lower limb’s flat and smooth surface, which lacks prominent anatomical landmarks, presents challenges for traditional manual registration methods in mixed reality. To address this, we developed a simple, non-invasive localization device and designed an artificial intelligence algorithm to automatically and rapidly align the device with its 3D model. This enables the precise alignment of perforator vessels with their 3D models, providing new insights and research foundations for clinical practice.

A total of 50 patients who underwent anterolateral thigh flap and free fibular flap surgeries at Chongqing University Cancer Hospital between June 2022 and January 2024 were selected. The patients were randomly divided into two groups: the system group and the CDU group, with 25 patients in each. The cohort included 29 males and 21 females, aged between 31 and 75 years.

The system group used MR with CTA data to localize lower limb perforator vessels. In this group, 22 patients received anterolateral thigh flap reconstruction for head, neck, and maxillofacial defects, and three patients received free fibular flap reconstruction for mandibular defects. The control group used CDU for perforator vessel localization. In this group, 23 patients received anterolateral thigh flap reconstruction for head, neck, and maxillofacial defects, and two patients received free fibular flap reconstruction for mandibular defects. All perforator flap surgeries were performed by the same chief surgeon. This study was approved by the Ethics Committee of Chongqing University Cancer Hospital (Ethics Approval No.: CZLS2021177-A). All patients provided informed consent.

Inclusion criteria: (1) Patients requiring anterolateral thigh flap or free fibular flap reconstruction of soft tissue or bone defects following extended resection of malignant tumors in the head, neck, or maxillofacial region. (2) Patients able to tolerate general anesthesia. (3) Patients with no history of trauma or surgery on either lower limb.

Exclusion criteria: (1) Patients eligible for forearm flap reconstruction following extended resection of malignant tumors in the head, neck, or maxillofacial region. (2) Patients unable to tolerate general anesthesia due to other underlying conditions. (3) Patients with a history of surgery or trauma to either lower limb.

A positioning device was installed in the planned flap donor area, followed by CTA scanning of the lower limb along with the positioning device. Three-dimensional reconstruction was then performed, and an artificial intelligence algorithm was developed in HoloLens 2 to overlay the positioning device with its 3D model for measuring the corresponding parameters.

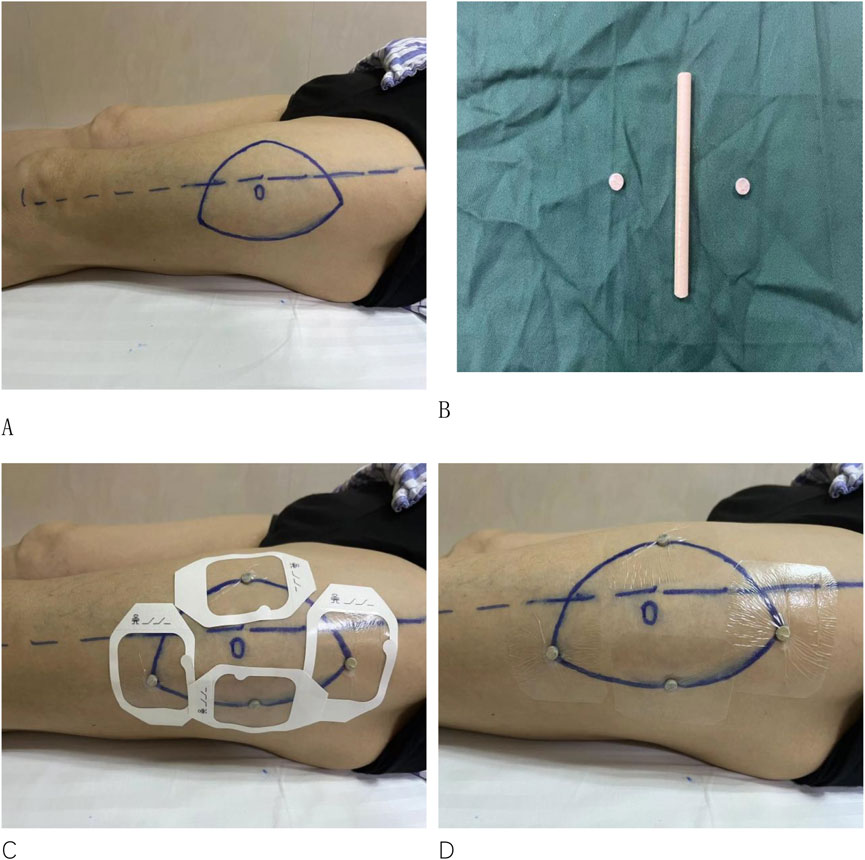

(1) Attaching the Localization Device: The patient lies in a supine position, and the area where the flap is to be harvested is marked. Circular polyether ether ketone (PEEK) markers, which are radiopaque and free of artifacts, each 6 mm in diameter and 3 mm thick, are applied to the marked high points of the anterolateral thigh flap or fibular skin paddle (Figure 1).

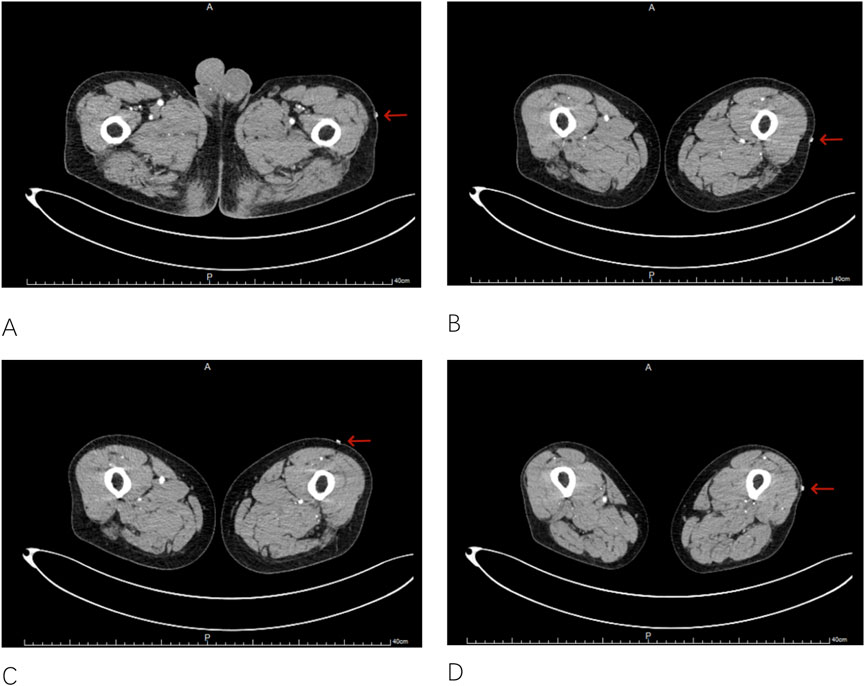

(2) Lower Limb CTA Scan: The patient remains in a supine position, identical to the surgical position, with feet first and arms raised above the head. A Siemens dual-source CT scanner (Siemens, Germany) is used. A contrast agent, Iopromide 370, is injected at a rate of 4.0 mL/s, with a total volume of approximately 90 mL. The arterial phase is triggered by monitoring the femoral artery with a threshold of 100 HU, and a 10-s delay is set for the scan. Upon completion, 2D images of the lower limb tissues and PEEK material are obtained (Figure 2).

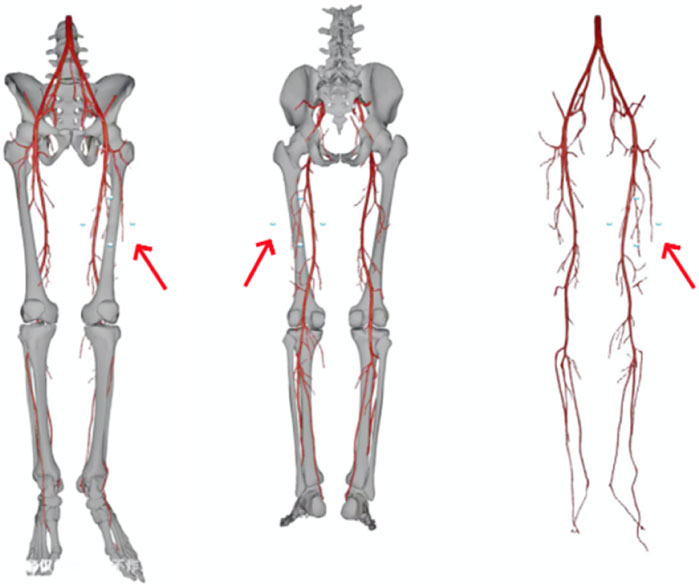

(3) Reconstructing 2D Data into a 3D Model: The 2D CTA data obtained is imported into Simplant software (Software workstation, Belgium). Using the workstation’s tissue recognition module, soft tissue, vessels, bones, and tumors are segmented and reconstructed into a mesh model. The workstation’s scene editing module is then used to optimize the mesh by refining triangles, smoothing protrusions, hollowing out vessels, and adjusting boundary contours. Different colors are applied to distinguish soft tissue, vessels, bones, and tumors within the model. The result is a 3D model of the lower limb and PEEK (Figure 3).

(4) Developing Algorithms for Automatic Registration: Algorithms are developed in HoloLens 2 (Microsoft, United States) to automatically register the PEEK markers with their 3D models.

Figure 1. (A) Marking the area on the patient’s lower limb where the skin flap is to be harvested. (B) Fabrication of the PEEK material. (C, D) Attaching the PEEK material to the highest points of the contour in the designated harvest area.

Figure 2. Obtaining 2D images of the lower limb tissue and the PEEK material ((A–D), with arrows in the images indicating the 2D images of the PEEK material).

Figure 3. 3D model of the lower limb and the PEEK material created (the arrows in the images indicating the PEEK 3D model).

The input 3D model is denoted as

First, the 3D model is partitioned into non-overlapping patches. Each patch is flattened into a sequence of length L. A linear layer then projects these patches into the K-dimensional embedding space. The calculation formula is

To enable the model to capture the position information of the patches in the 3D model, a learnable 1-dimensional positional embedding is added to the patch embeddings.

Position Embedding and Attention Mechanism Optimization: After the embedding layer, calculations are performed using the Transformer block containing the multi-head attention (MSA) and multi-layer perceptron (MLP) sub-layers. First, a normalization operation is carried out, with the formula

Calculation of Multi-Head Self-Attention (MSA):

Calculation of the MLP Part:

Final Output of the Transformer Layer:
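The embedding, normalization, attention, and MLP steps described here match the standard Vision Transformer formulation. As a reference sketch only, with symbols chosen for illustration (the patch count N, the projection matrix E, the positional embedding E_pos, the layer index ℓ, the head dimension d_h, and the bold per-head matrices Q_i, K_i, V_i are assumptions and may differ from the notation used in this study; the bold key matrix K_i is unrelated to the scalar embedding dimension K), the computation can be written as:

```latex
\begin{aligned}
\mathbf{z}_0 &= \left[\mathbf{x}_p^{1}\mathbf{E};\ \mathbf{x}_p^{2}\mathbf{E};\ \dots;\ \mathbf{x}_p^{N}\mathbf{E}\right] + \mathbf{E}_{pos},
  \qquad \mathbf{E}\in\mathbb{R}^{L\times K},\ \mathbf{E}_{pos}\in\mathbb{R}^{N\times K} \\
\mathrm{head}_i &= \mathrm{softmax}\!\left(\frac{\mathbf{Q}_i\mathbf{K}_i^{\top}}{\sqrt{d_h}}\right)\mathbf{V}_i \\
\mathbf{z}'_{\ell} &= \mathrm{MSA}\!\left(\mathrm{LN}(\mathbf{z}_{\ell-1})\right) + \mathbf{z}_{\ell-1} \\
\mathbf{z}_{\ell} &= \mathrm{MLP}\!\left(\mathrm{LN}(\mathbf{z}'_{\ell})\right) + \mathbf{z}'_{\ell}
\end{aligned}
```

Here LN denotes layer normalization, and each sub-layer output is added back to its input through a residual connection.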

PEEK Position Detection Optimization: The YOLOv8 algorithm is used to detect the key points of the four PEEK markers on the patient’s body surface. The detected key-point positions are denoted as

3D Model Scaling and Optimization: According to the information of the long and short axes of the PEEK, the formulas

A new self-supervised learning module was integrated into the Unity-based HoloLens 2 development environment to provide real-time feedback and dynamic adjustments for aligning key points between the PEEK material and the 3D model of the patient’s lower limb. The implementation features real-time dynamic calibration in augmented reality, allowing for more precise alignment of the 3D model with the patient’s surface anatomy. This study utilizes the FDIM-AnatomicReg system (version v2.8.2022) developed by Chongqing FDiM Digital Technology Co., Ltd. The development process complies with the ISO 13485 standard for medical device software development.
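As a rough illustration of how the long and short axes of a detected circular marker can inform model scaling, the sketch below converts an ellipse detected in the camera image into a millimetre-per-pixel scale and a tilt angle. It is not the FDIM-AnatomicReg implementation: the function name, the pixel inputs, and the orthographic-projection assumption (the long axis images the full 6 mm disc diameter, and the short-to-long ratio equals the cosine of the tilt) are all hypothetical choices made for this example.

```python
import math

PEEK_DIAMETER_MM = 6.0  # marker diameter stated in the Methods


def marker_scale_and_tilt(long_axis_px, short_axis_px, diameter_mm=PEEK_DIAMETER_MM):
    """Estimate scale (mm per pixel) and tilt (degrees) from a detected ellipse.

    Assumption: orthographic projection, so a tilted circular disc appears as an
    ellipse whose long axis equals the true diameter and whose short axis is the
    diameter multiplied by cos(tilt).
    """
    if long_axis_px <= 0 or not (0 < short_axis_px <= long_axis_px):
        raise ValueError("axes must satisfy 0 < short_axis_px <= long_axis_px")
    mm_per_px = diameter_mm / long_axis_px
    tilt_deg = math.degrees(math.acos(short_axis_px / long_axis_px))
    return mm_per_px, tilt_deg


# Hypothetical detection: a marker imaged as a 40 px by 20 px ellipse.
scale, tilt = marker_scale_and_tilt(40.0, 20.0)
print(f"scale = {scale:.3f} mm/px, tilt = {tilt:.1f} degrees")
```

A scale obtained this way could be used to resize the 3D model before alignment, while the tilt gives a first guess at marker orientation; a perspective camera model would need a more careful treatment.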

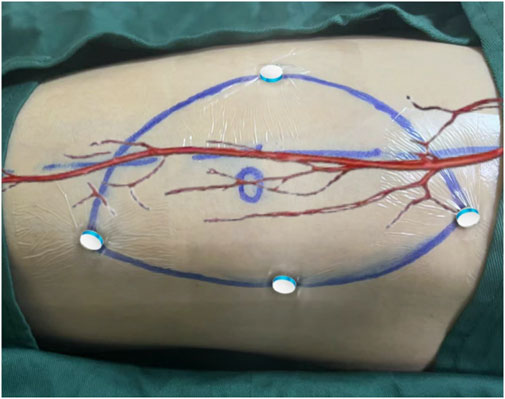

① Intraoperative Localization of Perforator Vessels: The patient is positioned supine, as during the CTA examination. The surgeon dons a HoloLens 2, activates the relevant module, and projects the 3D model of the patient’s lower limb onto the body surface. Using hand gestures and voice commands, assisted by the algorithm, the 3D model is automatically aligned with the localization device on the patient’s body surface. Once aligned, the perforator vessels match their 3D model, allowing for marking of the exit points on the body surface and confirming whether the designed flap includes perforator vessels (Figure 4).

② Intraoperative Anatomical Identification of Perforator Vessels: The skin and subcutaneous tissue are incised along the marked flap area. The perforator vessels are then identified visually from front to back, just beneath the fascia lata (Figure 5).

③ Recording and Calculations: The number of perforator vessel exit points localized by the system and the number actually identified intraoperatively are recorded, and the identification accuracy is calculated from these data. Before the flap was incised, the perforator exit points identified with HoloLens 2 were marked on the skin. After the flap incision, the perforator vessels were carefully dissected and preserved, and the actual perforator exit points were identified and marked. The distance between each marked exit point and the corresponding actual exit point was measured with a vernier caliper and taken as the localization error. Additionally, the number of flaps successfully harvested according to the design is recorded.

Figure 4. Aligning the PEEK material with its 3D model during surgery to achieve registration of the perforator vessels with their 3D model.

Patients in the CDU group were positioned optimally for the operation. Ultrasound measurements of the perforator vessels in the donor area of the lower limb were taken, recording the vessels' course, diameter, and hemodynamics. Marks were made on the body surface at the projected exit points of the perforators. The same technician performed all the ultrasound measurements and markings. During surgery, the skin and subcutaneous tissue were incised along the marked flap area to locate the perforator vessels. The number of exit points localized by CDU and identified intraoperatively were recorded. The accuracy of perforator vessel identification was calculated from the data. The error was determined by measuring the distance between the localized and actual perforator exit points. Additionally, the number of successfully harvested flaps based on the design was recorded.

Identification Accuracy Analysis: The identification accuracy of perforator vessels between the two groups was compared. Accuracy was expressed as a percentage and calculated using the formula:

Identification Accuracy (%) = (Number of perforator exit points identified preoperatively by the localization method / Number of perforator exit points confirmed during surgery) × 100%.
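The calculation can be illustrated with a minimal sketch that takes accuracy as the number of preoperatively localized exit points divided by the number confirmed during surgery, which is the ratio that reproduces the 96.2% reported for the system group. The function name is hypothetical; the counts are those given in the Results. Rounded to one decimal place, the CDU ratio 44/49 evaluates to 89.8%, which the article reports as 89.7%.

```python
def identification_accuracy(localized, confirmed):
    """Return the percentage of intraoperatively confirmed exit points
    that had been localized before surgery."""
    if confirmed <= 0:
        raise ValueError("confirmed must be a positive count")
    return 100.0 * localized / confirmed


# Counts reported in the Results section.
system_accuracy = identification_accuracy(51, 53)
cdu_accuracy = identification_accuracy(44, 49)
print(f"System: {system_accuracy:.1f}%  CDU: {cdu_accuracy:.1f}%")
```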

Localization Error Analysis: An independent two-sample t-test was used to compare the mean localization error of perforator vessel exit points between the two groups. Additionally, an F-test was employed to compare the variances of localization errors.
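As an illustration of the two statistics named here, the sketch below computes a two-sample t statistic and a variance-ratio F statistic from summary values alone. Several points are assumptions: the article does not state how many distance measurements entered each mean, so the numbers of localized exit points (51 and 44) are used as hypothetical sample sizes; Welch's unequal-variance form of the t-test is chosen for the sketch, whereas the study may have used the pooled form in SPSS; and the function names are invented for this example.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and approximate degrees of freedom from summary data."""
    v1 = sd1 ** 2 / n1  # squared standard error of group 1
    v2 = sd2 ** 2 / n2  # squared standard error of group 2
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    # Welch-Satterthwaite approximation of the degrees of freedom
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


def variance_ratio_f(sd1, sd2):
    """F statistic as the larger sample variance divided by the smaller one."""
    larger, smaller = max(sd1, sd2), min(sd1, sd2)
    return (larger / smaller) ** 2


# Means and SDs from the Results; sample sizes are an assumption (see lead-in).
N_SYSTEM, N_CDU = 51, 44
t_stat, df = welch_t(1.68, 0.22, N_SYSTEM, 3.08, 0.60, N_CDU)
f_stat = variance_ratio_f(0.22, 0.60)
print(f"t = {t_stat:.2f}, df = {df:.1f}, F = {f_stat:.2f}")
```

Turning these statistics into p-values requires the t and F distributions, which a statistics package such as SPSS provides.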

Success Rate Analysis: A chi-square test was performed to evaluate the differences in success rates of preoperatively designed flap harvesting between the two groups.
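A minimal sketch of this comparison, applied to the harvest outcomes reported in the Results (25 of 25 flaps harvested as designed in the system group, 23 of 25 in the CDU group), is given below. The function name is hypothetical, and the sketch uses the uncorrected Pearson chi-square for a 2 × 2 table; whether the study applied a continuity correction is not stated. The p-value uses the identity that, with one degree of freedom, the upper tail of the chi-square distribution equals erfc(sqrt(chi2 / 2)).

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Returns the statistic and its p-value for 1 degree of freedom.
    """
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        raise ValueError("a row or column total is zero")
    chi2 = n * (a * d - b * c) ** 2 / denom
    p_value = math.erfc(math.sqrt(chi2 / 2.0))  # upper tail, 1 degree of freedom
    return chi2, p_value


# Rows: system group, CDU group. Columns: harvested as designed, redesigned.
chi2, p_value = chi_square_2x2(25, 0, 23, 2)
print(f"chi-square = {chi2:.3f}, p = {p_value:.3f}")
```

Because the expected count of redesigned flaps is only one per group, an exact test such as Fisher's is often preferred for tables like this one.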

For all statistical analyses, a significance level of p < 0.05 was used. The results of the t-test and F-test were reported with their respective p-values and 95% confidence intervals (CIs). Data analysis was conducted using SPSS statistical software (version 13.0). Prior to the analyses, the normality of the data and homogeneity of variances were tested to ensure the appropriateness of the statistical methods. Continuous variables were presented as mean ± standard deviation (M ± SD).

In the system group, one case of anterolateral thigh flap exhibited ischemic necrosis at the edge on postoperative day 7, which healed with delay after debridement. In the CDU group, one case of anterolateral thigh flap developed ischemic necrosis on postoperative day 6, necessitating removal of the flap and subsequent reconstruction with a pectoralis major muscle flap.

In the system group, 51 perforator vessel exit points were identified in the surgical area across 25 cases, with 53 found during surgery, resulting in an identification accuracy of 96.2%. In the CDU group, 44 exit points were identified in the surgical area, with 49 found during surgery, resulting in an identification accuracy of 89.7% (Table 1).

Table 1. Estimation of the emerging points depicted by System and CDU method, verified by intraoperative finding.

The average distance between localized and actual perforator vessel exit points in the system group was 1.68 ± 0.22 mm. In the CDU group, the average distance was 3.08 ± 0.60 mm (Table 2).

Table 2. Emerging points discrepancies between preoperative marked points and intraoperative findings.

In the system group, all 25 patients successfully underwent perforator flap harvesting as per the preoperative flap design. In the CDU group, 2 out of 25 patients lacked viable perforator vessels within the designed area of the anterolateral thigh flap, necessitating exploration of surrounding areas to locate perforator vessels and subsequent re-harvesting of the flap.

MR technology has advanced significantly in the medical field. By integrating virtual environments with the real world, MR provides intuitive surgical navigation and localization tools, allowing surgeons to visualize complex anatomical structures in 3D within the actual surgical environment (Quesada-Olarte et al., 2022; Ghaednia et al., 2021). MR shows significant potential, particularly in surgeries requiring precise localization and navigation (Can Kolac et al., 2024; Eves et al., 2022).

Currently, MR applications in surgery largely rely on auxiliary tools like optical tracking systems (Stewart et al., 2021). Researchers use system algorithms to establish spatial coordinates and align virtual vascular images with the human body via magnetic tracking of surface reference markers. However, these systems are primarily used in spinal and orthopedic surgery, where fixed anatomical landmarks are present (Felix et al., 2022; Doughty et al., 2022; Birlo et al., 2022). Studies show that optical tracking systems can significantly improve surgical accuracy and efficiency in these procedures (Heinrich et al., 2020; Gsaxner et al., 2021). However, the use of optical tracking systems in soft tissue surgeries, especially in areas lacking fixed anatomical landmarks such as the lower limbs, is limited. This limitation exists because soft tissues are prone to deformation, making it challenging to establish a stable spatial coordinate system. Additionally, optical tracking systems require coordination of multiple devices, increasing the surgeon’s cognitive load and complicating the procedure (Meulstee et al., 2018). In perforator flap surgeries, particularly during anterolateral thigh flap and free fibula flap harvesting, vascular localization requires only local surface registration of the perforator vessels within the flap area, not extensive global registration. Therefore, this study developed an adhesive localization device based on PEEK material. This device is attached to the body preoperatively, followed by CTA imaging to create a 3D model of the vasculature and soft tissues relative to the PEEK. During perforator flap vascular localization, aligning the PEEK with its 3D model ensures accurate registration of the perforator vessels, enabling precise 3D matching of CTA imaging data to the surgical area. 
However, operations like zooming, rotating, and aligning the PEEK within HoloLens significantly increase preparation time and are prone to human errors, reducing surgical efficiency. Thus, a technique is needed to rapidly and precisely align the PEEK with its 3D model.

As artificial intelligence (AI) rapidly advances, the integration of mixed reality (MR) technology and AI algorithms has become a key research focus in the medical field. Algorithms can quickly process large volumes of complex data and provide intelligent surgical plans, while MR technology visualizes the data to assist surgeons in real-time decision-making during surgery (Asadi et al., 2024; von Atzigen et al., 2022; Ramalhinho et al., 2023).

Recent studies show that combining MR with AI excels in intraoperative image registration and personalized surgical planning. Bohné et al. (2023) developed a novel method combining machine learning and MR, using the YOLO algorithm for medical applications in HoloLens 2. von Atzigen et al. (2020) integrated the YOLO algorithm with HoloLens 2 to detect pedicle screw heads during spinal fusion surgery, achieving an average localization error of 1.83 ± 1.10 mm and reducing registration time to 20 s. These studies confirmed the feasibility of combining algorithms with MR by integrating machine learning and deep learning models into HoloLens 2, using the YOLO algorithm to improve accuracy and enable 3D model visualization.

In this study, algorithms were implemented within HoloLens 2 to integrate MR and AI technologies for lower limb perforator vessel localization surgery. Automated registration of the PEEK material with its 3D model improved the accuracy and ease of perforator vessel localization, making it more practical. The average registration time was reduced to under 5 min, improving efficiency compared to manual methods and reducing surgical time and complications.

This study prospectively analyzed 50 patients undergoing anterolateral thigh and free fibula flap surgeries to evaluate the effectiveness of integrating algorithms with MR in lower limb perforator flap procedures. The system group achieved a perforator vessel emergence point recognition accuracy of 96.2%, higher than the 89.7% in the CDU group. This indicates that the combined MR and AI system can more accurately identify and localize perforator vessels, reducing preoperative planning uncertainty. Additionally, the average error between the localized and actual emergence points of perforator vessels was smaller in the system group than in the CDU group (1.68 ± 0.22 mm versus 3.08 ± 0.60 mm) and was comparable to the findings of Sun et al. (2020) (1.30 ± 0.39 mm) and Tu et al. (2021) (1.61 ± 0.44 mm). These findings further support the system’s superiority in localization accuracy, demonstrating its ability to assist surgeons in more precisely designing and harvesting perforator flaps, thus reducing surgery time and complications. Moreover, all patients in the system group successfully underwent perforator flap harvesting according to the preoperative design, whereas 2 cases in the CDU group required flap redesign due to unsuccessful perforator vessel identification. These results suggest that the system offers higher reliability in preoperative planning, reducing uncertainty and potential risks in perforator flap design and harvesting.

The developed MR and AI integrated system demonstrated significant potential in anterolateral thigh and free fibula flap surgeries, effectively improving perforator vessel recognition and localization accuracy. However, this study has several limitations. First, the sample size is small, and larger clinical trials are needed to verify the system’s broader applicability. Second, although the localization algorithm used is relatively simple, the need to remove the PEEK markers to maintain sterility prevents real-time intraoperative localization and tracking. Third, the high cost and technical complexity of hardware such as HoloLens 2 may limit its widespread clinical use. Future research should focus on reducing costs and simplifying the technology to promote the broader clinical use of MR and AI integrated technologies. Addressing these challenges could enhance the role of MR and AI integration in precision medicine, providing more efficient and safer healthcare services for patients.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

This clinical trial has been conducted in accordance with the principles of the Declaration of Helsinki and has received ethical approval from the Ethics Committee of Chongqing University Cancer Hospital (No. CZLS2021177-A). All participants provided informed consent before participating in the study. The study design, procedures, risks, and benefits have been thoroughly explained to the participants. Participants have the right to withdraw from the study at any time without any consequences. Confidentiality of all participant information is strictly maintained throughout the study.

YL: Formal Analysis, Funding acquisition, Investigation, Methodology, Writing–original draft. JW: Supervision, Validation, Writing–review and editing. LZ: Data curation, Validation, Visualization, Writing–original draft. XT: Data curation, Project administration, Software, Writing–original draft. SW: Investigation, Methodology, Project administration, Software, Supervision, Validation, Writing–review and editing. PJ: Investigation, Methodology, Supervision, Validation, Software, Writing–review and editing.

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. Scientific and Technological Research Program of Chongqing Municipal Education Commission (KJQN202300124, KJQN202400105); Chongqing Medical Scientific Research Project (2025MSXM059); Chongqing Municipal Scientific Research Institutions Performance Incentive and Guidance Project (Grant number CSTB2024JXJL-YFX0076); Doctoral Research Initiation Fund of the Affiliated Hospital of Southwest Medical University and Southwest Medical University 2023 Undergraduate Innovation and Entrepreneurship Training Program (#S202310632231) to S.W.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Asadi, Z., Asadi, M., Kazemipour, N., Léger, É., and Kersten-Oertel, M. (2024). A decade of progress: bringing mixed reality image-guided surgery systems in the operating room. Comput. Assist. Surg. (Abingdon) 29 (1), 2355897. doi:10.1080/24699322.2024.2355897

Bajus, A., Streit, L., Kubek, T., Novák, A., Vaníček, J., Šedivý, O., et al. (2023). Color Doppler ultrasound versus CT angiography for DIEP flap planning: a randomized controlled trial. J. Plastic, Reconstr. and Aesthetic Surg. 86, 48–57. doi:10.1016/j.bjps.2023.07.042

Birlo, M., Edwards, P. J. E., Clarkson, M., and Stoyanov, D. (2022). Utility of optical see-through head mounted displays in augmented reality-assisted surgery: a systematic review. Med. Image Anal. 77, 102361. doi:10.1016/j.media.2022.102361

Bohné, T., Brokop, L. T., Engel, J. N., and Pumplun, L. (2023). “Subjective decisions in developing augmented intelligence,” in Judgment in predictive analytics. Editor M. Seifert (Cham: Springer International Publishing), 27–52.

Can Kolac, U., Paksoy, A., and Akgün, D. (2024). Three-dimensional planning, navigation, patient-specific instrumentation and mixed reality in shoulder arthroplasty: a digital orthopedic renaissance. EFORT Open Rev. 9 (6), 517–527. doi:10.1530/eor-23-0200

Chatterjee, A. R., Malhotra, A., Curl, P., Andre, J. B., Perez-Carrillo, G. J. G., and Smith, E. B. (2024). Traumatic cervical cerebrovascular injury and the role of CTA: AJR expert panel narrative review. AJR Am. J. Roentgenol. 223 (1), e2329783. doi:10.2214/ajr.23.29783

Christopher, R., Geddes, S. F. M., and Neligan, P. C. (2003). Perforator flaps: evolution, classification, and applications. Ann. Plast. Surg. 50 (1), 90–99. doi:10.1097/00000637-200301000-00016

Doughty, M., Ghugre, N. R., and Wright, G. A. (2022). Augmenting performance: a systematic review of optical see-through head-mounted displays in surgery. J. Imaging 8 (7), 203. doi:10.3390/jimaging8070203

Eves, J., Sudarsanam, A., Shalhoub, J., and Amiras, D. (2022). Augmented reality in vascular and endovascular surgery: scoping review. JMIR Serious Games 10 (3), e34501. doi:10.2196/34501

Felix, B., Kalatar, S. B., Moatz, B., Hofstetter, C., Karsy, M., Parr, R., et al. (2022). Augmented reality spine surgery navigation. Spine 47 (12), 865–872. doi:10.1097/brs.0000000000004338

Fliss, E., Yanko, R., Bracha, G., Teman, R., Amir, A., Horowitz, G., et al. (2020). The evolution of the free fibula flap for head and neck reconstruction: 21 Years of experience with 128 flaps. J. Reconstr. Microsurg. 37 (04), 372–379. doi:10.1055/s-0040-1717101

Ghaednia, H., Fourman, M. S., Lans, A., Detels, K., Dijkstra, H., Lloyd, S., et al. (2021). Augmented and virtual reality in spine surgery, current applications and future potentials. Spine J. 21 (10), 1617–1625. doi:10.1016/j.spinee.2021.03.018

Gsaxner, C., Pepe, A., Li, J., Ibrahimpasic, U., Wallner, J., Schmalstieg, D., et al. (2021). Augmented reality for head and neck carcinoma imaging: description and feasibility of an instant calibration, markerless approach. Comput. Methods Programs Biomed. 200, 105854. doi:10.1016/j.cmpb.2020.105854

Han, J., Guo, Z., Wang, Z., Zhou, Z., Liu, Y., and Liu, J. (2022). Comparison of the complications of mandibular reconstruction using fibula versus iliac crest flaps: an updated systematic review and meta-analysis. Int. J. Oral Maxillofac. Surg. 51 (9), 1149–1156. doi:10.1016/j.ijom.2022.01.004

Heinrich, F., Huettl, F., Schmidt, G., Paschold, M., Kneist, W., Huber, T., et al. (2020). Holopointer: a virtual augmented reality pointer for laparoscopic surgery training. Int. J. Comput. Assisted Radiology Surg. 16 (1), 161–168. doi:10.1007/s11548-020-02272-2

Kushida-Contreras, B. H., Manrique, O. J., and Gaxiola-García, M. A. (2021). Head and neck reconstruction of the vessel-depleted neck: a systematic review of the literature. Ann. Surg. Oncol. 28 (5), 2882–2895. doi:10.1245/s10434-021-09590-y

Magalhães, R., Oliveira, A., Terroso, D., Vilaça, A., Veloso, R., Marques, A., et al. (2024). Mixed reality in the operating room: a systematic review. J. Med. Syst. 48 (1), 76. doi:10.1007/s10916-024-02095-7

Meulstee, J. W., Nijsink, J., Schreurs, R., Verhamme, L. M., Xi, T., Delye, H. H. K., et al. (2018). Toward holographic-guided surgery. Surg. Innov. 26 (1), 86–94. doi:10.1177/1553350618799552

Mishra, R., Narayanan, M. D. K., Umana, G. E., Montemurro, N., Chaurasia, B., and Deora, H. (2022). Virtual reality in neurosurgery: beyond neurosurgical planning. Int. J. Environ. Res. Public Health 19 (3), 1719. doi:10.3390/ijerph19031719

Moore, R., Mullner, D., Nichols, G., Scomacao, I., and Herrera, F. (2021). Color Doppler ultrasound versus computed tomography angiography for preoperative anterolateral thigh flap perforator imaging: a systematic review and meta-analysis. J. Reconstr. Microsurg. 38 (07), 563–570. doi:10.1055/s-0041-1740958

Quesada-Olarte, J., Carrion, R. E., Fernandez-Crespo, R., Henry, G. D., Simhan, J., Shridharani, A., et al. (2022). Extended reality-assisted surgery as a surgical training tool: pilot study presenting first HoloLens-assisted complex penile revision surgery. J. Sex. Med. 19 (10), 1580–1586. doi:10.1016/j.jsxm.2022.07.010

Ramalhinho, J., Yoo, S., Dowrick, T., Koo, B., Somasundaram, M., Gurusamy, K., et al. (2023). The value of augmented reality in surgery — a usability study on laparoscopic liver surgery. Med. Image Anal. 90, 102943. doi:10.1016/j.media.2023.102943

Rao, S. S., Parikh, P. M., Goldstein, J. A., and Nahabedian, M. Y. (2010). Unilateral failures in bilateral microvascular breast reconstruction. Plastic Reconstr. Surg. 126 (1), 17–25. doi:10.1097/PRS.0b013e3181da8812

Shen, D., Huang, X., Huang, Y., Zhou, D., Ye, S., and Nikolovski, S. (2022). Computed tomography angiography and B-mode ultrasonography under artificial intelligence plaque segmentation algorithm in the perforator localization for preparation of free anterolateral femoral flap. Contrast Media and Mol. Imaging 2022 (1), 4764177. doi:10.1155/2022/4764177

Stewart, C. L., Fong, A., Payyavula, G., DiMaio, S., Lafaro, K., Tallmon, K., et al. (2021). Study on augmented reality for robotic surgery bedside assistants. J. Robotic Surg. 16 (5), 1019–1026. doi:10.1007/s11701-021-01335-z

Su, S., He, J., Wang, R., Chen, Z., and Zhou, F. (2024). The effectiveness of virtual reality, augmented reality, and mixed reality rehabilitation in total knee arthroplasty: a systematic review and meta-analysis. J. Arthroplasty 39 (3), 582–590.e4. doi:10.1016/j.arth.2023.08.051

Sun, Q., Mai, Y., Yang, R., Ji, T., Jiang, X., and Chen, X. (2020). Fast and accurate online calibration of optical see-through head-mounted display for AR-based surgical navigation using Microsoft HoloLens. Int. J. Comput. Assisted Radiology Surg. 15 (11), 1907–1919. doi:10.1007/s11548-020-02246-4

Tu, P., Gao, Y., Lungu, A. J., Li, D., Wang, H., and Chen, X. (2021). Augmented reality based navigation for distal interlocking of intramedullary nails utilizing Microsoft HoloLens 2. Comput. Biol. Med. 133, 104402. doi:10.1016/j.compbiomed.2021.104402

Ursin, F., Timmermann, C., Benzinger, L., Salloch, S., and Tietze, F. A. (2024). Intraoperative application of mixed and augmented reality for digital surgery: a systematic review of ethical issues. Front. Surg. 11, 1287218. doi:10.3389/fsurg.2024.1287218

von Atzigen, M., Liebmann, F., Hoch, A., Bauer, D. E., Snedeker, J. G., Farshad, M., et al. (2020). Holoyolo: a proof-of-concept study for marker-less surgical navigation of spinal rod implants with augmented reality and on-device machine learning. Int. J. Med. Robotics Comput. Assisted Surg. 17 (1), 1–10. doi:10.1002/rcs.2184

von Atzigen, M., Liebmann, F., Hoch, A., Miguel Spirig, J., Farshad, M., Snedeker, J., et al. (2022). Marker-free surgical navigation of rod bending using a stereo neural network and augmented reality in spinal fusion. Med. Image Anal. 77, 102365. doi:10.1016/j.media.2022.102365

Zhan, Y., Zhu, H., Geng, P., Zou, M., Qi, J., and Zhu, Q. (2020). Revisiting the blood supply of the rectus femoris. Ann. Plastic Surg. 85 (4), 419–423. doi:10.1097/sap.0000000000002141

Keywords: mixed reality, artificial intelligence algorithm, anterolateral thigh flap, free fibular flap, perforating vessel localization

Citation: Liu Y, Wu J, Zhou L, Tang X, Wu S and Ji P (2025) Research on the combination of algorithms and mixed reality for the localization of perforator vessels in anterolateral thigh and free fibula flaps. Front. Virtual Real. 6:1420404. doi: 10.3389/frvir.2025.1420404

Received: 20 April 2024; Accepted: 25 February 2025;

Published: 17 March 2025.

Edited by:

Heather Benz, Johnson and Johnson Medtech (US), United States

Reviewed by:

Laura Cercenelli, University of Bologna, Italy

Copyright © 2025 Liu, Wu, Zhou, Tang, Wu and Ji. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Shuangjiang Wu, d3VzaHVhbmdqaWFuZzIxQHN3bXUuZWR1LmNu; Ping Ji, amlwaW5nQGhvc3BpdGFsLmNxbXUuZWR1LmNu

†These authors have contributed equally to this work

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
