- Human-Computer Interaction Institute, Carnegie Mellon University, Pittsburgh, PA, United States

Augmented Reality (AR) systems provide users with timely access to everyday information. Designing how AR messages are presented to the user, however, is challenging. If a visual message is presented suddenly in users’ field of view, it will be noticed easily, but might be disruptive to users. Conversely, if messages are made visible by slowly fading their opacity, for example, they might require more effort for users to notice and react, as they need to wait for the content to appear. This is particularly true for head-anchored virtual content, and when users are engaged in other tasks or walking in a physical environment. To address this challenge, we introduce TurnAware, a motion-aware technique that delivers AR visual information unobtrusively during walking when users rotate their head. When users make a turn, TurnAware moves the visual content into their field of view from the side at a speed proportional to their rotational velocity. We compare our method to a Fade-in and a Pop-up baseline in a user study. Our results show that our method enables users to react to virtual content in a timely manner, while minimizing disruption to their walking patterns. Our technique improves current AR information delivery techniques by striking a balance between noticeability and disruptiveness.

1 Introduction

Augmented Reality (AR) head-worn displays offer users convenient and timely access to information (Lages and Bowman, 2019a; b). Users can engage with tasks in their immediate environment, such as browsing through a store or cooking, while seamlessly receiving secondary information such as messages or instructions presented in AR. By presenting such secondary information directly in users’ field of view, AR provides access in an always-available manner. This also reduces users’ burden to handle additional hardware, e.g., getting the phone out of their pocket.

However, similar to a phone’s buzz that disrupts users, sudden notifications in AR can divert focus from their on-going task. This can be perceived as disruptive and decrease users’ primary task performance. In on-the-go scenarios such as walking, abrupt interruptions can cause users to slow down, stop unexpectedly, become distracted, miss obstacles, and even fall. This is because walking requires users to attend to their surroundings and destination while coordinating their body movements. Cognitive motor interference studies suggest that performing dual-task activities during walking can induce gait changes such as speed reduction (Al-Yahya et al., 2011). This is especially relevant to mobile AR scenarios, where walking can be further affected by the sudden attention shifts caused by AR notifications. Ideally, AR content such as notifications should be accessible yet minimally disruptive to the user’s walking behavior.

Introducing secondary information directly into the central visual field can disrupt the user’s task and be intrusive. Several ambient AR displays aim to reduce such disruption, for example by placing secondary AR information in the user’s peripheral visual field (Lu et al., 2020; Cadiz et al., 2001). However, peripheral placement requires users to actively retrieve the information via a glance or head movement in a specific direction. This means that messages and other information might go unnoticed. Maintaining the balance between intrusiveness and noticeability is crucial when designing ambient and secondary displays (McCrickard et al., 2003). Animation techniques like gradual fading reduce such interruptions (Janaka et al., 2023), but can delay access to information. Users need to wait for the content to transition from transparent to fully visible, and thus readable, which can be equally distracting in a walking scenario.

To address this challenge for AR information in on-the-go scenarios, we introduce TurnAware, a motion-aware technique that delivers AR visuals unobtrusively during walking. Our technique leverages the fact that users typically have to turn during walking, for example when walking around a corner or when moving their head to look at a shop window. When users take a turn, TurnAware moves the visual information into their field of view from the side at a speed proportional to their rotational velocity. By aligning AR notifications with natural head movements, and thus with the optical flow in users’ visual field, our technique aims to minimize disruptions while delivering messages so that they remain noticeable.

We evaluate our technique in a user study

In summary, we make the following contributions.

• A motion-aware technique for delivering AR information displays that leverages users’ head rotations to introduce virtual content unobtrusively into their field of view.

• A comparative study

2 Related work

2.1 AR content and user task

Head-mounted displays augment what users can see by adding visual content to their field of view, enabling them to access additional information while engaging in tasks such as productivity or casual interactions (Cho et al., 2024). Given that users have a limited amount of attentional resources, providing them with the right information at the right time is a crucial area of research. Within our work, we aim to provide AR content that is beneficial but has minimal negative impact on users’ current task.

A number of techniques aim to minimize the impact of AR information on users’ current activities by adapting how to display AR content to users’ cognitive states. Prior studies minimize workloads required to access information, or reduce awareness of non-essential data. Mise-Unseen, for example, leverages moments when users turn their heads away, hiding changes in the visual scene during these transitions (Marwecki et al., 2019). Other works modify the information itself by varying the placement, level of detail (Lindlbauer et al., 2019) or the amount of information (Billinghurst and Starner, 1999; Rhodes, 1998) of AR content. Building on these adaptive approaches to minimize interruptions during tasks that users perform, our work extends to walking scenarios. Users have to also constantly observe the surroundings when viewing AR contents. We aim to deliver AR visual information that is adaptive to user’s walking activity.

2.2 Using AR while walking

During walking, we constantly redirect our visual focus to navigate through complex surroundings (Logan et al., 2010). In scenarios where another task is present, walking behavior is affected negatively, including reduced speed, cadence, and stride time, and even an increased risk of falling (Kao et al., 2023; Al-Yahya et al., 2011; Lim et al., 2017). Speed is the most commonly reported outcome measure of such interference (Al-Yahya et al., 2011; Grießbach et al., 2024). When presenting information in the user’s field of view in mobile AR scenarios, it is important to examine and control how much attention the content draws, in order to minimize the impact of concurrent tasks on walking performance.

A moving object produces visual flow that tells our eyes about its motion (Barlow and Foldiak, 1989; Durgin et al., 2005). However, these signals can be distorted if we are moving (Durgin, 2009). Barlow’s theory suggests that the perceived object motion is influenced by both the object’s and our own motion (Barlow and Foldiak, 1989). When we are moving, an object’s motion appears less apparent if we move in the same direction as the object. At the same time, the motion of stationary or counter-directional objects stands out more prominently to the viewer. In AR displays, integrating visual information into natural head movement patterns, such as head rotation, has the potential to align content with the direction of visual attention, thereby reducing distraction during walking activities.

2.3 Secondary AR information delivery

Our goal is to subtly introduce non-urgent AR information so as not to disrupt users while they are walking. We draw inspiration from research on ambient and secondary displays in Virtual and Augmented Reality. Unlike urgent notifications demanding immediate action, ambient and secondary displays aim to keep users aware of information that is not their primary focus, without causing abrupt stops (Matthews, 2006).

In information placement and retrieval for ambient displays, peripheral interfaces present visual information outside the user’s central field of view (beyond 30°) to avoid obstructing their main focus (Weiser and Brown, 1996; Matthews, 2006; Cadiz et al., 2001). Along these lines, Lages and Bowman propose adapting virtual content by positioning it close to nearby walls during walking, without blocking central vision (Lages and Bowman, 2019a; Lages and Bowman, 2019b). Han et al. embed content directly into existing physical objects to minimize distraction (Han et al., 2023).

Ambient AR displays aim to optimize information access through various retrieval methods and placements. Glanceable interfaces, for example, enable users to access information from the periphery using head cursors or gaze summoning (Lu et al., 2020). Prior study results have indicated a trade-off: visuals that suddenly appear in the central field are intrusive, while peripheral placements often go unnoticed. In ambient and secondary displays, achieving a balance between intrusiveness and noticeability is crucial (McCrickard et al., 2003). Placement has also been investigated in mobile scenarios, for example by comparing information placed in the central visual field using display-fixed and body-fixed systems. Users perceived body-fixed systems as less urgent, with both systems having similar perceived task loads (Lee and Woo, 2023). However, these works often overlook a critical initial step: how AR visuals capture user attention in the first place.

Visual information appears in the user’s field of view through various forms of motion, each capturing attention differently. Fade-in and Pop-up techniques are often compared, with Pop-up generally regarded as more distracting, while Fade-in gradually draws attention and is preferred (Janaka et al., 2023). However, these techniques often happen in static scenarios where there is no motion in the user’s field of view. As discussed above, walking presents a different context in terms of visual attention, which requires a distinct approach towards minimizing disruption. Moreover, behavioral measures for assessing task interference during walking remain underrepresented in prior research.

Inspired by prior studies, we aim to use a motion-aware technique to adapt AR information delivery to user movement in walking scenarios, with the goal to minimize disruptions to users’ walking behavior.

3 TurnAware

Our goal is to deliver AR messages while users are walking, and enable them to access AR information with minimal interruptions. We aim to minimize disruptions in walking behavior, and enable users to quickly react to secondary information such as messages. Other methods, such as pop-up messages in users’ central field of view, aim to refocus users’ attention immediately and are thus well suited for urgent messages. In contrast, keeping AR content outside of users’ field of view enables them to access the information anytime, but does not provide awareness of when new messages arrive. This makes such types of presentation well suited for non-urgent messages and ambient information (Han et al., 2023). Our approach aims to strike a balance between noticeability (Li et al., 2024) and disruptiveness, making it well suited for messages of medium urgency such as notifications from secondary apps, e.g., weather or music (Cho et al., 2024).

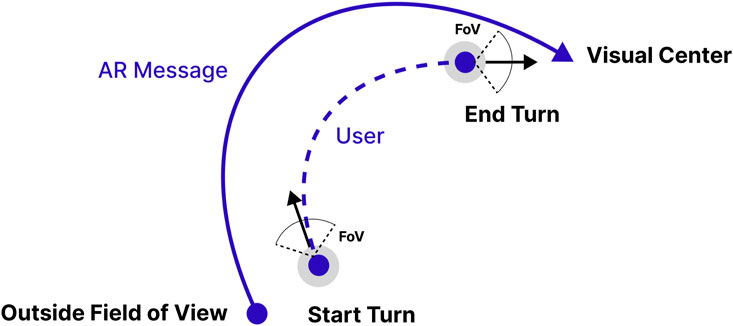

To achieve this goal, we propose TurnAware, a novel interaction technique that delivers messages in AR based on users’ motion. We leverage the moment users rotate during walking, e.g., when turning to change direction, as illustrated in Figure 1. During rotation, our approach moves the message from outside users’ field of view towards the center, with a velocity that is proportional to users’ rotational velocity. The movement of the visual content thus aligns with users’ movement. This enables us to deliver messages that are less salient and less obtrusive than pop-up messages, which appear in users’ field of view without any animation, and that appear in users’ field of view faster than fade-in messages while being equally unobtrusive.

Figure 1. (A) Users make a turn; (B) Users finish a turn; (C) A field of view from the headset showcasing example of an information display.

3.1 Implementation

Our method takes an AR message that should be delivered to users as input, as well as information about users’ position and direction. The visual information appears at the start of a head turn, and moves to its final location by the time the head rotation is complete, as shown in Figure 2. In our current implementation and evaluation, we do not rely on turn detection, but leverage fixed points in the evaluation environment where users have to turn (e.g., when walking towards a wall). We chose this implementation because we were interested in the “ideal” conditions, i.e., when we have ground truth on whether users will turn. In future work, we plan to replace this implementation with a simple rotational velocity threshold: once users turn at a certain speed, we deliver a message if one was queued by the system.
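The threshold-based trigger outlined as future work could be sketched as follows. This is a minimal illustration and not the authors’ implementation: the class name `TurnTrigger`, the default threshold of 30°/s, and the choice to release at most one message per turn are all assumptions made for this example, as no threshold value is reported.

```python
from collections import deque
from typing import Deque, Optional

# Hypothetical value chosen for illustration; no threshold is reported.
TURN_THRESHOLD_DEG_PER_S = 30.0


class TurnTrigger:
    """Releases one queued message at the start of each detected head turn."""

    def __init__(self, threshold_deg_per_s: float = TURN_THRESHOLD_DEG_PER_S) -> None:
        self.threshold = threshold_deg_per_s
        self.queue: Deque[str] = deque()
        self._was_turning = False

    def enqueue(self, message: str) -> None:
        """Queue a message until the next turn begins."""
        self.queue.append(message)

    def update(self, yaw_velocity_deg_per_s: float) -> Optional[str]:
        """Call once per frame; returns a message to deliver, or None."""
        is_turning = abs(yaw_velocity_deg_per_s) >= self.threshold
        turn_started = is_turning and not self._was_turning
        self._was_turning = is_turning
        # Deliver only on the rising edge, so one turn releases one message.
        if turn_started and self.queue:
            return self.queue.popleft()
        return None
```

Triggering on the rising edge keeps the message onset aligned with the start of the turn, which matches the onset behavior described for the fixed turning points.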

Figure 2. Bird’s eye diagram of user’s movement and the AR Message trajectory using TurnAware technique.

To move AR messages into view during head rotation, the visuals’ velocity is set proportional to the user’s head rotation velocity. Based on pre-tests and pilot studies, we set the velocity to be 50% higher than users’ head rotation velocity. This factor balances the requirements that the notification reaches its end position quickly while its movement is still perceived as in sync with users’ actual rotation. The starting position of the visual information is outside the mid-peripheral field (
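One possible reading of this velocity coupling is sketched below. It assumes that the content’s angular offset from the view center shrinks at 1.5 times the magnitude of the head’s yaw velocity, and that the content stops at a 10° offset. The function name `step_offset`, the head-relative interpretation of the velocity, and the end offset are assumptions for this illustration; the start offset is left to the caller.

```python
def step_offset(
    offset_deg: float,
    head_yaw_velocity_deg_per_s: float,
    dt_s: float,
    end_offset_deg: float = 10.0,  # assumed end position
    gain: float = 1.5,             # 50% faster than the head rotation
) -> float:
    """Advance the content's angular offset from the view center by one frame.

    The offset is treated as a magnitude, so the same update applies to
    left and right turns. The content does not move while the head is still.
    """
    travel_deg = gain * abs(head_yaw_velocity_deg_per_s) * dt_s
    return max(end_offset_deg, offset_deg - travel_deg)
```

Because the travel per frame scales with the measured yaw velocity, the content pauses when the head pauses and speeds up with faster turns, which is the in-sync behavior the gain factor is meant to preserve.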

4 Evaluation

We evaluate TurnAware to investigate its effect on walking behavior, task performance, and subjective preferences. We compare our proposed method against two baseline techniques: Fade-in and Pop-up. Additionally, we collect data on how participants move through the space without receiving any AR messages (i.e., the None condition). Participants performed two different primary tasks (object swapping, number reading) while walking through a large space. As secondary task, they were asked to react to the AR messages.

4.1 Study design

We use a within-subject design with two independent variables, Animation Technique with four levels (TurnAware, Fade-in, Pop-Up, None) and Task with two levels (Object swapping, Number reading). This design yielded a total of eight conditions, i.e., the combination of one animation technique with a primary task. Each participant completed all eight conditions. As dependent variables, we measured primary and secondary task performance (time, errors), walking behavior (speed changes, resumption lag), and subjective ratings, described below.

4.1.1 Animation technique

We compare TurnAware with techniques that deliver AR visuals that are also accessible within the user’s field of view. Our focus is on techniques that do not require active retrieval of information, but where the user is a passive receiver. Strategies that require users to actively perform glancing or head-up movements to access the information were not included (Lu et al., 2020). We focus on secondary information apps that display notifications on demand but are not urgent, such as social networking, fitness, and music listening apps (Cho et al., 2024). We want to ensure that users can receive timely AR information without requiring additional effort to retrieve it.

Our baseline approaches include Fade-in and Pop-up. Both present static visual information placed at a 10-degree offset to the left or right of users’ central field of view, as investigated by previous studies (Lee and Woo, 2023). The Fade-in technique gradually increases the opacity of the visual information over a 2-s duration. We chose this timing based on findings from Janaka et al. (2023), who identified 2 s as balancing obtrusiveness, speed of delivery, and saliency for this technique. For the Pop-up technique, the visuals appear directly in the user’s field of view without any transition. We chose this baseline as it is more immediate, but potentially more disruptive than Fade-in (Lu et al., 2020; Janaka et al., 2023).
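The two baseline onsets can be expressed as opacity functions of the time since message activation. The sketch below assumes a linear opacity ramp for Fade-in, since the easing curve is not specified; the function names are hypothetical.

```python
def fade_in_opacity(elapsed_s: float, duration_s: float = 2.0) -> float:
    """Opacity in [0, 1] for Fade-in, assuming a linear ramp over duration_s."""
    if duration_s <= 0.0:
        return 1.0
    return min(1.0, max(0.0, elapsed_s / duration_s))


def pop_up_opacity(elapsed_s: float) -> float:
    """Opacity for Pop-up: fully visible from the moment of activation."""
    return 1.0 if elapsed_s >= 0.0 else 0.0
```

Under this assumption a Fade-in message is half transparent one second after activation, whereas a Pop-up message is fully opaque immediately.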

Finally, we included a None baseline, during which no AR Messages were presented. This serves as baseline for users’ performing the primary task without interruptions. We use the data from this technique to account for individual differences across participants’ walking behavior in our analysis.

4.1.2 Primary tasks

Participants completed two separate primary tasks while walking, each with different requirements on mental effort. Our goal was to evaluate the impact of the presentation techniques when users experience different levels of mental effort. During the tasks, participants walked along a cycled path, shown in Figure 2.

4.1.2.1 Object swapping task

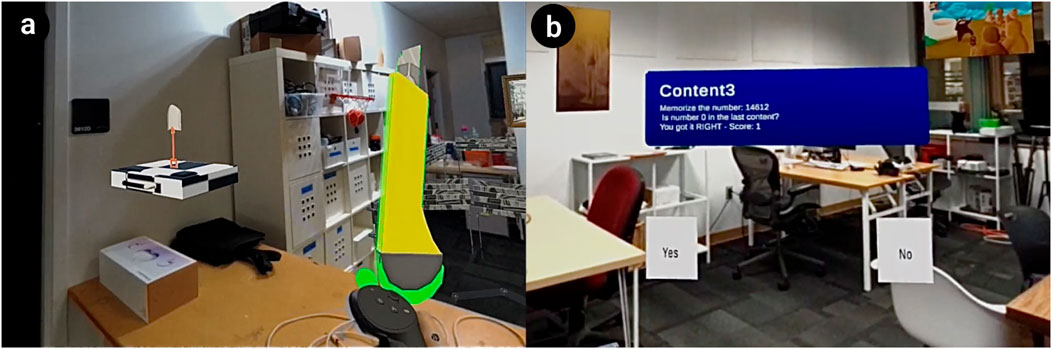

This task required participants to move virtual objects from one location along the walking path to another, as shown in Figure 5. Specifically, there were three virtual boards with virtual objects in the room. Participants had to press their controller’s trigger button to pick up an object from board 1 and place it on board 2, and so on. They did this for three rounds on the walking path. We consider this a task with low mental effort, since participants only had to “carry” the virtual object from one place to another.

4.1.2.2 Number reading task

Participants were required to read and memorize a 5-digit number on a virtual panel, shown in Figure 5. Along the path, there were three virtual panels. For each panel, participants had to first answer whether a specific digit was present on the previous panel; and then memorize the new number. This task was repeated for three rounds, for a total of nine number readings. We consider this a medium cognitive load task, especially compared to the object swapping task, since it involved reading.

4.1.3 Secondary task: reacting to AR messages

Our AR information displays are designed as non-urgent messages, such as weather updates or daily fitness information. When seeing the messages, participants are asked to respond in a timely manner. In our study, the messages appear one or two times per walking cycle, with a gap of about 30 s between them. We asked participants to prioritize their primary tasks and focus on performing them as well as possible. The messages belong to one of two categories: weather and fitness messages. For each message, participants were asked to press a button on the controller to indicate which category it belongs to. This ensures that participants actually read the messages, and do not just dismiss them immediately. The text is randomly selected from a set of ten predefined messages for each category. The layout design is shown in Figure 3.

Figure 3. AR visual message example - participants press X button on left controller to acknowledge Weather information, and A button on right controller for Fitness information.

4.2 Apparatus

The study was situated in

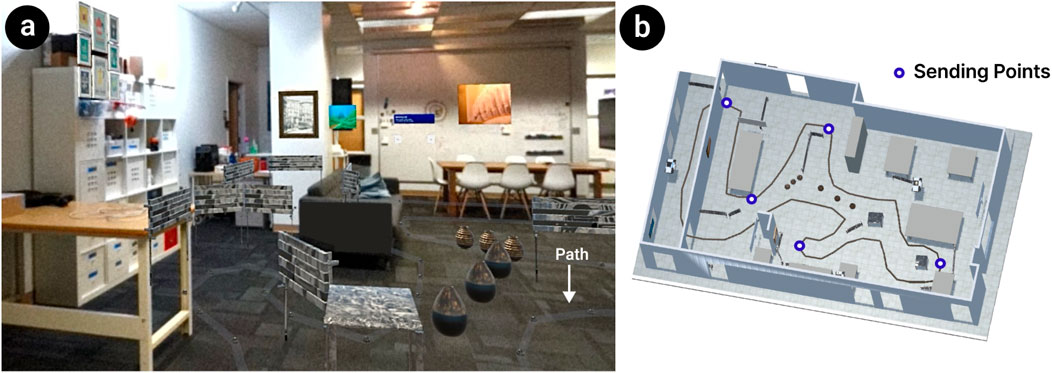

Figure 4. (A) Room environment set up; (B) An overview of walking path - The possible sending points align with user’s turning points.

Participants walked on a predefined path, displayed to them in AR as a dotted path, as shown in Figure 4B. Along the path, there were various sending points, i.e., possible locations at which an AR message might appear. We pre-selected those locations to increase the consistency across participants. Our experimental system then randomly selected where to display the specific AR messages during each condition. Participants used Oculus Touch controllers in all conditions to perform the primary task, such as answering Yes/No questions in the number reading task and grabbing virtual objects in the object swapping task. The apparatus for the two tasks is shown in Figure 5. Participants were monitored by the experimenter to ensure that they completed the tasks while adhering to the predefined walking path. We used the headset’s built-in tracking system to record participants’ movements and interactions for later analysis.

Figure 5. (A) Object swapping task example: participants put the current object (knife) onto chess board, and grab the next one (shovel); (B) Number reading task example: “Memorize the number 14612. Is number 0 in the Last Content?”.

4.3 Participants

We recruited 16 participants (age:

4.4 Procedure

The study lasted around 70 min. After signing an informed consent form and completing the demographic questionnaire, participants were introduced to the study task, and went through a guided practice round involving walking two cycles along the path without AR visual messages. Then, they proceeded with the full experiment. During the study, each participant went through all four techniques, once per task, for a total of 8 study conditions. Participants first completed all conditions for one primary task (four times, once per animation technique), and then for the other primary task. The order of animation techniques was counterbalanced using a Latin square, and the order of tasks was alternated. In study conditions that included AR information, participants received a total of 6 or 7 messages over the 3 cycles of walking. After completing each study condition, participants took off the headset and completed the post-condition questionnaire.
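The counterbalancing scheme can be illustrated with a short sketch. It assumes a balanced (Williams) Latin square, in which each technique also follows every other technique exactly once, and it assumes that a participant uses the same technique order in both tasks; neither detail is stated above, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

TECHNIQUES = ["TurnAware", "Fade-in", "Pop-up", "None"]
TASKS = ["Object swapping", "Number reading"]


def balanced_latin_square(conditions: Sequence[str]) -> List[List[str]]:
    """Build a balanced Latin square for an even number of conditions."""
    n = len(conditions)
    if n == 0 or n % 2 != 0:
        raise ValueError("this construction needs an even, non-zero number of conditions")
    # First row follows the pattern 0, 1, n-1, 2, n-2, ...
    first = [0]
    low, high = 1, n - 1
    while len(first) < n:
        first.append(low)
        low += 1
        if len(first) < n:
            first.append(high)
            high -= 1
    # Each further row shifts every entry of the first row by one (mod n).
    return [[conditions[(i + row) % n] for i in first] for row in range(n)]


def session_order(participant_index: int) -> List[Tuple[str, str]]:
    """Return the eight (task, technique) conditions for one participant."""
    square = balanced_latin_square(TECHNIQUES)
    techniques = square[participant_index % len(square)]
    # Alternate which primary task comes first between participants.
    tasks = TASKS if participant_index % 2 == 0 else TASKS[::-1]
    return [(task, technique) for task in tasks for technique in techniques]
```

With four techniques the square has four rows, so 16 participants would cover each row four times under this assignment.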

4.5 Measurement

We measured participants’ primary and secondary task performance, as well as their walking behavior and resumption lag in walking. We draw inspiration from McCrickard’s evaluation of human information processing for notification systems (McCrickard et al., 2003), where participants’ walking patterns indicate interruptions, and their reactions to AR visuals indicate noticeability.

4.5.1 Task performance

We measure primary task performance as the time needed to complete each of the primary tasks. The secondary task performance is indicated by the timely reception of and response to AR messages. We analyze participants’ reaction time to the AR messages by logging the time it takes to dismiss the Weather or Fitness notice, as a measurement of noticeability aside from interruption (McCrickard et al., 2003). Reaction time is measured as the time from activation of the AR message by the system to when users dismiss it. This includes the time the message is outside participants’ field of view (mid-peripheral field,

4.5.2 Walking behavior

Walking speed is the primary indicator of walking performance. We aim to minimize disruptions to the user’s pace of walking as a proxy for general distraction. Previous works have shown that walking speed can represent disruptions or flow changes caused by external stimuli, and is considered a general indicator of functional performance (Al-Yahya et al., 2011). In our study, we look at magnitude of disruption on walking speed.

We compute the walking speed differences between each technique’s conditions and the None conditions, in which no visual messages are delivered. We assume that participants have a natural walking pattern and pace during the None conditions. For each technique condition, we extract participants’ positions from the onset of an AR message to 2 s after its dismissal. For each of those points, we find the closest point on the None path, and calculate the difference in walking speed between each pair of points. Note that participants also walked three cycles on the None path. We find the closest point and calculate the walking speed for each of the cycles, and calculate both the average and largest differences in speed relative to the walking behavior during the None condition. By calculating the largest difference in speed, we capture the peak impact that an AR message might have on a user’s walking behavior, which helps us assess the largest effect of each technique. In our analysis, all conditions use the same path layout, allowing us to control for path as a variable. This ensures that observed speed differences are attributable to the AR technique itself, rather than to the path layout.
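The matching procedure can be sketched as follows. This is a simplified illustration under several assumptions that are not stated above: positions are 2D floor coordinates with strictly increasing timestamps, speed is estimated by finite differences between consecutive samples, and differences are taken as absolute values. All names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # (time_s, x_m, z_m)


def speeds(track: Sequence[Sample]) -> List[float]:
    """Per-sample speed in m/s; the first sample reuses the first interval."""
    if len(track) < 2:
        raise ValueError("need at least two samples to estimate speed")
    out = []
    for (t0, x0, z0), (t1, x1, z1) in zip(track, track[1:]):
        out.append(math.hypot(x1 - x0, z1 - z0) / (t1 - t0))
    return [out[0]] + out


def nearest_index(point: Sample, track: Sequence[Sample]) -> int:
    """Index of the track sample that is spatially closest to point."""
    _, px, pz = point
    return min(
        range(len(track)),
        key=lambda i: math.hypot(track[i][1] - px, track[i][2] - pz),
    )


def speed_differences(
    window: Sequence[Sample], none_cycles: Sequence[Sequence[Sample]]
) -> Tuple[float, float]:
    """Return (average, largest) absolute speed difference to the None path.

    window holds the samples from message onset to 2 s after dismissal;
    none_cycles holds one track per walking cycle of the None condition.
    """
    window_speeds = speeds(window)
    diffs = []
    for cycle in none_cycles:
        cycle_speeds = speeds(cycle)
        for sample, v in zip(window, window_speeds):
            j = nearest_index(sample, cycle)
            diffs.append(abs(v - cycle_speeds[j]))
    return sum(diffs) / len(diffs), max(diffs)
```

Matching by spatial proximity rather than by time compares speeds at the same place on the path, so differences in when participants reach a turn do not distort the comparison.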

4.5.3 Resumption lag in walking

Resumption lag is another behavioral measure of interruption, which focuses on users’ effort to recover from an interruption. It is defined as the time interval between the end of the secondary task and the resumption of the primary task (Salvucci et al., 2009; Altmann and Trafton, 2004).

In our study, we measure resumption lag not in terms of primary task performance but in terms of walking speed. It is calculated as the time from participants reacting to an AR message until they return to their pre-message walking speed. We analyze the walking speed after message dismissal, and compare it with the walking speed 0.5 s before message onset. We take the timestamp at which participants reach 95% of the pre-message speed as the point of resumption. The duration from the message dismissal to this timestamp is recorded as the resumption lag.
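A minimal sketch of this computation is given below. It assumes a uniformly sampled speed trace and takes the pre-message speed from the sample closest to 0.5 s before onset; the function name and the handling of traces that never recover (returning `None`) are assumptions for this illustration.

```python
from typing import Optional, Sequence


def resumption_lag(
    times_s: Sequence[float],
    speeds_m_per_s: Sequence[float],
    onset_s: float,
    dismissal_s: float,
    lookback_s: float = 0.5,
    fraction: float = 0.95,
) -> Optional[float]:
    """Seconds from dismissal until speed first reaches 95% of the pre-message speed."""
    # Pre-message reference: the sample closest to 0.5 s before message onset.
    reference_time = onset_s - lookback_s
    pre_index = min(
        range(len(times_s)), key=lambda i: abs(times_s[i] - reference_time)
    )
    threshold = fraction * speeds_m_per_s[pre_index]
    # First sample at or after dismissal that meets the threshold.
    for t, v in zip(times_s, speeds_m_per_s):
        if t >= dismissal_s and v >= threshold:
            return t - dismissal_s
    return None  # speed never recovered within the recorded trace
```

For example, with a pre-message speed of 1.2 m/s the threshold is 1.14 m/s, and the lag is the time from dismissal to the first sample at or above that value.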

4.5.4 Self-reported metrics

We used the 5 questions on NASA-TLX (Hart, 2006) to evaluate Perceived Workload on a 7-point Likert-type scale, ranging from 1 (low or failure) to 7 (high or success). Additionally, to evaluate participants’ subjective response to different delivery techniques, we adopt questions from VR studies on evaluating notification interruptions (Ghosh et al., 2018) on Noticeability (How easy or difficult is it to notice the information display?), Understandability (Once you notice the information display, how easy or difficult is it to understand what it stands for?), Perceived Urgency (What level of urgency does the information display convey?), Perceived Intrusiveness (How much of a hindrance was the information display to the overall AR experience?) and Distraction (How distracting did you perceive the information display to be?).

5 Result

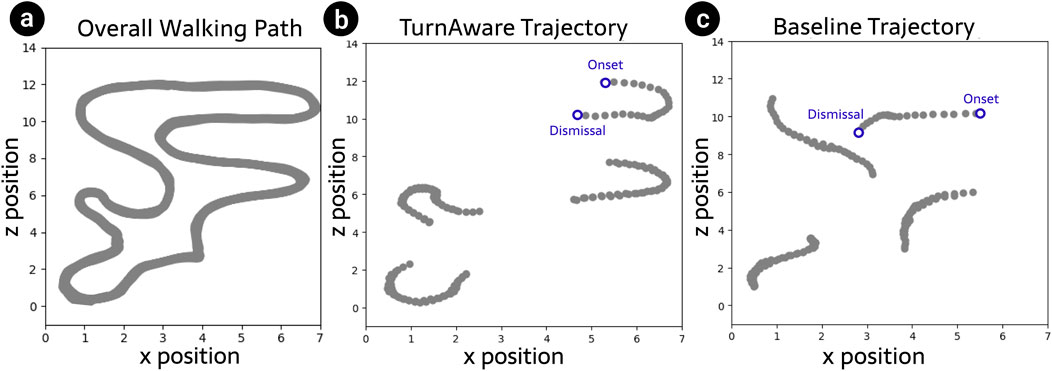

Our main result indicated that information delivery in TurnAware significantly reduces interruption on participants’ walking, while maintaining noticeability of the information. An example walking path, as well as where messages were shown, is illustrated in Figure 6.

Figure 6. Bird’s eye view visualization of AR message onset trajectories during study from one participant. The scatter plot displays position data recorded at consecutive timestamps in Unity world space. Each dot represents a measurement taken at a specific timestamp (Interval

5.1 Outliers

During the study, we observed that one participant did not follow the designated walking path, which caused the system to lose tracking. This participant received too few visual messages (1 or 2) to allow a meaningful comparison. As a result, we treated this participant as an outlier, excluded their data from subsequent analysis, and analyzed the remaining 15 participants.

5.2 Task performance

5.2.1 Primary task

The average time of completion for number reading task (i.e., three cycles of walking while completing the task) is 203.26 s

5.2.2 Secondary task

The error in the secondary task is defined as clicking the wrong dismissal button. An incorrect dismissal represents a mistake in processing the task. The error rate is

5.3 Analysis

We employed a series of Friedman tests to analyze the walking behavior data. We first conducted normality testing using the Shapiro-Wilk test (Razali and Wah, 2011) for the measured walking behavior data. Results indicated that the dependent variables did not follow the normality assumption (all

If the Friedman tests indicated a main effect, we analyzed the difference between each pair of techniques in each of the two tasks using pairwise Wilcoxon signed-rank tests (Wilcoxon et al., 1970) with Bonferroni adjustment as post-hoc tests. We used the same method for self-reported measures of perceived workload and subjective ratings. The statistical tests were performed using the statsmodels library version 1.14 in Python 3 (Seabold and Perktold, 2010).
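To make the omnibus step concrete, the sketch below computes the Friedman statistic for three related conditions using only the Python standard library. It is an illustration rather than the analysis code used in the study: it omits the tie correction that statistics packages typically apply, it relies on the chi-square approximation, and the closed-form p-value holds only for three conditions (two degrees of freedom). The helper names are hypothetical, and the post-hoc signed-rank test itself is not reimplemented; only the Bonferroni adjustment is shown.

```python
import math
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1..k, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for pos in range(i, j + 1):
            ranks[order[pos]] = mean_rank
        i = j + 1
    return ranks


def friedman_three(rows: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """Friedman statistic and p-value for rows of three related measurements.

    Each row holds one participant's value under the three techniques.
    """
    n, k = len(rows), 3
    if n == 0 or any(len(row) != k for row in rows):
        raise ValueError("expected a non-empty list of rows with three values each")
    rank_sums = [0.0] * k
    for row in rows:
        for j, rank in enumerate(average_ranks(row)):
            rank_sums[j] += rank
    q = 12.0 / (n * k * (k + 1)) * sum(s * s for s in rank_sums) - 3.0 * n * (k + 1)
    # Chi-square survival function with 2 degrees of freedom is exp(-q / 2).
    p = math.exp(-q / 2.0)
    return q, p


def bonferroni(p_values: Sequence[float]) -> List[float]:
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

In practice, library routines such as `scipy.stats.friedmanchisquare` and `scipy.stats.wilcoxon` would normally be preferred, since they handle ties and arbitrary numbers of conditions.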

5.4 Walking performance

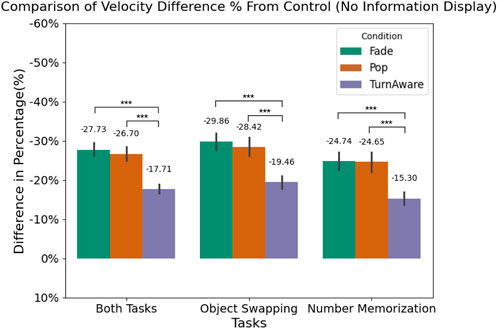

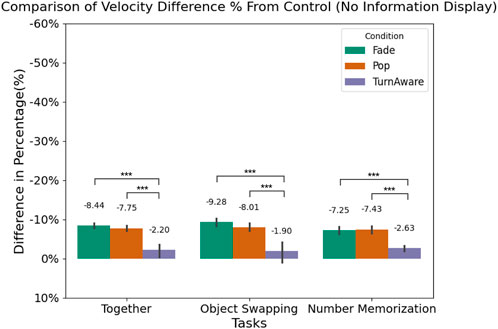

The results for the largest difference in walking speed are shown in Figure 7, while the average difference is shown in Figure 8. Below, we report on the data from the largest difference in walking speed, as we believe it shows the highest possible disruption that participants might experience. The statistical results for the largest and average walking speed differences, however, yielded similar main effects and pairwise differences. TurnAware achieves the least disruption in walking speed compared to the two baseline techniques, as shown in Figure 7. A Friedman test on the combined task data showed a significant main effect of technique on the walking speed difference (

In the number reading task, the TurnAware technique’s walking speed difference compared to the None condition is

5.5 Resumption lag

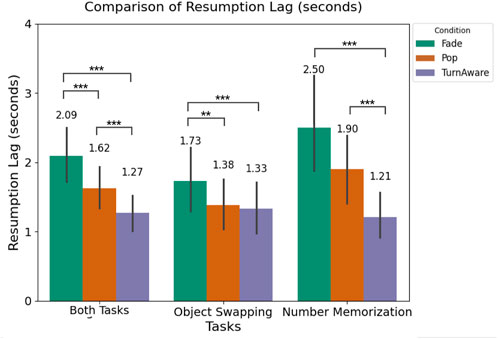

Using our technique, participants took the least time to return to their previous walking speed, as evidenced by the shortest resumption lag among all three techniques (Figure 9). The Friedman test result indicates a significant main effect (

On average, the resumption lag was 1.27 s

5.6 Reaction time

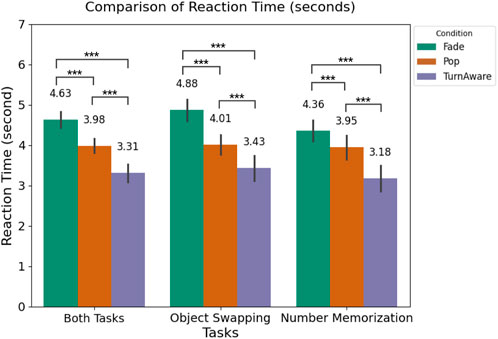

Beyond fewer interruptions to walking, participants were able to react more quickly to the AR messages. The Friedman test showed a main effect of the techniques on reaction time (

Fade-in has the longest reaction time (

Figure 10. Illustration of reaction time to AR messages across all conditions. Error bars indicate standard error.

5.7 Self-reported metrics

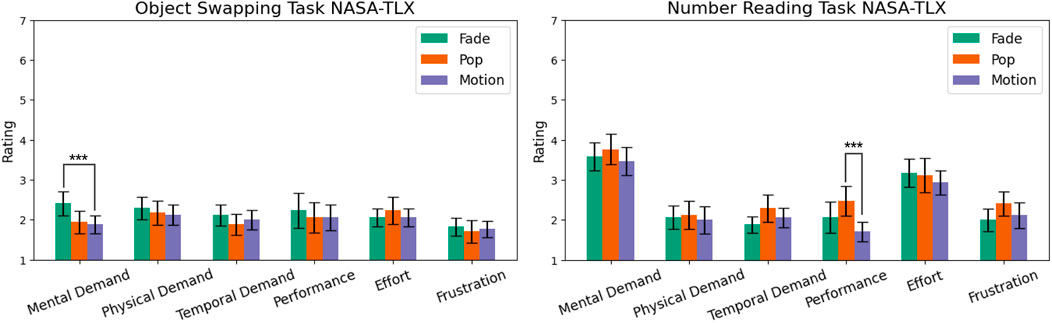

Participants’ perceived workload scores from the NASA-TLX subscale are shown in Figure 11. We employ Friedman tests for main effects, and pairwise Wilcoxon signed-rank tests as posthoc tests. For clarity, we only report the statistically significant effects

Figure 11. NASA TLX - Perceived workload on the object swapping task and number reading Task. Error bars indicate standard error. Lower score indicates less perceived workload or better performance.

In the object swapping task, TurnAware achieves a lower perceived mental work load (1: very low, 7: very high) (

None of the other questions on noticeability, distraction, etc. yielded any statistically significant differences. It is worth noting that more than half of the participants reported difficulty discerning the differences between the techniques presented. We discuss the discrepancy between these subjective responses and walking behavior below.

6 Discussion

We introduced a motion-aware technique to unobtrusively deliver AR messages while users are walking. We leverage users’ head rotations to move visual content into their field of view. Our main result indicates that TurnAware significantly reduces the disruption to walking speed, shortens resumption lag, and decreases reaction time compared to baseline methods. We believe that our result has implications for delivering visual information in AR, highlighting the benefits of motion-aware message delivery.

6.1 Minimizing disruption by aligning information delivery with motion

Unlike static information placement (Lee and Woo, 2023; Lu et al., 2020), for which no significant main effect was found among placement directions (Lee and Woo, 2023), our technique adapts dynamically to the user’s motion. Instead of assuming a fixed placement position, our approach leverages the natural changes in the user’s field of view induced by their movements. This allows visual content to be seamlessly moved into the user’s field of view as they turn. TurnAware dynamically adjusts the onset movement of visuals to match the user’s movement direction and rotational speed. This alignment means that users do not have to make additional, abrupt shifts in attention to perceive the information.

Our results indicate that TurnAware helps users balance walking (indicated by walking speed change and resumption lag) and secondary tasks (indicated by reaction time). Our approach makes the presentation of visual information more accessible and less intrusive. TurnAware is particularly suitable for delivering secondary information, such as weather updates or fitness accomplishments, because it lets users access information with less disruption. Instead of having to deliberately check messages or being startled by sudden notifications, users receive such messages directly in their field of view in a seamless way. We believe this enhances the safety and convenience of AR.

6.2 Changing field of view during walking as an information delivery opportunity

Users’ visual field naturally changes while they are turning, i.e., there is motion resulting in optical flow. Integrating AR content while users are turning reduces the startling effect of being presented with new visual information, making it less disruptive, particularly while walking. In their subjective feedback, some participants also noted that they were able to anticipate the movement of the visuals from the periphery to the center when presented with a motion-aware message. We believe that this also enabled them to react faster compared to the other techniques.

Our approach of incorporating new information based on motion is related to work that introduces information without users’ awareness, such as Mise-Unseen, which hides virtual scene changes by leveraging gaze data (Marwecki et al., 2019). In contrast, our work does not aim to decrease noticeability, but to balance disruptiveness and noticeability by aligning visual information with users’ movement. We believe that this balance can be particularly beneficial in multi-tasking AR scenarios, such as viewing daily news or online chats while walking, where the ability to maintain attention and mobility is crucial.

6.3 Behavioral outcome vs. subjective feedback

Our results indicate discrepancies between the behavioral data and subjective feedback. Specifically, while participants were less disrupted in their walking behavior with TurnAware, questionnaire data did not indicate differences in perceived load or preference.

We believe this can be attributed to various factors. First, participants are more familiar with the baseline techniques, Fade-in and Pop-up, as they are already employed in current applications such as system notifications and messaging app notifications. Therefore, while participants changed their walking behavior, they might not have felt disrupted. Second, we saw that participants exhibited habituation effects, which is also reflected in their comments. A few participants expressed that they were startled by the Pop-ups at first, but anticipated them in later trials. We believe that in a real-world setting, this habituation effect might not occur, since users would not be able to anticipate the messages and would thus likely be disrupted. Lastly, around half of the participants reported difficulty distinguishing between the techniques during post-experiment interviews. This indicates that even though the differences were subtle, TurnAware yielded less disruption to walking behavior.

Overall, we believe that AR messages delivered with our motion-aware technique balance noticeability and disruption in scenarios where users are on-the-go.

6.4 Limitation and future work

Our main result shows that TurnAware minimizes disruption to walking behavior compared to the baselines. We hope to explore different environments and scenarios in the future, as our experiment was conducted in a relatively controlled space. Our method relies on the assumption that users frequently turn their heads, for example when walking around corners in buildings, or to look at shop windows while browsing through a street. In real-world scenarios, however, the frequency of head turns varies, depending on factors such as walking path and traffic. This means that if a message is urgent, i.e., should be displayed immediately, and users do not turn their head, other delivery mechanisms might be preferable. We thus see TurnAware as complementary to other delivery mechanisms, and particularly well suited for non-urgent messages. Investigating more dynamic environments, such as crowded streets, would help explore how environmental distraction might impact the effectiveness of our technique, and reveal potential improvements to enhance notification visibility without increasing intrusiveness.

In our experiment, the turning points were pre-defined and users performed a targeted walking task. For use in free-walking scenarios, TurnAware could be enhanced by integrating a turning point detection system, for example by leveraging body tracking models (Zheng et al., 2023; Ponton et al., 2022; Armani et al., 2024). This would allow our approach to be used where the walking path is less predictable.
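As a much simpler stand-in for the body tracking models cited above, turn onsets could in principle be approximated from head yaw rate alone. The sketch below is a hypothetical threshold-based detector, not a method evaluated in this work. The function name, the threshold of 30 degrees per second, and the minimum run length are illustrative assumptions that would need tuning on real head-tracking data.

```python
def detect_turn_onsets(yaw_rates_deg_s, threshold_deg_s=30.0, min_samples=3):
    """Return the start indices of sustained head turns.

    A turn onset is reported once the yaw rate has stayed at or above the
    threshold, in the same direction, for min_samples consecutive samples.
    """
    onsets = []
    run_length = 0
    run_sign = 0
    for index, rate in enumerate(yaw_rates_deg_s):
        sign = (rate > 0) - (rate < 0)
        if abs(rate) >= threshold_deg_s and sign == run_sign:
            run_length += 1
        elif abs(rate) >= threshold_deg_s:
            # Fast rotation in a new direction starts a new candidate run.
            run_sign = sign
            run_length = 1
        else:
            run_sign = 0
            run_length = 0
        if run_length == min_samples:
            onsets.append(index - min_samples + 1)
    return onsets


# Hypothetical yaw-rate trace in deg/s: a right turn, then a left turn.
trace = [0, 5, 40, 45, 50, 55, 10, 0, -35, -40, -20, -45, -50, -60]
print(detect_turn_onsets(trace))
```

Requiring several consecutive samples above the threshold is a simple way to ignore brief glances, at the cost of a short detection delay.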

We used two different primary tasks (number reading and object swapping) in our experiment, neither of which was very cognitively demanding. Future research should explore the effects of TurnAware with tasks of higher cognitive load, including wayfinding or multi-user scenarios. We believe that interaction techniques such as TurnAware that balance disruptiveness and noticeability will be beneficial for users’ ability to handle AR messages.

Finally, our current technique focuses on the delivery of visual information without active retrieval. Future studies could combine our technique with active retrieval methods (Lu et al., 2020). Giving users the freedom to look up information in combination with targeted notification delivery could provide them with the benefits of both approaches. We hope to explore how to refine AR information delivery through automatic and manual delivery in the future.

7 Conclusion

In this work, we introduce TurnAware, a motion-aware technique that aligns the delivery of AR information with natural head movements during walking. Our technique aims to balance noticeability and disruption by providing users with a quick way to see messages. We conducted a comparative study in which users received messages while performing a targeted walking task. Our findings demonstrate that, compared to traditional information delivery methods such as Fade-in or Pop-up, TurnAware significantly reduces the disruption to walking speed and shortens reaction time and resumption lag. This enables users to balance walking and secondary tasks seamlessly. TurnAware improves current information delivery during walking, enhancing the task efficiency of head-worn display users in on-the-go AR scenarios.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by Carnegie Mellon University IRB Board. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

SL: Writing–original draft, Writing–review and editing. DL: Writing–original draft, Writing–review and editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

I acknowledge the use of GPT4 (OpenAI, https://chatgpt.com) at the drafting stage of paper writing, and proofreading of the final draft.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2024.1484280/full#supplementary-material

References

Altmann, E. M., and Trafton, J. G. (2004). Task interruption: resumption lag and the role of cues. Proc. Annu. Meet. Cognitive Sci. Soc. 26.

Al-Yahya, E., Dawes, H., Smith, L., Dennis, A., Howells, K., and Cockburn, J. (2011). Cognitive motor interference while walking: a systematic review and meta-analysis. Neurosci. and Biobehav. Rev. 35, 715–728. doi:10.1016/j.neubiorev.2010.08.008

Armani, R., Qian, C., Jiang, J., and Holz, C. (2024). Ultra inertial poser: scalable motion capture and tracking from sparse inertial sensors and ultra-wideband ranging. arXiv Prepr. arXiv:2404.19541, 1–11. doi:10.1145/3641519.3657465

Barlow, H. B., and Foldiak, P. (1989). “Adaptation and decorrelation in the cortex,” in The computing neuron (United States: Addison Wesley), 54–72.

Billinghurst, M., and Starner, T. (1999). Wearable devices: new ways to manage information. Computer 32, 57–64. doi:10.1109/2.738305

Cadiz, J., Venolia, G., Jancke, G., and Gupta, A. (2001). Sideshow: providing peripheral awareness of important information

Cho, H., Yan, Y., Todi, K., Parent, M., Smith, M., Jonker, T. R., et al. (2024). “Minexr: mining personalized extended reality interfaces,” in Proceedings of the CHI conference on human factors in computing systems, 1–17.

Durgin, F. H. (2009). When walking makes perception better. Curr. Dir. Psychol. Sci. 18, 43–47. doi:10.1111/j.1467-8721.2009.01603.x

Durgin, F. H., Gigone, K., and Scott, R. (2005). Perception of visual speed while moving. J. Exp. Psychol. Hum. Percept. Perform. 31, 339–353. doi:10.1037/0096-1523.31.2.339

Ghosh, S., Winston, L., Panchal, N., Kimura-Thollander, P., Hotnog, J., Cheong, D., et al. (2018). Notifivr: exploring interruptions and notifications in virtual reality. IEEE Trans. Vis. Comput. Graph. 24, 1447–1456. doi:10.1109/tvcg.2018.2793698

Grießbach, E., Raßbach, P., Herbort, O., and Cañal-Bruland, R. (2024). Dual-tasking modulates movement speed but not value-based choices during walking. Sci. Rep. 14, 6342. doi:10.1038/s41598-024-56937-y

Han, V. Y., Cho, H., Maeda, K., Ion, A., and Lindlbauer, D. (2023). Blendmr: a computational method to create ambient mixed reality interfaces. Proc. ACM Human-Computer Interact. 7, 217–241. doi:10.1145/3626472

Hart, S. G. (2006). “NASA-task load index (NASA-TLX); 20 years later,” in Proceedings of the human factors and ergonomics society annual meeting, 50 (Los Angeles, CA: Sage Publications), 904–908. doi:10.1177/154193120605000909

Janaka, N., Zhao, S., and Chan, S. (2023). Notifade: minimizing ohmd notification distractions using fading. Ext. Abstr. 2023 CHI Conf. Hum. Factors Comput. Syst., 1–9. doi:10.1145/3544549.3585784

Kao, P.-C., Pierro, M. A., Gonzalez, D. M., Na, A., Sefcik, J. S., Kwok, A., et al. (2023). “Performance during attention-demanding walking conditions in older adults with and without a fall history,” in 2023 combined sections meeting (CSM) (APTA) 70–77.

Lages, W., and Bowman, D. (2019a). “Adjustable adaptation for spatial augmented reality workspaces,” in Symposium on spatial user interaction, 1–2.

Lages, W. S., and Bowman, D. A. (2019b). “Walking with adaptive augmented reality workspaces: design and usage patterns,” in Proceedings of the 24th international conference on intelligent user interfaces, 356–366.

Lee, H., and Woo, W. (2023). “Exploring the effects of augmented reality notification type and placement in ar hmd while walking,” in 2023 IEEE Conference Virtual Reality and 3D User Interfaces (VR), Shanghai, China, 25-29 March 2023 (IEEE), 519–529.

Li, Z., Cheng, Y. F., Yan, Y., and Lindlbauer, D. (2024). “Predicting the noticeability of dynamic virtual elements in virtual reality,” in Proceedings of the CHI conference on human factors in computing systems (New York, NY, USA: Association for Computing Machinery). doi:10.1145/3613904.3642399

Lim, J., Chang, S. H., Lee, J., and Kim, K. (2017). Effects of smartphone texting on the visual perception and dynamic walking stability. J. Exerc. rehabilitation 13, 48–54. doi:10.12965/jer.1732920.460

Lindlbauer, D., Feit, A. M., and Hilliges, O. (2019). “Context-aware online adaptation of mixed reality interfaces,” in Proceedings of the 32nd annual ACM symposium on user interface software and technology, 147–160.

Logan, D., Kiemel, T., Dominici, N., Cappellini, G., Ivanenko, Y., Lacquaniti, F., et al. (2010). The many roles of vision during walking. Exp. brain Res. 206, 337–350. doi:10.1007/s00221-010-2414-0

Lu, F., Davari, S., Lisle, L., Li, Y., and Bowman, D. A. (2020). “Glanceable ar: evaluating information access methods for head-worn augmented reality,” in 2020 IEEE conference on virtual reality and 3D user interfaces (VR) (IEEE), 930–939.

Marwecki, S., Wilson, A. D., Ofek, E., Gonzalez Franco, M., and Holz, C. (2019). “Mise-unseen: using eye tracking to hide virtual reality scene changes in plain sight,” in Proceedings of the 32nd annual ACM symposium on user interface software and technology, 777–789.

Matthews, T. (2006). “Designing and evaluating glanceable peripheral displays,” in Proceedings of the 6th conference on designing interactive systems, 343–345.

McCrickard, D. S., Chewar, C. M., Somervell, J. P., and Ndiwalana, A. (2003). A model for notification systems evaluation—assessing user goals for multitasking activity. ACM Trans. Computer-Human Interact. (TOCHI) 10, 312–338. doi:10.1145/966930.966933

Ponton, J. L., Yun, H., Andujar, C., and Pelechano, N. (2022). Combining motion matching and orientation prediction to animate avatars for consumer-grade VR devices. Comput. Graph. Forum 41, 107–118. doi:10.1111/cgf.14628

Razali, N. M., and Wah, Y. B. (2011). Power comparisons of Shapiro-Wilk, Kolmogorov-Smirnov, Lilliefors and Anderson-Darling tests. J. Stat. Model. Anal. 2, 21–33.

Rhodes, B. (1998). “WIMP interface considered fatal,” in IEEE VRAIS’98: workshop on interfaces for wearable computers.

Salvucci, D. D., Taatgen, N. A., and Borst, J. P. (2009). Toward a unified theory of the multitasking continuum: from concurrent performance to task switching, interruption, and resumption. Proc. SIGCHI Conf. Hum. factors Comput. Syst., 1819–1828. doi:10.1145/1518701.1518981

Seabold, S., and Perktold, J. (2010). “statsmodels: econometric and statistical modeling with python,” in 9th Python in science conference.

Wilcoxon, F., Katti, S., and Wilcox, R. A. (1970). Critical values and probability levels for the wilcoxon rank sum test and the wilcoxon signed rank test. Sel. tables Math. statistics 1, 171–259.

Zheng, X., Su, Z., Wen, C., Xue, Z., and Jin, X. (2023). “Realistic full-body tracking from sparse observations via joint-level modeling,” in Proceedings of the IEEE/CVF international conference on computer vision.

Keywords: Augmented Reality, user experience, information delivery, visual perception, walking

Citation: Liu S and Lindlbauer D (2024) TurnAware: motion-aware Augmented Reality information delivery while walking. Front. Virtual Real. 5:1484280. doi: 10.3389/frvir.2024.1484280

Received: 21 August 2024; Accepted: 21 November 2024; Published: 18 December 2024.

Edited by:

Stephan Lukosch, University of Canterbury, New Zealand

Reviewed by:

Ana Stanescu, Graz University of Technology, Austria

Shakiba Davari, Virginia Tech, United States

Copyright © 2024 Liu and Lindlbauer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sunniva Liu