ORIGINAL RESEARCH article

Front. Virtual Real., 20 August 2024

Sec. Augmented Reality

Volume 5 - 2024 | https://doi.org/10.3389/frvir.2024.1428765

Jifan Yang1*, Steven Bednarski2, Alison Bullock3, Robin Harrap4, Zack MacDonald5, Andrew Moore6, T. C. Nicholas Graham1*

Augmented Reality (AR) is a technology that overlays virtual objects on a physical environment. The illusion afforded by AR is that these virtual artifacts can be treated like physical ones, allowing people to view them from different perspectives and point at them knowing that others see them in the same place. Despite extensive research in AR, there has been surprisingly little research into how people embrace this AR illusion, and in what ways the illusion breaks down. In this paper, we report the results of an exploratory, mixed methods study with six pairs of participants playing the novel Sightline AR game. The study showed that participants changed physical position and pose to view virtual artifacts from different perspectives and engaged in conversations around the artifacts. Being able to see the real environment allowed participants to maintain awareness of other participants’ actions and locus of attention. Players largely entered the illusion of interacting with a shared physical/virtual artifact, but some interactions broke the illusion, such as pointing into space. Some participants reported fatigue around holding their tablet devices and taking on uncomfortable poses.

Augmented reality provides the “illusion of an enhanced world” in which virtual objects are super-imposed on the physical world (Javornik, 2016). This AR illusion allows people to interact with virtual objects as if they are present in the real world, for example, moving around them, leaning in to see them from different perspectives, and referencing them via gesturing and pointing. This illusion enhances the user’s perception of the presence of virtual objects, making them believe that the AR artifacts exist in the real environment, ultimately enhancing users’ experience of presence in the mixed-reality world (Lombard and Ditton, 1997; McCall et al., 2011; Aliprantis et al., 2019; Ens et al., 2019; Regenbrecht and Schubert, 2021).

This illusion relies on virtual objects having the same spatial properties as physical objects. For example, the position, orientation, and scale of a virtual object is tied to the physical world, allowing a person to move around it as if it is a physical object. In collaborative contexts, the illusion extends to believing that collaborators see the same virtual objects in the same physical place.

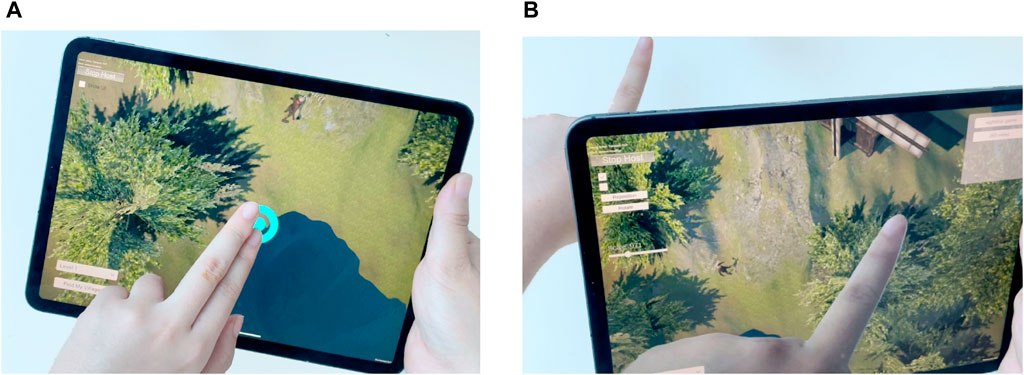

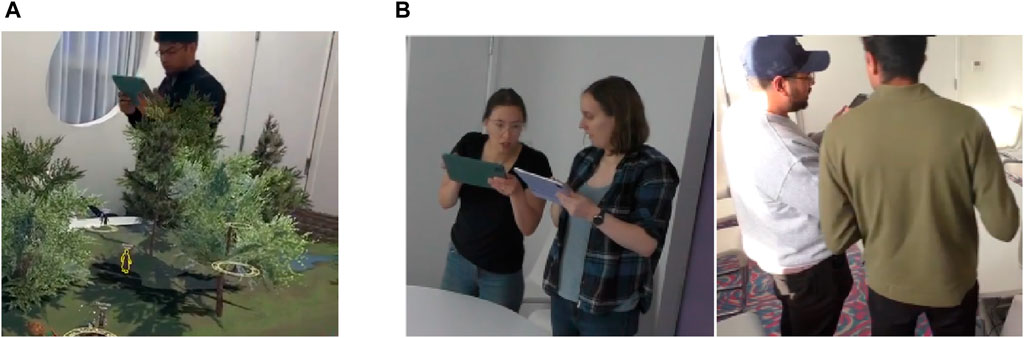

Despite this illusion being fundamental to interaction with AR, surprisingly little research has been performed into how successfully it is embraced by AR users. This paper reports on a multi-methods study to add to this emerging literature. Participants were asked to perform a collaborative task within an augmented reality game played through an iPad tablet computer (Figure 1). In the game, participants interacted with a virtual village placed on a physical table. We wished to determine how participants interacted with the village and its inhabitants, how they moved when interacting, what they chose to do on their iPad screen versus using natural gestures, and how naturally they accepted that their collaborator saw the same artifacts in space that they did. Our specific research questions are.

Figure 1. Two people playing the novel Sightline game. A virtual terrain is superimposed on a physical table. Players view the terrain, the table, and each other through an iPad display.

To address these questions, we performed content analysis on gameplay videos, thematic analysis of a semi-structured interview addressing participants’ experience, and used questionnaires to measure quality of collaboration and player experience. Our results show that for this collaborative AR game, participants entered the illusion of AR, treating the virtual artifacts as shared artifacts visible to their collaborator and themselves. Participants used the space around the table and used AR affordances and kinesthetic interaction to view the artifact in different ways. Nevertheless, there were two specific ways in which the illusion of AR broke down: participants preferred pointing on their screen over pointing directly at the AR artifact, and participants occasionally looked at each other’s screens to confirm that their collaborator indeed saw what they were seeing.

The paper is organized as follows. We first review work related to interaction within AR contexts. We then introduce the Sightline game. We detail the methods used in our exploratory study, and report players’ experiences of AR interactions and how they utilize AR artifacts and the space around them for collaboration. Finally, the paper concludes with discussion of how our findings inform the design of collocated collaborative AR.

Augmented Reality (AR) is a technology that superimposes virtual objects on a physical environment (Billinghurst et al., 2015). For example, as shown in Figure 1, a virtual terrain can be overlaid on a physical table to create the illusion of a live model of a village placed in a physical room. Users can interact with AR using a variety of devices, including head mounted displays, projection devices, and handheld displays (Azuma et al., 2001; Carmigniani et al., 2011). AR has been widely used for applications as diverse as games (Yusof et al., 2019; Paraschivoiu et al., 2021; Mittmann et al., 2022), simulations (Gabbard et al., 2019; Sung et al., 2019), and remote help (Hadar et al., 2017; Mourtzis et al., 2020). People using an AR application in the same location can see each other, the real environment around them, and the virtual models augmenting the scene. When viewed from different angles and distances, virtual objects are rendered according to the user’s perspective, giving people the illusion of looking at physical objects in the real world. This illusion not only enhances user feelings of immersion (Kim, 2013) and presence (Lombard and Ditton, 1997), but can also support collaboration, facilitate natural interactions (Valli, 2008), and impact territoriality (Scott et al., 2004). These benefits are detailed as follows.

AR encourages kinesthetic interaction, where users physically move around and interact with virtual artifacts. More broadly, kinesthetic interaction involves “the body in motion experiencing the world through interactive technology” (Fogtmann et al., 2008). Interfaces affording kinesthetic interaction encourage bodily movements such as gesturing, walking, running, and jumping to interact with a computer, display, or technologically enhanced environment (Koutsabasis and Vosinakis, 2018). Kinesthetic interaction can incorporate concepts of natural interaction (Valli, 2008), where people use gestures, expressions, and common physical movements such as pointing (Sato et al., 2007) and head movement (Sidenmark and Gellersen, 2019) to interact with a digital system, and bodily interaction (Mueller et al., 2018) where physical movement is used to enhance the interactive experience (Andres et al., 2023). Kinesthetic interaction relies on artifacts having a physical location, so that it is possible to move around them.

Incorporating kinesthetic interaction has been argued to bring numerous benefits to human-computer interaction in general and collaboration in particular.

Users in an AR environment can walk around a virtual artifact to change perspective, move closer/farther to scale their views, select virtual objects by pointing at them, and reach out to touch and move objects (Bujak et al., 2013). These are all natural interactions transferred from the physical world, which make operating an AR application more intuitive as people can apply existing knowledge to interact with digital technology (Valli, 2008; St. Amant, 2015). However, natural interaction has limitations. Arm fatigue is known to negatively impact user experience and hamper prolonged use of mid-air interfaces (Jang et al., 2017). Ma et al. (2020) compared hand fatigue levels while performing the same tasks using natural hand gestures and mice, and found that using natural gestures consumed more energy. Lou et al. (2020) compared the performance of interacting with different hand gestures, finding that extending the arms and performing hand movements at higher locations were more tiring, causing users to switch gestures frequently and reducing their accuracy. Natural but complex gestures may also be frustrating for some people. Aliprantis et al. (2019) noted that people may prefer to use simple gestures over natural and fun gestures in an AR environment.

In addition, the accuracy of motion detection can affect user experience. Kinect, the motion-sensing input device developed by Microsoft, had record-breaking sales at launch but was abandoned in the next generation of Xbox products, in part because developers struggled to create compelling experiences based on natural interaction (Weinberger, 2018). For example, Jeremic et al. (2019) report that participants were frustrated when the Kinect could not recognize their bodies or responded with high latency to their kicking motions. These results suggest that applications based on kinesthetic interaction may strain the AR illusion when movement is not captured sufficiently accurately.

A major advantage cited for AR is its support for small group collaboration. In collocated collaboration, collaborators view the same AR objects at the same time and in the same location to complete a collaborative task (Wells and Houben, 2020), such as finding hidden objects (De Ioannes Becker and Hornecker, 2021) or building block towers (Poretski et al., 2021b). In this setting they can directly see and talk to each other. In remote collaboration, participants observe the same virtual objects in different locations and jointly work on a task such as conducting a chemistry experiment (Ahmed et al., 2021). AR technology is increasingly being explored in remote collaborative settings (Asadzadeh et al., 2021; Cavaleri et al., 2021). And finally, with remote expert systems, one user completes a task, while an expert provides remote assistance. The local user can use AR devices to communicate with the remote expert in real time via live video, or can watch pre-recorded expert videos (Hadar et al., 2017).

In this paper, we focus on collocated collaboration, which has been suggested to benefit from AR technology through establishment of group awareness, support of communication through gestural deixis, and provision of a 3D workspace.

Group awareness is the understanding of the status of the group, such as what other collaborators are doing, where they are looking, what they are interested in, and how others feel about them (Ghadirian et al., 2016). Collocated AR allows the users’ workspace to be seamlessly combined with their communication space, allowing them to directly see both their collaborators and the physical and virtual objects in the scene (Ens et al., 2019). They can be aware of each other’s actions and attention by observing changes in the virtual scene, and through other participants’ gaze, hand movements, and gestures.

Communication is enhanced through deixis, which is the use of speech or gestures to reference parts of the shared scene (Levinson, 1983; Iverson et al., 1994). Deictic gestures help people understand each other’s intentions, aiding collaborators to communicate their focus through a simple hand gesture rather than lengthy verbal descriptions (Wong and Gutwin, 2010). Further, AR interfaces can enhance the cues already found in face-to-face conditions (Billinghurst et al., 2015), for example, providing gaze cues to facilitate deictic gestures, helping users better understand which object their collaborator is focusing on (Chen et al., 2021). Collocated collaboration therefore relies on people believing that other participants see the same artifacts in the same space as they do, providing meaning to gaze awareness and gestures. Despite this potential benefit, collocated AR collaborators have been found to rarely look at each other; they primarily focus on the physical items they use to manipulate AR artifacts, for example, their screen (Rekimoto, 1996; Poretski et al., 2021a) or markers for positioning virtual objects in space (Matcha and Rambli, 2013).

Finally, AR supports collaboration by providing a three-dimensional workspace. Most screens only support interaction on a flat surface, while AR systems allow people to use physical movement to view virtual artifacts from different angles and scales. This can make it easier to carry out collaborative tasks related to spatial structures (Radu, 2012).

Most recently, Poretski et al. (2021b) compared the performance of users completing the same task in a physical and AR environment, finding users to have a similar or better collaborative experience in the AR setting. This study focused on how participants gather around virtual artifacts. Explorations of how people collaborate and use body posture in AR environments are still limited.

When interacting through bodily movements, collocated people exhibit specific behaviours in using the space around them. Scott et al. (2004) analyzed people’s territoriality, or behaviour when sharing desktop spaces, finding that the space directly in front of each person is dominated by that person, whereas the space in the centre of the table and between adjacent people is shared more for group work. This behaviour has been confirmed with more recent display hardware (Klinkhammer et al., 2018) and with large shared screens (Azad et al., 2012). In a study of an interactive floor, Cibrian et al. (2016) also found that collocated users tended to use independent space and avoided stepping into the areas occupied by their peers.

The use of space by collocated collaborators in an AR environment is likely to be more dynamic. Poretski et al. (2021b) compared the number of movements and relative positions of collaborators completing the same block-building task in physical and AR environments, finding that participants using the AR application moved more frequently and preferred to stand closer to their partners. James et al. (2023) compared collaboration using a regular wall display and a wall display expanded with AR technology, finding that participants walked more in the AR settings. People moving around frequently may use different ways to divide space, as it can be inconvenient to maintain a fixed work area. However, how people allocate and occupy space in AR environments is still an open question.

We have seen that the illusion of AR, where virtual objects can be treated like physical objects, underlies a range of powerful forms of interaction including kinesthetic interaction, gestural deixis, and gaze awareness. The illusion of AR enhances users’ experience of presence. Different from the sense of “being there” in VR environments (Slater, 2009), users experiencing AR environments get a sense of “it is here”; i.e., the virtual object is transported in front of them (Lombard and Ditton, 1997). Collaborative AR users experience not only the presence of virtual objects, but also sense the presence of their partner, reinforcing their feeling of “we are together” (Lombard and Ditton, 1997), further promoting collaboration and inter-personal interaction. While presence has been extensively explored in various forms of virtual reality environments (Roberts et al., 2003; Moustafa and Steed, 2018), and instruments have been created for measuring presence in VR (Witmer and Singer, 1998), presence in AR has required new definitions and exploration (Gandy et al., 2010).

The illusion of AR can break down, however, due to perceptual issues and physicality conflicts. Kruijff et al. (2010) identified 24 perceptual issues in augmented reality, including illumination, lens flares, occlusion problems, limited field of view, colour fidelity, and depth perception cues. While many of these issues have been ameliorated over the intervening time, reviews of even the best current hardware still mention issues with lag, motion blur, and colour fidelity (Patel, 2024).

Physicality conflicts between virtual and physical objects—e.g., a physical person walking through a virtual object—can break the AR illusion, diminishing copresence (Kim et al., 2017a; b). Physical props can be used to stand in for virtual artifacts, but these can break the illusion of AR if they do not feel like the artifact being manipulated (Zhang et al., 2021). Lopes et al. (2017) have shown how electrical muscle stimulation can provide physicality to virtual objects, but this approach is far from ready for general application.

In summary, past research has shown that AR technology supports natural interaction and face-to-face communication with 3D artifacts. These forms of interaction are enabled by the AR illusion, where people perceive virtual artifacts as inhabiting physical space. Despite the fundamental nature of this illusion to interaction in AR, and despite limitations to the illusion being identified, there has been surprisingly little investigation of how strongly people adopt the AR illusion and the interaction styles that it affords. More in-depth investigations are needed about how people communicate, move, and use body postures to interact in AR environments. This paper contributes such a study.

To help understand how people collaborate and interact with virtual artifacts within an AR environment, we have designed Sightline, an augmented reality game. The game was designed to act as an exemplar of a collaborative AR system. The requirements of the game were therefore that it be quick to learn, require collaboration to play, and encourage players to interact with AR artifacts. This makes it suitable for capturing users’ behaviour and subjective experiences interacting with AR.

Sightline is a two-player puzzle game with a virtual terrain superimposed on a physical table (Figure 1). The game supports collocated collaboration by allowing two people to view and manipulate the same AR scene using iPad tablets, meaning that people moving to the same position around the table view the same terrain from the same perspective, and when one player changes the content of the scene, those changes are observed by the other player.

There are four villagers and six signs scattered around the virtual terrain. The goal of the game is to collaboratively establish as many “sightlines” between villagers as possible (Figure 2A). Two villagers (here “Agnes” and “Richard”) create a sightline if they are standing at signs and can see each other through the trees, houses, and other obstacles. For example, in Figure 2B, there is no sightline because the villagers cannot see each other through the house.

Figure 2. The goal of the game is to create sightlines between villagers standing at signs. The number above each sign indicates how many sightlines originate from that sign. (A) Agnes sees Richard, making the number of the sign next to her increase to 1. (B) Agnes and Richard’s signs both show 0 because a house blocks their views. (C) A villager moves, with its path displayed on the screen.

Each player controls two villagers. Villagers’ paths appear in the scene and can be seen by both players (Figure 2C). When a villager reaches a sign, the sign lights up and shows a number (Figure 2A). The number represents the number of sightlines originating from the villager, namely the number of villagers from other signs they can see. Only one villager is counted for each sign. When the villager leaves the sign, the light is removed from the sign and the number disappears. Players are asked to maximize their team score, the sum of the numbers above all the lighted signs. This requires them to coordinate actions in the placement of the villagers and change their poses to view possible sightlines from the villagers’ perspectives.
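The scoring rule described above can be sketched in a few lines of Python. This is only an illustrative model, not the game's Unity implementation: it assumes a flat 2D terrain, treats every obstacle as a circle, and uses hypothetical names (`Obstacle`, `sign_numbers`, `team_score`). Under this reading, a mutual sightline adds one to the number at each of its two signs.

```python
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class Obstacle:
    """Hypothetical circular stand-in for a house, tree, or other obstacle."""
    x: float
    y: float
    radius: float


def segment_hits_circle(p, q, obstacle):
    """Return True if the straight segment p-q touches or crosses the obstacle."""
    (px, py), (qx, qy) = p, q
    dx, dy = qx - px, qy - py
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        return hypot(px - obstacle.x, py - obstacle.y) <= obstacle.radius
    # Closest point on the segment to the obstacle centre, clamped to the ends.
    t = ((obstacle.x - px) * dx + (obstacle.y - py) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    cx, cy = px + t * dx, py + t * dy
    return hypot(cx - obstacle.x, cy - obstacle.y) <= obstacle.radius


def sign_numbers(occupied_signs, obstacles):
    """Map each occupied sign to the number of other occupied signs it can see.

    occupied_signs: dict of sign id -> (x, y) for signs with a villager on them.
    """
    numbers = {}
    for a, pos_a in occupied_signs.items():
        numbers[a] = sum(
            1
            for b, pos_b in occupied_signs.items()
            if b != a
            and not any(segment_hits_circle(pos_a, pos_b, o) for o in obstacles)
        )
    return numbers


def team_score(occupied_signs, obstacles):
    """Team score: the sum of the numbers shown above all lit signs."""
    return sum(sign_numbers(occupied_signs, obstacles).values())


# Illustrative layout (not taken from the game): a house blocks A from B.
signs = {"A": (0.0, 0.0), "B": (10.0, 0.0), "C": (0.0, 10.0)}
house = Obstacle(x=5.0, y=0.0, radius=1.0)
print(sign_numbers(signs, [house]), team_score(signs, [house]))
```

Only signs with a villager standing at them are passed in, which mirrors the rule that a sign's number disappears when its villager leaves.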

Additionally, the game supports discussion through two pointing methods. Players can tap on the screen with two fingers to show a beacon on the landscape at a position of interest (Figure 3A). If the other player is looking at that part of the scene, they will also see the beacon. Alternatively, a player can move their hand behind the screen (Figure 3B). The iPad’s camera captures the hand and overlays it on the virtual terrain, enabling both players to see fingers used to physically point at virtual objects.

Figure 3. The Sightline game supports on-screen (touch) and behind-screen (gesture) pointing. (A) On-screen pointing shows beacon in scene. (B) Behind-screen pointing shows player’s hand in scene.

The game was implemented using the Unity game engine (Unity Inc, 2023). The Mirror Networking library (Mirror Networking, 2023) was used to synchronize the collaborators’ views of the game scene, so that each player saw the same scene as the game progressed. The game was played on Apple iPad Pro 11″ tablets (third edition), connected via a Wi-Fi network.

Sightline offers players multiple virtual artifacts to interact with, which is intended to foster the AR illusion. Players can walk around the artifacts, point at them by hand, and view them from different perspectives using poses such as moving closer/further and crouching. We wished to explore whether players accept this illusion and adopt these approaches to interact with objects in the AR environment.

To perform well in the game, players must collaborate. They need to divide the task of maximizing sightlines into the smaller tasks of controlling the villagers and coordinating who will move which villager where. They also need to understand where their partner is focusing and understand the motivation behind their partner’s actions.

As discussed in Section 2.2, AR can support small group collaboration by providing a communal workspace, visual cues, and 3D interaction. Therefore, the game is designed to leverage the AR illusion to provide players with the following assistance.

This game has been designed to exercise numerous forms of interaction that rely on the AR illusion: physical movement around the virtual artifacts, viewing the artifacts from different perspectives, and treating the virtual and physical artifacts as a shared workspace. The game therefore serves as a testbed for understanding how well participants can and do adopt the AR illusion.

To understand players’ interactions and experience with Sightline, we conducted a multi-methods, exploratory study addressing the following research questions.

Twelve participants were recruited through social media targeted to the local university community. They were asked to find a teammate and register as a pair, and were required to be able to read and understand English and have the ability to hold and move iPads. Each participant filled out a consent form describing the study protocol. They were given the option to consent to the use of identifying images. Only photos of participants who actively consented are included in this paper.

The study was conducted in a meeting room with a large table (Figure 4). Upon loading the game scene, participants could see virtual objects superimposed on the tabletop from the iPad screen. The study session was video recorded. A video camera with tripod acted as main camera to capture the participants’ locations and gestures. A 360-degree camera on the table captured a panoramic view of the room and tracked participants who were speaking. To better understand what happened in the game session, the two iPad tablets used by participants were screen-recorded.

The study consisted of the following steps.

The following sections present the results of this study, starting with the analysis of video data and questionnaire data, then reporting thematic analysis results of the interview. Following this, in Section 7, we analyze what conclusions we can draw with respect to our research questions from these results.

To understand how participants interacted and collaborated using AR, we performed content analysis of videos of their gameplay sessions. We first analyzed kinesthetic interaction within the AR environment and assessed participants’ use of the space around the table. We used validated and custom questionnaires to capture participants’ subjective experience. Finally, we analyzed how participants communicated with each other. All of these informed the ways in which the participants did or did not embrace the illusion of AR.

Twelve participants (7 females and 5 males, 20–29 years, M = 24.5) participated in the study. Participants are identified below using a participant id. Participants P1 and P2 played together; P3 and P4 played together, and so forth. Six participants reported playing video games at most annually, one monthly, two weekly, and the remaining three daily. Except for one participant who played multiplayer games every day, all participants played multiplayer games no more frequently than weekly. Four participants had never used any AR/VR applications, while eight participants had experience of VR games/apps or playing Pokémon Go. No participants had played an AR game similar to Sightline.

The mean time to complete the study was 20.3 min. The fastest group of participants took 11.5 min to complete the tasks, while a group of participants who became highly competitive took 33 min.

Our findings are detailed as follows.

We observed and recorded the postures adopted by the participants when interacting with the game and used validated questionnaires to capture their thoughts about the interactions they performed. The following results reflect participants’ treatment of virtual artifacts in AR.

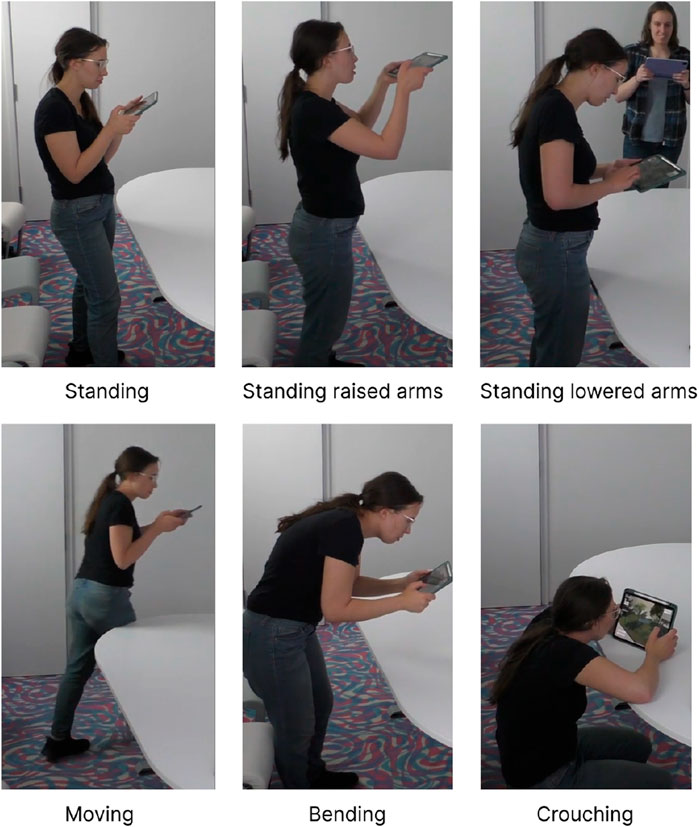

Participants assumed a set of poses allowing them to view the virtual terrain in different ways. We watched the videos captured by the main camera, and recorded and categorized their arm and upper body position changes. The time spent in each pose was recorded. Six types of pose were identified based on how participants held their body and the iPad. These are summarized in Figure 5.

Figure 5. Six poses were identified. Participants stood at the table to observe the virtual terrain and adjusted their perspective by walking around, moving their arms, bending and crouching.

Participants changed their pose using a common approach. They first moved around the table while looking at the screen to find an appropriate perspective to observe the scene. After reaching a suitable position, they stopped and stood facing the table. If necessary, participants moved the iPad closer to/further from the table to see the scene at different levels of detail. When trying to get an overview of the scene, participants raised their arms to get a top-down view. Otherwise, when participants wanted to zoom in to see the details more clearly, they lowered their arms or bent down to get the iPad closer to the table. By crouching down, they made their viewpoint even lower to observe the scene from the same level as the villagers, which helped them understand what their villagers could see (Figure 6).

We counted the participants’ changes of pose and the duration of each pose. Participants changed their pose frequently. Individual participants showed different preferences. For example, Figure 7 (left) illustrates the poses of the two participants in Session 1. P1 walked around more often and liked to zoom in her view by squatting down, while P2 preferred to stand in one place for a longer time and observe the scene by moving her arms and bending over. Despite variations in the postures used, the participants’ overall behaviour patterns were similar. Figure 8 provides an overview of all participants. They spent approximately two-thirds of the time standing, holding the iPad at chest height. For about one-fifth of the time, they walked around to adjust their position. For the rest of the time, participants moved their bodies and their iPads to see different perspectives and different levels of detail. This behaviour was uniform over all participants, indicating that when interacting with AR, all participants adopted poses similar to those that would be used for physical artifacts.
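As a sketch of how such coding could be tallied, the Python below sums the time spent in each pose and counts pose changes from a list of coded intervals. The interval format, the function names, and the pose labels are assumptions made for illustration; the values are invented and are not data from the study.

```python
from collections import defaultdict


def pose_time_shares(intervals):
    """Return each pose's share of total coded time.

    intervals: list of (pose_label, start_seconds, end_seconds) tuples.
    """
    totals = defaultdict(float)
    for pose, start, end in intervals:
        if end < start:
            raise ValueError(f"interval for {pose!r} ends before it starts")
        totals[pose] += end - start
    session = sum(totals.values())
    if session == 0:
        return {}
    return {pose: seconds / session for pose, seconds in totals.items()}


def count_pose_changes(intervals):
    """Count transitions between consecutive intervals with different poses."""
    ordered = sorted(intervals, key=lambda interval: interval[1])
    return sum(1 for prev, cur in zip(ordered, ordered[1:]) if prev[0] != cur[0])


# Invented one-minute excerpt with hypothetical pose labels.
coded = [
    ("standing", 0, 30),
    ("walking", 30, 42),
    ("standing", 42, 52),
    ("crouching", 52, 60),
]
print(pose_time_shares(coded))
print(count_pose_changes(coded))
```

Keeping the raw intervals, rather than only the totals, makes it possible to produce both a per-participant timeline and an aggregate summary from the same coding.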

Figure 7. Postural changes and space usage of the two participants in Session 1. The bar chart on the left shows the poses they used over time. The heat map on the right shows the time they spent at each part of the table.

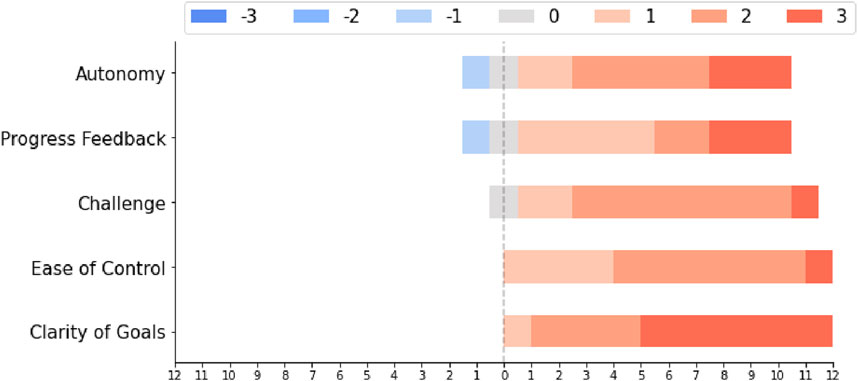

After their gameplay session, participants completed the Autonomy, Progress Feedback, Ease of Control, Clarity of Goals, and Challenge subscales of the Player Experience Inventory (PXI) (Abeele et al., 2020), showing how well they understood the Sightline game and how they felt about playing it. The results are shown in Figure 9, in the form of a stacked diverging bar chart (Robbins and Heiberger, 2011). The length of a bar indicates the total number of participants, while the segments of the bar indicate the number of people in each score range. The baseline represents the middle value of the scale, and the lengths of the bars on either side represent the number of players scoring below and above the middle value. Each subscale is measured via a 7-point Likert scale, from −3 (Strongly Disagree) to +3 (Strongly Agree). These results show that almost all participants gave positive scores on all subscales. The Ease of Control subscale particularly shows that participants found the AR controls easy to learn and use. Overall, participants understood the goal of the game, felt they were in control of the play of the game, and found the game easy to control.
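The segments of a stacked diverging bar can be derived from raw Likert responses as in the following Python sketch. The function name and the sample responses are hypothetical. Splitting the neutral responses evenly across the baseline is an assumed convention, and the paper's figure may treat the middle value differently.

```python
from collections import Counter


def diverging_bar(responses, low=-3, high=3):
    """Summarise one subscale's responses for a stacked diverging bar.

    Returns the count for every score, plus the bar's extent to the left and
    right of the baseline. Neutral responses are split evenly across the
    baseline (an assumption, not necessarily the paper's choice).
    """
    if (high - low) % 2 != 0:
        raise ValueError("scale needs an odd number of points to have a midpoint")
    if any(r not in range(low, high + 1) for r in responses):
        raise ValueError("response outside the Likert range")
    midpoint = (low + high) // 2
    counts = Counter(responses)
    segments = {score: counts.get(score, 0) for score in range(low, high + 1)}
    below = sum(n for score, n in segments.items() if score < midpoint)
    above = sum(n for score, n in segments.items() if score > midpoint)
    neutral = segments[midpoint]
    return {
        "segments": segments,
        "left_extent": below + neutral / 2,
        "right_extent": above + neutral / 2,
    }


# Invented responses from twelve players on a -3..+3 scale.
sample = [3, 2, 2, 1, 0, -1, 3, 2, 1, 1, 2, 3]
print(diverging_bar(sample))
```

The two extents always sum to the number of respondents, which matches the description that the total bar length indicates the number of participants.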

Figure 9. PXI Questionnaire results. Higher scores represent more positive evaluation of the game by the participants.

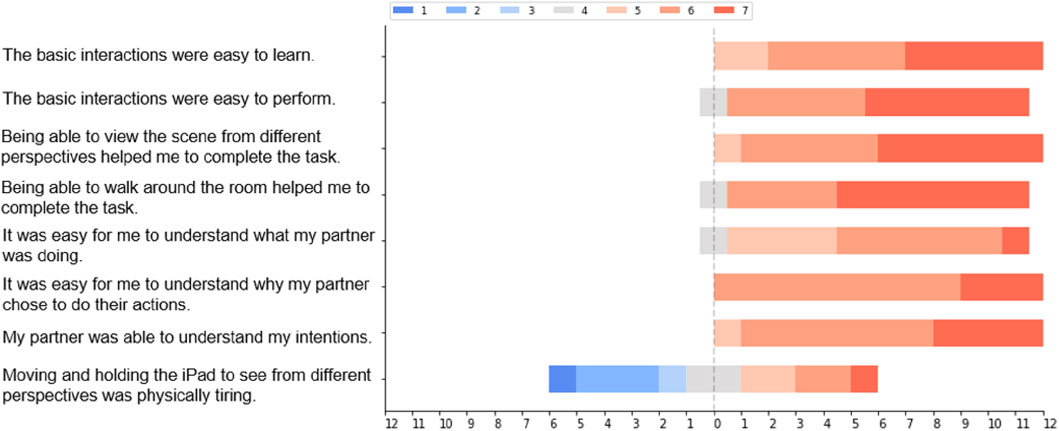

Finally, we used a custom questionnaire to capture participants’ experience, which used a 7-point Likert scale, ranging from 1 (Strongly Disagree) to 7 (Strongly Agree). The results are shown in Figure 10. Participants found it easy to interact with the AR environment and considered moving around and viewing the virtual artifacts from different perspectives helpful in completing their task. They also thought it was easy to understand each other’s actions and intentions. Participants were split on whether they found it tiring to interact by moving the iPad.

Figure 10. Custom Questionnaire results. A larger number means that the participant was more likely to agree with the description.

These findings show that participants performed natural interactions in the AR environment. They naturally adopted six types of pose derived from everyday life to observe the AR objects from different heights and angles, as if they were interacting with physical entities, and they found the interaction easy to understand and perform.

To capture how participants communicated and collaborated with their partner, we analyzed their conversations, deixis, and focus.

To capture their level of attention to and understanding of each other, participants completed the Behavioural Engagement subscale of the Social Presence in Gaming Questionnaire (SPGQ) (De Kort et al., 2007). Participants’ mean rating was 5.94 out of 7, indicating a strong connection between them.

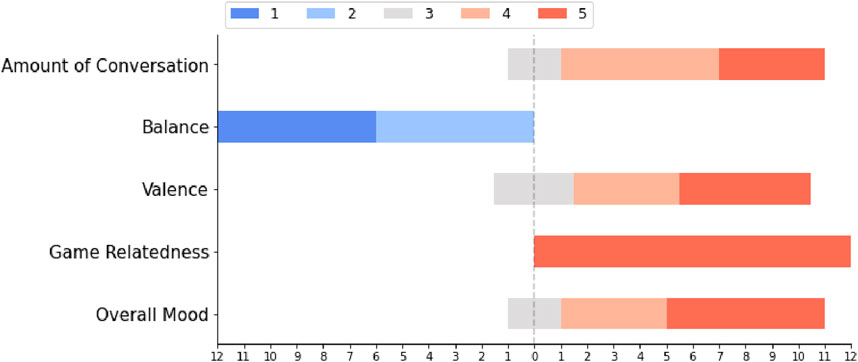

We reviewed the videos captured by the 360-degree camera (Figure 4), which recorded the participants’ speech and tracked the face of the person who was speaking. Based on this video data, we evaluated the participants’ verbal communication using the two-player interpersonal interaction questionnaire (Gorsic et al., 2019), which measures the conversations between two players using a 5-point Likert scale. The questionnaire addresses the amount, balance, valence, game-relatedness, and mood of the conversation.

As shown in Figure 11, participants engaged in nearly constant conversation with their partner, and talked approximately equally rather than one dominating the other. This indicated that each pair of participants actively shared their ideas with their partner. Participants’ conversation was overall positive and closely related to the game content, showing that they were focused on playing the game. Many participants laughed during conversations, appearing to be having a good time. Participants also showed excitement after making good progress. For example, P7 and P8 high-fived and complimented each other after receiving a perfect score. These behaviours provide evidence that participants were attentive while collaborating and frequently communicated with their partner verbally.

Figure 11. Results of two-player interpersonal interaction questionnaire. For the Balance item, a lower score means that the participant talked the same amount as their partner. For the other items, higher scores represent more positive performance of the participants.

As mentioned in Section 3, the Sightline game provides two pointing methods for users to create referents. All participants used at least one pointing method. Using videos from the main camera and the iPads, we counted the number of occurrences of pointing, allowing us to determine each participant’s preference. Eight of 12 participants used on-screen pointing more, and four of 12 used behind-screen pointing more. Six participants used only one pointing method, while six used both methods over their play session. A Wilcoxon Signed Rank Test comparing players’ use of on-screen pointing (M = 60%) versus behind-screen pointing (M = 40%) showed no statistically significant difference (p = .498). This indicates that both pointing methods were used and that the data show no clear overall preference between them.
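The paired comparison described above can be illustrated with a minimal Python sketch. The paper does not state which software or which variant of the test (exact or normal approximation) was used, so the exact enumeration below is an assumption. The function names and the per-participant counts are hypothetical, invented purely for illustration, and are not study data.

```python
from itertools import product


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def wilcoxon_signed_rank(x, y):
    """Exact two-sided Wilcoxon signed-rank test for paired samples.

    Returns (W+, p), where W+ is the sum of ranks of positive differences.
    Pairs with a zero difference are dropped before ranking.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    centre = sum(ranks) / 2  # expected W+ under the null hypothesis
    observed = abs(w_plus - centre)
    extreme = 0
    total = 0
    # Under the null, every assignment of signs to the ranks is equally likely.
    for signs in product((0, 1), repeat=len(ranks)):
        w = sum(r for r, s in zip(ranks, signs) if s)
        total += 1
        if abs(w - centre) >= observed - 1e-9:
            extreme += 1
    return w_plus, extreme / total


# Hypothetical pointing counts for 12 players (NOT the study's data).
on_screen = [12, 9, 0, 15, 7, 3, 10, 0, 8, 11, 5, 6]
behind_screen = [0, 4, 9, 0, 7, 8, 2, 6, 3, 0, 9, 1]
w_plus, p_value = wilcoxon_signed_rank(on_screen, behind_screen)
print(f"W+ = {w_plus}, two-sided exact p = {p_value:.3f}")
```

Enumerating all sign assignments is feasible here because a sample of 12 pairs yields at most 4,096 combinations. With so few participants, a non-significant result is weak evidence about preference in either direction.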

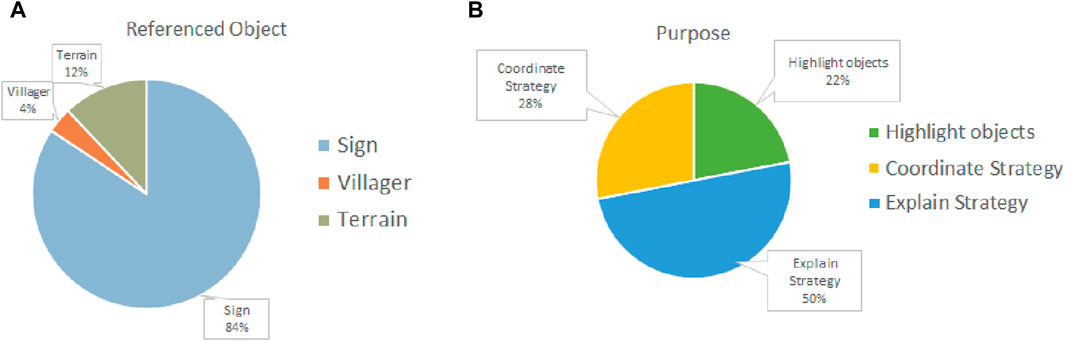

The objects that participants most regularly pointed at were the signs (Figure 12A). Participants told each other the location of the signs they found by pointing when exploring the scene. After that, they pointed to the signs that might increase their team score to tell their partner they intended to move a villager there. Participants also pointed at areas around the signs when they wanted to fine-tune the location of a villager.

Figure 12. Participants’ use of pointing. (A) Objects referenced via pointing. (B) Purpose of pointing.

In addition to the pointing methods provided by the game, participants also used other ways to reference objects. For example, participants rarely pointed at villagers, instead referencing villagers by name (e.g., “Do you wanna try sending Robert here?” (P1)). They also sometimes referenced signs via nearby objects (e.g., “I will send someone to the cart.” (P2)). The villagers’ paths were also used as pointers (Figure 2C). For example, in Session 1, P2 did not know which sign P1 was talking about, so P1 moved a villager towards the sign. Then P2 understood P1’s reference by looking at the endpoint of the villager’s path.

To understand the purpose of pointing, we reviewed the conversations one minute before and after each pointing action and labeled each instance by its purpose (Figure 12B).

Half of the pointing actions were used to explain strategy (Figure 12B). This often occurred when a participant wanted to move a villager, but the villager was controlled by another participant. Pointing helped them convey their intentions. Highlighting and coordinating strategies were used often at the beginning and end of a game round to communicate the locations of the signs, and to aid in calculating the team score.

Participants spent the vast majority of their time looking at their screen (95% of the time). When they wanted to get their partner’s attention or to check their partner’s actions, participants shifted their focus from the screen to their partner by looking at their partner directly (3%) or their partner’s screen (2%). Some participants viewed their partner’s screen at the beginning of the collaborative gameplay session (Figure 13B). When one participant had a question, the other participant might also look at their partner’s screen, or show their screen as a demonstration. Despite looking at screens most of the time, participants maintained awareness of their partner. As participants focused on the screen to look at a virtual object, they were still able to see their partner either on the screen (“see-through vision”) or via their peripheral vision (Figure 13A).

Figure 13. Participants showed specific behaviours when switching their focus. (A) P10 seeing both virtual objects and P9 through iPad screen. (B) Participants looked at their partner’s screen.

Overall, participants had active and frequent interactions with their partner about the virtual objects. They conveyed ideas primarily through conversations and aided explanations with deictic pointing. Participants treated the AR artifacts as conversational props, indicating their acceptance of the AR illusion.

To assess how participants used the physical space around the virtual terrain, we analyzed videos of their movements. We divided the area around the table into six segments of similar size and numbered the segments, then reviewed the videos captured by the main camera and recorded the time that each participant spent in each segment. The results showed that participants made dynamic use of the space.
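The segment tally described above could be computed along the following lines. This is only a sketch: the paper does not describe its annotation format, so the `(participant, segment, start, end)` tuples, the function names, and the example values are all hypothetical.

```python
from collections import defaultdict


def time_per_segment(intervals):
    """Sum the seconds each participant spent in each table segment.

    `intervals` is an iterable of (participant, segment, start_s, end_s)
    tuples, an assumed format for hand-coded video annotations.
    """
    totals = defaultdict(lambda: defaultdict(float))
    for participant, segment, start, end in intervals:
        if end < start:
            raise ValueError("interval ends before it starts")
        totals[participant][segment] += end - start
    return {p: dict(segments) for p, segments in totals.items()}


def segment_shares(seconds_by_segment):
    """Convert per-segment seconds into fractions of total observed time."""
    total = sum(seconds_by_segment.values())
    if total == 0:
        return {}
    return {seg: secs / total for seg, secs in seconds_by_segment.items()}


# Hypothetical annotations for one participant (NOT the study's data).
annotations = [
    ("P1", 1, 0, 30),   # segment 1 from 0 s to 30 s
    ("P1", 2, 30, 40),  # brief visit to the adjacent segment
    ("P1", 1, 40, 60),  # return to the starting segment
]
totals = time_per_segment(annotations)
for segment, share in sorted(segment_shares(totals["P1"]).items()):
    print(f"segment {segment}: {share:.0%} of observed time")
```

Keeping the raw intervals rather than only the totals also preserves the order of visits, which is what reveals patterns such as returning to a starting location.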

Participants were more concentrated in the north side of the room, possibly because two cameras were placed at the south side of the room, making it feel busier. Within each segment, participants showed similar behaviours. They used the table edge areas most of the time, occasionally bending over the table to view the scene from a close distance, or stepping back and raising their arms to zoom out the view (Figure 14).

Participants did not stay in a fixed area, instead moving around. Most of the time participants stood in adjacent areas next to each other, rather than spreading out around the table. Participants spent most of their time near their initial location: they moved to observe a place of interest, stayed for a while, and then walked back to the original location until they found the next place they wanted to explore.

We observed minor conflicts due to the dynamic use of space–sometimes one participant walked through the area occupied by the other. Participants mainly exhibited awareness of their partner. When their partner was approaching, they took a few steps back to make space. But occasionally, participants were too focused on the screen to notice the movement of the other participant. For example, in Session 6, P12 wanted to move from area 1 to area 3, while P11 was standing in the middle of area 2, making it difficult for P12 to pass. P11 did not notice that he was blocking his partner until P12 spoke to him. Collisions between participants occurred in Sessions 2 and 4, as neither participant noticed the other’s movement. However, the participants did not mind bumping into each other. They just laughed and apologized, and then pulled away a little to continue the game. Participants’ use of space shows that they treated the virtual terrain like a physical object that they could move around and view from different perspectives.

In summary, these empirical results show that participants largely treated the virtual objects making up the game as if they were physical, adopting different physical poses to view them from different heights and distances, and walking around the table to view them from different perspectives. Participants referenced the virtual artifacts using a mix of behind-screen and on-screen pointing. They largely adopted the belief that the other participant was seeing the same scene as they were, but occasionally confirmed this by looking at their partner’s screen.

To understand the participants’ subjective experience while playing the Sightline game, we conducted a semi-structured interview following their play session, and performed a thematic analysis of the interview transcripts. Three themes, comprising seven sub-themes, were developed through thematic analysis, showing participants’ perceptions of the Sightline game. The first theme discusses how participants engaged in kinesthetic interaction and shared the space around them. The second theme describes participants’ experience around collaboration and communication, while the third theme discusses participants’ broader interaction with Sightline and their perceptions of AR.

All participants engaged in kinesthetic interaction while playing the game (Figure 8), which in turn was based on treating virtual artifacts as physical. Participants reported that they walked and changed postures to explore places of interest, to see around obstacles that blocked their views, or to follow a villager they controlled. They stopped moving when they were thinking about where to move the villagers or asking their partner to look at an object in the scene. The following subthemes explore participants’ views on kinesthetic interaction.

Participants reported that kinesthetic interaction in the AR environment was intuitive. P1 and P2 believed that they could have understood how to play the game in a short time without the tutorial session. P9 and P10 felt it was easy to line up the iPad with what they wanted to look at: “If you move the iPad up, you will see the whole terrain. When you tilt it, you can change your perspective with regard to the orientation of the iPad, which is pretty intuitive.” (P9) Participant P11 pointed out that moving to adjust perspective is not a “game mechanic” but a “real-world mechanic”, since they could observe the virtual terrain in the same way as physical objects on the table. This allowed them to easily imagine how to interact with virtual objects.

In addition, P5 and P6 thought that kinesthetic interaction facilitated exploration and encouraged them to actively observe each object in the scene. P9, P10, P11, and P12 also perceived that moving in an AR environment provided an immersive experience: “You needed to actually physically move to see the other villager; it makes you feel involved.” (P12)

Participants agreed that bodily movement was helpful in understanding the 3D environment. For instance, P4 and P12 mentioned that being able to move around helped them understand the layout of the scene: “It is definitely helpful, ‘cause you have to move to have the overview of the table.” (P4) P5 and P6 felt that moving prevented them from missing important details: “Like in order to find all the signs, if I were standing still, I do not think I would have been able to find that much.” (P5) P2 and P10 found it useful to align their line of sight with a villager’s by lowering the iPad, which made it easier for them to understand how many villagers the villager could see.

Participants enjoyed moving around while playing, finding it made gameplay more fun and dynamic. P7 mentioned that she preferred walking in an AR environment to a virtual reality environment: “I think it would be more nauseating if we were in like the glasses, the headsets. I think holding it and being able to see the real world was more comfortable.” However, P3 felt it was difficult for her to balance her attention between walking and looking at the screen: “It was nice to walk, but I was trying to follow the picture and also walking. It was difficult for me, because I’m not used to it.”

Six participants did not feel tired at all from continuous movement, while the other six participants reported differing levels of fatigue. This was consistent with their questionnaire responses from Figure 10. One participant (P7) with arthritis in the legs found moving around tiring. P2 and P9 mentioned that they repeatedly bent over during the gameplay, which caused them back pain. P10 suggested that the height of the virtual terrain may require some trade-offs: “If it is like, a bit higher, then any person not have to bend or move. But I guess there’s another disadvantage of placing the map higher … I cannot see the bird eye view for that thing.” In addition to moving, holding an iPad for a long time also caused fatigue for some participants. P3 and P4 thought the iPad was heavy for them to hold, and P11 mentioned that looking at the iPad in his hands could lead to neck pain. All participants agreed that the current level of fatigue was acceptable; as P4 said: “It was not very tiring. It was fine.” However, P12 noted that if they played the game for longer periods or in a larger space, they might feel more tired.

Participants did not consciously divide the physical space: “I do not think we have, like, a you go on this side, you go on this side strategy … So I think there were a couple of times where even we moved around each other, like it really was not something that we divided up beforehand. It was just as needed.” (P8) A participant’s position around the table mainly depended on the game task they were performing. For example, participants reported tweaking their positions as they moved villagers around: “I think I was initially standing over here, and then my villagers went somewhere else, so I followed.” (P2) But sometimes it was affected by their partner and other items in the room: “We were trying not to bump into each other.” (P9) “The availability of physical space, like whether or not there is a chair, also influenced our decision on where to stand and where to move.” (P10).

In general, participants were not worried about bumping into each other while moving. Being able to see the real-world surroundings when using AR helped participants coordinate: “Maybe we had some awareness of the other one, so we did not bump into to each other … Maybe we were not focusing on the other person, but we could see the periphery of vision. So I think we were not consciously aware of it, but we managed it instinctively.” (P6) Two pairs of participants collided once during their sessions, but they did not mind. P12 said he “kind of expected to have a collision” in an indoor environment with limited space, and they could quickly reorient and continue to play the game after a collision. Some participants also noted collisions in an AR environment using a handheld device can be less dangerous, making them less worried about bumping into each other: “We did bump into each other, so it was more than a worry–it absolutely happened. But I was not really worried about it because we did not have headsets. We were still able to see around very easily, and we were not lost in the virtual world. So even if we did bump into each other, it was not hard or painful.” (P8).

Participants agreed that it was important to play the game collaboratively, and that collaboration was necessary to get a good score. Three subthemes capture aspects of participants’ views on collaboration.

Participants felt they could easily collaborate during the gameplay. They worked together most of the time, except for a short period at the beginning when they familiarized themselves with the game elements. Participants did not consciously split up tasks–they started collaborating naturally. The strategies they used were similar: they first explored the scene, making sure they both could find all the villagers and signs. Then they selected a few signs and moved villagers there. After that, they calculated their team score, adjusted a villager’s position, or moved a villager to another sign until they both agreed the score could no longer be increased. Participants coordinated their actions, as P7 mentioned: “Sometimes, like, if you had a villager at one sign and you would move it to another sign to see if the sightlines would change, I would move my villager to where your villager left, taking up the space to make sure that you could still have a villager there.”

Participants liked the idea of each person controlling two villagers. P6 considered that this design prompted their collaboration: “Because you only have two villagers, if you want to test different signs, you might need to convince the other participant to try that. If we’re not working together, we cannot find the best solution.” P9 mentioned that splitting control of the villagers gave players a sense that they were making an equal effort in playing the game. P10 believed that controlling half of the villagers made delegating tasks easier: “I think task splitting was assisted by the game itself, where two villagers will be assigned to this player, and the other two villagers will assigned to the other player. So I think the first delegation of task is given by the game itself.”

All participants found pointing useful in collaboration. Some expressed a preference for on-screen pointing (Figure 3A). P5 and P6 thought that behind-screen pointing was not accurate enough, while P10 found that fingers superimposed on the terrain obstructed his view and his partner’s view. Moving hands behind the screen broke the AR illusion for some participants: P1, P7, and P8 disliked behind-screen pointing because they realized that they were actually pointing at an empty table, which made them feel “weird”.

Participants could easily understand each other’s pointing most of the time; however, four pairs reported that the screen size of iPads limited their view. They could not see virtual objects outside of the field of view of their screen, making them spend more time looking for the object their partner was pointing at: “If I’m standing here with my iPad, I’m pointing to something on that part and on that part, maybe he can see the pointer is somewhere here. But when I keep moving it on that side, I think it just disappears from his viewpoint. Even if it is [behind-screen pointing] visible for him in reality, from the iPad perspective, I do not think he got it.” (P10).

Participants felt they communicated verbally most of the time and found it easy to understand each other. Four participants (P3, P4, P9, P10) mentioned that having a common goal and being able to see a common scene facilitated their comprehension. Some non-verbal information also helped participants to communicate. For example, P8 indicated that she could guess her partner’s intention based on her gestures. P7 and P10 also pointed out that being able to see the paths of each other’s villagers (Figure 2C) helped them understand the strategies their collaborators had in mind.

Interestingly, each participant directly looked at their partner’s screen at least once during the game session (Figure 13B). They identified two reasons for this action: instinct and problem solving. For example, even though they already knew that both players saw the game scene, P3 and P8 said they instinctively wanted to make sure they and their partners were seeing the same content, while P5, P9, and P12 looked at their partner’s screen to mitigate the issue of limited field of view in pointing: “I physically moved to where he was standing, and he showed me his outlook on the view. That helped a lot.” (P5).

All participants liked Sightline, finding it fun and interesting. P6 felt curious about the AR scene, while P1, P2, P7, and P8 said they were highly motivated and became competitive to get a higher score.

Participants found interacting with AR to be a learning process. P9 felt the game provided immediate knowledge transfer: “When you see something, you can apply to immediately. It is not like you have to store it and then apply it later on. So immediate knowledge transfer is what helped me pick it up.” P10 added that playing with a partner is also a learning process: “I think the game became more interesting to us, because whenever I made a mistake, I looked at his performance. He did better than me at some point, so I was learning from him. I was getting some feedback from him, and vice versa.”

The 3D nature of AR scenes took additional time for some participants to get used to. All participants could easily think of moving their iPad in a horizontal direction, but some of them did not initially consider bending down to observe the scene from a villager’s perspective (Figure 6): “It never occurred to us to see through the perspective of the virtual characters. We were viewing from the top most of the time until you pointed out that we could try that strategy.” (P6) However, after an investigator suggested that they could try lowering the iPad to see the scene from different levels, all participants got the idea and used it to determine sightlines.

In summary, participants enjoyed interaction in the AR environment. They moved around the virtual artifacts and viewed them from different perspectives. They communicated verbally with each other, and being able to see the same content and use deictic pointing aided their conversations. Participants found interactions in the AR environment natural and intuitive. However, some of them experienced fatigue and confusion caused by movement and limited screen size.

We have shown how participants behaved when playing the Sightline game and have presented their subjective experiences. In this section, we revisit our research questions, discussing participants’ perceptions of AR and how they interacted with their partner and AR artifacts. This allows us to return to our overarching question of the success of the AR illusion. We then suggest implications for the design of collocated AR games based on our findings.

The study provided three sources of data addressing our three research questions: thematic analysis of participant interviews, analysis of gameplay videos, and questionnaires. Together, these provide a consistent picture of participants’ behaviours and experiences.

Participants made significant use of kinesthetic interaction to solve their gameplay task. All 12 took on similar poses when interacting with the AR scene (Figure 8). These interactions drew on how people interact with the physical world, such as moving around the object of observation, crouching, leaning, and stretching. Participants frequently switched between these poses (Section 5.1.1). They spent most of their time standing up straight (Figure 7), which is likely because standing is more comfortable than crouching or leaning. They changed their poses as needed, for example, raising their arms to get an overview, or bending down to see details (Figure 8).

Participants used the table space dynamically. They spent nearly one-fifth of the time physically moving around the table (Figure 8). As discussed in Section 5.3, participants implicitly negotiated shared physical space and each established a “home base” to which they returned after moving around the table. As they moved, participants had little sense of ownership of space. They largely had no problems coordinating positions, while very occasionally bumping into each other. Participants did not consider close proximity to their partner to be a problem (Section 6.1.4). However, this may be because participants in this study already knew each other. Proximity and risk of collision might be more of an issue between strangers.

Fatigue was noticed by some participants, both in holding the iPad, and in performing physical movements such as bending and crouching (Section 6.1.3). Fatigue did not prevent the use of and enjoyment of kinesthetic interaction. Fatigue of some participants was caused by a mismatch between their height and that of the table, suggesting that adjusting the height of the table (or at least of the virtual scene) would have enhanced usability. People’s diverse abilities also need to be considered, as one participant with arthritis noted that some movements could be painful. For people with mobility impairments, it may be difficult or even impossible for them to use an AR application that requires kinesthetic interaction. Therefore it is important to provide alternative technologies that do not require these physical movements.

In summary, participants embraced the idea of kinesthetic interaction and interacted with virtual objects and the surrounding space physically. They looked at AR artifacts from different perspectives, walked around, and switched between multiple poses. Participants frequently changed their positions. They maintained a home position, but beyond that did not possess a strong sense of territory. These are all natural interactions originating from everyday life, supporting the idea that participants were comfortable interacting bodily as they would in a physical environment, and hence had embraced the AR illusion.

All participants agreed that collaboration was essential to getting a good score in the game and worked together most of the time (Section 6.2.1). They communicated extensively and collaborated through various modalities. The forms of collaboration can be explained through the framework of Gutwin and Greenberg (2002) as follows:

Feedthrough: Virtual objects aided communication as they changed with participants’ manipulations and updated synchronously on both iPads. For example, when one participant moved a villager, the other participant could see where the villager was going by looking at the end point of the villager’s path (Section 6.2.3). Some participants also used the path as a supplement to gestural deixis (Section 5.2.3).

Consequential Communication: Participants were attentive to their partner, allowing them to gain information generated from their partner’s bodily movement. As discussed in Section 5.2.4, participants mainly looked at their iPad’s screen, but they could maintain awareness of their partner by seeing them through the iPad (Figure 13A) or using their peripheral vision. They directly glanced at their partner when they wanted to get their attention. This behaviour of rarely directly looking at their partner is consistent with earlier studies (Rekimoto, 1996; Poretski et al., 2021a). As discussed in Section 5.1.2, participants felt that they paid attention to each other and could understand each other’s intentions.

Intentional Communication: Participants made significant use of verbal communication (Section 6.2.3). They talked almost continuously (Section 5.2.2), and their conversations were balanced and mainly focused on the game (Figure 11). They also used gestural deixis to supplement language in communicating their intentions (Section 5.2.3). Interestingly, participants used verbal deixis to refer to villagers or unique objects in the scene, and used pointing methods provided by the game to indicate signs. This suggests that participants preferred language over pointing when referencing an object that could be identified by a specific name.

Participants’ collaboration fell into all three dimensions of Gutwin and Greenberg’s collaboration framework. This suggests that AR affords rich modalities for collaboration. This is in part because participants used similar modalities to physical interaction, particularly by pointing at virtual artifacts and looking at their partner through the iPad (Figure 13A) and using their peripheral vision.

Participants clearly used the virtual objects in the scene as conversational props, frequently referencing signs, villagers, and terrain while coordinating and explaining strategy (Figure 12). This revealed an area where the AR illusion broke down, however, for those participants that preferred on-screen pointing over gesturing directly into the AR scene (Figure 3). This was due to participants’ concerns around accuracy of behind-screen pointing and feeling “weird” pointing into empty space (Section 6.2.2).

Participants expressed positive feelings about AR interactions. Being able to move around not only helped them explore and understand the 3D game scene (Section 6.1.2), but also provided them with a fun experience (Section 6.3).

Participants were shown the basics of AR interaction while completing a tutorial level, but the tutorial was mainly directed to how to play the game, allowing the participants to discover how to interact with the AR scene largely on their own. The physical poses adopted by participants (standing, crouching, bending, etc.) are natural forms drawn from real-world experience. Participants reported the interactions as easy to understand and naturally corresponding to real-world actions (Section 6.1.1). As was stated by P11, these are “real-world mechanics”. This experience differs from some gestures devised explicitly for AR/VR interaction, such as pinch-to-select gestures (Hoggan et al., 2013). Such gestures can be learned and can be effective in interacting in mixed-reality spaces, but are not truly natural interactions, as they are not how people interact in the physical world. And unlike the sometimes underwhelming experiences of natural interaction in Kinect games (Section 2.1), participants felt that the movement was a natural part of performing their task. As a result, participants enjoyed the Sightline game, including the need to move while playing, showing a primary advantage of designing around the affordances of AR.

In addition, without prompting, participants expressed a general preference for using an iPad over a headset. P7 posited that being able to see the real environment could reduce cybersickness, making bodily movement in AR more comfortable (Section 6.1.2). The use of the iPad also made participants less worried about bumping into each other, as they could easily coordinate positions and react to collisions. In general, use of an iPad allowed participants to stay firmly rooted in the physical world even while interacting with virtual artifacts.

The above results suggest that AR can provide natural kinesthetic interactions that are accepted and enjoyed by users. Participants found the interactions intuitive and easy to perform and were comfortable interacting in an AR environment. The above findings are largely consistent with the research questions and hypotheses. However, we found some exceptions in the acceptance of the AR illusion, which are discussed in the next section.

The fundamental idea of AR is that by overlaying virtual artifacts on the physical world, an enhanced world is created where virtual entities can be treated as if they belong in the physical world. Users in AR environments need to understand and embrace this illusion to interact with an augmented scene, and in the case of Sightline, to better collaborate with their partner. For example, they are expected to move through the physical environment to observe the virtual objects from different perspectives and use real-world deictic gestures to interact, rather than interacting solely through their screen. In our study, participants easily and enthusiastically adopted this illusion, as they perceived interacting with virtual objects to be similar to interacting with real objects. Participants did retain, however, some perception that the virtual scene belonged to their iPad, instead of seamlessly connecting with the physical space on which it was situated.

Each pair of participants saw the common scene and could see each other either directly or through their screen (Figure 13A). By embracing the illusion of AR, participants successfully inferred which part of the scene their partners were looking at, based on the partner’s position, pose, and gaze; additionally, they could move their screen to where their partner stood to determine what their partner could see. Participants largely maintained awareness of their partner through peripheral vision or through the iPad screen, rarely looking directly at their partner. However, this illusion broke down at least once for each participant, as evidenced by their looking directly at their partner’s screen (Section 5.2.4). Participants reported trying to confirm that the scenes they saw were consistent or to resolve problems encountered in collaboration (Section 6.2.3). Both intentions indicate that participants were clearly aware of the presence of the screen. The break in the AR illusion can interrupt participants’ workflow of collaboration, making them stop the task at hand and take time to comprehend the information on their partner’s screen.

Moreover, although the game afforded behind-screen pointing using the same gestures as real-life pointing, participants preferred on-screen pointing (Section 6.2.2). Some participants were reluctant to put their fingers behind the screen because they knew that the object they were pointing at was invisible in the physical environment. They found it unnatural to point their fingers at what they knew to be an empty table in the real world, even though they could see their fingers pointing at an AR artifact on the table through the iPad display. These observations indicate that participants retained awareness that they were interacting with virtual objects on a screen, rather than being fully immersed in the AR environment. This feeling may be due in part to the limited screen size of the iPad: the borders of the screen kept participants constantly aware of the boundary between the virtual and the real. In addition, the interaction of tapping on the screen to manipulate the virtual villagers may also have reinforced participants’ awareness of the screen.

Overall, we found that participants successfully embraced the illusion of AR. Participants moved around the AR scene as if it were physically present on the table, using natural gestures such as leaning in to see more detail and stretching up to see an overview. Participants maintained awareness of their partner through peripheral vision or the iPad screen, rarely consciously looking at their peers. They were able to infer where their partner was paying attention based on their partner’s position, posture, and gaze, and to move the screen to where their partner was standing to determine what their partner could see. The illusion was at times broken, however, with participants occasionally looking at their partner’s screen and some participants preferring on-screen to behind-screen pointing.

Specific elements of the Sightline game enhanced or weakened participants’ experience with the AR illusion, which may provide insights into the design of future AR applications. We highlight five recommendations as potentially useful.

Understand the limits of the AR illusion. As discussed in Section 7.2, participants interacted with AR artifacts physically, but some preferred on-screen pointing and looked at each other’s screens. Designers should therefore understand that the AR illusion may be effective most of the time, but may break down in some situations. Using devices with a wider field of view and making it easier to manipulate objects through behind-screen gestures may mitigate AR illusion disruptions.

Use awareness cues for pointing. Because of the limited field of view of handheld devices, participants sometimes did not notice that their partner was performing a pointing gesture (Section 6.2.2). Additional cues are needed in AR games to help players find a referenced location. For example, an arrow could be shown at the edge of the screen to indicate that the partner is pointing at a location outside the area covered by the screen, and to show the direction of the referenced location.
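
As a rough illustration of such an edge cue, the following Python sketch computes where an arrow might be drawn. It is not taken from Sightline: the function name `edge_arrow`, the `margin` parameter, and the screen coordinate convention (origin at the top-left, y pointing down) are assumptions made for this example. The sketch also presumes that the referenced location has already been projected into screen coordinates and lies in front of the camera.

```python
import math
from typing import Optional, Tuple


def edge_arrow(target_x: float, target_y: float,
               width: float, height: float,
               margin: float = 20.0) -> Optional[Tuple[float, float, float]]:
    """Return (x, y, angle_in_radians) for an edge arrow, or None if the
    target is already visible on screen.

    Hypothetical helper: assumes screen coordinates with the origin at the
    top-left corner, and a margin smaller than half the screen size.
    """
    # No cue is needed when the referenced location is on screen.
    if 0 <= target_x <= width and 0 <= target_y <= height:
        return None

    # Vector from the screen centre to the (off-screen) target.
    cx, cy = width / 2, height / 2
    dx, dy = target_x - cx, target_y - cy

    # Shrink that vector until it just touches a rectangle inset by `margin`.
    half_w, half_h = cx - margin, cy - margin
    scale = min(half_w / abs(dx) if dx else math.inf,
                half_h / abs(dy) if dy else math.inf)

    # The arrow sits on the inset edge and points toward the target.
    return (cx + dx * scale, cy + dy * scale, math.atan2(dy, dx))
```

Under these assumptions, a location projected beyond the right border of the display yields an arrow near the right edge pointing in the positive x direction, while an on-screen location returns `None`, so no cue is drawn. A deployed application would also need to handle locations behind the camera, which this sketch does not attempt.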

Label objects to support verbal deixis. In Sightline, each villager is identified by a unique name. There are also some recognizable obstacles in the scene. Participants rarely pointed at these objects with their fingers or beacons, but referred to them by name in conversation (Section 5.2.3). This indicates that people may prefer to use verbal reference rather than pointing gestures to identify objects with specific names. For AR objects that need to be referenced and manipulated frequently, it may be worth labeling them to simplify communication.

Take advantage of 3D workspace in AR to promote kinesthetic interaction. The Sightline game uses three-dimensional terrain and decorations, which encouraged participants to observe the scene from different perspectives and distances (Section 6.1.2). For AR games that aim to promote player movement, adding more 3D elements for players to explore may help facilitate their adoption of kinesthetic interaction.

Consider fatigue. Some participants experienced fatigue (Figure 10) due to holding the iPad and maintaining uncomfortable poses such as crouching (Section 6.1.3). AR artifacts should be placed to avoid the need for extended uncomfortable poses. AR games that require sustained bodily movement also need to limit the scale of the game scene. Additionally, AR applications should be designed with accessibility in mind, providing alternative methods of changing perspectives for users who have difficulties in moving around and crouching.

Our study found that participants dynamically interacted with AR environments and engaged in conversations around virtual objects. Our video analysis, thematic analysis, and questionnaire data were consistent over the questions studied, adding confidence to our results. However, this study is limited by its focus on two-person collocated collaboration in a single game. Future work will be required to confirm these results in other AR applications and with larger groups. Moreover, our study was limited to 12 participants. Data from later participants was similar to that of earlier ones, indicating that data saturation had been reached. As Guest et al. (2006) have suggested, once saturation has been reached, it is possible to draw conclusions from a population, even if the number of study participants is small. The participants knew each other in advance, likely enhancing their ability to collaborate effectively; performance may be different for collaborators who have not met before. Finally, this study had good gender balance (7 females and 5 males), but most pairs were of the same gender. Further study with more diverse groups of players would be of interest.

In this paper, we investigated the perception of the AR illusion by six pairs of collocated participants while playing the Sightline AR game. Participants were found to move frequently, adopting various postures to observe AR artifacts from different perspectives. The artifacts also functioned as conversational props, with participants referring to them verbally and by pointing. These findings suggest that participants largely embraced the illusion of AR. However, we observed occasional interruptions in the AR illusion, where participants noticed the presence of the screen and perceived the AR artifacts as belonging to the screen. These observations have implications for the design of AR applications.

The datasets presented in this article are not readily available because our primary data is in video format and cannot be anonymized. Requests to access the datasets should be directed to Jifan Yang, jifan.yang@queensu.ca.

The studies involving humans were approved by General Research Ethics Board (GREB), Queen’s University, Kingston, Ontario, Canada. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any identifiable images or data included in this article.

JY: Conceptualization, Formal Analysis, Investigation, Methodology, Software, Writing–original draft, Writing–review and editing. SB: Funding acquisition, Project administration, Writing–review and editing. AB: Writing–review and editing. RH: Writing–review and editing. ZM: Writing–review and editing. AM: Writing–review and editing. TG: Conceptualization, Formal Analysis, Methodology, Supervision, Writing–original draft, Writing–review and editing.

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. We acknowledge the generous support of the Social Sciences and Humanities Research Council of Canada (SSHRC) through the Environments of Change partnerships project (Grant No: 895-2019-1015).

An early version of this work appeared as the M.Sc. thesis of the first author (Yang, 2023).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2024.1428765/full#supplementary-material

Abeele, V. V., Spiel, K., Nacke, L., Johnson, D., and Gerling, K. (2020). Development and validation of the Player Experience Inventory: a scale to measure player experiences at the level of functional and psychosocial consequences. Int. J. Human-Computer Stud. 135, 102370. doi:10.1016/j.ijhcs.2019.102370

Ahmed, N., Lataifeh, M., Alhamarna, A. F., Alnahdi, M. M., and Almansori, S. T. (2021). “LeARn: a collaborative learning environment using augmented reality,” in 2021 IEEE 2nd international conference on human-machine systems (ICHMS) (IEEE), 1–4. doi:10.1109/ICHMS53169.2021.9582643

Aliprantis, J., Konstantakis, M., Nikopoulou, R., Mylonas, P., and Caridakis, G. (2019). “Natural interaction in augmented reality context,” in Workshop on visual pattern extraction and recognition for cultural heritage, 81–92.

Andres, J., Semertzidis, N., Li, Z., Wang, Y., and Floyd Mueller, F. (2023). Integrated exertion—understanding the design of human–computer integration in an exertion context. ACM Trans. Computer-Human Interact. 29, 1–28. doi:10.1145/3528352

Asadzadeh, A., Samad-Soltani, T., and Rezaei-Hachesu, P. (2021). Applications of virtual and augmented reality in infectious disease epidemics with a focus on the COVID-19 outbreak. Inf. Med. Unlocked 24, 100579. doi:10.1016/j.imu.2021.100579

Azad, A., Ruiz, J., Vogel, D., Hancock, M., and Lank, E. (2012). “Territoriality and behaviour on and around large vertical publicly-shared displays,” in Proceedings of the designing interactive systems conference (New York, NY, USA: Association for Computing Machinery), 468–477. DIS ’12. doi:10.1145/2317956.2318025

Azuma, R., Baillot, Y., Behringer, R., Feiner, S., Julier, S., and MacIntyre, B. (2001). Recent advances in augmented reality. IEEE Comput. Graph. Appl. 21, 34–47. doi:10.1109/38.963459

Billinghurst, M., Clark, A., and Lee, G. (2015). A survey of augmented reality. Found. Trends® Human–Computer Interact. 8, 73–272. doi:10.1561/1100000049

Braun, V., and Clarke, V. (2019). Reflecting on reflexive thematic analysis. Qual. Res. Sport, Exerc. Health 11, 589–597. doi:10.1080/2159676X.2019.1628806

Bujak, K. R., Radu, I., Catrambone, R., MacIntyre, B., Zheng, R., and Golubski, G. (2013). A psychological perspective on augmented reality in the mathematics classroom. Comput. Educ. 68, 536–544. doi:10.1016/j.compedu.2013.02.017

Carmigniani, J., Furht, B., Anisetti, M., Ceravolo, P., Damiani, E., and Ivkovic, M. (2011). Augmented reality technologies, systems and applications. Multimed. Tools Appl. 51, 341–377. doi:10.1007/s11042-010-0660-6

Cavaleri, J., Tolentino, R., Swales, B., and Kirschbaum, L. (2021). “Remote video collaboration during COVID-19,” in 2021 32nd annual SEMI advanced semiconductor manufacturing conference (ASMC) (IEEE), 1–6. doi:10.1109/ASMC51741.2021.9435703

Chen, L., Liu, Y., Li, Y., Yu, L., Gao, B., Caon, M., et al. (2021). “Effect of visual cues on pointing tasks in co-located augmented reality collaboration,” in Symposium on spatial user interaction (New York, NY, USA: Association for Computing Machinery). SUI ’21. doi:10.1145/3485279.3485297

Chettaoui, N., Atia, A., and Bouhlel, M. S. (2022). Examining the effects of embodied interaction modalities on students’ retention skills in a real classroom context. J. Comput. Educ. 9, 549–569. doi:10.1007/s40692-021-00213-9

Cibrian, F. L., Tentori, M., and Martínez-García, A. I. (2016). Hunting Relics: a persuasive exergame to promote collective exercise in young children. Int. J. Human–Computer Interact. 32, 277–294. doi:10.1080/10447318.2016.1136180

De Ioannes Becker, G., and Hornecker, E. (2021). “Sally&Molly: a children’s book with real-time multiplayer mobile augmented reality,” in Extended abstracts of the 2021 annual symposium on computer-human interaction in play (New York, NY, USA: Association for Computing Machinery), 80–86. CHI PLAY ’21. doi:10.1145/3450337.3483498

De Kort, Y., Ijsselsteijn, W., and Poels, K. (2007). Digital games as social presence technology: development of the social presence in gaming questionnaire (SPGQ). Presence 195203, 1–9.

De Valk, L., Rijnbout, P., Bekker, T., Eggen, B., De Graaf, M., and Schouten, B. (2012). Designing for playful experiences in open-ended intelligent play environments, 3–10.

Ens, B., Lanir, J., Tang, A., Bateman, S., Lee, G., Piumsomboon, T., et al. (2019). Revisiting collaboration through mixed reality: the evolution of groupware. Int. J. Human-Computer Stud. 131, 81–98. doi:10.1016/j.ijhcs.2019.05.011

Fogtmann, M. H., Fritsch, J., and Kortbek, K. J. (2008). “Kinesthetic Interaction: revealing the bodily potential in interaction design,” in Proceedings of the 20th australasian conference on computer-human interaction: designing for habitus and habitat (New York, NY, USA: Association for Computing Machinery), 89–96. OZCHI ’08. doi:10.1145/1517744.1517770

Gabbard, J. L., Smith, M., Tanous, K., Kim, H., and Jonas, B. (2019). AR DriveSim: an immersive driving simulator for augmented reality head-up display research. Front. Robotics AI 6, 98. doi:10.3389/frobt.2019.00098

Gandy, M., Catrambone, R., MacIntyre, B., Alvarez, C., Eiriksdottir, E., Hilimire, M., et al. (2010). “Experiences with an AR evaluation test bed: presence, performance, and physiological measurement,” in 2010 IEEE international symposium on mixed and augmented reality, 127–136. doi:10.1109/ISMAR.2010.5643560

Ghadirian, H., Ayub, A. F. M., Silong, A. D., Bakar, K. B. A., and Hosseinzadeh, M. (2016). Group awareness in computer-supported collaborative learning environments. Int. Educ. Stud. 9, 120–131. doi:10.5539/ies.v9n2p120

Gorsic, M., Clapp, J. D., Darzi, A., and Novak, D. (2019). A brief measure of interpersonal interaction for 2-player serious games: questionnaire validation. JMIR Serious Games 7, e12788. doi:10.2196/12788

Guest, G., Bunce, A., and Johnson, L. (2006). How many interviews are enough? An experiment with data saturation and variability. Field methods 18, 59–82. doi:10.1177/1525822x05279903