- ¹Electronic Engineering, Maynooth University, Maynooth, Ireland

- ²Computer Science, Maynooth University, Maynooth, Ireland

- ³LAAS-CNRS, Université de Toulouse, Toulouse, France

- ⁴Irish Air Corps, Casement Aerodrome, Baldonnel, Ireland

- ⁵Mechatrons, Greenogue Business Park, Baldonnel, Ireland

The aerospace industry prioritises safety protocols to prevent accidents that can result in injuries, fatalities, or aircraft damage. One hazard when manoeuvring aircraft into and out of a hangar is collision with other aircraft or with buildings, which can lead to operational disruption and costly repairs. To tackle this issue, we have developed the Smart Hangar project, which aims to alert personnel to increased risks and prevent incidents. The Smart Hangar project uses computer vision, LiDAR, and ultra-wideband sensors to track all objects and individuals within the hangar space. These data inputs are combined to form a real-time 3D Digital Twin (DT) of the hangar environment. The Active Safety system then uses the DT to perform real-time path planning, collision prediction, and safety alerting for tow truck drivers and hangar personnel. This paper provides a detailed overview of the system architecture, including the technologies used, and reports on the system's performance. By implementing this system, we aim to reduce the risk of accidents in the aerospace industry and increase safety for all personnel involved. Additionally, we identify future research directions for the Smart Hangar project.

1 Introduction

A Digital Twin (DT), as defined by Jones et al. (2020), is a virtual counterpart of a real-world entity with a data connection between the two. The entity may be a physical object, such as an aircraft, or a facility, such as a factory. DTs are widely used in many industries to plan and simulate safety, production, maintenance, and security operations, and to simulate the impact of changes and improvements to systems and processes while maintaining the relationship between the physical and virtual spaces. The use of a physical twin dates back to the 1970s (Errandonea et al., 2020), when physical twins were used during the Apollo 13 mission. Two physical models of the spacecraft were used: one was launched on the mission, and the other remained on the ground for use by the ground crew. This method was expensive, but it proved very successful because it allowed the ground crew to develop effective solutions that ensured the safety of the astronauts. The introduction of DTs in the early 2010s (Li et al., 2022) delivered similar benefits at lower cost and offered new simulation capabilities.

When creating a DT, it is important to consider five dimensions: physical, virtual, data, connection, and service modelling (Liu et al., 2018). The DT models the physical dimensions of the real world and may model some of the dynamic elements in the system, e.g., people, vehicles, and processes. For a DT to be a real-time representation of the physical world, live sensor data must be fed into the system. Fusing data from multiple real-world sensors to update the representations within the virtual world produces a more complete model by reducing blind spots and improving model accuracy. A better model improves prediction and makes decision-making more reliable (Liu et al., 2018).

When we consider the use of a DT for active safety, it is important to consider the characteristics of the problem domain. Safety in an aircraft hangar is crucial for creating a safe working environment for personnel and machines. Many issues must be considered when evaluating hangar safety, from operational hazards when working with machinery to excessive noise and low lighting. Neglecting hangar safety can lead to injuries, near misses, or other hazards within the hangar (Gharib et al., 2021). Moving aircraft within a busy hangar can be challenging, requiring precision and a well-trained team. When manoeuvring an aircraft, several aspects need to be considered: the initial and final positions of the aircraft, the aircraft type, overall aircraft scheduling, avoidance of foreign object debris (FOD), etc. Safety and efficiency are closely linked when parking aircraft: a greater emphasis on safety may reduce short-term efficiency.

Several approaches to improving the safety of hangar operations can be found in the literature. Examples include improved path planning for busy hangars (Wu and Qu, 2015) and sensors positioned on the aircraft to detect potential collisions (Cahill et al., 2013; Khatwa and Mannon, 2018). When the ground crew are manoeuvring an aircraft, they typically follow clearly marked paths; collisions tend to occur when the path markings are unclear or when objects protrude into the exclusion zone. Attaching sensors to the aircraft, either permanently or temporarily, can be challenging because of cost or limited physical access.

The pushback or towing of aircraft is often accomplished using a tow truck. Modelling the kinematics of the tow truck-aircraft system allows accurate simulation of the trajectory in confined spaces (Wang et al., 2012; Liu et al., 2019). Simulating the towing operation within the DT offers a safe and secure way to improve physical systems and gather data in the virtual environment (Wang et al., 2021). The Axis-Aligned Bounding Box (AABB) collision detection algorithm can be used to detect potential collisions (Du et al., 2021). Rectangular bounding boxes are commonly used to represent parts of the aircraft because their simple shape offers reliable collision detection at a low computational cost.
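
As an illustration of the kind of kinematic model cited above, the sketch below uses the standard single-trailer model with an on-axle hitch: the tow truck is a car-like vehicle with wheelbase `L1` and steering angle `delta`, and the aircraft is treated as a trailer that pivots about its main-gear midpoint, a distance `L2` behind the hitch. This simplified model, the forward-Euler integration, and all names and values are assumptions for illustration, not the formulations of Wang et al. (2012) or Liu et al. (2019).

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TowState:
    x: float       # tow truck rear-axle (hitch) x position, m
    y: float       # tow truck rear-axle (hitch) y position, m
    theta0: float  # tow truck heading, rad
    theta1: float  # aircraft heading, rad


def step(s: TowState, v: float, delta: float,
         L1: float, L2: float, dt: float) -> TowState:
    """One forward-Euler step of the on-axle-hitch tractor-trailer model.

    v: tow truck speed (m/s, positive = forward); delta: steering angle (rad);
    L1: tow truck wheelbase (m); L2: hitch-to-main-gear distance (m).
    """
    return TowState(
        x=s.x + v * math.cos(s.theta0) * dt,
        y=s.y + v * math.sin(s.theta0) * dt,
        theta0=s.theta0 + (v / L1) * math.tan(delta) * dt,
        # When driving forward, the aircraft heading relaxes towards the truck heading.
        theta1=s.theta1 + (v / L2) * math.sin(s.theta0 - s.theta1) * dt,
    )


def main_gear_position(s: TowState, L2: float) -> Tuple[float, float]:
    """Aircraft pivot point (main-gear midpoint), L2 behind the hitch."""
    return (s.x - L2 * math.cos(s.theta1), s.y - L2 * math.sin(s.theta1))


def simulate(s: TowState, v: float, delta: float, L1: float, L2: float,
             dt: float, steps: int) -> Tuple[TowState, List[Tuple[float, float]]]:
    """Integrate the model; return the final state and the main-gear trajectory."""
    path = [main_gear_position(s, L2)]
    for _ in range(steps):
        s = step(s, v, delta, L1, L2, dt)
        path.append(main_gear_position(s, L2))
    return s, path


# Hypothetical values: 3 m wheelbase, 10 m hitch-to-main-gear distance,
# 1 m/s straight ahead, aircraft initially 0.3 rad off the truck heading.
final, path = simulate(TowState(0.0, 0.0, 0.0, 0.3), v=1.0, delta=0.0,
                       L1=3.0, L2=10.0, dt=0.1, steps=300)
```

The predicted main-gear path (together with the aircraft outline attached to it) is what a DT could sweep through its collision checks. Note that with `v < 0` (pushback) this model is unstable, which is one reason reversing manoeuvres are harder to predict than forward tows.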

Real-time sensor data are central to using the DT for active safety. Real-time data can be used to detect hazards, simulations within the DT can predict the risk of accidents, and customised alarms can be delivered to personnel according to their physical location and role (Liu et al., 2020). Aircraft maintenance requires continuous evaluation of vehicles and machines to prevent damage to aircraft. Hangar personnel follow strict maintenance procedures to ensure that the aircraft is fully operational and safe. DTs have been used in maintenance to improve operational time and prevent human error [XXX]. Hangar maintenance with DT technology depends on several factors, including accurate digitalisation of the environment, reliable analytical tools to evaluate the data from the DT, automation of the operations involved in the maintenance process, and intelligent production to replace damaged parts (Novák et al., 2020).

Accurate virtual avatars of objects and people are important in creating a reliable DT for path planning and collision detection. Fusing data from multiple sensors with different viewpoints and capabilities can fill gaps in coverage and assist the DT in decision-making, for example by making path planning and collision avoidance more robust (Liu et al., 2020).
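
One common fusion rule is inverse-variance weighting of independent position estimates, sketched below. It assumes each sensor reports a 2D floor position with a known, isotropic standard deviation; the values in the usage line are hypothetical, loosely based on the accuracies reported in the evaluation (about 10 cm for UWB and ±40 cm for the cameras), and the function name is illustrative. This is not necessarily the fusion method used in Smart Hangar.

```python
from typing import List, Tuple

Estimate = Tuple[float, float, float]  # (x in m, y in m, standard deviation in m)


def fuse_positions(estimates: List[Estimate]) -> Estimate:
    """Inverse-variance weighted mean of independent 2D position estimates.

    Each estimate is weighted by 1/sigma^2, so more precise sensors dominate;
    the fused standard deviation is sqrt(1 / sum(1/sigma^2)).
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / (sigma ** 2) for _, _, sigma in estimates]
    total = sum(weights)
    x = sum(w * ex for w, (ex, _, _) in zip(weights, estimates)) / total
    y = sum(w * ey for w, (_, ey, _) in zip(weights, estimates)) / total
    return x, y, (1.0 / total) ** 0.5


# Hypothetical readings for one tagged person: UWB (sigma 0.1 m), camera (sigma 0.4 m).
fused = fuse_positions([(12.0, 5.0, 0.1), (12.4, 5.2, 0.4)])
```

The fused estimate sits close to the UWB reading and has a smaller standard deviation than either input, which is the sense in which fusion "improves model accuracy"; the camera estimate still matters for untagged objects that UWB cannot see.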

2 Materials and methods

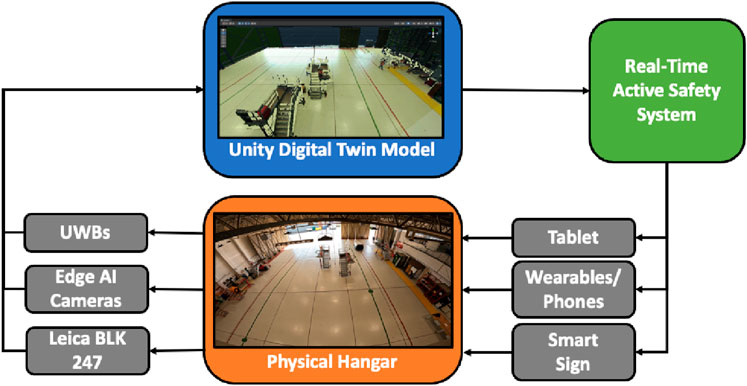

This section describes our research to build and evaluate a Smart Hangar system at Baldonnel Aerodrome, Dublin, Ireland. The system uses computer vision, LiDAR and ultra-wideband (UWB) position-sensing beacons. The first step in the design of the DT was to identify the problems faced by hangar personnel. These included an unpredictable and busy schedule, foreign object debris (FOD) obstructing the tow truck's path, overcrowding of vehicles, and blind spots for the tow truck driver and wing walkers. Although the well-trained hangar team manages these issues, incidents still occur because of limits on human attention, fatigue, and distraction. The Smart Hangar DT was designed to represent an aircraft hangar in a virtual environment and provide real-time active safety for people and equipment. Figure 1 shows the Smart Hangar system and the data flows that connect the sensors, the DT, the Active Safety system, and the human interfaces for the alerts.

The DT communicated with the sensors in real time to build a virtual model of the hangar and simulate potential collision risks; the active safety system then managed the alerts to the ground crew. The virtual model was built in Unity3D from a LiDAR scan and physical measurements of the hangar, together with the sensor locations and fields of view. For active safety, the sensor data were fused in the DT to update the virtual models of people and vehicles, and the active safety system used the data in the DT to assess collision risk and apply other safety protocols. When an alert was raised, the active safety system routed it to the relevant human interface devices controlled by the system, such as wearables, smart signs, and the tow truck interface.
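
The routing step can be pictured as a lookup from each alert to the interfaces that should receive it. The sketch below is a hypothetical illustration: the `Device` and `Alert` types, the device kinds, the zone names and the matching rule are assumptions, not the Smart Hangar implementation.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Device:
    device_id: str
    kind: str   # hypothetical kinds: "wearable", "smart_sign", "tow_truck_tablet"
    zone: str   # hangar zone the device is currently in (or fixed at)


@dataclass
class Alert:
    zone: str                # zone where the predicted hazard occurs
    involves_tow_truck: bool


def route_alert(alert: Alert, devices: List[Device]) -> List[str]:
    """Return the IDs of the devices that should raise this alert.

    Hypothetical rule: every wearable or smart sign in the hazard zone is
    notified, and the tow truck tablet is notified whenever the tow truck is
    involved, wherever it is.
    """
    targets = []
    for d in devices:
        if d.kind == "tow_truck_tablet":
            if alert.involves_tow_truck:
                targets.append(d.device_id)
        elif d.zone == alert.zone:
            targets.append(d.device_id)
    return targets


devices = [
    Device("w1", "wearable", "door"),
    Device("w2", "wearable", "bay_2"),
    Device("s1", "smart_sign", "door"),
    Device("t1", "tow_truck_tablet", "bay_1"),
]
recipients = route_alert(Alert(zone="door", involves_tow_truck=True), devices)
```

Targeting only the people affected is consistent with the paper's aim of keeping interfaces silent unless an alert is needed.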

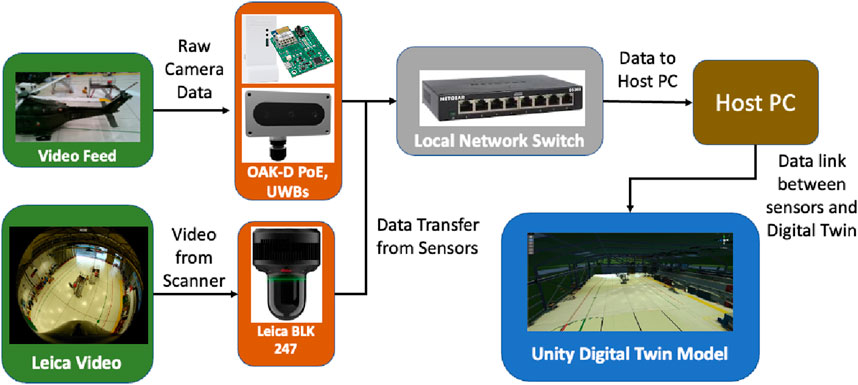

The sensors used in Smart Hangar were UWB anchors and tags, which provided accurate position estimates of tagged objects such as the wearables; Edge AI cameras (Luxonis Oak-D), which performed on-camera object tracking using a Myriad X chip and reported this high-level data to the DT; and a Leica BLK247 LiDAR scanner, which provided a 360° LiDAR scan of the hangar and a 360° video feed, as shown in Figure 2.

The LiDAR scanner was used to create a textured point cloud model of the empty hangar, which served as the base model for the DT together with 3D models of the aircraft and 3D scans of other objects in the environment. These pre-scanned models were then imported into the Unity3D environment to represent the real-world hangar.

In the real-time operational phase of the DT, the LiDAR scanner was mounted on the ceiling in the middle of the hangar. Some areas of the hangar could not be seen because of obstacles, so a combination of physical measurements and camera images was used to adjust the model. The LiDAR model, the UWB position data and the camera fields of view were aligned through calibration tests that used the grid pattern on the hangar floor as a calibration target. Both the UWB anchors and the cameras were placed at key locations to compensate for LiDAR blind spots, and their higher update rates kept the virtual model synchronised with the real world. The test zone used in our study measured 25 × 30 × 10 m (approximately half the hangar); it included the main door and provided valuable data on hangar operations, especially when manoeuvring aircraft.
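
Aligning a sensor's frame with the DT using surveyed floor-grid points is a point-set registration problem. A minimal sketch of one standard approach, a closed-form least-squares 2D rigid fit (the planar case of the Kabsch/Umeyama method), is given below. It assumes the grid intersections have already been identified in both frames and listed in the same order; the paper does not state which estimator was used, and the function names are illustrative.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def fit_rigid_2d(src: List[Point], dst: List[Point]) -> Tuple[float, float, float]:
    """Least-squares rotation theta and translation (tx, ty) mapping src onto dst."""
    n = len(src)
    if n < 2 or n != len(dst):
        raise ValueError("need at least two corresponding points")
    cxs, cys = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    cxd, cyd = sum(p[0] for p in dst) / n, sum(p[1] for p in dst) / n
    # Accumulate dot and cross products of the centred point pairs.
    s_dot = s_cross = 0.0
    for (xs, ys), (xd, yd) in zip(src, dst):
        ax, ay = xs - cxs, ys - cys
        bx, by = xd - cxd, yd - cyd
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid.
    tx = cxd - (c * cxs - s * cys)
    ty = cyd - (s * cxs + c * cys)
    return theta, tx, ty


def apply_rigid_2d(theta: float, tx: float, ty: float, p: Point) -> Point:
    """Rotate p by theta about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


# Hypothetical grid corners seen by a sensor, and the same corners in the DT frame.
grid_sensor = [(0.0, 0.0), (5.0, 0.0), (5.0, 5.0), (0.0, 5.0)]
grid_dt = [apply_rigid_2d(0.5, 2.0, -1.0, p) for p in grid_sensor]
theta, tx, ty = fit_rigid_2d(grid_sensor, grid_dt)
```

With noisy measurements, using more grid intersections than the minimum averages out individual measurement errors; the residual distances after alignment also give a quick check of calibration quality.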

Data were transferred between the sensors and the DT using a ROS 2-Unity bridge. We chose ROS 2 as our middleware because DDS Security offered the potential for more secure communications, an important consideration for our military stakeholders. The UWB system measures the distance to objects using the time-of-flight (ToF) method, in which distance is found from the time a pulse takes to travel between the tag and the anchors. The anchors were positioned in the four corners of the test area, 10 m above the ground. Typically, the three closest anchors were used to trilaterate the location, and each anchor had a range of approximately 25 m. However, obstacles in the signal path affected the range measurements.
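
The ranging and positioning step can be sketched as follows. Range is the speed of light multiplied by the time of flight; with anchors mounted at a common height, a tag's floor position can be found by converting each slant range to a horizontal range and solving the linearised trilateration equations for three anchors. The known tag height, the anchor layout and the function names are assumptions for illustration; in practice UWB ranging often uses two-way ranging to avoid the need for synchronised clocks, and the vendor's solver is not described in the paper.

```python
import math
from typing import List, Tuple

C = 299_792_458.0  # speed of light in m/s (air is close enough for this sketch)


def tof_to_range(tof_s: float) -> float:
    """Convert a one-way time of flight (s) to a distance (m)."""
    return C * tof_s


def trilaterate_2d(anchors: List[Tuple[float, float, float]],
                   ranges: List[float], tag_z: float) -> Tuple[float, float]:
    """Floor (x, y) of a tag from three anchor slant ranges.

    anchors: (x, y, z) of three non-collinear anchors in m; tag_z: assumed tag
    height in m. Subtracting the first circle equation from the other two gives
    a 2x2 linear system, solved here with Cramer's rule.
    """
    (x1, y1, _), (x2, y2, _), (x3, y3, _) = anchors[:3]
    # Horizontal range to each anchor (clamped at zero for noisy data).
    h = [math.sqrt(max(r * r - (z - tag_z) ** 2, 0.0))
         for r, (_, _, z) in zip(ranges[:3], anchors[:3])]
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = h[0] ** 2 - h[1] ** 2 + x2 ** 2 - x1 ** 2 + y2 ** 2 - y1 ** 2
    b2 = h[0] ** 2 - h[2] ** 2 + x3 ** 2 - x1 ** 2 + y3 ** 2 - y1 ** 2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-9:
        raise ValueError("anchors are collinear")
    return ((b1 * a22 - a12 * b2) / det, (a11 * b2 - b1 * a21) / det)


# Hypothetical layout: three corner anchors of a 25 m x 30 m zone, 10 m high.
anchors = [(0.0, 0.0, 10.0), (25.0, 0.0, 10.0), (0.0, 30.0, 10.0)]
true_tag = (10.0, 12.0, 1.0)
ranges = [math.dist(true_tag, a) for a in anchors]
estimate = trilaterate_2d(anchors, ranges, tag_z=1.0)
```

Because all anchors share one height, the vertical coordinate cancels out of the linear system, which is why this sketch fixes the tag height rather than estimating it. A 25 m range corresponds to a flight time of only about 83 ns, so nanosecond-level timing errors translate directly into ranging errors of tens of centimetres.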

The cameras were Luxonis Oak-D PoE cameras, which offer substantial on-device processing power and can run complex neural networks using the integrated DepthAI software. The cameras were connected to a host PC over a local network. They were mounted 10 m above the ground, with four cameras in the corners of the test space and an additional camera in the middle of the hangar pointing outwards towards the door.

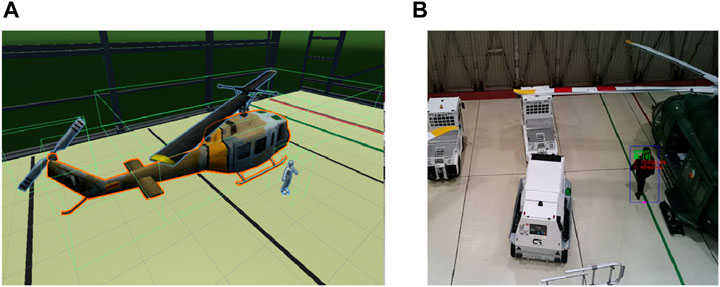

In Smart Hangar, we chose to use so-called edge AI cameras, which process the images on the camera and transfer only high-level information about the scene to the DT, e.g., the type of object recognised and its location in the camera view. This approach was taken to make the system scalable and to protect the privacy of staff working in the hangar. The choice of the neural network used on the camera for object detection is critical. Two network types were considered: 1) the You Only Look Once (YOLO) architecture (Adarsh et al., 2020) and 2) the MobileNet architecture (Chiu et al., 2020). YOLO was considerably more accurate than MobileNet when detecting the target objects at different ranges. Both networks ran at high frame rates on the Luxonis hardware, even when detecting multiple objects: YOLO achieved up to 20 frames per second (fps) and MobileNet up to 25 fps. We tested the YOLO versions available at the time and chose Tiny YOLO v3, as it offered a good balance of speed and detection accuracy. Figure 3B shows person tracking in the hangar.
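
YOLO-family detectors, including Tiny YOLO v3, typically produce several overlapping candidate boxes per object, which are pruned with intersection-over-union (IoU) based non-maximum suppression (NMS) before detections are reported. A minimal greedy NMS sketch follows; the box format, the 0.5 IoU threshold and the function names are assumptions, and on edge hardware such as the Oak-D this step may run on the device rather than in user code.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_thresh: float = 0.5) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it, repeat.

    Returns the indices of the kept boxes in descending score order.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_thresh]
    return keep


# Hypothetical detections: two overlapping boxes on one person, one separate person.
dets = [(100, 100, 200, 300), (110, 105, 205, 310), (400, 120, 480, 300)]
kept = nms(dets, scores=[0.9, 0.75, 0.8])
```

Only the surviving boxes (class, score and pixel coordinates) would then need to leave the camera, which is what keeps the edge-AI approach light on bandwidth and free of raw imagery.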

To insert objects into the DT, the bounding boxes of objects tracked by the cameras were projected onto the ground plane of the DT. This projection required calibrating the camera intrinsics using OpenCV and the camera extrinsics using markings on the hangar floor, which were measured manually with a laser tape measure. The cameras and the UWB system used right-handed coordinate systems, whereas Unity uses a left-handed coordinate system, so sensor coordinates had to be converted from right-handed to left-handed to be represented accurately. The orientation of a 3D model was clear from the major axis of objects such as helicopters, but for people the direction of motion was used to infer orientation.
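
For a flat hangar floor, projecting a detection onto the ground plane can be done with a homography estimated from four surveyed floor markings. The sketch below assumes lens distortion has already been removed using the intrinsic calibration, takes the bottom centre of each bounding box as the object's floor contact point, and converts the result to Unity axes assuming the floor frame follows the right-handed ROS REP 103 convention (x forward, y left, z up), for which Unity's x-right, y-up, z-forward frame gives (x, y, z) → (−y, z, x). These are assumptions: the paper describes the calibration but not this exact computation, and all names and values are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def _solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def fit_homography(img: Sequence[Point], floor: Sequence[Point]) -> List[float]:
    """Direct linear transform from four image/floor correspondences (h33 = 1)."""
    if len(img) != 4 or len(floor) != 4:
        raise ValueError("this sketch uses exactly four correspondences")
    A: List[List[float]] = []
    b: List[float] = []
    for (u, v), (X, Y) in zip(img, floor):
        A.append([u, v, 1.0, 0.0, 0.0, 0.0, -u * X, -v * X])
        b.append(X)
        A.append([0.0, 0.0, 0.0, u, v, 1.0, -u * Y, -v * Y])
        b.append(Y)
    return _solve(A, b) + [1.0]


def image_to_floor(H: List[float], u: float, v: float) -> Point:
    """Apply the 3x3 homography (row-major list) to pixel (u, v)."""
    w = H[6] * u + H[7] * v + H[8]
    return ((H[0] * u + H[1] * v + H[2]) / w, (H[3] * u + H[4] * v + H[5]) / w)


def bbox_foot(bbox: Tuple[float, float, float, float]) -> Point:
    """Bottom-centre pixel of an (x_min, y_min, x_max, y_max) box."""
    return ((bbox[0] + bbox[2]) / 2.0, bbox[3])


def ros_to_unity(x: float, y: float, z: float) -> Tuple[float, float, float]:
    """Right-handed (x fwd, y left, z up) -> Unity left-handed (x right, y up, z fwd)."""
    return (-y, z, x)


# Hypothetical calibration: pixel positions of four floor markings and their
# surveyed floor coordinates in metres.
pixels = [(100.0, 400.0), (500.0, 400.0), (100.0, 800.0), (500.0, 800.0)]
floor = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0), (4.0, 4.0)]
H = fit_homography(pixels, floor)
fx, fy = image_to_floor(H, *bbox_foot((250.0, 300.0, 350.0, 600.0)))
unity_pos = ros_to_unity(fx, fy, 0.0)
```

Using more than four markings with a least-squares fit (as OpenCV's homography estimation supports) would reduce the effect of tape-measure errors; the four-point version is kept here for brevity.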

To connect the DT to hangar personnel and tow truck operators, we developed three human interfaces: an interface for the tow truck driver, wearables for hangar personnel, and a smart sign (Figure 1). The tow truck driver interface was an in-cab tablet that rendered a bird's-eye view of the hangar (Figure 4A) and delivered visual and audio alerts when a collision was predicted. The wearable and smart sign devices each contained a UWB tracking beacon and an interface with a visual display and audio output (Figures 4B, C). The smart sign used an interface similar to that of the wearable device, but had a larger screen and a fixed location. It warned people detected by the DT near specified exclusion zones only when their trajectory indicated that they would cross into the exclusion zone. To avoid distracting hangar personnel, the human interfaces remain silent except when an alert is needed, and they follow a behaviour-feedback design (Lee and Kim, 2022).
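
The smart sign's trajectory test can be sketched with constant-velocity extrapolation: a person is warned only if their predicted path enters the exclusion zone within a short horizon. The rectangular zone, the 3 s horizon, the 0.1 s sampling step and the function name are assumptions for illustration; the paper does not specify the prediction model.

```python
from typing import Tuple

Rect = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in m


def will_enter_zone(pos: Tuple[float, float], vel: Tuple[float, float],
                    zone: Rect, horizon_s: float = 3.0, dt: float = 0.1) -> bool:
    """True if the extrapolated position enters the zone within the horizon.

    Constant velocity is assumed and positions are sampled every dt seconds;
    a person already inside the zone also returns True.
    """
    steps = int(round(horizon_s / dt))
    for k in range(steps + 1):
        t = k * dt
        x = pos[0] + vel[0] * t
        y = pos[1] + vel[1] * t
        if zone[0] <= x <= zone[2] and zone[1] <= y <= zone[3]:
            return True
    return False


zone = (10.0, 0.0, 14.0, 6.0)  # hypothetical exclusion zone near the door
# Walking at 1.4 m/s towards the zone: crosses x = 10 m just before the 3 s horizon.
walking_towards = will_enter_zone((6.0, 3.0), (1.4, 0.0), zone)
# Same speed, moving parallel to the zone edge: never enters.
walking_alongside = will_enter_zone((6.0, 3.0), (0.0, 1.4), zone)
```

Sampling can miss a very thin zone crossed between samples; an exact segment-rectangle intersection test would avoid this at the cost of slightly more code.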

Figure 4. (A) Visual alert on the tow truck driver’s tablet and (B) a wearable device and smart sign before the alert and (C) after the alert.

Collision detection in Smart Hangar was used to identify potential safety hazards and prevent aircraft damage and injury. Objects in the hangar tend to have complex shapes that require detailed bounding volumes, and modelling objects such as helicopters in full detail can impose a high computational load on the system. Smart Hangar therefore uses Unity3D bounding boxes with the AABB method to detect collisions, approximating each object's shape with a set of rectangular boxes. Objects such as a helicopter require multiple bounding boxes to cover their full extent, whereas smaller objects and people need only one. Figure 3A shows examples of Unity3D bounding boxes in the DT model for a helicopter and a person, and Figure 3B shows a person tracked by one of the cameras. Note that these images are not of the same scene, because images are normally processed at the edge and only the bounding boxes are sent to the DT PC.
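
The multi-box representation can be sketched independently of Unity: each object is a list of axis-aligned boxes, and two objects are flagged when any pair of their boxes overlaps on every axis, optionally after inflating the boxes by a safety margin. Unity's `Bounds.Intersects` performs the same per-pair overlap test; the box dimensions, the 0.5 m margin and the function names below are hypothetical, and this sketch uses z as the vertical axis rather than Unity's y.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class AABB:
    min_x: float
    min_y: float
    min_z: float
    max_x: float
    max_y: float
    max_z: float

    def inflated(self, m: float) -> "AABB":
        """Grow the box by a safety margin m on every side."""
        return AABB(self.min_x - m, self.min_y - m, self.min_z - m,
                    self.max_x + m, self.max_y + m, self.max_z + m)

    def overlaps(self, o: "AABB") -> bool:
        """Two boxes overlap iff their intervals overlap on all three axes."""
        return (self.min_x <= o.max_x and o.min_x <= self.max_x
                and self.min_y <= o.max_y and o.min_y <= self.max_y
                and self.min_z <= o.max_z and o.min_z <= self.max_z)


def objects_collide(a: List[AABB], b: List[AABB], margin: float = 0.0) -> bool:
    """Each object is a union of boxes; test every pair (cheap for a few boxes).

    The margin is applied to the boxes of object a only.
    """
    return any(ba.inflated(margin).overlaps(bb) for ba in a for bb in b)


# Hypothetical helicopter (metres, z up): fuselage, tail boom, main-rotor disc.
helicopter = [
    AABB(0.0, 0.0, 0.0, 12.0, 3.0, 4.0),
    AABB(12.0, 1.0, 2.0, 19.0, 2.0, 3.0),
    AABB(-1.0, -6.5, 4.0, 13.0, 9.5, 4.5),
]
person = [AABB(14.0, 2.3, 0.0, 14.5, 2.8, 1.8)]  # standing beside the tail boom
```

Here the person does not touch any box exactly, but is flagged once a 0.5 m margin is added, which shows how a margin can absorb tracking error; a single box around the whole helicopter would instead flag the person even when standing well clear of the actual airframe.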

When a potential collision is predicted, hangar personnel and tow truck drivers must have enough time to react and avoid it, so careful consideration must be given to the amount of information provided to the user. The wearable and smart sign alerts used a simple audio alert and a simple visual STOP indication, with the aim that operators would associate the audio alert with the action “stop and regain situational awareness”. Examples of the alerts can be seen in Figure 4C. The red screen mainly serves as visual confirmation of the audio alert. Providing detailed visual information describing a risk would increase the operator's cognitive load, lengthening the response time and potentially increasing the risk. The tow truck driver interface, however, provided a top-down perspective (Figure 4A), because the driver's view is often occluded and situational awareness is difficult to maintain.

3 System evaluation

The Smart Hangar system was constructed as a proof of principle for using a DT to provide active safety for hangar operations. The current system trades positional accuracy against computation speed, and this trade-off reduced the precision of the visual 3D models and of the physics-engine bounding-box proxies used for collision prediction. We chose an accuracy of circa 0.5 m as a reasonable trade-off between measurement accuracy and system response time. During tests, objects detected by the cameras were spawned in the virtual world in under 1 s. While this is close to real time, we aim to spawn objects within 0.1 s to support safe operations.
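
A short calculation shows why spawn latency matters relative to the 0.5 m accuracy target: if positions are not extrapolated, an object moves by its speed multiplied by the latency before the DT is updated, and that displacement adds to the position error seen by the collision checker. The speeds below (a typical walking pace of about 1.4 m/s and a hypothetical towing speed of 2 m/s) are assumptions, not measurements from the hangar.

```python
def latency_displacement(speed_m_s: float, latency_s: float) -> float:
    """Distance an object moves while its DT representation is out of date."""
    return speed_m_s * latency_s


ACCURACY_TARGET_M = 0.5  # accuracy trade-off chosen for Smart Hangar

# Assumed speeds: typical walking pace and a hypothetical tow speed.
for label, speed in [("walking person", 1.4), ("tow truck (hypothetical)", 2.0)]:
    for latency in (1.0, 0.1):
        d = latency_displacement(speed, latency)
        verdict = "within" if d < ACCURACY_TARGET_M else "exceeds"
        print(f"{label}: {latency:.1f} s latency -> {d:.2f} m "
              f"({verdict} the {ACCURACY_TARGET_M} m target)")
```

At 1 s latency, both assumed speeds produce a displacement larger than the 0.5 m target, whereas at 0.1 s the displacement falls well inside it, which is consistent with the stated goal of spawning objects within 0.1 s.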

The Edge AI cameras used an image size of 3,840 × 2,160 pixels. We transferred the marked-up images to the PC for validation, but full-size images exceeded the capacity of the local network. To work around this bandwidth limitation, validation images were reduced to 416 × 416 pixels before transfer. The system's positional accuracy was validated using a laser measuring tape to provide ground-truth positions. The hangar had strong artificial illumination, which supported consistent camera tracking. The only deterioration in tracking occurred when strong sunlight through the open doors covered half of a camera's view. The overall precision of object tracking using the cameras was ±40 cm.
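
The size reduction, and the mapping of detections back to full resolution, can be sketched as follows. Letterboxing (uniform scaling plus padding to preserve the aspect ratio) is assumed, as is common for YOLO-style 416 × 416 inputs; the paper does not say whether the images were letterboxed or stretched. The raw frame sizes illustrate the bandwidth gap: an uncompressed 8-bit RGB frame is about 24.9 MB at 3,840 × 2,160 but about 0.52 MB at 416 × 416. Function names are illustrative.

```python
from typing import Tuple


def raw_rgb_bytes(width: int, height: int) -> int:
    """Size of one uncompressed 8-bit-per-channel RGB frame."""
    return width * height * 3


def letterbox_params(src_w: int, src_h: int, dst: int = 416) -> Tuple[float, float, float]:
    """Scale factor and x/y padding that fit a src image into a dst x dst square."""
    s = min(dst / src_w, dst / src_h)
    pad_x = (dst - round(src_w * s)) / 2
    pad_y = (dst - round(src_h * s)) / 2
    return s, pad_x, pad_y


def to_full_res(x: float, y: float, s: float,
                pad_x: float, pad_y: float) -> Tuple[float, float]:
    """Map a pixel in the letterboxed image back to the original frame."""
    return (x - pad_x) / s, (y - pad_y) / s


full = raw_rgb_bytes(3840, 2160)
small = raw_rgb_bytes(416, 416)
s, px, py = letterbox_params(3840, 2160)
centre = to_full_res(208.0, 208.0, s, px, py)  # centre of the 416 x 416 image
```

The reduction is roughly 48-fold per frame; the price is that each pixel of the small image covers about 9 × 9 pixels of the original, which limits how precisely distant objects can be localised in the validation images.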

Tracking with UWB proved very successful: the system could track objects outside the hangar test zone at ranges of up to 50 m with an accuracy of 10 cm. When tracking objects in the test zone with a clear line of sight, the accuracy improved to ±1 cm.

The human interfaces play a critical role in Smart Hangar, relaying essential data from the virtual environment to hangar personnel. The tow truck driver can access views from virtual cameras that correspond to the physical cameras around them, providing further information on their surroundings, including blind spots. To view the live feed from the virtual model, the PC running the DT and the tablet must be on the same Wi-Fi network. This makes it easy to add multiple devices that view the feed, but it can also introduce security issues. The video feed on the tablet lags by approximately 1.5 s; a millisecond-scale delay would be desirable to capture faster movements.

4 Conclusion and future work

This paper describes the design, construction and initial evaluation of a Smart Hangar DT used for real-time active safety. The project investigated the steps in DT creation, object detection, data fusion, and collision avoidance for an active safety application in aircraft manoeuvring. A working DT of a real-world aircraft hangar, capable of tracking people in a privacy-preserving manner and representing them in the virtual environment, was demonstrated. The DT enabled a proof-of-principle evaluation of performance. Smart Hangar demonstrated the tracking of people and helicopters, but a wider range of object classes, especially FOD, must be supported before it could be considered for practical use. Smart Hangar also demonstrated its potential for collision prevention and for enforcing exclusion zones. However, the current system has significant limitations, namely an accuracy of ±40 cm and a temporal resolution of 1.5 s, which are not sufficient for manoeuvring complex 3D aircraft such as helicopters.

Our future work focuses on collision avoidance and path planning techniques, fully overlapping camera views, and the integration of UWB-enabled mobile phones as an alternative to wearables. Improvements to the tow truck driver's tablet would include more control over the system's viewing modes and a more secure method of viewing the live feed. Workplace ethics and human factors are essential, and we plan a detailed study of hangar personnel's reactions to the system, adapting the design to meet their needs.

Data availability statement

The datasets presented in this article are not readily available because, under the terms of the agreement with the Irish Defence Forces, we cannot share data captured on-site in the hangar. Requests to access the datasets should be directed to gerry.lacey@mu.ie.

Ethics statement

The studies involving humans were approved by the Maynooth University Ethics Committee. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

LC: Data curation, Formal Analysis, Investigation, Software, Validation, Visualization, Writing–original draft. JD: Funding acquisition, Investigation, Methodology, Project administration, Resources, Supervision. MC: Data curation, Formal Analysis, Methodology, Software, Validation, Visualization, Writing–review and editing. RD: Conceptualization, Formal Analysis, Funding acquisition, Investigation, Methodology, Project administration, Supervision, Writing–review and editing. MC: Conceptualization, Formal Analysis, Funding acquisition, Investigation, Methodology, Project administration, Supervision, Writing–review and editing. TM: Conceptualization, Data curation, Funding acquisition, Methodology, Project administration, Resources, Supervision, Validation, Writing–review and editing. PR: Data curation, Formal Analysis, Investigation, Methodology, Project administration, Resources, Software, Validation, Writing–review and editing. GL: Conceptualization, Formal Analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Supervision, Writing–review and editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This publication has emanated from research conducted with the financial support of Science Foundation Ireland under Grant numbers 21/FIP/DO/9955 and 20/FFP-P/8901. For Open Access, the author has applied a CC BY public copyright license to any Author Accepted Manuscript version arising from this submission.

Conflict of interest

Author PR was employed by Mechatrons Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Adarsh, P., Rathi, P., and Kumar, M. (2020). “YOLO V3-tiny: object detection and recognition using one stage improved model,” in 2020 6th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 06-07 March 2020, 687–694. doi:10.1109/ICACCS48705.2020.9074315

Cahill, J., Redmond, P., Yous, S., Lacey, G., and Butler, W. (2013). The design of a collision avoidance system for use by pilots operating on the airport ramp and in taxiway areas. Cognition, Technol. Work 15 (2), 219–238. doi:10.1007/s10111-012-0240-9

Chiu, Y.-C., Tsai, C.-Y., Ruan, M.-D., Shen, G.-Y., and Lee, T.-T. (2020). “Mobilenet-SSDv2: an improved object detection model for embedded systems,” in 2020 International Conference on System Science and Engineering (ICSSE), Kagawa, Japan, 31 August 2020 - 03 September 2020, 1–5. doi:10.1109/ICSSE50014.2020.9219319

Du, Y., Ying, L., Peng, Y., and Chen, Y. (2021). “Industrial robot digital twin system motion simulation and collision detection,” in 2021 IEEE 1st International Conference on Digital Twins and Parallel Intelligence (DTPI), Beijing, China, 15 July 2021 - 15 August 2021, 1–4. doi:10.1109/DTPI52967.2021.9540114

Errandonea, I., Beltrán, S., and Arrizabalaga, S. (2020). Digital twin for maintenance: a literature review. Comput. Industry 123 (December), 103316. doi:10.1016/j.compind.2020.103316

Gharib, S., Martin, B., and Neitzel, R. L. (2021). Pilot assessment of occupational safety and health of workers in an aircraft maintenance facility. Saf. Sci. 141 (September), 105299. doi:10.1016/j.ssci.2021.105299

Jones, D., Snider, C., Nassehi, A., Yon, J., and Hicks, B. (2020). Characterising the digital twin: a systematic literature review. CIRP J. Manuf. Sci. Technol. 29 (May), 36–52. doi:10.1016/j.cirpj.2020.02.002

Khatwa, R., and Mannon, P. (2018). Systems and methods for enhanced awareness of obstacle proximity during taxi operations. U.S. Patent US10140876B2, filed 7 December 2015, issued 27 November 2018. Available at: https://patents.google.com/patent/US10140876B2/en.

Lee, B., and Kim, H. (2022). Measuring effects of safety-reminding interventions against risk habituation. Saf. Sci. 154 (October), 105857. doi:10.1016/j.ssci.2022.105857

Li, L., Aslam, S., Wileman, A., and Perinpanayagam, S. (2022). Digital twin in aerospace industry: a gentle introduction. IEEE Access 10, 9543–9562. doi:10.1109/ACCESS.2021.3136458

Liu, J., Han, W., Peng, H., and Wang, X. (2019). Trajectory planning and tracking control for towed carrier aircraft system. Aerosp. Sci. Technol. 84 (January), 830–838. doi:10.1016/j.ast.2018.11.027

Liu, Z., Meyendorf, N., and Mrad, N. (2018). The role of data fusion in predictive maintenance using digital twin. AIP Conf. Proc. 1949 (1), 020023. doi:10.1063/1.5031520

Liu, Z., Zhang, A., and Wang, W. (2020). A framework for an indoor safety management system based on digital twin. Sensors 20 (20), 5771. doi:10.3390/s20205771

Novák, A., Sedláčková, A. N., Bugaj, M., Kandera, B., and Lusiak, T. (2020). Use of unmanned aerial vehicles in aircraft maintenance. Transp. Res. Procedia, INAIR 2020 - CHALLENGES Aviat. Dev. 51 (January), 160–170. doi:10.1016/j.trpro.2020.11.018

Wang, N., Liu, H., and Yang, W. (2012). Path-tracking control of a tractor-aircraft system. J. Mar. Sci. Appl. 11 (4), 512–517. doi:10.1007/s11804-012-1162-x

Wang, Z., Han, K., and Tiwari, P. (2021). “Digital twin simulation of connected and automated vehicles with the unity game engine,” in 2021 IEEE 1st International Conference on Digital Twins and Parallel Intelligence (DTPI), Beijing, China, 15 July 2021 - 15 August 2021, 1–4. doi:10.1109/DTPI52967.2021.9540074

Keywords: digital twin, edge AI, augmented reality, aircraft ground operations, industrial safety, active safety

Citation: Casey L, Dooley J, Codd M, Dahyot R, Cognetti M, Mullarkey T, Redmond P and Lacey G (2024) A real-time digital twin for active safety in an aircraft hangar. Front. Virtual Real. 5:1372923. doi: 10.3389/frvir.2024.1372923

Received: 18 January 2024; Accepted: 19 March 2024;

Published: 03 April 2024.

Edited by:

Francisco Rebelo, University of Lisbon, Portugal

Reviewed by:

Philipp Klimant, Hochschule Mittweida, Germany

Federico Manuri, Polytechnic University of Turin, Italy

Copyright © 2024 Casey, Dooley, Codd, Dahyot, Cognetti, Mullarkey, Redmond and Lacey. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Gerard Lacey, gerry.lacey@mu.ie
