- ¹Center for Human-Computer Interaction, Virginia Tech, Blacksburg, VA, United States

- ²Microsoft Research, Microsoft Corporation, Redmond, WA, United States

Virtual monitors can display information through a head-worn display when a physical monitor is unavailable or provides insufficient space. Low resolution and a restricted field of view are common issues with these displays. They reduce readability and peripheral vision, and enlarging the content to compensate leads to increased head movement. This work evaluates the performance and user experience of a virtual monitor setup that combines software designed to minimize graphical transformations with a high-resolution virtual reality head-worn display. Participants performed productivity work across three approaches: Workstation, which is often used at office locations and consists of three side-by-side physical monitors; Laptop, which is often used in mobile locations and consists of a single physical monitor expanded with multiple desktops; and Virtual, our prototype with three side-by-side virtual monitors. Results show that participants deemed Virtual faster, easier to use, and more intuitive than Laptop, indicating the advantages of head and eye glances over full content switches. The results also confirm a gap between Workstation and Virtual, with Workstation achieving the best user experience. We conclude with design guidelines derived from the lessons learned in this study.

1 Introduction

Virtual monitors are surfaces capable of displaying windows and applications similar to how a physical monitor would (Pavanatto et al., 2021). The higher immersion of Head-Worn Displays (HWDs) (Slater et al., 2010) allows them to simulate monitors in virtual or augmented reality environments. Virtual monitors constrain content to a 2D surface, such as a plane or any other 2D manifold (e.g., a curved surface). Because they are not tied to physical objects, virtual monitors offer more flexibility and portability: they can have any size, surround the user from all directions, move quickly, and dynamically adapt to environmental and task context (Pavanatto, 2021). These features provide relevant opportunities for working remotely, either from home or in a public location (Ng et al., 2021), to maximize available screen space and enhance accessibility. While existing work has demonstrated them to be feasible (Pavanatto et al., 2021; Mcgill et al., 2020; Ng et al., 2021), virtual monitors also introduce new challenges, stemming from limitations of immersive technology, that we must address before they become commonplace.

Readability is essential when performing productivity tasks and thus should be the primary concern when designing systems and applications that use virtual monitors. The lower resolution and reduced field of view (FOV) of HWDs further complicate the design of such interfaces. These problems are more pronounced in optical see-through augmented reality (AR) devices, which show non-opaque text inside a small FOV. In a study using HoloLens 2, Pavanatto et al. (2021) showed that virtual monitors perform worse than physical monitors when the system must scale the virtual content up to enable readability, which leads to more head movement and less awareness of the content in the user's periphery. While these problems are primarily hardware-related, we must also consider other components.

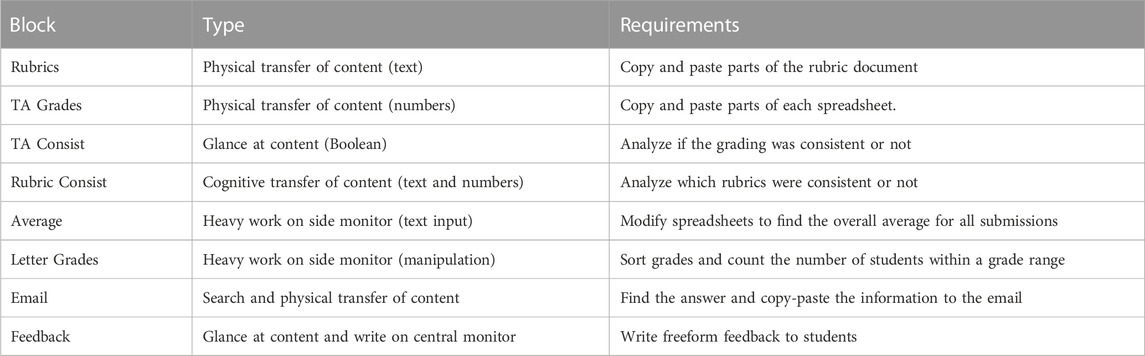

Based on published work and our own analytical and empirical testing, we identified some factors that we believe greatly influence the readability of virtual monitors (Figure 1). While we do not claim this list to be exhaustive, it provides a starting point for thinking about the components we should consider in our design. We attribute the readability of virtual monitors to five main components. Regarding hardware-related characteristics, we should consider the display, the optics, and the ergonomics of the HWD. On the software side, we must consider the virtual surface where the monitor is displayed and the relationship between that surface and the user’s viewpoint. Instead of only attributing the problems identified in previous research to the existing hardware, we believe that taking extra steps from the interface design perspective can reduce other sources of quality loss along the way and have a prominent influence on final readability.

FIGURE 1. Identified factors that influence readability of virtual monitors. Blue factors are hardware related, while orange ones are connected to software and UI design.

One important idea we can glean from Figure 1 is the issue of graphical transformations. Physical monitors render information on a screen with a pixel-perfect shape: a pre-defined mapping between the rendering buffer and the available pixels that guarantees sharpness and fidelity. In contrast, an immersive system renders graphics from the user's viewpoint through a perspective projection (LaViola et al., 2017). Essentially, the computer represents a view of the 3D environment using the 2D planar pixels of the HWD through a series of transformations. This conversion is usually not a problem, since the geometry and mathematics needed to transform 3D to 2D efficiently are well understood. However, since virtual monitors are 2D textures, the perspective transformation will degrade the user's view of the original texture as the position and orientation of the surface change relative to the viewpoint. Therefore, if we position the surface in a world-fixed 3D coordinate system, the system will be prone to losing text quality as the user's viewpoint moves. An alternative would be to place the content in a head-fixed coordinate system, so that the content moves along with the user and is rendered directly onto the HWD pixels. However, this approach would limit the amount of information the computer can display to the number of pixels on the HWD. It would also remove the user's ability to use natural head movements to view different parts of the virtual monitor.
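The distortion argument can be made concrete with a simple pinhole model. The sketch below is illustrative only: it assumes an ideal pinhole camera with a hypothetical focal length of 1000 px, ignores HWD lens distortion, and uses a hypothetical 60 cm wide plane fixed 1 m ahead. For a head yaw θ, a plane point at (x, z) has camera-space coordinates x_c = x cos θ − z sin θ and z_c = x sin θ + z cos θ, so its screen position u = f x_c / z_c changes at the rate du/dx = f z / z_c². That rate is constant when θ = 0 but varies across the plane once the head turns, so the texture can no longer map uniformly onto display pixels.

```python
import math


def footprint_px_per_m(x_m: float, yaw_deg: float,
                       plane_z_m: float = 1.0, focal_px: float = 1000.0) -> float:
    """Display pixels covered by one metre of a world-fixed plane at position x_m.

    Pinhole model (hypothetical parameters): the head sits at the origin and
    yaws by yaw_deg; the plane lies at z = plane_z_m facing the unrotated view
    direction. The derivative of u = f * x_c / z_c with respect to x is
    f * z / z_c**2.
    """
    t = math.radians(yaw_deg)
    z_cam = x_m * math.sin(t) + plane_z_m * math.cos(t)  # depth in camera space
    return focal_px * plane_z_m / z_cam ** 2


def footprint_ratio(yaw_deg: float, half_width_m: float = 0.3) -> float:
    """Largest / smallest footprint across the plane (1.0 means uniform scaling).

    z_cam is linear in x, so the extremes occur at the plane's two edges.
    """
    left = footprint_px_per_m(-half_width_m, yaw_deg)
    right = footprint_px_per_m(half_width_m, yaw_deg)
    return max(left, right) / min(left, right)


for yaw in (0, 10, 20):
    print(yaw, round(footprint_ratio(yaw), 2))
```

Under these assumptions, the ratio between the largest and smallest footprint is 1.0 when looking straight ahead, about 1.24 at 10° of yaw, and about 1.55 at 20°, so one edge of the plane is sampled roughly one and a half times more densely than the other.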

Instead of using either world- or head-fixed coordinate systems, we can mitigate graphical transformations by combining both. This approach enables virtual monitors to be displayed pixel-perfect on the HWD (by directly mapping the visible area to the display) while using head orientation tracking to pan the virtual monitors vertically and horizontally (thus allowing the viewpoint to change with head movements). In this way, texture rendering introduces the least distortion while the available space extends to the whole field of regard. We can further use virtual gains (Mcgill et al., 2020) to define how fast the virtual content moves, allowing either more refined control or reduced head movement.
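A minimal sketch of this combined approach follows. It assumes the combined monitor texture is only translated on the display (never rotated or scaled) and uses the 1.5× horizontal and 1.2× vertical gains reported for the study. The prototype was implemented in Unity, so this Python version is not the authors' code; `PanConfig`, `visible_origin`, and the pixels-per-degree conversion are hypothetical, and the flat translation simplifies the geometry depicted in Figure 3.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PanConfig:
    texture_w: int = 3 * 1920      # three 1920 x 1080 monitors combined side by side
    texture_h: int = 1080
    view_w: int = 2560             # display pixels per eye
    view_h: int = 2560
    px_per_deg: float = 2560 / 70  # hypothetical: assumes the 70 deg FOV spans view_w
    gain_h: float = 1.5            # horizontal gain on head yaw
    gain_v: float = 1.2            # vertical gain on head pitch


def visible_origin(yaw_deg: float, pitch_deg: float,
                   cfg: PanConfig = PanConfig()) -> tuple[int, int]:
    """Texture pixel drawn at the display's top-left corner for a given head pose.

    Positive yaw = head turned right; positive pitch = head tilted up.
    Coordinates outside the texture fall on the background colour.
    """
    # Centre of the view in texture coordinates; gains amplify head rotation,
    # so the content moves opposite to the head, faster than the head turns.
    cx = cfg.texture_w / 2 + cfg.gain_h * yaw_deg * cfg.px_per_deg
    cy = cfg.texture_h / 2 - cfg.gain_v * pitch_deg * cfg.px_per_deg
    # Rounding to whole pixels keeps texels aligned 1:1 with display pixels,
    # so the texture is shifted but never resampled.
    return round(cx - cfg.view_w / 2), round(cy - cfg.view_h / 2)


print(visible_origin(0, 0))   # looking straight ahead
print(visible_origin(10, 0))  # head turned 10 deg to the right
```

Rounding the offset to whole pixels is the key design choice in this sketch: the texture moves by integer amounts, so each texel still lands on exactly one display pixel. Raising `gain_h` makes the same head turn sweep across more of the texture, trading precision for less neck rotation.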

We investigated the effects of replacing commonly used physical monitors with virtual reality (VR) monitors that combine custom-made software that minimizes graphical transformations and a high-resolution HWD. Participants completed ecologically valid productivity tasks involving multiple windows and applications on a real operating system. We compared our proposed solution with approaches commonly used in the real world: a Workstation, which is often used at office locations and consists of three side-by-side physical monitors; and a Laptop, which is often used in mobile locations and consists of a single physical monitor expanded with multiple desktops. We then analyzed quantitative and qualitative metrics such as performance, accuracy, satisfaction, ease of use, confidence, cognition, and comfort.

The contributions of this work include a quantifiable understanding of how virtual monitors (using state-of-the-art VR HWD and our rendering technique) 1) have improved usability when compared to a single-screen laptop but 2) still lack usability in comparison to a combination of multiple physical monitors. Moreover, 3) we contrast our results with previous results found in the literature (Pavanatto et al., 2021).

2 Related work

2.1 Readability in mixed reality

Studies have explored readability issues in Mixed Reality (MR) environments. While reading on virtual monitors has been shown to be feasible (Grout et al., 2015; Pavanatto et al., 2021), large flat monitors were reported to impair readability (Grout et al., 2015). It has been suggested that text on virtual elements should be displayed larger than on physical monitors (Dittrich et al., 2013; Pavanatto et al., 2021), although that can lead to reduced performance due to small FOVs (Pavanatto et al., 2021). While curved text could help keep elements closer to users, it has been shown that text should only be warped in a single direction at a time and at small curvatures to preserve reading comfort (Wei et al., 2020). Text orientation has also been shown to affect how large a font must be to remain readable (Büttner et al., 2020).

Text style influences readability, with dark mode providing the best performance with a billboard (Jankowski et al., 2010), and benefits for visual acuity (Kim et al., 2019; Erickson et al., 2021). Readability is affected by parameters such as text size, convergence, view box size, positioning (Dingler et al., 2018), text outline, contrast polarity (Gattullo et al., 2014), opacity, size, and the number of lines of text (Falk et al., 2021). Text style, background, and illuminance further impact readability in outdoor scenarios using AR optical see-through HWDs (Gabbard et al., 2006), with dynamic management of text being used to place text on dark backgrounds while on the move (Orlosky et al., 2013).

The angular size of the text has also been shown to play an important role, with both minimum and maximum values having been specified (Kojić et al., 2020). Rzayev et al. (2021) further investigated the presentation type and location of text in VR, revealing better performance for short text in head-fixed presentation and for long text in world-fixed presentation. The existing work reveals that while we must deal with limitations in HWD resolution, we must also be careful to reduce text distortions to achieve a system that supports productivity work effectively.

2.2 Displaying content to workers

The idea of displaying windows through an HWD is not new. Feiner et al. (1993) explored the issue in the early 1990s with a system that displayed floating windows through an optical see-through display, registering windows in head- and world-fixed coordinates. Raskar et al. (1998) further investigated what it would mean to expand an office space by combining AR and a collection of projectors and cameras. However, this topic has only gained popularity in recent years, as pervasive and everyday AR gained traction (Grubert et al., 2017; Bellgardt et al., 2017). Grubert et al. (2018) presented a vision of how an office of the future might work, reducing physical-world limitations by taking advantage of immersive technologies. Further motivated by the COVID-19 pandemic, other works investigated the implications of using mixed reality in mobile scenarios (Knierim et al., 2021; Ofek et al., 2020) and while working from home (Fereydooni and Walker, 2020). These works show the relevance of this topic and its potential impact on society.

There are various ways in which virtual content can be displayed to workers. Surrounding the user with windows has been shown to reduce application switching time in immersive systems designed for multitasking (Ens et al., 2014), while combining virtual displays and touchscreens was shown to benefit mobile workers (Biener et al., 2020). Information presented in the periphery can be accessed through quick glances (Davari et al., 2020), through approaches that place and summon glanceable virtual content in a less obtrusive manner (Lu et al., 2020), and through various activation methods (Lu et al., 2021). Physical displays and virtual representations have been combined to display visualizations over tabletops (Butscher et al., 2018) and larger screens (Reipschläger et al., 2020; Mahmood et al., 2018), and to merge physical and virtual documents (Li et al., 2019). Large display interfaces have also been transformed into immersive environments to facilitate sensemaking (Lee et al., 2018; Kobayashi et al., 2021). These works show many opportunities for using HWDs in real-world work, but there are still open questions about how to display content to better support workers.

2.3 Virtual monitors for productivity

Using HWDs to render virtual monitors can enhance flexibility and mobility while reducing costs (Pavanatto et al., 2021). It can also address challenges such as lack of space, surrounding noise, illumination issues, and privacy concerns (Grubert et al., 2018; Ofek et al., 2020). Mcgill et al. showed that introducing a gain to head rotations can maintain an acceptable and comfortable range of neck movement when the user is surrounded by multiple horizontally organized displays (Mcgill et al., 2020). Working in VR in open office environments can reduce distraction and improve flow, being preferred by users (Ruvimova et al., 2020). Combining virtual monitors with tablets used for touch input can improve user performance (Le et al., 2021), showing the importance of considering traditional interaction devices even while using HWDs.

Previous work also reveals some of the issues of virtual monitors. Context switching between physical and virtual environments and focal distance switching between displays reduce task performance and increase visual fatigue (Gabbard et al., 2019). Having multiple depth layers, such as combining an HWD with a smartwatch, can induce more errors when interacting (Eiberger et al., 2019). Social acceptance and monitor placement have also been shown to play important roles in the use of virtual monitors in public places, such as airplanes (Ng et al., 2021), and in the layout of content across multiple shared-transit modalities (Medeiros et al., 2022). A longitudinal study by Biener et al. (2022) showed that working in VR for a week can lead to high levels of simulator sickness and low usability ratings.

In a previous paper, we conducted a user study to compare the usability of physical and virtual monitors in augmented reality (AR) (Pavanatto et al., 2021). We concluded that the lower resolution and small FOV of the HoloLens 2 were the main factors behind a 14% reduction in performance when using virtual monitors instead of physical ones. The virtual monitors used in that study were world-fixed planes, and therefore, readability was optimized by enlarging the virtual monitors.

Existing work has demonstrated many shortcomings of virtual displays. In this work, we replicate the methodology of our previous work (Pavanatto et al., 2021) but focus on analyzing a VR system developed with productivity and readability in mind. Furthermore, to the authors' knowledge, no existing work directly compares working on a laptop screen with working on a large virtual monitor.

3 Methods

We conducted a user study to investigate how replacing physical monitors with our custom virtual monitor prototype affects user experience. We aim to 1) understand how large the gap is between our system's performance and that of a physical workstation setup and 2) identify the potential benefits of using our approach instead of a single laptop screen extended with multiple desktops.

To enable comparison with the literature, we based our study on previous work by Pavanatto et al. (2021), with a few modifications. While that study was conducted in AR, we used VR, mainly because VR technology is more mature and offers better display properties. Our equipment was a custom-designed prototype with higher resolution, a larger FOV, and a smaller form factor and lower weight than the HoloLens 2. In addition, our software was designed to minimize graphical transformations, keeping the monitors' textures pixel-perfect on the HWD while moving the content sideways when the user rotates their head.

While virtual displays enable us to achieve much more than simply replicating existing physical monitors, the rationale for this study is that understanding objective factors that differ from physical monitors is essential before designing more innovative systems. By creating systems with similar capabilities, we can directly compare our conditions to current productivity and user experience standards and look into factors that lead to potential issues. We expect our findings to support exploring the rich design space of virtual monitors, including novel UI paradigms.

3.1 Conditions

We designed ecologically valid conditions and tasks while considering the unique characteristics of each system, as detailed in each condition.

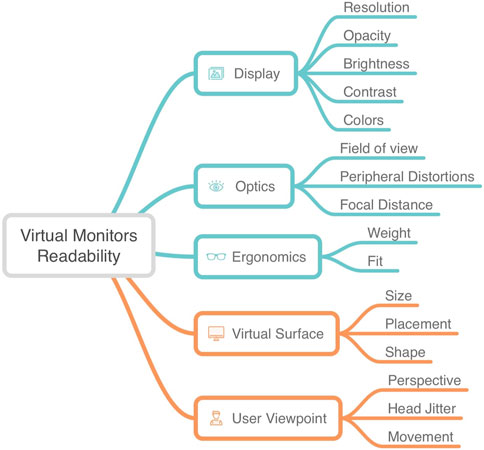

Our baseline was a Workstation setup (Figure 2 (top)) consisting of three identical 24″ physical monitors placed side by side, each with a screen space of 1920 × 1080 and positioned 70 cm from the participant. We opted for a multi-monitor setup because such setups are a common choice among power users, relatively cost-efficient, and easy to set up. Such systems are commonly used in offices, as they need extra space and are fixed in place. In the conditions that did not use an HWD, we asked participants to wear a 3D-printed frame matching the shape and weight of the HWD. While this can be considered a limitation of the study, we believe future HWDs will be less obtrusive; since we cannot test that today, we opted to impose similar ergonomic penalties across conditions. Across all conditions, participants could interact with the system using a laptop keyboard and mouse positioned directly in front of them and configured with the same sensitivity. We lowered and covered the laptop's screen while it was not in use.

FIGURE 2. Conditions: (top) Workstation had three monitors; (middle) Virtual had three monitors rendered in the HWD (participant could only see the virtual monitor, highlighted in orange in the Figure); (bottom) Laptop had a single monitor, with three virtual desktops.

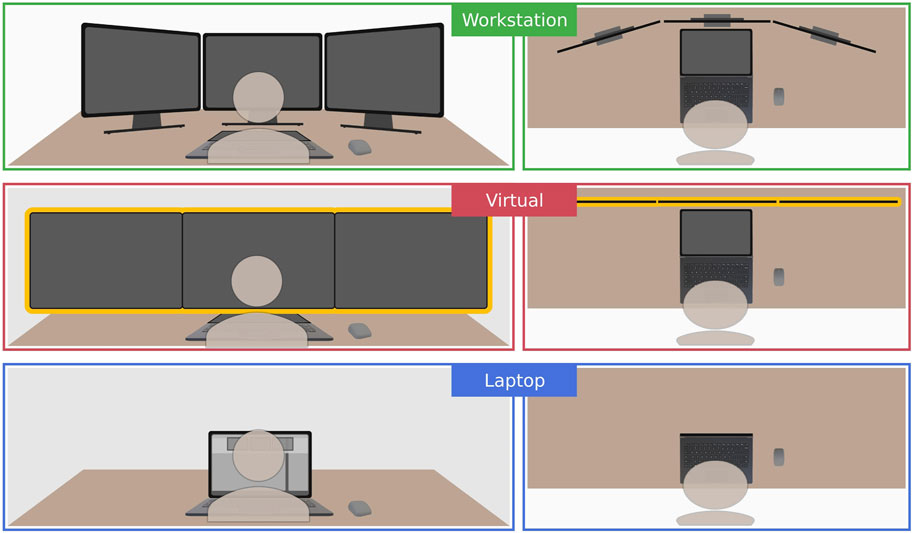

Our second condition was a Virtual setup (Figure 2 (middle)) consisting of a single 2D texture that rendered three 28.8″ floating virtual monitors side by side against a blue background (without any camera feed from the real world), each monitor with a screen space of 1920 × 1080. This single texture was orthographically projected 1 m in front of the user (i.e., at the accommodation distance of the head-worn display) and panned up/down and left/right based on the user's head rotation, as shown in Figure 3. We enlarged each virtual monitor to 1.2× the size of a workstation monitor (24″ × 1.2 = 28.8″) to achieve similar text readability. We amplified head rotations with virtual gains, moving the texture in the opposite direction of the head rotation (Mcgill et al., 2020). We used a 1.5× horizontal and a 1.2× vertical gain, chosen so that the required head rotation matched that of the Workstation condition. All parameters were tested and incrementally adjusted during two preliminary testing sessions performed by two different users.

FIGURE 3. Geometric understanding of the panning mechanism: (A) a user looking forward sees the monitor perpendicular to their view; (B) when the user looks to the side, the monitors rotate to remain perpendicular. Gains were applied to reduce head rotations.

Our final condition was a Laptop setup (Figure 2 (bottom)) with a single 15.6″ physical monitor with a screen space of 1920 × 1080, extended using three virtual desktops, in which the user chose which workspace was displayed on the monitor. The laptop monitor was positioned 60 cm from the participant. This setup is commonly used in mobile scenarios where extra monitors are unfeasible. Participants could switch between these desktops with a four-finger swipe gesture on the trackpad. We decided against using a keyboard shortcut (e.g., alt-tab) to preserve the window arrangement across conditions (as participants do not rearrange windows in this task) and to help participants maintain a single mental model of their work (Andrews et al., 2011). The trackpad on the laptop was used only for switching virtual desktops.

While each condition had its own monitor size, distance, and resolution, each monitor had a constant pixel count of 1920 × 1080. While keeping pixel count constant, we also aimed to provide conditions that could be used in an ecologically valid way.
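Because each condition combined a different screen size and viewing distance, it can help to express them in a common unit. The sketch below computes each monitor's horizontal visual angle from the sizes and distances listed above, assuming flat 16:9 screens viewed on-axis. For Virtual, 28.8″ is the nominal size of the rendered monitor, so its pixels-per-degree figure is nominal only; the resolution actually delivered to the eye also depends on the HWD optics, which this calculation ignores.

```python
import math

INCH_CM = 2.54


def width_cm(diagonal_in: float, aspect: tuple[int, int] = (16, 9)) -> float:
    """Screen width from its diagonal, assuming the given aspect ratio."""
    w, h = aspect
    return diagonal_in * INCH_CM * w / math.hypot(w, h)


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Angle subtended by an object of size_cm centred on the line of sight."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


conditions = {
    "Workstation": (24.0, 70.0),   # diagonal (in), viewing distance (cm)
    "Virtual": (28.8, 100.0),      # nominal size of the rendered monitor
    "Laptop": (15.6, 60.0),
}

for name, (diag, dist) in conditions.items():
    angle = visual_angle_deg(width_cm(diag), dist)
    # 1920 horizontal pixels spread over the monitor's angular width (average)
    print(f"{name}: {angle:.1f} deg wide, ~{1920 / angle:.0f} px/deg on average")
```

Under these assumptions, a Workstation monitor spans roughly 41.6°, a Virtual monitor roughly 35.4°, and the Laptop screen roughly 32.1° horizontally.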

3.2 Apparatus

We performed the experiment on a Lenovo ThinkPad P51 laptop with an Intel Xeon E3-1535M CPU, 128 GB of 3200 MHz DDR4 DRAM, an NVMe SSD, and an NVIDIA Quadro M2200 4 GB GPU. Participants accessed a full version of Windows 10.

We implemented virtual monitors using Unity Engine Pro, version 2019.4, and the Windows Graphics Capture API through a back-end Visual Studio C# application. Our immersive application replicated the monitor textures directly from the render buffer of the video card, with latency low enough that participants did not report it as a factor. We turned off the physical monitors during the Virtual condition but used the same system-generated textures. We combined the textures from all monitors on the same plane and continuously panned it as participants rotated their heads. The rendered surface matches the display's pixels with minimal distortion.

The Virtual condition used a proprietary HWD prototype created for productivity work (as the device was an unreleased commercial prototype, we are not able to provide complete specifications or an image of the actual HWD). The device has a “glasses” form factor (similar to the one shown in Figure 4 (left)), without head straps, and is about 26 mm deep at the front. We tethered the device to a laptop, but the cable allowed effortless head rotations. The custom optical design provided a 70° FOV and a resolution of 2560 × 2560 per eye, and the device weighed 165 g, less than most off-the-shelf devices. Latency was approximately equivalent to that of a physical monitor: all processing was conducted on the laptop, and the textures were output to the HWD. Time warping was not necessary, given the low complexity of the rendering pipeline. We had two prototypes of the device with fixed IPDs of 62 and 67 mm, and we selected the device for each participant based on their measured IPD.

FIGURE 4. Prototype similar to the one used in the study (left); Environment where the study was conducted, from the experimenter point of view (right). Created using Microsoft Word version 2105, microsoft.com.

The environment was an individual office space with controlled illumination, as seen in Figure 4 (right). Brightness and contrast were visually calibrated across the conditions to ensure similarity.

3.3 Experimental design

Our within-subjects independent variable was monitor type (Workstation, Virtual, and Laptop). We counterbalanced the order of presentation of the three conditions using a balanced Latin square. Our dependent variables included objective measures such as performance (time to answer blocks of questions) and accuracy (correctness of the answers). We also collected subjective, self-reported measures such as satisfaction, ease of use, confidence, cognition, and comfort. Because of our interest in participants' impressions of specific tradeoffs between our conditions, we opted to apply a custom-developed rating questionnaire inspired by other custom questionnaires used in existing literature (Cockburn and McKenzie, 2001; Czerwinski et al., 2003; Waldner et al., 2011; Pavanatto et al., 2021; Medeiros et al., 2022). Finally, we gathered in-depth qualitative data from questionnaires and interviews.
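For readers unfamiliar with the counterbalancing scheme, the sketch below builds a Williams-style balanced Latin square. With an odd number of conditions, such as the three used here, the mirrored rows are needed so that each condition immediately precedes every other condition equally often, which yields six orders. How orders were assigned to individual participants is not stated, so cycling through the rows by participant number is an assumption.

```python
def balanced_latin_square(conditions: list[str]) -> list[list[str]]:
    """Williams-style balanced Latin square of presentation orders."""
    n = len(conditions)
    # First row follows the pattern 0, 1, n-1, 2, n-2, ...
    first = []
    for j in range(n):
        if j == 0:
            first.append(0)
        elif j % 2 == 1:
            first.append((j + 1) // 2)
        else:
            first.append(n - j // 2)
    # Each further row shifts every entry by one (mod n)
    rows = [[(v + i) % n for v in first] for i in range(n)]
    if n % 2 == 1:
        # With an odd number of conditions, adding the mirrored rows restores
        # first-order carryover balance.
        rows += [list(reversed(r)) for r in rows]
    return [[conditions[k] for k in r] for r in rows]


orders = balanced_latin_square(["Workstation", "Virtual", "Laptop"])
for participant in range(1, 7):
    # Hypothetical assignment: cycle through the orders by participant number
    print(participant, orders[(participant - 1) % len(orders)])
```

For three conditions the construction produces all six possible orders; for an even number of conditions, n rows already suffice.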

3.4 Hypotheses

Based on the findings reported in the literature and our own experience trying the conditions, we tested the following hypotheses:

H1. Using virtual monitors for productivity work will lead to a measurable decrease in performance compared to physical monitors. Existing research has shown that using a HoloLens 2 with virtual monitors decreased performance compared to physical monitors (Pavanatto et al., 2021). While we optimized our prototype to enhance readability and hoped the gap between them would be smaller, we still expected virtual monitors not to perform as well as physical ones.

H2. Using the Virtual Condition will lead to a measurable performance increase compared to Laptop. We expected our system to perform better than a single laptop. That is because, unlike virtual monitors, a single screen with multiple desktops does not provide access to all windows at a glance, requiring users to switch the workspace actively.

H3. Users will have a similar accuracy when working in any of the conditions. We believed that the differences between the conditions would not yield differences in task correctness, only in performance and user experience. In other words, all conditions would allow users to perform work, but some would present higher usability than others.

3.5 Experimental task

Our experimental task reproduced the one used by Pavanatto et al. (2021), providing a baseline for comparison. Its design also preserves ecological validity, improving the validity of our results. Participants played the role of a head teaching assistant (TA) of a class and had to fill out an online form about their students' performance on an assignment.

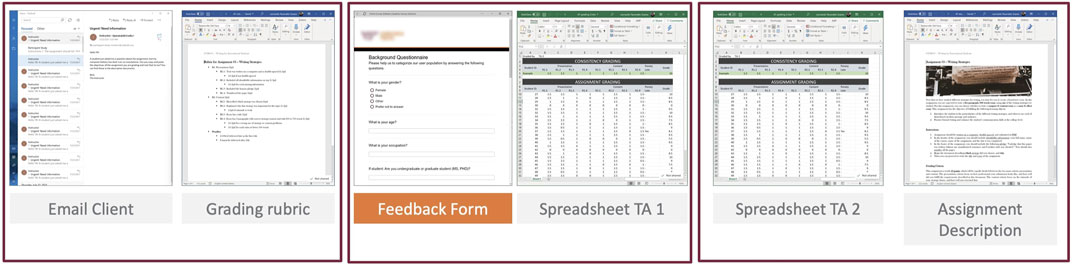

Participants were informed that they were responsible for aggregating the feedback given by other TAs. They had access to six windows distributed at fixed locations across their screens (Figure 5). Documents included a Word file showing the assignment description, two Excel files used by other TAs for grading each student (each spreadsheet covered half of the students), and a Word file with a grading rubric. Participants' objective was not to grade students but to aggregate existing data in an online report. The online report was a Qualtrics survey window where participants answered questions. We also modified the task to include an email-answering subtask. The sixth open window contained the Mail app from Windows 10, where participants could receive new messages in an email account created specifically for this study. During the task, the instructor sent them an email asking for specific information, which they had to locate across the documents and send back. This subtask took place within a specific question block so that it did not overlap with other questions. We divided each monitor vertically in half and placed windows in the following order, from left to right: email client, rubric, online form, Excel 1, Excel 2, and assignment description.

FIGURE 5. Left monitor: email client and grading rubric; Center monitor: online form (reproduced with permission) and TA 1 spreadsheet; Right monitor: TA 2 spreadsheet and assignment description. Created using Microsoft Word version 2105, microsoft.com. Created using Microsoft Excel version 2105, microsoft.com.

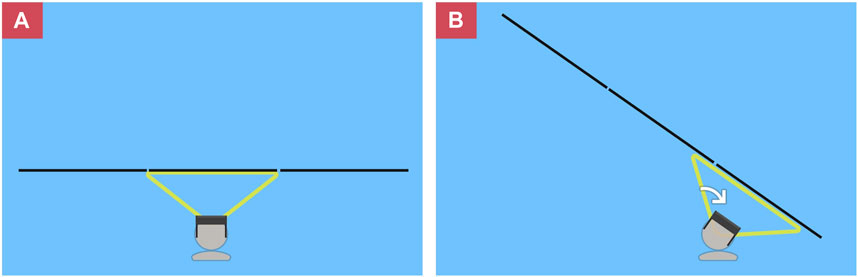

Participants were asked task questions divided into eight blocks that required reading, information transfer (both physical and cognitive), search, and writing across all three monitors. To minimize the learning effect, we presented the question blocks in a different order for each trial, but these orders were the same across participants. Table 1 shows the block descriptions, as provided by Pavanatto et al. (2021), with the addition of the email task. The last question block asked participants to find information and write feedback on it. We limited that block to 4 minutes as a compromise between providing a complete answer and not spending too much time thinking about the results.

3.6 Procedure

Our study was approved by the Institutional Review Board of Microsoft Research and conducted in person in a 90 min session. The experimenter thoroughly disinfected devices and surfaces. Participants and investigators followed COVID-19 safety protocols.

We recruited participants through mailing lists and social media posts and asked them to complete a screening questionnaire. To reduce session time and mitigate the risk of SARS-CoV-2 exposure, volunteers who matched our inclusion criteria scheduled a session, signed a digital consent form, and completed a background questionnaire before their in-person session.

Upon arrival, the experimenter greeted the participant and briefly introduced the environment and study. The participant completed an IPD measurement procedure to determine which device they would use. Before the main task, the investigator presented and clarified the questions from the task. After that, to ensure all participants had the required skills and knowledge, participants completed a small tutorial on using Excel. All initial procedures used only a single physical monitor to avoid bias.

In the virtual condition, the VR device was calibrated to render the virtual monitors in the same place across participants relative to the physical monitors. For other conditions, the experimenter ensured the position was similar across participants. After calibration, the participant had a minute to explore the condition and understand how it worked.

The experimenter placed the documents and windows on the screen during the main task, and participants were asked not to move them. The participants could freely alter the documents as they processed data to answer the questions. Once completed, participants answered a condition questionnaire. Each condition had distinct datasets (obtained from Pavanatto et al. (2021)) so that participants would have to determine new answers for each condition.

After completing all conditions, participants answered a final qualitative questionnaire and a 10-min semi-structured interview. Questions included preferences, positive and negative aspects, perception and readability, etc. From their answers and general observation, we asked follow-up questions that further let us understand the user experience.

3.7 Participants

Twenty-seven participants (aged 20 to 46, 13 female) from the local population, with normal vision or vision corrected with contact lenses, participated in the experiment. Two were professionals, 20 were graduate students, and 5 were undergraduates. All participants used at least one computer regularly, with 26 using a laptop, 25 using a mobile phone, and 14 using a desktop. Twenty-four people reported using a computer for at least 4 h during a weekday, with 13 using it for more than 8 h. Seventeen participants reported not having much experience with Virtual Reality, while 24 reported having good experience with Windows 10. Twenty reported good experience with Word, while 21 reported good experience with Excel. Finally, 16 reported having previous experience being a TA.

4 Results

We collected our results from multiple sources. A Qualtrics survey recorded the questionnaires, including qualitative and subjective quantitative measures, along with the time to answer each block of questions. From Unity, we obtained a frame-by-frame log of all events during the sessions, such as time, frame time, and cursor 2D coordinates. Finally, we recorded audio files with the responses given by participants during the semi-structured interviews. These were transcribed by Office Online, with manual verification and fixes completed by the authors.

We exported Qualtrics and Unity outputs to “.csv” formats, which were ideal for further processing through Python scripts. We performed the statistical analysis using the JMP Pro 16 software. We used an α level of 0.05 in all significance tests. The results figures mark significantly different pairs with * when p ≤ .05, ** when p ≤ .01, and *** when p ≤ .001.
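The post-processing scripts are not described in detail, so the sketch below only illustrates the kind of step involved. It assumes a hypothetical long-format CSV with `participant`, `condition`, and `seconds` columns, summarizes completion times per condition with Python's standard `csv` and `statistics` modules, and encodes the star convention used in the result figures. The example rows are toy values, not study data.

```python
import csv
import io
import statistics
from collections import defaultdict


def significance_stars(p: float) -> str:
    """Marker convention used in the result figures."""
    if p <= 0.001:
        return "***"
    if p <= 0.01:
        return "**"
    if p <= 0.05:
        return "*"
    return ""


def summarize_times(csv_text: str) -> dict[str, tuple[float, float]]:
    """Mean and sample SD of completion time per condition.

    Assumes a hypothetical export with columns participant, condition, seconds
    (one row per participant and condition).
    """
    by_condition = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        by_condition[row["condition"]].append(float(row["seconds"]))
    return {c: (statistics.mean(v), statistics.stdev(v))
            for c, v in by_condition.items()}


example = """participant,condition,seconds
P01,Workstation,600
P01,Virtual,650
P02,Workstation,640
P02,Virtual,700
"""
print(summarize_times(example))
print(significance_stars(0.038), significance_stars(0.0007))
```

With a real export, the `io.StringIO` wrapper would be replaced by an open file handle passed to `csv.DictReader`.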

We verified normality through Shapiro-Wilk tests and normal quantile plot inspections for all the cases before deciding whether to apply two-way mixed-design factorial analysis of variance (ANOVA), non-parametric tests, or a transformation before using ANOVA. We further performed pairwise comparisons using Tukey HSD when appropriate. Our two factors were the condition (Workstation, Virtual, or Laptop) within subjects and the order (first, second, or third) between subjects. We did find some statistical significance for order and interaction, but for simplicity, we will only discuss the main effects of condition, as those are our main findings.
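The analysis itself was run in JMP as a two-way mixed-design ANOVA. As a simplified illustration of the ANOVA step only, the following stdlib-only sketch computes the F statistic of a one-way repeated-measures ANOVA (condition as the sole factor, ignoring the between-subjects order factor), with an optional log transform like the one applied to the non-normal completion times. It deliberately omits the p-value, the Shapiro-Wilk check, and the Tukey HSD comparisons, which in practice would come from a statistics package; the function name and toy data are hypothetical.

```python
import math


def rm_anova_oneway(data: list[list[float]], log_transform: bool = False):
    """One-way repeated-measures ANOVA on a subjects x conditions table.

    Returns (F, df_condition, df_error).
    """
    if log_transform:
        data = [[math.log(x) for x in row] for row in data]
    n, k = len(data), len(data[0])  # subjects, conditions
    grand = sum(map(sum, data)) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    # Residual = condition x subject interaction, the error term for RM designs
    ss_error = ss_total - ss_cond - ss_subj
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_error / df_error)
    return f, df_cond, df_error


# Toy table: 3 subjects (rows) x 3 conditions (columns)
print(rm_anova_oneway([[1, 2, 3], [2, 4, 6], [3, 3, 6]]))
```

Removing the between-subjects variance (`ss_subj`) before forming the error term is what distinguishes this from an independent-groups ANOVA.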

4.1 Performance

Results show that Laptop was the slowest condition overall, 14% slower than Workstation and 4% slower than Virtual, although Laptop performed better than Virtual in one question block. The distribution of completion times was not normal (W = 0.9454, p = .0018), so we applied a logarithmic transform and found a significant main effect of condition (F2,2 = 3.43, p = .038). Mean completion times were: Workstation (M = 614.37 s, SD = 303.03), Virtual (M = 674.52 s, SD = 191.84), and Laptop (M = 703.19 s, SD = 223.38). Pairwise analysis showed that Laptop was significantly slower than Workstation (p = .042).
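The relative differences quoted above are consistent with computing (slower mean − faster mean) / faster mean. The minimal sketch below assumes that convention; the helper name `percent_slower` is hypothetical. It reproduces the 14% and 4% figures from the reported mean completion times.

```python
def percent_slower(slower_mean: float, faster_mean: float) -> float:
    """Relative difference of slower_mean over faster_mean, in percent.

    Assumes the faster condition is the baseline (denominator).
    """
    return (slower_mean - faster_mean) / faster_mean * 100.0


# Mean completion times (s) reported for each condition.
means = {"Workstation": 614.37, "Virtual": 674.52, "Laptop": 703.19}

print(round(percent_slower(means["Laptop"], means["Workstation"])))  # 14
print(round(percent_slower(means["Laptop"], means["Virtual"])))      # 4
```

The choice of baseline matters. Dividing by the slower condition instead would yield smaller percentages for the same pair of means.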

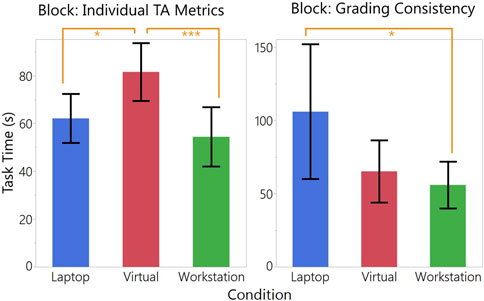

We further analyzed the individual question blocks and found significant results in two blocks, with Virtual and Laptop alternating as the worse performer. For the “Individual TA Metrics” block, there was a significant main effect of condition (F2,2 = 8.126, p = .0007). Pairwise analysis showed that Virtual was significantly slower than both Laptop (p = .0178) and Workstation (p = .0006). For the “Grading Consistency” block, there was a main effect of condition (F2,2 = 4.703, p = .012). Pairwise analysis showed that Laptop was significantly slower than Workstation (p = .0142) and marginally slower than Virtual (p = .0558). Figure 6 shows the values with 95% confidence intervals.

FIGURE 6. Time in seconds to complete selected question blocks - Individual TA Metrics (left) and Grading Consistency (right). Lower bars are better. Error bar represents the confidence interval (95%).

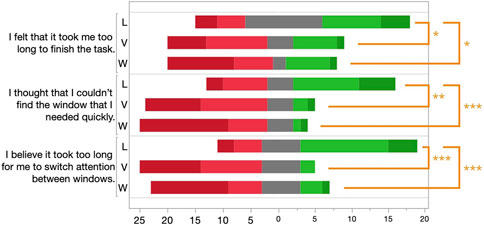

Participants subjectively rated their perceived performance (Figure 7). In “I felt that it took too long to finish the task.”, Laptop (M = 3.11, SD = 1.31) was rated 44% higher than Workstation (Z = −2.56, p = .0106), and 50% higher than Virtual (Z = −2.06, p = .0398). In “I thought that I could not find the window that I needed quickly.”, Laptop (M = 3.18, SD = 1.35) was rated 90% higher than Workstation (Z = −4.04, p < .0001), and 63% higher than Virtual (Z = −3.26, p = .0011). Finally, in “I believe it took too long for me to switch attention between windows.”, Laptop (M = 3.34, SD = 1.27) was rated 9% lower than Workstation (Z = −3.67, p < .0001), and 16% lower than Virtual (Z = −4.01, p < .0001).

FIGURE 7. Performance statement ratings. Scale goes from red (completely disagree) to green (completely agree).

4.2 Accuracy

Results show no significant difference in accuracy. To generate scores, we graded each answer as either right (1) or wrong (0). Some questions, such as the copy-and-paste and numerical ones, had known correct values. For others, such as the feedback question, we used a rubric that assessed the quality and completeness of the response. The total score, the sum of the scores for every question, was analyzed using Wilcoxon/Kruskal–Wallis tests.

We did not find any main effect of condition on score (p = .771). Accuracy levels were around 82% across all conditions and orders. We also conducted a chi-square test of proportions for each question individually but found no significant differences. The result closest to significance was for the “Feedback” question (χ² = 4.294, p = .1168), where scores were: Workstation (M = 62.96%, SD = 49.21%), Laptop (M = 59.25%, SD = 50.07%), and Virtual (M = 37.03%, SD = 49.21%).
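The “Feedback” statistic can be checked from the reported proportions. The sketch below rests on two assumptions. First, it assumes 27 graded answers per condition, giving 17, 16, and 10 correct answers (17/27 = 62.96%, 16/27 ≈ 59.26%, 10/27 ≈ 37.04%). Second, it assumes the reported χ² is the likelihood-ratio (G) statistic, which some statistics packages report alongside Pearson's. The helper `chi_square_tests` is hypothetical.

```python
import math


def chi_square_tests(table):
    """Pearson and likelihood-ratio (G) chi-square for an r x c count table.

    Returns (pearson, g_squared, dof). Expected counts come from the row
    and column margins under the null hypothesis of independence.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    pearson = 0.0
    g_squared = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            pearson += (observed - expected) ** 2 / expected
            if observed > 0:  # the term 0 * log(0) is taken as 0
                g_squared += 2.0 * observed * math.log(observed / expected)
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return pearson, g_squared, dof


# Rows: Workstation, Laptop, Virtual; columns: correct, incorrect.
# Counts are inferred from the reported percentages (assumes n = 27 each).
feedback = [[17, 10], [16, 11], [10, 17]]
pearson, g2, dof = chi_square_tests(feedback)
# For 2 degrees of freedom the chi-square survival function is exp(-x / 2).
p_g2 = math.exp(-g2 / 2) if dof == 2 else float("nan")
print(f"Pearson = {pearson:.3f}, G2 = {g2:.3f}, dof = {dof}, p(G2) = {p_g2:.4f}")
```

With these inferred counts, the likelihood-ratio statistic comes out at about 4.294 with p ≈ .1168, matching the reported values. Pearson's statistic for the same table is about 4.263.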

We also asked participants to rate their perceived accuracy. There was no significant effect for either “I felt that I delivered a quality result on the task.” (p = .54) or “I think that I made many mistakes while answering the questions.” (p = .12).

4.3 Subjective feedback

We asked participants to subjectively rate many different usability factors, which was essential for understanding the specific issues behind the detected differences in performance and accuracy. Given the ordinal nature of the data, we evaluated the ratings using non-parametric Wilcoxon/Kruskal–Wallis tests.
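The Kruskal–Wallis test pools all ratings, ranks them, and compares the mean ranks across conditions. Likert-style ratings contain many ties, so the usual tie correction matters. Below is a minimal pure-Python sketch of the H statistic with that correction. It is an illustration only, not the JMP procedure used in the analysis; in practice, `scipy.stats.kruskal` computes the same statistic together with its p-value.

```python
from itertools import chain


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of tied values starting at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H statistic with the standard correction for ties.

    Raises ZeroDivisionError if every pooled value is identical.
    """
    pooled = list(chain.from_iterable(groups))
    n = len(pooled)
    ranks = average_ranks(pooled)
    weighted = 0.0
    start = 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        weighted += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * weighted - 3.0 * (n + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n).
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())
    return h / (1.0 - ties / (n ** 3 - n))


# Toy ratings for two hypothetical conditions.
print(kruskal_wallis_h([1, 1, 2], [2, 3, 3]))  # ≈ 3.333
```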

4.3.1 Satisfaction

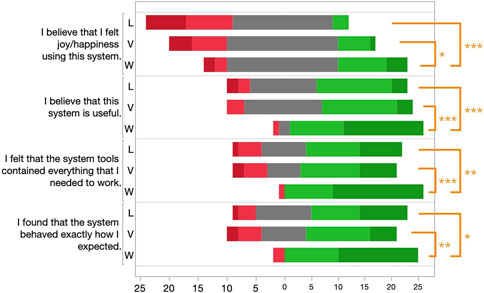

Participants reported higher user satisfaction with Workstation than the other two conditions (Figure 8).

FIGURE 8. Satisfaction statement ratings. Scale goes from red (completely disagree) to green (completely agree).

In “I felt joy/happiness using this system.”, Workstation (M = 3.41, SD = 1.08) was rated 48% higher than Laptop (p < .001), and 22% higher than Virtual (p = .0367). In “I believe this system is useful.”, Workstation (M = 4.45, SD = 0.75) was rated 26% higher than Laptop (p < .001), and 22% higher than Virtual (p < .001). In “I felt the system tools contained everything I needed to work.”, Workstation (M = 4.56, SD = 0.70) was rated 25% higher than Virtual (p < .001), and 21% higher than Laptop (p = .0038). In “The system behaved exactly how I expected.”, Workstation (M = 4.41, SD = 0.84) was rated 25% higher than Virtual (p = .002), and 15% higher than Laptop (p = .0366).

4.3.2 Ease of use

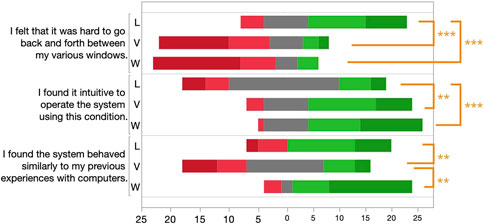

Results showed that Laptop was the hardest condition to use. Not surprisingly, Virtual was rated the least similar to participants’ previous experiences with computers (Figure 9).

FIGURE 9. Ease of Use statement ratings. Scale goes from red (completely disagree) to green (completely agree).

In “I felt that it was hard to go back and forth between my various windows.”, Laptop (M = 3.85, SD = 1.03) was rated 81% higher than Virtual (p < .001), and 112% higher than Workstation (p < .001). In “I found it intuitive to operate the system using this condition.”, Laptop (M = 3.00, SD = 1.21) was rated 22% lower than Virtual (p = .005), and 29% lower than Workstation (p < .001). In “I found the system behaved similarly to my previous experiences with computers.”, Laptop (M = 3.67, SD = 1.27) was rated 30% higher than Virtual (p = .016), and Workstation (M = 4.34, SD = 1.00) was rated 18% higher than Laptop (p = .019).

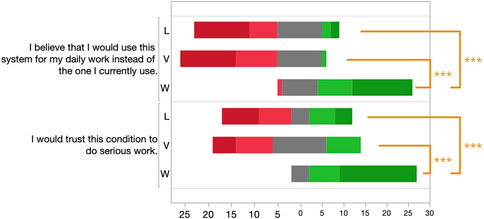

4.3.3 Confidence

The confidence statements revealed the largest differences between the conditions: participants considered Virtual and Laptop less trustworthy and less acceptable than Workstation (Figure 10).

FIGURE 10. Confidence statement ratings. Scale goes from red (completely disagree) to green (completely agree).

In “I would trust this condition to do serious work.”, Workstation (M = 4.59, SD = 0.63) was rated 71% higher than Laptop (p < .001), and 74% higher than Virtual (p < .001). In “I would use this system for my daily work instead of the one I currently use.”, Workstation (M = 4.30, SD = 0.87) was rated 102% higher than Laptop (p < .001), and 137% higher than Virtual (p < .001).

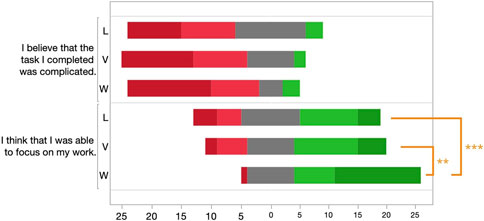

4.3.4 Cognition

While we did not administer a full cognitive load questionnaire, we did include two statements to understand how much participants could focus on their work and how they judged the difficulty of the task (Figure 11).

FIGURE 11. Cognition statement ratings. Scale goes from red (completely disagree) to green (completely agree).

In “I was able to focus on my work”, Workstation (M = 4.30, SD = 0.99) was rated 33% higher than Laptop (p = .001), and 24% higher than Virtual (p = .004).

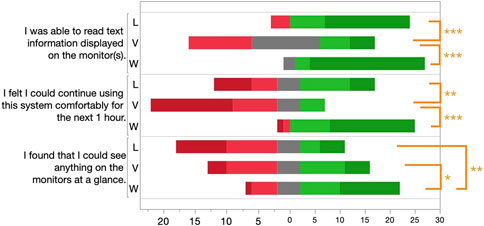

4.3.5 Comfort

In “I was able to read text information displayed on the monitor(s)”, Virtual (M = 3.22, SD = 1.15) was rated 26% lower than Laptop (p < .001), and 33% lower than Workstation (p < .001). In “I could continue using this system comfortably for the next 1 h”, Virtual (M = 1.96, SD = 1.16) was rated 37% lower than Laptop (p = .003), and Laptop (M = 3.14, SD = 1.49) was rated 29% lower than Workstation (p < .001). In “I could see anything on the monitors at a glance”, Workstation (M = 3.96, SD = 1.22) was rated 24% higher than Virtual (p = .0277), and 50% higher than Laptop (p = .0018).

4.4 Qualitative feedback

While the subjective ratings presented in previous sections help us understand general user experience issues that could affect performance and accuracy, questions remain about the specific reasons for those issues. We obtained a substantial amount of qualitative feedback through semi-structured interviews and written responses. We report common or meaningful comments regarding the Virtual condition, organized by topic.

4.4.1 Virtual monitors responses

Participants provided positive feedback on the virtual monitors. P1 stated: “I do not have to explicitly click on one of the windows to have it appear.” P10 commented, “it is easier to switch between Windows compared to the other two.” Regarding focus, P9 noted that Virtual displayed only the monitors, while P15 commented that they were less distracted since they did not know what was happening around them. P23 raised the issue of familiarity: “I think I prefer this (workstation) just because I have experience in it, but with the virtual monitor, I do not really have experience. But I did find it really easy to use.”

Participants were also optimistic about the future. P2 said, “It is just the comfort factor that needs to be made a little better. If that can be done, I can definitely see myself using virtual monitors in the future.” P5 agreed, stating: “It is hard in a laptop, but with the VR it is really easier, […] you can actually see a seamless thing at the same time.” P7 also said: “I liked the virtual monitors much more than the laptop version, and I could see myself using that. The only drawback I felt was the comfort of the glasses.” P27 added, “if you want to go everywhere, then the virtual one makes more sense […] It has more benefits over a laptop.”

4.4.2 Text readability

While all participants could read the text and finished the tasks without issues, 13 complained about the blurriness of the display. As P11 said, “I was able to read things properly, but I could see that being a problem because the resolution was not that high and some parts were a bit blurry compared to a regular workstation.” Of those, seven felt the entire display was blurrier than a physical monitor, while the other six specifically reported that the blurriness was localized to the display’s edges.

Part of the overall blurriness could be attributed to eye strain; as P21 said, “I felt at some point everything was getting blurry. Maybe because I had to put continuous focus on something.” Part of the localized blurriness could be explained by lens positioning: the image was clear at the center, but eye movements led to heavy distortion and blurriness toward the edges. As P15 mentioned, “I felt that the main problem was that my eyes were not close enough to the lenses, so it was very blurry at the edges”. All six participants further reported that this led them to move their heads while keeping their eyes still, which is not what humans usually do in the real world, as it is a slower and more fatigue-inducing operation. As P12 stated, “In the VR case, my vision was blurred. So I had to move my head a lot when looking at a screen because I could not use my peripheral vision.”

4.4.3 Head movement

One of the more prominent factors for diminished performance reported by Pavanatto et al. (2021) was the amount of head movement participants had to perform in the Virtual condition. We found mixed results on head movement in the Virtual condition. Most participants did not comment on head movement or neck fatigue, but six still complained about moving their heads too much. As P3 stated, “I had to move my head a lot. If I wanted to work for a long time, I would get tired”. P13 also said that “I probably end up moving my head much more than needed.” Interestingly, P26 still thought that the monitor was too big: “Sometimes I found it too big, so I had to move my head a lot and it was kind of straining on my neck. I would like to have the opportunity to resize the monitor where I am comfortable with them.” Since the gains resulted in the same amount of head rotation as in Workstation, we believe participants perceived excessive head movement because they preferred head rotations over eye movements, given the previously mentioned distortions at the edges.

Other participants complained about the opposite problem. Three participants reported that it was hard to focus on elements because of the jitter introduced by the virtual gains. As P24 mentioned: “There was a lot of movement …when I changed my head angle just a little bit. It was like this screen movement, and it was not very convenient.” P17 had a similar thought: “…it felt like when you move your head …the virtual window, it was moving very fast, so it was hard to focus on any particular text or paragraph.”

Four participants raised an important issue with our approach. As P23 said, “having to move my head was the hardest part and then also not being able to …get closer to the monitor”. P1 had a similar perspective: “…I did not want to move monitors with myself. If I rotate my head, I do not want them to be rotated”. Both were complaining about our decision to make the monitors head-fixed. Participants could not get closer to the screen by moving their heads forward, and the monitors did not stay still when they rotated their heads around the roll axis. This led to confusion and prevented users from performing natural interactions, such as leaning closer to small text to read it better. P13 also mentioned the issue, adding that “I think if they were just fixed in space, they would be much better to use, I guess than what we have here.”
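The head-fixed behavior participants described can be illustrated with a toy model. This is a minimal sketch under loud assumptions and does not describe the authors' implementation. It assumes a constant amplification gain applied to head yaw and pitch. It also assumes head roll and translation are ignored, which is the behavior participants reported. The names `HeadPose` and `pan_offset` and the gain value are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class HeadPose:
    yaw_deg: float      # rotation left/right
    pitch_deg: float    # rotation up/down
    roll_deg: float     # head tilt toward a shoulder
    forward_m: float    # translation toward the monitors


def pan_offset(pose: HeadPose, gain: float) -> tuple[float, float]:
    """Angular pan (yaw, pitch) applied to head-fixed virtual monitors.

    Hypothetical model: rotation is amplified by a constant gain, while
    roll and forward translation are ignored. Leaning in therefore never
    brings the monitors closer, and tilting the head tilts them with it.
    """
    return (gain * pose.yaw_deg, gain * pose.pitch_deg)


# A 10-degree head turn with an assumed gain of 1.5 pans the view by 15 degrees;
# the 30-degree roll and 0.1 m lean have no effect in this model.
print(pan_offset(HeadPose(yaw_deg=10, pitch_deg=-4, roll_deg=30, forward_m=0.1), gain=1.5))
```

With any gain above 1, small unintended head motions and tracking noise are scaled by the same factor. This is consistent with the jitter some participants reported.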

4.4.4 Keyboard visibility

Keyboard visibility is needed for typing in VR (Hoppe et al., 2018; Schneider et al., 2019). Initial system tests suggested that users could see the keys through the gap between the headset and their faces. Still, if this visibility was not optimal, it could influence performance and accuracy, especially on typing-heavy questions. For most participants, like P5, it did work as intended “I could see like under the glasses and then also like on the sides a little bit which I liked.” But overall, 11 participants reported trouble seeing the keyboard. As P26 mentioned, “it was hard to look at the keyboard while I was wearing the glasses.” P13 added “because of the frames I could not see the keyboard and that made it hard to operate.” P11 tried to use a strategy to reduce how often they had to look down: “often like it took me a while to get my hands figured out so I did not have to look down often. But every now and then, when I had to find a key, it was a bit cumbersome.”

This issue may have had a substantial impact on both performance and accuracy. As P23 mentioned, “I noticed that I had a couple more typos using VR than I normally would have.” P16 was more emphatic, “that’s the biggest issue”, and “I had a lot of missed key presses, a lot of them, and it was because I was attempting to use it without looking at the keyboard.”

Five participants complained about the disconnect between displaying the monitors in VR and having the keyboard in the physical environment. As P22 stated, “I’m seeing through the VR, but I’m typing through the keyboard, so when I look at the keyboard, I have to look underneath of the device. So I found that really uncomfortable, like switching between the VR spectacle and the keyboard. I have to shift my eyes a little bit and try to look down the window.” P23 agreed, saying, “it was a little awkward to move back and forth with the headset and looking at the keyboard.” P10 had a strong opinion, “when I put the virtual glasses …at first I did not know how to type.” P5 suggested that we could fix this issue using a virtual representation: “I need some kind of representation of where my keyboard is like, even if I just had like a virtual model of the keyboard that I’m using that’s tracked in space.”

4.4.5 Device fit

The majority of participants (15) struggled to position the device comfortably on their heads, ensuring it sat still and their eyes were at the optical sweet spot (to reduce distortions). As P20 stated, “The only drawback I felt was the comfort of the glasses. They were uncomfortable.” Upon request, they further explained “It kept sliding. It was uncomfortable on my face and my nose”. They added an interesting insight that is easily overlooked: “…you know you would not want something to leave a mark on your face.”

P19 wondered if another mechanism could keep the glasses in place: “I’m debating on a way that it fits on the face better than this. I think it would be much better, and working with it will be much easier”. P12 was more worried about positioning the optics at the right place: “The positioning of the lenses, so some mechanism to fit it, keep it in place, would be helpful.” An interesting comment came from P9, “I found it a bit bulky. So I think a lighter and less bulky version of it might be something that I would go for.” As stated before, this device is lighter than most commercially available devices, yet it was still perceived as bulky in this glasses form factor. P8 further discussed ergonomics, “I would need the headset to fit better because it is big on my head it is very awkward and uncomfortable. I do not find the weight is as distracting as the fact that it kept feeling like it was going to fall off my face.”

4.4.6 Simulator sickness

We did not obtain any direct measures of simulator sickness, but there were six complaints about it. P21 attributed it to the virtual gains moving things faster: “I was getting nauseous, the kind of nausea you get like …when I am traveling I cannot use my phone because if I look at something like if I focus my attention like I get dizzy and I start feeling bad.” Other participants did not report any sickness; P8 reported: “No [simulator sickness], which is shocking ’cause I’m really easily motion sick when I put on virtual headsets.”

5 Discussion

Overall, Virtual was 9.10% slower than Workstation, and Laptop was 12.6% slower than Workstation. However, since the difference between Virtual and Workstation was not statistically significant, the results do not support H1, that we would find a measurable difference between Virtual and Workstation. Still, the gap was larger than expected, as Virtual performed closer to Laptop than to Workstation. For the same reason, this study does not fully support H2: the difference between Virtual and Laptop performance was small and not statistically significant. Subjectively, however, participants perceived Laptop as the slowest, believing that they took longer to finish the task, find the window of interest, and switch attention between windows.

Two individual question blocks yielded notable performance results. Virtual was significantly slower than both Workstation and Laptop in the second block, which required quick copying and pasting. This difference could indicate that participants had difficulty reading the text or typing on the keyboard. Question block three showed a different pattern, as it did not require typing, and users only had to glance at and compare information from two windows. Here, Laptop was significantly slower than Workstation and marginally slower than Virtual, which we expected given that users needed to switch virtual desktops to compare the information from both windows. Together, these results suggest that users may have had more substantial issues using the keyboard in the Virtual condition, but more evidence is needed to confirm this.

Based on the subjective ratings and qualitative data, comfort was a major issue in the Virtual condition (Figure 12). Notably, many of these problems are mainly hardware-related. We believe two primary factors caused this issue. First, participants struggled to see the keyboard while typing. Although we expected participants to see the keyboard through the gap below the headset, many had trouble doing so, which made context switching between the virtual monitors and the physical keyboard difficult. Based on participants’ comments, we believe that a substantial share of the performance loss in the Virtual condition was due to this issue. The second factor was a coupling between device fit and head movement. Both the ratings and the qualitative data show that the device was uncomfortable. Some participants complained that it did not fit properly over their noses and that they had trouble making head turns.

FIGURE 12. Comfort statement ratings. Scale goes from red (completely disagree) to green (completely agree).

Furthermore, imprecise placement of the optical sweet spot led to blurriness, which further distorted the edges of the display and forced participants to rely more on head movement and less on eye movement, which is more time-consuming. Since participants’ IPDs were not exactly 62 mm or 67 mm, there was some IPD mismatch in the system, which may have made positioning the glasses difficult for some participants. However, we do not believe this mismatch caused binocular vision problems, since the offsets were small and no participants reported difficulty converging their eyes. Other factors, such as undesired head movement caused by the amplification gains and simulator sickness, probably influenced the results to a lesser extent.

Regarding our third hypothesis on accuracy (H3), we found no statistically significant differences in total accuracy on either objective or subjective metrics. This result is consistent with prior literature suggesting that accuracy is less susceptible to the limitations of virtual monitors. In other words, while participants took longer to complete the task on virtual monitors, their overall accuracy was similar. The last question block, which required participants to consider the documents as a whole and type complete responses, showed a trend: Virtual tended to perform worse than Workstation, with more errors. While this is not conclusive, it again points to the keyboard visibility problem. Another possibility is that, because this was the last question block, participants were already affected by other factors, such as neck strain from increased head movement, simulator sickness, or blurriness due to eye strain. Given that this effect was not observed in prior work (Pavanatto et al., 2021), we believe keyboard visibility is still the most likely explanation.

From those findings, we derive a few general guidelines to inform the future design of virtual monitors and VR hardware.

1. VR-based virtual monitors can be more beneficial than a single laptop screen in mobile situations requiring multiple windows. Otherwise, workstations are expected to provide better results.

2. Using a head-fixed virtual monitor that pans based on head movements can improve readability. However, it also can remove other useful features, such as getting closer to the screen. This approach may also induce some simulator sickness.

3. HWDs with a glasses form factor must be much lighter than conventional form factors, as the lack of a head strap makes securing them on the user’s head much more complicated.

4. HWDs with a glasses form factor require mechanisms to correctly align the user’s eyes to the optical sweet spot.

5. When designing virtual monitors, remember that only part of the FOV available to the user may be usable. Distortion at the lens edges can strongly degrade text quality and force users to move their heads instead.
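Guideline 5 can be made concrete by comparing the visual angle a virtual monitor subtends against the region of the display that stays sharp. The sketch below uses the standard visual-angle formula, 2·atan(size / (2·distance)). The monitor width, viewing distance, and 40° "usable FOV" are hypothetical values chosen for illustration, not measurements of the study's headset.

```python
import math


def visual_angle_deg(size_m: float, distance_m: float) -> float:
    """Angle subtended by a flat surface of a given size, centered on the line of sight."""
    return math.degrees(2.0 * math.atan(size_m / (2.0 * distance_m)))


# Hypothetical values: a 0.6 m wide virtual monitor placed 0.6 m away,
# checked against an assumed 40-degree region of clear, low-distortion view.
width = visual_angle_deg(0.6, 0.6)
usable_fov = 40.0
print(f"{width:.1f} deg wide; fits usable FOV: {width <= usable_fov}")
```

If the monitor subtends more than the undistorted region, its edges fall into the blurry periphery of the lenses. In that case, users can be expected to turn their heads rather than their eyes.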

Our results further reinforce that when using VR-based virtual monitors, it is necessary to enable a clear view of the physical keyboard while reducing visual switches between the virtual and physical world (e.g., by providing a camera pass-through view to the real world when the user looks down at the keyboard), as discussed in existing literature (Hoppe et al., 2018; Schneider et al., 2019).

6 Limitations

Some limitations of this work should be noted: 1) the hardware used in this study is not commercially available and was designed with specific characteristics aimed at presenting virtual displays; 2) this study did not compare world- and body-fixed coordinate systems for virtual displays, so we cannot isolate the specific contributions of the panning approach; although our previous work (Pavanatto et al., 2021) compared world-fixed virtual monitors against physical monitors, that condition was not included in this study; and 3) some issues detected in this study, such as comfort, blurriness, and distortion, were specific to the custom HWD used and may not generalize, although we believe the lessons still apply to future designs.

7 Conclusions and future work

We explored the effect of enhanced readability on the user experience of virtual monitors. Based on the factors we identified, we created a prototype that improves readability by reducing texture distortions and providing higher display resolution. We evaluated our approach against two existing approaches for conducting productivity work. Our results show that increasing readability in a virtual monitor interface reduced the disparity between a setup of physical monitors and another with only virtual monitors. We also showed that virtual monitors were more beneficial than a single laptop screen, reinforcing the significant role that virtual monitors can play in improving productivity anywhere.

This work explored virtual displays in the form of monitors, trying to mirror the characteristics of physical monitors. However, the most significant advantages of virtual displays could lie in properties and behaviors that physical monitors cannot achieve. Follow-up studies can investigate the optimal size for displays, strategies or techniques for placing content, and even elements like depth and intelligent responses based on sensor information. Future studies should also investigate the effects of deploying such a system in the wild, in settings where it is not feasible to use a physical monitor.

Data availability statement

The datasets presented in this article are not readily available because informed consent did not include sharing raw data with third parties or for use in other studies. Requests to access the datasets should be directed to lpavanat@vt.edu.

Ethics statement

The studies involving humans were approved by the Institutional Review Board (IRB) at Microsoft Research. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

LP, CB, and RS contributed to conception and design of the study. SD and DB reviewed the study design. LP performed the user study sessions and the statistical analysis. LP wrote the first draft of the manuscript. All authors contributed to the article and approved the submitted version.

Funding

This study was funded by Microsoft Corporation.

Conflict of interest

Authors LP, SD, CB, and RS were employed by Microsoft Corporation.

The remaining author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors declare that this study received funding from Microsoft Corporation. The funder had the following involvement in the study: study design, data collection and data analysis.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Andrews, C., Endert, A., Yost, B., and North, C. (2011). Information visualization on large, high-resolution displays: issues, challenges, and opportunities. Inf. Vis. 10, 341–355. doi:10.1177/1473871611415997

Bellgardt, M., Pick, S., Zielasko, D., Vierjahn, T., Weyers, B., and Kuhlen, T. W. (2017). “Utilizing immersive virtual reality in everyday work,” in 2017 IEEE 3rd Workshop on Everyday Virtual Reality (WEVR), Los Angeles, CA, USA, 19 March 2017 (IEEE), 1–4. doi:10.1109/WEVR.2017.7957708

Biener, V., Kalamkar, S., Nouri, N., Ofek, E., Pahud, M., Dudley, J. J., et al. (2022). Quantifying the effects of working in VR for one week. IEEE Trans. Vis. Comput. Graph. 28, 3810–3820. doi:10.1109/TVCG.2022.3203103

Biener, V., Schneider, D., Gesslein, T., Otte, A., Kuth, B., Kristensson, P. O., et al. (2020). Breaking the screen: interaction across touchscreen boundaries in virtual reality for mobile knowledge workers. IEEE Trans. Vis. Comput. Graph. 26, 3490–3502. doi:10.1109/TVCG.2020.3023567

Butscher, S., Hubenschmid, S., Müller, J., Fuchs, J., and Reiterer, H. (2018). “Clusters, trends, and outliers: how immersive technologies can facilitate the collaborative analysis of multidimensional data,” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (New York, NY, USA: Association for Computing Machinery), 1–12. doi:10.1145/3173574.3173664

Büttner, A., Grünvogel, S. M., and Fuhrmann, A. (2020). “The influence of text rotation, font and distance on legibility in VR,” in 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22-26 March 2020 (IEEE), 662–663.

Cockburn, A., and McKenzie, B. (2001). “3D or not 3D? Evaluating the effect of the third dimension in a document management system,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (New York, NY, USA: Association for Computing Machinery), 434–441. doi:10.1145/365024.365309

Czerwinski, M., Smith, G., Regan, T., Meyers, B., Robertson, G. G., and Starkweather, G. K. (2003). “Toward characterizing the productivity benefits of very large displays,” in Human-Computer Interaction INTERACT '03: IFIP TC13 International Conference on Human-Computer Interaction, Zurich, Switzerland, 1-5 September 2003 (IEEE), 9–16.

Davari, S., Lu, F., and Bowman, D. A. (2020). “Occlusion management techniques for everyday glanceable AR interfaces,” in 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22-26 March 2020 (IEEE), 324–330. doi:10.1109/VRW50115.2020.00072

Dingler, T., Kunze, K., and Outram, B. (2018). “VR reading UIs: assessing text parameters for reading in VR,” in Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems (New York, NY, USA: Association for Computing Machinery), 1–6.

Dittrich, E., Brandenburg, S., and Beckmann-Dobrev, B. (2013). “Legibility of letters in reality, 2D and 3D projection,” in Virtual augmented and mixed reality. Designing and developing augmented and virtual environments. Editor R. Shumaker (Berlin, Heidelberg: Springer Berlin Heidelberg), 149–158.

Eiberger, A., Kristensson, P. O., Mayr, S., Kranz, M., and Grubert, J. (2019). “Effects of depth layer switching between an optical see-through head-mounted display and a body-proximate display,” in Symposium on Spatial User Interaction (New York, NY, USA: Association for Computing Machinery). doi:10.1145/3357251.3357588

Ens, B. M., Finnegan, R., and Irani, P. P. (2014). “The personal cockpit: a spatial interface for effective task switching on head-worn displays,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (New York, NY, USA: Association for Computing Machinery), 3171–3180.

Erickson, A., Kim, K., Lambert, A., Bruder, G., Browne, M. P., and Welch, G. F. (2021). An extended analysis on the benefits of dark mode user interfaces in optical see-through head-mounted displays. ACM Trans. Appl. Percept. (TAP) 18, 1–22. doi:10.1145/3456874

Falk, J., Eksvärd, S., Schenkman, B., Andrén, B., and Brunnström, K. (2021). “Legibility and readability in augmented reality,” in 2021 13th International Conference on Quality of Multimedia Experience (QoMEX), Montreal, QC, Canada, 14-17 June 2021 (IEEE), 231–236.

Feiner, S., MacIntyre, B., Haupt, M., and Solomon, E. (1993). “Windows on the world: 2D windows for 3D augmented reality,” in Proceedings of the 6th Annual ACM Symposium on User Interface Software and Technology (New York, NY, USA: Association for Computing Machinery), 145–155. doi:10.1145/168642.168657

Fereydooni, N., and Walker, B. N. (2020). “Virtual reality as a remote workspace platform: opportunities and challenges,” in Microsoft New Future of Work Virtual Symposium (New York, NY, USA: Association for Computing Machinery), 1–6.

Gabbard, J. L., Mehra, D. G., and Swan, J. E. (2019). Effects of AR display context switching and focal distance switching on human performance. IEEE Trans. Vis. Comput. Graph. 25, 2228–2241. doi:10.1109/TVCG.2018.2832633

Gabbard, J. L., Swan, J. E., and Hix, D. (2006). The effects of text drawing styles, background textures, and natural lighting on text legibility in outdoor augmented reality. Presence 15, 16–32. doi:10.1162/pres.2006.15.1.16

Gattullo, M., Uva, A. E., Fiorentino, M., and Monno, G. (2014). Effect of text outline and contrast polarity on AR text readability in industrial lighting. IEEE Trans. Vis. Comput. Graph. 21, 638–651. doi:10.1109/TVCG.2014.2385056

Grout, C., Rogers, W., Apperley, M., and Jones, S. (2015). “Reading text in an immersive head-mounted display: an investigation into displaying desktop interfaces in a 3D virtual environment,” in Proceedings of the 15th New Zealand Conference on Human-Computer Interaction (New York, NY, USA: Association for Computing Machinery), 9–16. doi:10.1145/2808047.2808055

Grubert, J., Langlotz, T., Zollmann, S., and Regenbrecht, H. (2017). Towards pervasive augmented reality: context-awareness in augmented reality. IEEE Trans. Vis. Comput. Graph. 23, 1706–1724. doi:10.1109/TVCG.2016.2543720

Grubert, J., Ofek, E., Pahud, M., and Kristensson, P. O. (2018). The office of the future: virtual, portable, and global. IEEE Comput. Graph. Appl. 38, 125–133. doi:10.1109/mcg.2018.2875609

Hoppe, A. H., Otto, L., van de Camp, F., Stiefelhagen, R., and Unmüßig, G. (2018). “qVRty: virtual keyboard with a haptic, real-world representation,” in HCI international 2018 – posters’ extended abstracts. Editor C. Stephanidis (Cham: Springer International Publishing), 266–272.

Jankowski, J., Samp, K., Irzynska, I., Jozwowicz, M., and Decker, S. (2010). “Integrating text with video and 3D graphics: the effects of text drawing styles on text readability,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (New York, NY, USA: Association for Computing Machinery), 1321–1330. doi:10.1145/1753326.1753524

Kim, K., Erickson, A., Lambert, A., Bruder, G., and Welch, G. (2019). “Effects of dark mode on visual fatigue and acuity in optical see-through head-mounted displays,” in Symposium on Spatial User Interaction (New York, NY, USA: Association for Computing Machinery). doi:10.1145/3357251.3357584

Knierim, P., Kosch, T., and Schmidt, A. (2021). The nomadic office: a location independent workspace through mixed reality. IEEE Pervasive Comput. 20, 71–78. doi:10.1109/mprv.2021.3119378

Kobayashi, D., Kirshenbaum, N., Tabalba, R. S., Theriot, R., and Leigh, J. (2021). “Translating the benefits of wide-band display environments into an XR space,” in Symposium on Spatial User Interaction (New York, NY, USA: Association for Computing Machinery), 1–11.

Kojić, T., Ali, D., Greinacher, R., Möller, S., and Voigt-Antons, J.-N. (2020). “User experience of reading in virtual reality—Finding values for text distance, size and contrast,” in 2020 Twelfth International Conference on Quality of Multimedia Experience (QoMEX) (IEEE), 1–6.

LaViola, J. J., Kruijff, E., McMahan, R. P., Bowman, D. A., and Poupyrev, I. P. (2017). 3D user interfaces: theory and practice. 2nd edn. Boston: Addison-Wesley Professional.

Le, K.-D., Tran, T. Q., Chlasta, K., Krejtz, K., Fjeld, M., and Kunz, A. (2021). “VXSlate: exploring combination of head movements and mobile touch for large virtual display interaction,” in Designing Interactive Systems Conference 2021 (New York, NY, USA: Association for Computing Machinery), 283–297.

Lee, J. H., An, S.-G., Kim, Y., and Bae, S.-H. (2018). “Projective windows: bringing windows in space to the fingertip,” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (New York, NY, USA: Association for Computing Machinery), 1–8.

Li, Z., Annett, M., Hinckley, K., Singh, K., and Wigdor, D. (2019). “HoloDoc: enabling mixed reality workspaces that harness physical and digital content,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (New York, NY, USA: Association for Computing Machinery), 1–14. doi:10.1145/3290605.3300917

Lu, F., Davari, S., and Bowman, D. (2021). “Exploration of techniques for rapid activation of glanceable information in head-worn augmented reality,” in Proceedings of the 2021 ACM Symposium on Spatial User Interaction (New York, NY, USA: Association for Computing Machinery). doi:10.1145/3485279.3485286

Lu, F., Davari, S., Lisle, L., Li, Y., and Bowman, D. A. (2020). “Glanceable AR: evaluating information access methods for head-worn augmented reality,” in 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Atlanta, GA, USA, 22-26 March 2020 (IEEE), 930–939. doi:10.1109/VR46266.2020.00113

Mahmood, T., Butler, E., Davis, N., Huang, J., and Lu, A. (2018). “Building multiple coordinated spaces for effective immersive analytics through distributed cognition,” in 2018 International Symposium on Big Data Visual and Immersive Analytics (BDVA) (IEEE), 1–11. doi:10.1109/BDVA.2018.8533893

Mcgill, M., Kehoe, A., Freeman, E., and Brewster, S. (2020). Expanding the bounds of seated virtual workspaces. ACM Trans. Comput.-Hum. Interact. 27, 1–40. doi:10.1145/3380959

Medeiros, D., McGill, M., Ng, A., McDermid, R., Pantidi, N., Williamson, J., et al. (2022). From shielding to avoidance: passenger augmented reality and the layout of virtual displays for productivity in shared transit. IEEE Trans. Vis. Comput. Graph. 28, 3640–3650. doi:10.1109/TVCG.2022.3203002

Ng, A., Medeiros, D., McGill, M., Williamson, J., and Brewster, S. (2021). “The passenger experience of mixed reality virtual display layouts in airplane environments,” in 2021 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Bari, Italy, 04-08 October 2021 (IEEE), 265–274. doi:10.1109/ISMAR52148.2021.00042

Ofek, E., Grubert, J., Pahud, M., Phillips, M., and Kristensson, P. O. (2020). Towards a practical virtual office for mobile knowledge workers. arXiv preprint arXiv:2009.02947. Available at: https://arxiv.org/abs/2009.02947 (Accessed September 07, 2020).

Orlosky, J., Kiyokawa, K., and Takemura, H. (2013). “Dynamic text management for see-through wearable and heads-up display systems,” in Proceedings of the 2013 International Conference on Intelligent User Interfaces (New York, NY, USA: Association for Computing Machinery), 363–370. doi:10.1145/2449396.2449443

Pavanatto, L. (2021). “Designing augmented reality virtual displays for productivity work,” in 2021 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Bari, Italy, 04-08 October 2021 (IEEE), 459–460. doi:10.1109/ISMAR-Adjunct54149.2021

Pavanatto, L., North, C., Bowman, D. A., Badea, C., and Stoakley, R. (2021). “Do we still need physical monitors? An evaluation of the usability of AR virtual monitors for productivity work,” in 2021 IEEE Virtual Reality and 3D User Interfaces (VR), Lisboa, Portugal, 27 March 2021 - 01 April 2021 (IEEE), 759–767. doi:10.1109/VR50410.2021.00103

Raskar, R., Welch, G., Cutts, M., Lake, A., Stesin, L., and Fuchs, H. (1998). “The office of the future: a unified approach to image-based modeling and spatially immersive displays,” in Proceedings of the 25th Annual Conference on Computer Graphics and Interactive Techniques (New York, NY, USA: Association for Computing Machinery), 179–188. doi:10.1145/280814.280861

Reipschläger, P., Flemisch, T., and Dachselt, R. (2020). Personal augmented reality for information visualization on large interactive displays. IEEE Trans. Vis. Comput. Graph. 27, 1182–1192. doi:10.1109/TVCG.2020.3030460

Ruvimova, A., Kim, J., Fritz, T., Hancock, M., and Shepherd, D. C. (2020). “Transport me away: fostering flow in open offices through virtual reality,” in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (New York, NY, USA: Association for Computing Machinery), 1–14. doi:10.1145/3313831.3376724

Rzayev, R., Ugnivenko, P., Graf, S., Schwind, V., and Henze, N. (2021). “Reading in VR: the effect of text presentation type and location,” in Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (New York, NY, USA: Association for Computing Machinery), 1–10.

Schneider, D., Otte, A., Gesslein, T., Gagel, P., Kuth, B., Damlakhi, M. S., et al. (2019). ReconViguRation: reconfiguring physical keyboards in virtual reality. IEEE Trans. Vis. Comput. Graph. 25, 3190–3201. doi:10.1109/TVCG.2019.2932239

Slater, M., Spanlang, B., and Corominas, D. (2010). Simulating virtual environments within virtual environments as the basis for a psychophysics of presence. ACM Trans. Graph. 29, 1–9. doi:10.1145/1778765.1778829

Waldner, M., Grasset, R., Steinberger, M., and Schmalstieg, D. (2011). “Display-adaptive window management for irregular surfaces,” in Proceedings of the ACM International Conference on Interactive Tabletops and Surfaces - ITS ’11 (Kobe, Japan: ACM Press), 222. doi:10.1145/2076354.2076394

Keywords: virtual monitors, virtual displays, productivity work, virtual reality, user studies, performance, user experience

Citation: Pavanatto L, Davari S, Badea C, Stoakley R and Bowman DA (2023) Virtual monitors vs. physical monitors: an empirical comparison for productivity work. Front. Virtual Real. 4:1215820. doi: 10.3389/frvir.2023.1215820

Received: 02 May 2023; Accepted: 15 September 2023;

Published: 05 December 2023.

Edited by:

Robert W. Lindeman, Human Interface Technology Lab New Zealand (HIT Lab NZ), New Zealand

Reviewed by:

Jason Orlosky, Augusta University, United States
Gun Lee, University of South Australia, Australia

Copyright © 2023 Pavanatto, Davari, Badea, Stoakley and Bowman. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Leonardo Pavanatto, lpavanat@vt.edu