
ORIGINAL RESEARCH article

Front. Virtual Real., 30 August 2023

Sec. Virtual Reality and Human Behaviour

Volume 4 - 2023 | https://doi.org/10.3389/frvir.2023.1169654

This article is part of the Research Topic Beyond Touch: Free Hand Interaction in Virtual Environments.

Locomotion is a fundamental task for exploring and interacting in virtual environments (VEs), and numerous locomotion techniques have been developed to improve the perceived realism and efficiency of movement in VEs. Gesture-based locomotion techniques have emerged as a more natural and intuitive mode of interaction than controller-based methods of travel in VEs. In this paper, we investigate the intuitiveness, comfort, ease of use, performance, presence, simulation sickness, and user preference of three user-elicited body-based gestures: the Calling gesture, the Deictic Pointing gesture, and the Mirror Leaning gesture. Each gesture is intended for one of three seated multitasking scenarios that combine virtual travel with different levels of hand engagement in selection. In the first study, participants compared the Calling gesture with the Tapping and Teleportation gestures in Scenario 1, which involved virtual travel only. The Calling gesture was rated the most intuitive and yielded higher presence, whereas Teleportation was the preferred travel technique. In the second study, participants compared the Deictic Pointing gesture with the Tapping and Teleportation gestures in Scenario 2, which involved virtual travel with one hand engaged in selection. The Deictic Pointing gesture was found to be more intuitive than the other gestures and also scored better in performance, comfort, ease of use, and presence. The third study, with a new group of participants, compared the Mirror Leaning gesture with the Tapping and Teleportation gestures in Scenario 3, which involved virtual travel with both hands engaged in selection. The Mirror Leaning gesture was found to be the most intuitive, with higher presence and performance than the other gestures. In every scenario, the gestures were compared across three complementary search tasks: traveling along a straight-line path, moving along a directed path, and moving along an undirected path.
We believe that the qualitative and quantitative measures obtained from our studies will help researchers and interaction designers create efficient and effective gesture-based locomotion techniques for seated travel in multitasking VEs.

Virtual locomotion is an important interaction component in many applications, allowing users to move through and experience the VE while performing other tasks such as selection, manipulation, object searching, and virtual exploration (Griffin et al., 2018). Designing virtual locomotion to be natural and intuitive is crucial for a successful and enjoyable VR experience: one with high immersion, presence, comfort, accessibility, and engagement that does not require the user to attend to the act of traveling itself.

Real walking is the most natural and presence-inducing method (Usoh et al., 1999); however, it requires a large physical space to experience a large VE. Consequently, many locomotion techniques have been designed that need less physical space while still enabling users to explore large VEs. One classic solution is the artificial locomotion technique (ALT), or controller-based locomotion technique, typically activated with a handheld controller. Although ALTs reduce physical strain by letting users sit while traveling in virtual space, they provide no vestibular or proprioceptive feedback. The resulting mismatch between visual and bodily cues confuses the senses and can cause VR sickness (Bhandari et al., 2018). ALTs are also unnatural, and users must hold the controllers throughout the virtual experience. Another alternative is proxy gestures, which require the user to perform gestures with either the upper or the lower body that serve as a proxy for actual steps. Gesture-based locomotion techniques have become more robust and user-friendly than controller-based techniques (Ferracani et al., 2016; Caggianese et al., 2020). However, they are challenging to design and optimize: they may fatigue the user if the gesture must be performed continuously to move through the VE, and the chosen gesture must suit all users.

Previous research has demonstrated that proxy gestures are a promising approach for enabling locomotion in VEs. However, many studies of locomotion techniques have relied exclusively on subjective user preferences and have focused narrowly on movement tasks alone. Further investigation is required to determine which locomotion methods suit multitasking scenarios that combine movement with selection and manipulation tasks. We have also chosen to explore seated locomotion techniques, as experiencing VR in a seated position is not only comfortable but also causes less physical strain (Chester et al., 2002) and is less likely to induce motion sickness (Merhi et al., 2007). Furthermore, studying gestures for seated use is essential because such gestures can be tailored to different user groups, such as elderly and physically disabled users, to let them explore the VE.

This empirical study evaluates three gesture-based locomotion techniques. These techniques were developed from the results of the gesture elicitation study performed by Ganapathi and Sorathia (2019), and the unique gestures obtained in that study were further classified by geometric features such as hand usage and gesture form (Ganapathi and Sorathia, 2022). Specifically, we investigate the gestures in three scenarios (Scenarios 1, 2, and 3), in which users must simultaneously engage in (i) virtual travel alone, (ii) virtual travel combined with the selection and manipulation of one virtual object, and (iii) virtual travel combined with the selection and manipulation of two virtual objects. The newly designed gestures are compared with tapping- and teleportation-based techniques. The primary objective is to evaluate the efficiency, effectiveness, user preference, simulation sickness, and presence of the new gesture-based (controller-less) techniques in a multitasking VE while seated.

Virtual locomotion is a challenging task in which natural and unconstrained walking through the VE is desirable. Proxy-gesture locomotion techniques require the user to perform gestures that substitute for actual steps. One such technique is Walking in Place (WIP), in which the user makes step-like motions while remaining stationary (Templeman et al., 1999). A user study by Riecke et al. (2010) found that WIP closely matches real walking in terms of performance measures. Furthermore, WIP has been shown to improve spatial orientation and is less likely to induce cybersickness because it generates proprioceptive feedback (Tregillus et al., 2017). Tapping in Place, a variation of WIP, has been found to better match real walking in terms of perceived effort and the generation of relevant kinesthetic information. Nilsson et al. (2013) found that the hip movement gesture generated the necessary proprioceptive feedback and reduced unintended positional drift (UPD) compared with the arm swinging (AS) gesture. The AS gesture also produced proprioceptive feedback but limited the user's ability to use their arms for interaction in the VE. McCullough et al. (2015) used an inexpensive wearable device, the Myo armband, to implement the AS gesture and found that it outperformed joystick-based navigation in spatial orientation tasks.

Another notable upper-body gesture for virtual travel is the leaning gesture: to move in a specific direction, users simply lean towards it, and the leaning angle controls their translation speed. Numerous leaning interfaces have been investigated, such as those of Tregillus et al. (2017) and Kitson et al. (2017). Harris et al. (2014) developed the Wii Lean method, in which the user physically leans on a Nintendo Wii Fit Balance Board; this interface generates vestibular feedback and offers a higher level of presence than controller-based navigation. Kruijff et al. (2015) found that dynamic leaning enhances forward linear vection and improves user involvement, enjoyment, and engagement compared with joystick control.

Cardoso (2017) proposed LMTravel, a VR locomotion technique that uses hand gestures detected by the Leap Motion device. Zhang et al. (2017) proposed a locomotion method controlled by two-handed gestures: the left palm controlled forward and backward movement as well as velocity, while the right thumb controlled the direction of movement. The results indicated that the technique was intuitive, easy to learn, and easy to use, and that it improved immersion and reduced motion sickness compared with joystick-based techniques.

The locomotion techniques discussed above focus primarily on moving through a VE. However, in many applications, such as supermarket scenarios, video games, and training simulations, locomotion is accompanied by another task such as object search, grabbing, or manipulation. Pai and Kunze (2017) compared two locomotion techniques, AS and WIP, in a task that involved moving through a maze while carrying a virtual object, and found that AS was more low-profile and required less energy than WIP. Ferracani et al. (2016) compared four locomotion gestures (AS, WIP, tap, and push) for perceived naturalness and effectiveness in a task that required users to travel a predefined path, avoid obstacles, and relocate virtual objects. Tomberlin et al. (2017) proposed a locomotion technique that used a fist gesture of the non-dominant hand to translate and rotate the viewport in a VR horror game; however, no empirical study was conducted to analyze its effectiveness.

Locomotion techniques in VEs require a tracking system capable of detecting positional movement with six degrees of freedom (6DOF). Recent advances in VR technology have produced self-contained systems such as the Oculus Quest, which uses inside-out tracking. The goal of our study was to design locomotion gestures suitable for multitasking VEs that let VR home users travel without purchasing additional embodied interfaces; our proposed gestures require no hardware beyond the headset.

We used five techniques in our studies. The calling, deictic pointing, and mirror leaning gestures were designed specifically for Scenarios 1, 2, and 3, respectively, and were compared with tapping and teleportation gestures from the existing literature. An advantage of all five techniques is their independence from controllers or additional hardware. However, because the sensors that track hand gestures (Oculus hand tracking) are mounted on the HMD, the interaction area is constrained by the movement of the user's head. Ferracani et al. (2016) found the tapping gesture to be the most preferred and best-performing gesture compared with the WIP, push, and swing gestures. Teleportation, on the other hand, has been found to be intuitive and user-friendly and to cause fewer collisions, and it is used in many VR games (Bozgeyikli et al., 2016). We therefore used both techniques as baselines for comparison with the newly designed gestures in our three user studies.

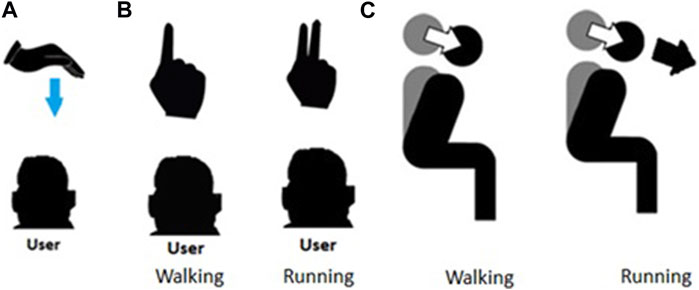

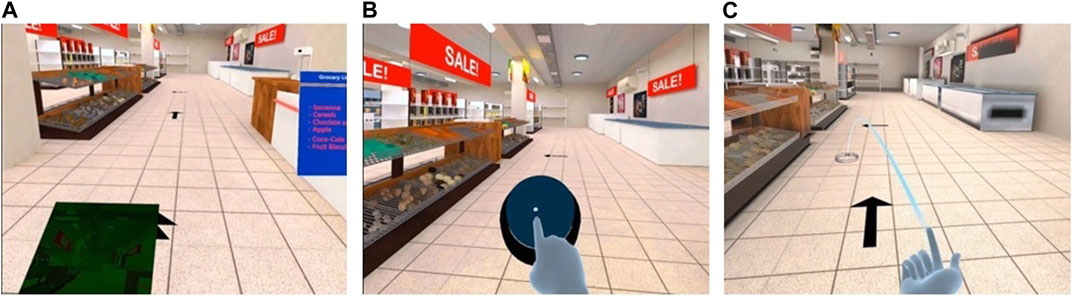

In the calling gesture, users hold an open hand vertically in front of the HMD and move it towards the HMD. The gesture works by “calling” the viewport, or any point in space, and dragging it towards the user; this reverse movement translates the user forward in the desired direction. The gesture is activated when the user positions a hand in front of the HMD, as shown in Figure 1A, at which point the initial hand position is recorded. As visual feedback, a grey laser beam indicates the point to which the user will be moved when the gesture is completed. When the user drags the hand toward the HMD, the viewpoint is translated forward to the point indicated by the beam. The gesture's flow is discrete: the user moves only after the gesture is completed, and the movement proceeds at a constant speed. This unimanual technique gives the user complete control over movement in virtual space while leaving the second hand free for other tasks, although both hands can also be used to execute the gesture.

FIGURE 1. (A) Calling gesture, front view. (B) Deictic pointing gesture, top view. (C) Leaning gesture.
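The discrete flow of the calling gesture (record the start pose, preview a target, move at constant speed only after the pull completes) can be sketched as a small state machine. This is a minimal Python sketch, not the authors' Unity/C# implementation; the class `CallingGesture`, the pull-completion ratio, the preview distance, the travel speed, and the choice to place the target along the floor-projected head-to-hand direction are all illustrative assumptions, since the article does not report these details.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) with y up, as in Unity

# Illustrative values only; the article does not report these parameters.
PULL_COMPLETE_RATIO = 0.5  # pull counts as done once the hand is at half its start distance
TRAVEL_DISTANCE_M = 3.0    # hypothetical length of the grey preview beam
TRAVEL_SPEED_M_S = 1.2     # hypothetical constant translation speed


@dataclass
class CallingGesture:
    start_dist: Optional[float] = None
    target: Optional[Vec3] = None
    moving: bool = False

    def begin(self, head: Vec3, hand: Vec3) -> Vec3:
        """Hand raised in front of the HMD: record its start pose and preview the target."""
        self.start_dist = math.dist(head, hand)
        # Travel along the head-to-hand direction projected onto the floor plane.
        dx, dz = hand[0] - head[0], hand[2] - head[2]
        length = math.hypot(dx, dz) or 1.0
        self.target = (head[0] + TRAVEL_DISTANCE_M * dx / length,
                       head[1],
                       head[2] + TRAVEL_DISTANCE_M * dz / length)
        return self.target

    def update_hand(self, head: Vec3, hand: Vec3) -> None:
        """Hand dragged toward the HMD: trigger movement once the pull is complete."""
        if self.start_dist is not None and not self.moving:
            if math.dist(head, hand) <= PULL_COMPLETE_RATIO * self.start_dist:
                self.moving = True

    def step(self, viewpoint: Vec3, dt: float) -> Vec3:
        """Advance the viewpoint toward the target at constant speed (discrete flow)."""
        if not self.moving or self.target is None:
            return viewpoint
        remaining = math.dist(viewpoint, self.target)
        advance = TRAVEL_SPEED_M_S * dt
        if advance >= remaining:
            self.moving, self.start_dist = False, None
            return self.target
        t = advance / remaining
        return tuple(v + (g - v) * t for v, g in zip(viewpoint, self.target))
```

In an engine loop, `begin` would run when the hand is first detected in front of the HMD, `update_hand` on every tracking frame, and `step` once per rendered frame; nothing moves until the pull completes, which reproduces the discrete flow described above.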

The pointing gesture, which is common in everyday life, is proposed as the locomotion gesture for Scenario 2. To execute it, the user extends the index finger within the HMD's field of view. The translation direction is given by the vector extending from the user's head through the crosshair at the index fingertip into the VE. Movement begins as soon as the index finger is placed in front of the HMD, at a walking velocity of 1.2 m/s, as described by Chou et al. (2009). By extending both the index and middle fingers within the HMD's field of view, the user can increase the velocity to a running speed of 3.2 m/s, as illustrated in Figure 1B. While traveling, the user can switch between walking and running velocities. If the hand leaves the sensor's field of view during travel, the movement stops.
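The direction and speed rules of the pointing gesture map directly onto a per-frame velocity function. The sketch below is hypothetical Python, not the authors' code: the finger-extension flags stand in for whatever the hand-tracking SDK reports, and dropping the vertical component of the head-to-fingertip ray is our assumption for a floor-bound supermarket. Only the 1.2 m/s and 3.2 m/s speeds come from the text.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) with y up

WALK_SPEED_M_S = 1.2  # walking speed given in the text (after Chou et al., 2009)
RUN_SPEED_M_S = 3.2   # running speed given in the text


def pointing_velocity(head: Vec3, fingertip: Optional[Vec3],
                      index_extended: bool, middle_extended: bool) -> Vec3:
    """Per-frame velocity for the deictic pointing gesture.

    `fingertip` is None when the hand is outside the sensor's field of view,
    in which case movement stops.
    """
    if fingertip is None or not index_extended:
        return (0.0, 0.0, 0.0)
    # Direction of the head-to-fingertip ray; the vertical component is dropped
    # (an assumption) so that the user stays on the supermarket floor.
    dx, dz = fingertip[0] - head[0], fingertip[2] - head[2]
    length = math.hypot(dx, dz)
    if length == 0.0:
        return (0.0, 0.0, 0.0)
    speed = RUN_SPEED_M_S if middle_extended else WALK_SPEED_M_S
    return (speed * dx / length, 0.0, speed * dz / length)
```

Each frame, the viewpoint would be advanced by the returned velocity times the frame time; because the function is stateless, switching between walking and running mid-travel needs no extra logic.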

The mirror leaning gesture is designed specifically for Scenario 3. It uses head movement to control virtual movement velocity, similar to the head joystick described by Hashemian et al. (2020); for this purpose, the user's head (HMD) is tracked. Before the interaction begins, each user sits in a swivel chair, and the resting position of the head is calibrated with the back straight and the head facing forward. To prevent unintended movement, a minimum lean distance of 5 cm, as established by Hashemian et al. (2020), is applied: below this threshold the viewpoint is not moved, which avoids unwanted forward translation when the user leans to perform secondary tasks or grab nearby items. To ensure user safety and comfort, a maximum lean distance of 35 cm is set, beyond which the velocity does not increase. The technique offers two levels of velocity control. Users receive visual feedback on their velocity through arrows on the ground: a single arrow indicates walking speed and two arrows indicate running speed. Based on this feedback, users can adjust their lean to control their velocity. Movement stops when the user returns to the zero point, i.e., the resting position.

One of the main visual design additions to the gesture is a mirror view. While leaning, the user sees more of the ground surface and only part of the upper VE. However, the upper view is also crucial when searching for targets in VEs such as supermarkets. To avoid interrupting movement or reducing the sense of immersion, a mirror window displaying the upper portions of the VE is presented while the user travels. Together, the arrows and the mirror window give users velocity cues and an upper view of the VE while in motion. The mirror leaning gesture is shown in Figure 1C.
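The lean-to-velocity mapping, with its 5 cm dead zone and two discrete speed levels, can be sketched as follows. Only the 5 cm threshold and the two-level, arrow-based feedback come from the text; the 20 cm walk/run boundary and the reuse of the pointing gesture's 1.2 m/s and 3.2 m/s speeds are hypothetical values chosen for illustration, and the mirror window is not modeled.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) with y up

DEAD_ZONE_M = 0.05      # minimum lean from the text (after Hashemian et al., 2020)
RUN_THRESHOLD_M = 0.20  # hypothetical switch point between the two speed levels
WALK_SPEED_M_S = 1.2    # assumption: same speeds as the pointing gesture
RUN_SPEED_M_S = 3.2


def lean_velocity(rest: Vec3, head: Vec3) -> Tuple[Vec3, int]:
    """Return (velocity, arrows shown on the ground) for the current head position.

    `rest` is the calibrated resting head position. Because speed has only two
    discrete levels, leaning past the 35 cm maximum never increases it further.
    """
    dx, dz = head[0] - rest[0], head[2] - rest[2]
    lean = math.hypot(dx, dz)  # horizontal head displacement from the rest pose
    if lean < DEAD_ZONE_M:
        return (0.0, 0.0, 0.0), 0  # small leans, e.g. reaching for items, do not move
    if lean >= RUN_THRESHOLD_M:
        speed, arrows = RUN_SPEED_M_S, 2
    else:
        speed, arrows = WALK_SPEED_M_S, 1
    return (speed * dx / lean, 0.0, speed * dz / lean), arrows
```

Returning the arrow count alongside the velocity keeps the visual feedback consistent with the speed level actually applied, and returning to the rest pose falls inside the dead zone, which stops movement.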

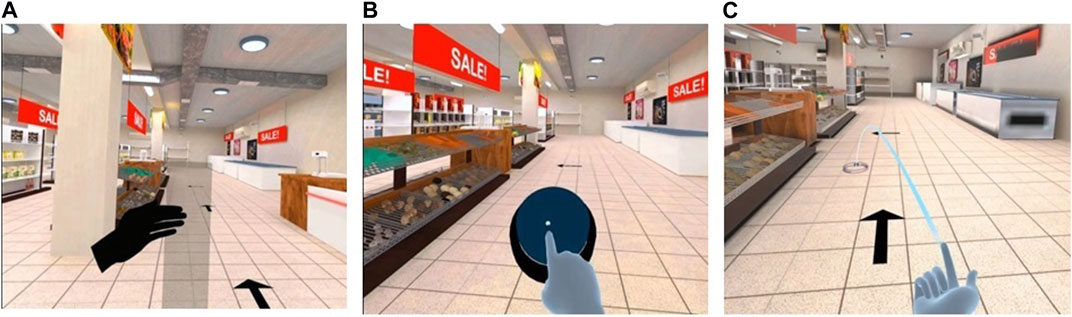

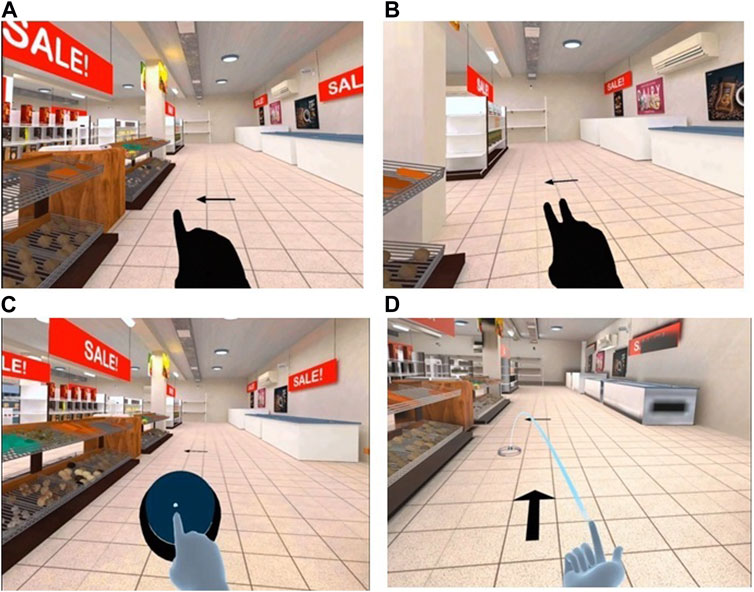

The tapping gesture uses finger-tapping motions for moving in a VE, similar to the tapping gesture described by Ferracani et al. (2016). Users tap their fingers up and down on a hovering button, which gives visual feedback when pressed. Each tap moves the user a constant distance in the VE, so the user can move faster by tapping more frequently. The technique also allows users to rotate their head or body and move in the direction they are facing. The gesture is shown in Figure 2A.
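A tap-driven step can be sketched by counting only the moment the hovering button becomes pressed, so that tap frequency, not press duration, sets the travel speed. The 0.5 m step length and the `TapLocomotion` class are hypothetical; the article states only that each tap moves the user a constant distance in the facing direction.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec2 = Tuple[float, float]  # floor-plane position (x, z)

STEP_DISTANCE_M = 0.5  # hypothetical constant distance per tap; not given in the text


@dataclass
class TapLocomotion:
    was_pressed: bool = False

    def update(self, position: Vec2, yaw_rad: float, pressed: bool) -> Vec2:
        """Advance one step along the facing direction on each new button press.

        Only the press edge (released -> pressed) counts, so holding the button
        does not move the user; tapping faster covers more distance per second.
        yaw_rad = 0 faces +z, as with Unity's forward axis.
        """
        if pressed and not self.was_pressed:
            position = (position[0] + STEP_DISTANCE_M * math.sin(yaw_rad),
                        position[1] + STEP_DISTANCE_M * math.cos(yaw_rad))
        self.was_pressed = pressed
        return position
```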

This technique uses the “point and teleport” locomotion method introduced by Bozgeyikli et al. (2016), adapted here to work without controllers. To perform the gesture, the user places a hand within the HMD's field of view with the palm facing upward and only the index finger and thumb extended. The user then points to a location on the VE ground, where a blue ring placeholder indicates the target location, as depicted in Figure 2B. Closing the index finger teleports the virtual viewpoint to the specified location. The user's orientation is maintained during teleportation, and users can adjust it by physically rotating their body in the real world.
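Placing the blue ring amounts to intersecting the pointing ray with the floor plane, and the teleport itself is a position change that keeps the user's orientation. In this hedged sketch, how the ray origin and direction are derived from the tracked hand is left open (the article does not specify it), the floor is assumed to be the plane y = 0, and eye height is preserved across the jump.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) with y up


def ground_target(origin: Vec3, direction: Vec3, floor_y: float = 0.0) -> Optional[Vec3]:
    """Where the pointing ray meets the floor plane y = floor_y (the blue ring), or None."""
    if direction[1] >= 0.0:
        return None  # level or upward rays never reach the floor
    t = (floor_y - origin[1]) / direction[1]
    if t < 0.0:
        return None  # the floor lies behind the ray origin
    return (origin[0] + t * direction[0], floor_y, origin[2] + t * direction[2])


def teleport(viewpoint: Vec3, yaw_rad: float, ring: Optional[Vec3],
             index_closed: bool) -> Tuple[Vec3, float]:
    """Jump to the ring when the index finger closes; eye height and yaw are kept."""
    if index_closed and ring is not None:
        return (ring[0], viewpoint[1], ring[2]), yaw_rad
    return viewpoint, yaw_rad
```

Returning `None` when no floor point exists lets the caller hide the ring instead of teleporting to an undefined location.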

We conducted three separate laboratory experiments, one for each scenario, at different times and with different participants to investigate the newly designed gestures. Our evaluation encompassed both quantitative and qualitative measures.

The user study ran on a VR-ready personal computer (PC) with 64-bit Windows 10, an Intel Core i5-10300H processor, 8 GB of RAM, and an NVIDIA GeForce GTX 1650 graphics card. An Oculus Quest 2 headset, whose 6DOF tracking captures both head and hand movements and translates them into VR, was used to enable hand gestures. The headset has a fast-switch LCD display with a resolution of 1832 × 1920 per eye and a refresh rate of 90 Hz. Although the headset came with two Touch controllers, they were not used in the experiment, as locomotion and selection were performed entirely through freehand gestures. The VR supermarket and locomotion techniques were developed in the Unity game engine, and the techniques were coded in C# using the Oculus Hand Tracking Support SDKs.

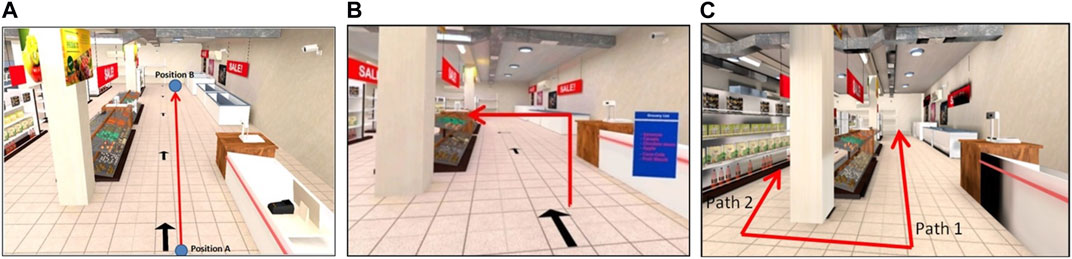

The tasks were designed to assess three key kinds of movement that an effective locomotion technique should support, shown in Figure 3: a) movement along a straight-line path, b) movement along a directed path to reach a target, and c) movement along an undirected path to reach a target. Task execution in each scenario was evaluated with metrics that were either objective (measured automatically by the VR application) or subjective (based on user responses). Objective measures included completion time and the number of collisions, while subjective measures comprised intuitiveness, comfort, ease of use, simulation sickness, presence, perceived workload, and user preference. Figure 4 shows the positions of the six objects that users had to collect from the shelves.

FIGURE 3. Tasks in the Supermarket (A) Straight line path (B) Directed path (C) Undirected path. Created with Unity® 2019.4.28f1(64-bit). Unity is a trademark or registered trademark of Unity Technologies.

FIGURE 4. Top view of the supermarket along with the position of six objects. Created with Unity® 2019.4.28f1(64-bit).

Straight-line movement (Task #1) is common in VR applications, where users may need to move along a straight path to explore the VE or reach specific targets, as discussed by Nabiyouni et al. (2015). The system records the time the user takes to travel from position A to position B along a simple straight line.

In the directed-path task (Task #2), participants move through the supermarket in straight segments with multiple directional changes (turns). They must follow a predefined path marked by black arrows on the ground, as shown in Figure 3B, staying in the center of the path and avoiding collisions with the shelves. This task resembles those in the user studies of Nabiyouni et al. (2015) and Paris et al. (2017), which used multiple path segments with turns to navigate a VE. Users select glowing target items, placed at different heights, with the grab gesture. The total completion time and the number of collisions with the shelves are recorded.

In the undirected-path task (Task #3), participants navigate paths with varying directional changes without any predefined path guidance, as depicted in Figure 3C. The task entails both movement and object interaction and was inspired by the search tasks of Ruddle and Lessels (2006) and Coomer et al. (2018). Participants navigate the supermarket on their own to select the target items on the list. The total completion time and the number of collisions with the shelves are recorded.
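The objective measures recorded for these tasks (completion time and shelf collisions) could be logged per trial along the following lines. The `TrialLog` class is hypothetical, and counting a collision only when contact with a shelf begins, so that a long scrape counts once, is an assumed rule the article does not state.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class TrialLog:
    """Objective measures for one trial: completion time and shelf collisions."""
    start_s: Optional[float] = None
    end_s: Optional[float] = None
    collisions: int = 0
    _touching: Set[str] = field(default_factory=set)

    def start(self, t_s: float) -> None:
        self.start_s = t_s

    def finish(self, t_s: float) -> None:
        self.end_s = t_s

    def contact(self, shelf_id: str, touching: bool) -> None:
        """Count a collision only when contact with a shelf begins (assumed rule)."""
        if touching and shelf_id not in self._touching:
            self.collisions += 1
            self._touching.add(shelf_id)
        elif not touching:
            self._touching.discard(shelf_id)

    @property
    def completion_time_s(self) -> Optional[float]:
        if self.start_s is None or self.end_s is None:
            return None
        return self.end_s - self.start_s
```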

Thirty students (13 female) participated in Study 1. Participants were aged 21–32 years (M = 25.33, SD = 3.83). Only eight participants (26.7%) had no prior experience with HMDs, and none had any motor disabilities or prior exposure to any of the locomotion techniques used in the study, including the gesture-based teleportation method.

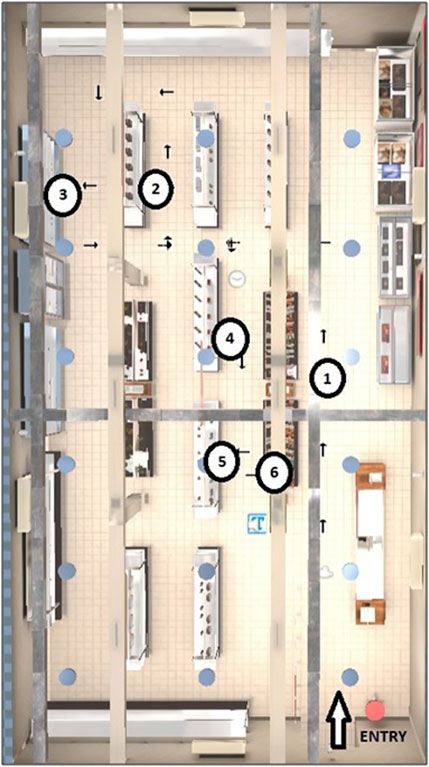

A within-subject design was employed: each participant completed three training sessions, one per locomotion technique. After training, participants completed nine main trials in a seated position, consisting of a factorial combination of three locomotion techniques (Calling Gesture, Tapping Gesture, and Teleportation) and three tasks (Task #1, Task #2, and Task #3). The order of locomotion techniques was counterbalanced across participants, whereas the three tasks were always completed in the same order. In Scenario 1, participants traveled with both hands free. Figure 5 shows the three gestures used in Scenario 1: the Calling Gesture (A), Tapping Gesture (B), and Teleportation Gesture (C).

FIGURE 5. Gesture Comparison in Scenario 1 (A) Calling gesture (B) Tapping gesture (C) Teleportation Gesture. Created with Unity® 2019.4.28f1(64-bit).
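The article states that technique order was counterbalanced but not how. One common option, shown here as an assumption, is full counterbalancing over all 3! = 6 orders, which divides evenly among 30 participants; nesting the three tasks, in their fixed order, inside each technique block is also an assumption.

```python
from itertools import permutations
from typing import List, Tuple

TECHNIQUES = ("Calling", "Tapping", "Teleportation")
TASKS = ("Task #1", "Task #2", "Task #3")  # fixed order, as in the text


def trial_schedule(participant: int) -> List[Tuple[str, str]]:
    """Nine (technique, task) trials for a 0-based participant index.

    Cycles through all 3! = 6 technique orders (full counterbalancing), so
    30 participants use each order exactly five times.
    """
    orders = list(permutations(TECHNIQUES))
    order = orders[participant % len(orders)]
    return [(technique, task) for technique in order for task in TASKS]
```

With 30 participants, each technique appears first, second, and third equally often, which is what counterbalancing aims to guarantee.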

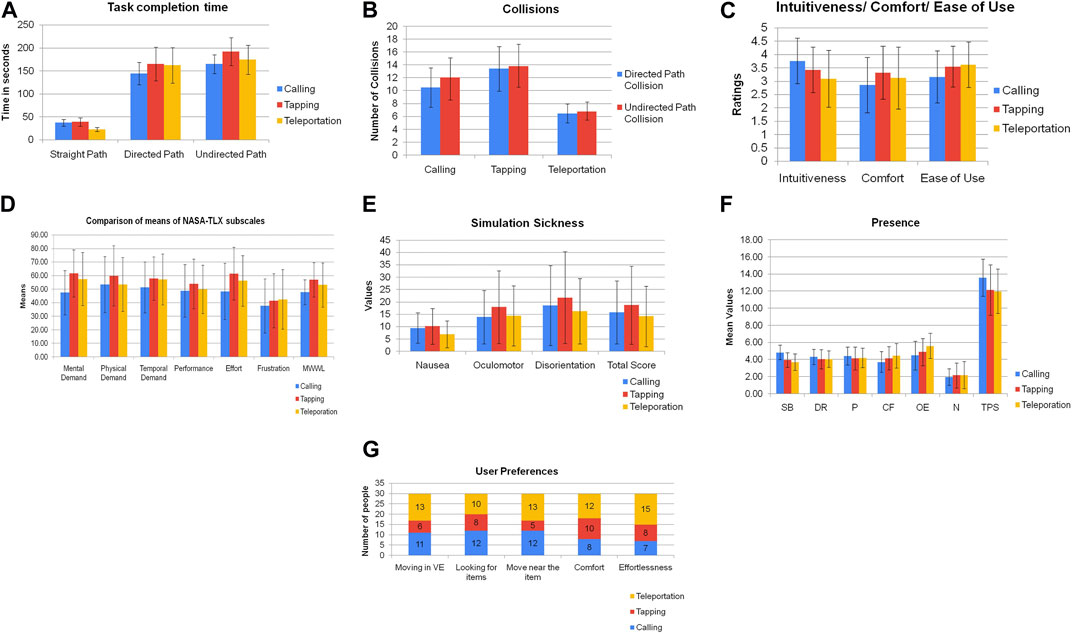

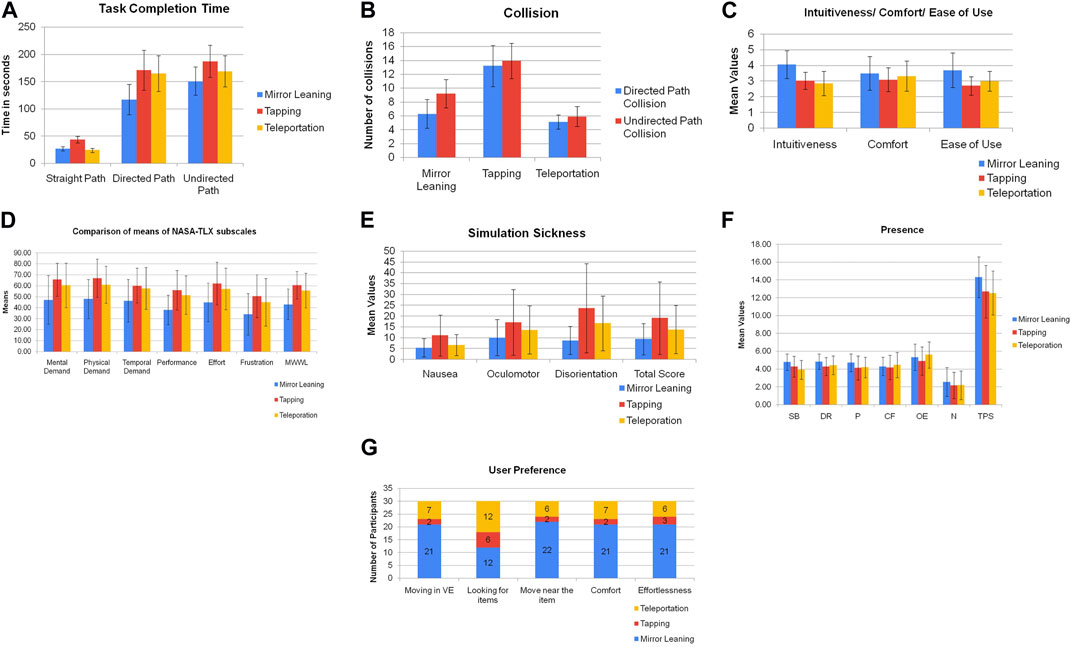

The data were analyzed in SPSS using one-way ANOVAs, with the significance level set at α = 0.05. Levene's test, which checks the homogeneity of variances, was used to test the ANOVA assumptions. Pairwise comparisons used Tukey's post hoc test. Figure 6 summarizes the descriptive statistics for Study 1.

FIGURE 6. Summary representation of measures in Scenario 1 (A) Total Completion Time (B) Collisions (C) Intuitiveness, Comfort, and Ease of Use (D) Perceived Workload (E) SSQ (F) Presence (G) User Preference.
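For reference, the F statistic and the R² effect size reported below can be computed from first principles; in a one-way ANOVA, R² equals eta squared, SS_between / SS_total. This plain-Python sketch is not the SPSS procedure the authors used, and the toy data in the check are made up. The p-value would come from the upper tail of the F distribution, for example `scipy.stats.f.sf(f_stat, df_between, df_within)`; SciPy also provides `scipy.stats.f_oneway`, `scipy.stats.levene`, and `scipy.stats.tukey_hsd` for the omnibus test, the variance check, and the post hoc comparisons.

```python
from typing import Sequence, Tuple


def one_way_anova(*groups: Sequence[float]) -> Tuple[float, int, int, float]:
    """Return (F, df_between, df_within, R squared) for independent groups.

    For a one-way ANOVA, R squared equals eta squared: SS_between / SS_total.
    """
    values = [x for g in groups for x in g]
    grand_mean = sum(values) / len(values)
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    r_squared = ss_between / (ss_between + ss_within)
    return f_stat, df_between, df_within, r_squared
```

For three toy groups [1, 2, 3], [4, 5, 6], and [7, 8, 9], this gives F(2, 6) = 27 and R² = 0.9.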

Task performance is evaluated based on two primary metrics: the total time (speed) taken by the user to complete the task and their accuracy (collisions) during task completion.

For Task #1, completion times were normally distributed (M = 32.96, SD = 10.31). The ANOVA revealed that completion time on the straight-line path differed significantly across the three locomotion techniques (F(2, 90) = 45.62, p < 0.01), with an effect size of R² = 0.512. Post hoc analyses showed that teleportation was significantly faster than both calling and tapping (p < 0.01).

For Task #2, performance was measured by both completion time and the number of collisions. Completion times were normally distributed (M = 157.36, SD = 34.56) and differed significantly across the three techniques (F(2, 90) = 3.45, p = 0.036, R² = 0.12); post hoc analyses showed that calling was significantly faster than tapping (p = 0.046). Collision counts were also normally distributed (M = 10.26, SD = 3.85) and differed significantly across techniques (F(2, 90) = 42.78, p < 0.01, R² = 0.496); post hoc analyses showed that teleportation produced significantly fewer collisions than calling and tapping (p < 0.01).

For Task #3, performance was likewise evaluated by completion time and the number of collisions. Completion times were normally distributed (M = 157.36, SD = 34.56) and differed significantly among the techniques (F(2, 90) = 7.169, p = 0.001, R² = 0.141). Post hoc analysis revealed that calling had a significantly lower completion time than tapping (p = 0.001) and teleportation (p = 0.047). Collision counts were normally distributed (M = 10.92, SD = 4.14) and also differed significantly among the techniques (F(2, 90) = 47.45, p < 0.01, R² = 0.522); teleportation produced significantly fewer collisions than the calling and tapping gestures (p < 0.01).

The analysis of intuitiveness ratings yielded a statistically significant result (F(2, 90) = 3.18, p = 0.046), with an effect size of R² = 0.68. Post hoc analysis indicated that calling was significantly more intuitive than teleportation (p = 0.036).

The comfort ratings revealed no statistically significant difference among the locomotion techniques (F(2, 90) = 1.436, p = 0.243).

The ease-of-use ratings showed no statistically significant difference among the locomotion techniques (F(2, 90) = 2.5, p = 0.08, R² = 0.14).

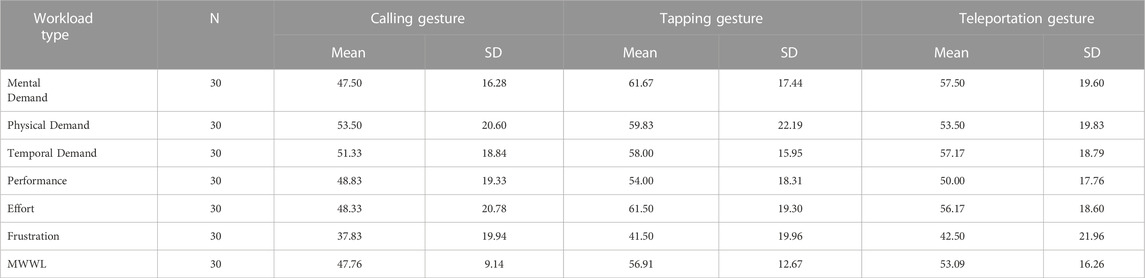

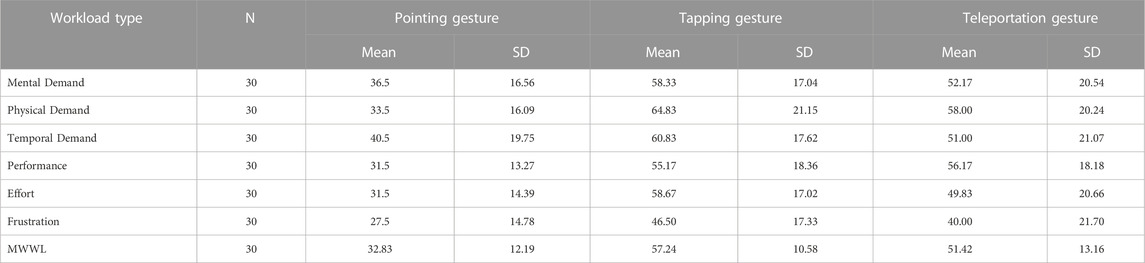

Table 1 reports the means and standard deviations of the NASA-TLX scores for Mental Demand, Physical Demand, Temporal Demand, Effort, Performance, and Frustration in the Calling, Tapping, and Teleport conditions. Mental Demand differed significantly among the techniques (F(2, 90) = 5.005, p = 0.009), with calling significantly less mentally demanding than tapping (p = 0.008). No significant differences were found in Physical Demand (F(2, 90) = 0.9, p = 0.4), Temporal Demand (F(2, 90) = 1.23, p = 0.29), Performance (F(2, 90) = 0.64, p = 0.52), or Frustration (F(2, 90) = 0.425, p = 0.65). Effort scores differed significantly (F(2, 90) = 3.4, p = 0.03), with calling requiring significantly less effort than tapping (p = 0.029). The omnibus test for the Mean Weighted Workload (MWWL) did not reach significance at α = 0.05 (F(2, 90) = 2.911, p = 0.06), although the pairwise comparison showed that calling had a significantly lower MWWL than tapping (p = 0.048).

TABLE 1. Descriptive statistics of NASA-TLX dimension scores (0 = low to 100 = high) for Scenario 1.
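The article does not spell out how the Mean Weighted Workload was computed. Assuming the standard NASA-TLX procedure, each participant's six 0 to 100 ratings are weighted by how often each dimension was chosen in 15 pairwise comparisons, the weighted sum is divided by 15, and the condition mean is taken across participants. A minimal sketch with hypothetical function names:

```python
from typing import Dict, List, Tuple

DIMENSIONS = ("Mental Demand", "Physical Demand", "Temporal Demand",
              "Performance", "Effort", "Frustration")


def weighted_workload(ratings: Dict[str, float], weights: Dict[str, int]) -> float:
    """Standard NASA-TLX weighted score for one participant.

    ratings: 0-100 per dimension; weights: how often each dimension was chosen
    in the 15 pairwise comparisons (so the weights sum to 15).
    """
    if sum(weights[d] for d in DIMENSIONS) != 15:
        raise ValueError("weights must come from the 15 pairwise comparisons")
    return sum(ratings[d] * weights[d] for d in DIMENSIONS) / 15


def mean_weighted_workload(responses: List[Tuple[Dict[str, float], Dict[str, int]]]) -> float:
    """Average the weighted workload over participants for one condition."""
    return sum(weighted_workload(r, w) for r, w in responses) / len(responses)
```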

There was no statistically significant difference in simulation sickness among the three locomotion techniques, as indicated by the SSQ total score (F(2, 90) = 0.83, p = 0.43, R² = 0.21). Likewise, no significant differences were found among the three techniques in the nausea (F(2, 90) = 0.94, p = 0.39), oculomotor (F(2, 90) = 0.79, p = 0.45), or disorientation (F(2, 90) = 0.45, p = 0.63) subscales.
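Likewise, the SSQ total and subscale scores are not defined in the text. Assuming the standard SSQ scoring, each of the 16 symptoms is rated 0 to 3, the raw subscale sums are weighted by 9.54 (nausea), 7.58 (oculomotor), and 13.92 (disorientation), and the total is the sum of the three raw sums multiplied by 3.74. Because some symptoms load on two subscales, the total is not the sum of the weighted subscales.

```python
from typing import Dict

# Standard SSQ item -> (nausea, oculomotor, disorientation) membership.
SSQ_ITEMS = {
    "general discomfort": (1, 1, 0),
    "fatigue": (0, 1, 0),
    "headache": (0, 1, 0),
    "eyestrain": (0, 1, 0),
    "difficulty focusing": (0, 1, 1),
    "increased salivation": (1, 0, 0),
    "sweating": (1, 0, 0),
    "nausea": (1, 0, 1),
    "difficulty concentrating": (1, 1, 0),
    "fullness of head": (0, 0, 1),
    "blurred vision": (0, 1, 1),
    "dizzy (eyes open)": (0, 0, 1),
    "dizzy (eyes closed)": (0, 0, 1),
    "vertigo": (0, 0, 1),
    "stomach awareness": (1, 0, 0),
    "burping": (1, 0, 0),
}
WEIGHTS = {"nausea": 9.54, "oculomotor": 7.58, "disorientation": 13.92}
TOTAL_WEIGHT = 3.74


def ssq_scores(ratings: Dict[str, int]) -> Dict[str, float]:
    """Weighted SSQ subscale and total scores from 0-3 symptom ratings."""
    raw = [0, 0, 0]
    for item, membership in SSQ_ITEMS.items():
        severity = ratings[item]
        if not 0 <= severity <= 3:
            raise ValueError(f"{item}: rating must be between 0 and 3")
        for i, member in enumerate(membership):
            raw[i] += severity * member
    scores = {name: raw[i] * w for i, (name, w) in enumerate(WEIGHTS.items())}
    scores["total"] = sum(raw) * TOTAL_WEIGHT
    return scores
```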

Total presence differed significantly among the three locomotion techniques (F(2, 90) = 3.517, p = 0.034, R² = 0.075). A post hoc analysis indicated that calling produced a significantly higher sense of presence than teleportation (p = 0.04), with no significant differences between the other pairs. The presence questions were grouped into the following categories: “SB” for “Sense of being in a Supermarket,” “DR” for “the extent to which the VE becomes the dominant reality,” “P” for “the extent to which the VE is remembered as a place,” “CF” for Control Factors (ease of navigation, ability to move near objects and avoid collisions), “OE” for Overall Enjoyment, “N” for Nausea, and “TPS” for Total Presence Score.

Participants were asked to rank the locomotion techniques overall for Scenario 1. Teleportation was the most preferred technique (13 of 30 participants), followed by calling (11 of 30), with tapping ranked third. Participants also indicated their preferences for individual aspects: navigation, searching for items in the supermarket, moving close to items to grab them, avoiding collisions, comfort, and ease of use.

The calling gesture was rated significantly more intuitive than teleportation. Participants reported enjoying the motion of moving their hand forward and backward, which provided both optical cues and kinesthetic feedback, consistent with Nilsson et al. (2018). Furthermore, the rhythmic arm movement primes the sensory-motor system to respond to the optical flow, in line with previous work (Williams et al., 2007; Engel et al., 2008) indicating that moving body parts is crucial for eliciting multisensory stimulation. One participant commented, “Although the calling gesture required more effort, I found it enjoyable to perform as it felt natural within the VE.” Similarly, another participant stated, “I felt calling gesture is intuitive and has a natural pace while moving.”

Calling also imposed significantly less mental demand than tapping. However, some participants noted that continuously using the calling gesture to traverse large VEs required considerable physical effort, because it involved constant forward and backward forearm motion, similar to the observation by McCullough et al. (2015).

The teleportation gesture was observed to be instantaneous, and as such, no velocity was attributed to this gesture. Users could traverse great distances with a single execution of this gesture.

a) Task Completion Time - On the directed path, the calling gesture required users to halt, turn in the desired direction, and then execute the gesture again. This two-step process increased task completion time, especially on paths with multiple turns, and participants found the calling gesture challenging to execute on paths with several bends.

b) Collisions/Accuracy - The calling gesture produced significantly fewer collisions than the tapping gesture. Users found it easier to reach their target object using teleportation, which also led to fewer collisions. These results are consistent with Bozgeyikli et al. (2016), where the point-and-teleport technique produced the fewest collisions compared with joystick and walking-in-place (WIP) locomotion.

a) Task Completion Time - Users primarily relied on landmark navigation when performing the calling gesture, which allowed them to update their current position and construct a cognitive map of the environment, as previously observed by Bruns and Chamberlain (2019) in a study of unfamiliar environments. The calling gesture was also a simple way to identify and reach low-visibility target objects in crowded environments such as supermarkets. While observing the sessions, we found that users traveled a shorter total distance to identify the target objects with the calling gesture because they made fewer revisits. The tapping gesture performed significantly worse than both the calling and teleportation techniques. Although users were able to construct a cognitive map of the supermarket with this gesture, and locating the target objects was more efficient than with teleportation, reaching the items was difficult because of the fixed step distance of each tap, which also caused more collisions with shelves. For teleportation in the search task, locating the glowing objects was more challenging than the movement itself, consistent with Coomer et al. (2018), in which teleportation required a longer completion time than arm-cycling and point-tugging for locating glowing objects. After each teleport, users often had to reorient themselves to locate the target object; if the object remained unidentified, they teleported further, changing their view. As a result, users made many revisits while searching for the objects, and they used short jumps to avoid missing targets. One user remarked, “In the directed path, I was able to teleport longer distances in one jump as I knew the path, but in the undirected path, I felt I might miss the target object; hence I took shorter jumps.”

b) Collision/Accuracy - The fixed step length of the calling and tapping gestures led to multiple collisions with the shelves.

The increased presence with calling may be due to spatiotemporal continuity, which enhances immersion. With the calling gesture, the continuous acquisition of spatial knowledge may positively affect the user’s mental spatial representation. In contrast, because teleportation does not provide the experience of the journey between locations, it likely disrupts the user’s mental spatial representation, continuity, and connectedness, as noted by Zielasko and Riecke (2021). For teleportation, users reported that they had to mentally prepare for spatial context switching and rapidly reorient themselves after each jump, which resulted in breaks in presence.

The users indicated that the visual beam accompanying each step of the calling gesture allowed them to see their current movement direction and the step length to the next point, increasing their sense of control over locomotion. One participant stated, “With calling, I had more control to move as I know where I will be moving next. The movement was seamless and continuous”. However, because the calling and tapping gestures had a fixed step length, moving a short distance of less than one step was very challenging. Users noted that such short movements were also necessary for selecting objects from the shelves. Teleportation, by contrast, let users easily control their movement over both large and small distances.
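The difficulty of sub-step adjustments follows from quantized movement: with a fixed step, only whole multiples of the step length are reachable. The minimal Python sketch below illustrates this under stated assumptions; the 0.75 m step length and 10 m teleport range are hypothetical values chosen for illustration, not parameters reported in this study, and the teleport is idealized as landing exactly on the chosen point.

```python
# Minimal sketch: positioning error of fixed-step travel (calling/tapping)
# versus an idealized teleport. STEP_LENGTH_M and TELEPORT_RANGE_M are
# hypothetical values, not parameters reported in the study.

STEP_LENGTH_M = 0.75
TELEPORT_RANGE_M = 10.0


def fixed_step_position(target_m: float, step_m: float = STEP_LENGTH_M) -> float:
    """Closest position reachable with a whole number of fixed-length steps."""
    n_steps = round(target_m / step_m)
    return n_steps * step_m


def teleport_position(target_m: float, range_m: float = TELEPORT_RANGE_M) -> float:
    """Idealized teleport: lands on the target if it is within range."""
    return min(target_m, range_m)


def positioning_error(target_m: float, mover) -> float:
    """Remaining distance to the target after moving with the given technique."""
    return abs(target_m - mover(target_m))


if __name__ == "__main__":
    for target in (0.3, 1.0, 2.25):
        print(
            f"target {target:.2f} m: "
            f"fixed-step error {positioning_error(target, fixed_step_position):.2f} m, "
            f"teleport error {positioning_error(target, teleport_position):.2f} m"
        )
```

With these assumed values, a target 0.3 m away cannot be reached by stepping at all (the user either stays put or overshoots by 0.45 m), which mirrors the difficulty participants described when making small adjustments near shelves.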

We found that the teleportation gesture did not provide the user with self-motion cues, resulting in the user feeling stationary during travel, consistent with the findings of Riecke and Zielasko (2021). As a result, there was no dynamic sensory conflict, leading to the least amount of motion sickness.

Among the participants, 13 out of 30 users indicated a preference for the teleportation gesture, which they found to be most suitable for traveling and interacting with objects within the virtual supermarket environment. In contrast, 11 users preferred the calling gesture. Additionally, 12 users preferred the calling gesture for searching for items within the supermarket.

For Study 2, a total of 30 participants took part, out of which 12 were female. The age of the participants ranged between 21–34 years (M = 25.9; SD = 3.98). Ten participants (33.33%) had no prior experience with Head-Mounted Displays (HMDs), and none of the participants had a motor disability or any prior experience with any of the locomotion techniques used in the study.

In this study, we adopted a within-subject design in which each participant completed three training sessions, one for each of the three locomotion techniques. Participants then completed nine main trials, a factorial combination of the three locomotion techniques (Deictic Pointing gesture, Tapping gesture, and Teleportation) and three tasks (Task #1, Task #2, and Task #3), in a seated position. To eliminate potential order effects, the order of locomotion techniques was counterbalanced across participants. Participants performed the travel gesture with one hand while the other hand was engaged in selecting a virtual object. Figure 7 displays the four gestures used in Scenario 2: (a) the Deictic Pointing Gesture for walking, (b) the Pointing Gesture for running, (c) the Tapping Gesture, and (d) the Teleportation Gesture.

FIGURE 7. Gesture comparison in Scenario 2 (A) deictic pointing gesture for walking (B) pointing gesture for running (C) tapping gesture (D) teleportation gesture. Created with Unity® 2019.4.28f1(64-bit).

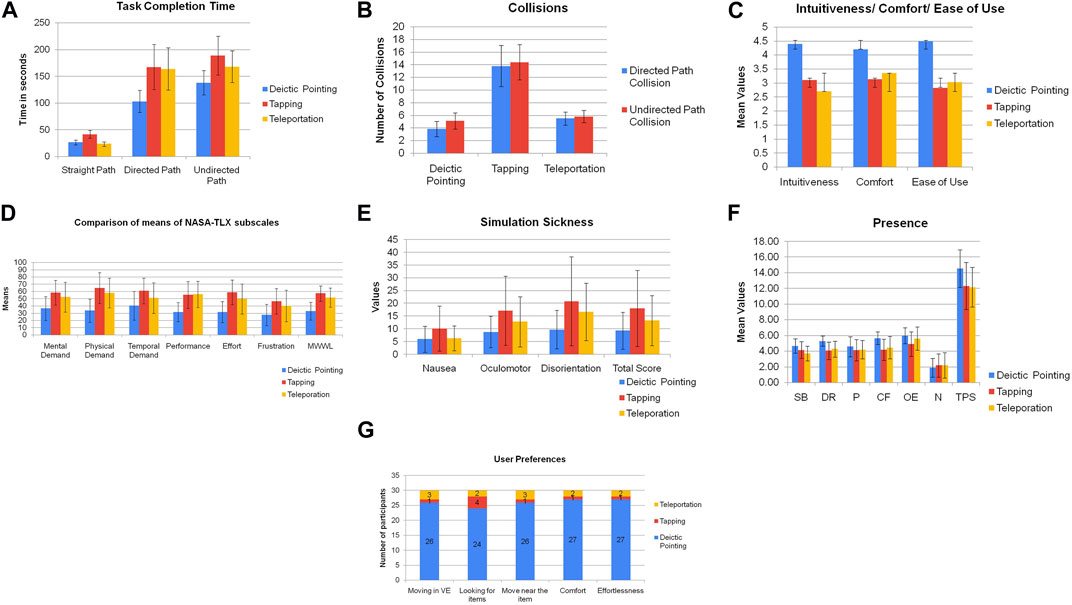
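The article states that technique order was counterbalanced but does not describe the scheme. One common option, assumed here purely for illustration, is full counterbalancing: with three techniques there are 3! = 6 possible orders, so 30 participants can each be assigned one order with every order used exactly five times. The sketch also assumes, hypothetically, that the three tasks are run as a block within each technique, giving nine trials per participant.

```python
from collections import Counter
from itertools import permutations

# Technique names from Scenario 2; task labels from the study design.
TECHNIQUES = ("Deictic Pointing", "Tapping", "Teleportation")
TASKS = ("Task #1", "Task #2", "Task #3")


def counterbalanced_orders(n_participants: int, techniques=TECHNIQUES):
    """Assign every participant one of the k! technique orders, cycling through
    them so that each order is used equally often when n_participants is a
    multiple of k! (an assumed full-counterbalancing scheme)."""
    orders = list(permutations(techniques))
    return [orders[i % len(orders)] for i in range(n_participants)]


def trial_sequence(order, tasks=TASKS):
    """Nine (technique, task) trials: all tasks within each technique block."""
    return [(technique, task) for technique in order for task in tasks]


if __name__ == "__main__":
    orders = counterbalanced_orders(30)
    print(Counter(orders))            # each of the 6 orders appears 5 times
    print(trial_sequence(orders[0]))  # first participant's nine trials
```

A balanced Latin square (three orders, each technique appearing once in each position) would be a lighter alternative when the participant count is not a multiple of six.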

Data were analyzed in SPSS using a one-way ANOVA, with the significance level set at α = 0.05. Levene’s test was used to check the homogeneity of variances, and Tukey’s post hoc test was used for pairwise comparisons. The descriptive statistics for user study 2 are shown in Figure 8.

FIGURE 8. Summary representation of measures in Scenario 2 (A) Total Completion Time (B) Collisions (C) Intuitiveness, Comfort, and Ease of Use (D) Perceived Workload (E) SSQ (F) Presence (G) User Preference.
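To show what the reported F and R² values summarize, here is a minimal pure-Python sketch of a one-way ANOVA that returns F, its degrees of freedom, and R² (eta squared: the between-group sum of squares over the total). It treats the conditions as independent groups, matching the one-way ANOVA described above; the authors used SPSS, and this is not their analysis script. P-values and Tukey’s post hoc test are omitted because they require the F and studentized-range distributions.

```python
from statistics import mean


def one_way_anova(*groups):
    """One-way ANOVA on independent groups.

    Returns (F, df_between, df_within, r_squared), where r_squared is
    SS_between / SS_total (eta squared).
    """
    values = [x for g in groups for x in g]
    grand_mean = mean(values)
    k, n = len(groups), len(values)

    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    r_squared = ss_between / (ss_between + ss_within)
    return f_stat, df_between, df_within, r_squared


if __name__ == "__main__":
    # Hypothetical completion times (s) for three techniques, not study data.
    pointing = [28.0, 30.5, 27.2, 29.8]
    tapping = [41.3, 44.0, 39.8, 42.5]
    teleport = [29.1, 31.0, 28.4, 30.2]
    print(one_way_anova(pointing, tapping, teleport))
```

In practice, `scipy.stats.f_oneway` returns the same F statistic for independent groups together with its p-value.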

Completion times for the straight-line path were checked for normality (overall M = 30.58, SD = 9.6) and differed significantly among the techniques (F (2, 90) = 91.48, p < 0.01; effect size R² = 0.678). Post hoc analysis revealed that pointing and teleportation were significantly faster than the tapping gesture (p < 0.01).
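For a one-way ANOVA, the reported R² (eta squared) is fully determined by F and its two degrees of freedom, which makes it a convenient consistency check when reading results such as these. The derivation below uses only the standard definitions, writing b for between-groups and w for within-groups; it is a worked identity, not a re-analysis of the study data.

```latex
\begin{aligned}
R^2 &= \frac{SS_b}{SS_b + SS_w}, \qquad
F = \frac{SS_b / df_b}{SS_w / df_w}
\;\Longrightarrow\; SS_b = F \,\frac{df_b}{df_w}\, SS_w, \\
R^2 &= \frac{F \, df_b / df_w}{F \, df_b / df_w + 1}
     = \frac{F \, df_b}{F \, df_b + df_w}.
\end{aligned}
```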

In Task #2, performance was evaluated by completion time and number of collisions. Completion times were normally distributed (M = 144.90, SD = 46.02) and differed significantly among the three techniques (F (2, 90) = 30.97, p < 0.01; effect size R² = 0.416). Post hoc analysis showed that the pointing gesture yielded significantly faster completion times than tapping (p < 0.01) and teleportation. Collision data were also normally distributed (M = 7.82, SD = 4.8) and differed significantly among the techniques (F (2, 90) = 191.20, p < 0.01; effect size R² = 0.815). Post hoc analysis showed that pointing resulted in significantly fewer collisions than tapping (p < 0.01) and teleportation (p = 0.001), and teleportation resulted in significantly fewer collisions than tapping.

In Task #3, performance was assessed by completion time and number of collisions. Completion times were normally distributed (M = 165.18, SD = 36.39) and differed significantly among the three techniques (F (2, 90) = 21.55, p < 0.01; effect size R² = 0.331). Post hoc analysis showed that the pointing gesture yielded significantly faster completion times than tapping (p < 0.01) and teleportation (p = 0.001), and teleportation was significantly faster than tapping (p = 0.025). Collision data were also normally distributed (M = 8.43, SD = 4.6) and differed significantly among the techniques (F (2, 90) = 229.76, p < 0.01; effect size R² = 0.841). Post hoc analysis showed that pointing and teleportation resulted in significantly fewer collisions than tapping (p < 0.01).

Intuitiveness ratings had an overall M = 3.41 (SD = 1.03). A one-way ANOVA indicated a significant difference among techniques (F (2, 90) = 40.22, p < 0.01; effect size R² = 0.480). Post hoc analysis revealed that the pointing gesture was significantly more intuitive than tapping and teleportation (p < 0.01).

Comfort ratings had an overall M = 3.59 (SD = 1.02). The one-way ANOVA revealed a significant difference in comfort (F (2, 90) = 12.92, p < 0.01; effect size R² = 0.229). Post hoc analysis showed that the pointing gesture was significantly more comfortable than tapping and teleportation (p < 0.01).

Ease-of-use ratings had an overall M = 3.47 (SD = 0.99). The difference in ease of use was significant (F (2, 90) = 61.56, p < 0.01; effect size R² = 0.586). Post hoc analysis revealed that pointing was significantly easier to use than tapping and teleportation (p < 0.01).

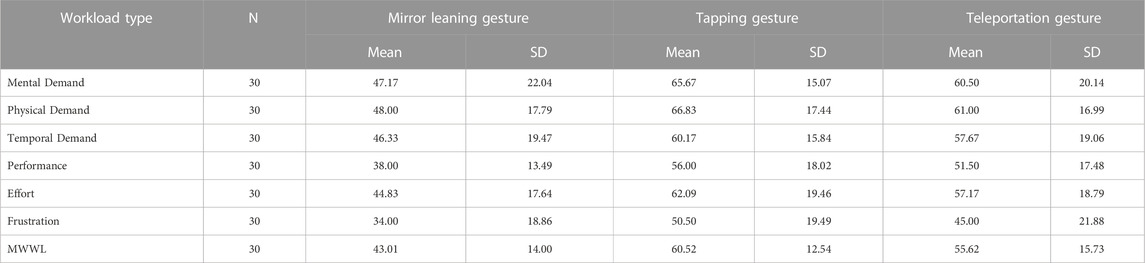

Table 2 displays the means and standard deviations of the NASA-TLX scores for Mental Demand, Physical Demand, Temporal Demand, Effort, Performance, and Frustration in the Pointing, Tapping, and Teleport conditions. The statistical analysis indicated significant differences among the three techniques in Mental Demand (F (2, 90) = 11.55, p < 0.01), Physical Demand (F (2, 90) = 21.89, p < 0.01), Temporal Demand (F (2, 90) = 8.13, p = 0.001), Performance (F (2, 90) = 20.79, p < 0.01), Effort (F (2, 90) = 18.71, p < 0.01), and Mean Weighted Workload (F (2, 90) = 33.72, p < 0.001). Specifically, pointing had significantly lower Mental Demand than tapping (p < 0.01) and teleportation (p = 0.003), significantly lower Physical Demand than tapping (p < 0.01) and teleportation (p < 0.01), and significantly lower Effort than tapping and teleportation (p < 0.01). Pointing also received better Performance scores than tapping (p < 0.01) and teleportation (p < 0.01), and a significantly lower Mean Weighted Workload than tapping (p < 0.001) and teleportation (p < 0.001). These findings suggest that the pointing gesture is more suitable than tapping and teleportation in terms of mental and physical demand, effort, performance, and overall workload.

TABLE 2. Descriptive Statistics of NASA-TLX dimensions scale (0 = Low to 100 = High) for Scenario 2.
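The Mean Weighted Workload combines the six NASA-TLX subscale ratings using weights from the questionnaire’s 15 pairwise comparisons. The article does not detail the weighting, so the sketch below assumes the standard NASA-TLX procedure: each dimension’s weight is the number of comparisons it wins (0 to 5, summing to 15), and the weighted score is the sum of weight-rating products divided by 15. Function and variable names are illustrative.

```python
from itertools import combinations

DIMENSIONS = (
    "Mental Demand",
    "Physical Demand",
    "Temporal Demand",
    "Performance",
    "Effort",
    "Frustration",
)
# The 15 pairs presented in the weighting step (6 choose 2).
PAIRS = list(combinations(DIMENSIONS, 2))


def weighted_workload(ratings: dict, pairwise_winners: list) -> float:
    """Weighted NASA-TLX workload on the 0-100 scale.

    ratings: dimension -> rating (0 = low, 100 = high).
    pairwise_winners: the dimension chosen as more important for each of the
    15 pairs, in any order.
    """
    if len(pairwise_winners) != len(PAIRS):
        raise ValueError("expected one choice for each of the 15 pairs")
    unknown = set(pairwise_winners) - set(DIMENSIONS)
    if unknown:
        raise ValueError(f"unknown dimensions: {unknown}")
    # Weight = number of pairwise comparisons each dimension won.
    weights = {d: pairwise_winners.count(d) for d in DIMENSIONS}
    return sum(ratings[d] * weights[d] for d in DIMENSIONS) / len(PAIRS)


if __name__ == "__main__":
    # Hypothetical participant who always picks the first dimension of each pair.
    winners = [a for a, _ in PAIRS]
    ratings = dict(zip(DIMENSIONS, (80, 20, 20, 20, 20, 20)))
    print(weighted_workload(ratings, winners))
```

Because the weights always sum to 15, the weighted score stays on the same 0 to 100 scale as the individual ratings.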

There was a significant difference in simulation sickness, measured by the total score of the Simulator Sickness Questionnaire (SSQ), among the three techniques (F (2, 90) = 3.00, p = 0.05; effect size R² = 0.065). Specifically, the SSQ total score differed significantly between the Pointing and Tapping gestures (p = 0.043), whereas no significant differences were found between techniques for the nausea (F (2, 90) = 1.73, p = 0.183) or disorientation (F (2, 90) = 0.45, p = 0.63) subscales. For the oculomotor subscale (F (2, 90) = 3.7, p = 0.028), a significant difference was found between the Pointing and Tapping gestures (p = 0.021). These findings suggest that the Pointing gesture may be associated with lower simulation sickness and oculomotor discomfort than the Tapping gesture.
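SSQ subscale and total scores are conventionally computed from 16 symptoms rated 0 to 3, summed into overlapping Nausea, Oculomotor, and Disorientation clusters and scaled by fixed factors. The article does not restate this procedure, so the sketch below assumes the standard SSQ scoring; it also shows why a single symptom can raise more than one subscale.

```python
# Standard SSQ symptom clusters; several symptoms count toward two subscales.
NAUSEA = {"general discomfort", "increased salivation", "sweating", "nausea",
          "difficulty concentrating", "stomach awareness", "burping"}
OCULOMOTOR = {"general discomfort", "fatigue", "headache", "eyestrain",
              "difficulty focusing", "difficulty concentrating", "blurred vision"}
DISORIENTATION = {"difficulty focusing", "nausea", "fullness of head",
                  "blurred vision", "dizziness (eyes open)",
                  "dizziness (eyes closed)", "vertigo"}
ITEMS = NAUSEA | OCULOMOTOR | DISORIENTATION  # 16 symptoms in total


def ssq_scores(responses: dict) -> dict:
    """Score one SSQ; responses maps each of the 16 symptoms to 0-3."""
    missing = ITEMS - set(responses)
    if missing:
        raise ValueError(f"missing symptoms: {sorted(missing)}")
    if any(not 0 <= responses[item] <= 3 for item in ITEMS):
        raise ValueError("ratings must be between 0 and 3")

    # Raw cluster sums, then the standard scaling factors.
    raw_n = sum(responses[item] for item in NAUSEA)
    raw_o = sum(responses[item] for item in OCULOMOTOR)
    raw_d = sum(responses[item] for item in DISORIENTATION)
    return {
        "nausea": raw_n * 9.54,
        "oculomotor": raw_o * 7.58,
        "disorientation": raw_d * 13.92,
        "total": (raw_n + raw_o + raw_d) * 3.74,
    }
```

Because nausea, general discomfort, difficulty concentrating, difficulty focusing, and blurred vision each feed two subscales, the subscale scores are not independent of one another.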

There was a significant difference in presence among the three techniques (F (2, 90) = 7.41, p = 0.001; effect size R² = 0.146). Post hoc analysis revealed that presence with pointing was significantly higher than with tapping (p = 0.005) and teleportation (p = 0.003).

The participants were asked to rank the three locomotion techniques for Scenario 2. Pointing was the most preferred technique, ranked first by 25 of 30 participants, followed by teleportation (ranked first by 3 participants) and tapping, the least preferred (ranked first by only 2 participants).

The study found that the pointing gesture was more intuitive as it allows for continuous locomotion, which enables users to enjoy the journey while navigating. One participant commented that “Pointing gesture felt more natural as it was like a continuous walk. It was easy to adjust with the shelves, whereas in others, it was either too far or too close.”

Participants found the pointing gesture to be a more comfortable and effortless mode of navigation. As one participant noted, “I found it difficult to keep tapping repeatedly to reach my destination, even if the target object was visible. But with pointing, I was able to navigate smoothly without much effort.”

Participants reported that the pointing gesture was comfortable to hold and easy to use, even over long distances. Additionally, the gesture allowed for immediate stopping of locomotion upon hand removal, without any noticeable delay.

During the task, participants mostly used the pointing gesture to travel at running speed, while the teleportation gesture allowed them to take longer jumps to reach their destination quickly. As a result, both of these gestures had lower task completion times compared to tapping.

a) Task Completion Time–Participants used running speed on straight paths and switched to walking speed during turns, which helped them navigate the environment more efficiently while using the pointing gesture.

b) Collisions/Accuracy - The pointing gesture allowed continuous adjustment of distance during locomotion, which reduced collisions with shelves. In contrast, with the tapping gesture users found it difficult to maintain a suitable distance from the shelves while grabbing objects, leading to more collisions. Teleportation offered higher accuracy over short distances, which resulted in fewer collisions.
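The walking/running behavior of the pointing gesture, together with the immediate stop on hand removal reported above, amounts to a simple per-frame velocity rule. The Python sketch below illustrates that rule under stated assumptions: the gesture labels, the 1.4 m/s walking and 3.0 m/s running speeds, and the horizontal heading vector are all hypothetical, since the study’s Unity implementation is not described at this level of detail.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical gesture labels and speeds (m/s); the study does not report these.
SPEEDS = {"point_walk": 1.4, "point_run": 3.0}


@dataclass
class PointingLocomotion:
    x: float = 0.0
    z: float = 0.0

    def update(self, gesture: Optional[str], heading: Tuple[float, float], dt: float) -> None:
        """Advance one frame along the pointing direction.

        gesture: recognized gesture label, or None when no hand is tracked.
        heading: horizontal (x, z) pointing direction; it is normalized here.
        An unrecognized gesture or a missing hand yields zero speed, so travel
        stops on the same frame the hand is removed.
        """
        speed = SPEEDS.get(gesture, 0.0)
        length = math.hypot(*heading)
        if speed == 0.0 or length == 0.0:
            return
        self.x += heading[0] / length * speed * dt
        self.z += heading[1] / length * speed * dt


if __name__ == "__main__":
    rig = PointingLocomotion()
    rig.update("point_run", (0.0, 1.0), 0.5)   # run straight ahead for 0.5 s
    rig.update("point_walk", (1.0, 0.0), 1.0)  # slow to walking speed for a turn
    rig.update(None, (1.0, 0.0), 1.0)          # hand removed: no movement
    print(rig)
```

Keeping speed selection separate from direction makes the run-on-straights, walk-in-turns strategy participants adopted a matter of switching the gesture label, with no acceleration ramp in this simplified version.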

a) Task Completion Time—The continuous optic flow feedback provided with the pointing gesture was found to facilitate the user’s ability to identify the glowing object, a result similar to that reported by Riecke and Zielasko (2021) for continuous travel in VE. The continuous self-motion cues provided with the pointing gesture also aided in path integration and spatial orientation, leading to improved spatial updating and reduced cognitive load. These findings align with those of Zanbaka et al. (2004), who compared the cognitive effects and paths taken for four different methods of travel in an immersive VE. The pointing gesture is recommended for situations where users need to cover longer distances with minimal effort while maintaining a continuous view of the environment. In contrast, the teleportation gesture may be useful when the user’s destination is unknown, as jumping shorter distances can help avoid missed objects, albeit at the cost of increased completion time due to the need for reorientation between jumps.

b) Collision/Accuracy - As in the previous study, the pointing and teleportation gestures allowed users to move shorter distances, thus avoiding collisions with the shelves, compared with the tapping gesture.

The increased presence with the pointing gesture was attributed to continuous locomotion, which enabled users to acquire spatial knowledge continuously and build a cognitive map of the supermarket. As one participant commented, “With the pointing gesture, because of the continuous movement, I feel like I am moving and interacting in a real supermarket.” With the teleportation gesture, however, users have to reorient themselves after each jump, which disrupts the continuity of their mental spatial representation.

When using pointing gestures, users preferred to travel at a slower speed to navigate turns and narrow paths, as they required a conscious effort to control their movement. This finding is similar to that reported by Caggianese et al. (2020), who compared controller-based navigation with free hand locomotion techniques and required users to travel on paths with varying widths. As one user in our study commented, “For the pointing gesture, though I was traveling at running speed, I needed to reduce my speed in turns to avoid collisions with the shelves.”

The absence of self-motion cues in teleportation resulted in a lack of dynamic sensory cue conflict, thereby reducing motion sickness. These findings are consistent with the results of a user study conducted by Bonato et al. (2008). However, the lack of self-motion cues in teleportation resulted in reduced immersion. One user in our study commented, “It is easy to travel the whole supermarket in less time. But I am unable to get the layout of the supermarket”.

In Scenario 2, the results showed that the pointing gesture was the most preferred technique for movement (25 out of 30 participants) and searching for items (24 out of 30 participants) in the virtual supermarket. However, four participants encountered issues with the running speed of the pointing gesture. Additionally, users had to consciously keep their index finger within the field of view of the head-mounted display (HMD), which was also noted in a previous study by Caggianese et al. (2020) regarding the challenges of managing hand position relative to the field of view during free hand gestures.

A total of 30 participants took part in Study 3, 13 of whom were female. Participants were between 21 and 35 years old, with a mean age of 26.2 years (SD = 3.87). None of the participants had a motor disability or prior experience with any of the locomotion techniques used in the study.

Our study employed a within-subject design in which each participant completed three training sessions, one for each locomotion technique: the Mirror Leaning gesture, the Tapping gesture, and Teleportation. The main experiment comprised nine trials, a factorial combination of the three locomotion techniques and three tasks (Task #1, Task #2, and Task #3), performed in a seated position. The order of locomotion techniques was counterbalanced across participants. In this scenario, participants had both hands engaged in selecting virtual objects. Figure 9 displays the three gestures used in Scenario 3: (a) the Mirror Leaning Gesture, (b) the Tapping Gesture, and (c) the Teleportation Gesture.

FIGURE 9. Gesture Comparison in Scenario 3 (A) Leaning Gesture (B) Tapping gesture (C) Teleportation Gesture. Created with Unity® 2019.4.28f1(64-bit).

The data were analyzed in SPSS using a one-way ANOVA, with the significance level set at α = 0.05. Levene’s test was used to check the homogeneity of variances, and Tukey’s post hoc test was used for pairwise comparisons. The descriptive statistics for user study 3 are shown in Figure 10.

FIGURE 10. Summary representation of measures in Scenario 3 (A) Total Completion Time (B) Collisions (C) Intuitiveness, Comfort, and Ease of Use (D) Perceived Workload (E) SSQ (F) Presence (G) User Preference.
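Levene’s test, used in these analyses to check the ANOVA assumptions, compares the spread of the groups by running a one-way ANOVA on each observation’s absolute deviation from its group mean. The pure-Python sketch below computes the resulting W statistic (p-value omitted); it is a generic illustration with made-up inputs, not the study’s SPSS output.

```python
from statistics import mean


def levene_w(*groups):
    """Levene's W statistic (mean-centered variant).

    Equivalent to the one-way ANOVA F statistic computed on the absolute
    deviations |x - group mean|; a large W suggests unequal variances.
    """
    deviations = [[abs(x - mean(g)) for x in g] for g in groups]
    all_dev = [d for dev in deviations for d in dev]
    grand_mean = mean(all_dev)
    k, n = len(groups), len(all_dev)

    ss_between = sum(len(dev) * (mean(dev) - grand_mean) ** 2 for dev in deviations)
    ss_within = sum((d - mean(dev)) ** 2 for dev in deviations for d in dev)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


if __name__ == "__main__":
    # Made-up data: the second group is twice as spread out as the first.
    print(levene_w([1, 2, 3], [2, 4, 6]))
```

`scipy.stats.levene(..., center='mean')` computes the same statistic with a p-value; SciPy’s default `center='median'` gives the more robust Brown-Forsythe variant.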

Completion times for the straight-line path were normally distributed (M = 31.67, SD = 10.02). An analysis of variance (ANOVA) revealed significant differences among techniques for movement along the straight path (F (2, 90) = 147.80, p < 0.01; effect size R² = 0.773). Post hoc analysis indicated that mirror leaning and teleportation were significantly faster than the tapping gesture (p < 0.01).

In Task #2, completion times were normally distributed (M = 151.13, SD = 40.42), and an ANOVA revealed significant differences among techniques (F (2, 90) = 24.405, p < 0.01; effect size R² = 0.359). Post hoc analysis indicated that mirror leaning was significantly faster than tapping and teleportation (p < 0.01). Collision counts were also normally distributed (M = 8.48, SD = 4.03) and differed significantly among techniques (F (2, 90) = 99.18, p < 0.01; effect size R² = 0.695). Post hoc analysis showed that mirror leaning and teleportation produced significantly fewer collisions than the tapping gesture (p < 0.01).

In Task #3, completion times were normally distributed (M = 169.23, SD = 31.55), and a one-way ANOVA showed a significant effect of technique (F (2, 90) = 12.73, p < 0.01; effect size R² = 0.226). Post hoc analysis revealed that mirror leaning had a significantly shorter completion time than tapping (p < 0.01) and teleportation (p = 0.035), and teleportation was significantly faster than tapping (p = 0.036). Collision counts were also normally distributed (M = 9.69, SD = 3.88) and differed significantly among techniques (F (2, 90) = 113.35, p < 0.01; effect size R² = 0.723). Post hoc analysis showed that mirror leaning had significantly fewer collisions than both tapping and teleportation (p < 0.01), and teleportation had significantly fewer collisions than tapping (p < 0.01).

Intuitiveness ratings had an overall M = 3.32 (SD = 0.92). A one-way ANOVA yielded a significant result (F (2, 90) = 21.92, p < 0.01; effect size R² = 0.335). Post hoc analysis revealed that mirror leaning was significantly more intuitive than both tapping and teleportation (p < 0.01).

The mean and standard deviation of the comfort measure were calculated to be M = 3.31 and SD = 0.94, respectively. The ANOVA results indicated that there was no significant difference in comfort between the three gestures, (F (2, 90) = 1.371, p = 0.259).

Ease-of-use ratings had an overall M = 3.16 (SD = 0.92). An ANOVA revealed a significant effect of technique (F (2, 90) = 13.69, p < 0.01; effect size R² = 0.239). Post hoc results showed that mirror leaning was significantly easier to use than the tapping and teleportation gestures (p < 0.01).

The results of the analysis revealed a significant difference in the Mental demand (F (2,90) = 7.332, p = 0.001) between the techniques. Specifically, mirror leaning showed significantly lower levels of mental demand compared to tapping (p = 0.001) and teleportation (p = 0.024). Similarly, the analysis for physical demand was significant (F (2, 90) = 9.19, p < 0.01), with the mirror leaning resulting in significantly lower physical demand compared to tapping (p < 0.01) and teleportation (p = 0.013). Moreover, the analysis for temporal demand was significant (F (2, 90) = 4.91, p = 0.009), and the mirror-leaning resulted in significantly less temporal demand compared to tapping (p = 0.012) and teleportation (p = 0.047). The analysis for performance scores was also significant (F (2, 90) = 9.72, p < 0.01), with the mirror leaning outperforming the tapping (p < 0.01) and the teleportation gesture (p = 0.006). The effort scores analysis showed significant results (F (2, 90) = 6.76, p = 0.002), with the mirror leaning requiring significantly less effort than tapping (p = 0.002) and teleportation (p = 0.032). Lastly, there was a significant difference in the Mean Weighted Workload (MWWL) (F (2, 90) = 12.219, p < 0.001) between the techniques. The Mirror Leaning gesture resulted in significantly less MWWL when compared to the tapping (p < 0.001) and teleportation (p = 0.002). The descriptive statistics are shown in Table 3.

TABLE 3. Descriptive Statistics of NASA-TLX dimensions scale (0 = Low to 100 = High) for Scenario 3.

There was a significant difference in simulation sickness as measured by the SSQ total score (F (2, 90) = 2.94, p = 0.05; effect size R² = 0.063). The SSQ total score differed significantly between the mirror-leaning and tapping gestures (p = 0.046). However, no significant differences were observed between the locomotion techniques for the nausea, disorientation, or oculomotor subscales.

The analysis of total presence scores revealed a significant difference (F (2, 90) = 4.35, p = 0.016; effect size R² = 0.091). Post hoc analysis revealed that mirror leaning yielded significantly higher presence than the tapping gesture (p = 0.044) and the teleportation gesture (p = 0.025). In addition, presence scores differed significantly between the tapping and teleportation gestures.

The mirror-leaning gesture was the most preferred technique, ranked first by 19 of 30 participants. Teleportation was ranked first by 9 participants, and tapping by only 2.

The mirror-leaning gesture offers a continuous optic flow, which in turn leads to stronger self-motion cues and increased vection for locomotion. Furthermore, this gesture is suitable for various applications such as driving and flight simulations where motion cueing is crucial. A participant also provided positive feedback stating, “With mirror-leaning gesture, as I lean, I feel I am really moving in a VE, but with the teleportation gesture, I am unable to feel a natural movement.”

This study revealed that the mirror-leaning gesture imposes significantly less workload than the tapping and teleportation gestures. The greater embodiment of the mirror-leaning gesture contributes to this reduction in cognitive load. Because it does not require continuous hand use, the gesture gives users greater freedom to move naturally and to multitask with their hands. A participant stated, “In the mirror-leaning gesture, both hands were free for interaction, and my upper body was useful for locomotion. I felt very engaged during the travel.”

The study found no significant difference in comfort between the gestures. This differs from the findings of Buttussi and Chittaro (2019), where teleportation was reported to be significantly more comfortable than leaning in terms of general comfort. However, that study compared joystick, teleportation, and leaning techniques and involved only directed path movement tasks, which may account for the difference in results.

In the mirror-leaning gesture, users traveled at running speed to reach the destination, while in teleportation, users took longer jumps to reach the destination.

a) Task Completion Time—The mirror-leaning gesture allowed users to control their movement speed, using running speed on straight paths and switching to walking speed during turns, which led to faster task completion compared to the teleportation and tapping gestures.

b) Collisions/Accuracy - The mirror-leaning gesture was less accurate for very short-distance travel, such as moving a few millimeters near a shelf. This is consistent with Kitson et al. (2017), where leaning with the NaviChair was compared to joystick control. Unintentional leaning, such as bending slightly to reach an object, led to several unexpected collisions with shelves when grabbing objects.

a) Task Completion Time–The users found the mirror view provided during leaning to be helpful for viewing objects on upper shelves and stopping when identifying glowing objects, especially during undirected path travel. The visual effect of objects glowing was also identified as a contributing factor to the success of the leaning gesture in helping users reach their destinations. One participant noted that “The mirror view was very helpful, especially for the undirected path travel, as I was constantly looking for a glowing object in the small window.”

In contrast, the tapping and teleportation techniques were challenging when both hands were required for multitasking. Users had difficulty looking for items while their hands were occupied and also struggled with path integration when trying to reach targets in undirected path travel.

b) Collision/Accuracy - Our observations showed that the mirror-leaning and teleportation gestures resulted in significantly fewer collisions than the tapping gesture when used in undirected paths.

The increased presence with the mirror-leaning gesture is attributed to the vection and continuous locomotion it provides. As with the pointing gesture in Scenario 2, this continuous movement supported continuous knowledge acquisition, enabling users to build a cognitive map of the supermarket.

Similar to Hashemian et al. (2020), the mirror-leaning gesture provided users with continuous locomotion in the VE and better control over their movement. Visual cues in the form of a single arrow for walking speed and a double arrow for running speed were found to be particularly helpful in enabling users to control the degree of leaning, reduce or increase speed, and stop movement. One participant noted that “The mirror-leaning gesture has good visual cues to specify the speed, which helped me in controlling my leaning angle”. Additionally, the mirror-leaning gesture exhibited the least latency issues, allowing for instantaneous start and stop of movement corresponding to the leaning angle.
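The single-arrow and double-arrow cues suggest a discrete mapping from lean angle to speed. The sketch below shows one way such a mapping could work; the 5° dead zone, the 15° running threshold, the speeds, and the restriction to forward lean are hypothetical choices, not parameters from the study. The dead zone is the design lever most relevant to the unintentional-leaning collisions reported earlier: widening it suppresses small bends made while reaching for objects, at the cost of requiring a deeper lean to start moving.

```python
from typing import Tuple

# Hypothetical thresholds (degrees of forward lean) and speeds (m/s).
DEAD_ZONE_DEG = 5.0
RUN_THRESHOLD_DEG = 15.0
WALK_SPEED = 1.4
RUN_SPEED = 3.0


def lean_to_motion(lean_deg: float) -> Tuple[float, int]:
    """Map a forward lean angle to (speed, number of arrows shown).

    Below the dead zone the user stops (0 arrows); between the thresholds
    they walk (1 arrow); at or beyond the running threshold they run (2 arrows).
    Backward lean (negative angles) is treated as no movement in this sketch.
    """
    if lean_deg < DEAD_ZONE_DEG:
        return 0.0, 0
    if lean_deg < RUN_THRESHOLD_DEG:
        return WALK_SPEED, 1
    return RUN_SPEED, 2


if __name__ == "__main__":
    for angle in (2.0, 10.0, 20.0):
        print(angle, lean_to_motion(angle))
```

Because the speed is recomputed from the current angle on every frame, returning upright stops movement immediately, consistent with the instantaneous start and stop participants described.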

The mirror-leaning gesture provided users with some vestibular cues during simulated accelerations, which plausibly helped reduce cybersickness by reducing inter-sensory cue conflict. Quantitatively, the leaning gesture also resulted in significantly less motion sickness than the tapping gesture.

The leaning gesture was preferred by 12 participants for looking at items in the supermarket, while another 12 participants preferred the teleportation gesture for this purpose. In Scenario 3, the leaning gesture was found to be the most suitable for the given multitasking scenario. Although users preferred this gesture for moving around the VE, some participants reported that it would be inconvenient to traverse large VEs because leaning for longer periods would cause fatigue and discomfort, such as back pain. Lowering the head downwards while using the locomotion technique also resulted in neck pain for participants, and they reported discomfort due to the weight of the HMD. While the mirror view was useful, some users complained that it obscured the path or items in the directed path search, but this did not significantly interfere with the movement or search task.

In summary, in Scenario 1 the teleportation gesture was suitable for traveling and interacting with objects, particularly when the location of the target object was known in advance. However, it was less effective when searching for target objects in a dense environment without a predefined path; in such cases, the calling gesture can be used to locate the target objects. The calling gesture can also be employed in VR applications where minimal effort and maximal ease of use are not the primary goals. In some cases, increased effort can even enhance the sense of accomplishment, particularly when time is not a critical factor. For example, in Riecke and Zielasko (2021), the sense of spatial presence, the completion of a task, and the overall experience gained from the VE were more important than minimizing effort. Similarly, in relaxed or casual strolling environments, where users have more time to enjoy the travel experience, the calling gesture can serve as the mode of travel. Finally, the calling gesture suits applications such as exergames, where high effort is desirable to motivate users and enhance enjoyment.

In Study 2, the deictic pointing gesture was found to be a more intuitive, less tiring, and more precise interaction technique for Scenario 2, allowing users to travel short distances accurately and acquire better spatial knowledge of the environment. Teleportation, on the other hand, was useful for covering longer distances in a shorter time but caused breaks in presence through repeated spatial context switching, reducing immersion. Pointing was also the most preferred technique for movement and for looking for items in the supermarket, with tapping and teleportation being less popular. Some users found it challenging to keep their finger within the field of view while pointing, consistent with previous findings on managing hand positions within the field of view during gesture-based interaction.

Study 3 indicated that the leaning gesture is a suitable option for environments where users need to make decisions while traveling, as it imposes a lower cognitive load than the other gestures, allowing users to perform other tasks simultaneously. It is especially useful in scenarios that involve heavy multitasking with both hands and that require high presence and low motion sickness. The teleportation gesture, by contrast, is suitable for large VEs that primarily involve travel with few interactions, and for scenarios where presence is not the primary goal and ease of use and user comfort are the top priorities. An overall summary of the results is presented in Table 4.

These studies have several limitations. The gestures designed for the multitasking scenarios were based on a previous gesture elicitation study whose participants were all from India. Users from other cultures or countries might have proposed different gestures for these multitasking scenarios. To address this limitation, future studies should recruit participants from a wider range of cultures to improve the generalizability and cultural coverage of the gestures. Another limitation is that the participants were university students with an average age of 25 years, most of whom had prior experience with VR applications. Gesture performance may differ for other groups, such as elderly users and people without prior VR experience, so the results cannot be generalized to users of all ages and experience levels. We also intend to quantitatively measure physical fatigue in the neck and back, as gestures such as leaning may induce pain when used to travel through larger VEs. Finally, this study focused on the newly designed locomotion techniques for search tasks in a virtual supermarket, but these techniques could also be applied to other VR applications, such as time-critical applications, virtual walkthroughs, and exergames. A detailed analysis of gesture performance across such applications would strengthen these findings and help future researchers and designers select an appropriate locomotion gesture for a given VR application.

In this paper, we evaluated three newly designed gesture-based locomotion techniques for use in three different multitasking scenarios. These techniques, the Calling, Deictic Pointing, and Mirror Leaning gestures, were compared with two gestures from the literature: tapping and teleportation. In Scenario 1, teleportation was the preferred gesture, whereas the Calling gesture was the most intuitive and yielded higher presence. In Scenario 2, the Deictic Pointing gesture outperformed the tapping and teleportation gestures in terms of performance, comfort, ease of use, and presence. In Scenario 3, the Mirror Leaning gesture was the most intuitive, with higher presence and performance than the tapping and teleportation gestures. Overall, these results offer interaction designers practical guidance for designing efficient and effective gesture-based locomotion techniques for seated use in multitasking VEs.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the Indian Institute of Technology Guwahati. The participants provided their written informed consent to participate in this study.

These experimental studies were carried out by PG as a part of PhD research guided by KS at the Indian Institute of Technology Guwahati, India. PG is the author of this paper. All authors contributed to the article and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2023.1169654/full#supplementary-material

Bhandari, J., MacNeilage, P. R., and Folmer, E. (2018). “Teleportation without spatial disorientation using optical flow cues,” in Graphics interface, 162–167.

Bonato, F., Bubka, A., Palmisano, S., Phillip, D., and Moreno, G. (2008). Vection change exacerbates simulator sickness in virtual environments. Presence Teleoperators Virtual Environ. 17 (3), 283–292. doi:10.1162/pres.17.3.283

Bozgeyikli, E., Raij, A., Katkoori, S., and Dubey, R. (2016). “Point & teleport locomotion technique for virtual reality,” in Proceedings of the 2016 annual symposium on computer-human interaction in play, 205–216.

Bruns, C. R., and Chamberlain, B. C. (2019). The influence of landmarks and urban form on cognitive maps using virtual reality. Landsc. Urban Plan. 189, 296–306. doi:10.1016/j.landurbplan.2019.05.006

Buttussi, F., and Chittaro, L. (2019). Locomotion in place in virtual reality: A comparative evaluation of joystick, teleport, and leaning. IEEE Trans. Vis. Comput. Graph. 27 (1), 125–136. doi:10.1109/tvcg.2019.2928304

Caggianese, G., Capece, N., Erra, U., Gallo, L., and Rinaldi, M. (2020). Freehand-steering locomotion techniques for immersive virtual environments: A comparative evaluation. Int. J. Human–Computer Interact. 36 (18), 1734–1755. doi:10.1080/10447318.2020.1785151

Cardoso, J. (2017). Gesture-based locomotion in immersive VR worlds with the Leap motion controller.

Chester, M. R., Rys, M. J., and Konz, S. A. (2002). Leg swelling, comfort and fatigue when sitting, standing, and sit/standing. Int. J. Industrial Ergonomics 29 (5), 289–296. doi:10.1016/s0169-8141(01)00069-5

Chou, Y. H., Wagenaar, R. C., Saltzman, E., Giphart, J. E., Young, D., Davidsdottir, R., et al. (2009). Effects of optic flow speed and lateral flow asymmetry on locomotion in younger and older adults: A virtual reality study. Journals Gerontology Ser. B 64 (2), 222–231. doi:10.1093/geronb/gbp003

Coomer, N., Bullard, S., Clinton, W., and Williams-Sanders, B. (2018). “Evaluating the effects of four VR locomotion methods: joystick, arm-cycling, point-tugging, and teleporting,” in Proceedings of the 15th ACM symposium on applied perception, 1–8.

Engel, D., Curio, C., Tcheang, L., Mohler, B., and Bülthoff, H. H. (2008). “A psychophysically calibrated controller for navigating through large environments in a limited free-walking space,” in Proceedings of the 2008 ACM symposium on Virtual reality software and technology, 157–164.

Ferracani, A., Pezzatini, D., Bianchini, J., Biscini, G., and Del Bimbo, A. (2016). “Locomotion by natural gestures for immersive virtual environments,” in Proceedings of the 1st international workshop on multimedia alternate realities, 21–24.

Ganapathi, P., and Sorathia, K. (2019). “Elicitation study of body gestures for locomotion in HMD-VR interfaces in a sitting-position,” in Proceedings of the 12th ACM SIGGRAPH conference on motion, interaction and games, 1–10.

Ganapathi, P., and Sorathia, K. (2022). “Analysis of body-gestures elucidated through elicitation study for natural locomotion in virtual reality,” in Ergonomics for design and innovation: Humanizing work and work environment: Proceedings of HWWE 2021 (Cham: Springer International Publishing), 1313–1326.

Griffin, N. N., Liu, J., and Folmer, E. (2018). “Evaluation of handsbusy vs handsfree virtual locomotion,” in Proceedings of the 2018 annual symposium on computer-human interaction in play, 211–219.

Harris, A., Nguyen, K., Wilson, P. T., Jackoski, M., and Williams, B. (2014). “Human joystick: Wii-leaning to translate in large virtual environments,” in Proceedings of the 13th ACM SIGGRAPH international conference on virtual-reality continuum and its applications in industry, 231–234.

Hashemian, A. M., Lotfaliei, M., Adhikari, A., Kruijff, E., and Riecke, B. E. (2020). HeadJoystick: improving flying in VR using a novel leaning-based interface. IEEE Trans. Vis. Comput. Graph. 28 (4), 1792–1809. doi:10.1109/tvcg.2020.3025084

Kitson, A., Hashemian, A. M., Stepanova, E. R., Kruijff, E., and Riecke, B. E. (2017). “Comparing leaning-based motion cueing interfaces for virtual reality locomotion,” in 2017 IEEE Symposium on 3d user interfaces (3DUI) (IEEE), 73–82.

Kruijff, E., Riecke, B., Trekowski, C., and Kitson, A. (2015). “Upper body leaning can affect forward self-motion perception in virtual environments,” in Proceedings of the 3rd ACM symposium on spatial user interaction, 103–112.

McCullough, M., Xu, H., Michelson, J., Jackoski, M., Pease, W., Cobb, W., et al. (2015). “Myo arm: swinging to explore a virtual environment,” in Proceedings of the ACM SIGGRAPH symposium on applied perception, 107–113.

Merhi, O., Faugloire, E., Flanagan, M., and Stoffregen, T. A. (2007). Motion sickness, console video games, and head-mounted displays. Hum. Factors 49 (5), 920–934. doi:10.1518/001872007x230262

Nabiyouni, M., Saktheeswaran, A., Bowman, D. A., and Karanth, A. (2015). “Comparing the performance of natural, semi-natural, and non-natural locomotion techniques in virtual reality,” in 2015 IEEE symposium on 3D user interfaces (3DUI) (IEEE), 3–10.

Nilsson, N. C., Serafin, S., and Nordahl, R. (2013). “The perceived naturalness of virtual locomotion methods devoid of explicit leg movements,” in Proceedings of motion on games, 155–164.

Nilsson, N. C., Serafin, S., Steinicke, F., and Nordahl, R. (2018). Natural walking in virtual reality: a review. Comput. Entertain. (CIE) 16 (2), 1–22. doi:10.1145/3180658

Pai, Y. S., and Kunze, K. (2017). “ArmSwing: using arm swings for accessible and immersive navigation in AR/VR spaces,” in Proceedings of the 16th international conference on mobile and ubiquitous multimedia, 189–198.

Paris, R., Joshi, M., He, Q., Narasimham, G., McNamara, T. P., and Bodenheimer, B. (2017). “Acquisition of survey knowledge using walking in place and resetting methods in immersive virtual environments,” in Proceedings of the ACM symposium on applied perception, 1–8.

Riecke, B. E., Bodenheimer, B., McNamara, T. P., Williams, B., Peng, P., and Feuereissen, D. (2010). “Do we need to walk for effective virtual reality navigation? Physical rotations alone may suffice,” in Spatial cognition VII: International conference, spatial cognition 2010, Mt. Hood/Portland, OR, USA, August 15-19, 2010. Proceedings 7 (Springer Berlin Heidelberg), 234–247.

Riecke, B. E., and Zielasko, D. (2021). “Continuous vs. Discontinuous (teleport) locomotion in VR: how implications can provide both benefits and disadvantages,” in 2021 IEEE conference on virtual reality and 3D user interfaces abstracts and workshops (VRW) (IEEE), 373–374.

Ruddle, R. A., and Lessels, S. (2006). For efficient navigational search, humans require full physical movement, but not a rich visual scene. Psychol. Sci. 17 (6), 460–465. doi:10.1111/j.1467-9280.2006.01728.x

Templeman, J. N., Denbrook, P. S., and Sibert, L. E. (1999). Virtual locomotion: walking in place through virtual environments. Presence 8 (6), 598–617. doi:10.1162/105474699566512

Tomberlin, M., Tahai, L., and Pietroszek, K. (2017). “Gauntlet: travel technique for immersive environments using non-dominant hand,” in 2017 IEEE virtual reality (VR) (IEEE), 299–300.

Tregillus, S., Al Zayer, M., and Folmer, E. (2017). “Handsfree omnidirectional VR navigation using head tilt,” in Proceedings of the 2017 CHI conference on human factors in computing systems, 4063–4068.

Usoh, M., Arthur, K., Whitton, M. C., Bastos, R., Steed, A., Slater, M., et al. (1999). “Walking > walking-in-place > flying, in virtual environments,” in Proceedings of the 26th annual conference on Computer graphics and interactive techniques, 359–364.

Williams, B., Narasimham, G., Rump, B., McNamara, T. P., Carr, T. H., Rieser, J., et al. (2007). “Exploring large virtual environments with an HMD when physical space is limited,” in Proceedings of the 4th symposium on Applied perception in graphics and visualization, 41–48.

Zanbaka, C., Babu, S., Xiao, D., Ulinski, A., Hodges, L. F., and Lok, B. (2004). “Effects of travel technique on cognition in virtual environments,” in IEEE virtual reality 2004 (IEEE), 149–286.