
PERSPECTIVE article

Front. Virtual Real., 13 March 2023

Sec. Virtual Reality and Human Behaviour

Volume 4 - 2023 | https://doi.org/10.3389/frvir.2023.1100540

This article is part of the Research Topic Embodiment and Presence in Collaborative Mixed Reality.

This Perspective presents a viewpoint on immersive informal learning applications built as digital twins of the natural world. Such applications provide multimodal, interactive, immersive, embodied, and sensory experiences that differ from typical game art environments because they are geospatial visualizations of data and information derived from ecological field plot studies, geographical information systems, drone images, and botanically correct 3D plant models, rendered in real-time interactive game engines. Because they are constructed from geometric objects, they can programmatically express their own semantic data and connect to knowledge stores on the Internet. The result is a web of knowledge that supports both exploration of the virtual environment as a natural landscape and exploration of the connected knowledge stores, enabling informal learning at the moment of curiosity. This design is exceptionally powerful for informal learning because it supports the innate human desire to understand the world. This paper summarizes the construction methods used to create three digital twins of natural environments and the informal learning applications created and distributed from them, namely, augmented reality (AR), virtual reality (VR), and virtual field trips. Informal learning outcomes and emotional reactions are evaluated in mixed-methods research studies to understand the impact of design factors. Combining high visual fidelity with high navigational freedom increases learning outcomes as well as many affective and emotional outcomes. Access to facts and story increases learning outcomes; applications rated as beautiful are correlated with emotional reactions of awe and wonder, and awe and wonder are correlated with higher learning gains.
Beauty is also correlated with other system-wide subjective evaluations widely accepted as important for creating a context conducive to learning, such as calmness, excitement, curiosity, a desire to share, and a desire to create. This paper summarizes highlights of the author’s prior work to give the reader a perspective on the body of work.

Virtual field trips, if used for education, should be designed to prevent misconceptions (McCauley, 2017). Informal learning, like what happens on a field trip, is experiential learning directly from the environment, often facilitated by a knowledgeable adult. Something salient in the environment catches a child’s eye, a question is asked to satisfy the innate human need to understand the world, and, if a knowledgeable person is present, an explanation is offered (Ash, 2004), or the children simply Google it. This ambient array of signals, sensed from the natural world, queried from the social network, and often supported by artificial systems, represents the top-level design context in which informal learning occurs. Accurate educational content is required to prevent misconceptions. That content is represented in the semantic information layer, the ontology that became a critical success feature of my application design. An ontology of the world is useful for representing the semantic meaning of virtual objects if one wishes to model that world. For the natural world, the problem of naming and organizing living things was solved by Carl Linnaeus, recognized as the father of botanical taxonomy. Such an ontology also makes machine learning, automation, and the connection of large heterogeneous systems through semantic interfaces possible, all of which are necessary for constructing digital twins and educational metaverse applications of the natural world.
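
To make the idea of a semantic layer concrete, here is a minimal sketch, assuming that each virtual plant carries a few Linnaean ranks as metadata and can emit a link into an external knowledge store. The class `PlantMetadata`, its fields, and the `example.org` URL pattern are hypothetical illustrations, not the schema used in the author’s applications.

```python
from dataclasses import dataclass
from urllib.parse import quote

# A subset of Linnaean ranks, from most general to most specific.
RANKS = ("kingdom", "family", "genus", "species")


@dataclass(frozen=True)
class PlantMetadata:
    """Hypothetical semantic record attached to one virtual plant object."""
    kingdom: str
    family: str
    genus: str
    species: str        # specific epithet, e.g. "grandiflorum"
    common_name: str

    def binomial(self) -> str:
        # Binomial nomenclature: capitalized genus + lowercase epithet.
        return f"{self.genus.capitalize()} {self.species.lower()}"

    def lineage(self) -> list[str]:
        # Path through the taxonomy, usable as an ontology key.
        return [getattr(self, rank) for rank in RANKS]

    def knowledge_url(self, base: str = "https://example.org/field-guide/") -> str:
        # Hypothetical knowledge-store endpoint keyed by the binomial name.
        return base + quote(self.binomial().replace(" ", "_"))


trillium = PlantMetadata("Plantae", "Melanthiaceae", "Trillium",
                         "grandiflorum", "large-flowered trillium")
print(trillium.binomial())       # Trillium grandiflorum
print(trillium.knowledge_url())  # https://example.org/field-guide/Trillium_grandiflorum
```

Keying the lookup on the binomial name rather than the common name is the point of the taxonomy: common names vary by region, while the Linnaean name is a stable identifier across knowledge stores.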

Data visualization is an established method for communicating the meaning and context of large datasets. Typical geographical information system (GIS) datasets are presented as abstract dots on a 2D map, which tend to lack emotional engagement; they are emotionally “cold.” They do, however, facilitate exploration, pattern recognition, hypothesis generation, collaboration, and insight. When the data sources are real-time feeds from sensors in the real environment, such as data recording a hurricane approaching the shores of Florida, we can see patterns in the spatiotemporal data over time: the path, speed, rainfall, and storm surge associated with all hurricanes approaching Florida. From these, we can build models and simulations to forecast future impacts and to inform our collective decisions and responses. At that point, the visualization becomes a powerful decision support tool and offers insight into future unknowns.

A game engine developer, Epic Games (Unreal Engine), and a GIS developer, the Environmental Systems Research Institute (ESRI), have recently collaborated to create the ArcGIS Maps SDK for Unreal Engine (Epic Games, 2023; ESRI, 2023), which exploits the powerful graphical, immersive, extended reality capabilities of Unreal Engine to visualize and interact with large, accurate GIS datasets and real-time data feeds. It is now easier to ingest terrain, waterbody, forest, and ecological biosphere data to produce 3D GIS models as immersive, interactive, and embodied experiences. Companies such as Blackshark.ai Inc. (Blackshark.ai, 2023), Cesium (Cesium, 2023), and NVIDIA with Earth 2 (Huang, 2021) are rapidly creating 3D models of Earth. These models may evolve into future digital twins of Earth systems that will be important for scientists to better understand the state of the natural world (Gritz, 2022). Such applications, designed as digital twins, may prove valuable beyond virtual field trips for informal learning (Harrington, 2008, Harrington, 2009, Harrington, 2011, Harrington, 2012, Harrington, 2019, Harrington, 2020a, Harrington, 2020b, Harrington, 2020c, Harrington, 2021a, Harrington, 2021b), wherever geospatial information about the natural environment is required.

The user experience design for these virtual field trip applications had to support the learners’ objectives. Ethnographic observations of real field trips provided a use case for the design, demonstrating the importance of high information fidelity, high graphical fidelity, and high visual fidelity combined with free choice in exploration and interactivity to access the educational material at moments of interest (Harrington, 2008; Harrington, 2009; Harrington, 2011; Harrington, 2012). These moments are salient signals or events triggered by the environment: teachable moments (Bentley, 1995). Informal learning is self-directed, unlike formal education, which represents someone else’s learning objectives. The key observation was that each individual was curious and motivated to learn about the natural world in their own way. There was excitement, awe and wonder, interaction, and questioning. At the same time, each child’s attention was captured by slightly different salient signals or events in the environment, or all of the children responded to a very strong salient signal or event that was unexpected and surprising, such as finding a salamander when none was expected (Harrington, 2009). This research is based on the assumption that we all seek to understand our world and that, given the chance and the right tools, we will all do so.

Information fidelity (Harrington et al., 2021b) is a requirement for all digital twins and for immersive informal learning applications as well, since the transmitted signals (i.e., graphical fidelity) represent the meaning (i.e., information fidelity) and are perceived as real (i.e., visual fidelity). Information fidelity is not graphical fidelity, but it may produce graphical fidelity as a byproduct of photorealistic representations of the natural world. The reverse does not hold: the films Avatar (Cameron, 2009) and Avatar: The Way of Water (Cameron, 2022) are photorealistic but completely fictional. Photorealistic applications produce high graphical fidelity and high visual fidelity but do not necessarily have high information fidelity. Conversely, schematic representations of the world, such as air traffic control displays, have low graphical and visual fidelity but the high information fidelity required for correct perception of the situation and fast, error-free decisions. When the photorealistic model is a byproduct of the accuracy of the data, it has both high information fidelity and high visual fidelity, and informal learning may occur through direct observation, perception, experience, and interaction with the virtual models. If those models are connected to accurate semantic metadata, they can be made interactive to expose correct facts, concepts, and stories, and if these are presented in context, informal learning may occur. This interaction results in new knowledge about the environment, dynamically changing perception of, and sensitivity to, those signals in future encounters. Over time and with exposure, the connections between environmental signals and their meaning are strengthened. This new knowledge is often quickly shared through social networks, spreading the information to those who have not encountered the environmental signal directly.

The main goal of the creative works reported here was to produce applications that were both beautiful and functional and that could serve as accurate simulations supporting the informal learning that occurs on a field trip in nature (Harrington, 2008). The main research focus was on understanding the design, development, and evaluation of immersive informal learning applications. With game engines used as general-purpose visualization tools, the design process became an extension of traditional user-centered design that included domain experts, stakeholders, educators, and learners in an iterative, multidisciplinary, co-creative process called the expert-learner-user-experience (ELUX) design process (Harrington et al., 2021a). This process was critical for locating and identifying the plants and for capturing the details required to construct accurate 3D plant models. Photographs of each part of the plants, including leaves, petals, stamens, and pistils, recordings of height and spread, and the plant inventory and population densities by geolocation collected in fieldwork were all combined to increase information fidelity and visual fidelity (Harrington et al., 2021a). The real-time interactive computer graphics of AR and VR constrained the models to highly efficient low-polygon models, without sacrificing salient botanical features. However, with the optimizations in Epic Games Unreal Engine 5.1 and its Nanite virtualized geometry, high-polygon plant models are now viable in large open-world virtual environments without the simplification that past runtime constraints required.

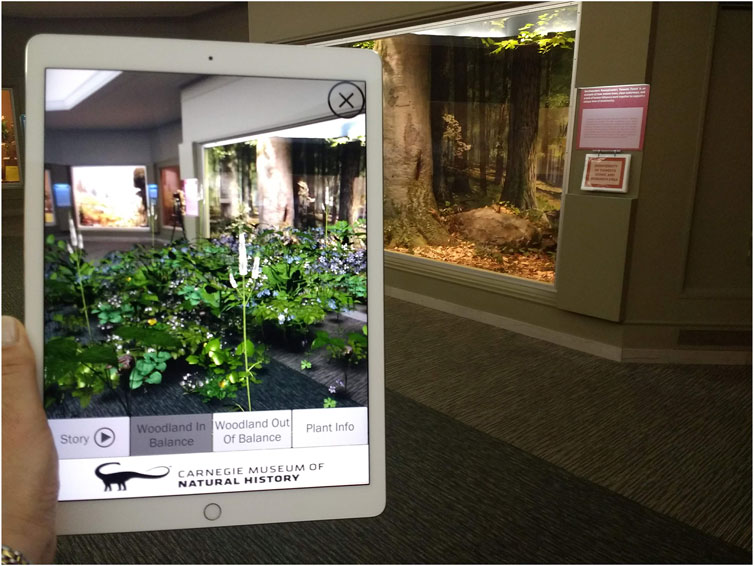

The learning and emotional impacts were evaluated in Institutional Review Board (IRB)-approved studies using mixed-methods experimental research designs, with results published in journals and conference proceedings. Since 2016, the Harrington Lab at the University of Central Florida (UCF) has produced two major projects. Both the Virtual UCF Arboretum application (Figure 1) and the AR Perpetual Garden Apps (Figure 2) are based on data visualization methods similar to those used to produce the Virtual Trillium Trail, an earlier project. The creative works may be found and downloaded from the Harrington Lab website (https://the-harrington-lab.itch.io).

FIGURE 1. Virtual UCF Arboretum image of the environment. Inset is the author using the HTC VIVE VR headset and the Infinadeck omnidirectional treadmill configuration. Harrington, Maria. “Digital Twins of Nature Demo Reel I/ITSEC 2022 The Harrington Lab.” Video, 2.03 minutes, November 28, 2022. URL: https://youtu.be/FP9Ottj7cCA.

FIGURE 2. AR Perpetual Garden App in use in the Carnegie Museum of Natural History. Harrington, Maria. “AR Perpetual Garden App.” Video, 3.21 minutes, September 29, 2018. URL: https://youtu.be/maJ7iOu2BT4

The Virtual Trillium Trail Project started in 2003 as a modification of Unreal Tournament. Terrain and waterbody data from ESRI were imported after conversion to file formats that Unreal could recognize and visualize. Alpha maps in Unreal were used to define and visualize the plant inventories and population densities by geolocation. As part of a Ph.D. dissertation in information science, the project used non-experimental ethnographic studies and an iterative co-design process to build, test, and refine the application (Harrington, 2008). Once the application was designed, built, and tested, experimental research studies were used to measure the usability, emotional, behavioral, and learning outcomes influenced by design factors.
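
The alpha-map idea can be illustrated with a minimal sketch, assuming that plant observations arrive as (x, y) projected coordinates in meters within a known rectangular tile and that the engine accepts an 8-bit grayscale mask whose intensity encodes relative density. The function name, grid size, and normalization are illustrative assumptions, not the original 2003 Unreal Tournament workflow.

```python
def density_alpha_map(points, extent, width, height):
    """Bin geolocated plant observations into an 8-bit alpha map.

    points: iterable of (x, y) in projected meters (assumed).
    extent: (min_x, min_y, max_x, max_y) of the terrain tile.
    Returns a list of rows (north row first) with values 0-255.
    """
    min_x, min_y, max_x, max_y = extent
    counts = [[0] * width for _ in range(height)]
    for x, y in points:
        if not (min_x <= x < max_x and min_y <= y < max_y):
            continue  # skip observations outside this tile
        col = int((x - min_x) / (max_x - min_x) * width)
        # Image rows run top-down, so flip the y axis.
        row = height - 1 - int((y - min_y) / (max_y - min_y) * height)
        counts[row][col] += 1
    peak = max(max(r) for r in counts) or 1  # avoid dividing by zero
    # Scale so the densest cell is fully opaque (255).
    return [[round(255 * c / peak) for c in r] for r in counts]


observations = [(1.0, 1.0), (1.5, 1.2), (8.0, 9.0)]
alpha = density_alpha_map(observations, (0, 0, 10, 10), width=2, height=2)
print(alpha)  # [[0, 128], [255, 0]]
```

Normalizing to the densest cell keeps relative densities intact; an absolute scale (plants per cell mapped to a fixed range) would be the alternative if several tiles must share one legend.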

The first pilot study directly compared the real and virtual environments to establish a baseline control condition for external validity. The pilot study recruited volunteers (n = 12), aged 8 to 10 years, from a suburban public elementary school in the greater Pittsburgh area. The ethnographic component of this pilot study showed that more learning events were triggered in the real environment by salient signals that were not programmed into the virtual field trip, such as the surprise salamander find (there was no virtual salamander to find!) (Harrington, 2009). A within-subject design counterbalanced the two experimental conditions, real and virtual, which two groups experienced in reverse order, allowing a meaningful direct comparison in the post-survey. When the educational content evaluated was restricted to content present in both environments (i.e., only plants), the learning activities (e.g., map annotations of salient findings) did not differ between the real (M = 2.75, SD = 1.96) and the virtual (M = 2.83, SD = 3.43) environments, F (1,11) = 0.00, p = 0.95 (Harrington, 2011). A Wilcoxon test of the 5-point Likert scale post-survey results likewise showed no difference between the users’ evaluations of the two experiences. Users’ evaluations of exploration, desire to create, excitement, curiosity, desire to re-experience, calmness, desire to share, awe and wonder, beauty, frustration, and disinterest did not differ between the virtual and the real field trip (Harrington, 2009). This suggests that, at a subjective level, the two environments were experienced in similar ways. There appears to be evidence of priming, transfer, and reinforcement, suggesting that the best educational practice is to use virtual and real experiences together to increase total learning outcomes.
However, the small sample size and low power reduce confidence in this conclusion.
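
The counterbalancing described above can be sketched as follows, assuming an even split into two groups that experience the real and virtual conditions in opposite orders, so that order effects such as practice or priming are spread across both conditions. The participant IDs and the shuffle seed are illustrative.

```python
import random


def counterbalance(participants, conditions=("real", "virtual"), seed=0):
    """Assign each participant an order: half get A-B, half get B-A."""
    ids = list(participants)
    random.Random(seed).shuffle(ids)  # randomize group membership
    half = len(ids) // 2
    forward = tuple(conditions)
    reverse = tuple(reversed(conditions))
    return {pid: (forward if i < half else reverse) for i, pid in enumerate(ids)}


schedule = counterbalance(range(12))
orders = list(schedule.values())
print(orders.count(("real", "virtual")), orders.count(("virtual", "real")))  # 6 6
```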

The larger empirical study used a factorial design with a two-way ANOVA crossing visual fidelity and navigational freedom. The high-visual-fidelity condition used photorealistic plants with high information fidelity (the immersion dimension), and the high-navigational-freedom condition supported 360° free choice in navigating and exploring the environment (the embodiment and embodied presence dimensions). These two design dimensions are fundamental to all virtual environments that are large open worlds representing geospatial information. While technology may change and improve levels of immersion and embodiment, the design dimensions of visual fidelity and navigational freedom will remain.

Each independent variable was set to a high or a low level within a single system, in a planned orthogonal contrast of system design parameters for rigorous internal validity. The resulting four combinations were high visual fidelity with high navigational freedom, high visual fidelity with low navigational freedom, low visual fidelity with high navigational freedom, and low visual fidelity with low navigational freedom, with all other independent variables held constant. This design made it possible to measure the separate and interactive effects of the two independent variables on the dependent variables: learning, emotional reaction, behavioral, and usability outcomes.
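
The four cells and the planned orthogonal contrasts can be written out explicitly. This sketch assumes the conventional ±1 effect coding for high and low levels; the variable names are illustrative.

```python
from itertools import product

# Levels coded +1 (high) and -1 (low) for each factor.
FACTORS = {"visual_fidelity": (+1, -1), "navigational_freedom": (+1, -1)}

# The four cells of the 2 x 2 design.
cells = list(product(FACTORS["visual_fidelity"], FACTORS["navigational_freedom"]))

# Contrast weights over the cells for each effect.
vf = [v for v, n in cells]           # main effect of visual fidelity
nf = [n for v, n in cells]           # main effect of navigational freedom
vf_x_nf = [v * n for v, n in cells]  # interaction contrast


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


# Each contrast sums to zero, and all pairs are mutually orthogonal.
for contrast in (vf, nf, vf_x_nf):
    assert sum(contrast) == 0
assert dot(vf, nf) == dot(vf, vf_x_nf) == dot(nf, vf_x_nf) == 0

print(cells)    # [(1, 1), (1, -1), (-1, 1), (-1, -1)]
print(vf_x_nf)  # [1, -1, -1, 1]
```

Because the three contrasts are orthogonal, each one tests a separate question about the four cell means, which is what allows the main effects and the interaction to be estimated independently.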

Several dependent variables were measured as ratio or interval scale data. Learning was measured as the percent change between a pre-test and a post-test of facts and concepts (knowledge gained). Emotional reactions and subjective evaluations were measured with a post-survey using a 5-point Likert scale across many items of interest, including beauty, awe and wonder, curiosity, calmness, excitement, inquiry, learning, frustration, desire to create, desire to share, and presence. Behavior was measured across several dimensions of free choice in engaging with the system’s design features, such as total time in the condition (time), count of fact cards selected (fact inquiry), and count of audio stories selected (salient events).
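
The text does not spell out the formula for “percent change.” A common reading, assumed in this sketch, is the change relative to the pre-test score; if the studies instead reported the gain in percent-correct (percentage points), the computation would be a plain subtraction.

```python
def percent_change(pre: float, post: float) -> float:
    """Relative change from pre-test to post-test score, in percent (assumed definition)."""
    if pre == 0:
        raise ValueError("percent change is undefined for a pre-test score of 0")
    return (post - pre) * 100.0 / pre


print(percent_change(50, 70))  # 40.0
```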

This study recruited volunteers (n = 64) from the same population as the pilot study and randomly assigned them to one of the four conditions. Visual fidelity had a strong, significant effect on learning outcomes, F (1,60) = 10.54, p = 0.0019, and navigational freedom showed a trend toward an effect on learning outcomes, F (1,60) = 2.71, p = 0.105. The combination of high visual fidelity and high navigational freedom produced higher knowledge gains on tests (M = 37.44% increase in test scores, SD = 13.88) than the combination of low visual fidelity and low navigational freedom (M = 20.93% increase in test scores, SD = 13.36), and there was a significant interaction between visual fidelity and navigational freedom on learning outcomes, F (1,60) = 4.85, p = 0.0315. The study supports the importance of these design choices, and of their combination, for learning outcomes, with all other factors held constant. The results strongly support using high visual fidelity and high navigational freedom together when learning outcomes are desired (Harrington, 2012).
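
The reported p-values can be checked against the F statistics and their degrees of freedom. With 64 participants in a 2 × 2 between-subjects design, the error term has 64 − 4 = 60 degrees of freedom, and each effect has 1. The sketch below computes the upper-tail probability of the F distribution from the regularized incomplete beta function, evaluated with the standard continued fraction (as in Numerical Recipes), so it needs only the Python standard library. It is a checking aid under those assumptions, not the original analysis code.

```python
import math


def _betacf(a, b, x, max_iter=300, eps=3e-14):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the recurrence.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step of the recurrence.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def betai(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    # Use the symmetry I_x(a, b) = 1 - I_{1-x}(b, a) where it converges faster.
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def f_sf(f, df1, df2):
    """P(F > f) for an F distribution with (df1, df2) degrees of freedom."""
    return betai(df2 / 2.0, df1 / 2.0, df2 / (df2 + df1 * f))


# Effects from the 2 x 2 ANOVA (df1 = 1, df2 = 64 - 4 = 60).
for label, f in [("visual fidelity", 10.54),
                 ("navigational freedom", 2.71),
                 ("interaction", 4.85)]:
    print(f"{label}: p = {f_sf(f, 1, 60):.4f}")
```

The printed values agree with the published p = 0.0019, 0.105, and 0.0315 to within the rounding of the F statistics.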

A two-tailed Pearson correlation analysis (n = 64) showed several significant relationships. Knowledge gained was positively correlated with visual fidelity (r = 0.367, p < 0.01), with salient events (stories) (r = 0.455, p < 0.01), and with fact inquiry (accessible facts) (r = 0.374, p < 0.01), further supporting the importance of the design for education (Harrington, 2008).
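
For reference, a minimal sketch of the Pearson coefficient and of the usual t statistic for testing whether a correlation differs from zero (df = n − 2). Applied to the reported coefficients with n = 64, it gives t values of roughly 3.1, 4.0, and 3.2, all above the two-tailed 0.01 critical value of about 2.66 for 62 degrees of freedom, consistent with the reported p < 0.01. The function names are illustrative.

```python
import math


def pearson_r(x, y):
    """Sample Pearson product-moment correlation."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length samples with n >= 3")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic with df = n - 2 for testing H0: rho = 0."""
    return r * math.sqrt((n - 2) / (1.0 - r * r))


# Reported correlations with knowledge gained (n = 64, df = 62).
for label, r in [("visual fidelity", 0.367), ("stories", 0.455), ("fact inquiry", 0.374)]:
    print(f"{label}: t(62) = {r_to_t(r, 64):.2f}")
```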

A two-tailed Spearman rank-order correlation analysis (n = 64) was run on all of the survey data to evaluate relationships between learning and subjective evaluations of the experiences. Knowledge gained was positively correlated with awe and wonder (rs = 0.273, p < 0.05), indicating the importance of designing that emotional response into the applications. Beauty was correlated with awe and wonder (rs = 0.506, p < 0.01), suggesting a complex, possibly causal, chain of relationships between the aesthetics of the experience and the emotional responses that enhance informal learning activity (Harrington, 2008).
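
Spearman’s coefficient is the Pearson correlation computed on ranks. Likert responses produce many ties, so the sketch below gives tied values the average of the ranks they span, which is the standard tie handling. The ratings in the usage example are hypothetical and for illustration only.

```python
import math


def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rs(x, y):
    """Spearman's rho as the Pearson correlation of tie-averaged ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical 5-point Likert ratings, for illustration only.
beauty = [5, 4, 4, 3, 5, 2]
awe = [5, 4, 3, 3, 4, 1]
print(round(spearman_rs(beauty, awe), 3))
```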

Beauty was also correlated with other self-reported rankings of emotions and subjective evaluations of the experiences in the post-survey, suggesting system-wide effects of beauty on other emotions important for learning. Beauty was positively correlated with inquiry (rs = 0.420, p < 0.01), learning (rs = 0.376, p < 0.01), curiosity (rs = 0.305, p < 0.05), calm (rs = 0.450, p < 0.01), excitement (rs = 0.377, p < 0.01), desire to create (rs = 0.456, p < 0.01), desire to share (rs = 0.434, p < 0.01), and presence (rs = 0.589, p < 0.01) (Harrington, 2008).

The AR Perpetual Garden App was started in 2017 and released in 2018 as a virtual diorama. It was published under the Carnegie Museum of Natural History on the Apple iTunes and Google Play Android stores in 2018. In an iterative co-design process, users, museum curators and educators, botanists, and scientists worked together to create the AR app design and to vet and approve all content. An AR virtual forest appears to cascade out of the real diorama and cover the gallery floor, effectively creating a virtual woodland springtime bloom inside the museum. As one of the first apps to use SLAM technology (the ARCore and ARKit SDKs), it visualized a spatial 3D forest understory. The design shows two data visualizations of the woodland understory, one in balance and one out of balance, and the user can toggle between them for comparison and contrast (Harrington, et al., 2019). Each of the 25 plant 3D models is accurate in detail, acting as a 3D botanical model and museum exhibit that conveys information (Harrington M., 2020). The app is designed to trigger a salient event and a surprise and to invoke questions. The goal is to communicate a complex scientific educational narrative about trophic cascades. Facts, concepts, and story are accessible through the app’s interactive interface.

Several IRB-approved studies have been conducted to gather data on usability, emotions, and learning outcomes with this AR app. The 2019 study used a between-subject design to test learning outcomes, perceptions of learning, and usability in the Hall of Botany at the Carnegie Museum of Natural History. Volunteer participants (n = 56) were recruited from the normal museum visitor population. Adults and parent–child pairs, ranging in age from 5 to 56, were assigned to each condition so as to maintain equal proportions of gender and of parent–child pairs, a block design based on convenience. There were two conditions: the experimental condition (AR), which used the AR app, and the control condition (No-AR), which used a booklet much like any museum catalog. Both conditions contained the same educational concepts and facts, for internal validity.
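
The text does not describe the exact assignment rule, so the following is only one plausible approach, assumed here, to keeping conditions balanced within blocks under convenience sampling: rotate through the conditions separately within each block as visitors arrive. The block keys and participant IDs are hypothetical.

```python
from collections import defaultdict


def block_assign(participants, conditions=("AR", "No-AR")):
    """Alternate conditions within each block so block proportions stay equal.

    participants: list of (participant_id, block_key) in arrival order, where
    block_key is a hypothetical stratum such as ("F", "parent-child").
    """
    next_index = defaultdict(int)  # per-block position in the rotation
    assignment = {}
    for pid, block in participants:
        i = next_index[block]
        assignment[pid] = conditions[i % len(conditions)]
        next_index[block] = i + 1
    return assignment


arrivals = [("p1", ("F", "adult")), ("p2", ("F", "adult")),
            ("p3", ("M", "parent-child")), ("p4", ("M", "parent-child"))]
print(block_assign(arrivals))
# {'p1': 'AR', 'p2': 'No-AR', 'p3': 'AR', 'p4': 'No-AR'}
```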

Learning behavior differed between the AR and No-AR conditions. In the AR condition, children acted as they would in a real field of flowers, not a museum. They were observed bending down to touch the virtual flowers and moving excitedly about the entire gallery hall, dragging parents and grandparents along to share their finds. These observations document behavior indicating a high sense of presence, engagement, and excitement (Harrington, March 2020). Immersive embodied experiences using contrasting visualizations in context appear to enhance context sensitivity (Koster & Kartner, 2018), which is critical for triggering a moment of insight, or Gestalt. Such designs exploit the affordances of immersive AR to quickly communicate complex expert domain knowledge to a novice. The app is unique in offering an immersive, multimodal, interactive, embodied learning experience that elicits behavior indicative of presence, even in AR (e.g., an AR Holodeck).

Both conditions showed similar actual learning gains, of roughly 40% to 47% (Harrington, 2020b). In this study, actual learning was measured as the percent change between pre- and post-test scores on facts and concepts. The post-survey measured perceived learning on a 7-point Likert scale; this measure is subjective and distinct from actual learning. An independent two-sample t-test revealed no difference in actual learning between the AR condition (M = 40.13%, SD = 24.62) and the No-AR condition (M = 46.72%, SD = 30.54), t (54) = 0.89, p = 0.38. However, perceived learning differed significantly between the two conditions in the post-survey data: ratings in the AR condition (Mdn = 6, SD = 1.60) were higher than in the No-AR condition (Mdn = 4, SD = 1.32), and a Mann–Whitney U-test showed the AR condition (mean rank = 34.95) to be higher than the No-AR condition (mean rank = 22.05), U = 572.50, z = 3.01, p < 0.01. Dwell times, often used as a proxy measure of engagement, showed no difference between conditions: an independent two-sample t-test revealed no difference between the AR condition (M = 8.98 min, SD = 6.72 min) and the No-AR condition (M = 8.15 min, SD = 3.60 min), t (54) = 0.58, p = 0.57 (Harrington, 2020c).
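
The two t statistics above can be reproduced from the summary statistics alone. This sketch assumes a pooled-variance Student’s t test and two groups of 28 (the AR-only subsample is n = 28, leaving 28 of the 56 participants in the No-AR group). With equal group sizes the pooled and Welch t statistics coincide, so the check does not depend on which variant was used; the negative sign only reflects that the AR mean is subtracted first.

```python
import math


def pooled_t(m1, s1, n1, m2, s2, n2):
    """Student's independent two-sample t (pooled variance) from summary stats."""
    df = n1 + n2 - 2
    sp2 = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df  # pooled variance
    se = math.sqrt(sp2 * (1.0 / n1 + 1.0 / n2))
    return (m1 - m2) / se, df


# Actual learning (% change): AR vs. No-AR, assuming 28 per group.
t_learn, df = pooled_t(40.13, 24.62, 28, 46.72, 30.54, 28)
# Dwell time (minutes): AR vs. No-AR.
t_dwell, _ = pooled_t(8.98, 6.72, 28, 8.15, 3.60, 28)
print(df, round(t_learn, 2), round(t_dwell, 2))  # 54 -0.89 0.58
```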

A Spearman rank-order correlation analysis of the AR-only condition dataset (n = 28) investigated the relationships between each AR app design feature (story, data visualizations, 3D flowers, bioacoustics, plant info, and usability, i.e., ease of use) and both actual learning (percent change in test scores) and perceived learning (survey ranks). Actual learning was positively and significantly correlated with story (rs = 0.41, p = 0.03) and plant info (rs = 0.41, p = 0.03), indicating a positive relationship between these design features and actual learning outcomes. In contrast, perceived learning was positively and significantly correlated with different design features: 3D flowers (rs = 0.52, p = 0.01) and ease of use (rs = 0.54, p = 0.01). Importantly, the AR design features correlated with perceived learning differ from those correlated with actual learning (Harrington, 2020d).

Significant positive correlations exist between all of the immersive AR design features and AR story, suggesting that these features may amplify the relationship between story and actual learning. The AR data visualization scenarios feature showed a positive correlation with story (rs = 0.45, p = 0.02), with AR bioacoustics (rs = 0.53, p = 0.00), and with AR flowers (rs = 0.38, p = 0.00). Further significant positive correlations among the AR app design features suggest a deeper layer of amplification of learning outcomes: AR data visualization scenarios and AR bioacoustics (rs = 0.53, p < 0.05), AR bioacoustics and AR flowers (rs = 0.69, p < 0.01), and AR flowers and AR data visualization scenarios (rs = 0.62, p < 0.01) (Harrington, 2020b).

The post-survey responses were compared between conditions using the independent-samples Mann–Whitney U-test. The surveys asked participants to rank their impressions of, or emotional reactions to, the different conditions. Beauty (Mdn = 6, p < 0.01), awe and wonder (Mdn = 6, p < 0.001), curiosity (Mdn = 6, p < 0.01), and want to visit the real (Mdn = 6, p < 0.05) were all ranked significantly higher (modes of 7) in the AR condition than in the No-AR condition. Need to know more (Mdn = 4, p = 0.06) showed a trend toward significance. Thus, emotional reactions to an AR app used for learning differ from reactions to a booklet. These emotions are considered important for motivating learning, demonstrating the impact of such a design.
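
As a consistency check on the perceived-learning comparison reported earlier (mean ranks 34.95 vs. 22.05), the Mann–Whitney U statistic can be recovered from a group’s mean rank, and its normal approximation computed. This sketch assumes 28 participants per group and omits the tie correction.

```python
import math


def u_from_mean_rank(mean_rank, n1):
    """Mann-Whitney U for group 1, recovered from its mean rank."""
    rank_sum = mean_rank * n1
    return rank_sum - n1 * (n1 + 1) / 2.0


def u_to_z(u, n1, n2):
    """Normal approximation to U, without a correction for ties."""
    mu = n1 * n2 / 2.0
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    return (u - mu) / sigma


# Perceived learning: AR mean rank 34.95 vs. No-AR 22.05, assuming 28 per group.
u_ar = u_from_mean_rank(34.95, 28)
u_no_ar = u_from_mean_rank(22.05, 28)
z = u_to_z(u_ar, 28, 28)
print(round(u_ar, 1), round(z, 2))
```

U comes out at about 572.6, matching the reported 572.50 up to rounding of the mean ranks, and the two U values sum to 28 × 28 = 784 as they should. The uncorrected z is about 2.96, slightly below the reported 3.01, which is what one would expect if the published value applied the usual correction for tied ranks, as is common with Likert data.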

There were several statistically significant positive correlations between actual learning and post-survey responses. Actual learning was positively correlated with ranks of understanding (rs = 0.516, p = 0.005), important (rs = 0.456, p = 0.015), and want to visit the real (rs = 0.530, p = 0.004). Thus, when actual learning occurs, it appears to shift learner efficacy and attitudes and to change desired future behavior. This may also indicate how an AR app could shift perceptions of the real world.

The subjective evaluation of beauty showed statistically significant positive correlations with perceived learning (rs = 0.582, p = 0.001), imagination (rs = 0.444, p = 0.018), awe and wonder (rs = 0.689, p < 0.001), surprise (rs = 0.433, p = 0.021), understanding (rs = 0.555, p = 0.002), insight (rs = 0.493, p = 0.008), and want to visit the real (rs = 0.769, p < 0.001), but not with actual learning gains. Interestingly, beauty also showed statistically significant positive correlations with design features of the AR app: the AR data visualization scenarios (rs = 0.389, p = 0.041), AR flowers (rs = 0.496, p = 0.007), and AR plant info (rs = 0.556, p = 0.002).

These findings indicate a complex, multidimensional relationship between the immersive and embodied AR app design features, the emotional responses they evoke, and the human reactions that increase actual learning outcomes.

The Virtual UCF Arboretum Project, started in 2016, is unique in that it visualized field study data stored in an ESRI GIS database of native plants and flowers to represent 100 hectares (247 acres) of the real UCF Arboretum. As a large open world created in Epic Games Unreal Engine, it supports free exploration and navigation in high-fidelity photorealism; these combined design factors amplify immersive embodied experiences in AR and VR applications. Each of the 35 plant 3D models is botanically accurate in photorealistic detail, scale, and morphology to match the real native species, producing high information fidelity, graphical fidelity, and visual fidelity. True to the GIS map data, ten natural communities are visualized to match the field study data in terms of real plant inventories and plant population densities per location, creating a digital twin (Harrington, et al., 2021a; Harrington et al., 2021b). As an early digital twin of a natural environment, it achieves high information fidelity through the accuracy of its plant models, plant inventory, and plant population distribution data, producing a high graphical and high visual fidelity experience. The design not only visualizes the data but also makes all objects interactive and, using metadata, connects the semantic information of each virtual object and each virtual plant to a web-based field guide with educational information. The result is an interactive user interface that connects the digital twin of nature to online educational materials to support informal learning. Accurate, geolocated bioacoustics of birds and insects, together with natural environmental sounds, support the multimodal, multisensory, immersive embodied experience. The entire model is currently being updated using Epic Games RealityCapture and photogrammetry techniques for Unreal Engine 5.1 to improve graphical and visual fidelity (Harrington, et al., 2022).
To enhance immersion and embodiment, the entire virtual environment has been integrated with an Infinadeck omnidirectional treadmill and an HTC VIVE VR headset. A new study with my graduate student is in progress to measure the effects of different levels of combined immersion and embodiment on learning, emotion, and other human reactions to the experiences (Martin, 2023).
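
The step of turning field-plot densities into placed plant instances, as in the Virtual UCF Arboretum, can be illustrated with a minimal sketch. It assumes a rectangular plot in meters and a density in plants per square meter for each species, and it scatters uniformly random positions. Real placement would follow the GIS community polygons and would likely use a spacing rule such as Poisson-disk sampling; the species names, bounds, and densities here are hypothetical.

```python
import random


def scatter_plants(plot, species_density, seed=42):
    """Place plant instances in a rectangular plot from per-species densities.

    plot: (min_x, min_y, max_x, max_y) in meters (hypothetical bounds).
    species_density: {species_name: plants per square meter}.
    Returns a list of (species_name, x, y) instances.
    """
    min_x, min_y, max_x, max_y = plot
    area = (max_x - min_x) * (max_y - min_y)
    rng = random.Random(seed)  # seeded for reproducible layouts
    instances = []
    for species, density in species_density.items():
        count = round(density * area)  # expected number of plants in this plot
        for _ in range(count):
            instances.append((species,
                              rng.uniform(min_x, max_x),
                              rng.uniform(min_y, max_y)))
    return instances


plants = scatter_plants((0, 0, 20, 10), {"Species A": 0.5, "Species B": 0.05})
print(len(plants))  # 110
```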

In all three systems, immersive embodied interactive experiences depend on design factors to produce learning outcomes. It is a complex, multidimensional design space. Known design factors include information fidelity, graphical fidelity, visual fidelity, multimodal signals, immersive displays, free choice in navigation, embodiment, embodied presence, salient signals and salient events, and interaction with semantically accessible knowledge, facts, and story in context while experiencing these virtual environments.

The Virtual Trillium Trail application demonstrated an interaction effect between visual fidelity (a dimension of immersion) and navigational freedom (a dimension of embodiment) on learning outcomes. The combination of high visual fidelity and high navigational freedom nearly doubled learning gains over the combination of low visual fidelity and low navigational freedom. The study also showed positive correlations between beauty and the emotional reaction of awe and wonder, and awe and wonder were correlated with higher learning gains. Beauty was also positively correlated with other emotions and evaluations widely accepted as important for supporting learning outcomes, such as calmness, excitement, curiosity, desire to share, and desire to create. Small handheld AR apps, such as the AR Perpetual Garden App, showed that access to facts and story is required for learning to occur. The AR study also showed that the immersive features are correlated with story, possibly amplifying its effects. Beauty was correlated with AR app design features that relate to motivation, efficacy, desired behavior, and shifts in attitudes about the real world.

Because informal learning depends on interaction with environmental signals, real or virtual, the virtual environments constructed must be accurate and must draw facts and story from trusted sources. Their graphical and visual representations should communicate the information accurately, so as not to misrepresent it, while invoking the emotional reactions accepted as important for engagement, motivation, and learning outcomes.

Since 2008, computer hardware and software have advanced to replicate multisensory signals that now approach the fidelity of real environmental signals. With photogrammetry, computer graphics rendering is approaching real-world visual fidelity, and fidelity in the other sensory dimensions is increasing as well. Surround-sound theaters, simulated smells, and full-body haptic devices all combine to increase the aggregate sensory fidelity of these applications across modalities. Industrial digital twins offer high trust and accuracy because internal controls, in the form of policies, procedures, and protocols, hard-code security into the review and publication of trustworthy data and information. The metaverse does not offer such controls. These are all just tools.

An artist, designer, or developer may use these tools to communicate, evoke emotions, shift attitudes, and change behavior. Only when high information fidelity is combined with high immersion and embodiment will genuine educational learning occur in them. Otherwise, the result is fantasy entertainment, a sales pitch, or misinformation and propaganda. We cannot distinguish real from virtual in these systems on the basis of visual fidelity alone.

Libraries are the historical cultural stores of knowledge, freely open to the public and widely used for learning. Digital libraries hold accurate and trusted sources of knowledge that can be connected to applications for learning. Libraries offer discoverable facts and story, as data, multimedia assets, and book resources, that can be trusted as accurate, protected, and secure. These sources of knowledge should be connected to virtual objects and metaverse applications for learning, whether distributed to AR apps or VR headsets or used to enhance immersive exhibits in museums.

A digital twin of the natural world, deployed as an artificial artifact in immersive AR and VR metaverse applications, could provide a platform for informal learning. Evolution has programmed, indeed hard-coded, into us the desire to understand our world; the environment and the organism co-evolved (Reed & Jones, 1977). If such a platform is designed to support individual exploration and inquiry, it can support learning. These are a few of the ideas that drove the creation of this body of work. The model and equations framing this idea were proposed in the simulated ecological environments for education (SEEE) tripartite model (Harrington, 2008), which describes the dynamic feedback framework and the interactions among the human, the environment, and the user interface, as well as the signals transmitted and received and the feedback paths for changes in states of knowledge. Its design factors are dimensions representing the visual fidelity of the sensed environment (and, more broadly, multisensory fidelity), the fidelity of movement (e.g., embodied navigational freedom), and the fidelity of interaction (e.g., natural interfaces). The theoretical framework is a simple system network with only three nodes and their connecting arcs. A human with no knowledge of the environment is a novice; one who possesses complete knowledge of the entire environment is an expert. The human interacts with the environment, real or virtual, to learn in a feedback loop supported by a user interface connected to authenticated and trusted stores of knowledge. Such applications of virtual nature make knowledge beautiful.

The data analyzed in this study are subject to licenses/restrictions: datasets will be made available on request. Requests to access these datasets should be directed to maria.harrington@ucf.edu.

The studies involving human participants were reviewed and approved by the University of Central Florida Institutional Review Board, IRB ID: STUDY00000434: AR vs. No-AR Museum Study, and University of Pittsburgh Institutional Review Board PRO07020118, Subject: Simulated Ecological Environments for Education: A Tripartite Model Framework of HCI Design Parameters for Situational Learning in Virtual Environments Dissertation. Written informed consent to participate in this study was provided by the participants’ legal guardian/next of kin. Written informed consent was obtained from the individual(s) for the publication of any identifiable images or data included in this article.

The author confirms being the sole contributor of this work and has approved it for publication.

The Virtual UCF Arboretum development was partly funded by the Epic MegaGrant program 2020 and supported by the University of Central Florida Research Foundation GAP Fund Award, Orlando, FL, 2022. The AR Perpetual Garden App was partly funded by the Artist in Residence Powdermill Nature Reserve, Carnegie Museum of Natural History, Pittsburgh, PA 2018. This work was funded in part by the Richard King Mellon Foundation and the State Government of Salzburg, Austria, under project contract “Ecomedicine VR Physiology Lab.” The Virtual Trillium Trail was partly funded by the Idea Foundry Grant—The Entertainment and Ed Tech Business Accelerator Program, Pittsburgh, PA 2010. The Virtual UCF Arboretum project is under the direction of MH. It is an augmented reality (AR) and virtual reality (VR) collaboration between the Harrington Lab in the Nicholson School of Communication and Media and the UCF Arboretum and Department of Biology and the Landscape and Natural Resources team at the University of Central Florida.

The author would like to thank the UCF Director of Landscape and Natural Resources and Arboretum, Patrick Bohlen, and his team, Jennifer Elliott, Ray Jarrett, Amanda Lindsay, John Guziejka, and Rafael Pares, for their knowledge, support, and partnership in this collaboration, and also the UCF Digital Media students involved in the development effort: Zachary Bledsoe, Chris Jones, James Miller, and Thomas Pring, as well as those who helped on the website plant atlas: Kellie Beck and other students, including Seun (Oluwaseun) Ademoye, Yasiman Ahsani, Jeremy Blake, Bailey Estelle, Sebastian Hyde, Katherine Ryschkewitsch, Marc-hand Venter, and Aubri White. The author is deeply grateful to her graduate student, Fred E. Martin, Jr, for providing valuable feedback on this paper and for his current investigation of the impact of different immersion and embodiment hardware configurations on learning geospatial information, and to Matthew Hogan, CEO of M3DVR, for access to their Infinadeck omnidirectional treadmill for the study. The Virtual UCF Arboretum was released in October 2018 as an Unreal level and in November 2018 for the HTC Vive. The AR Perpetual Garden App was developed in part as an international collaboration between the Harrington Lab at the University of Central Florida, the Powdermill Nature Reserve at the Carnegie Museum of Natural History (CMNH), and the MultiMediaTechnology program of the Salzburg University of Applied Sciences, Austria. The multidisciplinary effort was developed with my partners, Dr. John W. Wenzel, Director, Powdermill Nature Reserve, CMNH, and Dr. Markus Tatzgern, FH-Professor, Head of Game Development and Mixed Reality, MultiMediaTechnology program of the Salzburg University of Applied Sciences, Austria. This work would not have been possible without the expertise of Bonnie Isaac at the CMNH and Martha Oliver at the Powdermill Nature Reserve. Undergraduate and graduate students were involved in the production of the app.
The author thanks Chris Jones, Zack Bledsoe, and Alexandra Guffey, who are undergraduate students at the University of Central Florida, for their work and also Radomir Dinic and Martin Tiefengrabner of the Salzburg University of Applied Sciences for their support during development.

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Ash, D. (2004). How families use questions at dioramas: Ideas for exhibit design. Curator Mus. J. 47, 84–100. doi:10.1111/j.2151-6952.2004.tb00367.x

Bentley, M. (1995). Carpe diem: Making the most of teachable moments. Sci. Activities 32 (3), 23–27. doi:10.1080/00368121.1995.10113190

Blackshark.ai (2023). Blackshark.ai website. Available at: https://blackshark.ai/ (Retrieved January 31, 2023).

Cameron, J. D. (2009). Avatar [film]. 20th Century Fox, Lightstorm Entertainment, Dune Entertainment, Ingenious Film Partners. Available at: https://thewaltdisneycompany.com/20th-century-studios-and-lightstorm-entertainments-avatar-the-way-of-water-debuts-at-no-1-with-441-6-million-worldwide/.

Cameron, J. D. (2022). Avatar: The Way of Water [film]. Lightstorm Entertainment, TSG Entertainment II. Available at: https://www.sssamiti.org/avatar-the-way-of-water-box-office-collection/.

Epic Games (2023). Epic Games website. Available at: https://www.unrealengine.com/marketplace/en-US/product/arcgis-maps-sdk/questions (Retrieved January 22, 2023).

ESRI (2023). ESRI website. Available at: https://developers.arcgis.com/unreal-engine/ (Retrieved January 22, 2023).

Gritz, J. R. (2022). Why marshlands are the perfect lab for studying climate change. Smithsonian Magazine. Available at: https://www.smithsonianmag.com/science-nature/marshlands-perfect-lab-studying-climate-change-180980992/ (Retrieved November 15, 2022).

Harrington, M. C. R. (2009). “An ethnographic comparison of real and virtual reality field trips to trillium trail: The salamander find as a salient event,” in Children, youth and environments: Special issue on children in technological environments. Editors N. G. Freier, and P. H. Kahn, 19, 74–101.

Harrington, M. C. R. (2008). Ph.D. Thesis: Simulated ecological environments for education (SEEE): A tripartite model framework of HCI design parameters for situational learning in virtual environments. Available at: http://d-scholarship.pitt.edu/8971/.

Harrington, M. C. R., Jones, C., and Peters, C. (2022). “Course on virtual nature as a digital twin: Botanically correct 3D AR and VR optimized low-polygon and photogrammetry high-polygon plant models,” in ACM Special Interest Group on Computer Graphics and Interactive Techniques Conference 2022 Courses (ACM SIGGRAPH 2022 Courses), Virtual Event, New York, NY, USA, August 7 - 11, 2022 (New York: ACM). doi:10.1145/3532720.3535663

Harrington, M. C. R., and Jones, C. (2021b). “Virtual nature,” in Proceedings of the ACM Special Interest Group on Computer Graphics and Interactive Techniques Conference 2021 Birds of a Feather (ACM SIGGRAPH 2021 BOF), Virtual Event, New York, NY, USA, August 9 - 13, 2021 (New York: ACM).

Harrington, M. C. R. (2020a). “Observation of presence in an ecologically valid ethnographic study using an immersive augmented reality virtual diorama application,” in Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, March 22–26, 2020, 813–814. doi:10.1109/VRW50115.2020.00257

Harrington, M. C. R. (2011). Empirical evidence of priming, transfer, reinforcement, and learning in the real and virtual trillium trails. IEEE Trans. Learn. Technol. 4 (2), 175–186. doi:10.1109/tlt.2010.20

Harrington, M. C. R. (2020d). “Augmented and virtual reality application design for immersive learning research using virtual nature: Making knowledge beautiful and accessible with information fidelity,” in Proceedings of the ACM Special Interest Group on Computer Graphics and Interactive Techniques Conference Talks (SIGGRAPH ’20 Talks), Virtual Event, New York, NY, USA, August 17, 2020 (New York: ACM), 2.

Harrington, M. C. R., Bledsoe, Z., Jones, C., Miller, J., and Pring, T. (2021a). Designing a virtual arboretum as an immersive, multimodal, interactive, data visualization virtual field trip. Multimodal Technol. Interact. 5 (4), 18. doi:10.3390/mti5040018

Harrington, M. C. R. (2020c). “Connecting user experience to learning in an evaluation of an immersive, interactive, multimodal augmented reality virtual diorama in a natural history museum and the importance of story,” in Proceedings of the IEEE, iLRN 2020: 6th International Conference of the Immersive Learning Research Network, June 21 – 25, 2020 (New York: San Luis Obispo, California). doi:10.23919/iLRN47897.2020.9155202

Harrington, M. C. R., Tatzgern, M., Langer, T., and Wenzel, J. W. (2019). Augmented reality brings the real world into natural history dioramas with data visualizations and bioacoustics at the Carnegie Museum of Natural History. Curator Mus. J. 62 (2), 177–193. doi:10.1111/cura.12308

Harrington, M. C. R. (2012). The virtual trillium trail and the empirical effects of freedom and fidelity on discovery-based learning. Virtual Real. 16 (2), 105–120. doi:10.1007/s10055-011-0189-7

Harrington, M. C. R. (2020a). “Virtual dioramas transform natural history museum exhibit halls and gardens to life with immersive AR,” in Proceedings of ACM SIG CHI The ACM Interaction Design and Children (IDC) Conference 2020: Demos and Art Exhibition, London, UK. New York, NY, USA, June 21 – 24, 2020 (New York: Association for Computing Machinery), 276–279. doi:10.1145/3397617.3402036

Huang, J. (2021). NVIDIA to build Earth-2 supercomputer to see our future. NVIDIA blog post. Available at: https://blogs.nvidia.com/blog/2021/11/12/earth-2-supercomputer/ (Retrieved January 31, 2023).

Koster, M., and Kartner, J. (2018). Context sensitive attention is socialized via a verbal route in the parent-child interaction. PLoS ONE 13 (11), e0207113. doi:10.1371/journal.pone.0207113

McCauley, D. (2017). Digital nature: Are field trips a thing of the past? Science 358, 298–300. doi:10.1126/science.aao1919

Martin, F. E. (2023). “An experimental mixed-methods pilot study for U.S. Army infantry soldiers – higher levels of combined immersion and embodiment in simulation-based training capabilities show positive effects on emotional impact and relationships to learning outcomes.” Unpublished master's thesis (Orlando, Florida: Department of Modeling and Simulation in the College of Graduate Studies at the University of Central Florida).

Keywords: beauty, digital twin, embodied, fidelity, immersive, informal learning, metaverse

Citation: Harrington MCR (2023) Virtual nature makes knowledge beautiful. Front. Virtual Real. 4:1100540. doi: 10.3389/frvir.2023.1100540

Received: 16 November 2022; Accepted: 08 February 2023;

Published: 13 March 2023.

Edited by:

Stefan Greuter, Deakin University, Australia

Reviewed by:

Kaja Antlej, Deakin University, Australia

Copyright © 2023 Harrington. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Maria C. R. Harrington, maria.harrington@ucf.edu

