ORIGINAL RESEARCH article

Front. Virtual Real., 07 September 2022

Sec. Haptics

Volume 3 - 2022 | https://doi.org/10.3389/frvir.2022.997426

This article is part of the Research Topic New Materials and Technologies for Haptics that Enhance VR and AR.

Virtual reality has been used in recent years for artistic expression and as a tool to engage visitors through immersive experiences. Most of these immersive installations incorporate visuals and sounds to enhance the user’s interaction with the artistic pieces; very few, however, involve physical or haptic interaction. This paper investigates virtual walking on paintings using passive haptics. More specifically, we combined vibrations and ultrasound stimulation on the feet in four different configurations to evaluate users’ immersion while they virtually walked on paintings that transform into 3D landscapes. Results show that participants with higher immersive tendencies experienced the virtual walking, reporting illusory movement of their body regardless of the haptic configuration used.

Traditional art exhibitions are designed for visitors to wander around and engage visually. When interaction is part of an art exhibition, it engages the user with the contents and creates a shared experience. Interaction can take multiple forms, such as gesture, virtual drawing or engagement, body motion, or gaze tracking (Chisholm, 2018). The movement toward “immersive art” was catalyzed by Yayoi Kusama’s first Infinity Room in 1965 (Applin, 2012), which laid the foundation for groups such as teamLab, an art collective founded in 2001, to offer a variety of immersive art installations (Lee, 2022). Providing an immersive experience is now a requisite quality of art entertainment, as exemplified by the highly popular “The Immersive Van Gogh Experience”. This exhibition has been held in North America, Europe, Asia, and the Middle East. It most often involves visitors moving through large spaces with paintings projected on the floor, walls, and ceilings; some versions include virtual reality headsets to experience the artist’s viewpoint and life. Most of these experiences are limited to the visual and auditory senses (Richardson et al., 2013; Gao and Xie, 2018), with few attempts to incorporate the remaining senses (Carbon and Jakesch, 2012). In this study, we assess the use of passive haptics in a non-interactive virtual walking experience to explore visual art. More specifically, we combined vibrations around the ankles with ultrasound sensations under the soles of the feet while the participant walks on a 3D landscape of an oil canvas.

Passive haptics refers to tactile sensations received on the skin without active exploration by the user (Ziat, 2022). Passive haptics has been shown to augment visual virtual environments and to improve the sense of presence, spatial knowledge, navigation, and object manipulation (Insko, 2001; Ziat et al., 2014; Azmandian et al., 2016). In both interactive (i.e., user walking) and non-interactive (i.e., user standing or sitting) conditions, passive haptic feedback provided to the feet enhanced the sense of vection, an illusion of self-motion in which the perceiver feels bodily motion although no movement takes place (Turchet et al., 2012; Riecke and Schulte-Pelkum, 2013; Kruijff et al., 2016). Vibrations at the foot can also help reduce visual attention demands and stress during navigation (Meier et al., 2015).

The human foot is highly sensitive to touch stimulation (Dim and Ren, 2017), and the sole of the foot contains mechanoreceptors similar to those found in the human palm (Strzalkowski et al., 2017). Because the feet serve different functions than the hands (i.e., gait control, maintaining posture, body orientation, and walking), the distribution of afferent receptors and their frequency responses appear to rely on population firing rather than on individual neuron firing, as seems to be the case for the hand (Strzalkowski et al., 2017; Reed and Ziat, 2018; de Grosbois et al., 2020). Haptic feedback on the feet has been used for multiple purposes, such as robotic telepresence (Jones et al., 2020), illusory self-motion (Riecke and Schulte-Pelkum, 2013), gait control in elderly people (Galica et al., 2009; Lipsitz et al., 2015), and improved situational awareness in blind people (Velázquez et al., 2012). The stimulation sites on the foot and the technology used differ from one study to another. Vibration patterns have been shown to elicit illusory motion (Terziman et al., 2013) by providing directional cues to the feet that help with navigation in virtual environments. In terms of location, vibrations have been delivered to the sides of the foot to convey information about the distance to objects and to support collision avoidance (Jones et al., 2020). Vibrations around the ankle have also been shown to help with gait control (Aimonetti et al., 2007; Mildren and Bent, 2016). The sole of the foot is the most common location, with participants either standing on a vibrating platform (Lovreglio et al., 2018; Zwoliński et al., 2022) or wearing shoes fitted with small vibrating motors while standing or sitting on a chair (Nilsson et al., 2012; Turchet et al., 2012; Kruijff et al., 2016). Other researchers opted for fluid actuators in shoes to offer more realistic VR walking experiences in which users feel different ground structures (Son et al., 2018a; Son et al., 2019; Yang et al., 2020). This solution seems more viable than vibrations, as the fluid viscosity changes with the pressure the user applies while walking, creating a more dynamic interaction. Vibrating shoes remain a cheap solution, but the propagation of the vibrations depends strongly on the complexity of the device and on the materials used, which can attenuate their effect, especially when the actuators are placed under the sole of the foot. Potential solutions include using force or pressure sensors to modulate the vibrations, or supplementing the haptic feedback with auditory feedback to create a more realistic feel of the ground texture (Turchet and Serafin, 2014).

Paintings are typically enjoyed visually. The overall aesthetic experience, however, involves multiple factors, including the haptic sense. Our aesthetic experience when perceiving, exploring, or interacting with artistic objects is governed by multiple contingencies related to personal and further associative experiences (Ortlieb et al., 2020). It is also determined by the sensory modalities in play at that moment. We are interested in how touch can affect the multimodal experience of art and how it can enhance viewers’ immersive experience in a virtual environment. Previous work has proposed haptic exploration using bodysuits (Giordano et al., 2015), haptic brushes (Son et al., 2018b), textured reliefs (Reichinger et al., 2011), exoskeletons (Frisoli et al., 2005), force feedback (Dima et al., 2014), surface haptics (Ziat et al., 2021), vibrotactile feedback (Marquardt et al., 2009), thermal feedback (Hribar and Pawluk, 2011), or mid-air haptics (Vi et al., 2017) to interact with an art installation on a screen, in a virtual reality setting, or on enhanced tactile walls in museums. In these types of exhibitions and systems, where touch is at the center of the artistic piece or movement, interaction is highly encouraged. Although the main motivation is to enhance interaction in museums, an additional objective is to provide blind people with a medium to explore artistic pieces through touch (Lim et al., 2019; Cho, 2021). Passive haptics is of specific interest in the present work for its ease of implementation and cost-effectiveness.

Fifteen adults (8 F, mean age: 27.8, SD: 5.88) took part in this experiment. All participants were recruited from Bentley University and had normal or corrected-to-normal vision. The experiment was performed in conformance with the Declaration of Helsinki on the use of human subjects in research, and written informed consent was obtained from all participants. The experimental procedures were approved by the Institutional Review Board of Bentley University. All participants received an Amazon gift card for their participation in the experiment.

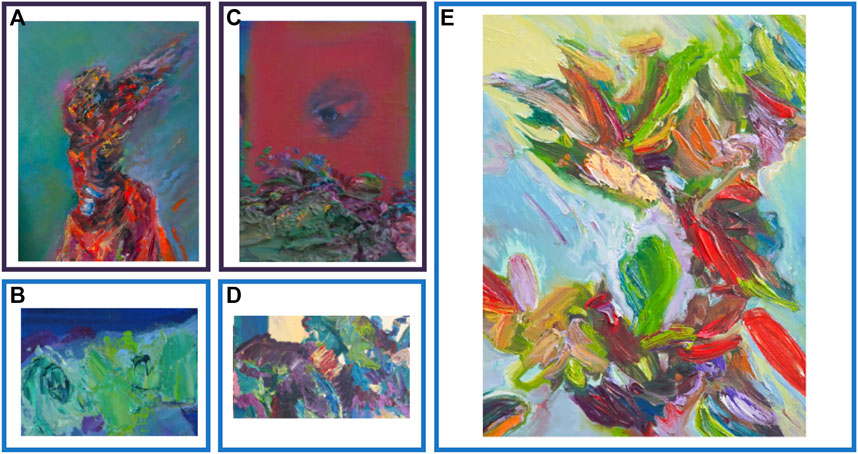

The PDK Emergence Gallery is a new type of multimedia immersive experience at the intersection of science, art, and technology. Participants are invited to virtually “walk” and “jump” on the surface of a set of paintings created by the artist Pamela Davis Kivelson (Davis Kivelson, 2020) in order to explore new kinds of awareness and social connection, not only between multiple visitors but also between the art and the visitor. The gallery contains five oil paintings, shown in Figure 1. Virtual guests explore topological features of the paintings’ surfaces, such as paint peaks and valleys, which were created from 3D scans of the actual oil paintings. The expressionistic brushwork, which results in impasto (particularly thick paint) in places, and the deliberate color choices, referencing historical as well as contemporary landscape painting, create a distinct, swirling, stretched palette, whipped like gelato. Figure 7 shows a 3D view of one of the paintings after it turns into a landscape.

FIGURE 1. Oil on canvas by Pamela Davis Kivelson. (A) Winged Victory on Fire, 2020 (16 × 20), (B) Velocity, 2020 (4 × 7), (C) Eye, Lake, and Mountains, 2018 (4 × 7), (D) Quantum Braiding, 2020 (8.5 × 14), (E) Angry Sunflowers 3, 2020 (11 × 14). All figures were of similar size in the virtual gallery.

The gait cycle consists of two phases: stance and swing. The stance phase occupies about 60% of the gait cycle, and both feet are in contact with the ground at its beginning and end. The swing phase makes up the remaining 40%; it starts with toe-off and ends when the heel strikes the ground (Novacheck, 1998; Pirker and Katzenschlager, 2017). The cycle contains two double-support periods in which both feet are in contact with the ground, with weight alternating between the left and right feet and shifting from hindfoot to forefoot (see Figure 2).
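As a rough illustration of this timing, the sketch below assumes a heel-strike-to-heel-strike cycle in which stance occupies 60% and the opposite foot lags by half a cycle; the 0.5 offset and the 1.0 s cycle length are illustrative assumptions, not values from this study. Under these assumptions the two stance phases overlap for 60% + 60% - 100% = 20% of the cycle, i.e., two double-support periods of roughly 10% each.

```python
STANCE_FRACTION = 0.60  # stance share of the gait cycle (from the text)
CONTRA_OFFSET = 0.50    # assumed: the other foot lags by half a cycle

def foot_phase(t, cycle_s=1.0, offset=0.0):
    """Return 'stance' or 'swing' for one foot at time t (seconds).

    Heel strike marks the start of the cycle, so the first 60% of each
    cycle is stance and the remaining 40% is swing.
    """
    u = ((t / cycle_s) + offset) % 1.0  # normalized position in the cycle
    return "stance" if u < STANCE_FRACTION else "swing"

def support_state(t, cycle_s=1.0):
    left = foot_phase(t, cycle_s)
    right = foot_phase(t, cycle_s, offset=CONTRA_OFFSET)
    if left == right == "stance":
        return "double"
    return "single"  # with 60% stance, both feet are never in swing together

def double_support_fraction(cycle_s=1.0, samples=10_000):
    """Estimate the share of the cycle spent in double support by sampling."""
    hits = sum(support_state(i * cycle_s / samples, cycle_s) == "double"
               for i in range(samples))
    return hits / samples

print(round(double_support_fraction(), 2))  # 0.2
```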

To create an illusion of walking, we designed the sensations to mimic the gait cycle. More specifically, we combined vibrotactile actuation around the ankles using Syntacts (Syntacts, 2022), to create the sensation of foot impact while walking, with mid-air ultrasound actuation under the soles of the feet using Ultraleap (Ultraleap, 2022), to convey the shift from hindfoot to forefoot during walking. Syntacts is a complete package that provides the software and hardware needed to drive tactile actuators from an audio interface. The Syntacts amplifier was connected to an Asus Xonar U7 MKII 7.1 USB sound card (Asus xonar, 2022), which supports up to eight actuators; four were used on each foot. The actuators (part number VG1040001D) were linear resonant actuators (LRAs) from Jinlong Machinery & Electronics that produce a minimum vibration force of 1.5 Grms when driven with a 2.0 Vrms AC signal (Globalsources, 2022). The Ultraleap STRATOS Explore is a 16 × 16 array of ultrasound transducers operating at 40 kHz. It delivers mid-air sensations to a hand hovering 15–20 cm above the array. In our experiment, we used the soles of the feet as the stimulation site instead. A custom-made stool with an open top allowed the feet to hover above the array, about 20 cm from the ground, to receive the sensations.
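The LRA rating above is given as an RMS drive level. For a pure sine wave the peak voltage is √2 times the RMS value, so a 2.0 Vrms drive swings to about ±2.83 V. The short check below confirms this numerically; the 48 kHz sample rate is chosen only for the check and is not a reported setting of the Syntacts or Xonar hardware.

```python
import math

def sine_peak_from_rms(v_rms):
    """Peak amplitude of a pure sine wave with the given RMS value."""
    return v_rms * math.sqrt(2)

def rms(samples):
    """Root-mean-square of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

V_RMS = 2.0  # drive level quoted for the LRA rating
v_peak = sine_peak_from_rms(V_RMS)
print(f"peak = {v_peak:.2f} V, peak-to-peak = {2 * v_peak:.2f} V")
# peak = 2.83 V, peak-to-peak = 5.66 V

# Numerical check over exactly one period of a 200 Hz sine sampled at 48 kHz
fs, f = 48_000, 200.0
n = int(fs / f)  # 240 samples = one full period
wave = [v_peak * math.sin(2 * math.pi * f * i / fs) for i in range(n)]
print(round(rms(wave), 3))  # 2.0
```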

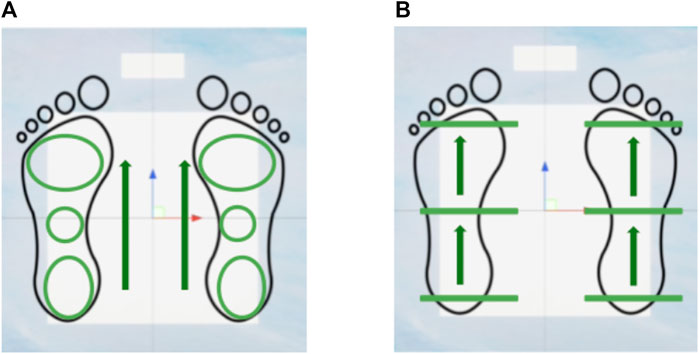

The sensations start at the heels, as the initial contact during walking is made by the heel (Figure 2). Two ultrasound sensations were therefore configured: elliptical sensations (U1) and line scan sensations (U2). For the U1 sensation, a 70 Hz ellipse was emitted moving from the hindfoot to the forefoot while morphing in shape and size to accommodate the differences in surface area among the hind, mid, and front parts of the foot. In addition, the U1 sensation alternated between the left and right feet to simulate single support during the gait cycle. The line scan sensation (U2) served as a control in which no left/right alternation was applied; it consisted only of a line of constant thickness moving from the back to the front of the foot (see Figure 3). Both sensations were used at the maximum amplitude of 1.

FIGURE 3. Mid-air ultrasound Elliptical (A) and Line Scan (B) sensations for illusory walking. The arrows indicate the direction of the scan.
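One way to picture the U1 and U2 sensations is as focal-point trajectories in a plane under the foot. The sketch below is pure geometry and does not use the Ultraleap SDK; the foot dimensions, the 0.5 s sweep time, the coordinate frame, and the reading of “70 Hz ellipse” as an ellipse traced 70 times per second are all assumptions made for illustration.

```python
import math

# Hypothetical foot geometry (cm), measured from the heel along the foot axis.
# Keyframe: (distance from heel, half-width across the foot, half-length along it)
KEYFRAMES = [
    (0.0, 3.0, 2.0),   # hindfoot (heel)
    (12.0, 2.5, 2.5),  # midfoot: narrower
    (20.0, 4.5, 2.5),  # forefoot: wider
]

def _lerp(a, b, w):
    return a + (b - a) * w

def ellipse_params(s):
    """Centre position and half-axes of the ellipse at sweep progress s in [0, 1]."""
    s = min(max(s, 0.0), 1.0)
    y = s * KEYFRAMES[-1][0]
    for (y0, rx0, ry0), (y1, rx1, ry1) in zip(KEYFRAMES, KEYFRAMES[1:]):
        if y <= y1:
            w = (y - y0) / (y1 - y0)
            return y, _lerp(rx0, rx1, w), _lerp(ry0, ry1, w)
    return KEYFRAMES[-1]  # not reached for s in [0, 1]

def u1_point(t, sweep_s=0.5, draw_hz=70.0, x_center=0.0):
    """Focal point (x, y) in cm at time t for the elliptical U1 sensation.

    The ellipse is traced draw_hz times per second while its centre travels
    from heel to forefoot over sweep_s seconds. x_center selects the foot
    (hypothetically, e.g. -8 cm for the left foot and +8 cm for the right).
    """
    y, rx, ry = ellipse_params(t / sweep_s)
    theta = 2 * math.pi * draw_hz * t
    return x_center + rx * math.cos(theta), y + ry * math.sin(theta)

def u2_line(t, sweep_s=0.5, half_width=4.0):
    """Endpoints of the constant-thickness U2 line scan at time t."""
    y = min(max(t / sweep_s, 0.0), 1.0) * KEYFRAMES[-1][0]
    return (-half_width, y), (half_width, y)
```

Alternating U1 between feet then amounts to switching `x_center` between the two foot positions on successive sweeps, whereas U2 keeps a single line moving under both feet.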

The vibrotactile actuators around each ankle were arranged in a circular array in two different configurations (Figure 4). In the first configuration (S1), the LRAs were arranged in a plus shape to stimulate the bones (tibia and fibula) directly, while in the second configuration (S2), they were arranged in an X shape to stimulate the areas between the bone structures. It has been shown that vibrations placed directly on a bone are attenuated, while their propagation is facilitated when they are placed between two bone structures (Fancher et al., 2013; Ziat, 2022). The LRAs vibrated at 200 Hz with a smooth-step roll-off configured in the Syntacts software, at the maximum amplitude of 1.
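The two ankle layouts and the 200 Hz drive can be sketched as follows. The angle convention, the 4 cm ring radius, the 100 ms burst length, the 20 ms ramps, and the use of a cubic smooth-step curve at both ends of the burst are hypothetical choices; the Syntacts library provides its own envelope options, which this sketch does not reproduce.

```python
import math

# Hypothetical angle convention: 0 deg = front of the ankle, clockwise seen from above.
LAYOUTS = {
    "S1": [0, 90, 180, 270],    # plus shape: actuators over the bones
    "S2": [45, 135, 225, 315],  # X shape: actuators between the bones
}

def actuator_xy(layout, radius_cm=4.0):
    """(x, y) positions of the four LRAs on a circle around the ankle."""
    return [(radius_cm * math.sin(math.radians(a)), radius_cm * math.cos(math.radians(a)))
            for a in LAYOUTS[layout]]

def smoothstep(x):
    """Cubic smooth step 3x^2 - 2x^3, clamped to [0, 1]."""
    x = min(max(x, 0.0), 1.0)
    return x * x * (3.0 - 2.0 * x)

def lra_burst(duration_s=0.1, ramp_s=0.02, freq_hz=200.0, amp=1.0, fs=8000):
    """200 Hz sine burst with smooth-step onset and roll-off (amp 1.0 = full scale)."""
    n = int(round(duration_s * fs))
    out = []
    for i in range(n):
        t = i / fs
        env = min(smoothstep(t / ramp_s), smoothstep((duration_s - t) / ramp_s))
        out.append(amp * env * math.sin(2 * math.pi * freq_hz * t))
    return out
```

Rotating the plus layout by 45 degrees yields the X layout, which is the only geometric difference between S1 and S2 in this sketch.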

A delay of 100 ms was introduced between the mid-air sensations and the vibrotactile sensations to prevent suppression (Ziat et al., 2010), resulting in a total delay of 200 ms when alternating between the left and right feet. Crossing the two vibrotactile configurations with the two ultrasound configurations (2 × 2) resulted in four experimental conditions: S1U1, S1U2, S2U1, and S2U2. Finally, an audio rendering of the vibrations was played during the experiment, since the sound of footsteps provides important information about locomotion (Nordahl et al., 2010). The audio signal during walking was synchronized with both the vibrotactile and the ultrasound haptic signals. It comprised two auditory stimuli, corresponding to the heel and the hindfoot impact, separated by 1 ms.
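The 2 × 2 design and the 100 ms offsets can be written down as an event schedule. The sketch below encodes one possible reading of the timing: each foot’s ultrasound onset is followed 100 ms later by its vibration, and the next foot starts 100 ms after that, giving 200 ms per step. The event names, the ordering of ultrasound before vibration, and the alignment of the two audio clicks with the vibration onset are assumptions, not the authors’ implementation.

```python
from itertools import product

VIBRATION = ["S1", "S2"]    # plus vs. X layout around the ankles
ULTRASOUND = ["U1", "U2"]   # elliptical vs. line-scan sensation under the soles
CONDITIONS = [v + u for v, u in product(VIBRATION, ULTRASOUND)]

US_TO_VIB_MS = 100  # delay between mid-air and vibrotactile onsets (from the text)
AUDIO_GAP_MS = 1    # gap between the two footstep audio clicks (from the text)

def step_events(condition, n_steps=2, start_ms=0):
    """Hypothetical (time_ms, event, foot) schedule for n_steps alternating steps.

    Assumes ultrasound -> +100 ms vibration -> +100 ms next foot, i.e. 200 ms
    per step. U2 has no left/right alternation, so its ultrasound targets both feet.
    """
    alternate_us = condition.endswith("U1")
    events = []
    for k in range(n_steps):
        foot = "L" if k % 2 == 0 else "R"
        t0 = start_ms + k * 2 * US_TO_VIB_MS
        events.append((t0, "ultrasound", foot if alternate_us else "both"))
        t_vib = t0 + US_TO_VIB_MS
        events.append((t_vib, "vibration", foot))
        # Assumed: the two audio clicks are aligned with the vibration onset.
        events.append((t_vib, "audio_click_1", foot))
        events.append((t_vib + AUDIO_GAP_MS, "audio_click_2", foot))
    return events

print(CONDITIONS)  # ['S1U1', 'S1U2', 'S2U1', 'S2U2']
for event in step_events("S1U1"):
    print(event)
```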

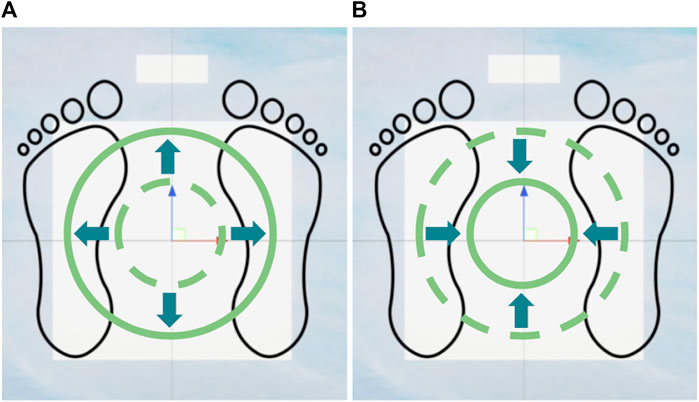

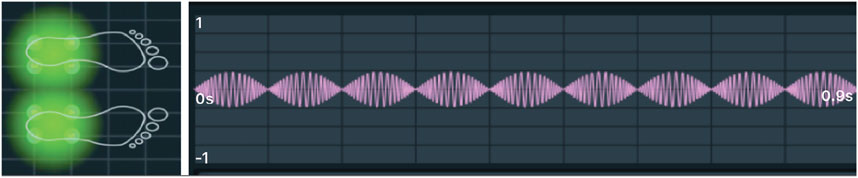

In addition to walking, participants could also jump if they faced an obstacle or wished to experience a different type of locomotion. The jump, in which the entire body is temporarily airborne, and the landing, in which the feet touch the ground again, were conveyed by a combination of ultrasound and vibrotactile sensations. The ultrasound array emitted a 70 Hz expanding ripple effect during the jump and a 70 Hz collapsing ripple effect during the landing to inform the participant about their altitude relative to the ground, as shown in Figure 5. The vibrotactile signal was a 200 Hz sine wave frequency-modulated (FM) at 10 Hz and superimposed on a 5 Hz sine wave (see Figure 6). The frequency of the signal was progressively reduced from 200 Hz to 100 Hz as the altitude increased, and its amplitude was reduced from 100% at landing to 25% while airborne (i.e., at the highest altitude point). The jump sensations were played simultaneously on both feet and remained constant across the four conditions. The audio signal for the jump phase had two peaks, the second higher in amplitude than the first, whereas the landing signal had the same two peaks in reverse order. The two peaks were synchronized with the 200 Hz vibrotactile sensations. Similarly, the audio peaks were synchronized with the 70 Hz mid-air ripple effects, with a 1 ms delay.

FIGURE 5. Ultrasound expanding ripple effect for jumping (A) and collapsing ripple effect for landing (B).

FIGURE 6. Vibrotactile jumping sensations: a 5 Hz sine wave superimposed on a 200 Hz sine wave with a 10 Hz FM (from Syntacts).
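The jump vibration can be approximated by sample-by-sample synthesis. In this sketch the altitude profile (a parabola over a 0.6 s jump), the ±20 Hz frequency deviation of the 10 Hz FM, the 30% mix of the 5 Hz component, and the 8 kHz sample rate are all assumed values; only the 200 Hz to 100 Hz frequency ramp, the 100% to 25% amplitude ramp, and the 10 Hz and 5 Hz rates come from the text. The phase is accumulated from the instantaneous frequency so the waveform stays continuous while the frequency changes.

```python
import math

def altitude(u):
    """Hypothetical altitude profile: 0 at take-off and landing, 1 at the apex (u in [0, 1])."""
    return 4.0 * u * (1.0 - u)

def carrier_hz(alt):
    """Carrier frequency: 200 Hz on the ground, falling to 100 Hz at the apex."""
    return 200.0 - 100.0 * alt

def envelope(alt):
    """Amplitude: 100% on the ground, falling to 25% at the apex."""
    return 1.0 - 0.75 * alt

def jump_signal(duration_s=0.6, fs=8000, fm_hz=10.0, fm_dev_hz=20.0, low_mix=0.3):
    """Synthesize the jump vibration as a list of samples in [-1, 1]."""
    out, phase = [], 0.0
    for i in range(int(round(duration_s * fs))):
        t = i / fs
        alt = altitude(t / duration_s)
        # 10 Hz frequency modulation around the altitude-dependent carrier
        inst_hz = carrier_hz(alt) + fm_dev_hz * math.sin(2 * math.pi * fm_hz * t)
        phase += 2 * math.pi * inst_hz / fs          # integrate frequency into phase
        fm_part = math.sin(phase)
        low_part = math.sin(2 * math.pi * 5.0 * t)   # superimposed 5 Hz sine
        out.append(envelope(alt) * ((1 - low_mix) * fm_part + low_mix * low_part))
    return out
```

Because the FM and 5 Hz parts are mixed with weights that sum to 1 and the envelope never exceeds 1, the output stays within the normalized [-1, 1] range expected by an audio-driven amplifier.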

After signing the consent form, each participant completed the Simulator Sickness Questionnaire (SSQ) (Kennedy et al., 1993), which allowed us to assess the initial level of symptoms and establish a baseline against which post-experiment SSQ data could be compared. The SSQ comprises 16 symptoms, each rated on a 4-point scale (not at all, mild, moderate, severe). Participants also completed the Immersive Tendencies Questionnaire (ITQ) (Witmer and Singer, 1998), which measures a person’s tendency to experience presence.
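SSQ sub-scale and total scores are derived from the 16 ratings with the weights published by Kennedy et al. (1993): each symptom contributes to one or two of the Nausea, Oculomotor, and Disorientation clusters, the raw cluster sums are multiplied by 9.54, 7.58, and 13.92, respectively, and the total score is 3.74 times the sum of the three raw cluster sums. The sketch below assumes ratings coded 0 to 3; the symptom labels are paraphrased dictionary keys chosen for this example.

```python
# Symptom clusters and weights from Kennedy et al. (1993).
SUBSCALES = {
    "N": ["general discomfort", "increased salivation", "sweating", "nausea",
          "difficulty concentrating", "stomach awareness", "burping"],
    "O": ["general discomfort", "fatigue", "headache", "eyestrain",
          "difficulty focusing", "difficulty concentrating", "blurred vision"],
    "D": ["difficulty focusing", "nausea", "fullness of head", "blurred vision",
          "dizzy (eyes open)", "dizzy (eyes closed)", "vertigo"],
}
WEIGHTS = {"N": 9.54, "O": 7.58, "D": 13.92}
TOTAL_WEIGHT = 3.74
SYMPTOMS = sorted({s for items in SUBSCALES.values() for s in items})  # 16 symptoms

def score_ssq(responses):
    """Score one SSQ administration.

    responses maps each symptom to a rating from 0 (not at all) to 3 (severe).
    Returns weighted N, O, D and total (TS) scores.
    """
    missing = set(SYMPTOMS) - set(responses)
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    raw = {k: sum(responses[s] for s in items) for k, items in SUBSCALES.items()}
    scores = {k: raw[k] * WEIGHTS[k] for k in raw}
    scores["TS"] = (raw["N"] + raw["O"] + raw["D"]) * TOTAL_WEIGHT
    return scores

# A participant's change score would be score_ssq(post)[k] - score_ssq(pre)[k].
```

With every symptom rated 3, the maxima are 200.34 (N), 159.18 (O), 292.32 (D), and 235.62 (TS), which is a quick sanity check on any implementation.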

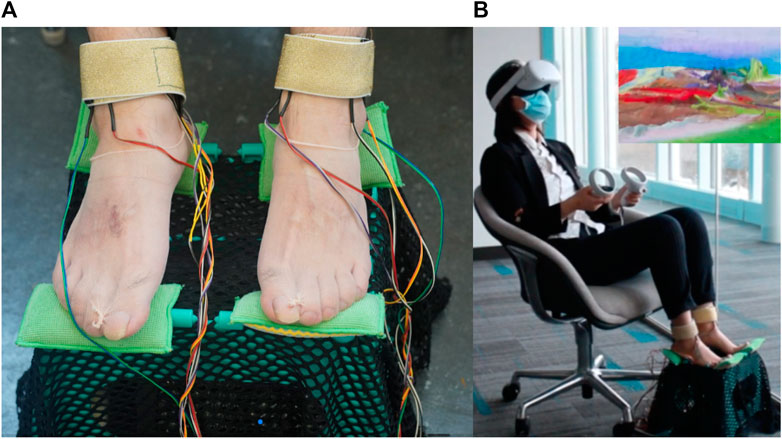

After completing both pre-study questionnaires, and once seated in a comfortable position, participants put on the Oculus Quest 2 headset and experienced the virtual walking on paintings without any haptic feedback to familiarize themselves with the interaction. Participants were then asked to remove their shoes and wear cuffs around their ankles that delivered short haptic vibrations. They were instructed to put disposable socks on their bare feet, sit on an adjustable chair, and place their feet on a stool to receive the mid-air sensations (Figure 7), resting their feet gently without putting pressure on the stool. After putting the VR headset back on to start the virtual navigation, they first landed in a hexagonal virtual art gallery where they could explore five paintings. They could wander freely around the gallery and pick the painting of their choice to start the virtual walking. When they got close to a painting, it transformed into a 3D landscape that allowed the visitor to walk or jump on the brush strokes, which became virtual hills, mountains, plains, or plateaus. This experience brings the visitor one step closer to the artist, as every landform is nothing other than the artistic creation itself, where brush strokes convey the look, effect, mood, and atmosphere of the painting. To return to the gallery, participants moved close to the edges and jumped off the painting.

FIGURE 7. (A) Participant’s feet with disposable socks resting on the stool with the cuffs around the ankles. (B) Participants exploring a 3D painting landscape.

Both haptic and auditory sensations started when participants leaped into the painting. Once they were done exploring, they completed a four-item questionnaire from Matsuda et al. (2021), using a visual analog scale (VAS) from 0 to 100, to assess the walking sensations:

• I felt that my entire body was moving forward (self-motion).

• I felt as if I was walking forward (walking sensation).

• I felt as if my feet were striking the ground (leg action).

• I felt as if I was present in the scene (telepresence).

This step was repeated three more times, so that each participant experienced all four stimulus conditions (S1U1, S1U2, S2U1, S2U2) in a randomized order. At the end of the experiment, participants completed the SSQ a second time, filled out the Presence Questionnaire (PQ), and ranked the haptic conditions from most to least favorite.
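A reproducible per-participant randomization of the four conditions could look like the sketch below; the seeding scheme is hypothetical, since the text does not describe how the orders were generated. A fully random order does not guarantee that each condition appears equally often in each position; with four conditions, a balanced Latin square is a common alternative for counterbalancing order effects.

```python
import random

CONDITIONS = ["S1U1", "S1U2", "S2U1", "S2U2"]

def condition_order(participant_id, seed=2022):
    """Reproducible random order of the four haptic conditions for one participant."""
    rng = random.Random(f"{seed}-{participant_id}")  # hypothetical seeding scheme
    order = CONDITIONS[:]  # copy so the master list is never reordered
    rng.shuffle(order)
    return order

for pid in range(1, 4):
    print(pid, condition_order(pid))
```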

We followed hygiene procedures to minimize any contamination risk: between sessions, the surfaces of the VR headset that contact the participant’s face were cleaned with non-abrasive, alcohol-free antibacterial wipes of the kind used for cleaning ultrasound equipment. Disposable foot socks were also provided to each participant before they placed their feet on the stool.

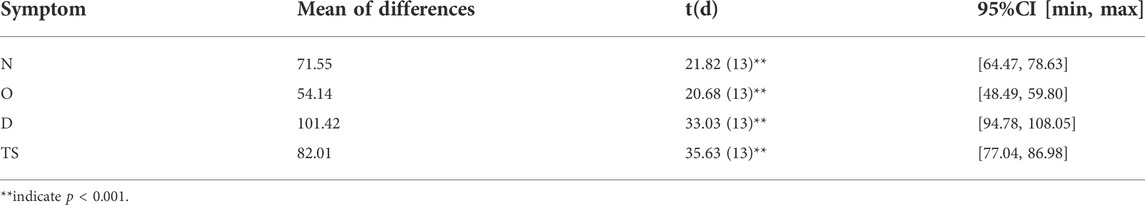

The severity of motion sickness symptoms was evaluated using the SSQ before and after the experiment. The SSQ provides scores for Computation of Nausea (N), Oculomotor Disturbance (O), Disorientation (D), and Total Simulator Sickness (TS) (Kennedy et al., 1993). One participant reported very high SSQ scores indicating that they felt ill after exposure to the virtual environment, so their SSQ scores were excluded from this analysis. Parametric paired t-tests were used to analyze both the sub-scale and total SSQ scores, which were normally distributed (Shapiro-Wilk test, p > 0.05). Table 1 summarizes the results of the t-tests. These results indicate that reported simulator sickness increased significantly from before to after exposure to the virtual environment, with disorientation scoring the highest.

TABLE 1. t-test results for Computation of Nausea (N), Oculomotor Disturbance (O), Disorientation (D), and Total Simulator Sickness (TS) with 95% confidence intervals.

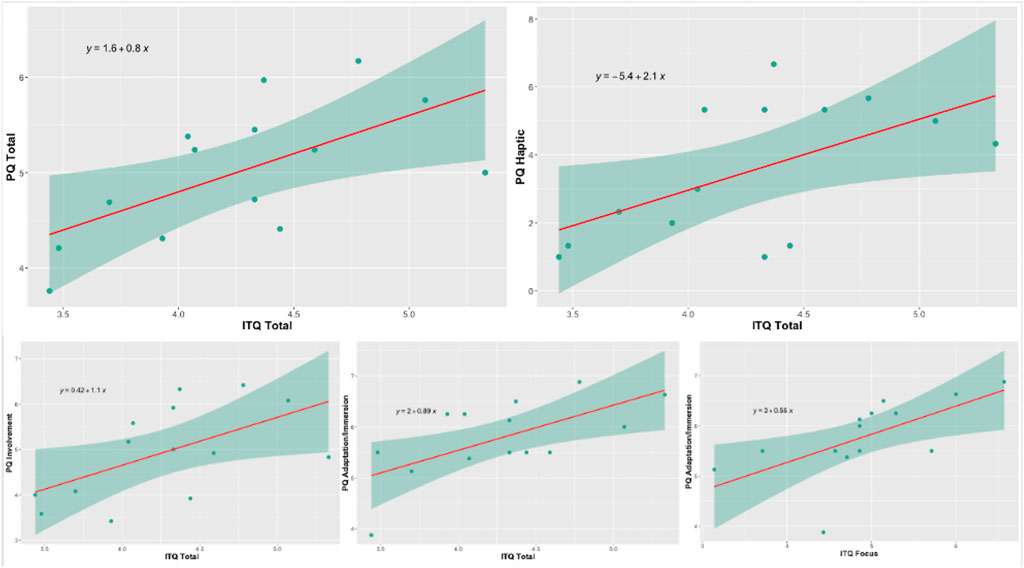
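The paired comparisons summarized in Table 1 reduce to a t statistic computed on the within-participant differences (post minus pre). The standard-library sketch below computes that statistic and its degrees of freedom; the input values in the usage line are illustrative, not the study’s data. For p-values, `scipy.stats.ttest_rel(post, pre)` returns the same statistic together with a two-sided p-value, and the normality assumption applies to the paired differences.

```python
import math
from statistics import mean, stdev

def paired_t(pre, post):
    """Paired-samples t statistic and degrees of freedom for (post - pre)."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [b - a for a, b in zip(pre, post)]
    se = stdev(diffs) / math.sqrt(len(diffs))  # standard error of the mean difference
    return mean(diffs) / se, len(diffs) - 1

t, df = paired_t([0, 0, 0, 0], [1, 2, 3, 4])  # illustrative values only
print(round(t, 3), df)  # 3.873 3
```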

The ITQ is a 29-item questionnaire on a 7-point scale that measures the immersive potential of a given individual. It is composed of three sub-scales: involvement (14 items), focus (13 items), and propensity to play video games (2 items). Because personality traits vary across individuals, it has been suggested that they may influence the degree to which presence is experienced in specific situations (Jerome and Witmer, 2002). The PQ is a 28-item semantic differential questionnaire on a seven-point scale composed of four sub-scales: involvement (10 items), sensory fidelity (8 items), adaptation/immersion (7 items), and interface quality (3 items). The PQ also includes items related to Haptic (items 13, 17, and 29) and Audio (items 5, 11, and 12) (Witmer et al., 2005). Presence and immersive tendency are considered to be positively correlated: a person who is more likely to become immersed in a virtual environment will have a greater sense of presence while interacting with it (Witmer and Singer, 1998). We computed correlations between the ITQ and PQ scores and their sub-scales; the results are shown in Table 2, where only significant results (p < 0.05) are reported. There was a strong Pearson correlation (r = 0.63) between the PQ and ITQ total scores. Figure 8 shows the individual scores with the regression line PQ = 0.8*ITQ + 1.6. Individual PQ Haptic and total ITQ scores are also shown, with a moderate correlation (r = 0.57) and the line of best fit Haptic = 2.1*ITQ - 5.4. PQ Involvement and PQ Adaptation/Immersion were also positively correlated with the ITQ, with moderate and strong correlations, respectively. Finally, the more focused participants were, the stronger the immersion was (r = 0.64). However, because of the limited number of participants providing ITQ, PQ, and SSQ questionnaire data (N = 15), correlations involving those data should be interpreted with caution.

FIGURE 8. Scattergrams, left to right. Top: ITQ-PQ, ITQ-PQ Haptic. Bottom: ITQ-PQ Involvement, ITQ-PQ Adaptation/Immersion, ITQ Focus-PQ Adaptation/Immersion.

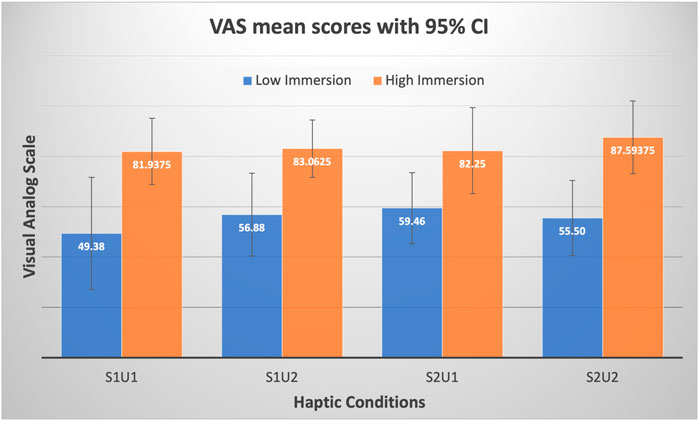
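The correlation and regression values above follow the usual least-squares definitions. The sketch below computes Pearson’s r together with the fitted slope and intercept, then applies the two reported lines to a hypothetical participant with an ITQ score of 5 (a value on the 7-point scale, not a data point from the study). Squaring r = 0.63 gives about 0.40, meaning roughly 40% of the variance in PQ scores is shared with ITQ scores in this sample.

```python
import math

def pearson_and_fit(x, y):
    """Pearson r plus least-squares slope and intercept for y ~ x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    r = sxy / math.sqrt(sxx * syy)
    slope = sxy / sxx
    return r, slope, my - slope * mx

# Reading the reported fits for a hypothetical participant with ITQ = 5
itq = 5.0
print(round(0.8 * itq + 1.6, 2))  # 5.6 (predicted PQ)
print(round(2.1 * itq - 5.4, 2))  # 5.1 (predicted PQ Haptic)
print(round(0.63 ** 2, 2))        # 0.4 (shared variance for r = 0.63)
```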

Participants were classified into two groups based on their ITQ responses: Low Immersion (scores ≤ 4) and High Immersion (scores > 4). A Shapiro-Wilk test indicated no departure from normality in the VAS responses (p > 0.05). We therefore conducted a mixed ANOVA with the Haptics condition (S1U1, S1U2, S2U1, and S2U2) as the within-subject factor and Group (Low Immersion, 6 participants; High Immersion, 8 participants) as the between-subject factor. The ANOVA showed a significant effect of Group [F(1,12) = 12.47, p < 0.05], with the Low Immersion group giving lower VAS scores than the High Immersion group (Figure 9). In terms of preference, Figure 10 suggests that S2U2 was the least favorite condition, while S1U1 and S2U1 received higher rankings. However, a Friedman test on the ranked data showed no significant differences among the four conditions (χ2(3) = 4.2, p > 0.05).

FIGURE 9. Visual Analog Scale results for Low and High Immersion groups. The error bars represent the 95% confidence interval of the mean.
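The grouping rule and the preference test can be sketched in a few lines. The sketch assumes the ITQ score used for grouping is the mean item score on the 7-point scale, and that each participant ranked all four conditions without ties, so the textbook Friedman statistic applies without a tie correction. For k = 4 conditions the test has 3 degrees of freedom, and the 0.05 critical value of χ²(3) is about 7.81, so the reported χ2(3) = 4.2 is consistent with p > 0.05. The mixed ANOVA itself is not reproduced here; `scipy.stats.friedmanchisquare` offers a library implementation of the rank test.

```python
def immersion_group(itq_mean, cutoff=4.0):
    """Low Immersion if the (assumed mean-item) ITQ score is <= 4, High otherwise."""
    return "low" if itq_mean <= cutoff else "high"

def friedman_chi2(rankings):
    """Friedman chi-square statistic and degrees of freedom for untied rankings.

    rankings: one list per participant giving the rank (1..k) of each condition,
    with the conditions in the same column order for every participant.
    """
    n, k = len(rankings), len(rankings[0])
    rank_sums = [sum(row[j] for row in rankings) for j in range(k)]
    stat = 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)
    return stat, k - 1

# Upper bound: 14 participants who all rank the conditions identically
print(friedman_chi2([[1, 2, 3, 4]] * 14))
```

When every participant gives the same ranking, the statistic reaches its maximum of n(k - 1), i.e. 42 for 14 participants; perfectly balanced rank sums give 0.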

Motion sickness scores were significantly higher after exposure to the virtual world, with the highest scores on the Disorientation subscale. This result is not surprising, as virtual motion methods that instantly teleport users to new locations are usually associated with increased disorientation (Bowman et al., 1997). In our experiment, participants jumped into the painting from the art gallery and jumped off the painting to return to the gallery, contributing to the increase in disorientation. Additionally, walking techniques affect a user’s sense of presence in a virtual environment because they require varying amounts of physical motion (Ruddle and Lessels, 2006), which in turn affects the amount of simulator sickness caused by the apparatus; simulator sickness is a well-recognized side effect of exposure to virtual environments. Real walking is known to reduce motion sickness and to provide a strong sense of presence (Usoh et al., 1999), but it requires a larger space, special treadmills, or redirected walking methods (Razzaque et al., 2002; De Luca et al., 2009; Matsumoto et al., 2016). In our experiment, the fact that participants received passive haptic sensations on their feet while they were physically motionless but visually moving through the world could have increased the conflict between their proprioceptive and visual systems. In the future, this effect could be mitigated by shorter exposure times or by adding three-axis motion to the seat.

Participants’ ability to adapt to and immerse themselves in the VR world was affected by their immersive tendencies, specifically their tendency to concentrate or pay attention (focus sub-scale of the ITQ). The higher their immersive tendencies, the stronger their cognitive, physical, and emotional involvement in the scenarios. This goes hand in hand with their perception of the haptic sensations and how those sensations affected their engagement during the walking illusion. We observed the same trend in participants’ VAS scores. Participants who reported low immersive tendencies had an average VAS score of around 50 (the midpoint of the scale), which can be considered neutral. Participants with high immersive tendencies felt that their body was moving forward, that they were walking forward, and that their feet were striking the ground (mean score > 81), regardless of the haptic condition. The lack of difference in preference between the haptic conditions may be explained by the more even distribution of receptors on the sole of the foot (Kennedy and Inglis, 2002), as opposed to the proximal-to-distal increase in FAI and SAI receptors in the hand (Johansson and Vallbo, 1979), suggesting that any haptic combination enhanced the experience for those who felt more immersed. Additionally, the afferents on the sole of the foot have higher thresholds than those of the hand and respond to specific frequency ranges (Johansson et al., 1982; Strzalkowski et al., 2015), which would require an extensive comparison of different locations on the foot. Although neurophysiological evidence supports the choice of these locations (sole and ankles), additional factors, such as the choice of technology, the type of haptic feedback, and practicality and comfort for the user, could have affected the immersive experience. Evaluating which parts of the foot (ankle, side, sole), which combinations, and which timings provide better vection and an enhanced experience would benefit future studies that target the foot as a site of stimulation.

In the future, the system could be improved by adding a self-view avatar, which has been shown to enhance the sensations of walking, presence, and leg action, especially when combined with passive haptics (Matsuda et al., 2021). Moreover, although sound was included, it simply replicated the haptic sensations. Previous research has shown that audio feedback can indicate ground textures in real-time virtual walking (Turchet et al., 2012). Based on the PQ answers, participants did not appear to be affected by the audio feedback. It would be interesting to explore this multimodal interaction in depth, including how the brain integrates this information in congruent and incongruent situations (Ziat et al., 2015).

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the Bentley University Institutional Review Board. The participants provided their written informed consent to participate in this study.

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

This work is supported by the National Science Foundation under Grant NSF NRI #1925194.

The authors would like to thank Fred Monshi and Gaurav Shah from Bentley University for providing logistical support for this study.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Aimonetti, J.-M., Hospod, V., Roll, J.-P., and Ribot-Ciscar, E. (2007). Cutaneous afferents provide a neuronal population vector that encodes the orientation of human ankle movements. J. Physiol. 580, 649–658. doi:10.1113/jphysiol.2006.123075

Applin, J. (2012). Yayoi Kusama: Infinity mirror room-Phalli’s field. Cambridge, MA and London: MIT Press.

Asus xonar (2022). Xonar U7 MKII 7.1 USB sound card with headphone amplifier, 192kHz/24-bit HD sound, 114dB SNR. Available at: https://www.asus.com/Motherboards-Components/Sound-Cards/Gaming/Xonar-U7-MKII/.

Azmandian, M., Hancock, M., Benko, H., Ofek, E., and Wilson, A. D. (2016). “Haptic retargeting: Dynamic repurposing of passive haptics for enhanced virtual reality experiences,” in Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems.

Bowman, D. A., Koller, D., and Hodges, L. F. (1997). “Travel in immersive virtual environments: An evaluation of viewpoint motion control techniques,” in Proceedings of IEEE 1997 Annual International Symposium on Virtual Reality (IEEE).

Carbon, C.-C., and Jakesch, M. (2012). A model for haptic aesthetic processing and its implications for design. Proc. IEEE 101, 2123–2133. doi:10.1109/jproc.2012.2219831

Chisholm, B. (2018). Increasing art museum engagement using interactive and immersive technologies, Art and the Museum World. College Park, MD: University of Maryland.

Cho, J. D. (2021). A study of multi-sensory experience and color recognition in visual arts appreciation of people with visual impairment. Electronics 10, 470. doi:10.3390/electronics10040470

Davis Kivelson, P. (2020). Emergence. Available at: https://www.pdkgallery.com.

de Grosbois, J., Di Luca, M., King, R., and Ziat, M. (2020). “The predictive perception of dynamic vibrotactile stimuli applied to the fingertip,” in 2020 IEEE Haptics Symposium (HAPTICS) (IEEE), 848.

De Luca, A., Mattone, R., Giordano, P. R., and Bülthoff, H. H. (2009). “Control design and experimental evaluation of the 2D CyberWalk platform,” in 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems (IEEE), 5051.

Dim, N. K., and Ren, X. (2017). Investigation of suitable body parts for wearable vibration feedback in walking navigation. Int. J. Human-Computer Stud. 97, 34–44. doi:10.1016/j.ijhcs.2016.08.002

Dima, M., Hurcombe, L., and Wright, M. (2014). “Touching the past: Haptic augmented reality for museum artefacts,” in International Conference on Virtual, Augmented and Mixed Reality (Springer), 3–14.

Fancher, J., Smith, E., and Ziat, M. (2013). “Haptic hallucination sleeve,” in World Haptics 2013 Demo.

Frisoli, A., Jansson, G., Bergamasco, M., and Loscos, C. (2005). “Evaluation of the pure-form haptic displays used for exploration of works of art at museums,” in World Haptics Conference, Pisa, March (Citeseer).

Galica, A. M., Kang, H. G., Priplata, A. A., D’Andrea, S. E., Starobinets, O. V., Sorond, F. A., et al. (2009). Subsensory vibrations to the feet reduce gait variability in elderly fallers. Gait Posture 30, 383–387. doi:10.1016/j.gaitpost.2009.07.005

Gao, Y., and Xie, L. (2018). “Aesthetics-emotion mapping analysis of music and painting,” in 2018 First Asian Conference on Affective Computing and Intelligent Interaction (ACII Asia) (IEEE).

Giordano, M., Hattwick, I., Franco, I., Egloff, D., Frid, E., Lamontagne, V., et al. (2015). “Design and implementation of a whole-body haptic suit for “Ilinx,” a multisensory art installation,” in 12th International Conference on Sound and Music Computing (SMC-15) (Maynooth, Ireland: Maynooth University), 169–175.

[Dataset] Globalsources (2022). Jinlong machinery & electronics co. ltd. Available at: https://www.globalsources.com/Linear-motor/Z-axis-controllablefrequency-and-vibration-1184294093p.htm.

Hribar, V. E., and Pawluk, D. T. (2011). “A tactile-thermal display for haptic exploration of virtual paintings,” in The proceedings of the 13th international ACM SIGACCESS conference on Computers and accessibility, 221–222.

Insko, B. E. (2001). Passive haptics significantly enhances virtual environments. Chapel Hill, NC: The University of North Carolina at Chapel Hill.

Jerome, C. J., and Witmer, B. (2002). “Immersive tendency, feeling of presence, and simulator sickness: Formulation of a causal model,” in Proceedings of the Human Factors and Ergonomics Society Annual Meeting (Los Angeles, CA: SAGE Publications Sage CA), 46, 2197–2201.

Johansson, R. S., and Vallbo, A. B. (1979). Tactile sensibility in the human hand: Relative and absolute densities of four types of mechanoreceptive units in glabrous skin. J. physiology 286, 283–300. doi:10.1113/jphysiol.1979.sp012619

Johansson, R. S., Landström, U., and Lundström, R. (1982). Responses of mechanoreceptive afferent units in the glabrous skin of the human hand to sinusoidal skin displacements. Brain Res. 244, 17–25. doi:10.1016/0006-8993(82)90899-x

Jones, B., Maiero, J., Mogharrab, A., Aguilar, I. A., Adhikari, A., Riecke, B. E., et al. (2020). “Feetback: Augmenting robotic telepresence with haptic feedback on the feet,” in Proceedings of the 2020 international conference on multimodal interaction, 194–203.

Kennedy, P. M., and Inglis, J. T. (2002). Distribution and behaviour of glabrous cutaneous receptors in the human foot sole. J. physiology 538, 995–1002. doi:10.1113/jphysiol.2001.013087

Kennedy, R. S., Lane, N. E., Berbaum, K. S., and Lilienthal, M. G. (1993). Simulator sickness questionnaire: An enhanced method for quantifying simulator sickness. Int. J. Aviat. Psychol. 3, 203–220. doi:10.1207/s15327108ijap0303_3

Kruijff, E., Marquardt, A., Trepkowski, C., Lindeman, R. W., Hinkenjann, A., Maiero, J., et al. (2016). “On your feet! enhancing vection in leaning-based interfaces through multisensory stimuli,” in Proceedings of the 2016 Symposium on Spatial User Interaction, 149–158.

Lim, J., Yoo, Y., Cho, H., and Choi, S. (2019). “Touchphoto: Enabling independent picture taking and understanding for visually-impaired users,” in 2019 International Conference on Multimodal Interaction, 124–134.

Lipsitz, L. A., Lough, M., Niemi, J., Travison, T., Howlett, H., and Manor, B. (2015). A shoe insole delivering subsensory vibratory noise improves balance and gait in healthy elderly people. Archives Phys. Med. rehabilitation 96, 432–439. doi:10.1016/j.apmr.2014.10.004

Lovreglio, R., Gonzalez, V., Feng, Z., Amor, R., Spearpoint, M., Thomas, J., et al. (2018). Prototyping virtual reality serious games for building earthquake preparedness: The Auckland City Hospital case study. Adv. Eng. Inf. 38, 670–682. doi:10.1016/j.aei.2018.08.018

Marquardt, N., Nacenta, M. A., Young, J. E., Carpendale, S., Greenberg, S., and Sharlin, E. (2009). “The haptic tabletop puck: Tactile feedback for interactive tabletops,” in Proceedings of the ACM International Conference on Interactive Tabletops and Surfaces, 85–92.

Matsuda, Y., Nakamura, J., Amemiya, T., Ikei, Y., and Kitazaki, M. (2021). Enhancing virtual walking sensation using self-avatar in first-person perspective and foot vibrations. Front. Virtual Real. 2, 654088. doi:10.3389/frvir.2021.654088

Matsumoto, K., Ban, Y., Narumi, T., Yanase, Y., Tanikawa, T., and Hirose, M. (2016). “Unlimited corridor: Redirected walking techniques using visuo haptic interaction,” in ACM SIGGRAPH 2016 Emerging Technologies.

Meier, A., Matthies, D. J., Urban, B., and Wettach, R. (2015). “Exploring vibrotactile feedback on the body and foot for the purpose of pedestrian navigation,” in Proceedings of the 2nd international Workshop on Sensor-based Activity Recognition and Interaction, 1–11.

Mildren, R. L., and Bent, L. R. (2016). Vibrotactile stimulation of fast-adapting cutaneous afferents from the foot modulates proprioception at the ankle joint. J. Appl. Physiology 120, 855–864. doi:10.1152/japplphysiol.00810.2015

Nilsson, N. C., Nordahl, R., Turchet, L., and Serafin, S. (2012). “Audio-haptic simulation of walking on virtual ground surfaces to enhance realism,” in International Conference on Haptic and Audio Interaction Design (Springer), 61–70.

Nordahl, R., Serafin, S., and Turchet, L. (2010). “Sound synthesis and evaluation of interactive footsteps for virtual reality applications,” in 2010 IEEE Virtual Reality Conference (VR) (IEEE), 147.

Novacheck, T. F. (1998). The biomechanics of running. Gait posture 7, 77–95. doi:10.1016/s0966-6362(97)00038-6

Ortlieb, S. A., Kügel, W. A., and Carbon, C.-C. (2020). Fechner (1866): The aesthetic association principle—A commented translation. i-Perception 11, 204166952092030. doi:10.1177/2041669520920309

Pirker, W., and Katzenschlager, R. (2017). Gait disorders in adults and the elderly. Wien. Klin. Wochenschr. 129, 81–95. doi:10.1007/s00508-016-1096-4

Razzaque, S., Swapp, D., Slater, M., Whitton, M. C., and Steed, A. (2002). Redirected walking in place. EGVE 2, 123–130.

Reed, C., and Ziat, M. (2018). “Haptic perception: From the skin to the brain,” in Reference Module in Neuroscience and Biobehavioral Psychology (Elsevier).

Reichinger, A., Maierhofer, S., and Purgathofer, W. (2011). High-quality tactile paintings. J. Comput. Cult. Herit. 4, 1–13. doi:10.1145/2037820.2037822

Richardson, J., Gorbman, C., and Vernallis, C. (2013). The Oxford Handbook of new audiovisual aesthetics. New York, NY: Oxford University Press.

Riecke, B. E., and Schulte-Pelkum, J. (2013). “Perceptual and cognitive factors for self-motion simulation in virtual environments: How can self-motion illusions (“vection”) be utilized?” in Human walking in virtual environments (Springer), 27–54.

Ruddle, R. A., and Lessels, S. (2006). For efficient navigational search, humans require full physical movement, but not a rich visual scene. Psychol. Sci. 17, 460–465. doi:10.1111/j.1467-9280.2006.01728.x

Son, H., Gil, H., Byeon, S., Kim, S.-Y., and Kim, J. R. (2018a). “Realwalk: Feeling ground surfaces while walking in virtual reality,” in Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing System.

Son, H., Shin, S., Choi, S., Kim, S.-Y., and Kim, J. R. (2018b). Interacting automultiscopic 3D with haptic paint brush in immersive room. IEEE Access 6, 76464–76474. doi:10.1109/access.2018.2883821

Son, H., Hwang, I., Yang, T.-H., Choi, S., Kim, S.-Y., and Kim, J. R. (2019). “Realwalk: Haptic shoes using actuated MR fluid for walking in VR,” in 2019 IEEE World Haptics Conference (WHC) (IEEE), 241.

Strzalkowski, N. D., Mildren, R. L., and Bent, L. R. (2015). Thresholds of cutaneous afferents related to perceptual threshold across the human foot sole. J. Neurophysiology 114, 2144–2151. doi:10.1152/jn.00524.2015

Strzalkowski, N. D., Ali, R. A., and Bent, L. R. (2017). The firing characteristics of foot sole cutaneous mechanoreceptor afferents in response to vibration stimuli. J. Neurophysiology 118, 1931–1942. doi:10.1152/jn.00647.2016

[Dataset] Syntacts (2022). Syntacts, the tactor synthesizer. Available at: https://www.syntacts.org.

Terziman, L., Marchal, M., Multon, F., Arnaldi, B., and Lécuyer, A. (2013). Personified and multistate camera motions for first-person navigation in desktop virtual reality. IEEE Trans. Vis. Comput. Graph. 19, 652–661. doi:10.1109/tvcg.2013.38

Turchet, L., and Serafin, S. (2014). Semantic congruence in audio–haptic simulation of footsteps. Appl. Acoust. 75, 59–66. doi:10.1016/j.apacoust.2013.06.016

Turchet, L., Burelli, P., and Serafin, S. (2012). Haptic feedback for enhancing realism of walking simulations. IEEE Trans. Haptics 6, 35–45. doi:10.1109/toh.2012.51

[Dataset] Ultraleap (2022). Ultraleap. Available at: https://www.ultraleap.com.

Usoh, M., Arthur, K., Whitton, M. C., Bastos, R., Steed, A., Slater, M., et al. (1999). “Walking > walking-in-place > flying, in virtual environments,” in Proceedings of the 26th annual conference on Computer graphics and interactive techniques, 359–364.

Velázquez, R., Bazán, O., Varona, J., Delgado-Mata, C., and Gutiérrez, C. A. (2012). Insights into the capabilities of tactile-foot perception. Int. J. Adv. Robotic Syst. 9, 179. doi:10.5772/52653

Vi, C. T., Ablart, D., Gatti, E., Velasco, C., and Obrist, M. (2017). Not just seeing, but also feeling art: Mid-air haptic experiences integrated in a multisensory art exhibition. Int. J. Human-Computer Stud. 108, 1–14. doi:10.1016/j.ijhcs.2017.06.004

Witmer, B. G., and Singer, M. J. (1998). Measuring presence in virtual environments: A presence questionnaire. Presence. (Camb). 7, 225–240. doi:10.1162/105474698565686

Witmer, B. G., Jerome, C. J., and Singer, M. J. (2005). The factor structure of the presence questionnaire. Presence. (Camb). 14, 298–312. doi:10.1162/105474605323384654

Yang, T.-H., Son, H., Byeon, S., Gil, H., Hwang, I., Jo, G., et al. (2020). Magnetorheological fluid haptic shoes for walking in VR. IEEE Trans. Haptics 14, 83–94. doi:10.1109/toh.2020.3017099

Ziat, M., Hayward, V., Chapman, C. E., Ernst, M. O., and Lenay, C. (2010). Tactile suppression of displacement. Exp. Brain Res. 206, 299–310. doi:10.1007/s00221-010-2407-z

Ziat, M., Rolison, T., Shirtz, A., Wilbern, D., and Balcer, C. A. (2014). “Enhancing virtual immersion through tactile feedback,” in Proceedings of the adjunct publication of the 27th annual ACM symposium on User interface software and technology, 65–66.

Ziat, M., Savord, A., and Frissen, I. (2015). “The effect of visual, haptic, and auditory signals perceived from rumble strips during inclement weather,” in 2015 IEEE World Haptics Conference (WHC) (IEEE), 351–355.

Ziat, M., Li, Z., Jhunjhunwala, R., and Carbon, C.-C. (2021). “Touching a Renoir: Understanding the associations between visual and tactile characteristics,” in Perception (London, England: Sage Publications Ltd), 50, 102.

Ziat, M. (2022). Haptics for human-computer interaction: From the skin to the brain. Foundations and Trends® in Human–Computer Interaction. Now Publishers.

Keywords: mid-air haptics, vibrations, passive haptic, virtual walking, paintings, visual art, virtual reality

Citation: Ziat M, Jhunjhunwala R, Clepper G, Kivelson PD and Tan HZ (2022) Walking on paintings: Assessment of passive haptic feedback to enhance the immersive experience. Front. Virtual Real. 3:997426. doi: 10.3389/frvir.2022.997426

Received: 18 July 2022; Accepted: 17 August 2022;

Published: 07 September 2022.

Edited by:

Jin Ryong Kim, The University of Texas at Dallas, United States

Reviewed by:

Hyunjae Gil, Ulsan National Institute of Science and Technology, South Korea

Copyright © 2022 Ziat, Jhunjhunwala, Clepper, Kivelson and Tan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mounia Ziat, mziat@bentley.edu

†ORCID: Mounia Ziat, orcid.org/0000-0003-4620-7886; Hong Z. Tan, orcid.org/0000-0003-0032-9554

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.