95% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

SYSTEMATIC REVIEW article

Front. Virtual Real. , 17 November 2022

Sec. Technologies for VR

Volume 3 - 2022 | https://doi.org/10.3389/frvir.2022.974652

This article is part of the Research Topic Developing next-generation XR: trustworthy human-centered XR View all 3 articles

Social Virtual Reality (social VR or SVR) provides digital spaces for diverse human activities, social interactions, and embodied face-to-face encounters. While our digital bodies in SVR can in general be of almost any conceivable appearance, individualized or even personalized avatars bearing users’ likeness recently became an interesting research topic. Such digital bodies show a great potential to enhance the authenticity of social VR citizens and increase the trustworthiness of interpersonal interaction. However, using such digital bodies might expose users to privacy and identity issues such as identity theft: For instance, how do we know whether the avatars we encounter in the virtual world are who they claim to be? Safeguarding users’ identities and privacy, and preventing harm from identity infringement, are crucial to the future of social VR. This article provides a systematic review on the protection of users’ identity and privacy in social VR, with a specific focus on digital bodies. Based on 814 sources, we identified and analyzed 49 papers that either: 1) discuss or raise concerns about the addressed issues, 2) provide technologies and potential solutions for protecting digital bodies, or 3) examine the relationship between the digital bodies and users of social VR citizens. We notice a severe lack of research and attention on the addressed topic and identify several research gaps that need to be filled. While some legal and ethical concerns about the potential identity issues of the digital bodies have been raised, and despite some progress in specific areas such as user authentication has been made, little research has proposed practical solutions. Finally, we suggest potential future research directions for digital body protection and include relevant research that might provide insights. We hope this work could provide a good overview of the existing discussion, potential solutions, and future directions for researchers with similar concerns. We also wish to draw attention to identity and privacy issues in social VR and call for interdisciplinary collaboration.

The past 2 years have witnessed a remarkable trend towards the digitalization and online presence of human activities and social interactions: COVID-19 pandemic has imposed severe restrictions on people’s travel and physical contact, forcing many social activities, such as education, work, and healthcare, to be conducted remotely online (Wong et al., 2021). The unprecedented growing usage of social media and video conferencing tools, such as Facebook, WhatsApp, Zoom, and Microsoft Teams (Bary, 2020; Schultz and Parikh, 2020), are incredibly expanding how and where people can socialize, breaking the physical and distance barriers of social interaction, and transforming the norm of communications. Meanwhile, with the massive investments in the “metaverse” by tech giants such as Meta, Nvidia and Microsoft (Kim, 2021), the corresponding advances of HCI (human-computer interaction) technologies, and the popularity of affordable VR devices, leads virtual reality to becoming increasingly accessible and affordable to the public. In line with this trend, there are good reasons to believe that social virtual reality has great potential to lead the next revolution in the digitalization of social activities.

Social virtual reality (social VR or SVR) is a kind of multiuser VR application or platform that allows users to interact and communicate with each other within a virtual environment (McVeigh-Schultz et al., 2018; McVeigh-Schultz et al., 2019). define social VR as “a growing set of multiuser applications that enable people to interact with one another in virtual space through VR head-mounted displays” The concept of social VR is a successor of collaborative virtual environments (CVE), a term used in the 1990s that is defined as:

A broad class of desktop and immersive VR systems that support collaboration in a common virtual environment where each participant is represented by an avatar (Liu and Steed, 2021).

While the definitions above might have covered most of the multiuser VR applications, social VR also emphasizes cultivating social relationships, experiencing different virtual activities, and exploring self-representation (Maloney et al., 2021). Social VR has enormous potential for diverse applications, including but not limited to communication (Roth et al., 2017), gaming and entertainment (Roth et al., 2018; Wang, 2020), education (Le et al., 2015; Ripka et al., 2020; Foerster et al., 2021), collaborative work (Lohle and Terrell, 2014; Heath, 2021), and healthcare (Li et al., 2020; Shao and Lee, 2020). However, there are still many open questions including potential impacts of technological determinants on the experiences (Latoschik et al., 2019) or the overall design goals of such SVRs (Roth et al., 2015).

Meanwhile, social VR has been shifting from single-purpose virtual experiences to an alternative realm for human socio-cultural interaction (Dionisio et al., 2013). O’Brolcháin et al. (2016) have defined VRSN (Virtual Reality Social Network) as “the convergence of virtual realities and social networks” and hypothesized a scenario in which a significant portion of the world’s population are members of a social network, which either are immersive or at least offer immersive experiences. They also emphasized that in such a scenario, “users enter VRSN as themselves, rather than playing a character as in a game.”

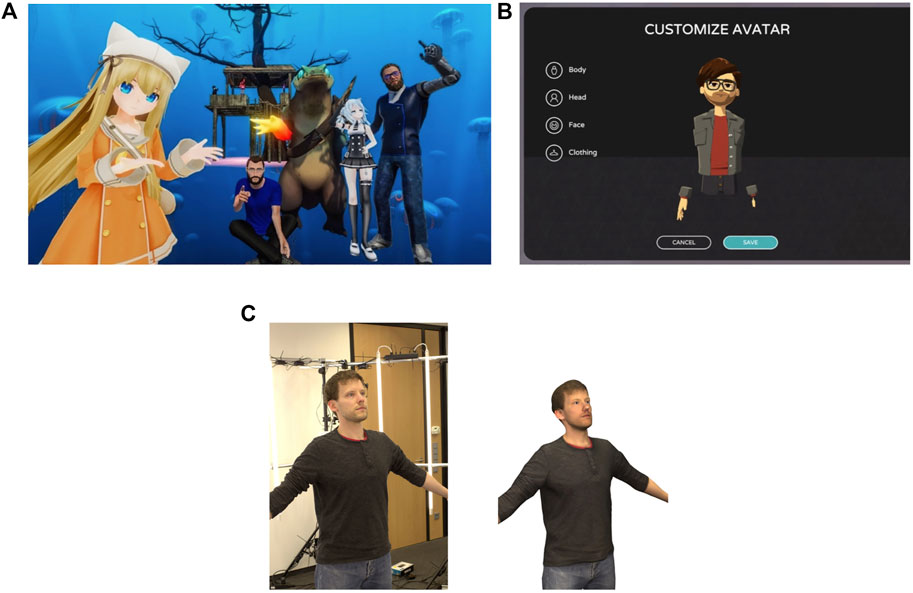

The graphical representation of a user in the virtual world is known as an avatar, which is usually required for users to be engaged in social activities, driven by the user’s movements (Bailenson et al., 2004) and creating virtual embodiment (Roth and Latoschik, 2020) for the user. In general, there are three types of self-representation alternatives in social VR (Figure 1). The first type of self-representation allows users to select from a very diverse set (Liu and Steed, 2021) or upload avatars created by users themselves. Social VR application VRChat1 is a typical example, where users can not only use cartoonish or anime characters but also appearances of elves, monsters, and robots as their avatars. The second type of self-representation is a customizable avatar that allows users to determine the color, shape, and style of different parts of the body (e.g., face, hair, eye, skin) and create their unique representations, which indicate their gender, race, aesthetics, hobbies, and so on. Customizable avatars are widely adopted by social VR applications, such as RecRoom2 and AltspaceVR3.

FIGURE 1. Examples of three types of self-representations in social VR. (A) Self-uploaded avatar. Example: various of self-presentations uploaded by users in VRChat5. (B) Customizable avatar. Example: the avatar customization interface in AltSpaceVR6. (C) Personalized (photo)realistic avatar. Example: generating a photorealistic avatar from a body-scanner. Panel reproduced from (Achenbach et al., 2017).

Recently, there has been a shift in users’ self-representations toward personalized (photo)realistic avatars that accurately capture the likeness of the them (O’Brolcháin et al., 2016). That is the third type of self-representation. For instance, in Spatial. io4—a virtual workspace focusing on meetings and media sharing, users can upload a photo of themselves to create realistic avatars that capture their appearance. To a large extent, such an avatar works as an identifier of the user, and this identity is consistent with who they are in real life. This type of avatars are usually referred to as personalized avatars (Chen et al., 2014; Ichim et al., 2015; Waltemate et al., 2018) or personalized realistic avatars (Fribourg et al., 2020). A personalized avatar reproduces the user’s appearance as realistic and recognizable as possible, serves as the proxy of their physical body in the virtual world, and uniquely determines the user (even though a user can have multiple such avatars). Thus, it can be considered a user’s digital body (Slater, 2008; Neustaedter and Fedorovskaya, 2009; Triberti et al., 2017; Ferrari, 2021). Studies suggest that using a personalized avatar can support creative idea generation (Marinussen and de Rooij, 2019), enhance users’ engagement, and make them feel more connected (Lucas et al., 2016). Social interactions with high-fidelity digital bodies in virtual reality will create a more substantial acceptance of virtual body ownership (VBO) (Latoschik et al., 2017). Waltemate et al. (2018) also point out that personalized photo-realistic avatars resembling the users will significantly increase presence [the sensation of being in the virtual world (Schuemie et al., 2001)] and dominance [the perceived state of own social dominance or submission (Waltemate et al., 2018)].

However, unlike physical bodies, the digital bodies can be altered or changed at the users’ will, which allow them to disguise their identity; identity theft will easily occur as cybercriminals “steal” or generate avatars of others and pretend to be them (Lake, 2020). In other words, the “authenticity” (whether one’s identity online or in the virtual world is who one claims to be and can be identified with a unique identity in reality) of a social VR citizen cannot be guaranteed. Social VR applications where users enter as themselves and use their personalized avatars (e.g., Spatial. io) are not without limitations. For example, identity verification is still lacking (in Spatial. io, so long as a photo is provided, users can generate and use a personalized avatar from that photo regardless of whom it belongs to), therefore the authenticity of the identity still cannot be guaranteed. It has been widely reported that identity thefts and phishing attacks exist in video conferencing using “deepfake” and similar technologies (Cole, 2020; McElroy, 2021). It will not be surprising that similar cybercrimes targeting avatars will occur in social VR.5,6

With the aforementioned future trend of social VR, there will be significant challenges and necessities in protecting users’ digital bodies, building the authenticity of social VR citizens, and protecting them from identity misappropriation. Such efforts are essential in enhancing the trust among social VR citizens and increasing people’s acceptance of social VR, as the trustworthiness of avatars has a significant and favorable influence on net-based social activities and collaboration (Bente et al., 2014; Chae et al., 2016; Pan and Steed, 2016; Pan and Steed, 2017). In addition, providing a trustworthy, intelligent, and comfortable virtual environment is the prerequisite for building human-centered social VR that everyone can trust and enjoy.

The presented systematic review addresses potential threats to users’ identity and privacy in social VR, with a specific focus on the protection of their digital bodies. While this is a relatively new field that receives insufficient scholarly attention, we aim to collect relevant research, identify research gaps, and call for attention and interdisciplinary collaboration from privacy-preserving computing technologies, artificial intelligence (AI), law, ethics, psychology, and human-computer interaction (HCI). As an outcome of this study, we aim to answer the following research questions or give insight towards potential answers backed-up by the current related work:

(RQ1) What are the existing and potential threats to privacy and identity when using digital bodies in social VR?

(RQ2) How can we protect digital bodies and users from those threats?

(RQ3) What is the current stage of digital bodies usage in social VR, in terms of usability and user acceptance?

(RQ4) How to indicate the authenticity of social VR citizens’ identity?

(RQ5) How do we evaluate the trust among social VR citizens?

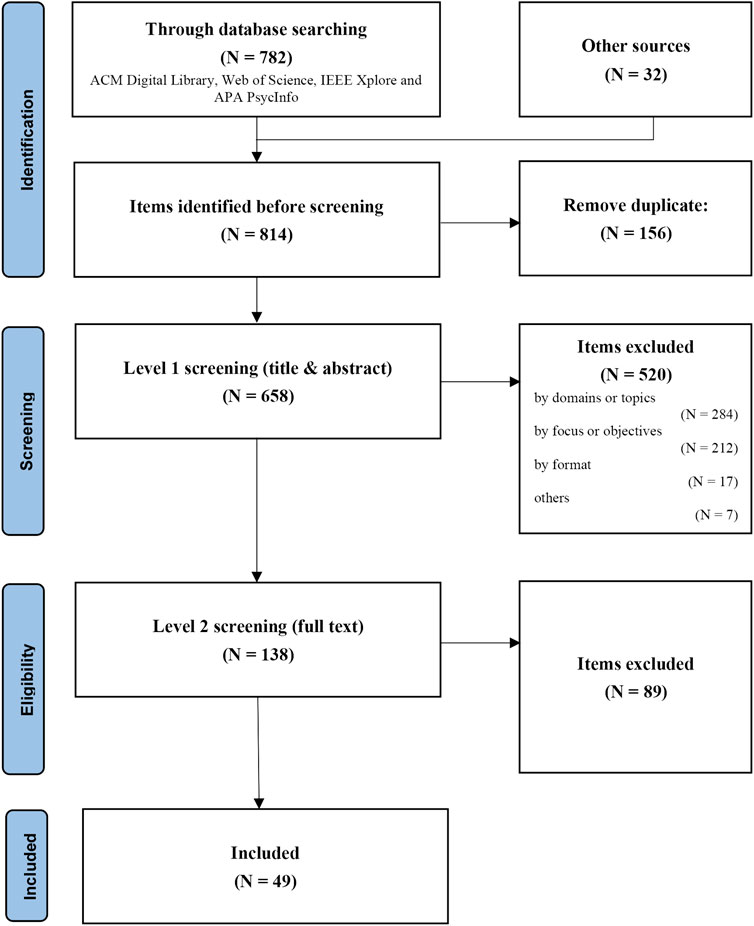

We performed a structured systematic review following the guideline and procedures from the Preferred Reporting Items for Systematic Reviews and Meta-Analysis (PRISMA) statement (Page et al., 2021). In the following sections, we describe our eligibility criteria, information sources, search strategy, data collection process, as well as the selection process. The exclusion criteria, the number of items after selection in each step of the selection process, and the number of items included in the review, are presented in a flowchart (Figure 2) adapted from (Page et al., 2021).

FIGURE 2. Flow diagram of the selection process, numbers of included and excluded items in each step.

We aimed to identify papers that either describe or raise concerns about the addressed threats to identity and privacy, provide technologies and potential solutions for protecting digital bodies in social VR, or analyze the relationship between the digital bodies and identities of social VR users.

Regarding privacy and identity issues in social VR, we only focus on those stemming from the use of digital bodies. Therefore, other privacy issues [many of which have been discussed by O’Brolcháin et al. (2016)] such as the misuse of data, and the prevalence of recording devices, will not be considered.

Regarding technologies and potential solutions for protecting digital bodies in social VR, we search for grounded evidence, technical solutions, or design guidelines in the area of virtual reality. While some articles provide mere ideas or inspiration for future research, we considered them not eligible and more suitable to be referred to in the discussion. Similarly, technologies in other areas that could be potentially applied to digital bodies and social VR are considered out of scope and only referred to in the discussion.

Due to the lack of research on the addressed topic and the desire for interdisciplinary knowledge, we did not limit our search to human-computer interaction, but have broadened it to include psychology, law, ethics and more. We also do not restrict the research methods and forms of study, so long as they are peer-reviewed academic journal articles, conference papers, book sections, reports, or doctoral theses strongly related to our research topic.

The databases that have been scanned are ACM Digital Library, Web of Science, IEEE Xplore and APA PsycInfo. For each source, we always used the search term queries shown in Table 1. The search keys must be included in the title, abstract or author keywords of the article (for Web of Science, we search with “topic,” which includes the three previously mentioned and “keywords plus”). We searched articles published within the period from January 2000 to January 2022. Although concepts such as “virtual reality” and threats in the cyberspace have been around since long before 2000, we believe that the vision back then was out of touch with current development and lacked reference value. We constructed the search term queries in two sets of combinations: 1) in the first set of combinations, we used (“avatar” OR “digital body”) as the first keyword AND (“identity” OR “privacy” OR “authentication” OR “authenticity” OR “protect”) as the second keyword; 2) additionally, we use the second set of combinations (“VR” OR “virtual reality”) as the first keyword AND “identity theft” as the second keyword. On databases that allow us to specify the language of paper while searching, we select “English.” Addition articles identified via other sources and cited reference searching are also added to the collection.

Data was collected and managed with Zotero7 and Zotero Connector Chrome extension. Titles, authors, abstracts and sources of all items were collected on 13 January 2022, either by automatic grabbing with Zotero Connector or by importing RIS files generated from the databases. Full texts of items were either collected by automatic grabbing with Zotero Connector (last access 13 January 2022) or by manually downloading during the selection process. All data access was provided by the library of the authors’ host institution.

The selection process is illustrated by a flow diagram in Figure 2. Firstly, duplicated items were removed. Secondly, we performed level 1 screening, primarily by their title and abstract, and a few by browsing the full text. In this step, we excluded papers by domains or topics (studies that are not related to virtual reality, avatar, or have different interpretations of such concepts), by focus or objectives (study in related domains or topics but lack relevance to our research questions and objectives), by formats (items with only abstract, poster, demo) and by other reasons (retracted items, items not written in English, items with no abstract and full text available).

Thirdly, we examined the remained items for eligibility by browsing the full text or thoroughly and intensively reading if necessary. Those with full text not accessible were excluded; items that are irrelevant to the topic or have a different focus will be excluded; items lacking groundwork and evidence to support their conclusions will be excluded.

Finally, items that remained from the previous process were included and analyzed in this systematic review.

As illustrated in Figure 2, we have collected 782 items from database searching and 32 items from additional sources. 658 different works were identified after removing duplicates.

In the level 1 screening, 284 items were rejected by domain or topic, including works that have different definitions of our search terms (for example, “avatar” can be its original concept within Hinduism, the film Avatar by James Cameron, or a profile image in early Internet parlance), works that are not related to social virtual reality (e.g., a significant amount of works study virtual characters in MMORPGs) or avatar (e.g., some works use “avatar” to describe the concept that we usually define as “agent,” a virtual character controlled by computer programs). 212 items were rejected by research focus due to the lack of relevance to our research questions and objectives (e.g., many works discussing the “identity” of avatars focus on users’ self-perception of gender and race). 17 items were rejected by format, and six items were rejected for other reasons. Some items have met multiple criteria to be rejected, but they are counted only once with the primary rejection reason. The screening yields 138 items after removal.

In the next level of full-text screening, we applied the eligibility criteria and further excluded 89 items. Five items were eligible according to our criteria, but were already included in a previous review by Jones et al. (2021) which was also one of the selected papers. To avoid repetition, we did not include these items. Finally, 49 papers are included in the results and analysis. An overview of the selected 49 papers can be found in Table 2.

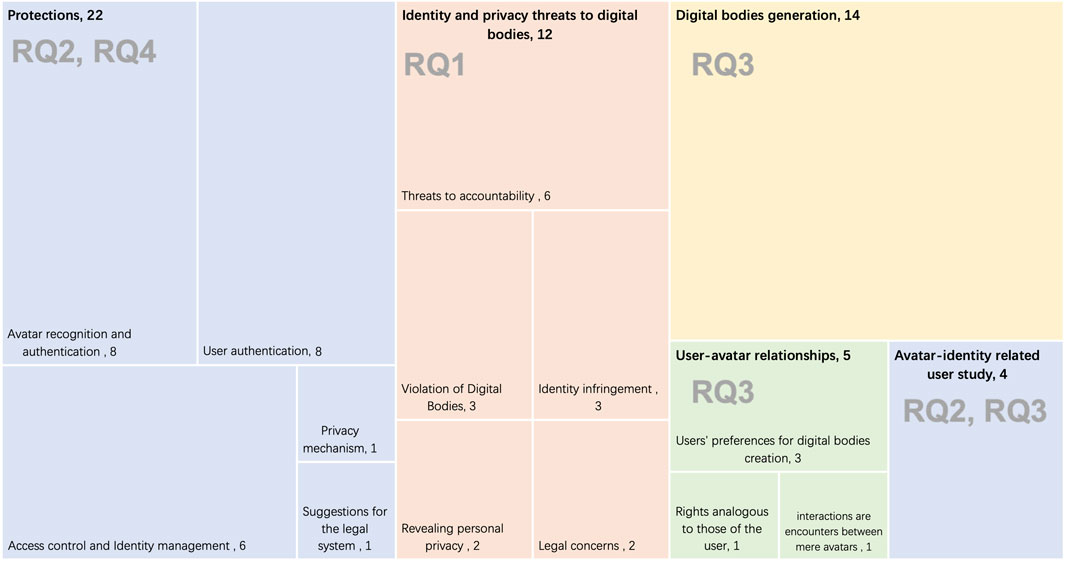

The selected papers have covered a wide range of topics, research methods and study characteristics. We first provide preliminary observations on selected works according to the published year, related domain, and the social VR platform used, constituting Section 3.1. Then, we present study characteristics and syntheses of results in five categories, namely digital bodies generation (Section 3.2), threats to privacy and identity (Section 3.3), user-avatar relationships (Section 3.4), protections (Section 3.5), and avatar-identity related user study (Section 3.6). The distribution of the selected papers across the five categories and the subtopics under each, as well as the relationship of each category to the Research questions, is shown in Figure 3.

FIGURE 3. Distribution of selected papers across categories and subtopics, and their relationship to the research questions. As some papers appear several times, the numbers do not add up to the total number of papers, nor number in each category.

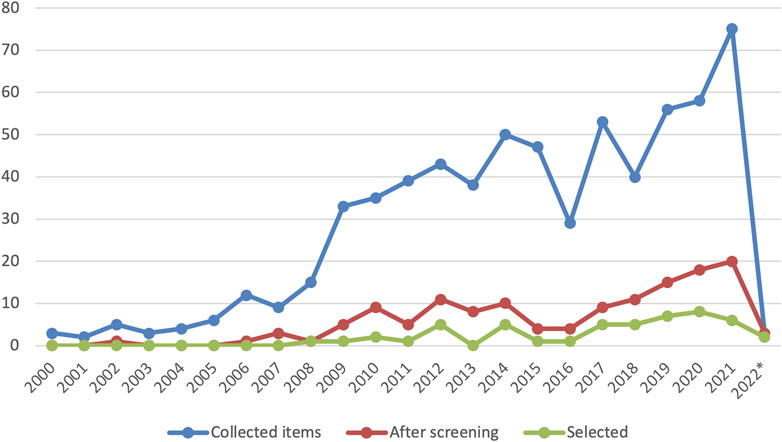

In Figure 4, we illustrate the number of publications of collected papers, papers after level 1 screening, and papers included in the final results, according to year. Prior to 2009, few relevant studies had emerged. Only six papers show some degree of relevance and passed the level 1 screening, while only one paper fell into our inclusion until 2008. The number of collected papers has a substantial increase in 2009, followed by a gradual upward trend until 2015 except for a slight decline in 2013, which indicates an increase in research interests in relevant topics, such as virtual world, social VR, and avatars. 14 papers published during this period are included in the final results. However, the year 2016 has witnessed a dramatic decrease of interest, only 29 papers from this year were collected, and the number of selected papers shows a similar trend. Since then, attention to this field has steadily increased until it peaked last year when a total of 21 papers passed the level 1 screening. Combined with the observation in Section 3.1.3, such an interesting change in trend may stem from the rise and fall of Second Life8 from Linden Lab, a virtual community that has once received considerable attention, and the popularity of the “metaverse” accompanied by advancements in VR technology in recent years.

FIGURE 4. Division of collected and selected items according to published year. *Database searching was conducted in January 2022, thus the Year 2022 only yields two selected item.

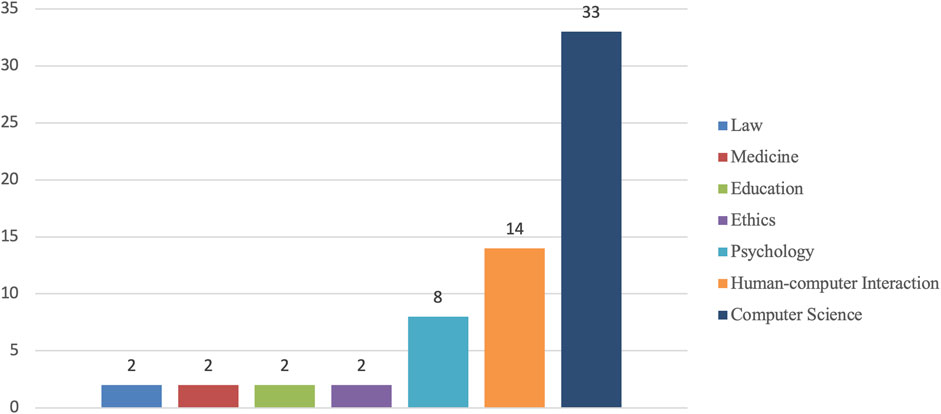

Due to the relatively liberal eligibility criteria, the included articles cover diverse research domains (Figure 5) range from law (N = 2), medicine (N = 2), education (N = 2), ethics (N = 2), psychology (N = 8), human-computer interaction (N = 15) and computer science (N = 33). On the one hand, the lack of research on the addressed research topics has forced us to broaden the scope of searching; on the other hand, it also reflects the fact that identity and privacy issues arising from the use of digital bodies have received a certain amount of attention from outside of the technical realm.

FIGURE 5. The research domains covered by the selected articles and the corresponding number of articles. Some articles cover more than one domain.

We have also counted what kinds of social VR platforms (including early desktop virtual worlds such as Second Life) are being used in research to conduct studies, collect data, recruit participants, or being analyzed. By 2017, Second Life was still dominant, and 11 papers conducted studies or collected data on Second Life. However, since 2018 Second Life has disappeared. Other social VR platforms Include AltspaceVR (N = 1), Entropia Universe (N = 2), Reaction Grid (N = 1), VRchat (N = 1), and self-implemented virtual worlds (N = 3).

While it is true that social VR users can choose between a variety of representations to suit different purpose, a unique personalized realistic avatar that captures the likeness of them is substantial to increasing body ownership, presence, and dominance (Waltemate et al., 2018), and preferred for trust-building (Bente et al., 2014).

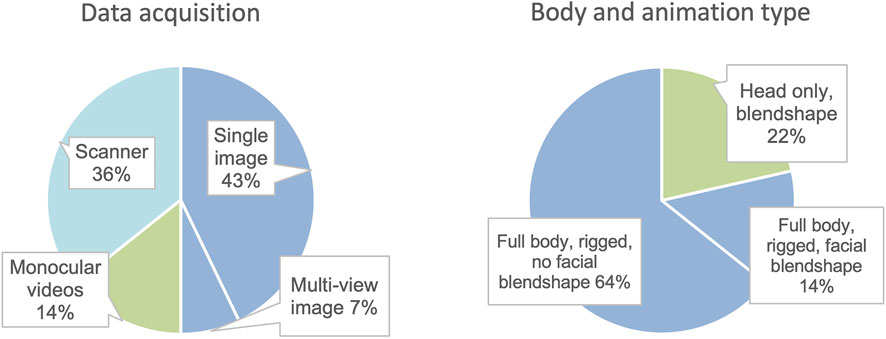

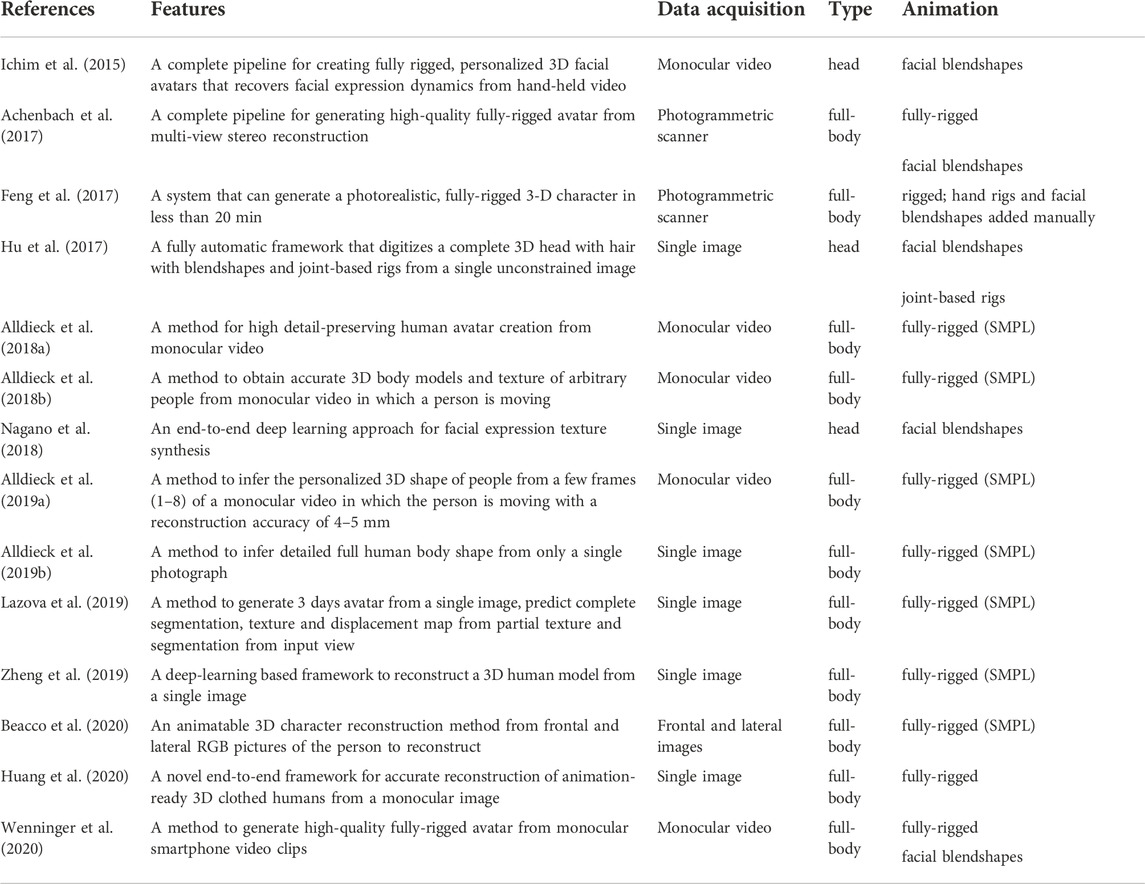

We looked for recent technical advancements that enable the automatic creation of personalized realistic avatars without the need for complex manual efforts. The generated avatars should not be simply a 3D reconstruction of a human body but should also be animatable and suitable for being used in social VR applications. 14 papers (Ichim et al., 2015; Achenbach et al., 2017; Feng et al., 2017; Hu et al., 2017; Alldieck et al., 2018a, 2018b, 2019a, 2019b; Nagano et al., 2018; Lazova et al., 2019; Zheng et al., 2019; Beacco et al., 2020; Huang et al., 2020; Wenninger et al., 2020) that presented novel methods or end-to-end pipelines are identified. As shown in Figure 6, they can be further classified according to data acquisition, which either use single images (N = 6), multi-view images (N = 1), monocular videos (N = 5), or photogrammetric scanners (N = 2). Three papers only focus on generating the avatar head, while the remaining 11 focus on full-body avatars. All the avatars or characters generated from these methods are animatable. Except for the three methods that generate facial avatars, all methods create rigged avatars, and two methods also enable facial blendshapes. It is also worth mentioning that methods in seven papers are based on the SMPL (Skinned Multi-Person Linear) Model (Loper et al., 2015). A summary of these papers includes their novel features, data acquisition, avatar types, and animation capability listed in Table 3.

FIGURE 6. Different data acquisition methods and body and animation types of digital body generation pipelines, illustrated as bar charts.

TABLE 3. This table summarizes the papers that presented methods or pipelines to automatically generate personalized (photo)realistic avatar from either images, videos or scanners.

Despite that the concerns for identity infringement and privacy issues that digital bodies may bring about have been highlighted frequently in literature, they are usually mentioned merely as background information and are rarely described and analyzed comprehensively. The following sub-sections are the syntheses from 12 papers on existing and potential identity and privacy issues that stem from or threaten the use of digital bodies in social VR.

As the representations of users and the medium of interaction between users and the virtual worlds, avatars as the direct digital proxies of the users are inevitably the target of violence and sexual harassment. Lemley and Volokh in their paper (2018) described a type of assault that are nowadays somewhat common: “virtual groping”—the offenders maliciously touching and rubbing body parts of the victim’s avatars. Although the victims are not “physically” being touched, they could still be seriously disturbed as if it happened in the real world. Falchuk et al. (2018) also discussed such criminal harassment to avatars targeted at female players, and reported that in Second Life, this kind of harassment has become so prevalent that developers need to publish a “primer” on how to avoid harm in such situations. Vanacker and Heider (2012) discussed the ethical issues raised from the harm done to avatars, including a case study of “The upskirt gallery,” where a Second Life user took sneak peek photos and exhibited several “upskirt pictures” of other avatars he encountered in the virtual world.

In addition, violation of avatars does not always appear during interactions. Violation can also be the offensive modification of the victim’s avatar (Lemley and Volokh, 2018), which includes using certain techniques to make the avatar appear naked, exaggerating the avatar’s appearance features to make it a grotesque caricature, to mention a few.

Identity theft is one of the most common concerns stemming from the use of avatars, especially personalized avatars. Falchuk et al. (2018) have described a kind of “social engineering hacking” in social VR. The offenders could impersonate a player’s friend to obtain information and profits, which might have harmed the privacy of both victims.

Lemley and Volokh (2018) discussed, from a legal perspective (based on United States law), whether users are protected when others deliberately use their names and likenesses to create an avatar. They argued that such impersonation may unfortunately be legally permitted, “especially when it is clear that this is just a pseudonym, can be a useful means of parody, commentary, and entertainment.” However, they also pointed out that such conduct should perhaps not be allowed without the consent of the impersonated person, for the fact that VR has a much higher visceral quality, and such impersonation may damage others’ perception of the impersonated person. Furthermore, they argue that ordinary people are more affected by avatar impersonation than celebrities. Therefore, the right of publicity needs to extend to a broader scope for VR and AR avatars.

Lake (2020) discussed identity infringement more specifically, noting that due to the highly interactive nature of social VR, “this type of infringement will be far more intimate than the theft of a still image or a static fake Facebook profile.” Lake further notes that as interactions in social VRs occur in real-time, users lack the means to authenticate offenders’ identities in a timely manner; meanwhile, the fluent e-commerce enabled by cryptocurrencies prevalent in social VR often helps offenders instantaneously get money from their victims. Interestingly, when it comes to the legal protection that a user’s avatar will receive (based on United States law), Lake believes that there are two scenarios: 1) trademark or copyright law applies to avatars that are unlike the users, and 2) the right of privacy and the right of publicity, should apply to personalized avatars that reflect the identity of users.

Anonymity and identity disguise also hinder the track of accountability of avatars in social VR. In their discussion of multi-user virtual worlds in eHealth applications, Gorini et al. (2008) pointed out that anonymity might negatively impact patients during e-therapy, as anyone can interact with the patients and there is no guarantee of who the patients are interacting with. Several papers (Mohamed and Yampolskiy, 2012; Yampolskiy et al., 2012) have revealed that terrorist groups were using virtual communities, such as Second Life, for communication and recruitment. Establishing the identity of individuals in such circumstances is challenging. Bader and Ben Amara. (2016) pointed out that identity masking in the virtual worlds allows users to create multiple avatars and exploit the virtual environments for crimes such as money laundering, economic fraud, identity theft, and cyber-terrorism.

In investigating virtual worlds as situated learning approaches, Conrad et al. (2017) raised their concerns about the impact of “fake” accounts. By comparing to “profiling cloning” and fake accounts on social networking sites such as Facebook, they suggested that for activities such as education, the necessity for anonymity is no more justified and will make it difficult to trace back to the person that can be accountable when deliberate disturbances and negative intervenes occur.

While personal privacy leakage is already a prevalent risk in the virtual world, the use of digital bodies may further exacerbate it. Deng and Ruan (2009) pointed out that users’ personal information such as name and address might be required in registration for creating an avatar, as many social VR’s policies oppose anonymous use. Besides, as the avatar is “an important extension of the user’s own privacy and identity with implication for intimacy and dignity,” in-world privacy concerns are also being raised as users’ daily activities will often be mirrored in their avatars (Deng and Ruan, 2009). Falk et al. (2021) have proposed such an example: a de-anonymization attack that by just secretly recording the movement of an avatar and mapping the correlations with the movement of the user in real life using a bespoke agglomerative clustering algorithm, the identity of the user might be revealed with an accuracy of 89.60%.

Lemley and Volokh (2018) concluded that existing law could not provide sound solutions against threats posed by VR and AR. They demonstrated the legal dilemma and identified potential questions through several case studies, such as how the law is likely to treat VR “street crimes” and the difficulties in criminal law enforcement and involvement. Thus, they suggested thinking ahead of such issues, and the legal doctrines and rules need to be adjusted to address the new situation. Similarly, Lake (2020) revealed the inconsistencies and procedural barriers brought about by dated Internet laws with a specific focus on identity misappropriation in social VR. According to them, these barriers include 1) Internet personal jurisdiction, 2) strong judicial preference toward protecting the anonymity of anonymous online users, and 3) sweeping immunity for Internet Service Providers (ISP), leaving plaintiffs without a defendant to sue.

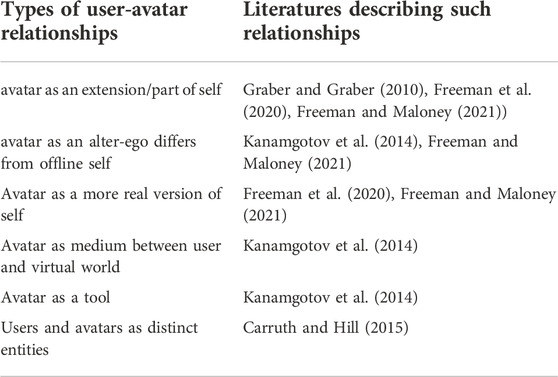

The relationships between users and avatars are central to the understanding of identity and privacy issues and are a prerequisite for ethical and legal discussions. Only when the nature of user-avatar relationship is clarified, can the violations to avatars be characterized and corresponding protection mechanisms be developed. We have selected five papers and further classified different user-avatar relationships described in these papers into six categories in Table 4. Based on the number of articles found and the distribution of their respective topics of interest, we believe that research under this category is underdeveloped. Nevertheless, we summarized their conclusions or key arguments on user-avatar relationships.

TABLE 4. We summarized typical user-avatar relationships that were observed or defined in literatures. These help us to understand how user perceive their avatar and how to develop protection mechanism for their digital body.

To a certain extent, users’ creation and customization of their digital bodies implicate how they perceive their avatars and how the user-avatar association is built. According to the evidence found, the creation of avatars reflects different preferences.

Kanamgotov et al. (2014) investigated different identity attributes during avatar creation and how avatar customization influences the degree of user-avatar association. In their first experiment—an unstructured interview with 16 computer science students, they discovered that experienced users tend to create an alter ego/false identity that is different from themselves with a higher level of customization and shares fewer identity similarities with their avatars. Besides, many participants prefer creating multiple avatars with different identities rather than using a single identity; Some chose to mask their own identities for privacy concerns. In their second experiment on a virtual campus, 12 participants customized their avatars and explored the virtual environment, followed by a semi-structured interview. They further conclude that users portray social identities through the creation of avatars. Most users prefer fabricating identities depending on the objects and environments they are exposed to rather than their own identities. We identified three types of user-avatar relationships from the study of Kanamgotov et al.: avatar as an alter-ego differs from offline self, avatar as medium between user and virtual world, and avatar as a tool.

Freeman et al. (2020) provide a different observation. A qualitative analysis was carried out on 30 semi-structured interviews of participants recruited from different social VR platforms. As a result, they identified three key themes that differentiate social VR avatars from those in traditional virtual worlds and online games. Firstly, avatar creation in social VR is considered challenging but fun and emotionally fulfilling. Secondly, higher engagement, intimacy, and personal feelings towards their avatar were provoked as users’ physical bodies became the immediate and sole interface between them and their avatars (avatar as an extension/part of self). Lastly, experiencing social VR avatars also encourage users to explore their own identity (avatar as a more real version of self), especially for those who might struggle with their gender or sexual identity. Their participants also show a stronger identification with their digital bodies in social VR than in other virtual worlds, as they have a stronger desire to make their digital bodies similar to themselves, which is inconsistent with the observation of Kanamgotov et al. (2014). The same data was further analyzed by Freeman and Maloney (2021), adding that the construct of avatars in social VR is normally based on consistency with the users’ physical selves or the social atmospheres of specific platforms. Besides, aesthetics, gender, race, and age/maturity play an essential role for users to perceive and interact with each other in social VR.

The inconsistent observations between (Kanamgotov et al., 2014) and (Freeman et al., 2020; Freeman and Maloney, 2021) may stem from the difference in their recruitment targets. Participants in the study of Freeman et al. (2020) were recruited from different social VR platforms, who might be more comfortable with socializinghe virtual world and more open to presenting their authentic selves on such platforms, comparing to participants recruited from school in the study of Kanamgotov et al. (2014). Both articles (Kanamgotov et al., 2014; Freeman and Maloney, 2021) highlight that environment or social atmospheres in social VR can impact users’ choice of avatar identity. In addition, only customizable avatars were considered in these studies, while personalized avatars, which reproduce the appearance of the user’s physical self, were not an option.

From an ethical perspective, Graber and Graber (2010) discussed whether an avatar shares rights analogous to the rights of the user. They started their argument “A Physical Body is Not Necessary for Legal Protection and Having Rights as a Person” with the evidence of psychological abuse and a will. Further, they argued that rights are already assigned to representations of an individual without biological corporeal such as prosthetic limbs, or without biological consciousness such as people in a permanent vegetative state, therefore could as well be assigned to digital bodies. Lastly, they presented a thought experiment to argue that avatars are appropriate candidates for rights and conclude that an avatar has rights analogous to the rights of the user, if a user considers an avatar an extension of the self.

In contrast, Carruth and Hill (2015) argued that users and their avatars should be considered to be distinct. Without further grounding, online avatar-mediated interactions should not be considered as encounters between users but rather merely between avatars. They investigated different accounts of identity and distinctness between users and avatars, concluding that they should be considered distinct as they instantiate distinct sets of properties. They then argued that user-avatar identification that is only relevant to their own avatar first-personally, is not sufficient to ground encounters between users. Therefore, they suggested that social and ethical issues described in literature (many have been described above) under the notion of the encounter between users, should be reconsidered.

In response to the problems mentioned above, we hope to find corresponding protection mechanisms or potential countermeasures in the literature. While 13 papers (Gavrilova and Yampolskiy, 2010; Boukhris et al., 2011; Mohamed and Yampolskiy, 2012; Yampolskiy et al., 2012; Bader and Ben Amara, 2014a; Bader and Ben Amara, 2014b; Bader and Ben Amara, 2016; Bader and Ben Amara, 2017; Falchuk et al., 2018; Pfeuffer et al., 2019; John et al., 2020; Lake, 2020) have explicitly addressed one or several identity and privacy issues and proposed corresponding protection mechanisms, works that have presented techniques or design guidelines that provide potential solutions in the area of virtual reality are also included and analyzed. Based on their characteristics and objectives, we have subdivided them into the following categories.

The traceability of an avatar is crucial to its acceptability in the social VR. Gavrilova and Yampolskiy (2010) are the first to investigate the field of automatic visual or behavioral authentication of non-biological entities (as they call Artimetrics), including software agents, avatars, and hardware robots. They have outlined the state-of-the-art visual and behavioral authentication and multi-modal system for avatar authentication. In another work (Yampolskiy et al, 2012) that was later published, they further defined and gave examples of six scenarios requiring Artimetrics, namely 1) matching a human face to an avatar face and 2) vice versa, 3) matching the face of one avatar to another avatar, 4) matching an avatar’s face from one virtual world to the same avatar represented in a different virtual world(s), and 5) matching a sketch of an avatar to an avatar’s face and 6)vice versa.

Following this field of study, Boukhris et al. (2011) presented a biometric identification system of avatar faces using support vector machines (SVM), and wavelet transforms, and achieved a 4.22% Equal Error Rate (EER) on a dataset of 100 samples collected from Second Life. Mohamed and Yampolskiy (2012) introduced two algorithms, Principal Component Analysis (PCA) and Wavelet PCA (WPCA), for avatar face recognition and tested them on two datasets of avatars from Second Life and Entropia Universe. Yampolskiy et al. (2012) described an avatar face recognition framework, including a set of algorithms to perform face detection and image normalization, face representation, and matching. Two scenarios within-virtual-world (avatar-to-avatar matching) and inter-reality-based (photo-to-avatar matching) was tested. In order to create a standard for evaluating avatar face authentication algorithms, Bader and Ben Amara (2014b) have developed the SID-Avatar database, a collection of 50 3D avatars from Second Life.

In contrast to automatic recognition, Tummon et al. (2019) were interested in avatar facial recognition by humans in a virtual airport passport control. A series of experiments were conducted to assess the feasibility of face-matching performed by participants in VR. In a follow-up work, Tummon et al. (2020) have further investigated how body language of avatars influences facial identification in the same scenario.

User authentications are the key to combating identity theft and achieving secured identity management. In their review of virtual reality authentication, Jones et al. (2021) identified and provided a comprehensive overview of 29 papers until October 2020. They categorized the authentication methodologies of selected works into four types: knowledge-based authentication, biometric authentication, multi-model authentication and gaze-based authentication. With further analysis of the performances and user studies of proposed protocols, they outlined the pros and cons of different authentication methodologies, pointed out the lack of study in this area and called for more investigation into multi-model schemes.

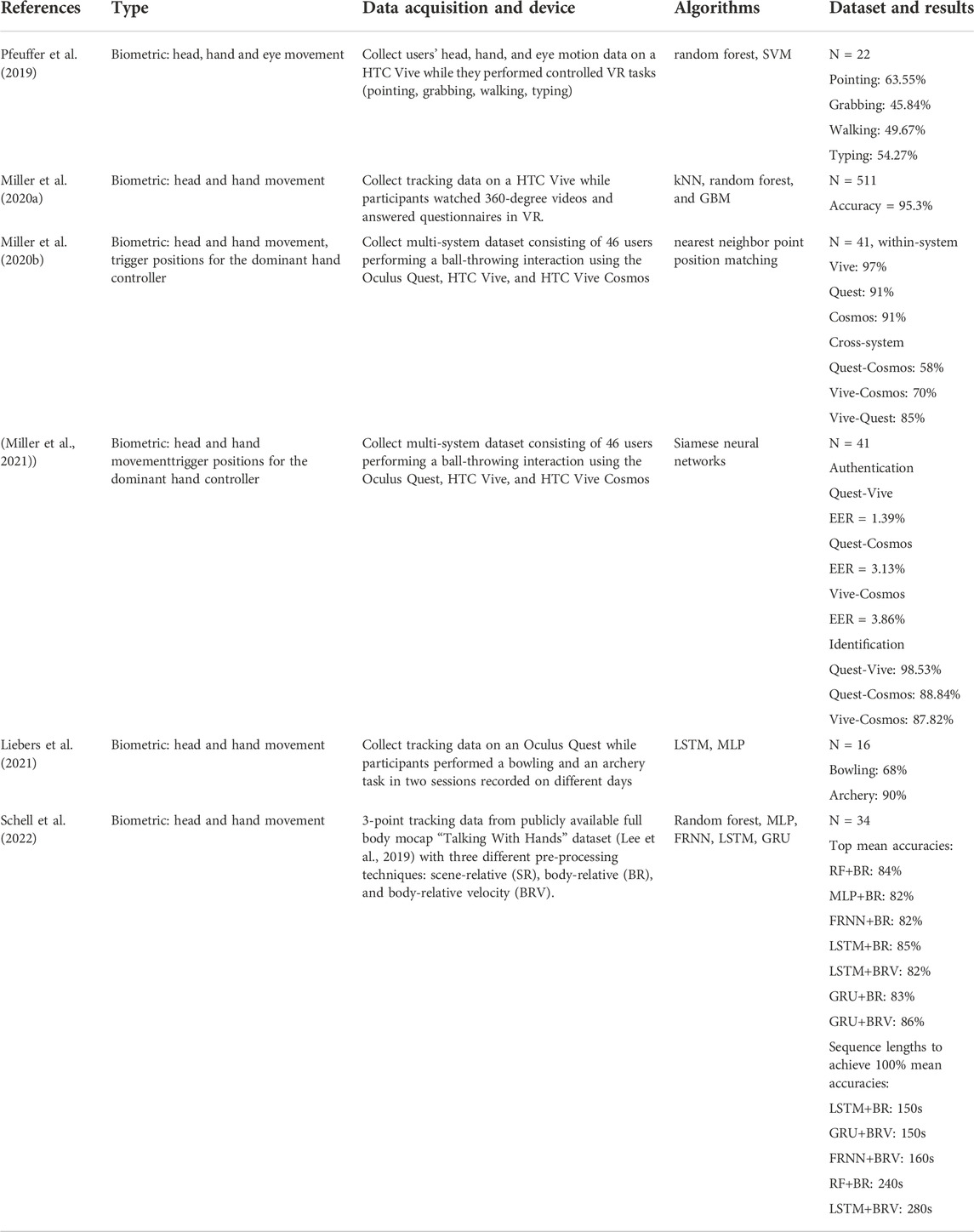

Additionally, we found six papers (Pfeuffer et al., 2019; Miller M. R. et al., 2020; Miller R. et al., 2020; Liebers et al., 2021; Miller et al., 2021; Schell et al., 2022) focusing on VR authentication that are not included in the review of Jones et al. An overview of these papers is listed in Table 5.

TABLE 5. An overview of six papers focusing on VR identification and/or authentication, that are not included in the reviewed of (Jones et al., 2021).

Notably, a latest work (Schell et al., 2022) compares different data representations and machine learning architectures for user authentication using movement data. By comparing three data representations: scene-relative, body-relative, and body-relative velocity data, the authors highlighted the importance of data pre-processing and concluded that scene-relative encoded data is not ideal, as it reflects session specific characteristics (i.e., position and orientation of the users) that could easily lead to overfitting of the models. In the comparison between five different prominent machine learning techniques (i.e., random forest, multilayer perceptron (MLP), and three RNNs: fully recurrent neural network (FRNN), long short-term memory (LSTM) and gated recurring unit (GRU)), RNN architectures outperformed random forest and MLP.

On the other hand, instead of seeking to improve the performance of authentication, John et al. (2020) implemented and evaluated a hardware-based eye-tracking defocus configuration that prevents biometric leaking during eye animation and iris authentication. Their evaluations reveal the security-utility trade-off between iris authentication and eye animation performance and suggest two different defocus configurations for each preference.

Another research direction is how to ensure that avatars themselves, as digital entities, can be protected from unauthorized access by imposters, and how the identity of the users can be managed within the social VR system. Although some authors have called for access control and identity management for avatars in social VR (Gavrilova and Yampolskiy, 2010; Yampolskiy et al., 2012; Bader and Ben Amara, 2014a; Bader and Ben Amara, 2016; Bader and Ben Amara, 2017; Freeman et al., 2020) are the only authors we found that explored this topic and proposed technical solutions.

They initially proposed a watermarking blind algorithm of an avatar’s face for securing access to virtual worlds (2014a). Biometric information (fingerprint) will be coded as a 128-bits sequence and inserted into the avatar’s face as an invisible watermark, by altering the vertex ordering of the mesh without changing the geometry. The watermark can be later extracted with a blind algorithm and compared with the original one according to Normalized Cross Correlation (NCC) for authentication. The method was proved robust to similarity transformations and signal processing attacks.

Bader and Ben Amara further describe an identity management framework relying on cancelable biometrics authentication (Bader and Ben Amara, 2016). This work is based on their previously mentioned watermarking schema but integrated with authorization mechanisms. A process model including components for 1) Identity Verification and Biometric Enrollment, 2) Avatar Watermarking, and 3) Internal Authentication and Authorization, was described.

In another paper (Bader and Ben Amara, 2017), they presented a virtual world platform for implementing and deploying biometrical access control against identity attacks from cybercriminals. They put forward a detailed methodology guide for designing such a virtual world equipped with a user interface for registration and biometric enrollment, simulation of 3D scenes, avatars, and objects, and a biometric-based logical access control framework.

Falchuk et al. (2018) presented design guidelines for privacy mechanisms in social VR (social metaverse as they call it) that help protect avatars from several threats with a focus on harassment and observation, as addressed in Section 3.3.1. They defined “privacy plan” as “A particular set of steps, initiated by an avatar, that enacts changes in the social metaverse such that the avatar has less risk of privacy intrusion when the plan is enac,” and gave several logical design examples of these privacy plans, such as “Creating a crowd of clones” identical to the avatar to confuse attackers about its actual activities, and “Private Copy” of a part of the virtual world that user can temporarily have exclusive access. To offer privacy plans to users, they have further designed a privacy framework with interactive menus that allow the user to select and control these privacy plans.

Targeting the procedural hurdles by dated Internet laws addressed in Section 3.3.5, Lake (2020) proposed several suggestions, which include revamping to what amount cyber interactions in VR reach a minimum contact that satisfies personal jurisdiction, and removing the shield of online anonymity when a certain IP address is involved in cybercrimes.

We were also curious if there is empirical research evaluating the interplay between social interaction and avatar-related identity manipulation, as these are the fundamental to trust-building in social VR.

To investigate the response to identity disguise, Segovia and Bailenson (2012) studied ostracism during avatar-based interaction, and manipulated ostracizers’ avatars to be either physically similar or dissimilar to the ostracizers. In their experiments during a ball-tossing game, participants who were ostracized from the game showed significantly higher aggressiveness towards unidentifiable ostracizers whose avatars are dissimilar to themselves, and higher aggressiveness towards ostracizers who have chosen to disguise their identity rather than have been assigned to. Their results indicate that identity manipulation might not be easily accepted during social interaction in VR.

Feng et al. (2014) presented their avatar generation method from the body scan, and in their experiments incorporated both the original scanned subjects’ and other people’s gestural styles into the avatars. Participants who know the subjects evaluated the subjects’ avatars with original gestural style as more recognizable than those without. They also suggested that their framework could be used to investigate the impact of gestural style on the trust and liking towards avatar. This study sheds light on whether people can recognize identity misappropriation when encountering imposter avatars.

Fysh et al. (2021) provided a user-friendly photorealistic avatar generation method for the psychological research community, and demonstrated a series of studies exploring the identification of the avatar faces with respect to the correspondence of the avatars with their real-life counterparts. Their research provides off-the-shelf workflows and research methodologies for future psychological research on digital bodies and identity.

Pakanen et al. (2022) presented two studies on how users want to see other users in VR and AR multi-user systems, focusing on avatar visual designs. They created 36 comparable avatars with six styles ranging from Photorealistic, Hologram, Cartoon, Shadow, Robot, to Furry, and six body alterations from full body to only eye and mouth. In the first study, semi-structured interviews were conducted with 16 participants after they experienced a multi-user system in VR or AR; a follow-up online survey further collected 43 participants’ preferences for avatar designs. Results from these studies suggested a preference for seeing other users with photorealistic avatars in both VR and AR scenarios due to their humanlike representation and affordances for interaction. They further inferred that use cases influence how users want to see others. Recognizable avatars in professional use cases are desired, as trust-building for users is highly impacted by the certainty of knowing whether the avatars they are interacting with are who they expect to be. Hearing the voice of the avatar will further enhance the trust that the avatar is really the person that it is supposed to be and not an artificial agent.

This work aimed to give an overview of threats to users’ identity and privacy in social VR with a specific focus on the digital bodies. We tried to collect relevant research, discussion and identify potential solutions. 49 studies were selected, analyzed, and presented according to five topics: 1) digital bodies generation, 2) threats to privacy and identity, 3) user-avatar relationships, 4) protections and 5) avatar-identity related user study.

Firstly, research into personalized avatar generation has produced significant results. Many proposed approaches are able to generate personalized avatars that can directly be animated and used as digital bodies in social VR in a short period of time. These approaches also vary in data acquisition, from requiring photogrammetric scanners to using single image. Secondly, we extracted and summarized existing and potential threats to users’ privacy and identity described in literature. While the violation of digital bodies is most severe in current social VR, identity infringement such as identity theft raises most concerns from the use of personalized avatars. Meanwhile, the accountability of social VR citizens is being threatened by the anonymity. Besides, the use of digital bodies may also increase the risk of personal privacy leakage. Thirdly, we classified different user-avatar relationships, in order to better understand the nature of threats to identity and privacy. Then, we presented several potential countermeasures in response to the threats that we identified, including avatar recognition, user authentication, access control and identity management, and privacy mechanisms. Lastly, we presented several empirical research that evaluate the interplay between social interaction and avatar-related identity manipulation.

The initial results have revealed a severe lack of research and attention on the addressed topic. Although some inspiring works were found, the focuses and contributions of most related research appear to be scattered. Apart from avatar generation and user authentication, many reviewed subtopics lack comprehensive research and influential results.

One of our initial objectives of this work was to find research in the field of information security and human-computer interaction on the issue of identity theft as addressed in Section 3.3.2. Surprisingly, not only has the issue received little attention, but almost all in-depth discussion has come from the legal perspectives only (Lemley and Volokh, 2018; Lake, 2020). The reason for this might be that the technology for photorealistic avatar creation is still in its early stage. Despite that many believe users entering virtual worlds as themselves and in their own likeness is the future trend of social VR (O’Brolcháin et al., 2016), this is not yet the reality. Naturally, there are few real-life cases of such identity theft.

Although violations against digital bodies and the threats to accountability addressed in Section 3.3.1 and Section 3.3.3 have been widely reported by the media (Basu, 2021), it is worrying that this has not received sufficient attention from the academic community, and that the relevant discussion seems to be still at the level of the Second Life era.

In contrast, the industry seems to be devoting more attention to these issues. For example, social VR developers have started implementing protection mechanisms against harassment (Kelly, 2016; Kaleem, 2022). In addition, with the development of blockchain technologies and Web 3.0, related concepts such as self-sovereign identity (SSI) and Non-Fungible Token (NFT), have emerged and are being “touted” as perfect answers to the future of the “metaverse” (Tweeddale and Yumasheva, 2021; Sawers, 2022).

Nevertheless, we will still attempt to answer the research questions as initially posed, given the available information at hand.

These threats to privacy and identity include the violation of the digital bodies, identity infringement, threats to accountability, and the risk of revealing personal privacy. The lack of protection and procedural barriers in existing law has also exacerbated the issues.

Some of these threats have long been prevalent in traditional social networking services (SNS). Identity infringement has long plagued social media such as Facebook. Many fake accounts have been created through stolen profiles and photos to engage in fraudulent activities (Picchi, 2018). In comparison, in social VR, not only does the high interactive nature lead to identity infringement being more intimate (Lake, 2020), but the presence of digital bodies may also result in more disorienting and contagious fraudulent activities. Similarly, in traditional SNS, the reveal of personal privacy remains a big issue, not only in terms of what users consciously provide but also what they unconsciously provide, such as their online activities. In the case of social VR, users’ daily activities could be reflected through their digital bodies in the virtual world and lead to a higher level of revealing. In addition, online games and social media are under threats to accountability. With the protection of anonymity, malicious attacks and defamation are commonplace. In social VR, a salient issue resulting from these threats is the violation of digital bodies.

These similarities and differences are also reflected in practices. Combined with relevant media reports, violations to the digital bodies have occurred the most commonly. Based on the current state that social VR is being used more for leisure and socializing, the damages are mostly psychological and emotional. However, suppose social VR would play an essential role in our life and even become an alternative realm for human social activities in the foreseeable future, identity infringement might prevail and cause more substantial harm, such as the defamation of personal reputation and theft of property.

As we have remarked previously, such threats and issues were mostly mentioned merely as background information and comprehensive description and analysis from technical background is still inadequate. Therefore, we look forward to systematic discussions and analyses of threats as well as reasonable predictions of potential problems that have not yet occurred, by researchers who have extensive experience and sufficient knowledge in this field. As an example, Adams et al. (2018) have presented grounded work on VR security and privacy perceptions, which provides an extensive understanding of perceived risks in VR and detailed analyses of the state of VR privacy policies. Similar works on digital bodies would be very rewarding, as they would raise the awareness of users, developers, and researchers and provide accurate and comprehensive understandings for interdisciplinary research.

Research directions we have identified so far include in-world avatar recognition, user authentication, access control and identity management, and other privacy mechanisms against harassment. Unfortunately, the presented works are not sufficient for the threats mentioned above.

In-world avatar recognition provides the possibility to identify and trace avatars involved in cybercrimes and other malicious activity. At the current stage, most works have been done in avatar face recognition, and have achieved promising performance. As the reviewed works in this area date from 2011–2014, we expect that developments in AI technology over the last few years will lead to further performance breakthroughs. However, in our opinion, avatar face recognition alone might have its limitation in practical application. At a system level, user activity can easily be tracked based on back-end data such as user id without using graphics as a medium; attackers can also use generic self-presentations or steal other’s avatars to mask their identities.

Thus, in order to improve the usability, more investigation to other recognition modalities, such as behavioral recognition, is desired. The legitimacy of tracking avatar activities may also raise legal and ethical concerns, and a future research direction could be about how such tracking can be done legally while guaranteeing the citizens’ privacy in the virtual world.

Currently common social VR applications are no different from traditional SNS, and generally use accounts with passwords, or bind with VR device accounts, to authenticate users. Such methods could be vulnerable to observation attacks and unrealistic for instant checks. On the other hand, biometrics such as fingerprint, voice recognition, and camera photo ID have proved to be reliable for identity verification in virtual interaction (Semple et al., 2010), many services are already offering multi-factor authentication (MFA) (Ometov et al., 2018) using biometrics, such as fingerprint on mobile platforms. The use of VR devices, on the other hand, offers more options for biometrics acquisition than other platforms.

With the advancing artificial intelligence technology, promising progress has been made on novel authentication algorithms and mechanisms for VR in the past few years. With the various sensors of VR devices and users’ unique biometrics, these authentication methods, although not yet mature, have achieved a high degree of accuracy. However, most current methods still require specific scenarios or tasks, and the way forward should be continuous authentication of users regardless of the environment and their activities. Additionally, research such as John et al. (2020) that considers the trade-off between the use of biometrics and potential privacy breaches deserves more attention.

In addition, we cannot ignore the potential problems arising from the use of artificial intelligence in social VR, such as bias in AI. It has been widely reported (Siwicki, 2021; Dilmegani, 2022) and researched (Ferrer et al., 2021) that the prejudiced assumptions in the algorithm development or prejudices in the training data could contribute to bias in the output of machine learning algorithm, and causing discrimination. For instance, a face recognition algorithm trained with a dataset containing mainly Caucasian people may perform poorly in recognizing people of other races. Similar bias could also exist when utilizing AI for VR authentication. For example, algorithms based on movement or physiological biometrics may be impractical or even discriminatory to people with disabilities. In human-centered social VR, it will be essential to consider the needs of different groups of people and ensure that viable alternatives are available to the appropriate technology.

Nowadays, privacy preserving security has become a key focus of information security and biometrics technologies, but in the VR domain, very little consideration has been given. In this regard, we expect authentication research in VR to be on par with mainstream security research, and possible directions include cancelable biometrics, homomorphic encryption, secure two-party computation, and so forth (Baig and Eskeland, 2021).

On the other hand, VR authentication can also be used without being restricted to existing VR sensors. Authentication modalities such as physiological biometrics (iris, fingerprint, EEG, ECG) (Ryu et al., 2021) with the support of additional wearables could also be considered. With VR devices such as the HP Reverb g2 Omnicept equipped with pupillometry, heart rate measure and face camera entering the market, there are reasons to believe that future VR devices will offer more authentication possibilities.

The protection of identity and the protection of digital bodies might be two separate aspects of the problems that together form a link between users, digital identities, and digital bodies. The idea of utilizing watermarking for avatar protection proposed by Bader and Ben Amara (2014a) deserves further exploration, and some recent studies on 3D mesh watermarking can be referred to (Ali, 2019; Malipatil and Shubhangi, 2020; Beugnon et al., 2022; Yoo et al., 2022).

NFTs, on the other hand, might have provided a different direction and have received a lot of attention in the identity management of the “metaverse.” As a token stored on the blockchain which represents a unique entity, an NFT can establish a verified and public proof of ownership of a digital item, as well as a digital identity or an avatar (Sawers, 2022). However, since what an NFT provides is merely proof of ownership and the avatar itself remains unchanged, it is still questionable how a social VR system can verify and secure such ownership. Nevertheless, nowadays, there are already productions in the form of cross-platform avatars created based upon such concepts, such as Ready Player Me9.

VR involves a lot of sensitive information about users, such as biometric information and personal activities. The leakages of such information will no doubt threaten users’ privacy and increase the risk of identity theft, as profiles of victims can be generated from such personal information. Therefore, it is important for identity management to minimize the risk of leaking such, and federated identity (Jensen, 2011) and self-sovereign identity (SSI) (Preukschat and Reed, 2021) are worth explorations. Traditional identity management processes and stores personal data in centralized databases, and the verification and authentication process are operated with a central authority, which “increases the risks of abuse and more easily rouses desires to use the system beyond the purposes for which it was originally intended (The European Data Protection Supervisor (EDPS), 2018).” In order to deal with such issues, decentralization has been a visible trend in identity management (Allen, 2016). SSI is a concept for digital identity driven by the development of cryptography, distributed networks, cloud computing and blockchain technologies (Preukschat and Reed, 2021). SSI allows individuals to fully own and manage their digital identities that exist independently from services (Mühle et al., 2018). The implementation of SSI should allow the digital bodies as part of the identity data to be persistent and verify and authenticate the identity using decentralized identifiers (DIDs) (W3C Community Group, 2020) without giving away the control of their personal data. However, there is still a long way to go before these theories can actually be applied to the social VR.

Privacy mechanisms are tools for users to protect their avatar and personal space in the virtual world, especially against harassment. Falchuk et al. (2018) in their protection mechanisms, have designed a privacy framework with interactive menus that allow users to customize their privacy settings. Many social VR applications such as Horizon Worlds and AltspaceVR have implemented “personal bubble” and similar features to prevent strangers from intruding personal space (Kelly, 2016; Kaleem, 2022).

In addition, adjusting the rendering of avatars could also provide options for users to protect themselves. For instance (Wolf et al., 2021), propose interaction techniques that allow users to actively control the transparency of other avatars, in order to reduce occlusion in overcrowded space. Such techniques could also be a good practice to mitigate the impact of harassment on victims, or to make unwanted users disappear. Alternatively, users could be given the option to blur their own avatars in untrusted environments, similar to the effect presented by Wang et al. (2021) in their experience-sharing reconstruction methods. Allowing users to switch self-avatar among different levels of fidelity (e.g., sketch-like rendering vs. cartoon-like rendering vs. realistic rendering, as Volonte et al. (2016) present in their study) could also be an option, for users to keep their personalized presentations while hiding identifiable details. We believe there are still many possibilities worth exploring.

On the other hand, empirical studies focusing on such privacy mechanisms are still inadequate. Further investigation should be conducted to quantify the effectiveness of privacy mechanisms and impact to user experience.

Despite that no relevant research has been found, it is reasonable to assume that AI technology will have massive potential for the prevention and remediation of various threats and cybercrimes in social VR. Many video game developers have started to use AI algorithms to detect cheating (Sreedhar, 2020; Jonnalagadda et al., 2021) or other abnormal activities. Facebook uses AI to detect and label harmful content and misinformation generated on its platform (Schroepfer, 2019). Similarly, AI can also be applied in social VR systems to identify irregularities, including identity theft, the violation of digital bodies, and so on. Furthermore, AI-based credit systems can be applied to determine the trustworthiness score of users by combining user authentication, user behavior analysis and other user data.

However, such monitoring is often accompanied by controversy, and the above scenario is perhaps reminiscent of the future portrayed by many anti-utopian science fictions. AI can be valuable tools to protect users’ privacy and security, but excessive and inappropriate use of AI can also lead to social surveillance and pose threats to autonomy (O’Brolcháin et al., 2016). Algorithm-based personalization contents in the virtual world could isolate users in their “filter bubbles,” and the analysis of users’ activities and behaviors could also enhance the manipulation of users. While exploring how AI technology can be used to protect users and to build intelligent human-centered social VR, discussion and awareness should also be raised to prevent social VR itself from posing threats to privacy and autonomy.

Personalized avatars can nowadays be created without complex manual efforts and highly capture the likeness of users. However, these technical advancements mostly remain as prototypes and not yet been deployed in commercialized social VR platforms. On the other hand, social VR users also tend to hide their real-life identities in virtual communities. As a results, personalized avatars as digital bodies are not yet popular among users.

Despite much progress in the generation of personalized avatars, they are still not readily accessible to the general public. One reason lies in that some of the methods required expensive equipment and setup, such as camera rigs (Achenbach et al., 2017; Feng et al., 2017). Another reason might be that most research focus more on the reconstruction realism and performance, while paying less attention to the deployment and the acquisition of users’ data. Additionally, most generation pipelines applied AI automation, which again could lead to bias in outputs. Whether the realism in appearance reproduction varies across gender, age, or race, remains to be explored. It is also worth considering and exploring whether these avatar generations can serve people with disabilities.

User acceptance also play an essential role. Firstly, the use of avatars is perceived differently by users. While many people see avatars as an extension or part of themselves, others might see them as only communication tools. Although some suggest that many social VR users tend to use avatars that are consistent with their offline selves (Freeman and Maloney, 2021), sharing real-life identities in public virtual environments may raise privacy concerns and may still not be preferred (Kanamgotov et al., 2014). The change of virtual environments might also be relevant to user acceptance. In the case of customizable avatars, the creation of avatars is strongly related to the virtual environments and usage scenarios (Kanamgotov et al., 2014; Freeman and Maloney, 2021). Whether specific scenarios of social VR (such as education and work collaboration) encourage the usage of personalized avatars remains to be explored. Meanwhile, we recognize that digital bodies also have the potential to help users in their exploration of self-identification, such as gender (Freeman et al., 2020; Freeman and Maloney, 2021). It also raises the question of whether digital bodies necessarily need to resemble their users? Perhaps for some people, a unique and identifiable digital body created in accordance with their ideal self could better represent their identity in the virtual world.

Therefore, we have identified the following research gaps. In term of usability, the deployment of personalized avatar generation should be further considered, and we look forward to non-biased, low-cost and user-friendly pipelines to be deployed on social VR platforms that allow users to generate and use their own avatars. In term of user acceptance, it would be worthwhile to investigate further what external factors influence the way users perceive their avatars. Different usage scenarios may strongly influence the willingness of users to use personalized avatars and to reveal their self-identity in the virtual world.

Protecting social VR citizens from the threats of privacy and identity issues will encourage them to enter the virtual world as themselves. However, how can users tell whether the avatars they encounter can be trusted? The appropriate way of indicating the identity authenticity of social VR citizens is yet another research gap that has not been explored.

Some traditional SNS such as Twitter and Facebook usually provide a “badge” for accounts that have the owners’ identities verified, and users tend to give higher credibility to these “verified accounts” (Vaidya et al., 2019). Similar mechanism can be adapted to social VR communities that provides identity status for authenticated users. It is also worthwhile marking social VR citizens who have suspicious identities or activities. One the other hand, Pakanen et al. (2022) suggests that visual designs of avatars will influence how other users perceive them and might have an impact on trust-building. It will be interesting to find out whether explicit information of authenticity (e.g., an identity status) or implicit indicators (e.g., the designs or rendering styles of the avatars) can effectively communicate the trustworthiness of social VR citizens and influence their trust among each other. We can also adapt certain UI designs from video games. For instance, in many video games, enemy and friendly characters are often highlighted in certain way (e.g., outlined) and distinguished by colors. Similar approach can be applied for trustworthy and untrustworthy social VR citizens.

Research in this direction can aim at identifying the factors that influence users’ trust during social interaction and creating a design guideline that gives requirements and recommendations for a social VR system that can effectively communicate the authenticity and trustworthiness level of its citizens.

The efforts of building authenticity and preventing identity infringement are not just to protect the user from potential harm, but ultimately to enhance the trust among social VR citizens. To that end, it is of great importance to evaluate the trust.

Self-reporting questionnaires are the dominant methods for measuring trust. However, most self-reporting methods may not be ideal for measuring specific trust and can reflect the internal feelings less accurately (Chan, 2010), and behavioral measurement are sometime preferred. Bente et al. (2014) investigate how photorealistic avatars and reputation scores affect trust-building in online transactions by letting participants perform the Trust Game online with a static avatar image. Hale et al. (2018) use a Virtual Maze experiment, in which participants need to navigate through a virtual 3D maze, and they can seek advice about which door to choose from two virtual characters. Perceived trustworthiness is measured by how often participants seek and follow advice from each character along. In addition, other behavioral clues are also investigated to measure trust or perceived trustworthiness, such as collaborative behavior in a shared virtual environment (Pan and Steed, 2017) and duration of mutual gaze in conversation (Aseeri and Interrante, 2021).

Unfortunately, apart from the study mentioned above, there has rarely been research on the measurement of the trust among social VR users. Future research can further aim at creating trust measurement paradigms that are specific for social VR citizens. Physiological data acquired by VR sensors might also provide the potentials for trust measurement.

In previous sub-sections, several research gaps have been identified, and several future research directions have been pointed out. In this sub-section, we aim to summarize and call for attention to these research directions, which include novel research directions waiting for exploration in the field of social VR, as well as technical advances in relevant areas that show potential to solve addressed issues.

To raise the awareness of issues and provide extensive background for relevant research, We need more comprehensive discussions and analyses of threats and violations to digital bodies in social VR. Such studies can come not only from technical perspectives but also from ethical and legal perspectives.

Currently, most photorealistic avatar generation techniques are not easy to be deployed and brought to the market, as they may require expensive equipment or complex processes. Easy-to-use generation pipeline and friendly user interfaces will make photorealistic avatar generation more accessible to the public. For instance, with a few clicks on a cellphone application, users should be able to scan and generate their digital bodies with the cellphone camera and upload them to the virtual world. Additionally, to avoid bias evaluation of performance across different groups such as gender, age and race, need to be made. Similarly, research should look into the applicability of these generation pipelines to people with disabilities.

In previous research, the tracking of virtual world citizens (including avatars and agents) is mostly based on visual recognition, such as facial recognition of their digital faces. With the advances of AI algorithms, behavioral recognition (such as movement patterns and in-world activities) and multimodal recognition, should be further explored. Meanwhile, another research focus could be on tracking virtual world citizens without violating the privacy of users.

Firstly, we believe that researchers should pay more attention to physiological biometrics (such as iris, EEG and ECG) for VR identification and authentication, considering that VR devices have been equipped with more and more physiological sensors. Secondly, continuous authentication and periodic authentication should be developed to improve usability. Lastly, the use of user information such as biometrics for authentication inevitably leads to privacy and security issues, and we have yet to see privacy-preserving authentication (concepts such as cancelable biometrics, homomorphic encryption, secure two-party computation, and so on) applied to the field of VR. It is worth noting that relevant concepts and techniques have been well developed in biometrics and security research, and it is essential to introduce them into the field of VR.

With the development and popularity of blockchain technology in recent years, the concept of decentralization has also been applied to identity management. These include federated identity and self-sovereign identity (SSI), among others. While these concepts have received much attention in the discussion of the “metaverse,” we believe there is an urgent need for grounded research and evaluation of their usability.

Several mechanisms and designs for protecting users’ digital bodies from violation and identity infringement have been applied to social VR platforms. We hope that not only will more such privacy mechanisms be proposed, but also that there will be more empirical research focusing on evaluating the effectiveness of these mechanisms.