ORIGINAL RESEARCH article

Front. Virtual Real. , 06 September 2022

Sec. Haptics

Volume 3 - 2022 | https://doi.org/10.3389/frvir.2022.954808

Virtual reality (VR) has clear potential to help humans develop or recover brain functions, which it does by modulating multisensory inputs. Some VR interventions rely on embodying a virtual avatar, which stimulates cognitive-motor adaptations. Recent research has shown that embodiment can be facilitated by synchronizing natural sensory inputs with their visual redundancy on the avatar, e.g., the user’s heartbeat flashing around their avatar (cardio-visual stimulation) or the user’s body being physically stroked while the avatar is touched in synchrony (visuo-tactile stimulation). While different full-body illusions have proven of interest in health and disease, it is unknown to date whether individual susceptibilities to illusion are equivalent with respect to cardio-visual or visuo-tactile stimulations. In fact, a number of factors such as interoception, vestibular processing, a pronounced visual dependence, a specific cognitive ability for mental rotations, or user traits and habits such as empathy and video game practice may interfere with the multifaceted construct of bodily self-consciousness, the conscious experience of owning a body in space from which the world is perceived. Here, we evaluated a number of dispositions in twenty-nine young and healthy participants exposed alternately to cardio-visual and visuo-tactile stimulations to induce full-body illusions. Three components of bodily self-consciousness consensually identified in recent research, namely self-location, perspective taking and self-identification, were quantified by self-reported feelings (questionnaires) and specific VR tasks used before and after multisensory stimulations. 
VR tasks allowed measuring self-location in reference to a virtual ball rolling toward the participant, perspective taking through visuomotor response times when mentally rotating an avatar suddenly presented at different angles, and self-identification through heart rate dynamics in response to a threatening stimulus applied to the (embodied) avatar. Full-body illusion was evidenced by self-reported ratings of self-identification with the avatar reaching scores in agreement with the literature, lower reaction times when taking the perspective of the avatar, and a marked drop in heart rate, indicating a freezing reaction, when the user saw the avatar being pierced by a spear. Changes in bodily self-consciousness components did not depend significantly on the type of multisensory stimulation (visuo-tactile or cardio-visual). A principal component analysis demonstrated the lack of covariation between those components, pointing to the relative independence of self-location, perspective taking and self-identification measurements. Moreover, none of these components showed significant covariations with any of the individual dispositions. These results support the hypothesis that cardio-visual and visuo-tactile stimulations affect the main components of bodily self-consciousness to an extent that, on average, is mostly independent of individual perceptive-cognitive profiles, at least in healthy young people. Although this is an important observation at group level, which indicates a similar probability of inducing embodiment with either cardio-visual or visuo-tactile stimulations in VR, these results do not rule out the possibility that some individuals might have higher susceptibility to specific sensory inputs, which would represent a target for adapting efficient VR stimulations.

Virtual reality allows confronting a person with an avatar, seen as a distinctive body presented in the VR scene. Seeing an avatar is not trivial because psychological and neurological research has shown that it inevitably triggers mental processes that can modify body representation. Humans normally experience a conscious “self” located within their body boundaries. Yet, the so-called bodily self-consciousness can be disrupted, depending on the congruence of multiple sensory inputs processed by the brain. Thus, VR can help modulate visual inputs with reference to the avatar, with potential consequences for avatar embodiment.

In a pioneering experiment, filming a participant and projecting the video into a VR headset helped achieve a full-body illusion; thanks to synchronous visuo-tactile stimulations applied on the participant’s back, the authors measured both a proprioceptive drift toward the projected body and an enhanced self-reported identification with it (Lenggenhager et al., 2007). In agreement, seeing one’s heartbeat on a virtual body has been studied as a robust way to induce a full-body illusion with cardio-visual stimulations (Aspell et al., 2013), confirming a proprioceptive drift and an increased sense of ownership. The tactile and heartbeat origins of the illusion highlighted that both exteroceptive and interoceptive sensory inputs likely contribute to the multifaceted construct of bodily self-consciousness. In this vein, full-body illusions have also been achieved by coupling visual and motor signals (Barra et al., 2020; Keenaghan et al., 2020), or even visual and vestibular cues (Macauda et al., 2015; Preuss and Ehrsson, 2019). Thus, there is no doubt to date that full-body illusions using a combination of synchronous exteroceptive (Ehrsson, 2007; Lenggenhager et al., 2007; Aspell et al., 2009; Salomon et al., 2013; Salomon et al., 2017; Nakul et al., 2020; O’Kane and Ehrsson, 2021) and/or interoceptive (Aspell et al., 2009; Ronchi et al., 2015; Blefari et al., 2017; Heydrich et al., 2018; Heydrich et al., 2021) multisensory inputs alter bodily self-consciousness, and thereby avatar embodiment. To attest that a full-body illusion is achieved, presenting the same congruent visual stimulus in asynchronous conditions is commonly believed to be a mirrored control situation. Yet, the incongruity of an asynchronous signal may break another form of existing illusion, which means that the asynchronous condition is not a strict control to compare with the synchronous one. 
It has been shown recently that when seeing a static congruent body in the VR scene, visuoproprioceptive cues alone, without added sensory conflict, are sufficient to create a sense of ownership toward an avatar in 40% of healthy people (Carey et al., 2019). Since this shows that visual capture may be a sufficient condition to create the full-body illusion, it is questionable whether adding asynchronous visual stimulation in VR constitutes an effective methodological way to demonstrate that the induced illusion has operated. Although researchers have considered the asynchronous stimulation as a control condition to infer true illusion, it may actually break any form of illusion, even that created by mere visual capture. Therefore, introducing asynchronous conditions to demonstrate a full-body illusion might be neither sufficient nor necessary.

Describing full-body illusion per se is hardly achievable; rather, a quantitative approach of the user’s response to congruent visual stimulations in VR is made possible through testing the effects on bodily self-consciousness (Tsakiris et al., 2007; Blanke and Metzinger, 2009; Tsakiris, 2010; Serino et al., 2013). As reviewed by Blanke (2012), the main processes underlying modifications of bodily self-consciousness (BSC) can be synthesized in three main components: self-location, perspective taking and self-identification. Quantifying each of these components thus becomes an important challenge to understand the user’s response to VR-induced redundant sensory inputs. While questionnaires have been mostly used to collect self-reported feelings of identification with the avatar, specifically designed VR tasks have been developed recently to allow non-declarative quantification of self-location (Nakul et al., 2020), perspective taking (van Elk and Blanke, 2014; Lopez et al., 2015; Heydrich et al., 2021) and self-identification (Slater et al., 2010; Tajadura-Jiménez et al., 2012; Pomes and Slater, 2013).

Self-location is the process of spatial localization of the self, which was tested in early uses of VR by moving the user away after an illusion and asking them to return to their initial location (Lenggenhager et al., 2007; Aspell et al., 2013). Yet, voluntary movements (stepping back) are believed to override the specific illusion-induced changes in BSC (Aglioti et al., 1995; Wraga and Proffitt, 2000). Recently, an alternative method was proposed based on the transposition of the mental ball dropping task initially performed in supine conditions (Lenggenhager et al., 2009). The so-called Mental Imagery Task (MIT) assesses self-location in reference to a virtual ball rolling toward the users, who must indicate the moment they estimate the ball makes contact with their feet (Nakul et al., 2020).

Perspective taking is the ability to take the perspective of the avatar, a phenomenon tightly coupled with the notion of avatar embodiment. Although two forms of visual perspective taking have been highlighted (Kessler and Rutherford, 2010), only one form requires observers to project themselves to adopt an embodied point of view, which stimulates the temporo-parietal junction, an area associated with the BSC construct (Blanke et al., 2005). Using a specifically designed VR task, perspective taking has then been assessed by the so-called Own-Body Transformation (OBT) task (van Elk and Blanke, 2014; Heydrich et al., 2021), where the participant has to accurately and quickly identify which hand is illuminated on an avatar presented at various angles.

Self-identification is a form of global identification with the body as a whole that has been assessed through neurovisceral reactions to a threatening event. Classically, heart rate deceleration is quantified during the initial “freeze” phase of the overall threatening response (Bradley et al., 2001), which has served as a marker of self-identification with an avatar (Slater et al., 2010; Pomes and Slater, 2013).

Taken together, the above considerations show that research employing avatars has systematically observed a form of full-body illusion resulting from multisensory stimulations with sensory inputs (in most cases, cardio-visual or visuo-tactile) generated with visual redundancy in VR on a motionless avatar.

What has yet to be determined is the role played by the individual weighting of sensory dispositions when it comes to embodying an avatar in VR and, as a corollary, whether applying different types of sensory stimulation to achieve a full-body illusion leads to specific changes in BSC.

As an illustration, empathy has been related to the strength of an illusion in the rubber hand illusion (Seiryte and Rusconi, 2015), the ability to take the perspective of an avatar (Heydrich et al., 2021) or the drift in self-location (Nakul et al., 2020) following a visuo-tactile illusion. As well, vestibular signals are critical for the BSC construct, thereby influencing mostly measures of self-location and perspective taking (Lopez et al., 2015). Interoception is another facet of an individual disposition, and this ability to perceive one’s internal body signals has been shown to influence the sense of ownership (Tsakiris et al., 2011; Suzuki et al., 2013).

It should be added that in virtual reality, perceptual and cognitive processing greatly depends on visual information. Since VR users may be more or less dependent on visual cues (Kennedy, 1975), each one may exhibit a different susceptibility to the visual capture that facilitates embodiment. Visual field dependence has been related to the sense of object presence (Hecht and Reiner, 2007) and the feeling of presence in a scene (Maneuvrier et al., 2020), which could be another facet of individual conditions of embodiment.

Last but not least, the cognitive capacity to perform mental rotations is a critical individual disposition that may influence perspective taking as evaluated by the own-body transformation task described above. A task to assess one’s ability for mental rotations has existed for decades (Shepard and Metzler, 1971) and can be implemented in VR (Lochhead et al., 2022).

Other aspects concerning traits and habits have been shown to interfere with VR usage: the feeling of presence, cybersickness, video game practice, as well as visuomotor ability in relation to sport expertise (Feng et al., 2007; Green and Bavelier, 2007; Pratviel et al., 2021). The objective of the present study was therefore to explore the putative relationship between a number of individual dispositions and the main components of bodily self-consciousness.

The main hypothesis was that specific dispositions facilitating embodiment might be identified when using a cardio-visual or a visuo-tactile stimulation, which should be reflected in particular modulations of components of bodily self-consciousness.

Twenty-nine healthy sport students (13 females, 21.9 ± 3.4 years, 64.7 ± 12.8 kg, 171.5 ± 8.9 cm) gave their informed consent to participate in this program for which they received credits as part of their academic curriculum. The institutional review board “Faculte des STAPS” approved the procedure that respected all ethical recommendations and followed the declaration of Helsinki. All the participants had normal or corrected-to-normal vision. None of them had any experience with the VR tasks used here. Participants were instructed not to consume caffeine or alcohol at least 24 h before the experiment. To test both visuo-tactile and cardio-visual stimulations, every participant took part in the study twice, with a minimum of 2 days between the two passages. Because familiarization with the tasks and the stimulation procedure at the second passage cannot be excluded, the repetition effect was included in the multi-way analysis of variance.

The sample size was determined from pre-tests concerning OBT measurements before and after a visuo-tactile or cardio-visual illusion. An a priori power analysis with G*Power (Faul et al., 2007) for OBT measurements with an effect size of 0.7, α = 0.05 and power = 0.8 indicated a theoretical sample size of n = 19. To add more statistical power, and as dropouts were expected between both passages or due to cybersickness, 30 participants were recruited, 29 of whom completed the entire protocol.
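As a rough cross-check of the order of magnitude of this sample size, the sketch below applies the textbook normal approximation for a two-sided one-sample or paired comparison. It is not the G*Power computation: the test family chosen in G*Power is not stated here, and the function name `approx_sample_size` is hypothetical. Exact calculations based on the t distribution return somewhat larger values than this approximation.

```python
from math import ceil
from statistics import NormalDist


def approx_sample_size(effect_size: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Normal-approximation sample size for a two-sided one-sample/paired test.

    Assumption: n ~ ((z_{1-alpha/2} + z_{power}) / d)^2, rounded up.
    This ignores the t-distribution correction applied by exact methods.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value of the test
    z_power = NormalDist().inv_cdf(power)          # quantile for the target power
    return ceil(((z_alpha + z_power) / effect_size) ** 2)


# Parameters quoted in the text: d = 0.7, alpha = 0.05, power = 0.8
n_approx = approx_sample_size(0.7)
print(n_approx)
```

The approximation acts as a lower bound, which is compatible with the slightly larger theoretical sample size reported above.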

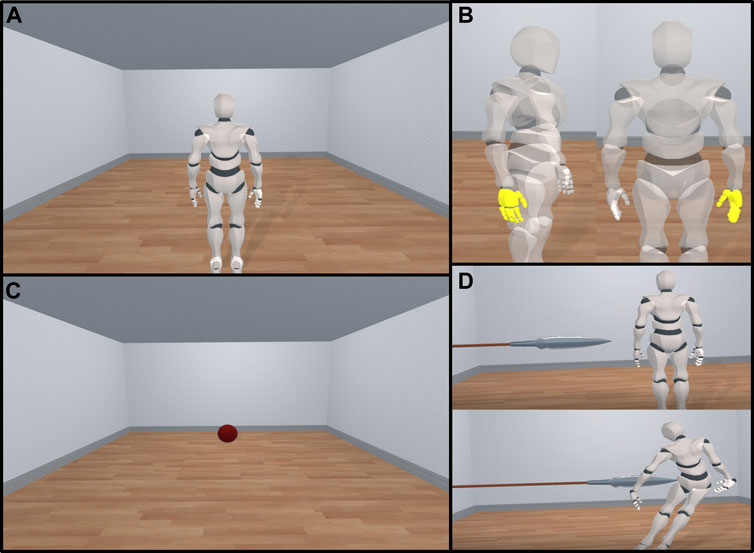

The experiment took place in a virtual environment developed with Unity (Unity Technologies, San Francisco, CA, United States) and shown in an HTC Vive Pro headset (HTC America, Inc., Seattle, WA, United States). The participants were immersed in a 10 m × 6 m × 3 m room, viewing the back of a gender-neutral humanoid avatar (see Figure 1A) standing at a 2-m distance in front of them. When specified, participants used the VR controllers to interact with the environment and perform the tasks described below.

FIGURE 1. View of the avatar and the three tasks used in Virtual Reality. (A) View of the avatar in the head-mounted display as perceived by the participant. (B) Ball appearing at the back of the room and moving toward the participant during the mental imagery task to quantify self-location. (C) Avatar displayed at 120° (left) and 0° (right) angles with the right hand highlighted during the own-body transformation task to quantify perspective taking. (D) The avatar slowly approached then pierced by a spear to induce heart rate deceleration used as a measure of self-identification.

During the experiment, the participant’s electrocardiogram was recorded (1 kHz) with three electrodes using a PowerLab 8/35 device (ADInstruments, Dunedin, New Zealand) and a dedicated bioamplifier (FE132, ADInstruments, Dunedin, New Zealand).

Half of the participants (n = 15) experienced the visuo-tactile stimulation first, and the cardio-visual stimulation 2 days later, and the other half experienced stimulation in reverse order.

The visuo-tactile stimulation was performed by the experimenter, who used the VR controller to stroke the participant’s back in an unpredictable way, with random time intervals (1.6 ± 0.6) that were nevertheless identical for every participant. In perfect synchrony, the participant viewed an image of the controller touching the avatar’s back.

During the cardio-visual stimulation, the heartbeat of the participant was detected online from the raw electrocardiogram acquisition. The peak of the R wave was used to trigger a visual stimulus on the avatar’s silhouette, displayed as a bluish light flashing in and out. The outline grew until it reached its maximum size 100 ms after the R peak, then faded out over 400 ms.
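The timing of the flash can be sketched as a simple envelope function of the time elapsed since the last detected R peak. The 100 ms rise and 400 ms fade come from the description above; the linear shape of both phases, the normalization to the range 0 to 1, and the function name `outline_intensity` are assumptions made for illustration only.

```python
def outline_intensity(ms_since_r_peak: float,
                      rise_ms: float = 100.0,
                      fade_ms: float = 400.0) -> float:
    """Normalized size/brightness (0..1) of the outline after an R peak.

    Assumption: linear growth during the rise and linear decay during the fade;
    the actual easing used in the Unity scene is not specified in the text.
    """
    if ms_since_r_peak < 0 or ms_since_r_peak >= rise_ms + fade_ms:
        return 0.0                                  # no flash outside the 500 ms envelope
    if ms_since_r_peak <= rise_ms:
        return ms_since_r_peak / rise_ms            # growing phase, maximum at 100 ms
    return 1.0 - (ms_since_r_peak - rise_ms) / fade_ms  # fading phase


# Sample the envelope every 100 ms after an R peak
print([outline_intensity(t) for t in range(0, 600, 100)])
```

With these durations the whole flash lasts 500 ms, so it would overlap with the next flash only for RR intervals shorter than 500 ms (heart rates above 120 beats per minute).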

Self-location was measured using a mental imagery task first described in Nakul et al. (2020). At the beginning of the task, the avatar disappeared from the room, and a 50 cm large red ball (Figure 1B) appeared at the end of the room at a random distance ranging from 5.7 to 6.3 m. The mean apparition distance was consistent across repetitions of the task. After a beep sound, the ball started rolling (1 m/s) toward the participants. Three seconds later, the VR scene fully turned black and the participants had to imagine the ball rolling and press the controller key at the moment they estimated the ball touched their feet. The estimated distance served to quantify self-location.
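The conversion from a key press to a self-location estimate can be sketched as follows. The sketch assumes that the response time is counted from the moment the ball starts rolling and that the ball keeps its 1 m/s speed while the scene is black; the function names are hypothetical.

```python
from statistics import mean


def self_location_offset(start_distance_m: float,
                         press_time_s: float,
                         ball_speed_m_s: float = 1.0) -> float:
    """Distance (m) still separating the imagined ball from the participant at key press.

    Positive values: the key was pressed before the ball reached the real position,
    i.e. the participant located themselves in front of their actual position.
    Assumption: press_time_s is measured from the onset of the rolling movement.
    """
    return start_distance_m - ball_speed_m_s * press_time_s


def mean_self_location(trials):
    """Average offset over (start_distance_m, press_time_s) pairs."""
    return mean(self_location_offset(d, t) for d, t in trials)


# Hypothetical trials: ball starting between 5.7 and 6.3 m from the participant
example_trials = [(6.0, 5.4), (5.7, 5.2), (6.3, 5.6)]
print(round(mean_self_location(example_trials), 2))
```

Averaging over repetitions, as in the last function, mirrors the way block measurements are pooled later in the analysis.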

To measure the ability of the participant to take the avatar’s perspective, a new task was adapted from the one used in previous experiments (van Elk and Blanke, 2014; Deroualle et al., 2015; Heydrich et al., 2021). During this task, the avatar stayed in the room, still at a 2-m distance from the participant. After a random duration, the avatar displayed was refreshed with a change in orientation. At 0°, the participant sees the avatar from behind. At 180°, the avatar faces the participant. Other points of view including +60°, −60°, +120° and −120° rotations were used (Figure 1C). The time needed by the user to take the perspective of the avatar and press the VR controller key to indicate which hand is highlighted on the avatar, right or left, served to quantify perspective taking. The percentage of correct answers was measured to evaluate how the directive “as accurate and quick as possible” was actually perceived. Overall, correct answers were largely dominant (97.2%).
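The two quantities extracted from this task, response time per avatar orientation and the percentage of correct answers, can be summarized with a few lines of code. Whether incorrect trials were excluded from the response-time averages is not stated above, so restricting the averages to correct trials is an assumption, as are the tuple layout and the function names.

```python
from collections import defaultdict
from statistics import mean


def mean_rt_by_angle(trials):
    """Mean response time (ms) per avatar angle.

    trials: iterable of (angle_deg, response_time_ms, correct) tuples.
    Assumption: only correct trials contribute to the averages.
    """
    by_angle = defaultdict(list)
    for angle, rt_ms, correct in trials:
        if correct:
            by_angle[angle].append(rt_ms)
    return {angle: mean(rts) for angle, rts in sorted(by_angle.items())}


def accuracy(trials):
    """Proportion of correct left/right answers."""
    trials = list(trials)
    return sum(1 for _, _, correct in trials if correct) / len(trials)


# Hypothetical trials for two orientations
example = [(0, 600, True), (0, 700, True), (180, 900, True), (180, 2000, False)]
print(mean_rt_by_angle(example), accuracy(example))
```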

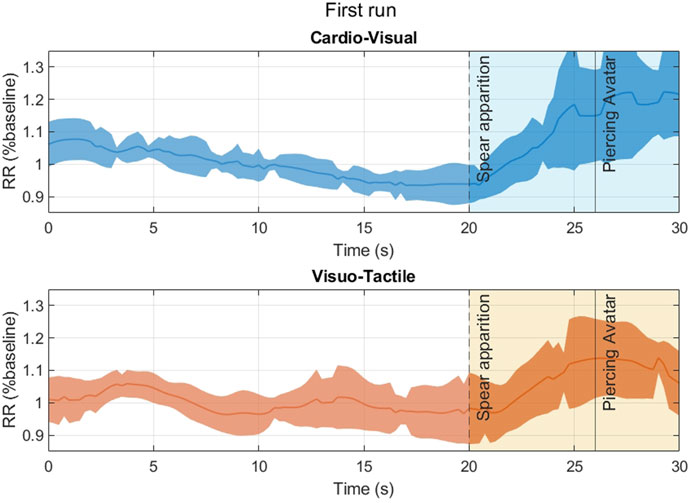

To assess self-identification with the avatar after the illusion, a spear slowly approached the avatar, finally skewering him after 6 s on the screen (Figure 1D). As in Maselli and Slater (2013), the heart rate was analyzed 20 s before and after the onset of the threatening stimulus. Self-identification was then quantified by the dynamics of heart rate deceleration during the so-called freeze initial phase before heart rate acceleration. Following previous recommendations, heart rate deceleration (HRD) was measured as the difference between the maximum and minimum RR interval time duration during the largest deceleration event lasting more than 1.5 s occurring in the time window lasting from the appearance of the threat on screen to the time it touched the body (Adenauer et al., 2010; Tajadura-Jiménez et al., 2012; Maselli and Slater, 2013).
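One possible reading of this definition is sketched below. It treats a deceleration event as a run of consecutive, strictly lengthening RR intervals, measures the event duration between the first and last beat of the run, and returns the largest max-minus-min RR difference among events longer than 1.5 s inside the threat window. These operational choices, the millisecond units, and the function names are assumptions; the cited recommendations may define the event differently, and no conversion to beats per minute is attempted here.

```python
def _run_amplitude(run, min_duration_ms):
    """Max-min RR (ms) of one lengthening run, or 0 if the run is too short."""
    if len(run) < 2:
        return 0
    duration = run[-1][0] - run[0][0]          # time between first and last beat of the run
    if duration <= min_duration_ms:
        return 0
    rr_values = [rr for _, rr in run]
    return max(rr_values) - min(rr_values)


def heart_rate_deceleration(r_peak_times_ms, window_start_ms, window_end_ms,
                            min_duration_ms=1500):
    """Largest RR lengthening (ms) during a deceleration event within the window.

    Assumption: an 'event' is a run of strictly increasing RR intervals.
    """
    # Each RR interval is time-stamped at the beat that closes it
    intervals = [(t1, t1 - t0)
                 for t0, t1 in zip(r_peak_times_ms, r_peak_times_ms[1:])
                 if window_start_ms <= t1 <= window_end_ms]
    best, run = 0, []
    for item in intervals:
        if run and item[1] <= run[-1][1]:      # RR stopped lengthening: close the run
            best = max(best, _run_amplitude(run, min_duration_ms))
            run = []
        run.append(item)
    return max(best, _run_amplitude(run, min_duration_ms))


# Hypothetical R-peak times (ms): RR goes 800, 800, 850, 900, 1000, 900
beats = [0, 800, 1600, 2450, 3350, 4350, 5250]
print(heart_rate_deceleration(beats, 0, 6000))
```

In this example the lengthening run spans 2.75 s, so it qualifies as an event, and the returned amplitude is the difference between the longest (1,000 ms) and shortest (800 ms) interval of that run.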

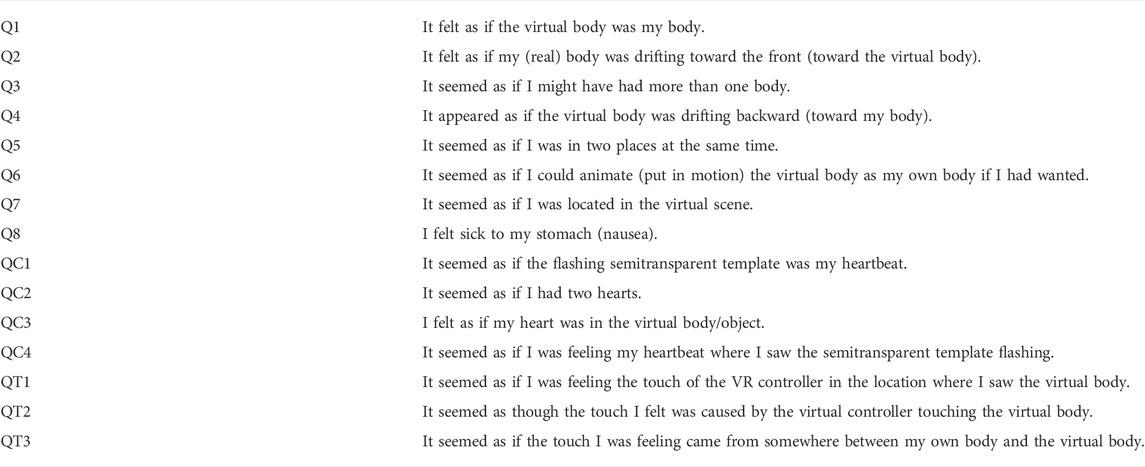

A questionnaire was displayed between successive tasks involving the avatar to collect self-reported feelings (see Table 1). Items relative to bodily self-consciousness (Q1–Q8: Aspell et al., 2013; Lenggenhager et al., 2009; Nakul et al., 2020), as well as questions about the specific cardio-visual (QC1–QC4: Aspell et al., 2013) and visuo-tactile (QT1–QT3: Nakul et al., 2020) stimulations were taken from previous studies. Participants answered by pointing a virtual laser beam toward a Likert scale (from −3 to +3), while still immersed in the virtual environment.

TABLE 1. Illusion questionnaire. Questions are presented in the order indicated by number. Items relative to the cardio-visual illusion (QC1, QC2, QC3, and QC4) and visuo-tactile illusion (QT1, QT2, and QT3) are only displayed during the corresponding stimulation.

Visual field dependence was evaluated using the Rod and Frame Test in virtual reality, a task which consists in aligning vertically a rod initially tilted 27° (left or right) displayed inside an 18° tilted frame, as in Maneuvrier et al. (2020). Participants were instructed to take their time to reach the best vertical alignment. The average residual angle calculated from 20 trials served as quantification of visual field dependence.
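The score can be written as a one-line average. Using the absolute deviation from vertical, so that left and right errors do not cancel out, is an assumption, as is the function name; the text above only specifies an average residual angle over 20 trials.

```python
from statistics import mean


def visual_field_dependence(residual_angles_deg):
    """Average unsigned deviation (degrees) of the rod from true vertical.

    Assumption: deviations are averaged in absolute value so that errors
    toward the left and toward the right do not compensate each other.
    """
    return mean(abs(angle) for angle in residual_angles_deg)


# Hypothetical residual angles for four trials (negative = tilted left)
print(visual_field_dependence([2.0, -2.0, 4.0, -4.0]))
```

Under this convention, larger scores indicate a stronger pull of the tilted frame, that is, a higher visual field dependence.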

The individual ability to mentally rotate objects was assessed using a classical mental rotation task (Shepard and Metzler, 1971) adapted here in VR. During the designed VR task, the participant was presented with two objects, one of which was displayed at an angle of 0, 60, −60, 120, −120 or 180° around the vertical axis from the other. They were instructed to use the dedicated controller key to say as precisely and quickly as possible if the two objects were similar, or if one was the mirror image of the other. After four trials during which correct answers were given orally to ensure that the instructions were understood, 64 pairs of objects were presented. The performance relied on response time associated with similar objects only (2/3 of the total objects displayed). The percentage of correct answers was recorded to ensure that subjects effectively tried to mentally rotate objects and didn’t answer randomly.

Finally, two aspects of interoception were assessed: interoceptive accuracy and interoceptive sensibility. Interoceptive accuracy was measured using a heartbeat counting task (Schandry, 1981; Fittipaldi et al., 2020), asking the participants to press a button (connected to the Powerlab and synchronized with electrocardiogram recordings) each time they perceived their own heartbeat or thought a heartbeat was occurring. The performance was assessed using the median distance between the heart rate and the response rate during two repetitions of the task (md Index), following the method described in Fittipaldi et al. (2020). Interoceptive sensibility was measured using the Interoceptive Accuracy Scale [IAS, (Murphy et al., 2020)], a questionnaire assessing a global evaluation of one’s perceived capacity to process interoceptive signals.

Last, empathy was assessed using the Empathy Quotient (Baron-Cohen and Wheelwright, 2004).

Upon arrival, participants were introduced to the overall procedure. They were then equipped with three electrocardiograph electrodes, and started by completing questionnaires on a computer, before performing two repetitions of the heartbeat counting task. Then, the VR headset and the handheld controllers were presented to the participant. The Rod and Frame Test and mental rotations tasks were performed, and the avatar task began shortly after. The avatar’s size was modified to match that of the participant. Eight repetitions of the Mental Imagery Task (MIT) and forty-eight repetitions of the Own-Body Transformation (OBT) task were performed prior to the cardio-visual or visuo-tactile stimulation. The full-body illusion was induced with 2 min of synchronous stimulation, either with visuo-tactile or cardio-visual stimulation. After that, four blocks of tasks comprising four repetitions of the MIT and 24 repetitions of the OBT (presented in a random order) followed by the illusion questionnaire were performed, interspersed with 35 s periods of synchronous stimulation used to maintain the illusion. At the end of the fourth block, the threatening stimulus appeared on the screen while successive heartbeat (R wave to R wave in the electrocardiogram) interval durations were collected. After that, participants removed the VR headset and completed questionnaires about their experience in the virtual environment. Cybersickness was assessed with the Simulator Sickness Questionnaire (SSQ, French version, Bouchard et al., 2009), and presence with the igroup Presence Questionnaire (IPQ, Schubert et al., 2001).

During the second passage with the alternative stimulation (cardio-visual or visuo-tactile), participants completed the entire protocol, except the initial questionnaires and the heartbeat counting task because they bring no additional information.

Data corresponding to each task were collected and analyzed using Matlab (MATLAB, 2021; MathWorks, Natick, MA, United States). As measures of the Mental Imagery Task (MIT) and the Own-Body Transformation (OBT) task obtained during repetitions were not statistically different across the four experimental blocks, they were averaged.

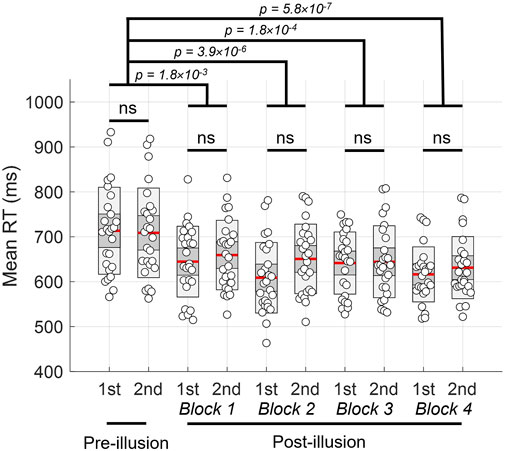

The normality of each dataset was assessed using a Shapiro-Wilk test. To explore the effects of visual stimulations on own-body transformation (perspective taking) and the mental imagery task (self-location), a three-way ANOVA was used with type of stimulation (cardio-visual vs. visuo-tactile), pre vs. post measurement and order of passage (first run vs. second run) as independent factors.

Heart rate deceleration was measured only after the visual stimulation; a two-way ANOVA was therefore used with the type of stimulation (cardio-visual vs. visuo-tactile) and order of passage as independent factors. Wilcoxon signed-rank tests were used to compare the answers from the illusion questionnaire following a cardio-visual or visuo-tactile stimulation.

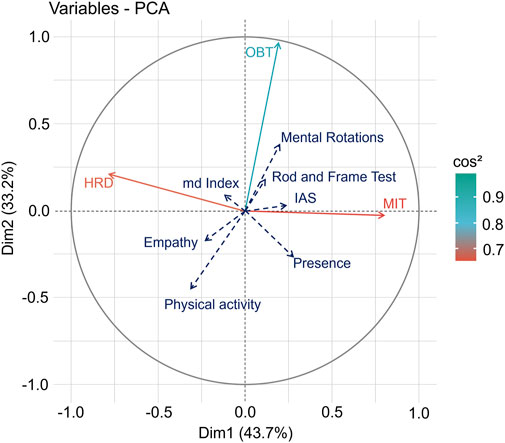

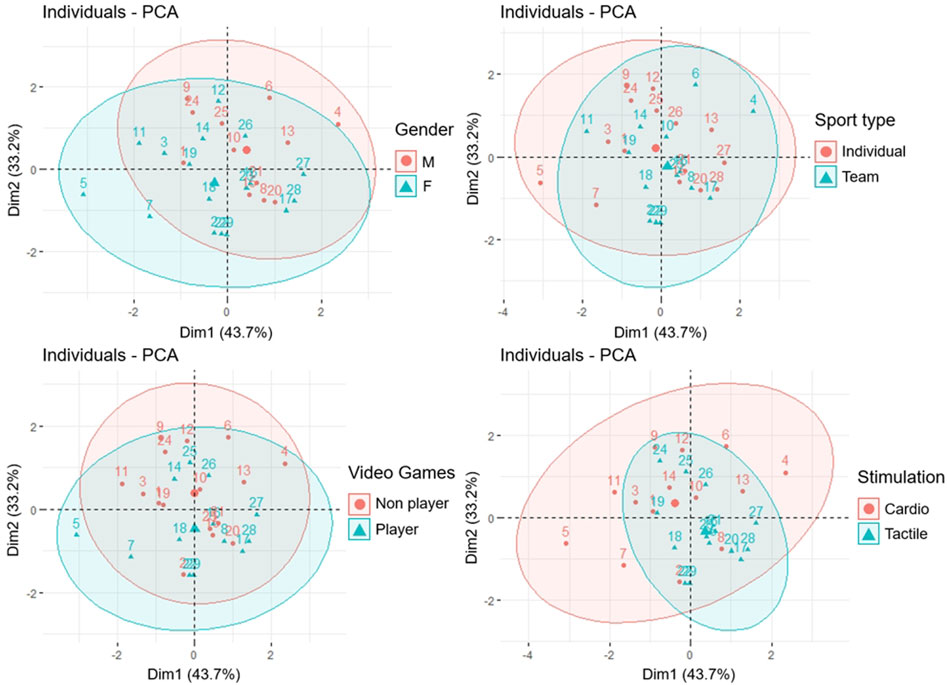

As a multivariate analysis including individual dispositions and changes in components of bodily self-consciousness, a Principal Component Analysis (PCA) was performed in R (R Core Team, 2020) on the recordings of the first passage for each participant.

As a first step, only variables quantifying self-location, perspective taking and self-identification were introduced in the PCA as so-called active variables. Then, supplementary variables that did not influence the initial computations of the PCA were added and mapped as a layer on the PCA correlation circle: Rod and Frame Test performance, mental rotations task performance, performance in the heartbeat counting task (md Index), interoceptive sensibility (IAS), presence and empathy.

Additional information concerning qualitative variables was synthesized in mapping 95% confidence ellipses identifying gender, individual/team sport practice, video games habits, and the type of stimulation, either cardio-visual or visuo-tactile.

Based on PCA results and previous studies, additional analyses were performed to decipher potential relations between bodily self-consciousness components and individual traits. The relation between mental rotation abilities and OBT performance was assessed with Pearson’s correlation coefficients. Participants were separated into two groups (low/high) according to their empathy and interoception scores (measured with the EQ and the md Index, respectively), and independent-samples t-tests between groups were performed for components of bodily self-consciousness. The split between low empathy (n = 15) and high empathy (n = 14) groups was performed at EQ = 40. Low-interoception (n = 15) and high-interoception (n = 14) groups were separated according to md Index values above or below 0.35, respectively.
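The group split and the between-group comparison can be sketched as follows. The thresholds (EQ = 40, md Index = 0.35) come from the text; assigning scores exactly equal to the threshold to the low group, using the pooled-variance (Student) form of the t statistic, and the function names are assumptions. The sketch returns the t statistic only, without a p-value.

```python
from math import sqrt
from statistics import mean, variance


def split_low_high(scores, outcomes, threshold, higher_is_high=True):
    """Split outcome values into (low, high) groups from a trait score.

    higher_is_high=False handles scores such as the md Index, where values
    above the threshold define the low-interoception group.
    Assumption: a score equal to the threshold falls in the low group.
    """
    low, high = [], []
    for score, outcome in zip(scores, outcomes):
        is_high = score > threshold if higher_is_high else score < threshold
        (high if is_high else low).append(outcome)
    return low, high


def student_t(group_a, group_b):
    """Independent-samples t statistic with pooled variance."""
    na, nb = len(group_a), len(group_b)
    pooled = ((na - 1) * variance(group_a) + (nb - 1) * variance(group_b)) / (na + nb - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled * (1 / na + 1 / nb))


# Hypothetical EQ scores and one bodily self-consciousness outcome per participant
eq_scores = [30, 45, 38, 50]
outcome = [1.0, 3.0, 2.0, 4.0]
low, high = split_low_high(eq_scores, outcome, threshold=40)
print(low, high, round(student_t(high, low), 3))
```

For the md Index, the same helper would be called with `threshold=0.35` and `higher_is_high=False`.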

Self-reported responses to the illusion questionnaire provided scores in agreement with previous reports (Salomon et al., 2017; Nakul et al., 2020), especially in response to question 1 (“It felt as if the virtual body was my body”) that is often used as the main argument to identify a full-body illusion. Self-reported responses showed no distinction between the cardio-visual and the visuo-tactile illusion (Table 2).

TABLE 2. Scores of self-reported feelings from the illusion questionnaire on a −3 to +3 Likert scale. Wilcoxon p-values indicate no distinction between the two stimulations.

The mental imagery task showed no change in perceived location following the illusion [F (1,115) = 0.17, p = 0.68], no effect of the order of passage [F (1,115) = 0.16, p = 0.69] and no effect of the type of illusion [F (1,115) = 0.18, p = 0.67]. On average, participants localized themselves 61 ± 60 cm in front of their actual position before the illusion (cardio-visual: 63 ± 58 cm; visuo-tactile: 58 ± 62 cm), and 55 ± 70 cm after the multisensory stimulation (cardio-visual: 58 ± 73 cm; visuo-tactile: 53 ± 67 cm).

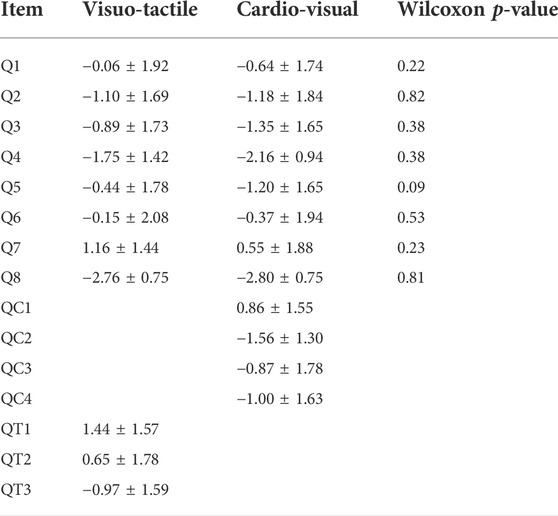

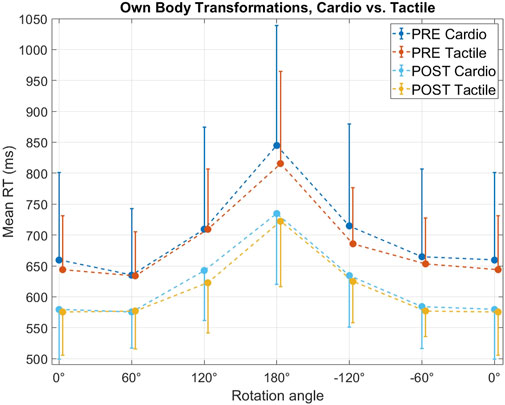

By contrast, a three-way ANOVA conducted on quantitative assessments of perspective taking showed significant effects of the multisensory stimulations. Response times significantly decreased after induction of the illusion [F (1,115) = 9.98, p = 0.002], regardless of whether the stimulation was cardio-visual or visuo-tactile [F (1,115) = 0.46, p = 0.50]. As there was no interaction between pre-illusion vs. post-illusion scores and the order of passage (first or second run) [F (1,115) = 2.37, p = 0.13], it can be excluded that repetition alone explains the improvement in response time.

Moreover, to make sure that the observed improvement in perspective taking abilities was effectively linked with the induction of a full-body illusion, and not only to familiarization with the task, a two-way ANOVA was performed with Repetition (PRE, block 1, block 2, block 3, and block 4) and Half (1st or 2nd half of each repetition of the OBT task) as independent factors. We found a Repetition effect [F (4,259) = 9.98, p = 1.65 × 10⁻⁷], no Half effect [F (1,259) = 1.99, p = 0.16], and no interaction effect [F (4,259) = 0.64, p = 0.64]. A post-hoc Tukey test showed significant differences between the PRE condition and block 1 (p = 1.78 × 10⁻³), block 2 (p = 3.92 × 10⁻⁶), block 3 (p = 1.80 × 10⁻⁴), and block 4 (p = 5.78 × 10⁻⁷). There were no significant differences between blocks 1, 2, 3, and 4. Moreover, the post-hoc Tukey test showed no differences between mean RT in each half for PRE (p = 1.00), block 1 (p = 1.00), block 2 (p = 0.67), block 3 (p = 1.00), and block 4 (p = 1.00). Average response times in each half of all repetitions are presented in Figure 2.

FIGURE 2. Mean response time during the OBT task, for each half (1st or 2nd) of every repetition. Colored lines indicate the mean, dark gray ± 1 SD and light gray the 95% confidence interval. Individual values are represented by white circles.

Overall, cardio-visual and visuo-tactile stimulations increased the participants’ performance by 95 ± 100 ms and 82 ± 64 ms, respectively. On average, participants performed even better when they experienced the cardio-visual or the visuo-tactile stimulation during their second passage [F (1,115) = 7.01, p = 0.01]. During the first passage, participants improved their average response times by 133 ± 85 ms, and by 44 ± 53 ms during the second passage.

Figure 3 shows the angle-dependent response time with a profile in agreement with the literature (Falconer and Mast, 2012). It also illustrates the gap in response time after both visuo-tactile and cardio-visual stimulations, whatever the order of passage (which nevertheless results in large standard deviations).

FIGURE 3. Mean response times (filled circles) and SD (vertical bars) during the OBT task measured at different angles before (PRE) and after (POST) the cardio-visual (Cardio) and visuo-tactile (Tactile) stimulations.

As expected, heart rate deceleration was more marked during the first passage, because the second passage made it possible to anticipate the threatening event [F (1,57) = 4.14, p = 0.047]. Considering the first passage only, cardio-visual and visuo-tactile stimulations caused marked effects that were not significantly different [F (1,57) = 1.45, p = 0.23]. Heart rate deceleration reached 16.3 ± 8.7 beats per minute following the visuo-tactile stimulation, and 23.1 ± 9.7 beats per minute following the cardio-visual stimulation.

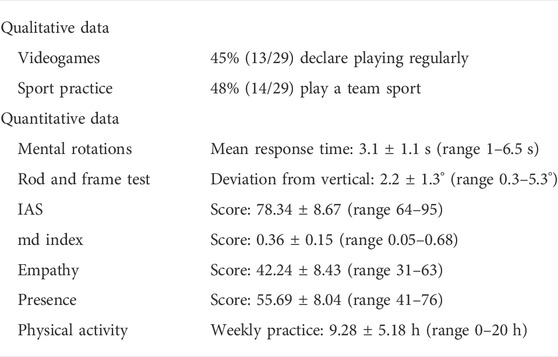

Variables used to characterize individual traits, abilities and habits potentially influencing behavior in VR are quantified in Table 3. Quantitative data exhibited substantial heterogeneity that pointed to true distinctive profiles among our participants, an asset for the subsequent exploration of particular profiles possibly matching with facilitated embodiment.

TABLE 3. Results from questionnaires and pre-tests. Quantitative variables are presented with mean ± standard deviation and range.

As the cybersickness scores (as assessed by the Simulator Sickness Questionnaire) were low on average [6.6 ± 5.5 (range 0–22); six participants scored >10], they were not included in the subsequent Principal Component Analysis.

The Principal Component Analysis (PCA) revealed a lack of covariation between components of bodily self-consciousness, namely self-location (MIT distances), perspective taking (OBT response times) and self-identification (HR). As these three components have been identified as distinctive parts of the bodily self, the absence of covariation confirms that the evaluations of the three identified components of bodily self-consciousness reached the expected non-redundancy in our conditions. Importantly, based on evaluations during the first passage, the projection of individual dispositions on the PCA mapping (Figure 5) showed no individual dispositions co-varying with one of the components of the bodily self-consciousness.

To provide additional information regarding other variables that might show some specific proximity with one of the components of bodily self-consciousness (BSC) when a cardio-visual or a visuo-tactile stimulation was used, 95% confidence ellipses are shown in Figure 6. None of the variables (Gender, Sport Type, Video Games Practice) allowed particular profiles to be inferred in relation to the mapping of BSC components. When the type of stimulation (cardio vs. tactile) was used for a comparable mapping, the main information was that the distribution of our participants did not differ on average (see the barycenter of each ellipse), although some individual singularities can be observed.

There was no clear correlation between mental rotation and OBT performances, either before (r² = 0.10, p = 0.10) or after the multisensory stimulation (r² = 0.06, p = 0.22). Likewise, there was no relation between the ability to perform mental rotations of 3D forms and the improvement in OBT performance from before to after the illusion (r² = 0.13, p = 0.06).
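To make the reported squared correlations concrete, here is a minimal sketch of how a Pearson r and its square could be computed between mental rotation scores and OBT response times. The function name and the sample values are hypothetical and are not the study's data; the p-values reported above would additionally require a t distribution, which is not computed here.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (>= 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sums of squares and cross-products around the means
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / math.sqrt(sxx * syy)

# Hypothetical values, for illustration only (not the study's data)
mental_rotation_score = [12.0, 15.0, 9.0, 20.0, 17.0]
obt_response_time_ms = [910.0, 870.0, 990.0, 800.0, 905.0]

r = pearson_r(mental_rotation_score, obt_response_time_ms)
r_squared = r ** 2  # share of variance linearly shared by the two variables
print(f"r = {r:.2f}, r2 = {r_squared:.2f}")
```

An r² of 0.10, as reported before stimulation, would mean that about 10% of the variance in one measure is linearly shared with the other.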

There were no significant differences between low-empathy and high-empathy groups for MIT measures before (p = 0.81, t = −0.25) and after the stimulation (p = 0.81, t = 0.25), or for the change in MIT distances following the illusion (p = 0.85, t = 0.20). Concerning OBT, there were no differences between low- and high-empathy groups before (p = 0.85, t = −0.20) and after the stimulation (p = 0.69, t = 0.41), or for the change in OBT mean response times after the multisensory stimulations (p = 0.94, t = 0.08). There were no differences concerning HRD either (p = 0.83, t = −0.21).

Concerning interoception, there were no significant differences between low-interoception and high-interoception groups for MIT measures before (p = 0.50, t = 0.68) and after the stimulation (p = 0.40, t = 0.86), or for the change in MIT distances following the illusion (p = 0.50, t = 0.68). For OBT, there were no differences between low- and high-interoception groups before (p = 0.76, t = 0.31) and after the stimulation (p = 0.75, t = −0.32), or for the change in OBT mean response times after the multisensory stimulations (p = 0.16, t = 1.46). There were no differences concerning HRD either (p = 0.94, t = −0.08). Moreover, there were no differences between low- and high-interoception groups for the cardio-visual specific questions in the illusion questionnaire (QC1: p = 0.63, t = 0.49; QC2: p = 0.50, t = 0.68; QC3: p = 0.55, t = −0.61; QC4: p = 0.37, t = −0.91).
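The group comparisons above are reported as t statistics with their p-values. As an illustration only, the sketch below splits participants into low and high groups and computes a t statistic for an outcome such as HRD. Two points are assumptions, since this section does not state them: the groups are formed here by a median split, and Welch's unequal-variance t statistic is used. All names and values are hypothetical.

```python
import math
import statistics

def median_split(scores):
    """Return (low, high): participant indices at or below / above the median."""
    cut = statistics.median(scores)
    low = [i for i, s in enumerate(scores) if s <= cut]
    high = [i for i, s in enumerate(scores) if s > cut]
    return low, high

def welch_t(a, b):
    """Welch's t statistic and approximate degrees of freedom for two samples.

    Assumes each sample has at least two values and that the two sample
    variances are not both zero.
    """
    na, nb = len(a), len(b)
    va, vb = statistics.variance(a), statistics.variance(b)  # sample variances
    se2 = va / na + vb / nb  # squared standard error of the mean difference
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(se2)
    # Welch-Satterthwaite approximation of the degrees of freedom
    df = se2 ** 2 / ((va / na) ** 2 / (na - 1) + (vb / nb) ** 2 / (nb - 1))
    return t, df

# Hypothetical trait scores and heart rate decelerations (bpm), not study data
empathy_score = [30, 45, 38, 52, 41, 47]
hrd_bpm = [14.0, 20.0, 17.5, 22.0, 15.5, 19.0]

low, high = median_split(empathy_score)
t, df = welch_t([hrd_bpm[i] for i in low], [hrd_bpm[i] for i in high])
print(f"t = {t:.2f}, df = {df:.1f}")
```

The p-value is deliberately left out because the Python standard library has no t distribution; a statistics package would be needed for that step.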

For the first time here, two types of multisensory stimulation, based on interoceptive and exteroceptive bodily signals, have been performed on the same population to promote avatar embodiment in VR. In addition, a number of personal dispositions have been assessed to explore a putative link between perceptive-cognitive profiles of VR users, and a facilitated achievement of the full-body illusion when using preferentially a cardio-visual or a visuo-tactile stimulation. As a main finding, no particular matching between perceptive-cognitive profiles and the type of illusion was evidenced, and both types of interoceptive and exteroceptive stimulations induced the same global effect on components of bodily self-consciousness. A possible misidentification of distinctive effects due to the total absence of illusion in our conditions is unlikely. First, participants self-reported feelings of identification with the avatar in agreement with the literature. Then, after multisensory stimulations, they achieved faster response times during the own-body transformation task (Figures 2, 3) and a marked heart rate deceleration when their avatar was threatened (Figure 4).

FIGURE 4. RR intervals (heart rate) presented as a percentage of the baseline (last 20 s before the appearance of the spear in the VR scene). Data presented here correspond to the first stimulation, either cardio-visual (top) or visuo-tactile (bottom). SD is plotted as a shaded area.
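The normalization described in the Figure 4 caption can be sketched as follows: each RR interval is divided by the mean RR interval over a baseline window that ends when the threat appears. The function and parameter names, the units (seconds for beat times, milliseconds for RR intervals) and the sample values are assumptions made for illustration.

```python
def rr_percent_of_baseline(beat_times_s, rr_ms, event_time_s, baseline_s=20.0):
    """Express each RR interval as a percentage of the pre-event baseline mean.

    beat_times_s: time of each beat (s); rr_ms: RR interval ending at that beat (ms).
    The baseline is the mean RR over [event_time_s - baseline_s, event_time_s).
    """
    baseline = [rr for t, rr in zip(beat_times_s, rr_ms)
                if event_time_s - baseline_s <= t < event_time_s]
    if not baseline:
        raise ValueError("no beats found in the baseline window")
    baseline_mean = sum(baseline) / len(baseline)
    return [100.0 * rr / baseline_mean for rr in rr_ms]

# Hypothetical recording: the threat appears at t = 3 s, with a 3 s baseline
times = [0.0, 1.0, 2.0, 3.0, 4.0]
rr = [800, 800, 800, 1000, 900]
normalized = rr_percent_of_baseline(times, rr, event_time_s=3.0, baseline_s=3.0)
print(normalized)
```

Values above 100% correspond to longer RR intervals than at baseline, that is, to a slower heart rate (deceleration).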

The three main components of bodily self-consciousness usually evaluated separately in the literature, self-location (as assessed with the mental imagery task), perspective taking (as assessed with the own-body transformation task) and self-identification (as assessed by the initial heart rate deceleration when the avatar was threatened), showed the expected independence. More importantly, none of them showed an obvious correlation with individual dispositions covering a large panel of individual properties, from empathy and interoception to VR-specific cognitive abilities (Figure 5). As a consequence, we showed no superiority of a cardio-visual or a visuo-tactile stimulation to achieve a full-body illusion, at least in sports students, which argues against a greater susceptibility to either interoceptive or exteroceptive bodily signals. Interoception has been linked to physical activity (Georgiou et al., 2015; Wallman-Jones et al., 2021), which itself impacts the malleability of body representations (Tsakiris et al., 2011; Suzuki et al., 2013). Since the cardio-visual illusion involves heartbeats as the congruent interoceptive and visual stimulus, the individual’s ability to discern their own heartbeats might predict the outcomes of this particular illusion. With the approach used here, however, we failed to highlight such an effect, as evidenced by the absence of relation between interoception scores and bodily self-consciousness measures. Moreover, interoception (measured as the ability to detect one’s heartbeats) did not have an impact on the answers to the illusion questionnaire items relative to the cardio-visual stimulation. Generally, none of interoception, sports practice, gender, empathy, visual field dependence in VR, or the ability for mental rotations in VR correlated with bodily self-consciousness components when affected by VR-based illusions (Figures 5, 6).

FIGURE 5. Principal Component Analysis with the two main dimensions projected on orthogonal axes. OBT, MIT, and HRD represent the mean response time performances in the own-body transformation task (expressed as Pre-Post/Pre), the difference between perceived self-location before and after the illusion, and the heart rate deceleration following the threatening stimulus, respectively. Other measures (individual dispositions) are represented as supplementary variables.
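The OBT index named in the Figure 5 caption, "Pre-Post/Pre", is read here as (Pre − Post)/Pre, i.e., the relative shortening of the mean response time. This reading is an assumption, and the function name and values below are hypothetical.

```python
def relative_improvement(pre_ms, post_ms):
    """Relative change in mean response time, read here as (Pre - Post) / Pre.

    Positive values mean faster responses after the stimulation.
    """
    if pre_ms <= 0:
        raise ValueError("pre_ms must be positive")
    return (pre_ms - post_ms) / pre_ms

# Hypothetical participant: 1000 ms before and 905 ms after the stimulation
index = relative_improvement(1000.0, 905.0)
print(f"{index:.3f}")
```

Dividing by the Pre value makes the index unitless, so that participants with different baseline speeds can be compared in the same analysis.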

FIGURE 6. 95% confidence ellipses showing the distribution of participants (numbered) according to distinct individual profiles (top-left: gender/top-right: sports type/bottom-left: videogames habits/bottom-right: multisensory stimulation). Largely overlapping ellipses indicate the absence of differences between two sample groups according to a specific variable. Larger dots indicate the barycenter of an ellipse.
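For readers unfamiliar with this kind of plot, the sketch below shows one common way to obtain a 95% ellipse and its barycenter from the coordinates of a group of participants on two PCA axes. It is not necessarily the procedure used for Figure 6: it assumes a bivariate normal approximation with the chi-square quantile for 2 degrees of freedom, and the function name, the `for_barycenter` option and the sample points are hypothetical.

```python
import math

# 95% quantile of the chi-square distribution with 2 df (about 5.99)
CHI2_95_2DF = -2.0 * math.log(0.05)

def confidence_ellipse(points, for_barycenter=False):
    """Return (center, semi_major, semi_minor, angle_rad) of a 95% ellipse.

    points: list of (x, y) coordinates, e.g. participants on two PCA axes.
    for_barycenter: if True, the covariance is divided by n so the ellipse
    describes uncertainty about the group mean instead of the spread of points.
    """
    n = len(points)
    if n < 3:
        raise ValueError("need at least 3 points")
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    # Sample variances and covariance
    sxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)
    if for_barycenter:
        sxx, syy, sxy = sxx / n, syy / n, sxy / n
    # Closed-form eigenvalues of the 2x2 covariance matrix
    half_trace = (sxx + syy) / 2.0
    root = math.sqrt(((sxx - syy) / 2.0) ** 2 + sxy ** 2)
    lam1 = half_trace + root
    lam2 = max(half_trace - root, 0.0)  # guard against tiny negative rounding
    angle = 0.5 * math.atan2(2.0 * sxy, sxx - syy)  # major-axis orientation
    semi_major = math.sqrt(CHI2_95_2DF * lam1)
    semi_minor = math.sqrt(CHI2_95_2DF * lam2)
    return (mx, my), semi_major, semi_minor, angle

# Hypothetical PCA coordinates for one group of participants
group = [(0.0, 0.0), (2.0, 0.0), (0.0, 1.0), (2.0, 1.0)]
center, a, b, theta = confidence_ellipse(group)
print(center, round(a, 2), round(b, 2), theta)
```

Two groups whose ellipses largely overlap, and whose barycenters fall inside each other's ellipse, would be read as not differing on the mapped dimensions.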

Testing two types of stimulation (here visuo-tactile and cardio-visual) in the same population, which is new here, required repeating the main procedure twice and making choices. Although a significant “familiarization effect” was observed when participants were submitted to the alternative illusion at their second passage (mainly for heart rate deceleration and own-body transformations), the absence of interaction effects between stimulation-induced changes and order of passage in our conditions shows that being exposed to cardio-visual or visuo-tactile stimulation first had no influence on the observed changes in components of bodily self-consciousness when they were present.

Overall, the above results may constitute relevant information for designing VR interventions using avatars, considering that no particular perceptual or behavioral profile would require a particular design of the VR activity, at least on average. In addition, the methodology used here, including some improvements on VR-based tasks, may serve as a basis to investigate other populations or identify the possible need to adapt VR multisensory stimulations to deficiency or disease.

Although the full-body illusion seems to be elicited without any nuance related to the type of stimulation, we cannot ignore individual stimulation-induced changes of the components of the BSC. Longo et al. (2009) demonstrated the phenomenon with the rubber hand illusion, using a PCA and an illusion questionnaire. An absence of covariation between components called ownership, location and agency was observed. In particular, there is a dissociation between ownership and perceived location. The different components of bodily self-consciousness are impacted by multisensory stimulations, but not necessarily to the same extent. Based on PCA and ellipse mapping as presented here, one could further explore individual behavior, which would probably require a deeper analysis with a measure of reproducibility at the individual level and goes beyond the scope of the present study. The way in which neural integration balanced visual, vestibular and proprioceptive information in our sports students might have influenced their ability to integrate multisensory information from visual and tactile stimuli. Again, as a main observation here, we found no correlations between individual dispositions assessed with specific pre-tests and measures of self-location, perspective taking and self-identification. As an additional finding, the 95% ellipses grouping subjects according to particular phenotypes (gender, videogames habits, physical activity type) did not differ when the three measured dimensions of bodily self-consciousness were taken into account (Figure 6). A tentative hypothesis is that bodily self-consciousness is a complex and multivariate construct that cannot be reduced to a small number of factors. Even if empathy and interoception were occasionally depicted as co-varying with the BSC following an illusion (Suzuki et al., 2013; Nakul et al., 2020; Heydrich et al., 2021), there are conflicting results that show a lack of correlation (Ainley et al., 2015).

Lastly, it is interesting to note for further developments of VR interventions that the individual ability for mental rotation, assessed by our original VR task, did not correlate with performance in the own-body transformation task, which was designed for the user to take the perspective of the avatar (Deroualle et al., 2015; Heydrich et al., 2021). Unlike mental rotations of 3D forms, taking the perspective of the avatar is an embodied process (Kessler and Rutherford, 2010); more than just depicting the ability of a participant to mentally rotate objects, own-body transformation scores effectively reflect perspective taking abilities, and therefore allow embodiment to be quantified.

This study is not without limitations. Despite the body of supporting evidence in previous literature that encouraged the present study, achieving a visuo-tactile or a cardio-visual illusion is neither automatic nor ubiquitous. Yet, as we explain in the Introduction, we are not convinced that comparing synchronous with (additional) asynchronous stimulations proves that an illusion has been reached in the synchronous situation. This leaves researchers with no perfect method to assess the full-body illusion. By consensus, changes in self-location (not observed here), perspective taking (observed here) or self-identification (observed here) have served as suitable indices to accept the notion that the multisensory construct associated with the illusion has been altered. Perhaps the main support for further research in our experiment lies in the demonstration that a number of VR tasks are available that allow a quantitative approach to individual responses to a multisensory illusion, which could feed further explorations.

Building on the body of evidence in the literature highlighting the effects of the full-body illusion (achieved with both visuo-tactile and cardio-visual stimulation) on the components of bodily self-consciousness, we developed VR-based testing of self-location, perspective taking and self-identification. In a population of young athletes, the modification of the BSC components following a multisensory stimulation did not depend on whether interoceptive or exteroceptive sensory inputs were chosen. Whatever the individual perceptual and behavioral profiles, self-location, perspective taking and self-identification represented main and independent components of the BSC, through which the congruence in visual and tactile or cardiac sensory inputs in VR operated in non-specific ways.

Both cardio-visual and visuo-tactile stimulation seem equally effective to induce embodiment, as evidenced by the evolution of bodily self-consciousness components. This result opens promising perspectives for improving VR applications, as the method used to induce embodiment can be selected to fit different needs and constraints. Visuo-tactile stimulation often requires the presence of an experimenter, while cardio-visual stimulation can be done without external intervention but requires specific equipment. Inducing embodiment in a more ecological way, as well as using more relevant methods to measure it, could be a great asset in many scenarios, for example, in the fields of medicine, education and motor learning. In particular, understanding the links between motor performance and bodily self-consciousness when embodying an avatar could help develop future VR applications for rehabilitation and sports training.

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the Faculte des STAPS Institutional Review Board Univ. Bordeaux. The patients/participants provided their written informed consent to participate in this study.

LA and YP conceived the study. LA, YP, AB, and VD-A wrote the manuscript. LA, FL, and YP designed the study. AB collected the data. LA, YP, AB, and VD-A analyzed the data. All authors approved the final manuscript.

A CIFRE grant No. 2019/0682 was awarded by the ANRT to the Centre Aquitain des Technologies de l’Information et Electroniques, to support the work of graduate student YP.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Adenauer, H., Catani, C., Keil, J., Aichinger, H., and Neuner, F. (2010). Is freezing an adaptive reaction to threat? Evidence from heart rate reactivity to emotional pictures in victims of war and torture. Psychophysiology 47, 315–322. doi:10.1111/j.1469-8986.2009.00940.x

Aglioti, S., DeSouza, J. F. X., and Goodale, M. A. (1995). Size-contrast illusions deceive the eye but not the hand. Curr. Biol. 5, 679–685. doi:10.1016/S0960-9822(95)00133-3

Ainley, V., Maister, L., and Tsakiris, M. (2015). Heartfelt empathy? No association between interoceptive awareness, questionnaire measures of empathy, reading the mind in the eyes task or the director task. Front. Psychol. 6, 554. doi:10.3389/fpsyg.2015.00554

Aspell, J. E., Heydrich, L., Marillier, G., Lavanchy, T., Herbelin, B., and Blanke, O. (2013). Turning body and self inside out: Visualized heartbeats alter bodily self-consciousness and tactile perception. Psychol. Sci. 9, 2445–2453. doi:10.1177/0956797613498395

Aspell, J. E., Lenggenhager, B., and Blanke, O. (2009). Keeping in touch with one’s self: Multisensory mechanisms of self-consciousness. PLoS ONE 4, e6488. doi:10.1371/journal.pone.0006488

Baron-Cohen, S., and Wheelwright, S. (2004). The empathy quotient: An investigation of adults with asperger syndrome or high functioning autism, and normal sex differences. J. Autism Dev. Disord. 34, 163–175. doi:10.1023/B:JADD.0000022607.19833.00

Barra, J., Giroux, M., Metral, M., Cian, C., Luyat, M., Kavounoudias, A., et al. (2020). Functional properties of extended body representations in the context of kinesthesia. Neurophysiol. Clin. 50, 455–465. doi:10.1016/j.neucli.2020.10.011

Blanke, O., and Metzinger, T. (2009). Full-body illusions and minimal phenomenal selfhood. Trends Cogn. Sci. 13, 7–13. doi:10.1016/j.tics.2008.10.003

Blanke, O., Mohr, C., Michel, C. M., Pascual-Leone, A., Brugger, P., Seeck, M., et al. (2005). Linking out-of-body experience and self processing to mental own-body imagery at the temporoparietal junction. J. Neurosci. 25, 550–557. doi:10.1523/JNEUROSCI.2612-04.2005

Blanke, O. (2012). Multisensory brain mechanisms of bodily self-consciousness. Nat. Rev. Neurosci. 13, 556–571. doi:10.1038/nrn3292

Blefari, M. L., Martuzzi, R., Salomon, R., Bello-Ruiz, J., Herbelin, B., Serino, A., et al. (2017). Bilateral Rolandic operculum processing underlying heartbeat awareness reflects changes in bodily self-consciousness. Eur. J. Neurosci. 45, 1300–1312. doi:10.1111/ejn.13567

Bouchard, S., St-Jacques, J., Renaud, P., and Wiederhold, B. K. (2009). Side effects of immersions in virtual reality for people suffering from anxiety disorders. J. CyberTherapy Rehabil. 2, 127–138. doi:10.1037/10858-005

Bradley, M. M., Codispoti, M., Cuthbert, B. N., and Lang, P. J. (2001). Emotion and motivation I: Defensive and appetitive reactions in picture processing. Emotion 1, 276–298. doi:10.1037/1528-3542.1.3.276

Carey, M., Crucianelli, L., Preston, C., and Fotopoulou, A. (2019). The effect of visual capture towards subjective embodiment within the full body illusion. Sci. Rep. 9, 2889. doi:10.1038/s41598-019-39168-4

Deroualle, D., Borel, L., Devèze, A., and Lopez, C. (2015). Changing perspective: The role of vestibular signals. Neuropsychologia 79, 175–185. doi:10.1016/j.neuropsychologia.2015.08.022

Ehrsson, H. H. (2007). The experimental induction of out-of-body experiences. Science 317, 1048. doi:10.1126/science.1142175

Falconer, C. J., and Mast, F. W. (2012). Balancing the mind: vestibular induced facilitation of egocentric mental transformations. Exp. Psychol. 59, 332–339. doi:10.1027/1618-3169/a000161

Faul, F., Erdfelder, E., Lang, A.-G., and Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191. doi:10.3758/BF03193146

Feng, J., Spence, I., and Pratt, J. (2007). Playing an action video game reduces gender differences in spatial cognition. Psychol. Sci. 18, 850–855. doi:10.1111/j.1467-9280.2007.01990.x

Fittipaldi, S., Abrevaya, S., Pascariello, G. O., Hesse, E., Birba, A., Salamone, P., et al. (2020). A multidimensional and multi-feature framework for cardiac interoception. NeuroImage 212, 116677. doi:10.1016/j.neuroimage.2020.116677

Georgiou, E., Matthias, E., Kobel, S., Kettner, S., Dreyhaupt, J., Steinacker, J. M., et al. (2015). Interaction of physical activity and interoception in children. Front. Psychol. 6, 502. doi:10.3389/fpsyg.2015.00502

Green, C. S., and Bavelier, D. (2007). Action-video-game experience alters the spatial resolution of vision. Psychol. Sci. 18, 88–94. doi:10.1111/j.1467-9280.2007.01853.x

Hecht, D., and Reiner, M. (2007). Field dependency and the sense of object-presence in haptic virtual environments. Cyberpsychol. Behav. 10, 243–251. doi:10.1089/cpb.2006.9962

Heydrich, L., Aspell, J. E., Marillier, G., Lavanchy, T., Herbelin, B., and Blanke, O. (2018). Cardio-visual full body illusion alters bodily self-consciousness and tactile processing in somatosensory cortex. Sci. Rep. 8, 9230. doi:10.1038/s41598-018-27698-2

Heydrich, L., Walker, F., Blättler, L., Herbelin, B., Blanke, O., and Aspell, J. E. (2021). Interoception and empathy impact perspective taking. Front. Psychol. 11, 599429. doi:10.3389/fpsyg.2020.599429

Keenaghan, S., Bowles, L., Crawfurd, G., Thurlbeck, S., Kentridge, R. W., and Cowie, D. (2020). My body until proven otherwise: Exploring the time course of the full body illusion. Conscious. Cogn. 78, 102882. doi:10.1016/j.concog.2020.102882

Kennedy, R. S. (1975). Motion sickness questionnaire and field independence scores as predictors of success in naval aviation training. Aviat. Space Environ. Med. 46, 1349–1352.

Kessler, K., and Rutherford, H. (2010). The two forms of visuo-spatial perspective taking are differently embodied and subserve different spatial prepositions. Front. Psychol. 1, 213. doi:10.3389/fphys.2010.00213

Lenggenhager, B., Mouthon, M., and Blanke, O. (2009). Spatial aspects of bodily self-consciousness. Conscious. Cogn. 18, 110–117. doi:10.1016/j.concog.2008.11.003

Lenggenhager, B., Tadi, T., Metzinger, T., and Blanke, O. (2007). Video ergo sum: Manipulating bodily self-consciousness. Science 317, 1096–1099. doi:10.1126/science.1143439

Lochhead, I., Hedley, N., Çöltekin, A., and Fisher, B. (2022). The immersive mental rotations test: Evaluating spatial ability in virtual reality. Front. Virtual Real. 3. doi:10.3389/frvir.2022.820237

Longo, M. R., Betti, V., Aglioti, S. M., and Haggard, P. (2009). Visually induced analgesia: Seeing the body reduces pain. J. Neurosci. 29, 12125–12130. doi:10.1523/JNEUROSCI.3072-09.2009

Lopez, C., Falconer, C. J., Deroualle, D., and Mast, F. W. (2015). In the presence of others: Self-location, balance control and vestibular processing. Neurophysiol. Clinique/Clinical Neurophysiol. 45, 241–254. doi:10.1016/j.neucli.2015.09.001

Macauda, G., Bertolini, G., Palla, A., Straumann, D., Brugger, P., and Lenggenhager, B. (2015). Binding body and self in visuo-vestibular conflicts. Eur. J. Neurosci. 41, 810–817. doi:10.1111/ejn.12809

Maneuvrier, A., Decker, L. M., Ceyte, H., Fleury, P., and Renaud, P. (2020). Presence promotes performance on a virtual spatial cognition task: Impact of human factors on virtual reality assessment. Front. Virtual Real. 1, 571713. doi:10.3389/frvir.2020.571713

Maselli, A., and Slater, M. (2013). The building blocks of the full body ownership illusion. Front. Hum. Neurosci. 7, 83. doi:10.3389/fnhum.2013.00083

Murphy, J., Brewer, R., Plans, D., Khalsa, S. S., Catmur, C., and Bird, G. (2020). Testing the independence of self-reported interoceptive accuracy and attention. Q. J. Exp. Psychol. (Hove). 73, 115–133. doi:10.1177/1747021819879826

Nakul, E., Orlando-Dessaints, N., Lenggenhager, B., and Lopez, C. (2020). Measuring perceived self-location in virtual reality. Sci. Rep. 10, 6802. doi:10.1038/s41598-020-63643-y

O’Kane, S. H., and Ehrsson, H. H. (2021). The contribution of stimulating multiple body parts simultaneously to the illusion of owning an entire artificial body. PLOS ONE 16, e0233243. doi:10.1371/journal.pone.0233243

Pomes, A., and Slater, M. (2013). Drift and ownership toward a distant virtual body. Front. Hum. Neurosci. 7, 908. doi:10.3389/fnhum.2013.00908

Pratviel, Y., Deschodt-Arsac, V., Larrue, F., and Arsac, L. M. (2021). Reliability of the Dynavision task in virtual reality to explore visuomotor phenotypes. Sci. Rep. 11, 587. doi:10.1038/s41598-020-79885-9

Preuss, N., and Ehrsson, H. H. (2019). Full-body ownership illusion elicited by visuo-vestibular integration. J. Exp. Psychol. Hum. Percept. Perform. 45, 209–223. doi:10.1037/xhp0000597

R Core Team (2020). R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing.

Ronchi, R., Bello-Ruiz, J., Lukowska, M., Herbelin, B., Cabrilo, I., Schaller, K., et al. (2015). Right insular damage decreases heartbeat awareness and alters cardio-visual effects on bodily self-consciousness. Neuropsychologia 70, 11–20. doi:10.1016/j.neuropsychologia.2015.02.010

Salomon, R., Lim, M., Pfeiffer, C., Gassert, R., and Blanke, O. (2013). Full body illusion is associated with widespread skin temperature reduction. Front. Behav. Neurosci. 7, 65. doi:10.3389/fnbeh.2013.00065

Salomon, R., Noel, J.-P., Łukowska, M., Faivre, N., Metzinger, T., Serino, A., et al. (2017). Unconscious integration of multisensory bodily inputs in the peripersonal space shapes bodily self-consciousness. Cognition 166, 174–183. doi:10.1016/j.cognition.2017.05.028

Schandry, R. (1981). Heart beat perception and emotional experience. Psychophysiology 18, 483–488. doi:10.1111/j.1469-8986.1981.tb02486.x

Schubert, T., Friedmann, F., and Regenbrecht, H. (2001). The experience of presence: Factor Analytic insights. Presence. (Camb). 10, 266–281. doi:10.1162/105474601300343603

Seiryte, A., and Rusconi, E. (2015). The Empathy Quotient (EQ) predicts perceived strength of bodily illusions and illusion-related sensations of pain. Pers. Individ. Dif. 77, 112–117. doi:10.1016/j.paid.2014.12.048

Serino, A., Alsmith, A., Costantini, M., Mandrigin, A., Tajadura-Jimenez, A., and Lopez, C. (2013). Bodily ownership and self-location: Components of bodily self-consciousness. Conscious. Cogn. 22, 1239–1252. doi:10.1016/j.concog.2013.08.013

Shepard, R. N., and Metzler, J. (1971). Mental rotation of three-dimensional objects. Science 171, 701–703. doi:10.1126/science.171.3972.701

Slater, M., Spanlang, B., Sanchez-Vives, M. V., and Blanke, O. (2010). First person experience of body transfer in virtual reality. PLoS ONE 5, e10564. doi:10.1371/journal.pone.0010564

Suzuki, K., Garfinkel, S. N., Critchley, H. D., and Seth, A. K. (2013). Multisensory integration across exteroceptive and interoceptive domains modulates self-experience in the rubber-hand illusion. Neuropsychologia 51, 2909–2917. doi:10.1016/j.neuropsychologia.2013.08.014

Tajadura-Jiménez, A., Grehl, S., and Tsakiris, M. (2012). The other in me: Interpersonal multisensory stimulation changes the mental representation of the self. PLOS ONE 7, e40682. doi:10.1371/journal.pone.0040682

Tsakiris, M., Jiménez, A. T., and Costantini, M. (2011). Just a heartbeat away from one’s body: interoceptive sensitivity predicts malleability of body-representations. Proc. R. Soc. B 278, 2470–2476. doi:10.1098/rspb.2010.2547

Tsakiris, M. (2010). My body in the brain: A neurocognitive model of body-ownership. Neuropsychologia 48, 703–712. doi:10.1016/j.neuropsychologia.2009.09.034

Tsakiris, M., Schütz-Bosbach, S., and Gallagher, S. (2007). On agency and body-ownership: Phenomenological and neurocognitive reflections. Conscious. Cogn. 16, 645–660. doi:10.1016/j.concog.2007.05.012

van Elk, M., and Blanke, O. (2014). Imagined own-body transformations during passive self-motion. Psychol. Res. 78, 18–27. doi:10.1007/s00426-013-0486-8

Wallman-Jones, A., Perakakis, P., Tsakiris, M., and Schmidt, M. (2021). Physical activity and interoceptive processing: Theoretical considerations for future research. Int. J. Psychophysiol. 166, 38–49. doi:10.1016/j.ijpsycho.2021.05.002

Keywords: multisensory integration, embodiment, virtual avatar, self location, self identification, perspective taking, interoception, mental rotation

Citation: Pratviel Y, Bouni A, Deschodt-Arsac V, Larrue F and Arsac LM (2022) Avatar embodiment in VR: Are there individual susceptibilities to visuo-tactile or cardio-visual stimulations?. Front. Virtual Real. 3:954808. doi: 10.3389/frvir.2022.954808

Received: 27 May 2022; Accepted: 11 August 2022;

Published: 06 September 2022.

Edited by:

Sara Arlati, National Research Council (CNR), Italy

Reviewed by:

Michel Guerraz, Savoy Mont Blanc University, France

Copyright © 2022 Pratviel, Bouni, Deschodt-Arsac, Larrue and Arsac. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Laurent M. Arsac
