ORIGINAL RESEARCH article

Front. Virtual Real., 27 April 2022

Sec. Virtual Reality and Human Behaviour

Volume 3 - 2022 | https://doi.org/10.3389/frvir.2022.860872

This article is part of the Research Topic "Beyond audiovisual: novel multisensory stimulation techniques and their applications".

The perception of one’s own body is a complex mechanism that can be disturbed by conflicting sensory information and lead to illusory (mis)perceptions. Prominent models of multisensory integration propose that sensory streams are integrated according to their reliability by approximating Bayesian inference. As such, when considering self-attribution of seen motor actions, previous works argue in favor of visual dominance over other sensations and internal cues. In the present work, we use virtual reality and a haptic glove to investigate the influence of active haptic feedback on one’s visual and agency judgments over a performed finger action under experimentally manipulated visual and haptic feedback. Overall, the data confirm that vision dominates for agency judgment in conditions of multisensory conflict. Interestingly, we also show that participants’ visual judgment over their finger action is sensitive to multisensory conflicts (vision, proprioception, motor afferent signals, and haptic perception), thus bringing an important nuance to the widely accepted view on a general visual dominance.

Bodily perception is a complex mechanism that can be disturbed by multimodal conflicts. Remarkably, the felt position of one’s hand can be altered by inducing ownership over a rubber hand counterpart (Botvinick and Cohen, 1998). In the rubber hand illusion (RHI), multimodal conflicts are introduced between visual, tactile, and proprioceptive signals, so that the congruence of vision and touch can be considered to be more reliable than proprioception alone, thus giving rise to the ownership illusion of the rubber hand and to a visual dominance for locating the limb. This interpretation of the neural mechanism of the RHI is supported by prominent understanding on multisensory integration, according to which the brain integrates multiple sensory streams weighted by their reliability and approximating Bayesian inference (Knill and Pouget, 2004). A Bayesian brain model can indeed explain the proprioceptive drift to come from the computation of the felt position of the hand using a weighted sum of available sensory signals (Holmes and Spence, 2005). Extending this principle, if a sensory modality is added and is reliable enough, it can influence the multisensory integration, and thus affect one’s feeling of ownership of one’s limbs as well as its felt location. For instance, it can be the case when adding haptic feedback in a Virtual Reality (VR) context in which the real movements of one’s hand are not perfectly matching with the provided visual feedback (i.e., visuo-proprioceptive conflict). In this situation, careful manipulation of the haptic information can influence the multisensory integration by making one configuration more reliable (e.g., congruent visuo-haptic feedback), thus influencing the acceptance of the proprioceptive distortion (Lee et al., 2015).

In such Bayesian framework contexts, when vision is supposed to be reliable, the integration of conflicting visuo-motor information may lead to a visual dominance over afferent motor signals and proprioception. This phenomenon was indeed exemplified by Salomon et al. (2016) by visually displaying a virtual hand that did not move according to the subject’s actual movement. They could observe that, when the movement of two fingers was swapped (e.g., index finger moving instead of the middle one), participants could wrongly self-attribute the seen motor action. Although striking, this experiment does not allow concluding on a generalized visual dominance for agency judgments because the haptic feedback was not manipulated so its influence could not be evaluated. The multisensory integration in Salomon et al. (2016) indeed involved internal cues (proprioceptive and afferent motor information), visual feedback (observed finger movement, in conflict with the internal cues), but also a passive haptic feedback from touching the table on which the hand was resting. To elaborate on this work and evaluate how haptic feedback influences multisensory integration and, in particular, if it can alter visual dominance for motor perception, we propose to study more deeply the multisensory integration leading to the misattribution of virtual fingers’ movements in an experimental paradigm involving systematic haptic feedback manipulation.

This paper is organized as follows. The next section presents the related work about multisensory integration in cognitive neuroscience and multisensory conflict. Section 3 describes the experiment, including the setup, the task and conditions, the protocol, and our hypotheses. The statistical analysis is detailed in Section 4, while results are discussed in Section 5, followed by the conclusion in Section 6.

Multisensory integration designates the brain’s process of converting a given set of sensory inputs into a unified (integrated) perception. A prominent model of this process is based on Bayesian inference, proposing that incoming sensory signals are weighted by the inverse of their variance, i.e., their reliability, thus giving more weight to more precise modalities (Knill and Pouget, 2004; Friston, 2012). Illustrating this phenomenon, the rubber hand illusion involves presenting participants with a rubber hand placed near their hidden real hand (Botvinick and Cohen, 1998). The illusion of ownership for the rubber hand is then induced by synchronously brushing the subject’s real hand and its rubber counterpart. The illusion arises from a multisensory conflict in which the visuo-tactile congruency is considered more reliable than proprioception. Notably, in this particular context of illusory ownership toward a virtual bodily counterpart, a reciprocal link between multisensory integration and illusory ownership was found: the ownership illusion emerges from multisensory integration, while in return it influences upcoming multisensory processing. For example, Maselli et al. (2016) showed that the ownership illusion acts as a “unity assumption” and, congruently with Bayesian causal inference models, enlarges the temporal window for integrating visuo-tactile stimuli.
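The reliability-weighted integration described above can be illustrated with a minimal sketch. It assumes independent Gaussian cues, which is the textbook simplification of the Bayesian account rather than anything specified in this study; the function name `integrate_cues` and the numbers in the example are hypothetical.

```python
def integrate_cues(estimates, variances):
    """Fuse independent cues by inverse-variance (reliability) weighting.

    Assumption: each cue is an unbiased Gaussian estimate of the same quantity.
    Returns the fused estimate, its variance, and the weight given to each cue.
    """
    if not estimates or len(estimates) != len(variances):
        raise ValueError("need one variance per estimate")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be strictly positive")
    reliabilities = [1.0 / v for v in variances]      # reliability = 1 / variance
    total = sum(reliabilities)
    weights = [r / total for r in reliabilities]      # weights sum to 1
    fused = sum(w * x for w, x in zip(weights, estimates))
    fused_variance = 1.0 / total                      # never larger than the best cue
    return fused, fused_variance, weights


# Hypothetical example: vision locates the hand at 0 cm (variance 1),
# proprioception locates it at 10 cm (variance 4).
position, variance, weights = integrate_cues([0.0, 10.0], [1.0, 4.0])
# The fused position is pulled toward the more reliable (visual) cue.
```

Under these assumptions, the more precise cue receives the larger weight, which is one way to read the proprioceptive drift toward the seen hand.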

In the particular context of the rubber hand illusion (RHI), the ownership illusion induces a proprioceptive drift, meaning that one’s perception of one’s hand location shifts toward the rubber hand location. But proprioceptive drifts had been observed earlier in a similar paradigm, without tactile stimulation, using a prism to manipulate one’s static hand position (Hay et al., 1965). This phenomenon was later confirmed, and more deeply investigated, by Holmes and Spence (2005), who used a mirror to modulate the visual position of the participants’ right hand. They showed that participants estimate their hand position to be closer to the visual information when asked to move their fingers than when only presented with a passive visual hand reflection. Taken together, these findings could lead to the idea that vision necessarily dominates over proprioception. It is indeed often considered that, in the context of VR, vision is more reliable than other sensory cues, leading to a “visual dominance,” or “visual capture,” that overrides other factors of movement perception (Burns et al., 2005). But other factors and combinations of conditions are to be considered, as they can increase or decrease the reliability associated with a specific modality. As counterexamples, it is known that the spatial direction of the visual distortion strongly impacts the visuo-proprioceptive integration for the judgment of hand location (van Beers et al., 2002), and that haptic perception can dominate vision when one is judging shapes if two radically different configurations are presented to vision and touch (Heller, 1983).

More dramatically, besides the manipulation of one’s proprioceptive judgment, the ownership illusion can also enable manipulations of one’s sense of authorship toward a movement, namely one’s sense of agency (Blanke and Metzinger, 2009). Illustrating this possibility, and laying the foundation for the present research, Salomon et al. (2016) manipulated their participants’ sense of agency using an anatomical distortion of their hand’s movements. Participants were asked to rest their right hand on a table and to lift either their index or middle finger. Their real hand was hidden while they were presented with a virtual representation of their hand. On trials with distortion, the consistency principle of agency (Jeunet et al., 2018) was challenged by visually moving the virtual hand’s index finger if the participant moved the middle finger, and conversely. Under these circumstances, Salomon et al. showed not only that participants would experience a lower sense of agency, but that they could wrongly self-attribute the motor action of the virtual finger they saw moving. This motor illusion is a typical example of visual capture in a case of conflicting integration, as the visual stimulus here dominates over tactile (passive tactile feedback when bringing the moved finger back onto the table), proprioceptive, and motor afferent signals. These results, however, do not allow us to draw conclusions on the influence of haptic feedback in the resolution of multisensory conflicts. We thus propose to investigate whether adding active haptic feedback (a reaction force) could influence the multisensory conflict leading to agency judgment and motor attribution, thereby providing an important nuance to the generally accepted view on visual dominance, and opening new research perspectives on agency manipulations.

Our protocol replicated Salomon et al. (2016) and extended it with congruent and incongruent active haptic feedback in order to test whether or not such active haptic feedback can dominate vision in a motor perception context.

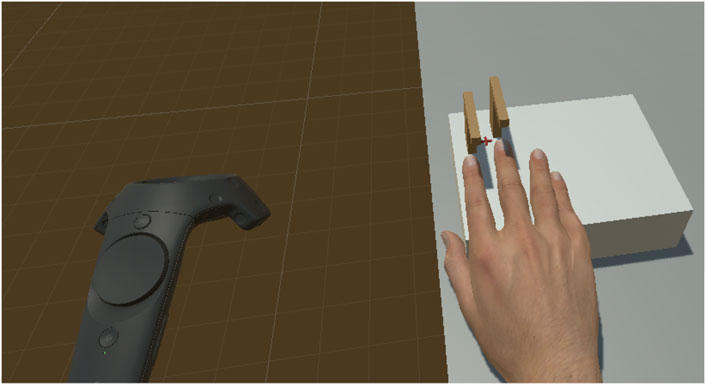

Participants sat on a chair in front of a table, and were equipped with an HTC Vive Pro Eye Head Mounted Display (HMD). They were wearing a Dexmo glove from Dexta Robotics (https://www.dextarobotics.com/) on their right hand, on which an HTC Vive tracker was fixed (Figure 1).

The Dexmo haptic glove can prevent a finger from moving, or apply a force of varying intensity on the user’s fingertips. We used the finger-tracking data it provides to animate the virtual hand [androgynous right hand from Schwind et al. (2018)].

The participants’ right wrist was kept static using a strap attached to the table. The right hand was resting on a rectangular surface (Figure 2), while the left one was holding an HTC Vive controller to answer questions.

Participants saw a virtual environment through a virtual reality head mounted display (HMD) from a first person perspective (Figure 3). The virtual scene was composed of a virtual table placed congruently with the real table, on which a rectangular prop was placed. On top of this prop were attached two virtual obstacles (Figure 2) that the virtual index and middle fingers would hit when being lifted. The eye tracking system was used to ensure that participants were continuously looking at a red fixation cross located between the index and middle fingers (Figure 3).

FIGURE 3. The virtual scene viewed from the first person perspective; during the task, participants had to look at the red cross located on the surface between the index and middle fingers.

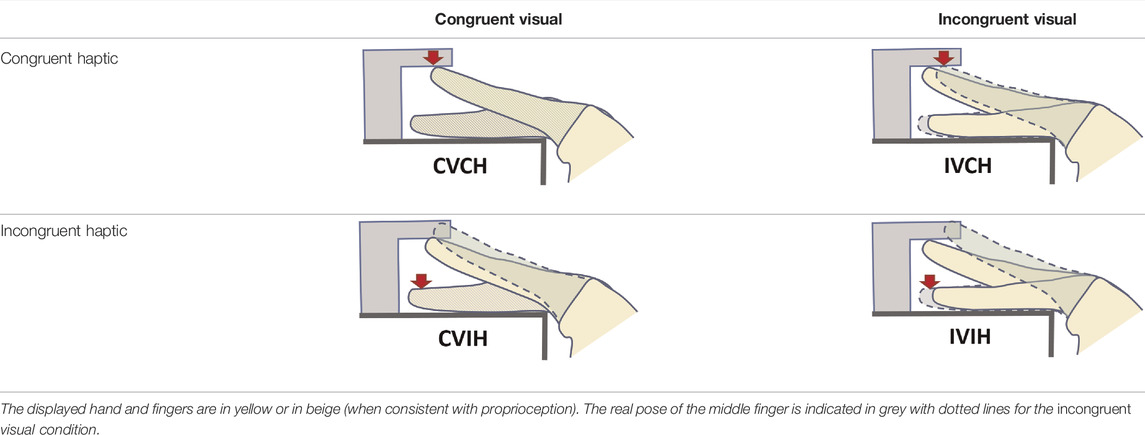

During the experiment, participants were asked to lift either their index or middle finger until it touched the corresponding virtual obstacle, and then to bring their finger back onto the table. When a participant lifted the index or middle finger, the virtual hand would simultaneously lift the same finger (congruent visual) or the other finger (incongruent visual). When the moving virtual finger touched the obstacle, haptic feedback was applied either on the lifted finger (congruent haptic) or on the other fingertip (incongruent haptic). That is, when the haptic feedback is congruent, the glove applies a force on the moving finger to prevent it from passing through the virtual obstacle. Conversely, when the haptic feedback is incongruent, the moving finger does not receive any haptic feedback from the glove, but a force is applied on the resting finger’s tip, pushing it against the platform. Our experiment follows a 2 × 2 factorial design, with two factors (haptic and visual feedback), each manipulated with two levels (congruent or incongruent with the movement actually performed by the participant). Table 1 sums up the experimental conditions.

TABLE 1. Experimental conditions illustrated in the context of the subject moving their index finger (highlighted in green with plain line if displayed or dotted line otherwise).
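As a reading aid for the 2 × 2 design, the following sketch maps the finger a participant actually moves to the finger that is displayed moving and the finger that receives the force. The condition acronyms (CVCH, CVIH, IVCH, IVIH) come from the article; the function and variable names are hypothetical and do not reflect the authors' implementation.

```python
from itertools import product


def other(finger):
    """Return the finger that was not moved (only index and middle are used)."""
    if finger not in ("index", "middle"):
        raise ValueError("finger must be 'index' or 'middle'")
    return "middle" if finger == "index" else "index"


def condition_code(visual_congruent, haptic_congruent):
    """Build the acronym: C/I for congruent/incongruent, V for visual, H for haptic."""
    return ("CV" if visual_congruent else "IV") + ("CH" if haptic_congruent else "IH")


def feedback_for(moved_finger, visual_congruent, haptic_congruent):
    """Which virtual finger is seen moving, and which real finger gets the force."""
    seen = moved_finger if visual_congruent else other(moved_finger)
    forced = moved_finger if haptic_congruent else other(moved_finger)
    return {"seen": seen, "haptic": forced}


# The four cells of the factorial design.
CONDITIONS = {
    condition_code(v, h): (v, h) for v, h in product((True, False), repeat=2)
}

# Example: the participant lifts the index finger in the IVCH condition.
ivch = feedback_for("index", *CONDITIONS["IVCH"])
# The middle finger is displayed moving, while the force is applied on the index.
```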

Each trial consisted of one task (i.e., finger lifting) followed by a question. During the movement, participants had to look at the fixation cross located between their index and middle fingers (Figure 3).

When participants brought back their moved finger on the rectangular platform, a question appeared in front of the virtual hand (Figure 4). It was randomly selected between visual perception (“Which finger did you see moving?”) and motor perception (“Which finger did you move?”), and answers were presented in random order. Participants answered the question using the Vive controller held in their left hand.

A full experimental block consisted of 30 trials of each condition, thus 120 trials in total, presented in pseudo-random order. For each trial, participants could freely choose to move either their index or middle finger; they were, however, asked to keep the two as balanced as possible. To help participants, the number of times they had moved each finger was displayed when half of a block was completed.
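The block structure can be sketched as follows. The article only states that the order was pseudo-random, so the seeded uniform shuffle used here is an assumption, and `make_block` and `finger_counts` are hypothetical helper names.

```python
import random

CONDITIONS = ("CVCH", "CVIH", "IVCH", "IVIH")


def make_block(trials_per_condition=30, seed=None):
    """Return a shuffled list with the same number of trials for each condition.

    Assumption: a plain seeded shuffle stands in for the unspecified
    pseudo-randomization used in the study.
    """
    rng = random.Random(seed)
    block = [c for c in CONDITIONS for _ in range(trials_per_condition)]
    rng.shuffle(block)
    return block


def finger_counts(moved_fingers):
    """Count how often each finger was moved, e.g. to display at mid-block."""
    counts = {"index": 0, "middle": 0}
    for finger in moved_fingers:
        counts[finger] += 1
    return counts


block = make_block(seed=0)          # 4 conditions x 30 trials = 120 trials
halfway = len(block) // 2           # point at which the counts would be shown
```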

When a participant arrived, the experiment was first explained to them and they were shown the setup; they then signed a consent form and filled in a demographic questionnaire. Next, they sat on the chair in front of the table and put on the HMD. A stereoscopic vision test was first conducted to ensure they would correctly see the finger movements during the experiment. Then the eye-tracking system was calibrated. Finally, the participant removed the HMD and was helped to put on the glove and to place their hand correctly for the calibration to be performed.

The calibration phase went as follows. First, the height of the physical prop was adjusted to fit the participant’s hand size. This way, the angle between the index/middle fingers plane and the palm was ensured to be sufficiently below 180° to allow participants to lift their fingers while wearing the glove. Indeed, the maximal extension of the hand tolerated by the haptic glove corresponds to a flat hand (180° between the palm and the index/middle fingers plane). Finally, the virtual hand pose was adjusted to fit the participant’s finger pose on the rectangular prop, as well as the position to reach for triggering the haptic feedback.

Following the calibration, participants put the HMD back on and completed a two-minute acclimatization phase to get used to the virtual hand. During this phase, participants were asked to keep their thumb still and the other fingers fully extended, but could freely rotate their wrist and lift their fingers from the rectangular prop. A training phase followed, consisting of 48 trials. The training block was composed of 12 trials of each condition, presented in random order.

After the training, each participant went through the three main blocks, thus performing 360 trials in total. They could take a break between blocks if they wanted to. Once these were completed, subjects had to perform the Agency and Ownership blocks: four additional blocks of 48 trials each. Each block corresponded to an experimental condition and the order of blocks was randomized. During this phase, the visual and motor perception questions were removed. After each block, as in the study by Salomon et al., participants were asked to fill in a questionnaire on agency and ownership toward the virtual hand (Table 2). Subjects had to answer the questions on a seven-point Likert scale ranging from 1 (“Totally disagree”) to 7 (“Totally agree”).

Except during the training blocks, white noise was used to isolate participants from the external world. Moreover, if between two blocks the participant’s hand had moved from its initial position, for example if the participant took a pause, the calibration phase was repeated.

Following a sample size estimation based on Salomon et al. (2016), 20 healthy right-handed volunteers were recruited for this study (twelve females), aged from 18 to 35 (m = 21.65, sd = 4.36). All participants gave informed consent and the study was approved by our local ethics committee. The study and methods were carried out in accordance with the guidelines of the declaration of Helsinki.

First, we expect to replicate the previous results of Salomon et al. (2016) (H1). We thus have four sub-hypotheses (see Table 1 for the condition acronyms): (H1a) visual judgment accuracy is not affected by visual congruency (CVCH not significantly different from IVCH), (H1b) motor judgment accuracy is reduced when the visual feedback is incongruent with the motor actions (CVCH > IVCH), (H1c) agency is higher under congruent than incongruent visual feedback (CVCH > IVCH), and (H1d) ownership is higher under congruent than incongruent visual feedback (CVCH > IVCH).

Second, our (H2) hypothesis focuses on the influence of active haptic feedback on one’s perception. Concerning visual perception, as Salomon et al. showed vision to be considered as highly reliable in a very similar set-up, we anticipate no effect of the active haptic feedback on visual judgment accuracy. Thus, we hypothesize that (H2a) visual judgment accuracy is not significantly affected by either visual or haptic feedback congruency.

When it comes to motor perception judgment accuracy, we believe that vision will be predominant in our context as no visual cue indicates the visual feedback to be erroneous (Power, 1980). We thus hypothesize that (H2b) motor judgment accuracy is higher if the visual modality is congruent than if it is incongruent, no matter the associated haptic feedback (CV...H > IV...H).

Given that agency is typically induced when one can control the virtual bodily counterpart, it should be experienced by participants in our setup when the visual feedback is congruent with the movement performed. In this condition, and as our haptic feedback manipulations do not disturb the matching between the performed and the seen movement, we do not expect haptic information to disturb the sense of agency. Conversely, in conditions where the visual feedback is incongruent with the performed movement, participants should not experience a strong sense of agency. As before, since the haptic modality does not influence the consistency between the performed and the seen movement, we do not expect congruent haptic feedback to alter the sense of agency. In summary, under both visual feedback conditions, we do not expect the haptic modality to disturb the feeling of agency (H2c).

Finally, concerning ownership, Bovet et al. (2018) previously showed the propensity of visual-haptic conflict to reduce ownership. We thus expect that, under congruent visual feedback, incongruent haptic feedback should lower ownership levels. Conversely, under incongruent visual feedback we do not expect any significant effect of the haptic modality as, in this condition, the scores already reported by Salomon et al. indicate low levels of ownership toward the virtual hand (H2d).

Visual and motor judgment accuracies were computed for each participant as the percentage of correct answers to the corresponding perception questions. Because, for both measurements, the samples included several outliers and did not approximate a normal distribution (Shapiro-Wilk test), the statistical analysis was conducted using non-parametric tests.
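The accuracy measure can be sketched for one participant as below. The trial record layout (keys `condition`, `question`, `moved`, `seen`, `answer`) is a hypothetical format chosen for illustration; the article does not describe how trials were logged.

```python
from collections import defaultdict


def accuracy_by_condition(trials, question):
    """Percentage of correct answers per condition for one question type.

    question is "visual" (which finger was seen moving) or "motor"
    (which finger was actually moved). Trials asking the other question
    are ignored, since each trial is followed by a single question.
    """
    if question not in ("visual", "motor"):
        raise ValueError("question must be 'visual' or 'motor'")
    correct = defaultdict(int)
    total = defaultdict(int)
    for trial in trials:
        if trial["question"] != question:
            continue
        truth = trial["seen"] if question == "visual" else trial["moved"]
        total[trial["condition"]] += 1
        correct[trial["condition"]] += int(trial["answer"] == truth)
    return {cond: 100.0 * correct[cond] / total[cond] for cond in total}


# Hypothetical records for a single participant in the IVCH condition.
trials = [
    {"condition": "IVCH", "question": "motor", "moved": "index",
     "seen": "middle", "answer": "index"},    # correct motor judgment
    {"condition": "IVCH", "question": "motor", "moved": "index",
     "seen": "middle", "answer": "middle"},   # misattribution to the seen finger
    {"condition": "IVCH", "question": "visual", "moved": "index",
     "seen": "middle", "answer": "middle"},   # correct visual judgment
]
motor_accuracy = accuracy_by_condition(trials, "motor")
visual_accuracy = accuracy_by_condition(trials, "visual")
```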

Agency and ownership scores were computed separately for each participant as the mean of the answers to the corresponding questions in the last four blocks of the experiment. For both agency and ownership data, the samples were not outlier-free. As there was no reason to exclude the outliers from the dataset, and as they influenced the results of the associated t-tests, we conducted the statistical analysis using non-parametric tests.

In case of multiple comparisons, p-values were corrected with the conservative Bonferroni method and reported using the following notation: pcorr.
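For reference, the Bonferroni correction multiplies each p-value by the number of comparisons in the family and caps the result at 1. The sketch below uses hypothetical p-values; the size of each comparison family in the study is not stated explicitly in the text, so the family of four used here is an assumption.

```python
def bonferroni(p_values):
    """Return Bonferroni-corrected p-values (p * m, capped at 1)."""
    m = len(p_values)
    if any(not 0.0 <= p <= 1.0 for p in p_values):
        raise ValueError("p-values must lie in [0, 1]")
    return [min(1.0, p * m) for p in p_values]


# Hypothetical family of four comparisons.
corrected = bonferroni([0.003, 0.5, 0.01, 0.2])
```

The method is conservative because it controls the family-wise error rate without using any information about dependence between the tests.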

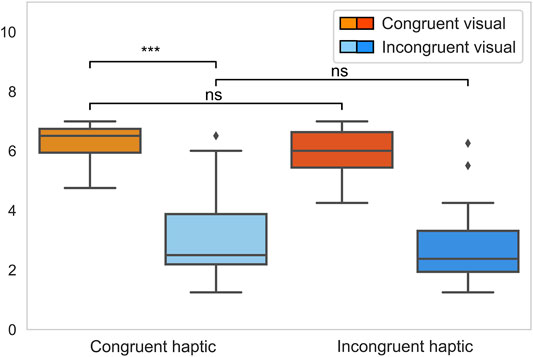

A one-sided Wilcoxon test was applied on the pair (CVCH; IVCH) to test order, revealing a significantly (p < 10⁻³) higher motor perception accuracy under congruent visual feedback (Figure 5), thus validating (H1b).

To test whether motor judgment accuracy is influenced by congruency (H2b), one-sided Wilcoxon tests were applied on pairs comparing congruent and incongruent visual feedback. The motor perception accuracy was significantly higher for the CVCH condition than for the IVCH one (p < 10⁻³ and pcorr < 10⁻³) and the IVIH one (p ≃ 0.003 and pcorr ≃ 0.012). Similarly, the CVIH condition led to a significantly higher motor judgment accuracy than the IVIH (p ≃ 0.002 and pcorr ≃ 0.01) and the IVCH one (p < 10⁻³ and pcorr < 10⁻³). Thus, those results validate (H2b) (Figure 5).
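To make the paired one-sided comparison concrete, here is a standard-library sketch of an exact Wilcoxon signed-rank test. It is not the authors' analysis code, and the software they used is not reported. The assumptions are stated in the comments: zero differences are dropped, tied absolute differences receive mid-ranks, and the p-value is the exact conditional probability over all sign assignments. The function name and the example data are hypothetical.

```python
def signed_rank_test_greater(x, y):
    """Exact one-sided Wilcoxon signed-rank test of 'x tends to exceed y'.

    Returns (W_plus, p_value), where W_plus is the sum of ranks of the
    positive differences. Ranks are handled doubled so that mid-ranks
    of tied values stay integers.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]   # drop zero differences
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    doubled = [0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            doubled[order[k]] = i + j + 2             # 2 * mean of ranks i+1..j+1
        i = j + 1
    w_plus2 = sum(r for r, d in zip(doubled, diffs) if d > 0)
    # counts[s]: number of sign assignments whose doubled positive-rank sum is s
    total = sum(doubled)
    counts = [0] * (total + 1)
    counts[0] = 1
    for r in doubled:
        for s in range(total, r - 1, -1):
            counts[s] += counts[s - r]
    p_value = sum(counts[w_plus2:]) / 2 ** n          # P(W+ >= observed) under H0
    return w_plus2 / 2, p_value


# Hypothetical accuracies (%) for five participants in two conditions.
w, p = signed_rank_test_greater([99, 98, 97, 100, 96], [95, 93, 91, 93, 88])
```

With only five pairs, the smallest attainable one-sided p-value is 1/32, which illustrates why sample size bounds the significance an exact test can reach.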

A two-sided Wilcoxon test was applied to compare the CVCH and IVCH samples, revealing a significant effect of visual feedback on visual perception accuracy (p ≃ 0.02), thus rejecting (H1a) (Figure 6). The associated one-sided Wilcoxon test showed visual judgment accuracy to be decreased when the visual modality is incongruent (p < 10⁻²).

A Friedman test applied to the four samples rejected the similarity of distributions (p < 10⁻³), thus rejecting our (H2a) hypothesis according to which visual perception accuracy would be affected by neither visual nor haptic congruency.
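The omnibus comparison across the four conditions can be sketched with a standard-library Friedman test. This is an illustration, not the authors' code: each row holds one participant's values in the four conditions, mid-ranks with the usual tie correction are assumed, and the p-value uses the chi-square approximation with 3 degrees of freedom, which only applies to exactly four conditions. All names and data are hypothetical.

```python
import math


def midranks(values):
    """Return (ranks, tie_term), where tie_term sums t**3 - t over tie groups."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    tie_term = 0
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1      # mean of ranks i+1..j+1
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    return ranks, tie_term


def friedman_statistic(rows):
    """Friedman chi-square statistic for n subjects (rows) and k conditions."""
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    ties = 0
    for row in rows:
        ranks, tie_term = midranks(row)
        ties += tie_term
        for j, r in enumerate(ranks):
            rank_sums[j] += r
    correction = 1 - ties / (n * k * (k * k - 1))
    if correction == 0:
        raise ValueError("every row is fully tied")
    q = 12 / (n * k * (k + 1)) * sum(s * s for s in rank_sums) - 3 * n * (k + 1)
    return q / correction


def chi2_sf_df3(q):
    """Upper tail of the chi-square distribution with 3 degrees of freedom."""
    return math.erfc(math.sqrt(q / 2)) + math.sqrt(2 * q / math.pi) * math.exp(-q / 2)


# Hypothetical data: five participants who all order the conditions identically.
rows = [[91.0, 93.0, 96.0, 98.0]] * 5
q = friedman_statistic(rows)
p = chi2_sf_df3(q)
```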

A post-hoc analysis was conducted using one-sided Wilcoxon tests on each possible pair of conditions (Figure 6). The visual judgment accuracy of the IVIH condition was found to be significantly lower than for the CVCH and CVIH ones (p < 10⁻³ and pcorr < 10⁻² for both tests).

A one-sided Wilcoxon test was applied on congruent haptic feedback samples, revealing a significantly (p < 10⁻³) higher agency under congruent than incongruent visual feedback (Figure 7), thus validating (H1c).

FIGURE 7. Agency scores across conditions, computed for each participant using the mean of answers to the corresponding questions in the last four blocks of the experiment.

To test for (H2c), according to which agency would not be affected by the haptic feedback congruency, two-sided Wilcoxon tests were applied on the pairs (CVCH; CVIH) and (IVCH; IVIH). Neither was significant (p > 0.05), thus failing to reject this hypothesis (Figure 7).

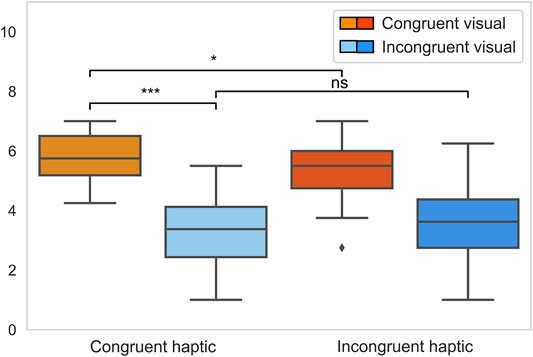

A one-sided Wilcoxon test was applied on congruent haptic feedback samples, revealing a significantly (p < 10⁻³) higher ownership under congruent than incongruent visual feedback (Figure 8), thus replicating previous findings and validating (H1d).

FIGURE 8. Ownership scores across conditions, computed for each participant using the mean of answers to the corresponding questions in the last four blocks of the experiment.

To test for (H2d), according to which ownership would be affected by the haptic feedback congruency only under congruent visual information, a one-sided Wilcoxon test was applied on the pair (CVCH; CVIH) while a two-sided one was applied on the pair (IVCH; IVIH). Under congruent visual information, the congruent haptic feedback led to significantly (p ≃ 0.03) higher ownership scores than the incongruent one (Figure 8). Under incongruent visual feedback, no effect of haptic feedback congruency was found (p ≃ 0.29).

First, we reproduced and extended the results of Salomon et al. (2016) on the influence of visual feedback on judgment of motor authorship. More precisely, motor perception is affected by the congruency between the performed and the observed movement: when haptic feedback is congruent with the action performed, congruent visual information leads to a higher motor perception accuracy than incongruent visual information (CVCH > IVCH).

As an extension of this result, we show that in our setup, no matter the haptic feedback congruency, congruent visual feedback always leads to fewer motor judgment errors than incongruent visual feedback. Of particular interest, when only one of the visual and haptic modalities is incongruent (conditions CVIH and IVCH), congruent visual feedback with incongruent haptic stimuli leads to a better motor perception accuracy (m ≃ 98%) than incongruent visual feedback coupled with congruent haptic stimuli (m ≃ 92%), thus showing that haptic information does not challenge vision in the motor judgment context. Put together, when it comes to motor judgment, these findings argue in favor of the visual dominance over the other modalities being integrated to produce one’s perception.

Concerning visual perception accuracy, our findings differ from those of Salomon et al. (2016): when the haptic feedback is congruent with the performed movement, visual judgment accuracy is significantly higher under congruent than incongruent visual feedback (CVCH > IVCH).

Now considering perception judgment accuracy under congruent haptic feedback, Salomon et al. (2016) made the distinction between two accuracy patterns by manipulating the visual feedback congruency. They experimentally changed the position of the virtual hand to be either in the same orientation as the subject’s physical hand (0° rotation), in a plausible orientation (90° rotation), or in an impossible posture (180° or 270° rotation). They observed that with a plausible hand posture, the accuracy pattern presents a small, non-significant decrease in visual perception accuracy and a large decrease in motor perception accuracy when visual feedback is switched from congruent to incongruent. Conversely, when the virtual hand is in an impossible posture, both visual and motor perception accuracy largely decrease when visual feedback is incongruent. In our experiment, the virtual hand is presented with a 0° rotation, and our results replicate the corresponding pattern from Salomon et al. (2016). Indeed, we observe a slight decrease in visual judgment accuracy when the visual feedback switches from congruent to incongruent. This decrease is significant in our setup (m ≃ 97.2% to m ≃ 95.3%) but not in Salomon et al. (2016) (m ≃ 94.8% to m ≃ 93.8%), which may be explained by a more pronounced haptic feedback in our setup. However, as we also observe a large drop in motor perception accuracy when the visual feedback becomes incongruent (m ≃ 98.4% to m ≃ 92%), our results fit the pattern described by Salomon et al., thus confirming their general finding. As improvements in motor perception can in turn bear potential benefits for motor performance, future work could explore the impact of such active haptic feedback on motor performance to infer its potential benefit for motor training (Ramírez-Fernández et al., 2015; Kreimeier et al., 2019; Odermatt et al., 2021).

Finally, considering the agency and ownership scores, we replicated previous findings showing that, under congruent haptic feedback, both scores are higher when participants are provided with congruent visual information than incongruent. This is in line with agency and ownership being typically induced when one is presented with a virtual bodily counterpart they can control and that is placed in an anatomically plausible position. Moreover, because the haptic feedback factor does not impair one’s control of the virtual hand as it does not disturb the matching between the performed and the seen movement, we did not observe any significant difference in agency scores when comparing conditions differing only by the haptic feedback condition. In addition, as hypothesized, when provided with congruent visual information, the participant’s sense of ownership was significantly lower in the incongruent haptic feedback condition than in the congruent one. This result is in line with previous work from Bovet et al. (2018) showing the propensity of a visual-haptic conflict to reduce ownership. Conversely, when the visual information was incongruent, haptic feedback had no significant effect on ownership scores, and in particular, it did not bring back the ownership illusion.

In cases where a multisensory conflict arises, such as in a VR simulation, vision is considered dominant over other sensory cues. Typically, when considering self-attribution of seen motor actions, previous work tends to confirm this tendency for finger movements (Salomon et al., 2016). In the present work, through the use of VR and of a haptic glove, we provide an important nuance to this widely accepted view on visual dominance by investigating the influence of active haptic feedback on one’s visual and agency judgments about a seen movement.

Overall, we confirm that vision dominates multisensory conflicts when it comes to agency judgments of motor action. Conversely, we show that visual dominance can be challenged by the association of proprioceptive and motor afferent signals with active haptic perception, as shown in our results by a lower accuracy for the judgment of which finger one saw moving. Finally, our experiment shows an effect of a punctual and transient haptic stimulation on the sense of ownership for a virtual hand. When the active haptic feedback is incongruent with the performed movement, participants experience a loss of ownership for the hand, even if the visual feedback is congruent.

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found in the article/Supplementary Material.

The studies involving human participants were reviewed and approved by the CER-VD (Commission cantonale d’éthique de la recherche sur l’être humain) N°2018-01601. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Conceived and designed the experiments: LB, BH, and RB. Performed the experiments: DP. Analyzed the data: LB. Contributed reagents/materials/analysis tools: DP and LB. Wrote the manuscript: LB, BH, and RB.

This work has been supported by the SNFS project “Immersive Embodied Interactions” grant 200020-178790.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2022.860872/full#supplementary-material

Blanke, O., and Metzinger, T. (2009). Full-Body Illusions and Minimal Phenomenal Selfhood. Trends Cognitive Sciences 13, 7–13. doi:10.1016/j.tics.2008.10.003

Botvinick, M., and Cohen, J. (1998). Rubber Hands 'Feel' Touch that Eyes See. Nature 391, 756. doi:10.1038/35784

Bovet, S., Debarba, H. G., Herbelin, B., Molla, E., and Boulic, R. (2018). The Critical Role of Self-Contact for Embodiment in Virtual Reality. IEEE Trans. Vis. Comput. Graphics 24, 1428–1436. doi:10.1109/tvcg.2018.2794658

Burns, E., Whitton, M. C., Razzaque, S., McCallus, M. R., Panter, A. T., and Brooks, F. P. (2005). “The Hand Is Slower Than the Eye: A Quantitative Exploration of Visual Dominance over Proprioception,” in IEEE Proceedings. VR 2005. Virtual Reality, 2005, Bonn, Germany, 12-16 March 2005 (Bonn, Germany: IEEE), 3–10. doi:10.1109/VR.2005.1492747

Friston, K. (2012). The History of the Future of the Bayesian Brain. NeuroImage 62, 1230–1233. doi:10.1016/j.neuroimage.2011.10.004

Hay, J. C., Pick, H. L., and Ikeda, K. (1965). Visual Capture Produced by Prism Spectacles. Psychon. Sci. 2, 215–216. doi:10.3758/BF03343413

Heller, M. A. (1983). Haptic Dominance in Form Perception with Blurred Vision. Perception 12, 607–613. doi:10.1068/p120607

Holmes, N. P., and Spence, C. (2005). Visual Bias of Unseen Hand Position with a Mirror: Spatial and Temporal Factors. Exp. Brain Res. 166, 489–497. doi:10.1007/s00221-005-2389-4

Jeunet, C., Albert, L., Argelaguet, F., and Lecuyer, A. (2018). "Do You Feel in Control?": Towards Novel Approaches to Characterise, Manipulate and Measure the Sense of Agency in Virtual Environments. IEEE Trans. Vis. Comput. Graphics 24, 1486–1495. doi:10.1109/tvcg.2018.2794598

Knill, D. C., and Pouget, A. (2004). The Bayesian Brain: The Role of Uncertainty in Neural Coding and Computation. Trends Neurosci. 27, 712–719. doi:10.1016/j.tins.2004.10.007

Kreimeier, J., Hammer, S., Friedmann, D., Karg, P., Bühner, C., Bankel, L., et al. (2019). “Evaluation of Different Types of Haptic Feedback Influencing the Task-Based Presence and Performance in Virtual Reality,” in Proceedings of the 12th ACM International Conference on Pervasive Technologies Related to Assistive Environments, Rhodes, Greece, June 5–7, 2019, 289–298. doi:10.1145/3316782.3321536

Lee, Y., Jang, I., and Lee, D. (2015). “Enlarging Just Noticeable Differences of Visual-Proprioceptive Conflict in VR Using Haptic Feedback,” in 2015 IEEE World Haptics Conference (WHC), Northwestern University, Evanston, IL, June 22–26, 2015 (IEEE), 19–24. doi:10.1109/whc.2015.7177685

Maselli, A., Kilteni, K., López-Moliner, J., and Slater, M. (2016). The Sense of Body Ownership Relaxes Temporal Constraints for Multisensory Integration. Sci. Rep. 6, 30628. doi:10.1038/srep30628

Odermatt, I. A., Buetler, K. A., Wenk, N., Özen, Ö., Penalver-Andres, J., Nef, T., et al. (2021). Congruency of Information rather Than Body Ownership Enhances Motor Performance in Highly Embodied Virtual Reality. Front. Neurosci. 15, 678909. doi:10.3389/fnins.2021.678909

Power, R. P. (1980). The Dominance of Touch by Vision: Sometimes Incomplete. Perception 9, 457–466. doi:10.1068/p090457

Ramírez-Fernández, C., Morán, A. L., and García-Canseco, E. (2015). “Haptic Feedback in Motor Hand Virtual Therapy Increases Precision and Generates Less Mental Workload,” in 2015 9th International Conference on Pervasive Computing Technologies for Healthcare (PervasiveHealth), Istanbul, Turkey, 20-23 May 2015 (IEEE), 280–286.

Salomon, R., Fernandez, N. B., van Elk, M., Vachicouras, N., Sabatier, F., Tychinskaya, A., et al. (2016). Changing Motor Perception by Sensorimotor Conflicts and Body Ownership. Sci. Rep. 6, 25847. doi:10.1038/srep25847

Schwind, V., Lin, L., Di Luca, M., Jörg, S., and Hillis, J. (2018). “Touch with Foreign Hands: The Effect of Virtual Hand Appearance on Visual-Haptic Integration,” in Proceedings of the 15th ACM Symposium on Applied Perception, Vancouver, British Columbia, Canada, August 10–11, 2018, 1–8.

Keywords: multisensory integration, multisensory conflicts, virtual reality, haptics, sense of agency, sense of ownership, visual perception, motor perception

Citation: Boban L, Pittet D, Herbelin B and Boulic R (2022) Changing Finger Movement Perception: Influence of Active Haptics on Visual Dominance. Front. Virtual Real. 3:860872. doi: 10.3389/frvir.2022.860872

Received: 23 January 2022; Accepted: 11 March 2022;

Published: 27 April 2022.

Edited by:

Stephen Palmisano, University of Wollongong, Australia

Reviewed by:

Juno Kim, University of New South Wales, Australia

Copyright © 2022 Boban, Pittet, Herbelin and Boulic. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Loën Boban, loen.boban@epfl.ch
