
REVIEW article

Front. Virtual Real., 14 March 2022

Sec. Augmented Reality

Volume 3 - 2022 | https://doi.org/10.3389/frvir.2022.838237

Optical see-through near-eye display (NED) technologies for augmented reality (AR) have advanced significantly in recent years, driven by investment from both academia and industry. Various AR NED products have been commercialized and even deployed in applications. However, present AR NED technologies still face challenges (e.g., limited eyebox, fixed focus, bulky form factors). In this review, we present a brief overview of the leading AR NED technologies. We then focus on state-of-the-art research that addresses the key challenges of each. We also introduce a number of emerging technologies that merit close study.

Augmented Reality (AR) is widely recognized as the next-generation computing platform, replacing smartphones and computers. In AR, information is presented to viewers as virtual objects, such as graphics and captions, fused with the real environment without compromising the viewer's natural vision (Olbrich et al., 2013; Choi J. et al., 2020; Yu et al., 2020; Chiam et al., 2021; Ong et al., 2021). Smart glasses simply superimpose two-dimensional (2D) content in a head-mounted display (HMD). AR, by contrast, lets viewers interact more naturally with the virtual objects.

The central component of AR is the near-eye display (NED). It is worn by the viewer and combines real and virtual imagery so that both can be seen at the same time (Koulieris et al., 2019). AR NEDs offer a replacement for smartphones and computer monitors. However, every AR NED design involves tradeoffs among a number of metrics, including resolution, eyebox (Barten, 2004), form factor, correct focus cues (Zschau et al., 2010), field of view (FOV) (Wheelwright et al., 2018), eye relief, brightness, and full color. The greatest challenge in AR NEDs therefore lies not in optimizing any individual metric. It lies in simultaneously providing a wide FOV, variable focus to mitigate the vergence-accommodation conflict (VAC), high resolution, a wide eyebox, ease of manufacturing, a slim form factor, and so on (Hoffman et al., 2008). Meeting this challenge requires significant technological advancements. The requirement that an AR NED be see-through constrains the form factor and the optical materials involved. The requirements on other metrics, including resolution, FOV, eyebox, and eye relief, push against the diffraction limits of visible light.

In this paper, we review the advancements and challenges of AR NEDs. There are two main groups of AR NEDs (Rolland et al., 1994): video see-through and optical see-through. This paper focuses on optical see-through AR NEDs because of their potential to provide an extremely high sense of immersion. We begin with an overview of the leading types of AR NEDs. We then describe each type in detail, covering its principles and the advancements that address key challenges, including eyebox, FOV, and VAC. We conclude by outlining emerging technologies and unsolved challenges for future research.

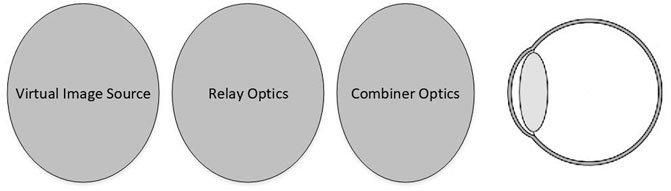

The basic construction of an AR NED normally includes three parts (Cakmakci and Rolland, 2006; Kress, 2020) (Figure 1):

1) a display unit or image source (e.g., a laser projector or an LCD panel);
2) magnifying or relay optics; and
3) a medium that transmits and projects the virtual imagery into the viewer's eyes while allowing light from the real environment to pass through (e.g., half mirrors, holographic films).

Kress and Starner described the critical optical design challenges for AR NEDs, including providing sufficient resolution, a large eyebox, and a wide FOV (Kress and Starner, 2013). Another key impediment, and a key cause of discomfort with AR NEDs, is VAC (Yano et al., 2002; Koulieris et al., 2017). VAC arises from a mismatch between the binocular disparity of a stereoscopic image and the optical focus cues that the AR NED provides to each eye.

FIGURE 1. Schematic diagram of the basic components of an optical see-through AR NED: a display unit or image source (e.g., a laser projector or an LCD panel); magnifying or relay optics; and a medium that transmits and projects the virtual imagery into the viewer's eyes while allowing light from the real environment to pass through (e.g., half mirrors, holographic films).

Industry and academia have made various attempts to deliver compact AR NEDs with full color, high resolution, a large FOV, and minimized VAC. Starting from the beam splitter (BS) based AR NED, various AR NED technologies have been developed, such as waveguide based, holographic optical element (HOE) based, and freeform optics based AR NEDs. Each of these technologies excels in some metrics while being limited in others. There are also emerging technologies, including pinlight based, transmissive mirror device (TMD) based, and metasurface based AR NEDs. We review each of them in the following sections.

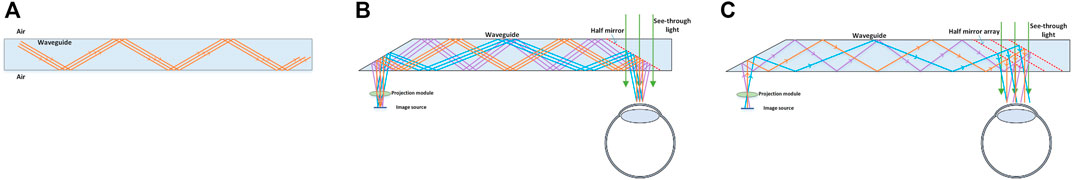

Waveguide based AR NEDs use a waveguide as the medium that transmits and projects the virtual imagery into the viewer's eyes. As its name indicates, a waveguide guides waves (e.g., electromagnetic waves) along a confined path, as in fibers and pipes. Waveguides have been widely applied in various domains (Snyder and Love, 1983). In optics, a waveguide transmits light by confining it within a material whose boundary with a second, lower-index material reflects it back inward, as shown in Figure 2A. Waveguides have been deployed in AR NEDs because light propagating in total-internal-reflection (TIR) mode suffers virtually no loss at the reflecting interfaces. However, limited FOV is a common challenge for waveguide based AR NEDs. TIR occurs only for internal angles of incidence above the critical angle, and this angle depends on the refractive index of the waveguide (Shen et al., 2017).

FIGURE 2. Schematic illustration of (A) light transmitting inside a waveguide; (B) a reflective waveguide based AR NED with a single partially reflective mirror; and (C) a reflective waveguide based AR NED with multiple partial reflectors. Green lines in (B) and (C) represent light from the real environment.
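Snell's law makes the TIR condition above concrete. Light stays trapped only when its internal angle from the surface normal exceeds the critical angle, arcsin(n_clad / n_core). The sketch below assumes a waveguide surrounded by air (n_clad = 1). The function names are illustrative and are not taken from any cited work.

```python
import math


def critical_angle_deg(n_core: float, n_clad: float = 1.0) -> float:
    """Smallest internal angle (from the surface normal) at which TIR occurs."""
    if n_core <= n_clad:
        raise ValueError("TIR requires n_core > n_clad")
    return math.degrees(math.asin(n_clad / n_core))


def is_guided(theta_internal_deg: float, n_core: float, n_clad: float = 1.0) -> bool:
    """True if a ray travelling at this internal angle is trapped by TIR."""
    return theta_internal_deg > critical_angle_deg(n_core, n_clad)


# Assumed waveguide in air; compare a few substrate indices.
for n in (1.5, 1.7, 1.9, 2.0):
    print(f"n = {n:.1f}: critical angle = {critical_angle_deg(n):.1f} deg")
```

Raising the index from 1.5 to 2.0 lowers the critical angle from about 41.8° to 30°. This widens the band of internal angles that can be guided, which is why higher-index substrates are pursued for a wider FOV.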

Waveguide based AR NEDs normally need two coupling components: an in-coupler and an out-coupler. The in-coupler couples light from the image source into the waveguide. The out-coupler directs light from the waveguide into the user's eye. Depending on the coupling components used, waveguide based AR NEDs fall into two main types: reflective and diffractive waveguide based AR NEDs.

In reflective waveguide based AR NEDs such as Epson's Moverio, a molded plastic substrate serves as the light guide for the virtual imagery. A semi-reflective mirror placed in front of the eye reflects the virtual imagery into the viewer's eye while allowing the real image to pass through¹. Figure 2B shows a typical schematic of a reflective waveguide based AR NED. No polarization is required, so reflective waveguide based AR NEDs can use various types of micro displays (e.g., LCD, LCOS, OLED) as the image source while providing high optical efficiency at low cost. However, in reflective waveguides the FOV grows with the size of the reflector. Increasing the FOV therefore requires a larger reflector and a larger waveguide, which enlarges the form factor of the whole NED.

To enlarge the eyebox of a reflective waveguide based AR NED, multilayer coatings and embedded polarized reflectors can extract the light toward the eye pupil, as shown in Figure 2C. These polarized waveguide technologies offer a large eyebox. They also suffer from several drawbacks, including high manufacturing cost, low optical efficiency, and color non-uniformity. A cost-effective solution for consumers therefore remains a challenge.
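In the multi-reflector layout of Figure 2C, each partial reflector sends part of the remaining light toward the eye and passes the rest to the next reflector. A simple lossless bookkeeping shows the reflectance each reflector would need for equal output across the eyebox. This is an idealized sketch that ignores absorption and the angular and polarization dependence of real coatings. It is not the coating design of any product mentioned above.

```python
def uniform_reflectances(num_reflectors: int) -> list[float]:
    """Reflectance of each cascaded partial reflector (first = nearest the input)
    so that every reflector sends the same power toward the eye.

    Idealized and lossless: reflector k (1-based) sees the power left after
    k - 1 extractions and must reflect 1/N of the input, so R_k = 1 / (N - k + 1).
    """
    if num_reflectors < 1:
        raise ValueError("need at least one reflector")
    n = num_reflectors
    return [1.0 / (n - k + 1) for k in range(1, n + 1)]


def extracted_powers(reflectances: list[float], input_power: float = 1.0) -> list[float]:
    """Power coupled out toward the eye by each reflector, in order."""
    remaining = input_power
    out = []
    for r in reflectances:
        out.append(remaining * r)   # reflected toward the eye
        remaining *= 1.0 - r        # transmitted to the next reflector
    return out


rs = uniform_reflectances(5)
print([round(r, 3) for r in rs])                    # [0.2, 0.25, 0.333, 0.5, 1.0]
print([round(p, 3) for p in extracted_powers(rs)])  # [0.2, 0.2, 0.2, 0.2, 0.2]
```

In this ideal model the last reflector must be fully reflective, which would block the see-through view. Practical designs therefore accept some non-uniformity or wasted light instead. This is one reason high efficiency and color/brightness uniformity are hard to achieve together.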

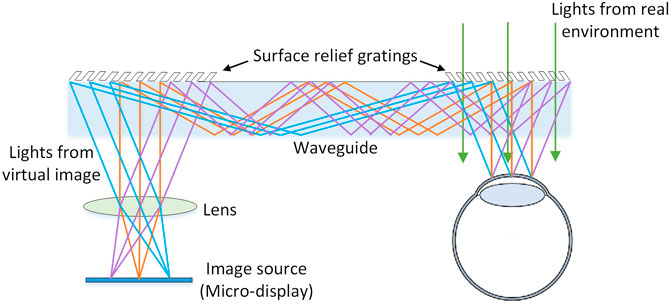

Diffractive waveguides differ from reflective ones in their in- and out-couplers. These are diffractive optical elements (DOEs) fabricated as slanted nanometric gratings or surface relief gratings (SRGs). The slanted gratings of the in-coupler diffract the collimated light into the waveguide at a particular angle. The light then travels through the waveguide to the out-coupler at the other end. Finally, the out-coupler diffracts the light out of the waveguide and projects it into the viewer's eye at a certain angle (Levola, 2007). Figure 3 shows the schematic for this technique.

FIGURE 3. Diagram of a diffractive waveguide based AR NED. The slanted gratings of the in-coupler diffract the collimated light into the waveguide at a particular angle, and the light then travels through the waveguide to the out-coupler at the other end. The out-coupler diffracts the light out of the waveguide and projects it into the viewer's eye at a certain angle.
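The in-coupling step follows the grating equation. The sketch below uses a hypothetical 380 nm grating period and a guide index of 1.8. It computes the internal angle for blue, green, and red light and checks each against the TIR threshold. It also shows why a single grating disperses colors: the internal angle grows with wavelength, so each color travels along a different path.

```python
import math


def in_coupled_angle_deg(wavelength_nm, period_nm, n_guide, incidence_deg=0.0, order=1):
    """Internal propagation angle after in-coupling by a grating.

    Grating equation for light arriving from air:
        n_guide * sin(theta_d) = sin(theta_i) + order * wavelength / period
    Returns None when the diffracted order is evanescent inside the guide.
    """
    s = (math.sin(math.radians(incidence_deg)) + order * wavelength_nm / period_nm) / n_guide
    if abs(s) >= 1.0:
        return None
    return math.degrees(math.asin(s))


def critical_angle_deg(n_guide):
    """TIR threshold for a guide surrounded by air."""
    return math.degrees(math.asin(1.0 / n_guide))


# Hypothetical design values, chosen only for illustration.
PERIOD_NM, N_GUIDE = 380.0, 1.8
for name, wl in (("blue", 460.0), ("green", 532.0), ("red", 635.0)):
    theta = in_coupled_angle_deg(wl, PERIOD_NM, N_GUIDE)
    guided = theta is not None and theta > critical_angle_deg(N_GUIDE)
    print(f"{name:5s} {wl:.0f} nm -> {theta:.1f} deg inside guide, TIR: {guided}")
```

With these values all three colors are guided, but at noticeably different angles. That angular spread is the origin of the rainbow effect discussed below.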

Diffractive waveguide based AR NEDs can achieve a good trade-off among form factor, eyebox, and manufacturing readiness. Challenges remain, however, including chromatic aberration and limited FOV.

One major drawback of diffractive waveguide based AR NEDs is chromatic aberration, or the rainbow effect (Zhang and Fang, 2019). It arises because a grating diffracts different wavelengths at different angles. To mitigate chromatic aberration, Eisen et al. proposed substrates with a gradient refractive index (Eisen et al., 2006). Another straightforward solution is to combine two or three waveguide layers that target the three monochromatic lights (R, G, B) separately (Mukawa et al., 2008). However, this introduces crosstalk, or ghost images. To reduce the crosstalk, Levola and Aaltonen proposed placing the waveguide planes in a 10° chevron shape so that the ghost image appears outside the FOV (Levola and Aaltonen, 2008).

Diffractive waveguide based AR NEDs also suffer from limited FOV because of the limited refractive index of the waveguide (Xiong et al., 2021b). To increase the FOV, Chen et al. proposed a dual-channel exit pupil expander design that splits the FOV into two halves (Chen et al., 2021). This design achieves a 70° diagonal FOV.
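The refractive-index limit on FOV can be estimated in normalized wavevector space. A guided ray needs n·sin θ > 1. Practical designs also cap the internal angle at some θ_max so that the pupil is replicated densely enough; the 75° cap used here is an assumption. The in-coupler shifts all field angles by the same amount, so the sine-space width of the FOV cannot exceed n·sin θ_max − 1. This is a single-wavelength, one-dimensional sketch, not the analysis used by the cited authors.

```python
import math


def max_fov_1d_deg(n_guide: float, max_internal_angle_deg: float = 75.0) -> float:
    """Upper bound on the 1D FOV (in air) of a single-channel diffractive waveguide.

    Guided rays satisfy 1 < n*sin(theta) <= n*sin(theta_max) in normalized
    tangential wavevector. A grating adds the same offset to every field angle,
    so the FOV spans at most (n*sin(theta_max) - 1) in sine space, centered on-axis.
    """
    band = n_guide * math.sin(math.radians(max_internal_angle_deg)) - 1.0
    if band <= 0.0:
        return 0.0
    half_width = min(band / 2.0, 1.0)  # sin(FOV/2) cannot exceed 1
    return 2.0 * math.degrees(math.asin(half_width))


for n in (1.5, 1.7, 1.9, 2.0):
    print(f"n = {n:.1f}: 1D FOV bound ~ {max_fov_1d_deg(n):.1f} deg")
```

Under these assumptions, raising n from 1.5 to 2.0 raises the one-dimensional bound from roughly 26° to 55°. Splitting the FOV across two channels, as in the dual-channel design above, is one way to work around this single-channel bound.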

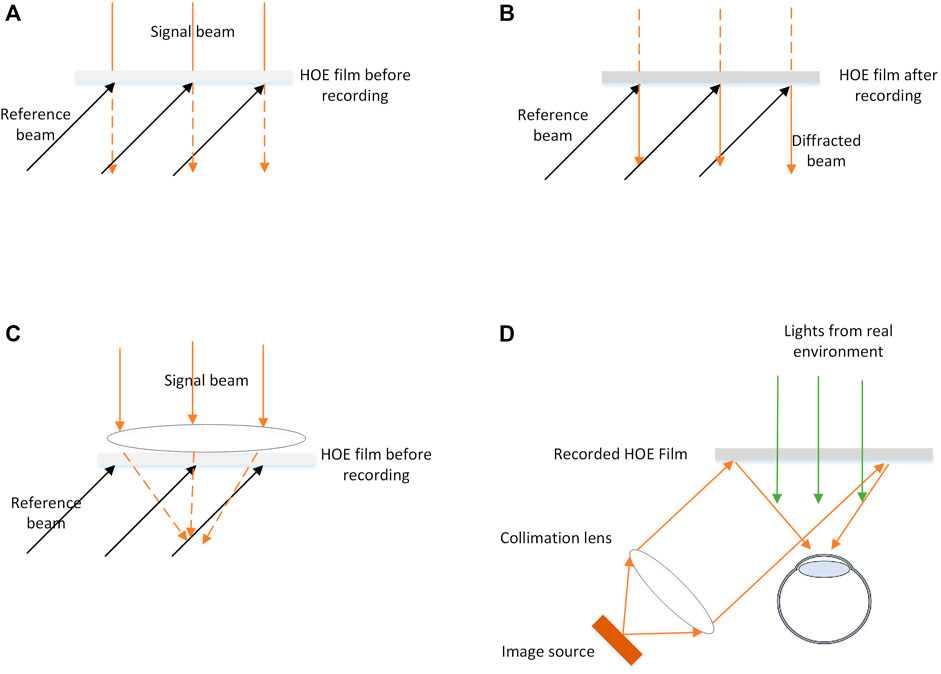

Holographic optical elements (HOEs) are optical devices based on holography. Their high angular selectivity makes them optically see-through, and they have therefore been employed in AR NEDs in recent years (Lee B. et al., 2020). The function of an HOE is determined during hologram recording (Kim et al., 2017). When the reference beam illuminates the recorded hologram, a virtual 3D image approximating the original object is reconstructed. Figure 4A shows the recording process of a reflection HOE film. Figure 4B shows the signal beam reconstructed by projecting the reference beam onto the recorded HOE film.

Depending on the recording geometry, HOEs are classified into two types: transmission and reflection (Xiong et al., 2021a). In a transmission HOE, the signal beam and the reference beam are on the same side of the recording material. In a reflection HOE, they are on opposite sides. Figure 4D illustrates a typical setup for an HOE based AR NED: collimated light is projected onto the recorded HOE, and the reconstructed light is projected into the user's eye.

FIGURE 4. (A) and (B) show the recording and reconstruction processes of reflection HOE films; (C) shows the HOE recording process for achieving a large FOV; and (D) shows a typical setup for an HOE based AR NED.
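Recording an HOE is an interference process: two mutually coherent beams write a fringe pattern into the film. For two collimated beams, the fringe period along the film surface follows directly from their angles. The sketch below computes the period analytically and checks it against the dominant spatial frequency of the sampled intensity |R + S|².

Angles are measured in air from the film normal. The tangential phase, and hence the surface period, is unchanged by refraction into the film. The 532 nm wavelength and the 45°/0° geometry are illustrative assumptions, and volume (Bragg) effects inside the film are ignored.

```python
import numpy as np


def fringe_period_nm(wavelength_nm, ref_angle_deg, sig_angle_deg):
    """Fringe period along the film surface for two interfering plane waves."""
    ds = abs(np.sin(np.radians(ref_angle_deg)) - np.sin(np.radians(sig_angle_deg)))
    if ds == 0:
        return np.inf  # parallel beams: no fringes
    return wavelength_nm / ds


def recorded_intensity(x_nm, wavelength_nm, ref_angle_deg, sig_angle_deg):
    """|R + S|^2 along x for unit-amplitude reference and signal plane waves."""
    k = 2 * np.pi / wavelength_nm
    ref = np.exp(1j * k * np.sin(np.radians(ref_angle_deg)) * x_nm)
    sig = np.exp(1j * k * np.sin(np.radians(sig_angle_deg)) * x_nm)
    return np.abs(ref + sig) ** 2


wl, theta_r, theta_s = 532.0, 45.0, 0.0      # assumed recording geometry
period = fringe_period_nm(wl, theta_r, theta_s)

# Sample exactly 64 fringe periods so the FFT peak falls on an integer bin.
n_periods, n_samples = 64, 4096
x = np.arange(n_samples) * (n_periods * period / n_samples)
intensity = recorded_intensity(x, wl, theta_r, theta_s)
spectrum = np.abs(np.fft.rfft(intensity - intensity.mean()))
peak_bin = int(np.argmax(spectrum))
print(f"fringe period = {period:.1f} nm, FFT peak at bin {peak_bin}")
```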

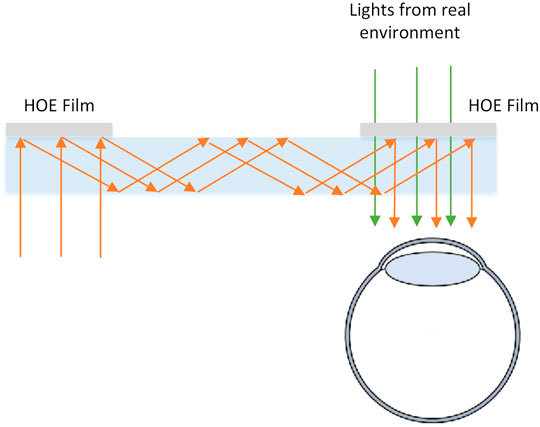

As shown in Figure 4A, the reference and signal beams are both collimated. After recording, illuminating the HOE with the oblique reference beam reconstructs the collimated signal beam perpendicular to the HOE plane. Such HOEs are commonly used as the in- and out-couplers of waveguide based AR NEDs because an HOE can redirect light off-axis (Mukawa et al., 2008; Piao et al., 2013; Shi et al., 2012).

As shown in Figure 5, light from the source display is reflected by the in-coupler HOE at an oblique angle and travels through the waveguide. The out-coupler HOE then redirects it toward the viewer's eye. Combining HOEs with a waveguide enables an optical see-through view in a compact form factor. However, one HOE film reflects only one wavelength, so a full-color display needs three HOEs that reflect red, green, and blue, respectively. This adds manufacturing cost. In addition, the three HOEs must be "sandwiched" together, so each wavelength is slightly diffracted by the holograms of the other colors, adding color "cross-talk" to the image (Mukawa et al., 2008).

To address this issue, Shin et al. proposed a recording method that improves the diffraction efficiency and uniformity of full-color HOEs (Shin et al., 2021). Their method first analyzes, for each wavelength, the inhibitory properties of the initial response and the optical characteristics of the late response of the recording medium. The results are then used to improve the diffraction efficiency and color uniformity of the full-color HOE. Compared with the diffractive waveguide based AR NEDs described above, which use DOEs as in- and out-couplers, HOE couplers have a narrower spectral bandwidth and can therefore largely eliminate the rainbow effect.

FIGURE 5. Schematic diagram of waveguide based AR NED using HOE as the in- and out-couplers (Only the central FOV of the NED system is shown in the diagram).

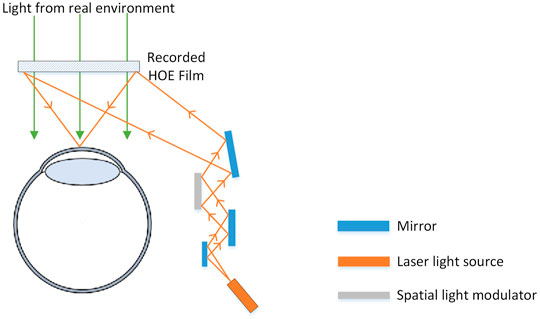

HOE based AR NEDs have two natural strengths compared with other technologies. First, they can be made compact, since HOEs can normally be printed as thin films (Jeong et al., 2019; Jang et al., 2020). Second, they can achieve a large FOV. The HOE film can be recorded with a wide-angle signal beam, generated by placing an objective lens in front of the film (Figure 4C). Additionally, an HOE can be combined with a spatial light modulator (SLM) to provide natural depth perception of the virtual imagery (Yaras et al., 2010), thus eliminating the VAC issue. Such AR NEDs are normally referred to as holographic AR NEDs (Maimone et al., 2017).

Figure 6 shows a typical optical design for a holographic AR NED proposed by the authors (Xia et al., 2020). Our design consists of three components: a laser light source, a recorded HOE film as the optical combiner, and an SLM. The laser provides the illumination. The SLM displays the digital hologram pattern calculated for the 3D image. The recorded HOE film reconstructs the virtual image via off-axis projection.

FIGURE 6. Schematic illustration of a holographic AR NED design with three components: a laser light source, a recorded HOE film as the optical combiner, and an SLM. The laser provides the illumination; the SLM displays the digital hologram pattern calculated for the 3D image to be displayed; and the recorded HOE film reconstructs the virtual image via off-axis projection.

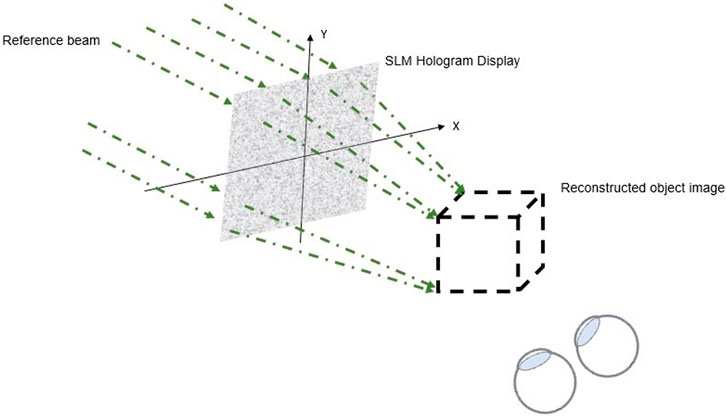

Holographic AR NEDs normally use computer-generated holograms (CGHs) to reconstruct realistic-looking projections directly and dynamically (Peng et al., 2020). In CGH, a computer generates holographic interference patterns algorithmically, and an SLM displays them. Figure 7 shows a typical system setup for CGH. There are two main methods for calculating the interference pattern: Fourier holography (Makey et al., 2012) and Fresnel holography (Benton and Bove, 2008). However, the conventional heuristic calculation for both methods is usually time-consuming and offers no guarantee of image quality (Maimone et al., 2017).

To improve the image quality of holographic displays, Padmanaban et al. introduced an overlap-add stereogram (OLAS) algorithm. It inverts the light field into a hologram via the short-time Fourier transform (Padmanaban et al., 2019). Their method, however, needs more computing power and thus more computation time than other methods. To speed up hologram calculation, several algorithms have been proposed (Chen and Chu, 2015; Gilles et al., 2016; Wei et al., 2016; Askari et al., 2017). Deep learning based methods that leverage neural networks have recently been introduced and achieve both unprecedented image fidelity and real-time frame rates (Horisaki et al., 2018; Lee J. et al., 2020).

FIGURE 7. Schematic diagram of a CGH setup. Computer generated holographic interference patterns will be displayed on the SLM and the 3D image will be reconstructed by projecting the laser light onto the SLM.
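The Gerchberg-Saxton (GS) algorithm is a textbook example of the conventional iterative approach to Fourier holography mentioned above. It alternates between the SLM plane and the image plane, enforcing a phase-only SLM in one and the target amplitude in the other.

This sketch rests on simplifying assumptions: scalar fields, uniform unit-amplitude illumination, and an ideal Fourier lens modeled by a 2D FFT. It is not the OLAS or deep-learning method cited, and it typically leaves speckle in the reconstruction.

```python
import numpy as np


def gerchberg_saxton(target_amplitude, iterations=50, seed=0):
    """Phase-only Fourier hologram via Gerchberg-Saxton.

    Assumptions: the image plane is the 2D FFT of the SLM plane (ideal Fourier
    lens), the SLM is phase-only, and it is uniformly illuminated.
    Returns the SLM phase (radians) and the image-plane amplitude error per iteration.
    """
    if iterations < 1:
        raise ValueError("iterations must be >= 1")
    rng = np.random.default_rng(seed)
    target = np.asarray(target_amplitude, dtype=float)
    target = target / np.linalg.norm(target)                     # unit energy
    source = np.full(target.shape, 1.0 / np.sqrt(target.size))   # unit-energy uniform beam
    # Start from the target amplitude with a random phase in the image plane.
    field = target * np.exp(2j * np.pi * rng.random(target.shape))
    errors = []
    for _ in range(iterations):
        slm = np.fft.ifft2(field, norm="ortho")
        slm = source * np.exp(1j * np.angle(slm))       # phase-only SLM constraint
        image = np.fft.fft2(slm, norm="ortho")
        errors.append(float(np.linalg.norm(np.abs(image) - target)))
        field = target * np.exp(1j * np.angle(image))   # target amplitude constraint
    return np.angle(slm), errors


target = np.zeros((64, 64))
target[24:40, 24:40] = 1.0   # hypothetical target: a bright square
phase, errors = gerchberg_saxton(target, iterations=30)
print(f"error: {errors[0]:.3f} -> {errors[-1]:.3f}")
```

The image-plane error of GS never increases from one iteration to the next, but it typically stalls at a non-zero value. This is one motivation for the optimization and learning based methods cited above.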

A major drawback of holographic AR NEDs is the trade-off between FOV and eyebox (Brooker, 2003): the product of the two is limited by the total number of pixels of the SLM. Adopting a higher-resolution SLM with higher pixel density, however, significantly increases manufacturing cost and form factor. Several methods have therefore been proposed to expand the eyebox.

- Park and Kim proposed an HOE based NED that uses an HOE as multiplexed concave mirrors to replicate the eyebox, so that images can be observed over a wider range (Park and Kim, 2018). In their method, CGHs are created with different ranges of angular spectrum to control the depth of field of each displayed 3D object individually.
- Jang et al. demonstrated a holographic AR NED with an expanded eyebox. They used pupil tracking to shift the exit pupil of the optical system across the expanded eyebox area, and proposed a pupil-shifting holographic optical element (PSHOE) to reduce the form factor (Jang et al., 2017, 2018).
- Choi et al. introduced a technique for eyebox-expanded holographic AR NEDs that replicates and stitches the base eyebox by combining an HOE with high-order diffractions of the SLM (Choi et al., 2020b).
- In 2019, Lee et al. presented an improved integration of a holographic AR NED and a Maxwellian-view display (Lee et al., 2019). The holographic AR NED processes relatively few layers of the virtual 3D scene, while the Maxwellian-view display processes the remaining objects through a Gaussian smoothing filter.
- In 2020, the authors proposed a design that expands the eyebox of holographic AR NEDs using a lens-array HOE, which replicates the same spatial frequencies comprising the high-resolution holographic image at each viewing position (Xia et al., 2020).
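A first-order space-bandwidth estimate makes the FOV-eyebox trade-off concrete. An SLM with N pixels of pitch p diffracts light into a cone of roughly λ/p. Magnifying optics scale the eyebox and the FOV in opposite directions, so in one dimension their product stays near N·λ. The sketch below uses small-angle approximations. The 3840-pixel SLM with a 3.74 µm pitch is a hypothetical example, not a device from the cited works.

```python
import math


def diffraction_cone_deg(wavelength_m: float, pixel_pitch_m: float) -> float:
    """Full angle of the first-order diffraction cone of a pixelated SLM."""
    return 2.0 * math.degrees(math.asin(wavelength_m / (2.0 * pixel_pitch_m)))


def eyebox_mm(num_pixels: int, wavelength_m: float, fov_deg: float) -> float:
    """1D small-angle estimate: eyebox * FOV (in radians) ~= N * wavelength.

    With magnification M, eyebox ~= N*p/M and FOV ~= M*wavelength/p,
    so changing M trades one against the other without changing the product.
    """
    return num_pixels * wavelength_m / math.radians(fov_deg) * 1e3  # metres -> mm


N, WL, PITCH = 3840, 532e-9, 3.74e-6   # hypothetical SLM and wavelength
print(f"native diffraction cone: {diffraction_cone_deg(WL, PITCH):.1f} deg")
for fov in (10, 30, 60):
    print(f"FOV {fov:>2d} deg -> eyebox ~ {eyebox_mm(N, WL, fov):.2f} mm")
```

Doubling the FOV halves the eyebox. This is why the methods above replicate, shift, or stitch the eyebox rather than relying on the SLM alone.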

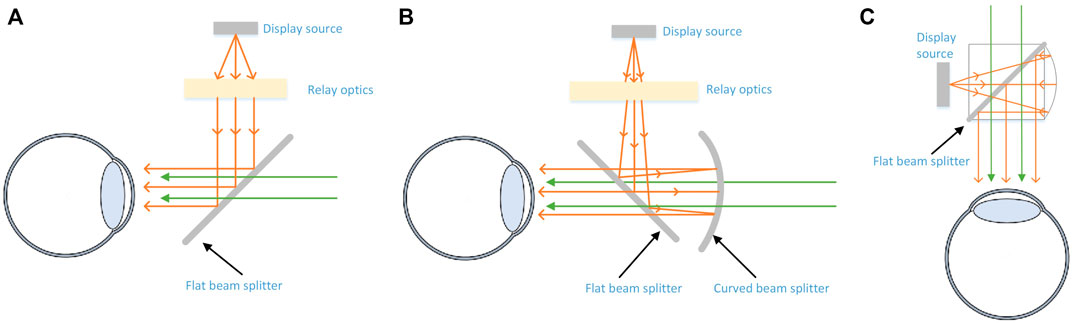

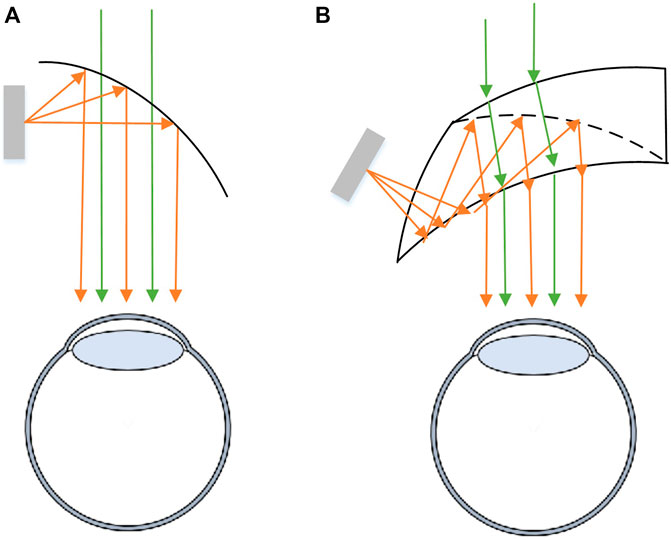

In 1968, Ivan Sutherland developed the first see-through AR NED, which used a flat beam splitter (BS) to superimpose computer-generated images on the direct view of the real world (Sutherland, 1968). That initial prototype used only a flat BS (Figure 8A). Newer designs deploy a curved BS together with the flat BS (Figure 8B, C). Because the curved BS resembles a birdbath, these AR NEDs are normally categorized as birdbath AR NEDs. Typical commercialized birdbath AR NEDs include ODG AR glasses and Google Glass. Compared with the traditional flat BS design, birdbath AR NEDs typically have a wider FOV owing to the magnification of the curved BS. However, this magnification also causes image distortion, which can be compensated by predistorting the image source.

FIGURE 8. Schematic diagram of beam splitter based AR NEDs. (A) shows a flat BS based AR NED; (B) and (C) show two birdbath designs for AR NEDs (Only the central FOV of the NEDs is shown in the diagram)
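The predistortion step mentioned above can be sketched with a single-coefficient radial distortion model, p_d = p·(1 + k1·|p|²), a common first-order description of lens distortion. The model and the value k1 = 0.1 are illustrative assumptions, not a measured birdbath profile. Predistortion solves for the source point whose distorted image lands on the desired location; here this is done by fixed-point iteration.

```python
import numpy as np


def distort(points: np.ndarray, k1: float) -> np.ndarray:
    """Apply radial distortion p_d = p * (1 + k1 * |p|^2) to (N, 2) normalized points."""
    r2 = np.sum(points ** 2, axis=1, keepdims=True)
    return points * (1.0 + k1 * r2)


def predistort(targets: np.ndarray, k1: float, iterations: int = 30) -> np.ndarray:
    """Find source points whose distorted image lands on `targets`.

    Fixed-point iteration p <- t / (1 + k1 * |p|^2); converges for the
    moderate distortion assumed here (small k1 * |p|^2).
    """
    p = targets.copy()
    for _ in range(iterations):
        r2 = np.sum(p ** 2, axis=1, keepdims=True)
        p = targets / (1.0 + k1 * r2)
    return p


K1 = 0.1  # hypothetical pincushion coefficient of the curved combiner
xs = np.linspace(-1.0, 1.0, 5)
grid = np.stack(np.meshgrid(xs, xs), axis=-1).reshape(-1, 2)
source = predistort(grid, K1)   # what the display should render
residual = np.max(np.abs(distort(source, K1) - grid))
print(f"max residual after predistortion: {residual:.2e}")
```

A positive k1 (pincushion) in the optics is cancelled by rendering a barrel-distorted image. In practice the distortion coefficients would come from the optical design or a calibration, not from an assumed constant.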

Beam splitter based AR NEDs are usually constrained by the conflict between form factor and FOV (Rotier, 1989; Droessler and Rotier, 1990). Freeform optics based AR NEDs were developed to overcome this constraint. Freeform surfaces introduce more variables for optimizing the optical eyepiece, yielding high performance in a relatively compact package. For instance, Wang et al. developed an off-axis single-element curved beam combiner for AR NEDs (Wang et al., 2016). Meta 2 is another representative freeform optics based AR NED². In these designs, a freeform half-mirror serves as both the magnifying optics and the optical combiner (Figure 9A).

To achieve a compact form factor, later designs replace the single freeform reflector with a combination of refractive surfaces, TIR surfaces, and reflective surfaces. This minimizes the form factor while allowing a large FOV (Morishima et al., 1995; Hoshi et al., 1996; Yamazaki et al., 1999). For example, in 2009 Cheng et al. developed a freeform prism based AR NED with a compact form factor (Figure 9B). In their design, the wedge-shaped freeform prism consists of three freeform surfaces. Rays from the image source are first refracted by the surface closest to the image source. After two consecutive reflections, they are transmitted through one surface and reach the exit pupil of the system. An auxiliary element consisting of two freeform surfaces is attached to the prism to provide a see-through view. Its freeform surface is designed to keep the real-world scene free of distortion. Commercially available AR NED products (e.g., NED AR™³) have also been built on freeform prisms.

FIGURE 9. Freeform optics based AR NEDs. (A): freeform curved half mirror. (B): freeform prism (Only the central FOV of the NEDs is shown in the diagram).
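First-order geometry explains the form factor versus FOV conflict. For every field angle to reach every point of the eyebox, the clear aperture of the combiner must span roughly the eyebox plus the spread of the edge-field rays over the eye relief. The sketch below uses this thin, untilted approximation, D ≈ eyebox + 2 × eye relief × tan(FOV/2); tilted or folded combiners need even larger footprints. The 10 mm eyebox and 18 mm eye relief are hypothetical values.

```python
import math


def combiner_aperture_mm(eyebox_mm: float, eye_relief_mm: float, fov_deg: float) -> float:
    """First-order clear aperture (1D) of an untilted combiner or eyepiece."""
    return eyebox_mm + 2.0 * eye_relief_mm * math.tan(math.radians(fov_deg) / 2.0)


EYEBOX, EYE_RELIEF = 10.0, 18.0   # hypothetical values in mm
for fov in (20, 40, 60, 80):
    d = combiner_aperture_mm(EYEBOX, EYE_RELIEF, fov)
    print(f"FOV {fov:>2d} deg -> aperture ~ {d:.1f} mm")
```

The tangent grows faster than linearly, so each additional degree of FOV costs more aperture. A larger aperture in turn implies thicker, heavier optics, which is the trade-off that freeform surfaces try to relax.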

Freeform optics based AR NEDs, like BS based AR NEDs, rely on binocular parallax to create depth perception, and the optical power of their combiners is usually fixed. These designs therefore suffer from VAC (Zabels, 2019; Zhan et al., 2020; Rolland et al., 2021). Several varifocal methods have been proposed to address this:

- Stevens et al. used Alvarez lenses to mitigate VAC in their NED (Stevens et al., 2018).
- Dunn et al. combined a varifocal deformable membrane mirror for each eye with eye tracking to achieve a wide-FOV AR NED with mitigated VAC (Dunn et al., 2017).
- Similarly, McQuaide et al. used a deformable membrane mirror to generate realistic 3D depth cues through variable focus, so that the viewer sees 3D objects with the natural accommodative response of the eye (McQuaide et al., 2003).
- Hua and Javidi proposed combining freeform surface techniques with integral imaging (Hua and Javidi, 2014).
- In 2014, Hu and Hua combined a freeform-prism based design, a high-speed deformable membrane mirror device, and a high-frame-rate digital micromirror device (DMD). Their multifocal bench-top prototype has an extended depth range from 0 to 3 diopters (Hu and Hua, 2014).
- Lee et al. proposed a three-dimensional (3D) HMD with multifocal and wearable functions that uses polarization-dependent optical path switching in a Savart plate (Lee et al., 2016).
- In 2018, Wilson and Hua developed a mechanical method that laterally shifts two freeform Alvarez lenses (Wilson and Hua, 2018). The result is a compact, high-resolution, tunable optical see-through NED with optical power adjustable from 0 to 3 diopters. However, the method is limited by actuator speed and has limitations in FOV and eyebox.
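VAC is easiest to quantify in diopters (inverse meters). Vergence is driven by the distance of the virtual object, while accommodation is driven by the distance of the display's focal plane. The sketch below computes both, plus the residual mismatch when a multifocal display snaps content to its nearest focal plane. The 63 mm interpupillary distance and the four planes spread evenly over 0 to 3 diopters are assumptions for illustration.

```python
import math


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two lines of sight when fixating a point straight ahead."""
    return 2.0 * math.degrees(math.atan(ipd_m / (2.0 * distance_m)))


def vac_diopters(vergence_distance_m: float, focal_distance_m: float) -> float:
    """Mismatch between vergence and accommodation demands, in 1/m."""
    return abs(1.0 / vergence_distance_m - 1.0 / focal_distance_m)


def nearest_plane_d(target_d: float, planes_d: list[float]) -> float:
    """Focal plane (in diopters) that a multifocal display would use for this depth."""
    return min(planes_d, key=lambda d: abs(d - target_d))


# Fixed-focus display with its image at 2 m, virtual object at 0.5 m.
print(f"vergence angle: {vergence_angle_deg(0.5):.2f} deg")
print(f"fixed-focus VAC: {vac_diopters(0.5, 2.0):.2f} D")

# Hypothetical multifocal display with planes at 0, 1, 2 and 3 D.
planes = [0.0, 1.0, 2.0, 3.0]
obj_d = 1.0 / 0.6
plane = nearest_plane_d(obj_d, planes)
print(f"object at {obj_d:.2f} D -> plane {plane:.0f} D, residual {abs(obj_d - plane):.2f} D")
```

A varifocal display would instead move its single plane to the object's dioptric distance, driving the mismatch toward zero at the cost of the actuator speed limits noted above.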

Maxwellian view AR NEDs have also been developed to mitigate VAC (Yuuki et al., 2012). Other methods render virtual imagery with true focus cues for each eye. Maxwellian view AR NEDs, or retinal scanning displays, instead use pinhole imaging to project and focus the virtual imagery onto the retina (Choi et al., 2020c). The image stays in focus regardless of the eye's accommodation, so this approach can alleviate VAC. Recently, Song et al. developed an optical see-through retinal-projection near-eye display that combines the Maxwellian view with a holographic method (Song et al., 2021). In their method, a single phase-only SLM generates holographic virtual images that are projected directly onto the retina. The virtual image can be projected at different depths, so the method can resolve VAC. However, the eyebox of Maxwellian view NEDs is typically small, and pupil alignment is demanding for viewers.
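Geometric defocus blur explains why a Maxwellian view relaxes accommodation. The angular size of the retinal blur disc is roughly the diameter of the light bundle entering the pupil times the defocus in diopters. Shrinking the effective aperture from a natural pupil (4 mm assumed here) to a narrow converging beam (0.5 mm assumed) shrinks the blur proportionally. This small-angle, geometric-optics sketch ignores diffraction, which grows as the beam narrows and eventually limits the benefit.

```python
import math


def defocus_blur_arcmin(aperture_mm: float, defocus_d: float) -> float:
    """Angular diameter of the geometric blur disc: aperture [m] * defocus [1/m] radians."""
    blur_rad = (aperture_mm * 1e-3) * abs(defocus_d)
    return math.degrees(blur_rad) * 60.0


for aperture in (4.0, 2.0, 0.5):   # natural pupil vs. narrower beams (assumed)
    blur = defocus_blur_arcmin(aperture, 1.0)
    print(f"{aperture:.1f} mm bundle, 1 D defocus -> blur ~ {blur:.1f} arcmin")
```

The eightfold blur reduction comes from the same narrow beam that makes the eyebox small and pupil alignment critical, matching the limitation noted above.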

Digital holography can also be integrated with BS or freeform optics based AR NEDs to solve VAC. This solution typically uses a phase SLM as the image source and coherent light as the illumination source to create the 3D virtual image for the user's eye (Gao et al., 2016; Gao and Liu, 2017; Chang et al., 2019). However, such holographic NEDs typically have a small FOV due to the étendue limitation of holographic displays.

Other researchers have explored further means of mitigating VAC. These include Pancharatnam-Berry (PB) phase lenses and other polarization-dependent lenses (Tan et al., 2018; Moon et al., 2019; Yoo et al., 2019; Xiong et al., 2021a), and electrically tunable lenses (Lee et al., 2019; Piskunov et al., 2020). A polarization-dependent lens provides different focal lengths for different polarization states, and can thus generate multiple focal planes for a near-eye display. An electrically tunable lens can adjust its focus dynamically. It can therefore be combined with active shutters to achieve time-multiplexed focus adjustment for the virtual and real images, respectively (Liu et al., 2008; Xia et al., 2019). In this way, tunable lens based designs can help solve the VAC problem.
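Polarization-dependent lenses give a multifocal display its discrete focal states. The sketch below enumerates those states under idealized assumptions. Each PB lens adds +P or −P diopters depending on the handedness of the light reaching it. A switchable polarization rotator in front of each lens lets that sign be chosen independently. Thin lenses in contact simply add their powers. The 1.5 D base position and the ±0.5 D and ±1.0 D lenses are hypothetical values chosen so that the states fall on evenly spaced planes.

```python
from itertools import product


def pb_focal_states(base_d: float, pb_powers_d: list[float]) -> list[float]:
    """Dioptric image positions reachable with a stack of switchable PB lenses.

    Idealized: each PB lens contributes +P or -P (sign set by input handedness),
    every sign combination is selectable, and thin-lens powers add.
    """
    states = set()
    for signs in product((1, -1), repeat=len(pb_powers_d)):
        total = base_d + sum(s * p for s, p in zip(signs, pb_powers_d))
        states.add(round(total, 6))   # merge floating-point near-duplicates
    return sorted(states)


print(pb_focal_states(1.5, [0.5, 1.0]))   # [0.0, 1.0, 2.0, 3.0]
```

With N binary lenses, up to 2^N focal states come from a few thin plates, which is the compactness argument made for PB optics. Real stacks also suffer chromatic dispersion and switching losses that are not modeled here.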

Besides the abovementioned AR NED technologies, there have been other emerging technologies developed and published in recent years, including:

Metasurfaces are planar optical elements composed of artificially fabricated subwavelength structures. These structures modify the electromagnetic properties of light, so that light can be bent at angles larger than those possible with simple reflection (Yu et al., 2011; Genevet et al., 2017; Arbabi et al., 2018; Neshev and Aharonovich, 2018; Ruiz De Galarreta et al., 2020; Bayati et al., 2021; Boo et al., 2021). Recent advancements show that metasurfaces can overcome limitations of conventional optical components, such as limited FOV and bulky form factors. A number of studies have therefore applied metasurfaces to AR NEDs.

- Hong et al. replaced a freeform combiner with a metasurface written on a flat substrate that implements a non-rotationally symmetric phase profile. Their simulation results indicate a potential path to FOVs of up to 77.3° horizontally and vertically (Hong et al., 2017).
- Nikolov et al. recently introduced the concept and working principles of a metaform, which integrates a freeform optic and a metasurface into a single optical element (Nikolov et al., 2021). The metaform can serve as an optical combiner for AR NEDs and shows promise for solving their optical design challenges.
- Lee et al. introduced a compact AR NED with a large FOV based on metasurfaces (Lee et al., 2018). The metasurface selectively acts as a lens for the virtual image and as a transparent film for real-world images.
- Lan et al. employed a metasurface to holographically cast virtual information onto the fovea region of the viewer's retina (Lan et al., 2019). Their metasurface can be placed in close contact with the viewer's eye and projects the virtual image onto the fovea.
This metasurface generates the predesigned phase distribution using silicon nanobeams and occupies only 1% of the pupil area, so the viewer can still perceive the real-world environment. It has an extremely small form factor, adding only sub-micrometer thickness and sub-microgram weight to a normal contact lens.
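Metasurface lenses and combiners are specified by a target phase profile, which the subwavelength structures then implement locally. As a generic illustration, not the specific designs cited above, the standard hyperbolic profile focuses a normally incident plane wave to a point at distance f: φ(r) = −(2π/λ)(√(r² + f²) − f), wrapped into [0, 2π). The 20 mm focal length, 5 mm radius, and 532 nm wavelength are assumed values.

```python
import numpy as np


def hyperbolic_phase(r_m, focal_m: float, wavelength_m: float):
    """Wrapped phase (radians) an ideal flat lens imparts at radius r.

    Uses sqrt(r^2 + f^2) - f = r^2 / (sqrt(r^2 + f^2) + f) to avoid
    cancellation error for small r.
    """
    r2 = np.asarray(r_m, dtype=float) ** 2
    path_diff = r2 / (np.sqrt(r2 + focal_m ** 2) + focal_m)
    phi = -(2.0 * np.pi / wavelength_m) * path_diff
    return np.mod(phi, 2.0 * np.pi)


def num_zones(radius_m: float, focal_m: float, wavelength_m: float) -> float:
    """Number of 2*pi phase wraps between the lens center and its edge."""
    path_diff = radius_m ** 2 / (np.sqrt(radius_m ** 2 + focal_m ** 2) + focal_m)
    return path_diff / wavelength_m


F, R, WL = 20e-3, 5e-3, 532e-9   # assumed focal length, radius, wavelength
r = np.linspace(0.0, R, 6)
print(np.round(hyperbolic_phase(r, F, WL), 3))
print(f"about {num_zones(R, F, WL):.0f} phase zones across the radius")
```

Over a thousand 2π zones across a few millimeters shows why such elements need subwavelength-scale fabrication. Because the profile is designed for one wavelength, it also shows why chromatic behavior is a central design concern.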

Pinlight displays were presented by Maimone et al. in 2014 as an optical see-through AR NED design that offers a wide FOV and a compact form factor approaching that of ordinary eyeglasses (Maimone et al., 2014). Instead of conventional optics, their design uses only two simple hardware components: an LCD panel, and an array of point light sources (implemented as an edge-lit, etched acrylic sheet) placed directly in front of the eye, out of focus. In 2019, Song et al. proposed a light-field NED in which random pinholes serve as an SLM, which helps solve the repeated-zone problem of light-field displays (Song et al., 2019). Park introduced a pinhole based technology that adds a pinhole to an optical path consisting of an optical combiner and a collimator. The combiner, collimator, and pinhole together form a so-called pin mirror, which extends the depth of field. A wide FOV can also be achieved by arranging multiple pin mirrors horizontally and vertically (Park, 2020).

A Transmissive Mirror Device (TMD) plate consists of numerous micro-mirrors and is usually used for aerial imaging (Monnai et al., 2014). A TMD plate lets the user observe a virtual image in mid-air while allowing light from the real environment to pass through. Building on this, Otao et al. developed an HMD design for near-eye light field display with a TMD (Otao et al., 2017). Their design achieves a wide FOV for AR NEDs but still has a bulky form factor.

Polarization-dependent optical elements perform differently depending on the polarization state of the incident light. Such elements can be based on liquid crystals (LC), producing the desired phase profile by spatially varying the LC director orientation (Zhan et al., 2019). They are also called Pancharatnam-Berry (PB) phase optical elements or geometric phase optical elements. Their main advantage is a compact form factor, since each is just a thin planar plate, which benefits the overall form factor of AR NEDs. PB phase optical elements can generally be divided into PB phase lenses and PB phase deflectors.

- PB phase lenses allow polarization states to be multiplexed for different purposes in AR NEDs. Examples include creating multiple focal planes (Tan et al., 2018) and acting as a combiner that merges virtual imagery with the see-through real scene (Moon et al., 2019; Cui et al., 2020).
- PB phase deflectors produce different deflection angles for incident light in different polarization states. They have replaced the in- and out-couplers of waveguide displays so that a single micro-display panel serves both eyes through polarization multiplexing (Weng et al., 2016). They have also been used to enlarge the FOV of waveguide displays (Yoo et al., 2020). A PB phase deflector has also served as an electro-optic image shifter to enhance the resolution of near-eye displays (Lee et al., 2017).

AR is widely recognized as the next-generation computing platform, with numerous potential applications across various sectors. As an indispensable component of AR, NEDs have been investigated extensively by academia and industry and are therefore progressing rapidly. In this paper, we first present an overview of various AR NED technologies and their advances. We then focus our review on the principles, challenges, and advancements of three leading AR NED designs: half-mirror/prism-based, HOE-based, and waveguide-based AR NEDs. We also review other emerging technologies, including pinlight-based, TMD-based, PB phase lens-based, and metasurface-based AR NEDs.

Each of the reviewed methods has its own advantages and disadvantages and involves tradeoffs between different metrics. As Chang et al. argued in 2020, future-ready AR NEDs should provide users with both comfort (an eyeglasses-style form factor, light weight, and a large FOV) and immersion (natural 3D perception, a high refresh rate, and high image quality) (Chang et al., 2020). Although HOE-based AR NED technologies have been investigated heavily and show promise for achieving future-ready AR NEDs, significant challenges remain, including low image quality and high computational demand. Novel methods may also emerge from other technologies or from the synergy between different technologies. Another area worth monitoring closely is the rapid development of AR display engines, including LCoS, DLP, LED, OLED, and LBS (Zhan et al., 2020). Continued improvements in the resolution of display engines, together with reductions in their size, may lead to breakthroughs toward the ultimate goal of AR NEDs.

Mutual occlusion is another important issue for AR NEDs. Most current AR NEDs superimpose the virtual image onto the real environment, but the displayed virtual image is transparent and cannot block the scene behind it. Several recent works focus on obtaining hard-edge mutual occlusion in optical see-through AR NEDs. Most of these solutions use two SLMs to merge the real and virtual imagery: one SLM applies an occlusion mask to the real scene, the other displays the virtual image, and the two are then superimposed for the eye (Wilson and Hua, 2017; Hamasaki and Itoh, 2019; Chae et al., 2021; Zhang et al., 2021). Other works use a time-multiplexed method with a single SLM to achieve mutual occlusion in AR NEDs (Ju et al., 2020; Krajancich et al., 2020). The existing approaches achieve mutual occlusion to some extent but still suffer from a limited FOV and a bulky form factor.
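The difference between a conventional combiner and the two-SLM approach can be illustrated with a per-pixel intensity model, which is a simplification assumed for this sketch: a conventional optical see-through combiner adds virtual light to the real scene, whereas an occlusion-capable design first attenuates the real scene with a mask of transmittance t (0 means fully blocked) and then adds the virtual image. Intensities are normalized linear values; combiner losses, mask diffraction, and focus mismatch between the mask and the scene are ignored, and the function names are hypothetical.

```python
def additive_see_through(real: list[float], virtual: list[float]) -> list[float]:
    """Conventional OST combiner: virtual light is simply added to the real scene."""
    return [r + v for r, v in zip(real, virtual)]


def occlusion_capable(
    real: list[float], virtual: list[float], mask_transmittance: list[float]
) -> list[float]:
    """Two-SLM style: the mask attenuates the real scene, then the virtual image is added."""
    return [r * t + v for r, t, v in zip(real, mask_transmittance, virtual)]


def mask_from_virtual(virtual: list[float], threshold: float = 0.0) -> list[float]:
    """Hard-edge mask: block the real scene wherever a virtual pixel is drawn."""
    return [0.0 if v > threshold else 1.0 for v in virtual]


if __name__ == "__main__":
    real = [0.8, 0.8, 0.8, 0.8]     # bright real background
    virtual = [0.0, 0.1, 0.1, 0.0]  # dark virtual object in the middle two pixels
    print(additive_see_through(real, virtual))  # object looks brighter than the background
    print(occlusion_capable(real, virtual, mask_from_virtual(virtual)))  # [0.8, 0.1, 0.1, 0.8]
```

In the additive case a virtual pixel can never appear darker than the real scene behind it, which is why virtual objects look transparent; the mask restores dark, opaque virtual content, at the cost of the extra SLM and relay optics that enlarge the form factor.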

We hope our discussion will help inspire future research on the challenges facing AR NEDs, and we look forward to these advances.

XX and FG contributed to the conceptualization, methodology and writing of the review. NM and YC provided supervision and guidance of the review.

This work is supported by the National Natural Science Foundation of China (Grant No. 62005154), the Natural Science Foundation of Shanghai (Grant No. 20ZR1420500), National Key Research and Development Program of China (No. 2021YFF0307803) and MOE TIF Grant (MOE2019-TIF-0011).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1 Moverio: https://moverio.epson.com/

2 https://www.aniwaa.com/product/vr-ar/meta-2/

Arbabi, E., Arbabi, A., Kamali, S. M., Horie, Y., Faraji-Dana, M., and Faraon, A. (2018). MEMS-tunable Dielectric Metasurface Lens. Nat. Commun. 9, 812. doi:10.1038/s41467-018-03155-6

Askari, M., Kim, S.-B., Shin, K.-S., Ko, S.-B., Kim, S.-H., Park, D.-Y., et al. (2017). Occlusion Handling Using Angular Spectrum Convolution in Fully Analytical Mesh Based Computer Generated Hologram. Opt. Express 25, 25867–25878. doi:10.1364/oe.25.025867

Barten, P. (2004). Formula for the Contrast Sensitivity of the Human Eye. Image Qual. Syst. Perform. Proc. SPIE 5294, 231–238.

Bayati, E., Wolfram, A., Colburn, S., Huang, L., and Majumdar, A. (2021). Design of Achromatic Augmented Reality Visors Based on Composite Metasurfaces. Appl. Opt. 60, 844–850. doi:10.1364/ao.410895

Boo, H., Lee, Y., Yang, H., Matthews, B., Lee, T., and Wong, C. (2021). Metasurface Optical Elements for High Performing Augmented/mixed-Reality Smart Glasses. Proc. Conf. Lasers Electro-Optics 2021, STu4D.5. doi:10.1364/cleo_si.2021.stu4d.5

Cakmakci, O., and Rolland, J. (2006). Head-worn Displays: A Review. J. Display Technol. 2, 199–216. doi:10.1109/JDT.2006.879846

Chae, M., Bang, K., Jo, Y., Yoo, C., and Lee, B. (2021). Occlusion-capable See-Through Display without the Screen-Door Effect Using a Photochromic Mask. Opt. Lett. 46, 4554–4557. doi:10.1364/OL.430478

Chang, C., Bang, K., Wetzstein, G., Lee, B., and Gao, L. (2020). Toward the Next-Generation VR/AR Optics: a Review of Holographic Near-Eye Displays from a Human-Centric Perspective. Optica 7, 1563–1578. doi:10.1364/optica.406004

Chang, C., Cui, W., Park, J., and Gao, L. (2019). Computational Holographic Maxwellian Near-Eye Display with an Expanded Eyebox. Sci. Rep. 9, 1–9. doi:10.1038/s41598-019-55346-w

Chen, C. P., Mi, L., Zhang, W., Ye, J., and Li, G. (2021). Waveguide-based Near-Eye Display with Dual-Channel Exit Pupil Expander. Displays 67, 101998. doi:10.1016/j.displa.2021.101998

Chen, J.-S., and Chu, D. P. (2015). Improved Layer-Based Method for Rapid Hologram Generation and Real-Time Interactive Holographic Display Applications. Opt. Express 23, 18143–18155. doi:10.1364/oe.23.018143

Chiam, B. S. W., Leung, I. M. W., Devilly, O. Z., Ow, C. Y. D., Guan, F., and Tan, B. L. (2021). Novel Augmented Reality Enhanced Solution towards Vocational Training for People with Mental Disabilities. Proc. IEEE Int. Symp. Mixed Augmented Reality (ISMAR) 2021, 195–200. doi:10.1109/ismar-adjunct54149.2021.00047

Choi, J., Son, M. G., Lee, Y. Y., Lee, K. H., Park, J. P., Yeo, C. H., et al. (2020a). Position-based Augmented Reality Platform for Aiding Construction and Inspection of Offshore Plants. Vis. Comput. 36, 2039–2049. doi:10.1007/s00371-020-01902-9

Choi, M.-H., Ju, Y.-G., and Park, J.-H. (2020b). Holographic Near-Eye Display with Continuously Expanded Eyebox Using Two-Dimensional Replication and Angular Spectrum Wrapping. Opt. Express 28, 533–547. doi:10.1364/oe.381277

Choi, M.-H., Kim, S.-B., and Park, J.-H. (2020c). Implementation and Characterization of the Optical-See-Through Maxwellian Near-Eye Display Prototype Using Three-Dimensional Printing. J. Inf. Display 21, 33–39. doi:10.1080/15980316.2019.1674196

Cui, W., Chang, C., and Gao, L. (2020). Development of an Ultra-compact Optical Combiner for Augmented Reality Using Geometric Phase Lenses. Opt. Lett. 45, 2808–2811. doi:10.1364/OL.393550

Droessler, J., and Rotier, D. (1990). Tilted Cat Helmet-Mounted Display. Opt. Eng. 29, 19–26. doi:10.1117/12.55669

Dunn, D., Tippets, C., Torell, K., Kellnhofer, P., Aksit, K., Didyk, P., et al. (2017). Wide Field of View Varifocal Near-Eye Display Using See-Through Deformable Membrane Mirrors. IEEE Trans. Vis. Comput. Graphics 23, 1322–1331. doi:10.1109/TVCG.2017.2657058

Eisen, L., Friesem, A. A., Meyklyar, M., and Golub, M. (2006). Color Correction in Planar Optics Configurations. Opt. Lett. 31, 1522–1524. doi:10.1364/ol.31.001522

Sutherland, I. E. (1968). “A Head-Mounted Three Dimensional Display,” in Fall Joint Computer Conference, 757–764. doi:10.1145/1476589.1476686

Gao, Q., Liu, J., Duan, X., Zhao, T., Li, X., and Liu, P. (2017). Compact See-Through 3D Head-Mounted Display Based on Wavefront Modulation with Holographic Grating Filter. Opt. Express 25, 8412–8424. doi:10.1364/oe.25.008412

Gao, Q., Liu, J., Han, J., and Li, X. (2016). Monocular 3D See-Through Head-Mounted Display via Complex Amplitude Modulation. Opt. Express 24, 17372–17383. doi:10.1364/oe.24.017372

Genevet, P., Capasso, F., Aieta, F., Khorasaninejad, M., and Devlin, R. (2017). Recent Advances in Planar Optics: from Plasmonic to Dielectric Metasurfaces. Optica 4, 139. doi:10.1364/optica.4.000139

Gilles, A., Gioia, P., Cozot, R., and Morin, L. (2016). Hybrid Approach for Fast Occlusion Processing in Computer-Generated Hologram Calculation. Appl. Opt. 55, 5459–5470. doi:10.1364/ao.55.005459

Hamasaki, T., and Itoh, Y. (2019). Varifocal Occlusion for Optical See-Through Head-Mounted Displays Using a Slide Occlusion Mask. IEEE Trans. Vis. Comput. Graphics 25, 1961–1969. doi:10.1109/TVCG.2019.2899249

Hoffman, D. M., Girshick, A. R., Akeley, K., and Banks, M. S. (2008). Vergence-accommodation Conflicts Hinder Visual Performance and Cause Visual Fatigue. J. Vis. 8, 33. doi:10.1167/8.3.33

Hong, C., Colburn, S., and Majumdar, A. (2017). Flat Metaform Near-Eye Visor. Appl. Opt. 56, 8822. doi:10.1364/ao.56.008822

Horisaki, R., Takagi, R., and Tanida, J. (2018). Deep-learning-generated Holography. Appl. Opt. 57, 3859–3863. doi:10.1364/ao.57.003859

Hoshi, H., Taniguchi, N., Morishima, H., Akiyama, T., Yamazaki, S., and Okuyama, A. (1996). Off-axial HMD Optical System Consisting of Aspherical Surfaces without Rotational Symmetry. SPIE 2653, 234.

Hu, X., and Hua, H. (2014). High-resolution Optical See-Through Multi-Focal-Plane Head-Mounted Display Using Freeform Optics. Opt. Express 22, 13896. doi:10.1364/oe.22.013896

Hua, H., and Javidi, B. (2014). A 3D Integral Imaging Optical See-Through Head-Mounted Display. Opt. Express 22, 13484. doi:10.1364/oe.22.013484

Jang, C., Bang, K., Li, G., and Lee, B. (2018). Holographic Near-Eye Display with Expanded Eye-Box. ACM Trans. Graph. 37, 14. doi:10.1145/3272127.3275069

Jang, C., Bang, K., Moon, S., Kim, J., Lee, S., and Lee, B. (2017). Retinal 3D. ACM Trans. Graph. 36, 1–13. doi:10.1145/3130800.3130889

Jang, C., Mercier, O., Bang, K., Li, G., Zhao, Y., and Lanman, D. (2020). Design and Fabrication of Freeform Holographic Optical Elements. ACM Trans. Graphics (Tog) 39, 184. doi:10.1145/3414685.3417762

Jeong, J., Lee, J., Yoo, C., Moon, S., Lee, B., and Lee, B. (2019). Holographically Customized Optical Combiner for Eye-Box Extended Near-Eye Display. Opt. Express 27, 38006–38018. doi:10.1364/OE.382190

Ju, Y.-G., Choi, M.-H., Liu, P., Hellman, B., Lee, T. L., Takashima, Y., et al. (2020). Occlusion-capable Optical-See-Through Near-Eye Display Using a Single Digital Micromirror Device. Opt. Lett. 45, 3361–3364. doi:10.1364/OL.393194

Kim, N., Piao, Y.-L., and Wu, H.-Y. (2017). “Holographic Optical Elements and Application,” in Holographic Materials and Optical Systems. Editors I. Naydenova, D. Nazarova, and T. Babeva. doi:10.5772/67297

Koulieris, G.-A., Bui, B., Banks, M. S., and Drettakis, G. (2017). Accommodation and comfort in Head-Mounted Displays. ACM Trans. Graph. 36, 1–11. doi:10.1145/3072959.3073622

Koulieris, G. A., Akşit, K., Stengel, M., Mantiuk, R. K., Mania, K., and Richardt, C. (2019). Near‐Eye Display and Tracking Technologies for Virtual and Augmented Reality. Comput. Graphics Forum 38, 493–519. doi:10.1111/cgf.13654

Krajancich, B., Kellnhofer, P., and Wetzstein, G. (2020). Optimizing Depth Perception in Virtual and Augmented Reality through Gaze-Contingent Stereo Rendering. ACM Trans. Graph. 39, 1–10. doi:10.1145/3414685.3417820

Kress, B. C. (2020). Optical Architectures for Augmented-, Virtual-, and Mixed-Reality Headsets. Bellingham, Washington, US: Society of Photo-Optical Instrumentation Engineers.

Kress, B., and Starner, T. (2013). A Review of Head-Mounted Displays (HMD) Technologies and Applications for Consumer Electronics. Proc. SPIE 8720, 87200A–87213A. doi:10.1117/12.2015654

Lan, S., Zhang, X., Taghinejad, M., Rodrigues, S., Lee, K.-T., Liu, Z., et al. (2019). Metasurfaces for Near-Eye Augmented Reality. ACS Photon. 6, 864–870. doi:10.1021/acsphotonics.9b00180

Lee, B., Yoo, C., and Jeong, J. (2020a). Holographic Optical Elements for Augmented Reality Systems. Proc. SPIE 11551, Holography, Diffractive Optics, Appl. X 2020, 1155103. doi:10.1117/12.2573605

Lee, C.-K., Moon, S., Lee, S., Yoo, D., Hong, J.-Y., and Lee, B. (2016). Compact Three-Dimensional Head-Mounted Display System with Savart Plate. Opt. Express 24, 19531. doi:10.1364/oe.24.019531

Lee, G.-Y., Hong, J.-Y., Hwang, S., Moon, S., Kang, H., Jeon, S., et al. (2018). Metasurface Eyepiece for Augmented Reality. Nat. Commun. 9, 1–10. doi:10.1038/s41467-018-07011-5

Lee, J., Jeong, J., Cho, J., Yoo, D., Lee, B., and Lee, B. (2020b). Deep Neural Network for Multi-Depth Hologram Generation and its Training Strategy. Opt. Express 28, 27137–27154. doi:10.1364/oe.402317

Lee, J. S., Kim, Y. K., Lee, M. Y., and Won, Y. H. (2019). Enhanced See-Through Near-Eye Display Using Time-Division Multiplexing of a Maxwellian-View and Holographic Display. Opt. Express 27, 689. doi:10.1364/oe.27.000689

Lee, Y.-H., Zhan, T., and Wu, S.-T. (2017). Enhancing the Resolution of a Near-Eye Display with a Pancharatnam-Berry Phase Deflector. Opt. Lett. 42, 4732–4735. doi:10.1364/OL.42.004732

Levola, T. (2007). 28.2: Stereoscopic Near to Eye Display Using a Single Microdisplay. SID 07 Dig. 38, 1158–1159. doi:10.1889/1.2785514

Levola, T., and Aaltonen, V. (2008). Near-to-eye Display with Diffractive Exit Pupil Expander Having Chevron Design. J. Soc. Inf. Display 16, 57–62. doi:10.1889/1.2966447

Lin, T., Zhan, T., Zou, J., Fan, F., and Wu, S.-T. (2020). Maxwellian Near-Eye Display with an Expanded Eyebox. Opt. Express 28, 38616. doi:10.1364/oe.413471

Liu, S., Cheng, D., and Hua, H. (2008). An Optical See-Through Head Mounted Display with Addressable Focal Planes. IEEE Int. Symp. Mixed Augmented Reality 2008, 33–42. doi:10.1109/ismar.2008.4637321

Maimone, A., Georgiou, A., and Kollin, J. S. (2017). Holographic Near-Eye Displays for Virtual and Augmented Reality. ACM Trans. Graph. 36, 1–16. doi:10.1145/3072959.3073624

Maimone, A., Lanman, D., Rathinavel, K., Keller, K., Luebke, D., and Fuchs, H. (2014). Pinlight Displays: Wide Field of View Augmented Reality Eyeglasses Using Defocused Point Light Sources. ACM Trans. Graphics (Tog) 33, 89. doi:10.1145/2601097.2601141

Makey, G., El-Daher, M. S., and Al-Shufi, K. (2012). Utilization of a Liquid crystal Spatial Light Modulator in a gray Scale Detour Phase Method for Fourier Holograms. Appl. Opt. 51, 7877–7882. doi:10.1364/ao.51.007877

McQuaide, S. C., Seibel, E. J., Kelly, J. P., Schowengerdt, B. T., and Furness, T. A. (2003). A Retinal Scanning Display System that Produces Multiple Focal Planes with a Deformable Membrane Mirror. Displays 24, 65–72. doi:10.1016/S0141-9382(03)00016-7

Monnai, Y., Hasegawa, K., Fujiwara, M., Yoshino, K., Inoue, S., and Shinoda, H. (2014). “HaptoMime,” in UIST 2014-Proceedings of the 27th Annual ACM Symposium on User Interface Software and Technology, 663–668. doi:10.1145/2642918.2647407

Moon, S., Lee, C.-K., Nam, S.-W., Jang, C., Lee, G.-Y., Seo, W., et al. (2019). Augmented Reality Near-Eye Display Using Pancharatnam-Berry Phase Lenses. Sci. Rep. 9, 6616. doi:10.1038/s41598-019-42979-0

Morishima, H., Akiyama, T., Nanba, N., and Tanaka, T. (1995). “The Design of Off-Axial Optical System Consisting of Aspherical Mirrors without Rotational Symmetry,” in 20th Optical Symposium, 53–56.

Mukawa, H., Akutsu, K., Matsumura, I., Nakano, S., Yoshida, T., Kuwahara, M., et al. (2008). 8.4: Distinguished Paper: A Full Color Eyewear Display Using Holographic Planar Waveguides. SID Symp. Dig. 39, 89–92. doi:10.1889/1.3069819

Neshev, D., and Aharonovich, I. (2018). Optical Metasurfaces: New Generation Building Blocks for Multi-Functional Optics. Light Sci. Appl. 7, 1–5. doi:10.1038/s41377-018-0058-1

Nikolov, D. K., Bauer, A., Cheng, F., Kato, H., Vamivakas, A. N., and Rolland, J. P. (2021). Metaform Optics: Bridging Nanophotonics and Freeform Optics. Sci. Adv. 7, 1–10. doi:10.1126/sciadv.abe5112

Olbrich, M., Graf, H., Kahn, S., Engelke, T., Keil, J., Riess, P., et al. (2013). Augmented Reality Supporting User-Centric Building Information Management. Vis. Comput. 29, 1093–1105. doi:10.1007/s00371-013-0840-2

Ong, D., Chia, K., Huang, Y., Teo, T., Tan, J., Lim, M., et al. (2021). “Smart Captions: a Novel Solution for Closed Captioning in Theatre Settings with AR Glasses,” in Proceedings of 15th IEEE International Conference on Service Operations and Logistics, and Informatics (SOLI 2021) 2021, 1–5. doi:10.1109/soli54607.2021.9672391

Otao, K., Itoh, Y., Osone, H., Takazawa, K., Kataoka, S., and Ochiai, Y. (2017). “Light Field Blender,” in SIGGRAPH Asia 2017 Technical Briefs, SA 2017. doi:10.1145/3145749.3149425

Padmanaban, N., Peng, Y., and Wetzstein, G. (2019). Holographic Near-Eye Displays Based on Overlap-Add Stereograms. ACM Trans. Graph. 38, 1–13. doi:10.1145/3355089.3356517

Park, J.-H., and Kim, S.-B. (2018). Optical See-Through Holographic Near-Eye-Display with Eyebox Steering and Depth of Field Control. Opt. Express 26, 27076. doi:10.1364/oe.26.027076

Park, S.-g. (2020). “PinMR: from Concept to Reality (Conference Presentation),” in Proc. SPIE 11310, Optical Architectures for Displays and Sensing in Augmented, Virtual, and Mixed Reality (AR, VR, MR). doi:10.1117/12.2566389

Peng, Y., Choi, S., Padmanaban, N., and Wetzstein, G. (2020). Neural Holography with Camera-In-The-Loop Training. ACM Trans. Graph. 39, 14. doi:10.1145/3414685.3417802

Piao, J.-A., Li, G., Piao, M.-L., and Kim, N. (2013). Full Color Holographic Optical Element Fabrication for Waveguide-type Head Mounted Display Using Photopolymer. J. Opt. Soc. Korea 17, 242–248. doi:10.3807/JOSK.2013.17.3.242

Piskunov, D. E., Danilova, S. V., Tigaev, V. O., and Vladimir, N. (2020). “Tunable Lens for AR Headset,” in Digital Optics for Immersive Displays II (DOID20). doi:10.1117/12.2565635

Rolland, J. P., Davies, M. A., Suleski, T. J., Evans, C., Bauer, A., Lambropoulos, J. C., et al. (2021). Freeform Optics for Imaging. Optica 8, 161. doi:10.1364/optica.413762

Rolland, J. P., Holloway, R. L., and Fuchs, H. (1995). Comparison of Optical and Video See-Through, Head-Mounted Displays. Proc. SPIE 2351, 293–307. doi:10.1117/12.197322

Rotier, D. (1989). Optical Approaches to the Helmet Mounted Display. Proc. SPIE 1116, 14–18. doi:10.1117/12.960892

Ruiz De Galarreta, C., Sinev, I., Alexeev, A. M., Trofimov, P., Ladutenko, K., Garcia-Cuevas Carrillo, S., et al. (2020). Reconfigurable Multilevel Control of Hybrid All-Dielectric Phase-Change Metasurfaces. Optica 7, 476–484. doi:10.1364/optica.384138

Shen, Z., Zhang, Y., Weng, Y., and Li, X. (2017). Characterization and Optimization of Field of View in a Holographic Waveguide Display. IEEE Photon. J. 9, 1–11. doi:10.1109/jphot.2017.2767606

Shi, R., Liu, J., Zhao, H., Wu, Z., Liu, Y., Hu, Y., et al. (2012). Chromatic Dispersion Correction in Planar Waveguide Using One-Layer Volume Holograms Based on Three-step Exposure. Appl. Opt. 51, 4703–4708. doi:10.1364/ao.51.004703

Shin, C.-W., Wu, H.-Y., Kwon, K.-C., Piao, Y.-L., Lee, K.-Y., Gil, S.-K., et al. (2021). Diffraction Efficiency Enhancement and Optimization in Full-Color HOE Using the Inhibition Characteristics of the Photopolymer. Opt. Express 29, 1175–1187. doi:10.1364/oe.413370

Song, W., Cheng, Q., Surman, P., Liu, Y., Zheng, Y., Lin, Z., et al. (2019). Design of a Light-Field Near-Eye Display Using Random Pinholes. Opt. Express 27, 23763–23774. doi:10.1364/oe.27.023763

Song, W., Li, X., Zheng, Y., Liu, Y., and Wang, Y. (2021). Full-color Retinal-Projection Near-Eye Display Using a Multiplexing-Encoding Holographic Method. Opt. Express 29, 8098–8107. doi:10.1364/oe.421439

Stevens, R., Rhodes, D., Hasnain, A., and Laffont, P. (2018). Varifocal Technologies Providing Prescription and VAC Mitigation in HMDs Using Alvarez Lenses. Proc. SPIE. 10676, 106760J. doi:10.1117/12.2318397

Tan, G., Zhan, T., Lee, Y.-H., Xiong, J., and Wu, S.-T. (2018). Polarization-multiplexed Multiplane Display. Opt. Lett. 43, 5651–5654. doi:10.1364/ol.43.005651

Wang, J., Liang, Y., and Xu, M. (2015). Design of a See-Through Head-Mounted Display with a Freeform Surface. J. Opt. Soc. Korea 19, 614–618. doi:10.3807/JOSK.2015.19.6.614

Wei, H., Gong, G., and Li, N. (2016). Improved Look-Up Table Method of Computer-Generated Holograms. Appl. Opt. 55, 9255–9264. doi:10.1364/ao.55.009255

Weng, Y., Xu, D., Zhang, Y., Li, X., and Wu, S.-T. (2016). Polarization Volume Grating with High Efficiency and Large Diffraction Angle. Opt. Express 24, 17746–17759. doi:10.1364/OE.24.017746

Wheelwright, B., Sulai, Y., Geng, Y., Luanava, S., Choi, S., Gao, W., et al. (2018). Field of View: Not Just a Number. Proc. SPIE Digital Opt. Immersive Displays 2018, 10676.

Wilson, A., and Hua, H. (2018). High-resolution Optical See-Through Vari-Focal-Plane Head-Mounted Display Using Freeform Alvarez Lenses. Proc. SPIE Digital Opt. Immersive Displays 2018, 106761J. doi:10.1117/12.2315771

Wilson, A., and Hua, H. (2017). Design and Prototype of an Augmented Reality Display with Per-Pixel Mutual Occlusion Capability. Opt. Express 25, 30539–30549. doi:10.1364/OE.25.030539

Xia, X., Guan, Y., State, A., Chakravarthula, P., Rathinavel, K., Cham, T.-J., et al. (2019). Towards a Switchable AR/VR Near-Eye Display with Accommodation-Vergence and Eyeglass Prescription Support. IEEE Trans. Vis. Comput. Graphics 25, 3114–3124. doi:10.1109/tvcg.2019.2932238

Xia, X., Guan, Y., State, A., Chakravarthula, P., Rathinavel, K., Cham, T., et al. (2020). “Towards Eyeglass-Style Holographic Near-Eye Displays with Statically Expanded Eyebox,” in IEEE ISMAR 2020.

Xiong, J., Li, Y., Li, K., and Wu, S.-T. (2021a). Aberration-free Pupil Steerable Maxwellian Display for Augmented Reality with Cholesteric Liquid crystal Holographic Lenses. Opt. Lett. 46, 1760. doi:10.1364/ol.422559

Xiong, J., Tan, G., Zhan, T., and Wu, S.-T. (2021b). “A Scanning Waveguide AR Display with 100° FOV,” in Optical Architectures for Displays and Sensing in Augmented, Virtual, and Mixed Reality (AR, VR, MR) II. vol. 11765, 1176507-1–1176507-6. doi:10.1117/12.2577856

Yamazaki, S., Inoguchi, K., Saito, Y., Morishima, H., and Taniguchi, N. (1999). Thin Wide-FOV HMD with Free-Form-Surface Prism and Applications. Proc. SPIE stereoscopic displays virtual reality Syst. VI 3639, 453–462.

Yano, S., Ide, S., Mitsuhashi, T., and Thwaites, H. (2002). A Study of Visual Fatigue and Visual comfort for 3D HDTV/HDTV Images. Displays 23, 191–201. doi:10.1016/s0141-9382(02)00038-0

Yaras, F., Kang, H., and Onural, L. (2010). State of the Art in Holographic Displays: a Survey. J. Display Technol. 6, 443–454. doi:10.1109/jdt.2010.2045734

Yoo, C., Bang, K., Chae, M., and Lee, B. (2020). Extended-viewing-angle Waveguide Near-Eye Display with a Polarization-dependent Steering Combiner. Opt. Lett. 45, 2870–2873. doi:10.1364/OL.391965

Yoo, C., Bang, K., Jang, C., Kim, D., Lee, C.-K., Sung, G., et al. (2019). Dual-focal Waveguide See-Through Near-Eye Display with Polarization-dependent Lenses. Opt. Lett. 44, 1920–1923. doi:10.1364/ol.44.001920

Yu, K., Ahn, J., Lee, J., Kim, M., and Han, J. (2020). Collaborative SLAM and AR-guided Navigation for Floor Layout Inspection. Vis. Comput. 36, 2051–2063. doi:10.1007/s00371-020-01911-8

Yu, N., Genevet, P., Kats, M. A., Aieta, F., Tetienne, J.-P., Capasso, F., et al. (2011). Light Propagation with Phase Discontinuities: Generalized Laws of Reflection and Refraction. Science 334, 333–337. doi:10.1126/science.1210713

Yuuki, A., Itoga, K., and Satake, T. (2012). A New Maxwellian View Display for Trouble-free Accommodation. Jnl Soc. Info Display 20, 581–588. doi:10.1002/jsid.122

Zabels, R. (2019). AR Displays: Next-Generation Technologies to Solve the Vergence-Accommodation Conflict. Appl. Sci. 9, 3147. doi:10.3390/app9153147

Zhan, T., Lee, Y.-H., Tan, G., Xiong, J., Yin, K., Gou, F., et al. (2019). Pancharatnam-Berry Optical Elements for Head-Up and Near-Eye Displays [Invited]. J. Opt. Soc. Am. B 36, D52–D65. doi:10.1364/JOSAB.36.000D52

Zhan, T., Yin, K., Xiong, J., He, Z., and Wu, S.-T. (2020). Augmented Reality and Virtual Reality Displays: Perspectives and Challenges. iScience 23, 101397. doi:10.1016/j.isci.2020.101397

Zhang, Y., and Fang, F. (2019). Development of Planar Diffractive Waveguides in Optical See-Through Head-Mounted Displays. Precision Eng. 60, 482–496. doi:10.1016/j.precisioneng.2019.09.009

Zhang, Y., Hu, X., Kiyokawa, K., Isoyama, N., Uchiyama, H., and Hua, H. (2021). Realizing Mutual Occlusion in a Wide Field-Of-View for Optical See-Through Augmented Reality Displays Based on a Paired-Ellipsoidal-Mirror Structure. Opt. Express 29, 42751–42761. doi:10.1364/OE.444904

Zschau, E., Missbach, R., Schwerdtner, A., and Stolle, H. (2010). “Generation, Encoding, and Presentation of Content on Holographic Displays in Real Time,” in Proc. SPIE 7690 on Three-Dimensional Imaging, Visualization, and Display 2010 and Display Technologies and Applications for Defense, Security, and Avionics IV, 76900E. doi:10.1117/12.851015

Keywords: near-eye display, head-mounted display, augmented reality, optical see-through, review

Citation: Xia X, Guan FY, Cai Y and Magnenat Thalmann N (2022) Challenges and Advancements for AR Optical See-Through Near-Eye Displays: A Review. Front. Virtual Real. 3:838237. doi: 10.3389/frvir.2022.838237

Received: 17 December 2021; Accepted: 15 February 2022;

Published: 14 March 2022.

Edited by:

Ruofei Du, Google, United States

Reviewed by:

Jae-Hyeung Park, Inha University, South Korea

Copyright © 2022 Xia, Guan, Cai and Magnenat Thalmann. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Frank Yunqing Guan, frank.guan@singaporetech.edu.sg
