- Spatial Media Group, University of Aizu, Aizu-Wakamatsu, Fukushima, Japan

The recent rise in popularity of head-mounted displays (HMDs) for immersion into virtual reality has resulted in demand for new ways to interact with virtual objects. Most solutions utilize generic controllers for interaction within virtual environments and provide limited haptic feedback. We describe the construction and implementation of an ambulatory (allowing walking) haptic feedback stylus with primary use in computer-aided design. Our stylus is a modified 3D Systems Touch force-feedback arm mounted on a wearable platform carried in front of a user. The wearable harness also holds a full-sized laptop, which drives the Meta Quest 2 HMD that is also worn by the user. This design provides six degrees-of-freedom without tethered limitations, while ensuring a high precision of force-feedback from virtual interaction. Our solution also provides an experience wherein a mobile user can explore different haptic feedback simulations and create, arrange, and deform general shapes.

1 Introduction

In this section, we examine current trends in extended reality (XR), identify aspects that are less developed than the rest of the paradigm, explain why their further development matters, and propose an alternative solution to the identified challenges.

1.1 Contemporary trends in virtual and augmented reality

The recent introduction of extended reality into other fields such as marketing, design, and retail is expected to cause a shift in dominance over a certain demographic (Bernard, 2020). The 2020 XR Industry Insight reported that 65% of AR development companies are working on industrial applications, while just 37% are working on consumer products (AREA: Augmented Reality for Enterprise Alliance, 2020). This should not be surprising, as virtualizing environments and scenarios that would otherwise be prohibitive, expensive, or dangerous has never been easier. All of these current and future trends are propelled by advancements in the hardware that XR relies on. Generally, devices are becoming smaller, lighter, more mobile, and more powerful. A trend that is greatly improving accessibility and general ergonomics is that devices are becoming self-contained and untethered. Meta Quest 2 has expanded the functionality of the original Oculus Quest HMD with several new features, the most notable being bare-hand tracking, wireless linkage with a PC, up to 120 Hz screen refresh rate, and tracking of objects (for example, a couch or desk) in the user space (Ben Lang, 2022).

1.2 Emerging aspects of virtual reality and haptic feedback

Much has changed in VR haptics since the release of the first Oculus Rift. One of the major innovations is the tracking of wireless controllers, which also include capacitive touch sensors to sense finger movement in the proximity of a physical button (Controllers - Valve Index, 2022). Upon a button press, visual stimulus in the form of a 3D finger is reinforced by the tactile feedback of a real button. If there is no virtual representation, immersion suffers because of sensory mismatch. Regarding haptic feedback from virtual objects, however, little has changed, since most controllers still use vibrations to provide feedback. There are various solutions to this sensory stimulus deficit, such as gloves, which let a user feel the hardness or even texture of a virtual object, or full-body suits that can be used for motion capture and haptic feedback (for example, to feel the impact of simulated bullets) (Natasha Mathur, 2018).

1.3 Importance of haptic feedback in immersive VR

One of the key aspects in immersive VR is a sense of presence, i.e., the subjective perception of truly being in an artificially created virtual environment (Burdea, 1996; Rosenberg, 1997). When people are presented with a realistic environment in VR they can suspend disbelief to admit the illusion of reality. However, when one tries to haptically interact with the environment, the illusion is broken due to lack of coherent sensory feedback. This problem can be remedied by the use of passive haptics, props placed in a real space in which the user experiences the virtual environment. However, such a solution is highly impractical, as it requires real space to be tailored to a virtual environment and vice versa. Another type of solution called active haptics uses robotics, which overcomes the flexibility issue of props. Such dynamic props could introduce significant latency, which is potentially hazardous to a gamer (Azmandian et al., 2016; Suzuki et al., 2020). However, as described in Kreimeier et al. (2019) and other articles referenced therein, “haptic feedback was shown to improve interaction, spatial guidance and learning in VR environments, and substitute multimodal sensory feedback can enhance overall task performance as well as the perceived sense of presence.” Other studies have confirmed that even the simpler and already widely used vibrotactile feedback in many cases enhances presence in virtual environments (Kang and Lee, 2018; Kreimeier and Götzelmann, 2018). Unfortunately, current systems do not provide high-quality force or tactile feedback (Dangxiao et al., 2019). Providing a relevant and believable combination of small-scale haptic feedback, such as texture simulation and medium-scale modest force-feedback, is challenging in various aspects, including form-factor, ease of deployment, and precision (Choi et al., 2017). 
These challenges are especially apparent in solutions that encapsulate this combination of features in a wearable package and immerse the user in the virtual environment via VR. In conclusion, haptic feedback and stimulation of senses other than sight and hearing can greatly affect the quality of immersion in VR. Overall, the richer the variety of sensory feedback, the deeper the immersion (Slater, 2009; Wang et al., 2019).

1.4 Recent inventions providing haptic feedback in virtual environments (VE)

Even though practical implementation of haptic feedback-providing devices is difficult and often makes commercial deployment nearly impossible, researchers are developing solutions that drive advancement towards future generations of technologies in this field. In the following section, several devices are briefly described to show how some of the above-mentioned challenges have been recently addressed.

1.4.1 2018—CLAW: A multifunctional handheld VR haptic controller

CLAW is a virtual reality controller that augments typical controller functionality with force-feedback and actuated movement to the index finger. It focuses on providing feedback for three distinct interactions: touching, grasping, and triggering. For touching, it renders textures on the fingertip through vibration, whereas for grasping and triggering it uses a servomotor (Choi et al., 2018).

1.4.2 2018—Haptic Revolver: Touch, shear, texture, and shape rendering on a VR controller

The Haptic Revolver is feature-wise similar to the CLAW but achieves shared functions differently. The haptic element is an actuated wheel that raises and lowers beneath one’s finger to render contact with a virtual surface. As a user’s finger moves along the surface of an object, the controller spins the wheel to render shear forces and motion under the fingertip. The biggest difference from the CLAW is that the wheel is user-interchangeable and can provide different textures, shapes, edges, and active elements (Whitmire et al., 2018).

1.4.3 2018—Wearable fingertip haptic device for remote palpation: Characterization and interface with a virtual environment

The wearable fingertip haptic device consists of two main subsystems, which enable simulation of the presence of a virtual object or surface stiffness. One of the subsystems comprises an inertial measurement unit that tracks the motion of the user’s finger and controls linear displacement of a pad towards the fingertip. The other subsystem controls pressure in a variable compliance platform through a motorized syringe to simulate the stiffness of a touched surface (Tzemanaki et al., 2018).

1.4.4 2020—Wireality: Enabling complex tangible geometries in virtual reality with worn multi-string haptics

Wireality is one of the latest devices intended to address many of the aforementioned challenges. It is a self-contained worn system that allows individual joints on the hands to be accurately arrested in 3D space by retractable wires that can be programmatically locked. This permits convincing tangible interactions with complex geometries such as wrapping fingers around a railing. The device is not only lightweight, comfortable, and high-strength, but also affordable, as it was developed under a strictly constrained production cost of $50 USD (Fang et al., 2020).

1.4.5 2022—QuadStretch: A forearm-wearable multi-dimensional skin stretch display for immersive VR haptic feedback

QuadStretch is a recently introduced multi-dimensional skin stretch display worn on the forearm for VR interaction. The device is small, lightweight, and utilizes counter-stretching that moves a pair of tactors in opposite directions to stretch the user’s skin by securing the tactors’ skin surface with an elastic band. Various VR interaction scenarios showcase QuadStretch’s characteristics, structured to highlight intensity (Boxing & Pistol), passive tension and spatial multi-dimensionality (Archery & Slingshot), and continuity in complex movements (Wings & Climbing) (Shim et al., 2022).

None of the solutions described above allow truly free-range mobility for haptic interfaces with force-feedback based on positional displacement and unconstrained movement through virtual environments. We developed hardware (using a modified 3D Systems Touch haptic device) and software (using the Unity game engine and low-level device drivers) that allow medium-scale force display in mobile VR applications.

2 Materials and methods

2.1 Problem description

A previously referenced article (Dangxiao et al., 2019) outlined the expected medium-term evolution of human-computer interaction. Of the various devices it presented, we are particularly interested in force-feedback haptic styluses with six degrees-of-freedom (DoF), such as those made by 3D Systems (3D System, 2022). These are mainly used for 3D modeling and sculpting and are intended for desktop use. They provide excellent tracking precision and have wide support among major 3D design tools. Compared with other wearable force-feedback solutions, such as VRgluv (VRgluv, 2021), TactGlove (Polly allcock, 2021), SenseGlove Nova (Clark Estes, 2021), Power Glove (Scott Stein, 2022), the above-mentioned CLAW (Section 1.4.1) (Choi et al., 2018), Haptic Revolver (Section 1.4.2) (Whitmire et al., 2018), or the Wearable Fingertip Haptic Device for Remote Palpation (Section 1.4.3) (Tzemanaki et al., 2018), a main advantage of stylus-based interfaces is that they are grounded and can provide force-feedback in space. As a user’s hand holds a stylus used to touch a virtual surface, their arm movement is constrained. This is not possible when using the compared devices, which enable the user to feel the size, shape, stiffness, and motion of virtual objects, but not positional translation. A device that is comparable in functionality to the Touch device, Wireality (Section 1.4.4), was released in 2020. It effectively addresses many challenges mentioned in Section 1.3. However, in the use case of 3D modeling and sculpting, its precision might not be sufficient, and neither texture simulation nor vibrotactile feedback is supported.

2.2 Design and implementation overview

Therefore, we set out to convert a desktop-targeted stylus into a wearable haptic interface while maintaining untethered six degrees-of-freedom in VR. This introduces several challenges, such as the ergonomics of a wearable harness, weight, and limitations of the haptic device itself.

2.2.1 Hardware

2.2.1.1 Harness

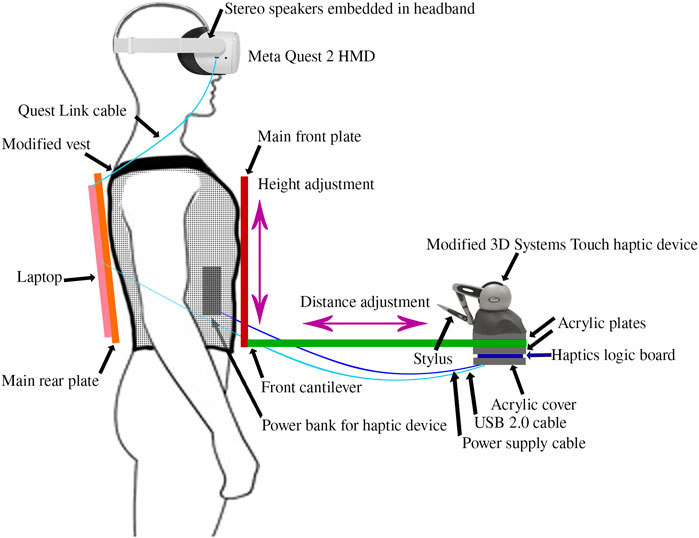

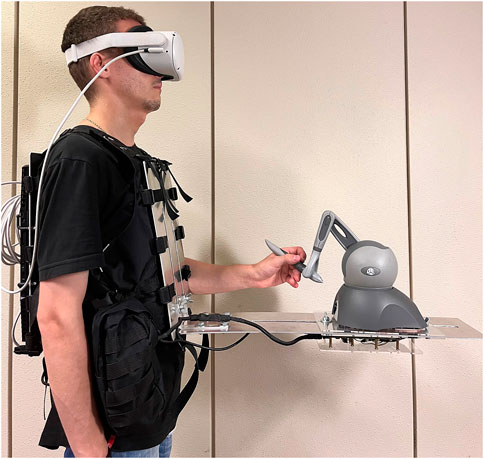

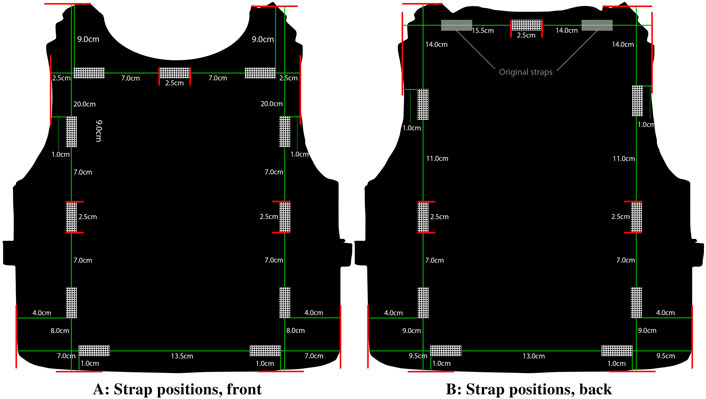

After considering and rejecting over-the-shoulder arrangements, we decided to create an adjustable platform on which the stylus base is mounted in front of the user. The crucial element is a vest worn by the user; it must be strong enough to support the weight of all of the hardware while remaining comfortable and not constraining natural movement. A tactical vest originally intended for survival games is a versatile option. The vest was modified to accommodate mounting clips for two sheets of 3 mm-thick aluminum, one on the front and one on the back, as shown in Figure 1.

FIGURE 1. Tactical vest customized for supporting the force-feedback device platform: strap positions in front (A) and back (B)

2.2.1.2 Front and rear plating

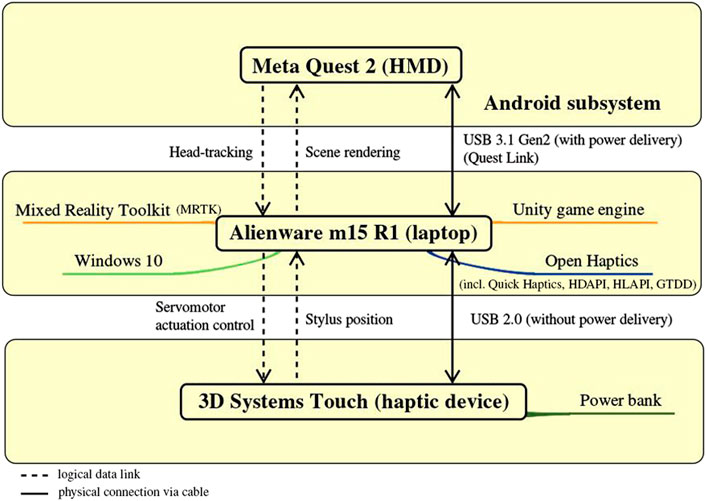

Use of a different type of material for the plating, such as acrylic or thinner steel, was considered. However, these materials are either light and flexible or heavy and rigid, and neither combination suits this use case. The properties of aluminum lie between these two extremes, so it was considered appropriate. The back aluminum plate is used to mount a full-size 15.6″ laptop, which drives a Meta Quest 2 HMD in so-called ‘Quest Link’ mode. This ensures optimal performance, as the Quest 2 is based on an Android platform with performance-constraining hardware. Open Haptics, a software development kit (SDK) for 3D Systems devices, is not supported on Android devices, so the haptic device must be separately driven.

2.2.1.3 3D Systems Touch platform

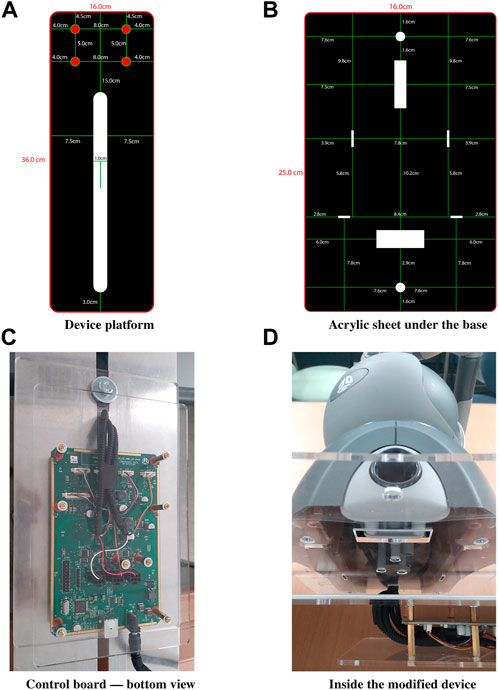

Another 3 mm-thick plate is attached perpendicularly to the aforementioned front aluminum plate and serves as the base for the stylus assembly, forming the cantilever of the wearable device. A channel cut along the middle of its length allows the stylus to be moved closer to or farther from the user’s torso; like the adjustable front plate, this accommodates users of different heights and arm lengths. The stylus device itself is mounted on a sheet of laser-cut 3 mm-thick acrylic to provide insulation between the aluminum and the electronic parts of the assembly, as shown in Figure 2. However, the stylus assembly also had to be modified for this application.

FIGURE 2. Force-feedback device modifications: (A) device platform; (B) acrylic sheet under the base; (C) control board—bottom view; and (D) inside the modified device

2.2.1.4 Stylus assembly modifications

Originally, the base of the Touch stylus contained weights to prevent it from tipping during desktop use. These weights were removed as the cantilever provides secure enough mounting for the user to perceive no vertical flexing of the shelf and almost no horizontal flex. Further adjustment included moving the Touch main board from the bottom of the stylus’s base assembly to the bottom of the shelf while preserving the adjustment feature. These (warranty-voiding) modifications are shown in Figure 2.

2.2.1.5 Power delivery

The wearable assembly consists of three main devices, so appropriate power delivery had to be arranged for each. The power supplies for the HMD and laptop were used as-is, since both devices have internal batteries. However, power for the stylus servomotors is supplied from an external power bank strapped to the user’s waist. A schematic overview of this system is shown in Figure 3 and its physical implementation in Figure 4.

2.2.2 Software

The implemented software is divided into two main subsystems, as shown in Figure 5 and described below.

2.2.2.1 Virtual reality

An immersive environment is streamed from the laptop, mounted on the vest’s rear, to the HMD. As previously mentioned, the Meta Quest 2 is a stand-alone Android-based device. However, incompatibility with the Open Haptics SDK requires the Quest to run in Link mode, which downgrades the device into a tethered HMD. The main difference from some other HMDs is that the Quest 2 utilizes so-called inside-out tracking, which obviates the need for stationary (“Lighthouse”-like) sensors in the user space, as would be required with the HTC Vive or Oculus Rift. The VR environment is implemented in the Unity game engine using a combination of the Oculus XR plugin and the Mixed Reality Toolkit (MRTK). The Oculus XR plugin handles lower-level necessities, such as stereoscopic rendering for the HMD, the Quest Link feature (which essentially converts the Quest into a thin client of the PC), and input subsystems, which provide controller support and HMD tracking (Oculus XR Plugin, 2020). The MRTK encapsulates those low-level features and extends them with hand-tracking and other features such as gesture-operated teleport, a ray-cast reticle operator, and physics-enabled hand models (Mixed Reality ToolKit, 2022).

2.2.2.2 Haptic force-feedback

The 3D Systems stylus is controlled through the Open Haptics for Unity plugin, which enables integration of different 3D haptic interactions in Unity (OpenHaptics, 2018a). The overall package consists of the Quick Haptics micro API, Haptic Device API (HDAPI), Haptic Library API (HLAPI), Geomagic Touch Device Drivers (GTDD), and additional utilities (OpenHaptics, 2018a; OpenHaptics, 2018b).

The structure of the Open Haptics plugin for Unity differs from the native edition. Rather than using Quick Haptics to set up haptics/graphics, this package uses the OHToUnityBridge DLL to bridge calls between the Unity controller, the HapticPlugin script written in C#, and HD/HL APIs written in the C programming language. By examining the dependencies of OHToUnityBridge.dll, we determined that this library does not leverage Quick Haptics, but rather directly invokes the HD, HL, and OpenGL libraries.

2.2.3 Prototype description

The following describes the four main sections of the engineered prototype as a proof-of-concept.

2.2.3.1 Scene loader

The “scene loader” Unity scene is not explicitly exposed to the user as a selectable scene but rather serves as a container, not just for objects of the sub-scenes, but also as an encapsulator for the application scene management system.

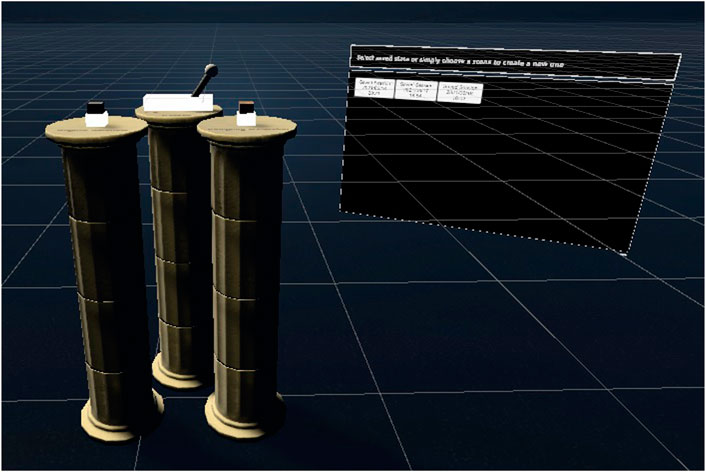

2.2.3.2 Scene selector

As portrayed in Figure 6, after the demo application is launched, the first (“splash”) scene, Scene Selector, is loaded additively into the Scene Loader. This scene contains selectors to gather information about the intended use of the main sub-experiences. The user is presented with two pillars topped with buttons, a lever, and a canvas containing touchable User Interface (UI) buttons. The lever is used to indicate the chirality (handedness) of the user, i.e., which of their hands is dominant and therefore assumed to be used with the haptic stylus. The canvas is populated with buttons that each represent a previously saved state of a sculpting/carving session. When the user ends a session, its state is saved and can be reloaded so that exploration can be resumed later. The left button, labeled “Haptic Sandbox,” is used to load one of the sub-experiences, and the button labeled “Sculpting & Carving” is used to load the other, whose purpose is self-explanatory but is described below in Section 2.2.3.4. In this scene, the user relies on hand-tracking and gestures as well as physics to interact with the environment. It is also possible to teleport within the play area using a palm-up “put-myself-there” gesture.
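
The relationship between the persistent Scene Loader and the sub-scenes loaded on top of it can be sketched as a small state model. This is only an illustration in Python rather than Unity C#; the class name, method names, and scene identifiers are hypothetical, and the assumption that only one sub-scene is active at a time is ours.

```python
class SceneManagerSketch:
    """Toy model of additive scene loading (hypothetical, not the Unity API).

    One persistent container scene stays loaded for the whole session and
    at most one sub-scene is layered on top of it.
    """

    def __init__(self, persistent="SceneLoader"):
        self.persistent = persistent
        self.active_sub_scene = None
        self.handedness = "right"  # would be set by the lever in the selector

    def load_additive(self, name):
        """Swap the active sub-scene and return the one that was unloaded."""
        previous = self.active_sub_scene
        self.active_sub_scene = name
        return previous

    def loaded_scenes(self):
        """List every scene currently loaded, container first."""
        scenes = [self.persistent]
        if self.active_sub_scene is not None:
            scenes.append(self.active_sub_scene)
        return scenes
```

Keeping user choices such as handedness on the container object means they survive a switch between sub-experiences, which is one plausible reason for using a loader scene as an encapsulator.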

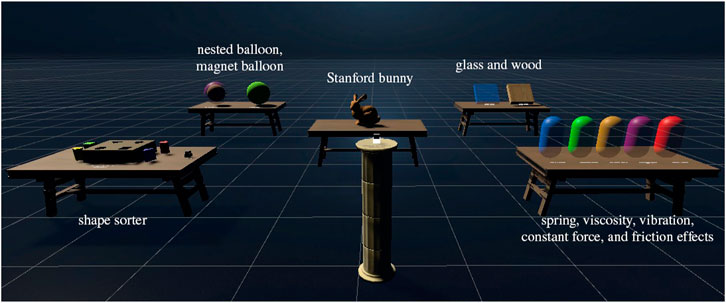

2.2.3.3 Haptics sandbox

As seen in Figure 7, the “Haptics Sandbox” presents the user with several haptic simulations. Five different stages demonstrate various capabilities of the haptic mechanism. The first is a block shape-sorting experience. A user can teleport to an area in front of a table with an assortment of shapes, which can be inserted into respective cutouts in a pre-cut square block. Each block is a different shape and has only one correct cut-out, like the children’s toy upon which this stage was based. When one is comfortably positioned in front of the desk, the physical haptic stylus can be grasped and is represented by an approximately congruent shape inside the virtual space. Real physical movement is then projected onto the virtual space. If the user touches a ‘touchable’ object in the virtual space, the haptic device responds by mechanically locking or constraining movement and rotation, which simulates contact with a physical object. Furthermore, blocks can be picked up by pressing a button on the stylus and grabbing and then lifting them. The simulation of weight and mass of a virtual object is achieved by the ability of the haptic plugin to translate physics properties of Unity materials into parameters that are processed by the haptics engine and projected to signals controlling the servomotors inside the assembly.
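
The contact and weight rendering described above can be illustrated with a minimal penalty-based sketch. This is a common textbook approach and not necessarily what the Open Haptics engine does internally; the function names, stiffness value, and force cap are illustrative assumptions.

```python
def contact_force(tip_height_m, stiffness_n_per_m=500.0, max_force_n=3.0):
    """Upward force (N) pushing the tip out of a horizontal surface at height 0.

    Penalty-based rendering: force grows linearly with penetration depth and
    is clamped to a cap. All default values are hypothetical.
    """
    penetration = max(0.0, -tip_height_m)  # zero while the tip is above the surface
    return min(stiffness_n_per_m * penetration, max_force_n)


def held_weight_force(mass_kg, gravity=9.81):
    """Downward force (N) to render while the user carries a virtual object."""
    return mass_kg * gravity
```

With these assumed values, a tip 2 mm below the surface is pushed back with about 1 N, and a 0.1 kg block pulls the stylus down with about 0.98 N while it is held.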

Another stage consists of two balloon-like sculptures. One consists of outer and inner spheres. The outer layer models a relatively weak material, which can be poked through with a certain amount of force. This sensation is best described as popping a balloon with a pin. Once the outer layer is poked through, the pen lands on a solid, impenetrable sphere whose shape can be felt across its surface. To pull the pen back out through the outer layer, the user must exert the same amount of force as when popping in. The other sculpture, rather than repulsively resisting one’s touch, attracts the stylus to its surface and constrains movement of the stylus to its shape. This stimulus can be best described as dragging a magnetic stick over a metallic surface. It is quite effortless to slide the stick across the surface, but to pull off, a certain amount of force must be applied to overpower the “stickiness.” If that force overpowers the attractive virtual-magnetic force, the stylus breaks free from the spherical shape.
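
The symmetric pop-in and pop-out behavior of the outer layer can be modeled as a two-state spring with a force threshold. The sketch below works along a single axis and is our own simplification; the class name, stiffness, and threshold are hypothetical and do not come from the haptic plugin.

```python
class PopThroughLayer:
    """1D model of a thin layer that yields once a force threshold is reached."""

    def __init__(self, stiffness=300.0, pop_force=1.5):
        self.stiffness = stiffness  # N/m, hypothetical
        self.pop_force = pop_force  # N, same threshold in both directions
        self.inside = False         # which side of the layer the tip is on

    def update(self, depth_m):
        """Return the force (N) on the tip; positive pushes outward.

        depth_m > 0 means the tip is displaced inward past the layer.
        """
        if not self.inside:
            force = self.stiffness * max(0.0, depth_m)
            if force >= self.pop_force:   # the layer gives way: pop in
                self.inside = True
                return 0.0
            return force
        force = self.stiffness * max(0.0, -depth_m)
        if force >= self.pop_force:       # the layer gives way: pop out
            self.inside = False
            return 0.0
        return -force
```

Because a single threshold is used for both directions, leaving the layer requires the same effort as entering it, which matches the sensation described above.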

On the table of the next stage, the user is presented with two boards angled at 45° and made of two different virtual materials that simulate glass and wood. This experience showcases the ability of tactile haptics to simulate textures and smoothness. Because glass is commonly understood to be smoother than a raw wood plank, we chose to compare these two materials. Again, a user can teleport to the desk area and use the stylus to touch the two boards.

Lastly, a table with five differently colored capsules is presented to the user. Each capsule represents a different tactile effect. It is possible to experience elastic spring, viscosity, vibration, constant force, and friction effects.
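
For readers unfamiliar with these effect types, the following sketch gives one-dimensional textbook force laws for each of them. These are generic models under our own assumptions, not the formulas used by the haptics engine; every function name and coefficient is illustrative.

```python
import math


def spring(position, anchor=0.0, k=100.0):
    """Elastic spring: pulls the tip back toward an anchor point."""
    return -k * (position - anchor)


def viscous(velocity, b=2.0):
    """Viscosity: resists motion in proportion to speed."""
    return -b * velocity


def vibration(time_s, amplitude=0.5, freq_hz=50.0):
    """Vibration: sinusoidal force over time."""
    return amplitude * math.sin(2.0 * math.pi * freq_hz * time_s)


def constant(magnitude=1.0):
    """Constant force: same push regardless of position or speed."""
    return magnitude


def friction(velocity, magnitude=0.3, eps=1e-6):
    """Coulomb-style friction: fixed magnitude opposing the direction of motion."""
    if abs(velocity) < eps:
        return 0.0
    return -math.copysign(magnitude, velocity)
```

The main perceptual difference between the viscous and friction models is that the former vanishes smoothly as the stylus slows down, whereas the latter keeps a fixed magnitude until motion stops.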

As a bonus feature, the user can also palpate (feel the shape of) the Stanford bunny, which is often used for benchmarking 3D software due to the representative nature of its model.

2.2.3.4 Sculpting and carving

The second space that the user can enter is the “Sculpting and Carving” scene. One can either first select a saved instance of the scene on the canvas and then load this saved state by pressing the physics-reactive button, or, if no saved instance is selected and the button is pushed, start the simulation in a fresh state with no model. Upon loading, the user is presented with nothing besides a floating panel and an empty plane, alongside a means of returning to the scene selection in the form of the previously used button pillar. The floating panel consists of four buttons, each of which instantiates one of four basic shapes: a cube, cylinder, sphere, or plane.

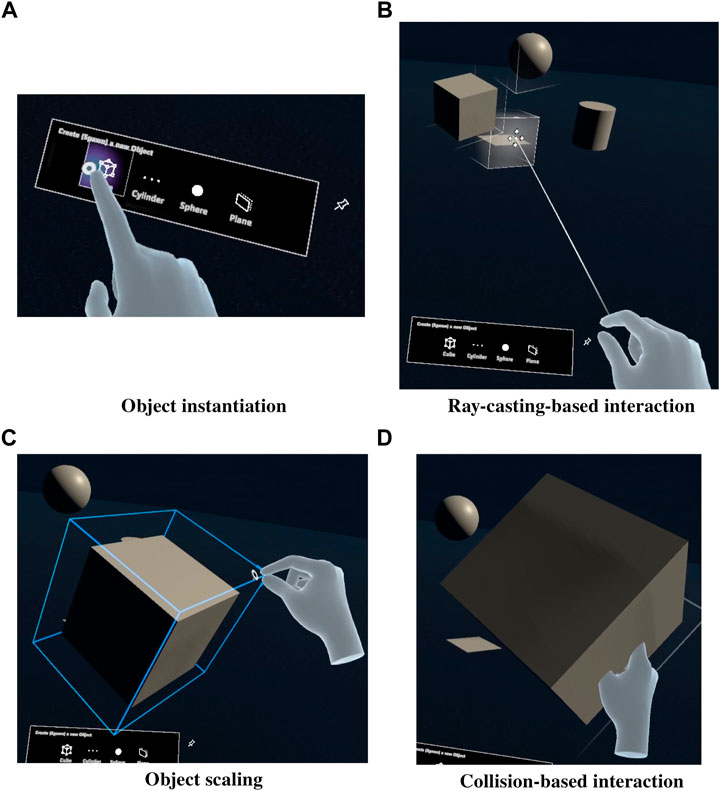

Each of these shapes can be transformed in scale and orientation using bimanual manipulation interpreted by the MRTK, as mentioned earlier in Section 2.2.2.1. These actions can be performed through ray-casting, where the user aligns a reticle over the object and then grabs either a corner for scale manipulation or the center of an edge for rotation on the horizontal or vertical axes. It is also possible to grab an object by selecting the middle of a face and translating the object positionally. This form of interaction is usually used if the object is so distant that it cannot be grabbed directly by hand. However, if the object is in close proximity, it is also possible to use collision-based interaction, such as grabbing or rotating by grasping with one’s hand while it intersects with the object. These interactions are shown in Figure 8.

FIGURE 8. Immersive haptic modeling: (A) object instantiation; (B) ray-casting-based interaction; (C) object scaling; and (D) collision-based interaction
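
The mapping from grab location to manipulation type (corner for scaling, edge for rotation, face for translation) can be sketched as a simple classifier. This is our own simplification and not the MRTK implementation; the function name, the normalized box coordinates, and the tolerance are assumptions.

```python
def classify_handle(local_point, tol=0.15):
    """Classify a grab point given in box-local coordinates within [-1, 1]^3.

    The number of coordinates lying on the box boundary decides the action:
    three means a corner, two an edge, and one a face.
    """
    on_boundary = sum(1 for c in local_point if abs(abs(c) - 1.0) <= tol)
    return {3: "scale", 2: "rotate", 1: "translate"}.get(on_boundary, "none")
```

For example, `classify_handle((1.0, 1.0, 1.0))` yields `"scale"`, whereas a point in the middle of the top face, `(0.0, 1.0, 0.0)`, yields `"translate"`.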

Every object is also touchable, and each object’s shape can be traced by the haptic stylus. However, the user must be in close proximity to the object, as the mechanical limits of the stylus’s arm do not allow positional translation over great distances.

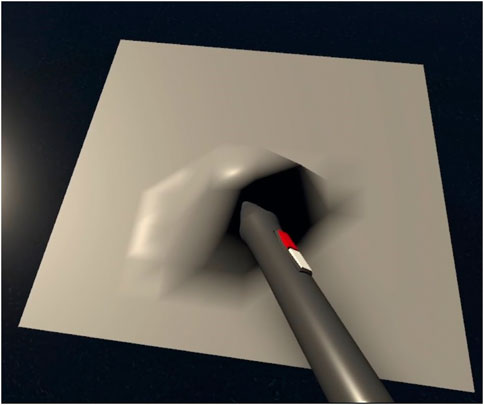

Furthermore, the aforementioned plane positioned in front of the pillar can be shaped using the haptic pen. When the user teleports close to the plane, it is possible to place the stylus on top of this plane, press the primary selection button, and shape its surface, as seen in Figure 9.
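
One simple way to think about shaping a plane is as a height field that a brush pushes down with a smooth falloff. The sketch below illustrates that idea under our own assumptions; the prototype's actual mesh deformation method is not specified here, and the function name, grid representation, and cosine falloff are hypothetical.

```python
import math


def deform(heights, cx, cy, depth, radius):
    """Lower a 2D grid of heights in place around cell (cx, cy).

    A cosine falloff gives full depth at the brush center and fades to zero
    at the brush radius, so the dent has no sharp rim.
    """
    for y, row in enumerate(heights):
        for x in range(len(row)):
            distance = math.hypot(x - cx, y - cy)
            if distance < radius:
                weight = 0.5 * (1.0 + math.cos(math.pi * distance / radius))
                row[x] -= depth * weight
    return heights
```

Calling `deform` repeatedly while the stylus button is held, with the brush center following the tip, would accumulate into a carved groove.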

3 Validation

3.1 Immersivity comparison—pilot experiment

In the following, we describe the two conditions under which haptic feedback was presented to participants in a subjective experiment. The experiment was intended to discover deficiencies in the current implementation and to test whether perceived immersion is improved by the ambulatory design.

3.1.1 Conditions: Seated (with normal monitor) and mobile VR (with HMD)

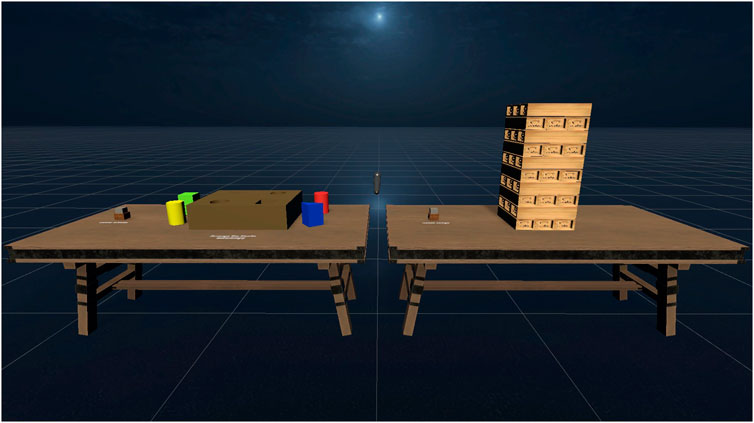

The baseline condition consisted of a haptic device plugged into a standard desktop computer with a monitor, which the user sits in front of. The seated experience was a game of Jenga wherein the player can pick up and remove small blocks. These were also physics-affected objects, so if a user pushes the virtual stack of blocks with the stylus, it reacts accordingly (by shifting or toppling).

The room-scale condition involved outfitting each experimental participant in our harness and, with our guidance, letting them explore the immersive experience and all of its segments, as described in Section 2.2.3.

3.1.2 Procedure and controls

For the baseline condition, subjects were first introduced to the haptic device, its controls, and expected behavior, followed by a demo of a game of Jenga. There was no time limit or quantitative objective for this task. Rather, it allowed each subject to become familiar with the concept of using haptic feedback devices in a desktop setting.

In the room-scale condition, testers were first introduced to the combination of the VR headset and the force-feedback stylus they had used in the previous segment. Then, they were guided through the haptic sandbox with a short introduction of each segment and its expected simulated stimulus. Similarly, after transitioning to the sculpting and carving scene, subjects were given instructions about controls and capabilities and allowed to freely explore its features. There was no time limit, quantitative objective, or goal for this task.

Throughout the experiment, each participant performed the desktop experience on the same computer, with the same monitor, and in the same seating position. Additionally, in the room-scale condition, each tester wore the same harness, laptop, and HMD, and could explore the same sections of the haptic sandbox.

3.1.3 Participants

In total, eight people participated in the pilot experiment (aged 19–35; six male and two female). The participants’ experience with VR varied from having no experience at all to owning a headset and occasionally using it. This allowed us to observe the intuitiveness of the experience and test whether inexperienced users required more guidance than experienced users. User experience with haptic devices varied, but the observed trend was towards no experience at all or having tried them only a few times. Overall, the need for guidance was roughly the same across participants, owing to the novelty of the featured system. This indicates that the need for guidance regarding operation of the haptic stylus was greater than for operation of the headset alone. However, once basic interaction was explained to the participants, we noted no further need for guidance.

3.1.4 Data acquisition and composition

After experiencing both set-ups, participants were asked to complete a questionnaire, which elicited impressions expressed as agreement with positive claims on a quantified Likert scale. Additionally, participants were asked to answer a couple of questions about their previous experience in using haptic devices, virtual reality, and CAD software.

3.1.5 Results

The impression of immersiveness of the desktop experience was quite spread across the Likert scale, but we were still able to conclude that the users had neutral or slightly positive feelings of immersion while playing the simulated Jenga game.

Subjects had a mixed response regarding the comfort of the wearable harness. Most of them expressed concern about the weight, and many indicated a time limit of comfortable wearability of 15 min to about half an hour. Furthermore, female participants noted that the harness needs to be softer in the front, by possibly adding more padding between the vest and the aluminum plating. Overall, the response was more neutral than negative.

We were generally pleased to confirm that the simulation of tactile feeling was regarded as close to a “real” feeling. Most of the users agreed that the simulation was comparable with a natural touch sensation. However, some users expressed feelings of inadequate intensity and reported that some effects were not strong enough to be comparable with ordinary feeling.

When we queried the participants about the immersiveness of the VR mode, we received strong positive feedback. Even though the harness was heavy and at times even uncomfortable, it is reassuring to confirm that it did not break the sensation of immersion. Also, comparing responses regarding its form-factor immersiveness to that of the desktop mode, we can clearly determine that the pairing of virtual reality and haptic force-feedback contributes to overall immersion.

Most users described the combination of inputs as usable but somewhat tricky. Rotational reset was implemented to give users a way to recover from avatar misalignment with the stylus after teleportation. Many subjects noted that congruence of the virtual stylus with its real affordance sometimes drifts and that the rotational reset function cannot be fully relied upon. Furthermore, the hardware limitations of the stylus and inadequate intensity of haptic effects contributed to no-one choosing ‘Natural’ as their impression. Hand-tracking was overall regarded as quite accurate, but switching between the haptic stylus and using one’s dominant hand again required a user to hide their hand and then look at it again to reload the hand tracking mode. This awkwardness was mostly shrugged off as just something one ‘has to get used to,’ but will be addressed in the future.

All participants concurred that this type of device arrangement can be used for CAD. When participants rated their overall experience on a scale from 1 to 10, we received quite positive feedback. The quality of our proof of concept averaged a score of 8.5/10. Any score lower than 5 would be considered unsuccessful, but even though we can claim “success,” there is plenty of room for improvement. Overall, we gained valuable feedback in the form of complaints, compliments, and suggestions for future refinement and expansion.

3.2 Immersivity comparison—performance experiment

In a second experiment, we were not interested in characterizing absolute performance, for instance by modeling such performance with Fitts’s law, but rather in confirming that the ambulatory performance could be at least as good as that measured under the fixed condition. This experiment was conducted several months after the previous one. The hardware was the same as in the previous experiment, and no subjects participated in both experiments.

3.2.1 Conditions: Seated (with normal monitor) and mobile VR (with HMD)

The baseline condition is almost identical to that described in Section 3.1.1 but with the addition of the block sorting game introduced in Section 2.2.3.3. However, the Unity application was re-implemented to precisely match that of the room-scale condition. The composition of this scene is depicted in Figure 10.

Similar to that described in Section 3.1.1, the room-scale set-up involved outfitting each experimental participant with our harness, but instead of being left to explore the features independently, participants were instructed to complete a set of tasks as described in the following section.

3.2.2 Procedure and controls

For the game of Jenga, subjects were first instructed to remove as many blocks as possible from the tower without toppling it within a 4 min time limit. If they toppled the tower, then they could reset and start over. Only the highest number of removed blocks from any number of runs was recorded, as well as the number of resets.

Similarly, for the shape-sorting game, the testers were given a 4 min time limit to successfully sort the full range of shapes. Only if all shapes were successfully sorted was the score incremented. This was to prevent players from sorting only the easier shapes from the lot.
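The scoring rules for the two games can be summarized in a minimal sketch. The function names `jenga_scores` and `sorter_score` and the input layout are hypothetical, chosen only for illustration; they are not taken from the study's software.

```python
from typing import Dict, List, Sequence


def jenga_scores(removed_per_run: Sequence[int], resets: int) -> Dict[str, int]:
    """Summarize one timed Jenga session (hypothetical helper).

    removed_per_run: blocks removed in each run of the session.
    resets: number of times the tower toppled and was reset.
    """
    return {
        # Only the best run of the session is recorded.
        "max_removed": max(removed_per_run, default=0),
        "resets": resets,
        # Every reset starts one more trial.
        "trials": resets + 1,
    }


def sorter_score(boards: List[List[bool]]) -> int:
    """Count boards on which every shape was sorted (hypothetical helper).

    Each board is a list of flags, one per shape; a partially
    sorted board does not increment the score.
    """
    return sum(1 for board in boards if board and all(board))


if __name__ == "__main__":
    print(jenga_scores([5, 9, 7], resets=2))
    print(sorter_score([[True, True, True], [True, False, True]]))
```

Counting only fully sorted boards reflects the stated intent of preventing players from sorting only the easier shapes.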

Furthermore, subjects were split into two groups: those who partook only in the ambulatory segment and those who partook only in the desktop experience. This was to avoid learning effects, that is, results favoring either condition because of a tester’s increased experience over the course of the experiment.

The measured segment took approximately 10 min, not including introduction of this experiment to each subject, questionnaire answering, and a warm-up session. The warm-up session took approximately 2 min for each segment (4 min in total) and answering the questionnaire took up to 10 min; the overall experiment lasted from 20 to 30 min per tester.

Each participant in this experiment performed the desktop segment on the same computer, same monitor, and in the same seating position (only seat height was adjusted to position the monitor at the tester’s eye level).

The ambulatory segment contained a 4 min warm-up session during which we mostly focused on adjusting the harness to avoid any discomfort that could affect results. During this session, the subject could also become familiar with the new interface.

3.2.3 Participants

Eight adults volunteered to participate in the ambulatory experiment. 37.5% were between 18 and 25 years of age while the remaining 62.5% were between 26 and 35; 75% were male and 25% were female. Eight adults also partook in the desktop version of this experiment, of which 87.5% were between 18 and 25 years old and 12.5% were between 26 and 35 years old; 62.5% were male and 37.5% were female. The subjects were compensated for their participation (¥1,000, approximately $8, for a half-hour session). All subjects were right-handed and had a background in Computer Science or Software Engineering such that they were well versed in standard human-computer interaction. However, some subjects had no previous experience with VR. They were introduced to the basic concepts and usage of VR by walking in Meta Home, and they were shown how to enable the pass-through “Chaperone” functionality of the Quest 2 HMD (revealing the camera-captured physical environment with video see-through), which reassured them that there was only a slim chance of accidental collision with real objects.

3.2.4 Data acquisition and composition

Measured data were collected by the supervisor via observation, using a stopwatch and Google Forms to record the scores. Furthermore, after each measured segment, each subject was asked to answer a User Experience Questionnaire (UEQ) comprising 26 pairs of bipolar extremes, such as “complicated/easy” and “inventive/conventional,” evaluating User Experience (UE) by indication on a quantized Likert scale from 1 to 7 (Schrepp et al., 2017a; Schrepp et al., 2017b). Additionally, subjects were asked to answer 36 questions picked from the multi-dimensional scale Intrinsic Motivation Inventory (IMI) to assess subjective experience. IMI statements, such as “I was pretty skilled at this activity” and “This activity was fun to do,” were contradicted or confirmed by indicating agreement between “not true at all” and “very true” (inclusively) on a 7-step scale (McAuley et al., 1989).

3.2.5 Results

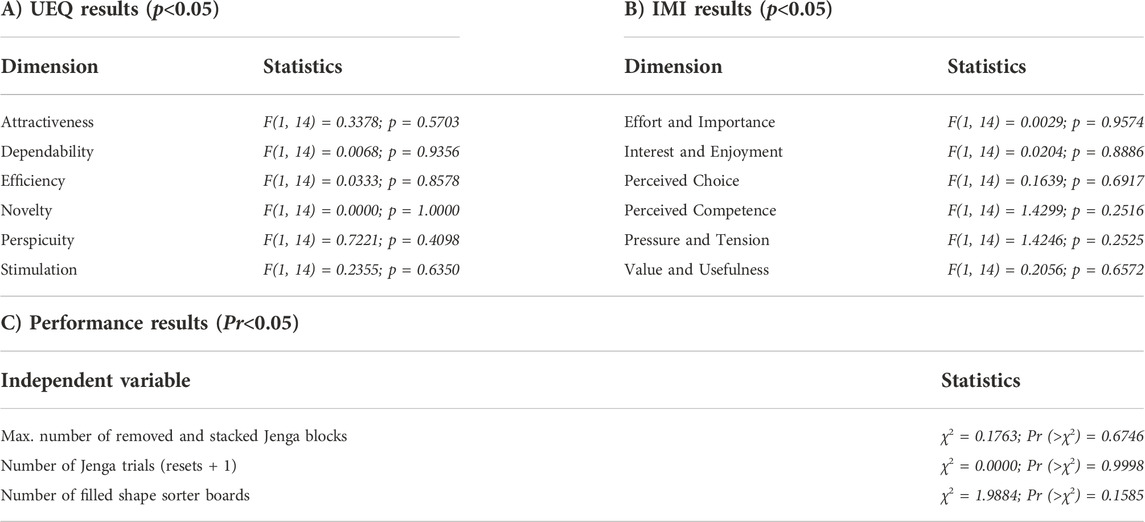

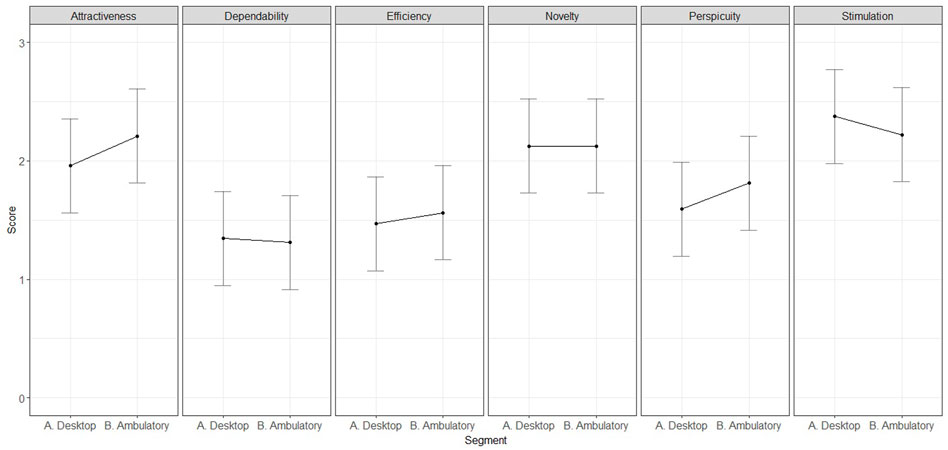

The results from the UEQ were compiled into six different dimensions (Attractiveness, Dependability, Efficiency, Novelty, Perspicuity, and Stimulation) derived from a zero-centered 7-point scale (−3 to +3). They indicate that there were no significant differences between the desktop and ambulatory editions of our application. The ANOVA was performed with the ‘ez’ library in R (R Core Team, 2018; Lawrence, 2016). However, the UEQ also provides benchmarking of results against data from 21,175 persons from 468 studies concerning different products (business software, web pages, web shops, and social networks). In this regard, the desktop segment rated slightly worse than the ambulatory. These results are presented in Table 1 and Figure 11.
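As an illustration of how answers on the 1 to 7 scale relate to the zero-centered scale and to 95% error bars, the following minimal sketch may help. It assumes a Student-t interval for a group of eight subjects; the source does not state which interval formula was used. The names `rescale`, `mean_with_ci`, and `T_CRIT_95_DF7` are hypothetical, and the item polarity flag stands in for the questionnaire's own item key, which is not reproduced here.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple

# Two-sided 95% Student-t critical value for 7 degrees of freedom
# (eight subjects per group); an assumption about the interval used.
T_CRIT_95_DF7 = 2.365


def rescale(answer: int, positive_on_right: bool = True) -> int:
    """Map a 1..7 answer to the zero-centered -3..+3 scale.

    positive_on_right is False for items whose favourable term is
    printed on the left, so that +3 always means "most favourable".
    """
    if not 1 <= answer <= 7:
        raise ValueError("answer must be between 1 and 7")
    centred = answer - 4
    return centred if positive_on_right else -centred


def mean_with_ci(values: Sequence[float],
                 t_crit: float = T_CRIT_95_DF7) -> Tuple[float, float]:
    """Return the mean and the half-width of its confidence interval."""
    half_width = t_crit * stdev(values) / math.sqrt(len(values))
    return mean(values), half_width


if __name__ == "__main__":
    answers = [4, 5, 6, 7, 4, 5, 6, 7]       # made-up answers, not study data
    scores = [rescale(a) for a in answers]
    m, h = mean_with_ci(scores)
    print(f"mean = {m:.2f}, 95% CI = [{m - h:.2f}, {m + h:.2f}]")
```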

TABLE 1. Performance experiment results: (A) results of User Experience Questionnaire; (B) Intrinsic Motivation Inventory results; and (C) results of performance dimensions evaluation

FIGURE 11. Performance experiment—compiled UEQ results of zero-centered 7-point scale with ordinate axis truncated to positive interval [0,3]. Error bars correspond to a confidence interval of 95%.

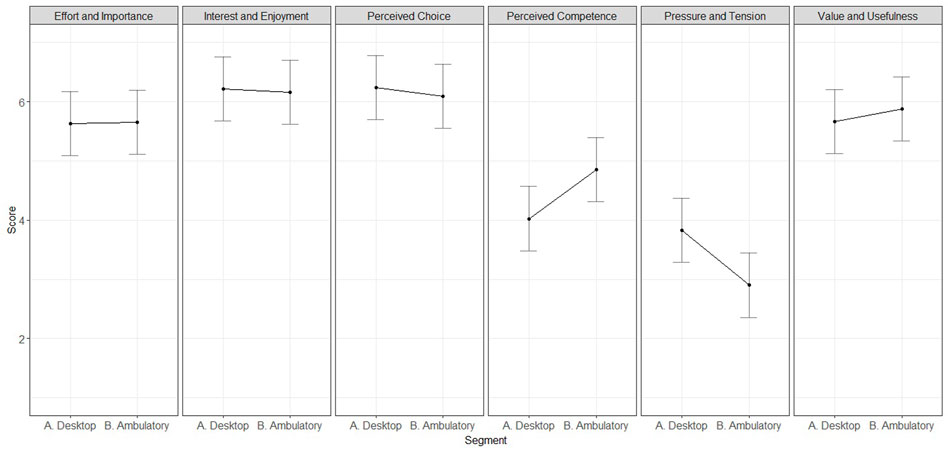

The IMI evaluates intrinsic motivation in six subscales: Effort and Importance, Interest and Enjoyment, Perceived Choice, Perceived Competence, Pressure and Tension, and Value and Usefulness. Experimental results were compiled using ANOVA and similarly showed that there were no significant differences between the desktop and ambulatory conditions, as shown in Table 1 and Figure 12.

FIGURE 12. Performance experiment—IMI results (error bars correspond to a confidence interval of 95%; a single irrelevant question and an irrelevant scale from IMI were excluded from the analysis)

Performance measurements were analyzed using the ANOVA function in R and utilizing the Type II Wald χ2 test for logistic regression analysis. We considered three dimensions: maximum number of removed and stacked Jenga blocks, number of Jenga trials (resets + 1), and number of filled shape sorter boards. Taking the data recorded in the desktop segment as the null model, the results were Pr(χ2 > 0.1763) = 0.6746, Pr(χ2 > 0.0044) = 0.9471, and Pr(χ2 > 1.9884) = 0.1585 for each of these metrics, respectively. As each of these probabilities is greater than the rejection threshold of 0.05, we conclude that performance measurements did not show any significant differences between the two conditions.
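The reported probabilities can be cross-checked with a short sketch. It reads the three numbers inside Pr(...) as χ2 statistics and assumes one degree of freedom (a single two-level factor, desktop versus ambulatory); the degrees of freedom are not stated in the text. Under that assumption, the upper-tail probability has the closed form erfc(sqrt(x/2)), so no statistics package is needed. The names `chi2_sf_df1` and `REPORTED` are hypothetical.

```python
import math


def chi2_sf_df1(stat: float) -> float:
    """Upper-tail probability Pr(X > stat) for a chi-squared
    variable with 1 degree of freedom."""
    if stat < 0:
        raise ValueError("a chi-squared statistic cannot be negative")
    return math.erfc(math.sqrt(stat / 2.0))


# (statistic, probability) pairs as reported in the text.
REPORTED = {
    "max removed Jenga blocks": (0.1763, 0.6746),
    "Jenga trials": (0.0044, 0.9471),
    "filled sorter boards": (1.9884, 0.1585),
}

if __name__ == "__main__":
    for metric, (stat, reported_p) in REPORTED.items():
        p = chi2_sf_df1(stat)
        verdict = "not significant" if p > 0.05 else "significant"
        print(f"{metric}: computed p = {p:.4f}, "
              f"reported p = {reported_p:.4f}, {verdict}")
```

With one degree of freedom, each computed probability agrees with the reported value to four decimal places, which supports reading the numbers as χ2 statistics.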

4 Discussion

4.1 Future work

The development of this prototype heavily focused on making the whole structure as light as possible, but after seeing the results described in Section 3.1.5, we recognized that our choice of materials was not optimal. To achieve a weight reduction, we plan to design and prototype a 3D-printed honeycomb-like structure instead of the front aluminum plate and to eliminate unnecessary reinforcement in the rear. The system currently weighs 9 kg (including HMD), which should be lowered as much as possible while preserving the robustness of the device. The redesign will also address the discomfort identified for women, which stems from insufficient padding in the harness. Further reduction can be achieved by making the haptic device wireless and utilizing a wireless connection between a workstation host and the Meta Quest 2, eliminating the need to carry a PC.

Tuning the parameters (gain, friction, shear, etc.) of each haptic effect could bring the simulated tactile feeling closer to the “real” one and thereby elicit even more positive feedback.

To resolve the issue with misaligned rotation perceived by the user and to achieve proper anchoring of the stylus to world coordinates, rather than locally to a relative position of the user’s avatar, we plan to add an independent tracker on the stylus platform.
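To illustrate why avatar-relative anchoring is sensitive to rotational misalignment, the following minimal sketch places a stylus in world coordinates from an avatar pose and a local offset on the floor plane. The function name `local_to_world`, the two-dimensional simplification, and the numeric values (0.4 m offset, 10° yaw error) are assumptions made for illustration only, not measurements from the prototype.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]  # (x, z) position on the floor plane, in meters


def local_to_world(avatar_pos: Vec2, avatar_yaw_rad: float,
                   local_offset: Vec2) -> Vec2:
    """Rotate a local offset by the avatar's yaw, then translate
    it by the avatar's world position."""
    c, s = math.cos(avatar_yaw_rad), math.sin(avatar_yaw_rad)
    lx, lz = local_offset
    return (avatar_pos[0] + c * lx - s * lz,
            avatar_pos[1] + s * lx + c * lz)


def anchoring_error(offset_m: float, yaw_error_deg: float) -> float:
    """Distance between the stylus position computed with the true
    yaw and with a yaw that is off by yaw_error_deg."""
    true_pos = local_to_world((0.0, 0.0), 0.0, (0.0, offset_m))
    drifted = local_to_world((0.0, 0.0), math.radians(yaw_error_deg),
                             (0.0, offset_m))
    return math.dist(true_pos, drifted)


if __name__ == "__main__":
    # Hypothetical values: stylus 0.4 m in front of the user, 10 degree drift.
    print(f"{anchoring_error(0.4, 10.0) * 100:.1f} cm displacement")
```

An independent tracker would report the stylus platform's world pose directly, so an error in the avatar's yaw would no longer propagate to the virtual stylus.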

The prototype is far from perfect, but seeing the enjoyment of our participants increased our motivation to make this system even better. For instance, users requested more geometric shapes for the sculpting mode and more touchable objects similar to the Stanford bunny. We will also expand the feature set by adding more shapes to the sculpting scene and further immersion-benefiting details, including spatialized sound effects associated with more materials. There are currently only a few objects with such association of material and sound definitions: wood scratching, glass scraping, and thumping sound effects, rendered while the respective material is being dragged, translated, or touched.

Additionally, we would like to add networked support for multiplayer experiences, wherein users cooperate socially on group experiences and projects.

4.2 Limitations

We already described some software bugs and hardware inadequacies in Section 3.1.5. There are a few other limitations that participants were notified about before beginning the study.

The archive feature described as part of the “Sculpting and Carving” scene is not fully functional. There is an inherent problem with the Open Haptics plugin that prevents touchable objects from being correctly serialized and deserialized.

Furthermore, the sculpting feature is currently very limited. When transforming a mesh of a sculptable surface, vertices are translated at run-time and normals are recalculated and faces re-instantiated during transformation. This poses a problem for the Haptics Plugin, which maintains a record of every touchable object in the scene. When the user instantiates or sculpts a shape, the entire dataset is refreshed with a new map of touchable vertices, but the object record is not correctly populated. We have yet to determine the true cause of this problem.

Another bug was discovered after adding another simulation aspect, which would highlight the ambulatory functionality of this system. Currently, if a user moves within the world space while virtually holding an object, the haptics controller stops sending commands to the servomotors, which simulate a held object’s weight as well as drag when moved. A walking user is able to feel the increasing resistance of a spring when pushing on it, but is unable to feel anything when pulling it. This bug requires further investigation.

5 Conclusion

As described in Section 2.1, we addressed the problem by creating a free-range haptic interface that maintains untethered 6 degrees-of-freedom in virtual reality and provides small- and medium-scale force-feedback. We have been at least partially successful in this task. Initially, we designed and created the necessary hardware by transforming a 3D Systems Touch haptic device into an ambulatory version and implementing software which allows an untethered user to immersively explore different aspects of haptics. Our solution reifies formerly impalpable phenomena, for immersive CAD, gaming, and virtual experience. The capabilities introduced by this invention allow new free-range immersion with a spatially flexible force-feedback display. For instance, we are aware of no other solution that enables experiences such as ambulatorily pushing on an arbitrarily long spring, or a use case scenario of an organ-transplant simulation realistically locating the surgeon betwixt two operating tables and requiring rotation from donor around to recipient and implementing haptic force-feedback in space.

Additionally, we conducted a pilot experiment that measured the precision and ergonomics of our hardware as well as stability and usability of our software. The results were qualified, as we discovered several deficiencies in software and hardware aspects of our solution. However, overall results suggest the potential of a combination of haptic force-feedback and ambulatory VR to improve immersion in free-range virtual environments.

Moreover, we conducted a second experiment targeting user experience and performance evaluation to test whether the conversion from a stationary to an ambulatory/wearable set-up degraded the overall experience. Initially, we expected the ambulatory version to be better in some dimensions, but results showed that there were no significant differences between the two across every measured dimension. However, considering the limitations described in Section 4.2 and the deficiencies discovered in the first experiment, finding no significant difference is not regarded as a negative result. If the hardware and software deficiencies are mitigated, the ambulatory set-up has the potential to surpass the traditional desktop version in terms of user experience and performance.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found at https://github.com/peterukud/WearableHapticsVR.

Ethics statement

The studies involving human participants were reviewed and approved by the University of Aizu ethics committee. The participants provided written informed consent to participate in this study. Written informed consent was also obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

The core of this project was developed by the first author, PK, with the support of supervising professor MC.

Funding

Creation of the wearable haptic device prototype was financially supported by the Spatial Media Group, University of Aizu.

Acknowledgments

We thank Julián Alberto Villegas Orozco, Camilo Arévalo Arboleda, Georgi Mola Bogdan, Isuru Jayarathne, James Pinkl, and experimental subjects for support throughout the project implementation. We are also grateful for suggestions on this submission from referees.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abbreviations

3D, 3-dimensional; API, application programming interface; AR, augmented reality; CAD, computer-aided design; DLL, dynamic-link library; DOF, degrees-of-freedom; GTDD, geomagic touch device drivers; HDAPI, haptic device API; HLAPI, haptic library API; HMD, head-mounted display; IMI, intrinsic motivation inventory; MRTK, mixed reality toolkit; SDK, software development kit; UE, user experience; UI, user interface; USD, United States dollar; UEQ, user experience questionnaire; VE, virtual environment; VR, virtual reality; XR, extended reality.

References

3D System (2022). Haptic devices | 3D systems. [Online]. Available: https://www.3dsystems.com/haptics.

AREA: Augmented Reality for Enterprise Alliance (2020). XR Industry Insight report 2019-2020: Featuring Oculus Rift, HTC Vive, Intel, Nvidia and more - AREA. [Online]. Available: https://thearea.org/ar-news/xr-industry-insight-report-2019-2020-featuring-oculus-rift-htc-vive-intel-nvidia-and-more/.

Azmandian, M., Hancock, M., Benko, H., Ofek, E., and Wilson, A. D. (2016). “Haptic retargeting: Dynamic repurposing of passive haptics for enhanced virtual reality experiences,” in Proc. Conf. Human Factors Computing Sys. 1968–1979. doi:10.1145/2858036.2858226

Ben Lang (2022). 13 major new features added to Oculus Quest 2 since launch. [Online]. Available: https://www.roadtovr.com/oculus-quest-major-feature-updates-since-launch/3/.

Bernard, M. (2020). The 5 biggest virtual and augmented reality trends in 2020 everyone should know about. [Online]. Available: https://www.forbes.com/sites/bernardmarr/2020/01/24/the-5-biggest-virtual-and-augmented-reality-trends-in-2020-everyone-should-know-about/.

Choi, I., Culbertson, H., Miller, M. R., Olwal, A., and Follmer, S. (2017). “Grabity: A wearable haptic interface for simulating weight and grasping in virtual reality,” in Proc. 30th Annual ACM Symp (User Interface Software Technologies). doi:10.1145/3126594.3126599

Choi, I., Sinclair, M., Ofek, E., Holz, C., and Benko, H. (2018). Demonstration of CLAW: A multifunctional handheld VR haptic controller. Proc. Conf. Human. Factors Comput. Sys. 2018, 1–4. doi:10.1145/3170427.3186505

Clark Estes, A. (2021). Facebook’s new haptic glove lets you feel things in the metaverse. [Online]. Available: https://www.vox.com/recode/2021/11/17/22787191/facebook-meta-haptic-glove-metaverse.

Controllers - Valve Index (2022). Controllers - Valve Index® - upgrade your experience - Valve corporation. [Online]. Available: https://www.valvesoftware.com/en/index/controllers.

Dangxiao, W., Yuan, G., Shiyi, L., Yuru, Z., Weiliang, X., and Jing, X. (2019). Haptic display for virtual reality: Progress and challenges. Virtual Real. Intelligent Hardw. 1 (2), 136–162. doi:10.3724/sp.j.2096-5796.2019.0008

Fang, C., Zhang, Y., Dworman, M., and Harrison, C. (2020). “Wireality: Enabling complex tangible geometries in virtual reality with worn multi-string haptics,” in Proc. CHI Conf. Human Factors Computing Sys. 1–10. doi:10.1145/3313831.3376470

Kang, N., and Lee, S. (2018). “A meta-analysis of recent studies on haptic feedback enhancement in immersive-augmented reality,” in ACM Int. Conf. Proc. Ser. 3–9.

Kreimeier, J., and Götzelmann, T. (2018). “FeelVR: Haptic exploration of virtual objects,” in ACM Int. Conf. Proceeding Ser. 122–125. doi:10.1145/3197768.3201526

Kreimeier, J., Hammer, S., Friedmann, D., Karg, P., Bühner, C., Bankel, L., et al. (2019). Evaluation of different types of haptic feedback influencing the task-based presence and performance in virtual reality. ACM Int. Conf. Proc. Ser. 19, 289–298. doi:10.1145/3316782.3321536

Lawrence, M. A. (2016). ez: Easy analysis and visualization of factorial experiments. R package version 4.4-0. [Online]. Available: https://CRAN.R-project.org/package=ez.

McAuley, E. D., Duncan, T., and Tammen, V. V. (1989). Psychometric properties of the intrinsic motivation inventory in a competitive sport setting: A confirmatory factor analysis. Res. Q. Exerc. Sport 60, 48–58. doi:10.1080/02701367.1989.10607413

Mixed Reality ToolKit (2022). Introducing MRTK for Unity - mixed reality | Microsoft Docs. [Online]. Available: https://docs.microsoft.com/en-us/windows/mixed-reality/develop/unity/mrtk-getting-started.

Natasha Mathur (2018). What’s new in VR Haptics? | Packt Hub. [Online]. Available: https://hub.packtpub.com/whats-new-in-vr-haptics/.

Oculus XR Plugin (2020). About the Oculus XR plugin | Oculus XR plugin | 1.9.1. [Online]. Available: https://docs.unity3d.com/Packages/com.unity.xr.oculus@1.9/manual/index.html.

OpenHaptics (2018a). OpenHaptics® Toolkit version 3.5.0 programmer’s guide. [Online]. Available: https://s3.amazonaws.com/dl.3dsystems.com/binaries/Sensable/OH/3.5/OpenHaptics_Toolkit_ProgrammersGuide.pdf.

OpenHaptics (2018b). OpenHaptics® Toolkit version 3.5.0 API reference guide original instructions. [Online]. Available: https://s3.amazonaws.com/dl.3dsystems.com/binaries/Sensable/OH/3.5/OpenHaptics_Toolkit_API_Reference_Guide.pdf.

Polly Allcock, P. (2021). bHaptics reveals TactGlove for VR, which it will showcase at CES 2022. [Online]. Available: https://www.notebookcheck.net/bHaptics-reveals-TactGlove-for-VR-which-it-will-showcase-at-CES-2022.589393.0.html.

R Core Team (2018). R: A language and environment for statistical computing. Vienna: R Foundation for Statistical Computing. [Online]. Available: https://www.R-project.org/.

Rosenberg, L. B. (1997). A force feedback programming primer: For gaming peripherals supporting DirectX 5 and I-force 2.0. San Jose, CA: Immersion Corporation.

Schrepp, M., Hinderks, A., and Thomaschewski, J. (2017a). Construction of a benchmark for the user experience questionnaire (UEQ). Int. J. Interact. Multimedia Artif. Intell. 4, 40. doi:10.9781/ijimai.2017.445

Schrepp, M., Hinderks, A., and Thomaschewski, J. (2017b). Design and evaluation of a short version of the user experience questionnaire (UEQ-S). Int. J. Interact. Multimedia Artif. Intell. 4, 103. doi:10.9781/ijimai.2017.09.001

Scott Stein (2022). Haptic gloves for Quest 2 are a small step toward VR you can touch. [Online]. Available: https://www.cnet.com/tech/computing/haptic-gloves-for-quest-2-are-a-small-step-towards-vr-you-can-touch/.

Shim, Y. A., Kim, T., and Lee, G. (2022). “QuadStretch: A forearm-wearable multi-dimensional skin stretch display for immersive VR haptic feedback,” in Proc. CHI Conf. Human Factors Computing Sys. 1–4. doi:10.1145/3491101.3519908

Slater, M. (2009). Place illusion and plausibility can lead to realistic behaviour in immersive virtual environments. Phil. Trans. R. Soc. B 364 (1535), 3549–3557. doi:10.1098/rstb.2009.0138

Suzuki, R., Hedayati, H., Zheng, C., Bohn, J. L., Szafir, D., Do, E. Y.-L., et al. (2020). RoomShift: Room-scale dynamic haptics for VR with furniture-moving swarm robots. Proc. CHI Conf. Human Factors Comput. Sys. 20, 1–11. doi:10.1145/3313831.3376523

Tzemanaki, A., Al, G. A., Melhuish, C., and Dogramadzi, S. (2018). Design of a wearable fingertip haptic device for Remote palpation: Characterisation and interface with a virtual environment. Front. Robot. AI 5, 62. doi:10.3389/frobt.2018.00062

VRgluv (2021). VRgluv | force feedback haptic gloves for VR training. [Online]. Available: https://www.vrgluv.com.

Wang, D., Guo, Y., Liu, S., Zhang, Y., Xu, W., and Xiao, J. (2019). Haptic display for virtual reality: Progress and challenges. Virtual Real. Intelligent Hardw. 1 (2), 136–162. doi:10.3724/sp.j.2096-5796.2019.0008

Keywords: haptic interface, wearable computer, virtual reality, human-computer interaction (HCI), ambulatory, force-feedback, perceptual overlay, tangible user interface (TUI)

Citation: Kudry P and Cohen M (2022) Development of a wearable force-feedback mechanism for free-range haptic immersive experience. Front. Virtual Real. 3:824886. doi: 10.3389/frvir.2022.824886

Received: 29 November 2021; Accepted: 07 September 2022;

Published: 14 December 2022.

Edited by:

Michael Zyda, University of Southern California, United States

Reviewed by:

Sungchul Jung, Kennesaw State University, United States

Daniele Leonardis, Sant'Anna School of Advanced Studies, Italy

Copyright © 2022 Kudry and Cohen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Peter Kudry, peterkudry@protonmail.com; Michael Cohen, mcohen@u-aizu.ac.jp
